1. Introduction

Simultaneous localization and mapping (SLAM) is one of the most important issues in mobile robotics research [1,2,3,4,5]. SLAM is a process by which a mobile robot can build a map of its environment and simultaneously use this map to locate itself [6]. Therefore, an accurate motion estimation of the robot will greatly facilitate the realization of this process and has attracted considerable attention from researchers in recent decades [1,2,7,8,9].

Scan-matching techniques are widely used to estimate mobile robot motion by matching successive range measurements from a laser range finder (LRF) [1,2,4,9,10,11,12,13,14,15,16,17]. The iterative closest point (ICP) algorithm is one of the most popular scan-matching techniques because of its efficiency and simplicity [18,19,20,21,22]. This algorithm iteratively establishes correspondences based on the criterion of the closest point and calculates a rigid transformation that minimizes the distance error until convergence. However, ICP tends to fall into local optima when large sensor rotation is introduced, which frequently arises when a robot rotates around itself or unexpected slippage occurs [1]. Additionally, ICP utilizes a point-to-point metric to determine correspondences, which is less accurate because of the discreteness of laser scans. In addition, ICP relies on a questionable assumption that successive scans perfectly overlap [19,20], which can hardly stand because of changes in the environment and occlusions from vehicle motion [3,20,23]. False correspondences will arise in non-overlapping areas and thus cause the algorithm to converge upon an incorrect solution [20].

To solve the problem of scan matching with large rotation, Minguez proposed a metric-based ICP algorithm (MbICP) [10] that establishes correspondences and calculates transformations based on its defined metric-based distance, which simultaneously considers both translation and rotation. This algorithm is claimed [1,2,10] to be robust and accurate when the rotation change is large, but it is still limited to matching scans that perfectly overlap.

Considerable research has been conducted to improve ICP and its variants in matching partially overlapping scans by enhancing the performance of correspondence establishment and rejecting incorrect correspondences [19,20,22,23,24,25,26]. In terms of establishing correspondence, Chen proposed a point-to-plane approach, which determines the correspondence by finding the projection of the source point onto the tangent plane at a destination surface point that is the intersection of the normal vector of the source point [27], but this approach is only available for scan matching with three-dimensional (3D) laser scans. Zhang proposed a biunique correspondence ICP (BC-ICP) [20], which guarantees unique correspondence by searching for multiple closest points instead of one point. However, this strategy assumes that the corresponding point search begins from an overlapping area, which cannot be guaranteed in practice. In terms of rejecting incorrect correspondences, Druon proposed a rejection strategy that was based on a statistical analysis of the correspondence distance, which adopted μ + σ as the rejection threshold [28,29,30]. Nevertheless, this strategy assumes that the distribution of distances is Gaussian, which is not a reasonable assumption in a dynamic environment [22]. Chetverikov proposed a trimmed ICP algorithm (TrICP) [26], which uses golden-section searching to estimate an overlapping ratio and uses a trimmed least-squares approach to discard incorrect correspondence pairs. Despite the claimed robustness of this algorithm, TrICP is time consuming because it repeatedly searches for the best overlapping ratio [22,24]. This algorithm may also be misled by a large change in the overlap because of occlusions from a moving platform. Pomerleau proposed an adaptive rejection technique called the relative motion threshold (RMT) [22], which calculates the rejection threshold with a simulated annealing ratio. This technique is flawed in that the rejection threshold heavily relies on the translation vector, so a large sensor rotation may produce an incorrect rejection [20].

This paper proposes an improved MbICP algorithm for mobile robot pose estimation by using partially overlapping laser scans from a 2D laser scanner, which is commonly used because of its low cost and effectiveness in terms of data processing. Before matching, a resampling step is executed in the object scan to make the density distribution of point clouds relatively even. In terms of scan matching, finding the nearest point is still based on a metric distance, but the procedure of determining the correspondence is improved by combining the point-to-point and point-to-line metrics. Then, a distance threshold that is based on the MAD-from-median method is calculated to reject correspondences with distances above the threshold. Experiments are designed to compare the performance of the improved algorithm and standard algorithm in terms of robustness, accuracy and convergence. Furthermore, a series of real-world experiments are conducted to test its validity in realistic situations.

The paper is organized as follows. In Section 2, the principle of the ICP algorithm and the metric-based distance as defined by MbICP are outlined, followed by a detailed explanation of the resampling method and improved algorithm. The experimental results are presented in Section 3 and discussed in Section 4. The last section describes several conclusions.

2. Scan-Matching Algorithm

2.1. ICP Algorithm

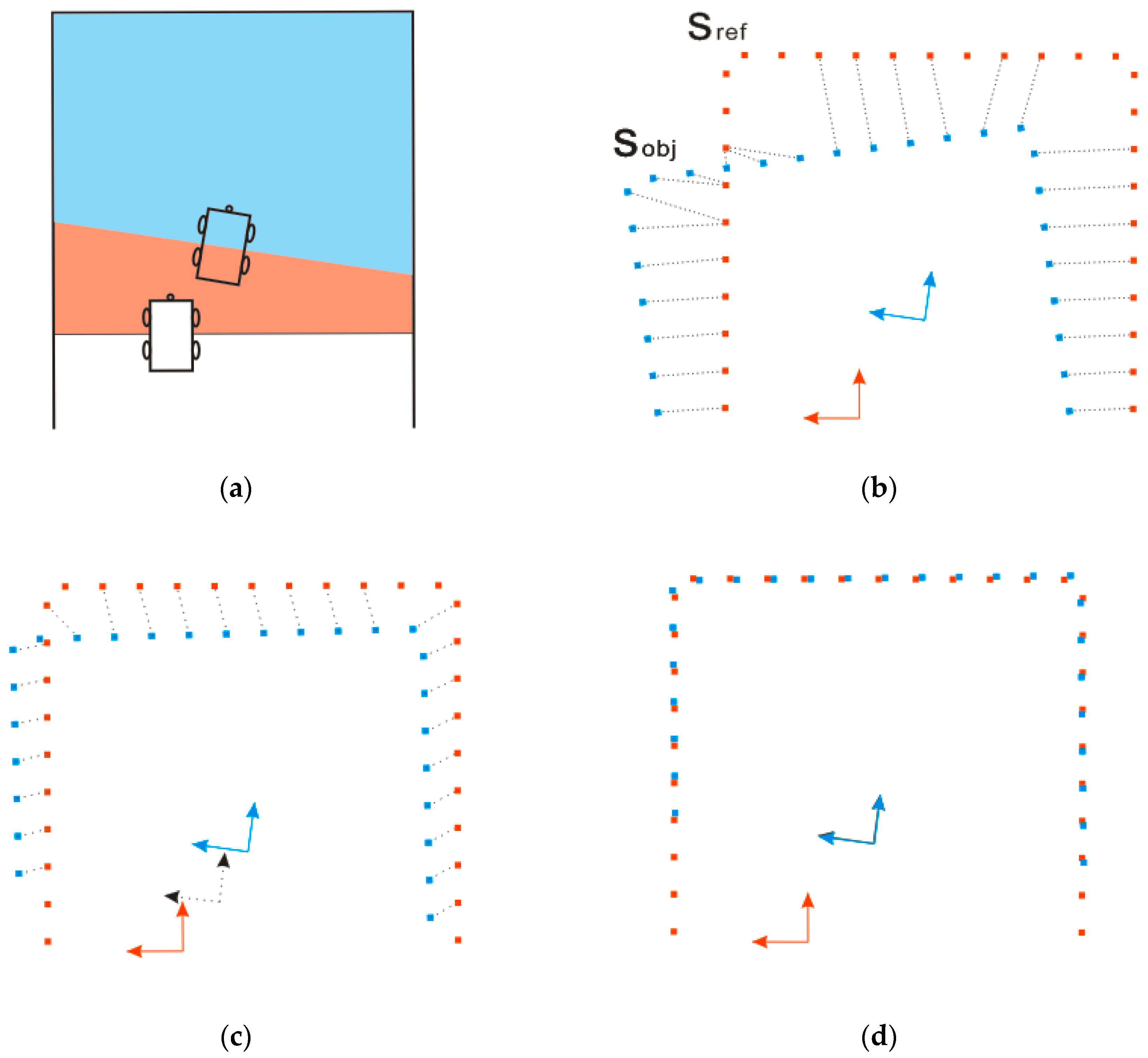

Robot motion between time steps can be represented by a transformation vector

, which consists of a translation component

and a rotational component

. Given two successive scans from a laser scanner at the beginning and end of motion, the first scan is marked as the reference scan

and the second scan is treated as the object scan

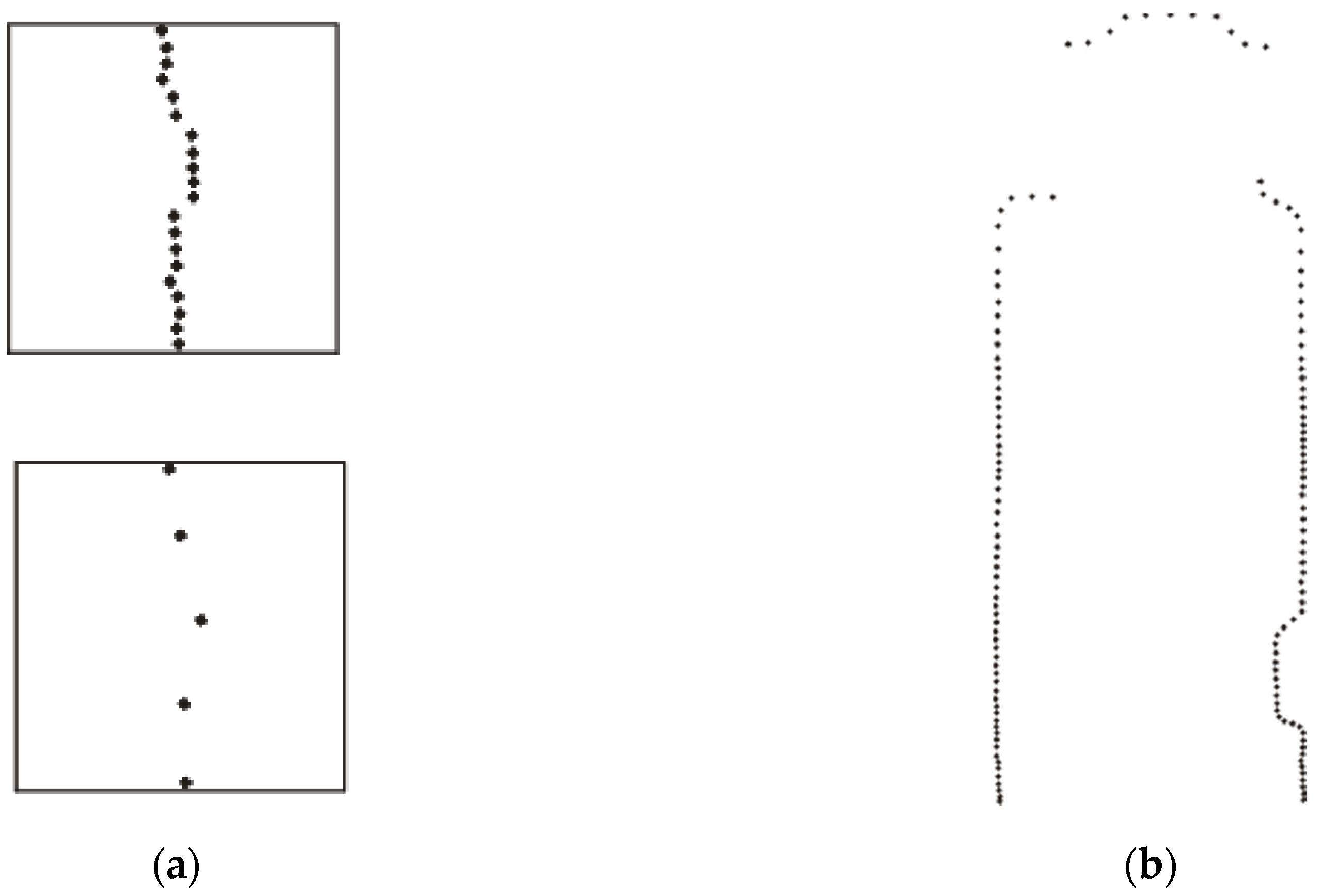

(as shown in

Figure 1b).

The transformation T can be estimated by minimizing the distance of the corresponding points between the reference scan and the object scan with the ICP algorithm, which is based on a three-step iterative process:

(1) Establish correspondence. For each point in the object scan, search for the closest point in the reference scan to establish correspondence. A K-D tree is recommended to accelerate the searching process.

(2) Calculate the transformation. The rigid transformation consisting of rotation matrix R and translation vector t is calculated by minimizing the mean square error of the correspondence pairs between the object scan and the reference scan (Equation (1)). In Equation (1), the error is averaged over the correspondence pairs, and each object point is paired with its closest point in the reference scan, which is defined as its correspondence. SVD-based least-squares minimization is commonly used because of its robustness and simplicity [31].

(3) Transform the object scan. Apply the rigid transformation to the object scan and update the related parameters.

These three steps are repeated until convergence or until the iteration number exceeds the maximum number of iterations.
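The three-step loop above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the implementation used in this paper: the function names are hypothetical, the closest-point search is brute force rather than a K-D tree, and the least-squares step uses the closed-form 2D solution (an angle from `atan2`) instead of the SVD-based solver mentioned in the text.

```python
import math

def closest_point(p, scan):
    """Step 1 helper: nearest point of `scan` to `p` (brute force, Euclidean)."""
    return min(scan, key=lambda q: math.dist(p, q))

def best_rigid_transform(src, dst):
    """Step 2: least-squares 2D rotation angle and translation for paired points."""
    n = len(src)
    cxs = sum(x for x, _ in src) / n
    cys = sum(y for _, y in src) / n
    cxd = sum(x for x, _ in dst) / n
    cyd = sum(y for _, y in dst) / n
    s_cos = s_sin = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        xs, ys, xd, yd = xs - cxs, ys - cys, xd - cxd, yd - cyd
        s_cos += xs * xd + ys * yd   # dot products of centered pairs
        s_sin += xs * yd - ys * xd   # cross products of centered pairs
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, cxd - (c * cxs - s * cys), cyd - (s * cxs + c * cys)

def apply_transform(scan, theta, tx, ty):
    """Step 3: rotate by theta (radians), then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in scan]

def icp(obj, ref, max_iter=50, tol=1e-9):
    """Return the accumulated (theta, tx, ty) that aligns obj to ref."""
    theta_acc, tx_acc, ty_acc = 0.0, 0.0, 0.0
    current = list(obj)
    for _ in range(max_iter):
        matches = [closest_point(p, ref) for p in current]
        theta, tx, ty = best_rigid_transform(current, matches)
        current = apply_transform(current, theta, tx, ty)
        # Compose the new step with the transformation accumulated so far.
        c, s = math.cos(theta), math.sin(theta)
        tx_acc, ty_acc = c * tx_acc - s * ty_acc + tx, s * tx_acc + c * ty_acc + ty
        theta_acc += theta
        if abs(theta) < tol and math.hypot(tx, ty) < tol:
            break  # the last update was negligible: converged
    return theta_acc, tx_acc, ty_acc
```

For example, `apply_transform(obj, *icp(obj, ref))` returns the object scan expressed in the frame of the reference scan. The convergence test on the size of the last update is one common choice; the text does not specify which criterion is used.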

2.2. Metric-based Distance

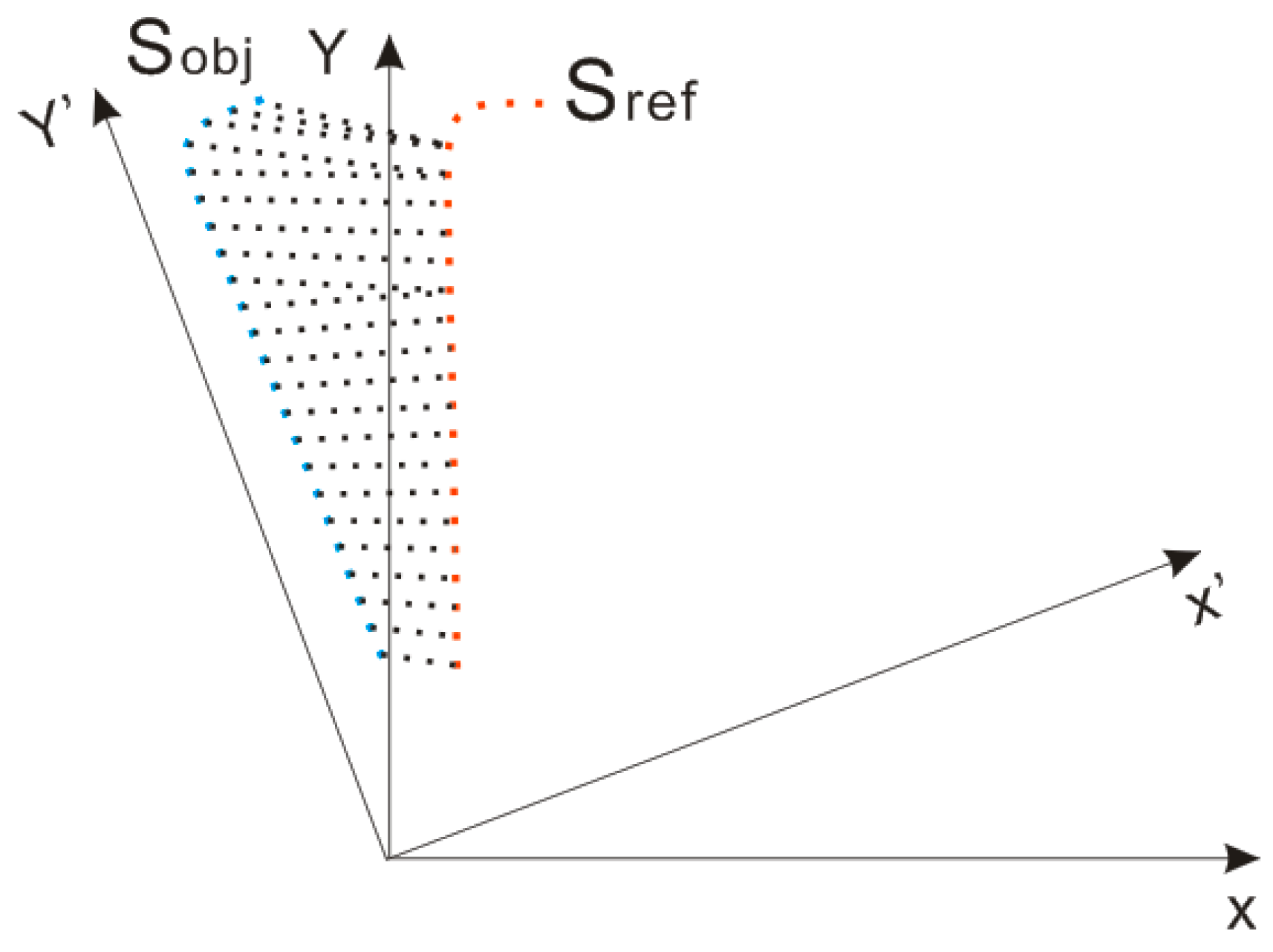

Assume that two successive laser scans with large rotation are given (Figure 2): the reference scan M and the object scan. When establishing the correspondence, the standard ICP algorithm searches for the closest point in M for each point in the object scan, which is under the framework of Euclidean space. Points that are far from the center are also far from their actual correspondence points because of the angular difference, which easily creates false correspondence and ICP failure. Thus, finding the closest point in Euclidean space is not reliable for establishing correspondence when large rotation exists.

To overcome the defect of Euclidean distance in merely considering the translation component, MbICP defines a new distance measure called the metric-based distance, which simultaneously considers both translation and rotation. Given two points in the plane, a reference point and an object point, a set of rigid-body transformations can be acquired that maps one point onto the other (Equation (2)). The metric distance between the two points is defined as the minimum norm among these transformations (Equation (3)), where L is a balance parameter that is homogeneous to a length, which is used to balance the translational and rotational components.
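To make the definition concrete, the sketch below computes the metric-based distance in the closed form commonly given in the MbICP literature [10], which follows from linearizing the rotation for small angles and minimizing the norm sqrt(x² + y² + L²θ²) over all transformations (x, y, θ) that map the first point onto the second. The function name and the default value of L are assumptions made for illustration; they are not taken from this paper.

```python
import math

def metric_distance(p1, p2, L=3.0):
    """Approximate metric-based distance between 2D points p1 and p2.

    Small-angle closed form:
        d^2 = dx^2 + dy^2 - (dx*p1y - dy*p1x)^2 / (p1x^2 + p1y^2 + L^2)
    where (dx, dy) = p2 - p1 and L balances translation against rotation.
    """
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    cross = dx * p1[1] - dy * p1[0]
    d_sq = dx * dx + dy * dy - cross * cross / (p1[0] ** 2 + p1[1] ** 2 + L * L)
    return math.sqrt(max(d_sq, 0.0))  # guard against tiny negative round-off
```

With p1 = (10, 0) and L = 3, a unit displacement along the ray from the sensor, to (11, 0), keeps a distance of 1, whereas a unit displacement across the ray, to (10, 1), is much shorter than 1 because a small rotation explains most of it. As L grows, the measure approaches the Euclidean distance.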

A set of iso-distance curves relative to two points is depicted by using the metric-based distance and Euclidean distance to visually represent the geometric features of metric-based distance (Figure 3a). The iso-distance curves that use the metric-based distance are claimed to be ellipses in the literature [10], while those that use the Euclidean distance are inscribed circles, which gives the metric-based distance a larger search zone than that of the Euclidean distance for the same search radius. The farther the object point is from the center, the longer the long axis of the ellipse becomes and, therefore, the more likely the object point will find its correspondence point in the rotational direction. This feature of the metric-based distance improves the probability of finding the correct correspondence for points that are far from the sensor, as shown in Figure 3b.

2.3. Resampling

Laser scanners have a fixed viewing angle and resolution, so a longer scanning distance increases the distance between neighboring scanned points. When a mobile robot moves forward in a long narrow corridor, the density distribution of point clouds from the laser scanner greatly varies according to the distance from the sensor. Sensors scan fewer points at greater distances (as shown in Figure 4a). However, such an uneven density distribution of point clouds may slow down the iteration process and distort the calculation of the rejection threshold, as discussed in Section 2.4.2. Therefore, we utilize a resampling method that is based on grid partitioning to realize a relatively even density distribution.

First, we build a spatial grid for the object point cloud. We use a coordinate system that is centered at the sensor’s position to calculate the coordinates of all the scanned points and then obtain the minimum bounding rectangle of the point cloud. The rectangle is partitioned into grids according to a given grid length (0.1 m is used according to the sensor’s resolution), as shown in Figure 4b.

Second, we calculate a sampling percentage for points in each grid. The distance between two occupied grids is defined in terms of their row and column indices. The sensor is contained in the center grid, so its row and column indices are both zero. We calculate the distance from each grid to the center grid according to this distance definition and obtain the maximum distance. The sampling ratio for points in each grid is thus defined as the ratio of the grid's distance to the maximum distance, which guarantees that grids that are distant from the center grid have a relatively high sampling percentage and nearby grids have a low sampling percentage.

Finally, we resample points in the units of the grids according to the assigned sampling percentage. Given a grid with n points and its sampling percentage, the sampling size n′ is calculated as the ceiling of the product of n and the sampling percentage. To preserve as much topological information as possible for the points, the first and last points are preserved and the remaining n′ − 2 points are sampled at regular intervals, as shown in Figure 5a.
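The per-grid sampling step can be illustrated with a short Python sketch. It assumes that the points of a grid are stored in scan order and that the distance of the grid to the center grid has already been computed; the function names are hypothetical, and the exact grid-distance definition is not reproduced here. How a grid with a ratio of zero (the center grid itself) should be handled is not stated in the text, so this sketch simply keeps no points for it.

```python
import math

def sampling_ratio(grid_distance, max_distance):
    """Ratio of a grid's distance from the center grid to the maximum distance."""
    return grid_distance / max_distance if max_distance > 0 else 1.0

def resample_grid(points, ratio):
    """Keep ceil(n * ratio) points: the first, the last, and evenly spaced ones between."""
    n = len(points)
    n_keep = min(n, math.ceil(n * ratio))
    if n_keep <= 0:
        return []
    if n_keep == 1:
        return [points[0]]
    # Integer index arithmetic gives distinct, evenly spread indices from 0 to n - 1.
    return [points[i * (n - 1) // (n_keep - 1)] for i in range(n_keep)]
```

For a grid of ten points with a ratio of 0.5, five points are kept, including the first and the last, so the extent of the local structure is preserved while the density is halved.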

This resampling method is based on a grid partition, which determines the sampling percentage according to the distance from the occupied grid to the center grid and then samples points in the units of the grid according to the topological information. This method can effectively dilute dense points nearby and retain their topological information, therefore accelerating the iteration process and preventing the calculation of the rejection threshold from becoming distorted by the greatly varying density distributions of point clouds.

2.4. Improved MbICP Algorithm

2.4.1. Correspondence Establishment

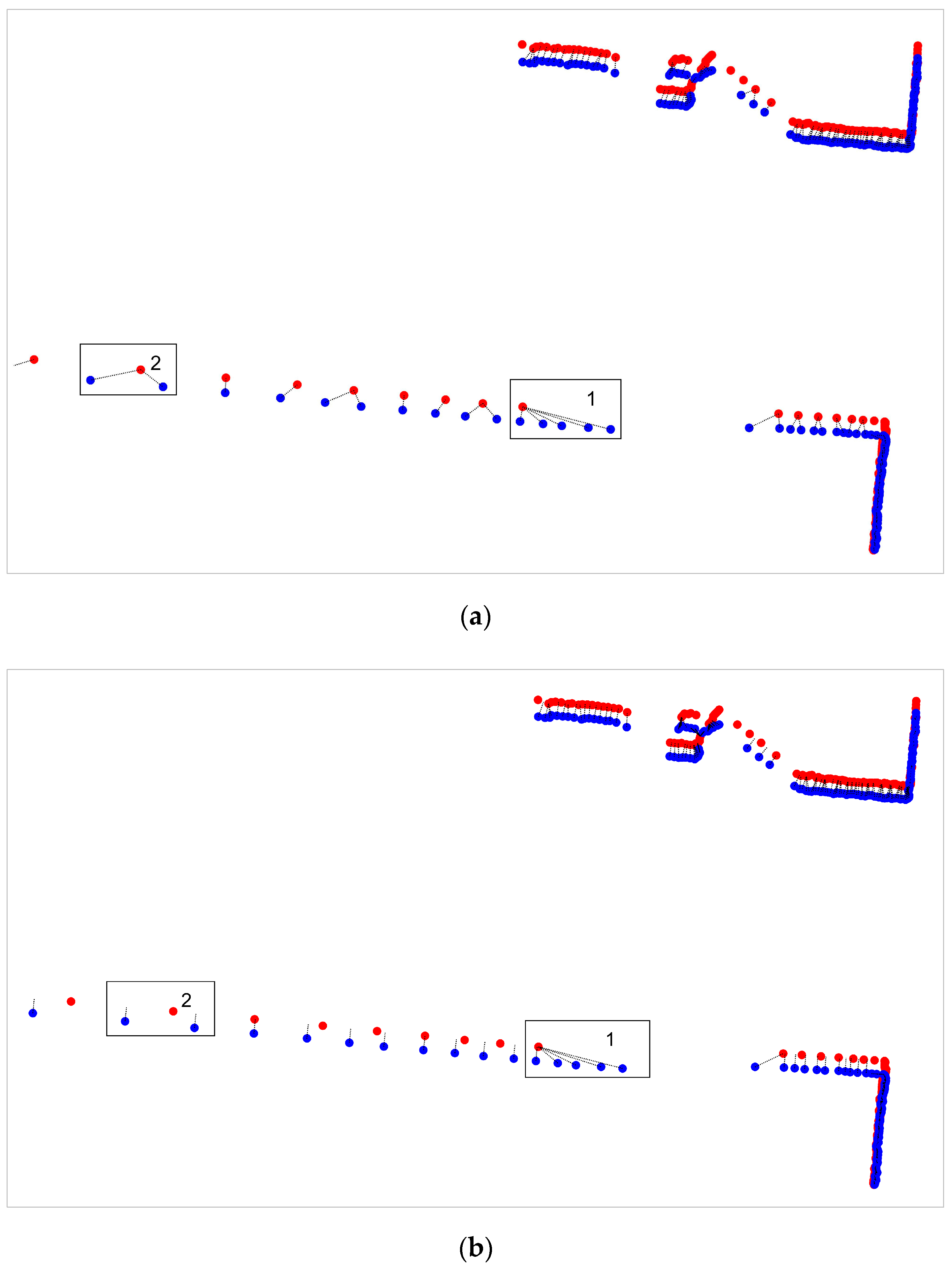

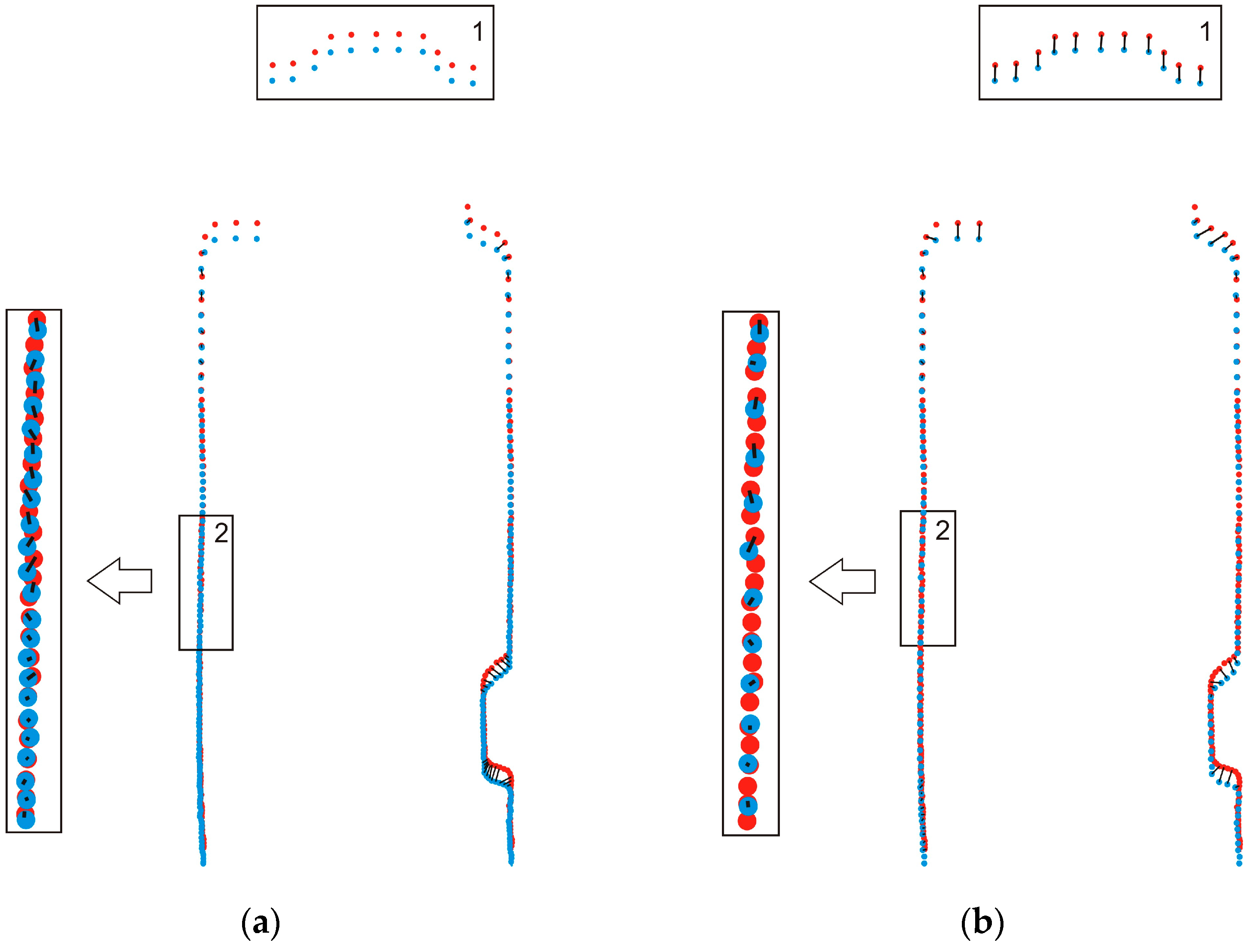

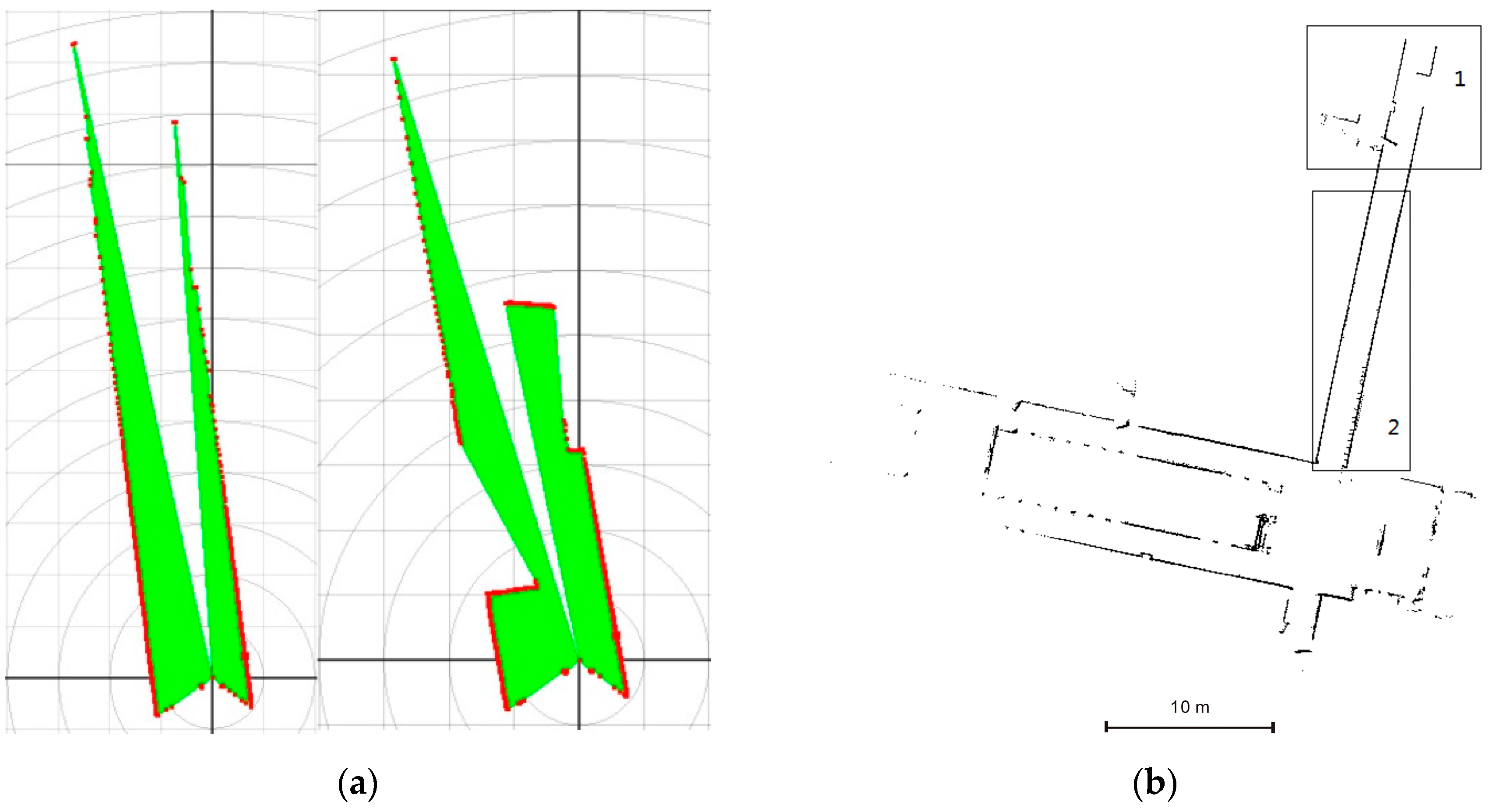

The MbICP algorithm finds correspondence points by finding the nearest point through its defined metric-based distance, which is claimed to perform better than when using the Euclidean distance when large rotation exists. However, this algorithm is still based on a point-to-point metric, which is not as reliable because of mismatching from partial overlapping and the discreteness of laser scans. For example, given two successive laser scans that are captured at the beginning and end of robot motion, partial overlapping can be clearly observed in rectangular area 1 in Figure 6a,b. This area contains no actual correspondence points for points in the object scan, thus producing many incorrect correspondences. Additionally, different points in the object scan incorrectly correspond to the same point in the reference scan because of the discrete nature of the scan, as shown in rectangular area 2 in Figure 6a. These incorrect correspondences will distort the follow-up calculation of the optimal transformation and thus negatively affect the final matching result.

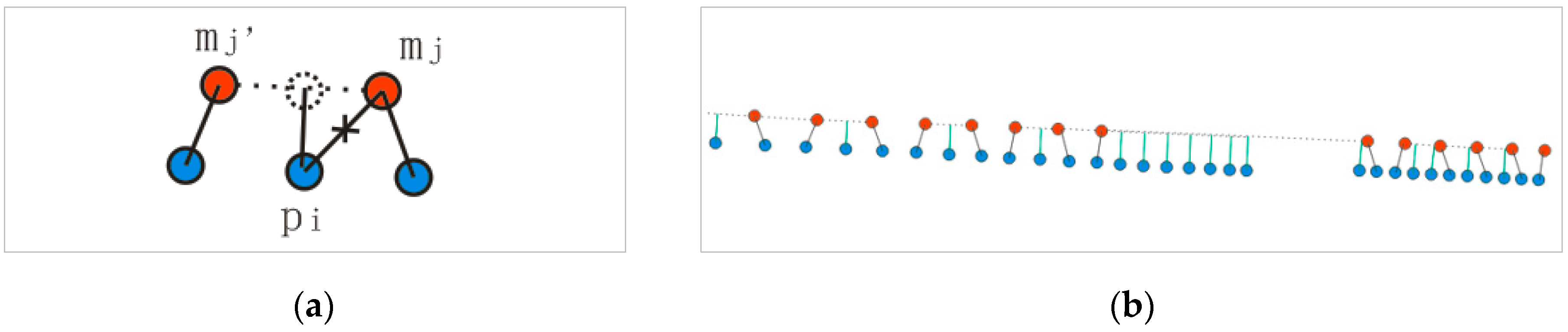

To reduce problems that are introduced by the point-to-point metric, this paper improves the procedure of determining the correspondence by combining the point-to-point and point-to-line metrics. First, the nearest point in the reference scan is determined for each point in the object scan by using the metric-based distance, and the two points are treated as a candidate correspondence pair. For those candidate correspondence pairs whose object points correspond to the same reference point, only the pair with the smallest corresponding distance is preserved and the remaining pairs are modified by using an interpolation method. For each remaining pair, we search for the second-closest point in the reference scan, and a segment that begins at the closest point and ends at the second-closest point is found. We calculate the projection of the object point onto the segment along the normal and use this interpolation point as the new corresponding point (as shown in Figure 7a). This interpolation is less risky because it only utilizes the surface information of the reference point clouds.
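A minimal Python sketch of this procedure follows. Several details are assumptions made for illustration rather than the paper's implementation: the function names are hypothetical, the second-closest point is taken relative to the object point, the projection is clamped to the segment, the reference scan has at least two points, and the distance function defaults to the Euclidean distance (a metric-based distance function can be passed as `dist`).

```python
import math

def project_onto_segment(p, a, b):
    """Orthogonal projection of p onto segment a-b, clamped to the segment ends."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return a
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return (a[0] + t * dx, a[1] + t * dy)

def establish_correspondences(obj, ref, dist=math.dist):
    """Return a list of (object point, corresponding point) pairs."""
    # Indices of the closest and second-closest reference points per object point.
    ranked = []
    for p in obj:
        order = sorted(range(len(ref)), key=lambda j: dist(p, ref[j]))
        ranked.append((order[0], order[1]))
    # For every reference point, remember the object point nearest to it.
    best = {}
    for i, (j, _) in enumerate(ranked):
        d = dist(obj[i], ref[j])
        if j not in best or d < best[j][0]:
            best[j] = (d, i)
    pairs = []
    for i, (j, k) in enumerate(ranked):
        if best[j][1] == i:
            pairs.append((obj[i], ref[j]))  # point-to-point pair is kept
        else:
            # Point-to-line: interpolate on the segment between the two nearest points.
            pairs.append((obj[i], project_onto_segment(obj[i], ref[j], ref[k])))
    return pairs
```

When two object points share the same nearest reference point, only the closer one keeps it; the other receives an interpolated point on the neighboring segment, so each pair ends up with its own target.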

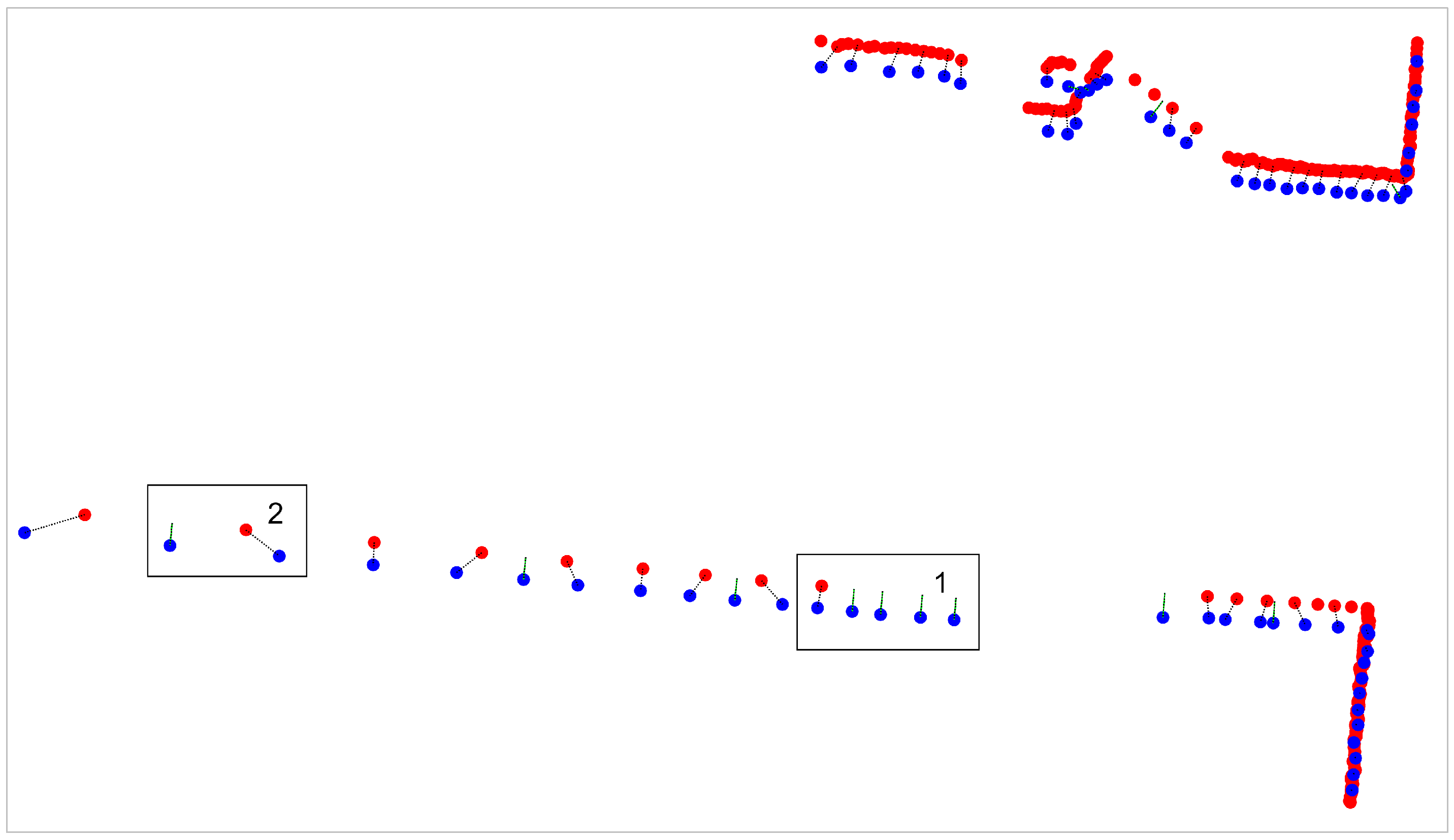

This new procedure can improve the performance of correspondence establishment, especially when non-overlapping areas exist (as shown in Figure 8). However, a few incorrect correspondence pairs might be established because of the complexity of the environment. Thus, a rejection strategy will be explained in the next section.

2.4.2. Rejection of Incorrect Correspondences

After establishing correspondence based on the new procedure, a set of correspondence pairs is obtained, which may contain a few incorrect correspondence pairs with large distances. Therefore, a rejection strategy that is based on the corresponding distance is required to protect the follow-up calculation of the transformation.

One of the most commonly used methods is the standard-deviations-from-mean approach, which removes values that are more than n standard deviations from the mean. The value of n is decided according to the practical situation. However, this method is unreliable because extreme values may distort the mean and standard deviation.

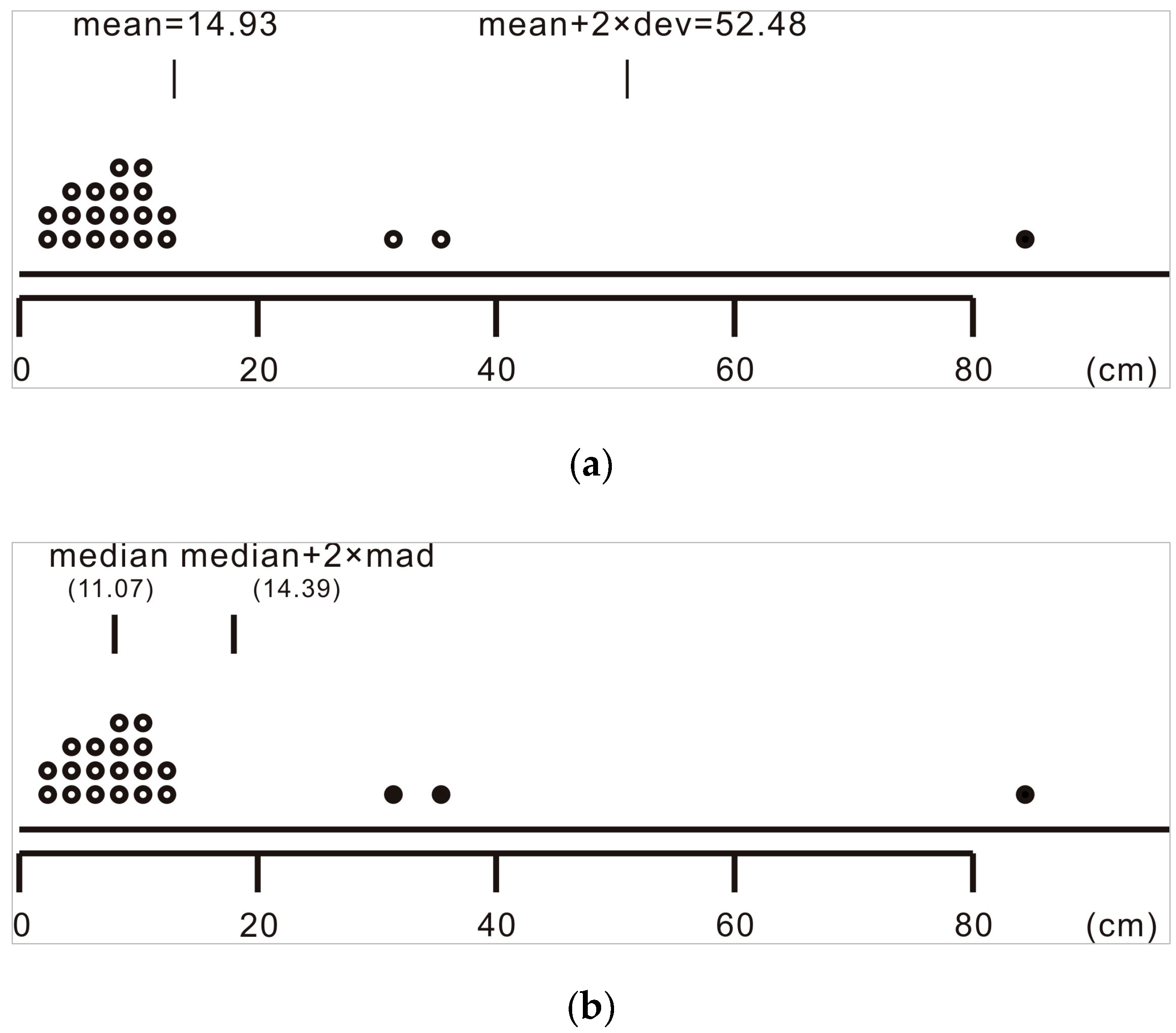

For example, given a sequence of correspondence distances, X = {12.281, 12.270, 12.712, 11.932, 11.053, 10.768, 11.077, 11.685, 6.393, 6.001, 5.549, 38.760, 86.305, 34.497, 2.988, 3.227, 1.297, 3.539, 6.409, 12.477, 12.381}. The rejection result when using the standard-deviations-from-mean approach is depicted in a dot plot (as shown in Figure 9a). The mean is approximately 14.93, which obviously deviates from the center of the data set, and the standard deviation is also considerably distorted. Only 86.305 is rejected as an extreme value, while 38.760 and 34.497 are ignored even though they are obviously also extreme values.

The MAD-from-median method is another rejection method, which removes values that are more than two median absolute deviations (MAD) from the median. Instead of utilizing the mean and standard deviation to measure the center and discreteness degree of the data set, this method uses the median and MAD to represent the clustering and statistical dispersion of the data set. The median is not greatly skewed by extreme values and thus can provide a better idea of the typical value than the mean can. The MAD, which is defined as the median of the absolute deviations from the median of the data set, is also robust to extreme values.

The rejection result when using the MAD-from-median method is depicted in Figure 9b. The median is 11.077, which is a good representation of the center. Here, 38.760, 86.305 and 34.497 are all rejected as extreme values. Thus, the MAD-from-median method can more clearly differentiate extreme values and is herein adopted to reject correspondences with large distances.
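The comparison can be reproduced on the sequence X with a few lines of Python. The sketch below is an illustration under stated assumptions: both thresholds are one-sided (only distances above the threshold are rejected), the multiplier is 2 in both cases, the population standard deviation is used, and no consistency scale factor is applied to the MAD. The exact form of the threshold used in this paper is given by its own equation, which is not reproduced here, and the function names are hypothetical.

```python
import statistics

X = [12.281, 12.270, 12.712, 11.932, 11.053, 10.768, 11.077, 11.685,
     6.393, 6.001, 5.549, 38.760, 86.305, 34.497, 2.988, 3.227,
     1.297, 3.539, 6.409, 12.477, 12.381]

def std_threshold(distances, k=2.0):
    """Upper threshold: mean plus k population standard deviations."""
    return statistics.mean(distances) + k * statistics.pstdev(distances)

def mad_threshold(distances, k=2.0):
    """Upper threshold: median plus k median absolute deviations."""
    med = statistics.median(distances)
    mad = statistics.median(abs(d - med) for d in distances)
    return med + k * mad

def rejected(distances, threshold):
    """Distances strictly above the threshold, in ascending order."""
    return sorted(d for d in distances if d > threshold)

print(rejected(X, std_threshold(X)))  # only the largest value is flagged
print(rejected(X, mad_threshold(X)))  # all three extreme values are flagged
```

For this data the median is 11.077 and the MAD is 4.668, so the MAD-based threshold is 20.413 and the three extreme distances fall above it, whereas the mean-based threshold is pulled so high by 86.305 that the other two extreme values pass.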

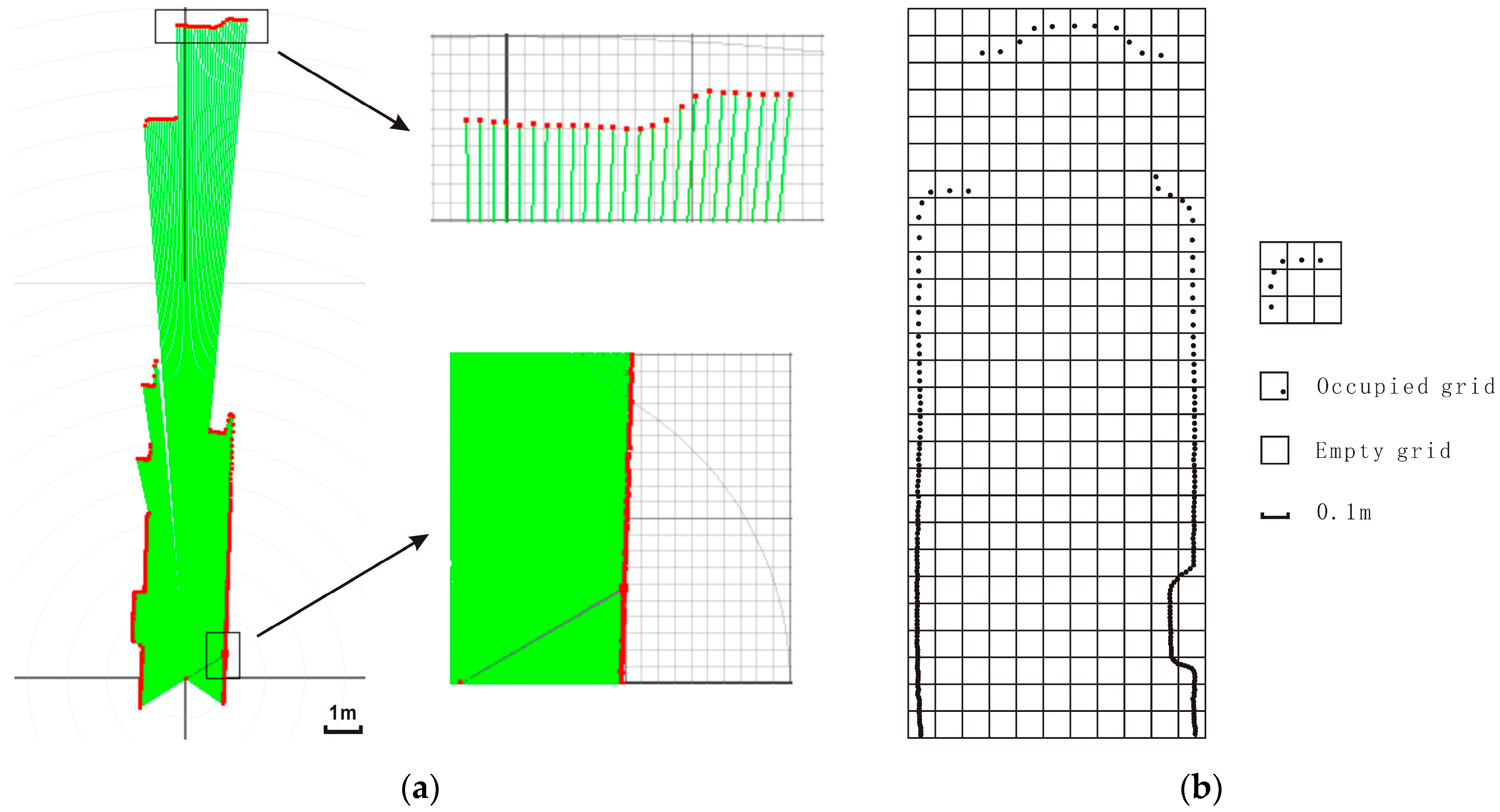

Therefore, we calculate the rejection threshold

by using Equation (5) and reject correspondences with distances above

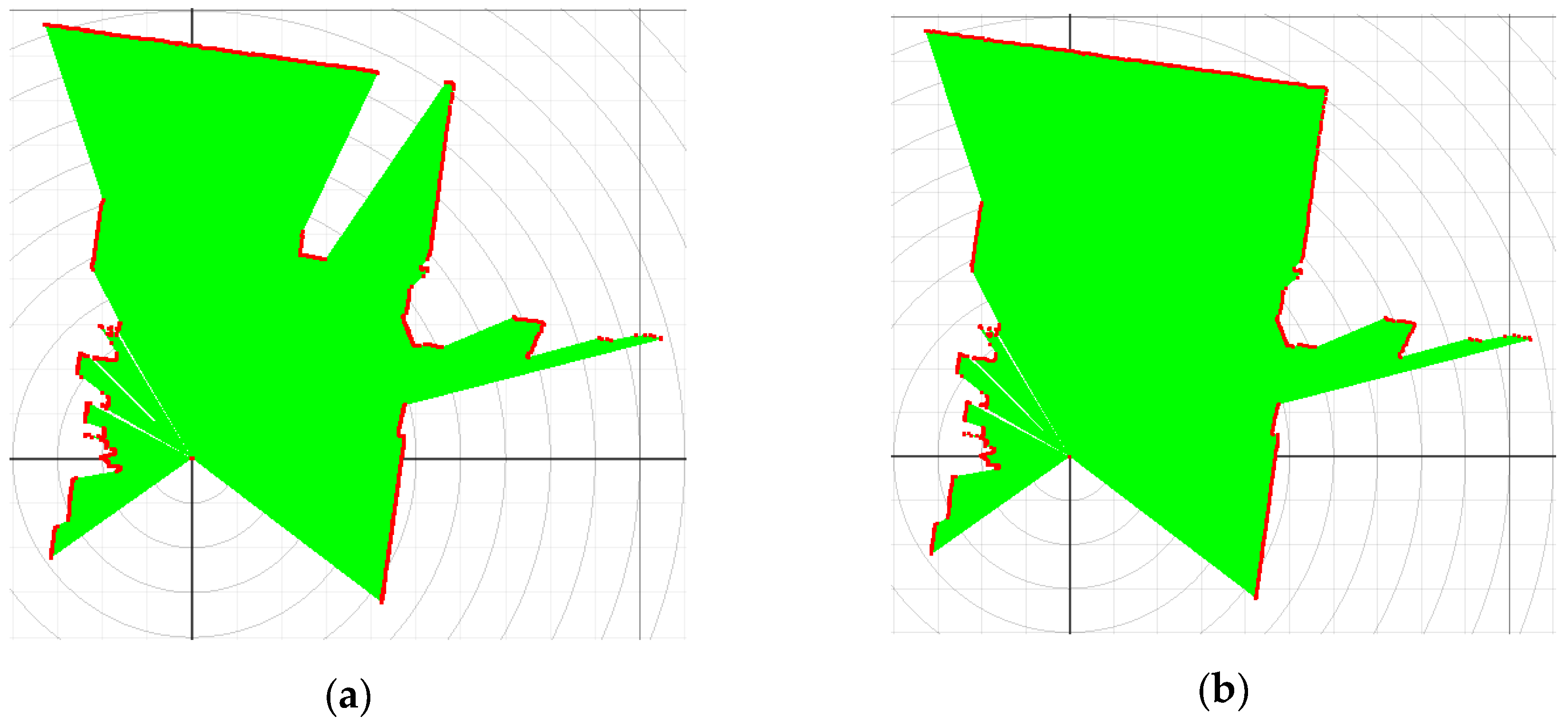

, which are likely incorrect correspondences. This rejection strategy is risky because

may be distorted by the greatly varying density distributions of point clouds. When a mobile platform moves forward in a long narrow corridor, point clouds tend to be quite dense near the sensor but sparse in more distant regions from the sensor. However, the object point clouds cannot be moved closer to the reference point clouds because of the absence of an initial pose from the odometer. The dense points that are distributed within the corridor (rectangle 2 in

Figure 10a) can hardly find actual correspondence points and are likely to establish incorrect correspondences with minor distances, which are unhelpful but are in the majority. The sparse points that are distributed within the frontal obstacles (rectangle 1 in

Figure 10a) are likely to establish correct correspondences with relatively large distances, which are vital to bring two point clouds closer but are in the minority. Therefore, directly rejecting correspondence pairs that have distances above

but that are vital for calculating the transformation, may cause potential local-minima problems. Fortunately, this risk is reduced by the resampling method in chapter 2.3, so

can effectively reject correspondences with extremely large distances and preserve vital correct correspondences (rectangle 1 in

Figure 10b).

3. Results

The experimental results are outlined in this section. We tested the improved MbICP algorithm with data from a mobile wheelchair platform that was equipped with a Hokuyo UTM-30LX laser range finder (Hokuyo Co. Ltd., Osaka, Japan). The Hokuyo UTM-30LX is a 2-D laser range finder with a 30 m effective measurement range, 270° field of view (angular resolution of 0.25°), and 40 Hz scanning rate. We designed two types of experiments to compare our algorithm with the original algorithm. The first experiment evaluated the properties of the algorithms by matching a pair of scans that partially overlapped. The second experiment evaluated the global algorithm performance with a run within our academic building.

3.1. Evaluation Method

The first experiment compared the accuracy and convergence of the improved MbICP algorithm with those of the original MbICP algorithm in matching partially overlapped scans. Before introducing more details regarding the experiment, we first explain the evaluation factors of the accuracy and convergence. Given two scans that were acquired in the same sensor location, we precisely know that the ground truth of the pose estimate is (0, 0, 0°). To quantitatively compare the algorithms’ accuracy, a random displacement is added to the initial pose estimate, and one scan is also roto-translated by this value. This transformed scan is treated as the object scan, while the other scan is treated as the reference scan. After matching, the pose estimation is determined and statistical analysis can be performed. The translational and rotational deviation between the pose estimation from the algorithm and the ground truth is used as the evaluative factor of the algorithm’s accuracy. The smaller the deviation, the more closely the object scan fits the reference scan and the better the accuracy of the algorithm. In addition, the number of iterations can intuitively represent the algorithm’s convergence.
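The evaluation protocol can be sketched as follows, assuming that a pose is written as (x, y, angle in degrees) and that the deviation is reported per component. The function names are hypothetical and serve only to illustrate the roto-translation of a scan and the comparison with the ground truth.

```python
import math

def roto_translate(scan, tx, ty, theta_deg):
    """Rotate each 2D point by theta_deg about the origin, then translate by (tx, ty)."""
    th = math.radians(theta_deg)
    c, s = math.cos(th), math.sin(th)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in scan]

def pose_deviation(estimated, ground_truth=(0.0, 0.0, 0.0)):
    """Absolute per-component deviation (dx, dy, dtheta in degrees) of a pose estimate."""
    ex, ey, eth = estimated
    gx, gy, gth = ground_truth
    # Wrap the angular difference into [-180, 180) before taking its magnitude.
    d_theta = (eth - gth + 180.0) % 360.0 - 180.0
    return abs(ex - gx), abs(ey - gy), abs(d_theta)
```

An object scan for a trial could then be produced with, for instance, `roto_translate(scan, 500.0, 500.0, 15.0)` for coordinates in millimeters, and the pose recovered by the matcher compared with the known displacement through `pose_deviation`.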

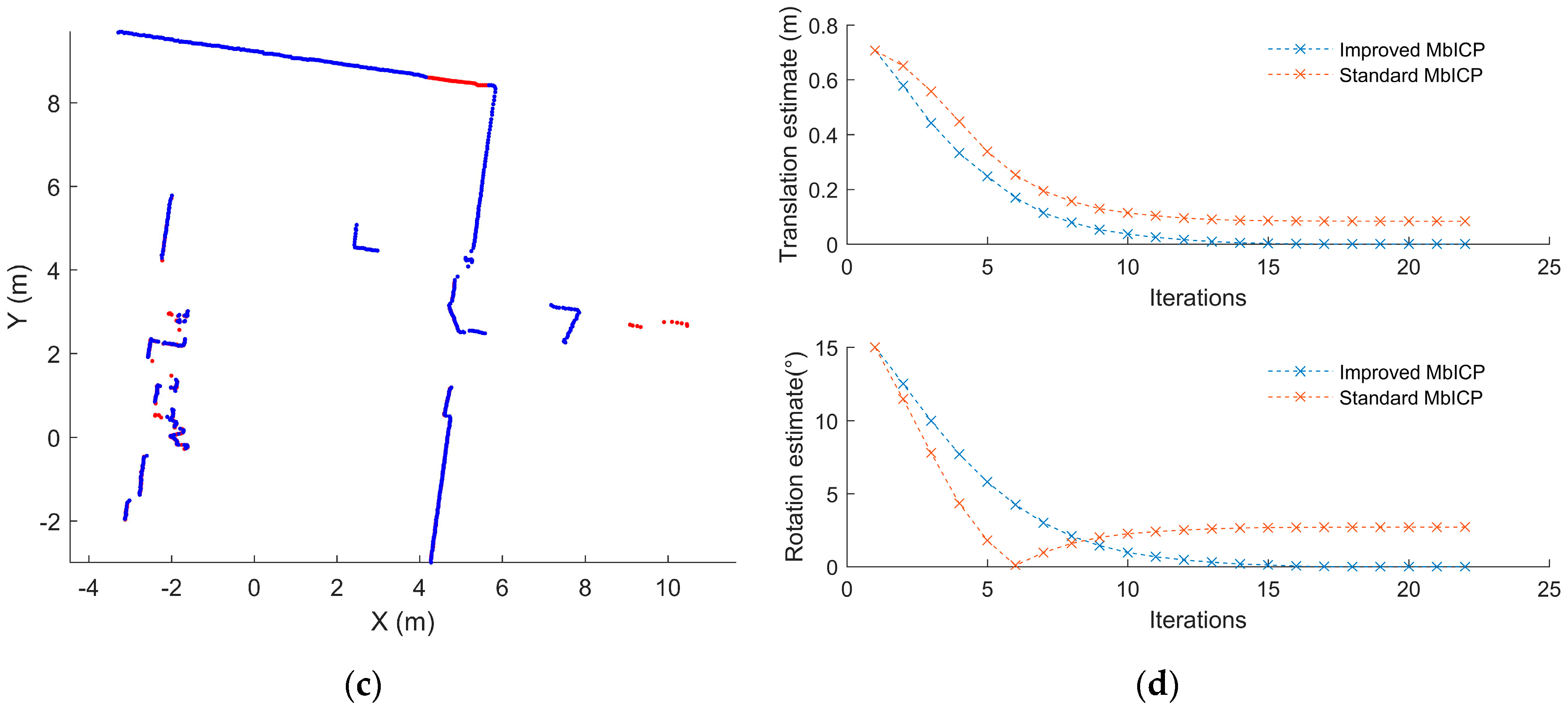

We conducted the first experiment by matching two partially overlapping scans with the improved algorithm and standard algorithm. To obtain experimental scans, we recorded data with the UTM-30LX laser range finder in a corner by artificially adding or removing a trashcan in the field of view without moving the sensor. In this way, we acquired a pair of scans that partially overlap: the scan with the trashcan is shown in Figure 11a and the scan without it is shown in Figure 11b. The scan with the trashcan was roto-translated with a given transformation (500 mm, 500 mm, 15°) and then used as the object scan, while the scan without the trashcan was treated as the reference scan (as shown in Figure 12a). The matching result from the standard algorithm is depicted in Figure 12b, which is far from satisfactory because of the obvious deviation between the two scans. The deviation between the estimated pose and the ground truth was (75.75 mm, 34.69 mm, 2.71°), demonstrating poor accuracy. However, the matching result from the improved algorithm was relatively satisfactory because the two scans closely matched (as shown in Figure 12c). The deviation between the estimated pose and ground truth was nearly zero, demonstrating high accuracy. The improved algorithm also exhibited a faster convergence rate (18 iterations) because of the resampling step, while the standard algorithm satisfied the convergence condition after 22 iterations (Figure 12d).

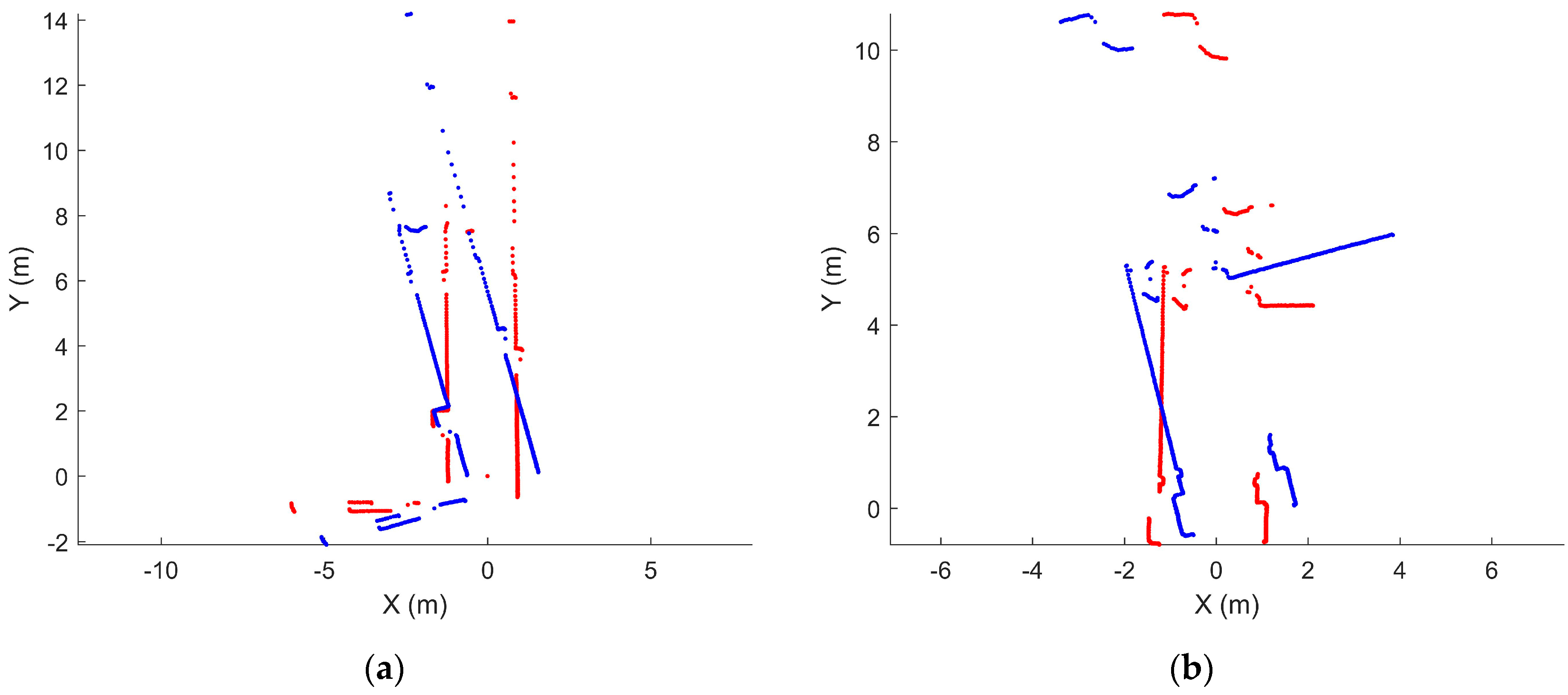

Another group of experiments was conducted to quantitatively evaluate the algorithms’ accuracy in matching scans with different overlapping ratios. Given an overlapping percentage η% and two identical scans, one scan had (100 − η)% of its points artificially removed, while the other scan remained unchanged. Thus, we obtained pairs of scans with an overlapping percentage η, which varied from 60% to 100%. These scans were acquired under different scenarios, such as stairs and corners, where partial overlapping frequently occurs because of range holes and rotation, and corridors, where dynamic obstacles are common (as shown in Figure 13). We repeated each matching experiment 10 times with these two algorithms for each overlapping percentage and each scenario (a total of 400 runs), and the common parameters were identical.

We first discuss the results in terms of robustness. A scan match was considered a failure when the error of the solution was larger than 0.1 m in translation and 3.14° in rotation. Solutions with an error below the threshold were used in a follow-up precision analysis. True Positives (converged to the right solution), False Positives (converged to an incorrect solution), True Negatives (not converged but the solution is correct) and False Negatives (not converged and the solution is incorrect) are frequently used robustness characteristics.
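The four outcome classes can be expressed as a small Python function. This is a sketch under one reading of the failure criterion, namely that a solution counts as correct only when both the translational and the rotational errors stay within their thresholds; the function name and argument names are hypothetical.

```python
def classify_match(converged, trans_err_m, rot_err_deg, max_trans=0.1, max_rot=3.14):
    """Label one scan-matching run as TP, FP, TN or FN.

    Assumption: a solution is correct when the translational error is at most
    max_trans (meters) and the rotational error is at most max_rot (degrees).
    """
    correct = trans_err_m <= max_trans and rot_err_deg <= max_rot
    if converged:
        return "TP" if correct else "FP"
    return "TN" if correct else "FN"
```

Counting these labels over the repeated runs for each overlapping percentage yields the kind of robustness summary reported for the two algorithms.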

For the standard algorithm, the true positives decreased and the false positives increased as the overlapping percentage decreased, as shown in Table 1. Thus, the robustness of the standard algorithm was influenced by non-overlapping areas between scans. The improved algorithm showed good robustness because all the solutions were true positives despite a decrease in the overlapping percentage, as shown in Table 2.

We calculated the deviation between the estimated pose and ground truth to indicate the precision (only using True Positives), as shown in Table 3. Both algorithms were accurate when two scans perfectly overlapped but experienced increasing deviation in their translation and rotation as the overlap ratio decreased. However, the improved algorithm was more accurate than the standard algorithm when the scans partially overlapped. Moreover, a decline in the overlap ratio substantially decreased the performance of the standard algorithm but had no significant effect on the improved algorithm.

3.2. Real-World Applications

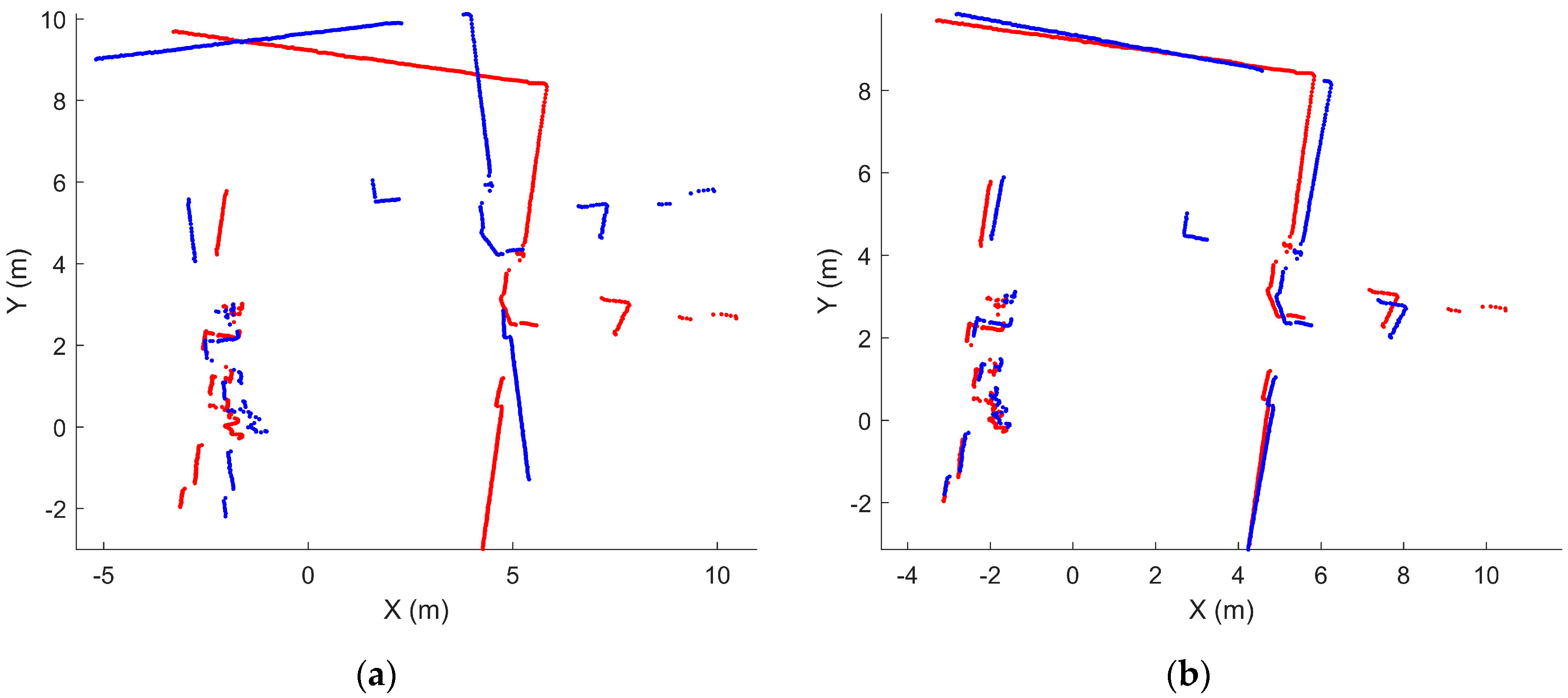

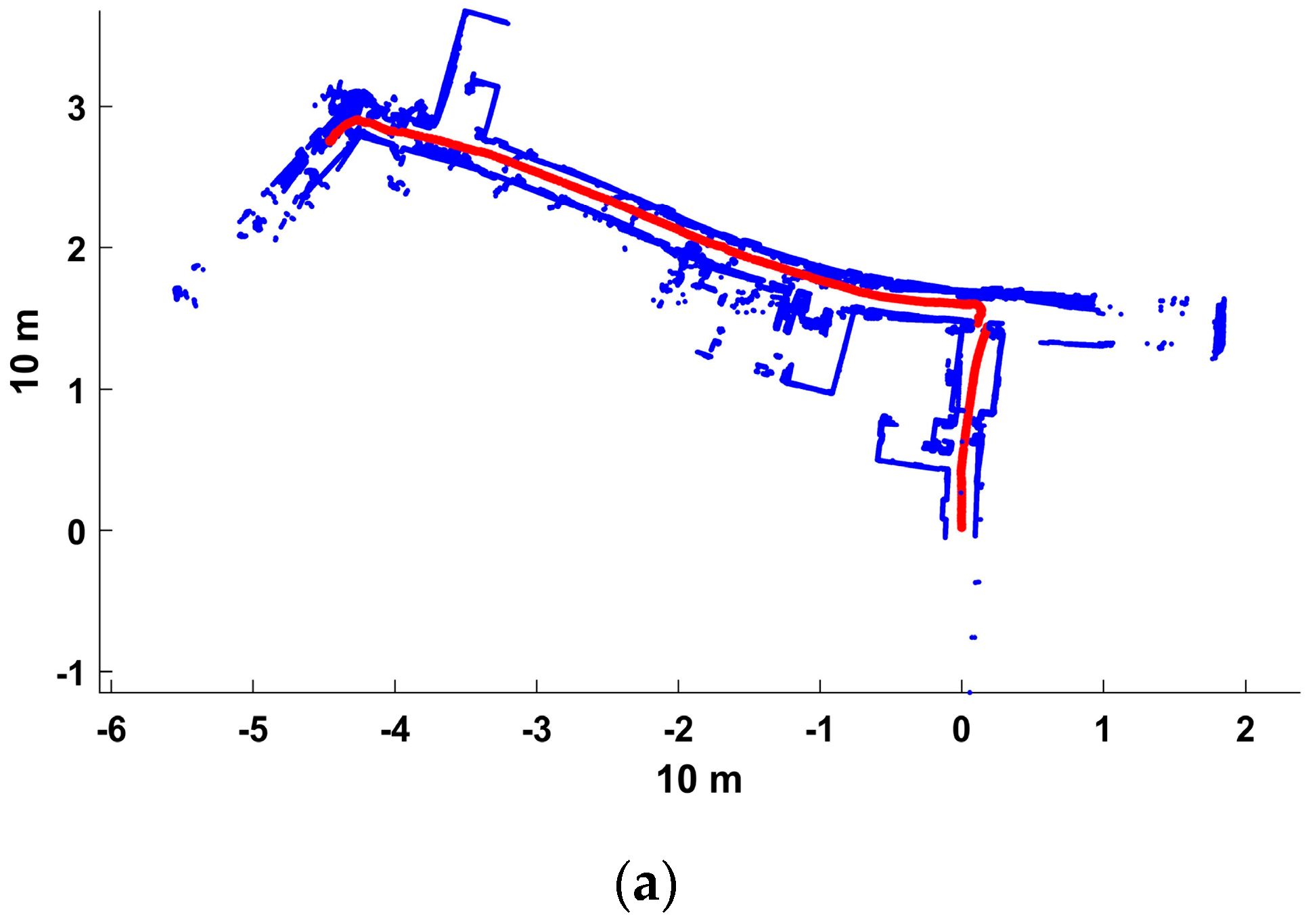

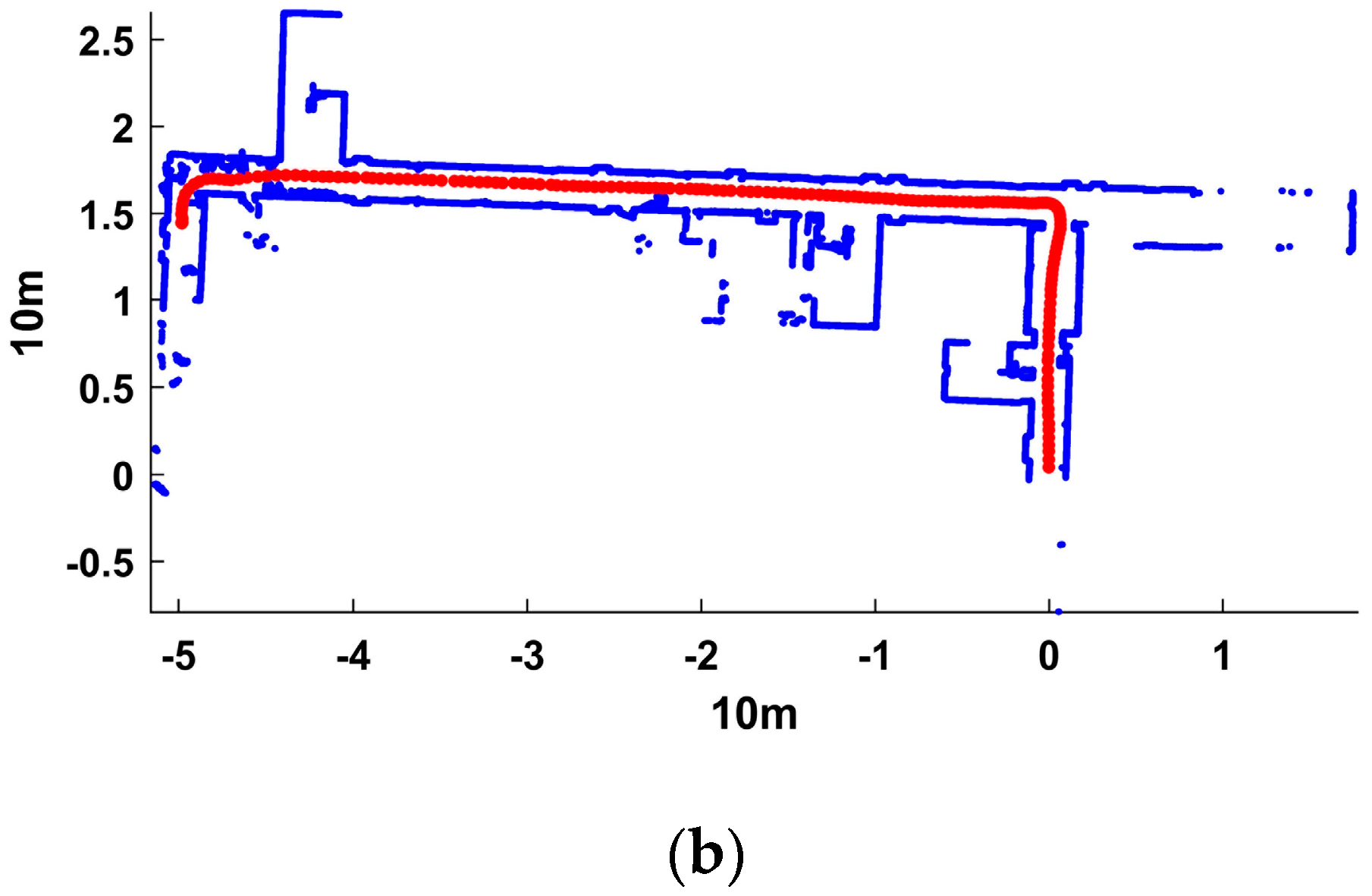

The first experiment was useful because it allows for matching real-world scans without requiring the ground truth, but generating scans with a specific overlapping percentage by randomly removing a certain number of points is risky and does not always conform to reality. Therefore, we conducted a second experiment, which involved a run with a mobile robot on the 4th floor of the School of Resource and Environment Science Building, Wuhan University. This floor consisted of three narrow corridors and two corners, forming a U-shaped layout. Stationary structures, such as offices, stairs and toilets, were distributed along the corridors. In addition, dynamic obstacles such as umbrellas and walking people were present in this scenario. We applied the two algorithms to match each pair of successive scans and output the transformed point clouds and a trajectory. This experiment was challenging because the overlapping percentage of successive scans from rotation and range holes could not be estimated in advance.

Figure 14 depicts the matching results from the two algorithms. Obtaining the exact transformation data for each step during the experiment was difficult [1]. The ground truth and numeric values are thus actually unavailable in real-world applications because of a lack of information regarding actual robot poses, which is commonly the case in current relevant studies [1,10]. The results of the improved algorithm were clearly better than those of the standard algorithm because the former could adequately fit successive scans and the generated point clouds accurately presented the indoor structure. In contrast, the matching results from the standard algorithm suffered from several obvious mismatches around stairs and corners. Thus, the behavior of the improved algorithm was generally better than that of the standard algorithm under more realistic conditions.

3.3. Discussion

The improved MbICP algorithm is limited in that it may fall into local minima under specific scenarios, such as a long, narrow corridor with few frontal structures, indicating the need for further improvement. Frontal obstacles are vital to bring two clouds together because their correspondences play an important role in calculating the transformation. As seen in Figure 15a, the left scan was captured in a long narrow corridor (corresponding to rectangle 2 in Figure 15b) where there are few frontal obstacles, while the right scan was captured in a long narrow corridor (corresponding to rectangle 1 in Figure 15b) where there are several frontal obstacles. As seen in Figure 15b, the matching result was satisfactory in rectangle 1 but demonstrated a “shortening” effect in rectangle 2. Bringing the two point clouds closer was difficult because of the lack of frontal obstacles and the resulting shortage of correct correspondences, which are vital for obtaining an optimal transformation in each iteration, thus causing local-minima problems.

4. Conclusions

This paper presented an improved MbICP algorithm for estimating robot motion that overcomes the original method’s inability to match partially overlapping 2D scans. A resampling step was executed to smooth the greatly varying density distributions of point clouds, which could accelerate the iteration process and protect the follow-up rejection method from becoming distorted. Then, correspondences were established by an improved procedure that combined the simplicity of the point-to-point metric and accuracy of the point-to-line metric. Finally, a rejection threshold that was based on a MAD-from-median approach was used to discard correspondences with large distances, which were likely incorrect correspondences. The results of the first experiment demonstrated that both algorithms were disturbed by a decrease in the overlapping percentage, but the improved algorithm still exhibited better accuracy and robustness. The results of the second experiment indicated that the improved algorithm is valid in practice and exhibits better performance than the standard algorithm.

In future work, our method needs further improvement to be applied under more scenarios, including long and narrow corridors where frontal obstacles are rare. Utilizing geometric features of the point cloud, such as curvature, normal and density, is a possible solution. In addition, how our method could be extended to the scan matching of 3D point clouds needs further consideration.