1. Introduction

Personal devices, particularly smartphones, have become ubiquitous across society and are continuously carried as high-performance devices equipped with advanced sensing capabilities, including speech recognition. Given that such devices are routinely used for communication and information processing in everyday life, a socio-technical system in which individual smartphones continuously analyze ambient environmental audio (such as conversations or phone calls) in order to enable early detection and reporting of serious crimes or terrorist activities can be regarded as having reached a stage where its feasibility can be realistically examined from a technical perspective. In other words, this concept is no longer a purely speculative vision of the future, but is increasingly becoming an object of institutional consideration grounded in the extension of existing technologies.

At the same time, in recent years, advanced information-gathering techniques such as communication interception and device-level monitoring have been deployed in practice in many countries for the purposes of counterterrorism and serious crime investigations. However, there have also been numerous cases in which such technologies are reported to have been operated opaquely by intelligence agencies and used for political surveillance or abuse of authority. This suggests that the core issue lies not in the mere existence of surveillance technologies themselves, but in the institutional conditions under which they are operated.

Based on this recognition, this paper structures its discussion around two premises (pillars). The first is the recognition that personal devices, particularly smartphones, possess advanced sensing capabilities and have the potential to function as an information infrastructure that effectively covers society as a whole (Pillar 1). The second is the requirement that, for such technologies to be justified in democratic societies, not only effectiveness in public safety but also privacy protection, transparency, and institutional accountability must be simultaneously ensured (Pillar 2).

The purpose of this paper is to present the concept of utilizing smartphones as public safety infrastructure, while at the same time clarifying under what forms of institutional design this concept could be utilized with social acceptance. As a concrete analytical framework for this examination, this study proposes a governance architecture centered on the Verifiable Record of AI Output (VRAIO).

1.1. Maturity and Applications of Speech Recognition AI

This section substantiates the first pillar outlined in the preceding section by reviewing, on the basis of existing studies, the maturity of speech recognition technologies embedded in smartphone AI and home AI systems, as well as the scope of their applications.

Speech recognition technologies embedded in smartphone AI and home AI systems have already been widely commercialized. Major voice assistants (Apple Siri, Google Assistant, and Amazon Alexa) have demonstrated high recognition performance even in specialized domains such as the comprehension of medication names, indicating their suitability for practical use [1]. In addition, systematic evaluations based on ISO 9241-11 have shown that existing commercial systems have reached a mature stage in which they can adapt to users’ operational environments [2]. Furthermore, studies involving older adults have confirmed active use of everyday functions and high levels of user satisfaction, providing evidence that speech recognition AI has become established in real-world society across generations [3].

Moreover, speech recognition performance has steadily improved in recent years. Studies that organize the UI design, functionality, and smart-home applications of voice assistants equipped with TinyML have reported both technological progress (including improved usability on low-resource devices and enhanced robustness under noisy conditions) and challenges that still remain [4]. In addition, research developing and validating quantitative indicators of collaboration intensity between users and voice assistants has shown that recent systems are capable of achieving high accuracy and responsiveness in bidirectional interactions with users [5].

Building on these speech recognition technologies, a wide variety of applications have emerged. There are studies that systematically review the applications of voice assistants and smart speakers and highlight new possibilities arising from their integration with IoT systems and intuitive conversational technologies [6]. In the medical domain, research has also been reported in which speech abnormalities at the prodromal stage of Parkinson’s disease were detected from smartphone call audio, achieving high classification accuracy in real-world environments [7]. Taken together, these examples demonstrate that speech recognition AI has been widely deployed across domains ranging from daily life support to medical applications.

1.2. Privacy Risks and Institutional Responses

The spread of speech recognition technology in smartphone and home AI systems has enhanced convenience, but at the same time has heightened concerns about privacy infringement. A survey conducted in the United States, the Netherlands, and Poland found that many users believe their devices are “listening” to their conversations, and that this perception is associated with experiences of conversation-related advertising and with conspiratorial tendencies [8].

A systematic survey of security and privacy threats related to personal voice assistants has identified risks such as attacks via the acoustic channel and unauthorized data collection [9]. Furthermore, multiple comprehensive reviews have been published on information leakage in voice assistants, risks associated with cloud processing, and the limitations of existing countermeasures from the perspective of the General Data Protection Regulation (GDPR) [10,11]. In addition, empirical analyses have revealed inconsistencies and deficiencies in privacy policies for Alexa skills [12], and other studies have pointed out new ethical challenges associated with the integration of large language models (LLMs) [13].

Analyses based on behavioral theory have confirmed a tendency for trust and convenience to outweigh privacy concerns [14], while security analyses of commercial smart assistants have revealed specific issues such as vulnerabilities to voice replay attacks and deficiencies in authentication in multi-user environments [15]. Moreover, user surveys of smart speaker owners have shown that current privacy settings are inadequate and that greater transparency and user control are needed [16]. Collectively, these findings indicate that as speech recognition AI develops, privacy protection is increasingly recognized as a critical issue in various contexts.

The use of speech recognition technology in smartphone and home AI systems makes the design of institutional frameworks and legal mechanisms for privacy protection an important challenge. One study proposed a framework for evaluating AI surveillance technologies across the dimensions of function, consent, and society, emphasizing the importance of ensuring transparency, privacy, and autonomy from a civil society perspective [17]. Another presented a comprehensive framework that addresses data security and privacy protection in AI from policy, technical, and ethical perspectives, identifying the limitations of existing measures and directions for future improvement [18]. In addition, analyses have examined the commodification of personal data brought about by AI surveillance, positioning the strengthening of informational control rights under EU law as a corrective measure [19], and reports have organized human rights concerns such as discrimination, privacy infringement, and unclear accountability arising from AI development, stressing the need for continuous legal evaluation and flexible responses [20].

Research has also proposed an integrated framework for implementing both Fundamental Rights Impact Assessments (FRIAs) and Data Protection Impact Assessments (DPIAs), with a focus on the interplay between the EU AI Act and GDPR [21]. Moreover, systematic reviews of privacy information disclosure in AI-integrated IoT environments have identified key themes such as trust, vulnerability, regulation, and user behavior [22], and comprehensive reviews of research on privacy, security, and trust in conversational AI have organized the challenges in constructing integrated evaluation metrics [23]. These findings indicate that, in the social implementation of speech recognition AI, not only technical measures but also legal and ethical standards are indispensable.

1.3. Crime Precursor Detection and Big Data

At present, there are only limited cases in which speech recognition technology embedded in smartphone or home AI systems has been used directly to detect crimes or their precursors. However, insights from AI-based surveillance technologies and from institutional design and technical applications in the field of public safety are highly relevant to the development of this area. For example, one study proposed the Verifiable Record of AI Output (VRAIO) framework as a means to reconcile privacy protection with social acceptability in public-space monitoring via AI-connected cameras, thereby providing a framework for the proper use of surveillance technologies [24]. Another report analyzed the technical realities of lawful interception using existing communication infrastructure and vulnerabilities, and organized the legal and technical issues involved in criminal investigations and intelligence gathering [25]. Such knowledge provides an important foundation for considering the institutional design and technical direction of crime precursor detection using speech recognition AI.

Detection of crimes and their precursors through social media analysis has also advanced as an important research field. One study proposed a framework for extracting posts containing criminal slang or jargon from Twitter and classifying their intent using machine learning [26]. By constructing an ontology of crime-related expressions and analyzing more than 88 million tweets, the study demonstrated the effectiveness of this method. These findings provide a technological foundation for the early detection of indications of criminal planning or illegal activity from the vast volume of information on social media, and serve as supporting evidence for the potential effectiveness of continuous monitoring and analysis of ambient audio by smartphones in detecting and reporting crimes or terrorist plans and incidents.

Information obtained from smartphone AI, home AI, and social media is rapidly becoming big data, and its use has significant social, political, and technological implications. Reports on China’s nationwide surveillance initiative, the “Sharp Eyes” program, have described the reality of integrating data and video monitoring that covers nearly 100% of public spaces [27]. Another study analyzed the formation of China’s National Integrated Big Data Center system, clarifying the role of large-scale data integration in local governance and social management [28]. Furthermore, systematic reviews of privacy-preserving data mining techniques have organized technical approaches aimed at balancing accuracy and anonymity in large-scale data analysis [29]. These findings indicate that when information generated by speech recognition and social media analysis is collected and used as big data, a comprehensive response encompassing ethical, institutional, and technical considerations is indispensable.

Speech recognition technology embedded in smartphones and home AI systems has also become deeply integrated into users’ daily lives. A systematic review of literature on the use of voice assistants in households has shown that such devices are now permanently installed in living environments and have become part of daily routines [30]. The constant portability of smartphones has also been utilized for health monitoring and medical applications. For example, one study demonstrated that combining a smartphone with a smartwatch for year-long in-home monitoring can enable highly sensitive assessment of the progression of early-stage Parkinson’s disease, an example of passive monitoring that leverages the “always-carried” nature of smartphones [31]. In addition, surveys conducted between 2018 and 2024 on children and adolescents have revealed an increase in prolonged smartphone use and a trend toward constant carrying, with analyses of the impact on health and quality of life [32].

Based on the above body of prior research, it cannot be denied that empirical cases directly demonstrating the detection of crimes or their precursors using speech recognition technologies embedded in smartphone AI or home AI systems remain limited at present. However, in general, if conversational content can be understood with high accuracy, it is conceptually reasonable to infer whether intentions to commit crimes, discussions of criminal planning, or consultations related to illegal activities are taking place. The concept proposed in this paper is grounded in technical and cognitive premises that are consistent with existing studies, and quantitative validation of its effectiveness and pilot implementations are positioned as important topics for the next stage of research toward societal deployment.

It should be noted that the sources constituting big data extend well beyond the audio information captured by smartphone-embedded microphones, which is the primary focus of this study. In principle, such sources include emails, SNS communications, sensor signals from IoT devices, video footage from street cameras, and sensor data embedded in autonomous vehicles, among many others. In this paper, priority is given to the practical feasibility of societal implementation and to maintaining conceptual clarity, and the discussion therefore focuses on ambient environmental audio acquired through smartphone microphones.

Nevertheless, efforts to leverage big data generated from these diverse sources to enhance societal safety and social efficiency are expected to grow in importance. In such contexts, governance questions—namely, which information may be used, under what conditions, and in what socially acceptable manner—arise in a common and unavoidable form. The framework proposed in this study is positioned as a foundational element for institutional design that anticipates societal implementation across multiple data sources.

1.4. Core Issue: The Necessity of Perfect Privacy Protection

From the above background and previous studies, two points can be confirmed:

- (1) Smartphone AI is capable of recognizing and analyzing external audio.

- (2) The vast majority of people carry smartphones at all times and use them routinely as a means of communication.

These facts mean that smartphones could serve as an extremely powerful social safety infrastructure capable of detecting and reporting early signs of serious crimes or terrorist acts. In other words, the reality that most members of society carry smartphones constantly indicates that, from a technical standpoint, an information infrastructure capable of monitoring events throughout society is already in place.

Given this reality, the following social questions are unavoidable:

- (a) Should there be a legal obligation to notify the police or other authorities when serious crimes or terrorist acts are detected?

- (b) Should AI be legally required to actively attempt to detect serious crimes or terrorist acts?

- (c) To what extent should privacy protection be guaranteed, and is such protection in fact feasible?

For the obligations in (a) and (b) to be ethically justified, privacy as defined in (c) must be protected at an extremely high level. This paper places particular emphasis on the following two conditions for privacy protection: first, that conversations unrelated to serious crimes or terrorism are reliably deleted and never leaked outside the smartphone; and second, that these strict protective measures are widely known to the public so that citizens do not experience anxiety or stress in their daily lives.

The Smartphone as Societal Safety Guard concept presented here aims to achieve both public safety and privacy protection, with the following two elements at its core:

- (A) Utilizing smartphones’ speech recognition capabilities to detect and report emergencies.

- (B) Fully protecting privacy and earning complete societal trust in that protection.

In particular, achieving (B) is a prerequisite for social acceptance in democratic states; without it, deployment would provoke strong public resistance and become effectively impossible. In other words, the feasibility of achieving “perfect privacy protection” is the single most critical factor determining the success or failure of this concept.

In reality, daily OS updates are a “black box,” and there is always the possibility that functionality changes or latent risks could be introduced without the user’s knowledge. The risk that a “permanent eavesdropping and reporting function” for purposes entirely lacking social approval could be surreptitiously embedded cannot be eliminated in theory. Therefore, to prevent such misuse risks, it is essential to scrutinize both the technical potential and institutional safeguards, and to build a shared societal understanding.

In conclusion, if “perfect privacy protection” can be established both technically and institutionally, the primary barrier to social deployment would be removed, and this massive information infrastructure could be instantly disseminated in response to public demand, dramatically improving public safety. Conversely, if it is determined that such protection is not currently achievable and the implementation of the Smartphone as Societal Safety Guard is abandoned, it would mean relinquishing “perfect privacy protection” for other forms of AI use as well, thereby forfeiting the diverse benefits they could bring. From this perspective, “perfect privacy protection” is a critical issue that democratic states must commit to resolving.

2. Possibilities and Challenges of Always-On Monitoring by Smartphone AI

This chapter does not present an implemented system or an empirical experiment, but introduces a conceptual reference architecture to clarify technical feasibility and institutional design requirements. It corresponds to the first of the two pillars presented in this paper, namely, the recognition that personal devices—particularly smartphones—are already becoming a sensing infrastructure capable of covering society through combinations of existing technologies. Rather than proposing new technologies, this chapter substantiates this pillar by demonstrating the maturity of current technologies and illustrating, through concrete examples, how their integration enables such capabilities.

2.1. Example Configuration of a Smartphone AI Application with “Always-On Monitoring and Reporting” Functionality

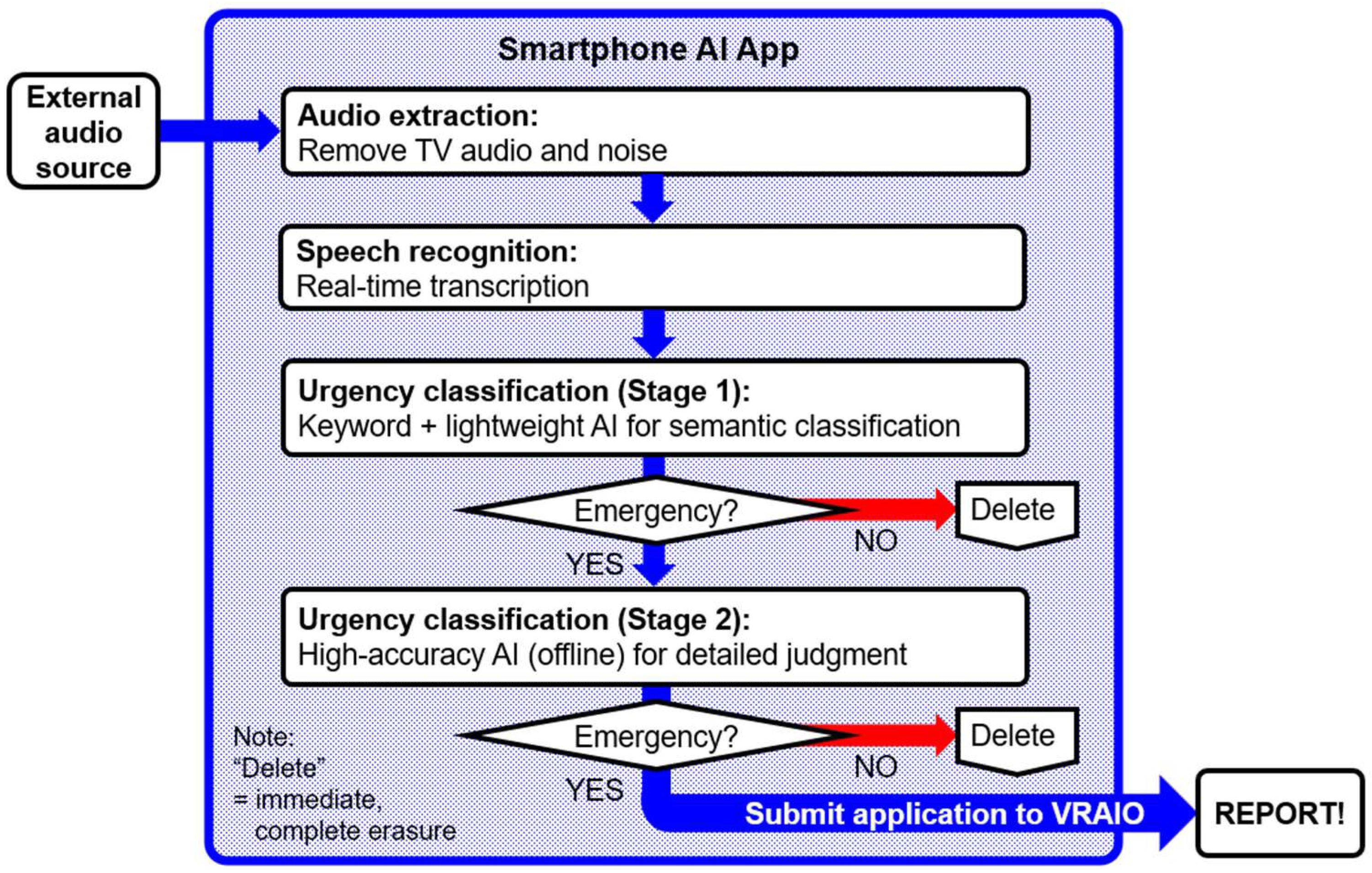

Figure 1 shows a simplified prototype configuration of a smartphone AI application equipped with an “always-on monitoring and reporting” function. This configuration assumes prototype development using free applications or free libraries, with the expectation that these would be replaced by purpose-built applications or functions for practical deployment. In this paper, we base our discussion of feasibility and challenges on this prototype assumption.

The application operates offline, performing audio extraction, recognition, and a two-stage urgency classification. Only data judged as “potentially reportable” is output via the VRAIO system. Any information not deemed urgent is immediately and completely deleted, with no output to the outside. The entire application is enclosed within the “egress firewall” shown in Figure 1, ensuring rigorous privacy protection and realizing “AI monitoring dedicated solely to emergency reporting.”

From an institutional standpoint, the following are assumed: (1) the application cannot be deleted or disabled (it can be stopped only by turning off the device); (2) installation is mandatory on devices used domestically; and (3) implementation and permissions are equivalent to those of a pre-installed OS application. Thus, in practical deployment, deep OS-level integration (persistent operation, high privileges, non-disablable) is envisaged. However, for the sake of simplifying discussion in this paper, it is treated as a “special-mandate application” logically separated from the OS.

The processing flow is as follows. First, audio extraction is performed to remove TV audio and noise from the external audio captured by the smartphone’s built-in microphone. In this stage, various methods may be used to achieve robust detection under low-SNR conditions, including machine learning-based voice activity detection (VAD), signal processing-based noise suppression, and beamforming using multi-microphone arrays. For example, VAD methods that leverage contextual information and multi-resolution features to improve adaptability to unknown environments [33], and mobile speech recognition frameworks that ensure noise robustness through environmental feature extraction and reference table updates [34], have been reported.
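As a minimal illustration of the audio extraction step, the sketch below implements plain energy-threshold voice activity detection. It is a deliberately simple stand-in, not the learning-based methods reported in [33,34]; the function names, the 160-sample frame (10 ms at an assumed 16 kHz sampling rate), and the threshold of 0.1 are assumptions chosen only for this example.

```python
import math
from typing import List, Sequence


def frame_rms(samples: Sequence[float]) -> float:
    """Root-mean-square amplitude of one frame (0.0 for an empty frame)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def energy_vad(signal: Sequence[float], frame_len: int = 160,
               threshold: float = 0.1) -> List[bool]:
    """Return one flag per full frame: True if the frame's RMS reaches the threshold.

    frame_len and threshold are illustrative assumptions, not tuned values.
    """
    flags = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        flags.append(frame_rms(frame) >= threshold)
    return flags


# Synthetic input: silence, a 440 Hz tone standing in for speech, silence.
silence = [0.0] * 160
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(160)]
flags = energy_vad(silence + tone + silence)
```

A fixed energy threshold cannot separate speech from TV audio or loud noise, which is why the methods cited above rely on contextual and multi-resolution features instead.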

Next, real-time transcription is performed using an offline speech recognition engine (e.g., Vosk). While speech recognition can be implemented in various forms (cloud-connected, hybrid, etc.), this system is designed as a completely offline AI application that operates entirely within the smartphone. In a fully offline design, there is no pathway by which audio data or intermediate transcription data could be sent to an external server, thereby eliminating the risk of leakage via communication channels or external storage. Furthermore, because processing is completed entirely within the device, it is unaffected by network latency or connectivity instability, minimizing response time from speech input to result display [35]. Advances in high-performance edge AI have made it possible to achieve high-accuracy speech recognition in multiple languages even under offline conditions.

The transcribed text is then passed to the first stage of urgency classification. This classification may employ lightweight versions of large language models (LLMs), such as computationally efficient Transformer-based models [36]; rule-based classifiers (using predefined keywords or pattern matching); or statistical models (e.g., Naïve Bayes classifiers, logistic regression), selected according to required accuracy and computational resources. Statistical models are deployed as pre-trained finalized models, with no on-device training; they are used solely for inference. This approach minimizes computational load on the device while enabling instant probability estimation of urgency from the input text, thus balancing privacy protection with real-time performance.
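To make the inference-only use of a statistical model concrete, the following sketch scores a transcript with a fixed logistic-regression-style model over keyword features. The vocabulary, weights, bias, and threshold are hypothetical values set by hand for illustration; a deployed model would ship with parameters learned offline.

```python
import math
import re
from typing import Dict

# Hypothetical, hand-set parameters standing in for a pre-trained, frozen model.
WEIGHTS: Dict[str, float] = {"help": 2.0, "gun": 2.5, "bomb": 3.0, "hostage": 3.0}
BIAS = -3.0


def urgency_probability(text: str) -> float:
    """Inference only: logistic function of the summed keyword weights plus bias."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = BIAS + sum(WEIGHTS.get(tok, 0.0) for tok in tokens)
    return 1.0 / (1.0 + math.exp(-score))


def first_stage_is_urgent(text: str, threshold: float = 0.5) -> bool:
    """Flag a transcript for second-stage review (threshold is an assumption)."""
    return urgency_probability(text) >= threshold
```

With these example values, "Help! He has a gun" is passed on to the second stage, whereas "Can you help me find my keys?" is not; no parameter is updated at any point.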

If classified as urgent in the first stage, the data proceeds to the second stage for detailed judgment using a high-accuracy AI model (e.g., a model executed with llama.cpp configured for offline operation). Model selection at this stage is guided during final application development by requirements for accuracy, explainability (including evaluation based on Co-12 characteristics [37]), and regulatory compliance.

If the second stage also classifies the case as urgent, a reporting process is triggered via the Verifiable Record of AI Output (VRAIO) system. If urgency is denied at any stage, both audio and text data are immediately and completely deleted, with no output to the outside.
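The control flow described above can be sketched as a single function in which the second stage runs only when the first stage flags the text, and nothing is returned unless both stages agree. The names `Report` and `process_utterance`, the stub classifiers, and the 0.9 threshold are assumptions for illustration; secure erasure of buffers and the VRAIO output path itself are not modeled here.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Report:
    """Hypothetical payload handed to the reporting path when both stages agree."""
    text: str
    stage2_score: float


def process_utterance(text: str,
                      stage1: Callable[[str], bool],
                      stage2: Callable[[str], float],
                      stage2_threshold: float = 0.9) -> Optional[Report]:
    """Return a Report only if both stages judge the text urgent, else None."""
    if not stage1(text):
        return None  # discarded without invoking the larger model
    score = stage2(text)
    if score < stage2_threshold:
        return None  # urgency denied at the second stage
    return Report(text=text, stage2_score=score)


# Stub classifiers (assumptions) used only to exercise the control flow.
stage2_calls: List[str] = []


def stub_stage1(text: str) -> bool:
    return "bomb" in text.lower()


def stub_stage2(text: str) -> float:
    stage2_calls.append(text)
    return 0.95 if "tonight" in text.lower() else 0.2


ignored = process_utterance("What is for dinner?", stub_stage1, stub_stage2)
rejected = process_utterance("That movie was a bomb", stub_stage1, stub_stage2)
reported = process_utterance("Plant the bomb tonight", stub_stage1, stub_stage2)
```

In this example only the third utterance yields a report, and the second-stage stub is called twice rather than three times, reflecting that the larger model is activated only on demand.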

This second-stage high-accuracy AI is conditionally feasible on current smartphones due to advances in quantization and model compression techniques [38]. In this system, a domain-specific model specialized for detecting indicators of emergencies such as crimes or terrorist activities is adopted, and computational load is reduced by intentionally constraining the vocabulary and decision space. No training is performed during operation; inference is strictly limited to on-device execution. Input data are either reported or discarded after classification and are immediately and completely deleted, thereby avoiding computational and I/O overhead associated with log storage or continual learning. This operational design allows device resources to be concentrated on inference, creating room, specifically for second-stage processing that does not assume continuous operation, to employ moderately larger models than in typical designs (e.g., models in the 3–4 B parameter range).

Here, the term “1 B-class” conventionally refers to models with approximately $10^{9}$ parameters, based on a scaling perspective that uses parameter count as the primary metric [39]. Recent studies have shown that activation-aware quantization and mobile-oriented optimization enable low-bit models (e.g., INT4) to be executed on NPUs, substantially reducing inference latency and power consumption [38]. Indeed, it has been reported that quantizing 3–4 B-class models to INT4 and leveraging NPU acceleration can achieve the throughput and energy efficiency required for event-driven analysis [40].
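A back-of-the-envelope calculation shows why low-bit quantization matters for the model sizes discussed here. The sketch below counts weight storage only, as parameters times bits per weight divided by eight; it ignores quantization metadata, activations, and key-value caches, so actual footprints are somewhat larger. The function name is an assumption, and gigabytes are taken as $10^{9}$ bytes.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes), weights only."""
    return num_params * bits_per_weight / 8 / 1e9


# Parameter counts follow the size classes mentioned in the text.
int4_3b = weight_memory_gb(3e9, 4)    # 3 B-class model at INT4
int4_4b = weight_memory_gb(4e9, 4)    # 4 B-class model at INT4
fp16_3b = weight_memory_gb(3e9, 16)   # same 3 B-class model at 16-bit precision
fp16_7b = weight_memory_gb(7e9, 16)   # 7 B-class model at 16-bit precision
```

Under these assumptions, a 3 B-class model needs roughly 1.5 GB for weights at INT4 versus 6 GB at 16-bit precision, while a 7 B-class model at 16-bit precision needs about 14 GB.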

Furthermore, by incorporating domain-specific encoder-based classifiers, inference pathways can be simplified, enabling a more lightweight and stable on-device inference configuration [41]. In contrast, continuously running general-purpose LLMs of 7 B parameters or larger on-device typically requires high-end hardware and sufficient memory bandwidth, imposing significant practical constraints [42]. In addition, for autoregressive inference, data movement tends to dominate computation, leading to pronounced constraints in power consumption and thermal dissipation [43]. Accordingly, the proposed framework adopts a two-stage architecture that combines a lightweight model for continuous monitoring with a high-accuracy model that is activated only when needed. Moreover, task-specific LLMs in the 0.3–1 B parameter range are expected to enable low-overhead practical deployment through knowledge distillation and quantization, thereby reducing device requirements and operational costs [41].

In the preceding discussion, this section has focused on the technical feasibility of deploying AI-based speech recognition applications using smartphone computational resources. However, it should be noted that practical deployment raises additional operational considerations that are not examined in detail here.

In particular, continuously running background AI applications may impose burdens on device owners, including increased energy consumption (e.g., higher electricity costs and reduced battery life), competition for computational resources with other applications, and potential long-term effects such as battery degradation or performance deterioration of the device. These factors may affect user comfort and acceptance.

Addressing such issues—through measures such as energy-efficient design, event-driven or conditional activation, and minimization of computational load—is essential for real-world deployment. While resolving these implementation-level optimizations is beyond the scope of this paper, their importance is explicitly acknowledged and should be examined through future system design efforts and social trials.

The features of this configuration are as follows:

- (1) Compatibility with VRAIO: Zero external communication under normal operation; limited output via VRAIO only when reporting.

- (2) Stepwise filtering: Two-stage classification ensures early and complete deletion of unnecessary data, optimizing both privacy and computational resource use.

- (3) Technical transparency: The roles of each processing stage (audio extraction, speech recognition, lightweight LLM, high-accuracy model) are clearly defined, facilitating external auditing and public explanation.

2.2. Expected Effects of the Always-On Monitoring and Reporting Function

This function has the potential to dramatically improve public safety by detecting and reporting victims’ calls for help (e.g., “Help!”), statements made by perpetrators or criminals, and even discussions or planning of crimes or terrorist acts. As a result, the following social effects can be expected:

General expected effects:

* Rapid rescue of victims when they are involved in a crime.

* Swift and reliable arrest of perpetrators after a crime, with a potential deterrent effect on criminal acts.

* Improved accuracy of systems for early detection and prevention of crimes or terrorist acts.

Specific examples of capabilities that could be realized:

* Detection and reporting of victims’ calls for help: Enables rapid rescue of victims.

* Detection and reporting of perpetrators’ statements: Allows immediate arrest following a crime.

* Detection and reporting of criminal consultations: Enables prevention through apprehension at the planning stage.

* Detection and reporting of terrorist consultations: Allows early identification and prevention of terrorist plots.

* Issuance of warnings: Displays a warning message to suspects, encouraging them to surrender or cease criminal activity.

Example warning message:

“A crime (or criminal plan) has been detected and reported. The location and all prior records have been sent to the authorities. Escape is impossible. Please wait for the arrival of police officers and follow the instructions on this device.”

Furthermore, among the data reported, information actually related to crimes or terrorism could, after anonymization, be used to improve models and extract patterns indicative of precursors. However, even in such cases, strict privacy protection measures are indispensable.

Future extensions could include not only recognition of ambient audio via the smartphone’s built-in microphone, but also analysis of “phone calls,” “emails,” and “SNS communications,” as well as integration with public surveillance systems such as street cameras. In this paper, discussion is focused first on the ambient audio recognition function using the built-in microphone.

It should be noted that while this function can bring enormous benefits to public safety, it also carries the risk of advancing a surveillance society. In democratic states, “perfect privacy protection” is indispensable for its acceptance. The next section examines the technical and institutional challenges that must be addressed to achieve this.

3. Six Technical and Institutional Challenges for Establishing “Perfect Privacy Protection”

This chapter aims to examine the second pillar presented in this paper, namely, the necessary conditions under which the concept of utilizing smartphones as public safety infrastructure can be justified within democratic societies. Specifically, it independently analyzes six challenges identified from technical and institutional perspectives. However, even if these six challenges were to be addressed at the technical and institutional levels, this alone would not suffice to establish the second pillar. Ultimately, the second pillar is realized only when the issue of societal acceptance is overcome—specifically, when citizens can understand the system and feel genuine reassurance at a fundamental level. Accordingly, this chapter systematically discusses the six challenges as prerequisite conditions for that outcome.

In the following sections, the six challenges required to realize the second pillar of “perfect privacy protection”—defined as a state that is secured both technically and institutionally and is also socially trusted—are examined by classifying them according to their nature into:

- (3.1) Challenges that can be addressed primarily through technical means,

- (3.2) Challenges that require institutional design as the main response, and

- (3.3) Challenges that remain unresolved at present.

3.1. Issues That Can Be Addressed Through Technology (Possible with Existing Technology Plus Improvements)

- (1) Preventing Unauthorized Access (External Attacks) by Non-Permitted Parties

Against access from unauthorized entities such as hackers, standard security measures are indispensable, including authentication systems, firewalls, and intrusion detection systems (IDSs). In recent years, IDSs have been enhanced through deep learning; for example, a hybrid IDS combining CNN-based feature extraction with a random forest classifier has achieved higher accuracy than conventional methods on benchmark datasets such as KDD99 and UNSW-NB15 [44]. In IoT and large-scale distributed network environments, addressing challenges such as spatio-temporal feature extraction and data imbalance is critical, and attempts to improve IDS performance have been reported using GAN-based feature generation and training data augmentation [45].

When implementing the Smartphone as Societal Safety Guard, these external attack countermeasures are necessary for all system elements involved in internal communications (between each smartphone and the central management AI described later), as well as in communications to external reporting targets (such as the police).

- (2) Removal or Reduction of Privacy Information from the Data Itself

Efforts to remove or reduce privacy information from the data itself fall under privacy-preserving data mining (PPDM) techniques, including data perturbation, anonymization (k-anonymity, differential privacy), and encryption (homomorphic encryption, secure multi-party computation). Data perturbation and anonymization remove or reduce privacy-related information from the data. In contrast, encryption enables computation and sharing while keeping the data content hidden, making re-identification by third parties more difficult. These techniques aim to prevent extraction of privacy information from input data while maintaining analytical utility; however, they inherently involve a trade-off between protection strength and analytical accuracy—stronger protection leads to lower accuracy.
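The trade-off between protection strength and accuracy can be made concrete with a minimal sketch of the Laplace mechanism for differential privacy. The function names (`laplace_noise`, `private_mean`, `mean_abs_error`), the value bounds, and the ε values are illustrative assumptions and are not part of the proposed system.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng):
    """Return an epsilon-differentially private mean of values clipped to [lower, upper]."""
    clipped = [min(max(v, lower), upper) for v in values]
    # Replacing one record changes the clipped mean by at most this much.
    sensitivity = (upper - lower) / len(clipped)
    true_mean = sum(clipped) / len(clipped)
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(values, lower, upper, epsilon, trials=2000, seed=0):
    """Average absolute error of private_mean over repeated trials."""
    rng = random.Random(seed)
    true_mean = sum(min(max(v, lower), upper) for v in values) / len(values)
    errors = (abs(private_mean(values, lower, upper, epsilon, rng) - true_mean)
              for _ in range(trials))
    return sum(errors) / trials
```

Calling `mean_abs_error` with a small ε (strong protection) and then with a large ε (weak protection) on the same values shows the error growing as protection is strengthened, which is the trade-off described above.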

In multidimensional data publishing, for example, a high-accuracy and high-diversity k-anonymization algorithm (KAPP) that combines improved African vulture optimization with t-closeness has been proposed as a countermeasure against skewness and similarity attacks [46]. For data with high-dimensional manifold structures, methods that combine k-anonymity with differential privacy ((k, ε, δ)-anonymity) have been shown to minimize information loss while balancing anonymity and utility [47]. In encryption, the use of a distributed shuffle mechanism based on Paillier encryption has been reported to achieve centralized-level accuracy–privacy performance in the differentially private average consensus problem [48]. PPDM is applied primarily to AI input data, but can also be applied to AI output data.

Nevertheless, these methods inevitably involve trade-offs between computational cost and accuracy, and when the input data grows to big-data scale, various “gaps” are conceivable, such as re-identification through matching with external datasets, cross-referencing of long-term sequentially released data, personal identification through combinations of high-dimensional features, and detection of outliers. Therefore, even combining multiple known PPDM techniques cannot realistically guarantee complete protection. In distributed machine learning approaches such as federated learning, balancing privacy protection, fairness, and model performance remains an important research challenge [49], and society must agree upon acceptable privacy–accuracy thresholds [50].

Since perfect defense through technology alone is limited, it is important to complement it with institutional and operational measures such as the principle of minimum data collection, restrictions on use, access auditing, and continuous third-party risk assessment.

In the Smartphone as Societal Safety Guard system, the “smartphone AI app” processes input (audio data) in its raw form at all times, but output occurs only when reporting, and after processing, it is either sent immediately or deleted without being retained in the application. Therefore, PPDM techniques are not essential during operational use. However, during model training or testing phases, input data may be temporarily stored or shared, making the application of PPDM techniques indispensable in these contexts.

- (3) Suppression of False Positives

False positives can lead to unnecessary reports, which in turn may cause privacy infringements or unjust treatment. For example, in the operation of facial recognition technology, misidentifications have been noted to carry the risk of unjustly subjecting innocent citizens to investigation, and reductions in such errors are required from both institutional design and technical perspectives [51]. On the other hand, excessive suppression of false positives can increase the number of false negatives (missed detections), which may result in serious consequences such as the failure to detect major crimes or terrorist acts. Therefore, balancing the control of both error types is essential.

From a technical standpoint, combining existing speech recognition or natural language processing models with multi-stage filtering can reduce false positive rates. For instance, in wake-word detection under noisy environments, a learning method that integrates task-oriented speech enhancement with an on-device detector (TASE) has achieved stable improvements in detection accuracy even under high-noise conditions, demonstrating the effectiveness of a multi-stage architecture linking front-end processing with back-end decision-making [52]. This approach is applicable regardless of input signal type or detection task and can be extended to other speech/language processing systems and multimodal analyses.

Receiver operating characteristic (ROC) analysis is a representative method for evaluating and optimizing the trade-off between false positives and false negatives, allowing the adjustment of thresholds to balance the two [53]. Moreover, in the field of gene expression analysis, ROC-based optimization methods have also been proposed, and the results demonstrated in that domain could be applied to speech and language processing as well [54]. Combining ROC analysis with other metrics can enable optimization more closely aligned with operational environments. Examples include precision–recall curves (which evaluate the relationship between precision and recall and are particularly useful for performance evaluation under class imbalance) and cost-based evaluations (which quantify the loss cost associated with false positives and false negatives and identify the threshold or model configuration that minimizes total cost).
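As an illustration of cost-based evaluation, the following minimal sketch selects the decision threshold that minimizes the total cost of false positives and false negatives on a labeled validation set. The function names, the toy scores, and the cost weights are hypothetical; in practice the costs would have to be set through the institutional process discussed in this paper.

```python
def confusion_at(scores, labels, threshold):
    """Count (false positives, false negatives) when scores >= threshold are flagged."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return fp, fn


def min_cost_threshold(scores, labels, cost_fp, cost_fn):
    """Return (threshold, total_cost) minimizing cost_fp * FP + cost_fn * FN."""
    # Every observed score is a candidate; infinity means "never flag".
    candidates = sorted(set(scores)) + [float("inf")]
    best = None
    for t in candidates:
        fp, fn = confusion_at(scores, labels, t)
        cost = cost_fp * fp + cost_fn * fn
        if best is None or cost < best[1]:
            best = (t, cost)
    return best


# Toy validation data: label 1 marks a genuinely urgent sample.
scores = [0.1, 0.3, 0.4, 0.6, 0.8, 0.9]
labels = [0, 0, 1, 0, 1, 1]

# Missed detections weighted 10x: the threshold moves down to 0.4.
print(min_cost_threshold(scores, labels, cost_fp=1, cost_fn=10))
# False reports weighted 10x: the threshold moves up to 0.8.
print(min_cost_threshold(scores, labels, cost_fp=10, cost_fn=1))
```

The same scores yield different operating points depending on how the two error types are weighted, which is why the weighting itself is a policy decision rather than a purely technical one.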

When implementing the Smartphone as Societal Safety Guard, development of the “smartphone AI app” should incorporate a balanced combination of these methods while continuously improving models and refining evaluation methodologies.

3.2. Issues Primarily Requiring Institutional Design

- (4) Careful Definition of the Scope of Application

The types and severity levels of crimes to be targeted for detection and reporting (e.g., terrorism, kidnapping, homicide, robbery, assault, threats, domestic violence, drug trafficking) should be strictly defined through public debate and under democratic procedures. This definition should be established with both (i) objective evidence regarding actual risk levels and (ii) societal perceptions of seriousness in mind, while incorporating institutional safeguards to prevent the reinforcement of discrimination or prejudice.

As an example of (i), an analysis of Japanese police statistics and newspaper reports has shown that group crimes are more likely to become violent than individual crimes, that the severity increases with group size, and that juveniles are more often involved in group crimes than adults [55]. Such objective findings can serve as reference indicators for weighting severity or setting triggering conditions (e.g., group involvement, level of violence).

As an example of (ii), the “Crime Stereotypicality and Severity Database” (CriSSD) developed in Portugal has demonstrated that perceived seriousness can be predicted based on stereotypicality ratings, suggesting that social perceptions may inherently contain biases [56]. Such bias-based influences should be carefully excluded from the definitional process.

In addition, to prevent arbitrary expansion of the scope, clear boundaries based on laws and regulations are essential, and these boundaries should also account for new risks arising from advances in surveillance technologies. While modern CCTV and AI analysis can contribute to public safety, they also increase the risk of privacy infringement by enabling detailed tracking of individuals’ activity histories. In using such technologies, it is necessary to incorporate a policy framework that safeguards democratic values and respect for human rights, and to limit the permissible scope of surveillance (in the Smartphone as Societal Safety Guard, the reporting scope) to a form that is transparent (clear criteria disclosed in advance) and justifiable (based on social consensus and legal procedures) [57].

- (5) Avoidance of Discriminatory Use

It is essential to prevent surveillance outcomes from operating in ways that are racially or socioeconomically unjust. Privacy protection must go beyond the mere formality of “not leaking personal data” and ensure that neither operational processes nor outcomes reinforce discrimination; only then can social trust be earned. In democratic states in particular, fairness, accountability, transparency, and privacy protection are mutually dependent: if any one is lacking, the reliability of the entire system is undermined. International frameworks for AI ethics have expressed similar views. For example, AI4People sets forth five principles (beneficence, non-maleficence, respect for autonomy, justice and fairness, and explicability), of which respect for autonomy, justice and fairness, and explicability all encompass the importance of privacy protection, with justice and fairness explicitly emphasizing the avoidance of discrimination [58]. Radanliev, in comparing AI policies in the EU, the United States, and China, highlighted differences in the prioritization of transparency, fairness, and privacy, as well as in technical strategies, and emphasized the need for fairness-aware algorithms and diverse development teams [59]. From this perspective as well, preventing discriminatory use is an indispensable component of “perfect privacy protection.”

Existing research has criticized the tendency for many algorithmic biases to be designed around profit maximization or institutional convenience, often neglecting causal impacts on the interests of vulnerable groups or the distribution of welfare [60]. Predictive policing algorithms have been found to embed racial and social biases, with their interpretation and operation depending heavily on the surrounding social context [61]. Furthermore, data mining and surveillance systems have been reported to produce unintended discrimination by reflecting both data incompleteness and biases inherent in society, leading to outcomes that are difficult to detect or explain [62].

Such discrimination and bias, as well as the disadvantages imposed on certain individuals as a result, may arise as unintended byproducts of the pursuit of safety and efficiency. However, they must not be justified as a “necessary evil.” Preventing such outcomes requires continuous reflection, improvement, and revision in both institutional design and technical design.

For example, if criminals or terrorists choose not to carry smartphones in order to avoid surveillance, that behavior alone could be deemed “suspicious,” placing ordinary citizens who behave similarly at risk of becoming investigation targets. Such proxy discrimination structures can be easily entrenched through the mediation of police data and societal prejudice [62]. Recently, statistical correction methods such as conditional score recalibration (CSR) have been proposed to reduce age- or race-related biases in predictive policing data, improving fairness metrics while maintaining predictive accuracy [63]. In the medical field, frameworks have been proposed to mitigate institution-specific or ethnic biases introduced during data collection through reinforcement learning, with improvements observed in both fairness and generalization performance [64]. These technical insights are applicable to the surveillance domain as well.

However, such issues cannot be fully resolved by technology alone. In contexts where structural racism and social inequality are present, an institutional framework is indispensable, one that ensures transparency, explicability, trust-building with local communities, explicit fairness commitments, and accountability throughout the entire lifecycle of algorithms, from development to operation and decommissioning [65]. Moreover, governance structures functioning as a social safety net, designed with awareness of power structures, are needed to institutionalize transparency in surveillance standards and to guarantee accountability [61].

Accordingly, in the Smartphone as Societal Safety Guard concept, particular attention must be paid to these considerations during the development and updating of the AI application, especially the specialized large language models (LLMs) used for the two-stage danger-level classification.

Crucially, because discriminatory effects cannot be fully eliminated ex ante by technical design alone, it is essential that VRAIO-based verification and auditing mechanisms operate continuously and institutionally. By subjecting all report-triggering outputs to third-party review, tamper-proof recording, and ex post audit, the proposed framework prevents discriminatory practices from becoming opaque, routinized, or institutionally entrenched. In this sense, VRAIO functions not merely as a safeguard against misuse by authorized insiders, but as a core governance mechanism that ensures discriminatory use—if it arises—remains detectable, contestable, and correctable at the institutional level.

3.3. Unresolved Issues (Directly Addressed by VRAIO)

- (6) Prevention of Misuse and Abuse by Authorized Parties (Internal Threats)

Intentional or unintentional misconduct by parties with legitimate access rights—such as smartphone OS providers, application operators, or device owners—constitutes an internal threat that can be as serious as, or even more serious than, external attacks. Internal actors, using their authorized privileges, can alter output content, delete logs, or conceal specific data, making it fundamentally impossible to prevent such actions through conventional safeguards such as encryption or access control alone.

Systematic reviews have identified delays in countermeasures against insider threats, presenting two major classification models based on biometric and asset indicators, summarizing effectiveness factors, and highlighting research gaps [66]. Moreover, characteristics specific to AI systems, such as opacity and dependence on training data, may exacerbate the severity of internal threats [67]. In domains handling highly sensitive information, such as the medical field, encryption, multi-factor authentication, secure communications, staff training, and legal compliance are considered indispensable, along with organizational mechanisms to deter insider access or exfiltration [68]. In addition, machine learning models are known to be vulnerable to membership inference attacks (MIAs), in which information about training data can be leaked; insiders could exploit such vulnerabilities, underscoring the need for systematized and continuously improved defense methods [69]. These measures are particularly relevant in the Smartphone as Societal Safety Guard for countering privacy leakage risks by insiders during the development of the “smartphone AI app,” especially in the creation of the high-accuracy AI used in Stage 2 of urgency classification.

In the field of medical AI, it has been demonstrated that training with differential privacy can be implemented without significantly compromising fairness or diagnostic accuracy [70]. This approach is also promising as part of insider threat countermeasures in the development of the “smartphone AI app” for this system. However, the “output” of the smartphone AI app itself consists of high-accuracy factual information for emergency reporting (location, characteristics, phone number), and thus techniques that directly modify output content are not suitable.

As outlined above, in the Smartphone as Societal Safety Guard, current technological and institutional measures lack robust methods to prevent misuse or abuse by insiders without applying anonymization or de-identification directly to the output itself.

To address this gap, this paper proposes the Verifiable Record of AI Output (VRAIO), a large-scale technical and institutional system. Under VRAIO, a third-party “Gatekeeper” encloses the AI within an output firewall, while another third party, the “Recorder,” reviews and records the approval or denial of each output, storing the decision history in a tamper-proof and verifiable format.

On the institutional side, measures such as bounty programs for those who detect misconduct, stricter penalties for abusers, and enhanced victim compensation schemes are combined to deter insider threats and strengthen social acceptance. The next chapter discusses how VRAIO can be adapted specifically to the smartphone speech recognition and reporting AI system.

4. Pathway to “Perfect Privacy Protection” Based on VRAIO

In this study, as a countermeasure against such internal threats, we propose the introduction of the Verifiable Record of AI Output (VRAIO) [24]. This approach does not intervene in the AI’s input or internal processing; instead, it focuses solely on regulating its output. By doing so, it preserves the system’s freedom and efficiency while institutionally ensuring privacy protection.

VRAIO was originally conceived for public spaces in which “at least one AI system-connected camera is installed in every streetlight, ensuring that every location falls within the field of view of at least one camera.” In this framework, the AI system is enclosed within an egress firewall, and a third-party organization—the Recorder—reviews all AI outputs for compliance with socially agreed-upon purposes, allowing only approved data to be released. For each output request from the AI system, the Recorder makes an approval or denial decision based on rules established through prior public debate, and sends the approval conditions back to the firewall, which then controls the opening and closing of the output control valve. Importantly, the output request contains information such as the purpose, summary, source, and intended recipient of the output, but does not include the output data itself. The Recorder stores the entire approval process in a tamper-proof and verifiable format.
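The approval loop described above can be sketched as follows. This is a minimal illustration under stated assumptions: the class names (`OutputRequest`, `Rule`, `Recorder`, `EgressFirewall`), the rule format, and the example purposes and recipients are hypothetical, and tamper-proof storage of the decision history is omitted. The point shown is that the Recorder decides on metadata only, while the payload stays behind the firewall until the valve opens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutputRequest:
    """Metadata only; the output data itself is never included."""
    purpose: str
    summary: str
    source: str
    recipient: str


@dataclass(frozen=True)
class Rule:
    """A socially agreed purpose and the recipients allowed for it."""
    purpose: str
    allowed_recipients: frozenset


class Recorder:
    """Third party that approves or denies requests and records each decision."""

    def __init__(self, rules):
        self._rules = {r.purpose: r for r in rules}
        self.history = []  # decision history (tamper-proofing omitted here)

    def review(self, request: OutputRequest) -> bool:
        rule = self._rules.get(request.purpose)
        approved = rule is not None and request.recipient in rule.allowed_recipients
        self.history.append((request, approved))
        return approved


class EgressFirewall:
    """Releases a payload only when the Recorder approves its request."""

    def __init__(self, recorder: Recorder):
        self._recorder = recorder

    def release(self, request: OutputRequest, payload: bytes):
        # The Recorder sees the request metadata, never the payload.
        if self._recorder.review(request):
            return payload
        return None
```

A request whose purpose or recipient is not covered by any rule is denied and logged, so denied attempts remain visible to later audits as well.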

To counter false declarations of output purpose, the framework incorporates (i) a system of substantial penalties for false reporting, (ii) a bounty program to reward individuals who detect such misconduct, and (iii) sufficient compensation for victims in the event that false reporting leads to privacy violations. These combined measures are intended to create strong negative incentives against false reporting, encourage active auditing by bounty hunters and others, and enhance public acceptance through the presence of robust safeguards.

In this paper, aiming to achieve “perfect privacy protection” in the Smartphone as Societal Safety Guard, and particularly to prevent misuse by authorized parties, we propose the following two approaches for introducing VRAIO:

- (1) Single-Stage Implementation: VRAIO applied only to the smartphone AI application

In this approach, VRAIO is applied to the “environmental audio monitoring and reporting AI application” installed on the smartphone. A two-stage urgency classification is used to improve both real-time responsiveness and detection accuracy. Only when an audio situation is determined to be genuinely urgent will it be reported to the relevant authorities (e.g., police stations). All other input data and analysis data are immediately deleted, and under this principle, no such data ever leaves the smartphone.

- (2) Two-Stage Implementation: VRAIO applied to both the smartphone AI application and the central management AI

In cases where the smartphone-based “environmental audio monitoring and reporting AI application” alone cannot achieve “perfect situational analysis” or ensure detection and reporting only of genuinely urgent situations due to processing capacity limitations, the reporting destination of the application will be set to the “central management AI system” of a report-receiving management center. The central management AI will then re-perform urgency classification with greater precision. Only when the situation is deemed genuinely urgent will it be reported to the relevant authorities (e.g., police stations). All other input and analysis data are immediately deleted: data not flagged by the application never leaves the smartphone, and data judged non-urgent by the central management AI is never released outside the system. In this approach, VRAIO is applied to both the “reporting AI application” on the smartphone and the “central management AI system” at the report-receiving management center.

4.1. Single-Stage Implementation: VRAIO Applied Only to the Smartphone AI Application

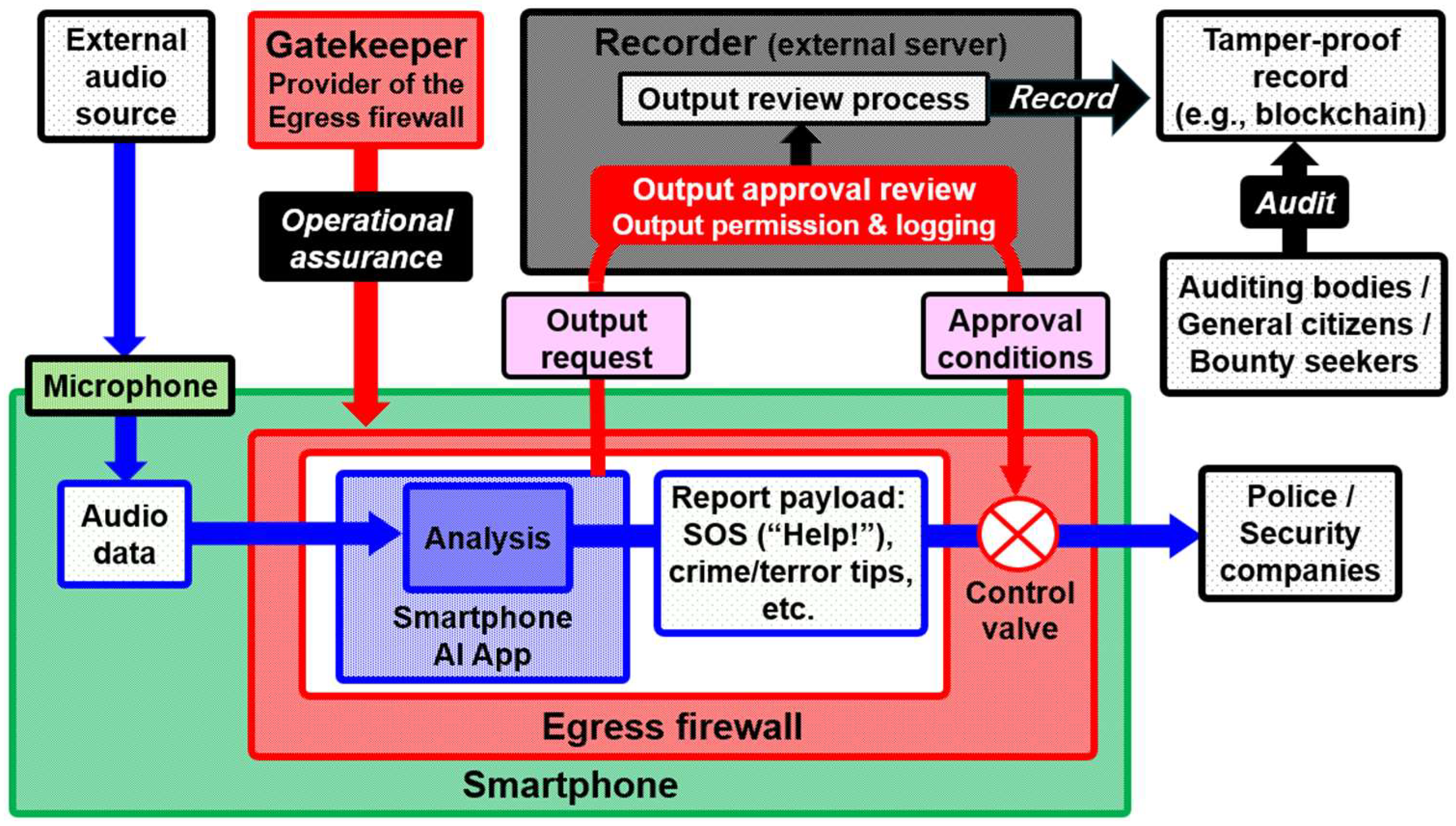

As shown in Figure 2, outputs from the Smartphone AI App are contained by the Egress firewall, and only report data approved by the Recorder passes through the Control valve to the designated reporting destinations (e.g., Police or Security companies). The Gatekeeper is responsible for operational assurance of the firewall. The Recorder focuses exclusively on the output approval review and does not handle the actual output data itself. Audit logs are recorded in a tamper-resistant format (e.g., blockchain) and can be examined through audits. In the original VRAIO proposal, the Gatekeeper’s role was also performed by the Recorder, but here it is separated to enhance transparency.

The VRAIO framework consists of the following seven elements:

- (1) Rule-setting based on democratic consensus

The types and severity levels of crimes to be detected and reported by the Smartphone AI App (e.g., terrorism, kidnapping, homicide, robbery, assault, threats, domestic violence, drug trafficking) are determined through democratic debate and established as “laws” or “ordinances” via legislative and administrative procedures. This ensures the legitimacy of regulation and public acceptance.

- (2) Institutional oversight by a government agency

The Government Regulatory Agency for AI disseminates and supervises the implementation of the rules established as laws or ordinances, and mandates compliance by all relevant parties. It also evaluates the public interest and the effectiveness of privacy protection in the defined target crimes and severity levels, and provides this evaluation to society as a basis for revising the rules through democratic processes.

- (3) Operational assurance of the Egress firewall by the Gatekeeper

The Egress firewall is developed by smartphone OS vendors, app developers, or other entities, but its operational assurance is provided by an independent third-party Gatekeeper.

Here, the term “operational assurance” refers not merely to confirming the functional correctness of the Egress firewall, but also to the continuous monitoring and verification by an independent third-party Gatekeeper of the overall system behavior, including operating system (OS) updates. Where necessary, the Gatekeeper is assumed to have the authority to request relevant specification information, source code access, or equivalent verification mechanisms from smartphone OS providers or application developers. This enables the institutional detection and deterrence of backdoor-like functional additions or eavesdropping behaviors that could otherwise be introduced unintentionally or without adequate social consensus.

Such OS-level monitoring and verification constitute an additional safeguard that is independent of VRAIO’s governance of AI outputs. At the same time, integrating these mechanisms makes it possible to simultaneously ensure governance at the output stage and the integrity of the underlying software platform. Even if OS update monitoring were to be implemented through means other than VRAIO, effective oversight would ultimately require monitoring the behavior of the OS itself, and institutional designs are therefore expected to converge toward a similar structure. In this sense, operational assurance by the Gatekeeper can be regarded as a dual-purpose institutional measure that addresses both the governance of AI outputs and the prevention of backdoor manipulation at the OS level.

- (4) Output review by the Recorder

The Smartphone AI App is enclosed by an Egress firewall managed by the Gatekeeper. For every external output, the app issues an Output request containing output metadata (purpose, crime type and severity, summary, source, intended recipient) to the Recorder. The Recorder determines, in a formal approval review, whether the request complies with the rules defining the target crimes and severity levels. The Control valve of the Egress firewall is operated by the Gatekeeper according to the Approval conditions set by the Recorder. Neither the Gatekeeper nor the Recorder has access to the actual output data; both see only the metadata.

- (5) Tamper-proof recording and disclosure

Output-related metadata—including the output request containing information such as purpose, crime category and severity, summary, source, and intended recipient; the decision process regarding approval or denial; and the approval conditions (excluding the output content itself)—are recorded in a tamper-resistant manner using technologies such as blockchain, and are made available for societal auditing with ensured transparency.

To prevent excessive growth of the blockchain while preserving both tamper resistance and auditability, the VRAIO framework assigns a unique output ID to each output and manages output-related metadata as minimal recording units. These records are handled on an append-only basis, and once recorded, they are not overwritten, modified, or deleted. This design institutionally constrains ex post manipulation or erasure of records.

To enable real-time or near-real-time auditing while keeping storage overhead manageable, output histories are aggregated into time-bounded index structures. These indices are periodically anchored to the blockchain and are rotated after a predefined retention period (e.g., one year). This approach avoids long-term accumulation of records while preserving traceability and verifiability of outputs.

The specific choices regarding retention periods, index update frequency, record granularity, and the configuration of participating nodes or hubs (e.g., obligations for participating companies or public institutions to operate hub nodes) should be determined through institutional design based on expected output volume, operational scale, and cost–benefit considerations. Accordingly, this paper limits itself to articulating architectural design principles and does not prescribe detailed implementation specifications.

- (6) Third-party audits by citizens, oversight bodies, and bounty seekers (formal and spot audits)

Output-related metadata, after appropriate anonymization (e.g., removal of personal identifiers, coarsening of location data), is made available for audits by citizens, external oversight bodies, and bounty seekers (including whistleblowers). Auditors can formally verify whether the metadata complies with the defined rules. Additionally, with a judicial warrant or special approval from an audit committee, “spot audits” may compare a randomly selected actual report with its metadata. Spot audits are conducted only by specially selected members who have signed confidentiality agreements and are determined to have minimal connection to the relevant case, in order to minimize privacy risk.

- (7) Institutional deterrence mechanisms

Recognizing the possibility of false reporting or other intentional misconduct by any stakeholder, VRAIO incorporates deterrent measures: substantial fines for violations, significant rewards for whistleblowers or bounty seekers who uncover misconduct, and generous compensation for victims of privacy violations. These measures impose strong negative incentives against misconduct and provide robust safeguards to enhance trust in the system.
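The append-only, tamper-resistant recording described in element (5) can be illustrated with a minimal sketch. A plain SHA-256 hash chain is used here as a simplified stand-in for the blockchain mentioned in the text; the class `AppendOnlyLog`, its field names, and the `head` value (the digest that would be periodically anchored) are hypothetical, and index rotation and distributed nodes are not modeled.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash preceding the first record


def _digest(body: dict) -> str:
    """Hash a record body deterministically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AppendOnlyLog:
    """Hash-chained record of output metadata and approval decisions."""

    def __init__(self):
        self._entries = []

    def append(self, output_id: str, metadata: dict, approved: bool) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        body = {"output_id": output_id, "metadata": metadata,
                "approved": approved, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self._entries.append(entry)
        return entry["hash"]

    def head(self) -> str:
        """Latest digest; anchoring it externally commits to all earlier records."""
        return self._entries[-1]["hash"] if self._entries else GENESIS

    def verify(self) -> bool:
        """Recompute the chain and report whether every record is intact."""
        prev_hash = GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

Because each record commits to the hash of its predecessor, altering or deleting an earlier record changes every later digest, so an auditor holding a previously anchored `head` value can detect the change.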

In the Smartphone AI App model—where urgency classification is conducted in accordance with the rules defining target crimes and severity levels, and reports are sent to relevant agencies such as the Police Department or Fire Department—the single-stage VRAIO approach has the following pros and cons:

- Advantage: All processing is completed within the Smartphone AI App, ensuring that only emergencies are reported and all other data is immediately and permanently deleted, never leaving the device. This provides an irreplaceable sense of assurance for smartphone users.

- Disadvantage: With the limited resources of a smartphone, consistently accurate urgency classification (with both false positive and false negative rates approaching zero) is likely to be difficult.

To increase deployment options in practice, the next section proposes a “two-stage VRAIO implementation,” in which the Reporting AI App sends reports to the Central Control AI System at the Report Reception and Management Center.

4.2. Two-Stage Implementation: VRAIO Applied to Both the Smartphone AI App and the Central Control AI System

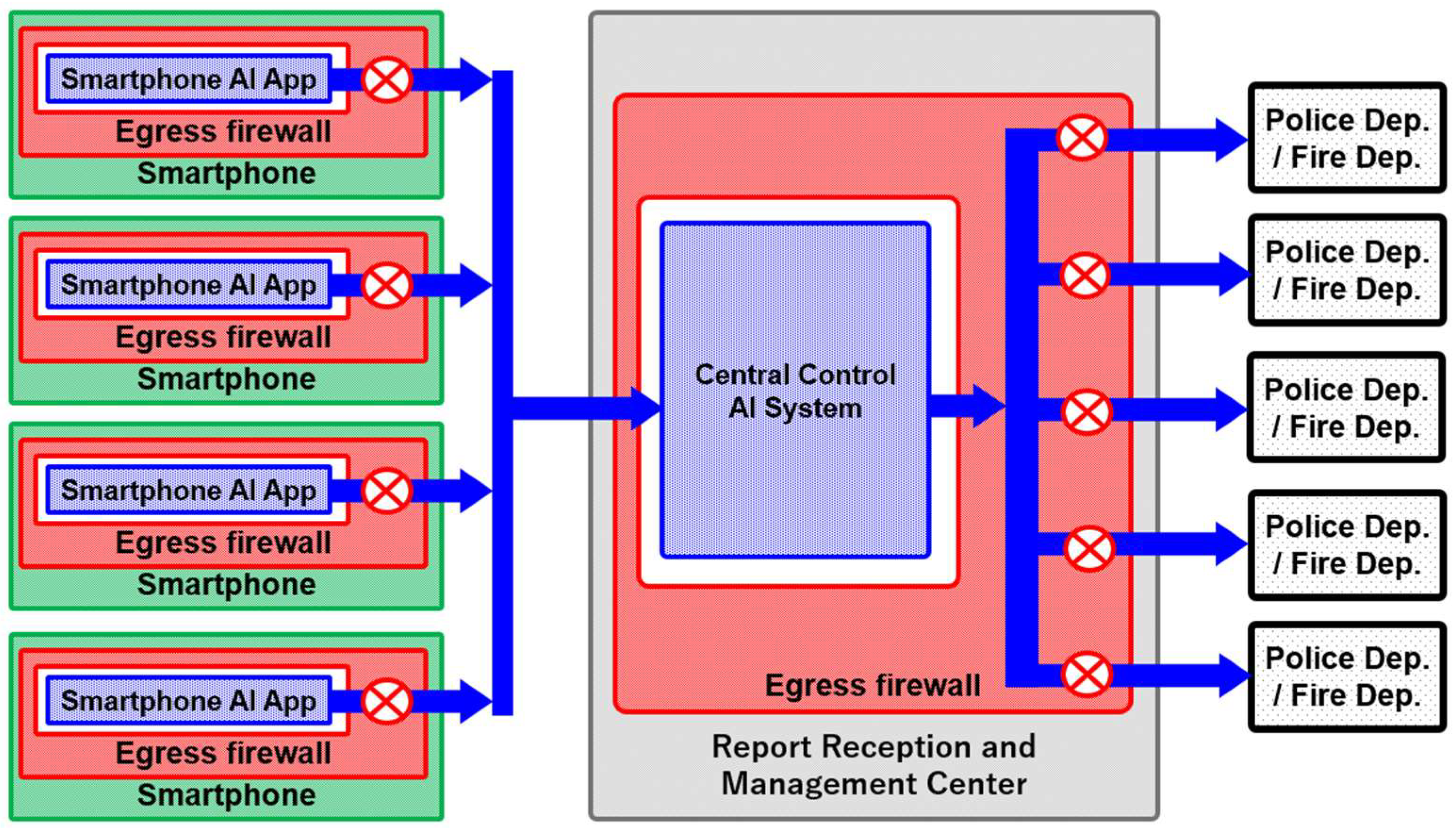

The “environmental audio monitoring and reporting AI app” installed on a smartphone may face limitations in processing capacity, making it difficult to achieve both “perfect situational analysis” and the “reliable detection and reporting of only high-urgency incidents.” To address this, the configuration shown in Figure 3 sends reports from the Reporting AI App first to the Central Control AI System at the Report Reception and Management Center. There, higher computational capacity is used to conduct a second round of situational analysis and urgency assessment. This results in VRAIO being applied in two stages: once at the Smartphone AI App and again at the Central Control AI System.

The process flow is as follows:

- (1) When the Reporting AI App on a smartphone determines, via Urgency classification, that an incident is urgent, it sends an Emergency Report to the Central Control AI System. This report includes the relevant data along with contextual information from before and after the trigger (e.g., audio data, analysis results).

- (2) The Central Control AI System re-analyzes the received Emergency Report and performs Urgency classification again, based on pre-defined rules specifying the types and severity levels of crimes subject to detection and reporting (e.g., terrorism, kidnapping, homicide, robbery, assault, threats, domestic violence, drug trafficking). Reports classified as urgent are then supplemented with situational details, recommended actions (e.g., situation confirmation, suspect apprehension, questioning), and any relevant supporting information needed for response (e.g., suspect location, physical description, threat level, health status). The report is then sent to the appropriate responding agency (Police Department, Fire Department). If necessary, communication with involved parties (suspect, victim) may also be conducted via the originating smartphone.

- (3)

Throughout steps (1) and (2), VRAIO is strictly applied both to the Reporting AI App on the device and to the Central Control AI System at the Report Reception and Management Center.
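The flow in steps (1) and (2) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the keyword sets, the fixed scores, the threshold, and the names `device_stage`, `central_stage`, and `process` are hypothetical stand-ins for trained classifiers, and the VRAIO review of each output described in step (3) is omitted.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabularies; a real system would use trained models.
TRIGGER_WORDS = {"help", "knife", "hostage"}
BENIGN_CONTEXT = {"movie", "game", "rehearsal"}
CENTRAL_THRESHOLD = 0.5  # assumed cut-off for the second-stage decision


@dataclass
class EmergencyReport:
    transcript: str        # stands in for audio data plus surrounding context
    device_score: float    # urgency score assigned on the smartphone
    central_score: Optional[float] = None


def device_stage(transcript: str) -> Optional[EmergencyReport]:
    """Stage 1: coarse on-device check. Returns None when nothing is sent."""
    words = set(transcript.lower().split())
    if not words & TRIGGER_WORDS:
        return None  # non-urgent audio never leaves the device
    return EmergencyReport(transcript=transcript, device_score=1.0)


def central_stage(report: EmergencyReport) -> Optional[EmergencyReport]:
    """Stage 2: finer re-analysis at the center. None means deleted."""
    words = set(report.transcript.lower().split())
    score = 0.2 if words & BENIGN_CONTEXT else 0.9
    if score < CENTRAL_THRESHOLD:
        return None  # judged non-urgent: deleted, not retained or reused
    report.central_score = score
    return report


def process(transcript: str) -> str:
    """Run both stages and describe what happens to the audio."""
    report = device_stage(transcript)
    if report is None:
        return "deleted on device"
    if central_stage(report) is None:
        return "deleted at center"
    return "sent to responding agency"
```

In this toy setting, a device-side false positive (for example, a trigger word spoken while discussing a film) is transmitted once and then discarded by the second stage, which is the behavior the two-stage design relies on.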

This two-stage implementation of VRAIO offers the following advantages and disadvantages:

* Advantages

Urgency classification performed by the central control AI system can leverage high-performance computational resources, enabling highly accurate decisions with minimized false positives and false negatives. In the proposed configuration, information judged to be non-urgent at the central control level is immediately deleted and neither retained nor reused in any form, thereby institutionally ensuring that data not used for reporting do not persist at the central level.

* Disadvantages

When the Central Control AI System achieves very high classification accuracy, there is a tendency for the device-side system to send a broader range of reports, including those with uncertain urgency, in order to avoid missing an actual urgent case. This could result in a higher proportion of non-emergency information (often the private conversations of smartphone owners) being transmitted externally. Such a situation would conflict with the principle that “any information not related to an emergency is immediately deleted and never leaves the device.”

Therefore, in institutional design, it is necessary to make explicit the operational policy that, “Although false-positive information may be transmitted from the device to the Central Control AI System, such information will be immediately deleted following precise assessment by the Central Control AI System and will never be released outside the system,” in order to secure public understanding and acceptance.
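The trade-off behind this policy can be made concrete with a small worked example. The urgency scores and ground-truth labels below are invented purely for illustration, and `transmission_stats` is a hypothetical helper, not part of the proposed system. The sketch counts how many items leave the device, how many of those are private non-emergency conversations, and how many real emergencies are missed, for a given device-side threshold.

```python
# Each sample is (device-side urgency score, is it a real emergency?).
# All values are invented for illustration only.
SAMPLES = [
    (0.95, True), (0.70, True), (0.40, True),
    (0.60, False), (0.45, False), (0.30, False),
    (0.10, False), (0.05, False),
]


def transmission_stats(samples, threshold):
    """Count what leaves the device when reports are sent at score >= threshold."""
    sent = [(score, urgent) for score, urgent in samples if score >= threshold]
    return {
        "sent": len(sent),
        # Non-emergency items transmitted to the center (device false positives).
        "private_sent": sum(1 for _, urgent in sent if not urgent),
        # Real emergencies that never reach the center (device false negatives).
        "missed": sum(1 for score, urgent in samples if urgent and score < threshold),
    }


strict = transmission_stats(SAMPLES, threshold=0.8)
lenient = transmission_stats(SAMPLES, threshold=0.35)
```

With these invented numbers, the strict threshold transmits no private conversations but misses two of the three emergencies, whereas the lenient threshold misses none but sends two private conversations to the center. The deletion guarantee at the central level is what makes the lenient setting defensible.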

5. Discussions

Smartphones are high-performance AI devices equipped with conversation analysis functions based on speech recognition, and they are widely disseminated and deeply embedded in society. Because they are carried constantly, they can be viewed, from the perspective of information infrastructure, as a nationwide sensing network of millions of continuously active terminals, enabling large-scale and decentralized emergency detection without additional hardware deployment.

Therefore, smartphones can function as social infrastructure for detecting serious crimes and terrorist acts. However, the potential for such continuous monitoring inevitably raises severe ethical issues in the form of privacy infringement. This duality can be seen as both the “potential to dramatically and immediately improve public safety” and the “potential for the introduction of a surveillance society without citizens’ awareness.”

Against this backdrop, three questions arise:

- (1) Should there be a legal obligation to notify the police or other authorities when serious crimes or terrorist acts are detected?

- (2) Should AI be legally required to actively attempt the detection of serious crimes or terrorist acts?

- (3) To what extent should privacy protection be guaranteed, and is it actually possible to guarantee it?

As one possible answer, this paper proposes the concept of the Smartphone as Societal Safety Guard, which aims to reconcile public safety with privacy protection. The greatest barrier lies in achieving “perfect protection of the privacy of ordinary citizens.”

To achieve perfect privacy protection, six key challenges must be addressed: prevention of unauthorized access by non-permitted parties (external attacks), removal or reduction of private information in the data itself, suppression of false positives, careful definition of the scope of application, avoidance of discriminatory use, and prevention of misuse or abuse by authorized parties (internal threats). All of these pose difficulties, but existing countermeasures fall short particularly for the last one, preventing misuse or abuse by authorized parties. To address this, the author proposes adapting and applying the Verifiable Record of AI Output (VRAIO).

VRAIO was originally proposed as a privacy-protection framework for public spaces “in which every location falls within the field of view of multiple AI-connected street cameras.” In VRAIO, all AI outputs are assessed for compliance with socially agreed purposes, and only approved outputs are released. The entire approval process is recorded in a tamper-proof and verifiable format. In this study, that framework is applied to a smartphone’s always-on audio monitoring and reporting function. New features in this adaptation include: (1) applying VRAIO to both each Smartphone AI App and the Central Control AI System (located in a single nationwide Report Reception and Management Center) that receives reports; and (2) introducing a third-party Gatekeeper to provide and ensure the operation of the Egress firewall (a role originally combined with that of the Recorder in the initial VRAIO proposal), thereby improving transparency through separation of functions.
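As a rough illustration of what a “tamper-proof and verifiable” record of approval decisions might look like, the sketch below uses a SHA-256 hash chain. This is a technique chosen here for illustration, not one specified by the VRAIO proposal, and it provides tamper evidence rather than tamper prevention. The class names mirror the roles in the text, but their methods, the division of labor between them, and the purpose labels are assumptions.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder hash preceding the first entry


class Recorder:
    """Append-only log in which each entry commits to the previous one."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash: str, decision: dict) -> str:
        body = json.dumps(decision, sort_keys=True)
        return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

    def append(self, decision: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "decision": decision,
            "prev_hash": prev_hash,
            "hash": self._digest(prev_hash, decision),
        }
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["decision"]):
                return False
            prev_hash = entry["hash"]
        return True


class Gatekeeper:
    """Releases an output only if its declared purpose is on the agreed list."""

    def __init__(self, permitted_purposes, recorder: Recorder):
        self.permitted_purposes = set(permitted_purposes)
        self.recorder = recorder

    def review(self, output_id: str, purpose: str) -> bool:
        approved = purpose in self.permitted_purposes
        # Approvals and rejections are both recorded, so the log covers
        # the entire approval process rather than only released outputs.
        self.recorder.append(
            {"output_id": output_id, "purpose": purpose, "approved": approved}
        )
        return approved
```

A usage pattern under these assumptions: construct `Gatekeeper({"emergency_report"}, Recorder())`, call `review` for every attempted output, and let any independent party run `verify()` on the log. Changing a recorded rejection into an approval after the fact makes `verify()` return `False`.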

VRAIO is a concept in which the entire AI system is enclosed within an Egress firewall. Applying VRAIO to every smartphone AI app would constitute an unprecedentedly large socio-technical system. This paper argues that without constructing such a large-scale socio-technical infrastructure, the countermeasure against misuse or abuse by authorized parties cannot be realized.

Current AI regulatory frameworks, such as the EU AI Act [71] and the U.S. AI Risk Management Framework [72], rely primarily on documentation and auditing obligations or ex post enforcement, and do not envision a permanent societal infrastructure that controls insider actions in real time. In contrast, the proposed Verifiable Record of AI Output goes beyond the legal guidelines offered by current frameworks by integrating a dedicated technical foundation with institutional structures. In particular, to prevent insider misconduct or abuse, it is not sufficient to rely solely on ex post regulation through laws and contracts; a permanent societal infrastructure must be established that includes Egress firewalls enclosing each smartphone AI app, and third-party institutions such as the Gatekeeper and Recorder responsible for their deployment and operation. Such institutionalized and physicalized regulatory infrastructure is unprecedented, and overcoming the difficulty of its implementation is a necessary, though not sufficient, condition for achieving perfect privacy protection.

The following sections examine the technical, institutional, and societal conditions necessary for its realization.

5.1. Comparison Between Offline AI and External AI Hybrid Approaches

In the Smartphone as Societal Safety Guard concept proposed in this paper, as described in Section 3.1, the Smartphone AI App is, in principle, assumed to operate offline. The fully offline approach, which does not use any external AI, faces the following challenges:

- (1) Processing capacity limitations

  High-accuracy models that can be deployed on a smartphone have constraints in inference speed and power efficiency, and depending on device performance, may affect real-time processing and battery life.

- (2) Frequency of model updates and ensuring transparency

  Updates depend on OS or app updates, making tamper prevention and reliability assurance key issues. For this reason, the credibility of the third-party Gatekeeper, responsible for the deployment, management, and operational assurance of the Smartphone AI App, is of critical importance.

As described in Section 3.2, to address these limitations, this paper proposes a hybrid approach in which the Reporting AI App sends reports to the Central Control AI System at the Report Reception and Management Center. In this case, VRAIO is applied both within the smartphone and at the central control AI.

* Advantages

- Enables the use of large and highly accurate AI models.
- Reduces processing load and power consumption on the device side.

* Disadvantages

- Increases complexity of privacy controls due to additional communication pathways.
- Requires VRAIO application to the external AI as well, complicating the management structure.
- May limit functionality during network outages.

The recommended configuration is to equip each device with an offline Smartphone AI App and apply two-stage VRAIO jointly with the Central Control AI System. However, because privacy protection becomes more complex, an institutional design that balances reliability with public understanding is indispensable.

If the concept gains social acceptance and continuous activation on all smartphones becomes mandatory, the existence of low-performance devices will become an issue, requiring a lightweight version of the Smartphone AI App with reduced processing load for such devices. However, if legal regulations establish minimum performance standards for public safety infrastructure, this concern could be eliminated. In that case, a common Smartphone AI App could be pre-installed with performance assurance by the Gatekeeper, and strict application of the VRAIO principle of “containment + reviewed output” could be enforced.

Furthermore, if sufficient device performance is ensured and the accuracy of urgency classification approaches 100%, the Central Control AI System could be simplified to function solely as a relay and routing hub. In this case, the Smartphone AI App could send Emergency Reports directly to the relevant agencies, faithfully realizing the initial design principle that “non-emergency information is never transmitted outside the smartphone,” thereby providing an even greater sense of assurance to smartphone owners.

5.2. Differences in Acceptance Depending on Political Systems

Attitudes toward the introduction of always-on monitoring via smartphones are expected to vary significantly depending on the political system. For example, in authoritarian states, social efficiency and public safety tend to take precedence over privacy, making the deployment of such surveillance systems relatively straightforward. Indeed, highly developed surveillance networks are believed to already be in operation in China [27,28].

By contrast, democratic states have legal frameworks in place to protect citizens’ rights and freedoms, and requirements such as auditing, accountability, and transparency are indispensable. Surveillance technologies that lack these elements are extremely unlikely to gain social acceptance. Accordingly, in democratic societies, the establishment of technical and institutional mechanisms that can reliably ensure privacy protection constitutes an urgent challenge.

To this end, the realization of “perfect privacy protection”—that is, privacy protection characterized by transparency, reliability, and the capacity to support broad social consensus—is indispensable.

In this context, the VRAIO framework proposed in this paper is not tailored to existing regulatory regimes in the European Union or the United States. Rather, its defining feature lies in providing a governance architecture in which rules governing AI outputs are socially defined and their compliance is verified and recorded by independent third parties. In other words, the specific content of output rules within VRAIO—such as reporting targets, severity thresholds, and permitted purposes of use—can be flexibly configured in accordance with national or regional legal systems, cultural contexts, and social values.
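One way to picture this configurability is to treat the output rules as data that each jurisdiction supplies, while the release check itself stays the same. The sketch below is only an assumption about how such rules could be represented: the regions, the 1 to 5 severity scale, the purpose labels, and the names `OutputRules` and `may_release` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutputRules:
    """Socially defined output rules for one jurisdiction (illustrative)."""
    region: str
    reporting_targets: frozenset   # crime categories subject to reporting
    severity_threshold: int        # minimum severity on an assumed 1-5 scale
    permitted_purposes: frozenset  # purposes for which output may be released


def may_release(rules: OutputRules, category: str, severity: int, purpose: str) -> bool:
    """The same check applies everywhere; only the rule set differs."""
    return (
        category in rules.reporting_targets
        and severity >= rules.severity_threshold
        and purpose in rules.permitted_purposes
    )


# Two hypothetical jurisdictions with different social agreements.
REGION_A = OutputRules(
    region="region_a",
    reporting_targets=frozenset({"terrorism", "kidnapping", "homicide"}),
    severity_threshold=4,
    permitted_purposes=frozenset({"emergency_dispatch"}),
)
REGION_B = OutputRules(
    region="region_b",
    reporting_targets=frozenset(
        {"terrorism", "kidnapping", "homicide", "robbery", "assault"}
    ),
    severity_threshold=3,
    permitted_purposes=frozenset({"emergency_dispatch"}),
)
```

Under these assumed rule sets, a robbery report of severity 4 would be released for emergency dispatch in the second region but withheld in the first, without any change to the checking logic.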

While international interoperability and regulatory harmonization would be desirable, they are not prerequisites for the viability of VRAIO. Instead, states or regions that place a high value on privacy protection may adopt VRAIO in configurations that operate on a country-specific or regionally bounded basis, consistent with their institutional realities. Although frameworks aligned with regulatory philosophies in the European Union and the United States may eventually emerge as de facto international standards, VRAIO is not predicated on subordination to any particular legal system and is designed to remain adaptable across diverse regulatory environments.

5.3. A Phased Approach Toward Societal Implementation

The societal implementation of the Smartphone as Societal Safety Guard concept should not proceed as an immediate, nationwide deployment of public safety infrastructure. Rather, it should advance through a gradual process that incrementally builds social acceptance and technological maturity. This paper envisions a realistic implementation pathway consisting of the following four phases.

In the initial phase, effective implementation focuses not on “public safety” in a broad sense, but on limited and voluntary use cases aimed at protecting individuals and their families. Representative use cases include:

- Parents providing smartphones to children to detect, record, and report signs of bullying or abduction.
- Automatic notification to family members or emergency services when elderly individuals living alone collapse or exhibit abnormal conditions at home.
- Detection of abnormal behavior in elderly individuals with wandering tendencies and notification to caregivers.
- Assistance for ordinary citizens by recording situations and supporting emergency reporting when they encounter criminal incidents.

All of these use cases are based on the explicit consent of the user or their family members and serve as a realistic entry point through which society can experience the protective value of smartphone-based AI speech recognition. At this stage, always-on analysis by smartphone AI is concretely demonstrated not merely as a convenience-enhancing function, but as a technology that directly supports the protection of life and physical safety.