Designing a System Architecture for Dynamic Data Collection as a Foundation for Knowledge Modeling in Industry

Abstract

1. Introduction

2. Related Work & Background

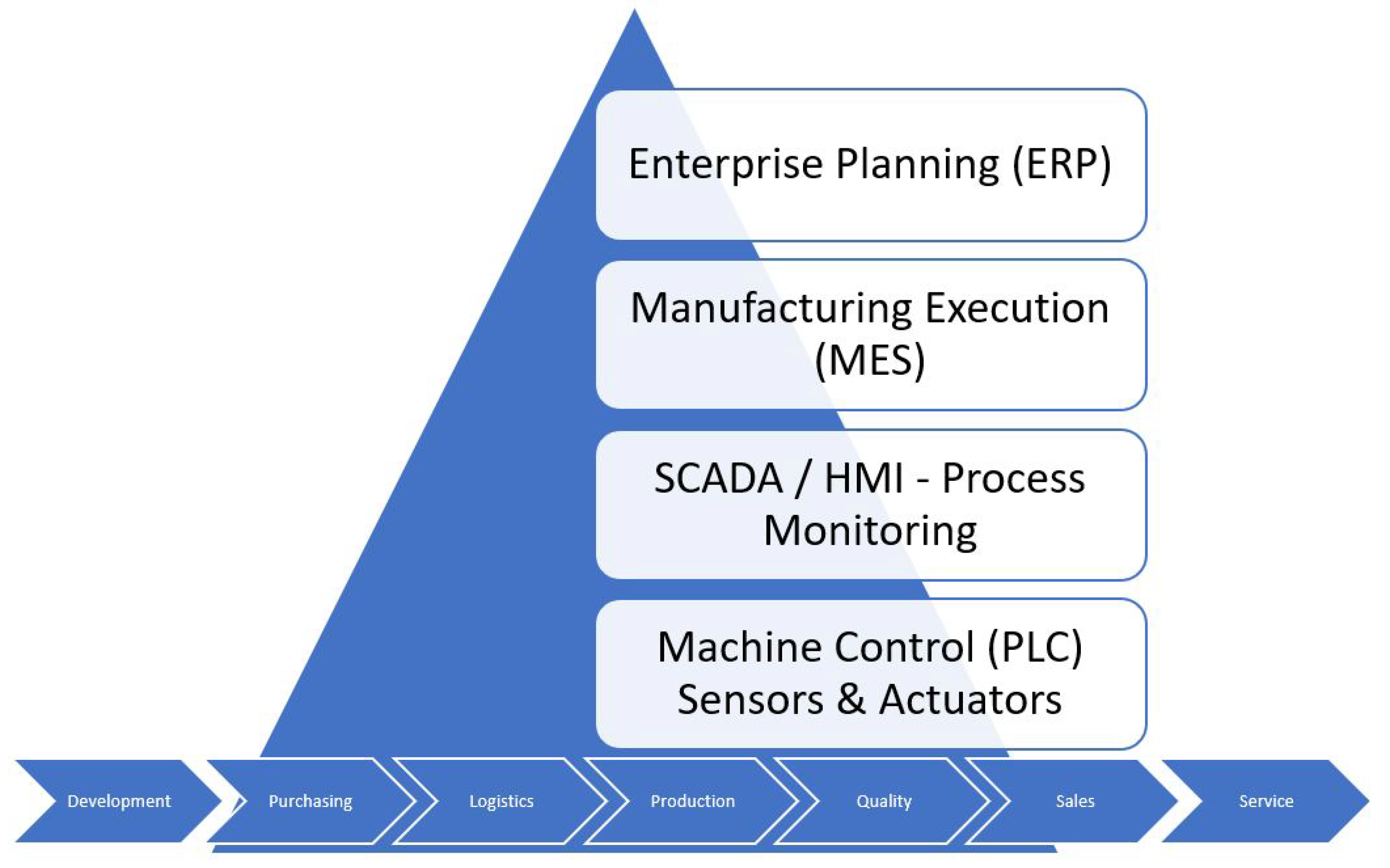

2.1. System Architectures for Industry 4.0

2.1.1. Reference Architectures for Industry 4.0

- RAMI 4.0 (Reference Architecture Model for Industry 4.0) offers a three-dimensional framework whose axes cover the product life cycle and value stream, the enterprise hierarchy levels, and the architecture layers [25]. It supports the planning and implementation of Industry 4.0 projects, with a focus on interoperability, standardization, and security.

- IIRA (Industrial Internet Reference Architecture) complements RAMI 4.0 by emphasizing the integration of information technology (IT) and operational technology (OT) systems in IIoT environments. It provides guidelines for architectural decisions, security strategies, and scalability [26].

2.1.2. Core Technologies for Industry 4.0

- Real-time data processing in industrial settings;

- Integration with programmable logic controllers (PLCs);

- Optimization of industrial networks through blockchain and security measures [31].

- Cloud-based simulations for production processes;

- E-learning systems for Industry 4.0 workforce training;

- Digital design platforms for collaborative industrial environments [33].

2.1.3. Communication Protocols and Interoperability

2.1.4. Data Analytics and Simulation of Production Processes

2.1.5. Maturity Models for Industry 4.0

- The RAMI 4.0 Maturity Index evaluates digital transformation progress across organization, technology, and process maturity [46].

- The Impuls Maturity Model is widely used to assess Industry 4.0 implementation levels [47].

- A comparative analysis of maturity models reveals a lack of universal standards [48].

2.1.6. Modular Approaches and Edge Computing

2.1.7. AI-Driven Methods

2.1.8. Integration of Heterogeneous Data Sources

2.1.9. Summary

2.2. Dynamic Data Acquisition

- Sensor layer: Collects data directly from machines or sensors.

- Edge computing layer: Pre-processes data to reduce latency and network congestion.

- Cloud layer: Stores, processes, and analyzes data using big data technologies and AI.

- Application layer: Provides processed data to interfaces and decision-support tools for users.
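The edge computing layer above reduces latency and network congestion by pre-processing data close to the source. The following Python sketch illustrates one common form of such pre-processing, assuming that the edge node forwards one summary (count, mean, minimum, maximum) per fixed time window instead of every raw sample. The names `Sample`, `WindowSummary`, and `aggregate` and the window length are hypothetical and do not describe a specific product.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Sample:
    t_ms: int      # acquisition timestamp in milliseconds (integer avoids float rounding)
    value: float   # raw sensor reading


@dataclass(frozen=True)
class WindowSummary:
    t_start_ms: int
    count: int
    mean: float
    minimum: float
    maximum: float


def _summarize(t_start_ms: int, values: list[float]) -> WindowSummary:
    return WindowSummary(t_start_ms, len(values), fmean(values), min(values), max(values))


def aggregate(samples: Iterable[Sample], window_ms: int) -> Iterator[WindowSummary]:
    """Collapse time-ordered samples into one summary per fixed, non-overlapping window."""
    if window_ms <= 0:
        raise ValueError("window_ms must be positive")
    current_key = None
    values: list[float] = []
    for s in samples:
        key = s.t_ms // window_ms  # index of the window this sample falls into
        if current_key is not None and key != current_key:
            yield _summarize(current_key * window_ms, values)
            values = []
        current_key = key
        values.append(s.value)
    if values:  # flush the last, possibly incomplete window
        yield _summarize(current_key * window_ms, values)
```

With 50 Hz input and a 1 s window, 100 raw samples shrink to two summaries. Whether such lossy reduction is acceptable depends on which signal features downstream analyses need.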

2.2.1. Comparison with Existing Architectures and Systems

2.2.2. Summary

2.3. Knowledge Modeling in Industrial Environments

2.3.1. Challenges in Implementing Knowledge Modeling for Industry 4.0

- Lack of skilled employees and necessary expertise.

- Inadequate technological infrastructure to support real-time data integration.

- Cybersecurity concerns, which require advanced security modeling and the automated allocation of security requirements.

2.3.2. The Role of Dynamic Capabilities and Quality Management in Industry 4.0

2.3.3. The Strategic Role of Knowledge Modeling in Industry 4.0

- Lack of vision and leadership from top management.

- Insufficient training programs and educational frameworks.

- Uncertainty regarding return on investment (ROI) for new technologies.

3. Scientific Methodology

- Discovery Phase: This phase involved a detailed analysis of the domain-specific problem in close collaboration with industrial stakeholders. Particular attention was given to challenges such as data heterogeneity, protocol fragmentation, and bottlenecks in knowledge transfer within real-world manufacturing environments.

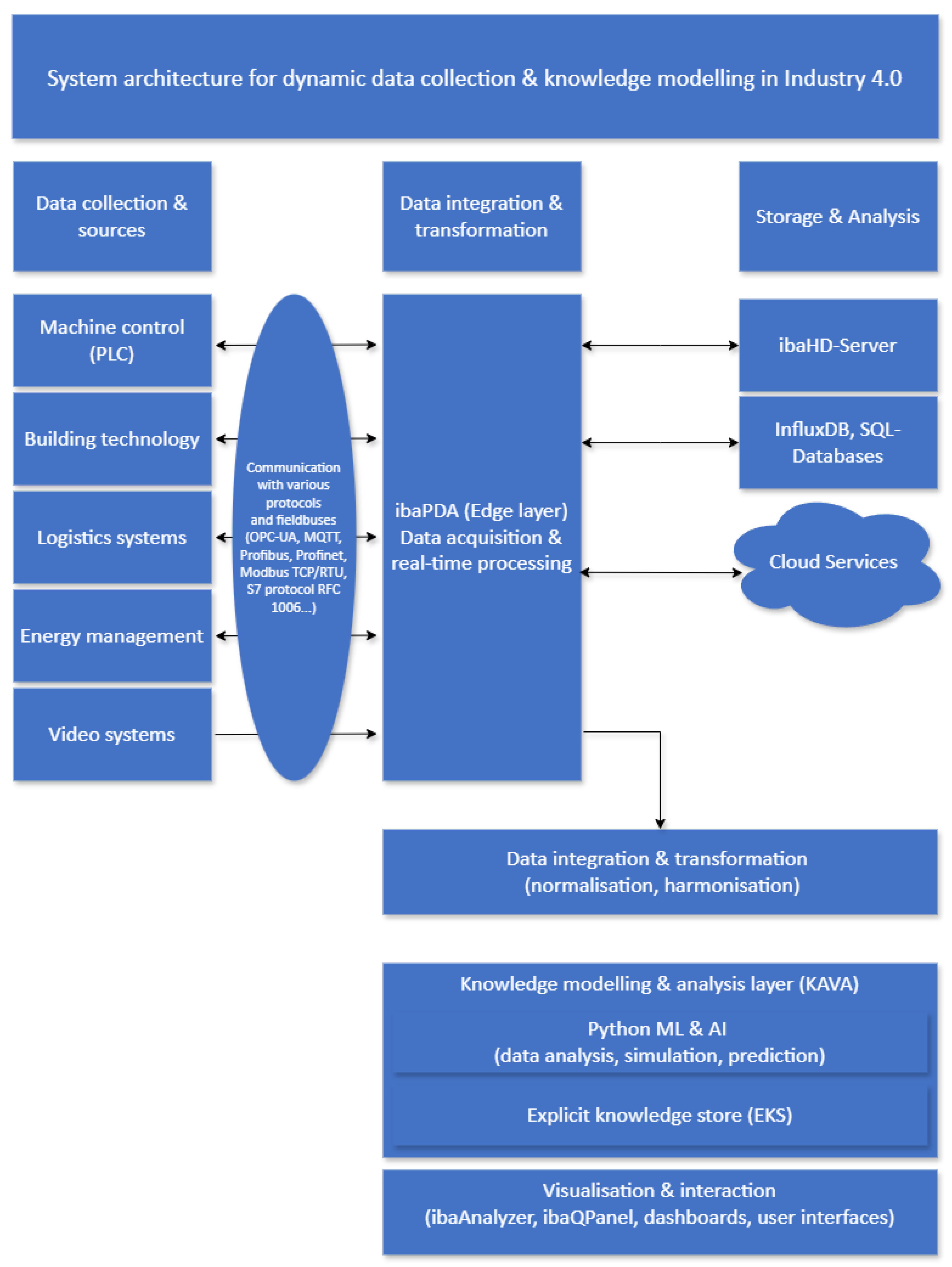

- Design Phase: Based on the insights gained during the discovery phase, a modular and scalable system architecture was designed to support real-time data acquisition and knowledge modeling. The architecture was developed with a focus on semantic interoperability, protocol integration, and human–machine interaction.

- Deployment Phase: The developed system was implemented and evaluated in a real industrial environment. Feedback from domain experts and iterative updates informed the validation and refinement of the solution.

The Knowledge Staircase as an Epistemic Model

- Data Acquisition: native protocol integration (e.g., OPC UA, Profibus);

- Data Normalization: timestamping, unit harmonization, semantic labeling;

- Information Aggregation: filtering, event detection, and data stream correlation;

- Knowledge Representation: model generation using ML/AI frameworks;

- Human Interpretation: dashboard interaction, parameter refinement, rule-based feedback.
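The data normalization and information aggregation stages of the staircase can be illustrated in a few lines. The Python sketch below, assuming a hypothetical tag-to-label table and linear unit conversions, turns a raw reading into a record with a UTC timestamp, a harmonized unit, and a semantic label, and then detects threshold-crossing events on the normalized stream. The tag address, labels, and threshold are hypothetical; the knowledge representation and human interpretation stages are not covered.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, Iterator

# Hypothetical mapping from raw controller addresses to semantic labels.
SEMANTIC_LABELS = {"DB10.DBD4": "coating.thickness"}

# Hypothetical linear unit conversions: source unit -> (target unit, factor).
UNIT_MAP = {"mm": ("um", 1000.0), "um": ("um", 1.0)}


@dataclass(frozen=True)
class Reading:
    label: str
    value: float
    unit: str
    timestamp: datetime


def normalize(tag: str, raw_value: float, raw_unit: str, epoch_ms: int) -> Reading:
    """Data normalization: UTC timestamp, harmonized unit, semantic label."""
    target_unit, factor = UNIT_MAP[raw_unit]  # KeyError signals an unmapped unit
    return Reading(
        label=SEMANTIC_LABELS.get(tag, tag),  # fall back to the raw address
        value=raw_value * factor,
        unit=target_unit,
        timestamp=datetime.fromtimestamp(epoch_ms / 1000, tz=timezone.utc),
    )


def rising_crossings(readings: Iterable[Reading], threshold: float) -> Iterator[Reading]:
    """Information aggregation: emit an event whenever a value rises above the threshold."""
    previous = None
    for r in readings:
        if previous is not None and previous.value <= threshold < r.value:
            yield r
        previous = r
```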

4. Problem Characterization and Abstraction

4.1. Challenges in Data Acquisition

4.1.1. Analysis of Existing System Architectures

4.1.2. Selection of Strategic Technology Partners for Modular Architecture Development

4.2. User Survey on HMI Design

- Methodology

4.2.1. Survey Questions

- Critical information display: Which process parameters or data points should always be visible on the HMI?

- Preferred interaction type: What input method do users find most efficient (e.g., text fields, dropdown menus, buttons, or list views)?

- Usage frequency: How often do users expect to interact with the HMI during their work shifts?

- Work-enhancing functions: What features or tools would improve their efficiency when using the HMI?

- Response time importance: How critical is fast system feedback for their work?

- Additional functionality: Are there specific features users would like to see added?

- Challenges and concerns: What difficulties do users anticipate when working with a new HMI?

4.2.2. Key Findings from the User Survey

- Critical information display: Users identified process parameters, alarms, system errors, and temperature readings as the most essential data points for their daily work. Additionally, speed indicators and timestamps were frequently mentioned as important elements to display.

- Preferred interaction type: Large buttons and dropdown menus were favored for their ease of use and ability to support quick and efficient navigation.

- Work-enhancing functions: Users highlighted the importance of clearly visible large buttons, color-coded indicators, and predefined text input fields to streamline workflows and reduce interaction time.

- Challenges and usability concerns: Commonly cited challenges included operability under difficult conditions (e.g., exposure to dirt or time constraints), which could impact the effectiveness of the HMI.

5. Materials and Methods

5.1. System Design Rationale

- ibaQPanel for the visualization of measurement data in real time (value progression, limit value violations, signal status);

- Configuration of the panels via the ibaPDA configuration editor.
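ibaQPanel panels are configured in the ibaPDA configuration editor rather than programmed, so the following Python sketch does not use any iba interface. It only illustrates the logic behind a color-coded limit indicator of the kind operators requested in the survey, assuming nested warning and alarm limits. The limit values and the names `Limits`, `Status`, and `classify` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    OK = "green"
    WARNING = "yellow"
    ALARM = "red"


@dataclass(frozen=True)
class Limits:
    alarm_low: float
    warn_low: float
    warn_high: float
    alarm_high: float

    def __post_init__(self) -> None:
        # The warning band must lie inside the alarm band.
        if not (self.alarm_low <= self.warn_low <= self.warn_high <= self.alarm_high):
            raise ValueError("expected alarm_low <= warn_low <= warn_high <= alarm_high")


def classify(value: float, limits: Limits) -> Status:
    """Map a measured value to a traffic-light status for a color-coded indicator."""
    if value < limits.alarm_low or value > limits.alarm_high:
        return Status.ALARM
    if value < limits.warn_low or value > limits.warn_high:
        return Status.WARNING
    return Status.OK
```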

5.2. Materials and Software Components

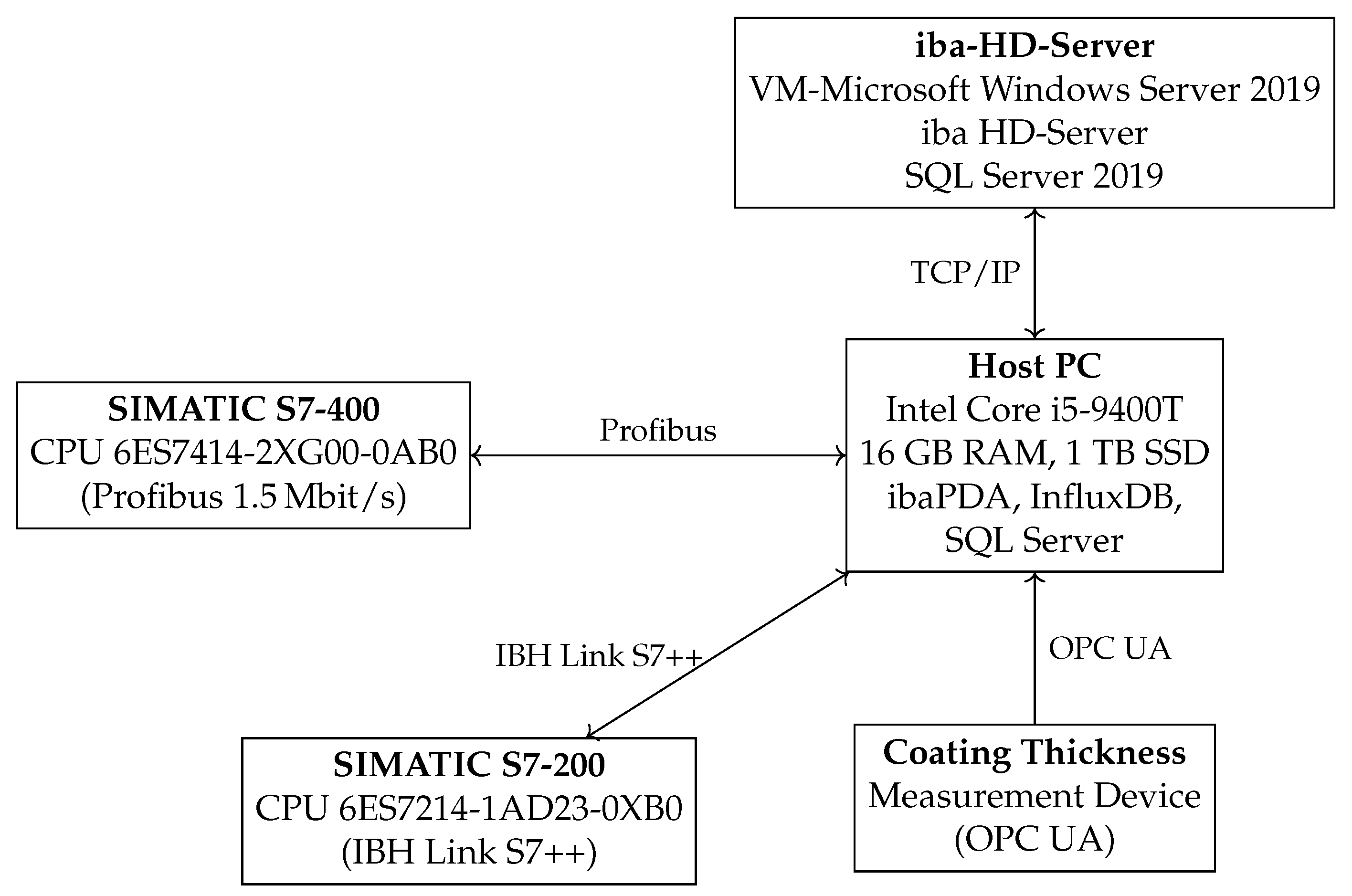

5.3. Experimental Setup

- Hardware:
  - Programmable Logic Controllers (PLCs):
    - S7-400 (CPU 6ES7414-2XG00-0AB0, Siemens AG, Munich, Germany), connected via Profibus at a bit rate of 1.5 Mbit/s.
    - S7-200 (CPU 6ES7214-1AD23-0XB0, Siemens AG, Munich, Germany), coupled via an IBH Link S7++ interface (IBHsoftec GmbH, Friedrichsdorf, Germany). This setup enables communication with older S7-200 systems that do not natively support modern fieldbus protocols.
  - Coating Thickness Measurement Device: A dedicated sensor for measuring lacquer coating thickness (manufacturer: NDC Technologies Ltd., Essex, UK), integrated via an OPC UA interface.
  - Host PC: Intel® Core i5-9400T CPU, 16 GB DDR4 RAM, three Ethernet interfaces at 1 Gbit/s, and a 1 TB SSD (Dell GmbH, Vienna, Austria). A Siemens CP 571 (6GK1571-1AA00) acted as the communication interface for receiving data from the S7-400 via Profibus. This machine served as the central data acquisition and processing node.

- Software Configuration:
  - Data Acquisition: ibaPDA (version 8.1), chosen for its real-time data handling capabilities and its compatibility with multiple industrial protocols.
  - Database Systems: InfluxDB for short-term time-series storage and an SQL-based solution (e.g., Microsoft SQL Server 2019) for long-term archiving and more complex queries.
  - Visualization: ibaQPanel for monitoring live signals and performing preliminary analyses (e.g., parameter tuning, status checks).

- Data Acquisition Parameters:
  - Sampling Rate: A frequency of 50 Hz (one sample every 20 ms) was selected based on preliminary trials, which indicated that critical process changes can occur in the sub-second range.
  - Fieldbus Throughput: The Profibus connection operates at 1.5 Mbit/s. For the S7-200 connected via the IBH Link S7++, the effective throughput depends on the PLC scan cycle and the link configuration, but it typically remained stable in our setup.
  - Network Traffic: Data throughput on the host PC reached 10–15 MB/s during peak load, which required Quality-of-Service (QoS) mechanisms on the local network to minimize packet collisions or loss.
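A back-of-the-envelope estimate helps relate sampling rates to network load. The Python sketch below computes the raw payload of each source, assuming 64-bit values and one 64-bit timestamp per acquisition cycle. The signal counts are hypothetical, and the result excludes protocol headers and any other traffic on the network, so it is not directly comparable with the measured peak throughput.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    name: str
    signals: int              # number of acquired signals (hypothetical)
    rate_hz: int              # sampling rate of this source
    bytes_per_value: int = 8  # assumption: one 64-bit value per signal
    bytes_per_stamp: int = 8  # assumption: one 64-bit timestamp per acquisition cycle

    def sample_interval_ms(self) -> float:
        return 1000 / self.rate_hz

    def payload_bytes_per_s(self) -> int:
        # Each cycle carries one timestamp plus one value per signal.
        return self.rate_hz * (self.bytes_per_stamp + self.signals * self.bytes_per_value)


def total_payload_mb_per_s(sources: list[Source]) -> float:
    """Raw payload only; protocol overhead and other network traffic are excluded."""
    return sum(s.payload_bytes_per_s() for s in sources) / 1_000_000
```

For example, a hypothetical 200-signal source at 50 Hz yields 50 × (8 + 200 × 8) = 80,400 bytes per second of raw payload.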

5.4. Rationale for Parameter Choices

5.5. Methodological Steps

5.5.1. Requirement Analysis

5.5.2. Concept Development

5.5.3. Prototyping and Implementation

- Integrate OPC UA, Modbus TCP, and native protocols for data retrieval from both legacy and modern controllers.

- Configure ibaHD-Server and InfluxDB for long- and short-term data storage, respectively.

- Provide an interactive interface (ibaQPanel) for real-time data display and initial analysis.
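The short-term store in this setup is InfluxDB, which ingests points in its text-based line protocol (`measurement,tag=value field=value timestamp`). The Python sketch below formats one point, assuming float fields, nanosecond timestamps, and hypothetical measurement, tag, and field names. It covers neither the ibaHD-Server side nor the transport (e.g., the InfluxDB HTTP write endpoint or an official client library).

```python
from typing import Mapping


def _escape(token: str) -> str:
    # Tag keys, tag values and field keys must escape commas, equals signs and spaces.
    return token.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def to_line(measurement: str, tags: Mapping[str, str],
            fields: Mapping[str, float], timestamp_ns: int) -> str:
    """Format one point in InfluxDB line protocol (float fields only; NaN/inf not handled)."""
    if not fields:
        raise ValueError("line protocol requires at least one field")
    head = measurement.replace(",", r"\,").replace(" ", r"\ ")  # measurements escape commas and spaces
    tag_part = "".join(f",{_escape(k)}={_escape(v)}" for k, v in sorted(tags.items()))
    field_part = ",".join(f"{_escape(k)}={float(v)!r}" for k, v in fields.items())
    return f"{head}{tag_part} {field_part} {timestamp_ns}"
```

Tags are sorted by key so that identical points always produce identical lines.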

5.5.4. Validation and User Testing

5.6. Data Security and Privacy

5.7. Summary

6. Validation

6.1. Validation of Control System Integration

6.2. Validation of Data Integration and Processing

6.3. Validation of Data Visualization and Interaction

6.4. Validation of Data Security and Compliance

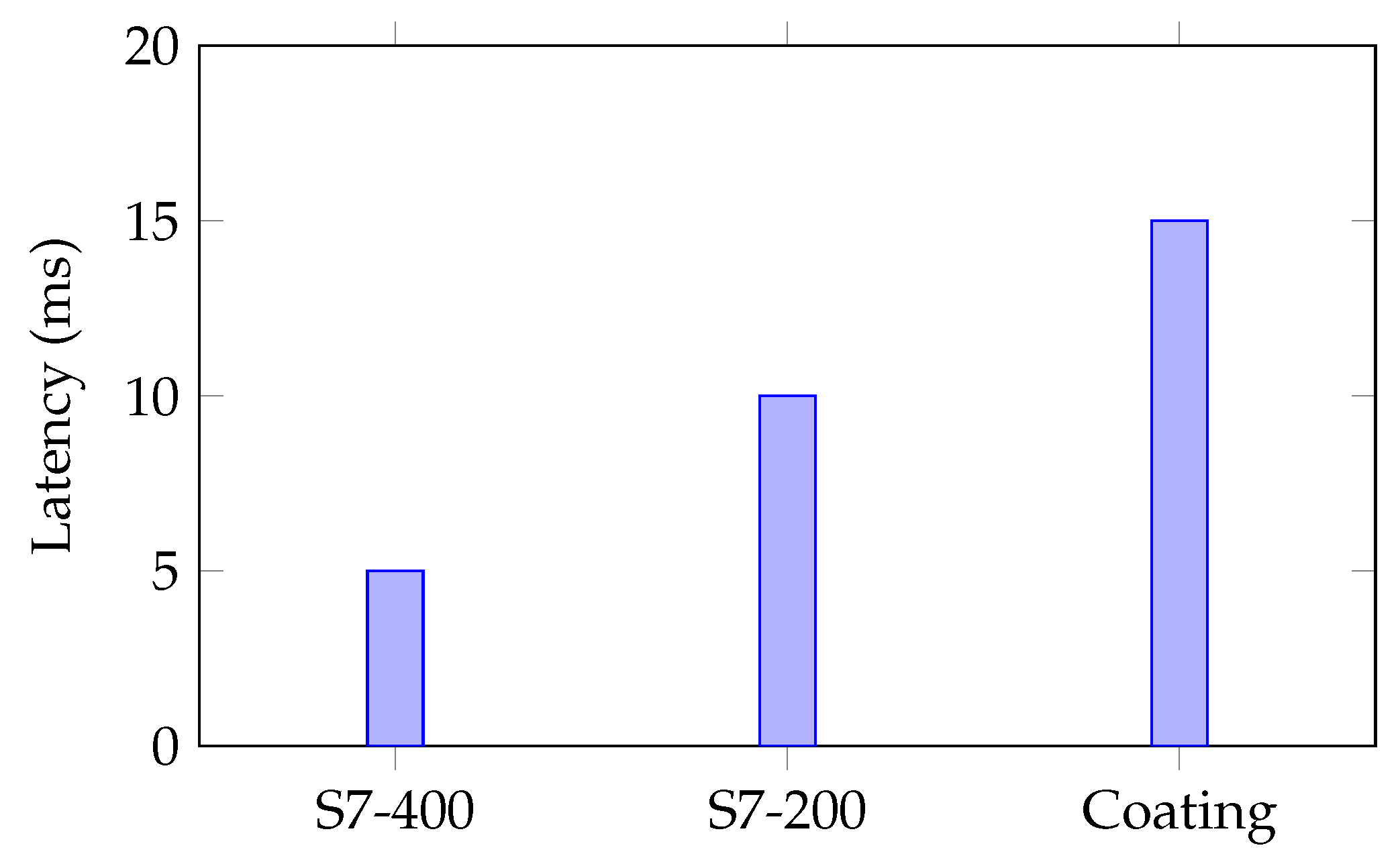

6.5. Quantitative Performance Results

- Scenario A: Only the S7-400 (Profibus) transmitting data at 50 Hz;

- Scenario B: Combined data acquisition from S7-400 (50 Hz) and S7-200 (20 Hz) via IBH Link S7++;

- Scenario C: Full integration (S7-400, S7-200, coating sensor at 10 Hz via OPC UA).
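The three scenarios lend themselves to a per-scenario latency summary. The Python sketch below computes the mean, 95th-percentile (nearest-rank), and maximum latency, plus the share of samples within a 50 ms budget, from (acquisition, arrival) timestamp pairs. It assumes both timestamps come from a common clock and is an illustrative evaluation helper, not necessarily the procedure used for the reported measurements.

```python
import math
from statistics import fmean
from typing import Iterable


def nearest_rank_percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p % of the data at or below it."""
    if not values or not 0 < p <= 100:
        raise ValueError("need non-empty values and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def summarize_latency(pairs: Iterable[tuple[float, float]], budget_ms: float = 50.0) -> dict[str, float]:
    """pairs: (acquired_ms, arrived_ms) per sample, both on a common clock."""
    latencies = [arrived - acquired for acquired, arrived in pairs]
    if not latencies:
        raise ValueError("no samples")
    return {
        "mean_ms": fmean(latencies),
        "p95_ms": nearest_rank_percentile(latencies, 95),
        "max_ms": max(latencies),
        "share_within_budget": sum(lat <= budget_ms for lat in latencies) / len(latencies),
    }
```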

6.6. Summary

7. Lessons Learned and Limitations

7.1. Limitations

- Integration Overhead: While OPC UA and native protocols provided broad compatibility, legacy controllers required dedicated configuration and testing effort, especially when intermediate gateways (e.g., IBH Link S7++) were used.

- Latency Tuning: Maintaining real-time responsiveness across all sources proved challenging during multi-source operation. The system required per-source buffer adjustments to ensure latency below 50 ms (see Table 1).

- UI Prototyping: The visualization layer was realized using ibaQPanel; a full implementation of the Knowledge-Assisted Visual Analytics (KAVA) concept is planned.
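The per-source buffer adjustments mentioned above follow from simple arithmetic: a buffer that is flushed once it holds N samples makes its oldest sample wait N − 1 sampling periods. The Python sketch below derives the largest admissible N from a sampling rate and a latency budget, assuming a count-based flush and attributing the entire budget to buffering. In practice, network and processing delays consume part of the budget, so the usable threshold is smaller. The function name is hypothetical.

```python
def max_flush_threshold(rate_hz: int, budget_ms: int) -> int:
    """Largest count-based flush threshold N whose buffering delay fits the latency budget.

    The oldest buffered sample waits (N - 1) * 1000 / rate_hz milliseconds.
    Solving (N - 1) * 1000 / rate_hz <= budget_ms for integer N gives the bound below.
    Integer arithmetic avoids floating-point rounding at exact boundaries.
    """
    if rate_hz <= 0 or budget_ms < 0:
        raise ValueError("rate_hz must be positive and budget_ms non-negative")
    return budget_ms * rate_hz // 1000 + 1
```

At 50 Hz, 20 Hz, and 10 Hz (the rates used in the validation scenarios), a 50 ms budget allows at most 3, 2, and 1 buffered samples, respectively.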

7.2. Lessons Learned

- Modularity Supports Resilience: Dynamic reconfiguration and the hot-swapping of modules proved feasible, even under active operation. This validated the modularity concept of the architecture.

- Protocol Heterogeneity is Manageable: The mixed use of OPC UA and native protocols did not cause system instability, confirming that the architecture’s semantic abstraction layer worked as intended.

- User Feedback is Crucial: Early-stage operator feedback significantly influenced UI layout and system messaging, underscoring the importance of human-centric design, even in highly technical environments.

8. Reflection

8.1. Strengths

8.2. Challenges and Limitations

- Technical Challenges: Integrating heterogeneous data sources was complex due to semantic inconsistencies and data reliability issues.

- System Complexity: Designing an architecture flexible enough for various industrial scenarios required significant effort and time.

- Data Simulation: Although historical data could be replayed, its analysis with machine learning (ML) techniques remains an open research question. An ML-based approach could detect patterns and anomalies and thereby enable predictive analysis and optimization, which requires further exploration.

8.3. Achievements

8.4. Areas for Improvement

- Technology Expansion: Investigating the integration of Siemens Edge and similar platforms for real-time data processing and edge computing.

- System Interoperability: Exploring hybrid systems that combine multiple architectures to enhance flexibility and adaptability.

- Machine Learning and AI: Developing predictive models for fault detection and process optimization, leveraging Python-based ML/AI frameworks.

- Human Factors and Knowledge Transfer: Investigating psychological barriers to knowledge sharing and technology acceptance, addressing concerns such as job security and resistance to automation.
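As a reference point for the fault-detection models mentioned above, the following Python sketch shows a streaming detector that processes one value at a time and keeps only constant-size state, which would also suit edge deployment. It is a simple statistical baseline (a z-score against a running mean and standard deviation maintained with Welford's algorithm), not a machine learning model. The threshold and warm-up length are hypothetical defaults.

```python
import math


class OnlineZScoreDetector:
    """Flags values far from the running mean, using Welford's online mean/variance update."""

    def __init__(self, threshold: float = 3.0, warmup: int = 30) -> None:
        if warmup < 2:
            raise ValueError("warmup must be at least 2 to estimate a variance")
        self.threshold = threshold
        self.warmup = warmup
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x: float) -> bool:
        """Return True if x is anomalous relative to past data, then fold x into the statistics."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            is_anomaly = std > 0 and abs(x - self.mean) / std > self.threshold
        # Welford update; anomalies are included here, excluding them is a design choice.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly
```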

9. Discussion, Contributions, and Future Directions

9.1. Overview of the Proposed Architecture

9.2. Knowledge Modeling and AI Integration

9.3. Semantic Interoperability and Standardization

9.4. Key Contributions

- Integrated Data Acquisition Across Heterogeneous Protocols: By enabling direct communication over OPC UA, Modbus TCP, Profibus, and native connections, the architecture unifies legacy and modern infrastructures, creating a more agile data flow and paving the way for streamlined knowledge modeling.

- Scalable Edge-Cloud Architecture: The combination of on-site edge gateways (for low-latency analytics) and cloud or database-based repositories (for long-term storage) ensures near-real-time anomaly detection while retaining large-scale historical data for in-depth process optimization.

- Continuous Knowledge Modeling (KAVA): By linking industrial signals to a flexible knowledge model, our system merges automated data processing with domain expertise. This supports advanced reasoning—e.g., trend detection, anomaly classification, and rule-based decision-making—in real time.

- Practical Validation in an Industrial Setting: Field trials under varied load conditions confirm the system's ability to handle frequent signal updates (sampling rates of up to 100 Hz) and data volumes of up to 10–15 MB/s, underscoring the approach's practical feasibility.
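The rule-based decision-making listed under the KAVA contribution can be illustrated with explicit knowledge encoded as condition/conclusion rules that domain experts can inspect and extend. The following Python sketch checks such rules against the latest snapshot of signal values. The rule names, signal labels, and thresholds are hypothetical and do not reproduce the authors' knowledge model.

```python
from dataclasses import dataclass
from typing import Callable, Mapping, Sequence

Snapshot = Mapping[str, float]  # latest value per semantic signal label


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[Snapshot], bool]
    conclusion: str


def evaluate(rules: Sequence[Rule], snapshot: Snapshot) -> list[str]:
    """Return the conclusions of all rules whose condition holds for the snapshot."""
    conclusions = []
    for rule in rules:
        try:
            if rule.condition(snapshot):
                conclusions.append(rule.conclusion)
        except KeyError:
            continue  # a signal required by this rule is missing; skip the rule
    return conclusions


# Hypothetical expert rules; labels and thresholds are illustrative only.
RULES = [
    Rule("thin_coating", lambda s: s["coating.thickness_um"] < 10.0, "coating below target"),
    Rule("hot_and_fast",
         lambda s: s["oven.temperature_c"] > 220.0 and s["line.speed_m_min"] > 80.0,
         "check curing at high line speed"),
]
```

Keeping rules as plain data makes it straightforward for experts to add or revise them without touching the acquisition pipeline.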

9.5. Implications for Industry 4.0

9.6. Future Directions

- Machine Learning for Predictive Maintenance: Incorporating online or streaming ML algorithms (e.g., incremental random forests) could facilitate proactive fault detection and adaptive maintenance strategies.

- Edge-Focused Computations at Higher Sampling Rates: Investigating frequencies above 100 Hz may be necessary for processes with extremely transient dynamics, where real-time classification or anomaly filtering is best handled directly at the edge.

- Hybrid Multi-Criteria Optimization: Extending the comparison of methods such as composite desirability versus TOPSIS can refine decision-making under multiple, potentially conflicting objectives (e.g., maximizing data fidelity versus minimizing computational overhead).

- Enhanced Semantic Modeling: Future work could explore ontology-based reasoning and semantic web technologies (RDF, SPARQL) for deeper knowledge extraction and broader enterprise-wide data reuse.

- Integration of Digital Twins: Real-time data from the proposed architecture can serve as a foundation for digital twin platforms, enabling virtual commissioning, “what-if” scenario analyses, and closed-loop feedback for continuous improvement.
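To make the multi-criteria comparison concrete, the following Python sketch implements standard TOPSIS: vector normalization, weighting, ideal and anti-ideal solutions, and relative closeness. The alternatives (candidate acquisition configurations), criterion values, and weights are hypothetical; composite desirability, the other method mentioned above, is not shown.

```python
import math
from typing import Sequence


def topsis(matrix: Sequence[Sequence[float]], weights: Sequence[float],
           benefit: Sequence[bool]) -> list[float]:
    """Return the TOPSIS closeness score (0..1, higher is better) of each alternative (row).

    benefit[j] is True if larger values of criterion j are better, False for cost criteria.
    """
    n = len(weights)
    if not matrix or len(benefit) != n or any(len(row) != n for row in matrix):
        raise ValueError("matrix rows, weights and benefit must cover the same criteria")
    # 1) Vector-normalize each criterion column, then 2) apply the criterion weights.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    weighted = [[weights[j] * (row[j] / norms[j] if norms[j] else 0.0) for j in range(n)]
                for row in matrix]
    # 3) Ideal (best per criterion) and anti-ideal (worst per criterion) solutions.
    columns = list(zip(*weighted))
    ideal = [max(c) if benefit[j] else min(c) for j, c in enumerate(columns)]
    anti_ideal = [min(c) if benefit[j] else max(c) for j, c in enumerate(columns)]
    # 4) Relative closeness to the ideal: d- / (d+ + d-).
    scores = []
    for row in weighted:
        d_plus = math.dist(row, ideal)
        d_minus = math.dist(row, anti_ideal)
        total = d_plus + d_minus
        scores.append(d_minus / total if total else 0.5)  # 0.5 when all alternatives coincide
    return scores


# Hypothetical alternatives: (data fidelity score, CPU load in %); fidelity is a benefit, load a cost.
configs = {"config_a": (0.9, 10.0), "config_b": (0.5, 30.0), "config_c": (0.7, 20.0)}
scores = topsis(list(configs.values()), weights=[0.6, 0.4], benefit=[True, False])
```

Here `config_a` is best on both criteria and therefore scores 1.0, while `config_b` coincides with the anti-ideal and scores 0.0.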

9.7. Potential Sources of Error and Recommended Countermeasures

- Network Bottlenecks: High traffic peaks on the local network occasionally caused packet collisions, leading to short-term increases in latency.

- PLC Scan Cycles: The scan time of older PLCs (particularly the S7-200) can vary depending on the active program logic, causing fluctuations in data arrival intervals.

- Sensor Calibration: The coating thickness measurement device can exhibit measurement drift over time if it is not recalibrated, which yields slight deviations from the nominal values stored in the knowledge model.

- Synchronization Delays: When multiple data streams (Profibus, IBH Link S7++, OPC UA) merge, time stamp alignment can become inconsistent unless carefully managed via a unified clock source or synchronization protocol.

- Quality-of-Service Optimization: Reserving a dedicated bandwidth segment for real-time data transmission and enabling priority queuing can minimize collisions under peak loads.

- Regular Calibration Cycles: Automating sensor calibration—especially for the coating measurement device—helps maintain data accuracy over long operating periods.

- Time Stamp Normalization: Introducing a master clock reference (e.g., via NTP) ensures that all incoming data streams are properly synchronized, reducing the risk of misaligned signals in the post-processing phase.
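The master-clock countermeasure relies on the clock offset that NTP derives from the four timestamps of a single request/response exchange. The Python sketch below reproduces that calculation and applies the resulting offset to one source's timestamps, assuming symmetric path delays. The function names are hypothetical, and in production, hosts would be synchronized by an NTP (or PTP) daemon rather than by hand-rolled code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClockEstimate:
    offset_ms: float  # add to local timestamps to obtain reference time
    delay_ms: float   # round-trip network delay


def estimate_offset(t0: float, t1: float, t2: float, t3: float) -> ClockEstimate:
    """NTP-style on-wire calculation from one request/response exchange.

    t0: request sent (local clock), t1: request received (reference clock),
    t2: response sent (reference clock), t3: response received (local clock).
    Assumes the outbound and return paths have equal delay.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2
    delay = (t3 - t0) - (t2 - t1)
    return ClockEstimate(offset_ms=offset, delay_ms=delay)


def to_reference_time(local_stamps_ms: list[float], estimate: ClockEstimate) -> list[float]:
    """Shift timestamps of one source onto the common reference clock."""
    return [t + estimate.offset_ms for t in local_stamps_ms]
```

For an exchange with t0 = 1000, t1 = 1105, t2 = 1106, and t3 = 1011 ms, the local clock is found to be 100 ms behind the reference, with a round-trip delay of 10 ms.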

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Drath, R.; Horch, A. Industrie 4.0: Hit or Hype? [Industry Forum]. IEEE Ind. Electron. Mag. 2014, 8, 56–58. [Google Scholar] [CrossRef]

- Helmut-Schmidt-Universität Hamburg; Gundlach, C.S.; Fay, A. Industrie 4.0 mit dem “Digitalen Zwilling” gestalten—Eine methodische Unterstützung bei der Auswahl der Anwendungen. Ind. 4.0 Manag. 2020, 36, 7–10. [Google Scholar] [CrossRef]

- Tinz, J.; Tinz, P.; Zander, S. Wissensmanagementmodelle für die Industrie 4.0: Eine Gegenüberstellung aktueller Ansätze. Z. Wirtsch. Fabr. 2019, 114, 404–407. [Google Scholar] [CrossRef]

- North, K.; Maier, R. Wissen 4.0—Wissensmanagement im digitalen Wandel. HMD Prax. Wirtsch. 2018, 55, 665–681. [Google Scholar] [CrossRef]

- Deuse, J.; Klinkenberg, R.; West, N. (Eds.) Industrielle Datenanalyse: Entwicklung einer Datenanalyse-Plattform für die Wertschaffende, Kompetenzorientierte Kollaboration in Dynamischen Wertschöpfungsnetzwerken; Springer Fachmedien: Wiesbaden, Germany, 2024. [Google Scholar] [CrossRef]

- Gräßler, I.; Oleff, C. Systems Engineering: Verstehen und Industriell Umsetzen; Springer: Berlin/Heidelberg, Germany, 2022. [Google Scholar] [CrossRef]

- Oztemel, E.; Gursev, S. Literature review of Industry 4.0 and related technologies. J. Intell. Manuf. 2020, 31, 127–182. [Google Scholar] [CrossRef]

- Sony, M.; Naik, S. Critical factors for the successful implementation of Industry 4.0: A review and future research direction. Prod. Plan. Control 2020, 31, 799–815. [Google Scholar] [CrossRef]

- Prause, M.; Weigand, J. Industry 4.0 and Object-Oriented Development: Incremental and Architectural Change. J. Technol. Manag. Innov. 2016, 11, 104–110. [Google Scholar] [CrossRef]

- Giustozzi, F.; Saunier, J.; Zanni-Merk, C. A semantic framework for condition monitoring in Industry 4.0 based on evolving knowledge bases. Semant. Web 2023, 15, 583–611. [Google Scholar] [CrossRef]

- Palmeira, J.; Coelho, G.; Carvalho, A.; Carvalhal, P.; Cardoso, P. Migrating legacy production lines into an Industry 4.0 ecosystem. In Proceedings of the 2022 IEEE 20th International Conference on Industrial Informatics (INDIN), Perth, Australia, 25–28 July 2022; pp. 429–434. [Google Scholar] [CrossRef]

- Wang, S.; Wan, J.; Li, D.; Liu, C. Knowledge Reasoning with Semantic Data for Real-Time Data Processing in Smart Factory. Sensors 2018, 18, 471. [Google Scholar] [CrossRef]

- Nagy, J.; Oláh, J.; Erdei, E.; Máté, D.; Popp, J. The Role and Impact of Industry 4.0 and the Internet of Things on the Business Strategy of the Value Chain—The Case of Hungary. Sustainability 2018, 10, 3491. [Google Scholar] [CrossRef]

- Piccarozzi, M.; Aquilani, B.; Gatti, C. Industry 4.0 in Management Studies: A Systematic Literature Review. Sustainability 2018, 10, 3821. [Google Scholar] [CrossRef]

- Federico, P.; Wagner, M.; Rind, A.; Amor-Amorós, A.; Miksch, S.; Aigner, W. The Role of Explicit Knowledge: A Conceptual Model of Knowledge-Assisted Visual Analytics. In Proceedings of the 2017 IEEE Conference on Visual Analytics Science and Technology (VAST), Phoenix, AZ, USA, 3–6 October 2017. [Google Scholar]

- Stoiber, C.; Wagner, M.; Ceneda, D.; Pohl, M.; Gschwandtner, T.; Miksch, S.; Streit, M.; Girardi, D.; Aigner, W. Knowledge-assisted Visual Analytics meets Guidance and Onboarding. In Proceedings of the IEEE Application Spotlight, Vancouver, BC, Canada, 20–25 October 2019. [Google Scholar]

- Andrienko, N.; Lammarsch, T.; Andrienko, G.; Fuchs, G.; Keim, D.; Miksch, S.; Rind, A. Viewing Visual Analytics as Model Building. Comput. Graph. Forum 2018, 37, 275–299. [Google Scholar] [CrossRef]

- Wagner, M.; Slijepcevic, D.; Horsak, B.; Rind, A.; Zeppelzauer, M.; Aigner, W. KAVAGait: Knowledge-Assisted Visual Analytics for Clinical Gait Analysis. IEEE Trans. Vis. Comput. Graph. 2019, 25, 1528–1542. [Google Scholar] [CrossRef] [PubMed]

- Rind, A.; Slijepcevic, D.; Zeppelzauer, M.; Unglaube, F.; Kranzl, A.; Horsak, B. Trustworthy Visual Analytics in Clinical Gait Analysis: A Case Study for Patients with Cerebral Palsy. In Proceedings of the 2022 IEEE Workshop on TRust and EXpertise in Visual Analytics (TREX), Oklahoma City, OK, USA, 16 October 2022; pp. 8–15. [Google Scholar] [CrossRef]

- Roth, A. (Ed.) Einführung und Umsetzung von Industrie 4.0; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar] [CrossRef]

- Andelfinger, V.P.; Hänisch, T. (Eds.) Industrie 4.0; Springer Fachmedien: Wiesbaden, Germany, 2017. [Google Scholar] [CrossRef]

- Junoing, W.; Wensheng, Z.; Youkang, S.; Shihui, D. Industrial Big Data Analytics: Challenges, Methodologies, and Applications. arXiv 2018, arXiv:1807.01016. [Google Scholar]

- SAP. Was Ist Industrie 4.0? SAP: Walldorf, Germany, 2023. [Google Scholar]

- Weber, C.; Wieland, M.; Reimann, P. Konzepte zur Datenverarbeitung in Referenzarchitekturen für Industrie 4.0: Konsequenzen bei der Umsetzung einer IT-Architektur. Datenbank-Spektrum 2018, 18, 39–50. [Google Scholar] [CrossRef]

- Melzer, B. Reference Architectural Model Industrie 4.0 (RAMI 4.0). 2018. Available online: https://www.plattform-i40.de/IP/Redaktion/EN/Downloads/Publikation/rami40-an-introduction.html (accessed on 28 April 2025).

- Young, D.T.T. The Industrial Internet Reference Architecture. 2022. Available online: https://www.engineering.com/iic-releases-industrial-internet-reference-architecture-v1-10/ (accessed on 28 April 2025).

- Kuhn, T. Digitaler Zwilling. Informatik-Spektrum 2017, 40, 440–444. [Google Scholar] [CrossRef]

- Geuer, L.; Ulber, R. Digitale Zwillinge in der naturwissenschaftlichen Bildung: Konstruktivistische Perspektive. Medien. Z. Theor. Prax. Medien. 2024, Occasional Papers, 69–94. [Google Scholar] [CrossRef]

- Follath, A.; Bross, F.; Galka, S. Vorgehensmodell zur Erstellung Digitaler Zwillinge für Produktion und Logistik. Z. Wirtsch. Fabr. 2022, 117, 691–696. [Google Scholar] [CrossRef]

- Mandic, Z.; Stankovski, S.; Ostojic, G.; Popovic, B. Potential of Edge Computing PLCs in Industrial Automation. In Proceedings of the 2022 21st International Symposium INFOTEH-JAHORINA (INFOTEH), East Sarajevo, Bosnia and Herzegovina, 16–18 March 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Wu, Y.; Dai, H.N.; Wang, H. Convergence of Blockchain and Edge Computing for Secure and Scalable IIoT Critical Infrastructures in Industry 4.0. IEEE Internet Things J. 2021, 8, 2300–2317. [Google Scholar] [CrossRef]

- Gui, A.; Fernando, Y.; Shaharudin, M.S.; Mokhtar, M.; Karmawan, I.G.M.; Suryanto. Drivers of Cloud Computing Adoption in Small Medium Enterprises of Indonesia Creative Industry. JOIV Int. J. Inform. Vis. 2021, 5, 69–75. [Google Scholar] [CrossRef]

- Kyratzi, S.; Azariadis, P. Cloud Computing as a Platform for Design-Oriented Applications. In Proceedings of the 24th Pan-Hellenic Conference on Informatics, Athens, Greece, 20–22 November 2020; pp. 226–228. [Google Scholar] [CrossRef]

- Saif, Y.; Yusof, Y.; Rus, A.Z.M.; Ghaleb, A.M.; Mejjaouli, S.; Al-Alimi, S.; Didane, D.H.; Latif, K.; Abdul Kadir, A.Z.; Alshalabi, H.; et al. Implementing circularity measurements in industry 4.0-based manufacturing metrology using MQTT protocol and Open CV: A case study. PLoS ONE 2023, 18, e0292814. [Google Scholar] [CrossRef] [PubMed]

- Aknin, R.; Bentaleb, Y. Enhanced MQTT Architecture for Smart Supply Chain. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 861–869. [Google Scholar] [CrossRef]

- Balduino Lopes, G.; Fernandes, R.F., Jr. A remote MQTT-based data monitoring system for energy efficiency in industrial environments. VETOR—Rev. Ciênc. Exatas Eng. 2021, 31, 25–35. [Google Scholar] [CrossRef]

- Leitner, S.H.; Mahnke, W. OPC UA—Service-Oriented Architecture for Industrial Applications. 2006. Available online: https://dl.gi.de/items/2139da16-3041-40a3-957a-8ca600bf4c23 (accessed on 28 April 2025).

- Trifonov, H.; Heffernan, D. OPC UA TSN: A next-generation network for Industry 4.0 and IIoT. Int. J. Pervasive Comput. Commun. 2023, 19, 386–411. [Google Scholar] [CrossRef]

- Vijayakumar, K. Concurrent Engineering: Research and Applications (CERA)—An international journal: Special issue on “Data Analytics in Industrial Internet of Things (IIoT)”. Concurr. Eng. 2021, 29, 82–83. [Google Scholar] [CrossRef]

- Yan, H.; Wan, J.; Zhang, C.; Tang, S.; Hua, Q.; Wang, Z. Industrial Big Data Analytics for Prediction of Remaining Useful Life Based on Deep Learning. IEEE Access 2018, 6, 17190–17197. [Google Scholar] [CrossRef]

- Lade, P.; Ghosh, R.; Srinivasan, S. Manufacturing Analytics and Industrial Internet of Things. IEEE Intell. Syst. 2017, 32, 74–79. [Google Scholar] [CrossRef]

- Matthiesen, S.; Paetzold-Byhain, K.; Wartzack, S. Virtuelle Inbetriebnahme mit dem digitalen Zwilling. Konstruktion 2023, 75, 54–57. [Google Scholar] [CrossRef]

- Cramer, S.; Huber, M.; Knott, A.L.; Schmitt, R.H. Wertschöpfung in Industrie 4.0: Virtuelle 100%-Prüfung durch Predictive Quality. Z. Wirtsch. Fabr. 2023, 118, 344–349. [Google Scholar] [CrossRef]

- Laroque, C.; Löffler, C.; Scholl, W.; Schneider, G. Einsatzmöglichkeiten der Rückwärtssimulation zur Produktionsplanung in der Halbleiterfertigung. In Proceedings ASIM SST 2020; ARGESIM Publisher: Vienna, Austria, 2020; pp. 397–401. [Google Scholar] [CrossRef]

- Santos, R.C.; Martinho, J.L. An Industry 4.0 maturity model proposal. J. Manuf. Technol. Manag. 2019, 31, 1023–1043. [Google Scholar] [CrossRef]

- Felippes, B.; Da Silva, I.; Barbalho, S.; Adam, T.; Heine, I.; Schmitt, R. 3D-CUBE readiness model for industry 4.0: Technological, organizational, and process maturity enablers. Prod. Manuf. Res. 2022, 10, 875–937. [Google Scholar] [CrossRef]

- Altan Koyuncu, C.; Aydemir, E.; Başarır, A.C. Selection Industry 4.0 maturity model using fuzzy and intuitionistic fuzzy TOPSIS methods for a solar cell manufacturing company. Soft Comput. 2021, 25, 10335–10349. [Google Scholar] [CrossRef]

- Ünlü, H.; Demirörs, O.; Garousi, V. Readiness and maturity models for Industry 4.0: A systematic literature review. J. Softw. Evol. Process. 2023, 36, e2641. [Google Scholar] [CrossRef]

- Hästbacka, D.; Jaatinen, A.; Hoikka, H.; Halme, J.; Larrañaga, M.; More, R.; Mesiä, H.; Björkbom, M.; Barna, L.; Pettinen, H.; et al. Dynamic and Flexible Data Acquisition and Data Analytics System Software Architecture. In Proceedings of the 2019 IEEE SENSORS, Montreal, QC, Canada, 27–30 October 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Martínez, P.; Dintén, R.; Drake, J.; Zorrilla, M.E. A big data-centric architecture metamodel for Industry 4.0. Future Gener. Comput. Syst. 2021, 125, 263–284. [Google Scholar] [CrossRef]

- LeClair, A.; Jaskolka, J.; MacCaull, W.; Khédri, R. Architecture for ontology-supported multi-context reasoning systems. Data Knowl. Eng. 2022, 140, 102044. [Google Scholar] [CrossRef]

- Rossit, D.; Tohmé, F. Knowledge representation in Industry 4.0 scheduling problems. Int. J. Comput. Integr. Manuf. 2022, 35, 1172–1187. [Google Scholar] [CrossRef]

- Havard, V.; Sahnoun, M.; Bettayeb, B.; Duval, F.; Baudry, D. Data architecture and model design for Industry 4.0 components integration in cyber-physical production systems. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2020, 235, 2338–2349. [Google Scholar] [CrossRef]

- Trunzer, E.; Calá, A.; Leitão, P.; Gepp, M.; Kinghorst, J.; Lüder, A.; Schauerte, H.; Reifferscheid, M.; Vogel-Heuser, B. System architectures for Industrie 4.0 applications. Prod. Eng. 2019, 13, 247–257. [Google Scholar] [CrossRef]

- Martikkala, A.; Wiikinkoski, O.; Asadi, R.; Queguineur, A.; Ylä-Autio, A.; Flores Ituarte, I. Industrial IoT system for laser-wire direct energy deposition: Data collection and visualization of manufacturing process signals. IOP Conf. Ser. Mater. Sci. Eng. 2023, 1296, 012006. [Google Scholar] [CrossRef]

- Ho, M.H.; Yen, H.C.; Lai, M.Y.; Liu, Y.T. Implementation of DDS Cloud Platform for Real-time Data Acquisition of Sensors. In Proceedings of the 2021 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Hualien City, Taiwan, 16–19 November 2021; pp. 1–2. [Google Scholar] [CrossRef]

- Bosi, F.; Corradi, A.; Foschini, L.; Monti, S.; Patera, L.; Poli, L.; Solimando, M. Cloud-enabled Smart Data Collection in Shop Floor Environments for Industry 4.0. In Proceedings of the 2019 15th IEEE International Workshop on Factory Communication Systems (WFCS), Sundsvall, Sweden, 27–29 May 2019; pp. 1–8. [Google Scholar] [CrossRef]

- Pinheiro, J.; Pinto, R.; Gonçalves, G.; Ribeiro, A. Lean 4.0: A Digital Twin approach for automated cycle time collection and Yamazumi analysis. In Proceedings of the 2023 3rd International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Tenerife, Canary Islands, Spain, 19–21 July 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Oliveira, M.; Afonso, D. Industry Focused in Data Collection: How Industry 4.0 is Handled by Big Data. In Proceedings of the 2019 2nd International Conference on Data Science and Information Technology, Seoul, Republic of Korea, 19–21 July 2019; pp. 12–18. [Google Scholar] [CrossRef]

- Gao, Z.; Cao, J.; Wang, W.; Zhang, H.; Xu, Z. Online-Semisupervised Neural Anomaly Detector to Identify MQTT-Based Attacks in Real Time. Secur. Commun. Netw. 2021, 2021, 4587862. [Google Scholar] [CrossRef]

- An-dong, S.; Fang, Z. Research on Open Source Solutions of Data Collection for Industrial Internet of Things. In Proceedings of the 2021 7th International Symposium on Mechatronics and Industrial Informatics (ISMII), Zhuhai, China, 22–24 January 2021; pp. 180–183.

- Yu, W.; Liang, F.; He, X.; Hatcher, W.G.; Lu, C.; Lin, J.; Yang, X. A Survey on the Edge Computing for the Internet of Things. IEEE Access 2018, 6, 6900–6919.

- Rocha, M.S.; Sestito, G.S.; Dias, A.L.; Turcato, A.C.; Brandão, D.; Ferrari, P. On the performance of OPC UA and MQTT for data exchange between industrial plants and cloud servers. Acta IMEKO 2019, 8, 80.

- Peniak, P.; Holečko, P.; Bubeníková, E.; Kanáliková, A. LoRaWAN Sensors Integration for Manufacturing Applications via Edge Device Model with OPC UA. In Proceedings of the 2023 International Conference on Applied Electronics (AE), Pilsen, Czech Republic, 6–7 September 2023; pp. 1–6.

- Brecko, A.; Burda, F.; Papcun, P.; Kajati, E. Applicability of OPC UA and REST in Edge Computing. In Proceedings of the 2022 IEEE 20th Jubilee World Symposium on Applied Machine Intelligence and Informatics (SAMI), Poprad, Slovakia, 2–5 March 2022; pp. 255–260.

- Ehrlich, M.; Wisniewski, L.; Trsek, H.; Jasperneite, J. Modelling and automatic mapping of cyber security requirements for industrial applications: Survey, problem exposition, and research focus. In Proceedings of the 2018 14th IEEE International Workshop on Factory Communication Systems (WFCS), Imperia, Italy, 13–15 June 2018; pp. 1–9.

- Ng, T.C.; Ghobakhloo, M. Energy sustainability and industry 4.0. IOP Conf. Ser. Earth Environ. Sci. 2020, 463, 012090.

- Souza, F.F.D.; Corsi, A.; Pagani, R.N.; Balbinotti, G.; Kovaleski, J.L. Total quality management 4.0: Adapting quality management to Industry 4.0. TQM J. 2022, 34, 749–769.

- Prifti, L.; Knigge, M.; Kienegger, H.; Krcmar, H. A Competency Model for “Industrie 4.0” Employees. 2017. Available online: https://aisel.aisnet.org/wi2017/track01/paper/4/ (accessed on 28 April 2025).

- Gupta, A.; Kr Singh, R.; Kamble, S.; Mishra, R. Knowledge management in industry 4.0 environment for sustainable competitive advantage: A strategic framework. Knowl. Manag. Res. Pract. 2022, 20, 878–892.

- Ahmed baha Eddine, A.; Silva, C.; Ferreira, L. Transforming for Sustainability: Total Quality Management and Industry 4.0 Integration with a Dynamic Capability View. In Proceedings of the International Conference on Industrial Engineering and Operations Management, Lisbon, Portugal, 18–20 July 2023.

- Bakhtari, A.R.; Waris, M.M.; Sanin, C.; Szczerbicki, E. Evaluating Industry 4.0 Implementation Challenges Using Interpretive Structural Modeling and Fuzzy Analytic Hierarchy Process. Cybern. Syst. 2021, 52, 350–378.

- Sedlmair, M.; Meyer, M.; Munzner, T. Design study methodology: Reflections from the trenches and the stacks. IEEE Trans. Vis. Comput. Graph. 2012, 18, 2431–2440.

- Koch, C. Data Integration Against Multiple Evolving Autonomous Schemata. Master’s Thesis, Institut für medizinische Kybernetik und Artificial Intelligence, Universität Wien, Vienna, Austria, 2001.

- Sodiya, E.O.; Umoga, U.J.; Obaigbena, A.; Jacks, B.S.; Ugwuanyi, E.D.; Daraojimba, A.I.; Lottu, O.A. Current state and prospects of edge computing within the Internet of Things (IoT) ecosystem. Int. J. Sci. Res. Arch. 2024, 11, 1863–1873.

- Lu, S.; Lu, J.; An, K.; Wang, X.; He, Q. Edge Computing on IoT for Machine Signal Processing and Fault Diagnosis: A Review. IEEE Internet Things J. 2023, 10, 11093–11116.

- Krupitzer, C.; Müller, S.; Lesch, V.; Züfle, M.; Edinger, J.; Lemken, A.; Schäfer, D.; Kounev, S.; Becker, C. A Survey on Human Machine Interaction in Industry 4.0. arXiv 2020, arXiv:2002.01025.

- Villani, V.; Sabattini, L.; Zanelli, G.; Callegati, E.; Bezzi, B.; Baranska, P.; Mockallo, Z.; Zolnierczyk-Zreda, D.; Czerniak, J.N.; Nitsch, V.; et al. A User Study for the Evaluation of Adaptive Interaction Systems for Inclusive Industrial Workplaces. IEEE Trans. Autom. Sci. Eng. 2022, 19, 3300–3310.

- Reinhart, G. (Ed.) Handbuch Industrie 4.0: Geschäftsmodelle, Prozesse, Technik; Hanser: München, Germany, 2017.

- Qaisi, H.A.; Quba, G.Y.; Althunibat, A.; Abdallah, A.; Alzu’bi, S. An Intelligent Prototype for Requirements Validation Process Using Machine Learning Algorithms. In Proceedings of the 2021 International Conference on Information Technology (ICIT), Amman, Jordan, 14–15 July 2021; pp. 870–875.

- Anjum, R.; Azam, F.; Anwar, M.W.; Amjad, A. A Meta-Model to Automatically Generate Evolutionary Prototypes from Software Requirements. In Proceedings of the 2019 7th International Conference on Computer and Communications Management, Bangkok, Thailand, 27–29 July 2019; pp. 131–136.

- Martelli, C. A Point of View on New Education for Smart Citizenship. Future Internet 2017, 9, 4.

- IBA AG. 2024. Available online: https://www.iba-ag.com/en/security/iba-2024-03 (accessed on 28 April 2025).

- Werner, F.; Woitsch, R. Data Processing in Industrie 4.0: Data Analysis and Knowledge Management in Industrie 4.0. Datenbank-Spektrum 2018, 18, 15–25.

- Cobb, C.; Sudar, S.; Reiter, N.; Anderson, R.; Roesner, F.; Kohno, T. Computer Security for Data Collection Technologies. In Proceedings of the Eighth International Conference on Information and Communication Technologies and Development, Ann Arbor, MI, USA, 3–6 June 2016; pp. 1–11.

- McDonald, A.; Leyhane, T. Drill down with root cause analysis. Nurs. Manag. 2005, 36, 26–31; quiz 31–32.

- Horvat, D.; Som, O. Wettbewerbsvorteile durch informationsbasierten Wissensvorsprung. In Industrie 4.0 für die Praxis; Wagner, R.M., Ed.; Springer Fachmedien: Wiesbaden, Germany, 2018; pp. 185–200.

- WKO. EU-Datenschutz-Grundverordnung (DSGVO): Grundsätze und Rechtmäßigkeit der Verarbeitung. 2024. Available online: https://www.wko.at/datenschutz/eu-dsgvo-grundsaetze-verarbeitung (accessed on 28 April 2025).

| Data Source | Protocol/Path | Sampling Rate | Latency (ms) |
|---|---|---|---|
| S7-400 | Profibus (1.5 Mbit/s) | 50 Hz | 5.2 ± 0.8 |
| S7-200 | IBH Link S7++ (Ethernet) | 20 Hz | 10.3 ± 1.5 |
| Coating Sensor | OPC UA | 10 Hz | 15.6 ± 2.1 |
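The sampling rates and latencies in the table can be related by a small calculation. One simple sanity check for a polled source is whether its acquisition latency fits comfortably within one sampling period T = 1000 / f (in ms). The Python sketch below encodes the three rows and computes that ratio. It assumes the ± values are standard deviations and uses a mean + 3σ margin purely for illustration; the class, field, and function names are hypothetical and not part of the system described in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    """One table row. Names are hypothetical; the ± value is assumed to be a standard deviation."""
    name: str
    path: str
    rate_hz: float
    latency_mean_ms: float
    latency_sd_ms: float

    @property
    def period_ms(self) -> float:
        # Sampling period in milliseconds: T = 1000 / f
        return 1000.0 / self.rate_hz

    def latency_fraction(self, k: float = 0.0) -> float:
        # Share of one sampling period consumed by (mean + k * sd) latency;
        # k = 3 is an illustrative safety margin, not a value from the article
        return (self.latency_mean_ms + k * self.latency_sd_ms) / self.period_ms


# Values copied from the table above
SOURCES = [
    Source("S7-400", "Profibus (1.5 Mbit/s)", 50.0, 5.2, 0.8),
    Source("S7-200", "IBH Link S7++ (Ethernet)", 20.0, 10.3, 1.5),
    Source("Coating Sensor", "OPC UA", 10.0, 15.6, 2.1),
]

if __name__ == "__main__":
    for s in SOURCES:
        print(
            f"{s.name:15s} T={s.period_ms:6.1f} ms  "
            f"mean/T={s.latency_fraction():.3f}  "
            f"(mean+3sd)/T={s.latency_fraction(3):.3f}"
        )
```

With the tabulated values, the mean latency of each source occupies roughly 16 to 26 percent of its sampling period (20 ms, 50 ms, and 100 ms respectively), so even the illustrative 3σ margin stays well below one period.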

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Radlbauer, E.; Moser, T.; Wagner, M. Designing a System Architecture for Dynamic Data Collection as a Foundation for Knowledge Modeling in Industry. Appl. Sci. 2025, 15, 5081. https://doi.org/10.3390/app15095081

Radlbauer E, Moser T, Wagner M. Designing a System Architecture for Dynamic Data Collection as a Foundation for Knowledge Modeling in Industry. Applied Sciences. 2025; 15(9):5081. https://doi.org/10.3390/app15095081

Chicago/Turabian Style: Radlbauer, Edmund, Thomas Moser, and Markus Wagner. 2025. "Designing a System Architecture for Dynamic Data Collection as a Foundation for Knowledge Modeling in Industry" Applied Sciences 15, no. 9: 5081. https://doi.org/10.3390/app15095081

APA Style: Radlbauer, E., Moser, T., & Wagner, M. (2025). Designing a System Architecture for Dynamic Data Collection as a Foundation for Knowledge Modeling in Industry. Applied Sciences, 15(9), 5081. https://doi.org/10.3390/app15095081