Abstract

To correct significant heading calculation errors in polarized light navigation for unmanned aerial vehicles (UAVs) in tilted states, this paper proposes a method for compensating horizontal attitude angles based on horizon detection. First, a defogging enhancement algorithm that integrates Retinex theory with the dark channel prior is adopted to improve image quality in low-illumination and hazy environments. Second, a dynamic threshold segmentation method in the HSV (Hue, Saturation, and Value) color space is proposed for robust horizon region extraction. It is combined with an improved Canny operator based on adaptive bilateral filtering for edge detection, which balances detail preservation and noise suppression. Then, the progressive probabilistic Hough transform is used to extract the parameters of the horizon line efficiently. The calculated horizontal attitude angles are used to convert the body frame to the navigation frame, which compensates for polarization orientation errors. Airborne experiments show that the horizontal attitude angle estimation error remains within 0.3°. They also show that compensation improves heading accuracy by approximately 77.4% relative to the uncompensated heading, validating the effectiveness of the proposed algorithm.

1. Introduction

Insects such as desert ants, locusts, and bees navigate by sensing skylight polarization through their specialized compound eyes. This discovery gave rise to a novel navigation method, bionic polarized light navigation [1,2]. Polarized light navigation has become a prominent research topic owing to its low sensitivity to environmental changes, information-rich signal, adaptability to special environments, and strong resistance to interference. In practice, however, airborne vehicles such as drones, missiles, and rockets tilt while maneuvering in three-dimensional space, and ground vehicles and robots tilt when operating on uneven terrain. Such tilt causes the polarized light sensor to deviate from the zenith, introducing tilt errors into heading calculations based on bionic polarized light. In addition, UAVs are susceptible to wind-induced pitch and roll disturbances. At wind speeds of approximately 10 knots, the RMSEs of pitch and roll reach 9.9868° and 3.9183°, respectively, which can degrade navigation accuracy [3]. In these cases, measuring the horizontal attitude angles becomes critical, because they describe the carrier's tilt state and allow sensor data to be transformed from the body frame to the navigation frame. A reliable attitude measurement method is therefore urgently needed to provide accurate attitude information to polarized light navigation systems and to correct tilt-induced errors in polarized light measurements.

UAV attitude can be measured with diverse approaches and technologies. Common approaches include inertial navigation based on an inertial measurement unit (IMU), satellite navigation (GPS), geomagnetic navigation, celestial navigation, and visual navigation.

- The IMU offers strong autonomy and comprehensive information, but its navigation errors accumulate over time and must be compensated through algorithms or supplementary sensors [4].
- GPS provides global, all-weather positioning in open areas. However, where signals are obstructed, as among urban high-rise buildings or in dense forests, GPS signals may be lost or disrupted, which reduces positioning accuracy [5].
- Geomagnetic navigation exploits the distribution of Earth's magnetic field and requires no signal transmission, which offers good concealment. However, it is sensitive to the environment, performs poorly in dynamic conditions, and provides only a heading reference [6].
- Celestial navigation, based on the passive reception of celestial radiation, offers high autonomy and strong anti-interference capability. Nevertheless, star trackers are costly, and star pattern-matching algorithms are computationally demanding with poor real-time performance [7].
- Visual attitude measurement methods use visual sensors to capture images of the environment and identify feature points or targets in them to infer UAV position and orientation [8,9]. Compared with conventional measurement techniques, visual sensors capture rich environmental information and offer low power consumption, light weight, high cost-effectiveness, and strong adaptability [10].

This study therefore uses visual methods to estimate the horizontal attitude angles and applies the estimated angles to correct tilt-induced errors in polarized light navigation.
Table 1 provides a comparative overview of the aforementioned attitude estimation methods.

Table 1.

Analysis of Application Scenarios and Performance of Attitude Estimation Methods.

In the field of visual attitude measurement, prevalent methods include artificial marker-based approaches, natural feature-based methods, multi-view geometry techniques, and optical flow algorithms.

- Wang et al. [11] addressed the autonomous landing of multi-rotor UAVs on moving vehicles by introducing a novel landing pad design and its recognition algorithm, which achieved relative pose estimation at high sampling rates. However, the mean landing error of their method was approximately 40 cm.
- Jeong et al. [12] proposed a semantic segmentation-based horizon detection method that achieved horizon gradient errors of around 1°, but it requires substantial computational resources and time for training and inference.
- Zardoua et al. [13] developed a horizon detector for RGB video streams that improves detection precision in low-contrast conditions, although its accuracy is affected by the accumulation of temporal information.
- Campos et al. [14] presented a SLAM (Simultaneous Localization and Mapping) framework that supports monocular, stereo, and visual-inertial sensors. It achieves high-precision attitude tracking through ORB (Oriented FAST and Rotated BRIEF) feature matching and bundle adjustment optimization, but it is sensitive to texture-deficient environments.
- De Croon et al. [15] estimated attitude without accelerometers using motion models and optical flow. However, the roll and pitch angle variances of their algorithm were 1.24° and 0.84°, respectively, and performance degraded during hover or at low speed.
- Liu et al. [16] employed GRU (Gated Recurrent Unit) neural networks to fuse MARG (Magnetic, Angular Rate, and Gravity) sensor data with optical flow measurements, improving the accuracy of 3D attitude estimation. The RMSEs (Root Mean Square Errors) of the roll, pitch, and yaw angles were 1.51°, 2.07°, and 0.87°, respectively.
- Dai et al. [17] devised a straight-line detection method based on deep convolutional neural networks that achieved an accuracy of 93.03% on their dataset, at the cost of greater computational complexity.
- Shi et al. [18] proposed a horizon detection approach requiring minimal human interaction, with an average error within 2 pixels. However, its performance is vulnerable to image quality degradation and exhibits cumulative error.
- Flores et al. [19] enhanced optical flow sensors with a gimbal stabilization system and achieved robustness through sliding mode control. This came at the cost of introducing high-frequency vibrations and imposing strict hardware stability requirements.

Although each of these solutions has distinct advantages, all of them have significant limitations in practical deployment. In contrast, horizon detection methods establish an absolute horizontal reference for attitude estimation by recognizing the physical boundary between sky and ground. This makes them inherently more robust in textureless environments and under dynamic disturbances. Consequently, this study adopts a horizon-based methodology to estimate the horizontal attitude angles of the UAV.

Early studies on polarized light navigation concentrated primarily on orientation under horizontal conditions and neglected the impact of tilt on navigation accuracy [20]. Dynamic navigation is increasingly demanded on mobile platforms such as UAVs and ground robots. As a result, correcting tilt-induced polarization angle errors has become a critical bottleneck for the practical application of polarized light navigation technology. Developing efficient and robust methods for correcting these errors is therefore crucial for improving the practicality and reliability of polarized light navigation systems.

- Gkanias et al. [21] addressed the solar meridian ambiguity problem with a biologically constrained sensor array. They introduced a gating function that compensates for tilt errors by receiving sensor tilt information and modulating the response of the solar layer. Under tilted conditions, this method reduced the overall average compass error from 65.78° to 10.47°.
- Wang et al. [22] used attitude angles from an inertial navigation system (INS) to determine the zenith direction when the platform tilts during motion, achieving a heading RMSE of 1.58°. However, the error of this method grows linearly with distance, leading to significant cumulative positional inaccuracies.
- Han et al. [23] analyzed the incident light path of a polarized light compass before and after tilting. They computed the sky polarization pattern from the horizontal attitude angles and dynamically identified the zenith region in the skylight image to extract the solar meridian. The advantage of this approach is that it always uses polarization information from the zenith region to calculate the vehicle's heading. At a tilt angle of 30°, it achieved a heading RMSE of 1.19°.
- Shen et al. [24] proposed an efficient extreme learning machine (EELM)-based method to model and compensate for tilt-induced heading errors of polarized compasses in practical applications, reducing the RMSE by 86% compared with prior methods. The method uses nonlinear fitting to establish the relationship between tilt angle and heading error accurately, which considerably improves orientation performance.
- Zhao et al. [25] developed a method based on Gated Recurrent Unit (GRU) neural networks for modeling and compensating heading errors of polarized light compasses under attitude variations. It achieved a 64.09% reduction in heading error. Nevertheless, the complexity of the algorithm limits its deployment to host computer platforms.
- Wu et al. [26] proposed a tilt-compensated polarization orientation scheme for sloped roads. It overcomes the field-of-view limitations of imaging polarization compasses through a visual modulation model and reduces the RMSE by 82% compared with traditional polarization-based orientation approaches. However, this method still relies on an INS for the horizontal attitude angles and therefore inherits its characteristic cumulative errors.

Techniques such as inertial navigation and neural networks have improved orientation accuracy under tilted conditions to some extent. However, these methods are hindered by cumulative errors or high computational complexity. In this context, incorporating visual methods offers a new way to correct polarized light tilt errors.

This paper proposes a dark channel defogging algorithm based on Retinex theory to boost the measurement accuracy of horizontal attitude angles, hence improving adaptability in low illumination and hazy conditions. Furthermore, leveraging the distinct color contrast between the sky and ground, we develop a dynamic HSV-based threshold segmentation method for horizon detection to increase extraction accuracy. The approach then employs an improved adaptive bilateral filtering Canny operator in conjunction with a progressive probabilistic Hough transform for precise horizon extraction, subsequently estimating the UAV’s horizontal attitude angles from these horizon parameters. Finally, the obtained horizontal attitude angles are utilized to compensate for heading calculation errors in polarized light navigation under tilted conditions.

The paper is organized as follows:

- Section 1 presents the background and current research status of visual attitude estimation methods and of polarized light tilt error compensation.
- Section 2 introduces the fundamental principles and algorithmic steps of the proposed horizon detection method.
- Section 3 elaborates on the theoretical framework for polarized light heading compensation.
- Section 4 describes the experiments conducted on a UAV platform to validate the effectiveness of the proposed approach.
- Section 5 concludes the paper with key findings and implications.

2. Principle of Visual Horizontal Attitude Angle Measurement

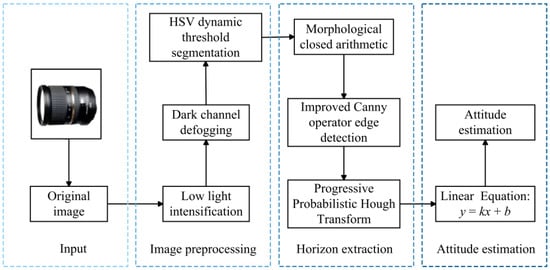

The position and angle of the horizon in the image are closely related to the attitude of the UAV. When the attitude changes, the position and angle of the horizon in the image change correspondingly. The attitude variations of the UAV can therefore be inferred by detecting the position and angle of the horizon in the image. This procedure involves two key tasks:

1. Extracting the horizon precisely from the image.
2. Using the extracted horizon to estimate the attitude.

The following sections elaborate on these two core tasks. The overall technical approach is illustrated in Figure 1.

Figure 1.

Flowchart of the horizon attitude estimation method.

2.1. Dark Channel Defogging Algorithm Based on Retinex Theory

To enhance the algorithm's detection performance in low-illumination and hazy conditions, this paper proposes a dehazing-enhancement method that integrates Retinex theory with the dark channel prior. Retinex theory decomposes an observed image I(x) into two components [27]:

- the reflectance component R(x), which represents the intrinsic color or reflectivity of objects;
- the illumination component L(x), which corresponds to the intensity of ambient illumination.

The theory posits that the original image I(x) is the product of these two components:

$$I(x) = R(x)\,L(x)$$

where x denotes the pixel position in the image. Decomposing the image allows the illumination and reflectance components to be enhanced independently, thereby improving image quality. Taking the logarithm turns the product into a sum, which makes the two components separable:

$$\log I(x) = \log R(x) + \log L(x)$$

The illumination component L(x) is estimated by image smoothing. It is typically obtained by convolving the input image with a Gaussian filter:

$$L(x) = I(x) * G_\sigma(x), \qquad G_\sigma(x) = \frac{1}{2\pi\sigma^2}\exp\left(-\frac{\|x\|^2}{2\sigma^2}\right)$$

where $G_\sigma$ is the Gaussian filter kernel, $*$ denotes convolution, and $\sigma$ is the standard deviation that controls the scale of the filter. After L(x) is obtained, the reflectance component R(x) is derived by removing the illumination component from the original image in the logarithmic domain:

$$\log R(x) = \log I(x) - \log L(x)$$

Haze accumulation predominantly affects the illumination component. We therefore apply the dark channel prior to the illumination component only [28], which preserves critical texture details in the reflectance component. The dark channel of the illumination component is computed as

$$L^{\mathrm{dark}}(x) = \min_{y \in \Omega(x)} \left( \min_{c \in \{r, g, b\}} L^c(y) \right)$$

where $\Omega(x)$ is the local window centered at pixel x, and c indexes the RGB channels.
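The decomposition and dark-channel steps above can be sketched in Python with NumPy. This is a minimal single-scale sketch under stated assumptions, not the authors' implementation:

- images are float arrays in [0, 1] of shape H × W × 3;
- the Gaussian kernel is truncated at three standard deviations, with edge padding;
- the defaults (standard deviation 15 and a 15 × 15 local window) are illustrative, since the text does not specify them;
- the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gaussian_blur(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian smoothing of a 2-D array (kernel truncated at 3*sigma)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    k /= k.sum()                                   # normalise so a flat image stays flat
    padded = np.pad(channel, radius, mode="edge")
    # Filter rows, then columns; mode="valid" strips the padding again.
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def retinex_decompose(img: np.ndarray, sigma: float = 15.0, eps: float = 1e-6):
    """Single-scale Retinex: L = G_sigma * I and log R = log I - log L, per channel."""
    img = img.astype(float) + eps                  # keep log() finite on black pixels
    illum = np.stack([gaussian_blur(img[..., c], sigma) for c in range(3)], axis=-1)
    reflect = np.exp(np.log(img) - np.log(illum))
    return reflect, illum                          # R(x), L(x); R * L == I (+ eps)


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Minimum over RGB, then over a patch x patch window (patch must be odd)."""
    r = patch // 2
    padded = np.pad(img.min(axis=2), r, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))
```

For an input image `I`, `R, L = retinex_decompose(I)` followed by `dark_channel(L)` yields the quantities used in the atmospheric-light step.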

Next, the atmospheric light A is estimated by selecting the brightest 0.1% of pixels in the dark channel of the illumination component. This selection makes the estimate robust to noise from isolated bright pixels while still focusing on the regions most affected by atmospheric scattering [29]. The selected pixel positions are then located in the original enhanced image, and the mean of their values in each RGB channel gives the corresponding component of A. Using the estimated atmospheric light A, the atmospheric transmission t(x) is computed as

$$t(x) = 1 - \omega \min_{y \in \Omega(x)} \left( \min_{c \in \{r, g, b\}} \frac{L^c(y)}{A^c} \right)$$

where $\omega$ is the dehazing parameter, generally set to 0.95, and $\Omega(x)$ is the local window centered at pixel x. With the estimated atmospheric light A and transmission map t(x), the haze-free illumination component is recovered as

$$L'(x) = \frac{L(x) - A}{\max\left(t(x),\, t_0\right)} + A$$

where $t_0$ is the lower bound of the transmission (typically set to 0.1).
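The atmospheric-light, transmission, and recovery steps can be sketched in the same style.

- **From the text:** the dehazing parameter 0.95 (`omega`), the transmission lower bound 0.1 (`t0`), and the brightest-0.1% rule.
- **Assumed:** the function name `dehaze_illumination`, the window size, and the rounding of the pixel count.
- **Our reading of the text:** A is averaged from the illumination map L itself.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _dark(img: np.ndarray, patch: int) -> np.ndarray:
    """Dark channel: min over RGB, then over a patch x patch window (odd patch)."""
    r = patch // 2
    padded = np.pad(img.min(axis=2), r, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def dehaze_illumination(L, patch=15, omega=0.95, t0=0.1, top=0.001):
    """Dark-channel-prior dehazing of an illumination map L (H x W x 3, floats in [0, 1])."""
    dark = _dark(L, patch)
    # Atmospheric light A: mean RGB of the pixels in the brightest 0.1% of the dark channel.
    n = max(1, int(round(top * dark.size)))
    brightest = np.argsort(dark.ravel())[-n:]
    A = L.reshape(-1, 3)[brightest].mean(axis=0)
    # Transmission t(x) = 1 - omega * dark_channel(L / A).
    t = 1.0 - omega * _dark(L / A, patch)
    # Recovery L'(x) = (L(x) - A) / max(t(x), t0) + A.
    L_clear = (L - A) / np.maximum(t, t0)[..., None] + A
    return L_clear, t, A
```

A useful sanity check is a synthetic map built as L = J·t + A(1 − t) with a bright sky region. On such a map, `omega=1.0` returns J; the default 0.95 leaves a small residual haze.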

Subsequently, the reflectance component R(x) is enhanced by histogram equalization to increase image contrast and brightness. The enhanced reflectance component R′(x) makes details more prominent while compensating for illumination inhomogeneity. Finally, R′(x) and the dehazed illumination component L′(x) are multiplied to obtain the final dehazed and enhanced image:

$$I_{\mathrm{out}}(x) = R'(x)\,L'(x)$$

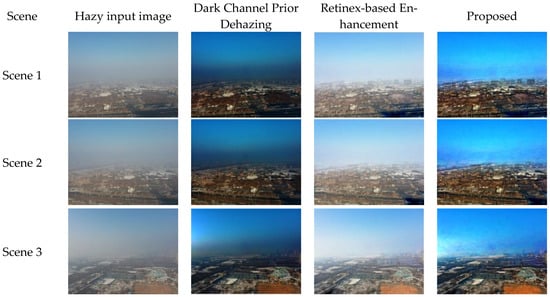

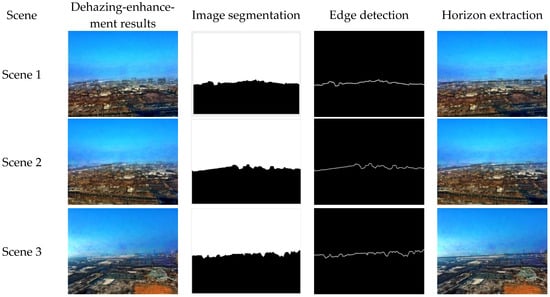

Three methods were compared on three different scenes: Retinex enhancement alone, the dark channel prior alone, and the proposed integrated dehazing-enhancement algorithm. The results for each scene are shown in Figure 2.

Figure 2.

Comparison of dehazing effect.

To provide a comprehensive and objective evaluation of the defogging performance, three no-reference image quality metrics were adopted for comparison under Scene 1, as presented in Table 2. Specifically, Entropy reflects the richness of image details, NRPBM assesses the perceptual clarity of dehazed images, and PBM evaluates the contrast and perceptual visibility. All three metrics follow a “higher is better” principle, enabling consistent and intuitive comparisons across different methods.
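Of the three metrics, only Entropy has an unambiguous textbook definition. The sketch below assumes the usual Shannon entropy of the 8-bit grey-level histogram; the paper does not state the exact variant. NRPBM and PBM are not reproduced, and the function name is hypothetical.

```python
import numpy as np


def image_entropy(gray: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy (bits) of the grey-level histogram of an integer-valued image."""
    hist = np.bincount(gray.ravel(), minlength=levels).astype(float)
    p = hist[hist > 0] / hist.sum()        # empty bins contribute 0 (0 * log 0 := 0)
    return float(-(p * np.log2(p)).sum())
```

A constant image scores 0 bits and an image using all 256 levels equally scores 8 bits. Higher values therefore indicate a richer spread of grey levels.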

Table 2.

Comparison of objective evaluation results of image defogging methods.

2.2. HSV-Based Dynamic Thresholding Image Segmentation Method

After dehazing, the processed images are segmented. The HSV color space separates hue from brightness, so it effectively distinguishes the chromatic and brightness differences between sky and ground regions. We therefore employ a dynamic HSV-based threshold segmentation method.

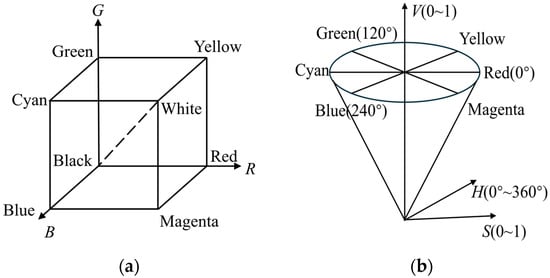

The HSV color space comprises three components: Hue (H), Saturation (S), and Value (V) [30]. The image is first converted from RGB to HSV space; both color space models are shown in Figure 3.

Figure 3.

Color space model. (a) RGB space model; (b) HSV space model.

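The conversion from the RGB color space to the HSV color space is

$$V = \max, \qquad S = \begin{cases} \dfrac{\max - \min}{\max}, & \max \neq 0 \\ 0, & \max = 0 \end{cases}$$

$$H = \frac{1}{6} \begin{cases} 0, & \max = \min \\ \left(\dfrac{G - B}{\max - \min}\right) \bmod 6, & \max = R \\ \dfrac{B - R}{\max - \min} + 2, & \max = G \\ \dfrac{R - G}{\max - \min} + 4, & \max = B \end{cases}$$

where H, S, and V are the hue, saturation, and value (brightness) of a pixel, and max and min are the maximum and minimum of {R, G, B}. R, G, and B are the red, green, and blue components of that pixel, normalized to [0, 1] so that H, S, and V also lie in [0, 1].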

Target color regions are segmented by thresholding. Because the sky is predominantly blue, a hue range corresponding to blue can be selected to isolate the sky region. In this work, the hue range of the sky region was determined by analyzing the HSV histogram and identifying the distribution of target pixels. The sky threshold was set to the hue range [0.5, 0.7]. Depending on the brightness conditions of the image, the extraction can be further refined by adjusting the saturation (S) and value (V) thresholds. For the S channel, a fixed threshold range of [0.3, 1] was used to extract regions of higher saturation. This focuses on vividly colored areas such as the sky and eliminates weakly colored or grayish regions.
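The conversion and the fixed H and S thresholds can be sketched with NumPy as follows. The sketch assumes RGB values in [0, 1] and hue normalized to [0, 1) (hue in degrees divided by 360°), which is consistent with the [0.5, 0.7] range quoted above. Function names are hypothetical. For single pixels the result matches Python's standard `colorsys.rgb_to_hsv`.

```python
import numpy as np


def rgb_to_hsv(img: np.ndarray):
    """Vectorised RGB -> HSV for floats in [0, 1]; H is normalised to [0, 1) (hue / 360 deg)."""
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    mx, mn = img.max(axis=-1), img.min(axis=-1)
    delta = mx - mn
    d = np.where(delta > 0, delta, 1.0)            # avoid 0/0 on grey pixels
    h = np.select([mx == r, mx == g],
                  [((g - b) / d) % 6.0, (b - r) / d + 2.0],
                  default=(r - g) / d + 4.0)
    h = np.where(delta > 0, h / 6.0, 0.0)          # hue undefined for grey, set to 0
    s = np.where(mx > 0, delta / np.where(mx > 0, mx, 1.0), 0.0)
    return h, s, mx                                # V = max(R, G, B)


def sky_mask_fixed(img, h_range=(0.5, 0.7), s_range=(0.3, 1.0)):
    """Fixed H and S thresholds from the text; the V test is handled separately."""
    h, s, _ = rgb_to_hsv(img)
    return ((h >= h_range[0]) & (h <= h_range[1]) &
            (s >= s_range[0]) & (s <= s_range[1]))
```

For example, a sky-blue pixel (0.3, 0.5, 0.9) has H = 11/18 ≈ 0.61 and S ≈ 0.67, so it passes both tests. A grey pixel fails on saturation.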

This paper introduces a dynamic threshold adjustment strategy specifically for the V channel to mitigate the problem of inadequate segmentation performance associated with fixed thresholds in the HSV color space. For the V channel, a sliding window (e.g., 15 × 15 pixels) traverses the image. The median brightness of the region within each window is calculated as the baseline local threshold. The threshold sensitivity is increased if substantial brightness variations exist inside the window, whereas it diminishes in more uniform regions. Finally, bilateral filtering is applied to smooth the thresholds between neighboring windows, ensuring seamless transitions and avoiding abrupt segmentation boundaries. This approach ensures stable segmentation results across diverse illumination conditions.
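The text describes the idea of the V-channel strategy but not its exact rule, so the sketch below fills in hypothetical choices:

- **From the text:** the 15 × 15 window and the local-median baseline.
- **Assumed adjustment:** T(x) = m(x) − α·MAD(x), where m is the local median and MAD the local median absolute deviation.
- **Assumed comparison:** a pixel passes when V ≥ T.
- **Substitution:** a box average replaces the paper's bilateral smoothing of the threshold map.

All names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dynamic_v_threshold(v, win=15, alpha=1.0, smooth=5):
    """Per-pixel V threshold from a win x win sliding window (hypothetical adjustment rule).

    baseline  : local median m(x)
    adjustment: T(x) = m(x) - alpha * MAD(x), where MAD is the local median absolute
                deviation, so windows with large brightness spread get a looser threshold
    smoothing : smooth x smooth box average of T (stand-in for the bilateral step)
    """
    r = win // 2
    w = sliding_window_view(np.pad(v, r, mode="edge"), (win, win))
    med = np.median(w, axis=(-2, -1))
    mad = np.median(np.abs(w - med[..., None, None]), axis=(-2, -1))
    thr = med - alpha * mad
    s = smooth // 2
    box = sliding_window_view(np.pad(thr, s, mode="edge"), (smooth, smooth))
    return box.mean(axis=(-2, -1))


def v_mask(v, **kwargs):
    """Pixels at least as bright as their local threshold (assumed comparison direction)."""
    return v >= dynamic_v_threshold(v, **kwargs)
```

The window view materialises win² values per pixel, so for full-resolution frames a dedicated filter such as `scipy.ndimage.median_filter` would be the more practical choice. Because the threshold tracks the local median, the V test mainly rejects pixels darker than their surroundings. The H and S tests still carry most of the sky/ground discrimination.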

After threshold segmentation, a binary mask distinguishing the sky and ground regions is obtained. A morphological closing operation is applied to remove noise, fill holes, and smooth edges, ultimately yielding the dynamic threshold-based segmentation results in the HSV color space.
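A NumPy sketch of the closing step is shown below. It assumes a square k × k structuring element, since the element's shape and size are not given in the text; the names are hypothetical. Closing fills holes and gaps narrower than the element. Removing isolated foreground specks would additionally require an opening (erosion followed by dilation).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _dilate(mask: np.ndarray, k: int) -> np.ndarray:
    r = k // 2
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    return sliding_window_view(padded, (k, k)).any(axis=(-2, -1))


def _erode(mask: np.ndarray, k: int) -> np.ndarray:
    r = k // 2
    # Pad with True so the image border itself is not treated as background.
    padded = np.pad(mask, r, mode="constant", constant_values=True)
    return sliding_window_view(padded, (k, k)).all(axis=(-2, -1))


def close_mask(mask: np.ndarray, k: int = 5) -> np.ndarray:
    """Binary closing = dilation then erosion with a k x k square (k odd).

    Fills holes and gaps narrower than the structuring element.
    """
    return _erode(_dilate(mask, k), k)
```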

2.3. Horizon Extraction Method

After segmentation, the image contains distinct boundaries that can be detected with gradient-based algorithms. The traditional Canny edge detection algorithm relies primarily on gradient information to locate edges and to detect fine and weak edges. However, when images contain considerable noise or illumination variations, false detections or missed edges may occur [31]. To overcome this issue, this paper proposes a Canny operator based on adaptive bilateral filtering, whose first stage applies adaptive bilateral filtering to the image. The bilateral filter is defined as

$$\tilde{I}_p = \frac{1}{W_p} \sum_{q \in S} w(p, q)\, I_q, \qquad W_p = \sum_{q \in S} w(p, q)$$

where S is the local neighborhood centered at pixel p, $I_q$ is the pixel value at point q, $w(p, q)$ is the weight between p and q, and $W_p$ is the normalization coefficient of the weights. The weight $w(p, q)$ is

$$w(p, q) = G_{\sigma_s}(\|p - q\|)\, G_{\sigma_r}(|I_p - I_q|) = \exp\left(-\frac{\|p - q\|^2}{2\sigma_s^2}\right) \exp\left(-\frac{(I_p - I_q)^2}{2\sigma_r^2}\right)$$

Here, $G_{\sigma_s}(\|p - q\|)$ is the spatial weight between pixels p and q, and $\sigma_s$ is the standard deviation of the spatial Gaussian kernel. $G_{\sigma_r}(|I_p - I_q|)$ is the pixel-value (range) weight between p and q, and $\sigma_r$ is the standard deviation of the pixel-value Gaussian kernel.
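The text does not state how its bilateral filter adapts the spatial and pixel-value standard deviations. The sketch below is therefore a plain bilateral filter with fixed parameters (`sigma_s`, `sigma_r`) on a single-channel float image. The names and defaults are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def bilateral_filter(img, radius=3, sigma_s=2.0, sigma_r=0.1):
    """Plain bilateral filter for a 2-D float image (fixed sigma_s and sigma_r)."""
    k = 2 * radius + 1
    win = sliding_window_view(np.pad(img, radius, mode="edge"), (k, k))   # (H, W, k, k)
    dy, dx = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    w_spatial = np.exp(-(dx**2 + dy**2) / (2 * sigma_s**2))              # spatial weight
    w_range = np.exp(-(win - img[..., None, None])**2 / (2 * sigma_r**2))  # pixel-value weight
    w = w_spatial * w_range                                              # w(p, q)
    return (w * win).sum(axis=(-2, -1)) / w.sum(axis=(-2, -1))           # divide by W_p
```

A small `sigma_r` keeps a sharp sky/ground step almost untouched, while a large one degrades gracefully into ordinary Gaussian smoothing. This trade-off is what the paper's adaptive variant tunes.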

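The improved Canny operator detects image edges effectively. It suppresses interference from non-edge regions and noise while still identifying fine edges. The designed Canny operator can be expressed as

$$E(x) = \begin{cases} 1, & |\nabla I(x)| \ge T_h \\ 1, & T_l \le |\nabla I(x)| < T_h \text{ and } x \text{ is connected to a pixel with } |\nabla I| \ge T_h \\ 0, & \text{otherwise} \end{cases}$$

where E is the final binary image, $\nabla I$ is the image gradient, and Tl and Th are the low and high thresholds, respectively.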
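The double-threshold rule with hysteresis can be sketched as follows. This is not a full Canny implementation:

- the gradient comes from `np.gradient`;
- non-maximum suppression is omitted;
- the bilateral pre-filter is assumed to have been applied already.

The names are hypothetical, and Tl ≤ Th is assumed.

```python
import numpy as np


def hysteresis(mag: np.ndarray, t_low: float, t_high: float) -> np.ndarray:
    """Keep strong pixels (>= t_high) plus weak pixels (>= t_low) 8-connected to them."""
    weak = mag >= t_low
    edges = mag >= t_high
    h, w = edges.shape
    while True:
        p = np.pad(edges, 1)                      # False border
        grown = np.zeros_like(edges)
        for dy in (0, 1, 2):
            for dx in (0, 1, 2):
                grown |= p[dy:dy + h, dx:dx + w]  # OR over the 3 x 3 neighbourhood
        grown &= weak
        if np.array_equal(grown, edges):          # no new weak pixels were reached
            return edges
        edges = grown


def canny_like(img: np.ndarray, t_low: float, t_high: float) -> np.ndarray:
    """Gradient magnitude + double threshold; non-maximum suppression is omitted."""
    gy, gx = np.gradient(img.astype(float))
    return hysteresis(np.hypot(gx, gy), t_low, t_high)
```

Weak responses survive only when a chain of weak pixels links them to a strong one. This is why isolated noise between the two thresholds is discarded while faint stretches of the horizon are kept.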

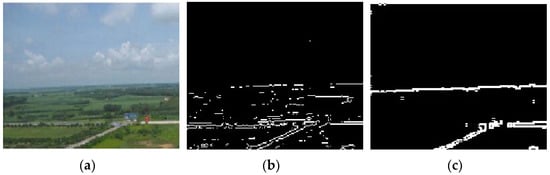

Figure 4 presents a comparative analysis of the edge detection results using the basic and improved versions of the Canny algorithm. The result obtained using the basic Canny algorithm shows blurred edges, missed weak edges, and noticeable noise. In contrast, the improved Canny algorithm produces clearer and more continuous edges while significantly reducing noise. This comparison highlights the advantages of the improved algorithm in terms of edge clarity and noise suppression, validating the effectiveness of the proposed enhancement.

Figure 4.

Image edge detection results. (a) Original image; (b) Edge detection result using the basic Canny algorithm; (c) Edge detection result using the improved Canny algorithm.

Edge detection yields an image containing the horizon edge. To obtain accurate horizon parameters, a line must then be extracted from this edge image. The traditional Hough transform detects lines by mapping image edge points into parameter space. However, it must traverse all M edge points, which leads to a large computational load and high storage costs. This paper therefore adopts the progressive probabilistic Hough transform, which processes only a subset of m edge points, with m ≪ M. This significantly reduces computational complexity and storage requirements [32]. In addition, the method directly returns the two endpoints of the line, enabling accurate line positioning without post-processing.
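The sketch below implements a simplified probabilistic Hough transform in NumPy:

1. Only a random subset of m edge points votes.
2. The strongest (θ, ρ) cell is taken as the line.
3. The extreme inliers among all edge points serve as the endpoints.

It is not OpenCV's progressive variant (`cv2.HoughLinesP`), which additionally removes points as lines are found and tracks segments with a gap tolerance. All names and defaults are assumptions. The helper `line_params` converts the endpoints into the slope k and intercept b used in Section 2.4.

```python
import numpy as np


def probabilistic_hough_line(edges, m_fraction=0.2, n_theta=180, rho_res=1.0,
                             tol=1.5, seed=0):
    """Strongest line from votes of a random subset of m << M edge points.

    Simplified probabilistic Hough transform (not OpenCV's progressive PPHT).
    Returns the two extreme inlier points (u, v) as the segment endpoints.
    """
    vs, us = np.nonzero(edges)
    pts = np.column_stack([us, vs]).astype(float)            # all M edge points
    rng = np.random.default_rng(seed)
    m = max(2, int(m_fraction * len(pts)))
    voters = pts[rng.choice(len(pts), size=m, replace=False)]
    thetas = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    diag = float(np.hypot(*edges.shape))
    rhos = voters[:, :1] * np.cos(thetas) + voters[:, 1:] * np.sin(thetas)   # m x n_theta
    r_bins = np.round((rhos + diag) / rho_res).astype(int)
    acc = np.zeros((int(2 * diag / rho_res) + 2, n_theta), dtype=int)
    np.add.at(acc, (r_bins, np.broadcast_to(np.arange(n_theta), r_bins.shape)), 1)
    r_idx, t_idx = np.unravel_index(acc.argmax(), acc.shape)
    theta, rho = thetas[t_idx], r_idx * rho_res - diag
    # Gather inliers from all edge points and take the extremes along the line.
    dist = np.abs(pts[:, 0] * np.cos(theta) + pts[:, 1] * np.sin(theta) - rho)
    inliers = pts[dist <= tol]
    along = inliers @ np.array([-np.sin(theta), np.cos(theta)])
    return inliers[along.argmin()], inliers[along.argmax()]


def line_params(p1, p2):
    """Slope k and intercept b of v = k*u + b through two endpoints (non-vertical line)."""
    k = (p2[1] - p1[1]) / (p2[0] - p1[0])
    return k, p1[1] - k * p1[0]
```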

To evaluate the robustness and consistency of the proposed horizon line detection algorithm, Figure 5 presents the processing results for three representative image frames. These frames were selected to include variations in viewpoint and scene composition. As illustrated, the algorithm accurately identifies the horizon line in each case, demonstrating its stable performance across different inputs. This confirms the method’s potential for reliable application in real-world UAV visual attitude estimation tasks.

Figure 5.

Visual Illustration of Horizon Extraction Process.

2.4. Attitude Estimation Algorithm Based on Horizon

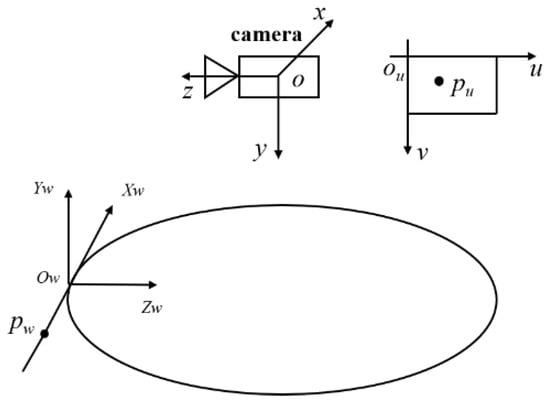

Based on the horizon projection model shown in Figure 6, the world coordinate system, the body coordinate system, and the image coordinate system are established. The camera is mounted on the nose of the UAV, and the camera coordinate system coincides with the body coordinate system.

Figure 6.

Horizon projection model.

Let pw be any point on the horizon with coordinates (xw, yw, zw) in the world coordinate system. The coordinates (u, v) of its projection p′ in the pixel coordinate system can then be obtained from the following formula:

where s is the scale factor, A is the intrinsic parameter matrix, and R is the rotation matrix composed of R1, R2, and R3, the rotation matrices about the three axes. R0 denotes the rotation matrix of the UAV at its initial position. Assume that the origin ow of the world coordinate system lies in the yoz plane and that the yaw angle is 0. Equation (15) can then be expanded as follows:

Since the horizon lies at an infinite distance, the distance of pw from the camera tends to infinity. Substituting this limit and the above variables into the formula and eliminating xw yields the simplified form:

where fx, fy, u0, and v0 are the focal lengths and principal point coordinates (in pixels) of the intrinsic matrix A. These parameters were obtained by camera calibration: fx = 417.4831, fy = 417.5472, u0 = 327.8796, and v0 = 214.3221. Let the slope and intercept of the horizon line be k and b, respectively; then:

Consider the roll angle at which the horizon in the pixel coordinate system becomes perpendicular to the u-axis, intersecting it at u = up. In this case the slope k is undefined and the horizon equation reduces to u = up. The pitch angle can then be obtained as

From the above analysis, the roll and pitch angles of the UAV can be calculated with the above formulas, provided that the horizon in the captured image is extracted accurately and reliably and its line parameters are determined.
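The sketch below illustrates how roll γ and pitch θ follow from the horizon slope k and intercept b under one assumed pinhole convention:

- camera x axis right, y axis down, z axis forward, with zero yaw;
- gravity expressed in the camera frame as g = (−sin γ cos θ, cos γ cos θ, −sin θ).

A pixel lies on the horizon when its viewing ray [(u − u0)/fx, (v − v0)/fy, 1] is orthogonal to g. This gives k = (fy/fx) tan γ and b = v0 − k·u0 + fy tan θ / cos γ. The signs and axis order are assumptions and may differ from the paper's equations. In this model, the vertical-horizon case treated separately in the text corresponds to γ = ±90°, where k is undefined. Function names are hypothetical.

```python
import math


def horizon_from_attitude(roll, pitch, fx, fy, u0, v0):
    """Forward model (assumed convention): horizon line v = k*u + b for roll/pitch in rad."""
    k = fy / fx * math.tan(roll)
    b = v0 - k * u0 + fy * math.tan(pitch) / math.cos(roll)
    return k, b


def attitude_from_horizon(k, b, fx, fy, u0, v0):
    """Inverse model: roll and pitch (rad) from the horizon slope k and intercept b."""
    roll = math.atan(k * fx / fy)
    pitch = math.atan((b + k * u0 - v0) * math.cos(roll) / fy)
    return roll, pitch


# Usage with the calibration values reported in the text.
FX, FY, U0, V0 = 417.4831, 417.5472, 327.8796, 214.3221
k, b = horizon_from_attitude(math.radians(10.0), math.radians(-5.0), FX, FY, U0, V0)
roll, pitch = attitude_from_horizon(k, b, FX, FY, U0, V0)   # about 10 deg and -5 deg
```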

3. Polarization Orientation Error Compensation Method

Early observations showed that bees, desert ants, and other animals possess remarkable navigation abilities [33,34]. Subsequent research revealed that these animals navigate using the polarization information of the sky. Skylight polarization forms a macroscopically stable distribution pattern that contains rich directional information. This information is described by two quantities:

- the degree of polarization (DoP), which indicates how strongly the light is polarized;
- the angle of polarization (AoP), which gives the direction of light vibration (the E-vector direction).

Polarized light can be described by the Stokes vector $S = [I, Q, U, V]^T$, where:

- I is the total light intensity;
- Q and U describe the linearly polarized components, Q with respect to the 0°/90° axes and U with respect to the 45°/135° axes;
- V describes the circularly polarized component.

Because skylight is predominantly linearly polarized, V is typically taken as zero. The AoP and DoP are calculated as

$$\mathrm{AoP} = \frac{1}{2}\arctan\frac{U}{Q}, \qquad \mathrm{DoP} = \frac{\sqrt{Q^2 + U^2}}{I}$$

with the quadrant of the AoP resolved from the signs of Q and U.

In the polarized light navigation system, the navigation frame and the body frame satisfy the following conversion relationship:

$$\mathbf{s}^b = \mathbf{C}_n^b\,\mathbf{s}^n$$

where $\mathbf{s}^b$ is the projection of the solar vector in the body frame, $\mathbf{C}_n^b$ is the transformation matrix from the navigation frame to the body frame, and $\mathbf{s}^n$ is the sun's position vector in the navigation frame. When the observation direction is aligned with the solar direction, the polarization angle in the body frame can be expressed as

where the polarization angle depends on the observation point and on the solar azimuth in the body frame, which is given by:

The transfer matrix from the navigation frame to the body frame can be expressed as

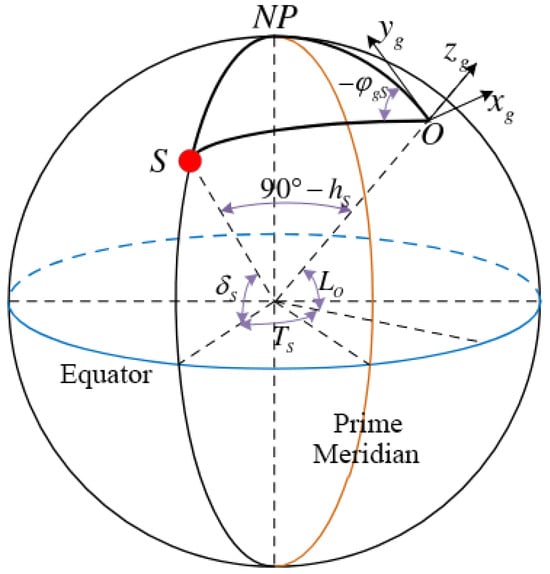

The position of the sun can be determined from the solar declination, the solar hour angle, and the latitude of the observation site using standard astronomical relations. Solving the spherical triangle in Figure 7 yields the solar altitude angle and the solar azimuth angle in the east-north-up (ENU) geographic coordinate system.

Figure 7.

Celestial sphere.
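The spherical triangle of Figure 7 leads to the standard relation sin h = sin φ sin δ + cos φ cos δ cos t. Here h is the solar altitude, δ the declination, t the hour angle, and φ the latitude. The sketch below also resolves the azimuth quadrant with `atan2`. The symbols and two conventions are assumptions:

- t is negative before solar noon;
- azimuth is measured clockwise from north (other texts measure it from south).

```python
import math


def sun_alt_az(decl_deg, hour_angle_deg, lat_deg):
    """Solar altitude and azimuth (degrees) from the pole-zenith-sun spherical triangle."""
    d, t, p = map(math.radians, (decl_deg, hour_angle_deg, lat_deg))
    sin_h = math.sin(p) * math.sin(d) + math.cos(p) * math.cos(d) * math.cos(t)
    h = math.asin(max(-1.0, min(1.0, sin_h)))      # clamp against rounding
    # atan2 resolves the quadrant; azimuth measured from north, clockwise towards east.
    az = math.atan2(-math.cos(d) * math.sin(t),
                    math.sin(d) * math.cos(p) - math.cos(d) * math.sin(p) * math.cos(t))
    return math.degrees(h), math.degrees(az) % 360.0
```

For example, at an equinox (δ = 0) and latitude 40° N, the sun at solar noon stands 50° high due south, giving an azimuth of 180°.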

In Figure 7, the red dot S represents the sun, O the observation site, and NP the North Pole. The figure also marks the solar declination, the solar hour angle, the latitude of the observation site, the solar altitude angle, and the solar azimuth in the ENU geographic coordinate system. From Figure 7, the following model can be established:

Inverting the trigonometric functions in Equation (26) and resolving the quadrant yields:

The solar declination angle is calculated as follows:

where the day angle is determined by the day of year D and the time of the vernal equinox D0. With 1985 as the reference year, D0 (in days) is

where Y is the year and INT denotes rounding toward zero (truncation).
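The declination step can be sketched with the empirical day-angle formulation that this description matches. The sketch uses D0 = 79.6764 + 0.2422(Y − 1985) − INT((Y − 1985)/4) and the day angle θ = 2π(D − D0)/365.2422, together with the series coefficients commonly quoted for this formulation. These values are assumptions, not taken from this paper. As a check, the series gives δ = 0 at D = D0 and about ±23.44° at the solstices.

```python
import math


def vernal_equinox_day(year):
    """D0 in days, 1985-referenced; int() truncates toward zero like INT."""
    return 79.6764 + 0.2422 * (year - 1985) - int((year - 1985) / 4)


def day_angle(year, day_of_year):
    """Day angle theta (rad) measured from the vernal equinox."""
    return 2 * math.pi * (day_of_year - vernal_equinox_day(year)) / 365.2422


def declination_deg(year, day_of_year):
    """Solar declination (deg) from a Fourier series in the day angle (assumed coefficients)."""
    th = day_angle(year, day_of_year)
    return (0.3723 + 23.2567 * math.sin(th) + 0.1149 * math.sin(2 * th)
            - 0.1712 * math.sin(3 * th) - 0.7580 * math.cos(th)
            + 0.3656 * math.cos(2 * th) + 0.0201 * math.cos(3 * th))
```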

To calculate the solar hour angle at the observation site O, the local time Sd of the site is first computed as

where SZ and FZ are the hour and minute of Beijing time, and LonD and LonF are the degree and arc-minute parts of the site's longitude.
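The chain of time corrections in this part of the derivation can be sketched as follows:

1. Compute the local time Sd.
2. Correct it by the equation of time Et to obtain the true solar time St.
3. Convert St to the hour angle.

The sketch assumes the commonly used forms: Beijing time is referenced to the 120° E meridian, one degree of longitude corresponds to 4 min of time, and Et is a series in the day angle θ (in minutes, with commonly quoted coefficients). It also assumes the hour angle is 15° per hour from solar noon. These are assumptions rather than transcriptions of the paper's equations, and the function names are hypothetical.

```python
import math


def local_time_hours(sz, fz, lon_deg, lon_min):
    """Local time Sd (h) from Beijing time SZ:FZ and site longitude (deg, arc-min east).

    Beijing time refers to the 120 deg E meridian; 1 deg of longitude = 4 min of time.
    """
    return sz + (fz - (120 - lon_deg) * 4 - lon_min * 4 / 60) / 60


def equation_of_time_min(theta):
    """Equation of time Et in minutes for day angle theta (rad); coefficients assumed."""
    return (0.0028 - 1.9857 * math.sin(theta) + 9.9059 * math.sin(2 * theta)
            - 7.0924 * math.cos(theta) - 0.6882 * math.cos(2 * theta))


def hour_angle_deg(sd_hours, et_min):
    """True solar time St = Sd + Et/60, then 15 deg per hour from solar noon."""
    st = sd_hours + et_min / 60
    return (st - 12) * 15                  # negative in the morning, positive after noon
```

For a site at 105° E, 12:00 Beijing time corresponds to 11:00 local time and, ignoring Et, an hour angle of −15°.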

The equation of time Et is calculated as follows:

Correcting Sd by Et gives the true solar time St:

The local solar hour angle is then obtained from St:

With the above formulas, the solar altitude angle and solar azimuth angle can be calculated. The solar position can then be expressed in terms of these two angles as

Thus, all remaining variables in Equation (22) have been solved. Substituting the acquired horizontal attitude angle into Equation (22) allows for the compensation of the polarized light heading information.
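The sketch below illustrates the frame conversion that the compensation relies on. It levels a body-frame sun vector with the horizon-derived pitch and roll before reading off the yaw. It is not a reconstruction of Equation (22), which works with the polarization angle; it uses the sun vector directly. The conventions are assumptions:

- ENU navigation frame;
- body axes x right, y forward, z up;
- C_b^n = Rz(ψ) Rx(θ) Ry(γ);
- yaw ψ measured counter-clockwise about the up axis.

Function names are hypothetical.

```python
import math

import numpy as np


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return np.array([[1, 0, 0], [0, c, -s], [0, s, c]])


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return np.array([[c, 0, s], [0, 1, 0], [-s, 0, c]])


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return np.array([[c, -s, 0], [s, c, 0], [0, 0, 1]])


def body_to_nav(yaw, pitch, roll):
    """C_b^n = Rz(yaw) Rx(pitch) Ry(roll): ENU navigation frame, body x-right/y-forward/z-up."""
    return rot_z(yaw) @ rot_x(pitch) @ rot_y(roll)


def heading_from_sun(s_body, s_nav, pitch=0.0, roll=0.0):
    """Yaw (rad) from one sun direction known in both frames.

    pitch = roll = 0 is the uncompensated, assumed-level case; passing the
    horizon-derived angles first levels s_body, which is the compensation step.
    """
    s_level = rot_x(pitch) @ rot_y(roll) @ s_body
    yaw = math.atan2(s_nav[1], s_nav[0]) - math.atan2(s_level[1], s_level[0])
    return (yaw + math.pi) % (2 * math.pi) - math.pi      # wrap to [-pi, pi)
```

With tilts of around 10° to 15°, calling `heading_from_sun` without the attitude angles biases the yaw by several degrees. Supplying the correct pitch and roll recovers it up to rounding, which mirrors the role of the horizon-derived angles in the compensation.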

4. Discussion

4.1. Validation of Horizon Attitude Estimation Algorithm

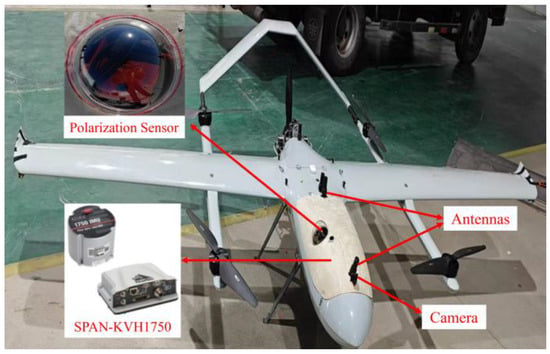

To verify the validity and reliability of the proposed horizon attitude estimation method, an airborne experiment was carried out with a UAV equipped with a high-resolution camera and a polarization camera. In total, 2278 image frames were collected during the flight for analysis and validation. An IMU-KVH1750 high-precision fiber-optic/satellite integrated navigation system served as the reference. It provides a pitch and roll accuracy of 0.015° and a heading accuracy of 0.035°. The experimental equipment is shown in Figure 8, and the camera parameters are listed in Table 3.

Figure 8.

Experimental equipment.

Table 3.

Camera parameters.

This UAV platform was selected due to its stable flight performance and sufficient payload capacity, allowing for the effective integration of the necessary sensors. Furthermore, its ability to execute diverse tilt maneuvers made it well-suited for testing the proposed tilt-induced error compensation method under realistic dynamic conditions. However, one limitation of this platform is its moderate resistance to environmental disturbances, such as wind gusts, which may introduce additional motion noise.

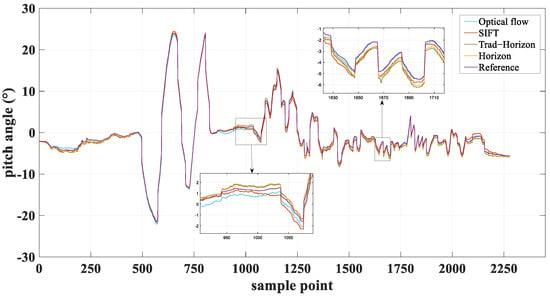

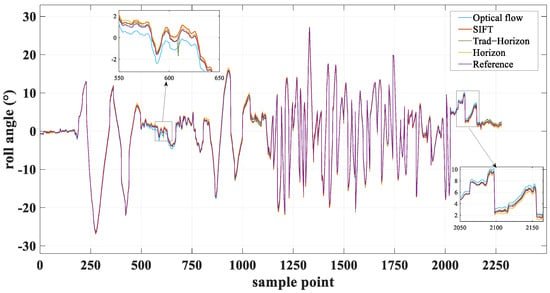

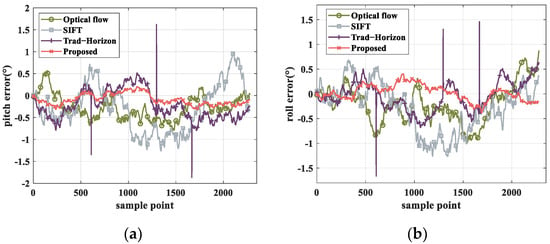

The proposed horizon attitude estimation algorithm is compared with three existing approaches, with the reference system data serving as ground truth:

- traditional horizon attitude estimation;
- optical flow-based attitude estimation;
- homography-based attitude estimation using SIFT feature points.

After the UAV completed its flight mission, the attitude estimation results and error curves of all methods were generated in MATLAB R2020a. Figure 9 and Figure 10 show the pitch and roll angle estimates obtained by each algorithm, respectively. Figure 11 presents the pitch and roll error curves of all compared methods.

Figure 9.

Pitch angle estimation results.

Figure 10.

Roll angle estimation results.

Figure 11.

Estimation error curve. (a) Pitch angle estimation error curve; (b) Roll angle estimation error curve.

The pitch and roll angle attitude estimation results indicate that the isomorphic matrix attitude estimation method based on SIFT feature points exhibits significant systematic bias in both pitch and roll angle calculations, along with pronounced numerical fluctuations. The optical flow-based method displays reduced error fluctuations and superior overall stability compared to the SIFT-based isomorphic matrix approach, though periodic oscillations persist under motion blur interference. The traditional horizon attitude estimation algorithm shows substantial attitude angle deviations at three sampling nodes (609, 1296, and 1668) due to hazy conditions. In contrast, the proposed horizon attitude estimation method achieves optimal accuracy in both pitch and roll angle calculations, with enhanced overall stability, thereby validating the effectiveness of the proposed algorithm.

Tables 4 and 5 summarize the root-mean-square error (RMSE) and standard deviation (STD) of the pitch and roll estimation errors for each algorithm. The proposed method achieves the lowest RMSE and STD of all compared methods for both angles.
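The two metrics used in Tables 4 and 5 can be computed from the per-frame errors with a few lines of code. The sketch below is illustrative only: the function name `error_metrics` is hypothetical, and it assumes the error is defined as estimate minus reference and that STD is the population standard deviation (the tables may use the N - 1 form instead).

```python
import math


def error_metrics(estimates, reference):
    """Return (rmse, std, mean) of the errors estimate - reference, in input units.

    Hypothetical helper; STD is the population form (denominator N).
    """
    if len(estimates) != len(reference) or not estimates:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [e - r for e, r in zip(estimates, reference)]
    n = len(errors)
    mean = sum(errors) / n                                   # systematic bias
    rmse = math.sqrt(sum(x * x for x in errors) / n)         # bias + scatter
    std = math.sqrt(sum((x - mean) ** 2 for x in errors) / n)  # scatter only
    return rmse, std, mean
```

With the population STD, RMSE² = STD² + mean², so RMSE penalizes both the random scatter and the systematic bias of an estimator, whereas STD reflects only the scatter. A method with a systematic bias can therefore have a large RMSE even when its STD is moderate.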

Table 4. Pitch angle error comparison table of different attitude estimation methods.

Table 5. Roll angle error comparison table of different attitude estimation methods.

4.2. Verification of Polarization Orientation Error Compensation Method

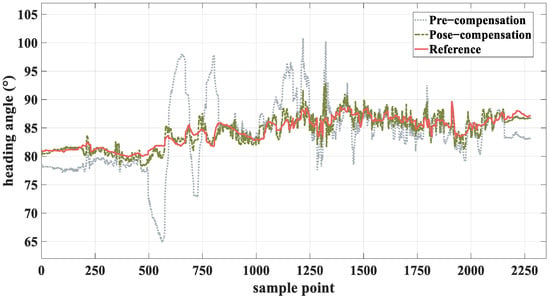

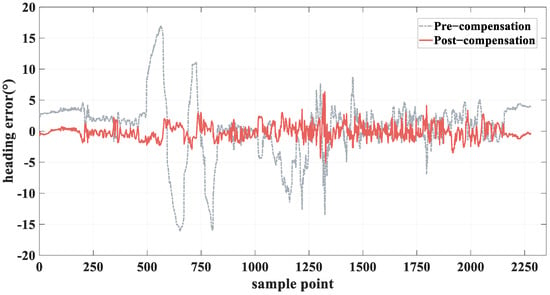

In this experiment, a polarized light sensor mounted on the UAV recorded polarization information at various tilt angles to verify the feasibility and accuracy of compensating the polarized light heading with the estimated horizontal attitude angles under tilted conditions. The heading before and after compensation was compared with the reference heading provided by the high-precision reference system; the results are shown in Figures 12 and 13.
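The body-to-navigation conversion at the core of this compensation can be illustrated with a short sketch. It covers only the frame conversion, not the paper's full heading compensation model: a polarization E-vector measured at angle `alpha` in the sensor's x-y plane is rotated into the local level frame using the estimated roll and pitch, and its azimuth is read from the horizontal plane. It assumes body axes x forward, y right, z down and the ZYX Euler convention, so the leveling rotation is C = R_y(pitch) R_x(roll) and yaw is not needed; the function name `level_azimuth` is hypothetical.

```python
import math


def level_azimuth(alpha_deg, roll_deg, pitch_deg):
    """Azimuth (deg, wrapped to 0-180) of a body-frame E-vector after leveling.

    Hypothetical sketch: body axes x forward, y right, z down; ZYX Euler angles;
    the E-vector lies in the sensor x-y plane at angle alpha from the body x axis.
    """
    a, r, p = (math.radians(x) for x in (alpha_deg, roll_deg, pitch_deg))
    e_b = (math.cos(a), math.sin(a), 0.0)
    cr, sr, cp, sp = math.cos(r), math.sin(r), math.cos(p), math.sin(p)
    # Rows of the leveling matrix C_b^l = R_y(pitch) @ R_x(roll).
    c = ((cp, sp * sr, sp * cr),
         (0.0, cr, -sr),
         (-sp, cp * sr, cp * cr))
    e_l = [sum(c[i][j] * e_b[j] for j in range(3)) for i in range(3)]
    # Azimuth of the horizontal projection, measured from the level x axis toward y.
    az = math.degrees(math.atan2(e_l[1], e_l[0]))
    # An E-vector is an axis rather than a direction, so it is defined modulo 180 deg.
    return az % 180.0
```

With zero roll and pitch the function returns `alpha` unchanged; with `alpha = 45`, 60° of roll, and zero pitch it returns about 26.6°, which shows how strongly an uncorrected tilt can distort an angle measured in the body frame.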

Figure 12. Polarized light heading angle output results before and after compensation.

Figure 13. Comparison of polarized light heading angle error before and after compensation.

Figure 12 compares the polarized light heading with the reference heading before and after compensation. Before compensation, the polarized light heading fluctuates substantially and deviates from the reference because carrier tilt distorts the measurements; the deviation is most pronounced at certain sample points, where the heading reaches large extremes. After compensation, the polarized light heading follows the reference much more closely, confirming that the horizontal attitude angles are effective in correcting tilt-induced heading errors.

Figure 13 compares the heading error before and after compensation. The pre-compensation error (gray dashed line) shows high variability and frequent spikes, which are attributed mainly to tilt and environmental factors. After compensation (red line), both the amplitude and the frequency of the error spikes decrease markedly, and the error stays within a much narrower and more stable range, further confirming that the proposed compensation method reduces heading errors and improves overall heading accuracy.

Table 6 quantifies the heading error before and after compensation in terms of the root-mean-square error (RMSE), standard deviation (STD), and mean error. All three metrics improve after compensation. The RMSE decreases from 5.0869° to 1.1485°, a reduction of approximately 77.4%, indicating a large reduction in the overall heading error. The STD decreases from 5.0633° to 1.1441°, showing that the compensation not only reduces the error but also makes the measurements more consistent. The mean error changes from 0.5005° (a slight overestimation) to -0.1039° (a slight underestimation); its magnitude shrinks by roughly a factor of five, indicating that the compensation also reduces the systematic bias and brings the heading closer to the true value.
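The percentages quoted above follow directly from Table 6. The short script below recomputes the RMSE reduction and, as a sanity check, compares each reported RMSE with sqrt(STD² + mean²), the value implied by the identity RMSE² = STD² + mean², which holds exactly when STD is computed with an N denominator. The numbers are copied from the text; the script is only an arithmetic check, not part of the method.

```python
import math

# Heading-error statistics reported in Table 6 (degrees).
pre = {"rmse": 5.0869, "std": 5.0633, "mean": 0.5005}
post = {"rmse": 1.1485, "std": 1.1441, "mean": -0.1039}

# Relative RMSE reduction quoted in the text, in percent.
reduction = (pre["rmse"] - post["rmse"]) / pre["rmse"] * 100
print(f"RMSE reduction: {reduction:.1f}%")

# Sanity check: with an N-denominator STD, RMSE**2 == STD**2 + mean**2 exactly.
for label, stats in (("before", pre), ("after", post)):
    implied = math.sqrt(stats["std"] ** 2 + stats["mean"] ** 2)
    print(f"{label}: reported RMSE {stats['rmse']:.4f} deg, implied {implied:.4f} deg")
```

The implied and reported RMSE values differ by roughly 0.001° or less, a discrepancy small enough to be explained by rounding or by an N - 1 normalization of the STD.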

Table 6. Comparison table of error before and after heading compensation.

5. Conclusions

This paper proposes a method for estimating the UAV’s horizontal attitude angles from the horizon in order to compensate for the significant heading errors of polarized light navigation under tilted conditions. First, a dehazing-enhancement method integrating Retinex theory and the dark channel prior is proposed, improving the algorithm’s adaptability under low-illumination and hazy conditions. Second, an HSV-based dynamic threshold segmentation method is developed, significantly enhancing the robustness of horizon extraction. Third, an improved adaptive bilateral filtering Canny operator is employed for edge detection, preserving fine edges while effectively suppressing noise. Finally, the progressive probabilistic Hough transform is used for line extraction, substantially reducing computational cost and memory requirements compared with the standard Hough transform. Once the horizon has been extracted, the UAV’s horizontal attitude angles are calculated from the horizon line parameters, and sensor measurements are converted from the body frame to the navigation frame by coordinate transformation, thereby compensating for polarized light heading errors. Airborne experiments were conducted to validate the proposed method. The results show that the horizon-based attitude estimation method is highly accurate, with horizontal attitude angle errors within 0.3°, and that the proposed polarization orientation error compensation method substantially improves heading accuracy, reducing the heading RMSE by approximately 77.4%.
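As a concrete illustration of the step in which attitude is computed from the horizon line parameters, the following sketch converts a horizon segment into roll and pitch. It is not the authors' implementation. It assumes an ideal pinhole camera with focal length `f_px` (in pixels) and principal point (`cx`, `cy`) whose optical axis coincides with the body x axis, image coordinates with u to the right and v downward, the ZYX Euler convention, and a horizon at infinity (the altitude-dependent dip of the horizon is ignored). The function name `attitude_from_horizon` and its parameters are hypothetical. Under these assumptions, roll equals the inclination of the horizon line, and the signed perpendicular distance d from the principal point to the line satisfies d = f tan(pitch).

```python
import math


def attitude_from_horizon(p1, p2, f_px, cx, cy):
    """Return (roll_deg, pitch_deg) from two image points on the horizon line.

    Hypothetical sketch. Assumes an ideal pinhole camera (no lens distortion) whose
    optical axis is the body x axis, image u to the right and v downward, ZYX Euler
    angles, and a horizon at infinity (altitude-dependent horizon dip is ignored).
    """
    (u1, v1), (u2, v2) = p1, p2
    if u2 < u1:
        # Order the endpoints left to right so roll stays between -90 and 90 degrees.
        (u1, v1), (u2, v2) = (u2, v2), (u1, v1)
    du, dv = u2 - u1, v2 - v1
    length = math.hypot(du, dv)
    if length == 0:
        raise ValueError("horizon endpoints must be distinct")
    # Roll: right wing down makes the horizon rise toward the right (v decreases),
    # which yields a positive angle here.
    roll = math.atan2(-dv, du)
    # Unit normal (sin(roll), cos(roll)), pointing toward the ground side of the horizon.
    nx, ny = -dv / length, du / length
    # Signed perpendicular distance from the principal point to the horizon line.
    dist = nx * (u1 - cx) + ny * (v1 - cy)
    # Pinhole geometry gives dist = f * tan(pitch): horizon below center means nose up.
    pitch = math.atan2(dist, f_px)
    return math.degrees(roll), math.degrees(pitch)
```

The two input points could, for instance, be the endpoints (x1, y1, x2, y2) of the longest segment returned by a probabilistic Hough transform such as OpenCV's `cv2.HoughLinesP`; in practice `f_px`, `cx`, and `cy` would come from camera calibration.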

Although the proposed algorithm achieves high accuracy, it has several limitations. First, in urban or densely forested areas the horizon is often occluded, causing vision-based horizontal attitude estimation to fail. Second, horizon detection relies on computationally intensive image processing, which makes real-time operation difficult. Third, when the UAV undergoes large roll or pitch angles, the errors of the polarized light heading compensation model increase significantly, degrading compensation performance. Future research could therefore explore multi-source sensor fusion for attitude estimation to reduce reliance on visual information alone, optimize the image processing algorithms to improve real-time performance, and refine the polarized light heading compensation model to improve its stability under large-angle conditions.

Author Contributions

Conceptualization, M.Y. and X.W.; methodology, M.Y.; software, M.Y.; validation, M.Y., X.W. and X.L.; formal analysis, M.Y.; investigation, M.Y.; data curation, M.Y.; writing—original draft preparation, M.Y.; writing—review and editing, M.Y. and X.L.; visualization, M.Y.; supervision, C.W. and X.L.; project administration, C.W.; funding acquisition, C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Key Research and Development Project of Shanxi Province (202202020101002), in part by the Shanxi Province Basic Research Program (202303021211150), in part by the Aviation Science Foundation (202400080U0001), in part by the Shanxi Province Key Laboratory of Quantum Sensing and Precision Measurement (201905D121001), in part by the Shanxi Province Graduate Student Innovation Practice Project (2024SJ244), and in part by the Scientific and Technological Breakthrough of “Light of the Taihu Lake” (Basic Research) (K20241058).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Temple, S.; How, M.; Powell, S.; Gruev, V.; Marshall, N.; Roberts, N. Thresholds of polarization vision in octopus. J. Exp. Biol. 2021, 224, jeb240812.

- How, M.J.; Robertson, A.; Smithers, S.P.; Wilby, D. Polarization vision in terrestrial hermit crabs. J. Comp. Physiol. A 2023, 209, 899–905.

- Fahmizal; Nugroho, H.A.; Cahyadi, A.I.; Ardiyanto, I. Attitude Control and Low Cost Design of UAV Bicopter. arXiv 2023, arXiv:2309.08209.

- Liu, M.; Wang, W.; Shi, Z.; Xie, L.; Chen, W.; Yan, Y.; Yin, E. Deep Learning-Based IMU Errors Compensation with Dynamic Receptive Field Mechanism. In Advances in Guidance, Navigation and Control; Springer: Singapore, 2025; pp. 95–105.

- Lu, H.; Shen, H.; Tian, B.; Zhang, X.; Yang, Z.; Zong, Q. Flight in GPS-denied environment: Autonomous navigation system for micro-aerial vehicle. Aerosp. Sci. Technol. 2022, 124, 107521.

- Nazarahari, M.; Rouhani, H. Sensor fusion algorithms for orientation tracking via magnetic and inertial measurement units: An experimental comparison survey. Inf. Fusion 2021, 76, 8–23.

- Wang, Y.; Wang, Y.; Jiang, K.; Zheng, W.; Song, M. Adaptive Grid Search Based Pulse Phase and Doppler Frequency Estimation for XNAV. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 3707–3717.

- Kendall, A.; Grimes, M.; Cipolla, R. PoseNet: A Convolutional Network for Real-Time 6-DOF Camera Relocalization. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 2938–2946.

- Qian, G.; Tang, J.; Shen, C.; Liu, J. A highly robust polarization orientation method based on antisymmetry of skylight polarization pattern. Measurement 2022, 204, 112070.

- Li, Z.; Li, M.; Wu, P.; Li, Y. Dynamic Obstacle Detection for Quadrotors with Event Cameras. In Proceedings of the 2024 4th International Conference on Intelligent Communications and Computing (ICICC), Zhengzhou, China, 18–20 October 2024; pp. 126–130.

- Wang, Z.; She, H.; Si, W. Autonomous landing of multi-rotors UAV with monocular gimbaled camera on moving vehicle. In Proceedings of the 2017 13th IEEE International Conference on Control & Automation (ICCA), Ohrid, Macedonia, 3–6 July 2017; pp. 408–412.

- Jeong, C.Y.; Yang, H.; Moon, K.-D. Horizon detection in maritime images using scene parsing network. Electron. Lett. 2018, 54, 760–762.

- Zardoua, Y.; Mohammed, B.; Mrabet, M.E.; Abdelali, A. A Fast Horizon Detector and a New Annotated Dataset for Maritime Video Processing. arXiv 2021, arXiv:2110.13694.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Rob. 2021, 37, 1874–1890.

- de Croon, G.C.H.E.; Dupeyroux, J.J.G.; De Wagter, C.; Chatterjee, A.; Olejnik, D.A.; Ruffier, F. Accommodating unobservability to control flight attitude with optic flow. Nature 2022, 610, 485–490.

- Liu, X.; Li, X.; Shi, Q.; Xu, C.; Tang, Y. UAV attitude estimation based on MARG and optical flow sensors using gated recurrent unit. Int. J. Distrib. Sens. Netw. 2021, 17, 1446–1453.

- Dai, Z.; Yi, J.; Zhang, H.; Wang, D.; Huang, X.; Ma, C. CODNet: A Center and Orientation Detection Network for Power Line Following Navigation. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Shi, B.; Wang, C.; Di, Y.; Guo, J.; Zhang, Z.; Long, Y. Research on a Horizon Line Detection Method for Unmanned Surface Vehicles in Complex Environments. J. Mar. Sci. Eng. 2023, 11, 1130.

- Flores, J.; Gonzalez-Hernandez, I.; Salazar, S.; Lozano, R.; Reyes, C. Improving Optical Flow Sensor Using a Gimbal for Quadrotor Navigation in GPS-Denied Environment. Sensors 2024, 24, 2183.

- Aycock, T.M.; Lompado, A.; Wheeler, B.M. Using Atmospheric Polarization Patterns for Azimuth Sensing. In Proceedings of the SPIE Defense + Security, Baltimore, MD, USA, 22–25 September 2014.

- Gkanias, E.; Risse, B.; Mangan, M.; Webb, B. From skylight input to behavioural output: A computational model of the insect polarised light compass. PLoS Comput. Biol. 2019, 15, e1007123.

- Wang, Y.; Hu, X.; Zhang, L.; Lian, J.; He, X. Polarized Light Compass-Aided Visual-Inertial Navigation Under Foliage Environment. IEEE Sens. J. 2017, 17, 5646–5653.

- Han, G.; Zhang, L.; He, X.; He, R.; Mao, J.; Wu, X.; Hu, X. A Novel Orientation Method for Polarized Light Compass Under Tilted Conditions. IEEE Sens. J. 2020, 20, 10554–10563.

- Shen, C.; Wu, X.; Zhao, D.; Li, S.; Cao, H.; Zhao, H.; Tang, J.; Liu, J.; Wang, C. Comprehensive Heading Error Processing Technique Using Image Denoising and Tilt-Induced Error Compensation for Polarization Compass. IEEE Access 2020, 8, 187222–187231.

- Zhao, D.; Liu, Y.; Wu, X.; Dong, H.; Wang, C.; Tang, J.; Shen, C.; Liu, J. Attitude-Induced error modeling and compensation with GRU networks for the polarization compass during UAV orientation. Measurement 2022, 190, 110734.

- Wu, X.; Liu, J.; Shen, C.; Cao, H.; Wang, C.; Tang, J. Vehicle-Mounted Polarization Orientation Strategy Considering Inclined Pavement and Occlusion Conditions. IEEE Trans. Intell. Veh. 2024, 1–12.

- Liu, S.; Long, W.; He, L.; Li, Y.; Ding, W. Retinex-Based Fast Algorithm for Low-Light Image Enhancement. Entropy 2021, 23, 746.

- Sabir, A.; Khurshid, K.; Salman, A. Segmentation-based image defogging using modified dark channel prior. EURASIP J. Image Video Process. 2020, 2020, 6.

- He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Kusnandar, T.; Santoso, J.; Surendro, K. Modification Color Filtering in HSV Color Space. 2023. Available online: https://www.researchsquare.com/article/rs-2650337/v1 (accessed on 23 June 2023).

- Chen, B.H.; Tseng, Y.S.; Yin, J.L. Gaussian-Adaptive Bilateral Filter. IEEE Signal Process. Lett. 2020, 27, 1670–1674.

- Marzougui, M.; Alasiry, A.; Kortli, Y.; Baili, J. A Lane Tracking Method Based on Progressive Probabilistic Hough Transform. IEEE Access 2020, 8, 84893–84905.

- Powell, S.B.; Garnett, R.; Marshall, J.; Rizk, C.; Gruev, V. Bioinspired polarization vision enables underwater geolocalization. Sci. Adv. 2018, 4, eaao6841.

- Evangelista, C.; Kraft, P.; Dacke, M.; Labhart, T.; Srinivasan, M.V. Honeybee navigation: Critically examining the role of the polarization compass. Philos. Trans. R. Soc. London. Ser. B Biol. Sci. 2014, 369, 20130037.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).