Abstract

Large language models (LLMs) have been widely used in real-world applications owing to their ability to decipher and make sense of perturbed information. However, the performance of LLMs under input perturbations has not been fully analyzed. In this paper, we propose a self-correction reflection method, inspired by human cognition, to improve robustness and mitigate the impact of perturbations on LLM performance. First, we analyze the vulnerabilities of tokenization in LLMs through empirical demonstrations, comparative analysis with human cognition, and a theoretical investigation of perturbation susceptibility. Second, we imitate the way the human brain corrects input information, enhanced by the consistency principle, so that the model can correct perturbations and reduce their impact on the final response. Finally, we conduct experiments that validate the method’s efficacy, demonstrating improved performance across multiple models and datasets. We also introduce a new evaluation metric, Model-Specific Cosine Similarity (MSCS), to quantify how well a specific model understands perturbed text, providing a more comprehensive evaluation of different LLM architectures. Across different types of perturbations, applying self-correction reflection yields an average MSCS improvement of 10.88% and an average accuracy (ACC) improvement of 10.22% across all models. The study also underscores the need for more innovative tokenization techniques and architectural designs to achieve human-like cognitive robustness. This study could pave the way for more reliable, adaptable, and intelligent language models capable of thriving in practical applications.

1. Introduction

In our daily lives, the human brain demonstrates remarkable adaptability when confronted with input perturbations [1]. For instance, when faced with a sentence like “Hvae a ncie day. Hpoe you konw the infromation”, humans can typically decipher the intended meaning almost effortlessly by leveraging context and cognitive reasoning. This resilience highlights the extraordinary flexibility of human cognition and underscores the sophisticated mechanisms underlying context-based language understanding.

In stark contrast, current large language models (LLMs), despite being trained on vast amounts of natural language data, often exhibit a striking vulnerability to input perturbations [2]. Even minor perturbations in input can drastically affect the LLMs’ responses and can lead to outright misinterpretations. This fragility raises significant concerns about the reliability of LLMs, particularly in critical applications such as medicine or law, where precision is paramount.

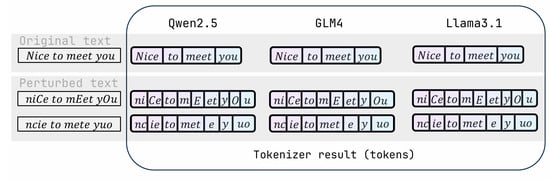

To address the issue of LLM reliability under input perturbations, our study aims to identify the underlying reasons why LLMs are fragile and to systematically assess possible solutions. Drawing on knowledge from both neuroscience and psycholinguistics, our research identifies the tokenization process, which breaks down inputs using a fixed vocabulary mapping, as the key source of this vulnerability. As shown in Figure 1, small input changes can significantly alter the tokenization, and hence the behavior, of various mainstream LLMs, causing semantic distortions that undermine the reliability of the models.

Figure 1.

The significant variations in tokenization results (tokens) of the perturbed text by different LLMs.

Research [3] has shown that LLMs can imitate the natural language processing abilities of the human brain, which allows us to draw an analogy between the human brain and LLMs. We extend this analogy by comparing the working principles of LLMs with those of the human brain. Specifically, based on [4], we hypothesize that different LLM layers correspond to different brain regions. Furthermore, although distinct in mechanism, the tokenization process in LLMs shares functional similarities with how the brain processes incoming linguistic information: just as neural systems transform sensory inputs into interpretable patterns [5], LLMs employ tokenization to convert raw text into vector representations suitable for computation. If this input information is inherently flawed and the model (or brain) fails to correct these perturbations effectively, the output tends to be compromised [6]. Drawing on the insight of [7] that LLMs intrinsically possess perturbation-correction capabilities similar to those of the human brain, we develop a self-correction reflection mechanism that leverages the model’s existing capacities without additional training. Unlike approaches requiring external corrections, our method employs the consistency principle [8] to guide the model in autonomously detecting and rectifying input perturbations before generating a response, thereby preserving semantic integrity while keeping the model’s original architecture unchanged.

The main contributions of this paper are summarized as follows:

- We conduct a systematic analysis of how input perturbations influence LLM reliability from interdisciplinary perspectives (e.g., neuroscience, psychology), examining how tokenization mechanisms shape model robustness.

- We propose the Model-Specific Cosine Similarity (MSCS) framework, which enables granular evaluation of perturbation sensitivity across different models, overcoming the limitations of traditional metrics (e.g., BLEU or BERTScore), which offer only coarse-grained similarity assessments and cannot diagnose model-specific perturbation sensitivity.

- We develop a self-contained reflection-based correction approach that requires neither additional training data nor external linguistic tools, distinguishing itself from conventional grammar correction systems while achieving robust performance through intrinsic consistency verification.

- We perform comprehensive architectural comparisons between Transformer, Mamba, and Hybrid models with perturbation-specific evaluation, providing practical insights for architecture selection in noise-prone scenarios.

The remainder of this paper is organized as follows. Section 2 examines the neurocognitive mechanisms for processing perturbed inputs and analyzes the limitations of existing correction methods in LLM tokenization. Section 3 details our self-correction reflection framework, presenting its formulation of variational inference and its consistency-driven enhancement mechanism. Section 4 introduces the Model-Specific Cosine Similarity (MSCS) metric to evaluate perturbation impacts across different architectures. Section 5 validates our method through systematic experiments that compare Transformer and Mamba models in various perturbation scenarios. Section 6 discusses the advantages of the proposed self-correction reflection approach, while also addressing its inherent limitations and potential challenges in practical applications. Section 7 concludes with recommendations for achieving human-level robustness through cognitive-aligned architectural innovations.

2. Related Work

2.1. Human Adaptation to Input Perturbations from Neurological and Psychological Perspectives

Human beings possess a remarkable ability to understand and make sense of various forms of input, even when it is perturbed.

From a neurological perspective, the language processing areas of the brain, including Broca’s and Wernicke’s areas, play a crucial role [9]. When presented with perturbed texts, these areas first work to decipher the intent of the text and attempt to correct it [10]. For example, studies using functional magnetic resonance imaging (fMRI) have shown that the brain initially registers irregularities and then attempts to map the chaotic elements onto known language patterns [11,12]. However, such self-correction remains underexplored in LLMs: when given perturbed text, an LLM performs autoregressive generation directly on that text without correcting it.

Psychologically, human comprehension depends on context, semantic knowledge, and prior experience. Context provides valuable cues that help overcome the confusion caused by perturbations [13]. For example, in a sentence like “The cat chased the muose” with some letter swaps, the overall context of a typical predator–prey relationship allows the reader to infer the correct meaning. Furthermore, continuous exposure to large amounts of language over time allows human beings to develop heuristics and expectations [14]. We subconsciously predict which words are likely to follow based on the initial words in a sentence, which helps to compensate for perturbations. However, unlike the brain, LLMs segment text deterministically, making them vulnerable to perturbations such as spelling errors or irregular combinations of tokens [15]. These disruptions can fragment subword units or distort semantics, whereas minor input deviations rarely compromise human comprehension.

In general, the human cognitive system demonstrates remarkable resilience to input perturbations through integrated neural and psychological adaptation mechanisms. These self-adaptive processes stand in stark contrast to the current limitations of LLMs, where deterministic tokenization and rigid pattern matching make them vulnerable to input perturbations that humans effortlessly overcome. We posit that emulating human self-correction mechanisms may enhance the robustness of LLMs, particularly when processing perturbed inputs, a setting in which purely autoregressive generation proves inadequate.

2.2. Limitations of External Correction Methods in Tokenization

Although LLMs have made significant advances in natural language processing, they still exhibit vulnerability when confronted with input perturbations, particularly in the tokenization stage [16]. Tokenization is the process of converting the input text into discrete units (tokens) that the model can understand [17]. However, LLMs generally rely on tokenizers that are deterministic and vulnerable to input perturbations, such as case changes. Even a small number of text perturbations can cause significant variations in input tokens, which in turn affects the model’s understanding of the text.
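To make this fragmentation concrete, the following Python sketch uses a toy greedy longest-match subword tokenizer. The vocabulary `VOCAB` and the function `greedy_tokenize` are hypothetical illustrations, not the tokenizer of any model evaluated in this paper; real BPE or SentencePiece tokenizers use learned merges and far larger vocabularies, but misspelled or case-swapped words are similarly prone to being split into more, rarer pieces.

```python
# Toy greedy longest-match subword tokenizer (hypothetical vocabulary, illustration only).
VOCAB = {"information", "inform", "ation", "in", "ma", "tion"} | set("abcdefghijklmnopqrstuvwxyz")


def greedy_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Split `word` left to right, always taking the longest prefix found in `vocab`."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate piece first, then progressively shorter ones.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # No vocabulary entry matches (e.g., an upper-case letter): emit one character.
            tokens.append(word[i])
            i += 1
    return tokens


for w in ["information", "infromation", "INFORMATION"]:
    print(w, "->", greedy_tokenize(w, VOCAB))
```

With this vocabulary, the clean word maps to a single token, the letter-swapped form splits into six fragments (`in`, `f`, `r`, `o`, `ma`, `tion`), and the upper-case form falls back to eleven single-character tokens, so the three inputs share no tokens at all.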

In recent research, the primary focus has been on improving model architectures, such as the Transformer and newer architectures such as Mamba [18,19] and RWKV [20]. These models are designed to improve efficiency and performance in various natural language processing (NLP) tasks, but the underlying tokenization is often overlooked. Although these advances improve model capacity and generalization, they do not address the issues caused by input perturbations during tokenization.

Existing solutions for mitigating such errors rely predominantly on external correction methods, such as applying predefined rules or employing additionally trained models to post-process perturbed inputs [21]. However, these approaches have critical drawbacks. First, external correction modifies the original input text to align with the tokenizer’s expectations, which risks altering or discarding semantically important information; for example, case normalization may erase distinctions between proper nouns and common terms. Second, such methods lack scalability: each type of perturbation often requires manually drafted rules or retraining of correction models [22]. Some recent efforts attempt to enhance robustness through runtime anomaly detection using large language models or statistical learning [23], or by embedding Covert Timing Channel (CTC) detection in system-level defenses [24]. Others improve semantic understanding with contextualized embeddings [25]. Although these methods reflect a growing emphasis on system resilience and align in part with Zero Trust principles, they still operate as external additions and do not fundamentally address the vulnerability of tokenizers. As a result, these solutions often struggle to generalize across the diverse and unpredictable perturbations found in real-world inputs.

In summary, while external correction methods have achieved some success in mitigating errors during tokenization, they inherently limit the adaptability and scalability of LLMs. The challenge lies in developing a more intrinsic approach that mirrors human cognitive agility and automatically adapts to noise introduced during tokenization. Our proposed method addresses this gap by integrating self-correction capabilities that operate on the tokenized input, ensuring semantic fidelity without external interventions. Table 1 illustrates the key differences between our approach and existing methods, emphasizing the advantages of our self-correcting mechanism in handling diverse perturbations autonomously.

Table 1.

Comparison of external correction methods and the self-correction reflection.

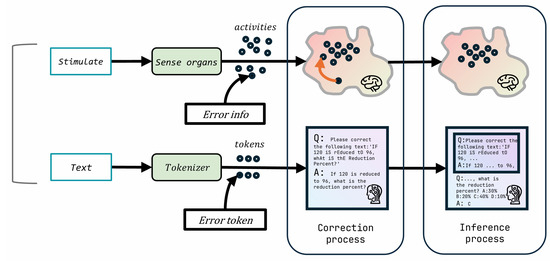

3. Self-Correction Reflection

To address the vulnerability of the LLM’s deterministic tokenization process to input perturbations (e.g., spelling errors or irregular token combinations), we propose self-correction reflection (Figure 2). It is inspired by the intrinsic ability of the human brain to correct errors during language processing [7] and leverages the consistency principle of [8], under which LLMs exhibit an inherent tendency to maintain coherence across their responses. This method enables the model to autonomously detect and rectify perturbations using its existing capabilities. Unlike traditional approaches that rely on external correction tools or additional training, our method preserves semantic integrity without modifying the model architecture or requiring external resources, ensuring high practicality and scalability.

Figure 2.

Illustration of the self-correction reflection for improving LLM performance by simulating the brain’s error correction ability and leveraging relevant research findings.

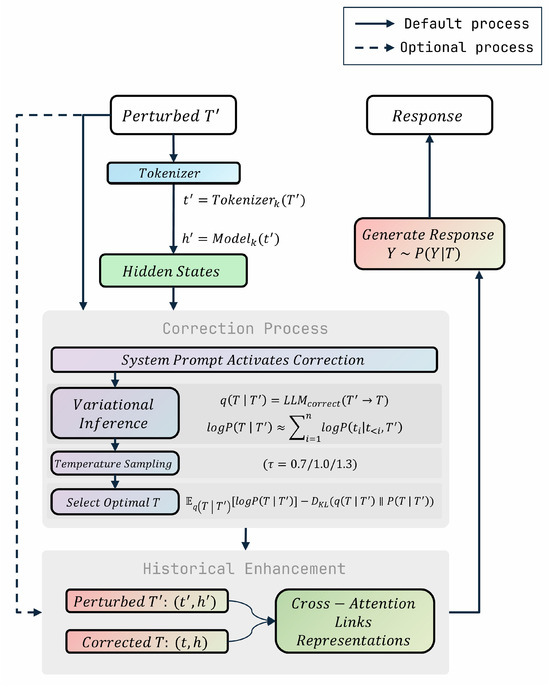

The design of the self-correction reflection framework comprises four key stages: Input Reception and Tokenization, Self-Correction Phase, Historical Enhancement through the Consistency Principle, and Inference with Corrected Context (Figure 3). Initially, the perturbed input undergoes tokenization and hidden-state extraction, establishing the foundation for subsequent analysis. The second stage activates the model’s self-diagnosis mechanism, where variational inference and temperature-guided sampling iteratively refine the perturbed input into a corrected version. Building on this, the third stage integrates the corrected text into the dialogue history while preserving the original perturbed context, enabling cross-attention between distorted and rectified representations to mitigate error propagation. Finally, the model performs inference using the corrected embeddings, ensuring alignment with the intended semantic space. These stages collectively mimic the brain’s error-checking loop while leveraging the LLM’s intrinsic coherence to balance correction rigor with computational efficiency.

Figure 3.

Flowchart of self-correction reflection process.

The self-correction reflection process operates as follows:

(1) Input Reception and Tokenization: When a user submits a perturbed input text $X'$, the model processes it through a tokenizer to convert it into a sequence of tokens:

$$\mathbf{t}' = \mathrm{Tokenizer}(X') = (t'_1, t'_2, \ldots, t'_n).$$

These tokens are passed through the model to extract hidden states from the final embedding layer, yielding vector representations:

$$h' = f_\theta(\mathbf{t}') = (h'_1, h'_2, \ldots, h'_n),$$

where $h'$ are the hidden states of the final embedding layer of the model $f_\theta$ for $X'$. Perturbations in $X'$ may cause $h'$ to deviate from the intended semantic representation, potentially resulting in misinterpretation.

(2) Self-Correction Phase: The model analyzes the tokenized perturbed input and its hidden states $h'$ to generate a corrected text $T$. Using a system prompt (see Table 2), the model rectifies errors. This correction is modeled as a variational distribution

$$q(T \mid X'),$$

which approximates the true posterior $p(T \mid X')$. The model outputs a probability distribution over the generated tokens, and the log-probability $\log q(T \mid X')$ is calculated as the sum of the log-probabilities of each token in $T$ conditioned on $X'$. Similarly, $p(T \mid X')$ is approximated by the model’s output probability distribution for the corrected text, computed as

$$\log p(T \mid X') \approx \sum_{i=1}^{m} \log P_\theta(t_i \mid t_{<i}, X'),$$

where $t_1, \ldots, t_m$ are the tokens in $T$, and $P_\theta(t_i \mid t_{<i}, X')$ is the model’s predicted probability for each token given the preceding tokens and the perturbed input. The model maximizes the likelihood of reconstructing the intended text by optimizing

$$\mathcal{L}_{\mathrm{corr}} = \mathbb{E}_{q(T \mid X')}\left[\log p(X' \mid T)\right] - D_{\mathrm{KL}}\left(q(T \mid X') \,\|\, p(T)\right),$$

selecting the candidate $T$ with the highest $\mathcal{L}_{\mathrm{corr}}$. The reconstruction term ensures an accurate correction, while the KL divergence aligns $q(T \mid X')$ with the prior $p(T)$, ensuring robust corrections.
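The token-level decomposition of the sequence log-probability, and the selection of the best-scoring candidate, can be sketched as follows. The per-token probabilities are made-up numbers, and `sequence_log_prob` is a hypothetical helper; for brevity, the sketch ranks candidates by summed log-probability alone rather than by the full variational objective.

```python
import math


def sequence_log_prob(token_probs: list[float]) -> float:
    """Sum of log P(t_i | t_<i, X') over the tokens of one candidate correction."""
    return sum(math.log(p) for p in token_probs)


# Hypothetical per-token probabilities that a model might assign to two candidate
# corrections of the perturbed input "Hvae a ncie day" (illustrative, not measured).
candidates = {
    "Have a nice day": [0.60, 0.90, 0.70, 0.95],
    "Hvae a nice day": [0.05, 0.80, 0.60, 0.90],
}

scores = {text: sequence_log_prob(probs) for text, probs in candidates.items()}
best = max(scores, key=scores.get)  # candidate with the highest log-probability
print(best, round(scores[best], 3))
```

Summing log-probabilities instead of multiplying raw probabilities avoids numerical underflow on long sequences. Because a plain sum favors shorter candidates, length-normalized scores are a common variant; the text does not state whether such normalization is used.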

Table 2.

Example of correction process with LLM’s system prompt, input text, and response.

The overall objective is to maximize

$$\mathcal{L} = \mathbb{E}_{q(T \mid X')}\left[\log p(Y \mid T)\right] - D_{\mathrm{KL}}\left(q(T \mid X') \,\|\, p(T \mid X')\right),$$

where $\log p(Y \mid T)$ is computed as the log-probability of the response $Y$ given $T$. Using different temperature settings ($\tau$) during sampling, the model obtains diverse distributions $q_\tau(T \mid X')$, allowing systematic exploration of the correction space. This temperature-based optimization strategy selects the candidate that best achieves the objective while maintaining semantic fidelity.
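The effect of the temperature $\tau$ can be illustrated with a temperature-scaled softmax, $p_i = \exp(z_i/\tau) / \sum_j \exp(z_j/\tau)$. The logits and the three candidate strings below are hypothetical; the sketch only shows that lower temperatures concentrate probability on the top candidate while higher temperatures spread it out, which is what lets sampling at several values of $\tau$ produce diverse correction candidates.

```python
import math
import random


def softmax_with_temperature(logits: list[float], tau: float) -> list[float]:
    """p_i = exp(z_i / tau) / sum_j exp(z_j / tau), computed stably."""
    scaled = [z / tau for z in logits]
    m = max(scaled)  # subtract the maximum to avoid overflow in exp
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: list[float]) -> float:
    """Shannon entropy in nats; higher means a more spread-out distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical logits for three candidate spellings of one word.
vocab = ["have", "hvae", "hive"]
logits = [4.0, 2.0, 1.0]
temperatures = (0.3, 0.7, 1.0, 1.5)

for tau in temperatures:
    probs = softmax_with_temperature(logits, tau)
    print(f"tau={tau}: probs={[round(p, 3) for p in probs]}, entropy={entropy(probs):.3f}")

# Draw one candidate per temperature (seeded so the run is reproducible).
rng = random.Random(0)
samples = {tau: rng.choices(vocab, weights=softmax_with_temperature(logits, tau))[0]
           for tau in temperatures}
print(samples)
```

Temperature scaling never changes which candidate is most probable; it only changes how much probability mass the alternatives receive, so the subsequent scoring step decides among a wider or narrower set of candidates.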

The corrected text $T$ is tokenized into $\mathbf{t} = (t_1, \ldots, t_m)$ and embedded into hidden states $h$, aligning $h$ with the intended semantic representation. This approach leverages the model’s intrinsic capabilities, mimicking the brain’s error correction without requiring training.

(3) Historical Enhancement through the Consistency Principle: Following [8], the corrected text $T$ and its embeddings $h$ are integrated into the dialogue history as contextual augmentation. Unlike traditional methods that overwrite the original input, risking semantic loss, our approach retains both the perturbed input $X'$ (with hidden states $h'$) and the corrected text $T$ (with hidden states $h$). This historical record, processed through cross-attention mechanisms, enhances contextual awareness by linking perturbed and corrected representations, reducing error propagation. The consistency principle ensures alignment between the corrected and intended distributions, minimizing divergence in the latent space.
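A minimal sketch of how the dialogue history can retain both versions, assuming a generic chat format of role/content messages (a common convention, not necessarily the format used in the experiments). The prompt strings and the helpers `build_history` and `add_question` are hypothetical; the actual prompts are those shown in Tables 2 and 3.

```python
def build_history(perturbed: str, corrected: str) -> list[dict[str, str]]:
    """Keep the perturbed input AND the model's own correction in the history."""
    return [
        # Earlier turn: the raw (perturbed) input is preserved, not overwritten.
        {"role": "user", "content": f"Correct any spelling, case, or word-order errors:\n{perturbed}"},
        # The model's self-correction is stored as its previous reply.
        {"role": "assistant", "content": corrected},
    ]


def add_question(history: list[dict[str, str]], question: str) -> list[dict[str, str]]:
    """Return a new message list with the downstream question appended (history is not mutated)."""
    return history + [{"role": "user", "content": question}]


history = build_history("Hvae a ncie day. Hpoe you konw the infromation",
                        "Have a nice day. Hope you know the information")
messages = add_question(history, "Summarize the message above in one sentence.")
for m in messages:
    print(m["role"], "|", m["content"])
```

Keeping the perturbed text as an earlier turn, rather than replacing it, is what lets the model attend to both representations when it answers the final question.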

(4) Inference with Corrected Context: The model generates the target response $Y$ based on the corrected text $T$ and its hidden states $h$, expressed as

$$\log p(Y \mid X') \geq \mathbb{E}_{q(T \mid X')}\left[\log p(Y \mid T, h)\right] - D_{\mathrm{KL}}\left(q(T \mid X') \,\|\, p(T \mid X')\right).$$

The reconstruction term ensures accurate responses, and the KL divergence ensures that the correction process is robust. Using the corrected embeddings $h$, the model produces reliable outputs under noisy conditions.

By embedding variational inference and temperature-based sampling within each step, our approach optimizes correction and inference without external models or training. The LLM’s use of cross-attention to link perturbed and corrected embeddings in the dialogue history mirrors human-like error mitigation, ensuring scalability and preserving the original model architecture for diverse real-world scenarios.

The effectiveness of this method is demonstrated in Table 2 and Table 3. Table 2 illustrates the correction of a perturbed input, while Table 3 shows how the corrected text informs accurate inference, highlighting the seamless integration of correction and inference for reliable outputs.

Table 3.

Example of inference process with system prompt, history, question, and response.

This cognitively inspired framework enhances LLM resilience to textual distortions, validated through experimental results demonstrating improved performance and reliability without additional training or external models.

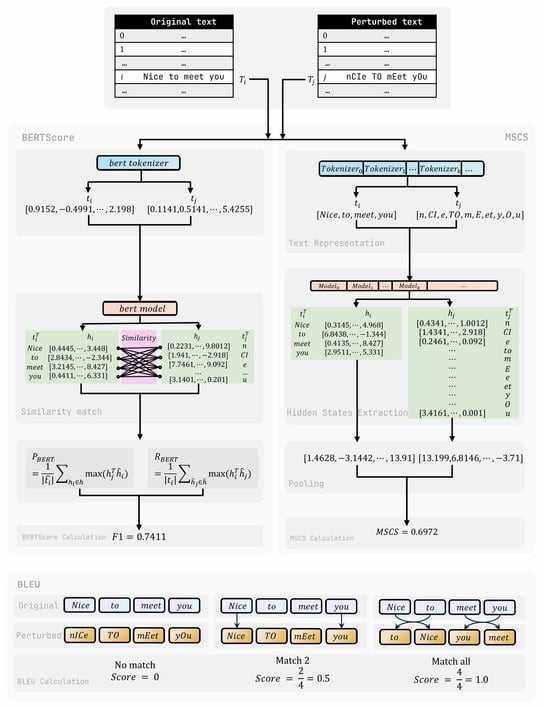

4. Model-Specific Cosine Similarity

Traditional evaluation metrics such as BERTScore and BLEU rely on a single fixed model or on rule-based calculations, so their scores are fixed once the dataset is determined and cannot reflect how a particular LLM interprets the text. They therefore do not capture how different models understand perturbed input, limiting their ability to assess model-specific effects. Additionally, because both metrics rely on token matching, they are largely insensitive to perturbations that only change word order; in particular, BLEU-1 compares unigrams without regard to their position. To address these limitations, we propose Model-Specific Cosine Similarity (MSCS), a novel evaluation framework that leverages multiple LLMs to comprehensively assess model performance under input perturbations.

MSCS builds upon the well-established cosine similarity metric [26,27], which measures semantic similarity between text representations. Unlike BERTScore and BLEU, which provide static evaluations, MSCS dynamically integrates the capabilities of different models, enabling fine-grained analysis of how perturbations affect input understanding across diverse LLM architectures. This approach captures model-specific variations in processing perturbed inputs, offering insights that traditional metrics cannot provide.

The key steps of MSCS are as follows.

(1) Text Representation: First, we use different LLMs and their corresponding tokenizers to convert the two texts to be compared into vector representations. Given an input text, the model’s tokenizer converts it into a sequence of tokens.

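The formula for this step is as follows:

$$\mathcal{T}_1 = \mathrm{Tokenizer}_M(S_1), \qquad \mathcal{T}_2 = \mathrm{Tokenizer}_M(S_2),$$

where $S_1$ and $S_2$ are the two input texts, $M$ is the LLM under evaluation, and $\mathcal{T}_1$ and $\mathcal{T}_2$ are the corresponding tokens.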

(2) Hidden States Extraction: Once the text has been tokenized, the tokens are fed forward through the model, which outputs a series of hidden states at each layer. To capture semantic information, we focus on the hidden states of the final layer, which integrate the semantic information processed by all preceding layers. Figure 4 illustrates the overall flow of the MSCS calculation process.

Figure 4.

Flowchart of the MSCS, BERTScore, and BLEU calculation procedures.

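The formula for this step is as follows:

$$H_i = f_M(\mathcal{T}_i) = (\mathbf{h}_{i,1}, \ldots, \mathbf{h}_{i,n_i}), \qquad i \in \{1, 2\},$$

where $H_i$ are the hidden states of the final layer of model $M$ for text $S_i$, and $n_i$ is the number of tokens in $S_i$.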

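(3) Pooling: To obtain a fixed-length vector for each text, we apply mean pooling to the hidden states of the final layer. This involves averaging the hidden states across all tokens in the sequences $H_1$ and $H_2$, resulting in vectors $\mathbf{v}_1$ and $\mathbf{v}_2$ that represent the entire input texts.

The formula for this step is as follows:

$$\mathbf{v}_i = \frac{1}{n_i} \sum_{j=1}^{n_i} \mathbf{h}_{i,j}, \qquad i \in \{1, 2\},$$

where $n_i$ is the number of tokens in text $S_i$, $\mathbf{h}_{i,j}$ is the final-layer hidden state of its $j$-th token, and $\mathbf{v}_1$ and $\mathbf{v}_2$ are the pooled vectors of the two texts.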
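Mean pooling over the final-layer hidden states can be sketched in plain Python as follows. The toy hidden states are invented numbers with hidden size 2, and the `attention_mask` argument is an added assumption: when texts are batched with padding, padded positions should be excluded so that the token count covers only real tokens; for a single unpadded text the mask is all ones and the result equals the plain average.

```python
def mean_pool(hidden_states: list[list[float]], attention_mask: list[int]) -> list[float]:
    """Average the hidden-state vectors of real tokens (mask == 1), ignoring padding."""
    kept = [h for h, m in zip(hidden_states, attention_mask) if m == 1]
    if not kept:
        raise ValueError("sequence contains no unmasked tokens")
    n = len(kept)      # number of real tokens
    dim = len(kept[0])  # hidden size
    return [sum(h[d] for h in kept) / n for d in range(dim)]


# Toy final-layer hidden states: 3 real tokens followed by 1 padding position.
H = [[1.0, 2.0], [3.0, 4.0], [5.0, 0.0], [9.0, 9.0]]
mask = [1, 1, 1, 0]
print(mean_pool(H, mask))
```

Here the padding vector `[9.0, 9.0]` is ignored and the pooled vector is the average of the first three rows.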

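(4) MSCS Calculation: We compute the MSCS between the two representation vectors as the cosine of the angle between them:

$$\mathrm{MSCS}(S_1, S_2) = \frac{\mathbf{v}_1 \cdot \mathbf{v}_2}{\|\mathbf{v}_1\| \, \|\mathbf{v}_2\|}, \tag{11}$$

where $\mathbf{v}_1$ and $\mathbf{v}_2$ are the vector representations of the two texts, and $\|\mathbf{v}_1\|$ and $\|\mathbf{v}_2\|$ are their Euclidean norms.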
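The cosine computation itself is short; the sketch below uses hypothetical pooled vectors rather than outputs of a real model. Identical inputs give a value of 1 (up to floating-point rounding), a moderately different direction gives about 0.923, and opposite vectors give −1, matching the range of MSCS.

```python
import math


def cosine_similarity(v1: list[float], v2: list[float]) -> float:
    """(v1 . v2) / (||v1|| * ||v2||); the result lies in [-1, 1]."""
    dot = sum(a * b for a, b in zip(v1, v2))
    norm1 = math.sqrt(sum(a * a for a in v1))
    norm2 = math.sqrt(sum(b * b for b in v2))
    if norm1 == 0 or norm2 == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm1 * norm2)


v_orig = [3.0, 2.0]  # hypothetical pooled vector of the original text
print(cosine_similarity(v_orig, v_orig))        # same text, same representation
print(cosine_similarity(v_orig, [2.0, 3.0]))    # representation shifted by a perturbation
print(cosine_similarity(v_orig, [-3.0, -2.0]))  # opposite direction
```

In a full MSCS computation, `v_orig` and the second argument would be the mean-pooled final-layer states of the original and perturbed texts, both obtained from the same model and tokenizer.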

For nonzero vectors $\mathbf{v}_1, \mathbf{v}_2 \in \mathbb{R}^d$, MSCS ranges from $-1$ to 1. By calculating MSCS between the perturbed text and the text before perturbation (the original text), we can measure how far the LLM’s understanding of the perturbed text departs from its understanding of the original text. The closer the value is to 1, the more similarly the model understands the two sentences, indicating that the model largely ignores the perturbation. Conversely, the closer the value is to −1, the more differently the model understands the two sentences, indicating that the perturbation has a greater effect on the model.

5. Experiments

The primary objective of our experiments is to systematically evaluate the robustness of state-of-the-art language models against various text perturbations and to assess the effectiveness of self-correction reflection in mitigating performance degradation. To achieve this, we designed a comprehensive experimental framework that examines model behavior under different types and intensities of perturbations. Furthermore, we conducted ablation studies comparing self-correction reflection using only the LLM (without leveraging the consistency principle) with the complete proposed framework.

5.1. Experimental Setups

5.1.1. Models and Datasets

We selected a diverse set of models representative of state-of-the-art architectures commonly used in LLM research tasks. Specifically, we included models based on the Transformer architecture, such as Qwen2.5 (7B parameters) [28,29] (Alibaba, Hangzhou, China), GLM4 (9B parameters) [30] (Tsinghua University, Beijing, China), and Llama3.1 (8B parameters) [31] (Meta AI, Menlo Park, CA, USA), as well as models with the Mamba architecture, including Falcon3-Mamba (7B parameters) [32] (Technology Innovation Institute, Abu Dhabi, UAE). These models were chosen for their widespread adoption and proven efficacy in various tasks [33,34].

In this study, we selected two well-established benchmark datasets commonly used for evaluating LLMs: the Massive Multitask Language Understanding (MMLU) dataset [35] (the MMLU datasets can be downloaded from the following link: https://github.com/hendrycks/test, accessed on 11 November 2024) and the AGIEval dataset [36] (the AGIEval datasets can be downloaded from the following link: https://github.com/ruixiangcui/AGIEval, accessed on 15 November 2024), focusing on the English subsets. The MMLU dataset is a widely recognized benchmark covering a broad range of tasks [37], including, but not limited to, single-choice questions in domains such as science, history, and mathematics. The AGIEval dataset is a collection of question-answering tasks comprising multiple sub-datasets; we used the LogiQA [38], LSAT [39], and SAT sub-datasets. These datasets, consisting of single-choice questions and their corresponding answers, are designed to test the reasoning and problem-solving abilities of models, making them well suited for assessing the generalizability of LLMs [8,40].

We applied a series of controlled perturbations to the datasets, following the methodologies proposed by previous studies [7], where four different perturbation levels were used to explore the performance of the LLMs under varying degrees of input noise. The first level was No Perturbation (Baseline), where no perturbation was applied to the dataset, serving as the control condition for model evaluation. In the second level, Light Perturbation, 20% of the data points that met the specific perturbation criteria were randomly altered, introducing minor noise while preserving the original meaning. The third level, Moderate Perturbation, affected 50% of the data points that satisfied the perturbation conditions, introducing more substantial noise without completely disrupting the underlying information. The fourth level, Heavy Perturbation, applied changes to 100% of the data points, ensuring that all data underwent some form of noise introduction, simulating high levels of disturbance.

Furthermore, a case perturbation was applied based on research on human sensitivity to letter case [41,42]. For each data point, we randomly selected 0%, 20%, 50%, or 100% of the words and swapped their case. This perturbation simulates real-world case inconsistencies in text input, testing the model’s robustness to such swaps. Based on research on human sensitivity to letter and word order [43], we introduced additional letter and word perturbations. For letter perturbation, we randomly selected 0%, 20%, 50%, or 100% of the words and swapped the order of two adjacent letters within each selected word, simulating typing or OCR errors. For word perturbation, we selected 0%, 20%, 50%, or 100% of adjacent word pairs and swapped their order, mimicking the sentence-level disorganization often encountered in user-generated content from non-native speakers.
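The three perturbation types can be approximated with the following Python sketch. The function names, whitespace-based word splitting, treating punctuation as ordinary characters, rounding the number of selected items, and using non-overlapping word pairs are all assumptions made for illustration, not the exact procedure used to build the datasets.

```python
import random


def case_perturb(text: str, rate: float, rng: random.Random) -> str:
    """Case swap: swap the case of a `rate` fraction of the words."""
    words = text.split()
    k = round(rate * len(words))
    for i in rng.sample(range(len(words)), k):
        words[i] = words[i].swapcase()
    return " ".join(words)


def letter_perturb(text: str, rate: float, rng: random.Random) -> str:
    """Letter swap: in a `rate` fraction of words, swap two adjacent characters."""
    words = text.split()
    eligible = [i for i, w in enumerate(words) if len(w) >= 2]
    k = round(rate * len(eligible))
    for i in rng.sample(eligible, k):
        w = words[i]
        j = rng.randrange(len(w) - 1)  # swap positions j and j + 1
        words[i] = w[:j] + w[j + 1] + w[j] + w[j + 2:]
    return " ".join(words)


def word_perturb(text: str, rate: float, rng: random.Random) -> str:
    """Word swap: swap a `rate` fraction of non-overlapping adjacent word pairs."""
    words = text.split()
    pairs = list(range(0, len(words) - 1, 2))  # (0, 1), (2, 3), ... never overlap
    k = round(rate * len(pairs))
    for i in rng.sample(pairs, k):
        words[i], words[i + 1] = words[i + 1], words[i]
    return " ".join(words)


rng = random.Random(42)
sentence = "The cat chased the mouse across the garden"
for rate in (0.2, 0.5, 1.0):
    print(rate, "|", case_perturb(sentence, rate, rng), "|",
          letter_perturb(sentence, rate, rng), "|", word_perturb(sentence, rate, rng))
```

Swapping two identical adjacent letters (as in "ll") leaves a word unchanged, so under this sketch the effective perturbation rate can be slightly lower than the nominal one.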

Applying these perturbations yields three datasets, SwapAlpha (adjacent-letter swaps), SwapWord (adjacent-word swaps), and CaseAlpha (case swaps), in which each data point can be tested under varying perturbation levels that reflect real-world conditions. This enables measurement of the LLMs’ resilience and their ability to maintain performance across diverse, potentially unreliable perturbed inputs.

5.1.2. Evaluation Metrics

Following established practice [8], we evaluated the robustness of models to input noise using accuracy (ACC), calculated as the proportion of generated responses whose labels matched the annotated labels in the dataset. This matching was judged by an LLM, an evaluation approach that has been shown to be as effective as human evaluation [44]. The formula for ACC is as follows:

$$\mathrm{ACC} = \frac{N_{\mathrm{correct}}}{N_{\mathrm{total}}} \times 100\%,$$

where $N_{\mathrm{correct}}$ is the number of responses whose labels match the annotated labels and $N_{\mathrm{total}}$ is the total number of evaluated questions.

Additionally, we employed MSCS (see Section 4) to evaluate how well each model understands perturbed texts; MSCS is computed using Equation (11).

To ensure the reliability of the results, every value reported in the subsequent experimental results is the mean of at least three runs.

5.1.3. Hardware and Software

Our experiments were carried out in Python 3.11 with PyTorch 2.5.1 and CUDA 11.8 on an Ubuntu 20.04 machine equipped with a Tesla V100 GPU (NVIDIA, Santa Clara, CA, USA).

5.2. Experimental Results

The experiment evaluated the performance of self-correction reflection by measuring the semantic divergence between the perturbed and original texts. We quantified this divergence using Model-Specific Cosine Similarity (MSCS), computed with each model and its own tokenizer, where a lower MSCS indicates a stronger input perturbation from the model’s perspective. Additionally, we adopted accuracy (ACC) to assess the impact of perturbations and the improvement after self-correction.

As shown in Table 4, MSCS (Equation (11)) consistently decreases as the perturbation level increases across all evaluated language models. This shows that more severe perturbations lead to greater semantic divergence between the modified text and the original version, confirming the MSCS metric’s effectiveness in quantifying semantic degradation. For unmodified texts (0% perturbation), all models maintain MSCS scores of 1.00: the two compared texts are identical, so their pooled vectors coincide, indicating no measurable semantic deviation. This baseline confirms that the observed decreases in similarity scores result directly from the introduced perturbations rather than from any inherent model limitations. The consistent inverse relationship between perturbation intensity and MSCS scores across all models and datasets demonstrates how textual modifications progressively degrade semantic fidelity. These findings highlight both the sensitivity of current language models to various types of perturbations and the utility of MSCS as a robust metric for quantifying these effects.

Table 4.

Response MSCS among different perturbations (SwapAlpha, SwapWord, CaseAlpha) of different levels (0%, 20%, 50%, 100%) across four datasets (MMLU, LogiQA-en, LSAT, and SAT-en) using four LLMs (GLM4, Llama3.1, Qwen2.5, and Falcon3-Mamba).

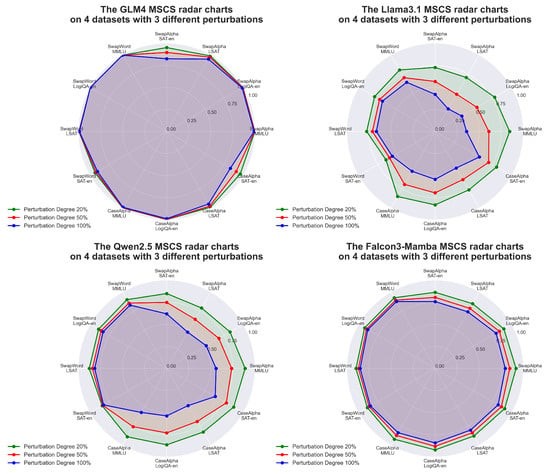

In Figure 5, the radar charts of the four LLMs show the degree of impact at different levels of perturbation. Under the same perturbation type for the same LLM, the magnitude of the change in the MSCS value varies at different perturbation levels. The magnitude of MSCS’s change indicates how the specified perturbation impacts the semantic consistency of the text from the perspective of the tokenizer. A larger change in the MSCS value indicates that the perturbation causes a greater semantic difference between the original text and the perturbed text, indicating a greater impact on the LLM. This in turn may cause a larger impact on ACC, highlighting that different perturbations affect the performance of the LLM to different degrees.

Figure 5.

The MSCS variations are visualized via radar charts under three perturbation levels (20%, 50%, 100%) for each LLM, showing how semantic similarity changes across perturbation types along 12 dimensions (4 datasets × 3 perturbation types).

Comparing Table 4 with Tables 5 and 6 highlights the advantages of MSCS as a comprehensive metric for evaluating the robustness of LLMs to text perturbations, surpassing traditional metrics such as BLEU-1 and BERTScore in granularity and in the ability to evaluate perturbations. Table 4 shows that MSCS can quantify, with high sensitivity, how far each model’s semantic understanding deviates under different perturbations. BLEU-1 (Table 5) and BERTScore (Table 6) evaluate only superficial text similarity and cannot distinguish how different models understand the same text. Notably, BLEU-1 and BERTScore fail to effectively evaluate SwapWord perturbations, which exposes their shortcomings in handling perturbations that change word order. By explicitly showing how perturbations affect each model’s understanding, rather than merely evaluating lexical correspondences, MSCS provides a rigorous methodological framework for accurately assessing the impact of perturbations on model reasoning capabilities.

Table 5.

Response BLEU-1 score among different perturbations (SwapAlpha, SwapWord, CaseAlpha) of different levels (0%, 20%, 50%, 100%) across four datasets (MMLU, LogiQA-en, LSAT, and SAT-en) using four LLMs (GLM4, Llama3.1, Qwen2.5, and Falcon3-Mamba).

Table 6.

Response BERTScore among different perturbations (SwapAlpha, SwapWord, CaseAlpha) of different levels (0%, 20%, 50%, 100%) across four datasets (MMLU, LogiQA-en, LSAT, and SAT-en) using four LLMs (GLM4, Llama3.1, Qwen2.5, and Falcon3-Mamba).

As presented in Table 7, the models perform differently across datasets and perturbations, likely owing to their distinct training processes, architectures, and prior knowledge, which influence how well they handle degraded input. Llama3.1 is notably vulnerable, particularly on LogiQA-en, where ACC decreases from 39.50% to 31.81% (SwapAlpha 100%), 28.30% (SwapWord 100%), and 37.00% (CaseAlpha 100%). Qwen2.5 consistently outperforms GLM4, Llama3.1, and Falcon3-Mamba on all datasets at all three perturbation levels.

Table 7.

Response ACC among different perturbations (SwapAlpha, SwapWord, CaseAlpha) of different levels (0%, 20%, 50%, 100%) across four datasets (MMLU, LogiQA-en, LSAT, and SAT-en) using four LLMs (GLM4, Llama3.1, Qwen2.5, and Falcon3-Mamba). The subscript represents the variance in accuracy (%).

Table 8 indicates that Qwen2.5 is the most robust model under SwapAlpha and CaseAlpha perturbations: its decrease in ACC remains small at all perturbation levels, with an average decrease of 9.01% under SwapAlpha and 4.07% under CaseAlpha. This suggests that Qwen2.5 is the least sensitive model to these two perturbation types. In contrast, under SwapWord perturbations Falcon3-Mamba shows an average ACC decline of only 20.03%, considerably smaller than the declines of the other models, indicating that Falcon3-Mamba is comparatively robust to this perturbation type. The consistent degradation of ACC across all models supports the view that perturbations impair performance by increasing the semantic divergence between the original and perturbed inputs, making predictions increasingly error-prone in the absence of a corrective mechanism. Overall, robustness depends on both the model and the perturbation type, highlighting the influence of model architecture on perturbation robustness.
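The change rates in Table 8 and the normalized curves in Figure 6 can be reproduced for a single cell with simple arithmetic. The sketch below assumes that the change rate is the relative change with respect to the 0% baseline, (ACC_perturbed − ACC_clean) / ACC_clean × 100, and that the per-model averages reported above are means of such rates over datasets and levels; both are assumptions about how the tables were computed. It uses the Llama3.1 LogiQA-en values quoted in the discussion of Table 7.

```python
def change_rate(acc_perturbed: float, acc_clean: float) -> float:
    """Relative ACC change in percent; negative values are declines (assumed definition)."""
    return (acc_perturbed - acc_clean) / acc_clean * 100.0

def normalized_acc(acc_perturbed: float, acc_clean: float) -> float:
    """ACC relative to the 0% baseline mapped to 100, as in the Figure 6 line charts."""
    return acc_perturbed / acc_clean * 100.0

# Llama3.1 on LogiQA-en, values quoted in the discussion of Table 7.
clean_acc = 39.50
acc_at_100 = {"SwapAlpha": 31.81, "SwapWord": 28.30, "CaseAlpha": 37.00}

for name, acc in acc_at_100.items():
    print(f"{name}: {change_rate(acc, clean_acc):+.2f}% "
          f"(normalized {normalized_acc(acc, clean_acc):.2f})")
```

This prints −19.47%, −28.35%, and −6.33% (normalized 80.53, 71.65, and 93.67), so for this model and dataset SwapWord is the most damaging perturbation and CaseAlpha the least. The normalized value is always 100 plus the change rate, which is why the Figure 6 curves and the Table 8 rates carry the same information.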

Table 8.

Response ACC change rates (%) among different perturbations (SwapAlpha, SwapWord, CaseAlpha) of different levels (20%, 50%, 100%) across four datasets using four LLMs.

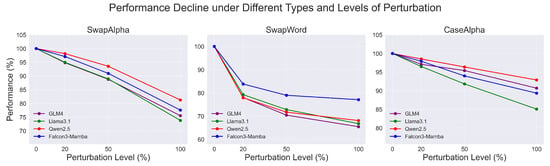

Figure 6 presents line charts of the performance decline trends of the LLMs under increasing perturbation levels, showing how each model’s accuracy changes for three perturbation types across four datasets. Each line represents the average performance of one model, with the baseline (0% perturbation) normalized to 100%. Qwen2.5 remains relatively stable, with minimal decline under SwapAlpha and CaseAlpha perturbations, suggesting that it is robust to these perturbation types. Conversely, Falcon3-Mamba shows the smallest decline under SwapWord perturbations, indicating higher robustness to that specific perturbation type. These trends show that each model’s sensitivity depends on the perturbation type, highlighting the impact of model architecture on robustness.

Figure 6.

Line charts visualize the performance decline trends of different LLMs under 4 perturbation levels (0%, 20%, 50%, 100%). The plots demonstrate how LLMs’ ACC changes across three perturbation types (SwapAlpha, SwapWord, and CaseAlpha) on 4 datasets (MMLU, LogiQA-en, LSAT, and SAT-en). Each line represents one LLM’s average performance decline, with the baseline performance (0% perturbation) normalized to 100%.

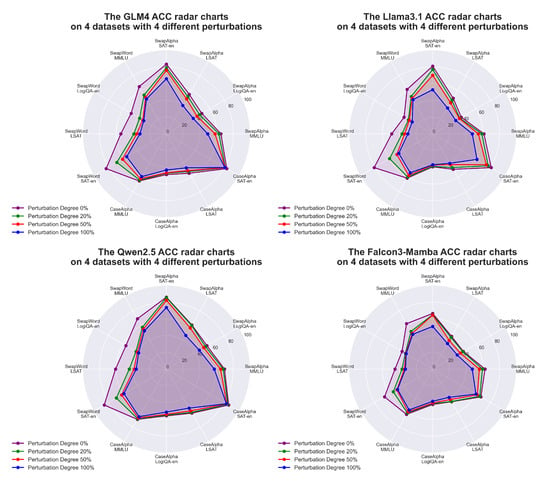

Figure 7 presents radar charts comparing the ACC of each LLM under different perturbation levels across datasets. A larger radar chart area corresponds to higher overall ACC, i.e., better model performance. All four models show a consistent trend: the radar chart area shrinks markedly as the perturbation level increases. Qwen2.5 has a noticeably larger area at every perturbation level, indicating superior performance. GLM4 is the second-best performer, while Llama3.1 and Falcon3-Mamba perform relatively poorly, with substantially smaller radar chart areas.

Figure 7.

The ACC variations are visualized via radar charts under 4 perturbation levels (0%, 20%, 50%, 100%) for each LLM. Each chart has 12 axes, one for each combination of the 4 datasets and 3 perturbation types, and shows how the LLM’s robustness changes across these scenarios.
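Figures 7 and 8 use radar chart area as a visual proxy for overall ACC. The sketch below computes that area for any set of scores on equally spaced axes; it is a general geometric property of radar plots, not a description of how these figures were produced. Each pair of adjacent axes encloses a triangle of area r_i · r_{i+1} · sin(2π/n) / 2, so the total area depends on products of neighbouring values: it grows quadratically with the scores and changes with the order of the axes.

```python
import math
from typing import Sequence

def radar_area(values: Sequence[float]) -> float:
    """Area of the polygon a radar chart draws for `values` on equally spaced axes.

    Adjacent axes i and i+1 enclose a triangle of area
    r_i * r_{i+1} * sin(2*pi/n) / 2; the polygon area is the sum of these triangles.
    """
    n = len(values)
    if n < 3:
        raise ValueError("a radar polygon needs at least 3 axes")
    half_sin = math.sin(2 * math.pi / n) / 2
    return half_sin * sum(values[i] * values[(i + 1) % n] for i in range(n))

# Same four scores in two axis orders: the mean is identical, the area is not.
print(radar_area([0.8, 0.8, 0.2, 0.2]))  # about 0.50
print(radar_area([0.8, 0.2, 0.8, 0.2]))  # about 0.32
```

Area comparisons are therefore most meaningful between charts that share the same axis order, as within a single figure; a plain mean of the axis values is an order-independent complement when ranking models.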

Figure 8 presents radar charts comparing the ACC of the four LLMs across datasets and perturbation levels; larger areas indicate higher ACC. All models follow a consistent trend: ACC is lower on LSAT and LogiQA-en and higher on SAT-en and MMLU. Qwen2.5 consistently has markedly larger areas across all datasets and perturbation levels, indicating a strong ability to solve perturbed tasks. Notably, although Falcon3-Mamba performs relatively poorly overall, the areas it forms at different perturbation levels are very similar in size and shape. This suggests that Falcon3-Mamba is less affected by perturbations, i.e., it is relatively robust despite its lower absolute accuracy.

Figure 8.

Illustration of ACC performance under four perturbation intensities (0%, 20%, 50%, 100%), showing how different LLMs maintain their robustness across various test scenarios.

As shown in Table 4 and Table 7, perturbations substantially reduce both MSCS and ACC, and the reduction grows with perturbation strength. For the same model and perturbation type, the reduction in MSCS also tracks the decrease in ACC. For instance, for GLM4 the MSCS reduction on SAT-en (from 1 to 0.9506, 0.8956, and 0.8243 under 20%, 50%, and 100% SwapAlpha perturbation, respectively) is notably greater than on other datasets (e.g., on LogiQA-en, MSCS decreases from 1 to only 0.9984, 0.9955, and 0.9874 at the same perturbation levels).
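To make “notably greater” concrete, the GLM4 values quoted above can be converted into percentage drops from the unperturbed baseline (MSCS = 1). This is plain arithmetic on the numbers in the text; `mscs_drop_pct` is a hypothetical helper name.

```python
from typing import Dict, List

def mscs_drop_pct(scores: List[float], baseline: float = 1.0) -> List[float]:
    """Percentage drop of each MSCS value relative to the unperturbed baseline."""
    return [(baseline - s) / baseline * 100.0 for s in scores]

# GLM4 under 20%, 50%, and 100% SwapAlpha perturbation (values quoted in the text).
glm4_swapalpha: Dict[str, List[float]] = {
    "SAT-en": [0.9506, 0.8956, 0.8243],
    "LogiQA-en": [0.9984, 0.9955, 0.9874],
}

drops = {name: mscs_drop_pct(vals) for name, vals in glm4_swapalpha.items()}
for name, d in drops.items():
    print(name, [round(x, 2) for x in d])
print("ratio at 100%:", round(drops["SAT-en"][-1] / drops["LogiQA-en"][-1], 1))
```

The drops are 4.94%, 10.44%, and 17.57% on SAT-en versus 0.16%, 0.45%, and 1.26% on LogiQA-en, so at 100% perturbation the SAT-en drop is roughly 14 times larger.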

We believe that comparing the correlation between MSCS and ACC for the same model across different types of perturbation is not meaningful. Within a single perturbation type, MSCS reductions are of the same kind, so for a given model a lower MSCS under that perturbation type typically corresponds to a lower ACC. Across perturbation types, however, a reduction in MSCS does not always lead to a corresponding reduction in ACC. Consider the questions “What is the last letter of the word ‘One’?” and “What is the last letter of the word ‘Apple’?” Although MSCS registers a clear semantic difference between them, both have the same answer (“e”), so the model’s answers, as evaluated by ACC, remain the same. A perturbation can therefore cause a significant drop in MSCS while ACC remains nearly unchanged.

To mitigate input perturbations, we incorporated self-correction reflection so that the models can respond more accurately to perturbed input, and then re-evaluated model performance with this method.

Self-correction reflection improves MSCS to varying degrees across perturbation types and models, reflecting better alignment between each model’s understanding of the perturbed input and its original meaning, i.e., more accurate semantic restoration. As shown in Table 9, for the SwapAlpha perturbation, Llama3.1 shows the largest semantic correction, with MSCS increasing by +32.68% on MMLU and +27.35% on LSAT, reflecting its improved ability to generate corrections semantically closer to the original text. For the CaseAlpha perturbation, most models achieve some degree of semantic correction, with Llama3.1 again leading at +15.92% on MMLU and +15.14% on LSAT, highlighting the model’s partial success in restoring the original capitalization. Overall, SwapAlpha shows the highest mean MSCS improvement after correction (+10.88%), followed by CaseAlpha (+8.54%) and SwapWord (+1.33%).

Table 9.

Response MSCS comparison before and after correction for three types of perturbations (SwapAlpha, SwapWord, CaseAlpha) at 100% intensity across four datasets (MMLU, LogiQA-en, LSAT, and SAT-en) using four LLMs (GLM4, Llama3.1, Qwen2.5, and Falcon3-Mamba). For each perturbation type, the table presents the MSCS values before correction (“Before”), after correction (“After”), and the improvement percentage (“Improv.”).

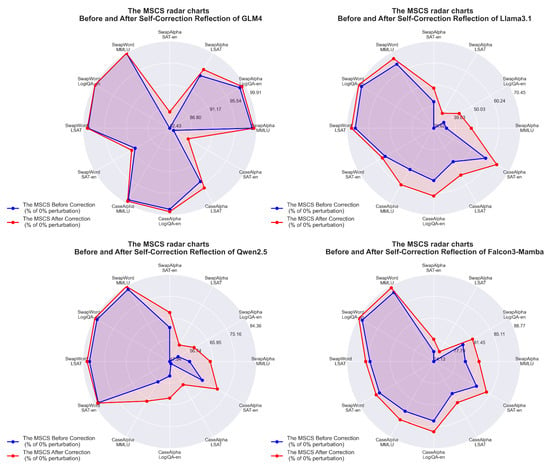

As shown in Figure 9, self-correction reflection substantially enhances the LLMs’ understanding of perturbed questions. The trend is consistent across all LLMs and datasets: the radar chart area increases after self-correction reflection in every chart. The expansion is particularly pronounced for Llama3.1 and Qwen2.5, indicating the largest gains in comprehending perturbed text. These MSCS improvements indicate that self-correction helps the models recover the intended meaning of noisy inputs, which in turn supports more accurate outputs.

Figure 9.

Illustration of MSCS improvement through self-correction reflection, comparing the semantic recovery before and after correction for each LLM. The radar chart plots normalized MSCS percentages (before/after correction relative to 0% perturbation MSCS) on axes representing distinct perturbation scenarios.

Self-correction reflection also improves ACC across perturbation types, as shown in Table 10, indicating that the models handle perturbed inputs better once they work from corrected representations. Among perturbation types, SwapAlpha shows the highest average improvement (+10.22%), well above CaseAlpha (+3.35%) and SwapWord (+2.89%), suggesting that self-correction reflection is particularly effective against character-level swap perturbations. The ACC improvements across all models are consistent with self-correction enabling better performance by letting the model process corrected input rather than noise-degraded input.

Table 10.

Response ACC comparison before and after correction for three types of perturbations (SwapAlpha, SwapWord, CaseAlpha) at 100% intensity across four datasets (MMLU, LogiQA-en, LSAT, and SAT-en) using four LLMs (GLM4, Llama3.1, Qwen2.5, and Falcon3-Mamba). For each perturbation type, the table presents the ACC values before correction (“Before”), after correction (“After”), and the improvement percentage (“Improv.”).

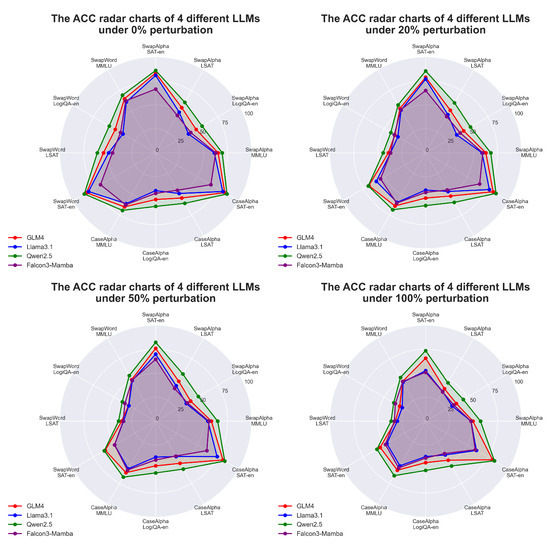

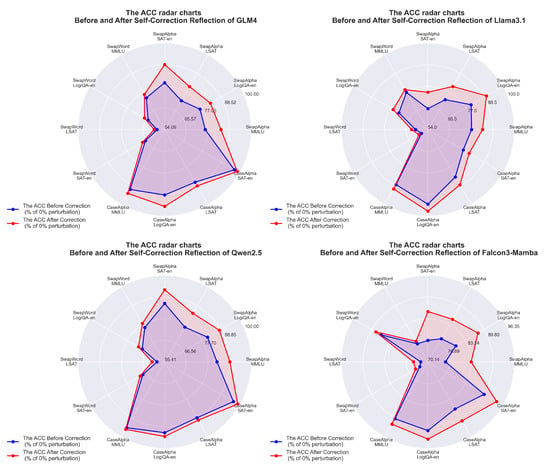

As shown in Figure 10, self-correction reflection increases the radar chart area for every model, with improvements visible in each individual chart. The charts also show that SwapAlpha perturbations consistently yield larger improvements than SwapWord and CaseAlpha perturbations, in line with the average improvement rates in Table 10. Overall, the effectiveness of self-correction reflection depends on the perturbation type: the gain is largest for SwapAlpha (+10.22%), followed by CaseAlpha (+3.35%) and SwapWord (+2.89%).

Figure 10.

Illustration of ACC enhancement through self-correction reflection, displaying the performance improvement for each LLM across different perturbation types. The radar chart plots normalized ACC percentages (before/after correction relative to 0% perturbation ACC) on axes representing distinct perturbation scenarios.

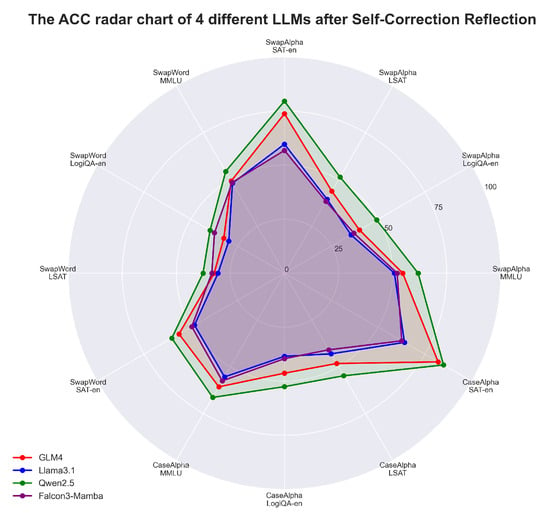

Figure 11 compares the performance of the different model architectures after self-correction reflection. Qwen2.5 consistently outperforms the other models, covering the largest area across all datasets and perturbation types, and thus shows the strongest robustness and accuracy. GLM4, while less robust than Qwen2.5, achieves the second-best performance, indicating a relatively strong ability to handle perturbations after self-correction reflection. Despite the improvements brought by self-correction reflection, substantial performance differences remain among the models. These differences likely stem from variations in underlying architecture, training objectives, and training data, which influence each model’s ability to generalize and adapt to perturbations. This suggests that self-correction reflection, while beneficial, cannot fully eliminate the inherent limitations imposed by model design and prior knowledge.

Figure 11.

Comparison of ACC among LLMs after self-correction reflection, revealing their relative strengths across different datasets and perturbation types.

To assess the effectiveness of our proposed self-correction reflection, we also used the existing CTC and TextBlob correction methods mentioned in Section 2 as baselines for comparison. Table 11 and Table 12 show that self-correction reflection consistently outperforms both baselines in terms of MSCS and ACC improvements. These results highlight the effectiveness of the self-correction reflection method under input perturbations.

Table 11.

Response MSCS comparison after correction for three types of perturbations (SwapAlpha, SwapWord, CaseAlpha) at 100% intensity across four datasets (MMLU, LogiQA-en, LSAT, and SAT-en) using four LLMs (GLM4, Llama3.1, Qwen2.5, and Falcon3-Mamba). For each perturbation type, the table presents the MSCS values after CTC Detection (CTC), TextBlob, and the self-correction reflection (Ours).

Table 12.

Response ACC comparison after correction for three types of perturbations (SwapAlpha, SwapWord, CaseAlpha) at 100% intensity across four datasets (MMLU, LogiQA-en, LSAT, and SAT-en) using four LLMs (GLM4, Llama3.1, Qwen2.5, and Falcon3-Mamba). For each perturbation type, the table presents the ACC values after CTC Detection (CTC), TextBlob, and the self-correction reflection (Ours).

With self-correction reflection, the models improved not only in semantic recovery (MSCS) but also in task accuracy (ACC), yielding more stable and reliable performance on practical tasks. This indicates that self-correction reflection is an effective way to enhance LLM performance on perturbed inputs, with broad potential for application and further research.

5.3. Ablation Study

To validate the importance of the consistency principle in our self-correction reflection framework, we conducted targeted ablation experiments comparing the full self-correction reflection with a modified version that removes the Historical Enhancement via Consistency Principle phase (HECP). In the ablated variant, the consistency principle is not applied: after the perturbation is corrected, the historical information of the original text is not retained, so any semantic information of the original text lost during correction cannot be recovered. This ablation therefore isolates the contribution of HECP to the overall method.
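The difference between the two ablation arms can be illustrated with a two-pass prompting sketch. Everything here is hypothetical: the prompt wording, the `generate` callable (prompt in, model output out), and the choice to realize HECP by keeping the original input next to the correction are assumptions for illustration; the paper’s actual prompts and conversation structure are defined in its method section and may differ.

```python
from typing import Callable, List

Generate = Callable[[str], str]  # hypothetical: prompt in, model output out

def self_correct_answer(question: str, generate: Generate, use_hecp: bool = True) -> str:
    """Two-pass sketch: correct the perturbed input, then answer (hypothetical prompts)."""
    # Pass 1: ask the model to restore the perturbed text without answering it.
    corrected = generate(
        "The following text may contain swapped letters, swapped words, or odd casing. "
        "Rewrite it correctly without answering it:\n" + question
    )
    if use_hecp:
        # Full method (assumed form): keep the original input next to the correction
        # so the model can check both for consistency and recover lost information.
        prompt = (f"Original input: {question}\n"
                  f"Corrected input: {corrected}\n"
                  "Answer the question, keeping consistent with both versions.")
    else:
        # Ablated variant (w/o HECP): only the corrected text is passed on.
        prompt = f"Input: {corrected}\nAnswer the question."
    return generate(prompt)

# Usage with a stub in place of a real LLM call.
seen_prompts: List[str] = []

def stub_generate(prompt: str) -> str:
    """Records every prompt and returns canned text instead of calling a model."""
    seen_prompts.append(prompt)
    return "Have a nice day." if prompt.startswith("The following") else "(answer)"

answer = self_correct_answer("Hvae a ncie day.", stub_generate)
print(seen_prompts[-1])
```

In this sketch the HECP prompt carries the original input in addition to the correction, so its length grows with the user’s input; that is the kind of context expansion behind the memory overhead reported in Table 15.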

The ablation results in Table 13 and Table 14 show that removing HECP leads to a consistent performance drop in all evaluation scenarios, confirming the need to preserve historical semantic information during the self-correction process. For MSCS, the full method (Ours) outperforms the ablated variant (w/o HECP) across perturbation types, suggesting that HECP mitigates error propagation caused by semantic drift. For ACC, all models show the same trend, especially on datasets that demand strong reasoning such as LogiQA-en and LSAT, highlighting the role of HECP in preserving task-specific knowledge during perturbation recovery. Notably, the performance gap is larger for CaseAlpha and SwapAlpha than for SwapWord, suggesting that HECP is critical for maintaining contextual semantic consistency when the perturbation, and hence its correction, alters the word forms themselves. Together, these findings confirm that the consistency principle enforced by history augmentation is essential for robust self-correction under semantic perturbations.

Table 13.

Response MSCS comparison of the self-correction reflection (Ours) with the ablated variant (w/o HECP) across three perturbation types at 100% intensity, evaluated on four datasets using four LLMs.

Table 14.

Response ACC comparison of the self-correction reflection (Ours) with the ablated variant (w/o HECP) across three perturbation types at 100% intensity, evaluated on four datasets using four LLMs.

We additionally analyze the GPU memory consumption of both versions to verify computational feasibility. Table 15 shows that the full method incurs slightly higher memory usage (on average +6.18% across models) due to history retention, but the absolute increase (0.77–1.2 GB) is small compared with the base models’ memory footprints (16.34–18.55 GB). This confirms that the main memory burden comes from the base model parameters rather than from our augmentation mechanism.

Table 15.

Response GPU memory usage (in GB) of the self-correction reflection (Ours) with the ablated variant (w/o HECP) evaluated on four datasets using four LLMs.

6. Discussion

The experimental results indicate that although LLMs suffer performance declines under various perturbations, the self-correction reflection method substantially improves their robustness and accuracy. In particular, for the SwapAlpha perturbation, self-correction reflection yields an average MSCS improvement of 10.88% and an average ACC improvement of 10.22% across all models.

The self-correction reflection method proposed in this study offers a promising way to improve the robustness of LLM responses. By simulating the sequential steps the brain uses to correct perturbed input, it effectively mitigates the loss of accuracy caused by input perturbations. This design is consistent with neurological and psychological research on how humans process perturbed input, and the experimental results show that integrating self-correction reflection significantly improves model performance under input perturbation.

We did not include generation tasks in our evaluation of LLMs because assessing output quality is often difficult: LLMs can generate highly creative or high-quality text that is hard to evaluate without reference outputs. According to [45], these challenges can impair effective assessment. We therefore opted for classification tasks with clear answers. Future work may extend the evaluation with improved methods for assessing generation tasks.

Despite these advances, our results also highlight limitations of the current approach. While self-correction reflection improves the model’s ability to handle input perturbations, it also increases energy consumption, because the consistency principle expands the context input. The additional context grows with the length of the user’s input text, leading to extra memory overhead, including GPU memory, for data processing. However, this overhead is small (on average +6.18% across models, or an absolute increase of 0.77–1.2 GB) compared with the memory consumed by the huge number of LLM parameters during operation (16.34–18.55 GB).

7. Conclusions

This study draws on neuroscience and psychology research into how humans handle input perturbations, offering a novel perspective for the development of LLM technology. By systematically analyzing the limitations of tokenization and introducing the self-correction reflection method inspired by human cognition, we provide a way to mitigate the impact of input perturbations on LLMs.

The experimental results validate the efficacy of self-correction reflection, showing improved performance across multiple models and datasets. Across models and perturbation types, self-correction reflection improves ACC by an average of 5.49% (equal to the mean of the per-type averages of +10.22%, +3.35%, and +2.89%). However, this study also underscores the need for further innovation in tokenization techniques and architectural design to achieve truly human-like cognitive robustness. To our knowledge, this is the first work to integrate predictive coding and the noisy channel model to achieve cognition-inspired robustness.

Future work could focus on integrating dynamic correction strategies within the tokenization process itself, as well as exploring cross-disciplinary approaches that combine advances in artificial intelligence with deeper insights from neuroscience, psychology, and other relevant disciplines. By continuing to refine the interplay between human-inspired mechanisms and machine learning architectures, we can pave the way for more reliable, adaptable, and intelligent language models capable of thriving in real-world applications.

Author Contributions

Conceptualization, H.H. and H.W.; methodology, H.H., Y.W. and H.W.; software, H.H., J.M., Z.Y., Y.W., J.L. and Y.L.; validation, H.H., J.M. and Z.Y.; formal analysis, H.W., H.H. and L.S.; curation, H.H.; writing—original draft preparation, H.H., H.W., L.S., M.P. and X.B.; writing—review and editing, H.W., H.H., J.M., Z.Y., L.S., M.P. and X.B.; visualization, H.W., H.H., J.M. and Z.Y.; supervision, Y.W., Y.L., J.L., M.P. and X.B.; project administration, Y.W., Y.L., J.L., X.B. and M.P.; funding acquisition, L.S., X.B. and M.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (Project No. 62202164, 62301220), the Fundamental Research Funds for the Central Universities (Project No. 2023MS033), and the Beijing Key Laboratory Program (Project No. 2023BJ0263).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank the High-performance Computing Platform of North China Electric Power University and Beijing Anxin Yiwei Technology Co., Ltd, for assistance with computation resources related to this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sun, K.; Wang, R. Computational Sentence-level Metrics Predicting Human Sentence Comprehension. arXiv 2024, arXiv:2403.15822. [Google Scholar]

- Salinas, A.; Morstatter, F. The Butterfly Effect of Altering Prompts: How Small Changes and Jailbreaks Affect Large Language Model Performance. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 4629–4651. [Google Scholar] [CrossRef]

- AlKhamissi, B.; Tuckute, G.; Bosselut, A.; Schrimpf, M. Brain-like language processing via a shallow untrained multihead attention network. arXiv 2024, arXiv:2406.15109. [Google Scholar]

- Goldstein, A.; Ham, E.; Nastase, S.A.; Zada, Z.; Grinstein-Dabus, A.; Aubrey, B.; Schain, M.; Gazula, H.; Feder, A.; Doyle, W.; et al. Correspondence between the layered structure of deep language models and temporal structure of natural language processing in the human brain. BioRxiv 2022. [Google Scholar] [CrossRef]

- Hickok, G. Computational neuroanatomy of speech production. Nat. Rev. Neurosci. 2012, 13, 135–145. [Google Scholar] [CrossRef] [PubMed]

- Mizuno, A.; Ly, M.; Aizenstein, H.J. A Homeostatic Model of Subjective Cognitive Decline. Brain Sci. 2018, 8, 228. [Google Scholar] [CrossRef] [PubMed]

- Cao, Q.; Kojima, T.; Matsuo, Y.; Iwasawa, Y. Unnatural error correction: Gpt-4 can almost perfectly handle unnatural scrambled text. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 8898–8913. [Google Scholar]

- Wu, H.; Hong, H.; Sun, L.; Bai, X.; Pu, M. Harnessing Response Consistency for Superior LLM Performance: The Promise and Peril of Answer-Augmented Prompting. Electronics 2024, 13, 4581. [Google Scholar] [CrossRef]

- Qiu, J.; Han, W.; Zhu, J.; Xu, M.; Weber, D.; Li, B.; Zhao, D. Can brain signals reveal inner alignment with human languages? In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 1789–1804. [Google Scholar]

- Jiang, J.; Benhamou, E.; Waters, S.; Johnson, J.C.; Volkmer, A.; Weil, R.S.; Marshall, C.R.; Warren, J.D.; Hardy, C.J. Processing of degraded speech in brain disorders. Brain Sci. 2021, 11, 394. [Google Scholar] [CrossRef]

- Janzen, G.; Haun, D.B.; Levinson, S.C. Tracking down abstract linguistic meaning: Neural correlates of spatial frame of reference ambiguities in language. PLoS ONE 2012, 7, e30657. [Google Scholar] [CrossRef]

- Zeki, S.; Hulme, O.J.; Roulston, B.; Atiyah, M. The encoding of temporally irregular and regular visual patterns in the human brain. PLoS ONE 2008, 3, e2180. [Google Scholar] [CrossRef]

- Wang, X.; Zhu, Z. Context understanding in computer vision: A survey. Comput. Vis. Image Underst. 2023, 229, 103646. [Google Scholar] [CrossRef]

- Seijdel, N.; Stolwijk, G.; Janicas, B.; Snell, J.; Meeter, M. Explaining the Sentence Superiority Effect and N400s Elicited by Words and Short Sentences with OB1-Reader. J. Cogn. 2024, 7, 34. [Google Scholar] [CrossRef] [PubMed]

- Zlokapa, A.; Tan, A.K.; Martyn, J.M.; Fiete, I.R.; Tegmark, M.; Chuang, I.L. Fault-tolerant neural networks from biological error correction codes. Phys. Rev. E 2024, 110, 054303. [Google Scholar] [CrossRef] [PubMed]

- Chai, Y.; Fang, Y.; Peng, Q.; Li, X. Tokenization Falling Short: The Curse of Tokenization. arXiv 2024, arXiv:2406.11687. [Google Scholar]

- Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A comprehensive overview of large language models. arXiv 2023, arXiv:2307.06435. [Google Scholar]

- Gu, A.; Dao, T. Mamba: Linear-Time Sequence Modeling with Selective State Spaces. arXiv 2023, arXiv:2312.00752. [Google Scholar]

- Dao, T.; Gu, A. Transformers are SSMs: Generalized Models and Efficient Algorithms Through Structured State Space Duality. In Proceedings of the International Conference on Machine Learning (ICML), Vienna, Austria, 21–27 July 2024. [Google Scholar]

- Peng, B.; Alcaide, E.; Anthony, Q.; Albalak, A.; Arcadinho, S.; Biderman, S.; Cao, H.; Cheng, X.; Chung, M.; Derczynski, L.; et al. RWKV: Reinventing RNNs for the Transformer Era. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 14048–14077. [Google Scholar] [CrossRef]

- Bast, H.; Hertel, M.; Mohamed, M.M. Tokenization Repair in the Presence of Spelling Errors. In Proceedings of the 25th Conference on Computational Natural Language Learning, Online, 10–11 November 2021; pp. 279–289. [Google Scholar] [CrossRef]

- Gupta, A.; Blum, C.; Choji, T.; Fei, Y.; Shah, S.; Vempala, A.; Srikumar, V. Don’t Retrain, Just Rewrite: Countering Adversarial Perturbations by Rewriting Text. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 13981–13998. [Google Scholar] [CrossRef]

- AlSobeh, A.; Shatnawi, A.; Al-Ahmad, B.; Aljmal, A.; Khamaiseh, S. AI-Powered AOP: Enhancing Runtime Monitoring with Large Language Models and Statistical Learning. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 121–133. [Google Scholar] [CrossRef]

- Frisbier, G.L.; Darwish, O.; Alsobeh, A.; Al-shorman, A. Identifying the Origins of Business Data Breaches Through CTC Detection. In Network and System Security, Proceedings of the 18th International Conference, NSS 2024, Abu Dhabi, United Arab Emirates, 20–22 November 2024; Song, H.H., Di Pietro, R., Alrabaee, S., Tubishat, M., Al-kfairy, M., Alfandi, O., Eds.; Springer: Singapore, 2025; pp. 387–406. [Google Scholar]

- Alshattnawi, S.; Shatnawi, A.; AlSobeh, A.M.; Magableh, A.A. Beyond Word-Based Model Embeddings: Contextualized Representations for Enhanced Social Media Spam Detection. Appl. Sci. 2024, 14, 2254. [Google Scholar] [CrossRef]

- Hasan, M.R.; Ferdous, J. Dominance of AI and Machine Learning Techniques in Hybrid Movie Recommendation System Applying Text-to-number Conversion and Cosine Similarity Approaches. J. Comput. Sci. Technol. Stud. 2024, 6, 94–102. [Google Scholar] [CrossRef]

- Pal, S.; Chang, M.; Iriarte, M.F. Summary generation using natural language processing techniques and cosine similarity. In Proceedings of the International Conference on Intelligent Systems Design and Applications, Online, 13–15 December 2021; Springer: Cham, Switzerland, 2021; pp. 508–517. [Google Scholar]

- Qwen Team. Qwen2.5: A Party of Foundation Models. arXiv 2024, arXiv:2412.15115.

- Yang, A.; Yang, B.; Hui, B.; Zheng, B.; Yu, B.; Zhou, C.; Li, C.; Li, C.; Liu, D.; Huang, F.; et al. Qwen2 Technical Report. arXiv 2024, arXiv:2407.10671. [Google Scholar]

- GLM, T.; Zeng, A.; Xu, B.; Wang, B.; Zhang, C.; Yin, D.; Zhang, D.; Rojas, D.; Feng, G.; Zhao, H.; et al. Chatglm: A family of large language models from glm-130b to glm-4 all tools. arXiv 2024, arXiv:2406.12793. [Google Scholar]

- Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Yang, A.; Fan, A.; et al. The llama 3 herd of models. arXiv 2024, arXiv:2407.21783. [Google Scholar]

- Zuo, J.; Velikanov, M.; Rhaiem, D.E.; Chahed, I.; Belkada, Y.; Kunsch, G.; Hacid, H. Falcon mamba: The first competitive attention-free 7b language model. arXiv 2024, arXiv:2410.05355. [Google Scholar]

- Zhang, J.; Ren, X. The Application and Comparison of Artificial Intelligence LLMs in Psychological Statistical Analysis. In Proceedings of the 2024 2nd International Conference on Internet of Things and Cloud Computing Technology, Paris, France, 27–29 September 2024; pp. 8–13. [Google Scholar]

- Wang, M.; Wei, J.; Zeng, Y.; Dai, L.; Yan, B.; Zhu, Y.; Wei, X.; Jin, Y.; Li, Y. Precision Structuring of Free-Text Surgical Record for Enhanced Stroke Management: A Comparative Evaluation of Large Language Models. J. Multidiscip. Healthc. 2024, 17, 5163–5175. [Google Scholar] [CrossRef] [PubMed]

- Hendrycks, D.; Burns, C.; Basart, S.; Zou, A.; Mazeika, M.; Song, D.; Steinhardt, J. Measuring Massive Multitask Language Understanding. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021. [Google Scholar]

- Zhong, W.; Cui, R.; Guo, Y.; Liang, Y.; Lu, S.; Wang, Y.; Saied, A.; Chen, W.; Duan, N. AGIEval: A Human-Centric Benchmark for Evaluating Foundation Models. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2024, Mexico City, Mexico, 16–21 June 2024; pp. 2299–2314.

- McDonald, D.; Papadopoulos, R.; Benningfield, L. Reducing LLM hallucination using knowledge distillation: A case study with Mistral Large and MMLU benchmark. Authorea Preprints 2024.

- Liu, J.; Cui, L.; Liu, H.; Huang, D.; Wang, Y.; Zhang, Y. LogiQA: A challenge dataset for machine reading comprehension with logical reasoning. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, IJCAI’20, Yokohama, Japan, 7–15 January 2021.

- Wang, S.; Liu, Z.; Zhong, W.; Zhou, M.; Wei, Z.; Chen, Z.; Duan, N. From LSAT: The Progress and Challenges of Complex Reasoning. IEEE/ACM Trans. Audio Speech Lang. Proc. 2022, 30, 2201–2216.

- Zhang, B.; Zhou, K.; Wei, X.; Zhao, X.; Sha, J.; Wang, S.; Wen, J.R. Evaluating and improving tool-augmented computation-intensive math reasoning. Adv. Neural Inf. Process. Syst. 2024, 36, 23570–23589.

- Havelka, J.; Frankish, C. Is RoAsT tougher than StEAk?: The effect of case mixing on perception of multi-letter graphemes. Psihologija 2010, 43, 103–116.

- Friedmann, N.; Gvion, A. Letter form as a constraint for errors in neglect dyslexia and letter position dyslexia. Behav. Neurol. 2005, 16, 145–158.

- Grainger, J. Letters, Words, Sentences, and Reading. J. Cogn. 2024, 7, 66.

- Chiang, C.H.; Lee, H.y. Can Large Language Models Be an Alternative to Human Evaluations? In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 15607–15631.

- Pan, Q.; Ashktorab, Z.; Desmond, M.; Santillán Cooper, M.; Johnson, J.; Nair, R.; Daly, E.; Geyer, W. Human-Centered Design Recommendations for LLM-as-a-judge. In 1st Human-Centered Large Language Modeling Workshop, Proceedings of the ACL 2024, Bangkok, Thailand, 15 August 2024; Soni, N., Flek, L., Sharma, A., Yang, D., Hooker, S., Schwartz, H.A., Eds.; TBD: London, UK, 2024; pp. 16–29.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).