Abstract

Causal domain knowledge is commonly documented using natural language either in unstructured or semi-structured forms. This study aims to increase the usability of causal domain knowledge in industrial documents by transforming the information into a more structured format. The paper presents our work on developing automated methods for causal information extraction from real-world industrial documents in the semiconductor manufacturing industry, including presentation slides and FMEA (Failure Mode and Effects Analysis) documents. Specifically, we evaluate two types of causal information extraction methods: single-stage sequence tagging (SST) and multi-stage sequence tagging (MST). The presented case study showcases that the proposed MST methods for extracting causal information from industrial documents are suitable for practical applications, especially for semi-structured documents such as FMEAs, with a 93% F1 score. Additionally, the study shows that extracting causal information from presentation slides is more challenging. The study highlights the importance of choosing a language model that is more aligned with the domain and in-domain pre-training.

1. Introduction

Causal domain knowledge plays a vital role in various downstream tasks, such as risk assessment [1], root cause analysis [2], and data mining [3]. The smallest unit of causal domain knowledge is a causal relationship. Causal domain knowledge is commonly documented in natural language, in either unstructured or semi-structured form. In natural language, a causal relationship is commonly defined as a connection between two text spans where one text span acts as the cause or the effect of the other. A prime example of semi-structured documents is the tabular document typically used in Failure Mode and Effects Analysis (FMEA), a risk assessment tool. In FMEA, a multidisciplinary team often uses brainstorming methods to identify lists of failure modes and their possible root causes and potential effects. Furthermore, a significant amount of causal domain knowledge is also found in presentation slides, which do not follow a predefined structure and are typically used in industry for sharing information between teams and with customers.

In FMEA documents, the manually created textual content presents several challenges such as non-standardized descriptions of failure modes, effects, and root causes, and in many cases, merged cells. In the case of merged cells, the description of multiple concepts within a single FMEA table cell [4] can involve nested or enchained relations. In an enchained causal relation, a cause or an effect in a causal relation is the cause or the effect of another causal relation. The information contained in FMEA documents creates a complex network of interconnected events where each cause and effect is influenced by and influences other events in the chain. Any discrepancy in the description of causal information in FMEA documents would subsequently affect the usability of the resulting causal network.

Furthermore, the increasing use of digital presentation slides as a medium for presenting and sharing information has made them a valuable source of knowledge [5]. Presentation slides stemming from processes like failure analysis requests encapsulate documented causal relations. These relations shed light on failures detected in products, providing a comprehensive understanding of the factors contributing to such issues. However, the unstructured nature of presentation slides, which combine visual and textual elements and use spatial positioning to document information, can make automated information access difficult. While these features are useful for presenting information to a live audience, they can make it difficult for automated systems to extract and understand the information contained in the slides.

Presentation slides and FMEA documents are a rich source of causal domain knowledge. However, the sheer volume of documents in the industry can make it difficult to manually process and extract the information. This can lead to an increased product development cycle time, which can be detrimental to overall productivity. Therefore, automatically extracting causal domain knowledge from unstructured documents like presentation slides, as well as from semi-structured documents like FMEA documents, can be highly beneficial in increasing the availability and accessibility of this knowledge.

Scholars have been addressing the topic of causal information extraction from text by devising natural language processing (NLP) methods. Two common NLP approaches are lexical pattern-based methods [6] and statistical machine learning-based methods [7]. More recently, pre-trained transformer-based language models have been used to extract a meaningful representation of the text in a sequence tagging-based approach to causal information extraction [8,9,10]. Research also indicates that pre-training on domain-specific data can enhance the vector text representation by better capturing the nuances and complexities of the domain-specific language, which can improve the overall performance on downstream tasks [11]. However, to the best of our knowledge, the testing and adaptation of causal information extraction methods for industrial documents remain relatively limited. Furthermore, the challenges that such methods face with different document formats, such as presentation slides and tabular formats, and with domain-specific language in comparison to general language have not been explored comprehensively. Finally, streamlined causal information extraction methods tend to struggle with the detection of detailed causal relations, including nested and enchained relations [9,10]. Research in these areas could provide valuable insights and guidance for the development of information extraction methods for industrial documents. To address this gap, the following research questions are addressed in this paper:

- How effective are existing causal information extraction methods on different types of industrial documents?

- What is the effect of text representation learning on the overall performance of causal information extraction in industrial settings?

In summary, the aim of this research is to develop a method for detailed causal information extraction on industrial data, with which the availability and consistency of causal domain knowledge can be improved in the industrial world, facilitating more reliable data analysis and more informed decision-making. Our work has the potential to be applicable to a variety of domains, including teaching, where much causal domain knowledge is documented in unstructured or semi-structured documents. Consequently, the contribution of this paper can be summarized as follows:

- Extending causal information extraction methods to industrial documents, increasing the availability of causal domain knowledge for downstream tasks in the industry.

- Providing guidance for practitioners working in different industries with similar types of documents. This guidance includes summarizing causal relation annotation guidelines in natural language, providing examples of these annotations from semiconductor manufacturing, and emphasizing the importance of inter-annotator agreements (IAA) to ensure annotation quality and clarity.

- Addressing data consistency issues commonly found in semi-structured documents, like the merged cells in FMEA documents.

- Contributing to the body of research that highlights the effect of representation learning on downstream tasks.

2. Related Work

The extraction of causal information from documents is a widely discussed topic across various domains such as the medical, financial, and industrial sectors. The importance of extracting causal information lies in its various applications, which are summarized in Section 2.1. Scholars have been actively developing different methods to extract causal information from documents due to the high demand for this task. These methods can be broadly categorized into two groups: knowledge-based methods, such as devising lexical patterns, and data-driven methods including machine learning and deep learning based methods. Section 2.2 provides a summary of these methods and their respective approaches.

2.1. Causal Information Extraction Applications

Causal information extraction plays a crucial role in diverse sectors. The interest in this topic is especially strong in the health [12], finance [10], and industrial [13] sectors. Causal information extraction in the health sector is essential for advancing medical knowledge [14], improving patient care [15], supporting research endeavors [16], and enhancing overall healthcare outcomes. It is vital in various aspects of healthcare, from disease understanding and diagnosis to treatment personalization, drug discovery, and public health management. Causal information extraction in finance can enhance risk management [17], market analysis [18], fraud detection [19], portfolio optimization [20,21], regulatory compliance [21], policy development, and scenario analysis. Causal information empowers financial professionals with valuable insights, assisting them in navigating the complexities of the financial landscape. Extensive research in this field has been conducted as part of the shared task FinCausal 2022 [22].

In industrial settings, ontologies are commonly employed to create a knowledge base and facilitate the sharing of knowledge in a structured manner. As shown in [23], they can also be used to structure causal domain knowledge. However, ontological approaches often encounter challenges related to high maintenance requirements and limited scalability. To address these issues, considerable effort has been invested in automatically enriching tabular formats with additional information derived from domain knowledge. Razouk and Kern [4] present approaches that use statistical pattern-based methods combined with lexical patterns to detect cases of merged cells, aiming to enhance the consistency of these documents. Furthermore, in the realm of risk assessment documents, such as FMEA documents, causal domain knowledge has been extracted and structured into a knowledge graph [13]. This knowledge graph has been effectively utilized to develop a knowledge discovery method, leveraging common-sense knowledge completion techniques.

2.2. Causal Information Extraction Methods

The extraction of causal information from documents is a topic of great interest since not only numerical data but also texts can provide valuable insight into causal relations [24]. While causal discovery aims to uncover the causal model or, at the very least, a Markov equivalent that mimics the data generation process for a given data set [25], causal information extraction from texts focuses on identifying causal entities and how they are connected to each other [7]. The authors of [7] broadly group existing methods for causal information extraction into three main categories: (i) pattern-based approaches; (ii) statistical machine learning-based approaches; (iii) deep learning approaches.

Pattern-based approaches usually leverage linguistic patterns to identify causal language in limited contexts. For instance, the authors of ref. [6] use lexical and syntactic patterns to detect causal relations in medical and business texts. Pattern-based approaches can be effective for specific domains but they lack generalizability. Statistical machine learning-based approaches typically involve the use of third-party NLP tools which require fewer patterns than pattern-based approaches [26]. Deep learning approaches leverage deep neural networks to extract causal information from text [27] and to acquire useful vectorized representations of text [8,9,10]. This involves training neural networks to identify causal relationships in text using techniques such as supervised learning. As such, many scholars have been working on creating annotation guidelines [28,29,30] and annotating data sets to train and test the developed methods [22,31].

One of the most common machine learning and deep learning approaches for extracting causal information is sequence tagging. Causal information extraction is not only about identifying causes and effects but also about detecting them as pairs. To obtain useful causal information, the relation between the entities needs to be taken into consideration [7]. Pure sequence tagging methods, such as those presented in [10,32], have difficulty integrating relational information into the input data while performing Named Entity Recognition (NER). Since the relation between the entities is crucial for obtaining useful causal knowledge, other approaches have been proposed that fine-tune pre-trained language models for text span classification and sequence labeling tasks. For example, the authors in [10] label the text spans corresponding to cause and effect in a given text and then classify whether the identified cause and effect spans are linked through a causal relation. Similarly, the authors of [32] employ an event-aware language model to predict causal relations by taking into account event information, sentence context, and masked event context. Another major difficulty in extracting causality using NER is the recognition of overlapping and nested entities. The authors of [33] tackle overlapping entities by employing the Text-to-Text Transfer Transformer (T5). Nevertheless, deeply nested relations, in which whole causal relations are nested within an entity, are not captured by their method. Finally, Gärber [8] proposed an MST approach for extracting causal information from historic texts. The MST method extracts causal cues in the first stage and then uses this information to extract complete causal relations in subsequent stages.

In summary, causal information extraction from text depends on several success factors, which include the following:

- Efficient generalizability achieving broad applicability of the method with limited manual work, such as creating new knowledge bases for domain-specific patterns.

- The ability to acquire useful vectorized representations of the text that enables the training of different methods for causal information extraction.

- Having consistently and sufficiently annotated data sets with clear annotation guidelines that mitigate the different interpretations of causal information described in text.

- A representation that allows the system to manage the complexity associated with the description of causal relationships, such as nested or enchained causal relations.

3. Methods

Causal domain knowledge is typically documented in either unstructured or semi-structured documents. These documents are designed to be produced and consumed by human domain experts, and this documentation style impedes straightforward automated access to the information. The objective of this study is to develop a method that can increase the usability of the causal domain knowledge contained in a set of industrial documents by transforming the information into a more structured format. To achieve this objective, we propose a causal information extraction method tested with various industrial documents, depicted in Figure 1, which involves the following steps:

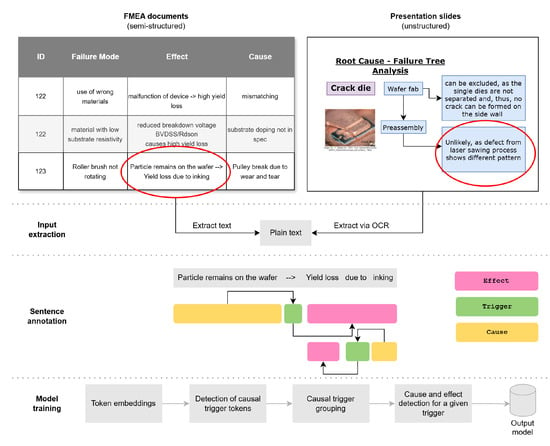

Figure 1.

Proposed method for causal information extraction from various industrial documents. For the extraction of causal information, texts are gathered from tabular FMEA documents and industrial presentation slides. The causal entities and relations in these texts are then annotated following specific annotation guidelines, as described in Section 3.2. The depicted example illustrates a text extracted from an FMEA cell, which contains two causal relations. For higher generalizability, a meaningful text representation is generated using various language models. This representation is then used to train different sequence tagging models for causal information extraction. Namely, the MST approach, which uses a cascade of models for different tasks, is depicted.

- Text extraction from different document formats (the input extraction step in Figure 1): This step involves extracting a textual representation of the information contained in the different documents. This textual representation can be analyzed and used to develop causal information extraction methods. This step is elaborated in Section 3.1.

- Structured data representation (the Sentence annotation step in Figure 1): This step involves representing the information contained in texts extracted from different industrial documents in a structured format (i.e., a set of named entities and relations between these entities) that can be easily used for downstream tasks such as risk assessment, data analysis, etc. This step is outlined in Section 3.2.

- Automated causal information extraction from text (the Model training step in Figure 1): This step involves extracting a meaningful vectorized text representation and leveraging this representation to develop causal information extraction methods that transfer the information contained in the text to a set of named entities and relations between these entities. Namely, we propose two approaches: the first one based on SST, and the second one based on MST. This step is elaborated in Section 3.3.

3.1. Text Extraction from Different Document Formats

Our method focuses on two types of industrial documents: semi-structured documents represented by FMEA documents and unstructured documents represented by presentation slides. For semi-structured documents, our method parses the documents as tabular formats and extracts the text contained in the failure mode, effect, and cause columns. Each cell of these columns represents a separate entity and is treated as a standalone text. This approach was also proposed by Razouk et al. in their study on FMEA documents [4,13]. By extracting causal information from FMEA text, merged cells which contain causal relations can be split into multiple cells and merged into the existing knowledge graph. Thus, a more connected knowledge graph can be achieved.
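As a minimal sketch of the cell-level extraction described above, the following example reads a table and keeps one standalone text per non-empty cell of the causal columns. The CSV input, the column headers, and the function name `extract_cell_texts` are assumptions made for illustration; real FMEA templates use different file formats and headers.

```python
import csv
import io

# Hypothetical column headers; real FMEA templates name these differently.
CAUSAL_COLUMNS = ("Failure Mode", "Effect", "Cause")


def extract_cell_texts(csv_text, columns=CAUSAL_COLUMNS):
    """Return one (row_index, column, text) record per non-empty causal cell."""
    records = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for row_index, row in enumerate(reader):
        for column in columns:
            text = (row.get(column) or "").strip()
            if text:  # skip empty cells
                records.append((row_index, column, text))
    return records


# Illustrative table; the "Severity" column is ignored by the extraction.
sample = (
    "Failure Mode,Effect,Cause,Severity\n"
    "Die chipping,Yield loss,Die chipping due to dicing process condition,7\n"
    "Scratches at wafer back side,,Particles,5\n"
)
cell_texts = extract_cell_texts(sample)
```

Keeping the row index and column name with each text makes it possible to link extracted relations back to the originating cell later on.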

To extract the data from the presentation slide files, the slides are converted to images and then read by an OCR architecture composed of a text detection model and a text recognition model. This method also recognizes each standalone text based on its position relative to the other texts in the slide. This method was selected because, to date, methods for extracting text directly from presentation slide files still lack reliability. Even though there are libraries for this purpose, such as python-pptx, they do not offer support for extracting content from graphic frames, which includes SmartArt. Furthermore, these libraries extract the content of the slides in the order in which the boxes were created and not in the order in which they are intended to be perceived.
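The difference between creation order and intended reading order can be illustrated with a small sketch. It assumes that each recognized text box comes with relative coordinates and orders the boxes top to bottom, then left to right within a row band. The `TextBox` class, the `reading_order` function, and the `row_tolerance` parameter are hypothetical and are not the ordering logic of any particular OCR library.

```python
from dataclasses import dataclass


@dataclass
class TextBox:
    text: str
    left: float  # relative horizontal position in [0, 1]
    top: float   # relative vertical position in [0, 1]


def reading_order(boxes, row_tolerance=0.05):
    """Order boxes top to bottom, then left to right within a row band."""
    rows = []
    for box in sorted(boxes, key=lambda b: b.top):
        # A box joins the current row if it is vertically close to the row's first box.
        if rows and abs(box.top - rows[-1][0].top) <= row_tolerance:
            rows[-1].append(box)
        else:
            rows.append([box])
    return [box for row in rows for box in sorted(row, key=lambda b: b.left)]


# Boxes listed in an arbitrary (creation) order.
boxes = [
    TextBox("Root cause", left=0.5, top=0.19),
    TextBox("Title", left=0.1, top=0.05),
    TextBox("Observation", left=0.1, top=0.20),
]
ordered_texts = [box.text for box in reading_order(boxes)]
```

The row band matters here: sorting by the vertical position alone would place "Root cause" before "Observation", although both sit on the same visual row.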

3.2. Structured Data Representation of Causal Information

Structured data representation is a crucial part of information extraction. In particular, representing causal relationships in a structured manner has received significant attention in recent years due to their importance in understanding the connections between different events, phenomena, and concepts. There are several ways to represent structured data in the context of causal relationships. One common approach is to use knowledge graphs to represent the relationships between different entities. In these graphs, each entity is represented as a node, and the causal relationships between entities are represented as edges. For example, a knowledge graph could represent the causal relationships between different failure modes and their causes and effects in the FMEA documents [13].

However, information extraction for causal relations is challenging due to several reasons. Causal relations are often part of our cognitive reasoning and can be articulated implicitly in text, making it difficult for automated systems to identify them accurately. Additionally, cognitive reasoning can differ from person to person, which can create disagreement in the interpretation of the same text. To mitigate these challenges, several scholars have proposed different annotation guidelines to guide annotators in the annotation process [28,29,30,34]. Specifically, many scholars have recommended only extracting explicit causal relations [28]. In our structured data representation, we adapted these recommendations by specifying three entity types: cause, effect, and trigger. The cause and effect entities represent events or variables articulated in the text, while the trigger is the explicit causal clue that expresses the presence of the causal relation between the cause and the effect. To fully represent a causal relation, three types of entities—cause, trigger, and effect—along with two relations between them, are used. The first relation connects the cause and the trigger, while the second relation connects the trigger and the effect. This approach helps to accurately capture explicit causal relations in texts and can be useful in various applications such as information retrieval and knowledge representation.
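The representation described above (three entity types and two relations per causal relation) can be sketched as a small data structure. The class and function names are assumptions made for illustration; allowing several character spans per entity is one possible way to model entities that are interrupted by other entities.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Entity:
    label: str                          # "Cause", "Effect", or "Trigger"
    spans: Tuple[Tuple[int, int], ...]  # character offsets; several spans model interrupted entities


@dataclass(frozen=True)
class Relation:
    head: Entity
    tail: Entity


def entity(label, text, *phrases):
    """Build an entity from phrases that occur verbatim in the text."""
    spans = []
    for phrase in phrases:
        start = text.index(phrase)  # raises ValueError if the phrase is absent
        spans.append((start, start + len(phrase)))
    return Entity(label, tuple(spans))


def causal_relation(cause, trigger, effect):
    """A causal relation is a cause-trigger link plus a trigger-effect link."""
    return [Relation(cause, trigger), Relation(trigger, effect)]


text = ("Die chipping/crack due to dicing process condition/parameters "
        "and the wafer condition in kerf area.")
effect = entity("Effect", text, "Die chipping/crack")
trigger = entity("Trigger", text, "due to")
cause = entity("Cause", text,
               "dicing process condition/parameters and the wafer condition in kerf area")
relations = causal_relation(cause, trigger, effect)
```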

Annotation guidelines are crucial for creating consistent training and testing data, particularly for data-driven methods. They facilitate the consistency required for the effective training and evaluation of such methods. These annotation guidelines aim to reduce the varying interpretations among different annotators. However, these guidelines may have limited applicability to domain-specific texts, such as those from the semiconductor industry, due to their unique characteristics. To address these challenges, we have devised annotation guidelines that combine and distill the diverse paradigms proposed by previous researchers. Our guidelines provide examples from a domain-specific data set to support experts in gaining a better grasp of the task. The developed annotation guidelines are detailed as follows:

- Causal relations are only annotated on text level, which corresponds to a single FMEA cell or, in the case of a presentation slide, a text box recognized by the OCR. Relations between entities that belong to different texts are disregarded.

- Two entities (either two effects or two causes) are annotated as a single entity if they are linked to the same cause or effect [30]. Example: Die chipping/crack (Effect) due to (Trigger) dicing process condition/parameters and the wafer condition in kerf area (Cause).

- Causal relations can be chained [30]. The effect of a cause can be the cause of another effect. Example: Due to (Trigger) a wrong implantation dose (Cause), the compensation was destroyed (Effect, Cause), and therefore (Trigger), the lot was disregarded (Effect). In the example, the entity “the compensation was destroyed” is the effect of “a wrong implantation dose” and the cause of “the lot was disregarded”.

- Nested relations are allowed. There can be causal relations inside an entity (cause or effect). Example: Foreign material or residue does not cause failure at wafer test (Effect) due to (Trigger) thin isolation, inhibiting leakage current (Cause). In the example, “thin isolation, inhibiting leakage current” is the cause of the first part of the sentence, but within this cause, there is another causal relation, since “thin isolation” is the inhibitory cause of “leakage current”. In this case, we annotate the cause as well as the entities and the relations within the cause.

- Entities can be interrupted by other entities. Interrupted entities are annotated as one entity, excluding the part that belongs to another entity. Example: Due to a wrong implantation dose, the compensation was destroyed (Cause), and the lot was (Effect) thus (Trigger) disregarded (Effect).

- Entities are only annotated if there is a complete causal relation with a cause, an effect, and a trigger.

- Causal relations without an explicit trigger are disregarded [29].

- Lexical causatives are disregarded [28,29]. Example: Electrical and mechanical stress at application environment is cracking the isolation layer between defect and conductive line. Sentences with transitive verbs like “to crack” are not considered causal, even though one could argue that, in the example, the action of cracking is the cause for the crack. Such relations are disregarded since the entities and the trigger cannot be clearly separated.

- Vague causal relations are disregarded [29,34]. Example: Sentences like “Y is linked to X” are not annotated.

- Hypothetical and assumed causal relations are annotated. Example: Scratches at Wafer BS (Effect), most probably due to (Trigger) particles (Cause).

- Future causal relations are considered. Example: Sentences like “X will lead to Y” are annotated.

- Relative pronouns are annotated as part of the cause or effect. Example: There is a QMP regarding edge damage which (Cause) could cause (Trigger) the flying dies (Effect).

3.3. Automated Causal Information Extraction from Text

The proposed approach utilizes BERT-based language models to extract meaningful vectorized text representations, specifically selected for their relevance to the domain. To potentially further optimize these models, in-domain pre-training using a masked language modeling objective is conducted on a data set from the same domain. During the pre-training, we compare two masking objectives. The first objective involves uniform masking (UM), where the masked tokens are selected randomly across the text. The second objective leverages point-wise mutual information masking (PMI) [35], where spans of tokens related to each other based on their co-occurrence are masked. This approach could potentially help to improve the relevance of the extracted vectorized text representations for the specific domain. Hence, by selecting and pre-training BERT-based models, we could effectively capture the nuances and complexities of the domain-specific language and improve the overall performance of our information extraction model.
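To make the difference between the two masking objectives concrete, the following sketch contrasts uniform masking with a strongly simplified PMI-based variant. It is a toy illustration under several assumptions: PMI is computed for adjacent token pairs only, exactly one pair is masked per text, and every selected token is replaced by a `[MASK]` symbol. The function names and the toy corpus are hypothetical, and the sketch is not the pipeline used in this study.

```python
import math
import random
from collections import Counter


def uniform_mask(tokens, ratio=0.15, seed=0, mask_token="[MASK]"):
    """Uniform masking: positions are drawn at random, independently of content."""
    rng = random.Random(seed)
    n_mask = max(1, round(len(tokens) * ratio))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    return [mask_token if i in positions else t for i, t in enumerate(tokens)]


def bigram_pmi(corpus):
    """Point-wise mutual information of adjacent token pairs in a tokenized corpus."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in corpus:
        unigrams.update(sentence)
        bigrams.update(zip(sentence, sentence[1:]))
    n_uni, n_bi = sum(unigrams.values()), sum(bigrams.values())
    return {
        (a, b): math.log((count / n_bi) / ((unigrams[a] / n_uni) * (unigrams[b] / n_uni)))
        for (a, b), count in bigrams.items()
    }


def pmi_mask(tokens, pmi, mask_token="[MASK]"):
    """Simplified PMI masking: mask the adjacent pair with the highest PMI as one span."""
    pairs = list(zip(tokens, tokens[1:]))
    best = max(range(len(pairs)), key=lambda i: pmi.get(pairs[i], float("-inf")))
    return [mask_token if i in (best, best + 1) else t for i, t in enumerate(tokens)]


# Toy corpus, invented for illustration.
corpus = [
    ["the", "leakage", "current", "was", "high"],
    ["the", "lot", "was", "scrapped"],
    ["the", "leakage", "current", "increased"],
    ["the", "yield", "was", "high"],
]
pmi = bigram_pmi(corpus)
masked_uniform = uniform_mask(corpus[0])
masked_pmi = pmi_mask(corpus[0], pmi)
```

In this toy corpus the pair "leakage current" co-occurs more often than chance would suggest, so the PMI variant masks it jointly, which prevents the model from recovering one token of the collocation from the other.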

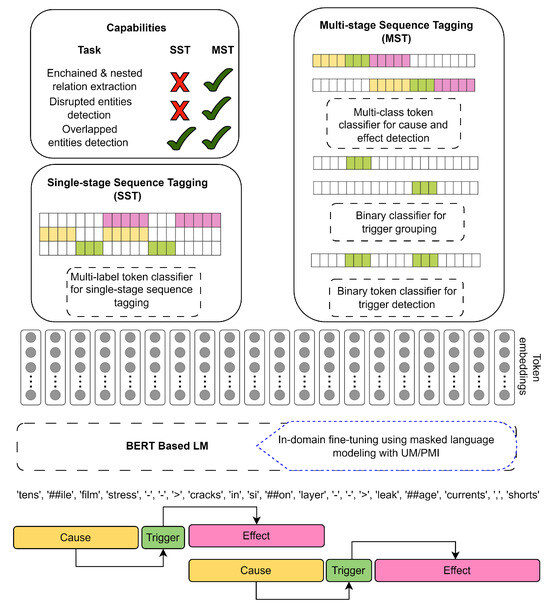

To transfer the information contained in the text into a structured representation, two causal information extraction methods are selected. The first approach is based on SST using BERT token embeddings, originally designed for Named Entity Recognition. Specifically, the proposed SST approach extracts the token embeddings from the text and passes them to a multi-label classifier, which allows for detecting overlapping labels, meaning a token can be recognized as a cause and an effect at the same time. The losses of the classifier are used to update both the classifier weights and the language model weights. This approach is depicted on the left side of Figure 2.

Figure 2.

Automated causal information extraction from text. The proposed approach for causal information extraction from text utilizes a BERT-based language model, selected based on the relevance of its initial training data set to the domain of interest. The model is initially fine-tuned for the domain using a masked language modeling objective, with two masking strategies (UM and PMI) being compared. A portion of the annotated data set is used to train the model, and two causal information extraction methods are compared: SST and MST. SST, based on multi-label token classification, is capable of detecting overlapped entities. MST cascades multiple models, including a binary classifier for trigger detection, a binary classifier for trigger grouping, an attention network for trigger combined embedding, and a multi-label classifier for argument detection. In addition to detecting overlapped entities, MST is capable of extracting enchained relations and detecting disrupted entities.
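The multi-label decoding that allows SST to detect overlapping entities can be sketched as follows. The sketch assumes one independent sigmoid output per label and a fixed decision threshold of 0.5; the logits are invented for illustration and do not come from a trained model.

```python
import math

LABELS = ("Cause", "Effect", "Trigger")


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_multilabel(logits, threshold=0.5):
    """Keep every label whose independent sigmoid probability exceeds the threshold."""
    return [
        {label for label, z in zip(LABELS, row) if sigmoid(z) > threshold}
        for row in logits
    ]


# One row of hypothetical logits per token, ordered as in LABELS.
tokens = ["therefore", "compensation", "lot", "the"]
logits = [
    (-2.0, -1.5, 3.0),   # trigger only
    (2.0, 1.5, -3.0),    # cause and effect at the same time
    (-2.5, 2.2, -3.0),   # effect only
    (-3.0, -3.0, -3.0),  # no label
]
predicted = decode_multilabel(logits)
```

Because each label is decided independently, a token such as "compensation" can carry both the cause and the effect label, which a single softmax over mutually exclusive classes could not express.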

The second approach is based on the MST method first presented in [8]. This approach consists of multiple cascaded models that utilize the text embeddings given by a BERT model. The first model is a binary token classifier that detects tokens with a trigger label. The output of this model is passed to a second model that identifies tokens belonging to the same trigger entity. This is achieved using a trigger grouping model that performs binary classification to determine whether two token embeddings with predicted trigger labels belong to the same trigger entity. This model allows the method to detect disrupted entities, which can occur for triggers. Disrupted entities are entities that are interrupted by the occurrence of another entity within their text span. In the sentence “The root cause for back side defect are humidity residues”, the trigger is made up of the tokens “The root cause for” and “are”. However, this trigger is disrupted by the effect, which is represented by the tokens “back side defect”.
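The grouping stage can be illustrated with a sketch that turns pairwise "same trigger entity" decisions into trigger entities. It assumes that pairwise decisions are merged transitively with a union-find structure, which is one possible way to assemble groups and is not necessarily how the original method does it. The set of positive pairs stands in for the output of the binary grouping classifier.

```python
def group_triggers(trigger_positions, same_entity):
    """Merge trigger tokens into entities from pairwise same-entity decisions."""
    parent = {p: p for p in trigger_positions}

    def find(p):
        # Follow parent links to the representative, compressing the path on the way.
        while parent[p] != p:
            parent[p] = parent[parent[p]]
            p = parent[p]
        return p

    for i, a in enumerate(trigger_positions):
        for b in trigger_positions[i + 1:]:
            if same_entity(a, b):
                parent[find(b)] = find(a)

    groups = {}
    for p in trigger_positions:
        groups.setdefault(find(p), []).append(p)
    return sorted(groups.values())


tokens = ["The", "root", "cause", "for", "back", "side", "defect",
          "are", "humidity", "residues"]
trigger_positions = [0, 1, 2, 3, 7]  # tokens predicted as trigger by the first stage

# Stand-in for the pairwise classifier: hypothetical positive decisions.
positive_pairs = {(0, 1), (1, 2), (2, 3), (3, 7)}
groups = group_triggers(trigger_positions, lambda a, b: (a, b) in positive_pairs)
trigger_text = [" ".join(tokens[i] for i in group) for group in groups]
```

The resulting single group contains the non-adjacent token "are", so the disrupted trigger is recovered as one entity although the effect tokens lie in between.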

In the next step, the embeddings of the tokens belonging to the same trigger entity are aggregated using an attention network. The combined trigger embeddings are then used by a third model to detect the arguments (i.e., tokens belonging to the cause and effect of that trigger). Specifically, the model concatenates the token embeddings with the combined trigger embedding and uses them as input to a multi-class classifier that detects the cause and effect for a given trigger. Consequently, a token can be predicted as a cause for one trigger and as an effect for another trigger. As a welcome result, MST supports extracting enchained relations. These types of relations can be inferred after MST has extracted the causal relations. Specifically, an enchained causal relation involves two causal relations that share an entity, where this entity acts as an effect in the first relation and as a cause in the second relation. Additionally, a nested causal relation consists of two causal relations, where the first causal relation (i.e., cause, effect, trigger) functions as either the cause or the effect of the second causal relation.
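Inferring enchained relations from the extracted relations can be sketched as a simple post-processing step. The sketch assumes that entities are compared by their text and that a shared entity is detected by equality; the `Relation` tuple and the function name are hypothetical, and the trigger boundaries in the example are chosen for illustration.

```python
from collections import namedtuple

Relation = namedtuple("Relation", "cause trigger effect")


def find_enchained(relations):
    """Return pairs (first, second) where the effect of first is the cause of second."""
    return [
        (first, second)
        for first in relations
        for second in relations
        if first is not second and first.effect == second.cause
    ]


# Relations as they might be extracted from the chained example in Section 3.2.
relations = [
    Relation(cause="a wrong implantation dose",
             trigger="Due to",
             effect="the compensation was destroyed"),
    Relation(cause="the compensation was destroyed",
             trigger="therefore",
             effect="the lot was disregarded"),
]
chains = find_enchained(relations)
```

In practice, comparing token positions instead of surface text would avoid linking two different mentions that happen to share the same wording.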

The model is trained in an end-to-end manner, and the weights are updated using a combined loss function as shown in Equation (1). Cross entropy is used to calculate the losses of different classifiers, including trigger detection, trigger grouping, and the argument detection classifier.

The updated weights include those of the language model, the binary token classifiers for trigger detection and grouping, the attention network for trigger embedding aggregation, and the multi-class classifier for detecting arguments. For each training example, the model processes the input data through its various components and produces an output. The output is then compared to the desired output, and a combined loss value is computed. This approach is depicted on the right side of Figure 2.
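The idea of a combined loss over the three classifiers can be sketched as follows. The sketch assumes a weighted sum of the mean cross-entropy losses with all weights set to one; this weighting is an assumption made for illustration and is not taken from Equation (1). The probabilities are invented, and the function names are hypothetical.

```python
import math


def mean_cross_entropy(examples):
    """examples: list of (predicted class probabilities, index of the true class)."""
    return -sum(math.log(probs[target]) for probs, target in examples) / len(examples)


def combined_loss(trigger_detection, trigger_grouping, argument_detection,
                  weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three classifier losses (equal weights are an assumption)."""
    parts = (
        mean_cross_entropy(trigger_detection),
        mean_cross_entropy(trigger_grouping),
        mean_cross_entropy(argument_detection),
    )
    return sum(w * part for w, part in zip(weights, parts))


# Hypothetical predictions of the three classifiers for one training example.
detection = [([0.9, 0.1], 0), ([0.2, 0.8], 1)]            # per token: trigger or not
grouping = [([0.3, 0.7], 1)]                              # per trigger pair: same entity or not
arguments = [([0.1, 0.8, 0.1], 1), ([0.6, 0.3, 0.1], 0)]  # per token and trigger: argument class
loss = combined_loss(detection, grouping, arguments)
```

Because a single scalar is back-propagated, errors in argument detection also influence the shared language model weights used for trigger detection and grouping.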

3.4. Evaluation Methodology

To evaluate the performance of our proposed pipeline for causal information extraction in the semiconductor industry, a set of evaluation techniques is devised. These techniques are detailed as follows. To assess the clarity and effectiveness of the structured data representation of causal information, the consistency of annotations among multiple annotators is evaluated. This metric can help predict potential limitations in the clarity of the annotation guidelines that could propagate to the automated causal information extraction methods. To estimate the IAA, the token-based Cohen’s κ and F1 score are calculated. While the performance of the method is not bound by the IAA [12], the IAA can set or limit expectations and indicate which aspects might be particularly challenging for the model to learn. In addition, we evaluated the performance of each component of the MST pipeline, including trigger detection, trigger grouping, and cause and effect detection. For each component, the F1 score is calculated, and the results of the two approaches (i.e., SST and MST) are compared.
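A token-level agreement computation can be sketched as follows. The sketch assumes that both annotators assign exactly one label per token (with "O" for unlabeled tokens), which simplifies the overlapping labels allowed by the guidelines. The label sequences are invented for illustration.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two equally long label sequences."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability that both annotators pick the same label independently.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


def f1_score(reference, predicted, positive):
    """F1 score for one label, treating the first sequence as the reference."""
    tp = sum(r == positive and p == positive for r, p in zip(reference, predicted))
    fp = sum(r != positive and p == positive for r, p in zip(reference, predicted))
    fn = sum(r == positive and p != positive for r, p in zip(reference, predicted))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


# One label per token from two hypothetical annotators.
annotator_a = ["O", "Cause", "Cause", "Trigger", "Effect", "Effect", "O", "O"]
annotator_b = ["O", "Cause", "O", "Trigger", "Effect", "Effect", "Effect", "O"]
kappa = cohen_kappa(annotator_a, annotator_b)
effect_f1 = f1_score(annotator_a, annotator_b, positive="Effect")
```

Unlike the raw agreement rate, κ discounts the agreement expected by chance, which matters when most tokens carry no label.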

4. Experiments and Results

In pursuit of our research objectives, we selected BERT [36] and MatBERT [37] as the language models for this experiment. These models were chosen because BERT is a solid baseline architecture that is used for many general-domain downstream tasks, and MatBERT was specifically trained to handle materials science terminology, which is relevant to the semiconductor manufacturing industry. We also make use of the data sets and the in-domain pre-training pipeline established by Tosone [38], which applies masked language modeling with two masking strategies (UM and PMI) to a semiconductor manufacturing related data set. Specifically, we pre-train BERT and MatBERT for five epochs with a learning rate of 1 . The resulting models are named BERT UM, MatBERT UM, BERT PMI, and MatBERT PMI, where UM and PMI refer to the masking strategy used in pre-training the language model.

We collected a data set containing two types of industrial documents from an actual semiconductor manufacturing company. The data set comprises a set of presentation slides and a set of FMEA documents. The text from the FMEA documents was extracted at the tabular cell level, similar to the work of Razouk et al. [4,13]. Mainly, cell texts belonging to the failure mode effect and root cause columns were extracted. To extract the textual elements of the presentation slides in their intended order, an optical character recognition (OCR) based method was utilized. Although the OCR-based method produces occasional errors, it represents the best option for this research at this stage. Specifically, the db_resnet50 text detection model and the crnn_mobilenet_v3_large text recognition model from DocTR [39] were utilized.

The collection of texts from various presentation slides and FMEA documents was annotated using the Brat annotation tool [40]. A team of two proficient natural language processing (NLP) experts, with a thorough understanding of the provided annotation guidelines, carried out the annotation process separately. The annotated data set comprises 495 texts from FMEA documents and 440 texts from presentation slides. Cohen’s κ and the F1 score were used to estimate the IAA, which was calculated on the token level of the text using a language model tokenizer. In addition to this doubly annotated data set, a further set of 481 texts extracted from presentation slides was annotated by only one NLP expert.

The IAA is estimated for each label type (cause, effect, and trigger) and for each source of text represented in the data set (i.e., presentation slides and FMEA documents) separately. Table 1 summarizes the results of the IAA for different data sets and tokenization of various language models. Based on Table 1, the overall IAA for all three label types is 85% Cohen’s κ. However, substantial differences in the IAA can be observed when comparing different labels. While there is high agreement in the annotation of triggers with an IAA of 94% Cohen’s κ, the annotation of causes and especially the annotation of effects proves to be more challenging, with an IAA of 86% and 76% Cohen’s κ, respectively. The IAA for texts from FMEA documents is generally higher than for texts from presentation slides.

Table 1.

Inter-annotator agreement (IAA) results for different data sets. Two metrics were used to calculate the IAA: Cohen’s κ and the F1 score. The percentage occurrences of tokens associated with the different classes in the annotated data sets are provided as well. The IAA is higher for the data extracted from semi-structured documents, namely FMEAs, compared to the data extracted from presentation slides. Additionally, the IAA is higher for annotations of the ‘trigger’ type compared to annotations of the ‘cause’ and ‘effect’ types. These results suggest that the extraction of causal information from FMEA documents is less ambiguous and more consistent compared to the extraction from presentation slides. This finding is attributed to the fact that the text in FMEA documents is typically simpler and more concise. Additionally, the results suggest that the ‘trigger’ type annotations are easier for annotators to identify and agree upon. We attribute this finding to the lower number of tokens used as triggers compared to causes and effects, as well as to the limited lexical options for the trigger.

The annotated data set is aggregated from the two annotators on the text level, where each text coming from an FMEA cell or extracted from a presentation slide represents one data instance. The annotations of the two annotators are added to this instance. Where there is agreement between the annotators, the duplicated annotations are removed. When there is a disagreement between the annotators, the annotations are aggregated. For the SST method, the different annotations are added to the tokens. In the case of the MST method, the different relations with the same trigger are added as different relations. Table 1 provides an overview of the percentage of tokens associated with the trigger, the cause, and the effect classes in the resulting data set. The analysis of the annotated data sets reveals the distribution of tokens across different classes. Notably, a higher percentage of tokens is associated with causal relations in FMEA documents compared to presentation slides. Furthermore, in both document types, the cause and effect categories comprise a larger number of tokens than the trigger category. Additionally, a closer examination shows that causes tend to consist of a larger number of tokens than effects.
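The aggregation of the two annotators' annotations can be sketched as follows. This is a minimal illustration under the assumption that SST targets are per-token label sets and MST targets are lists of (cause, trigger, effect) span triples; the function names and the example text are hypothetical.

```python
Span = tuple[int, int]
Relation = tuple[Span, Span, Span]  # (cause, trigger, effect) token spans


def aggregate_token_labels(ann_a: list[set[str]], ann_b: list[set[str]]) -> list[set[str]]:
    """SST-style aggregation: union the per-token label sets of both annotators."""
    if len(ann_a) != len(ann_b):
        raise ValueError("annotations must cover the same tokens")
    return [a | b for a, b in zip(ann_a, ann_b)]


def aggregate_relations(rel_a: list[Relation], rel_b: list[Relation]) -> list[Relation]:
    """MST-style aggregation: keep every distinct relation, drop exact duplicates."""
    merged: list[Relation] = []
    seen: set[Relation] = set()
    for rel in [*rel_a, *rel_b]:
        if rel not in seen:
            seen.add(rel)
            merged.append(rel)
    return merged


# Hypothetical text: "crack due to thermal stress" (tokens 0..4).
# Annotator A marks "thermal stress" as the cause, annotator B only "stress".
tokens_a = [{"effect"}, {"trigger"}, {"trigger"}, {"cause"}, {"cause"}]
tokens_b = [{"effect"}, {"trigger"}, {"trigger"}, set(), {"cause"}]
token_targets = aggregate_token_labels(tokens_a, tokens_b)

relations_a = [((3, 5), (1, 3), (0, 1))]
relations_b = [((4, 5), (1, 3), (0, 1))]
relation_targets = aggregate_relations(relations_a, relations_b)
```

In this example, the disagreement on the cause boundary results in two relations that share the same trigger, which mirrors how disagreements are kept as separate relations for the MST method.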

The annotated data set is split into two primary components: a testing set and a training set. The training set is further subdivided into training and validation sets using a five-fold cross-validation approach. Specifically, 10% of the data are used for testing, 10% of the data for validation, and the remaining 80% of the data are used for training. This division enables the model to be trained on the training set, while the validation set is utilized for early stopping, thereby preventing overfitting. The hyperparameters employed for training both the SST and MST methods are as follows: a learning rate of , a maximum length of 512 tokens, a batch size of 16, and an early stopping patience of 5 epochs. Both methods were trained on on-premises GPUs.
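The early stopping rule with a patience of 5 epochs can be sketched as follows. This is a minimal illustration that assumes the validation loss is the monitored quantity, which is not stated in the text; the function name and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 5) -> int:
    """Return the 0-based epoch at which training stops under a patience rule."""
    if not val_losses:
        raise ValueError("at least one validation loss is required")
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # Strict improvement resets the patience counter.
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    # Patience was never exhausted: training ran through all epochs.
    return len(val_losses) - 1


# Hypothetical validation losses: the best value is reached at epoch 2.
losses = [0.9, 0.7, 0.6, 0.61, 0.62, 0.60, 0.63, 0.64, 0.5]
stop_epoch = early_stopping_epoch(losses, patience=5)
```

With these values, training stops at epoch 7, five epochs after the best validation loss, and the later improvement at epoch 8 is never reached. In practice, the weights from the best epoch are typically restored after stopping.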

Notably, both SST and MST methods are trained using the same training and validation folds and subsequently tested using the same testing set. This ensures a fair comparison between the two methods. The performance of the trained models using different language models is summarized in Table 2.

Table 2.

Comparison of sequence tagging results using different language models on various test data sets. The data sets include (i) the FMEA data set representing texts extracted exclusively from FMEA documents and (ii) the slide data set representing texts extracted exclusively from digital presentation slide documents. The table reports the mean F1 scores achieved by models trained five times, employing early stopping based on the development data set. All models showed better performance on the tagging of the trigger tokens. In-domain pre-training is shown to be effective in increasing model performance.

The table provides a comprehensive evaluation of the performance of each method, including the F1 score of the multi-label classifier for the SST method. Additionally, the table shows the performance of the MST binary classifier for trigger detection, reported as the trigger F1 score. It also includes the performance of the trigger grouping model and the multi-label classifier for detecting causes and effects in the MST method.

The results indicate that the MST method, which leverages MatBERT and in-domain pre-training using PMI, achieves an average F1 score of 83 ± 3% (macro average) across various document types (i.e., FMEA and slides) and annotation types (i.e., trigger, cause, and effect). To further elaborate on this F1 score, we present the macro average precision and recall values for the same MST method across different document types and annotation types. The precision and recall values are 90 ± 6% and 78 ± 4%, respectively. Also, the results show that both the SST and MST methods perform better in detecting tokens with the trigger label compared to detecting the tokens with the cause and effect labels. Additionally, both methods perform better on texts extracted from FMEA documents compared to texts extracted from presentation slides. Furthermore, both models show an increase in performance when changing the initial language model from BERT to MatBERT without in-domain pre-training. The MST method outperforms the SST method in detecting the different labels when using models without in-domain pre-training.
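The relation between per-label precision, recall, and F1 and the macro average can be sketched as follows. This is a minimal token-level illustration, assuming per-token label sets for the gold annotation and the prediction; the function names and the example data are hypothetical and do not reproduce the reported results.

```python
def precision_recall_f1(
    gold: list[set[str]], pred: list[set[str]], label: str
) -> tuple[float, float, float]:
    """Token-level precision, recall, and F1 for a single label type."""
    tp = sum(label in g and label in p for g, p in zip(gold, pred))
    fp = sum(label not in g and label in p for g, p in zip(gold, pred))
    fn = sum(label in g and label not in p for g, p in zip(gold, pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_f1(gold: list[set[str]], pred: list[set[str]], labels: list[str]) -> float:
    """Unweighted mean of the per-label F1 scores."""
    return sum(precision_recall_f1(gold, pred, lab)[2] for lab in labels) / len(labels)


# Hypothetical five-token text in which the model misses one trigger token.
gold = [{"effect"}, {"trigger"}, {"trigger"}, {"cause"}, {"cause"}]
pred = [{"effect"}, {"trigger"}, set(), {"cause"}, {"cause"}]

trigger_scores = precision_recall_f1(gold, pred, "trigger")  # P = 1.0, R = 0.5, F1 = 2/3
average = macro_f1(gold, pred, ["trigger", "cause", "effect"])  # 8/9, roughly 0.89
```

The example also shows the pattern of precision exceeding recall: a missed token lowers recall without affecting precision.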

The MST method experiences a positive performance impact when using BERT as a language model, texts from FMEA as the testing set, and using UM as a masking objective. This performance increases further when using PMI as a masking objective. At the same time, the MST method experiences a positive performance impact when using MatBERT as a language model, texts from presentation slides as the testing set, and UM as a masking objective. This performance also increases further when using PMI as a masking objective.

Similarly, the SST method experiences a positive performance impact when using BERT as a language model, texts from presentation slides as the testing set, and UM as a masking objective. This performance increases further when using PMI as a masking objective. Surprisingly, the SST method also experiences a positive performance impact when using MatBERT as a language model, texts from FMEA documents as the testing set, and using UM as a masking objective. This performance also increases further when using PMI as a masking objective.

5. Discussion

The suggested annotation guidelines have demonstrated their effectiveness, as reflected by the high level of agreement between annotators on the annotated data set. The results show a higher IAA on the triggers compared to the causes and effects. This difference in agreement may be due to the fact that triggers usually consist of only a few tokens, while causes and effects tend to be longer and have less clear-cut boundaries. Additionally, the IAA for FMEA is higher than that for presentation slides. This difference in IAA can be attributed to the fact that the content in FMEA documents is typically more structured and organized than in presentation slides. FMEA documents often follow a standard format and contain specific sections related to failure modes, effects, and causes. As a result, annotators may find it easier to identify and annotate these sections consistently. In contrast, presentation slides often contain unstructured and varied content, with information presented in different formats and in no particular order. This variability can make it more challenging for annotators to identify and annotate the relevant content consistently.

Two types of causal information extraction methods, based on SST and MST, are also presented in the study. The SST approach leverages BERT-based language models and supports the extraction of overlapping entities. This method is simple to implement as it only requires a multi-label token classifier. However, this approach is limited when it comes to extracting enchained and nested relations and disrupted entities. The MST addresses these limitations and achieves good performance, sufficient for practical applications, with an F1 score of 93% on FMEA documents and a lower F1 score on presentation slides. Both methods perform better on texts from FMEA documents than on texts from presentation slides. This could be attributed to the same finding from the IAA, as presentation slides are more difficult to annotate. Additionally, this could be attributed to the small data set size, as the training data set for presentation slides is less representative of its complexity compared to the FMEA documents.

Choosing a language model that is more aligned with the domain has been shown to improve the performance of both the SST and MST methods. In-domain pre-training can be an effective method for further improving the performance of the SST and MST methods, although its effect depends on the test data set (i.e., FMEA or presentation slides), the initial language model, and the data set used for pre-training. Specifically, PMI has consistently improved the model performance across different base models by incorporating domain-specific language into the masking objective. This enhancement in model performance comes at the cost of reduced generalizability of the in-domain pre-trained model to other domains, which does not pose an issue, as in-domain pre-trained models are rarely shared between domains. The performance improvement seems to differ between entity types, with the F1 score for effect increasing more than the F1 score for cause. This could indicate that effect entities are more domain-specific than cause entities. Overall, our findings reflect the importance of careful consideration of the language model and pre-training approach for achieving high-quality causal information extraction from unstructured documents in the semiconductor industry.

Limitations and Opportunities

In this paper, we demonstrate the effectiveness of the MST approach in industrial settings. In the MST approach, the trigger is detected first, and the cause and effect are then identified for a given trigger. However, the method relies on OCR for extracting texts from unstructured documents with visual elements such as presentation slides. Therefore, it is limited to the extraction of textualized causal relations in presentation slides and cannot process causal information expressed through visual elements like arrows, color encoding, etc. This limitation is of high relevance because presentation slides are created to be viewed rather than simply read, and a great amount of their information is in visualized form. This information is lost when converting the content into plain text. Furthermore, the content extraction via OCR is prone to errors. In particular, tabular structures, line breaks, hyphenated words, small fonts, and images with blurry texts can pose problems for the OCR. To address these limitations, it is necessary to explore hybrid approaches in which NLP approaches are augmented with computer vision methods.

The proposed method aims to extract causal information from historic archived industrial documents without imposing significant limitations on scalability. However, considering the diversity of data sets, it may be necessary to adapt the language model used. For instance, supporting transfer learning between different languages, as highlighted in [41], could be beneficial. Additionally, annotating a representative sample of the data set may be required, as this method follows a supervised learning approach. These scenarios were not addressed in the provided case study.

Additionally, the multi-stage approach only allows us to extract causal relations which are expressed through explicit linguistic triggers. Causes and effects without an explicit trigger cannot be detected, since the trigger is given as an input to detect the cause and effect in the second stage of the NER. Even though the chances for two elements to be perceived as causally related by the annotators are lower the further away they are from each other in the text [42], it is possible to have causal relations spanning a few sentences or even a whole document. To extract such relations, inter-sentential or intra-document relation extraction is necessary. Our method focuses on intra-text causality. One text corresponds to the content of one text box or bullet point in the case of presentation slides, or one cell in the case of tabular formats. This means that this method is not able to detect causal relations between elements from different text boxes or cells. Another drawback of this approach is its dependence on human annotations. This reliance raises considerations of resource allocation, given the time and cost associated with large-scale human annotation, and also presents the daunting task of resolving varying interpretations of causality among different annotators. While the suggested guidelines hold the potential to serve as prompts for sophisticated language models in the future, their practical implementation is yet to be fully assessed. It is essential to undertake more in-depth research to explore the viability of this approach, particularly with a keen focus on addressing potential information security and reliability concerns.

Finally, this research primarily focuses on extracting causal relationships described in text. Terms such as “distal” and “proximal”, as well as “sufficient” and “necessary”, are integral to modeling causal domain knowledge. Research on causal domain knowledge represents an important aspect for future work, especially in industrial settings.

6. Conclusions

This paper provides valuable insights into the development of automated methods for causal information extraction from semi-structured and unstructured documents in the semiconductor manufacturing industry. The presented paper aims to bridge the gap by extending causal information extraction methods to industrial documents, thereby increasing the availability of causal domain knowledge for downstream tasks in the industry. The effectiveness of the suggested annotation guidelines is evident from the considerable level of agreement between annotators on the annotated data set. Furthermore, the study demonstrates the effectiveness of two types of causal information extraction methods, SST and MST, using actual industrial documents. The adapted MST-based method can effectively capture complex entities and relations, including enchained and nested relations and disrupted entities. This makes it a more suitable choice for extracting causal information. Moreover, the paper provides guidance for practitioners working in different industries with similar types of documents. Namely, the proposed annotation guidelines can be considered a solid basis for annotating causal information for other domains and other types of documents. In addition, the study highlights the importance of representation learning for downstream tasks. Choosing a language model that is more aligned with the domain and in-domain pre-training have been shown to significantly improve the performance of the SST and MST methods. Overall, this study contributes to the body of research that aims to develop more accurate and efficient automated methods for causal information extraction from unstructured text data in the semiconductor industry, and provides valuable insights that can be applied to other industries with similar types of documents.

Author Contributions

Conceptualization, L.B., H.R. and R.K.; methodology, L.B. and H.R.; software, L.B., H.R. and D.G.; validation, L.B. and H.R.; formal analysis, L.B. and H.R.; annotation, L.B. and H.R.; writing—original draft preparation, L.B. and H.R.; writing—review and editing, D.G. and R.K.; supervision, R.K. All authors have read and agreed to the published version of the manuscript.

Funding

Part of this research was funded by EdgeAI. The “Edge AI Technologies for Optimised Performance Embedded Processing” project has received funding from Chips Joint Undertaking (Chips JU) under grant agreement No. 101097300. Chips JU receives support from the European Union’s Horizon Europe research and innovation program, as well as from Austria, Belgium, France, Greece, Italy, Latvia, Netherlands, and Norway.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This research draws upon valuable industry data from a semiconductor manufacturing company. However, due to confidentiality agreements, the specific data cannot be publicly shared.

Conflicts of Interest

Authors Houssam Razouk and Leonie Benischke were employed by the company Infineon Technologies Austria AG. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| Avg | Average |
| FMEA | Failure Mode and Effects Analysis |
| IAA | Inter-Annotator Agreement |
| MST | Multi-Stage Sequence Tagging |
| NER | Named Entity Recognition |
| PMI | Point-Wise Mutual Information masking |
| SST | Single-Stage Sequence Tagging |
| UM | Uniform Masking |

References

1. Hu, Y.; Zhang, X.; Ngai, E.; Cai, R.; Liu, M. Software project risk analysis using Bayesian networks with causality constraints. Decis. Support Syst. 2013, 56, 439–449.

2. Saha, A.; Hoi, S.C. Mining root cause knowledge from cloud service incident investigations for AIOps. In Proceedings of the 44th International Conference on Software Engineering: Software Engineering in Practice, Pittsburgh, PA, USA, 25–27 May 2022; pp. 197–206.

3. Anand, S.S.; Bell, D.A.; Hughes, J.G. The role of domain knowledge in data mining. In Proceedings of the Fourth International Conference on Information and Knowledge Management, Baltimore, MD, USA, 28 November–2 December 1995; pp. 37–43.

4. Razouk, H.; Kern, R. Improving the consistency of the Failure Mode Effect Analysis (FMEA) documents in semiconductor manufacturing. Appl. Sci. 2022, 12, 1840.

5. Hayama, T.; Nanba, H.; Kunifuji, S. Structure extraction from presentation slide information. In Proceedings of the PRICAI 2008: Trends in Artificial Intelligence: 10th Pacific Rim International Conference on Artificial Intelligence, Hanoi, Vietnam, 15–19 December 2008; Proceedings 10. Springer: Berlin/Heidelberg, Germany, 2008; pp. 678–687.

6. Girju, R. Automatic detection of causal relations for question answering. In Proceedings of the ACL 2003 Workshop on Multilingual Summarization and Question Answering, Sapporo, Japan, 8–10 July 2003; pp. 76–83.

7. Yang, J.; Han, S.C.; Poon, J. A survey on extraction of causal relations from natural language text. Knowl. Inf. Syst. 2022, 64, 1161–1186.

8. Gärber, D. Causal Relationship Extraction from Historical Texts using BERT. Master’s Thesis, Graz University of Technology, Graz, Austria, 2022.

9. Gopalakrishnan, S.; Chen, V.Z.; Dou, W.; Hahn-Powell, G.; Nedunuri, S.; Zadrozny, W. Text to Causal Knowledge Graph: A Framework to Synthesize Knowledge from Unstructured Business Texts into Causal Graphs. Information 2023, 14, 367.

10. Saha, A.; Ni, J.; Hassanzadeh, O.; Gittens, A.; Srinivas, K.; Yener, B. SPOCK at FinCausal 2022: Causal Information Extraction Using Span-Based and Sequence Tagging Models. In Proceedings of the 4th Financial Narrative Processing Workshop@ LREC2022, Marseille, France, 24 June 2022; pp. 108–111.

11. Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240.

12. Richie, R.; Grover, S.; Tsui, F.R. Inter-annotator agreement is not the ceiling of machine learning performance: Evidence from a comprehensive set of simulations. In Proceedings of the 21st Workshop on Biomedical Language Processing, Dublin, Ireland, 26 May 2022; pp. 275–284.

13. Razouk, H.; Liu, X.L.; Kern, R. Improving FMEA Comprehensibility via Common-Sense Knowledge Graph Completion Techniques. IEEE Access 2023, 11, 127974–127986.

14. Reklos, I.; Meroño-Peñuela, A. Medicause: Causal relation modelling and extraction from medical publications. In Proceedings of the 1st International Workshop on Knowledge Graph Generation From Text co-located with 19th Extended Semantic Conference (ESWC 2022), Hersonissos, Greece, 30 May 2022; Volume 3184, pp. 1–18.

15. Seol, J.W.; Jo, S.H.; Yi, W.; Choi, J.; Lee, K.S. A Problem-Action Relation Extraction Based on Causality Patterns of Clinical Events in Discharge Summaries. In Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management, Shanghai, China, 3–7 November 2014; pp. 1971–1974.

16. Negi, K.; Pavuri, A.; Patel, L.; Jain, C. A novel method for drug-adverse event extraction using machine learning. Inform. Med. Unlocked 2019, 17, 100190.

17. Aerts, W.; Zhang, S. Management’s causal reasoning on performance and earnings management. Eur. Manag. J. 2014, 32, 770–783.

18. Nam, K.; Seong, N. Financial news-based stock movement prediction using causality analysis of influence in the Korean stock market. Decis. Support Syst. 2019, 117, 100–112.

19. Kong, M.; Li, R.; Wang, J.; Li, X.; Jin, S.; Xie, W.; Hou, M.; Cao, C. Cftnet: A Robust Credit Card Fraud Detection Model Enhanced by Counterfactual Data Augmentation. Neural Comput. Appl. 2024, 36, 8607–8623.

20. Tang, Y.; Xiong, J.J.; Luo, Y.; Zhang, Y.C. How do the global stock markets Influence one another? Evidence from finance big data and granger causality directed network. Int. J. Electron. Commer. 2019, 23, 85–109.

21. Ravivanpong, P.; Riedel, T.; Stock, P. Towards Extracting Causal Graph Structures from TradeData and Smart Financial Portfolio Risk Management. In Proceedings of the EDBT/ICDT Workshops, Edinburgh, UK, 29 March 2022.

22. Mariko, D.; Abi Akl, H.; Trottier, K.; El-Haj, M. The financial causality extraction shared task (FinCausal 2022). In Proceedings of the 4th Financial Narrative Processing Workshop@ LREC2022, Marseille, France, 24 June 2022; pp. 105–107.

23. Safont-Andreu, A.; Burmer, C.; Schekotihin, K. Using Ontologies in Failure Analysis. In Proceedings of the ISTFA 2021 ASM International, Phoenix, AZ, USA, 31 October–4 November 2021; pp. 23–28.

24. Yuan, X.; Chen, K.; Zuo, W.; Zhang, Y. TC-GAT: Graph Attention Network for Temporal Causality Discovery. arXiv 2023, arXiv:2304.10706.

25. Glymour, C.; Zhang, K.; Spirtes, P. Review of causal discovery methods based on graphical models. Front. Genet. 2019, 10, 524.

26. Wu, J.L.; Yu, L.C.; Chang, P.C. Detecting causality from online psychiatric texts using inter-sentential language patterns. BMC Med. Inform. Decis. Mak. 2012, 12, 1–10.

27. Akkasi, A.; Moens, M.F. Causal relationship extraction from biomedical text using deep neural models: A comprehensive survey. J. Biomed. Inform. 2021, 119, 103820.

28. Dunietz, J.; Levin, L.; Carbonell, J. Annotating Causal Language Using Corpus Lexicography of Constructions. In Proceedings of the 9th Linguistic Annotation Workshop, Denver, CO, USA, 5 June 2015; pp. 188–196.

29. Dunietz, J.; Levin, L.; Carbonell, J. The BECauSE Corpus 2.0: Annotating Causality and Overlapping Relations. In Proceedings of the 11th Linguistic Annotation Workshop, Valencia, Spain, 3 April 2017; pp. 95–104.

30. Mariko, D.; Labidurie, E.; Ozturk, Y.; Akl, H.A.; de Mazancourt, H. Data Processing and Annotation Schemes for FinCausal Shared Task. arXiv 2020, arXiv:2012.02498.

31. Liu, X.L.; Salhofer, E.; Andreu, A.S.; Kern, R. S2ORC-SemiCause: Annotating and Analysing Causality in the Semiconductor Domain; River Publishers: Aalborg, Denmark, 2022.

32. Khetan, V.; Ramnani, R.; Anand, M.; Sengupta, S.; Fano, A.E. Causal BERT: Language models for causality detection between events expressed in text. arXiv 2020, arXiv:2012.05453.

33. Lee, J.; Pham, L.H.; Uzuner, O. Mnlp at fincausal2022: Nested ner with a generative model. In Proceedings of the 4th Financial Narrative Processing Workshop@ LREC2022, Marseille, France, 24 June 2022; pp. 135–138.

34. Khetan, V.; Rizvi, M.I.H.; Huber, J.; Bartusiak, P.; Sacaleanu, B.; Fano, A. MIMICause: Representation and automatic extraction of causal relation types from clinical notes. arXiv 2021, arXiv:2110.07090.

35. Levine, Y.; Lenz, B.; Lieber, O.; Abend, O.; Leyton-Brown, K.; Tennenholtz, M.; Shoham, Y. Pmi-masking: Principled masking of correlated spans. arXiv 2020, arXiv:2010.01825.

36. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

37. Walker, N.; Trewartha, A.; Huo, H.; Lee, S.; Cruse, K.; Dagdelen, J.; Dunn, A.; Persson, K.; Ceder, G.; Jain, A. The Impact of Domain-Specific Pre-Training on Named Entity Recognition Tasks in Materials Science. Patterns 2021, 3, 100488.

38. Tosone, D. Improving FMEA consistency in semiconductor manufacturing through text classification. Master’s Thesis, University of Udine, Alpen-Adria-Universität Klagenfurt, Klagenfurt, Austria, 2022.

39. Mindee. docTR: Document Text Recognition. 2021. Available online: https://github.com/mindee/doctr (accessed on 1 March 2023).

40. Stenetorp, P.; Pyysalo, S.; Topić, G.; Ohta, T.; Ananiadou, S.; Tsujii, J. brat: A Web-based Tool for NLP-Assisted Text Annotation. In Proceedings of the Demonstrations Session at EACL 2012, Avignon, France, 23–27 April 2012.

41. Niess, G.; Razouk, H.; Mandic, S.; Kern, R. Addressing Hallucination in Causal Q&A: The Efficacy of Fine-tuning over Prompting in LLMs. In Proceedings of the Joint Workshop of the 9th Financial Technology and Natural Language Processing (FinNLP), The 6th Financial Narrative Processing (FNP), and the 1st Workshop on Large Language Models for Finance and Legal (LLMFinLegal), Abu Dhabi, United Arab Emirates, 19–20 January 2025; pp. 253–258.

42. Riaz, M.; Girju, R. Another look at causality: Discovering scenario-specific contingency relationships with no supervision. In Proceedings of the 2010 IEEE Fourth International Conference on Semantic Computing, Pittsburgh, PA, USA, 22–24 September 2010; pp. 361–368.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).