Abstract

Eye movement detection technology holds significant potential across medicine, psychology, and human–computer interaction. However, traditional methods, which primarily track the pupil and cornea while the eye is open, are ineffective when the eye is closed. To address this limitation, we developed a novel system capable of real-time eye movement detection in the closed-eye state. Using a micro-camera based on the OV9734 image sensor, our system captures images of the eyelid during ocular movements, which we used to construct a dataset. We performed extensive experiments with multiple versions of the YOLO algorithm, including v5s, v8s, v9s, and v10s, and tested different sizes of the YOLO11 model (n < s < m < l < x) to identify the best-performing configuration. We selected YOLO11m, which achieved the highest AP0.5 score of 0.838. Our tracker achieved a mean distance error of 0.77 mm, with 90% of predicted eye positions having an error below 1.67 mm, and it runs in real time at 30 frames per second. This study introduces an innovative method for the real-time detection of eye movements during eye closure, extending the range of applications of eye-tracking technology.

1. Introduction

Eye movements not only reflect the process of visual perception but also carry rich physiological and pathological information [1,2]. Eye movement detection technology has evolved from early observation and mechanical recording methods to the various high-precision methods in use today [3]. Key studies on current technologies are summarized in Table 1. These methods, which include electro-oculography, video oculography, infrared oculography, scleral search coils, and magnetic resonance imaging, each have their own advantages and disadvantages.

Current research on eye movements focuses mainly on the open-eye state, examining indicators such as fixation, smooth pursuit, and saccades [4,5]. Recent studies have explored low-cost, non-invasive human–computer interaction strategies for developing augmentative and alternative communication solutions for individuals with Amyotrophic Lateral Sclerosis (ALS) [6]. For instance, Medeiros et al. proposed a real-time blink detection approach that requires only a generic webcam and computer, enabling ALS patients to communicate through intentional eye blink patterns [7].

Table 1.

Eye-tracking studies and methods in recent years.

| Reference | Method | Advantages | Disadvantages |
|---|---|---|---|
| [8,9,10] | Electro-Oculography | Non-invasive. Insensitive to light. Can be applied with eyes closed. | Low precision. Influenced by electrode position and physiological electrical signals. |
| [11,12,13,14] | Video Oculography (Visible-Light Camera) | Non-contact. Real-time. Inexpensive. | Sensitive to light. Influenced by eyelids and eyebrows. |
| [15,16,17] | Infrared Oculography (Infrared or Near-Infrared Light) | Non-invasive. Works effectively in low-light conditions. | Influenced by position, eyelids, and eyebrows. |
| [18,19,20,21] | Scleral Search Coil | High precision. Can be applied with eyes closed. Suitable for scientific research. | Invasive. Complex equipment. Costly. Not applicable to daily life. |
| [22,23,24] | Magnetic Resonance Imaging | Non-invasive. Observes both eye and brain activity. Suitable for medical research. | Expensive equipment. Subjects must remain stationary for long periods. Difficult to apply in practice. |

Detecting eye movements while the eyes are closed is also important, with promising applications in sleep medicine, neurosurgery, rehabilitation medicine, and other fields. For example, Suzuki et al. suggested that eye movements during eye closure closely reflect changes in arousal and that visual scores (VSs) of slow eye movements (SEMs) and rapid eye movements (REMs) provide useful information for clinical and basic research [25]. In skull base surgery, monitoring eye movements in the closed-eye state can give surgeons critical information on neurological function, helping them assess the state of the brainstem and cranial nerves during surgery and thereby reducing surgical risk [26,27,28]. Sakata et al. used piezoelectric sensors placed on the eyelids to detect small vibrations induced by eye movements for intraoperative monitoring of the nerves supplying the extraocular muscles, obtaining results superior to those of electro-oculography and electromyography [29]. For patients in a vegetative state, eye movements in the closed-eye state may become an important basis for assessing their level of consciousness and neurological recovery and may even provide potential avenues for communication [30,31].

Existing eye movement detection methods applicable to closed-eye conditions face several limitations: electro-oculography offers limited accuracy, scleral search coils are invasive, and magnetic resonance imaging requires expensive equipment. These constraints highlight the need for novel approaches combining high accuracy, non-invasiveness, and real-time performance. Recent advances in computer vision, particularly deep learning-based object detection algorithms, have emerged as promising solutions due to their non-contact nature and high precision. In this context, Khan et al. employed the YOLO model for eye and eye-state detection, demonstrating its superior processing speed compared to alternative object detection algorithms [32].

Consequently, in this paper, we propose a YOLO model-based eye movement detection system under closed-eye conditions. The main contributions of the article can be summarized as follows:

- We built an image acquisition system consisting of a micro-camera based on the OV9734 image sensor and dedicated acquisition software. The camera’s compact size makes it easy to embed in an eye-tracking system, enabling a miniaturized, portable device;

- We constructed a dataset of eye images with eyelid coverage. Careful annotation and a balanced partition into training, validation, and test sets support its usability and reliability in practical applications. This dataset can be used to train deep learning-based eye-tracking models in the future;

- We developed a real-time tracking system based on the YOLO model to detect eye movements with high precision and introduced strict tracking evaluation metrics for accuracy and reliability.

2. Theory

2.1. Features of the Eyelids with Eyes Closed

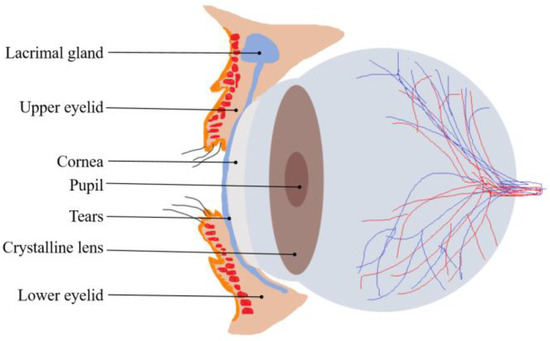

In the closed-eye state, the specific position of the eyeball cannot be localized directly by visual and conventional optical methods because the eyelid completely covers the eyeball, which poses a great challenge for eye movement tracking. However, the texture of the eyelid surface is rich in information that offers the possibility of indirectly localizing eye movements. The skin texture of the eyelid has unique features, including subtle folds, pore distribution, and tissue elasticity, which are affected by the cornea at the front of the eye and change accordingly during eye movements [33]. A sagittal cross-section of the eyeball and eyelid is shown in Figure 1. When the eye rotates, the anterior-most protruding cornea pushes against the eyelid, causing the texture of the eyelid to stretch or compress in localized areas. Such changes can be captured by high-precision image acquisition systems and then quantified using analytical techniques. Based on this principle, we can indirectly infer the trajectory and position of the eye by monitoring and analyzing the dynamic changes in the texture of the eyelid surface in real time. This approach not only avoids direct contact with the eye and the use of complex imaging equipment but also enables high-precision eye movement tracking under non-invasive conditions.

Figure 1.

The sagittal cross-section of the eyeball and eyelid.

The eyelid texture changes systematically with eye position, which makes it possible to further improve the accuracy of eye localization using deep learning algorithms. Deep learning algorithms can automatically extract features from large amounts of data and learn complex nonlinear relationships [34], which gives them a significant advantage in learning the mapping between eyelid texture and eye movements. Based on this principle, we framed eye localization as a target detection task: we trained a model on a large number of eyelid images labeled with eyeball positions so that it can predict the eyeball position from the eyelid texture.

2.2. YOLOv11 Overview

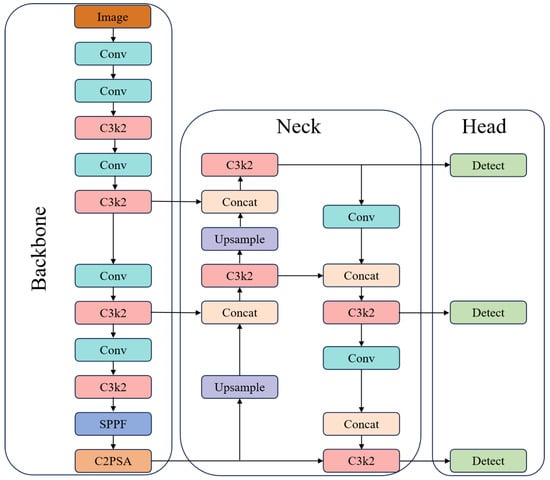

YOLO is a Convolutional Neural Network (CNN)-based target detection algorithm. YOLOv11 was unveiled at the YOLO Vision 2024 (YV24) conference and represents a significant advancement in real-time target detection technology. This new version offers substantial improvements in both architecture and training methodology, leading to enhancements in accuracy, speed, and efficiency [35]. YOLOv11’s network architecture is shown in Figure 2.

Figure 2.

YOLOv11’s network architecture.

YOLOv11 consists of three key components: the backbone, the neck, and the head. The first main component of YOLOv11 is the backbone, which is responsible for extracting key features at different scales from the input image. This component consists of multiple convolutional (Conv) blocks, C3K2 blocks, the Spatial Pyramid Pooling Fast (SPPF), and the Cross-Stage Partial with Spatial Attention (C2PSA). The neck consists of multiple Conv layers, C3K2 blocks, concat operations, and upsample blocks. The primary role of the neck is to aggregate features at different scales and pass them to the head blocks. The head is a crucial module responsible for generating predictions. It determines the object class, calculates the objectness score, and accurately predicts the bounding boxes for identified objects [36].

YOLOv11’s improved feature extraction enables it to recognize a wider range of patterns and complex elements in images, which helps it detect fine details even in challenging scenes while remaining fast enough for real-time target detection.

2.3. Evaluation Indicators

To quantitatively evaluate the performance of the models, we used the following metrics: precision (P), recall (R), F1 score, intersection over union (IoU), confidence score, and average precision (AP) [37].

IoU measures the degree of overlap between the predicted bounding box and the ground truth bounding box. It is calculated as shown in Equation (1):

$$\mathrm{IoU} = \frac{\operatorname{area}(A_p \cap A_g)}{\operatorname{area}(A_p \cup A_g)} \tag{1}$$

where $A_p$ is the region covered by the predicted bounding box, $A_g$ is the region covered by the ground truth bounding box, $\cap$ denotes intersection, and $\cup$ denotes union.

In object detection, a predicted bounding box is considered a true positive (TP) if its IoU with a ground truth box exceeds a set threshold (commonly 0.5) and the class prediction matches the ground truth class. A predicted box is deemed a false positive (FP) if it does not achieve the required IoU with any ground truth box or does so with an incorrect class. Conversely, a ground truth box is classified as a false negative (FN) if no predicted boxes overlap sufficiently with it, indicating a missed detection.
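The IoU computation and the TP/FP/FN rules described above can be illustrated with a short Python sketch. It assumes a single class, boxes given as `(x1, y1, x2, y2)` pixel corners, and greedy matching of predictions in descending confidence order; `iou` and `match_detections` are hypothetical helper names, and evaluation libraries (including Ultralytics) use their own matching code, which may differ in details such as tie handling.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) pixel corners


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(
    preds: Sequence[Tuple[Box, float]], gts: Sequence[Box], iou_thr: float = 0.5
) -> Tuple[int, int, int]:
    """Greedy single-class matching; returns (TP, FP, FN).

    Predictions are visited in descending confidence, and each ground truth
    box can be claimed by at most one prediction.
    """
    matched: List[bool] = [False] * len(gts)
    tp = fp = 0
    for box, _conf in sorted(preds, key=lambda p: p[1], reverse=True):
        # Find the best still-unmatched ground truth box for this prediction.
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(gts):
            if not matched[j]:
                overlap = iou(box, gt)
                if overlap > best_iou:
                    best_j, best_iou = j, overlap
        if best_j >= 0 and best_iou >= iou_thr:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1  # insufficient overlap, or the match was already taken
    fn = matched.count(False)  # ground truth boxes no prediction claimed
    return tp, fp, fn
```

A duplicate prediction on an already matched eye is counted as a false positive, which is the usual behavior of greedy matching.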

Precision (P) is the proportion of true positives among all bounding boxes that the model predicts as positive. It is calculated as shown in Equation (2):

$$P = \frac{TP}{TP + FP} \tag{2}$$

Recall (R) is the ratio of true positive predictions to the total number of ground truth objects. It is calculated as shown in Equation (3):

$$R = \frac{TP}{TP + FN} \tag{3}$$

The F1 score is the harmonic mean of precision and recall. It balances the two and is particularly useful when samples are imbalanced. It is calculated as shown in Equation (4):

$$F1 = \frac{2 \times P \times R}{P + R} \tag{4}$$
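Given the TP, FP, and FN counts, precision, recall, and F1 follow directly. The helper below is a hypothetical sketch; returning 0.0 when a ratio is undefined (for example, when there are no predictions at all) is our own convention, not one stated in the text.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from detection counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predictions that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of ground truth objects found
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For instance, made-up counts of 9 TP, 1 FP, and 3 FN give P = 0.9, R = 0.75, and F1 ≈ 0.818; these are illustrative numbers, not results from this study.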

The confidence score indicates how confident the model is that the predicted bounding box contains the target object. Detections with low confidence are filtered out to reduce the false detection rate.

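Average precision (AP) summarizes the precision–recall curve as the area under it. In practice, it is computed by accumulating precision over the recall levels at which recall changes, as shown in Equation (5):

$$AP = \int_0^1 P(R)\,\mathrm{d}R \approx \sum_{k=1}^{n} \left(R_k - R_{k-1}\right) P(R_k) \tag{5}$$

where $R_k$ is the $k$-th distinct recall level and $R_0 = 0$.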

AP depends on the IoU threshold used to decide whether a detection is a true positive. AP0.5 denotes the AP obtained with an IoU threshold of 0.5.
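To make the AP definition concrete, the sketch below computes single-class AP from detections that have already been sorted by confidence and labeled TP or FP at a fixed IoU threshold (for AP0.5, the matching threshold is 0.5). It uses the all-point interpolation familiar from PASCAL VOC-style evaluation. This is an assumption, since the text does not state which interpolation was used; some libraries instead sample the precision–recall curve at a fixed set of recall points, which can shift the value slightly. The function name is hypothetical.

```python
from typing import List, Sequence


def average_precision(is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for a single class.

    is_tp:  TP/FP flag of each detection, already sorted by descending
            confidence and matched at a fixed IoU threshold.
    num_gt: total number of ground truth boxes.
    """
    if num_gt <= 0:
        return 0.0
    tp = fp = 0
    recall: List[float] = []
    precision: List[float] = []
    for flag in is_tp:
        tp += int(flag)
        fp += int(not flag)
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))
    # Pad so the curve starts at recall 0 and ends at recall 1.
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    # Precision envelope: best precision achievable at this recall or higher.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes (discrete form of the integral).
    return sum(
        (mrec[i + 1] - mrec[i]) * mpre[i + 1]
        for i in range(len(mrec) - 1)
        if mrec[i + 1] != mrec[i]
    )
```

With a single class, as in this study, mAP equals this AP.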

The mean average precision (mAP) is the mean of the AP values over all classes, as shown in Equation (6):

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{6}$$

where $N$ is the total number of object classes and $AP_i$ is the AP of class $i$. Because our study detects a single class (the eye), $N = 1$, and mAP equals AP in our experiments.

These metrics assess the performance of the model from different perspectives. In eye detection, the trained YOLO model quickly and indirectly predicts the location of the eyeball from the eyelid texture image and outputs a bounding box with a confidence score. With appropriate confidence and IoU thresholds, we obtain a bounding box and use its center as the eye position; connecting these positions over time yields the motion trajectory and completes the eye tracking. From the eye position coordinates in consecutive frames, we calculate three key parameters of eye movement: displacement, velocity vector, and movement direction. As tracking evaluation metrics, we used resolution, frame rate, and mean displacement error.
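The step from bounding boxes to motion parameters can be sketched as follows. The sketch assumes one detection per frame, consecutive frames with no gaps, boxes in `(x1, y1, x2, y2)` pixel coordinates, and the 30 fps acquisition rate; converting pixels to millimetres requires a scale factor, which is left out here. `box_center` and `motion_parameters` are hypothetical names.

```python
import math
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_center(box: Box) -> Tuple[float, float]:
    """Eye position = centre of the detected bounding box."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2.0, (y1 + y2) / 2.0


def motion_parameters(boxes: Sequence[Box], fps: float = 30.0) -> List[Dict[str, object]]:
    """Displacement (px), velocity vector (px/s) and direction (deg) per frame step."""
    centers = [box_center(b) for b in boxes]
    steps: List[Dict[str, object]] = []
    for (x0, y0), (x1, y1) in zip(centers, centers[1:]):
        dx, dy = x1 - x0, y1 - y0
        steps.append({
            "displacement": math.hypot(dx, dy),
            # Multiplying by fps is dividing by the frame interval 1/fps.
            "velocity": (dx * fps, dy * fps),
            # Image y grows downwards, so -dy makes 90 deg point "up" in the image.
            "direction_deg": math.degrees(math.atan2(-dy, dx)) % 360.0,
        })
    return steps
```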

3. Experiments and Results

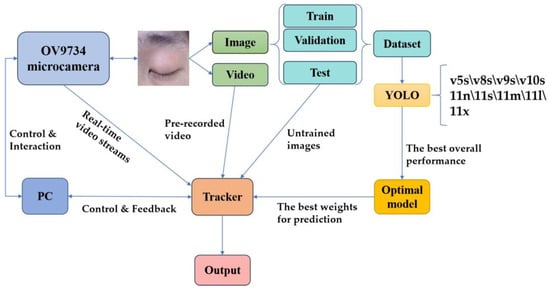

This section describes the design of the acquisition system, dataset creation, model training, eye tracker design, and the associated experimental results. The overall experimental framework is shown in Figure 3.

Figure 3.

The experimental framework.

3.1. Acquisition System

The choice and setup of the camera are important considerations, whether the camera is used to acquire images for the dataset or to feed a real-time eye tracker. In this study, we used a small camera equipped with an OV9734 image sensor and developed dedicated acquisition software, which together form a complete image acquisition system.

The OV9734 is a high-performance image sensor renowned for its low power consumption, high sensitivity, and compact form factor. With a resolution of up to 1280 × 720 (720p), it is particularly well-suited for application scenarios such as eye tracking, where stringent demands are imposed on both hardware size and performance. The sensor’s high sensitivity and low-noise characteristics enable the capture of clear, high-quality images even under challenging lighting conditions, significantly enhancing the reliability and robustness of the dataset. Furthermore, the OV9734 features an exceptionally small footprint, measuring just 1/4 inch (approximately 3.6 mm × 2.7 mm). This compact design facilitates seamless integration into eye-tracking systems, enabling the development of miniaturized and portable devices without compromising performance.

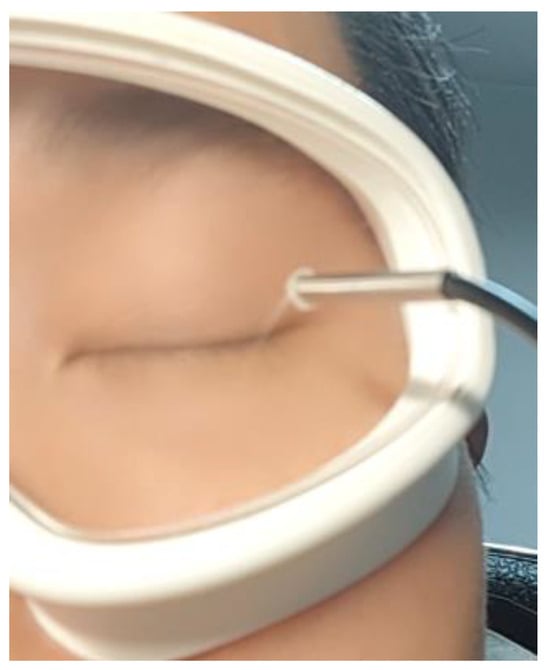

As shown in Figure 4, the camera was mounted on an eye mask-like device positioned 15–30 mm from the eye. This design allows the device to be worn comfortably on the subject’s head while keeping the camera in a stable, consistent position relative to the eye.

Figure 4.

Placement of the camera.

The acquisition software was developed by utilizing the camera’s application programming interface. We configured the camera to operate at a resolution of 640 × 480 and a frame rate of 30 fps, balancing image clarity with the ability to smoothly capture dynamic changes in eyelid texture. To accommodate diverse application scenarios, the acquisition software saves images in a lossless compressed PNG format, preserving fine details and ensuring the construction of a high-quality dataset. Additionally, the captured video was stored in an AVI format, enabling the recording of dynamic information across consecutive frames. This provides a robust data foundation for subsequent analysis of eye movement trajectories.

3.2. Dataset

To comprehensively capture the characteristics of eye movements under varying lighting conditions, we conducted data collection across multiple lighting environments, including natural light, indoor lighting, and other illumination scenarios. This approach ensures the dataset’s diversity and robustness, enabling it to effectively represent a wide range of real-world conditions.

We recruited five healthy volunteers with typical variations in ocular characteristics, including single/double eyelids, eyelash length, and eyelid thickness. Prior to formal experiments, each subject underwent a brief ocular examination to confirm the absence of conditions that might affect data acquisition. We implemented a standardized protocol: subjects first closed their eyes for 30 s to acclimatize to the experimental environment, then performed three eye movement tasks with adequate rest intervals to prevent fatigue. Real-time camera monitoring ensured that captured eye trajectories matched expected patterns.

The design of the eye-tracking tasks follows a progressive structure, evolving from simple to complex tasks to comprehensively capture eye movement characteristics. The basic task involves standard horizontal and vertical movements, establishing a foundation for analyzing fundamental eye movement patterns. Building upon this, the advanced tasks incorporate diagonal movements (top-left to bottom-right and top-right to bottom-left trajectories), as well as circular movements (clockwise and counterclockwise directions), which better reflect the dynamic nature of eye movements. Finally, the random trajectory task requires subjects to move their eyes unpredictably with their eyes closed, generating eye movement patterns that more closely resemble those observed in natural viewing conditions, thereby enhancing the ecological validity of the study.

Each task included 10 repetition cycles to capture subtle movement variations. An image acquisition module recorded the entire process in an AVI format. Raw videos underwent frame extraction and keyframe selection, removing blurred and invalid frames to yield over 1,400 high-quality images of eyelid-covered eye movements.

These images not only recorded the subtle changes in eyelid texture during eye movements but also encompassed the individual differences among the subjects, providing a comprehensive dataset for subsequent model training and validation.

After the initial organization of the captured image data, each image was annotated using the LabelImg tool, an open-source image annotation tool that supports multiple annotation formats, including the YOLO format. We labeled the bounding box of the eye as the target region and saved the annotation information as a txt file in the YOLO format. The YOLO format labeling file includes the target category number, as well as the normalized center coordinates, width, and height of the bounding box, ensuring compatibility with model training requirements. The filename of each txt file corresponds one-to-one with the corresponding image filename, guaranteeing an unambiguous match between the annotation information and the image.
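The YOLO label format described above (class index, then normalized center coordinates, width, and height) can be illustrated with a small round-trip conversion. LabelImg writes these files itself, so this is only a sketch of the format; it assumes class index 0 for the single class "eye" and the 640 × 480 frames configured in the acquisition software. The function names are hypothetical.

```python
from pathlib import Path
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def to_yolo_line(class_id: int, box: Box, img_w: int, img_h: int) -> str:
    """Pixel corner box -> 'class x_center y_center width height' (normalized)."""
    x1, y1, x2, y2 = box
    xc = (x1 + x2) / 2.0 / img_w
    yc = (y1 + y2) / 2.0 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line: str, img_w: int, img_h: int) -> Tuple[int, Box]:
    """Inverse of to_yolo_line: recover the class id and pixel corner box."""
    cls, xc, yc, w, h = line.split()
    cx, bw = float(xc) * img_w, float(w) * img_w
    cy, bh = float(yc) * img_h, float(h) * img_h
    return int(cls), (cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2)


def label_path_for(image_path: str) -> Path:
    """One label file per image, sharing the image's file stem."""
    return Path(image_path).with_suffix(".txt")
```

A box covering the central half of a 640 × 480 frame, for example, becomes the line `0 0.500000 0.500000 0.500000 0.500000`.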

We then partitioned the dataset following common machine learning practice to support model training, validation, and testing: 80% of the data was allocated to the training set, 10% to the validation set, and the remaining 10% to the test set. During the split, we ensured that data from each subject was evenly distributed across the training, validation, and test sets to prevent model bias due to uneven data distribution. Likewise, images captured under different lighting conditions were distributed uniformly across the subsets so that the model performs robustly across illumination scenarios.
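A split that keeps every subject and lighting condition represented in each subset can be sketched as a stratified 80/10/10 partition. This is an illustration under assumptions: each image is tagged with a subject ID and a lighting label, the split is done independently within each subject × lighting group with a fixed random seed, and any rounding remainder goes to the test set. `stratified_split` is a hypothetical name, not the authors’ code.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(
    samples: Sequence[Tuple[str, str, str]],
    train_frac: float = 0.8,
    val_frac: float = 0.1,
    seed: int = 0,
) -> Dict[str, List[str]]:
    """Split (image_path, subject_id, lighting) samples within each
    subject x lighting group; whatever is left over goes to the test set."""
    groups: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for path, subject, lighting in samples:
        groups[(subject, lighting)].append(path)
    rng = random.Random(seed)  # fixed seed -> reproducible split
    split: Dict[str, List[str]] = {"train": [], "val": [], "test": []}
    for key in sorted(groups):
        paths = sorted(groups[key])
        rng.shuffle(paths)
        n_train = round(len(paths) * train_frac)
        n_val = round(len(paths) * val_frac)
        split["train"] += paths[:n_train]
        split["val"] += paths[n_train:n_train + n_val]
        split["test"] += paths[n_train + n_val:]
    return split
```

Because consecutive frames from the same recording can be nearly identical, a frame-level random split may place near-duplicates in both the training and test sets; grouping frames by recording segment before splitting is a stricter alternative.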

3.3. Model Comparison

We began by creating the dataset’s configuration file (data.yaml), which specifies essential information such as the dataset’s path, category names, and the number of categories. This ensures that the model can correctly load and process the data.

The experiments were conducted using the PyTorch 1.8 framework, Python 3.8, the Ultralytics library, and the Ubuntu 20.04 operating system, with CUDA 11.1 for GPU acceleration. The hardware setup included an Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40 GHz processor and a 24 GB NVIDIA GeForce RTX 3090 GPU (NVIDIA, Santa Clara, CA, USA). Training and validation images were resized to 640 × 640, with a batch size of 32. Models were trained for 300 epochs so that they could fully learn the dataset features.

To determine the optimal model, we conducted experiments with multiple versions of YOLO (v5, v8, v9, and v10) and evaluated different sizes of the YOLO11 model (YOLO11n, YOLO11s, YOLO11m, YOLO11l, and YOLO11x, where model size follows n < s < m < l < x). Using the training and validation sets, we trained each model and obtained its best weights for eye images. These weights were then used to evaluate each model on the test set.
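The configuration and training steps described in this section might look as follows with the Ultralytics Python API. The dataset paths in `DATA_YAML` are hypothetical placeholders, the weight names follow Ultralytics’ naming for the pre-trained YOLO11 models, and the hyperparameters (640 × 640 input, batch size 32, 300 epochs) and the 0.5 confidence and IoU thresholds are taken from the text. This is a sketch, not the authors’ training script.

```python
from typing import Any, Dict

# Hypothetical dataset layout: adjust `path` and the split folders to the real ones.
DATA_YAML = """\
path: datasets/closed_eye
train: images/train
val: images/val
test: images/test
nc: 1
names:
  0: eye
"""

# Hyperparameters stated in the text.
TRAIN_ARGS: Dict[str, Any] = {"imgsz": 640, "batch": 32, "epochs": 300}

# YOLO11 sizes compared in the text (pre-trained weight names as published by Ultralytics).
CANDIDATES = ["yolo11n.pt", "yolo11s.pt", "yolo11m.pt", "yolo11l.pt", "yolo11x.pt"]


def train_and_test(weights: str, data_yaml: str = "data.yaml") -> Any:
    """Train one candidate, then evaluate its best checkpoint on the test split."""
    from ultralytics import YOLO  # lazy import: the constants above work without it

    model = YOLO(weights)
    model.train(data=data_yaml, **TRAIN_ARGS)
    best = YOLO(str(model.trainer.best))  # best.pt saved during training
    # Confidence and IoU thresholds of 0.5, as used for the test-set comparison.
    return best.val(data=data_yaml, split="test", conf=0.5, iou=0.5)
```

After saving `DATA_YAML` as `data.yaml` (for example with `pathlib.Path("data.yaml").write_text(DATA_YAML)`), looping `train_and_test(w)` over `CANDIDATES` would train and score each YOLO11 size in turn; the other YOLO versions could be appended to the list in the same way.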

Table 2 summarizes the performance of each trained model on the test set, including precision, recall, F1 score, average precision, and inference time. Both the confidence score threshold and the IoU threshold were set to 0.5 for all tests. Among the different YOLO versions at the same model size, YOLO11 achieved the highest AP0.5 score, and within the YOLO11 series, YOLO11m achieved the best AP0.5. YOLO11m also has an inference time of 18.7 ms, which is short enough for real-time processing of the 30 frames per second captured by our acquisition system.
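The real-time claim can be checked with a short frame-budget calculation that uses only the two numbers given in the text (30 fps capture and 18.7 ms inference). It ignores capture, pre-processing, and drawing overhead, which would consume part of the remaining margin.

$$
\Delta t_{\text{frame}} = \frac{1000\ \text{ms}}{30} \approx 33.3\ \text{ms}, \qquad
\Delta t_{\text{frame}} - t_{\text{inf}} \approx 33.3\ \text{ms} - 18.7\ \text{ms} = 14.6\ \text{ms}
$$

Inference alone therefore occupies roughly 56% of each frame interval.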

Table 2.

Performance evaluation of different versions of YOLO (v5/v8/v9/v10) and various pre-trained weights for YOLOv11.

3.4. Eye Movement Tracker

We loaded the weights file with the best overall performance on the test set (YOLO11m_best.pt) and deployed it in an inference environment to process both pre-recorded and real-time video streams.

3.4.1. Simulation Testbed

We fed the live video stream from the camera acquisition system into the tracker for analysis. The real-time video stream is processed as follows:

- Frame Extraction: Images were extracted from the video stream frame by frame and resized to the model’s required input size (640 × 640 pixels);

- Target Detection and Tracking: The YOLO model was applied to each frame to detect the target position, and the tracker associated the detection results across consecutive frames;

- Trajectory Calculation: The target movement trajectory was calculated based on changes in the target position across consecutive frames. The trajectory was represented as a series of coordinate points and could be further converted into motion parameters such as distance, speed, and acceleration;

- Result Display: The detection box, tracking trajectory, and motion parameters were overlaid on the video in real time, allowing users to observe and analyze the results.
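The four steps above can be sketched as a small loop. This is a hedged illustration, not the authors’ tracker: the detector is passed in as a function so the loop can be tested without a camera or model, and because only one eye is tracked, association is simplified to keeping the most confident box above the threshold (several targets would need a real multi-object tracker). `run_tracker`, `ultralytics_detector`, and `on_update` are hypothetical names; the adapter relies on the Ultralytics `Results.boxes.xyxy` and `Results.boxes.conf` attributes.

```python
from typing import Any, Callable, Iterable, List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = Tuple[Box, float]            # (box, confidence)
Point = Tuple[float, float]


def run_tracker(
    frames: Iterable[Any],
    detect: Callable[[Any], Sequence[Detection]],
    conf_thr: float = 0.5,
    on_update: Optional[Callable[[int, Point, float], None]] = None,
) -> List[Optional[Point]]:
    """Return one eye position (box centre) per frame; None marks missed frames."""
    trajectory: List[Optional[Point]] = []
    for idx, frame in enumerate(frames):  # 1) frame extraction (any frame iterator)
        # 2) detection, discarding boxes below the confidence threshold
        dets = [d for d in detect(frame) if d[1] >= conf_thr]
        if not dets:
            trajectory.append(None)
            continue
        # Single target: association reduces to keeping the most confident box.
        (x1, y1, x2, y2), conf = max(dets, key=lambda d: d[1])
        center = ((x1 + x2) / 2.0, (y1 + y2) / 2.0)
        trajectory.append(center)  # 3) trajectory update
        if on_update is not None:  # 4) hook for drawing or logging results
            on_update(idx, center, conf)
    return trajectory


def ultralytics_detector(model: Any, iou_thr: float = 0.5) -> Callable[[Any], List[Detection]]:
    """Wrap an Ultralytics YOLO model so it matches the `detect` signature above."""
    def detect(frame: Any) -> List[Detection]:
        result = model(frame, iou=iou_thr, verbose=False)[0]
        boxes = result.boxes.xyxy.tolist()
        confs = result.boxes.conf.tolist()
        return [(tuple(b), float(c)) for b, c in zip(boxes, confs)]
    return detect
```

For live use, `frames` could be a generator over OpenCV’s `cv2.VideoCapture.read()` and `detect` could be `ultralytics_detector(YOLO("YOLO11m_best.pt"))`; both are assumptions about the deployment rather than details given in the text.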

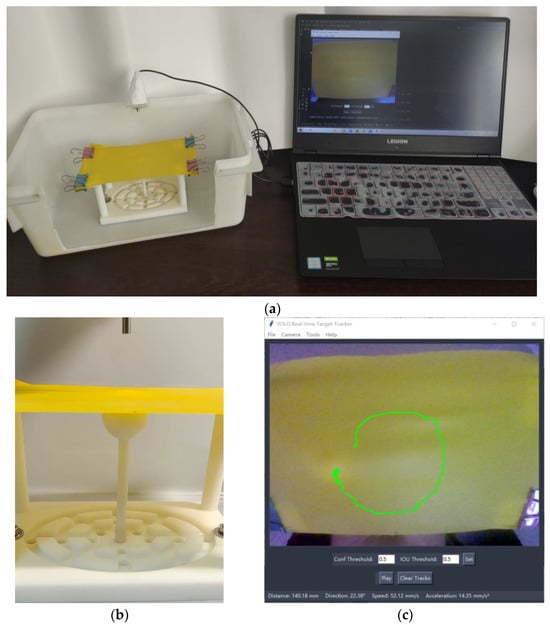

The experiments were conducted using a physical model designed to simulate eye movements, as illustrated in Figure 5a. The camera, positioned above the physical model, captures images in which 640 pixels correspond to a 100 mm span on the plane. The displacement and velocity of the ball are derived from the pixel coordinates of its trajectory. Figure 5c shows the tracker’s software interface during target tracking.

Figure 5.

Physical simulation model and software interface for trackers. (a) Eye movement simulation system, including computer, camera, and physical models. (b) The 3D printed platform for eye movement simulation, in which the white moving ball represents the eye and the yellow elastic film represents the eyelid. (c) Tracker software interface. Confidence and IoU thresholds can be adjusted for better tracking, and the information bar at the bottom of the interface displays information about the movement of the tracked target.
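The pixel-to-millimetre conversion used on the simulation platform follows directly from the stated scale of 640 pixels per 100 mm. The 32-pixel displacement below is a hypothetical value chosen for illustration, and the velocity step assumes that the displacement occurs between two consecutive frames at 30 fps.

$$
s = \frac{100\ \text{mm}}{640\ \text{px}} = 0.15625\ \text{mm/px}, \qquad
d = 32\ \text{px} \times 0.15625\ \text{mm/px} = 5\ \text{mm}, \qquad
v = \frac{d}{\Delta t} = \frac{5\ \text{mm}}{1/30\ \text{s}} = 150\ \text{mm/s}
$$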

Note that the weights used to test the simulation model were trained on images of the simulated ball rather than on eye images, because this simulation served only to validate the integrity of the tracker’s hardware and software. The subsequent experiments on pre-recorded and real-time eye video streams used the optimal model weights trained on actual eye images.

The results on the simulation platform show that our tracker achieves robust real-time target tracking, with the acquisition system, target detection module, and trajectory measurement unit working together correctly.

3.4.2. Actual Eye Movement Tracking

The processing flow for actual eye movement tracking closely mirrors that of the physical simulation model and consists of the same four stages: frame extraction, target detection and tracking, trajectory calculation, and result display. To reduce noise and jitter, we additionally applied smoothing to the eye movement trajectory.
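The text does not specify which smoothing algorithm was used. As one possibility, an exponential moving average (EMA) over the box centers is sketched below; the choice of EMA, the default `alpha`, and the function name `ema_smooth` are all assumptions. Frames without a detection are kept as gaps rather than filled in.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def ema_smooth(points: Sequence[Optional[Point]], alpha: float = 0.5) -> List[Optional[Point]]:
    """Exponential moving average of a trajectory; None entries are missed frames."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[Optional[Point]] = []
    state: Optional[Point] = None
    for p in points:
        if p is None:
            smoothed.append(None)  # keep the gap; the filter state carries over
            continue
        if state is None:
            state = p  # initialise with the first detection
        else:
            # Blend the new detection with the previous smoothed position.
            state = (alpha * p[0] + (1 - alpha) * state[0],
                     alpha * p[1] + (1 - alpha) * state[1])
        smoothed.append(state)
    return smoothed
```

Smaller `alpha` values suppress more jitter but make the trajectory lag behind fast eye movements, so the value would need tuning against the 30 fps stream.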

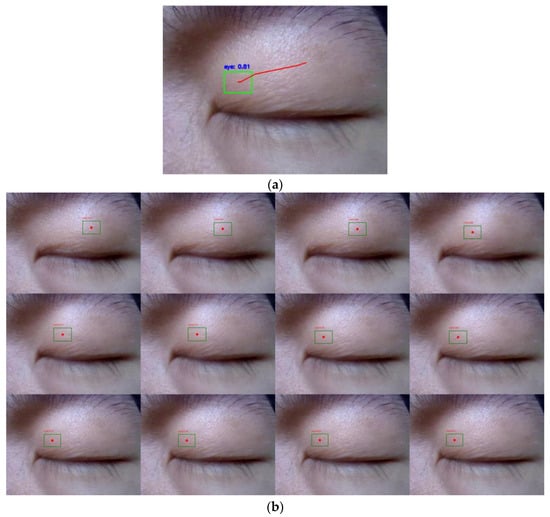

We processed the pre-recorded eye videos with the tracker to analyze eye movement trajectories. Figure 6 presents one example of the predicted trajectories: the selected video segment spans approximately 400 ms, corresponding to 12 frames at 30 fps, and shows smooth trajectories with high confidence levels.

Figure 6.

Eye movement trajectories in a pre-recorded video. (a) The trajectories were drawn at the end of 400 ms. (b) Target detection was independently conducted for each of the 12 frames.

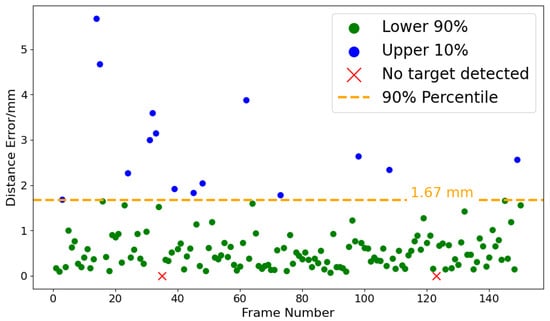

We evaluated the tracker on a 5 s video (150 frames) in which every frame was labeled with a ground truth bounding box. For each frame, we compared the predicted bounding box with the ground truth and calculated the pixel distance between the centers of the two boxes. The photographed eye region is 50 mm wide and spans 640 pixels in the image, so each pixel corresponds to 50/640 ≈ 0.078 mm; we used this scale to convert pixel distances into distance errors. As shown in Figure 7, the target was not detected in 2 of the 150 frames, and the remaining 148 frames had a mean distance error of 0.77 mm. Additionally, 90% of the distance errors were below 1.67 mm, and the maximum distance error did not exceed 6 mm.

Figure 7.

Distance error of the eye movement tracker.
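The distance-error evaluation can be sketched as below. It converts center-to-center pixel distances to millimetres with the 50 mm / 640 px scale stated above, skips frames in which nothing was detected (as with the 2 missed frames of the 150), and reports the mean, a 90% quantile, and the maximum. The nearest-rank definition of the quantile is an assumption, since the text does not say how the 90% value was obtained; the function names are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]

MM_PER_PX = 50.0 / 640.0  # 50 mm imaged across 640 px -> 0.078125 mm per pixel


def distance_errors_mm(pred: Sequence[Optional[Point]], gt: Sequence[Point]) -> List[float]:
    """Centre-to-centre error per frame in mm; frames without a prediction are skipped."""
    return [math.dist(p, g) * MM_PER_PX for p, g in zip(pred, gt) if p is not None]


def summarize(errors: Sequence[float], q: float = 0.9) -> Dict[str, float]:
    """Mean, nearest-rank q-quantile and maximum of the per-frame errors."""
    if not errors:
        raise ValueError("no detected frames to evaluate")
    s = sorted(errors)
    k = max(0, math.ceil(q * len(s)) - 1)  # nearest-rank index of the q-quantile
    return {"n": len(s), "mean": sum(s) / len(s), "quantile": s[k], "max": s[-1]}
```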

We further applied the tracker to real-time eye videos for trajectory prediction. The tracker ran smoothly at the camera acquisition rate of 30 frames per second. The results show that the tracker performs well in eye movement detection, generating smooth trajectories that capture eye movement characteristics in various directions. In tests with horizontal, vertical, and oblique eye movements, the tracker not only identified the direction of eye movement but also computed the corresponding movement distance and velocity in real time.

To objectively evaluate the tracker’s performance, we recruited an independent healthy adult subject (a 23-year-old male) whose eyelid data were not included in the dataset. We carefully installed the acquisition system, maintaining a consistent 20–30 mm distance between the camera and the center of the subject’s eyelid throughout testing. To minimize potential interference from head movements, we employed a chin rest stabilization system to reduce motion artifacts that might compromise data quality.

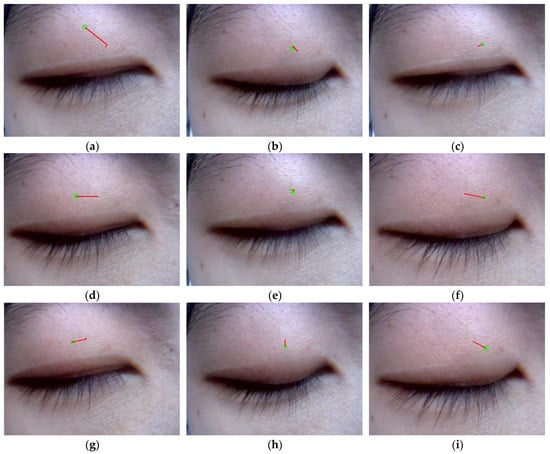

During testing, the subject performed precisely controlled eye movements with closed eyelids, sequentially executing eight primary directions: horizontal (left/right), vertical (up/down), and four diagonal directions (upper left, upper right, lower left, and lower right). Each directional movement was systematically repeated ten times to establish reliable performance metrics. The tracking system operated at 30 frames per second, capturing and displaying real-time motion trajectories through a visualization window that presented a continuous image sequence clearly depicting the subject’s eye movement patterns.

Each graph in Figure 8 shows the subject’s ocular trajectory over five consecutive frames, where green circles denote the final-frame positions and red lines indicate the movement trajectories from frame 1 to frame 5. The shorter displacement vectors in Figure 8b,h reflect the physiologically smaller vertical range of ocular motion. In Figure 8c, the model tracks less precisely when the right eye moves toward the upper left. We attribute this limitation to two factors: (1) the upper-left position is close to the frontal bone, which physically restricts ocular mobility, and (2) eyelid features are less visible in this quadrant. In the other directions, the system tracked ocular movements robustly. Overall, the trajectories detected by the tracker matched the subject’s eye movement patterns.

Figure 8.

The monocular movement trajectory of the right eye in nine cardinal positions: (a) upward and rightward movement, (b) supraduction, (c) upward and leftward movement, (d) dextroduction, (e) primary position, (f) levoduction, (g) downward and rightward movement, (h) infraduction, and (i) downward and leftward movement.
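Labeling the net movement over a short window (for example, the five frames shown in each panel) with one of the eight directions can be sketched as follows. The labels are in image coordinates (x to the right, y downwards); whether image-left corresponds to the subject’s left or right depends on how the camera image is oriented, so mapping these labels to terms such as dextroduction is left as an assumption. The 2-pixel dead zone for the "primary" label and the function name are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Eight 45-degree sectors in image coordinates, counter-clockwise from image-right.
SECTORS = ("right", "up-right", "up", "up-left", "left", "down-left", "down", "down-right")


def direction_label(track: Sequence[Point], min_px: float = 2.0) -> str:
    """Label the net movement from the first to the last point of a short track."""
    (x0, y0), (x1, y1) = track[0], track[-1]
    dx, dy = x1 - x0, y1 - y0
    if math.hypot(dx, dy) < min_px:
        return "primary"  # too little motion to assign a direction
    angle = math.degrees(math.atan2(-dy, dx)) % 360.0  # -dy: image y points down
    # Shift by half a sector so each label is centred on its direction.
    return SECTORS[int(((angle + 22.5) % 360.0) // 45.0)]
```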

From an anatomical perspective, ocular movement is precisely regulated by six extraocular muscles. The motion trajectories derived from our tracker could support the estimation of the force vectors acting on the eyeball and may thereby provide a basis for the clinical evaluation of ocular muscle function and neural control mechanisms.

4. Discussion

Based on the test results across various YOLO versions and different pre-trained weights, we identified the target detection model with the best overall performance. The model performed strongly in AP0.5, precision, recall, F1 score, and inference time. It also maintained consistent accuracy under varying lighting conditions and showed good generalization and robustness.

In real-world operation, the system ran in real time and remained stable. After loading the trained optimal model weights, it efficiently predicts the eyeball position in each frame, keeps pace with the 30 fps image stream from the acquisition system, and tracks eye movement trajectories in real time.

The system performed robustly in both the simulation model and actual eye tests. It tracked eye movement trajectories precisely, displaying the eye movement path in real time as smooth, continuous trajectories without noticeable jitter or deviation. Furthermore, quantitative frame-by-frame analysis of pre-recorded videos showed low eye position distance errors, indicating high localization accuracy. Most significantly, the tracking results for an independent subject whose data were not used in training were accurate, further supporting the system’s reliability and robustness in real-world application scenarios.

Given its high accuracy, real-time performance, and adaptability across testing conditions, the system has potential for a wide range of applications. The possible use cases are discussed below.

- Augmentative and Alternative Communication: By detecting eyeball movement patterns beneath closed eyelids, this system could enable individuals with ALS to control smart home devices or operate typing systems for external communication, offering a new means of interaction for those with severe motor impairments;

- Surgical Monitoring: During intracranial surgeries, such as tumor resections near the oculomotor nerve, the system could provide real-time monitoring of eyeball movements under closed eyelids. If the nerve is accidentally stimulated, triggering involuntary eye motion, the system would immediately issue an alert, helping surgeons avoid neurological damage;

- Ophthalmic Diagnostics: By analyzing eyeball trajectories in the eight cardinal gaze directions, the system could detect abnormal movement patterns and assist clinicians in diagnosing paralytic strabismus. Specifically, it could help identify weakened or overactive extraocular muscles, supporting more precise treatment planning;

- Enhancing Eye-Tracking Technology: Conventional eye-tracking systems recognize only open-eye states or blinks. Integrating this system would allow eyeball motion parameters to be captured even while the eyelids are closed, making eye tracking more continuous and comprehensive;

- Monitoring Critical or Comatose Patients: In intensive care units, the system could provide a low-cost, non-invasive, and real-time solution for long-term monitoring of anesthetized or comatose patients. By tracking closed-eye movements, clinicians could assess consciousness levels and neurological responses more effectively;

- Sleep Studies: The system’s ability to monitor eye movements during eyelid closure offers unique advantages for sleep research, such as detecting rapid eye movement (REM) sleep stages or abnormal ocular activity in sleep disorders.
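For the ophthalmic diagnostics use case, a first processing step would be to label each measured displacement from the primary position with one of the eight gaze directions named in the figure caption, so that movement amplitudes can be compared direction by direction. The sketch below does this with 45-degree sectors and a small dead zone for the primary position. It is an illustrative assumption rather than part of the published system: it assumes displacements are already expressed with +x toward the subject's right and +y upward (image coordinates, where y grows downward and the view may be mirrored, would need converting first), and `min_amplitude` is a hypothetical threshold.

```python
import math

# Eight gaze directions in 45-degree steps, counter-clockwise from rightward,
# using the terms of the figure caption.
DIRECTIONS = [
    "dextroduction (rightward)",
    "upward and rightward",
    "supraduction (upward)",
    "upward and leftward",
    "levoduction (leftward)",
    "downward and leftward",
    "infraduction (downward)",
    "downward and rightward",
]


def classify_direction(dx, dy, min_amplitude):
    """Map a displacement from the primary position to a gaze direction.

    Assumes +x points to the subject's right and +y points upward.
    Displacements shorter than min_amplitude (a hypothetical threshold)
    are reported as the primary position.
    """
    if math.hypot(dx, dy) < min_amplitude:
        return "primary position"
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    # Shift by half a sector so each 45-degree sector is centered on its direction.
    sector = int(((angle + 22.5) % 360.0) // 45.0)
    return DIRECTIONS[sector]
```

Centering each sector on its nominal direction means, for example, that any displacement within 22.5 degrees of straight up is labeled supraduction.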

These applications demonstrate the system’s versatility, spanning medical diagnostics, assistive technology, and neuroscience research, while highlighting its potential to improve patient care and human–computer interaction.

5. Conclusions

In this paper, we presented a YOLO-based eye movement detection system designed specifically for closed-eye conditions. A miniature camera captures images of the eye beneath the closed eyelid; a detection model trained on these images, combined with a purpose-built tracker, enables real-time detection of eye movements with the eyes closed.

Compared with traditional eye-tracking techniques, our method has several advantages:

- It enables high-precision eye movement detection under closed-eye conditions, addressing the limitations of traditional eye trackers in detecting eye movements during blinking or eye closure;

- The method exhibits high real-time performance, making it suitable for real-time monitoring applications in both clinical and research settings;

- The proposed method requires only a micro-camera rather than complex hardware, making it practical and easy to deploy.

Although promising, the system has so far been tested, and its potential applications analyzed, only in the laboratory. Realizing the value of eye tracking in the closed-eye state will require correlating the collected eye movement data with physiological and pathological information, which calls for further collaboration with hospitals and guidance from physicians.

Owing to limited resources, our dataset contains eyelid images from only five healthy subjects. While this suffices for the present laboratory investigation, substantially more training data will be needed to improve the model’s generalizability in future applications.

The detection system remains susceptible to interference from head motion, which compromises tracking accuracy. To enhance performance, improvements could be made either to the hardware design of the acquisition system or the robustness of the detection algorithms. Potential hardware enhancements might involve integrating an inertial measurement unit for active head motion compensation or employing cameras with higher frame rates and wider field-of-view lenses to maintain tracking during head displacement. On the algorithmic side, implementing advanced filtering techniques or motion correction algorithms could help distinguish genuine eye movements from head motion artifacts.
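As one illustration of the motion-correction idea above, the sketch below removes head-induced shifts by subtracting the displacement of a head-fixed reference point from the eye track. This is a generic technique swapped in for illustration, not a method evaluated in this study: it assumes a hypothetical reference landmark (for example, a skin marker visible to the camera) that moves with the head but not with the eye, and it models head motion as a pure image translation, ignoring rotation and scale changes.

```python
def compensate_head_motion(eye_positions, reference_positions):
    """Subtract the displacement of a head-fixed reference point from the eye track.

    Both inputs are per-frame (x, y) tuples. The reference point is a
    hypothetical landmark assumed to move with the head but not with the eye,
    so its displacement from the first frame approximates the head-induced
    translation of the image. Rotation and scaling are ignored.
    """
    if len(eye_positions) != len(reference_positions):
        raise ValueError("both tracks must have one entry per frame")
    if not eye_positions:
        return []
    ref_x0, ref_y0 = reference_positions[0]
    corrected = []
    for (ex, ey), (rx, ry) in zip(eye_positions, reference_positions):
        # Remove the reference point's shift relative to the first frame.
        corrected.append((ex - (rx - ref_x0), ey - (ry - ref_y0)))
    return corrected
```

If the eye track moves from (10, 10) to (12, 10) while the reference point also shifts by 2 pixels to the right, the corrected positions stay at (10, 10), attributing the apparent motion to the head rather than the eye.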

Reliable telemedicine applications will also require more compact wearable acquisition devices, improved power efficiency for prolonged monitoring, and robust data transfer protocols for remote diagnosis, while protecting patient privacy by minimizing the transmission of raw data.

In the future, this system can be further optimized and applied in medical diagnosis and human–computer interaction, promoting the widespread adoption and advancement of eye-tracking technology.

Author Contributions

Conceptualization, Y.Z. and S.Z.; methodology, Y.Z. and S.Z.; software, S.Z. and J.H.; validation, S.Z.; formal analysis, S.Z.; data curation, S.Z.; writing—original draft preparation, S.Z.; writing—review and editing, Y.Z., S.Z. and J.H.; visualization, S.Z.; supervision, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study as it posed no risk to human subjects and did not involve sensitive personal information or commercial interests.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kassavetis, P.; Kaski, D.; Anderson, T.; Hallett, M. Eye movement disorders in movement disorders. Mov. Disord. Clin. Pract. 2022, 9, 284–295.

- Mao, Y.; He, Y.; Liu, L.; Chen, X. Disease classification based on eye movement features with decision tree and random forest. Front. Neurosci. 2020, 14, 798.

- Singh, H.; Singh, J. Human eye tracking and related issues: A review. Int. J. Sci. Res. Publ. 2012, 2, 1–9.

- Mahanama, B.; Jayawardana, Y.; Rengarajan, S.; Jayawardena, G.; Chukoskie, L.; Snider, J.; Jayarathna, S. Eye movement and pupil measures: A review. Front. Comput. Sci. 2022, 3, 733531.

- Carter, B.T.; Luke, S.G. Best practices in eye tracking research. Int. J. Psychophysiol. 2020, 155, 49–62.

- Fernandes, F.; Barbalho, I.; Bispo Junior, A.; Alves, L.; Nagem, D.; Lins, H.; Arrais Junior, E.; Coutinho, K.D.; Morais, A.H.; Santos, J.P.Q. Digital Alternative Communication for Individuals with amyotrophic lateral sclerosis: What we have. J. Clin. Med. 2023, 12, 5235.

- Medeiros, P.A.d.L.; Da Silva, G.V.S.; Dos Santos Fernandes, F.R.; Sánchez-Gendriz, I.; Lins, H.W.C.; Da Silva Barros, D.M.; Nagem, D.A.P.; de Medeiros Valentim, R.A. Efficient machine learning approach for volunteer eye-blink detection in real-time using webcam. Expert Syst. Appl. 2022, 188, 116073.

- Estrany, B.; Fuster-Parra, P. Human Eye Tracking Through Electro-Oculography (EOG): A Review; Springer International Publishing: Cham, Switzerland, 2022; pp. 75–85.

- Belkhiria, C.; Boudir, A.; Hurter, C.; Peysakhovich, V. EOG-based human–computer interface: 2000–2020 review. Sensors 2022, 22, 4914.

- Belkhiria, C.; Peysakhovich, V. Electro-encephalography and electro-oculography in aeronautics: A review over the last decade (2010–2020). Front. Neuroergonomics 2020, 1, 606719.

- Deng, W.; Huang, J.; Kong, S.; Zhan, Y.; Lv, J.; Cui, Y. Pupil trajectory tracing from video-oculography with a new definition of pupil location. Biomed. Signal Process. Control 2023, 79, 104196.

- Hirota, M.; Hayashi, T.; Watanabe, E.; Inoue, Y.; Mizota, A. Automatic recording of the target location during smooth pursuit eye movement testing using video-oculography and deep learning-based object detection. Transl. Vis. Sci. Technol. 2021, 10, 1.

- Rojas, M.; Lopez, J.A.; Sosa, F.S.; Velasco, C.R.; Ponce, P.; Balderas, D.C.; Molina, A. Design of a Low-Cost Prototype with 3D Printed Glasses for Real Time Eye-Tracking System. In Proceedings of the 2023 10th International Conference on Electrical and Electronics Engineering (ICEEE), Istanbul, Turkiye, 8–10 May 2023; pp. 52–56.

- Bastani, P.B.; Badihian, S.; Phillips, V.; Rieiro, H.; Otero-Millan, J.; Farrell, N.; Parker, M.; Newman-Toker, D.; Tehrani, A.S. Smartphones versus goggles for video-oculography: Current status and future direction. Res. Vestib. Sci. 2024, 23, 63–70.

- Crafa, D.M.; Di Giacomo, S.; Natali, D.; Fiorini, C.; Carminati, M.; Merigo, L.; Ongarello, T. IR Light Sensing at the Edges of Glasses Lenses for Invisible Eye Tracking. In Proceedings of the 2024 IEEE Sensors Applications Symposium (SAS), Naples, Italy, 23–25 July 2024; pp. 1–4.

- Modi, N.; Singh, J. A review of various state of art eye gaze estimation techniques. In Advances in Computational Intelligence and Communication Technology: Proceedings of CICT 2019; Springer: Singapore, 2021; pp. 501–510.

- Raghavendran, S.; Vadivel, K.D. Corneal reflection based eye tracking technology to overcome the limitations of infrared rays based eye tracking. AIP Conf. Proc. 2023, 2831, 020006.

- Hageman, K.N.; Chow, M.R.; Roberts, D.C.; Della Santina, C.C. Low-noise magnetic coil system for recording 3-D eye movements. IEEE Trans. Instrum. Meas. 2020, 70, 1–9.

- Bell, A.; Green, R.; Everitt, A. Measurement of Ocular Torsion Using Feature Matching on the Iris and Sclera. In Proceedings of the 2024 39th International Conference on Image and Vision Computing New Zealand (IVCNZ), Christchurch, New Zealand, 4–6 December 2024; pp. 1–6.

- Teiwes, W.; Merfeld, D.M.; Young, L.R.; Clarke, A.H. Comparison of the scleral search coil and video-oculography techniques for three-dimensional eye movement measurement. In Three-Dimensional Kinematics of the Eye, Head and Limb Movements; Routledge: London, UK, 2020; pp. 429–443.

- Massin, L.; Nourrit, V.; Lahuec, C.; Seguin, F.; Adam, L.; Daniel, E.; de Bougrenet De La Tocnaye, J. Development of a new scleral contact lens with encapsulated photodetectors for eye tracking. Opt. Express 2020, 28, 28635–28647.

- Qian, K.; Arichi, T.; Price, A.; Dall Orso, S.; Eden, J.; Noh, Y.; Rhode, K.; Burdet, E.; Neil, M.; Edwards, A.D. An eye tracking based virtual reality system for use inside magnetic resonance imaging systems. Sci. Rep. 2021, 11, 16301.

- Franceschiello, B.; Di Sopra, L.; Minier, A.; Ionta, S.; Zeugin, D.; Notter, M.P.; Bastiaansen, J.A.; Jorge, J.; Yerly, J.; Stuber, M. 3-Dimensional magnetic resonance imaging of the freely moving human eye. Prog. Neurobiol. 2020, 194, 101885.

- Frey, M.; Nau, M.; Doeller, C.F. Magnetic resonance-based eye tracking using deep neural networks. Nat. Neurosci. 2021, 24, 1772–1779.

- Suzuki, H.; Matsuura, M.; Moriguchi, K.; Kojima, T.; Hiroshige, Y.; Matsuda, T.; Noda, Y. Two auto-detection methods for eye movements during eyes closed. Psychiatry Clin. Neurosci. 2001, 55, 197–198.

- Cifu, D.X.; Wares, J.R.; Hoke, K.W.; Wetzel, P.A.; Gitchel, G.; Carne, W. Differential eye movements in mild traumatic brain injury versus normal controls. J. Head Trauma Rehabil. 2015, 30, 21–28.

- Leigh, R.J.; Zee, D.S. Disorders of ocular motility due to disease of the brainstem, cerebellum, and diencephalon. In The Neurology of Eye Movements; Oxford University Press: Oxford, UK, 2015; pp. 836–915.

- Schlake, H.; Goldbrunner, R.; Siebert, M.; Behr, R.; Roosen, K. Intra-operative electromyographic monitoring of extra-ocular motor nerves (Nn. III, VI) in skull base surgery. Acta Neurochir. 2001, 143, 251–261.

- Sakata, K.; Suematsu, K.; Takeshige, N.; Nagata, Y.; Orito, K.; Miyagi, N.; Sakai, N.; Koseki, T.; Morioka, M. Novel method of intraoperative ocular movement monitoring using a piezoelectric device: Experimental study of ocular motor nerve activating piezoelectric potentials (OMNAPP) and clinical application for skull base surgeries. Neurosurg. Rev. 2020, 43, 185–193.

- Krimchansky, B.; Galperin, T.; Groswasser, Z. Vegetative state. Isr. Med. Assoc. J. 2006, 8, 819–823.

- Ting, W.K.; Perez Velazquez, J.L.; Cusimano, M.D. Eye movement measurement in diagnostic assessment of disorders of consciousness. Front. Neurol. 2014, 5, 137.

- Khan, W.; Topham, L.; Alsmadi, H.; Al Kafri, A.; Kolivand, H. Deep face profiler (DeFaP): Towards explicit, non-restrained, non-invasive, facial and gaze comprehension. Expert Syst. Appl. 2024, 254, 124425.

- Yan, Y.; Fu, R.; Ji, Q.; Liu, C.; Yang, J.; Yin, X.; Oranges, C.M.; Li, Q.; Huang, R. Surgical strategies for eyelid defect reconstruction: A review on principles and techniques. Ophthalmol. Ther. 2022, 11, 1383–1408.

- Mathew, A.; Amudha, P.; Sivakumari, S. Deep learning techniques: An overview. In Advanced Machine Learning Technologies and Applications: Proceedings of AMLTA 2020; Springer: Singapore, 2021; pp. 599–608.

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Rasheed, A.F.; Zarkoosh, M. YOLOv11 Optimization for Efficient Resource Utilization. arXiv 2024, arXiv:2412.14790.

- Wang, C.; Liang, G. Overview of Research on Object Detection Based on YOLO. In Proceedings of the 4th International Conference on Artificial Intelligence and Computer Engineering, Dalian, China, 17–19 November 2023; pp. 257–265.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).