Abstract

This paper presents a robustness benchmark evaluation and optimization for vehicle detection. Real-time vehicle detection has become an essential means of data perception in the transportation field, covering intelligent transportation systems, video surveillance, and autonomous driving. However, evaluating and optimizing the robustness of vehicle detection in real traffic scenarios remains challenging. When data distributions change, for example under adverse weather or sensor damage, model reliability cannot be guaranteed. We first conducted a large-scale robustness benchmark evaluation for vehicle detection. The analysis revealed that adverse weather, motion, and occlusion are the most detrimental factors to vehicle detection performance, whereas the impact of color changes and noise, while present, is less pronounced. Moreover, the robustness of vehicle detection is closely linked to baseline performance and model size, and as the severity of corruption intensifies, model performance drops sharply. When the data distribution of images changes, the vehicle features that the model focuses on are weakened, so the activation level of the targets is significantly reduced. This evaluation provides guidance and direction for optimizing detection robustness. Based on these findings, we propose TDIRM, a traffic-degraded image restoration model based on stable diffusion, designed to efficiently restore degraded images in real traffic scenarios and thereby enhance the robustness of vehicle detection. The model introduces an image semantics encoder (ISE) module to extract features that align with the latent description of the real background while excluding degradation-related information. Additionally, a triple control embedding attention (TCE) module is proposed to fully integrate all condition controls. 
Through a triple condition control mechanism, TDIRM achieves restoration results with high fidelity and consistency. Experimental results demonstrate that TDIRM improves vehicle detection mAP by 6.92% on real dense fog data, especially for small distant vehicles that were severely obscured by fog. By enabling semantic-structural-content collaborative optimization within the diffusion framework, TDIRM establishes a novel paradigm for traffic scene image restoration.

1. Introduction

As a fundamental perception task in the traffic environment, 2D vehicle detection identifies vehicles of interest in the surrounding environment by predicting their categories and 2D bounding boxes. Cameras are a crucial sensor type for 2D vehicle detection, capturing rich semantic information of the scene in the form of color images. Given the high safety requirements in traffic scenarios, assessing the robustness of 2D vehicle detectors under different conditions is crucial before deployment.

Recent years have seen significant advancements in 2D vehicle detection, such as Faster R-CNN [1], YOLO [2], YOLOv3 [3], SSD [4], CornerNet [5], CenterNet [6], Cascade R-CNN [7], RepPoints [8], and DETR [9]; the advantages and disadvantages of different detection models are shown in Appendix A.1. However, comprehensively evaluating algorithm robustness in deployment environments and optimizing that robustness remain challenging tasks. In addition, since most of the annotated datasets used for training detectors were collected under standard conditions, models trained on clean data may experience performance degradation in adverse weather (such as rain, snow, or fog) and under other unfavorable conditions (such as strong or weak light, noise, blur, and occlusion). In other words, data-driven deep learning models often generalize poorly when faced with data corruption, posing substantial obstacles to safety and reliability. Some studies synthesized common data corruption on clean datasets to evaluate the robustness of object detection, but they considered only a few simple corruptions, which may be inadequate and unrealistic for vehicle detection. Moreover, due to the high cost of data collection and the rarity of extreme scenarios and adverse weather conditions, real-world adverse-condition datasets tend to be small. In light of this, comprehensively analyzing possible corruption types and accurately evaluating corruption robustness across diverse driving scenarios remain challenging.

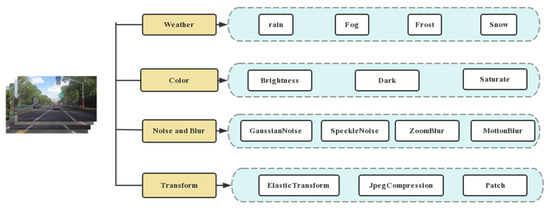

To address these challenges, as shown in Figure 1, we comprehensively analyzed possible corruption types and focused on traffic scenes in real and complex environments. Through experimental analysis of a large number of influencing factors, we removed factors that have insignificant effects on vehicle detection or rarely occur, such as various adversarial corruptions, shadow interference, hail, lens distortion, and glare. Following the entire chain of vehicle detection, we then classified the factors that have the greatest impact on vehicle detection and are most likely to occur in reality into four categories and carried out a detailed evaluation and analysis. These factors mainly include external adverse weather and occlusion, noise introduced by sensors, changes in color and brightness, blur caused by the high-speed movement of the vehicle, and transformation during image transmission and compression, effectively covering a wide range of real-world interference scenarios. Furthermore, each type of interference was generated at three severity levels and applied to dense traffic flow images to create various corrupted images. On this basis, we constructed datasets that include extreme weather conditions and common image corruption for robustness evaluation. These large-scale interference datasets can serve as comprehensive and widely applicable benchmark datasets for assessing the robustness of vehicle detection models in complex traffic scenarios, thus facilitating future research.

Figure 1.

There are 14 types of corruption that can affect 2D vehicle detection, grouped into four categories: weather, color, noise and blur, and transform.

Considering the importance of real-time performance in traffic scenarios, we selected representative real-time object detection algorithms, including multiple versions of YOLOv5 and YOLOv8, SSD, CenterNet, Faster R-CNN, Mask R-CNN, RetinaNet, and Cascade R-CNN, to assess the robustness of 2D vehicle detectors under various transportation scenarios. We then conducted detailed experiments on the aforementioned synthetic datasets, testing the mAP and false alarm rate of different models for vehicle detection under various adverse conditions and calculating the corresponding mAP degradation rate. Additionally, to verify the reliability of the synthetic datasets, we performed the same experiments on a small-scale real-world adverse weather dataset. We also conducted an interpretative analysis using class activation mapping to observe how the focus of detection models changes under different adverse conditions. Finally, we draw conclusions that provide directions for the robustness optimization of vehicle detection models.

Based on the evaluation results, we find:

- In the real world, the factors that have the greatest impact on vehicle detection and are most likely to occur include adverse weather conditions, color variations due to lighting conditions, noise caused by sensor operations, blur resulting from motion, transforms during image transmission and compression, and occlusions by other objects. Among these, adverse weather, blur, and occlusion have the greatest impact on vehicle detection, while the effects of color changes and noise are relatively minor.

- The robustness of vehicle detection is positively correlated with its baseline performance, and the size of the model is also positively related to its ability to withstand adverse conditions. In other words, a larger number of parameters endows the model with greater robustness. Meanwhile, as the severity of corruption increases, the performance of the model does not decline steadily but rather drops sharply.

- The blur of vehicle features under adverse conditions changes the data distribution of images and weakens the vehicle features that models focus on, making it challenging for models to detect vehicles. In addition, synthetic data can, to a certain extent, represent real-world image corruption and can therefore be used for robustness evaluation of vehicle detection.

Based on these findings, a traffic degradation image restoration model (TDIRM) based on stable diffusion is proposed to effectively restore degraded images in real traffic scenarios, thereby enhancing vehicle detection robustness in adverse conditions. We introduce an image semantics encoder (ISE) module to extract features that align with the latent description of the real background while excluding degradation-related information. Additionally, a triple control embedding attention (TCE) module is proposed to fully integrate all condition controls.

Comparative experiments show that, through triple conditional control, the restoration by TDIRM achieves high fidelity. Vehicle detection mAP improves by 6.92% on real-world dense fog images, with significantly higher precision and recall. By enabling semantic-structural-content collaborative optimization within the diffusion framework, TDIRM establishes a novel paradigm for traffic scene image restoration.

In summary, our contributions mainly contain two parts:

- Through a robustness benchmark evaluation, we revealed how and to what extent various adverse conditions limit vehicle detection performance, providing guidance and direction for optimizing detection robustness.

- We proposed TDIRM to efficiently restore degraded images with high fidelity and consistency in real traffic scenarios and thereby enhance the robustness of vehicle detection.

2. Related Work

2.1. Vehicle Detection

Vehicle detection determines whether vehicles exist in a given image and returns the spatial location and size of each instance. Based on the detection approach, we categorize 2D vehicle detection models into feature-based, two-stage, single-stage, anchor-free, and transformer-based methods.

2.1.1. Feature Based Method

Prior to convolutional neural networks (CNNs) [10], feature-based vehicle detection was widely used as a method to detect objects based on abstractions of image data and a type-based judging system. A popular method for classifying road users is optical flow [11]. The method looks for consecutive matches between image pixels to calculate displacement vectors. Additionally, support vector machines (SVM) [12], histograms of oriented gradients (HOG) [13], and scale-invariant feature transforms (SIFT) [14] can be used.

It is generally accepted that feature-based detection models are classic methods built on relatively simple architectures and that their performance, efficiency, and robustness cannot match those of more recent deep learning models.

2.1.2. Two-Stage Method

As CNNs and deep learning have become increasingly popular, a number of deep learning-based detection models have been proposed. In general, there are two types of deep learning-based detectors: one-stage and two-stage. A two-stage detector is composed of two phases: (1) region proposal and (2) classification and localization. In the first phase, a set of candidate object regions is proposed, and in the second phase, these candidates are classified and localized. R-CNN is the first CNN-based two-stage detector. Fast R-CNN [15], Faster R-CNN [1], and Mask R-CNN [16] are well-known models developed from R-CNN that are more accurate, more efficient, or both.

Executing region proposal and localization as two separate tasks yields more accurate object localization and recognition. However, inference speed is sacrificed, which can prevent two-stage detectors from meeting the real-time requirement in real traffic scenarios.

2.1.3. Single-Stage Method

Single-stage detectors use dense sampling to classify and localize objects in a single step, unlike two-stage detectors. The inference speed of single-stage models is generally faster than two-stage detectors, as they do not have to generate regional proposals. This makes them more suitable for real-time applications, such as detecting vehicles in real time.

Redmon et al. [2] proposed the YOLO algorithm, in which classification and bounding box regression are conducted simultaneously. At that time, most convolutional neural network detectors were significantly slower than YOLO. A later version, YOLOv3 [3], used FPN-like feature pyramids to improve performance on smaller objects, and the YOLO series has since developed through many versions. Another single-stage model, the single-shot multibox detector (SSD) [4], uses a convolutional neural network to detect and classify objects in an image in real time. LRTA-SP [17] effectively improves the efficiency and accuracy of small target detection in infrared videos under complex backgrounds by combining low-rank tensor approximation with saliency priors, utilizing a spatiotemporal tensor model, multi-rank constraints, and an alternating projection algorithm.

2.1.4. Anchor Free Method

Most popular object detection algorithms in recent years, including those above, use anchor boxes: predefined boxes with fixed heights and widths that reflect the sizes and aspect ratios of the objects to be recognized. Anchor-free models use no predefined anchor boxes, so vehicles of different sizes and shapes can be detected more easily. Well-known anchor-free models include FCOS [18], CornerNet [5], CenterNet [6], and RepPoints [8].
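To make the notion of predefined anchor boxes concrete, the following minimal sketch enumerates anchors from a set of scales and aspect ratios. The function name `make_anchors` and the scale and ratio values are hypothetical and chosen only for illustration; they are not taken from any of the detectors cited above.

```python
import math


def make_anchors(scales, aspect_ratios):
    """Return (width, height) pairs, one per scale/ratio combination.

    Each anchor keeps an area of scale**2, while its width/height ratio
    equals the requested aspect ratio.
    """
    anchors = []
    for scale in scales:
        for ratio in aspect_ratios:
            width = scale * math.sqrt(ratio)
            height = scale / math.sqrt(ratio)
            anchors.append((width, height))
    return anchors


# Hypothetical values: three scales (in pixels) and three aspect ratios.
anchors = make_anchors(scales=[32, 64, 128], aspect_ratios=[0.5, 1.0, 2.0])
for width, height in anchors:
    print(f"{width:7.2f} x {height:7.2f}")
```

An anchor-based detector regresses offsets relative to such boxes at every feature-map location, whereas an anchor-free detector predicts keypoints or center offsets directly and therefore needs no such table.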

2.1.5. Transformer Based Method

In addition to the categories above, a newer class of object detectors is based on the transformer. The transformer was originally proposed for natural language processing and has since gained recognition and application in object detection. Dosovitskiy et al. [19] proposed the vision transformer (ViT), which applies the transformer to image recognition and is commonly used as a backbone for detection models. Other transformer-based detection models include DETR [9] and Swin transformer [20]. In particular, Meta’s segment anything model (SAM) is based on the transformer structure and demonstrated superior performance in object detection, particularly for rare objects like vehicles with abnormal appearances. In general, transformer-based models may require significantly more computational resources and longer training times than other types of detectors, which poses a great challenge for real-time vehicle detection in traffic scenarios.

2.2. Robustness Evaluation

As is well known, deep learning models lack robustness against adversarial examples and common corruption. For vehicle detection, datasets of extreme weather conditions and other corruption are rare due to the high cost of collecting real data. In addition, these datasets are primarily designed for evaluation, whereas large training datasets cover a much wider domain. Because the data were collected in different cities, with varying sensors and vehicles, it is difficult to determine whether weather and city affect the robustness of the models. Moreover, adverse conditions in the real world are so varied that it is difficult to consider all situations comprehensively, and combinations of multiple factors make robustness analysis even more difficult. It is therefore challenging to develop a comprehensive benchmark for evaluating the robustness of 2D vehicle detection under diverse real-world road conditions.

2.2.1. Adverse Factors

In real traffic environments, adverse conditions are diverse and often overlap, such as adverse weather, sensor damage, occlusion, and blur caused by motion. Several studies have analyzed adverse factors that may affect the robustness of object detection. There is evidence [21,22] that adverse weather and light strength can impair the robustness of models, making them ineffective on previously unseen datasets. An experimental study [23] found that visibility can adversely affect detection results in a foggy environment, and four quantitative criteria were developed: no fog, light fog, medium fog, and heavy fog. The performance of traffic sign detection in bad weather was examined [24], and the results indicated that detection performance degraded as weather conditions worsened. A lightweight object detection framework was proposed [25], and its effectiveness was demonstrated on a dataset with image corruption caused by rain and fog. It has been shown [26] that blur, white noise, Gaussian noise, distortion, and extreme contrast can affect object detection algorithms. An analysis of vibration-blurred images [27] explored the environmental adaptability of the visual perception system; moreover, the experiment quantitatively confirmed that mechanical vibration stress affects model performance. A detailed evaluation of semantic segmentation models under real-world image corruption [28] found that some architectural properties, such as a dense prediction cell, were significantly related to robustness. However, most studies to date have analyzed only a few factors and have not comprehensively analyzed in detail the adverse factors that affect vehicle detection.

2.2.2. Image Data

Evaluating vehicle detection algorithms requires large amounts of realistic weather image data, but such images are difficult to obtain under adverse weather. Cityscapes [29], BDD100K [30], KITTI [31], Waymo [32], and nuScenes [33] are widely used image datasets that provide real traffic images in the field of autonomous driving, but they lack images from adverse conditions and do not classify the severity of adverse conditions. DAWN [34] is a collection of 1000 images from real traffic environments. These images are categorized into fog, snow, rain, and sandstorms, but the image quality is low, and the quantity is also limited.

In order to evaluate the robustness of models, it is feasible to simulate real-life interferences into clean datasets in a synthetic way. As an example, ImageNet-C [35], introduced for image classification, covers 15 types of interferences, including noise, blur, weather, and digital distortions. It must be noted, however, that many of the interferences analyzed in these research works are hypothetical, introducing bias into practical situations. Rain100H and rain800H are datasets specially synthesized for rainy weather, but the number of images is small, and the fidelity of the images is not high. In summary, datasets for evaluating vehicle detection robustness in various adverse conditions are very scarce.

2.2.3. Robustness Benchmarks

Currently, some studies have evaluated robustness benchmarks for object detection, most of which focus on robustness evaluation for 3D object detection. One study [36] established three corruption robustness benchmarks, KITTI-C, nuScenes-C, and Waymo-C, and proposed 27 types of corruption for 3D object detection to test many models. Another study [37] designed 16 real-world corruptions according to real damage in camera sensors and the image pipeline and chose some representative 2D object detectors to evaluate robustness. Moreover, an easy-to-use benchmark [38] for assessing the robustness of object detectors was proposed. Many studies also address adversarial robustness. For example, both white- and black-box adversarial attacks [39] on object detectors were proposed to evaluate robustness.

In summary, comprehensive evaluations of robustness benchmarks for object detection remain limited, and most of these studies are based on general object detectors without thoroughly analyzing factors causing corruption in real complex traffic environments. Additionally, they often overlook the importance of real-time performance in traffic-related tasks. Accordingly, our goal is to comprehensively analyze potential types of corruption in real traffic environments and to conduct a detailed robustness benchmark evaluation of representative real-time vehicle detection models, providing guidance for future directions in vehicle detection development.

2.3. Degraded Image Restoration

Early degraded image restoration relied on physics-based approaches such as the dark channel prior [40] and the color attenuation prior [41], which primarily used handcrafted priors derived from physical degradation models such as the atmospheric scattering model. While these methods achieved reasonable results under specific conditions, their performance typically declined significantly in complex real-world scenarios. Later, data-driven deep learning methods emerged and demonstrated superior performance by directly learning degradation patterns from paired training data. FFA-Net [42] improved multi-scale feature fusion capability through feature attention mechanisms. AOD-Net [43] jointly optimized transmission maps and atmospheric light parameters using an end-to-end framework. However, these methods still faced limitations when generalizing to unseen degradation types and real-world distribution shifts.

Recent studies have combined transformers with CNNs to capture both local and global dependencies. DehazeFormer [44] integrated CNN-based local feature extraction with transformer-based global context modeling. Restormer [45] employed channel attention and gated convolutions for efficient feature refinement. The diffusion model has gained prominence in restoration tasks due to its powerful image generation capability. Methods like WeatherDiff [46] and DiffBIR [47] achieved state-of-the-art performance in multi-weather restoration through iterative optimization of degraded images. WeatherDiff enabled unified restoration across multiple degradation types using weather-conditioned embedding. DiffBIR bridged the synthetic–real data gap by jointly training degradation estimation and image generation. However, few studies have specifically focused on traffic image restoration that incorporates traffic characteristics.

3. Preliminary and Model

3.1. Corruption in Vehicle Detection

3.1.1. Adverse Factors in Vehicle Detection

In real-life situations, corruption can arise from a variety of factors, and we categorize these corruptions into four levels: weather, sensor damage, color change, and image transform. Based on real-world driving scenarios, we design 14 different corruption types. Specific introductions and details can be found in Appendix A.2.

Weather-level corruption: Weather changes are commonly encountered during driving and can significantly disrupt the input to the camera. For example, rain, fog, snow, frost, low light, bright light, and other unusual circumstances can affect the visual perception system, possibly leading to traffic accidents because autonomous vehicles become less aware of their surroundings. During imaging, raindrops, snowflakes, fog scattering, and dust can obscure detection targets. Image acquisition units convert these obscured scenes into vision signals mixed with external interference, which impairs the detection capabilities of algorithms in the perception system and can result in degraded performance or functional failure. In adverse weather conditions, 2D vehicle detectors trained using data collected in normal weather conditions may perform poorly. Considering common weather corruption, we study robustness under adverse weather, including snow, rain, fog, and frost. A visual simulation of weather conditions is created by applying image augmentation techniques.
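As an illustration of how a weather corruption such as fog can be simulated, the sketch below applies the standard atmospheric scattering model, in which each pixel is blended toward a constant airlight according to a transmission term that decays exponentially with scene depth. This is a generic stand-in and not necessarily the augmentation used in this paper; the function name `add_fog`, the toy image, the depth map, and the `beta` values are all assumptions made for the example.

```python
import math


def add_fog(image, depth, beta, airlight=255.0):
    """Blend each pixel toward the airlight according to scene depth.

    image: rows of grayscale intensities in [0, 255]
    depth: rows of per-pixel distances (same shape as image), arbitrary units
    beta:  scattering coefficient; larger values mean denser fog
    """
    foggy = []
    for img_row, depth_row in zip(image, depth):
        out_row = []
        for pixel, dist in zip(img_row, depth_row):
            transmission = math.exp(-beta * dist)  # in (0, 1]
            out_row.append(pixel * transmission + airlight * (1.0 - transmission))
        foggy.append(out_row)
    return foggy


# Toy 2 x 2 image; the bottom row is assumed to be farther from the camera.
image = [[40.0, 80.0], [120.0, 200.0]]
depth = [[1.0, 1.0], [5.0, 5.0]]
light = add_fog(image, depth, beta=0.1)  # hypothetical "light fog" level
heavy = add_fog(image, depth, beta=0.5)  # hypothetical "heavy fog" level
```

Distant pixels are washed out more strongly than nearby ones, which is consistent with the observation that small distant vehicles are the most severely obscured by fog.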

Sensor-level corruption: Sensors can introduce various corruption to captured data. Sensor vibration, lighting conditions, and reflective materials are all factors that can affect a sensor’s performance. To simulate visual influence caused by motion or camera defects, we designed four practical sensor-level corruptions: Gaussian noise, speckle noise, zoom blur, and motion blur.

Color-level corruption: Changes in brightness or color can also cause instability in vehicle detection results. So, we design three types of corruption caused by changes in brightness or color, including brightness, darkness, and saturation.

Transform-level corruption: In addition to external environmental factors, image transform and loss of high-frequency information can occur during transmission and compression, such as JPEG compression and elastic transform. Furthermore, occlusion by obstacles significantly impacts vehicle detection results.

Patches (occlusion) are used to simulate the impact of obstructions on vehicle detection. Whether the model can still accurately identify vehicles under various degrees of occlusion is vitally important.
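A patch-based occlusion can be sketched as follows: a filled rectangle hides a growing share of a vehicle's bounding box as severity increases. The function name `occlude`, the choice to cover the box from its left edge, and the three fractions are hypothetical and serve only to illustrate the idea; the actual parameters are given in Appendix A.2.

```python
def occlude(image, box, fraction, fill=0):
    """Return a copy of image with a patch covering part of a bounding box.

    box:      (x_min, y_min, x_max, y_max) in pixels, max values exclusive
    fraction: share of the box width that is hidden, starting at the left edge
    """
    x_min, y_min, x_max, y_max = box
    patch_width = round((x_max - x_min) * fraction)
    occluded = [row[:] for row in image]  # leave the input untouched
    for y in range(y_min, y_max):
        for x in range(x_min, x_min + patch_width):
            occluded[y][x] = fill
    return occluded


# Toy 4 x 8 grayscale image whose single "vehicle" box spans the whole frame.
image = [[255] * 8 for _ in range(4)]
levels = {1: 0.25, 2: 0.50, 3: 0.75}  # hypothetical severity levels
variants = {s: occlude(image, (0, 0, 8, 4), f) for s, f in levels.items()}
```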

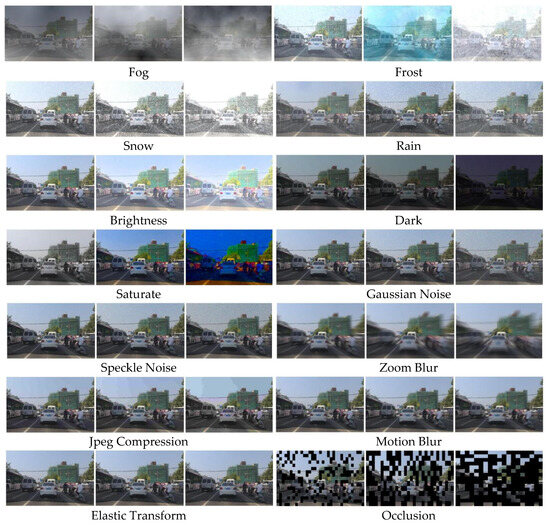

3.1.2. Synthetic Corruption in Vehicle Detection

We design three severity levels for each corruption and use image augmentation techniques from the Imgaug [48] library to synthesize corrupted images. The specific methods are shown in Table 1 and the synthesized images in Figure 2. More details on parameter design can be found in Appendix A.2. From multiple datasets, we selected 854 original images of densely packed vehicles taken from a vehicular perspective. The corruptions are categorized into 14 classes, each with three levels of severity, so for each image we synthesized 14 × 3 = 42 corrupted images reflecting various types and degrees of corruption.
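The bookkeeping behind this synthesis can be sketched in plain Python. The corruption names follow the four categories described above; the two example corruption functions and their per-severity parameters are hypothetical placeholders and do not reproduce the Imgaug settings listed in Table 1 or Appendix A.2.

```python
import random
from itertools import product

CORRUPTIONS = [
    "snow", "rain", "fog", "frost",                                # weather
    "gaussian_noise", "speckle_noise", "zoom_blur", "motion_blur",  # sensor
    "brightness", "darkness", "saturation",                        # color
    "jpeg_compression", "elastic_transform", "patch",              # transform
]
SEVERITIES = (1, 2, 3)


def gaussian_noise(image, severity, rng):
    """Add zero-mean Gaussian noise and clip to [0, 255]."""
    sigma = {1: 10.0, 2: 25.0, 3: 50.0}[severity]  # hypothetical values
    return [[min(255.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row]
            for row in image]


def brightness(image, severity):
    """Scale intensities by a severity-dependent gain and clip at 255."""
    gain = {1: 1.2, 2: 1.5, 3: 2.0}[severity]  # hypothetical values
    return [[min(255.0, p * gain) for p in row] for row in image]


def plan(num_images):
    """Enumerate every (image index, corruption, severity) job."""
    return list(product(range(num_images), CORRUPTIONS, SEVERITIES))


jobs = plan(854)
print(len(jobs))  # 854 images x 14 corruptions x 3 severities
```

Enumerating the jobs up front makes it easy to confirm that every image receives all 42 variants before any detector is evaluated.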

Table 1.

The methods for synthesizing corruption images.

Figure 2.

Visualization of three levels of 14 kinds of corruption corresponding to each image.

A discussion of the gap between synthetic corruption and real corruption: Corruption in real life can come from a variety of sources. For instance, a vehicle may encounter adverse weather conditions and unusual objects simultaneously, causing much more complex corruption. Since it is impossible to provide a comprehensive list of all corruption types that exist in the world, we systematically created 14 corruption types, each at three severity levels, to serve as a practical testing ground for evaluating robustness in a controlled manner. Nevertheless, we were able to verify that the model’s performance in synthetic weather conditions was consistent with its performance on real-world data. A more detailed discussion can be found in Appendix A.5.

3.1.3. Real Corruption in Vehicle Detection

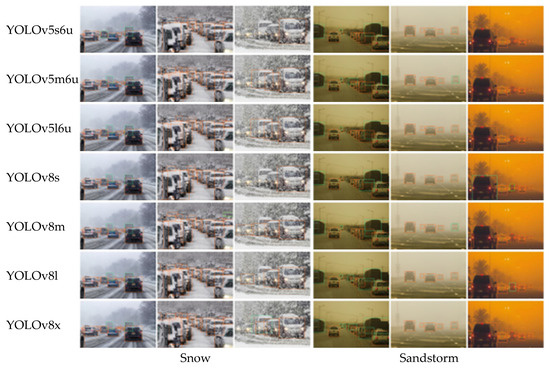

To verify the validity of the synthesized data, we collected 320 real adverse weather images for the same benchmark testing. The images primarily come from the DAWN [34] dataset, which is a collection of 1000 images from real traffic environments. These images are categorized into fog, snow, rain, and sandstorms, and some examples are shown in Figure 3. Due to the limited data, we did not classify each category by severity.

Figure 3.

Four types of real adverse-weather corruption.

3.2. Vehicle Detection Models

We divide the evaluation into two parts. The main part is to compare the YOLO series together, and the other part is to compare the rest of the real-time detection models.

For the YOLO series, we use the pre-trained weights and models of YOLOv5 and YOLOv8 provided on the official Ultralytics website. They were trained under the same conditions and differ only in model size across the scaled versions. For the rest of the real-time detection models, for the sake of fairness, we trained each model on the clean training set of BDD100K [30] and adopted the same training strategy as described in the original paper of each method.

Considering the need for real-time vehicle detection in practical environments, we selected representative real-time vehicle detection algorithms, including multiple versions of YOLOv5 and YOLOv8, SSD, CenterNet, Faster R-CNN, Mask R-CNN, RetinaNet, and Cascade R-CNN, as shown in Table 2. The specific model parameter information is shown in Table 3.

Table 2.

Category of models used for testing.

Table 3.

Details of the YOLOv5 and YOLOv8 models used for testing.

As the major test models, the YOLO series offers several advantages, including fast detection speed, high accuracy, low deployment cost, strong scalability, and effective detection of small targets. These models have become mainstream real-time object detection algorithms in industrial applications. Additionally, to explore the impact of model size on robustness, we select various scaled versions of YOLOv5 and YOLOv8, as shown in Table 3.

3.3. Robustness Benchmarks

3.3.1. Evaluation Metric

The robustness evaluation metric is mean average precision (mAP), computed at an intersection over union (IoU) threshold of 0.5 for the car, truck, and bus classes. More metrics, such as false alarm rate (FAR), are in Appendix A.2. The performance of the model on the original validation set is denoted as $\mathrm{mAP}_{\mathrm{clean}}$, and the performance of the model on the corruption set is denoted as $\mathrm{mAP}_{\mathrm{cor}}$. We calculated the robustness of models for all types of corruption, which are categorized into 14 classes, each with three levels of severity. Subsequently, the rate of performance degradation (LR) under different conditions is calculated as Equation (1):

$$\mathrm{LR} = \frac{\mathrm{mAP}_{\mathrm{clean}} - \mathrm{mAP}_{\mathrm{cor}}}{\mathrm{mAP}_{\mathrm{clean}}} \tag{1}$$
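The degradation rate can be sketched in a few lines of Python, assuming LR is the relative drop in mAP from the clean validation set to the corrupted set. The function names and the mAP values below are hypothetical and are not results from this study.

```python
def degradation_rate(map_clean, map_corrupted):
    """Relative mAP drop from the clean set to a corrupted set."""
    if map_clean <= 0:
        raise ValueError("clean mAP must be positive")
    return (map_clean - map_corrupted) / map_clean


def summarize(map_clean, corrupted_maps):
    """Group degradation rates by corruption type and severity.

    corrupted_maps: {(corruption, severity): mAP on that corrupted set}
    """
    table = {}
    for (corruption, severity), value in corrupted_maps.items():
        table.setdefault(corruption, {})[severity] = degradation_rate(map_clean, value)
    return table


# Hypothetical numbers for one detector (not measured values).
table = summarize(0.60, {("fog", 1): 0.54, ("fog", 2): 0.45, ("fog", 3): 0.30})
for severity, rate in sorted(table["fog"].items()):
    print(f"fog severity {severity}: LR = {rate:.2f}")
```

Reporting LR rather than raw mAP makes models with different baseline accuracy comparable, because each model is measured against its own clean performance.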

3.3.2. Visual Explanation

In this study, we use the Grad-CAM [50] (gradient-weighted class activation mapping) algorithm for the visual interpretation of the vehicle detection process. Grad-CAM is used to highlight the areas of the image that convolutional neural networks focus on while making decisions. This method works by calculating the gradients of the class scores with respect to the feature maps. Initially, a convolutional layer within the network is selected for analysis, followed by the computation of the gradients for this layer under a specific class. Subsequently, these gradients are globally averaged to obtain the importance weights for each feature map in that layer. These weights are then used to weigh the feature maps, creating a coarse heatmap. This heatmap indicates the areas of the image that the network focuses on when making class decisions. By using Grad-CAM, we aim to identify the changes in the areas of focus during vehicle detection by the model under various adverse conditions.
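The weighting step described above can be sketched without a deep learning framework, assuming the feature maps and the gradients of the class score with respect to them have already been extracted from the chosen convolutional layer. Following the original Grad-CAM formulation, a ReLU keeps only positively contributing regions; the final normalization to [0, 1] is a display convenience assumed here. The function name `grad_cam` and the toy inputs are hypothetical.

```python
def grad_cam(feature_maps, gradients):
    """Compute a coarse heatmap from K feature maps and their gradients.

    feature_maps, gradients: lists of K channels, each an H x W nested list.
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    # Global average pooling of the gradients gives one weight per channel.
    weights = [sum(sum(row) for row in grad) / (height * width)
               for grad in gradients]
    # Weighted sum of the feature maps.
    cam = [[0.0] * width for _ in range(height)]
    for weight, fmap in zip(weights, feature_maps):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    # ReLU: keep only regions that increase the class score.
    cam = [[max(0.0, value) for value in row] for row in cam]
    # Scale to [0, 1] for visualization (skipped if the map is all zeros).
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Toy example: two 2 x 2 channels with uniform gradients of +1 and -1.
feature_maps = [[[1.0, 0.0], [0.0, 2.0]], [[0.0, 3.0], [1.0, 0.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(feature_maps, gradients)
```

In practice the coarse heatmap is upsampled to the input resolution and overlaid on the image, so that weakened activation on vehicles under corruption becomes directly visible.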

3.4. Traffic Degradation Image Restoration Model

In traffic degraded image restoration, the objective is to eliminate various environmental interference factors from the input degraded images as much as possible and restore clear scenes with high authenticity. During this process, it is crucial not to alter or lose the original traffic elements (vehicles, pedestrians, traffic signs), as the primary goal of most traffic environment perception tasks is to accurately identify the categories and locations of these key traffic elements.

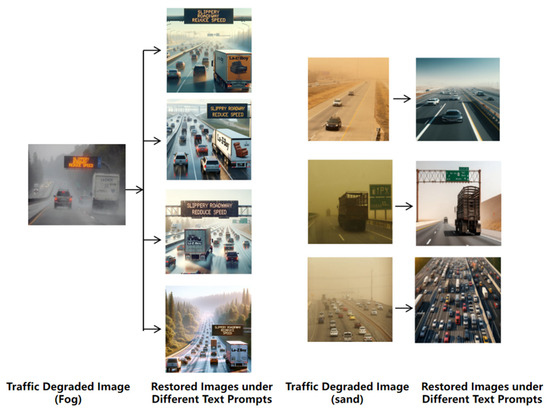

The stable diffusion model (SDM) faces significant challenges when applied to real-world traffic-degraded image restoration. Although SDM can guide the restoration process through text prompts, this approach encounters two major issues. First, text prompts with similar meanings may yield different latent features after being processed by the text encoder, which can introduce bias in SDM’s understanding and lead to image generation along divergent directions. Second, SDM struggles to precisely control the details of generated images, such as inadvertently altering the original layout and content, which is unacceptable for traffic-degraded image restoration. Therefore, directly using text prompts to guide SDM for traffic image restoration is not feasible. Figure 4 demonstrates the results of SDM restoring traffic-degraded images under similar text prompts, all of which describe the removal of adverse environmental effects (dehaze, sand removal). The results show that SDM not only fails to effectively eliminate adverse factors but also causes uncontrolled changes to image content, making it difficult to ensure fidelity.

Figure 4.

Restoration results by SDM under different text prompts.

In this regard, a traffic degradation image restoration model (TDIRM) based on stable diffusion is proposed to effectively restore degraded images in real traffic scenarios.

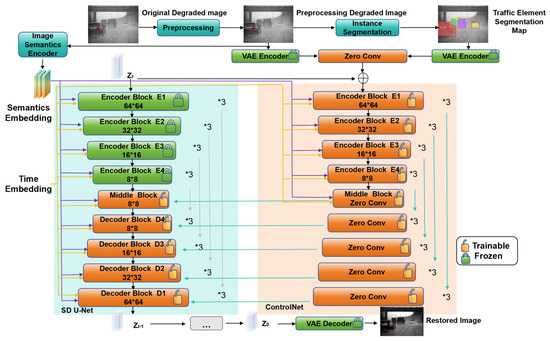

3.4.1. Overall Architecture

The overall architecture of TDIRM is shown in Figure 5. Its core methodology employs triple conditional control to constrain the restoration process, thereby enhancing both restoration quality and fidelity.

Figure 5.

Network structure of traffic degradation image restoration model (TDIRM).

For the original degraded image requiring restoration, a lightweight Swin transformer is first employed to perform preliminary enhancement, improving the quality of the degraded input to some extent. The enhanced image, denoted as DI, serves as the initial input to the model. The image semantics encoder (ISE) processes DI to extract semantics information, encoding it into latent features as the first condition embedding (SE). This embedding contains feature representations that align with the latent description of the real background while excluding degradation-related information.

Simultaneously, DI is encoded by a pre-trained VAE encoder to produce latent features as the second condition embedding DIE. DIE contains the global content understanding and structural layout of the degraded image, thereby enhancing the fidelity of the restored image.

Finally, an instance segmentation model is applied to DI to obtain a segmentation map of key traffic elements such as vehicles, pedestrians, and traffic signs. The segmentation map is further encoded by a pre-trained VAE encoder into latent features, forming the third condition embedding, SegE. SegE contains critical information on traffic elements such as category, location, and size, ensuring that the restoration process does not arbitrarily alter essential traffic elements.

The three condition controls are integrated into the reverse sampling process of the stable diffusion model (SDM) by using a ControlNet-based approach to guide the reconstruction of degraded images. Similar to ControlNet architecture, the denoising module in TDIRM consists of two components: the U-Net denoising module from SDM and a trainable ControlNet copy. The difference is that in the U-Net denoising module of SDM, original U-Net encoder blocks are retained, while original attention modules in the middle block and decoder blocks are replaced with the triple control embedding attention module (TCE) in order to enhance restoration fidelity. The parameters in the U-Net encoder blocks remain frozen to preserve pre-trained feature extraction capability. The middle and decoder blocks are partially trainable.

The image semantics embedding SE is concurrently input into both the U-Net denoising module and the ControlNet module. In contrast, DIE and SegE are first processed by ControlNet before being input to TCE attention in the middle and decoder blocks of the U-Net denoising module, where they guide the restoration process of SDM reverse sampling.

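TDIRM adopts the same loss function as the original SDM, as specified in Equation (2):

$$\mathcal{L} = \mathbb{E}_{z_0,\, t,\, c,\, \epsilon \sim \mathcal{N}(0, I)} \left[ \left\| \epsilon - \epsilon_\theta(z_t, t, c) \right\|_2^2 \right] \tag{2}$$

where $t$ represents the time step of the diffusion process, $\epsilon$ is the standard Gaussian noise, $z_t$ is the latent feature after adding noise, $\epsilon_\theta$ is the noise predicted by the network, and $c$ is the control condition.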
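The noise-prediction objective can be sketched numerically as follows. This is a toy illustration in plain Python, not the training code of TDIRM: the latent is a short list of numbers, the value of `alpha_bar_t` (the cumulative noise-schedule coefficient of the standard DDPM forward process) is chosen arbitrarily, and the denoising network is replaced by two stand-in predictors.

```python
import math
import random


def add_noise(z0, eps, alpha_bar_t):
    """Forward diffusion: z_t = sqrt(a) * z_0 + sqrt(1 - a) * eps."""
    a, b = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [a * z + b * e for z, e in zip(z0, eps)]


def noise_prediction_loss(eps, eps_pred):
    """Mean squared error between the true and the predicted noise."""
    return sum((e - p) ** 2 for e, p in zip(eps, eps_pred)) / len(eps)


random.seed(0)
z0 = [0.5, -1.0, 0.25, 2.0]                  # toy flattened latent
eps = [random.gauss(0.0, 1.0) for _ in z0]   # standard Gaussian noise
z_t = add_noise(z0, eps, alpha_bar_t=0.64)   # noisy latent fed to the network

# Stand-ins for the network output given (z_t, t, c): a perfect predictor
# and a trivial one that always outputs zero.
perfect_loss = noise_prediction_loss(eps, eps)
zero_loss = noise_prediction_loss(eps, [0.0] * len(eps))
```

A predictor that recovers the injected noise exactly drives the loss to zero, which is the behavior the training objective rewards regardless of how the control condition is supplied.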

Ultimately, using triple condition control to constrain the sampling process of SDM can effectively enhance the quality and fidelity of the restored image while utilizing SDM’s powerful prior knowledge about the real world.

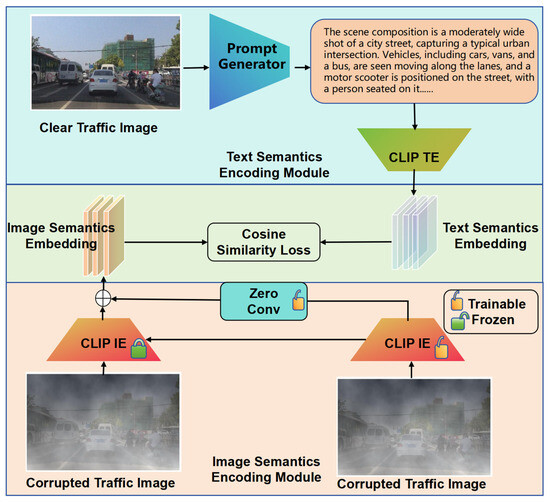

3.4.2. Image Semantics Encoder Module

When a pre-trained CLIP image encoder is used directly to extract features from a degraded image, the latent features inevitably incorporate degradation information such as rain or fog noise. This contamination interferes with the reverse sampling process of SDM. At the same time, if latent features are extracted directly from text prompts, ambiguity in the text description may produce results that are inconsistent with the actual background semantics. To address this issue, we propose the image semantics encoder module (ISE). Its output, SE, contains features that align with the latent description of the real background while excluding degradation-related information, and it effectively constrains the sampling generation process. This module includes an image semantics encoding module and a text semantics encoding module, as shown in Figure 6.

Figure 6.

Network structure of image semantics encoder module (ISE).

The image semantics encoding module contains two image encoders: one is a pre-trained CLIP image encoder with frozen parameters to preserve its powerful capability for extracting general visual semantic features. The other is a trainable copy of the CLIP image encoder, which gradually fuses features at each level with the frozen version through zero convolution. In the initial training phase, the output of zero convolution approximates the original features from the frozen version. As training progresses, the trainable version gradually adjusts its output features. This design prevents randomized initial parameters from corrupting pre-trained features during early training stages, thereby ensuring model stability.
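The effect of zero convolution at the start of training can be illustrated with a small sketch. It assumes, in the ControlNet style, that the zero-initialized 1 × 1 convolution is applied to the trainable branch and its output is added to the frozen features; the additive fusion, the function names, and all numeric values are assumptions made for illustration.

```python
def conv1x1(features, weight, bias):
    """1x1 convolution applied to a list of C-dimensional feature vectors."""
    return [[sum(w * x for w, x in zip(row, vec)) + b
             for row, b in zip(weight, bias)] for vec in features]


def fuse(frozen_feat, trainable_feat, weight, bias):
    """Add the convolved trainable features to the frozen features."""
    delta = conv1x1(trainable_feat, weight, bias)
    return [[f + d for f, d in zip(fv, dv)] for fv, dv in zip(frozen_feat, delta)]


channels = 3
zero_weight = [[0.0] * channels for _ in range(channels)]  # zero initialization
zero_bias = [0.0] * channels

frozen = [[0.2, -0.1, 0.7], [1.0, 0.5, -0.3]]       # frozen encoder output
trainable = [[0.9, 0.4, -0.6], [-0.2, 0.8, 0.1]]    # trainable copy output

# At initialization the fused features are exactly the frozen features.
fused_at_init = fuse(frozen, trainable, zero_weight, zero_bias)

# After some training the weights are no longer zero (values made up here),
# so the trainable branch starts to adjust the fused features.
trained_weight = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]]
fused_later = fuse(frozen, trainable, trained_weight, zero_bias)
```

Because the contribution of the trainable copy starts at zero, the pre-trained features pass through unchanged during the first steps, which is the stability property described above.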

The text semantics encoding module is used only during the training phase. It employs a prompt generator to produce text prompts corresponding to clear traffic images, which are then fed into a pre-trained CLIP text encoder to obtain text semantics embeddings that match the textual descriptions. The image semantics encoding module, in turn, extracts degradation-free image semantics embeddings from DI. These image embeddings are aligned with the text semantics embeddings using cosine similarity as the loss function during training. After training, the image semantics encoder is integrated into TDIRM to extract image semantic latent features, which serve as the first condition embedding, SE.
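A cosine-similarity alignment loss can be sketched as below. The form `1 - cos(u, v)` is an assumption (the exact formulation is not specified here), and the three-dimensional vectors are toy stand-ins for CLIP embeddings.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def alignment_loss(image_emb, text_emb):
    """1 - cosine similarity: 0 when aligned, 2 when pointing in opposite directions."""
    return 1.0 - cosine_similarity(image_emb, text_emb)


text_emb = [1.0, 0.0, 0.0]                              # toy text embedding
aligned = alignment_loss([2.0, 0.0, 0.0], text_emb)     # same direction
orthogonal = alignment_loss([0.0, 3.0, 0.0], text_emb)  # unrelated direction
opposite = alignment_loss([-1.0, 0.0, 0.0], text_emb)   # opposite direction
```

The loss depends only on the direction of the embeddings and not on their magnitude, so minimizing it pulls the image embedding toward the text embedding of the clear scene without constraining its norm.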

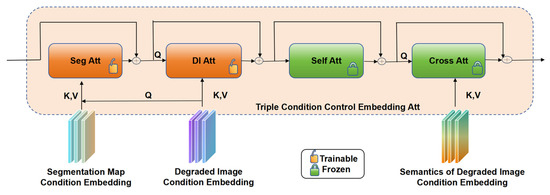

3.4.3. Triple Control Embedding Attention Module

As shown in Figure 7, to efficiently utilize all control information, the triple control embedding attention module (TCE) is designed to replace the original attention modules in the middle blocks and decoder blocks of the denoising U-Net module. In the TCE attention module, parameters of original self-attention and cross-attention modules in U-Net are frozen. Degraded image attention and segmentation map attention are added before them, which are trainable.

Figure 7.

Network structure of triple control embedding attention module (TCE).

Q, K, and V represent query, key, and value features in the attention mechanism, respectively. For Seg attention, Q comes from degraded image condition control, and K and V come from segmentation map condition control. This allows full utilization of latent information in degraded image DI. For DI attention, Q comes from latent feature Z, which is used to denoise iteratively in U-Net, and K and V come from degraded image condition control. For the original cross-attention module, K and V come from semantics embedding SE of the degraded image. This is used to guide the reverse sampling process of U-Net.

In summary, through the TCE attention module, the U-Net denoising process can more fully utilize all control conditions, improving fidelity and generation quality of the restored image.
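The routing of Q, K, and V through the TCE attention module can be sketched as a chain of scaled dot-product attentions. This is a simplified illustration under several assumptions that are not stated in the text: learned projection matrices are omitted, each attention is wrapped in a residual connection, and the output of Seg attention is used as the degraded image control for DI attention. All names and values are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of token vectors."""
    scale = math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        scores = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        out.append([sum(s * v[d] for s, v in zip(scores, values))
                    for d in range(len(values[0]))])
    return out


def residual(x, y):
    """Element-wise sum of two token sequences of equal shape."""
    return [[a + b for a, b in zip(xr, yr)] for xr, yr in zip(x, y)]


def tce_attention(z, di_ctrl, seg_ctrl, se):
    # Seg attention: Q from the degraded image control, K/V from the segmentation control.
    di_seg = residual(di_ctrl, attention(di_ctrl, seg_ctrl, seg_ctrl))
    # DI attention: Q from the latent Z, K/V from the degraded image control
    # (assumed here to be the segmentation-aware output of the previous step).
    z = residual(z, attention(z, di_seg, di_seg))
    # Original self-attention (frozen in TDIRM).
    z = residual(z, attention(z, z, z))
    # Original cross-attention (frozen): K/V from the semantics embedding SE.
    return residual(z, attention(z, se, se))


# Toy token sequences with feature dimension 2.
z = [[1.0, 0.0], [0.0, 1.0]]
di_ctrl = [[0.5, 0.5], [1.0, -1.0]]
seg_ctrl = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
se = [[0.2, 0.8]]
restored_latent = tce_attention(z, di_ctrl, seg_ctrl, se)
```

The output keeps the shape of the latent Z, so a module of this kind can take the place of an existing attention block without changing the surrounding U-Net layers.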

3.4.4. Experiment Details

The pretrained model is based on Stable Diffusion 1.5. The framework employs Python 3.11 + PyTorch 2.3.1 + CUDA 11.8, with training conducted on two V100-32G GPUs. The Adam optimizer is adopted with a batch size of 32, and input images are resized to 512 × 512 resolution. The training process consists of two parts:

First, image semantics encoder is trained with a learning rate of for 500 epochs. After training, its parameters are frozen and incorporated into TDIRM for the overall model training. The second part involves training the ControlNet module and U-Net denoising module in TDIRM. In the ControlNet module, parameters are initialized using the pretrained encoder and middle blocks from the U-Net of SDM. In TCE attention module, parameters are initialized with self-attention module weights from SDM. Training is performed with a learning rate of for 5000 iterations. The DDPM sampling method is employed with the timestep set to 150.

Evaluation Metrics: The study employs two supervised metrics, PSNR (peak signal-to-noise ratio) and SSIM (structural similarity index), to quantify the similarity between restored images and their clear reference images. Higher PSNR and SSIM values indicate greater similarity. Additionally, NIQE (natural image quality evaluator) is adopted to assess the naturalness and perceptual quality of restored images, where a lower NIQE score signifies more natural-looking results.
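As a reminder of how PSNR is obtained, the following sketch computes it from the mean squared error between a reference image and a restored image. Images are represented as flat lists of 8-bit pixel values, and the pixel values are invented for illustration.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """PSNR in dB between two equally sized images given as flat pixel lists."""
    mse = sum((r - x) ** 2 for r, x in zip(reference, restored)) / len(reference)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [0, 64, 128, 255]
restored = [10, 54, 138, 245]     # every pixel is off by 10, so MSE = 100
score = psnr(reference, restored)  # about 28.13 dB
```

Since PSNR is logarithmic in the mean squared error, a gain of about 3 dB corresponds to roughly halving that error.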

Beyond these three metrics, the evaluation also includes mAP, precision, and recall to measure improvements in vehicle detection accuracy before and after image restoration.
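Precision and recall at a fixed IoU threshold can be sketched as follows. The greedy one-to-one matching shown here is a common simplification and is not necessarily the exact protocol of the evaluation toolkit used in this study; the boxes are invented, and predictions are assumed to be sorted by confidence.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching of predictions to ground-truth boxes."""
    unmatched = list(ground_truth)
    true_positives = 0
    for box in predictions:
        best = max(unmatched, key=lambda gt: iou(box, gt), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            unmatched.remove(best)  # each ground-truth box is matched once
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 60, 60)]
predictions = [(1, 1, 10, 10), (21, 20, 30, 30), (80, 80, 90, 90), (0, 0, 4, 4)]
precision, recall = precision_recall(predictions, ground_truth)
```

In this example two of the four predictions match a ground-truth vehicle, giving a precision of 0.5, and two of the three vehicles are found, giving a recall of 2/3. A restoration step that recovers the missed vehicle without adding false alarms would raise both values.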

3.4.5. Datasets

The study selects fog—one of the most challenging weather conditions that significantly impacts vehicle detection—as the research subject for model validation. Notably, the entire process does not explicitly incorporate any fog-specific prior knowledge like physical characteristics. That means TDIRM is not merely a restoration model specialized for a single adverse condition type. For other interference types, the model can achieve comparable processing effects through similar fine-tuning approaches.

The training data consist of two components: (1) the synthesized paired foggy images (854 × 3 pairs) used for robustness evaluation in Section 3.1.2, and (2) the OTS synthetic image pairs (538 × 3 pairs) from RESIDE, where each clear image corresponds to three levels of foggy images. These datasets are split into training and test sets at an 8:2 ratio. Additionally, to effectively restore degraded traffic images in real-world scenarios, the evaluation particularly focuses on comparing restoration results using 76 real dense fog traffic images from the DAWN dataset. Unlike synthetic image pairs, these real-world images lack corresponding clear references.

4. Results and Analysis

4.1. Vehicle Detection Results

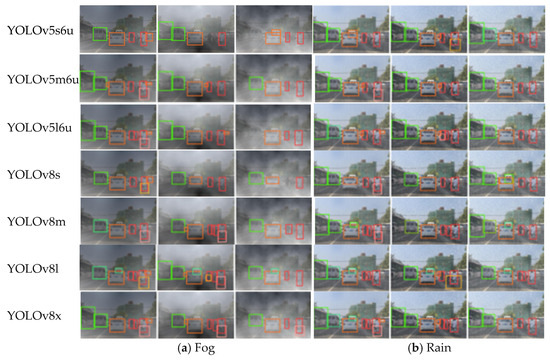

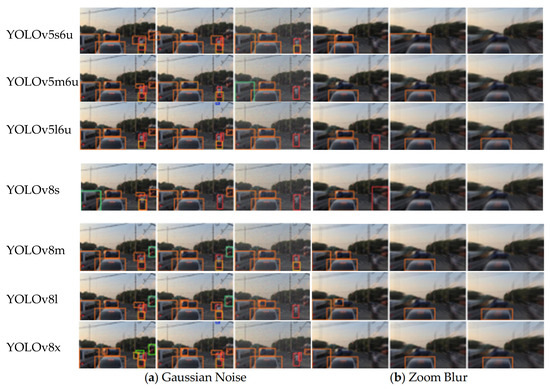

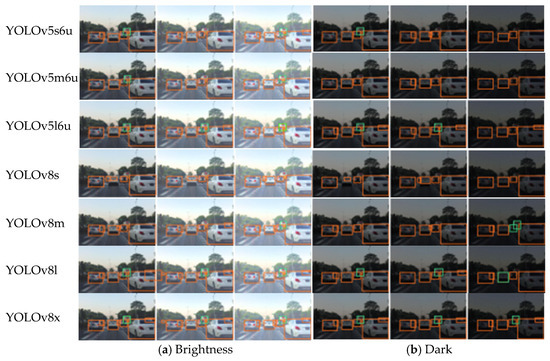

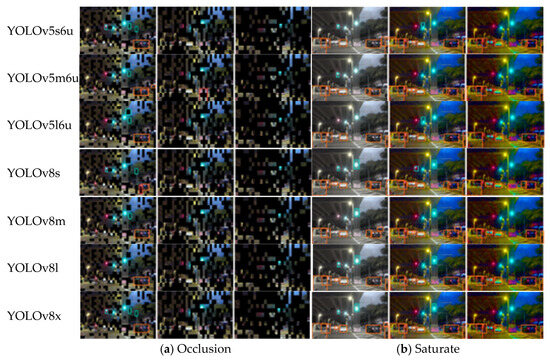

We present visual detection results for eight types of corruption: fog, rain, Gaussian noise, zoom blur, brightness, dark, occlusion, and saturate. As illustrated, as the severity of corruption increases, the number of targets detected by the model decreases, and models with a larger number of parameters exhibit better detection performance. Only the visualizations for YOLOv5 and YOLOv8 are presented here; evaluation results of the remaining models are supplemented in Section 4.4.

In fog corruption, some detail in an otherwise clear image is lost because light is absorbed and scattered by suspended particles in the atmosphere. The resulting blur, contrast reduction, and color distortion greatly affect vehicle detection in practice. Rain usually comes with fog. Dense rain streaks not only occlude the vehicles in the traffic scene but also lead to the emergence of fog and weaken the visual enhancement function of the vehicle camera. Residual rain marks severely degrade the image quality of the vehicle camera, blurring and distorting the image and greatly reducing the accuracy of vehicle detection. In addition, road conditions on a rainy day are complex, and the vehicles to be detected overlap densely and appear at different scales, which further increases the difficulty of detection.

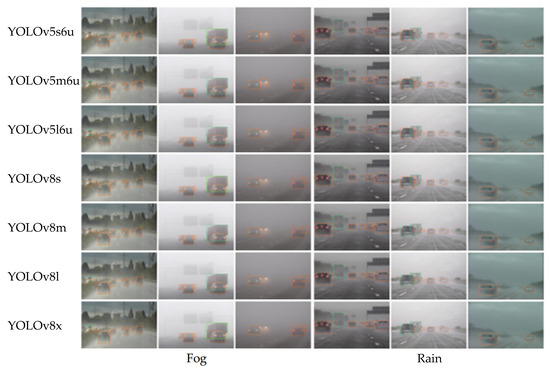

As can be seen from Figure 8, in severe rain or fog, the models are more likely to miss vehicles. Moreover, fog has a greater impact on detection performance than rain, possibly because fog is more likely to cause the loss of important contour information of the target.

Figure 8.

Visualization results under fog and rain (three corruption levels).

As can be seen from Figure 9, Gaussian noise has a relatively minor impact on the detection results, whereas zoom blur significantly affects the detection outcome, even rendering the model almost incapacitated. Zoom blur can alter the shape of the target to a certain degree and blur its features, posing a great challenge for the model to correctly detect the original target.

Figure 9.

Visualization results under Gaussian noise and zoom blur (three corruption levels).

As can be seen from Figure 10, brightness and dark both affect the detection results to some extent. When the degree is mild, the impact is limited, but when it is severe, the model’s performance declines significantly. Excessive lighting can weaken the contours and features of the target, while insufficient lighting may result in the camera failing to capture effective target features. They both pose great challenges for the model to correctly detect the original target.

Figure 10.

Visualization results under brightness and dark (three corruption levels).

As can be seen from Figure 11, saturation has a relatively minor impact on the detection results, but occlusion can significantly reduce the model’s performance, even rendering the model almost incapacitated. When parts of a vehicle are obscured, it becomes challenging for the model to recognize them due to the loss of their complete features.

Figure 11.

Visualization results under occlusion and saturate (three corruption levels).

4.2. Robustness Results on Synthetic Images

We show the corruption robustness of YOLOv5 and YOLOv8 models on test data in Table 4, Table 5 and Table 6 in order to verify the effect of model volume on robustness. Three tables represent the evaluation results under light, middle, and severe conditions, respectively. Evaluation results of the remaining models are supplemented in Section 4.4. A high correlation exists between the robustness of corruption detection and the accuracy of clean detection.

Table 4.

The benchmark results of YOLOv5 and YOLOv8. We show robustness metric (mAP50) under each corruption based on the car, truck, and bus classes at light level.

Table 5.

The benchmark results of YOLOv5 and YOLOv8. We show robustness metric (mAP50) under each corruption based on the car, truck, and bus classes at middle level.

Table 6.

The benchmark results of YOLOv5 and YOLOv8. We show robustness metric (mAP50) under each corruption based on the car, truck, and bus classes at severe level.

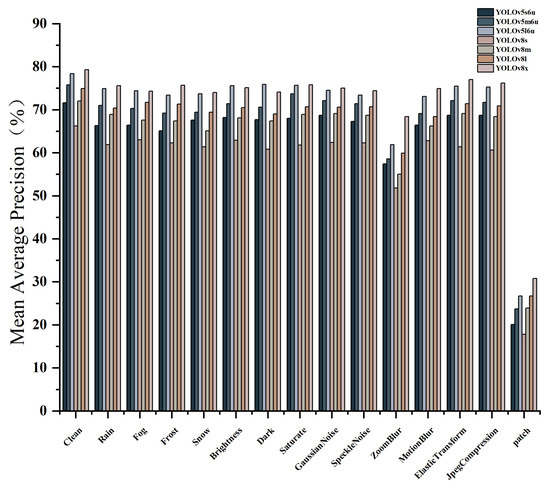

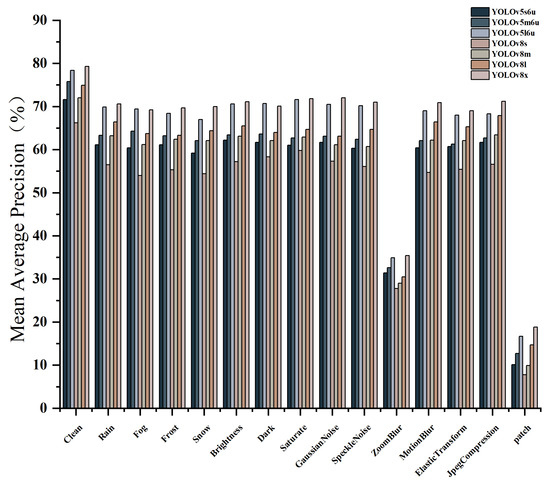

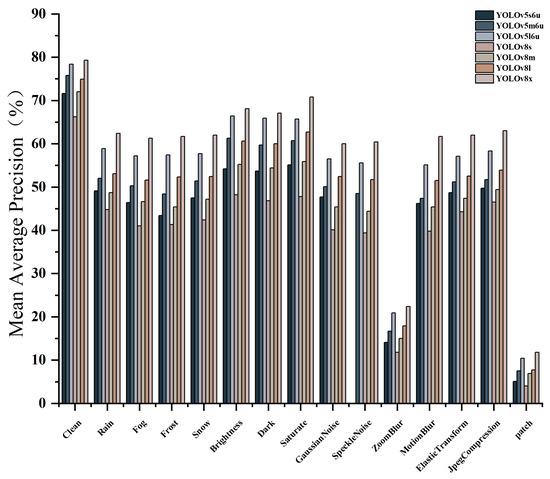

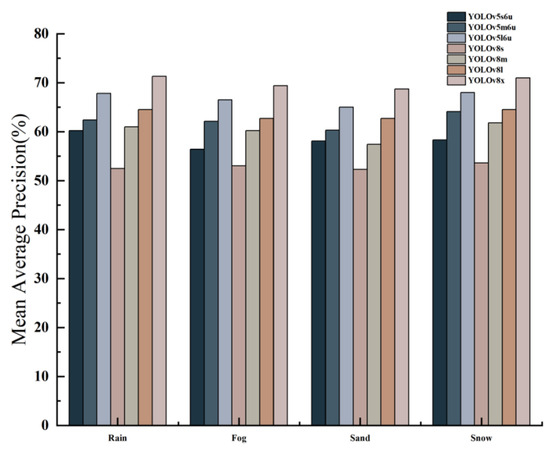

Additionally, we plot bar charts for results to provide a visual representation of the robustness metric changes under different corruption levels. Figure 12, Figure 13 and Figure 14 represent the evaluation results under light, middle, and severe conditions, respectively.

Figure 12.

Bar chart of benchmark results of YOLOv5 and YOLOv8. We show change in robustness metric (mAP50) under different corruption types at light level.

Figure 13.

Bar chart of benchmark results of YOLOv5 and YOLOv8. We show change in robustness metric (mAP50) under different corruption types at middle level.

Figure 14.

Bar chart of benchmark results of YOLOv5 and YOLOv8. We show change in robustness metric (mAP50) under different corruption types at severe level.

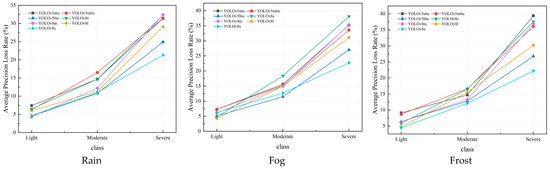

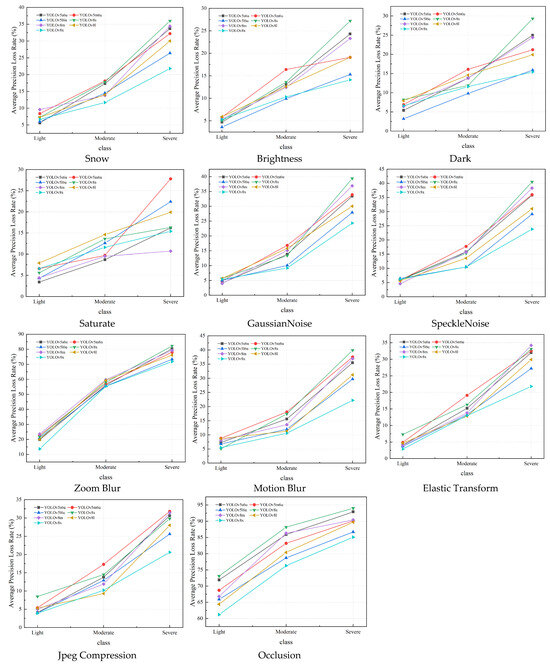

Based on the evaluation results, we obtain several findings. Adverse weather conditions, blur, and occlusion have the most significant impact on the model's performance, whereas color and noise have a relatively minor effect. Moreover, the performance of the model continually declines with increasing severity for all types of corruption. For instance, severe fog and dark result in poor detection performance by the model. Furthermore, within models of the same category, a larger number of parameters tends to give the model stronger robustness against corruption at all severity levels. For instance, at the same level of corruption, YOLOv8x achieves a higher mAP than YOLOv8s, YOLOv8m, and YOLOv8l, suggesting that the model size is positively correlated with its ability to resist adverse conditions. Finally, to quantitatively analyze the change in model performance with the increasing severity of corruption, Figure 15 illustrates the decline rate of the model's performance compared to clean images.

Figure 15.

The average precision loss rate of YOLOv5 and YOLOv8. The results are evaluated based on the car, truck, and bus classes (three corruption levels).
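One way to express such a decline rate is the relative drop in mAP with respect to the clean-image result. The sketch below assumes this definition, which may differ in detail from the one used to produce Figure 15, and the mAP50 values are placeholders rather than results from this study.

```python
def precision_loss_rate(clean_map, corrupted_map):
    """Relative drop in mAP with respect to the clean-image result."""
    return (clean_map - corrupted_map) / clean_map


# Placeholder mAP50 values, not results from the paper.
clean = 0.80
by_level = {"light": 0.72, "middle": 0.60, "severe": 0.32}
loss_rates = {level: precision_loss_rate(clean, m) for level, m in by_level.items()}
```

With these placeholder numbers the loss rate is about 0.10, 0.25, and 0.60 for the three levels, so the step from middle to severe is much larger than the step from light to middle, which is the kind of sharp drop that a relative measure makes visible.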

4.3. Robustness Results Under Real Adverse Weather

Because of the huge cost of collecting different kinds of corruption data from the real world, it is impractical to form large-scale real datasets. To demonstrate that synthetic data can replace real data to some extent, we conducted the same robustness benchmark tests on a subset of a real adverse weather dataset and compared the results. We chose 271 images that depict vehicles in various weather conditions from the DAWN [33] dataset, which is divided into four weather conditions: fog, rain, snow, and sandstorms. As in the previous part, Figure 16 and Figure 17 present visual detection results for the four types of corruption: fog, rain, snow, and sandstorm.

Figure 16.

Visualization results under fog and rain corruption under real conditions.

Figure 17.

Visualization results under snow and sand corruption under real conditions.

We evaluate the real weather images in the same manner. Table 7 and Figure 18 indicate that as the level of corruption increases, there is a continuous decline in model performance. Fog and sandstorms have a more pronounced impact on the model. Additionally, there is a positive correlation between the size of the model and its ability to resist corruption. This is consistent with the conclusions drawn from the proposed synthetic dataset, validating that synthetic corrupted images can substitute for real corrupted ones.

Table 7.

The benchmark results of YOLOv5 and YOLOv8 on real adverse weather dataset.

Figure 18.

Bar chart of benchmark results of YOLOv5 and YOLOv8 on real adverse weather dataset. We show change in robustness metric (mAP50).

4.4. Robustness Results on Other Models

We show the corruption robustness of five other vehicle detection models on test data in Table 8, Table 9 and Table 10, in which they represent the evaluation results under light, moderate, and severe conditions, respectively.

Table 8.

The benchmark results of remaining models. We show robustness metric (mAP50) under each corruption based on the car, truck, and bus classes at light level.

Table 9.

The benchmark results of remaining models. We show robustness metric (mAP50) under each corruption based on the car, truck, and bus classes at middle level.

Table 10.

The benchmark results of remaining models. We show robustness metric (mAP50) under each corruption based on the car, truck, and bus classes at severe level.

It can be observed that different corruption factors exhibit similar effects on various vehicle detection models, indicating that these corruptions have a universal impact across all models.

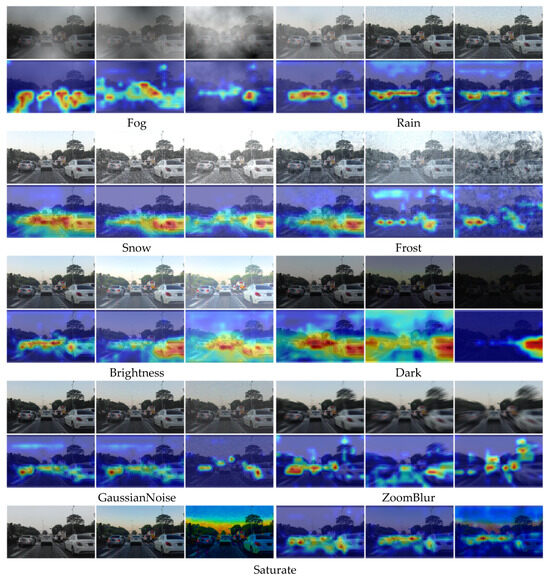

4.5. Visual Explanation About Robustness

Figure 19 shows the class activation map for the car category from the seventh convolutional layer of YOLOv8m, with a threshold of 0.5. As the degree of image corruption increases, fewer vehicles are detected, and the model’s focus areas on car category continuously diminish. Additionally, the focus areas may unpredictably deviate from the vehicles themselves. This indicates that under adverse conditions, when the data distribution of images changes, the features of the vehicles that the model focuses on are weakened. The activation level of the targets is significantly reduced, leading to difficulties in recognition.

Figure 19.

Class activation mapping of cars. Each type of corruption is divided into three levels.

4.6. Restoration Results of Degraded Images on TDIRM

On the synthetic traffic foggy image test set, quantitative comparisons were conducted between TDIRM and several mainstream dehazing algorithms: (1) the dark channel prior (DCP) algorithm [40], based on physical characteristics; (2) the color attenuation prior (CAP) algorithm [41], based on modeling the relationship between brightness and saturation; (3) FFA-Net [42], which enhances restoration capability through feature attention and multi-scale fusion; (4) AOD-Net [43], which jointly optimizes transmission and atmospheric light end-to-end; (5) DehazeFormer [44], which combines CNN's local feature extraction capability and transformer's global feature extraction capability; (6) Restormer [45], based on channel self-attention and gated convolutional feed-forward networks; (7) DiffBir [46], based on joint training of degradation estimation and diffusion generation; and (8) WeatherDiff [47], which supports multi-weather restoration.

As shown in Table 11, the comparison models fall into three categories: physics-based, CNN/transformer-based, and diffusion model-based. On the synthetic paired traffic foggy image test set, TDIRM achieved the best results in PSNR and NIQE. For SSIM, however, DehazeFormer and Restormer obtained slightly higher results than TDIRM. This demonstrates that TDIRM exhibits superior overall dehazing performance, particularly showing significant improvement in the naturalness of restored images. While ensuring the fidelity of restored images, TDIRM leverages the powerful implicit prior knowledge of SDM to enhance the realism and quality of images.

Table 11.

Quantitative comparison of different dehaze models on paired synthesized traffic foggy images.

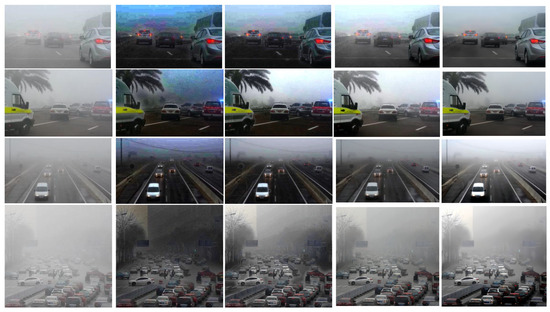

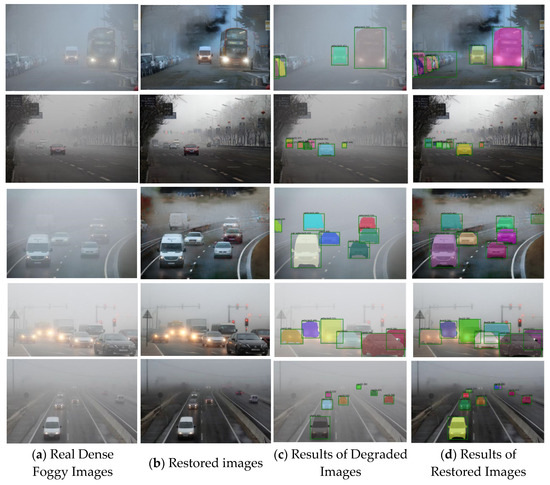

Since TDIRM is designed to efficiently restore degraded images in real traffic scenarios, we focus on validating its restoration performance on real-world degraded traffic images. As shown in Figure 20, the restoration results of several models on real dense fog traffic images are presented. Unlike synthetic image pairs, these real degraded images lack corresponding clear images as reference.

Figure 20.

Restoration effects comparison of real traffic dense fog images under different models.

As shown in Figure 20, both CAP and DehazeFormer exhibit certain degrees of contrast and tonal shifts, with a darker background. Restormer shows relatively limited effectiveness in removing dense fog. In comparison with other models, TDIRM not only maintains the fidelity of degraded images but also effectively restores color and texture information in traffic scenes, thereby enhancing overall image quality.

Notably, TDIRM can eliminate most fog occlusion while preserving original content and details across varying fog intensities. It demonstrates particularly strong restoration performance for moderate and light fog, where it nearly completely removes fog effects. However, its performance remains somewhat limited for dense fog. This limitation primarily stems from the fact that the model was trained on synthetic fog-clear image pairs, whose data distribution differs from that of real-world fog images, and the performance gap becomes more pronounced as fog severity increases.

Furthermore, due to the characteristics of traffic degradation images, in upper image regions (sky areas), dense fog almost completely obscures objects. This makes complete fog removal particularly challenging in these areas.

Although the model cannot completely eliminate fog interference, it is still capable of clearly restoring most traffic elements. Vehicle features that were difficult to distinguish due to fog occlusion and small target vehicles that were originally overlooked in the distance reappear after restoration.

4.7. Optimization Results of Vehicle Detection Robustness

To verify this improvement, we conducted comparative experiments on vehicle detection before and after the restoration of degraded traffic images. The evaluation employed precision, recall, and mAP (with an IoU threshold of 0.5) as metrics, tested on 76 real dense fog traffic images from DAWN. The qualitative results of vehicle detection before and after restoration are shown in Figure 21, while the quantitative results are presented in Table 12.

Figure 21.

Qualitative comparison of vehicle detection results before and after recovery in real traffic dense fog images.

Table 12.

Quantitative comparison of vehicle detection results before and after recovery in real traffic dense fog images.

Figure 21 clearly demonstrates that TDIRM can effectively remove fog interference, particularly for large target vehicles in front. The model also has certain restoration capabilities for small distant vehicles that were severely obscured by fog, significantly improving their detection probability and successfully detecting vehicles that were previously missed due to blurred features. Moreover, images restored by TDIRM maintain high fidelity without arbitrarily altering the traffic elements present in the original degraded images, ensuring strong consistency between input and output. The model effectively recovers color and texture information in traffic scenes, thereby enhancing overall image quality.

As shown in Table 12, after processing through TDIRM, the mAP of vehicle detection in real foggy images increased by 6.92%, with both precision and recall rates improving by over 4%. These results indicate that real foggy images restored by TDIRM significantly improve vehicle detection accuracy while reducing the false detection rate. Additionally, confidence levels of detected vehicles all showed some degree of improvement. This indicates that TDIRM can effectively enhance the robustness of vehicle detection in adverse traffic environments.

Table 13.

Ablation experiments for different modules of TDIRM.

As shown in Table 13, ablation experiments were conducted for ISE and TCE to validate the effectiveness of different components in TDIRM. CLIP IE refers to the pretrained CLIP image encoder, while SelfAtt denotes the self-attention module. The results demonstrate that replacing the CLIP image encoder with the ISE significantly improves both PSNR and SSIM metrics. This indicates that ISE can effectively reduce the impact of degradation information during the semantic extraction process, thereby minimizing its interference with the SDM reverse sampling procedure and ensuring better consistency and fidelity in restored images. Furthermore, compared to SelfAtt, TCEAtt more effectively integrates different types of control embeddings during the U-Net reverse denoising process. This enhanced integration capability leads to superior preservation of image fidelity in final restoration results.

Table 14.

Ablation experiments for different control embedding of TDIRM.

Table 14 demonstrates the effectiveness of different condition controls in TDIRM. The degraded image embedding DIE serves as an essential component since it represents the original input control condition, while both semantics embedding SE and segmentation embedding SegE function as optional controls. SE encapsulates comprehensive semantic information, including structure, objects, and layout within the entire image, whereas SegE specifically contains all critical traffic element information. The result reveals that combining SE with DIE achieves approximately 3 dB PSNR improvement over using DIE alone, along with significant enhancements in both SSIM and NIQE. This confirms that image semantic information extracted by ISE can strengthen SDM’s understanding of degraded image content, thereby effectively improving restoration fidelity. Image semantics features outperform textual semantics features since visual features capture spatial details, while a single text description may correspond to multiple objects distributed across different image regions.

Further analysis shows that incorporating SegE leads to substantial metric improvements. This stems from SegE’s inclusion of critical traffic element attributes—including category, position, and size—which ensures the restored images preserve original traffic elements without arbitrary modifications. As the core component of TDIRM, SegE significantly enhances both the fidelity and consistency of restored images.

5. Discussion and Conclusions

In this study, we examined two crucial aspects of vehicle detection: robustness benchmark evaluation and robustness optimization. Fourteen fundamental factors that significantly and frequently affect vehicle detection in real traffic scenarios were designed according to the entire chain of vehicle detection. By conducting extensive experiments on both synthetic datasets and a smaller-scale real weather dataset, the impact of these factors on various real-time vehicle detection methods was comprehensively evaluated using multiple metrics. Our findings revealed that adverse weather, motion, and occlusion are the most detrimental factors to vehicle detection performance. For instance, blur caused by vehicle motion can greatly weaken vehicle features, leading to a sharp drop in model accuracy. The impact of color changes and noise, while present, is relatively less pronounced. In addition, the robustness of vehicle detection is closely linked to baseline performance and model size. Larger models with more parameters tend to exhibit greater resilience against adverse conditions. However, it is notable that as the severity of corruption intensifies, the performance of models does not decline gradually but experiences a sharp drop. This indicates that there are critical thresholds beyond which the models struggle to maintain their detection capabilities. Meanwhile, results of the class activation map indicate that under adverse conditions, when the data distribution of images changes, the features of the vehicles that the model focuses on are weakened. The activation level of the targets is significantly reduced, making it difficult for the model to accurately identify vehicles.

To address the challenges posed by degraded images in real-world traffic scenarios, TDIRM was proposed based on SDM. TDIRM employs a triple collaborative control mechanism, comprising the image semantics embedding SE for separating degradation information, the degraded image embedding DIE for maintaining structural consistency, and the segmentation embedding SegE for preserving critical targets, which significantly enhances image restoration fidelity. Experimental results demonstrate that TDIRM improves vehicle detection mAP by 6.92% on real dense fog data, especially for small distant vehicles that were severely obscured by fog. TDIRM also achieves superior PSNR, about 30.69 dB, in restored images compared to some dehaze models. By enabling semantic-structural-content collaborative optimization within the diffusion framework, TDIRM establishes a novel paradigm for traffic scene image restoration.

However, it is essential to acknowledge the limitations of this study. The complex and diverse nature of adverse conditions, coupled with the scarcity of data, prevented us from considering all potential adverse factors comprehensively. This may have led to an incomplete understanding of the full range of challenges that vehicle detection models face in real scenarios. In the future, a more detailed study on the impact of adverse conditions on the robustness of vehicle detection is warranted, which could involve exploring the combined effects of multiple adverse factors. Such research will contribute to the development of more robust and reliable vehicle detection systems for applications such as intelligent transportation, video surveillance, and autonomous driving.

Author Contributions

Conceptualization, J.C.; methodology, Y.G.; software, J.C.; validation, Y.G.; formal analysis, J.C.; investigation, Y.G.; resources, J.T.; data curation, J.T.; writing—original draft preparation, Y.G.; writing—review and editing, J.C.; visualization, Y.G.; supervision, J.T.; project administration, J.T.; funding acquisition, J.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by Key R&D Program of Hunan Province (2023GK2014), National Natural Science Foundation of China (52172310), Natural Science Foundation of Hunan Province (2022JJ30763).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Appendix A

Appendix A.1. Specific Introduction of Some Detection Models

Table A1 shows advantages and disadvantages of different detection models mentioned in the introduction.

Table A1.

Advantages and disadvantages of different detection models.

| Model | Advantages | Disadvantages |
|---|---|---|
| Faster R-CNN [1] | High detection accuracy, especially suitable for detecting objects with complex shapes and various scales. | Relatively slow inference speed. |
| YOLO [2] | Fast detection speed, simple architecture, easy to train and deploy. | Lower detection accuracy, especially for small and occluded objects. |
| YOLOv3 [3] | Utilizes feature pyramids to better handle objects at different scales. | Higher computational cost than the original YOLO. |
| SSD [4] | Effective in detecting small objects by using multi-scale feature maps. | Detection accuracy for large objects may be lower. |
| CornerNet [5] | High-precision object localization by directly detecting corner points. | Poor robustness in complex backgrounds. |
| CenterNet [6] | Good performance in handling object occlusion. | Sensitive to object pose changes. |
| Cascade R-CNN [7] | Significantly improves detection accuracy through a cascade structure. | Slow detection speed. |
| RepPoints [8] | Good adaptability to object shape and pose changes. | Slow detection speed. |
| DETR [9] | Strong ability in set-based object prediction. Good generalization performance in different datasets. | Slow convergence speed, requiring a long training time. |

Appendix A.2. Specific Introduction of Some Factors

Gaussian noise refers to statistical noise that follows a Gaussian distribution. It degrades image quality by obscuring fine details and attenuating edges. This type of noise can originate from various sources, such as sensor imperfections, transmission interferences, or environmental conditions.

Speckle noise is a granular interference that inherently appears due to the coherent process of the image acquisition. The manifestation of speckle noise can obscure critical details, hinder the extraction of meaningful features, and, subsequently, impede accurate interpretation and analysis of image data.

Zoom blur simulates the effect of rapid forward or backward movement in the visual field, akin to the effect achieved through zooming a camera lens during exposure. Unlike uniform blurs, zoom blur affects different regions of an image to varying degrees, complicating tasks such as edge detection, feature extraction, and object recognition.

Motion blur emerges when either the camera or the subject exhibits movement during the exposure time of a single frame. It is characterized by a smearing along the direction of movement, which can obfuscate details and attenuate high-frequency components essential for various computer vision tasks.

Brightness refers to the perceived intensity of light emanating from an image. It is often subject to various intrinsic and extrinsic factors, such as lighting conditions, surface properties of imaged objects, and sensor characteristics. Uneven or inappropriate brightness levels can obscure details, hinder the extraction of meaningful features, and consequently, impede the accurate interpretation and analysis of image data. In this paper, brightness specifically refers to being too bright.

Darkness significantly impacts the visibility and discernibility of vital features within an image. In dark scenarios, image sensors capture limited photons, often resulting in visuals that are not only dim but also pervaded by noise, thereby diminishing the quality and usability of the acquired data. The subtleties and nuances of objects become veiled in darkness, making vehicle detection particularly arduous.

Saturation pertains to the intensity of colors in an image. A highly saturated image exhibits vivid and rich colors, while an image with low saturation appears more muted. Over-saturation and under-saturation can both introduce challenges: the former may lead to loss of detail and color clipping, while the latter may obscure color-based features and distinctions.

JPEG compression can reduce image file sizes by quantizing the transformed coefficients and subsequently encoding them. It introduces artifacts and losses of high-frequency information in the image, such as blocking, ringing, and mosquito noise, which can potentially mask critical details and alter visual features.

Elastic transform involves applying simulated deformations to an image, such as stretching, shearing, and bending, thereby generating new data instances that maintain the intrinsic features of the original image while introducing variability. However, elastic transform will to some extent alter the geometric features of the vehicle, making vehicle detection difficult.

Appendix A.3. Basis for Parameter Selection of Synthesis Methods

Based on the Imgaug library, for corruption types with relatively clear causes, such as noise, contrast, and compression transform, we adopt its predefined synthesis methods under the premise of being as realistic as possible. For those with complex causes, such as rain, snow, fog, darkness, or occlusion, we add some additional conditions to the predefined synthesis methods so that the results approximate the real situation. We verified their rationality by evaluating real corruption images. As shown in Table 1, we provide simple and fast data generation methods for reasonably evaluating the robustness of vehicle detection models in deployment. The following is the basis for parameter selection when we add additional conditions.

Synthesizing realistic corrupted images for various types of corruption is complex. Although this paper focuses on basic types of corruption, the synthesis methods for most corruptions are in practice a superposition of multiple basic corruptions, so that the synthesized data are as consistent with real-world scenarios as possible, as shown in Table 1. The synthesis method for rain is to add raindrops (size = (0.025, 0.05), speed = (0.25, 0.5)), add splatter with predefined severities {1, 2, 3}, add motion blur (k = 3), and reduce the brightness by 20%. In other words, for rain we model the occlusion caused by raindrops and their splashing, the blur caused by the movement of raindrops, and the dim light on rainy days. For fog, snow, and frost, based on the predefined synthesis methods in Imgaug [51], we additionally add gray mask layers of different opacity according to the severity to reduce the image contrast, and we also reduce the brightness to simulate dim lighting. The synthesis method for darkness is as follows: the brightness is multiplied by (0.7, 0.5, 0.3), Gaussian noise is added with (1–5, 5–10, 10–20), and hue and saturation are adjusted with (−10–+10, −20–+20, −30–+30). For darkness, we thus consider the decrease in brightness, the Gaussian noise introduced by the sensor, and the changes in hue and saturation.

Fog. According to the atmospheric scattering model [51], the concentration of fog is inversely related to the transmittance. Changing the transmittance is equivalent to adding a grayscale mask layer. Based on multiple experimental observations, adding an opacity gray mask layer with 20% transparency is consistent with the fog concentration (middle class) with a visibility of 100 m in Foggy Cityscapes [52].
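The equivalence between changing the transmittance and adding a gray mask can be made explicit with the standard form of the atmospheric scattering model. The symbols below ($I$ for the observed image, $J$ for the clear scene, $A$ for the atmospheric light, $t$ for the transmittance, $\beta$ for the scattering coefficient, $d$ for the scene depth, and $\alpha$ for the mask opacity) are introduced here for illustration and are not taken from the paper's own notation:

$$
I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta d(x)} .
$$

If the transmittance is approximated by a constant $t$ over the whole image and $\alpha = 1 - t$, the model reduces to

$$
I(x) = (1 - \alpha)\,J(x) + \alpha\,A ,
$$

which is an alpha blend of the clear image with a uniform layer of value $A$. Under this simplification, a denser fog (larger $\beta$, smaller $t$) corresponds to a more opaque mask (larger $\alpha$).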

Therefore, for the predefined fog levels, we add an opacity gray mask layer with 10% transparency for light fog (visibility of 200 m), 20% transparency for moderate fog (visibility of 100 m), and 30% transparency for heavy fog (visibility of 50 m). In addition, considering that foggy days are often accompanied by decreased brightness, we reduce the image brightness by 20% to ensure consistency with the light intensity distribution of real foggy-day images.
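The additional fog conditions can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes that the 10%, 20%, and 30% figures are the blending weight of the gray layer, that the gray level is mid-gray (128), and that the function and constant names (`add_fog_mask`, `FOG_MASK_OPACITY`) are hypothetical. The predefined Imgaug fog layer itself is not reproduced here.

```python
import numpy as np

# Assumed mapping: severity level -> blending weight of the gray layer.
FOG_MASK_OPACITY = {1: 0.10, 2: 0.20, 3: 0.30}


def add_fog_mask(image, severity, gray_level=128.0, brightness_factor=0.8):
    """Blend a uniform gray layer over a uint8 image, then darken it.

    gray_level is an assumption (mid-gray); brightness_factor=0.8
    corresponds to the 20% brightness reduction described in the text.
    """
    alpha = FOG_MASK_OPACITY[severity]
    img = image.astype(np.float32)
    # Alpha blend: lowers contrast by pulling every pixel toward gray.
    blended = (1.0 - alpha) * img + alpha * gray_level
    # Global brightness reduction.
    darkened = blended * brightness_factor
    return np.clip(np.rint(darkened), 0, 255).astype(np.uint8)
```

Calling `add_fog_mask(frame, severity=2)` on an RGB frame would then correspond to the moderate-fog setting under these assumptions.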

Rain. The synthesis of rain is relatively complex. According to [53], the proportion of raindrops in the image is set to be between 0.025 and 0.05, and the falling speed of raindrops is set to be between 0.25 and 0.5. In addition, because the falling of raindrops will cause motion blur, the blur kernel is set to k = 3 with reference to the medium blur intensity in RainCityscapes [54]. Considering the splashing characteristics of raindrops, predefined splashing effects of different levels are added. Finally, image brightness is reduced by 20% to ensure consistency with the light intensity distribution of real rainy-day images.
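The last two steps of the rain pipeline (motion blur with k = 3 and a 20% brightness reduction) are simple enough to sketch directly. The example below is an illustration under stated assumptions: the blur is a plain vertical box kernel, whereas the direction used in the paper is not specified; the raindrop and splatter layers are omitted; and the function names are hypothetical.

```python
import numpy as np


def vertical_motion_blur(image, k=3):
    """Average each pixel with its vertical neighbours (odd k, edge-padded).

    A vertical kernel is an assumption made here because raindrops fall
    roughly downward; the paper only states the kernel size.
    """
    pad = k // 2
    img = image.astype(np.float32)
    padded = np.pad(img, ((pad, pad), (0, 0), (0, 0)), mode="edge")
    acc = np.zeros_like(img)
    for offset in range(k):
        acc += padded[offset:offset + img.shape[0]]
    return acc / k


def rain_post_process(image, k=3, brightness_factor=0.8):
    """Apply motion blur, then reduce brightness by 20% (factor 0.8)."""
    blurred = vertical_motion_blur(image, k)
    return np.clip(np.rint(blurred * brightness_factor), 0, 255).astype(np.uint8)
```

In a full pipeline, this step would run after the raindrop and splatter layers have been rendered onto the image.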

Snow. The brightness decrease in snow is related to the snowflake density. On real snowy days, the increase in the ground reflectance leads to a decrease in the overall brightness by about 15–25%. Therefore, the brightness of the image is reduced by 20%. In addition, according to the SFT dataset, an opacity gray mask with 10–30% transparency is added to the predefined snow levels to simulate different snow densities.

Frost. Frost is composed of tiny ice crystals. Frost is mainly manifested in the image as local contrast reduction and texture changes caused by the surface being covered with a white crystalline layer and local blur caused by the scattering of light by ice crystals [55]. Therefore, opacity gray masks with 10%, 20%, and 30% transparency are added to the predefined frost levels to simulate light, moderate, and heavy frost. At the same time, the brightness is reduced by 10% to reflect the high reflectivity of ice crystals.

Dark. Under dark conditions, the decrease in light intensity leads to reduced brightness, a significant increase in sensor noise, and color distortion due to the decrease in saturation. According to the ExDark dataset [56], the brightness of low-light images is approximately 30–50% of normal. Considering the driving differences at different times of the night, the brightness is multiplied by 0.7, 0.5, and 0.3 to correspond to 30%, 50%, and 70% decreases in light intensity, respectively. Referring to the SID dataset [57], the Gaussian noise intensity is set to 5–20. Finally, based on the color statistical characteristics in the ExDark dataset [48], the hue shift and saturation adjustment ranges are set as (−10–+10), (−20–+20), and (−30–+30) according to severity.
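A minimal sketch of the brightness and noise components of the darkness synthesis follows. It assumes that the noise ranges (1–5, 5–10, 10–20) are standard deviations on the 0–255 intensity scale and that one value is drawn uniformly from the range per image; both are assumptions, as are the names `DARK_LEVELS` and `darken`. The hue and saturation shift is left out because it requires a color-space conversion that would obscure the main idea.

```python
import numpy as np

# Per-severity parameters from the text; interpreting the noise ranges
# as standard deviations on the 0-255 scale is an assumption.
DARK_LEVELS = {
    1: {"brightness": 0.7, "noise_sigma": (1.0, 5.0)},
    2: {"brightness": 0.5, "noise_sigma": (5.0, 10.0)},
    3: {"brightness": 0.3, "noise_sigma": (10.0, 20.0)},
}


def darken(image, severity, rng=None):
    """Scale brightness and add zero-mean Gaussian sensor noise."""
    rng = np.random.default_rng() if rng is None else rng
    level = DARK_LEVELS[severity]
    img = image.astype(np.float32) * level["brightness"]
    # Draw one noise level for the whole image from the severity's range.
    sigma = rng.uniform(*level["noise_sigma"])
    img = img + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(np.rint(img), 0, 255).astype(np.uint8)
```

Passing a seeded generator, for example `darken(frame, 3, rng=np.random.default_rng(0))`, keeps the synthesized benchmark reproducible.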

Occlusion. The occlusion statistics in KITTI [31] show that the average occlusion ratio of vehicles in traffic scenes is 20–50%, and it can reach 70% in extreme cases. Therefore, we adopt random rectangular occlusion, and the size of the occluded area is 0.01–0.02% of the total image area to remove some features of the vehicle. The removed proportion of the total area is divided into 30%, 50%, and 70% according to severity.
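The occlusion procedure can be sketched as repeatedly dropping small patches until the target share of the image is covered. This is one possible reading of the description, not the authors' code: it assumes that 0.01–0.02% is the area of each individual patch, uses square patches for simplicity, fills occluded pixels with zeros, and uses hypothetical names (`occlude`, `OCCLUSION_TARGET`).

```python
import numpy as np

# Severity level -> share of the image area to remove (from the text).
OCCLUSION_TARGET = {1: 0.30, 2: 0.50, 3: 0.70}


def occlude(image, severity, patch_frac=(0.0001, 0.0002), rng=None):
    """Black out random square patches until the target area is covered.

    patch_frac is the assumed per-patch area as a fraction of the image
    (0.01-0.02%). Returns the occluded image and the boolean mask.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    mask = np.zeros((h, w), dtype=bool)
    target_pixels = OCCLUSION_TARGET[severity] * h * w
    covered = 0
    while covered < target_pixels:
        area = rng.uniform(*patch_frac) * h * w
        side = min(max(1, int(round(float(np.sqrt(area))))), h, w)
        y = int(rng.integers(0, h - side + 1))
        x = int(rng.integers(0, w - side + 1))
        patch = mask[y:y + side, x:x + side]  # view into mask
        covered += patch.size - int(patch.sum())  # count only new pixels
        patch[...] = True
    out = image.copy()
    out[mask] = 0
    return out, mask
```

Counting only newly covered pixels means that overlapping patches do not inflate the covered share, so the final mask matches the target ratio to within one patch.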

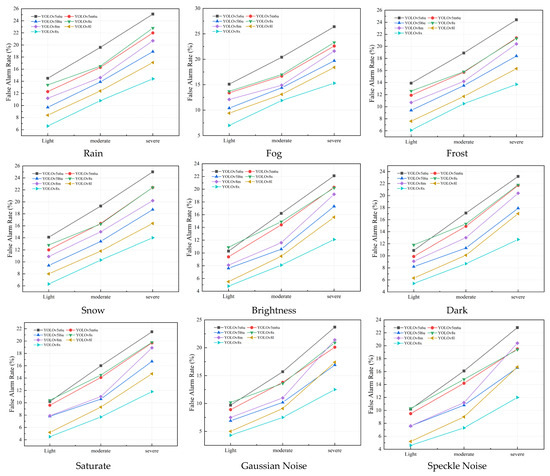

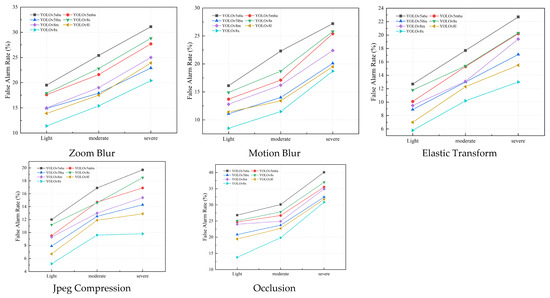

Appendix A.4. Analysis of the False Alarm Rate

To comprehensively evaluate the robustness of vehicle detection models, we added the false alarm rate (FAR) as a supplementary indicator and analyzed it. A false alarm occurs when a non-vehicle object is misclassified as a vehicle, so the FAR reflects the reliability of models in distinguishing between vehicle and non-vehicle targets. It also reflects the robustness of models in complex environments, providing optimization directions for the reliable application of vehicle detection in real-world scenarios. Figure A1 shows the FAR of the YOLOv5 and YOLOv8 models on the test data, with an IoU threshold of 0.5 for the car, truck, and bus classes.
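As an illustration of how such an indicator can be computed, the sketch below counts a detection as a false alarm when it cannot be matched to an unmatched ground-truth box of the same class with IoU of at least 0.5. The exact FAR formula is not given in this appendix, so the ratio used here (false alarms divided by all detections) is an assumption, as are the greedy score-ordered matching and the function names.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def false_alarm_rate(detections, ground_truth, iou_thr=0.5):
    """Share of detections that match no ground-truth vehicle.

    detections: list of (box, score, cls); ground_truth: list of (box, cls).
    Assumed definition: FAR = false alarms / total detections.
    """
    matched = set()
    false_alarms = 0
    # Greedy matching, highest-confidence detections first.
    for box, _score, cls in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, (gt_box, gt_cls) in enumerate(ground_truth):
            if idx in matched or gt_cls != cls:
                continue
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_thr:
            matched.add(best_idx)
        else:
            false_alarms += 1
    return false_alarms / len(detections) if detections else 0.0
```

Each ground-truth box can be matched only once, so duplicate detections of the same vehicle also count as false alarms under this definition.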

Through the analysis, we reached conclusions similar to those of the main evaluation. When YOLO predicts bounding boxes, it may generate some bounding boxes that contain no objects, resulting in a relatively high FAR. Generally, the FAR of vehicle detection is negatively correlated with the baseline performance and the size of the model. Among the various factors, motion blur, zoom blur, and occlusion cause a sharp increase in FAR as the severity increases. This should be an important direction for the optimization of vehicle detection.

Figure A1.

The false alarm rate of YOLOv5 and YOLOv8. The results are evaluated based on the car, truck, and bus classes (three corruption levels).

Appendix A.5. Comprehensive Discussion on the Distinctions Between Synthetic and Real Adverse Weather Data

Structural differences in data: In terms of physical characteristics, synthetic adverse weather conditions are typically simulated algorithmically by adding noise, texture overlays, or optical models. Weather elements such as raindrops, snowflakes, and fog particles are generated with predefined rules governing their shape, density, and motion trajectories, which may result in patterned features. For instance, synthetic rain often consists of uniformly distributed, regularly shaped elements (ellipses or line segments), lacking the size variations and angular deviations caused by factors like wind speed and air humidity in real rain. In contrast, real rain exhibits wind-driven raindrops with irregular shapes and slanted trajectories, along with dynamically changing details such as reflections from puddles and wiper marks on vehicle windshields.