Abstract

Vehicle–infrastructure cooperative perception enhances the perception capabilities of autonomous vehicles by enabling the exchange of complementary information between vehicles and infrastructure. In real-world deployments, however, vehicle and infrastructure sensors differ in resolution and installation angle, which creates a domain gap between their features, complicates feature integration, and can degrade the overall performance of the perception system. To tackle this issue, we propose a novel vehicle–infrastructure cooperative perception network that bridges this gap when fusing vehicle and infrastructure features. Our approach includes a Multi-Scale Dynamic Feature Fusion Module that integrates features from both vehicle and infrastructure across spatial and semantic dimensions. For feature fusion at each scale, we introduce the Multi-Source Dynamic Interaction Module (MSDI) and the Per-Point Self-Attention Module (PPSA). The MSDI dynamically adjusts the interaction between vehicle and infrastructure features based on environmental changes, generating enhanced interaction features. Subsequently, the PPSA aggregates these interaction features with the original vehicle–infrastructure features at the same spatial location. Additionally, we have constructed a real-world vehicle–infrastructure cooperative perception dataset, DZGSet, which includes multi-category annotations. Extensive experiments on DAIR-V2X and our self-collected DZGSet show that the proposed method achieves Average Precision (AP) scores at IoU 0.5 of 0.780 and 0.652, and AP scores at IoU 0.7 of 0.632 and 0.493, respectively, outperforming existing cooperative perception methods. Consequently, the proposed approach significantly improves the performance of cooperative perception, enabling more accurate and reliable autonomous vehicle operation.

1. Introduction

Perception is essential for enabling autonomous vehicles to understand their surrounding traffic environment and has therefore attracted substantial research attention. The release of several large-scale open-source datasets [1,2,3] has facilitated the development of highly effective models [4,5,6,7]. However, single-vehicle perception systems have notable limitations, primarily due to the restricted range of vehicle-mounted sensors, which can create blind spots in detection. Additionally, outfitting vehicles with a dense array of sensors can be prohibitively expensive.

Vehicle–infrastructure cooperative perception addresses these challenges by facilitating information sharing between vehicles and infrastructure, thereby enhancing the perception capabilities of autonomous vehicles [8,9]. It extends the perception range and reduces blind spots, effectively mitigating some limitations inherent in single-vehicle systems [10,11,12,13]. Infrastructure sensors, typically installed at higher positions, provide different viewing angles compared to vehicle-mounted sensors, enabling vehicles to detect obstacles at greater distances and within blind spots that would otherwise remain invisible. Furthermore, vehicle–infrastructure cooperative perception enhances the robustness and reliability of the overall perception system; in the event of a failure in the vehicle’s perception system, the infrastructure can serve as a backup, ensuring continued functionality.

Despite its potential, research on vehicle–infrastructure cooperative perception is still in its early stages and faces numerous challenges. A critical issue is the effective fusion of features from vehicle and infrastructure sensors, which directly impacts the performance of cooperative perception. Therefore, there is an urgent need to explore effective methods for integrating vehicle–infrastructure features.

Our research focuses on the fusion of vehicle–infrastructure features to enhance the perception performance of cooperative systems. Differences in installation angles, resolutions, and environmental conditions between vehicle and infrastructure sensors lead to distinct distributions of acquired feature information, resulting in a domain gap. This discrepancy complicates the direct fusion of features, limiting the maximization of cooperative performance. Previous work has explored various methods for effective feature fusion. For instance, F-Cooper [14] employs the max-out method to highlight important features and filter out trivial ones, and Ref. [15] concatenates vehicle–infrastructure features; meanwhile, Ren et al. [16] implement a weighting scheme for merging. However, these methods typically perform fusion at a single resolution, which limits their ability to fully leverage complementary information across different scales, such as local spatial details and global semantic context. Additionally, existing methods do not sufficiently account for distribution differences between vehicle and infrastructure features. Simple concatenation or weighting can lead to interference between these two feature sources, resulting in inconsistently or suboptimally fused features and ultimately degrading detection accuracy.

Currently, datasets in the field of vehicle–infrastructure cooperative perception include both simulation datasets and real-world datasets. However, simulation datasets do not fully capture the complexity and uncertainty of real-world scenarios, such as calibration errors, localization errors, delays, and communication issues, which are practical challenges that require urgent investigation [17]. The only existing real-world dataset, DAIR-V2X [18], is insufficient for verifying the generalization of algorithms. Moreover, existing vehicle–infrastructure perception datasets typically only contain annotations for vehicles, lacking other important categories like pedestrians and cyclists, which restricts comprehensive studies of traffic scenarios.

To address these challenges, we propose a vehicle–infrastructure cooperative perception network that achieves comprehensive fusion of vehicle–infrastructure features. This network takes point clouds from both vehicle and infrastructure sensors as input and facilitates feature sharing to perform 3D object detection.

Additionally, we present a multi-category real-world dataset called DZGSet, which offers richer and more diverse scenarios for vehicle–infrastructure cooperative perception. This dataset captures multiple categories of traffic participants, addressing the limitations of existing datasets. To evaluate our approach, we conduct experiments on the DZGSet and DAIR-V2X datasets, comparing it with a single-vehicle perception method and state-of-the-art cooperative perception methods.

In summary, the main contributions of our work are as follows:

- We introduce a vehicle–infrastructure cooperative perception network that employs a Multi-Scale Dynamic Feature Fusion Module, incorporating the Multi-Source Dynamic Interaction (MSDI) and Per-Point Self-Attention (PPSA) modules to effectively integrate features from vehicle and infrastructure, thereby significantly enhancing their cooperative perception capabilities.

- We construct DZGSet, a self-collected real-world dataset featuring high-quality annotations for various traffic participants, including “car”, “pedestrian”, and “cyclist”, which addresses the limitations of existing datasets.

- Our extensive experimental evaluations demonstrate that the proposed vehicle–infrastructure cooperative perception network successfully fuses vehicle and infrastructure features, resulting in improved detection accuracy and a more complete understanding of the traffic scene.

The rest of this paper is organized as follows: Section 2 reviews recent work on cooperative perception and autonomous driving datasets. Section 3 describes the proposed vehicle–infrastructure cooperative perception network. Section 4 presents and discusses the experimental results. Section 5 concludes this paper.

2. Related Work

Research on vehicle–infrastructure cooperative perception has primarily concentrated on LiDAR-based 3D object detection. This section reviews the latest advancements in vehicle–infrastructure cooperative perception, relevant datasets in the field of autonomous driving, and developments in LiDAR-based 3D object detection.

2.1. Vehicle–Infrastructure Cooperative Perception

Vehicle–infrastructure cooperative perception aims to enhance the perception capabilities of autonomous vehicles by leveraging shared information from infrastructure. This area has recently gained significant attention. Based on the type of information exchanged between vehicles and infrastructure, vehicle–infrastructure cooperation can be categorized into three primary methods: early cooperation, late cooperation, and intermediate cooperation [19].

Early cooperation involves the direct sharing of raw data, such as transmitting raw sensor data from infrastructure to vehicles. However, due to the substantial volume of raw sensor data, this approach demands significant communication bandwidth, making it impractical for real-world applications. For instance, Cooper [20] introduced the first early cooperative strategy based on LiDAR point clouds, which involved sharing raw LiDAR data for cooperative perception.

Late cooperation, on the other hand, focuses on results-level integration, where detection results are exchanged between vehicles and infrastructure. This method requires minimal communication bandwidth and features low coupling between the perception systems of vehicles and infrastructure, making it the most widely adopted approach currently. However, late fusion heavily relies on the accuracy of both vehicles’ and infrastructure’s perception systems. It is susceptible to noise interference, which can lead to false positives and negatives, ultimately diminishing perception performance. For example, Mo et al. [21] proposed a two-stage Kalman filtering scheme designed for late cooperation with infrastructure; meanwhile, Zhao et al. [22] explored lane marking detection by integrating vehicle and infrastructure sensors, employing Dempster–Shafer theory to address uncertainties in the perception process.

Feature-level cooperation, as an intermediate strategy, offers a more effective solution. This method involves sharing features between vehicles and infrastructure, which can reduce communication costs while maintaining high detection accuracy. Currently, this approach is a leading research direction. However, a notable challenge persists: the domain gap between features extracted by vehicle and infrastructure sensors. Effectively fusing these features is essential for advancing vehicle–infrastructure cooperative perception. In contrast to various solutions proposed in previous studies [14,15,16,17], we advocate for a multi-scale dynamic fusion strategy that facilitates dynamic interactions between vehicle and infrastructure features across different scales. This approach enables more comprehensive and effective feature fusion, enhancing the overall performance of cooperative perception systems.

2.2. Autonomous Driving Datasets

For autonomous driving, early research primarily focused on single-vehicle perception, which utilizes on-board sensors to enable autonomous vehicle functionality. To support this research, several high-quality real-world datasets have been developed for training and evaluating algorithm performance. Notably, KITTI [1] is one of the pioneering datasets specifically designed for autonomous driving, providing extensive 2D and 3D annotated data for tasks such as object detection, tracking, and road segmentation. Larger-scale datasets, such as nuScenes [2] and Waymo Open Dataset [3], offer a significantly greater number of scenes, thereby enhancing the diversity and complexity of scenarios available for research.

However, single-vehicle perception is inherently limited by factors such as blind spots and occlusion, which can hinder performance in complex traffic environments. In contrast, cooperative perception can substantially extend the perception range of vehicles by sharing information from infrastructure, thereby improving the robustness and reliability of the perception system, especially in situations involving occluded or distant objects.

Currently, due to the challenges associated with data collection for cooperative perception, most datasets are generated through simulations. For instance, OPV2V [13] is the first dataset specifically focused on vehicle-to-vehicle cooperative perception, created using the CARLA simulator and OpenCDA tools. Similarly, V2XSet [23] and V2X-Sim [24] are datasets generated with the CARLA simulator, concentrating on vehicle-to-infrastructure cooperative scenarios. However, simulated datasets often fall short of fully capturing the uncertainties and diversity of real-world conditions, which can affect the practical performance of the models trained on them.

Real-world datasets for cooperative perception remain relatively scarce. DAIR-V2X [18] is the first real-world dataset dedicated to vehicle-to-infrastructure cooperation, providing authentic scenarios that are essential for addressing practical challenges. Nonetheless, most existing cooperative perception datasets focus primarily on the vehicle category and therefore fail to represent the complexity of diverse traffic scenes. To address this limitation and offer a broader range of real-world scenarios, we have developed DZGSet, a multi-category real-world dataset that encompasses a wider variety of traffic participants.

2.3. LiDAR-Based 3D Object Detection

LiDAR-based 3D object detection can be primarily classified into two categories based on how the point cloud data is represented: point-based methods and voxel-based methods. Point-based methods directly process raw point clouds, preserving the original structural information. However, they encounter challenges due to the unstructured and sparse nature of point cloud data. For instance, PointNet [25] processes the input point cloud directly and employs MaxPooling to aggregate both local and global features, effectively capturing the intrinsic structure of the data. Building on this, PointNet++ [26] enhances the original PointNet by addressing the issue of uneven point cloud density through a multi-scale approach. Further advancing this concept, PointRCNN [27] introduces a two-stage 3D object detection framework that utilizes point cloud data to generate high-quality region proposals, achieving precise 3D object detection.

In contrast, voxel-based methods partition the point cloud into uniformly spaced 3D voxels, extracting features from the points within each voxel to create a structured representation akin to image pixels [4,28,29]. This structured approach simplifies subsequent feature extraction and detection processes, allowing deep learning models to process point cloud data more efficiently. Unfortunately, the voxelization process can introduce quantization errors, potentially resulting in the loss of detailed information.

VoxelNet [28] is an end-to-end 3D object detection framework that begins by dividing point cloud data into fixed-size voxels. It employs a Voxel Feature Encoder to extract point features within each voxel, aggregating them into voxel-level feature representations before performing object detection using a Region Proposal Network. PointPillars [4], on the other hand, projects point cloud data into a two-dimensional pillar grid. By leveraging its efficient pillar projection mechanism and lightweight network architecture, PointPillars achieves real-time object detection while maintaining high accuracy. In this work, we select PointPillars as the backbone network for constructing the vehicle–infrastructure cooperative perception network.

3. Method

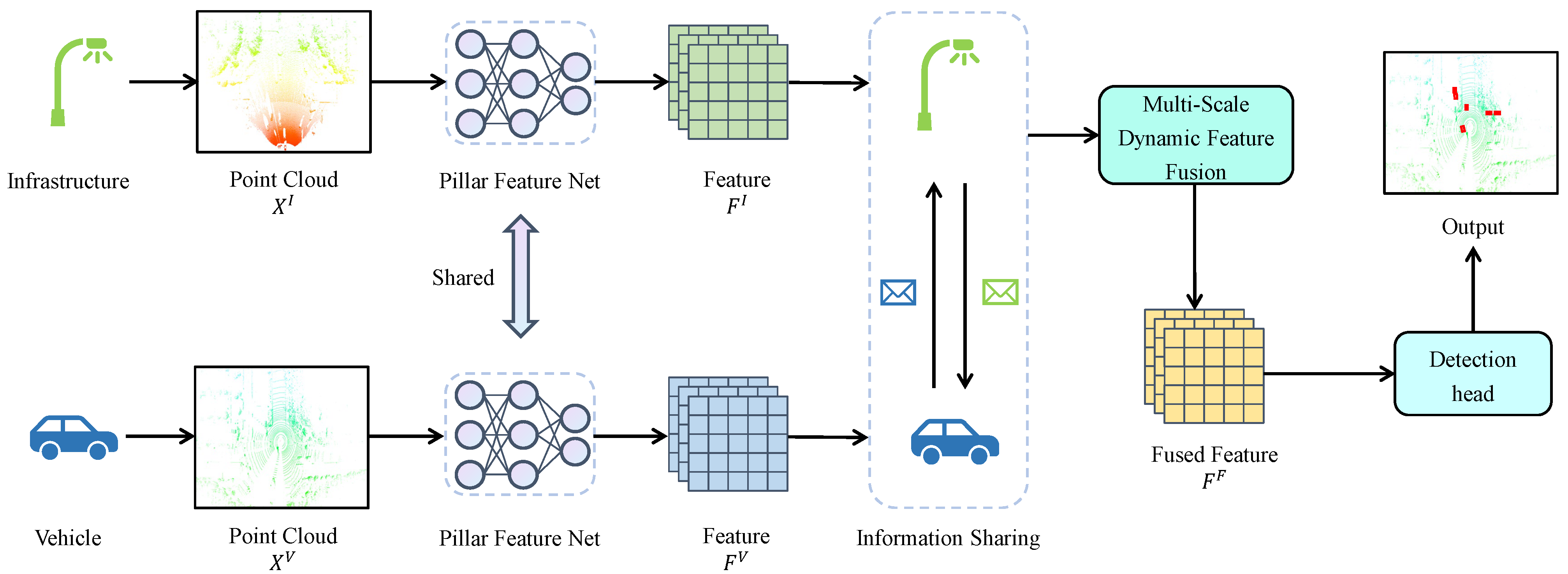

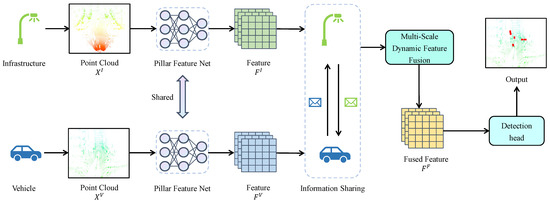

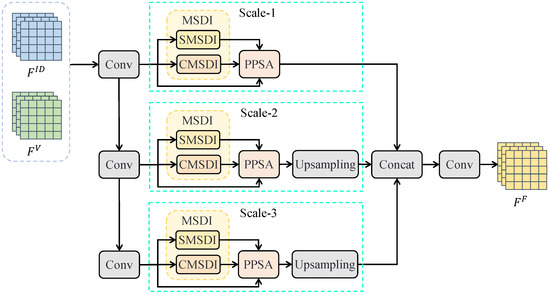

In vehicle–infrastructure cooperative perception, it is essential to address the domain gap between features obtained from vehicle and infrastructure sensors. To enhance the performance of vehicle–infrastructure cooperative perception, we propose a cooperative perception network. The overall architecture of the proposed network, shown in Figure 1, comprises four main components: (1) point cloud feature extraction; (2) vehicle–infrastructure information sharing; (3) Multi-Scale Dynamic Feature Fusion; (4) detection head.

Figure 1. Overview of the proposed vehicle–infrastructure cooperative perception network.

3.1. Point Cloud Feature Extraction

Point cloud feature extraction is a critical step in the vehicle–infrastructure cooperative perception network. We utilize the Pillar Feature Network from PointPillars to extract features from both vehicle and infrastructure point cloud data. We partition the infrastructure point cloud and the vehicle point cloud each into multiple pillars and employ a convolutional neural network to extract features from the points within each pillar. These features are then integrated and transformed into feature maps from a bird’s-eye view (BEV) perspective, resulting in an infrastructure feature map and a vehicle feature map, each of size H × W × C, where H, W, and C represent the height, width, and number of channels of the feature maps, respectively. During feature extraction, the Pillar Feature Network shares the same parameters for vehicle and infrastructure. This ensures that the features from both sources possess similar representational capabilities, facilitating effective subsequent feature fusion.
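The pillar partitioning step can be sketched as follows. This is a minimal pure-Python illustration rather than the PointPillars implementation: the function names `assign_to_pillars` and `occupancy_map`, the toy range, and the toy points are assumptions made for illustration, and the learned per-pillar encoder is replaced by a simple point count.

```python
from collections import defaultdict
import math

def assign_to_pillars(points, x_min, y_min, x_max, y_max, pillar_size=0.4):
    """Group (x, y, z) points into vertical pillars on a BEV grid.

    Returns a dict mapping (row, col) grid indices to the points that fall
    inside that pillar. Points outside the range are dropped.
    """
    pillars = defaultdict(list)
    for x, y, z in points:
        if not (x_min <= x < x_max and y_min <= y < y_max):
            continue  # outside the detection range
        col = int(math.floor((x - x_min) / pillar_size))
        row = int(math.floor((y - y_min) / pillar_size))
        pillars[(row, col)].append((x, y, z))
    return dict(pillars)

def occupancy_map(pillars, height, width):
    """Build an H x W map holding the number of points per pillar.

    A real Pillar Feature Network would scatter a learned C-dimensional
    feature into each cell instead of a count.
    """
    grid = [[0] * width for _ in range(height)]
    for (row, col), pts in pillars.items():
        grid[row][col] = len(pts)
    return grid

# Toy example (made-up values): a 2 m x 2 m area, i.e. a 5 x 5 grid at 0.4 m.
points = [(0.1, 0.1, 0.0), (0.3, 0.2, 0.5), (1.9, 1.9, 1.0), (5.0, 0.0, 0.0)]
pillars = assign_to_pillars(points, 0.0, 0.0, 2.0, 2.0)
bev = occupancy_map(pillars, height=5, width=5)
```

Running the same function on the vehicle and infrastructure point clouds mirrors the parameter sharing described above: both sources are mapped onto grids with identical layout.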

3.2. Vehicle–Infrastructure Information Sharing

To minimize communication overhead, we implement an encoder–decoder-based information sharing mechanism [15] that compresses and decompresses feature information, ensuring high-quality transmission even under limited bandwidth conditions. On the infrastructure side, the extracted point cloud features are compressed by a convolutional neural network layer (Conv), which reduces their dimensionality and data volume. The compressed infrastructure features are characterized by their own height, width, and number of channels.

Upon reaching the vehicle, the compressed features are decompressed by a deconvolutional neural network layer (Deconv), which reconstructs the original feature representation and restores its original scale and detail.
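In symbols, the compression and decompression steps can be summarized as below. The notation is assumed for illustration and is not taken from the source: $F_I$ denotes the infrastructure feature map, $F_I'$ its compressed version, and $\hat{F}_I$ the decompressed map recovered at the vehicle.

```latex
\begin{aligned}
F_I' &= \mathrm{Conv}(F_I), & F_I' &\in \mathbb{R}^{H' \times W' \times C'}, \\
\hat{F}_I &= \mathrm{Deconv}(F_I'), & \hat{F}_I &\in \mathbb{R}^{H \times W \times C}.
\end{aligned}
```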

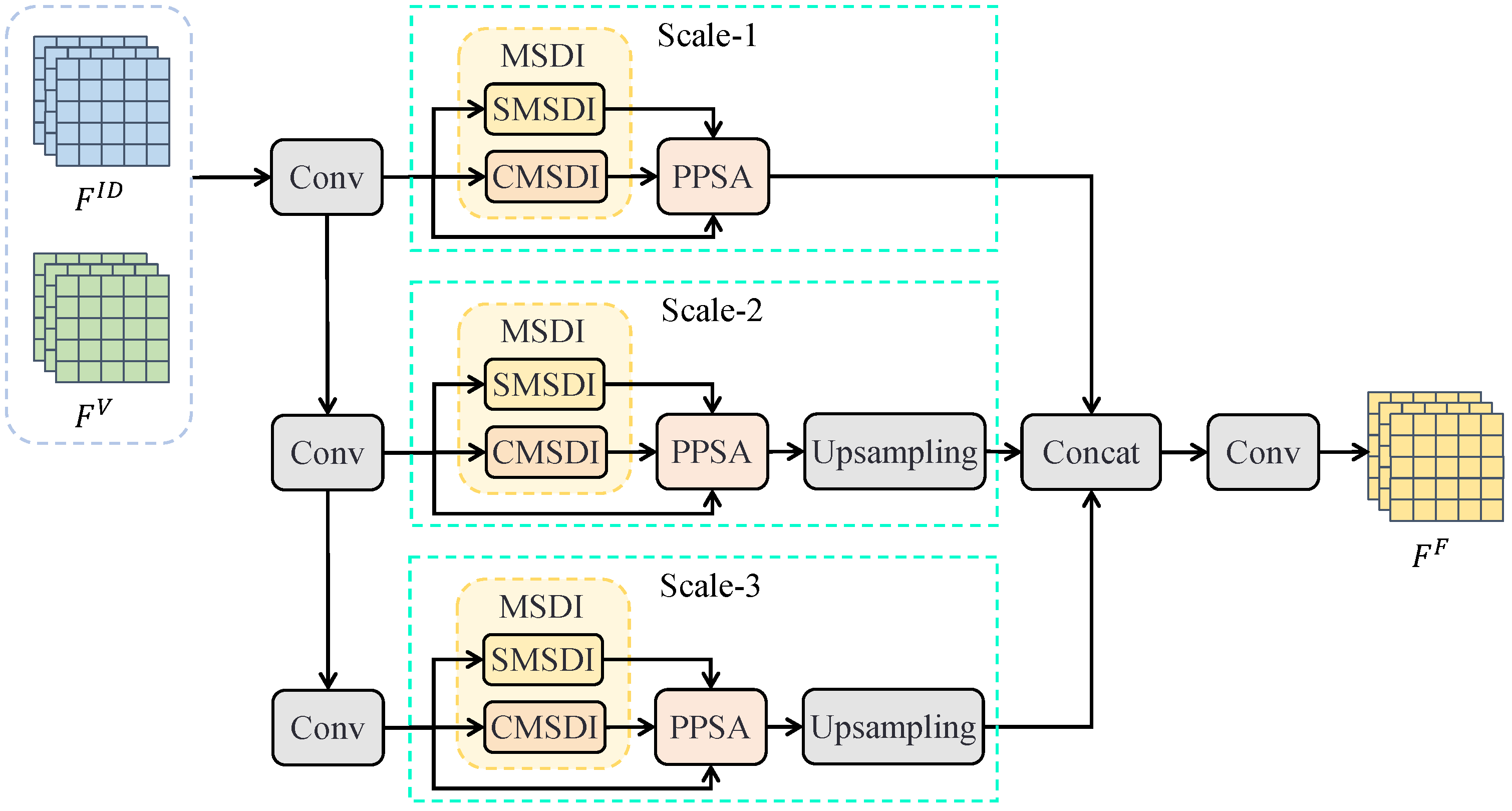

3.3. Multi-Scale Dynamic Feature Fusion

In the vehicle–infrastructure cooperative perception network, the Multi-Scale Dynamic Feature Fusion Module is tasked with fusing vehicle features and decompressed infrastructure features to produce a unified output, as depicted in Figure 2. This fusion process incorporates two essential components at each scale: the MSDI and the PPSA, ensuring comprehensive utilization of features from both vehicle and infrastructure. The Multi-Scale Dynamic Feature Fusion Module consists of four primary processes: (1) Multi-Scale Feature Construction; (2) Multi-Source Dynamic Interaction Module; (3) Per-Point Self-Attention Module; and (4) Multi-Scale Feature Aggregation.

Figure 2. The Multi-Scale Dynamic Feature Fusion Module fuses vehicle and decompressed infrastructure features across multiple scales, implementing dynamic feature interaction at each scale through MSDI and PPSA.

3.3.1. Multi-Scale Feature Construction

The first step in the Multi-Scale Dynamic Feature Fusion Module is Multi-Scale Feature Construction, which downsamples the vehicle features and the decompressed infrastructure features to several scales using convolution operations. This process ensures that information across different scales is effectively utilized.

Large-scale features capture broader contextual information within a scene and exhibit lower sensitivity to local changes. They emphasize the overall layout rather than precise positional details, providing stable and reliable semantic information less affected by minor environmental variations. Conversely, small-scale features focus on fine-grained attributes, such as specific vehicle parts or pedestrian movements, delivering detailed information that enhances perception accuracy. By integrating both large-scale and small-scale features, the system maintains robustness while remaining attentive to critical details.

To generate feature maps at multiple scales, we employ a step-wise downsampling strategy. Vehicle and decompressed infrastructure features are processed through a series of convolutional and pooling layers to obtain features at three different scales. This yields one vehicle feature map and one infrastructure feature map per scale level, each with its own height, width, and number of channels.
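A step-wise pyramid of this kind can be sketched in a few lines. The sketch rests on several assumptions: it uses 2 × 2 average pooling with stride 2 on a single-channel map in place of the learned convolutional and pooling layers, it treats the input resolution as the first of the three scales, and the helper names `avg_pool_2x2` and `build_pyramid` are hypothetical.

```python
def avg_pool_2x2(fmap):
    """Downsample a 2D map (list of rows) by 2 using 2x2 average pooling."""
    h, w = len(fmap), len(fmap[0])
    return [
        [
            (fmap[r][c] + fmap[r][c + 1] + fmap[r + 1][c] + fmap[r + 1][c + 1]) / 4.0
            for c in range(0, w - 1, 2)
        ]
        for r in range(0, h - 1, 2)
    ]

def build_pyramid(fmap, num_scales=3):
    """Return a list of maps, one per scale, each half the size of the previous."""
    pyramid = [fmap]
    for _ in range(num_scales - 1):
        pyramid.append(avg_pool_2x2(pyramid[-1]))
    return pyramid

# Toy 8 x 8 single-channel "feature map" with values 0..63.
vehicle_map = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
pyramid = build_pyramid(vehicle_map)
shapes = [(len(m), len(m[0])) for m in pyramid]
```

Applying the same function to the vehicle and infrastructure maps keeps the two pyramids aligned scale by scale, which is what the per-scale fusion modules require.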

3.3.2. Multi-Source Dynamic Interaction Module

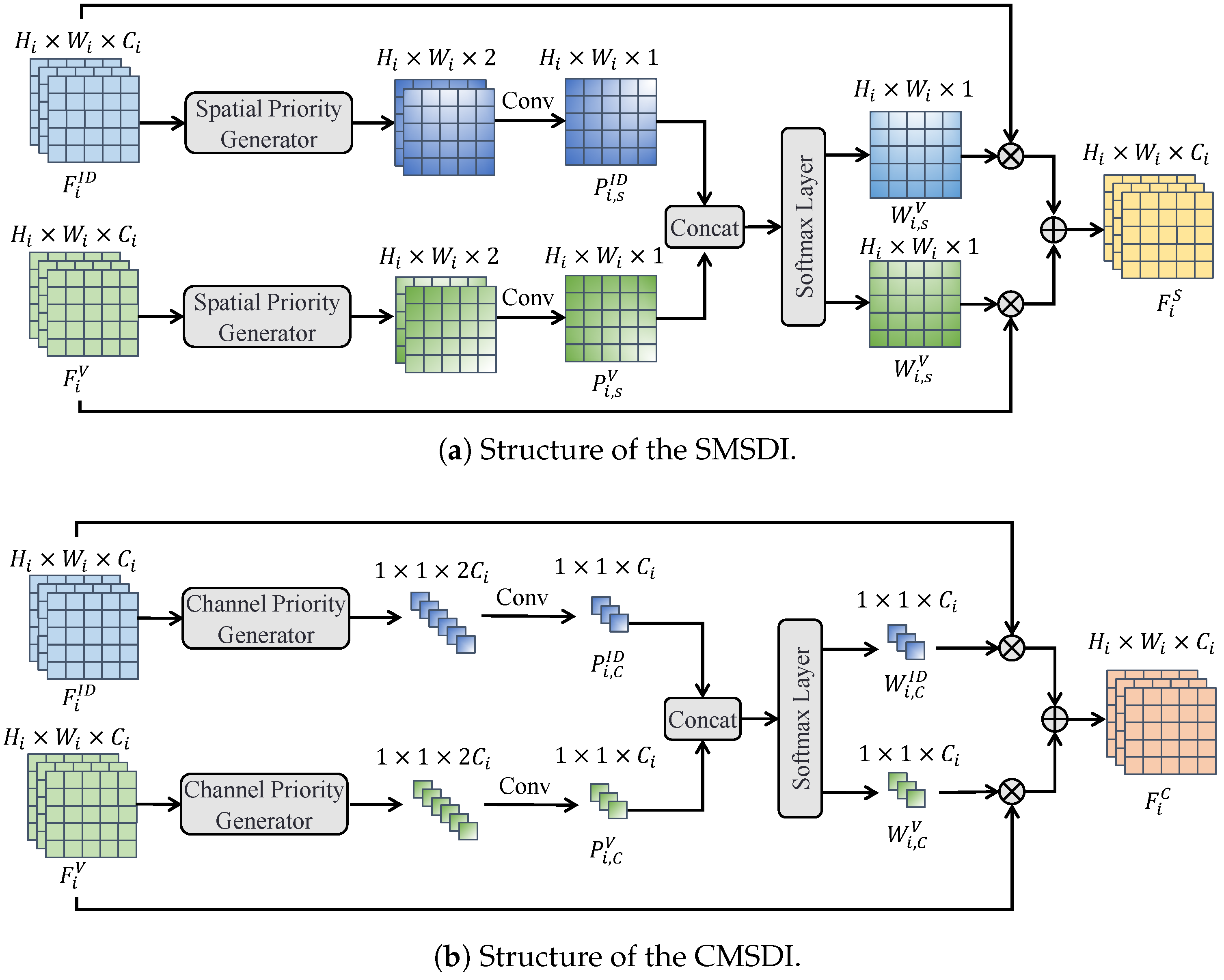

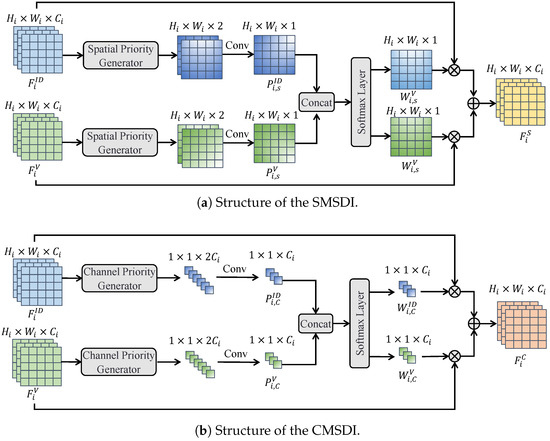

The Multi-Source Dynamic Interaction Module (MSDI) is a crucial component of the Multi-Scale Dynamic Feature Fusion Module, facilitating deep interactions between vehicle and infrastructure features at each scale. As illustrated in Figure 2, this module consists of two parallel sub-modules: the Spatial Multi-Source Dynamic Interaction Module (SMSDI) and the Channel Multi-Source Dynamic Interaction Module (CMSDI). The SMSDI captures correlations between features in the spatial dimension, while the CMSDI focuses on channel-wise correlations, as depicted in Figure 3.

Figure 3. Structures of the SMSDI and CMSDI. The SMSDI and CMSDI adaptively generate a spatial priority map and a channel priority map, respectively, and use these priority maps to achieve dynamic interaction between vehicle and infrastructure features.

The input to the SMSDI consists of vehicle and infrastructure point cloud features. The SMSDI generates a spatial priority map through a spatial priority generator, which indicates the importance of different spatial locations in the feature map. This allows the system to concentrate on critical spatial areas, thereby reducing interference from irrelevant background information.

As shown in Figure 3, the SMSDI first processes the vehicle and infrastructure features through the spatial priority generator, which follows a detection decoder from PointPillars. It then applies convolution operations to obtain one spatial priority map for the vehicle features and one for the infrastructure features, which enhances the robustness of these maps.

Subsequently, we concatenate the spatial priority maps for vehicle and infrastructure features to ensure alignment at corresponding spatial locations. A Softmax function is then applied at each location to dynamically adjust the weights, thereby reinforcing the association between vehicle and infrastructure features. The result is a pair of interacted spatial priority maps, one for the vehicle features and one for the infrastructure features, in which higher values indicate greater importance at the corresponding locations.

The spatial priority maps obtained after interaction are applied to the vehicle and infrastructure features, so that each point is re-weighted according to the priority of its spatial location, which enhances feature expressiveness. Finally, the weighted vehicle and infrastructure features are combined to produce the spatial dynamic interaction feature, which integrates perception information from both vehicle and infrastructure.
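The spatial interaction step can be illustrated with a small pure-Python sketch. It assumes that the priority maps are already available as H × W score grids (the learned spatial priority generator is not modeled), that the Softmax is taken over the two sources at each location, and that the weighted features are combined by summation. The combination operator and the function name `spatial_interaction` are assumptions, not details confirmed by the text.

```python
import math

def spatial_interaction(feat_v, feat_i, prio_v, prio_i):
    """Fuse two H x W x C feature maps using per-location softmax weights.

    feat_v, feat_i: nested lists [H][W][C] for vehicle / infrastructure.
    prio_v, prio_i: nested lists [H][W] of raw spatial priority scores.
    Returns (fused, w_v, w_i), where w_v + w_i == 1 at every location.
    """
    h, w = len(prio_v), len(prio_v[0])
    fused, w_v, w_i = [], [], []
    for r in range(h):
        fused_row, wv_row, wi_row = [], [], []
        for c in range(w):
            # Two-way softmax over the sources (shifted for numerical stability).
            m = max(prio_v[r][c], prio_i[r][c])
            ev = math.exp(prio_v[r][c] - m)
            ei = math.exp(prio_i[r][c] - m)
            av, ai = ev / (ev + ei), ei / (ev + ei)
            wv_row.append(av)
            wi_row.append(ai)
            # Re-weight each source and combine (summation is an assumption).
            fused_row.append(
                [av * fv + ai * fi for fv, fi in zip(feat_v[r][c], feat_i[r][c])]
            )
        fused.append(fused_row)
        w_v.append(wv_row)
        w_i.append(wi_row)
    return fused, w_v, w_i

# Toy 1 x 2 map with 2 channels: equal scores at the first location,
# infrastructure strongly preferred at the second.
feat_v = [[[1.0, 1.0], [1.0, 1.0]]]
feat_i = [[[3.0, 3.0], [3.0, 3.0]]]
fused, w_v, w_i = spatial_interaction(feat_v, feat_i, [[0.0, 0.0]], [[0.0, 10.0]])
```

With equal scores the two sources are averaged, whereas a large score gap lets one source dominate at that location, which is the adaptive behavior the priority maps are meant to provide.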

CMSDI operates similarly to SMSDI but focuses on dynamic interactions in the channel dimension. Instead of spatial priority maps, it generates one channel priority map for the vehicle features and one for the infrastructure features through the channel priority generator, which consists of parallel max pooling and average pooling operations. These maps are then normalized via Softmax, in the same way as the spatial priority maps, to produce channel weights that re-scale the vehicle and infrastructure features along the channel dimension.

The re-scaled features are then fused into the channel dynamic interaction feature, which represents a deep integration of vehicle and infrastructure features at the channel level.
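The channel-wise counterpart can be sketched in the same style. This sketch assumes global max and average pooling over all spatial locations, merges the two pooled descriptors by addition, and fuses the re-scaled sources by summation. None of these choices is confirmed by the text, and the function names are hypothetical.

```python
import math

def channel_priority(feat):
    """Per-channel score from parallel global max and average pooling.

    feat: nested list [H][W][C]. The two pooled descriptors are summed here;
    how they are merged is an assumption of this sketch.
    """
    cells = [cell for row in feat for cell in row]
    num_channels = len(cells[0])
    scores = []
    for k in range(num_channels):
        values = [cell[k] for cell in cells]
        scores.append(max(values) + sum(values) / len(values))
    return scores

def channel_interaction(feat_v, feat_i):
    """Re-scale both sources per channel with softmax weights, then sum them."""
    weights_v = []
    for sv, si in zip(channel_priority(feat_v), channel_priority(feat_i)):
        m = max(sv, si)
        ev, ei = math.exp(sv - m), math.exp(si - m)
        weights_v.append(ev / (ev + ei))
    fused = [
        [
            [wv * fv + (1.0 - wv) * fi for wv, fv, fi in zip(weights_v, cv, ci)]
            for cv, ci in zip(row_v, row_i)
        ]
        for row_v, row_i in zip(feat_v, feat_i)
    ]
    return fused, weights_v

# Toy 1 x 2 map with 2 channels: channel 0 is identical in both sources,
# channel 1 is stronger in the infrastructure features.
feat_v = [[[1.0, 0.0], [1.0, 0.0]]]
feat_i = [[[1.0, 4.0], [1.0, 4.0]]]
fused, weights_v = channel_interaction(feat_v, feat_i)
```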

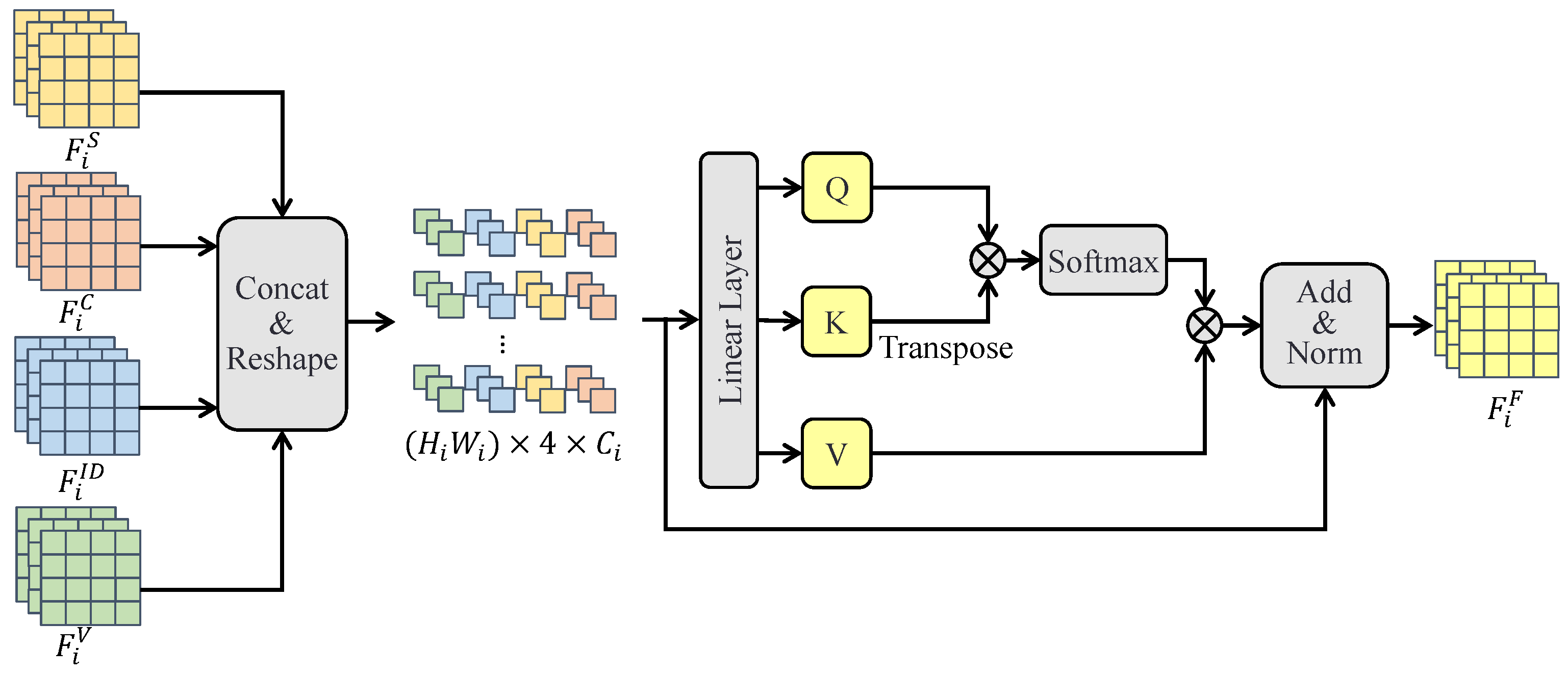

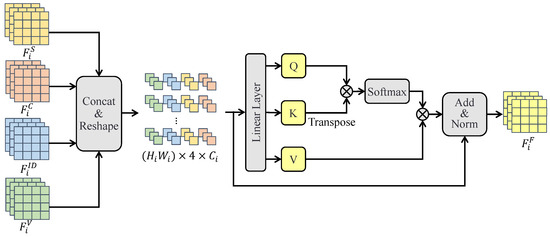

3.3.3. Per-Point Self-Attention Module

The PPSA module, illustrated in Figure 4, builds upon dynamic feature interactions by performing self-attention at identical spatial locations. This approach effectively captures the relationships between features from various sources at the same spatial coordinates, allowing for comprehensive integration of these features. The input to the PPSA consists of spatial and channel dynamic interaction features, along with the original vehicle and infrastructure features. This holistic input strategy reduces the risk of information loss during MSDI, thereby enhancing the network’s robustness and ensuring strong performance in complex environments.

Figure 4. The PPSA module integrates multi-source features through a self-attention mechanism, enhancing interaction and fusion among features.

Initially, the four inputs (the spatial dynamic interaction feature, the channel dynamic interaction feature, the vehicle feature, and the infrastructure feature) are concatenated and reshaped into a single tensor, so that features from different sources at the same spatial location can attend to one another. This reshaping aligns the data with the input requirements of the self-attention mechanism.

Next, self-attention is applied along the second dimension of the reshaped tensor. Learnable projection matrices transform the reshaped features into queries (Q), keys (K), and values (V) through linear transformations. After the scaled dot-product attention scores are computed using the Softmax function, the final fused features are obtained by aggregating the value vectors weighted by the attention scores, followed by layer normalization (LN) and a residual connection.
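For a single spatial location, the attention step can be sketched as below. This is a simplified single-head illustration under stated assumptions: the projection matrices are passed in explicitly (identity matrices in the toy call), layer normalization has no learnable parameters, and the residual is added before normalization, an ordering the text does not specify. The function name `per_point_attention` is hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    """Multiply a (d_out x d_in) matrix by a length-d_in vector."""
    return [sum(a * b for a, b in zip(row, vec)) for row in matrix]

def layer_norm(vec, eps=1e-5):
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]

def per_point_attention(tokens, w_q, w_k, w_v):
    """Self-attention over the source tokens of ONE spatial location.

    tokens: list of N feature vectors (N = 4 sources in the text).
    Returns (outputs, attention), where each row of attention sums to 1.
    """
    q = [matvec(w_q, t) for t in tokens]
    k = [matvec(w_k, t) for t in tokens]
    v = [matvec(w_v, t) for t in tokens]
    scale = math.sqrt(len(q[0]))
    attention, outputs = [], []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]
        weights = softmax(scores)
        attention.append(weights)
        mixed = [sum(wj * vj[d] for wj, vj in zip(weights, v)) for d in range(len(v[0]))]
        # Residual connection followed by layer normalization (order assumed).
        outputs.append(layer_norm([x + y for x, y in zip(tokens[i], mixed)]))
    return outputs, attention

identity = [[1.0, 0.0], [0.0, 1.0]]
# Four toy 2-channel tokens: spatial interaction, channel interaction,
# vehicle, and infrastructure features at the same location.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
outputs, attention = per_point_attention(tokens, identity, identity, identity)
```

In a full model the same projections would be applied independently at every BEV location, so the cost grows with the number of locations rather than with their square.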

3.3.4. Multi-Scale Feature Aggregation

The Multi-Scale Feature Aggregation module unifies the multi-scale features by first upsampling them to a consistent size using deconvolution. These features are then concatenated along the channel dimension and passed through a convolutional layer for dimensionality reduction, resulting in an integrated vehicle–infrastructure feature. This final fused feature contains rich semantic information and spatial details, merging multi-scale features from both vehicle and infrastructure domains and thereby enhancing the overall understanding and representation of the environment.

3.4. Detection Head

Within the vehicle–infrastructure cooperative perception network, the detection head is tasked with executing 3D object detection based on the fused features, which assimilate information from both vehicle and infrastructure. The detection head directly adopts the standard implementation of PointPillars, utilizing L1 loss for bounding box regression, focal loss for classification, and a direction loss for orientation estimation. The final output encompasses the object category, position, size, and yaw angle for each bounding box, thereby completing the 3D object detection process.
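For reference, the classification term can be sketched as the binary focal loss. The formula is the standard one; the default values `alpha=0.25` and `gamma=2.0` are commonly used settings and are assumed here, since the text does not state the hyperparameters.

```python
import math

def focal_loss(prob, target, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary focal loss for one prediction.

    prob: predicted probability of the positive class, in (0, 1).
    target: 1 for a positive anchor, 0 for a negative one.
    alpha, gamma: assumed defaults, not values reported in the text.
    """
    p_t = prob if target == 1 else 1.0 - prob
    alpha_t = alpha if target == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))

easy = focal_loss(0.9, 1)  # well-classified positive, strongly down-weighted
hard = focal_loss(0.1, 1)  # misclassified positive, dominates the loss
```

The factor `(1 - p_t) ** gamma` is what suppresses the many easy background anchors that would otherwise dominate training in sparse BEV grids.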

4. Experiment

This section presents experiments conducted to evaluate the performance of the proposed method for cooperative 3D object detection, utilizing both the DZGSet and DAIR-V2X datasets. We assess detection performance and benchmark our method against state-of-the-art approaches. Furthermore, qualitative visual analyses are performed to gain deeper insights into the capabilities of the proposed method. An ablation study is also carried out to investigate the contributions of individual modules within the network.

4.1. Datasets

4.1.1. DZGSet Dataset

To address the limitations of existing vehicle–infrastructure cooperative perception datasets, particularly regarding the number of object categories, we developed the DZGSet dataset. This dataset is collected from real-world scenarios and encompasses multi-modal, multi-category data for vehicle–infrastructure cooperative perception. The dataset construction process includes the following:

Hardware configuration: The DZGSet dataset is collected with an 80-line LiDAR and a 1920 × 1080 pixel camera installed on the infrastructure, alongside a 16-line LiDAR and a camera with the same specifications mounted on the vehicle. Detailed sensor specifications are listed in Table 1. Both vehicle and infrastructure are equipped with time servers to ensure precise synchronization across all devices. Additionally, vehicles are outfitted with a Real-Time Kinematic (RTK) system for the real-time acquisition of high-precision pose information. This configuration captures detailed multi-modal data from both vehicle and infrastructure perspectives, enhancing the richness and accuracy of the dataset.

Table 1. Sensor specifications in DZGSet. Veh. stands for vehicle, and Inf. stands for infrastructure.

Software configuration: On the software side, high-precision time synchronization is achieved through time servers, allowing accurate alignment of data from various sources. Furthermore, precise calibration techniques are employed to ensure the spatial coordinate alignment between the different sensors, enabling data from diverse perspectives to be processed within a unified spatial coordinate system.

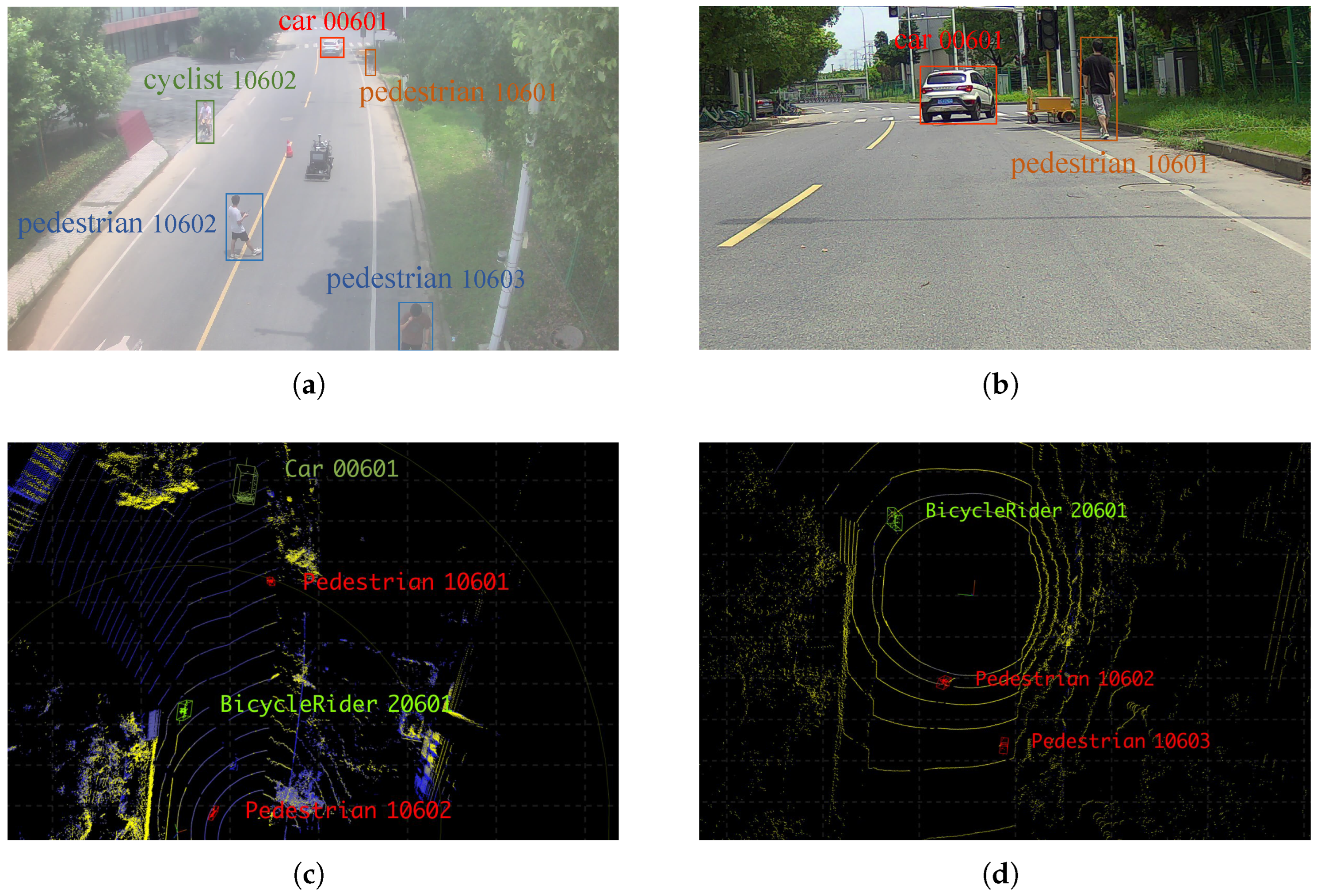

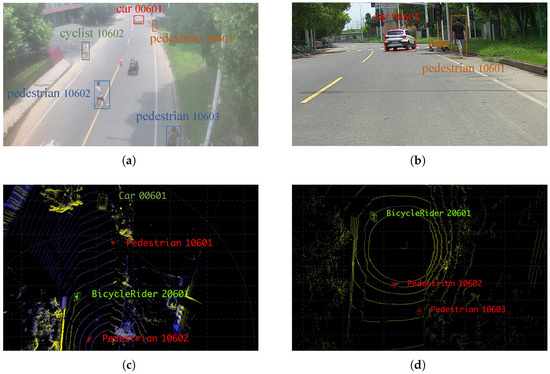

Data collection and annotation: Data are collected in real-world road environments through multiple driving experiments. During each experiment, data from both vehicle and infrastructure sensors are recorded. Professional annotators then manually annotate objects based on image and point cloud data. The DZGSet dataset provides 3D annotations for LiDAR point clouds and 2D annotations for camera images, focusing on three object categories: “car”, “pedestrian”, and “cyclist”. When annotating point clouds, annotators assign a category and ID to each object, along with a 7-degree-of-freedom (DoF) 3D bounding box, whose parameters are the center position (x, y, z), the dimensions (length, width, height), and the orientation (yaw). During image annotation, annotators also label each object’s category and ID, along with a 4-DoF 2D bounding box, whose parameters are the center position (x, y) and the dimensions (length, width). Examples from the DZGSet dataset are depicted in Figure 5.
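The two annotation types described above can be represented with simple record types. The class and field names below are hypothetical, the yaw unit (radians) is assumed, and the example values are made up. The actual DZGSet file format is not described in the text.

```python
from dataclasses import dataclass

@dataclass
class Box3D:
    """7-DoF 3D bounding box for a LiDAR annotation."""
    category: str   # "car", "pedestrian", or "cyclist"
    object_id: int
    x: float        # center position
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float      # heading angle; radians assumed

    def volume(self) -> float:
        return self.length * self.width * self.height

@dataclass
class Box2D:
    """4-DoF 2D bounding box for a camera annotation."""
    category: str
    object_id: int
    x: float        # center position in pixels
    y: float
    length: float
    width: float

    def corners(self):
        """Return (x_min, y_min, x_max, y_max), taking length along x."""
        return (self.x - self.length / 2, self.y - self.width / 2,
                self.x + self.length / 2, self.y + self.width / 2)

# Made-up example: the same object annotated in the point cloud and the image.
car = Box3D("car", 7, 12.0, -3.5, 0.8, 4.5, 1.8, 1.6, 0.1)
car_2d = Box2D("car", 7, 960.0, 540.0, 200.0, 100.0)
```

Sharing `object_id` between the two records is one way to link an object across modalities, in line with the per-object IDs the annotators assign.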

Figure 5. Examples from the DZGSet dataset. (a) Infrastructure image with 2D annotation. (b) Vehicle image with 2D annotation. (c) Infrastructure point cloud with 3D annotation. (d) Vehicle point cloud with 3D annotation.

Data analysis: The DZGSet dataset consists of 4240 frames of synchronized point cloud and image data, organized to facilitate reproducibility. It is divided into training, validation, and test sets in a 60%, 20%, and 20% ratio, respectively. The dataset features a total of 24,676 annotated objects, averaging about six objects per frame. Data collection occurred during daytime under two weather conditions, sunny and cloudy, with 64% of the frames captured in sunny conditions and 36% in cloudy conditions. In terms of object distribution, the frames contain 12,920 pedestrians, 7708 cars, and 4048 cyclists. Pedestrians constitute the majority at 52.4%, while cars and cyclists represent 31.2% and 16.4%, respectively. In contrast to other cooperative perception datasets that focus solely on vehicle categories, DZGSet adds pedestrians and cyclists, which are often considered smaller objects in the context of autonomous driving. This added diversity increases the dataset’s value for developing robust perception systems in complex urban environments.
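The reported statistics can be cross-checked with simple arithmetic. The frame and object counts below are taken from the paragraph above. The per-split frame counts are derived from the stated ratios and are not given in the text.

```python
TOTAL_FRAMES = 4240
CLASS_COUNTS = {"pedestrian": 12920, "car": 7708, "cyclist": 4048}

total_objects = sum(CLASS_COUNTS.values())
objects_per_frame = total_objects / TOTAL_FRAMES
class_share = {name: 100.0 * n / total_objects for name, n in CLASS_COUNTS.items()}

# 60% / 20% / 20% split; the test set takes the remainder.
train_frames = TOTAL_FRAMES * 60 // 100
val_frames = TOTAL_FRAMES * 20 // 100
test_frames = TOTAL_FRAMES - train_frames - val_frames

for name, share in class_share.items():
    print(f"{name}: {share:.1f}%")
```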

4.1.2. DAIR-V2X Dataset

The DAIR-V2X dataset is the first large-scale, real-world vehicle–infrastructure cooperative perception dataset. It includes both LiDAR point clouds and camera images collected from vehicle and infrastructure sensors. The dataset contains 9000 frames, each annotated with 3D information for point clouds and 2D information for images. Notably, its annotations are limited to vehicle categories.

4.2. Evaluation Metrics

The performance of the proposed network is evaluated using the Average Precision (AP) metric, which summarizes the precision–recall trade-off as the area under the precision–recall (P-R) curve [30]. The Intersection over Union (IoU) metric measures the overlap between a detected bounding box and a ground-truth bounding box, defined as the area of their overlap divided by the area of their union. A detection is counted as a true positive when its IoU with a ground-truth box meets the chosen threshold. AP is reported at IoU thresholds of 0.5 and 0.7, both of which are commonly used in 3D object detection tasks.
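To make the two metrics concrete, the sketch below computes IoU for a pair of boxes and AP from a ranked list of detections. It rests on simplifying assumptions that are not taken from the paper: boxes are axis-aligned rectangles given as (x_min, y_min, x_max, y_max), whereas the evaluated boxes are rotated 3D boxes, and AP uses all-point interpolation of the P-R curve, since the exact interpolation scheme is not stated. The function names are illustrative.

```python
def iou_axis_aligned(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    overlap_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    overlap_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = overlap_w * overlap_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the P-R curve (all-point interpolation).

    scores[i] is the confidence of detection i, and is_true_positive[i]
    says whether it matched a ground-truth box at the IoU threshold.
    """
    if num_ground_truth == 0:
        return 0.0
    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    true_pos = false_pos = 0
    recalls, precisions = [], []
    for i in order:
        if is_true_positive[i]:
            true_pos += 1
        else:
            false_pos += 1
        recalls.append(true_pos / num_ground_truth)
        precisions.append(true_pos / (true_pos + false_pos))
    # Replace each precision with the best precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas between consecutive recall values.
    ap, previous_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


print(iou_axis_aligned((0, 0, 2, 2), (1, 1, 3, 3)))
print(average_precision([0.9, 0.8, 0.7], [True, False, True], 2))
```

Under this scheme, AP@0.5 and AP@0.7 differ only in how `is_true_positive` is produced: the same detections are matched against the ground truth with a stricter overlap requirement.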

4.3. Implementation Details

In the experiments, the detection range is set to for the DZGSet dataset, and for the DAIR-V2X dataset. The communication range between vehicle and infrastructure is established at 50 m for the DZGSet dataset and 100 m for the DAIR-V2X dataset. Beyond this broadcasting radius, vehicles are unable to receive information from the infrastructure.
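The communication range acts as a gate on cooperation: infrastructure data are used only when the vehicle lies within the broadcasting radius. A minimal sketch of that check follows, assuming planar (x, y) positions in meters in a shared coordinate frame and an inclusive boundary; the function name `can_receive` is hypothetical.

```python
import math

# Communication ranges in meters, as stated for each dataset.
COMM_RANGE_M = {"DZGSet": 50.0, "DAIR-V2X": 100.0}


def can_receive(vehicle_xy, infra_xy, dataset):
    """True if the vehicle is within the infrastructure broadcasting radius."""
    distance = math.hypot(vehicle_xy[0] - infra_xy[0],
                          vehicle_xy[1] - infra_xy[1])
    # Treating the boundary as in range is an assumption.
    return distance <= COMM_RANGE_M[dataset]


print(can_receive((0.0, 0.0), (60.0, 0.0), "DZGSet"))
print(can_receive((0.0, 0.0), (60.0, 0.0), "DAIR-V2X"))
```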

For the PointPillars feature extraction network, the voxel size is set to 0.4 m for both height and width. All models are trained and tested on a platform equipped with a GeForce RTX 2080 Ti GPU. The training process spans 30 epochs with a batch size of 4. The Adam optimizer is employed, starting with an initial learning rate of 0.002 and a weight decay of 0.0001. A multi-step learning rate scheduler is utilized, reducing the learning rate by a factor of 0.1 at the 10th and 15th epochs. Furthermore, Kaiming Uniform is used for weight initialization.
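The learning rate schedule described above can be written out explicitly. The sketch below is framework-independent and assumes 0-indexed epochs, so that the reductions take effect from epoch index 10 and epoch index 15; the exact indexing convention used in training is not stated, and the constant and function names are illustrative.

```python
BASE_LR = 0.002        # initial learning rate
MILESTONES = (10, 15)  # epochs at which the rate is reduced
GAMMA = 0.1            # multiplicative reduction factor
NUM_EPOCHS = 30


def learning_rate(epoch):
    """Learning rate used during the given 0-indexed epoch."""
    drops = sum(1 for milestone in MILESTONES if epoch >= milestone)
    return BASE_LR * GAMMA ** drops


schedule = [learning_rate(epoch) for epoch in range(NUM_EPOCHS)]
for epoch in (0, 9, 10, 14, 15, 29):
    print(epoch, f"{schedule[epoch]:.6f}")
```

Under this reading, the first 10 epochs train at 0.002, the next 5 at one tenth of that, and the remaining 15 at one hundredth.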

4.4. Quantitative Results

To validate the detection performance of the proposed method, we conducted extensive experiments on both the DZGSet and DAIR-V2X datasets. Our network was compared against several benchmarks, including single-vehicle perception (baseline), early and late cooperative perception, and state-of-the-art intermediate cooperative perception methods. As summarized in Table 2, the proposed method consistently achieved the highest AP, underscoring its superiority in vehicle–infrastructure cooperative perception.

Table 2.

Detection performance on the DZGSet dataset and the DAIR-V2X dataset.

Specifically, the proposed method achieved an AP@0.5 of 0.652 and an AP@0.7 of 0.493 on the DZGSet dataset, and an AP@0.5 of 0.780 and an AP@0.7 of 0.632 on the DAIR-V2X dataset. Compared to the single-vehicle perception method (PointPillars), our approach improved AP@0.5 by 0.288 on the DZGSet dataset and by 0.299 on the DAIR-V2X dataset. The AP@0.5 and AP@0.7 values on the DAIR-V2X dataset are generally higher than those on the DZGSet dataset. This suggests that the larger-scale DAIR-V2X dataset offers a more diverse array of training samples, enabling the model to learn more robust features and enhance detection accuracy. Although the DZGSet dataset is smaller in scale, its multi-category annotations create a more complex learning environment, allowing for a broader evaluation of the model’s generalization capabilities. This complexity ultimately improves the model’s adaptability to various detection scenarios. Furthermore, against early, late, and state-of-the-art intermediate cooperative perception methods, our method improves on the strongest competitor by 0.037 in AP@0.5 and 0.043 in AP@0.7 on the DZGSet dataset (F-Cooper), and by 0.034 in AP@0.5 and 0.028 in AP@0.7 on the DAIR-V2X dataset (CoAlign).

The single-vehicle perception method relies solely on the vehicle’s sensors and does not utilize supplementary infrastructure information, leading to significantly lower performance compared to the proposed method. On the other hand, intermediate cooperative perception methods typically fuse vehicle and infrastructure features at a single scale through concatenation or weighting mechanisms, which is insufficient for optimal detection performance, and therefore inferior to the proposed method as well.

The advancements of our vehicle–infrastructure cooperative perception network are primarily attributed to the Multi-Scale Dynamic Feature Fusion Module, which effectively integrates features from both domains. Specifically, at smaller scales, it refines bounding box localization by fusing spatial details, while at larger scales, it aggregates semantic information to mitigate spatial misalignment between vehicle and infrastructure sensors. By dynamically capturing correlations between vehicle and infrastructure features in both channel and spatial dimensions, it ensures thorough fusion of interacted features and the original vehicle–infrastructure features at each spatial location. This synergy allows the proposed approach to fully exploit the complementary perception data from infrastructure, achieving state-of-the-art detection accuracy while maintaining robustness in complex real-world scenarios.
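The idea of fusing features "at each spatial location" can be illustrated with a simplified per-point self-attention step. The sketch below is not the PPSA module as implemented in the proposed network: it assumes that the vehicle, infrastructure, and interacted features at one grid cell are treated as three tokens, it omits learned query, key, and value projections, and it averages the attended tokens into a single fused vector. All names are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def per_point_self_attention(tokens):
    """Scaled dot-product self-attention over the tokens at one grid cell.

    tokens is a list of equal-length feature vectors, for example
    [vehicle_feature, infrastructure_feature, interacted_feature].
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    attended = []
    for query in tokens:
        # Similarity of this token to every token at the same location.
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in tokens]
        weights = softmax(scores)
        attended.append([sum(w * value[c] for w, value in zip(weights, tokens))
                         for c in range(dim)])
    # Average the attended tokens (an assumed reduction) into one vector.
    return [sum(tok[c] for tok in attended) / len(attended)
            for c in range(dim)]


def fuse_feature_maps(vehicle, infrastructure, interacted):
    """Apply the per-point step to H x W x C maps on a shared grid."""
    return [[per_point_self_attention([v, i, m])
             for v, i, m in zip(row_v, row_i, row_m)]
            for row_v, row_i, row_m in zip(vehicle, infrastructure, interacted)]


vehicle_map = [[[1.0, 0.0], [0.5, 0.5]]]
infra_map = [[[0.0, 1.0], [0.5, 0.5]]]
interacted_map = [[[0.5, 0.5], [0.5, 0.5]]]
print(fuse_feature_maps(vehicle_map, infra_map, interacted_map))
```

Because attention is computed only among features that share a location, the cost grows linearly with the number of grid cells, and running the same step at each scale of a feature pyramid would give the multi-scale behavior described above.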

Furthermore, we performed inference time experiments on the DAIR-V2X dataset. The analysis reveals a trade-off between computational efficiency and detection performance. The single-vehicle perception method achieves the fastest inference time of 28.27 ms, owing to its low model complexity. In contrast, V2X-ViT has the slowest inference time at 172.01 ms, primarily due to the high computational demands of its attention mechanisms. The proposed method records an inference time of 57.62 ms, achieving a balance between accuracy and computational cost. However, this time is still higher than that of the single-vehicle perception method, suggesting that further optimization may be necessary for deployment on edge devices with limited computational resources.
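For a rough sense of what these latencies mean in practice, they can be converted into frame rates and into slowdown factors relative to the single-vehicle baseline. The sketch below uses only the three inference times reported above; the dictionary keys and function names are illustrative.

```python
# Reported inference times on the DAIR-V2X dataset, in milliseconds.
INFERENCE_MS = {
    "single-vehicle": 28.27,
    "proposed": 57.62,
    "V2X-ViT": 172.01,
}


def frames_per_second(latency_ms):
    """Throughput implied by a per-frame latency, ignoring other overheads."""
    return 1000.0 / latency_ms


def slowdown(latency_ms, baseline_ms):
    """How many times slower a method is than the baseline."""
    return latency_ms / baseline_ms


baseline = INFERENCE_MS["single-vehicle"]
for name, latency in INFERENCE_MS.items():
    print(f"{name}: {frames_per_second(latency):.1f} FPS, "
          f"{slowdown(latency, baseline):.2f}x baseline latency")
```

The implied frame rates cover inference only; sensor capture, communication, and pre- and post-processing would reduce the end-to-end rate.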

4.5. Qualitative Results

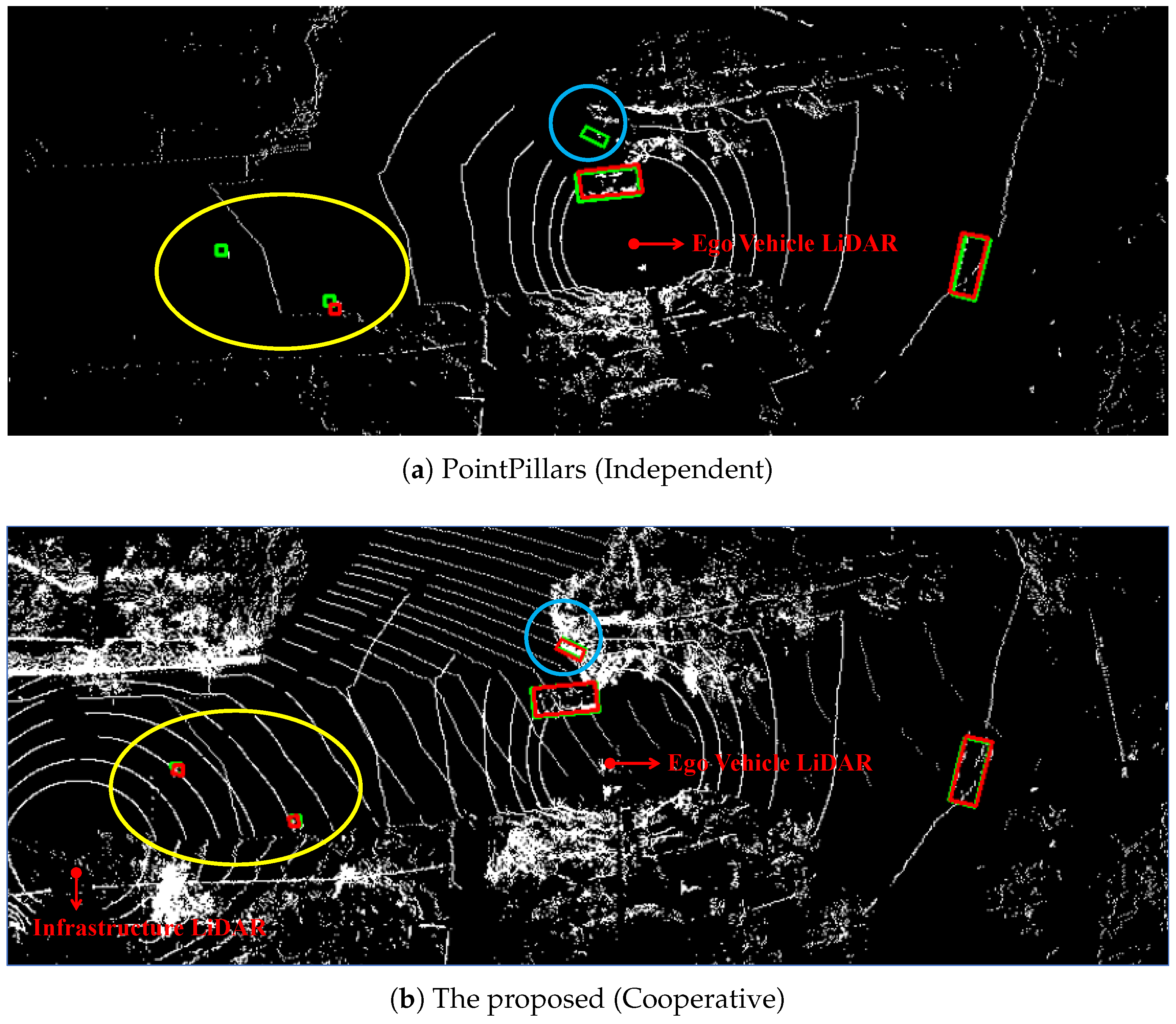

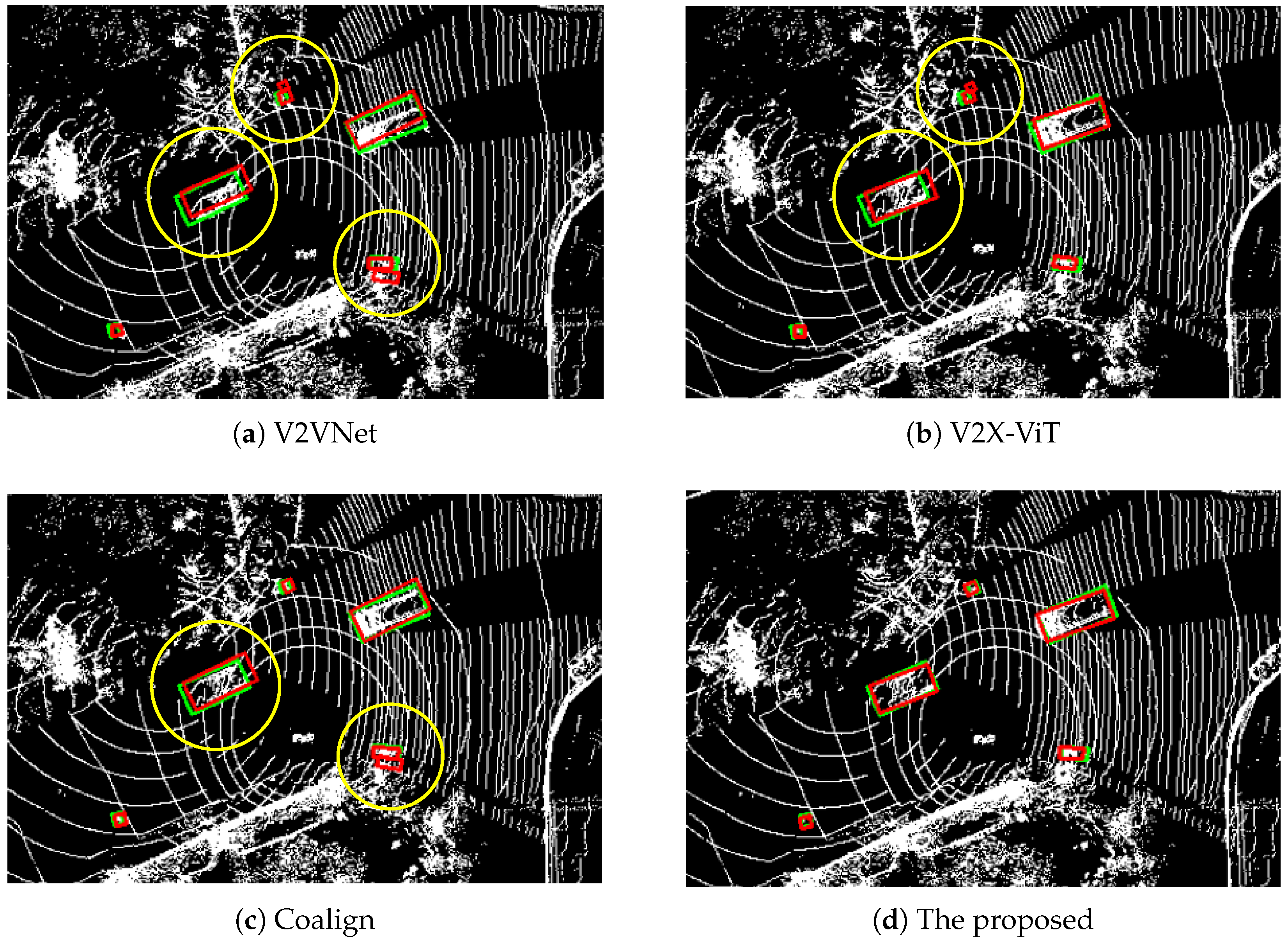

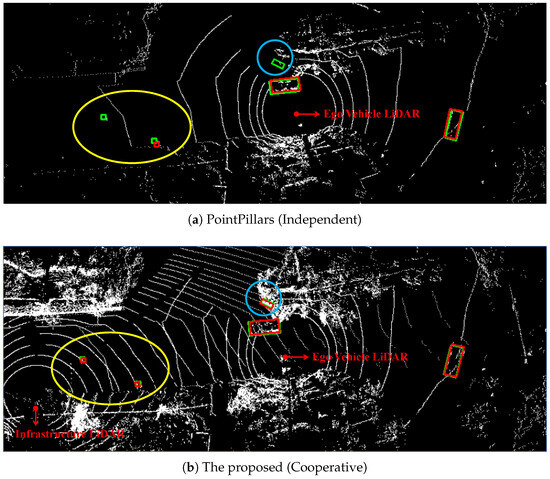

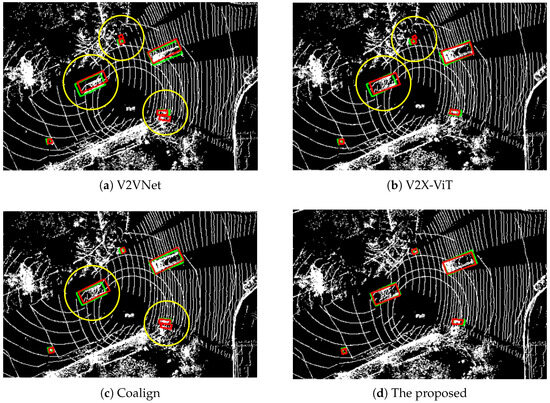

To illustrate the perception capabilities of the different models, we present detection visualization comparisons for typical scenarios in Figures 6 and 7.

Figure 6.

Detection visualization comparison with the single-vehicle perception method on the DZGSet dataset. Green boxes denote ground truth, while red boxes denote detection results. The blue circle marks an object that is occluded from the single vehicle’s view, and the yellow circles mark objects that are distant from it.

Figure 7.

Detection visualization comparison with advanced cooperative perception methods on the DZGSet dataset. Green boxes denote ground truth, while red boxes denote detection results. The yellow circles mark inaccurate detections.

Comparison with single-vehicle perception method (Figure 6): Figure 6a highlights the limitations of single-vehicle perception in occlusion and long-range scenarios. For instance, the cyclist marked by the blue circle is occluded by a nearby car, rendering it undetectable for the ego vehicle. Additionally, two pedestrians marked by yellow circles are located at a significant distance, leading to missed or misaligned detections. In contrast, as shown in Figure 6b, the proposed method successfully detects these objects by leveraging complementary information from infrastructure LiDAR. This demonstrates that in a vehicle–infrastructure cooperation system, infrastructure sensors enhance single-vehicle perception capabilities by providing complementary viewpoint data, effectively mitigating blind spots caused by occlusion and reducing performance degradation typically observed in single-vehicle perception at extended distances.

Comparison with advanced cooperative perception methods (Figure 7): Figure 7a–c visualize the detection results of various cooperative methods (V2VNet, V2X-ViT, and CoAlign). The yellow circles indicate inaccurate detections, including false positives for pedestrians and cyclists, as well as misaligned bounding boxes for cars. In comparison, the proposed method produces predictions that are closely aligned with the ground truth, as shown in Figure 7d. The superiority of the proposed method arises from its ability to process vehicle and infrastructure features through the MSDI and PPSA modules at multiple scales. It effectively aggregates spatial details and semantic information from both vehicle and infrastructure features, resulting in more accurate and stable detection outcomes.

Overall, the qualitative results show that the proposed approach achieves more accurate detections than both the single-vehicle perception method and state-of-the-art cooperative perception methods.

4.6. Ablation Study

To validate the effectiveness of each component in the proposed vehicle–infrastructure cooperative perception network, we conducted comprehensive ablation studies on both the DZGSet and DAIR-V2X datasets. The results are summarized in Table 3, where we systematically integrated key modules into the baseline model and evaluated their contributions.

Table 3.

Ablation studies on the DZGSet dataset and the DAIR-V2X dataset.

The results indicate that each component significantly enhances detection accuracy, with the multi-scale fusion strategy demonstrating the most substantial impact, improving AP@0.5 by 0.040 and AP@0.7 by 0.035 on the DZGSet dataset, and AP@0.5 by 0.020 and AP@0.7 by 0.037 on the DAIR-V2X dataset. This enhancement is attributed to the multi-scale fusion’s ability to capture complementary information from vehicle–infrastructure features: small-scale features improve spatial localization by preserving geometric details, while large-scale features aggregate semantic context to mitigate ambiguities arising from sensor misalignment. Furthermore, within the MSDI module, the SMSDI shows a more significant contribution to performance gains compared to the CMSDI. This suggests that the interaction between vehicle and infrastructure features in the spatial dimension is particularly advantageous for the comprehensive fusion of these features. By synergizing these components, our network achieves state-of-the-art performance on both the DZGSet and DAIR-V2X datasets.

5. Conclusions

To tackle the significant challenge of domain gap arising from sensor characteristics in real-world applications, we introduce the Multi-Scale Dynamic Feature Fusion Module. This module integrates vehicle and infrastructure features through a multi-scale fusion strategy that operates at both fine-grained spatial and broad semantic levels. This approach facilitates a more effective exploration of the correlations and complementarities between vehicle and infrastructure features.

During the feature fusion process at each scale, we implement two key components: the Multi-Source Dynamic Interaction Module (MSDI) and the Per-Point Self-Attention Module (PPSA). The MSDI module adaptively aggregates vehicle–infrastructure features across spatial and channel dimensions in response to environmental variations, while the PPSA module optimizes feature aggregation at each spatial location through a self-attention mechanism. This combination ensures that vehicle–infrastructure features at every scale are fully utilized and effectively fused.

Additionally, we have constructed DZGSet, a self-collected real-world cooperative perception dataset that includes annotations for multiple object categories. Extensive validation on both the DAIR-V2X and the self-collected DZGSet datasets demonstrates state-of-the-art performance. Compared to advanced cooperative perception methods, our approach achieves improvements of at least 0.037 in AP@0.5 and 0.043 in AP@0.7 on the DZGSet dataset, and of at least 0.034 in AP@0.5 and 0.028 in AP@0.7 on the DAIR-V2X dataset. The ablation studies further confirm that each proposed module contributes significantly to these performance gains.

While LiDAR point clouds provide precise 3D information, they often lack color and texture details. By combining high-resolution images with LiDAR point clouds, we can compensate for this deficiency, resulting in a more comprehensive environmental perception. In future work, we aim to extend our method to incorporate multi-modal data integration.

In conclusion, the proposed method achieves excellent vehicle–infrastructure cooperative perception performance by efficiently fusing vehicle–infrastructure features, thereby providing high-precision and robust data support that serves as a solid foundation for decision making in autonomous vehicles.

Author Contributions

Conceptualization, J.L.; methodology, J.L.; software, J.L.; validation, J.L.; formal analysis, J.L.; investigation, J.L.; resources, X.W. and P.W.; data curation, J.L.; writing—original draft preparation, J.L.; writing—review and editing, X.W. and P.W.; visualization, J.L.; supervision, X.W. and P.W.; project administration, X.W. and P.W.; funding acquisition, P.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Commission of Shanghai Municipality (22dz1203400).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this paper are DAIR-V2X and DZGSet. The DAIR-V2X dataset can be accessed at: https://github.com/AIR-THU/DAIR-V2X (accessed on 8 April 2024). For the DZGSet dataset, please contact the corresponding author directly.

Acknowledgments

We thank Can Tian for their contributions to resources, manuscript review, and supervision.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. Nuscenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11621–11631.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Huang, K.C.; Lyu, W.; Yang, M.H.; Tsai, Y.H. Ptt: Point-trajectory transformer for efficient temporal 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 14938–14947.

- Wu, H.; Wen, C.; Shi, S.; Li, X.; Wang, C. Virtual sparse convolution for multimodal 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21653–21662.

- Xie, G.; Chen, Z.; Gao, M.; Hu, M.; Qin, X. PPF-Det: Point-pixel fusion for multi-modal 3D object detection. IEEE Trans. Intell. Transp. Syst. 2024, 25, 5598–5611.

- Chen, S.; Hu, J.; Shi, Y.; Peng, Y.; Fang, J.; Zhao, R.; Zhao, L. Vehicle-to-everything (V2X) services supported by LTE-based systems and 5G. IEEE Commun. Stand. Mag. 2017, 1, 70–76.

- Storck, C.R.; Duarte-Figueiredo, F. A 5G V2X ecosystem providing internet of vehicles. Sensors 2019, 19, 550.

- Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. Pointpainting: Sequential fusion for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4604–4612.

- Xu, R.; Chen, W.; Xiang, H.; Xia, X.; Liu, L.; Ma, J. Model-agnostic multi-agent perception framework. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 1471–1478.

- Xu, R.; Li, J.; Dong, X.; Yu, H.; Ma, J. Bridging the domain gap for multi-agent perception. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 6035–6042.

- Xu, R.; Xiang, H.; Xia, X.; Han, X.; Li, J.; Ma, J. Opv2v: An open benchmark dataset and fusion pipeline for perception with vehicle-to-vehicle communication. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 2583–2589.

- Chen, Q.; Ma, X.; Tang, S.; Guo, J.; Yang, Q.; Fu, S. F-cooper: Feature based cooperative perception for autonomous vehicle edge computing system using 3D point clouds. In Proceedings of the 4th ACM/IEEE Symposium on Edge Computing, Washington, DC, USA, 7–9 November 2019; pp. 88–100.

- Yu, H.; Tang, Y.; Xie, E.; Mao, J.; Yuan, J.; Luo, P.; Nie, Z. Vehicle-infrastructure cooperative 3d object detection via feature flow prediction. arXiv 2023, arXiv:2303.10552.

- Ren, S.; Lei, Z.; Wang, Z.; Dianati, M.; Wang, Y.; Chen, S.; Zhang, W. Interruption-aware cooperative perception for V2X communication-aided autonomous driving. IEEE Trans. Intell. Veh. 2024, 9, 4698–4714.

- Yazgan, M.; Graf, T.; Liu, M.; Fleck, T.; Zöllner, J.M. A Survey on Intermediate Fusion Methods for Collaborative Perception Categorized by Real World Challenges. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 2226–2233.

- Yu, H.; Luo, Y.; Shu, M.; Huo, Y.; Yang, Z.; Shi, Y.; Guo, Z.; Li, H.; Hu, X.; Yuan, J.; et al. Dair-v2x: A large-scale dataset for vehicle-infrastructure cooperative 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 21361–21370.

- Han, Y.; Zhang, H.; Li, H.; Jin, Y.; Lang, C.; Li, Y. Collaborative perception in autonomous driving: Methods, datasets, and challenges. IEEE Intell. Transp. Syst. Mag. 2023, 15, 131–151.

- Chen, Q.; Tang, S.; Yang, Q.; Fu, S. Cooper: Cooperative perception for connected autonomous vehicles based on 3d point clouds. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–9 July 2019; pp. 514–524.

- Mo, Y.; Zhang, P.; Chen, Z.; Ran, B. A method of vehicle-infrastructure cooperative perception based vehicle state information fusion using improved kalman filter. Multimed. Tools Appl. 2022, 81, 4603–4620.

- Zhao, X.; Mu, K.; Hui, F.; Prehofer, C. A cooperative vehicle-infrastructure based urban driving environment perception method using a DS theory-based credibility map. Optik 2017, 138, 407–415.

- Xu, R.; Xiang, H.; Tu, Z.; Xia, X.; Yang, M.H.; Ma, J. V2x-vit: Vehicle-to-everything cooperative perception with vision transformer. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 107–124.

- Li, Y.; Ma, D.; An, Z.; Wang, Z.; Zhong, Y.; Chen, S.; Feng, C. V2X-Sim: Multi-agent collaborative perception dataset and benchmark for autonomous driving. IEEE Robot. Autom. Lett. 2022, 7, 10914–10921.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

- Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499.

- Li, J.; Luo, C.; Yang, X. PillarNeXt: Rethinking network designs for 3D object detection in LiDAR point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 17567–17576.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Wang, T.H.; Manivasagam, S.; Liang, M.; Yang, B.; Zeng, W.; Urtasun, R. V2vnet: Vehicle-to-vehicle communication for joint perception and prediction. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part II 16. Springer: Cham, Switzerland, 2020; pp. 605–621.

- Lu, Y.; Li, Q.; Liu, B.; Dianati, M.; Feng, C.; Chen, S.; Wang, Y. Robust collaborative 3d object detection in presence of pose errors. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 4812–4818.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).