1. Introduction

Digital technologies and artificial intelligence (AI) drive transformative changes across various sectors, including education [1]. AI facilitates personalized learning experiences, supports data-driven decision-making, and enables automated assessments and customized educational content [2]. AI-driven analytics enable personalized learning and assist teachers in developing targeted educational strategies [3].

As AI technology reshapes the K-12 education paradigm, countries are actively developing policies to create AI-based learning environments. South Korea, through its revised 2022 educational curriculum, emphasized the use of AI in teaching, learning, and assessment processes [4], while Japan implemented the ‘GIGA School Program’ to support AI-based personalized learning with a one-device-per-student policy [5]. Singapore introduced the ‘AI Learning Analytics’ system to analyze individual student learning data and support customized education [6], and China has expanded its AI-based education innovation through the ‘New Generation Artificial Intelligence Development Plan’ and the ‘Smart Campus AI Initiative’, which includes AI textbook development and online assessment systems [7,8]. Saudi Arabia and the UAE are also advancing in building smart learning environments utilizing AI [9,10].

The expansion of AI in education is transforming teaching methods and reshaping educators’ roles. Teachers are increasingly required to design personalized learning experiences using AI-based learning analytics tools and to enhance the objectivity of assessments through AI grading systems, thereby serving as both designers of personalized learning and data-driven decision-makers [11]. These changes require teachers to possess AI Literacy and highlight the need for effective integration of AI in education. In the United States, the National AI Education Initiative (2022) has been enhancing AI education by linking the AI4K12 framework with STEM education and expanding AI Literacy training for teachers [12]. The United Kingdom and the European Union (EU) continue to explore integrating AI technology in education, developing AI Literacy training and usage guidelines for teachers [13].

AI Literacy encompasses a comprehensive skill set that includes understanding the fundamental concepts and principles of AI, applying them effectively within an educational context, and considering the social and ethical implications of their use. This skill set is an extension of traditional literacy (reading, writing, speaking) and digital literacy, including the understanding of AI’s operational principles and data analytics capabilities, the ability to assess the impact of AI on education and society, and a responsible ethical stance on AI usage [1]. Teacher AI Literacy is the ability to effectively integrate AI technologies in all educational stages: preparation, execution, assessment, and utilization of assessments. This requires teachers to possess theoretical and practical knowledge, develop technical skills to achieve educational objectives, and master pedagogical methods for effective AI application [1].

With the increasing importance of AI Literacy, the level of teachers’ AI Literacy is becoming more crucial in enhancing the quality of education and student learning outcomes. Recent studies have been actively exploring the development of training programs to improve AI Literacy and the execution of technology, with a focus on teachers’ AI Literacy capabilities and their influence on students’ acquisition of AI Literacy [14,15,16]. Initial studies concentrated primarily on the impact of teachers’ AI Literacy on students’ learning, and most prior research has focused on establishing the concept of AI Literacy and developing pedagogies. There has been less empirical examination of how teachers’ perceptions affect their AI Literacy performance and educational effectiveness in real classrooms.

This study makes several significant contributions. First, it empirically investigates the relationship between teachers’ perceptions and their AI Literacy performance using advanced machine learning techniques, addressing a gap in previous research that primarily focused on conceptual discussions. Second, it applies XGBoost and SHAP analysis to identify key factors affecting teachers’ AI Literacy across different teaching phases, providing both predictive insights and explainable interpretations. Third, the findings offer practical implications for the design of teacher training programs and educational policies aimed at fostering AI Literacy in real classroom settings.

Furthermore, the study clarifies which factors have a significant impact on teachers’ AI Literacy performance at each instructional phase, providing insights into where targeted support is most effective. This enables the development of focused teacher training strategies that can enhance teachers’ AI Literacy performance efficiently within a short period of time, especially addressing gaps in teachers’ self-assessment and confidence in AI Literacy.

By providing empirical data and methodological innovation, this study supports the systematic development of AI Literacy education in the educational field. These contributions are expected to offer valuable insights for researchers, teacher educators, and educational policymakers seeking to foster AI Literacy in K-12 education.

2. Literature Review

As the use of AI technologies in K-12 education expands, the educational implications of AI Literacy are becoming increasingly significant. However, AI Literacy is often conflated with digital literacy or understood as part of AI education, and its conceptual independence is not always clearly defined [17,18,19,20,21,22]. This study aims to strengthen the foundation of our research by systematically discussing the definition of AI Literacy. To concretize AI Literacy, we first articulate it through a bottom-up approach based on the relationship between General Literacy and Literacy. General Literacy refers to the basic knowledge and competencies necessary for social participation, while Literacy involves the ability to understand and use information within specific contexts [23,24,25]. Traditionally focused on reading and writing, the concept of Literacy was expanded by The New London Group [26] through the introduction of ‘Multiliteracies’, emphasizing modes of meaning-making in digital and multicultural environments. Gilster [27] extended the scope of literacy within the information technology environment by introducing ‘Digital Literacy’. AI Literacy extends Digital Literacy, encompassing the understanding of AI, its critical analysis, and ethical use [22,28]. While some previous studies viewed AI Literacy as a subset of digital literacy [21], recent research suggests it is developing as an independent concept reflecting the unique characteristics and societal impacts of AI technology [22,29].

Next, we compare the educational approaches of AI education and AI Literacy. AI education, as part of computing education, focuses on developing problem-solving skills through understanding AI concepts and principles, processing data, and creating models [30,31,32,33,34]. (Depending on national educational policies and academic traditions, computing curricula in K-12 education are variously named Informatics, Computing, ICT, etc. [30,31,32,33,34]; CC 2020 acknowledges these differences and clarifies that the terms are used interchangeably. Initially, the concept of “Introduction to AI” (1991) was included in computer science education [35], and over time elements such as “Machine Learning”, “Data Mining” (2001), “Natural Language Processing”, and “AI Ethics” (2021) were added, gradually expanding the scope [35]. Recently, the “AI Competency Framework” was introduced, emphasizing that not only AI technical competencies but also ethical considerations must be addressed at all educational levels [35].) International K-12 AI education guidelines play a crucial role in establishing and developing an AI education system [20]. UNESCO [36] is developing a K-12 AI curriculum, setting directions for AI education in various countries, and AI4K12 recommends designing curricula around the five Big Ideas of “Perception of Intelligence”, “Representation and Reasoning”, “Learning”, “Natural Interaction”, and “Societal Impact” [14]. This highlights that AI education should encompass ethical and social responsibilities beyond mere technical acquisition [20]. This includes learning AI concepts, practical technology applications, and the integration of AI with other academic disciplines [3,20,28,35,36,37].

In contrast, AI Literacy is not confined to a specific subject area but encompasses understanding the basic principles of AI technology, using tools, and analyzing its social and ethical impacts [3,22,28,37,38]. In AI-based educational environments, teachers are required to do more than merely use AI tools; they must effectively utilize AI technologies within educational contexts and critically assess their use [21,29]. Teachers need to understand AI’s basic concepts and operational principles to develop and apply appropriate instructional strategies that align with learning objectives. Therefore, a teacher with AI Literacy can be defined as “an expert who effectively utilizes AI technology in lesson planning, teaching execution, and the assessment process”.

Section 2 is organized to provide the theoretical background and methodological framework for this study. Instead of relying on a pre-established theoretical framework, this study constructed its theoretical foundation by systematically reviewing the conceptual development of AI Literacy and its educational implications, as well as the evolving roles of teachers in AI-based educational environments. This integrative approach reflects recent educational trends, curriculum changes, and the practical needs of teachers. Additionally, this study incorporates advanced machine learning techniques (XGBoost and SHAP) to analyze factors influencing teachers’ AI Literacy performance, providing a methodological innovation beyond traditional theoretical frameworks in educational research.

Section 2.1 discusses the transformation of educational environments due to AI adoption and the evolving roles of teachers, followed by a review of preceding research on AI Literacy and teacher professional development.

Section 2.2 subsequently introduces the analytical techniques employed in this study, namely XGBoost and SHAP, and explains how these methods facilitate the identification of key factors influencing teachers’ AI Literacy.

2.1. AI-Based Educational Environments and the Evolving Role of Teachers

As K-12 educational settings increasingly adopt AI technologies, the role of teachers has expanded from mere transmitters of knowledge to designers of data-driven learning experiences, evaluators, experts in AI tool utilization, and ethical decision-makers [12,39]. This section examines the transformation in AI-based educational environments, focusing on AI-driven learning analytics, AI-based assessment systems, AI educational tools, and ethical considerations in AI-based educational environments. It also discusses the implications of these changes for teachers’ AI Literacy capabilities and roles, alongside analyzing trends in AI Literacy research.

2.1.1. The Impact of AI-Based Educational Environments on Teachers’ Roles

AI-based learning analytics, which analyze vast amounts of learning data in real time to provide personalized learning experiences, allow teachers to identify and tailor educational strategies to individual student patterns [40]. For instance, Singapore’s implementation of the ‘AI Learning Analytics’ system enables customized education support by analyzing individual learning data [6]. Studies by Siemens and Baker [41] and Ferguson [42] suggest that the use of AI learning analytics can enhance student achievement by 15–20% and increase learning efficiency by over 30% when teachers apply AI data critically in lesson planning. Consequently, teachers play a crucial role in interpreting AI analysis and adjusting educational strategies. The importance of integrating AI in teaching and assessment practices has been emphasized in the revised 2022 educational curriculum in South Korea [4].

AI-based assessment systems provide automated grading and adaptive feedback, reducing the workload for teachers even in large-scale assessments [43]. However, these systems face limitations in assessing creative problem-solving skills and may produce biased outcomes based on certain linguistic styles or grammatical structures [44,45]. Efforts in China to apply and refine AI-based assessment systems in educational settings address these biases through research and policy development [7].

AI educational tools, such as AI chatbots and virtual tutors, offer instantaneous responses and support repetitive learning, but their effectiveness is not uniform across all learner groups, possibly leading to biased outcomes [46,47]. Japan’s ‘GIGA School Program’ supports customized learning through a one-device-per-student policy, guiding effective utilization of AI educational tools [5]. Teachers thus play a critical role in recognizing the limitations of AI tools and guiding students in their proper use.

Ethical considerations in AI-based educational environments address significant issues such as algorithmic bias, data privacy, and transparency in AI decision-making [38]. These ethical concerns must be considered across all aspects of AI learning analytics, AI assessment systems, and AI educational tools. The EU’s AI Act provides legal guidelines to address biases in AI educational tools, while initiatives in Saudi Arabia and the UAE focus on creating smart learning environments utilizing AI [9,10]. The AI4K12 Framework includes AI ethics and fairness as core elements, underscoring the role of teachers in ensuring transparency in AI decision-making processes and educating students about the limitations and responsibilities associated with AI technologies.

2.1.2. Research Trends in AI Literacy and Teacher Professional Development

Research on AI Literacy capabilities has focused on defining essential elements of AI Literacy for teachers and modeling these components. Smith and Lee [15] categorized AI Literacy into understanding AI concepts, data literacy, using AI tools, and ethical considerations, while Jones and Brown [16] emphasized that AI Literacy should extend beyond technical understanding to include educational applications and ethical judgments. Moreover, Schmidt and Fischer [48] noted that a lack of teacher training programs is a significant barrier to spreading AI Literacy.

Studies on developing AI Literacy training programs concentrate on effective training designs to enhance teachers’ AI Literacy skills. Chen et al. [49] reported that hands-on training effectively improves teachers’ ability to use AI tools, and Ng [50] proposed that comprehensive training programs covering AI concept learning, tool use, and educational applications are essential. Jones and Bradshaw [51] highlighted the importance of continuous practice opportunities and collaboration among teachers following training sessions. Anderson and Shattuck [52] argued that maintaining AI Literacy requires regular training, practice-based learning, collaboration among teachers, and feedback provision.

Research on applying AI Literacy in educational settings focuses on how AI technologies are practically utilized in classrooms. Chen et al. [49] observed that teachers who received hands-on training were more capable of effectively integrating AI-based educational tools into their teaching. However, existing studies tend to concentrate on practical approaches to enhancing AI Literacy capabilities, with relatively limited research on the challenges and solutions that teachers face during the implementation of AI Literacy education.

Studies on AI Literacy and teachers’ self-efficacy examine the relationship between teachers’ capacity to use AI and their educational attitudes. Self-efficacy, the belief in one’s ability to successfully complete a specific task, is closely linked to confidence in AI Literacy-related skills. Wang et al. [53] found that teachers who underwent AI training were 40% more likely to utilize AI technologies and more likely to adopt AI-based educational tools. Smith et al. [54] reported that teachers with higher self-assessment scores were more active in using AI tools, although Schmid et al. [55] cautioned that self-assessment results might not always align with actual performance capabilities. To address discrepancies between self-assessment and actual performance, Rosenberg et al. [56] developed the AI Literacy Competency Framework to objectively assess AI utilization skills and clarify the effectiveness of AI Literacy training.

As AI technology proliferates in educational environments, research on AI Literacy is evolving, with increasing focus on teachers’ AI Literacy skills. This research trend is establishing a crucial foundation for practical classroom applications, reflecting the growing importance of AI Literacy and the changing role of teachers in contemporary education.

2.2. Application of XGBoost and SHAP in Machine Learning-Based Educational Data Research

Among the various machine learning techniques used in educational data analysis, Decision Trees and Random Forests have been prominently employed. Decision Trees automatically learn the relationships between features, yet single tree models are prone to overfitting and can exhibit performance variability depending on data sampling [57]. To overcome these limitations, Random Forests combine multiple trees to enhance prediction accuracy, although interpreting the importance of features remains a challenge [58].

XGBoost (eXtreme Gradient Boosting) addresses these limitations and is widely used in the analysis of educational data. XGBoost is a Gradient Boosting-based algorithm that combines multiple decision trees to maximize predictive performance [59]. This algorithm applies weight updates and regularization techniques to prevent overfitting while maintaining high predictive accuracy and provides stable performance even in datasets with nonlinear relationships and multicollinearity [60]. Furthermore, XGBoost utilizes ensemble learning techniques to combine several weak predictive models (decision trees) into a strong predictor. This iterative improvement process corrects errors from previous trees, thereby optimizing the overall model performance. Such ensemble methods adapt to the complexity and characteristics of the data, showing strong performance even on complex datasets such as educational data.

Recent studies indicate that XGBoost outperforms traditional machine learning models, with an increase in the average coefficient of determination ($R^2$) by 0.05–0.1 compared to Random Forests, and a reduction in the root mean square error (RMSE) by an average of 10–20% compared to regression analyses [58,60]. Additionally, the mean absolute error (MAE) has been reported to decrease by an average of 15% compared to single decision trees, enabling more precise predictions [57,58].

Performance evaluation of the XGBoost model utilizes various metrics, such as $R^2$, mean absolute error (MAE), mean squared error (MSE), root mean square error (RMSE), and mean absolute percentage error (MAPE). $R^2$ and MAPE are crucial indicators of predictive accuracy, with $R^2$ values above 0.7 typically indicating a model with high explanatory power and MAPE values below 10% signifying a highly accurate model [60,61].

However, XGBoost is often considered a “black box” model, making it difficult to intuitively interpret the impact of individual features on the prediction process [60]. To address this, SHAP (SHapley Additive exPlanations) analysis has been applied, which uses Shapley values from game theory to quantify the contribution of each feature to a prediction [62,63,64], thus enhancing the transparency of the model’s predictive process. By analyzing how the inclusion or exclusion of specific features affects prediction outcomes, SHAP helps intuitively interpret the results.

SHAP enables the intuitive understanding of whether specific features increase (positive values) or decrease (negative values) the prediction and also allows for the analysis of interactions between features [65]. This approach clarifies how significant features interact and contribute to predictions in educational data. Recent studies have further discussed both the possibilities and limitations of applying explainable AI (XAI) in education. For instance, Farrow (2023) explores the socio-technical challenges of implementing XAI, arguing that transparency alone may not ensure meaningful educational outcomes [66]. Similarly, Reeder (2023) investigates how user characteristics, such as gender and educational background, influence the interpretation of XAI outputs, highlighting the need to account for user diversity in educational applications of XAI [67]. These studies suggest that, although SHAP improves model interpretability, it is essential to carefully consider its practical implications and how users in educational contexts may interpret the results.

Optimizing the performance of the XGBoost model requires tuning hyperparameters, with recent studies extensively using Bayesian Optimization techniques to efficiently find the best settings while reducing computational load compared to traditional methods like Grid Search or Random Search [63]. This tuning significantly enhances model performance and helps prevent overfitting [60].

XGBoost and SHAP analysis are broadly used in educational research, including AI Literacy education, effectiveness analysis of teacher training programs, and student achievement prediction. For instance, in AI Literacy education research, XGBoost has been used to predict students’ understanding of AI concepts, while SHAP analysis has identified key factors influencing outcomes [60]. Additionally, SHAP has been employed in policy analysis to assess the impact of specific educational policies [68].

3. Materials and Methods

This study aims to identify factors influencing K-12 teachers’ Self-Perceived Performance of AI Literacy and to evaluate the performance of the model developed. The research procedure is outlined as follows (refer to Figure 1).

The collected data were preprocessed and analyzed using Python-based Google Colab (https://colab.research.google.com/, accessed on 14 February 2025), supported by GPU and essential programming libraries, including NumPy, Pandas, and scikit-learn (see Table 1).

3.1. Tool Development

The survey tool for this research was developed by integrating domestic and international research on AI Literacy and AI-based education, policy reports, and case analyses. To ensure the validity of the survey tool, a review by 16 experts in SW and AI education was conducted using Lawshe’s (1975) Content Validity Ratio (CVR). Items with a CVR value of 0.476 or higher were selected for the final research tool [69].
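As an illustration of the item-screening step, the following minimal sketch computes Lawshe’s CVR, defined as (n_e − N/2)/(N/2), where n_e is the number of experts rating an item “essential” and N is the panel size. The panel size (16) and cutoff (0.476) come from the text; the item names and vote counts are hypothetical and are not the study’s data.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2), ranging from -1 to 1."""
    if n_experts <= 0 or not 0 <= n_essential <= n_experts:
        raise ValueError("need 0 <= n_essential <= n_experts and n_experts > 0")
    half = n_experts / 2
    return (n_essential - half) / half


N_EXPERTS = 16   # panel size reported in the text
CUTOFF = 0.476   # retention threshold reported in the text

# Hypothetical counts of "essential" ratings per survey item (illustrative only).
essential_votes = {"item_A": 16, "item_B": 12, "item_C": 11, "item_D": 8}

cvr_by_item = {
    item: content_validity_ratio(votes, N_EXPERTS)
    for item, votes in essential_votes.items()
}
# Keep only the items whose CVR meets the cutoff.
retained = [item for item, cvr in cvr_by_item.items() if cvr >= CUTOFF]
```

With 16 experts, an item needs at least 12 “essential” ratings (CVR = 0.5) to pass this cutoff, since 11 ratings give a CVR of only 0.375.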

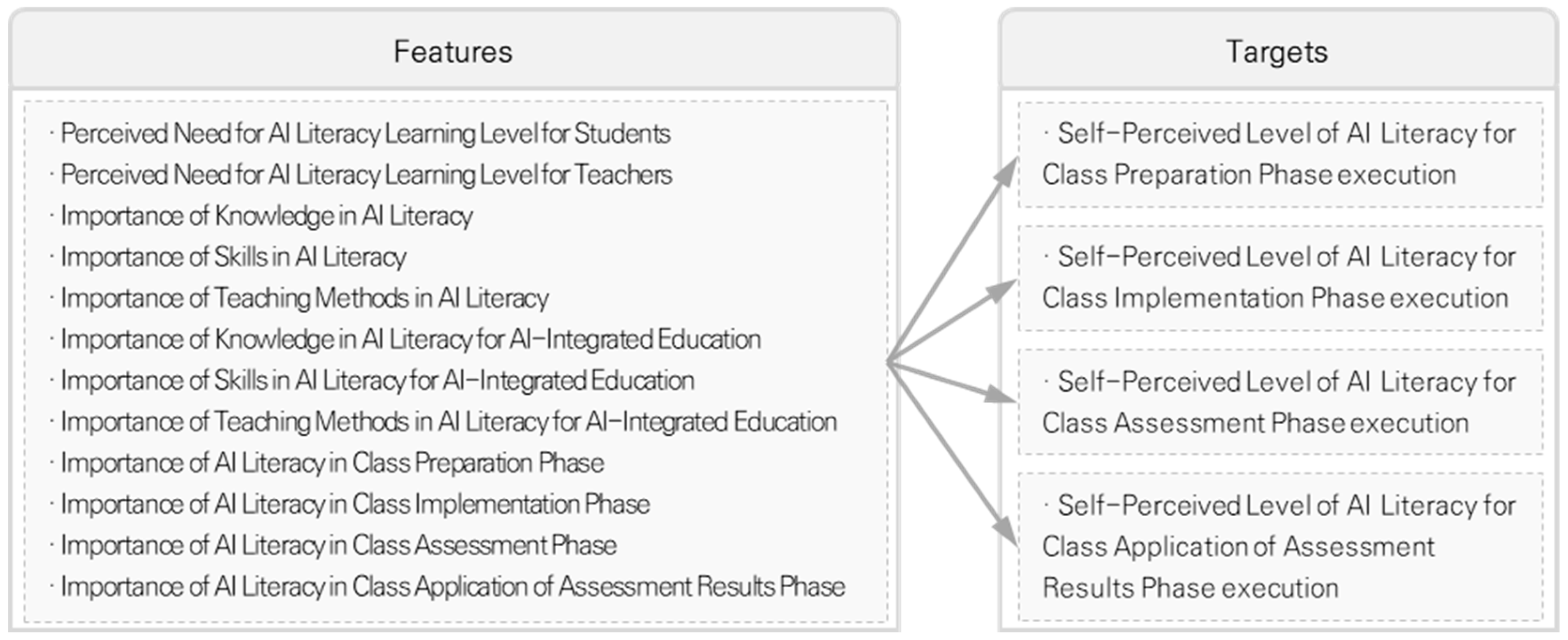

Composition of Features and Targets

The composition of features and targets for this study is as follows (refer to Figure 2). Individual models were trained and evaluated to predict four targets.

3.2. Data Collection

Reflecting the geographical and educational diversity of Korea, this study selected the C area as the target region. The C area encompasses both metropolitan and rural educational environments, including schools located in urban areas and those in agricultural and mountainous regions. According to the Ministry of the Interior and Safety (2024), approximately 55% of Korea’s administrative divisions are classified as urban areas (si) and about 40% as rural areas (gun), although more than 90% of the population resides in urban areas. Similarly, in C area, as of 1 April 2024, a total of 808 schools (kindergartens, elementary, middle, and high schools) were operating, with approximately 60% located in urban areas and 40% in rural and mountainous areas [70,71,72]. This distribution reflects the overall geographical and educational structure of Korea, making the C area a suitable and representative region for investigating teachers’ AI Literacy.

Although the survey was not conducted nationwide, the selection of the C area is significant in that the region structurally mirrors the geographical and educational characteristics of Korea. Therefore, the findings of this study are expected to provide meaningful insights applicable to the broader Korean educational context. While the study was conducted within a single province, the structural alignment between the C area and the national educational landscape mitigates concerns about the generalizability of the findings.

A census survey was conducted among all primary and secondary school teachers in the C area from 16 June to 30 June 2024 using an online questionnaire. Out of 1325 participating teachers, responses from 1172 teachers were considered valid after excluding 152 incomplete or unclear responses. The characteristics of the respondents are detailed in Table 2.

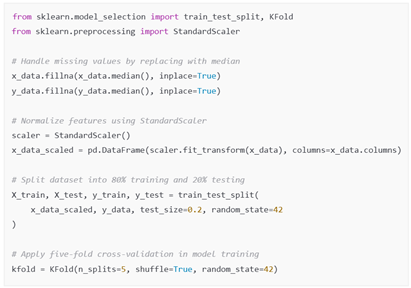

3.3. Data Preprocessing

Data preprocessing was performed to ensure data quality and enhance the reliability of model training, involving missing data handling, data normalization, and data splitting.

Missing values were addressed using median imputation to prevent data loss and minimize the influence of outliers [61].

Data normalization was achieved using StandardScaler to adjust scale differences among features, thus preventing overfitting and improving training speed.

$$X' = \frac{X - \mu}{\sigma}$$

$X'$: Normalized data, $X$: Original data, $\mu$: Mean, $\sigma$: Standard deviation.

The data were split into training data (80%) and test data (20%), and five-fold cross-validation was applied to validate the generalization performance of the model.
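The three preprocessing steps can be sketched with the Python standard library alone. This is an illustrative sketch rather than the study’s code: the sample values, the random seed, and all function names are hypothetical, and in practice scikit-learn’s SimpleImputer, StandardScaler, train_test_split, and KFold would normally be used. The order of operations follows the description above; whether the scaler was fitted before or after splitting is not stated in the source.

```python
import random
import statistics


def impute_median(column):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in column if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in column]


def standardize(column):
    """Apply z = (x - mean) / std using the population standard deviation."""
    mu = statistics.fmean(column)
    sigma = statistics.pstdev(column)
    if sigma == 0:
        return [0.0 for _ in column]
    return [(v - mu) / sigma for v in column]


def train_test_split_indices(n, test_fraction=0.2, seed=42):
    """Shuffle row indices and hold out a test fraction (80/20 by default)."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_test = round(n * test_fraction)
    return indices[n_test:], indices[:n_test]


def k_fold_indices(indices, k=5):
    """Yield (train, validation) index lists for k-fold cross-validation."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


# Hypothetical responses to one survey feature; None marks a missing answer.
raw = [3.0, None, 4.0, 5.0, None, 2.0, 4.0, 5.0, 1.0, 3.0]

filled = impute_median(raw)
scaled = standardize(filled)
train_idx, test_idx = train_test_split_indices(len(scaled))
folds = list(k_fold_indices(train_idx, k=5))
```

Each of the five folds serves once as the validation set while the remaining four are used for training, and the held-out 20% is never touched during cross-validation.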

3.4. Model Training and Performance Evaluation

A Pearson correlation matrix, including features and targets, was calculated to analyze linear relationships, serving as a basis for training the XGBoost model and conducting SHAP analysis. The correlation analysis results were visually represented through heatmaps, and the strength of relationships between features and targets was assessed based on Pearson correlation coefficients. Bayesian Optimization was applied to optimize the XGBoost model’s performance, using metrics such as $R^2$, MSE, MAE, and RMSE for evaluation. SHAP analysis was performed to identify key features contributing to the predictions and to analyze interactions among features.
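For reference, the Pearson coefficient underlying such a matrix can be computed as in the sketch below. The column names and values are hypothetical and only illustrate the calculation. With Pandas, the equivalent step would typically be a call to DataFrame.corr().

```python
import math


def pearson_r(x, y):
    """Pearson correlation: covariance divided by the product of the spreads."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def correlation_matrix(columns):
    """Pairwise Pearson r for a dict that maps column names to value lists."""
    names = list(columns)
    return {a: {b: pearson_r(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical Likert-style scores for two features and one target.
data = {
    "importance_preparation": [1, 2, 3, 4, 5],
    "importance_implementation": [2, 4, 6, 8, 10],
    "self_perceived_level": [5, 4, 3, 2, 1],
}
matrix = correlation_matrix(data)
```

The resulting nested dictionary is symmetric with ones on the diagonal, which is the structure a heatmap of the correlation matrix displays.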

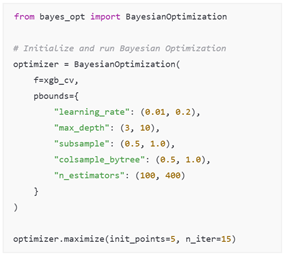

3.4.1. Hyperparameter Tuning for XGBoost Optimization

In this study, Bayesian Optimization was utilized to efficiently search for optimal hyperparameters such as learning_rate, max_depth, subsample, colsample_bytree, and n_estimators, reducing computational costs compared to traditional Grid Search and Random Search methods. The search can be expressed as

$$\theta^{*} = \arg\max_{\theta \in \Theta} f(\theta)$$

Here, $\theta$ represents the hyperparameter vector, $\Theta$ denotes the hyperparameter search space, and $f(\theta)$ is a function that describes the performance of the model based on the hyperparameters.

The search process involved an initial exploration of five points (init_points = 5) followed by 15 optimization iterations (n_iter = 15), for a total of 20 evaluations. Through this process, the optimal combination of hyperparameters was determined.

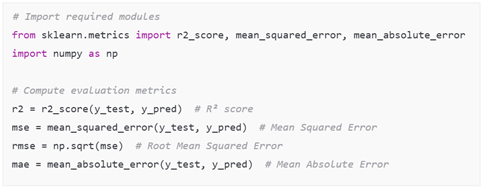

3.4.2. Performance Metrics for Model Evaluation

The model performance was evaluated based on the coefficient of determination ($R^2$), Mean Squared Error (MSE), Mean Absolute Error (MAE), and Root Mean Squared Error (RMSE).

The coefficient of determination ($R^2$) indicates the extent to which variability in the data is explained, with values above 0.7 considered indicative of good performance [53].

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$$

$y_i$: the actual value, $\hat{y}_i$: the predicted value, $\bar{y}$: the mean of actual values, $n$: the number of observations.

Mean Squared Error (MSE) is calculated by averaging the squares of the differences between predicted and actual values, with smaller values indicating higher prediction accuracy. A value below 25 is considered to represent good performance [

57].

Mean Absolute Error (MAE) averages the absolute differences between predicted and actual values, directly showing the discrepancy between prediction and reality. Generally, a MAE less than 3 is interpreted as stable predictive performance [

73].

Root Mean Squared Error (RMSE) is the square root of MSE, providing a more intuitive representation of error magnitude. A value below 5 indicates excellent model performance [

65].

Based on these metrics, the predictive performance of the model was quantitatively analyzed, and its generalizability was objectively validated.
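The four metrics described in this subsection can be computed directly from their definitions, as in the following sketch. The function name and the sample values are hypothetical; scikit-learn offers equivalent functions such as r2_score, mean_squared_error, and mean_absolute_error.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return R^2, MSE, MAE, and RMSE for paired actual and predicted values."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total sum of squares
    mse = ss_res / n
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    return {
        "r2": 1 - ss_res / ss_tot,
        "mse": mse,
        "mae": mae,
        "rmse": math.sqrt(mse),
    }


# Hypothetical actual and predicted scores (illustrative only).
y_true = [10.0, 12.0, 14.0, 16.0]
y_pred = [11.0, 12.0, 13.0, 16.0]
metrics = regression_metrics(y_true, y_pred)
```

Note that MSE, MAE, and RMSE are expressed in the units of the target (squared units for MSE), so absolute thresholds for them are only meaningful relative to the scale of the scores being predicted.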

3.4.3. XGBoost-Based Prediction of AI Literacy and SHAP Analysis

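The study employed an XGBoost regression model to predict the Self-Perceived Performance of AI Literacy among teachers. This model leverages the Gradient Boosting technique, which enhances predictions by iteratively minimizing errors from previous predictions. Here is a simplified explanation:

The prediction update at iteration $t$ is calculated by adding an improvement $f_t(x_i)$ to the previous prediction:

$$\hat{y}_i^{(t)} = \hat{y}_i^{(t-1)} + f_t(x_i)$$

The final prediction $\hat{y}_i$ after $K$ iterations is the sum of all improvements:

$$\hat{y}_i = \sum_{k=1}^{K} f_k(x_i)$$

The study further dissects the learning principles by examining the objective function, which includes both the prediction error and a regularization term to control model complexity:

$$\mathcal{L} = \sum_{i=1}^{n} l(y_i, \hat{y}_i) + \sum_{k=1}^{K} \Omega(f_k)$$

where $l$ represents the loss function and $\Omega$ the regularization term. The optimization of the model involves approximating the loss function using its second-order Taylor expansion:

$$\mathcal{L}^{(t)} \approx \sum_{i=1}^{n} \left[ l\left(y_i, \hat{y}_i^{(t-1)}\right) + g_i f_t(x_i) + \frac{1}{2} h_i f_t^2(x_i) \right] + \Omega(f_t)$$

where $g_i$ and $h_i$ are the first and second derivatives of $l$ with respect to $\hat{y}_i^{(t-1)}$.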
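To make the additive update and the second-order step concrete, the sketch below boosts depth-one trees (stumps) on a single feature. It is a toy illustration of the principle, not XGBoost itself or the study’s model. It assumes the squared-error loss 0.5·(y − ŷ)², for which the first derivative is ŷ − y and the second derivative is 1, an L2 penalty lam on leaf weights, and a shrinkage factor eta. All names and data are hypothetical.

```python
def fit_stump(x, g, h, lam):
    """Pick the split threshold with the largest second-order gain.

    Each leaf weight is the Newton step w = -G / (H + lam), where G and H are
    the sums of first and second derivatives in the leaf. The parent term of
    the gain is the same for every candidate split, so it is omitted.
    """
    best = None
    values = sorted(set(x))
    g_total, h_total = sum(g), sum(h)
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        g_left = sum(gi for xi, gi in zip(x, g) if xi <= threshold)
        h_left = sum(hi for xi, hi in zip(x, h) if xi <= threshold)
        g_right, h_right = g_total - g_left, h_total - h_left
        gain = g_left ** 2 / (h_left + lam) + g_right ** 2 / (h_right + lam)
        if best is None or gain > best[0]:
            best = (gain, threshold,
                    -g_left / (h_left + lam), -g_right / (h_right + lam))
    return best[1:]


def boost(x, y, n_rounds=50, eta=0.3, lam=1.0):
    """Additive training: each round adds eta * f_t(x) to the running prediction."""
    pred = [0.0] * len(y)
    trees = []
    for _ in range(n_rounds):
        g = [p - t for p, t in zip(pred, y)]  # first derivative of 0.5 * (y - pred)^2
        h = [1.0] * len(y)                    # second derivative of the same loss
        threshold, w_left, w_right = fit_stump(x, g, h, lam)
        trees.append((threshold, w_left, w_right))
        pred = [p + eta * (w_left if xi <= threshold else w_right)
                for p, xi in zip(pred, x)]
    return trees, pred


def predict(trees, xi, eta=0.3):
    """Final prediction: the sum of the shrunken outputs of all trees."""
    return sum(eta * (w_left if xi <= threshold else w_right)
               for threshold, w_left, w_right in trees)


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical one-feature dataset with a step between x = 3 and x = 4.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]

initial_mse = mse(y, [0.0] * len(y))
trees, fitted = boost(x, y)
final_mse = mse(y, fitted)
```

Because every round takes a damped Newton step on the current residuals, the training error cannot increase from one round to the next; lam and eta only control how quickly it shrinks, which is how these hyperparameters limit overfitting.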

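To explain the predictions made by our model, we chose SHAP (SHapley Additive exPlanations), a powerful tool particularly suited for tree-based models like XGBoost. SHAP values offer a detailed breakdown of feature contributions, enhancing interpretability. The formula to compute the Shapley value for a feature $j$ is:

$$\phi_j = \sum_{S \subseteq F \setminus \{j\}} \frac{|S|!\,(|F| - |S| - 1)!}{|F|!} \left[ v(S \cup \{j\}) - v(S) \right]$$

where $F$ is the set of all features, $S$ is a subset of features that excludes $j$, and $v(S)$ is the model’s prediction when only the features in $S$ are known.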

Although various explainability techniques, such as LIME and PDP, are available, SHAP analysis was adopted because it is particularly well-suited to tree-based ensemble models like XGBoost. SHAP can efficiently compute feature contributions by leveraging the model structure, enabling both global and local interpretation without approximation errors [64,74,75].
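The Shapley value of a feature is its marginal contribution averaged over all subsets of the remaining features, weighted by |S|!(|F| − |S| − 1)!/|F|!. The brute-force sketch below evaluates this definition for a toy value function. The feature names and the value function are hypothetical, and this enumeration is not the tree-specific algorithm that SHAP uses for models like XGBoost, which avoids iterating over every subset.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, features):
    """Exact Shapley values by enumerating every subset of the other features."""
    n = len(features)
    phi = {}
    for j in features:
        others = [f for f in features if f != j]
        total = 0.0
        for size in range(n):
            # Weight |S|! (|F| - |S| - 1)! / |F|! for subsets of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value_fn(s | {j}) - value_fn(s))
        phi[j] = total
    return phi


def toy_value(subset):
    """Hypothetical prediction when only the features in `subset` are known."""
    value = 0.0
    if "knowledge" in subset:
        value += 2.0
    if "skills" in subset:
        value += 1.0
    if "knowledge" in subset and "skills" in subset:
        value += 1.0  # interaction effect shared between the two features
    return value


features = ["knowledge", "skills", "attitude"]
phi = shapley_values(toy_value, features)
```

In this toy case the interaction term is split evenly between the two interacting features, the unused feature receives zero, and the contributions sum to the difference between the full prediction and the empty-set baseline.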

After model training, SHAP analysis was conducted to assess feature importance, utilizing Shapley Values to quantitatively evaluate each feature’s contribution and enable interpretation that considers interactions between features.

The study also utilized a SHAP Summary Plot to compare the relative impacts of major features across the dataset and created a Feature Importance Plot to analyze the contributions of features learned by the XGBoost model.

4. Results

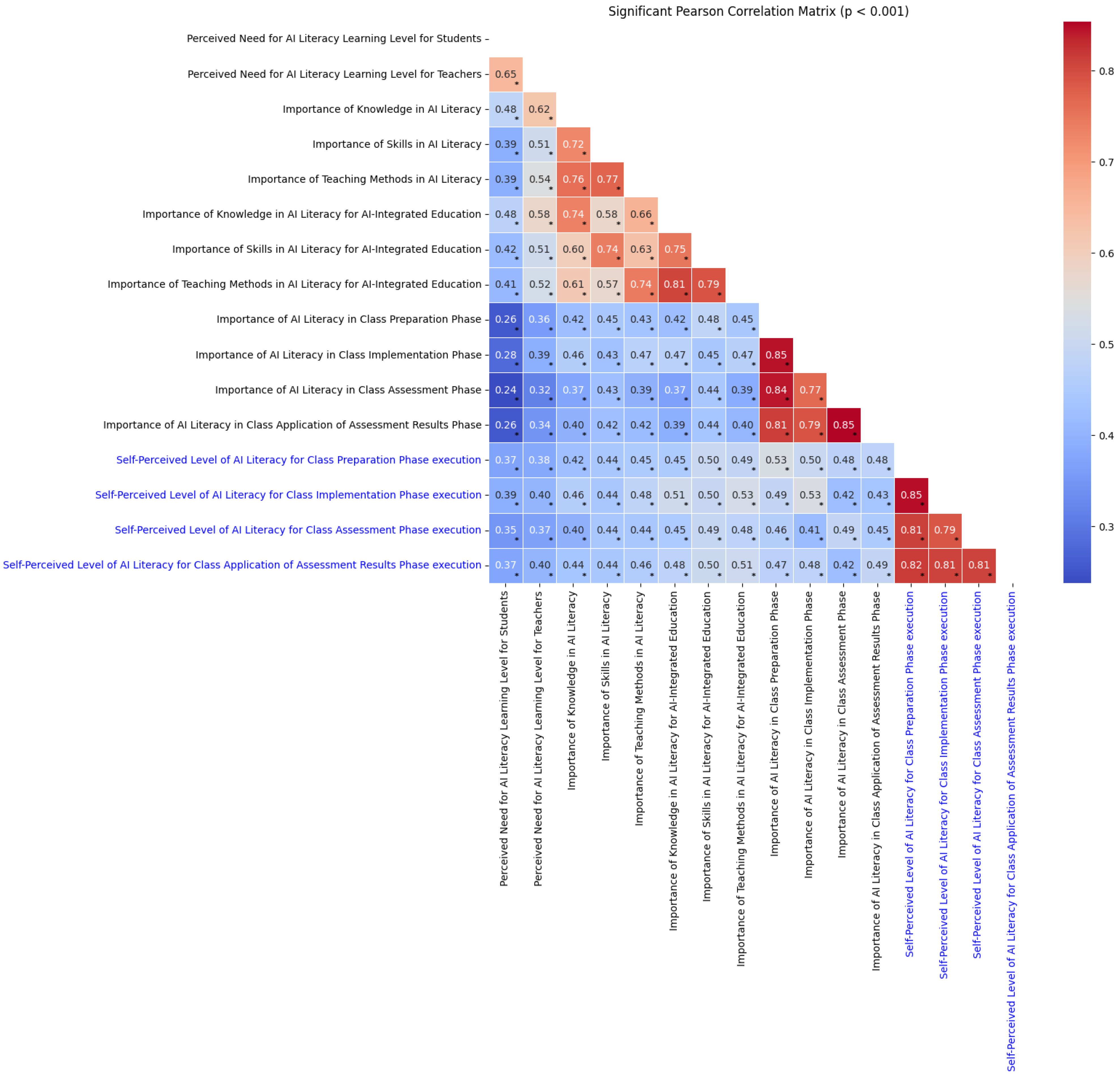

This study aimed to construct a model that explains the Self-Perceived Level of AI Literacy for Class Preparation Phase execution and to identify key features that influence the model. Pearson Correlation Analysis was employed to examine the relationships among 12 features and four targets used in the regression model and their impact (Figure 3).

The analysis of relationships among features showed that the correlation between the Importance of AI Literacy in the Class Preparation Phase and the Importance of AI Literacy in the Class Implementation Phase was the highest, with an r-value of 0.85, indicating a statistically significant correlation at the 0.001 level. This suggests that teachers who perceive a high Importance of AI Literacy in the Class Preparation Phase also perceive a high Importance of AI Literacy in the Class Implementation Phase. Following this, the relationship between the Importance of AI Literacy in the Class Preparation Phase and the Importance of AI Literacy in the Class Assessment Phase had an r-value of 0.84, and between the Importance of AI Literacy in the Class Implementation Phase and the Importance of AI Literacy in the Class Assessment Phase, the r-value was 0.79, both statistically significant.

In the correlation analysis among AI Literacy-related features, the relationship between the Importance of Knowledge in AI Literacy and the Importance of Skills in AI Literacy showed the highest coefficient with an r-value of 0.76, significant at the 0.001 level. Thus, teachers who recognize the high Importance of Knowledge in AI Literacy also perceive a high Importance of Skills in AI Literacy, and vice versa.

The correlation analysis among targets revealed that the correlation between the Self-Perceived Level of AI Literacy for Class Preparation Phase execution and the Self-Perceived Level of AI Literacy for Class Implementation Phase execution was the highest, with an r-value of 0.85 and statistical significance at the 0.001 level. This indicates that teachers with a high self-perceived level of AI Literacy in the preparation phase also tend to report a high self-perceived level in the implementation phase. The relationship between the Self-Perceived Level of AI Literacy for Class Assessment Phase execution and the Self-Perceived Level of AI Literacy for Class Application of Assessment Results Phase execution also showed a high correlation (r = 0.81).
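As a minimal sketch of the computation behind these coefficients, the following code calculates Pearson's r and the t statistic used to test it against zero. The response values are hypothetical Likert-style scores, not data from the study, and the names `pearson_r` and `t_statistic` are chosen here for illustration.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("x and y must have the same length (at least 3)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Hypothetical responses from five teachers (not study data).
prep_importance = [1, 2, 3, 4, 5]
impl_importance = [2, 4, 5, 4, 5]

r = pearson_r(prep_importance, impl_importance)
t = t_statistic(r, len(prep_importance))
print(f"r = {r:.4f}, t = {t:.4f}")
```

The t statistic is compared against a t distribution with n - 2 degrees of freedom to obtain the significance level. With a large sample, coefficients as high as those reported above fall well beyond the 0.001 threshold.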

4.1. Evaluation of Predictive Performance

To optimize the performance of the XGBoost regression model, Bayesian Optimization was applied to identify the most effective hyperparameter combination within the search space (see Section 4.1.1), after which the model was trained and its performance evaluated (see Section 4.1.2).

4.1.1. Hyperparameter Optimization Using Bayesian Optimization

The optimized hyperparameter settings and their corresponding R² values are summarized in Table 3.

During iteration 6, the R² value was the highest at 0.7422, with the max_depth set at 9.961 and the learning rate at 0.1349. In iteration 9, the max_depth was set at 9.859 and the learning rate at 0.1908, achieving an R² value of 0.74. For iteration 7, the max_depth was 9.562, the learning rate was 0.1003, and the R² value was 0.7356.

In iteration 18, the max_depth was significantly lower at 3.174, resulting in a decreased R² value of 0.5116. Iteration 17 featured a max_depth of 9.8 and a learning rate of 0.05436, with an R² value of 0.7289. In iteration 19, the max_depth was set at 5.263 and the learning rate at 0.1361, yielding an R² value of 0.6658.

The colsample_bytree values were set within a range of 0.5 to 0.9, while the subsample was optimized within a range of 0.7 to 0.9. The n_estimators values ranged from 140 to 320, and it was observed that using more than 200 trees tended to maintain an R² value above 0.74.

The derived hyperparameter values are presented in

Table 4.

To regulate the learning speed and convergence, the learning rate was set at 0.1349, and the max_depth was adjusted to 9. Additionally, the subsample value was set at 0.8033 and the colsample_bytree at 0.5895, so that each tree was built from roughly 80% of the training rows and 59% of the features rather than from the full training data.
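Bayesian Optimization searches a continuous space, so integer-valued hyperparameters such as max_depth have to be converted before the model is trained. The sketch below shows one way this could be done. It assumes truncation rather than rounding, because that matches the step from 9.961 during the search to 9 in the final settings; it also assumes that the subsample and colsample_bytree values in Table 4 belong to the same iteration. The helper name `to_model_params` is hypothetical.

```python
# Hyperparameters that XGBoost expects as integers.
INTEGER_PARAMS = {"max_depth", "n_estimators"}


def to_model_params(suggested):
    """Convert continuous optimizer suggestions into usable model parameters.

    Integer-valued parameters are truncated (assumption: truncation, not
    rounding, because 9.961 becomes 9 in the text); others pass through.
    """
    params = {}
    for name, value in suggested.items():
        params[name] = int(value) if name in INTEGER_PARAMS else float(value)
    return params


# Values reported in the text; pairing them in one iteration is an assumption.
best_suggestion = {
    "max_depth": 9.961,
    "learning_rate": 0.1349,
    "subsample": 0.8033,
    "colsample_bytree": 0.5895,
}

final_params = to_model_params(best_suggestion)
print(final_params)
```

Rounding would instead give a max_depth of 10, so the choice of conversion rule can change the trained model and is worth stating explicitly.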

Analysis of how model performance changed with the hyperparameter settings indicated that tuning improved the predictive performance of the XGBoost model. The R² value remained stable when the max_depth was set to 9 or higher, and adjusting the subsample and colsample_bytree values allowed the model to learn from varied subsets of rows and features. These results suggest that the optimized hyperparameters contributed to the improvement in the model’s performance.

4.1.2. Model Performance Evaluation with Optimized Hyperparameters

The optimized hyperparameters were applied to train and evaluate the model across four targets. The predictive performance of the model was assessed using the coefficient of determination (R², the proportion of variance explained), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and Mean Absolute Error (MAE), with the results detailed as follows [Table 5].
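For clarity, the standard definitions of these four metrics are given below. The symbols are introduced here for illustration: $y_i$ is the observed value for teacher $i$, $\hat{y}_i$ is the model's prediction, $\bar{y}$ is the mean of the observed values, and $n$ is the number of observations.

$$
R^2 = 1 - \frac{\sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n} \left(y_i - \bar{y}\right)^2},
\qquad
\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2,
\qquad
\mathrm{RMSE} = \sqrt{\mathrm{MSE}},
\qquad
\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|
$$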

In the Class Preparation Phase, the R² score was 0.8206, indicating that the XGBoost model explained 82.06% of the variance in teachers’ Self-Perceived Level of AI Literacy for this phase. Additionally, the prediction errors were relatively low, with an MSE of 0.1235, RMSE of 0.3514, and MAE of 0.1430.

In the Class Implementation Phase, the R² score was 0.8007, meaning that the model explained 80.07% of the variance in the target. The MSE was recorded at 0.1337, the RMSE at 0.3656, and the MAE at 0.1372, showing stable prediction performance.

In the Class Assessment Phase, the R² score was 0.8066, with MSE at 0.1534, RMSE at 0.3917, and MAE at 0.1561, demonstrating the model’s stable predictive performance.

In the final phase, the Class Application of Assessment Results Phase, the R² score was 0.7746, with MSE at 0.1616, RMSE at 0.4019, and MAE at 0.1581. This indicates that the model’s predictive performance was consistently maintained.

Overall, the XGBoost model recorded R² values above 0.7 for all targets, and error indicators (MSE, RMSE, MAE) were maintained within a consistent range. Based on these performance evaluation results, the model’s predictive performance can be considered stable across the four phases.
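The following sketch shows how the four metrics can be computed and uses the reported figures for a simple consistency check, namely that each RMSE equals the square root of the corresponding MSE up to rounding. The toy observations and predictions are hypothetical; only the (MSE, RMSE) pairs come from the text above.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return R^2, MSE, RMSE, and MAE for paired observations and predictions."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    mean_y = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when y_true is constant")
    mse = ss_res / n
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "mse": mse,
        "rmse": math.sqrt(mse),
        "mae": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n,
    }


# Hypothetical observed and predicted scores (not study data).
toy = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.5, 2.0, 2.5, 4.0])

# (MSE, RMSE) pairs reported in the text for the four phases.
reported = {
    "preparation": (0.1235, 0.3514),
    "implementation": (0.1337, 0.3656),
    "assessment": (0.1534, 0.3917),
    "application_of_results": (0.1616, 0.4019),
}

# RMSE should equal sqrt(MSE) up to rounding of the published figures.
consistent = {
    phase: abs(math.sqrt(mse) - rmse) < 5e-4
    for phase, (mse, rmse) in reported.items()
}
print(toy)
print(consistent)
```

Because RMSE weights large residuals more heavily than MAE, the gap between the reported RMSE values (about 0.35 to 0.40) and MAE values (about 0.14 to 0.16) is consistent with a small number of comparatively large prediction errors.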

4.2. Feature Importance Analysis Using XGBoost and Comparative Review with SHAP

The main features affecting the Self-Perceived Level of AI Literacy are reported below for each phase of execution: the Class Preparation Phase (4.2.1), Class Implementation Phase (4.2.2), Class Assessment Phase (4.2.3), and Class Application of Assessment Results Phase (4.2.4).

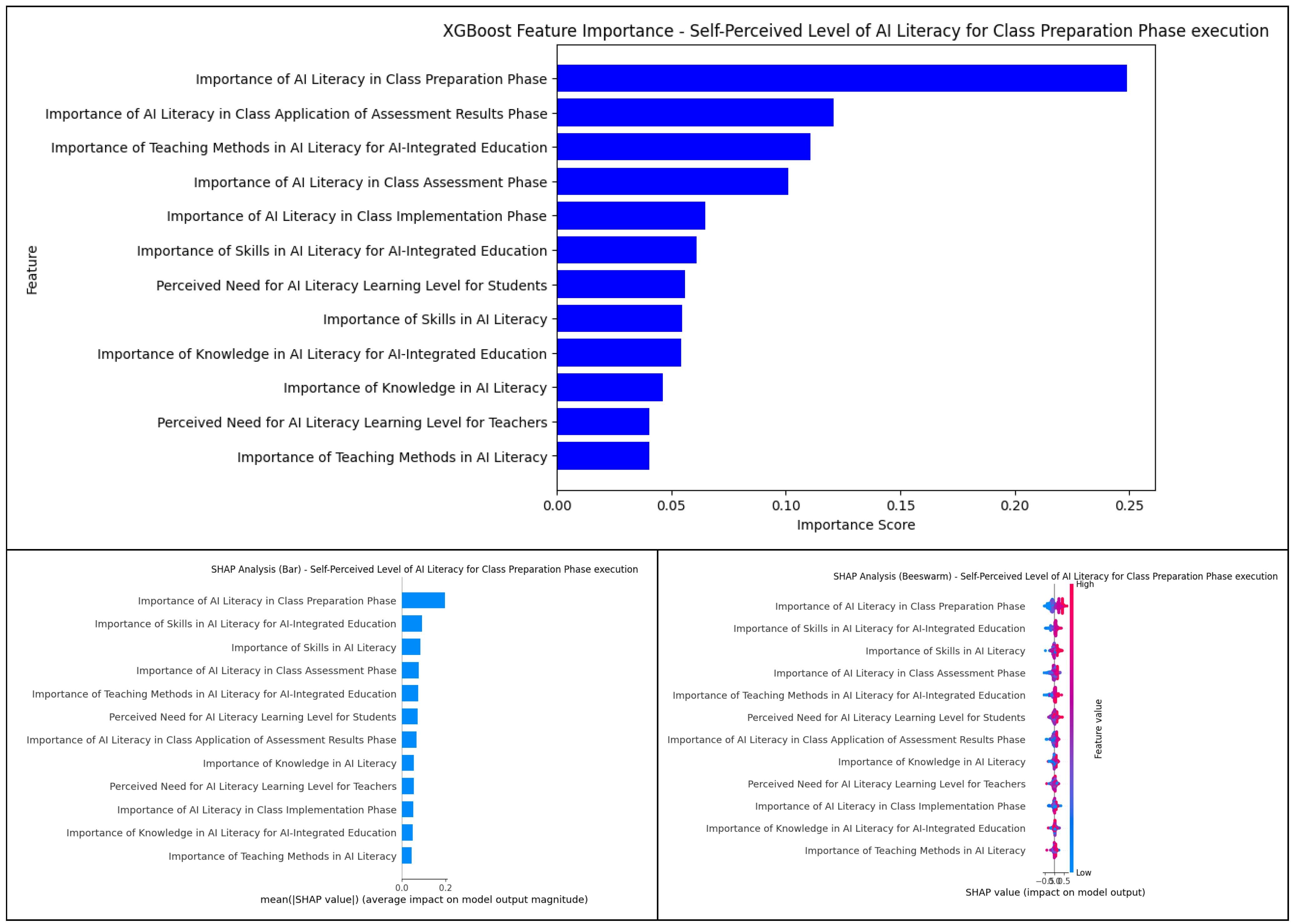

4.2.1. Feature Importance Analysis for AI Literacy in the Class Preparation Phase

Feature Importance Analysis for AI Literacy in the Class Preparation Phase is detailed in [Table 6, Figure 4].

The regression analysis with XGBoost recorded the highest Feature Importance value for the “Importance of AI Literacy in Class Preparation Phase” feature (0.2488). This was followed by “Importance of AI Literacy in Class Utilizing Assessment Results Phase” (0.1208), “Importance of Teaching Methods in AI Literacy for AI-Integrated Education” (0.1109), “Importance of AI Literacy in Class Assessment Phase” (0.1009), and “Importance of AI Literacy in Class Implementation Phase” (0.0650). Comparative analysis with SHAP indicated that some features, such as “Importance of AI Literacy in Class Utilizing Assessment Results Phase” (XGBoost: 0.1208, SHAP: 0.0679) and “Importance of AI Literacy in Class Implementation Phase” (XGBoost: 0.0650, SHAP: 0.0521), showed lower mean absolute values in SHAP than their XGBoost counterparts. Conversely, features like “Importance of Skills in AI Literacy for AI-Integrated Education” (XGBoost: 0.0610, SHAP: 0.0918) and “Importance of Skills in AI Literacy” (XGBoost: 0.0547, SHAP: 0.0837) displayed higher mean absolute values in SHAP analysis, suggesting that the two methods differ in how they attribute feature contributions.
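The comparison described above can be tabulated with a few lines of code. The sketch below uses only the four value pairs reported in this paragraph; the function name `compare` is chosen for illustration. XGBoost's built-in importance is typically normalized to sum to 1 across features, whereas mean absolute SHAP values are expressed in the units of the prediction, so the raw differences should be read as a rough indication of direction rather than as an exact measure.

```python
# (XGBoost importance, mean |SHAP|) pairs reported in the text for the
# Class Preparation Phase model.
reported = {
    "Importance of AI Literacy in Class Utilizing Assessment Results Phase": (0.1208, 0.0679),
    "Importance of AI Literacy in Class Implementation Phase": (0.0650, 0.0521),
    "Importance of Skills in AI Literacy for AI-Integrated Education": (0.0610, 0.0918),
    "Importance of Skills in AI Literacy": (0.0547, 0.0837),
}


def compare(pairs):
    """Return (feature, difference, direction) rows sorted by difference."""
    rows = []
    for feature, (xgb_value, shap_value) in pairs.items():
        diff = shap_value - xgb_value
        direction = "higher in SHAP" if diff > 0 else "lower in SHAP"
        rows.append((feature, round(diff, 4), direction))
    return sorted(rows, key=lambda row: row[1])


rows = compare(reported)
for feature, diff, direction in rows:
    print(f"{diff:+.4f}  {direction:<15}  {feature}")
```

Comparing ranks or shares of the total, rather than raw values, is a common alternative when the two measures are on different scales.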

The SHAP findings provide further insight into these discrepancies by elucidating why certain variables carry higher or lower importance. Features with substantially lower SHAP influence relative to their XGBoost importance (for example, the perceived importance of AI Literacy in the results-utilization phase) likely have more context-dependent effects, contributing less uniformly across all teachers. In contrast, features that exhibited higher SHAP values than expected from the XGBoost ranking (such as teachers’ emphasis on AI Literacy skills) seem to exert their influence mainly under particular conditions, which the tree-based metric may understate. Additionally, SHAP dependence plots highlight interaction effects between key factors. For instance, the positive impact of a teacher’s AI Literacy skills on predicted class preparation performance is markedly amplified when that teacher also assigns a high importance to integrating AI in the preparation phase; this synergy appears in SHAP plots as significantly larger SHAP values for the skills feature when both features are high. Similarly, teachers who rate both their own and their students’ need for AI Literacy learning as high tend to show amplified SHAP contributions for these need-related features, implying that a broad awareness of AI Literacy needs (encompassing both oneself and one’s students) can reinforce the influence of other variables on preparation-phase performance. These interaction patterns underscore that a teacher’s commitment to AI integration and recognition of learning needs work synergistically with their skillset, ultimately shaping their effectiveness in the Class Preparation Phase.
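The kind of interaction described here can be illustrated with a toy example. The sketch below computes exact Shapley values by enumerating every feature coalition, which is feasible only for a handful of features; it is not the tree-based algorithm the SHAP library applies to XGBoost models. The model `toy_model`, its coefficients, and the zero baseline are hypothetical, and replacing absent features with a single baseline value is a simplification of how SHAP averages over background data.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one instance by enumerating all coalitions.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2^n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(features):
    """Hypothetical score: skills matter more when phase importance is high."""
    skills, phase_importance = features
    return 0.2 * skills + 0.1 * skills * phase_importance


baseline = [0.0, 0.0]
low = shapley_values(toy_model, [5.0, 1.0], baseline)
high = shapley_values(toy_model, [5.0, 5.0], baseline)
print("skills contribution, low phase importance :", round(low[0], 3))
print("skills contribution, high phase importance:", round(high[0], 3))
```

The skills value is identical in both instances, yet its contribution grows when the second feature is high, because the interaction term is shared between the two features. A dependence plot shows this pattern as a vertical spread of SHAP values at the same feature value.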

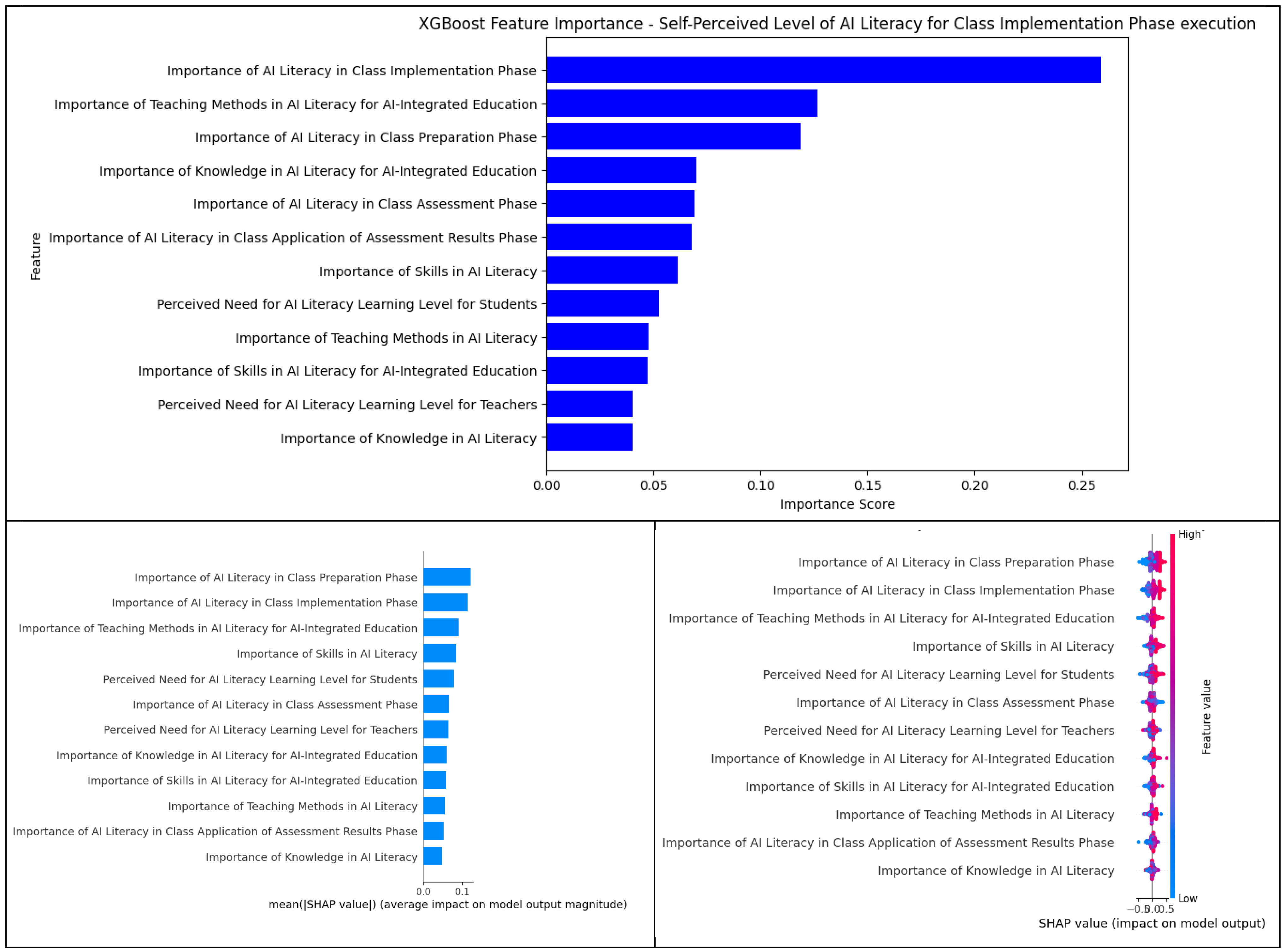

4.2.2. Feature Importance Analysis for AI Literacy in Class Implementation Phase

Feature Importance Analysis for AI Literacy in the Class Implementation Phase is presented in [Table 7, Figure 5].

In this analysis, “Importance of AI Literacy in Class Implementation Phase” recorded the highest Feature Importance value (0.2588). This was followed by “Importance of Teaching Methods in AI Literacy for AI-Integrated Education” (0.1266), “Importance of AI Literacy in Class Preparation Phase” (0.1185), “Importance of Knowledge in AI Literacy for AI-Integrated Education” (0.0699), and “Importance of AI Literacy in Class Assessment Phase” (0.0691). Comparative analysis with SHAP revealed that features like “Importance of AI Literacy in Class Application of Assessment Results Phase” (XGBoost: 0.0679, SHAP: 0.0527) and “Importance of Teaching Methods in AI Literacy for AI-Integrated Education” (XGBoost: 0.1266, SHAP: 0.0905) had lower mean absolute values in SHAP, whereas “Importance of Skills in AI Literacy” (XGBoost: 0.0611, SHAP: 0.0851) and “Perceived Need for AI Literacy Learning Level for Students” (XGBoost: 0.0526, SHAP: 0.0780) showed higher mean absolute values, highlighting significant variations in the valuation of these features between the two analyses.

Expanding on these results, the SHAP analysis clarifies why certain features diverged in importance. For example, the “Importance of Teaching Methods in AI Literacy for AI-Integrated Education” was a prominent predictor in XGBoost but exhibited a much smaller average impact in SHAP, suggesting that its influence on class implementation outcomes is contingent on other factors (possibly overlapping with teachers’ knowledge or phase-specific priorities). Conversely, features such as teachers’ emphasis on AI Literacy skills and their perception of students’ AI Literacy needs, while ranked lower by XGBoost, showed elevated SHAP contributions. This indicates that when a teacher strongly prioritizes practical AI skills or perceives a high student need for AI literacy, it leads to substantial changes in their implementation-phase performance—an effect that the global XGBoost metric may under-represent. SHAP dependence plots further reveal that these factors do not operate in isolation but interact. Notably, the positive effect of a teacher’s belief in the importance of AI Literacy during the assessment phase (a top XGBoost feature for class implementation) is significantly amplified if the teacher also possesses strong AI Literacy skills. In the SHAP dependence plot, teachers who highly value AI in assessment and simultaneously report high skill levels attain much larger SHAP values for these features, implying that technical competencies enable them to act on their beliefs during class implementation. Another interaction is observed between pedagogical orientation and perceived needs: the contribution of adopting AI-integrated teaching methods to implementation success is more pronounced for teachers who also recognize a substantial need for AI Literacy among their students. 
In practice, educators who tailor their teaching methods for AI and are keenly aware of their students’ AI Literacy requirements tend to leverage AI more effectively in the classroom (reflected by higher combined SHAP effects). These interactions suggest that to excel in the Class Implementation Phase, teachers benefit from a combination of strong technical skills, adaptive teaching strategies, and a clear perception of AI Literacy needs.

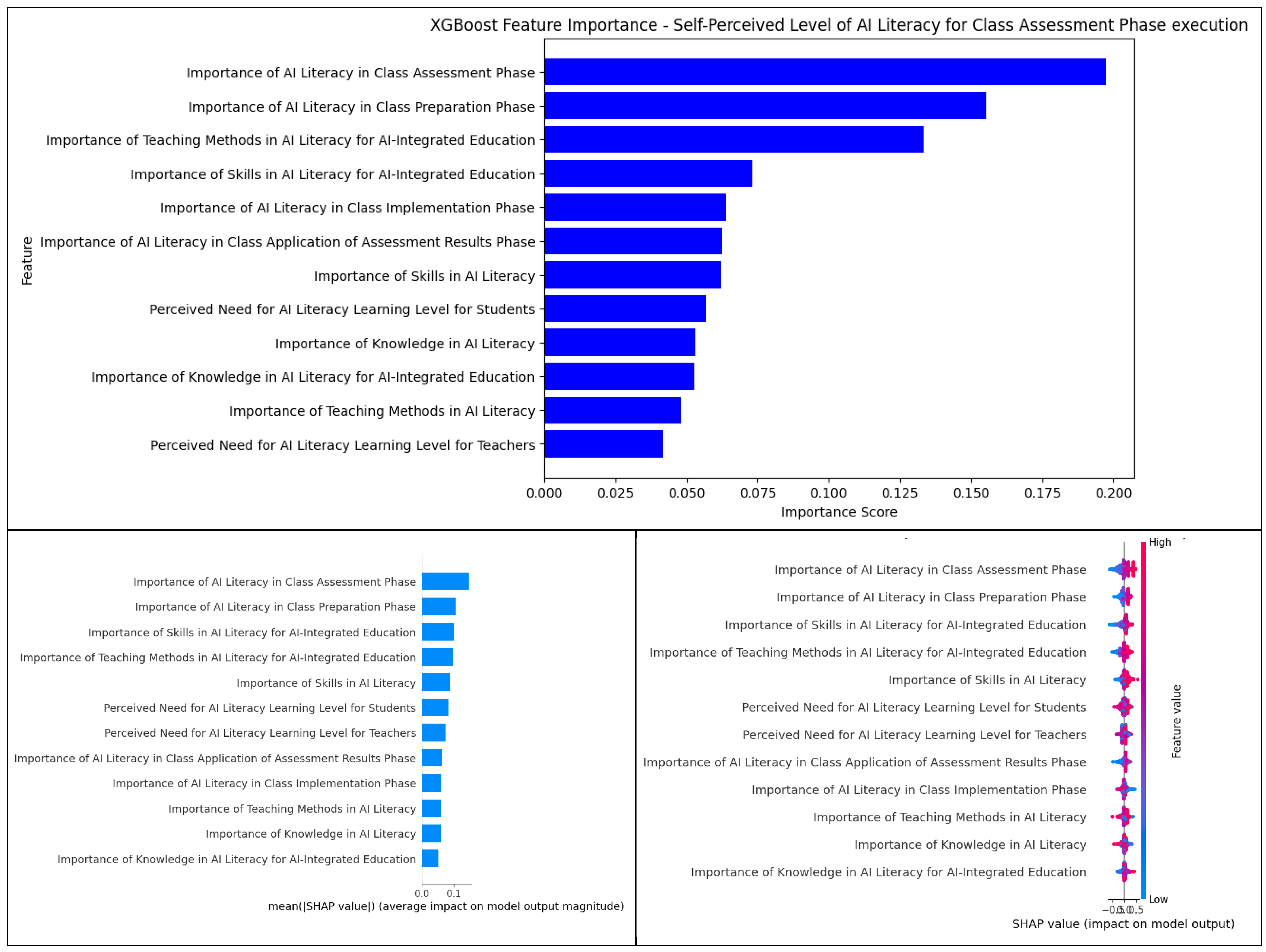

4.2.3. Feature Importance Analysis for AI Literacy in Class Assessment Phase

The Feature Importance Analysis for AI Literacy in the Class Assessment Phase is detailed in [Table 8, Figure 6]. In the regression analysis with XGBoost, the feature “Importance of AI Literacy in Class Assessment Phase” recorded the highest Feature Importance value (0.1973). Subsequent features include “Importance of AI Literacy in Class Preparation Phase” (0.1553), “Importance of Teaching Methods in AI Literacy for AI-Integrated Education” (0.1333), “Importance of Skills in AI Literacy for AI-Integrated Education” (0.0733), and “Importance of AI Literacy in Class Implementation Phase” (0.0638).

Comparative analysis with SHAP showed that the two measures diverged for several features. The feature “Importance of AI Literacy in Class Application of Assessment Results Phase” is described as lower in SHAP, although the reported values are nearly identical (XGBoost: 0.0624, SHAP: 0.0630). Conversely, features such as “Importance of Skills in AI Literacy for AI-Integrated Education” (XGBoost: 0.0733, SHAP: 0.1005), “Perceived Need for AI Literacy Learning Level for Students” (XGBoost: 0.0569, SHAP: 0.0841), and “Perceived Need for AI Literacy Learning Level for Teachers” (XGBoost: 0.0416, SHAP: 0.0745) displayed higher mean absolute values in SHAP analysis, indicating that the two methods differ in how they attribute feature contributions.

The deeper examination of SHAP values helps explain why these discrepancies occur and illuminates interaction effects among features. In this phase, factors like teachers’ technical skill orientation and perceived AI literacy needs turned out to have a greater influence than their XGBoost ranks suggested. For instance, the importance of AI literacy skills (for AI-integrated education) and the perceived need for AI literacy training (for both students and teachers) show higher SHAP impact, implying that teachers who focus on practical AI skills or who are acutely aware of the need for AI literacy tend to perform differently in assessment tasks. These features likely drive significant outcome differences for certain subsets of teachers (e.g., those with very high or very low emphasis on skills and needs), which may be why SHAP attributes them more significance than the averaged XGBoost importance. In contrast, the value a teacher places on using AI in the results-utilization phase—while recognized by XGBoost—has a less uniform effect (lower average SHAP value), perhaps because its influence is only realized in conjunction with other competencies. SHAP dependence plots indeed indicate that the impact of perceived AI literacy needs can be interdependent: the contribution of a high perceived student AI literacy need to the class assessment outcome is amplified when the teacher also perceives a high need for their own AI literacy development. This suggests that a teacher who is broadly cognizant of AI literacy gaps (in both their students and themselves) may approach assessment tasks with either heightened diligence or caution, affecting performance accordingly. Moreover, an interaction between technical and pedagogical factors is evident: the benefit of strong AI literacy skills on assessment-phase performance is most pronounced when coupled with an emphasis on AI-integrated teaching methods. 
Teachers who pair a high level of AI technical skill with robust AI-driven pedagogical strategies achieve the greatest improvements in applying AI during assessments (reflected in higher SHAP values when both attributes are high), whereas focusing on one without the other yields more limited gains. These insights reinforce that successful AI integration in the Class Assessment Phase hinges on a combination of technical proficiency, awareness of needs, and pedagogical adaptability.

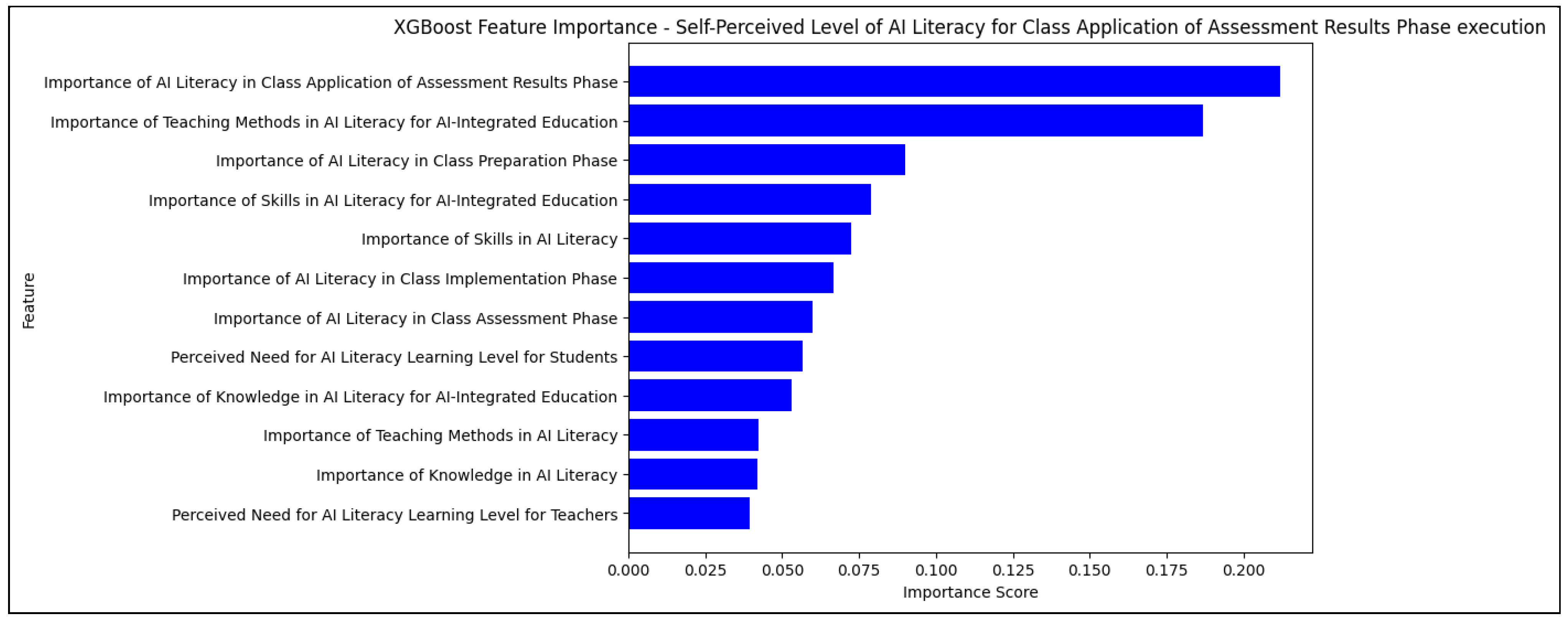

4.2.4. Feature Importance Analysis for AI Literacy for Class Application of Assessment Results Phase

The Feature Importance Analysis for the Class Application of Assessment Results Phase is as follows [see Table 9, Figure 7].

The regression analysis with XGBoost indicates that the feature “Importance of AI Literacy in Class Application of Assessment Results Phase” recorded the highest Feature Importance value (0.2117). This was followed by “Importance of Teaching Methods in AI Literacy for AI-Integrated Education” (0.1868), “Importance of AI Literacy in Class Preparation Phase” (0.0899), “Importance of Skills in AI Literacy for AI-Integrated Education” (0.0788), and “Importance of Skills in AI Literacy” (0.0724).

In comparison with SHAP analysis, certain features, such as “Importance of AI Literacy in Class Assessment Phase”, displayed lower mean absolute values in SHAP (XGBoost: 0.0600, SHAP: 0.0462). Conversely, features like “Importance of Skills in AI Literacy for AI-Integrated Education” (XGBoost: 0.0788, SHAP: 0.0959), “Importance of Skills in AI Literacy” (XGBoost: 0.0724, SHAP: 0.0972), and “Perceived Need for AI Literacy Learning Level for Teachers” (XGBoost: 0.0395, SHAP: 0.0612) showed higher mean absolute values in SHAP analysis, suggesting that the two methods differ in how they attribute feature contributions.

By delving into the SHAP outcomes, we can interpret why some variables assume greater or lesser importance in this phase and identify how they interact. The notably lower SHAP contributions for the top XGBoost features (e.g., the importance placed on AI Literacy in the results phase and on AI-integrated teaching methods) imply that these factors, while critical, do not uniformly translate into performance gains unless certain underlying conditions are met. In other words, a teacher’s stated priority on using AI in applying assessment results or on innovative teaching methods must be backed by other capacities to fully impact their practice. Supporting this, several foundational competencies and perspectives—such as AI Literacy skills and the teacher’s self-identified need for AI learning—exhibited higher SHAP influence than their XGBoost rankings would suggest. This highlights that a teacher’s ability to effectively utilize AI when applying assessment results is strongly driven by their actual skill level and awareness of their own learning needs, which can outweigh the influence of simply valuing AI use in that phase. Furthermore, SHAP dependence plots point to key interactions: the benefit of valuing AI in the results-application phase is significantly amplified for teachers with high technical proficiency. Teachers who both consider AI important for using assessment results and possess strong AI Literacy skills achieve much greater predicted performance in this phase (manifested as higher SHAP values for the combination of these features), whereas those lacking in skills gain little from merely holding that belief. Another interaction is observed between pedagogical approach and self-reflection: the positive effect of emphasizing AI-integrated teaching methods on utilizing assessment results is stronger when a teacher also recognizes a high personal need for AI Literacy development. 
This suggests that educators who are both methodologically innovative and attuned to improving their own AI capabilities can more effectively translate student assessment data into actionable insights using AI. In sum, the SHAP analysis for the results-utilization phase reveals that successful integration of AI in post-assessment activities depends not only on acknowledging the importance of AI and adopting new methods but also on having the requisite skills and a growth-oriented mindset to act on those priorities.

5. Discussion

This study organizes the discussion around four key aspects necessary for cultivating teachers’ AI Literacy: comprehensive enhancement of knowledge, skills, teaching methods, and value judgments; differentiated support across teaching phases; integration of educational decision-making and ethical judgments; and concrete strategy support based on a data support system.

Firstly, the cultivation of AI Literacy in teachers should not be limited to knowledge and skills alone but should also encompass teaching methods, values, and attitudes. AI Literacy education must focus on equipping teachers with sufficient knowledge about AI and its practical applications and, importantly, on developing teaching methods that effectively integrate AI within educational contexts. This study evaluated how teachers perceive and perform AI Literacy, revealing a significant correlation (r = 0.76) between the Importance of Knowledge in AI Literacy and the Importance of Skills in AI Literacy. This suggests that teachers who value theoretical knowledge also appreciate the importance of practical skills [60]. Therefore, AI Literacy education should support teachers in incorporating AI ethically and appropriately into education, which requires training that includes practical exercises and case studies to apply these concepts in real classroom settings [60].

Secondly, to support the effective use of AI Literacy, differentiated support and integration tailored to each teaching phase are necessary. The performance analysis of the XGBoost model applied in this study showed that the application of AI Literacy varies across teaching phases, with particularly high predictive performance noted in the Class Preparation Phase and Class Implementation Phase (R² = 0.8206, R² = 0.8153), suggesting that AI Literacy manifests clearly and specifically in these phases [76]. Conversely, the Class Application of Assessment Results Phase showed a lower R² value (0.7746) and higher error metrics (MSE = 0.1616, RMSE = 0.4019, MAE = 0.1581), indicating the inclusion of more complex elements in this phase. Thus, teacher training should provide practical training to utilize AI technologies during the preparation and implementation phases while offering additional courses that develop skills in data interpretation, ethical considerations, and educational decision-making during the assessment and results utilization phases [77].

Thirdly, the assessment and results utilization phase should support AI Literacy that includes educational decision-making and ethical judgments. Analysis of self-assessed AI Literacy across teaching phases revealed high levels of self-assessed AI Literacy in the Class Preparation Phase and Class Implementation Phase (R² = 0.85, 0.83), with both XGBoost and SHAP analysis confirming the significant role of AI Literacy in these phases (R² = 0.82, SHAP value = 0.25). This indicates that teachers recognize the importance of AI Literacy and are effectively using it in the preparation and implementation of lessons. However, the roles of AI Literacy extend beyond mere technical application in the Class Assessment Phase and Utilizing Assessment Results Phase. While XGBoost rated the necessity of AI Literacy highly in these phases (R² = 0.76), the SHAP analysis showed comparatively lower influence (SHAP value = 0.15), suggesting that these phases involve a complex process that goes beyond simple technical analysis and includes providing individualized feedback to students and making educational decisions. Teachers must understand and clearly explain the processes involved in deriving AI evaluation results, ensure algorithm transparency and data accuracy, and prioritize student privacy. Moreover, teachers must use AI technology to assess student achievement and apply results in a way that considers the overall learning context and individual characteristics of students, making ethical decisions accordingly. Ultimately, teachers play a crucial role in educating students to critically assess and effectively utilize AI technology, ensuring that the use of AI in evaluation and results application phases is both educational and ethical [78].

Fourthly, to systematically strengthen AI Literacy education, a data support system based on a methodologically robust research approach should be established. AI Literacy research needs to comprehensively analyze teachers’ perceptions of AI Literacy and their self-assessed AI Literacy competencies in the teaching process using predictive models and explainable AI techniques. This study utilized XGBoost and SHAP analysis to quantitatively assess the impact of AI Literacy-related characteristics on target variables, providing intuitive interpretations of the predictions. It empirically analyzed how AI Literacy manifests in the design and execution of lessons by defining features and targets clearly, constructing four independent models, and comparing the results across teaching phases. Based on these results, the study derived practical implications for educational policy and teacher training programs, providing a foundation for policy support that enables teachers to genuinely cultivate AI Literacy [79].

Existing studies on AI Literacy have primarily focused on conceptual discussions and the development of teacher training programs [15,16]. However, few empirical studies have quantitatively analyzed how teachers’ perceptions and self-assessed AI Literacy competencies manifest in actual teaching processes using machine learning techniques. By applying XGBoost and SHAP analysis, this study addresses this gap and offers a novel methodological approach to understanding teachers’ AI Literacy performance.

The results of this study confirm that teachers’ cognitive awareness and skill recognition regarding AI Literacy significantly influence their AI Literacy performance across different teaching phases. This finding aligns with the results of Johnson (2021) [80] and Miller and Brown (2020) [81], who emphasized the importance of both knowledge and practical skills in AI Literacy. However, this study extends prior research by providing empirical evidence of how these factors interact and differ across teaching phases, thus offering a more nuanced understanding of the dynamics of AI literacy.

From a practical perspective, this study provides actionable implications for teacher training and educational policy development. By clarifying which factors have a significant impact on teachers’ AI Literacy performance at each instructional phase, the study offers guidance on where targeted support and training would be most effective. This allows for the design of differentiated training programs that can enhance teachers’ AI Literacy performance efficiently, especially addressing gaps in teachers’ self-assessment and confidence.

Overall, this study fills an important research gap by providing empirical evidence on the manifestation of AI Literacy across the teaching process and by offering practical recommendations to strengthen teachers’ AI Literacy in educational settings. Reflecting the geographical and educational diversity of Korea, this study selected the C area as the target region, encompassing both metropolitan and rural educational environments. This choice is significant as the C area structurally mirrors the diverse geographical and educational characteristics of Korea, thus enhancing the representativeness and applicability of the findings to the broader Korean educational context. A census survey was conducted among all primary and secondary school teachers in the C area, using an online questionnaire to gauge AI Literacy. The structural alignment between the C area and the national educational landscape, along with the high response rate and the comprehensive analysis, mitigates concerns about the generalizability of the findings. These factors allow this study to provide meaningful insights that are expected to be applicable across various educational settings in Korea, thereby suggesting the potential for broader policy applications and further research that could encompass more diverse and inclusive samples from different regions.