Integration of Causal Models and Deep Neural Networks for Recommendation Systems in Dynamic Environments: A Case Study in StarCraft II

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

- 1.

- Data Collection: We gathered match data from StarCraft II using the PySC2 environment and the official game API. Key variables related to resources, military units, strategy, and player interactions were recorded to ensure comprehensive coverage of in-game dynamics.

- 2.

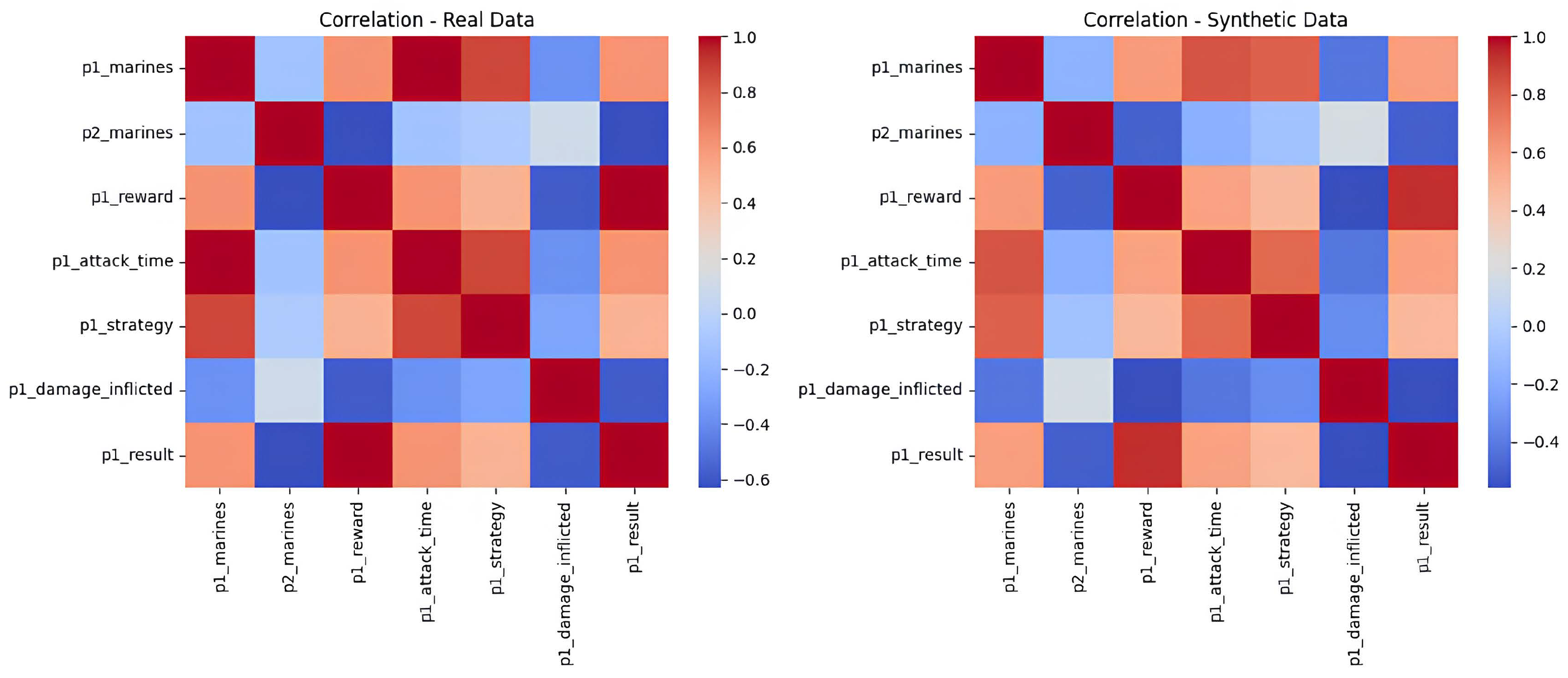

- Synthetic Data Generation: To address data scarcity in specific strategic scenarios, we used a Conditional Tabular Generative Adversarial Network. This approach enabled the generation of high-quality synthetic samples while preserving statistical properties and correlations between variables.

- 3.

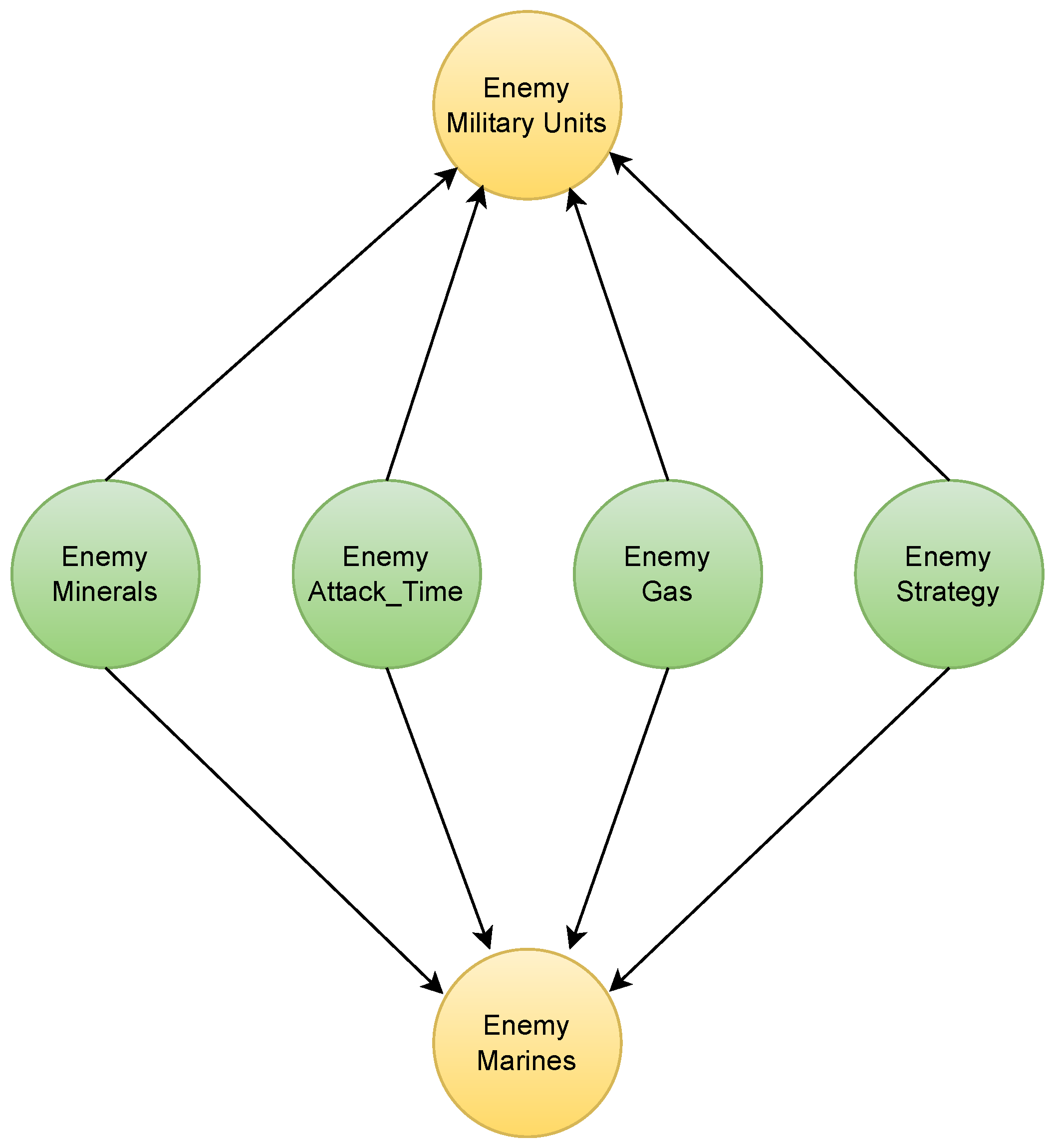

- Causal modeling: We implemented a causal inference framework to analyze the relationships between strategic decisions and matching outcomes. We utilized a Structural Causal Model to demonstrate the relationships between key variables and applied Bayesian inference to estimate the intervention’s effects.

- 4.

- Strategy recommendations via deep neural networks: We trained a deep learning model to provide strategic recommendations based on both real and synthetic data. The neural network leveraged learned causal relationships to suggest optimal strategies, improving decision-making in varying game conditions.
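
To make the idea of estimating an intervention's effect concrete, the following is a minimal sketch on a toy structural causal model. The graph (mineral income influences both the decision to attack early and the match outcome), all coefficients, and the function names are hypothetical and chosen only for illustration. The sketch also swaps in a simple frequency-based backdoor adjustment rather than the Bayesian inference used in this work.

```python
import random

def simulate(n, rng, do_attack=None):
    """Draw n matches from a toy SCM: minerals -> attack, minerals -> win, attack -> win."""
    rows = []
    for _ in range(n):
        rich = rng.random() < 0.5                      # confounder: high mineral income
        if do_attack is None:
            attack = rng.random() < (0.7 if rich else 0.3)   # observational regime
        else:
            attack = do_attack                         # intervention do(attack = value)
        p_win = 0.3 + 0.2 * attack + 0.3 * rich        # hypothetical true effect of attack: +0.2
        rows.append((rich, attack, rng.random() < p_win))
    return rows

def rate(values):
    """Fraction of True values in an iterable of booleans."""
    values = list(values)
    return sum(values) / len(values)

def naive_effect(rows):
    """Difference in win rate between attackers and non-attackers (confounded)."""
    return rate(w for _, a, w in rows if a) - rate(w for _, a, w in rows if not a)

def backdoor_effect(rows):
    """Backdoor adjustment: average the within-stratum effect over the confounder."""
    total = 0.0
    for z in (False, True):
        stratum = [row for row in rows if row[0] == z]
        treated = rate(w for _, a, w in stratum if a)
        control = rate(w for _, a, w in stratum if not a)
        total += len(stratum) / len(rows) * (treated - control)
    return total

rng = random.Random(0)
observed = simulate(20_000, rng)
naive = naive_effect(observed)
adjusted = backdoor_effect(observed)
interventional = (rate(w for _, _, w in simulate(20_000, rng, do_attack=True))
                  - rate(w for _, _, w in simulate(20_000, rng, do_attack=False)))
print(f"naive={naive:.3f} adjusted={adjusted:.3f} interventional={interventional:.3f}")
```

In this toy setting the naive comparison overstates the effect of attacking early because richer players both attack more often and win more often, while the adjusted estimate stays close to the simulated interventional effect.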

3.1. Data Acquisition

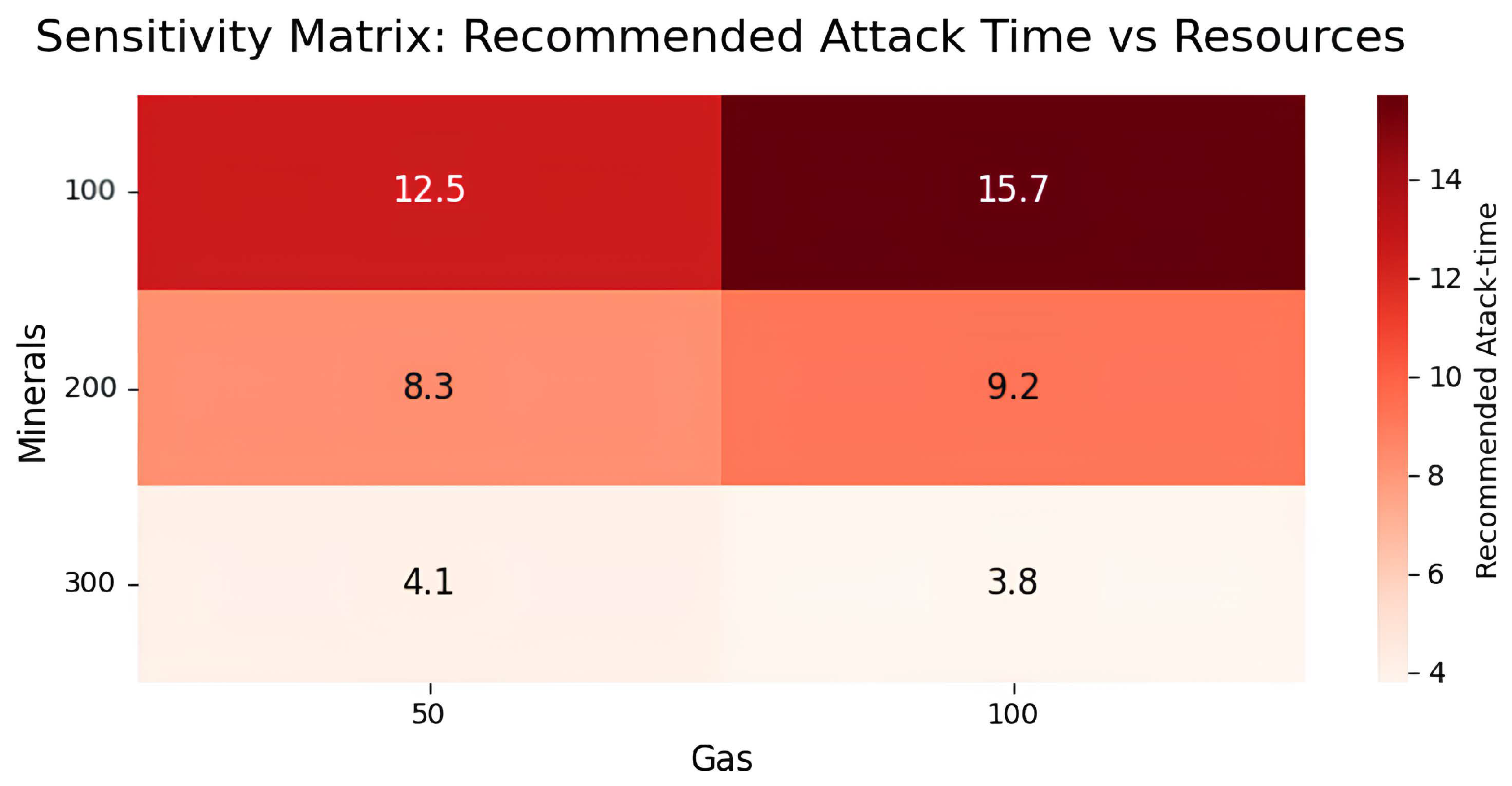

- Resources: The amount of minerals and gas available to players.

- Military units and structures: The number of units and buildings controlled by each player.

- Strategy and outcomes: The attack timing of a player, their attack strategy, and the match outcome.

- Interaction-related variables: For instance, the reward and damage inflicted by one of the players.
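
As an illustration of how the four variable groups above could be held in one tabular record per observation, the following sketch defines a simple data class. The class name, field names, types, and example values are assumptions made for this example; the source does not specify the actual column names or units.

```python
from dataclasses import dataclass, asdict

@dataclass
class MatchRecord:
    # Resources
    minerals: int
    gas: int
    # Military units and structures
    unit_count: int
    structure_count: int
    # Strategy and outcomes
    attack_timing_s: float      # hypothetical unit: seconds of game time
    attack_strategy: str
    won: bool
    # Interaction-related variables
    reward: float
    damage_dealt: float

# Hypothetical values, for illustration only.
record = MatchRecord(
    minerals=450, gas=200, unit_count=24, structure_count=9,
    attack_timing_s=312.0, attack_strategy="early_rush", won=True,
    reward=1.0, damage_dealt=1375.5,
)
row = asdict(record)   # flat dict, ready to be appended to a tabular dataset
print(row)
```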

3.2. Synthetic Data Generation

3.3. Causal Modeling

3.4. Integration of the Causal Model and Neural Network

3.5. Deep Neural Network

3.6. System Evaluation

4. Results

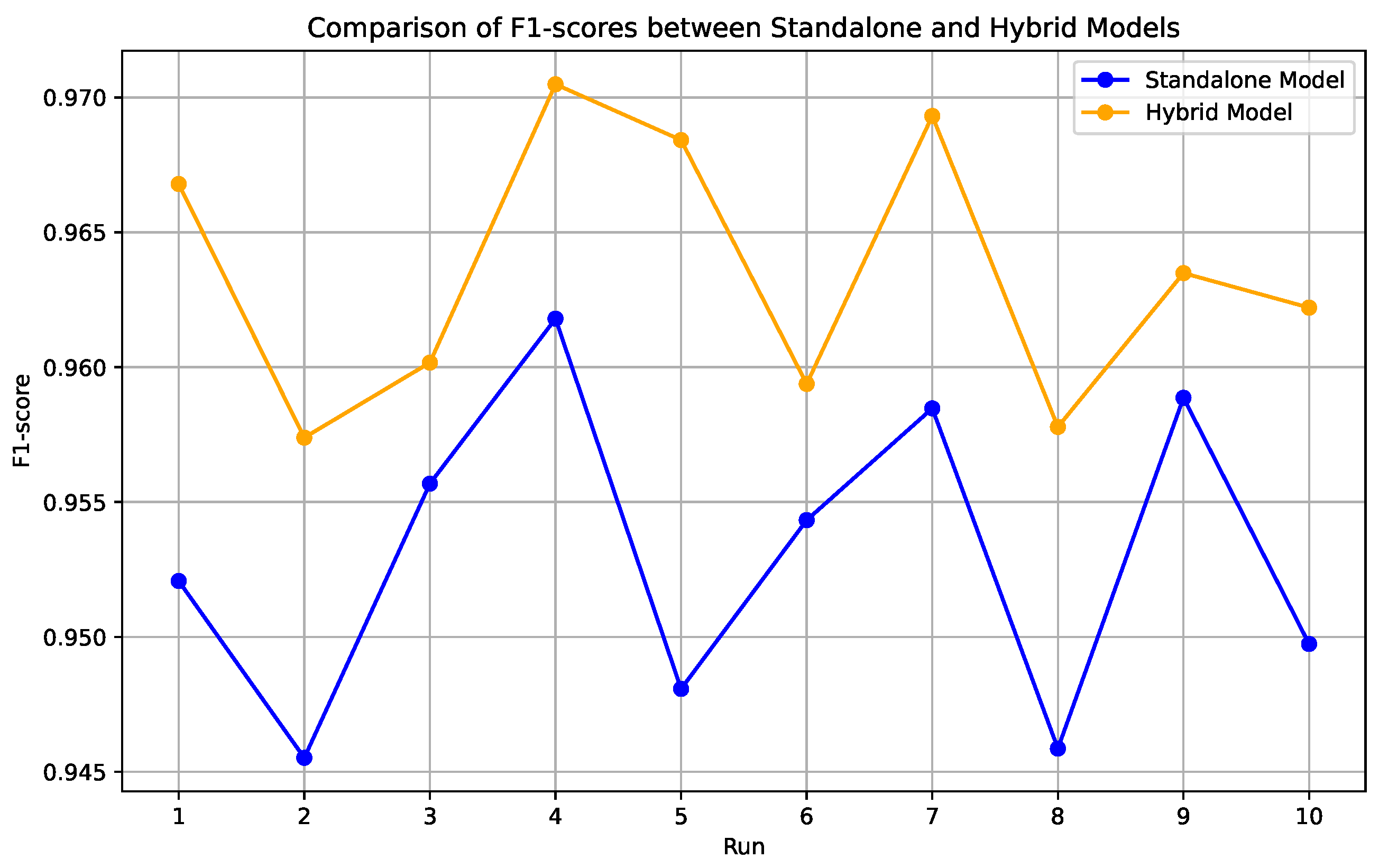

4.1. Integration of the Causal Model into the Neural Network

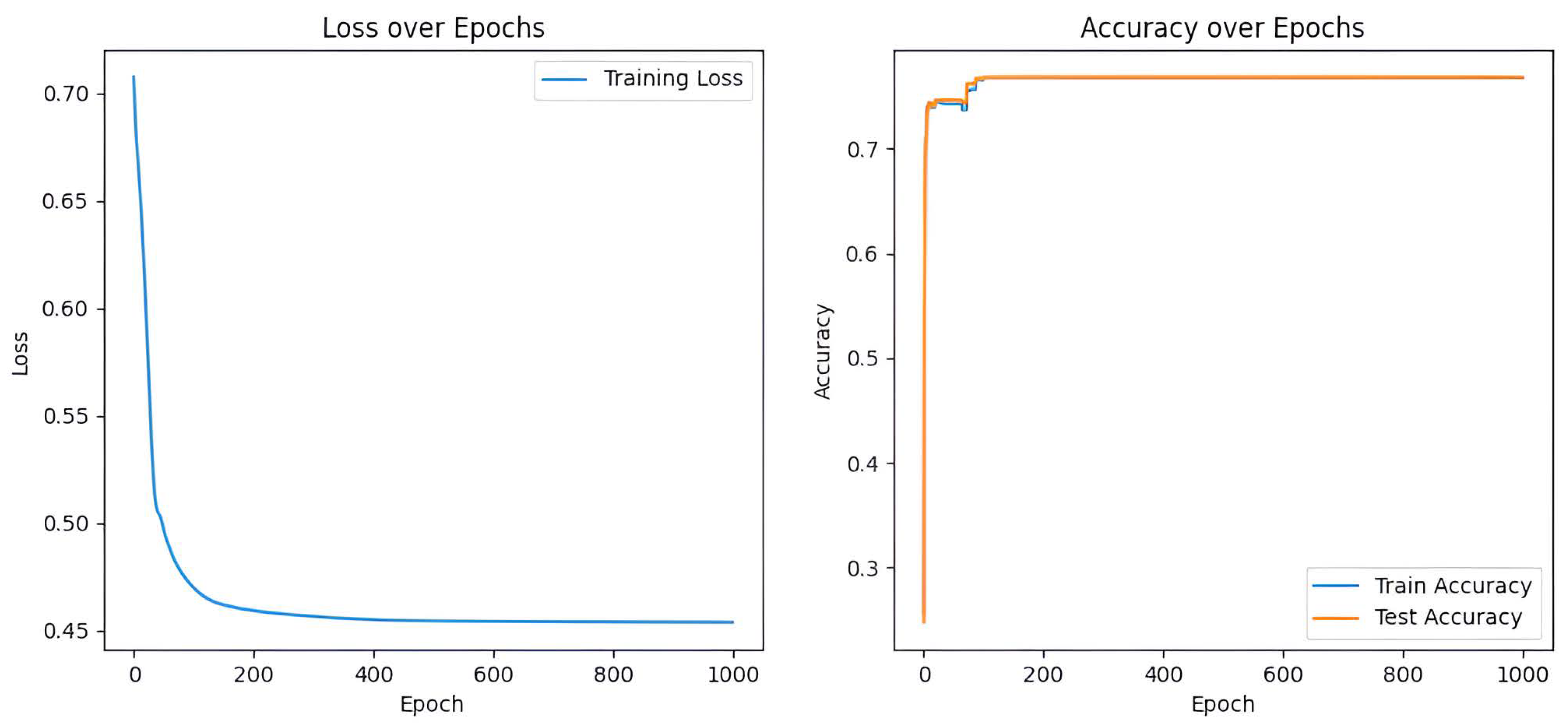

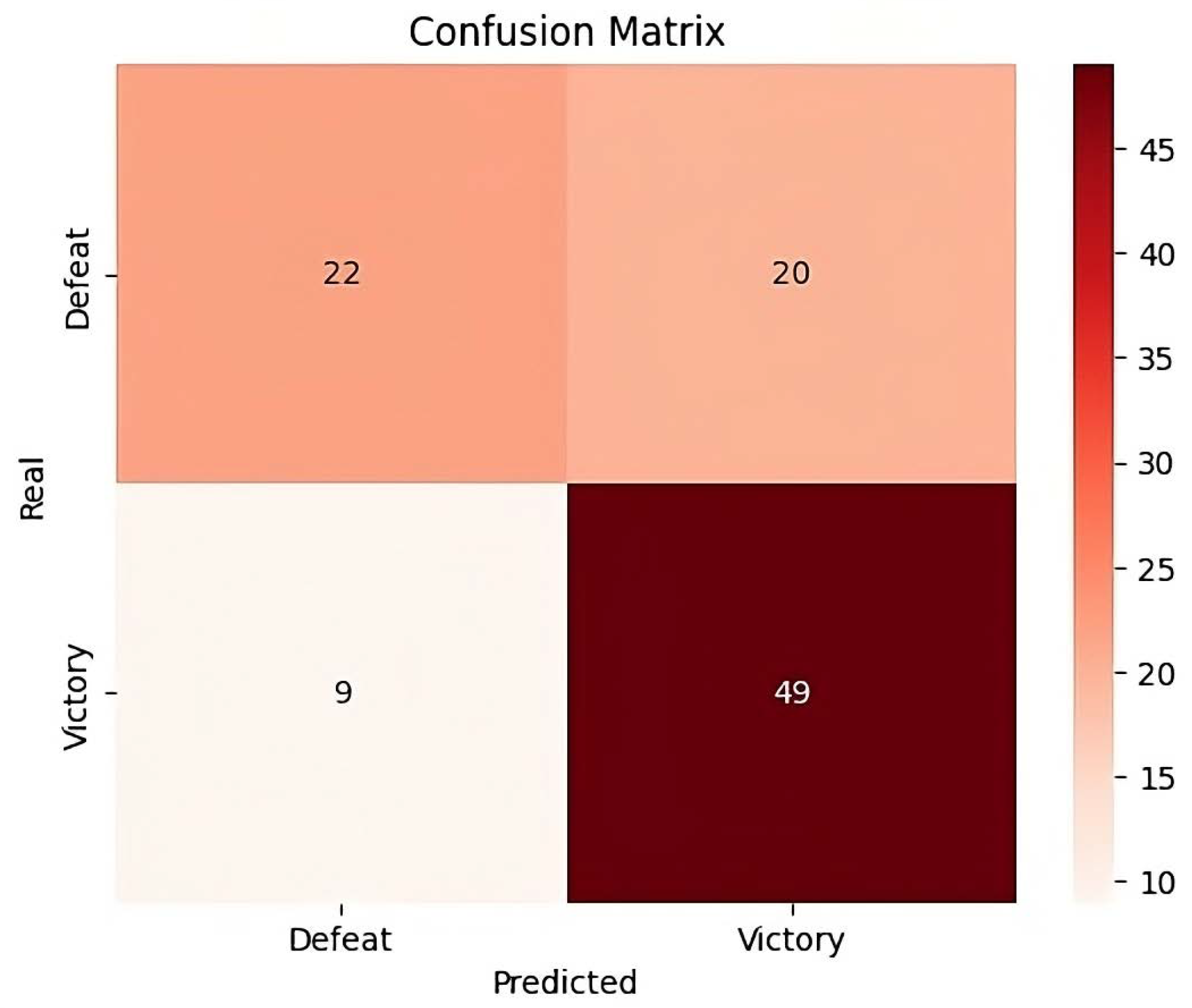

4.2. Neural Network Evaluation

4.3. Comparative Analysis with League Prediction Research

- Data Efficiency: The baseline study [13] used 46,398 replays to predict player leagues with an accuracy of 75.3%. In contrast, our hybrid model focuses on strategy recommendation using a compact, curated dataset of 100 matches, augmented by:

  - Causal feature engineering (e.g., mineral-to-army thresholds)

  - Synthetic data augmentation (CTGAN) for scenario generalization

  This difference in data scale reflects distinct methodological requirements: their classification task benefits from large-scale replay analysis, while our decision-support system prioritizes targeted causal relationships.

- Interpretability: Their Random Forest operates as a black box, whereas our system provides human-readable strategy rules (e.g., “Expand if mineral surplus > 500 with 92% confidence”).

- Task Complexity: League prediction relies on coarse features (APM, build orders), while our strategy recommendation requires fine-grained analysis of dynamic game states, a harder problem that motivates our higher F1-score requirement.

- Real-Time Adaptation: Their model analyzes completed games in batch, while ours reacts to live gameplay with a latency of 5.94 ms.
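
The human-readable rule quoted under Interpretability can be represented as a small data structure, as sketched below. The `StrategyRule` class, the `recommend` helper, and the `mineral_surplus` feature name are hypothetical; the sketch only shows one way such rules might be stored and rendered, and is not the rule-extraction procedure of this work.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class StrategyRule:
    action: str
    feature: str
    threshold: float
    confidence: float   # in [0, 1]

    def applies(self, state: dict) -> bool:
        # A missing feature is treated as 0, so the rule does not fire.
        return state.get(self.feature, 0.0) > self.threshold

    def describe(self) -> str:
        return (f"{self.action} if {self.feature} > {self.threshold:g} "
                f"with {self.confidence:.0%} confidence")

def recommend(state: dict, rules: list) -> Optional[str]:
    """Return the description of the most confident applicable rule, if any."""
    applicable = [rule for rule in rules if rule.applies(state)]
    if not applicable:
        return None
    return max(applicable, key=lambda rule: rule.confidence).describe()

rules = [StrategyRule("Expand", "mineral_surplus", 500, 0.92)]
print(recommend({"mineral_surplus": 620}, rules))
print(recommend({"mineral_surplus": 300}, rules))
```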

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Vinyals, O.; Ewalds, T.; Bartunov, S.; Georgiev, P.; Vezhnevets, A.S.; Yeo, M.; Makhzani, A.; Küttler, H.; Agapiou, J.; Schrittwieser, J.; et al. StarCraft II: A new challenge for reinforcement learning. arXiv 2017, arXiv:1708.04782.

2. Justesen, N.; Bontrager, P.; Togelius, J.; Risi, S. Deep learning for video game playing. IEEE Trans. Games 2019, 12, 1–20.

3. Ontañón, S.; Synnaeve, G.; Uriarte, A.; Richoux, F.; Churchill, D.; Preuss, M. A survey of real-time strategy game AI research and competition in StarCraft. IEEE Trans. Comput. Intell. AI Games 2013, 5, 293–311.

4. Pearl, J. Theoretical impediments to machine learning with seven sparks from the causal revolution. arXiv 2018, arXiv:1801.04016.

5. Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

6. Salvatier, J.; Wiecki, T.V.; Fonnesbeck, C. Probabilistic programming in Python using PyMC3. PeerJ Comput. Sci. 2016, 2, e55.

7. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, arXiv:1912.01703.

8. Xu, L.; Skoularidou, M.; Cuesta-Infante, A.; Veeramachaneni, K. Modeling tabular data using conditional GAN. Adv. Neural Inf. Process. Syst. 2019, arXiv:1907.00503.

9. Li, J.; Wang, S.; Zhang, Q.; Cao, L.; Chen, F.; Zhang, X.; Jannach, D.; Aggarwal, C.C. Causal Learning for Trustworthy Recommender Systems: A Survey. arXiv 2024, arXiv:2402.08241.

10. Schölkopf, B.; Locatello, F.; Bauer, S.; Ke, N.R.; Kalchbrenner, N.; Goyal, A.; Bengio, Y. Toward causal representation learning. Proc. IEEE 2021, 109, 612–634.

11. Yuan, Y.; Ding, X.; Bar-Joseph, Z. Causal inference using deep neural networks. arXiv 2020, arXiv:2011.12508.

12. Shi, C.; Wang, X.; Luo, S.; Zhu, H.; Ye, J.; Song, R. Dynamic causal effects evaluation in A/B testing with a reinforcement learning framework. J. Am. Stat. Assoc. 2023, 118, 2059–2071.

13. Lee, C.M.; Ahn, C.W. Feature extraction for StarCraft II league prediction. Electronics 2021, 10, 909.

14. Jiang, W.; Liu, H.; Xiong, H. When graph neural network meets causality: Opportunities, methodologies and an outlook. arXiv 2023, arXiv:2312.12477.

15. Mu, R. A survey of recommender systems based on deep learning. IEEE Access 2018, 6, 69009–69022.

16. Gao, C.; Zheng, Y.; Wang, W.; Feng, F.; He, X.; Li, Y. Causal inference in recommender systems: A survey and future directions. ACM Trans. Inf. Syst. 2024, 42, 1–32.

17. Luo, H.; Zhuang, F.; Xie, R.; Zhu, H.; Wang, D.; An, Z.; Xu, Y. A survey on causal inference for recommendation. Innovation 2024, 5, 100590.

18. Hollander, M.; Wolfe, D.A.; Chicken, E. Nonparametric Statistical Methods; John Wiley & Sons: Hoboken, NJ, USA, 2013.

19. Hernán, M.A.; Robins, J.M. Causal Inference: What If; Chapman & Hall/CRC: Boca Raton, FL, USA, 2010.

20. Pearl, J. Causal inference in statistics: An overview. Statist. Surv. 2009, 3, 96–146.

21. Angrist, J.D.; Pischke, J.S. Mostly Harmless Econometrics: An Empiricist’s Companion; Princeton University Press: Princeton, NJ, USA, 2009.

22. Morgan, S.L.; Winship, C. Counterfactuals and Causal Inference: Methods and Principles for Social Research; Cambridge University Press: Cambridge, UK, 2014.

23. Roy, V. Convergence diagnostics for Markov chain Monte Carlo. Annu. Rev. Stat. Its Appl. 2020, 7, 387–412.

24. Kruschke, J.K. Bayesian data analysis. Wiley Interdiscip. Rev. Cogn. Sci. 2010, 1, 658–676.

25. Vinyals, O.; Babuschkin, I.; Czarnecki, W.M.; Mathieu, M.; Dudzik, A.; Chung, J.; Choi, D.H.; Powell, R.; Ewalds, T.; Georgiev, P.; et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature 2019, 575, 350–354.

26. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

27. Géron, A. Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2022.

28. Hastie, T.; Tibshirani, R.; Friedman, J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer: Berlin/Heidelberg, Germany, 2009; Volume 2.

| Hyperparameter | Value |
|---|---|
| Epochs | 3000 |
| Batch size | 512 |
| Learning rate | |
| Pac | 1 |
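
A minimal sketch of how the hyperparameters in this table could be passed to a CTGAN implementation is shown below. It assumes the open-source `ctgan` Python package and that the "Pac" row corresponds to its `pac` argument; the source does not state which implementation was used. The learning rate is not given in the table, so the library default is left in place. The function and variable names are hypothetical.

```python
# Hyperparameters copied from the table; the learning rate is intentionally
# omitted because its value is not given, so the library default applies.
CTGAN_KWARGS = {"epochs": 3000, "batch_size": 512, "pac": 1}

def train_and_sample(real_df, discrete_columns, n_samples):
    """Fit CTGAN on a pandas DataFrame of real matches and draw synthetic rows.

    Assumes the third-party `ctgan` package is installed (pip install ctgan).
    """
    from ctgan import CTGAN   # imported lazily so the config can be inspected without it

    model = CTGAN(**CTGAN_KWARGS)
    model.fit(real_df, discrete_columns)   # discrete_columns: names of categorical columns
    return model.sample(n_samples)

# The batch size should be divisible by pac, since the discriminator
# groups `pac` samples together.
assert CTGAN_KWARGS["batch_size"] % CTGAN_KWARGS["pac"] == 0
```

A call such as `train_and_sample(df, ["attack_strategy", "won"], 1000)` would return a DataFrame of synthetic rows; the column names here are placeholders.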

| Set | Precision | F1-Score | AUC-ROC |
|---|---|---|---|
| Only real data | 0.9520 | 0.9520 | 0.9870 |
| Real data + Synthetic data | 0.9630 | 0.9630 | 0.9915 |
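
The gain from adding synthetic data can be read either as an absolute difference in percentage points or as a relative change. The short sketch below computes both from the values in this table; the helper name `gains` is an assumption introduced for the example.

```python
real_only = {"precision": 0.9520, "f1": 0.9520, "auc_roc": 0.9870}
augmented = {"precision": 0.9630, "f1": 0.9630, "auc_roc": 0.9915}

def gains(before, after):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute_pp = (after - before) * 100
    relative_pct = (after / before - 1) * 100
    return absolute_pp, relative_pct

for metric in real_only:
    absolute_pp, relative_pct = gains(real_only[metric], augmented[metric])
    print(f"{metric}: +{absolute_pp:.2f} pp absolute, +{relative_pct:.2f}% relative")
```

For precision and F1-score the absolute gain is 1.1 percentage points, which corresponds to a relative gain of roughly 1.16%.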

| Parameter | Configuration/Result |
|---|---|
| MCMC sampling | 2 chains; 500 tuning iterations; 500 samples; fixed seed (seed = 42) |
| Convergence diagnostics | Trace plots visually inspected for stability; autocorrelation < 0.1 for all parameters |
| Fit to data | 92% alignment with the data (predictions vs. observations) |
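
To illustrate what a configuration of 2 chains, 500 tuning iterations, 500 retained samples, and a fixed seed looks like in practice, the following sketch runs a plain random-walk Metropolis sampler on a single win-probability parameter and reports a lag-1 autocorrelation per chain. This is a stand-in for illustration, not the sampler or model used in this work. The toy data (58 wins in 100 matches) are taken from the win rate and dataset size reported in the article, and the flat prior, step size, and function names are assumptions.

```python
import math
import random

WINS, MATCHES = 58, 100   # toy data: 58% win rate over 100 matches

def log_posterior(p):
    """Bernoulli likelihood with a flat prior on the win probability p."""
    if not 0.0 < p < 1.0:
        return -math.inf
    return WINS * math.log(p) + (MATCHES - WINS) * math.log(1.0 - p)

def run_chain(rng, tune=500, draws=500, step=0.1):
    """Random-walk Metropolis; the first `tune` iterations are discarded."""
    p, logp = 0.5, log_posterior(0.5)
    samples = []
    for i in range(tune + draws):
        proposal = p + rng.gauss(0.0, step)
        logp_new = log_posterior(proposal)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, logp_new - logp)):
            p, logp = proposal, logp_new
        if i >= tune:
            samples.append(p)
    return samples

def lag1_autocorr(xs):
    """Sample autocorrelation at lag 1."""
    mean = sum(xs) / len(xs)
    num = sum((a - mean) * (b - mean) for a, b in zip(xs, xs[1:]))
    den = sum((x - mean) ** 2 for x in xs)
    return num / den

SEED = 42
chains = [run_chain(random.Random(SEED + k)) for k in range(2)]
pooled = [x for chain in chains for x in chain]
posterior_mean = sum(pooled) / len(pooled)
print(f"posterior mean of win probability: {posterior_mean:.3f}")
for k, chain in enumerate(chains):
    print(f"chain {k}: lag-1 autocorrelation = {lag1_autocorr(chain):.2f}")
```

A random-walk sampler of this kind usually shows noticeably higher autocorrelation than gradient-based samplers, so a threshold such as the one in the table would typically require a more efficient sampler or thinning.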

| Aspect | Detail | Precision | F1-score |
|---|---|---|---|
| Data flow | | | |
| Performance | Standalone Neural Network | 0.9520 | 0.9520 |
| | Hybrid Model | 0.9630 (+1.1%) | 0.9630 (+1.1%) |
| Interpretability | | | |

| Metric | Value | Unit |
|---|---|---|
| Win Rate | 58.00 | % |
| Average Minerals Used | 473.79 | units |
| Average Gas Used | 250.35 | units |
| Average Response Time | 5.94 | s |
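
An average response time of the kind reported in this table can be measured by timing repeated calls to the recommender, as in the sketch below. The `dummy_recommender` function is a hypothetical stand-in for the actual model, and the warm-up count is an arbitrary choice.

```python
import statistics
import time

def mean_latency_ms(fn, inputs, warmup=5):
    """Average wall-clock time per call in milliseconds, after a short warm-up."""
    for x in inputs[:warmup]:
        fn(x)                                   # warm-up calls are not timed
    timings = []
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(timings)

def dummy_recommender(state):
    """Hypothetical placeholder for the hybrid model's inference call."""
    return "expand" if state["minerals"] > 500 else "hold"

states = [{"minerals": m} for m in range(0, 1000, 10)]
latency = mean_latency_ms(dummy_recommender, states)
print(f"mean latency: {latency:.4f} ms over {len(states)} calls")
```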

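| Metric | Equation | Value |
|---|---|---|
| Precision (P) | $P = \frac{TP}{TP + FP}$ | 71.01% |
| Recall (R) | $R = \frac{TP}{TP + FN}$ | 84.48% |
| Accuracy (A) | $A = \frac{TP + TN}{TP + TN + FP + FN}$ | 71.00% |
| F1-Score | $F1 = \frac{2PR}{P + R}$ | 77.15% |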
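
The metrics in this table follow from the entries of a confusion matrix. The sketch below computes them from raw counts. The counts used (TP = 49, FP = 20, FN = 9, TN = 22) are not reported in the source; they are one set of values for 100 matches that reproduces the tabulated precision, recall, and accuracy, and they are used here purely as an illustration.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "accuracy": accuracy, "f1": f1}

# Assumed counts for 100 matches (inferred for illustration, not from the source).
metrics = classification_metrics(tp=49, fp=20, fn=9, tn=22)
for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

With these assumed counts, the F1-score comes out at about 77.17%, close to the tabulated 77.15%; the small gap is consistent with the table's value having been computed from rounded precision and recall.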

| Aspect | Baseline Study | Our Work |
|---|---|---|
| Goal | League classification | Strategy recommendation |
| Data | 46,398 raw replays | 100 matches + synthetic |
| Features | APM, camera switches | Resource ratios, unit counts |
| Model | Random Forest | Causal-DNN hybrid |
| Performance | 75.3% accuracy | 96.3% F1-score |
| Latency | Batch processing | 5.94 ms (real-time) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Moreira, F.; Velez-Bedoya, J.I.; Arango-López, J. Integration of Causal Models and Deep Neural Networks for Recommendation Systems in Dynamic Environments: A Case Study in StarCraft II. Appl. Sci. 2025, 15, 4263. https://doi.org/10.3390/app15084263
