Featured Application

In the sustainable management of marine resources, the underwater object detection method proposed in this paper can be applied to marine species monitoring, underwater environmental protection, and aquaculture.

Abstract

Images from underwater biomonitoring scenarios contain many small objects with weak features and little information, and light absorption and scattering in water make these objects even harder to recognize. To address these challenges, this study proposes an improved RHS-YOLOv8 (Ref-Dilated-HBFPN-SOB-YOLOv8). First, hybrid dilated convolution is combined with RefConv to design a lightweight Ref-Dilated convolution block, which reduces model computation. Second, a new feature pyramid fusion module, the Hybrid Bridge Feature Pyramid Network (HBFPN), is designed to fuse deep features with high-level features and with the features of the current layer, improving feature extraction for blurred objects. Third, Efficient Local Attention (ELA) is added to reduce the interference of irrelevant factors on prediction. Fourth, an Involution module is introduced to capture long-range spatial relationships and improve recognition accuracy. Finally, a small object detection branch is added to the original architecture to improve the detection of small objects. Experiments on the DUO dataset show that RHS-YOLOv8 reduces computational cost by 9.95%, while improving mAP@0.5 and mAP@0.5:0.95 by 2.54% and 4.31%, respectively. Compared with other state-of-the-art underwater object detection algorithms, the proposed algorithm improves detection accuracy while remaining lightweight, effectively enhancing its ability to detect small underwater objects.

1. Introduction

Precise detection and localization of underwater resources are essential for the sustainable management of marine ecosystems. Unmanned underwater vehicles (UUVs) [1] are now indispensable in marine research and various underwater missions, where they assist in mapping the seafloor, identifying potential obstacles, recognizing topographical and biological features, and inspecting underwater infrastructure. Underwater object detection has a wide range of applications, from monitoring marine species [2] to underwater archaeology [3], aquaculture [4], and military operations [5].

Underwater object detection faces many challenges. First, compared with terrestrial images, underwater images show markedly lower contrast between objects and their background. This arises from the inherent uncertainty of the underwater environment, light absorption and scattering by water, and the presence of coral reefs, sediments, and other particulate matter [6]. Second, water currents, suspended particles, and bubbles can blur and distort images, further reducing recognition accuracy [7]. In addition, underwater objects are usually numerous, small, and diverse in shape, and they easily occlude each other, which places higher demands on the feature extraction and multi-scale feature fusion abilities of the detection model [8]. Finally, various forms of noise and disturbance, including water waves, air bubbles, and floating debris, further disrupt detection and recognition [9]. Although conventional object detection techniques are effective at extracting shallow object features such as shape, contour, and texture [10,11,12,13], these features are designed manually and adapt poorly to environmental variations (e.g., changes in water quality and illumination). Moreover, such methods struggle to separate target objects accurately from complex backgrounds, often lack precision and efficiency, and perform poorly in complex underwater environments. With advances in artificial intelligence, deep learning-based object detection and classification techniques have enabled rapid object detection in complex underwater environments.

Deep learning-based object detection methods fall into two main categories: two-stage and single-stage detection models. The most prominent two-stage models are R-CNN [14], Fast R-CNN [15], and Faster R-CNN [16]. A two-stage model divides the object detection task into two stages: region proposal and classification/regression. Although such models have some advantages in detection accuracy, they are larger, slower, and more complex, which makes them unsuitable for real-time object detection in underwater monitoring scenarios. The other category comprises regression-based single-stage algorithms, such as RetinaNet [17], the SSD series [18,19], and the YOLO series [20,21,22,23]. Single-stage algorithms offer good real-time performance and suit underwater detection hardware with limited storage and computing capacity, but their accuracy is lower and needs further improvement, especially for small objects.

The YOLO series is the primary representative of single-stage models; it takes images directly as input and produces detection results in a single step. Its main advantages include a simple architecture, compact model size, and high processing speed. Compared with other models, it is better suited to object detection in underwater images, and the current research challenge is to make the detection algorithm lighter while maintaining detection accuracy. Among single-stage approaches relevant to underwater object detection, Liu et al. [24] proposed the SSD model, which detects targets on feature maps at different scales, improving detection speed and small-target detection while maintaining high efficiency. However, SSD adapts poorly to high-density backgrounds, tends to miss objects in noisy backgrounds, and becomes less efficient at larger image sizes. The RetinaNet model proposed by Lin et al. [25] introduces Focal Loss to alleviate the imbalance between positive and negative samples, which improves the performance of single-stage detectors on small and sparse objects and makes them more accurate when detecting small objects in complex underwater backgrounds. However, the RetinaNet network is deeper and demands more computational resources, so its real-time performance is insufficient. DETR, proposed by Carion et al. [26], uses the Transformer architecture for object detection and avoids the region proposal step, which makes the model robust in complex scenes and improves its feature extraction in complex backgrounds. However, the model is very expensive to train and depends heavily on large amounts of data.
When underwater data are scarce, the performance of DETR is difficult to guarantee, and its slow inference makes it unsuitable for underwater tasks with strict real-time requirements. Lei et al. [27] improved YOLOv5 by enhancing the Path Aggregation Network (PANet) for multi-scale feature fusion, enabling the model to focus on combining the most relevant resolution features. They also introduced an improved confidence loss function tailored to different detection layers, which encourages the network to prioritize learning high-quality positive anchor boxes and thereby enhances its detection capability. However, the model remains large and does not fully meet the efficiency requirements of underwater detection tasks. Yan et al. [28] introduced the Convolutional Block Attention Module (CBAM) [29] into YOLOv7 and proposed a detection model for underwater environments with stronger feature extraction and faster detection in underwater scenes. However, CBAM contains two modules, channel attention and spatial attention, each of which computes global information over the feature map, introducing additional computational overhead and memory requirements. In large networks or real-time applications, this slows the model down and hinders deployment on devices with limited computational resources. Zhang et al. [30] replaced the backbone of YOLOv8 with FasterNet-T0 [31] to obtain faster training and fewer parameters, but at the cost of a larger loss in detection accuracy.

Based on the above analysis, current research still suffers from complex algorithms and low detection accuracy, and even the studies that improve detection accuracy do not fully satisfy the demand for high real-time performance and low computational overhead in underwater target detection. To recognize marine organisms in underwater images of complex environments efficiently and accurately, a lightweight, improved RHS-YOLOv8 detection algorithm is proposed. The main contributions of this paper are as follows:

- A new Ref-Dilated block is designed to replace the original bottleneck block in the C2f module of YOLOv8 [32]. It combines the multi-scale receptive field of dilated convolution with the strong feature extraction capability of RefConv, strengthening the model's feature extraction and multi-scale feature fusion while greatly reducing computation.

- A new fusion module, the Hybrid Bridge Feature Pyramid Network (HBFPN), is designed and added to the neck to fuse deep features with high-level features and with the features of the current layer. With its lightweight design, this module efficiently improves the model's representational capability and reduces the probability of missed detections.

- The ELA attention mechanism is added to the backbone network to provide accurate location information for regions of interest, improving the overall performance of the model with only a minimal increase in computation. In addition, Involution blocks are embedded in the backbone network as a lightweight operator, which enhances the visual recognition capability of the model.

- A small object detection branch is added on the low-level feature layer, which improves the accuracy of small object detection without sacrificing detection accuracy for medium and large objects.

The remainder of this study is organized as follows: Section 2 outlines the primary structure of the detection model within the seafloor observation network and examines specific improvement strategies. Section 3 presents an overview of the dataset, experimental environment, and model evaluation metrics. Section 4 discusses the experimental design and analyzes the experimental results. Section 5 discusses the contributions and shortcomings of the model proposed in this paper. Finally, Section 6 offers concluding remarks and suggests directions for current and future research.

2. Methodology

2.1. Object Detection Algorithm

The YOLOv8 model, released by Ultralytics in 2023, supports a range of computer vision tasks. In object detection, YOLOv8, a newer and widely adopted version of the YOLO series, combines the high efficiency of traditional convolutional neural networks with the strong expressive power of the Transformer module, achieving a good balance between inference speed and detection accuracy. In addition, YOLOv8 offers lightweight model variants, which are particularly suitable for resource-constrained underwater detection tasks. Compared with YOLOv5 and YOLOv7, YOLOv8 also introduces a dynamic label assignment strategy, which significantly improves the detection of small objects. Building on YOLOv5, YOLOv8 adopts a new network structure that combines the strengths of earlier YOLO models with cutting-edge design concepts in object detection [33]. YOLOv8 is provided in five scales (n, s, m, l, and x) that differ in channel width and network depth, offering models of different sizes so that various types of detection tasks can be handled robustly. The YOLOv8 architecture consists of three parts: the backbone, the neck, and the detection head. The backbone is adapted from the DarkNet53 structure. For example, the C3 module in the feature extraction network is replaced with a C2f module incorporating residual connections, which is a cross-stage partial bottleneck structure with two convolutions. This modification integrates high-level features and contextual information with fewer parameters, enhancing feature extraction and improving detection accuracy.
The neck includes an SPPF module, a PAA module, and two PAN modules, and uses multi-scale fusion to combine feature maps from the backbone for enhanced feature representation. In the detection head, YOLOv8 follows the design of YOLOX [34] and uses a decoupled head, which lets each branch focus on its own prediction task and makes training and inference more efficient. Compared with other YOLO series models, YOLOv8 achieves better detection accuracy at lower computational cost, which meets the lightweight and high-efficiency requirements of models deployed on underwater equipment for object detection.
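To make the scaling concrete, the sketch below applies per-scale depth and width multipliers to a base layer specification, in the way the YOLOv8 model configuration scales its layers. The multiplier values and channel caps are quoted from our recollection of the public Ultralytics `yolov8.yaml` file and should be checked against the installed version; the helper names `scaled_channels` and `scaled_repeats` are hypothetical.

```python
import math

# (depth_multiple, width_multiple, max_channels) per scale, as we recall them
# from the Ultralytics yolov8.yaml config; verify against your installed version.
SCALES = {
    "n": (0.33, 0.25, 1024),
    "s": (0.33, 0.50, 1024),
    "m": (0.67, 0.75, 768),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.25, 512),
}


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round a channel count up to the nearest multiple of `divisor`."""
    return math.ceil(x / divisor) * divisor


def scaled_channels(base_channels: int, scale: str) -> int:
    """Output channels of a layer after width scaling (hypothetical helper)."""
    _, width, max_ch = SCALES[scale]
    return make_divisible(min(base_channels, max_ch) * width)


def scaled_repeats(base_repeats: int, scale: str) -> int:
    """Number of stacked blocks (e.g., bottlenecks in C2f) after depth scaling."""
    depth, _, _ = SCALES[scale]
    return max(round(base_repeats * depth), 1) if base_repeats > 1 else base_repeats


for s in SCALES:
    # Deepest backbone stage: 1024 base channels, C2f with 3 base repeats.
    print(s, scaled_channels(1024, s), scaled_repeats(3, s))
```

Because only the multipliers change, the same layer list yields all five model sizes; this is why the paper can apply one set of modifications to the s, m, and l baselines.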

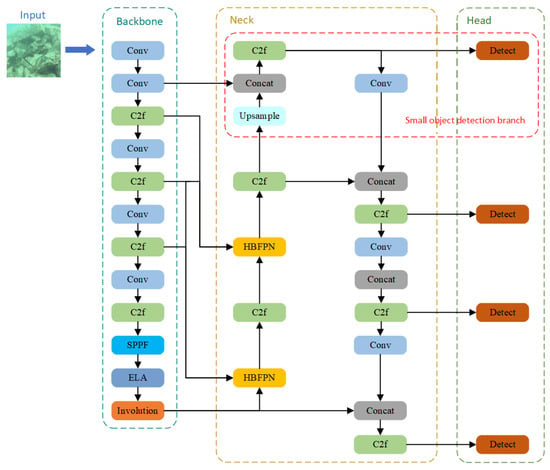

In this paper, to achieve real-time and efficient detection in underwater images, we replace the original bottleneck block with the newly designed Ref-Dilated convolution block to improve the C2f module, design the HBFPN to replace the original Concat operation, introduce the ELA attention mechanism and the Involution module, and finally add a small object detection branch to the overall architecture. In RHS-YOLOv8, the backbone consists of various convolution modules, while the neck consists of convolution modules together with upsampling, downsampling, and concatenation operations. The network produces four prediction outputs for objects of different sizes. The network structure of the improved RHS-YOLOv8 is shown in Figure 1.

Figure 1.

The network structure of RHS-YOLOv8.

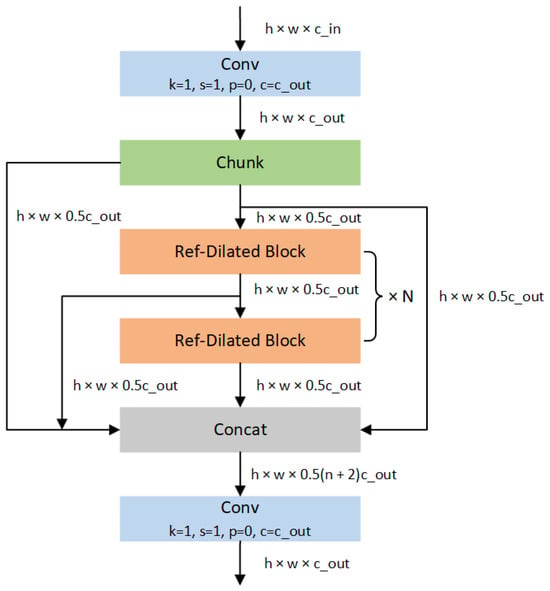

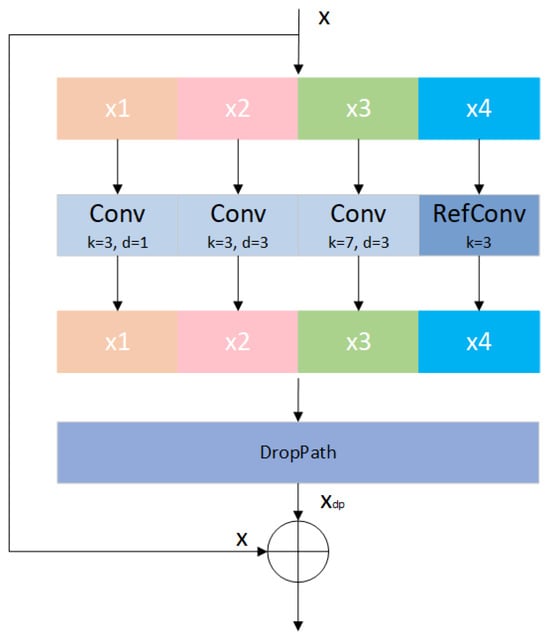

2.2. Ref-Dilated Convolutional Block

Underwater detection tasks are complex and time-sensitive, which places high demands on the feature extraction and contextual information aggregation capabilities of detection models. The Ref-Dilated block combines the ideas of dilated convolution [35] and RefConv (Re-parameterized Refocusing Convolution) [36], aiming to improve the detection of small objects while controlling computational cost. The block divides the input feature map into four branches, each using a different convolution strategy, and then concatenates the results. The structure of the modified C2f module containing the Ref-Dilated block and the internal structure of the Ref-Dilated block are shown in Figure 2 and Figure 3, respectively.

Figure 2.

The structure of the improved C2f module.

Figure 3.

The structure of the Ref-Dilated block.

The first branch is convolved with an ordinary 3 × 3 convolution kernel, as expressed in Equation (1).

where Conv denotes the convolution operation. The second and third branches perform dilated convolution with a dilation rate of 3. The second branch uses a 3 × 3 convolution kernel, which is equivalent to a 7 × 7 receptive field, while the third branch uses a 7 × 7 convolution kernel, which yields a larger equivalent 19 × 19 receptive field, as expressed in Equations (2) and (3).

where DilatedConv denotes the dilated convolution operation and rate is the dilation rate. The fourth branch uses a 3 × 3 convolution kernel and transforms features via the RefConv operation, which contains an additional learnable parameterization matrix, as expressed in Equation (4).

Then, the outputs of the four branches are concatenated, and a 1 × 1 convolution reduces the dimensionality and adjusts the number of channels, as expressed in Equation (5).

To enhance the generalization ability of the model, the fused feature is regularized with DropPath, as expressed in Equation (6).

Finally, the original input x and the DropPath-processed feature are fused, e.g., by element-wise summation or channel concatenation, as expressed in Equation (7).
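The four-branch walkthrough above can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume the input is split channel-wise into four equal groups, that the fusion in Equation (7) is element-wise summation, and that the DropPath rate is 0.1. RefConv is replaced by a simplified stand-in (`RefConvSketch`, a hypothetical name) in which a learnable depthwise convolution "refocuses" a basis kernel; the official RefConv, which starts from a frozen pretrained basis kernel, may differ in detail.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def drop_path(x: torch.Tensor, p: float, training: bool) -> torch.Tensor:
    """Stochastic depth: randomly drop the whole residual branch per sample."""
    if p == 0.0 or not training:
        return x
    keep = 1.0 - p
    mask = x.new_empty(x.shape[0], 1, 1, 1).bernoulli_(keep)
    return x * mask / keep


class RefConvSketch(nn.Module):
    """Simplified RefConv stand-in (assumption): W_t = W_b + refocus(W_b)."""

    def __init__(self, channels: int, k: int = 3):
        super().__init__()
        self.k = k
        self.basis = nn.Parameter(torch.randn(channels, channels, k, k) * 0.02)
        n = channels * channels
        # Depthwise conv that treats each k x k kernel slice as a tiny image.
        self.refocus = nn.Conv2d(n, n, 3, padding=1, groups=n, bias=False)
        nn.init.zeros_(self.refocus.weight)  # starts as the identity transform

    def transformed_weight(self) -> torch.Tensor:
        c_out, c_in, k, _ = self.basis.shape
        w = self.basis.reshape(1, c_out * c_in, k, k)
        return (w + self.refocus(w)).reshape(c_out, c_in, k, k)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # At inference, transformed_weight() could be computed once and cached,
        # so the layer costs the same as an ordinary 3 x 3 convolution.
        return F.conv2d(x, self.transformed_weight(), padding=self.k // 2)


class RefDilatedSketch(nn.Module):
    """Four-branch block following Equations (1) to (7) as described in the text."""

    def __init__(self, channels: int, drop_prob: float = 0.1):
        super().__init__()
        assert channels % 4 == 0, "channels must split into four branches"
        c = channels // 4
        self.branch1 = nn.Conv2d(c, c, 3, padding=1)              # Eq. (1): plain 3x3
        self.branch2 = nn.Conv2d(c, c, 3, padding=3, dilation=3)  # Eq. (2): 7x7 effective
        self.branch3 = nn.Conv2d(c, c, 7, padding=9, dilation=3)  # Eq. (3): 19x19 effective
        self.branch4 = RefConvSketch(c, 3)                        # Eq. (4): RefConv stand-in
        self.fuse = nn.Conv2d(channels, channels, 1)              # Eq. (5): 1x1 fusion
        self.drop_prob = drop_prob

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x1, x2, x3, x4 = torch.chunk(x, 4, dim=1)
        y = torch.cat(
            [self.branch1(x1), self.branch2(x2), self.branch3(x3), self.branch4(x4)], dim=1
        )
        y = drop_path(self.fuse(y), self.drop_prob, self.training)  # Eq. (6)
        return x + y                                                # Eq. (7), summation variant


block = RefDilatedSketch(64)
out = block(torch.randn(2, 64, 40, 40))
print(out.shape)  # torch.Size([2, 64, 40, 40])
```

Splitting the channels before the expensive dilated convolutions is what keeps the cost low: each branch only convolves a quarter of the channels, and the 1 × 1 fusion restores cross-branch mixing.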

Dilated convolution expands the receptive field by introducing a dilation rate, thereby capturing feature information at different receptive field scales, which is particularly suitable for underwater targets of varying sizes. RefConv improves the expressive power of feature extraction through re-parameterization: during training, a learnable refocusing transformation is applied to the convolution weights, and at inference the transformed weights are used as an ordinary convolution kernel, so no extra inference cost is incurred. In this way, the Ref-Dilated block combines the multi-scale receptive field of dilated convolution with the strong feature extraction capability of RefConv while maintaining a low computational cost.
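The equivalent receptive fields of the dilated branches follow from the standard effective-kernel-size formula for a single dilated convolution, k + (k − 1)(r − 1), where k is the kernel size and r the dilation rate. The small helpers below (hypothetical names) compute it, together with the padding that keeps the spatial size unchanged at stride 1.

```python
def effective_kernel(k: int, rate: int) -> int:
    """Effective spatial extent of a k x k kernel with dilation `rate`."""
    return k + (k - 1) * (rate - 1)


def same_padding(k: int, rate: int) -> int:
    """Padding that preserves H and W for an odd kernel at stride 1."""
    return (effective_kernel(k, rate) - 1) // 2


for k in (3, 7):
    print(k, effective_kernel(k, 3), same_padding(k, 3))
```

The receptive field grows linearly with the dilation rate while the number of weights stays at k × k, which is why dilation enlarges context without adding parameters.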

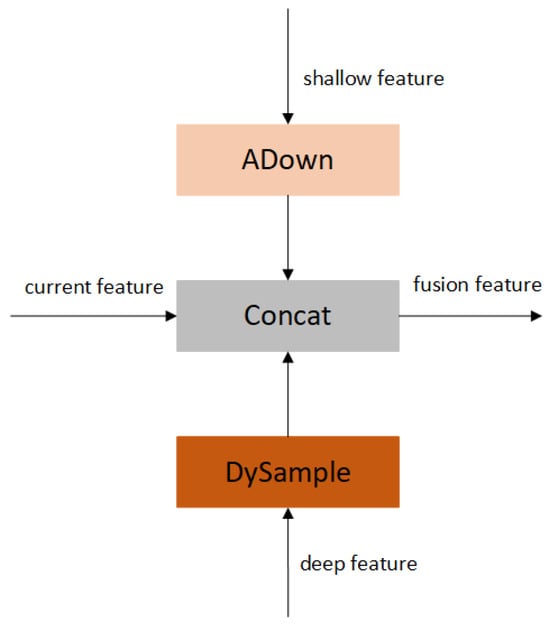

2.3. Hybrid Bridge Feature Pyramid Network Fusion Block

Small underwater objects are tiny and have weak features, so they are easily missed during detection; the representational ability of the detection model therefore needs to be improved. Feature fusion is a common way to improve this ability: high-level (shallower, higher-resolution) features contain rich texture information, whereas deep-level features contain more semantic information. To use both types of features effectively, we designed a module called HBFPN (Hybrid Bridge Feature Pyramid Network) that fuses current-layer, high-level, and deep-level features. The structure of the HBFPN fusion block is shown in Figure 4.

Figure 4.

The structure of the HBFPN fusion block.

Given a high-level feature, a deep-level feature, and a current-level feature, we first downsample the high-level feature with the ADown module (the downsampling module used in YOLOv9) [37]. Through a series of convolution and pooling operations, ADown reduces the resolution of the high-level feature map while preserving its rich texture information. This process is expressed as Equation (8).

where F′high denotes the high-level feature after downsampling. The ADown module has few parameters, which reduces model complexity and improves runtime efficiency, especially in resource-constrained environments.
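The ADown downsampling step in Equation (8) can be sketched as below. This follows our reading of the public YOLOv9 ADown module (average pooling, then a channel split into a strided 3 × 3 convolution path and a max-pooling plus 1 × 1 convolution path); the `ConvBNAct` helper is a hypothetical stand-in for YOLO's Conv block, and details may differ from the official code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ConvBNAct(nn.Module):
    """Conv + BatchNorm + SiLU, a stand-in for the YOLO 'Conv' block."""

    def __init__(self, c_in: int, c_out: int, k: int, s: int, p: int):
        super().__init__()
        self.conv = nn.Conv2d(c_in, c_out, k, s, p, bias=False)
        self.bn = nn.BatchNorm2d(c_out)
        self.act = nn.SiLU()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.act(self.bn(self.conv(x)))


class ADownSketch(nn.Module):
    """Halve H and W with two complementary paths (ADown-style sketch)."""

    def __init__(self, c_in: int, c_out: int):
        super().__init__()
        half_out = c_out // 2
        self.cv1 = ConvBNAct(c_in // 2, half_out, 3, 2, 1)  # strided-conv path
        self.cv2 = ConvBNAct(c_in // 2, half_out, 1, 1, 0)  # max-pool path

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = F.avg_pool2d(x, kernel_size=2, stride=1, padding=0)  # light smoothing
        x1, x2 = x.chunk(2, dim=1)
        x1 = self.cv1(x1)
        x2 = self.cv2(F.max_pool2d(x2, kernel_size=3, stride=2, padding=1))
        return torch.cat((x1, x2), dim=1)


f_high = torch.randn(1, 64, 80, 80)          # e.g., a higher-resolution feature
print(ADownSketch(64, 64)(f_high).shape)     # torch.Size([1, 64, 40, 40])
```

Each path only processes half of the channels, which is where the parameter savings relative to a single full-width strided convolution come from.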

Then, for the deep-level feature, we employ the DySample module [38] for upsampling. DySample predicts content-aware sampling offsets with a lightweight convolution and resamples the deep feature map by bilinear interpolation, raising its resolution to that of the current-layer feature to facilitate subsequent fusion. The upsampling process is expressed as Equation (9).

Equation (9) gives the upsampled deep-level feature. Finally, in the feature fusion stage, we fuse the downsampled high-level feature F′high, the upsampled deep-level feature, and the current-layer feature. This fusion makes full use of feature information from different layers and improves the model's representational ability. The fusion operation is expressed as Equation (10).
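The upsampling in Equation (9) can be illustrated with a simplified point-sampling upsampler in the spirit of DySample: a 1 × 1 convolution predicts a small offset for every output pixel, and the input is resampled with `torch.nn.functional.grid_sample`. This is a minimal sketch under our own assumptions (a single offset group, a fixed 0.25 offset scale, zero-initialized offsets, and the hypothetical class name `DySampleSketch`); the official DySample offers further variants. With zero offsets it reduces to ordinary bilinear upsampling, which the last line checks.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DySampleSketch(nn.Module):
    """Learned point-sampling upsampler (simplified, DySample-inspired)."""

    def __init__(self, channels: int, scale: int = 2):
        super().__init__()
        self.scale = scale
        # One (dx, dy) pair per output sub-pixel, rearranged by pixel_shuffle.
        self.offset = nn.Conv2d(channels, 2 * scale * scale, kernel_size=1)
        nn.init.zeros_(self.offset.weight)
        nn.init.zeros_(self.offset.bias)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        _, _, h, w = x.shape
        s = self.scale
        # Offsets in input-pixel units, shape (B, 2, s*H, s*W).
        off = F.pixel_shuffle(self.offset(x) * 0.25, s)
        # Centre of each output pixel expressed in input-pixel coordinates.
        ys = (torch.arange(h * s, device=x.device, dtype=x.dtype) + 0.5) / s - 0.5
        xs = (torch.arange(w * s, device=x.device, dtype=x.dtype) + 0.5) / s - 0.5
        gy, gx = torch.meshgrid(ys, xs, indexing="ij")
        px = gx + off[:, 0]
        py = gy + off[:, 1]
        # Normalise to [-1, 1] using the align_corners=False convention.
        grid = torch.stack(((px + 0.5) / w * 2 - 1, (py + 0.5) / h * 2 - 1), dim=-1)
        return F.grid_sample(x, grid, mode="bilinear",
                             padding_mode="border", align_corners=False)


f_deep = torch.randn(1, 32, 20, 20)
up = DySampleSketch(32)(f_deep)
print(up.shape)  # torch.Size([1, 32, 40, 40])
# With zero-initialised offsets the result matches plain bilinear upsampling.
ref = F.interpolate(f_deep, scale_factor=2, mode="bilinear", align_corners=False)
print(torch.allclose(up, ref, atol=1e-4))
```

Once trained, the predicted offsets let each output pixel sample from a content-dependent location rather than a fixed bilinear neighbourhood, at the cost of only one 1 × 1 convolution.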

The neck of YOLOv8 fuses feature maps of different scales. We integrate HBFPN into the neck of YOLOv8 in place of the original Concat operation. This design is particularly suitable for detecting small underwater objects and targets of diverse sizes, where the multi-scale fusion mechanism effectively addresses the problem of small objects being overlooked.

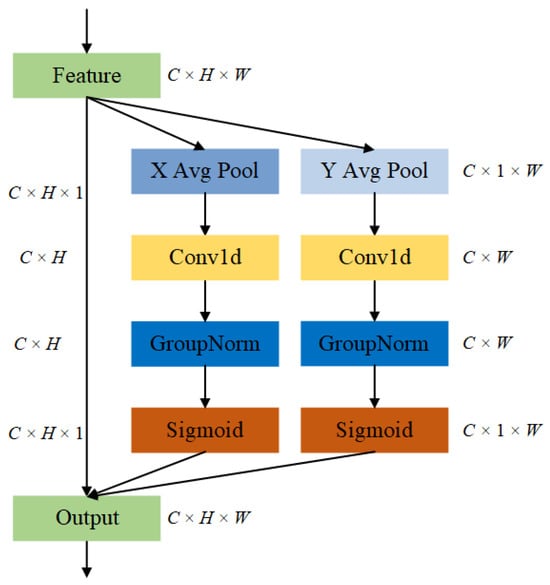

2.4. Efficient Local Attention

To further enhance the detection capability of the model, this paper adopts the Efficient Local Attention (ELA) module [39], which was proposed on the basis of an in-depth analysis of the Coordinate Attention (CA) method [40]. The structure of ELA is shown in Figure 5. Compared with CA, ELA uses 1D convolution and Group Normalization to enhance two 1D positional feature maps efficiently, without reducing the channel dimension. In addition, the ELA module is lightweight, so it can easily be integrated into existing convolutional neural networks. This design addresses the limited generalization of batch normalization in CA, avoids the negative impact of dimensionality reduction on channel attention, and reduces the complexity of the attention generation process.

Figure 5.

The structure of Efficient Local Attention (ELA).

The input x from the convolutional block has dimensions H × W × C, denoting the height, width, and channel dimension (i.e., the number of convolution kernels), respectively. ELA extracts feature vectors in the horizontal and vertical directions by applying strip pooling along the spatial dimensions; the elongated pooling kernels capture long-range dependencies while minimizing interference from unrelated regions during label prediction. Specifically, each channel of x is encoded along the horizontal and vertical coordinates using pooling kernels of size (H, 1) and (1, W), corresponding to the i and j directions, respectively. The output of the c-th channel at height h is shown in Equation (11).

Similarly, the output of the c-th channel at width w is given by Equation (12).
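For readers without the original equations at hand, the strip poolings described for Equations (11) and (12) are commonly written as follows in the Coordinate Attention literature that ELA builds on. The symbols $z^{h}_{c}$ and $z^{w}_{c}$ are our own notation and may differ from the paper's.

$$
z^{h}_{c}(h) = \frac{1}{W} \sum_{0 \le i < W} x_{c}(h, i), \qquad
z^{w}_{c}(w) = \frac{1}{H} \sum_{0 \le j < H} x_{c}(j, w)
$$

Each channel thus collapses to one vector of length H and one of length W, which is what keeps the subsequent 1D convolutions cheap.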

These two transformations aggregate features along the two spatial directions and return a pair of direction-aware feature maps, which encode informative object location features in each direction. To exploit these features efficiently, 1D convolutions with a kernel size of 5 or 7 are applied to enhance the positional information in the horizontal and vertical directions. Group Normalization [41] is then applied to the enhanced positional information to obtain the positional attention in the horizontal and vertical directions, as shown in Equations (13) and (14).

where σ is the nonlinear activation function, and the two outputs are the positional attention maps in the horizontal and vertical directions, respectively. The final output Y of the ELA module is expressed in Equation (15).
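The steps in Equations (11) to (15) can be sketched in PyTorch as below. This is a minimal illustration based on our reading of the ELA design: the same depthwise 1D convolution and Group Normalization are shared by both directions, σ is taken to be the sigmoid, and the output is the input rescaled by both attention maps. The kernel size of 7 and the 16 normalization groups are assumed defaults rather than values reported in this paper, and `ELASketch` is a hypothetical name.

```python
import torch
import torch.nn as nn


class ELASketch(nn.Module):
    """Efficient Local Attention sketch: strip pooling + 1D conv + GroupNorm."""

    def __init__(self, channels: int, kernel_size: int = 7, gn_groups: int = 16):
        super().__init__()
        self.conv = nn.Conv1d(channels, channels, kernel_size,
                              padding=kernel_size // 2, groups=channels, bias=False)
        self.gn = nn.GroupNorm(gn_groups, channels)
        self.act = nn.Sigmoid()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, h, w = x.shape
        z_h = x.mean(dim=3)  # Eq. (11): pool over width  -> (B, C, H)
        z_w = x.mean(dim=2)  # Eq. (12): pool over height -> (B, C, W)
        # Eqs. (13)-(14): 1D conv, GroupNorm, and sigmoid for each direction.
        y_h = self.act(self.gn(self.conv(z_h))).view(b, c, h, 1)
        y_w = self.act(self.gn(self.conv(z_w))).view(b, c, 1, w)
        return x * y_h * y_w  # Eq. (15): rescale the input by both attention maps


ela = ELASketch(64)
print(ela(torch.randn(2, 64, 20, 20)).shape)  # torch.Size([2, 64, 20, 20])
```

Because the convolutions are depthwise and one-dimensional, the added parameters grow only linearly with the channel count, consistent with the "minimal increase in computation" claimed for ELA.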

In YOLOv8, this paper places the ELA module after the backbone as a key component of the model. The backbone extracts image features, and the ELA module further enhances the spatial information of these features so that the model can better focus on object regions and suppress background noise.

2.5. Involution

To further improve the detection accuracy and efficiency of the model, we explored optimizations of the model architecture. In particular, we adopted Involution [42], a spatial modeling operation that inverts the design principles of convolution: its kernels are spatially specific and channel-agnostic.

Because its kernels are generated from the input feature at each spatial location and shared across channels, Involution can be embedded into existing architectures as a lightweight operator that enhances the model's visual recognition capability. We incorporate it after the backbone of YOLOv8, enabling the model to capture a wider range of spatial context while maintaining fast inference, and thus to localize and recognize objects more accurately.
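A minimal PyTorch sketch of a stride-1 Involution layer is given below, following the formulation of the Involution paper: a per-pixel K × K kernel is generated from the input by a two-layer bottleneck and shared by all channels within a group. The kernel size 7, 16 channels per group, and reduction ratio 4 are assumed defaults, not settings reported here, and `InvolutionSketch` is a hypothetical name.

```python
import torch
import torch.nn as nn


class InvolutionSketch(nn.Module):
    """Involution (stride 1): spatially specific, channel-shared kernels."""

    def __init__(self, channels: int, k: int = 7, group_channels: int = 16, reduction: int = 4):
        super().__init__()
        assert channels % group_channels == 0
        self.k = k
        self.groups = channels // group_channels
        # Kernel-generation function: C -> C/r -> G*K*K values per pixel.
        self.reduce = nn.Sequential(
            nn.Conv2d(channels, channels // reduction, 1),
            nn.BatchNorm2d(channels // reduction),
            nn.ReLU(inplace=True),
        )
        self.span = nn.Conv2d(channels // reduction, self.groups * k * k, 1)
        self.unfold = nn.Unfold(kernel_size=k, padding=(k - 1) // 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, h, w = x.shape
        kk = self.k * self.k
        # One K*K kernel per group and per spatial position.
        kernel = self.span(self.reduce(x)).view(b, self.groups, 1, kk, h, w)
        # K*K neighbourhood of every pixel, arranged by channel group.
        patches = self.unfold(x).view(b, self.groups, c // self.groups, kk, h, w)
        # Weighted sum over the neighbourhood; channels in a group share the kernel.
        return (kernel * patches).sum(dim=3).view(b, c, h, w)


inv = InvolutionSketch(64)
print(inv(torch.randn(2, 64, 20, 20)).shape)  # torch.Size([2, 64, 20, 20])
```

The large 7 × 7 window gives each pixel wide spatial context, while sharing the kernel across channels keeps the parameter count far below that of a 7 × 7 convolution.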

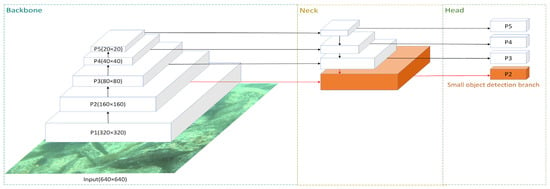

2.6. Small Object Detection Branch

Small object detection poses additional difficulties, so many algorithms are much less effective at detecting small objects than large and medium-sized ones. The main factors are as follows. First, small objects have low resolution and carry little information, so the neural network extracts few effective features from them. Second, small objects occupy a small area of the image and are susceptible to background interference, which demands high localization performance from the algorithm. Third, small objects are difficult to label and training data are limited, which leads to poor generalization. In the original YOLOv8, features from the P3, P4, and P5 layers are aggregated by the neck, after which object information and locations are predicted. For a 640 × 640 input, these three feature maps are 80 × 80, 40 × 40, and 20 × 20, respectively, but the information they contain is not sufficient to represent small objects adequately. Therefore, we add a P2 detection head to the model, giving four detection heads in total, so that the network can detect smaller objects. The P2 feature map lies in the low-level feature layer with a size of 160 × 160; it contains more detailed information, passes more shallow features to deeper layers, and enhances the expressiveness of the multi-scale feature maps extracted by the network, so that the network can better perform multi-scale object detection and localize small objects. The structure of the YOLOv8 model with the added small object detection branch is shown in Figure 6. Compared with the original YOLOv8, this model obtains more information, which results in higher accuracy.
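The feature-map sizes for the detection heads follow from strides of 4, 8, 16, and 32 pixels for P2 to P5 at a 640 × 640 input. The short sketch below (hypothetical helper names) computes the grid sizes and the number of prediction locations with and without the P2 head, which also indicates how much dense-prediction work the P2 branch adds.

```python
def grid_sizes(img_size: int, strides: tuple) -> dict:
    """Side length of each detection feature map for a square input."""
    # Stride 2**n corresponds to level Pn, and (2**n).bit_length() - 1 == n.
    return {f"P{s.bit_length() - 1}": img_size // s for s in strides}


def num_locations(img_size: int, strides: tuple) -> int:
    """Total anchor-point locations across all detection heads."""
    return sum((img_size // s) ** 2 for s in strides)


BASE = (8, 16, 32)        # P3, P4, P5 in the original YOLOv8
WITH_P2 = (4, 8, 16, 32)  # P2 added by the small object detection branch

print(grid_sizes(640, WITH_P2))
print(num_locations(640, BASE), num_locations(640, WITH_P2))
```

The P2 level alone contributes 160 × 160 locations, roughly three times as many as P3 to P5 combined, which explains why the branch benefits small objects but also raises the cost of the head.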

Figure 6.

YOLOv8 structure with the small object detection branch.

3. Experiments

3.1. Underwater Object Detection Dataset

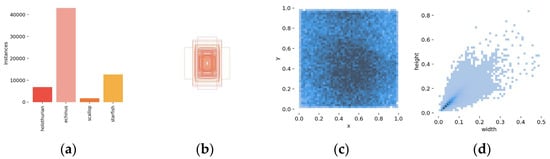

We validate our approach on DUO [43], an open-source underwater image dataset for underwater object detection. The dataset consists of 7782 accurately labeled images of four typical aquaculture organisms: holothurian, echinus, scallop, and starfish. Of these, 6671 images are used for training and 1111 for testing. Sample images from this dataset are shown in Figure 7. The DUO images exhibit typical underwater characteristics such as strong color deviation, low contrast, uneven lighting, blurring, and heavy noise, and they contain small, overlapping, and sparsely distributed objects. This makes accurate detection of the different aquaculture organisms challenging and largely reflects the problems of detecting objects in real marine environments. The number of labels per category and the height and width of the labels relative to the whole image are shown in Figure 8, which reveals that the number of objects per category is imbalanced. The uneven category distribution, the large variation in target size, and the large number of small objects make the entire dataset difficult to detect.

Figure 7.

Some sample images of the underwater object detection dataset.

Figure 8.

Analysis of the DUO dataset: (a) bar chart of the samples of each class of the training set; (b) size and quantity of the ground truth boxes; (c) the position of the center points relative to the image; (d) the ratio of height and width of the object relative to the image.

In addition, we further validate the improved model on the RUOD dataset [44], which is designed for underwater detection in general scenarios. RUOD offers large-scale data, high-quality annotations, rich object types, and diverse detection challenges, providing a basis for comprehensive and systematic evaluation. The training and test sets of RUOD contain 9800 and 4200 images, respectively, covering 10 object categories (fish, echinus, coral, starfish, holothurian, scallop, diver, cuttlefish, turtle, and jellyfish). The DUO dataset has limited scene variation, whereas most RUOD images were collected from websites, taken at different locations, and contain different backgrounds and objects. Compared with DUO, RUOD contains more underwater challenges, including haze effects, color casts, light interference, and complex marine objects, as well as almost twice as many images and more than twice as many categories. Therefore, RUOD can further test the findings of this paper.

3.2. Experimental Configuration and Environment

Our experiments are implemented in Python using the PyTorch deep learning framework on Ubuntu 20.04. The hardware configuration is detailed in Table 1. During training, the images have an input size of 640 × 640, with a total of 200 training epochs and a batch size of 16. Optimization is performed using stochastic gradient descent (SGD) with an initial learning rate (lr0) of 0.001, momentum set to 0.937, and a weight decay of 0.0005.
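In plain PyTorch, the optimizer settings listed above correspond roughly to the sketch below. The placeholder model and the `TRAIN_CFG` dictionary are hypothetical; the paper does not state which training script was used, and the learning-rate schedule and warm-up are not specified, so they are omitted.

```python
import torch
import torch.nn as nn

# Hyperparameters reported in Section 3.2 (dictionary name is hypothetical).
TRAIN_CFG = {"imgsz": 640, "epochs": 200, "batch": 16,
             "lr0": 0.001, "momentum": 0.937, "weight_decay": 0.0005}

model = nn.Conv2d(3, 16, 3, padding=1)  # placeholder standing in for RHS-YOLOv8
optimizer = torch.optim.SGD(
    model.parameters(),
    lr=TRAIN_CFG["lr0"],
    momentum=TRAIN_CFG["momentum"],
    weight_decay=TRAIN_CFG["weight_decay"],  # applied to all parameters here (simplification)
)

# One illustrative update on a tiny dummy batch (not the real 640 x 640 data).
x = torch.randn(2, 3, 32, 32)
loss = model(x).pow(2).mean()
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

Many detection training pipelines exclude biases and normalization weights from weight decay; whether that was done here is not stated.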

Table 1.

The experimental configuration.

3.3. Evaluation Metrics

To demonstrate the improvement more intuitively, the model is evaluated using FPS (frames per second), GFLOPs, precision (P), recall (R), and mean Average Precision (mAP). FPS is the number of images the model processes per second. FLOPs (floating-point operations) is the number of floating-point operations, such as additions and multiplications, performed by a model. GFLOPs is a unit of FLOPs, where 1 GFLOP is 10⁹ FLOPs. In deep learning models, most of the computation comes from convolutional layers, fully connected layers, and similar operations. GFLOPs are used to evaluate the computational complexity of the model: higher GFLOPs mean the model is more computationally intensive and may require longer inference time or more computational resources. For example, for a convolutional layer with a given input size (width, height, and number of channels), a k × k convolution kernel, and a given output size, the number of floating-point operations is given by Equation (16).

in which the product term counts the multiplications performed by each convolution kernel, and 1 is added because the convolution operation also contains an addition operation. Precision evaluates the accuracy of the model's predictions, and recall evaluates the model's ability to find the correct samples. Precision and recall are defined in Equations (17) and (18).

where TP, FP, and FN denote true positives, false positives, and false negatives, respectively. Average Precision (AP) summarizes the precision of the model over all recall levels for one category, and mAP is the mean of the AP values across all categories, measuring the overall performance of the model, as shown in Equations (19) and (20).

The precision–recall curve P(R) in Equation (19) is defined as the interpolated precision values across varying recall levels R, where P(R) corresponds to the maximum precision achieved at any recall value greater than or equal to R. The parameter n (specified in Equation (20)) represents the total number of object categories in the dataset. mAP@0.5 denotes the mean Average Precision calculated with a fixed IoU (Intersection over Union) threshold of 0.5, and mAP@0.5:0.95 measures the mean Average Precision by averaging results across multiple IoU thresholds, ranging from 0.5 to 0.95 with a step size of 0.05.
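The metrics above can be sketched in NumPy as follows. Precision and recall use the standard definitions TP/(TP + FP) and TP/(TP + FN). AP integrates the interpolated precision envelope P(R) over recall (all-point interpolation, one common convention; COCO-style evaluation samples 101 recall points, so values can differ slightly). The convolution cost helper counts multiply-accumulate operations and reports FLOPs as twice that number, which is a common convention but not necessarily the exact constant used in Equation (16). All function names are hypothetical.

```python
import numpy as np


def conv_flops(c_in, c_out, k, h_out, w_out):
    """Approximate FLOPs of a k x k convolution: 2 x multiply-accumulates."""
    macs = c_in * k * k * h_out * w_out * c_out
    return 2 * macs


def precision(tp, fp):
    return tp / (tp + fp)


def recall(tp, fn):
    return tp / (tp + fn)


def average_precision(rec, prec):
    """Area under the interpolated precision-recall curve for one class."""
    r = np.concatenate(([0.0], rec, [1.0]))
    p = np.concatenate(([1.0], prec, [0.0]))
    # P(R): maximum precision at any recall >= R (monotone envelope).
    p = np.flip(np.maximum.accumulate(np.flip(p)))
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))


def mean_ap(ap_per_class):
    """mAP: mean of per-class AP values at one IoU threshold."""
    return float(np.mean(ap_per_class))


IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)  # thresholds averaged by mAP@0.5:0.95

print(conv_flops(3, 16, 3, 640, 640) / 1e9)  # GFLOPs of a single stem-like conv
print(precision(8, 2), recall(8, 2))
print(average_precision(np.array([0.5, 1.0]), np.array([1.0, 0.5])))
```

mAP@0.5:0.95 is then obtained by computing `mean_ap` at each of the ten thresholds in `IOU_THRESHOLDS` and averaging the results, which rewards tighter localization than mAP@0.5 alone.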

4. Analysis and Discussion of Experimental Results

4.1. Ablation Experiments on the DUO Dataset

To assess the impact of the proposed enhancements, we conducted a series of ablation experiments on the DUO dataset, using the original s, m, and l versions of YOLOv8 as baselines. The results are presented in Table 2.

Table 2.

The results of the ablation experiment on the DUO dataset.

Compared with the baseline models, improving the C2f module with the Ref-Dilated module significantly reduces computation: the GFLOPs of the three baseline models decrease by 18.83%, 24.14%, and 28.7%, respectively. With YOLOv8s as the baseline, mAP@0.5 and mAP@0.5:0.95 also improve by 1.65% and 2.87%, respectively. This indicates that the Ref-Dilated module enlarges the receptive field at multiple scales and strengthens the feature extraction capability of the C2f module while substantially reducing computational cost. This lightweight and efficient core improvement meets our expectations for underwater small object detection. After Involution is introduced into the backbone network, YOLOv8l achieves modest improvements of 2.12%, 0.57%, and 0.99% in R, mAP@0.5, and mAP@0.5:0.95, respectively, without a significant increase in computation. This indicates that Involution is lighter and more efficient than traditional convolution in this model and helps balance performance and efficiency in detection tasks.
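
The GFLOPs figures above, and the role of dilation in enlarging the receptive field, can be illustrated with a worked sketch. It counts the FLOPs of a convolution layer with the convention used for Equation (16), namely C_in × k × k multiplications per output element plus one extra addition, and computes the receptive field of stacked stride-1 dilated convolutions. The function names, layer sizes, and dilation rates (1, 2, 3) are hypothetical values chosen for illustration, not the actual configuration of the Ref-Dilated block. Under this counting rule, dilation enlarges the receptive field without changing a layer's FLOPs, because the kernel still has k × k taps.

```python
def conv_flops(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> int:
    """FLOPs of one k x k convolution layer under the Equation (16) convention:
    C_in * k * k multiplications plus one extra addition per output element."""
    return (c_in * k * k + 1) * h_out * w_out * c_out


def dilated_stack_receptive_field(k: int, dilations: list[int]) -> int:
    """Receptive field (side length in pixels) of stacked stride-1 k x k convolutions.

    A k x k kernel with dilation d spans d * (k - 1) + 1 pixels, so each
    stride-1 layer widens the receptive field by d * (k - 1).
    """
    rf = 1
    for d in dilations:
        rf += d * (k - 1)
    return rf


if __name__ == "__main__":
    # Hypothetical layer: 64 -> 64 channels, 3 x 3 kernel, 80 x 80 output map.
    flops = conv_flops(c_in=64, c_out=64, k=3, h_out=80, w_out=80)
    print(f"{flops / 1e9:.3f} GFLOPs per layer, whatever the dilation rate")
    print(dilated_stack_receptive_field(3, [1, 1, 1]))  # 7: three plain 3 x 3 layers
    print(dilated_stack_receptive_field(3, [1, 2, 3]))  # 13: mixed (hybrid) rates
```

With rates (1, 2, 3), three 3 × 3 layers cover a 13 × 13 region instead of 7 × 7 at the same per-layer cost. Mixing rates in this way is the idea behind hybrid dilated convolution, which avoids the gridding gaps that repeating one identical rate can leave.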

In terms of overall performance, these changes made detection more lightweight, but the gains in detection accuracy were not sufficient. Because the input resolution is low, a large number of small objects must be matched to the detection layer corresponding to the high-resolution feature map, which makes the detection task considerably more complex. With only three detection heads, the model cannot effectively detect so many small objects, so a small object detection branch was designed. After this branch is added, P and R remain essentially unchanged, but the mAP of YOLOv8s improves by 1.47%, and the number of parameters remains lower than that of the baseline model. The small object detection branch increases the weight the model places on small objects and improves bounding-box regression for them, so that predicted boxes lie closer to the ground truth. This gain in localization accuracy does not directly affect P (the ratio of correct predictions to all predictions) or R (the ratio of correct predictions to all ground-truth objects) at a fixed matching IoU threshold, but it raises the IoU between predicted and ground-truth boxes. This increases the AP of small-object categories at high IoU thresholds, and because mAP is the average of the AP values of all categories, the mAP metrics improve accordingly. Adding the small object detection layer therefore reduces the interference of background misdetections on small-object categories and improves the model's ability to extract small-object information.
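
The argument above, that tighter boxes raise mAP@0.5:0.95 even when P and R at IoU 0.5 are unchanged, can be checked with a small example. The sketch below assumes boxes given as (x1, y1, x2, y2) corner coordinates; the box values and helper names are hypothetical. It computes the IoU of two predictions against the same ground-truth box and counts how many of the ten thresholds 0.50, 0.55, ..., 0.95 each prediction passes.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)

IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def thresholds_passed(pred: Box, gt: Box) -> int:
    """Number of mAP@0.5:0.95 IoU thresholds at which `pred` would match `gt`."""
    value = iou(pred, gt)
    return sum(value >= t for t in IOU_THRESHOLDS)


gt = (0.0, 0.0, 10.0, 10.0)
loose = (2.0, 0.0, 12.0, 10.0)  # shifted by 2 px: IoU = 80 / 120, about 0.667
tight = (1.0, 0.0, 11.0, 10.0)  # shifted by 1 px: IoU = 90 / 110, about 0.818
print(thresholds_passed(loose, gt), thresholds_passed(tight, gt))  # 4 7
```

Both predictions are true positives at IoU 0.5, so P and R at that threshold are identical, but the better-localized box stays a true positive at 7 of the 10 thresholds instead of 4, which is what lifts mAP@0.5:0.95.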

Next, we integrated the designed HBFPN into the neck of YOLOv8, replacing the original Concat operation. This allows the model to exploit the HBFPN's fusion of high-level and deep-level features to improve detection performance. For YOLOv8l, P and R improve by 0.35% and 2.48%, and mAP@0.5 and mAP@0.5:0.95 improve by 0.23% and 1.26%, respectively. Finally, introducing ELA into the backbone further strengthens the backbone's extraction of spatial information from image features: all metrics improve by 0.1–0.2% for both the m and l models, while the small increase in computation remains within an acceptable range. The experimental data show that the HBFPN and ELA each improve detection performance when introduced, but the best results are achieved when they are combined, especially in DUO scenes with complex backgrounds and many small objects. Through multilevel feature fusion, the HBFPN integrates low-level detail features with high-level semantic features and produces more comprehensive multi-scale feature maps for object detection. Building on these multi-scale features, ELA further focuses on high-value, object-related features while suppressing background noise. It generates horizontal and vertical attention features through strip pooling, which effectively strengthens the representation of object location information in complex scenes. In contrast, coordinate attention (CA) does not balance the capture of global and local features as well as ELA does. For example, on YOLOv8l, the model incorporating ELA achieves P, R, mAP@0.5, and mAP@0.5:0.95 values that are 2.1%, 0.1%, 0.3%, and 1.4% higher, respectively, than those of the model incorporating CA. This suggests that traditional CA, although it encodes coordinate information efficiently, is more sensitive to noise in the underwater environment, whereas the ELA module is more robust to such noise without a significant increase in computation.
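
The strip-pooling idea behind ELA can be illustrated on a single-channel feature map. The sketch below is a simplified, hypothetical version in plain Python, not a faithful reimplementation of ELA: it average-pools each row and each column into two 1-D strips, passes each strip through a small 1-D convolution, applies a sigmoid to obtain row and column attention weights, and rescales every position by the product of its row and column weights. The kernel values are placeholders for learned weights, and the Group Normalization that ELA applies before the sigmoid, as well as its multi-channel grouping, are omitted for brevity.

```python
import math


def strip_attention(x: list[list[float]], kernel: list[float]) -> list[list[float]]:
    """Simplified strip-pooling attention on one H x W channel (ELA-style sketch).

    `kernel` holds odd-length 1-D weights standing in for learned parameters.
    Group Normalization and multi-channel grouping are deliberately omitted.
    """
    if len(kernel) % 2 == 0:
        raise ValueError("kernel length must be odd")
    h, w = len(x), len(x[0])
    # Strip pooling: average each row (over the width) and each column (over the height).
    row_strip = [sum(row) / w for row in x]
    col_strip = [sum(x[i][j] for i in range(h)) / h for j in range(w)]

    def conv1d(seq: list[float]) -> list[float]:
        # "Same"-size 1-D convolution (cross-correlation) with zero padding.
        pad = len(kernel) // 2
        padded = [0.0] * pad + seq + [0.0] * pad
        return [sum(kernel[t] * padded[i + t] for t in range(len(kernel)))
                for i in range(len(seq))]

    def sigmoid(v: float) -> float:
        return 1.0 / (1.0 + math.exp(-v))

    a_h = [sigmoid(v) for v in conv1d(row_strip)]  # one weight per row
    a_w = [sigmoid(v) for v in conv1d(col_strip)]  # one weight per column
    # Rescale every position by the attention of its row and of its column.
    return [[x[i][j] * a_h[i] * a_w[j] for j in range(w)] for i in range(h)]


if __name__ == "__main__":
    fmap = [[0.0, 0.0, 0.0],
            [0.0, 4.0, 0.0],
            [0.0, 0.0, 0.0]]
    out = strip_attention(fmap, kernel=[0.25, 0.5, 0.25])
    # Only the centre is non-zero; it is scaled by sigmoid(2/3) ** 2.
    print(out[1][1])
```

Because the row and column weights are computed from strips rather than from a single global average, the position of a strong response is preserved in the attention, which is the property the comparison with CA above relies on.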

The ablation results show that, with YOLOv8s as the baseline, the proposed method improves P by 2.46%, R by 1.43%, mAP@0.5 by 2.47%, and mAP@0.5:0.95 by 4.39% at the cost of only a 3.3 GFLOPs increase in computation. With YOLOv8m as the baseline, R improves by 7.12%, mAP@0.5 by 2.54%, and mAP@0.5:0.95 by 4.31%, while computation decreases by 16.4 GFLOPs, a reduction of about 9.95%. All metrics of the improved algorithm exceed those of the YOLOv8 baseline models, confirming the effectiveness of the overall improvement. The proposed method thus substantially reduces computational cost while improving detection accuracy, meeting the real-time and accuracy requirements of small object detection in underwater images.

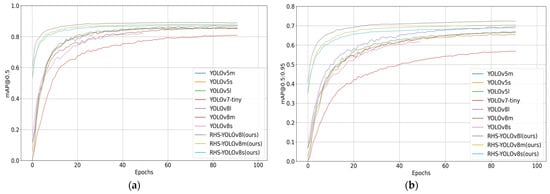

4.2. Comparison of Experimental Results on the DUO Dataset

To validate the improvements and demonstrate the advantages of the proposed model, we compared it with various other models. The comparison experiments were conducted on the DUO dataset under identical conditions, and the results are shown in Figure 9 and Table 3.

Figure 9.

Comparison of evaluation metrics: (a) mAP@0.5 changes; (b) mAP@0.5:0.95 changes.

Table 3.

The results of each model on the DUO dataset.

Figure 9 shows that all algorithms eventually converge as the number of iterations increases, and that the improved algorithm achieves higher mAP@0.5 and mAP@0.5:0.95 than the baseline model and the other models. This indicates that the improved algorithm converges in a timely manner and effectively improves detection accuracy.

As Table 3 shows, the improved model leads the other models in almost all evaluation metrics. AWF-YOLO uses an adaptive weighted feature pyramid network to enhance underwater object detection, and CEH-YOLO improves the comprehensiveness of object recognition with a composite detection module. Our algorithm, in which the Ref-Dilated convolutional block enlarges the receptive field and the HBFPN module fuses feature information from different levels, is more effective than both, improving mAP@0.5 by 11.0% and 0.4% and mAP@0.5:0.95 by 2.5% and 1.4%, respectively. Although FishDet-YOLO achieves almost the same mAP@0.5 as our model, mAP@0.5:0.95 also includes high IoU thresholds (e.g., 0.75 and 0.85), at which the regression quality of the bounding box (i.e., localization accuracy) is more critical. The small object detection branch improves the localization accuracy of small objects, raising AP at high IoU thresholds, so our model outperforms FishDet-YOLO by 3.2% in mAP@0.5:0.95. In addition, the FPS of the three versions of the improved model (RHS-YOLOv8s, m, and l) is 22.4%, 19.7%, and 29.2% higher, respectively, than that of the corresponding original models. RHS-YOLOv8s has the highest FPS, which is sufficient for demanding real-time detection. RHS-YOLOv8l has the highest detection accuracy with a slightly lower FPS; nevertheless, it retains an advantage over most of the other models, which makes it a better choice for scenarios where real-time requirements are less strict and higher detection accuracy is the priority. Overall, the proposed model is better suited to the complex environments in which underwater objects are found.
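
FPS values depend on hardware, input size, batch size, and whether pre- and post-processing are timed, so the measurement protocol matters when comparing models. The sketch below shows one simple protocol under stated assumptions: `infer` is a hypothetical callable that processes one image (a stand-in for a detector's forward pass), a few untimed warm-up calls absorb one-off costs, and FPS is the number of timed images divided by the elapsed wall-clock time. The helper `relative_gain` expresses an FPS change as a percentage of the baseline, the form in which the FPS improvements above are stated.

```python
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def measure_fps(infer: Callable[[T], object], images: Sequence[T], warmup: int = 10) -> float:
    """Images processed per second by `infer`, excluding `warmup` untimed calls."""
    if not images:
        raise ValueError("need at least one image")
    for i in range(warmup):
        infer(images[i % len(images)])  # absorb one-off costs such as lazy initialization
    start = time.perf_counter()
    for image in images:
        infer(image)
    elapsed = time.perf_counter() - start
    return len(images) / elapsed


def relative_gain(new: float, base: float) -> float:
    """Percentage change of `new` relative to `base` (e.g. improved vs. original FPS)."""
    return (new - base) / base * 100.0


def dummy_detector(image: list[int]) -> int:
    """Hypothetical stand-in for a detector's forward pass."""
    return sum(v * v for v in image)


if __name__ == "__main__":
    data = [list(range(1000))] * 200
    print(f"{measure_fps(dummy_detector, data):.1f} FPS")
```

When a model runs on a GPU, execution is asynchronous, so the device must be synchronized (for example with `torch.cuda.synchronize()` in PyTorch) before the clock is read; otherwise the measured time can be far too short.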

4.3. Validation Experiments on the RUOD Dataset

We further trained and tested the proposed model on the RUOD dataset to demonstrate its effectiveness; the results are shown in Table 4. On this more challenging dataset, Faster R-CNN, RetinaNet, and YOLOv5 fail to strike a good balance among computational cost, detection accuracy, and image processing time. YOLOv7-tiny has low GFLOPs and a high FPS, but poor P, R, and mAP. The original YOLOv8 models achieve fair detection accuracy and FPS, but at a higher computational cost.

Table 4.

The results of each model on the RUOD dataset.

By contrast, the proposed model maintains a lower computational cost and a higher FPS. Taking RHS-YOLOv8l as an example, compared with the original YOLOv8l it reduces computation by 9.95% (16.4 GFLOPs), improves mAP@0.5 by 1.60%, and improves FPS by 10.59%. The improved model thus performs well on both the DUO and RUOD datasets, indicating good generalization and reliability.

4.4. Visualization and Analysis

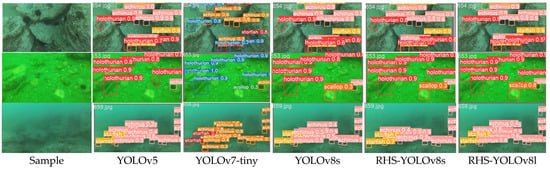

To further validate the model, we sampled and tested underwater images from several different scenarios. The visualization results on the DUO dataset are shown in Figure 10, where the leftmost column shows the test samples and the columns to the right show the detection results of each model.

Figure 10.

Visual comparison of object detection effects: the three rows of results are for scenes with occluded objects, scenes with complex backgrounds, and scenes with a high concentration of small objects, respectively.

As shown in Figure 10, YOLOv5, YOLOv7-tiny, and YOLOv8 miss objects in the scene where targets are occluded, whereas the improved model recognizes them. In the scene with a complex background, YOLOv5 and YOLOv8s miss the larger but less distinctive scallops and the smaller sea cucumbers whose color is close to that of the silty seabed, and their detection accuracy is low; both the s and l versions of the improved model detect these objects, with the l version achieving higher accuracy. In the scene with dense small objects, RHS-YOLOv8l improves small-object detection accuracy by 15%, 10%, and 6% compared with YOLOv5, YOLOv7-tiny, and YOLOv8s, respectively. We further quantified the effect of background complexity on detection accuracy by defining a background complexity score based on the texture complexity, color diversity, and background object density of each image, and by analyzing the test samples in groups. The backgrounds of the three selected test samples are, in order, of low, high, and medium complexity. In the low-complexity group, the confidence scores of our model's prediction boxes differ little from those of the other algorithms (by 0–0.2). In the medium-complexity group, the difference reaches 0.2–0.3 (e.g., for sea urchins), and in the second group, which has the most complex background, it reaches 0.5–0.8. The detection performance of all models therefore decreases as the background complexity score increases, but the decrease for RHS-YOLOv8 is markedly smaller than for YOLOv5, YOLOv7-tiny, and the YOLOv8 baseline. In particular, in the group with the highest background complexity, the confidence score of RHS-YOLOv8l's prediction boxes remains at 0.8, well above the 0.3 of the baseline model. This result indicates that the Ref-Dilated block and HBFPN effectively strengthen feature capture for objects while suppressing noise from complex backgrounds, giving the model strong adaptability to complex background interference.

In summary, the improved model achieves a better overall detection effect, is more sensitive to small objects than the other models and the original model, and is better at recognizing blurred and overlapping objects. RHS-YOLOv8s sacrifices some accuracy for a lighter and more efficient detection process, whereas RHS-YOLOv8l trades additional computation for higher detection accuracy. The appropriate model can therefore be selected according to the requirements of each scenario.

5. Discussion

To address the high computational cost of detecting small underwater objects in complex environments while maintaining accuracy, this paper proposes RHS-YOLOv8, a lightweight detection algorithm based on YOLOv8, and validates it on the DUO and RUOD datasets. The key improvements are as follows: (1) replacing the standard bottleneck block with a Ref-Dilated block to expand the convolutional receptive field and reduce computational cost; (2) integrating a lightweight Involution block to reduce inter-channel redundancy; (3) adding a small object detection branch at low-level feature layers to enhance generalization; (4) introducing a novel fusion module (HBFPN) to optimize feature combination; and (5) employing the ELA attention mechanism to improve spatial feature extraction. On the DUO dataset, these modifications reduced computational cost by 9.95% (from 164.8 to 148.4 GFLOPs) while improving mAP@0.5 and mAP@0.5:0.95 by 2.54% (to 0.888) and 4.31% (to 0.726), respectively.

However, this study has limitations. First, the dataset suffers from insufficient sample size and limited detection scenarios, potentially affecting model generalizability. Second, the native underwater images in the dataset exhibit quality issues (e.g., low contrast and light distortion), which may degrade detection performance. Third, while the model achieves the lightweight design goals, its compatibility and adaptability for real-world underwater embedded systems (e.g., cross-platform deployment and real-time inference under resource constraints) remain unverified through sufficient experiments.

6. Conclusions

This work presents RHS-YOLOv8, a lightweight detection framework that balances computational efficiency and accuracy for underwater small object detection. By optimizing the network architecture through multi-scale feature extraction, redundancy reduction, and attention mechanisms, the model achieves a 9.95% reduction in computational load while improving detection accuracy. Its efficiency and performance make it suitable for deployment on underwater platforms (e.g., unmanned vehicles and observation systems) for real-time marine organism monitoring.

Future research will focus on three directions:

- Dataset enhancement: expanding sample diversity, particularly for small objects and complex underwater environments, and incorporating data from varied sea conditions (depth, visibility, and lighting) to improve robustness.

- Image preprocessing: integrating underwater-specific enhancement techniques (e.g., CLAHE and color correction based on optical physics models) to address image quality challenges.

- Deployment optimization: accelerating model inference via tools like TensorRT or ONNX Runtime, and enabling cross-platform compatibility (GPU/ARM architectures) for real-time applications in resource-constrained underwater devices.

Author Contributions

Conceptualization, Y.W. and J.T.; methodology, Y.W.; software, W.W.; validation, Y.W., J.T. and W.W.; formal analysis, D.Y.; investigation, D.Y.; resources, S.H.; data curation, S.H.; writing—original draft preparation, Y.W.; writing—review and editing, Y.W.; visualization, Y.W.; supervision, Y.W.; project administration, J.T.; funding acquisition, J.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Postgraduate Cultivating Fund of Jianghan University (301004310001) and Innovation Graduate Research Fund of Jianghan University (KYCXJJ202320).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used in the paper can be downloaded here: https://github.com/chongweiliu/DUO (accessed on 16 October 2021).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lee, D.; Kim, G.; Kim, D.; Myung, H.; Choi, H.T. Vision-based object detection and tracking for autonomous navigation of underwater robots. Ocean Eng. 2012, 48, 59–68.

- Xu, S.; Zhang, M.; Song, W.; Mei, H.; He, Q.; Liotta, A. A systematic review and analysis of deep learning-based underwater object detection. Neurocomputing 2023, 527, 204–232.

- Ødegård, Ø.; Mogstad, A.A.; Johnsen, G.; Sørensen, A.J.; Ludvigsen, M. Underwater hyperspectral imaging: A new tool for marine archaeology. Appl. Opt. 2018, 57, 3214–3223.

- Hu, X.; Liu, Y.; Zhao, Z.; Liu, J.; Yang, X.; Sun, C.; Chen, S.; Li, B.; Zhou, C. Real-time detection of uneaten feed pellets in underwater images for aquaculture using an improved YOLO-V4 network. Comput. Electron. Agric. 2021, 185, 106135.

- Cho, S.H.; Jung, H.K.; Lee, H.; Rim, H.; Lee, S.K. Real-time underwater object detection based on DC resistivity method. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6833–6842.

- Mosk, A.P.; Lagendijk, A.; Lerosey, G.; Fink, M. Controlling waves in space and time for imaging and focusing in complex media. Nat. Photonics 2012, 6, 283–292.

- Chiang, J.Y.; Chen, Y.C. Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 2011, 21, 1756–1769.

- Lucieer, V.; Hill, N.A.; Barrett, N.S.; Nichol, S. Do marine substrates ‘look’ and ‘sound’ the same? Supervised classification of multibeam acoustic data using autonomous underwater vehicle images. Estuar. Coast. Shelf Sci. 2013, 117, 94–106.

- Ho, M.; El-Borgi, S.; Patil, D.; Song, G. Inspection and monitoring systems subsea pipelines: A review paper. Struct. Health Monit. 2020, 19, 606–645.

- Kim, D.; Lee, D.; Myung, H.; Choi, H.T. Object detection and tracking for autonomous underwater robots using weighted template matching. In Proceedings of the 2012 Oceans-Yeosu, Yeosu, Republic of Korea, 21–24 May 2012; pp. 1–5.

- Saini, A.; Biswas, M. Object detection in underwater image by detecting edges using adaptive thresholding. In Proceedings of the 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 23–25 April 2019; pp. 628–632.

- Priyadharsini, R.; Sharmila, T.S. Object detection in underwater acoustic images using edge based segmentation method. Procedia Comput. Sci. 2019, 165, 759–765.

- Hu, J.; Jiang, Q.; Cong, R.; Gao, W.; Shao, F. Two-branch deep neural network for underwater image enhancement in HSV color space. IEEE Signal Process. Lett. 2021, 28, 2152–2156.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision 2015, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision 2017, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Afif, M.; Ayachi, R.; Said, Y.; Pissaloux, E.; Atri, M. An evaluation of RetinaNet on indoor object detection for blind and visually impaired persons assistance navigation. Neural Process. Lett. 2020, 51, 2265–2279.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Du, L.; Li, L.; Wei, D.; Mao, J. Saliency-guided single shot multibox detector for object detection in SAR images. IEEE Trans. Geosci. Remote Sens. 2019, 58, 3366–3376.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Wu, W.; Liu, H.; Li, L.; Long, Y.; Wang, X.; Wang, Z.; Li, J.; Chang, Y. Application of local fully Convolutional Neural Network combined with YOLO v5 algorithm in small object detection of remote sensing image. PLoS ONE 2021, 16, e0259283.

- Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision 2017, Venice, Italy, 22–29 October 2017.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

- Lei, F.; Tang, F.; Li, S. Underwater target detection algorithm based on improved YOLOv5. J. Mar. Sci. Eng. 2022, 10, 310.

- Yan, J.; Zhou, Z.; Zhou, D.; Su, B.; Xuanyuan, Z.; Tang, J.; Lai, Y.; Chen, J.; Liang, W. Underwater object detection algorithm based on attention mechanism and cross-stage partial fast spatial pyramidal pooling. Front. Mar. Sci. 2022, 9, 1056300.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision 2018, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Zhang, M.; Wang, Z.; Song, W.; Zhao, D.; Zhao, H. Efficient Small-Object Detection in Underwater Images Using the Enhanced YOLOv8 Network. Appl. Sci. 2024, 14, 1095.

- Chen, J.; Kao, S.H.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

- Srinivas, A.; Lin, T.Y.; Parmar, N.; Shlens, J.; Abbeel, P.; Vaswani, A. Bottleneck transformers for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 19–25 June 2021; pp. 16519–16529.

- Ultralytics. Track and Count Objects Using YOLOv8. Roboflow. Available online: https://blog.roboflow.com/yolov8-tracking-and-counting/#object-detection-with-yolov8 (accessed on 24 May 2024).

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

- Yu, F. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Cai, Z.; Ding, X.; Shen, Q.; Cao, X. RefConv: Re-parameterized refocusing convolution for powerful ConvNets. arXiv 2023, arXiv:2310.10563.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616.

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to upsample by learning to sample. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2023, Paris, France, 2–3 October 2023; pp. 6027–6037.

- Xu, W.; Wan, Y. ELA: Efficient Local Attention for Deep Convolutional Neural Networks. arXiv 2024, arXiv:2403.01123.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13713–13722.

- Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision 2018, Munich, Germany, 8–14 September 2018; pp. 3–19.

- Li, D.; Hu, J.; Wang, C.; Li, X.; She, Q.; Zhu, L.; Zhang, T.; Chen, Q. Involution: Inverting the inherence of convolution for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 19–25 June 2021; pp. 12321–12330.

- Liu, C.; Li, H.; Wang, S.; Zhu, M.; Wang, D.; Fan, X.; Wang, Z. A dataset and benchmark of underwater object detection for robot picking. In Proceedings of the 2021 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shenzhen, China, 5–9 July 2021; pp. 1–6.

- Fu, C.; Liu, R.; Fan, X.; Chen, P.; Fu, H.; Yuan, W.; Zhu, M.; Luo, Z. Rethinking general underwater object detection: Datasets, challenges, and solutions. Neurocomputing 2023, 517, 243–256.

- Guo, Q.; Wang, Y.; Zhang, Y.; Qin, H.; Qi, H.; Jiang, Y. AWF-YOLO: Enhanced Underwater Object Detection with Adaptive Weighted Feature Pyramid Network. Complex Eng. Syst. 2023, 3, 16.

- Zhao, Y.; Sun, F.; Wu, X. FEB-YOLOv8: A multi-scale lightweight detection model for underwater object detection. PLoS ONE 2024, 19, e0311173.

- Feng, J.; Jin, T. CEH-YOLO: A composite enhanced YOLO-based model for underwater object detection. Ecol. Inform. 2024, 82, 102758.

- Chen, J.; Er, M.J. Dynamic YOLO for small underwater object detection. Artif. Intell. Rev. 2024, 57, 1–23.

- Yang, C.; Xiang, J.; Li, X.; Xie, Y. FishDet-YOLO: Enhanced underwater fish detection with richer gradient flow and long-range dependency capture through Mamba-C2f. Electronics 2024, 13, 3780.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).