1. Introduction

1.1. Background

Recently, digital image integrity has become an important research area within digital content management. The integrity of image data refers to the completeness and soundness (error detection) of the content record [1].

Digital content, including text, video, and sound, is created in various fields. It is then transmitted across various network environments to repositories, which may be located on servers, in clusters, or in the cloud, for storage and use [2]. Digital content has unique and essential characteristics that make it susceptible to alteration, deletion, and corruption. Ensuring the integrity of digital content is therefore a fundamental task in information technology for safe data management [3].

The General Data Protection Regulation (GDPR) is a comprehensive European Union (EU) law protecting the privacy and personal data of individuals within the EU. It strictly regulates organizations’ collection, storage, and processing of such data [4,5]. Under the GDPR, protecting personal data against unauthorized access, alteration, or disclosure is crucial. In terms of integrity and confidentiality, data must be processed in a manner that ensures appropriate security, including protection against unauthorized or unlawful processing and accidental loss, destruction, or damage.

1.2. Motivation

The verification of data integrity is crucial for the correct use of digital content, and ensuring the efficiency of the network bandwidth used for data verification is essential [6]. However, many commercial data acquisition and storage systems lack an integrity verification function to detect content modifications such as forgery and deletion.

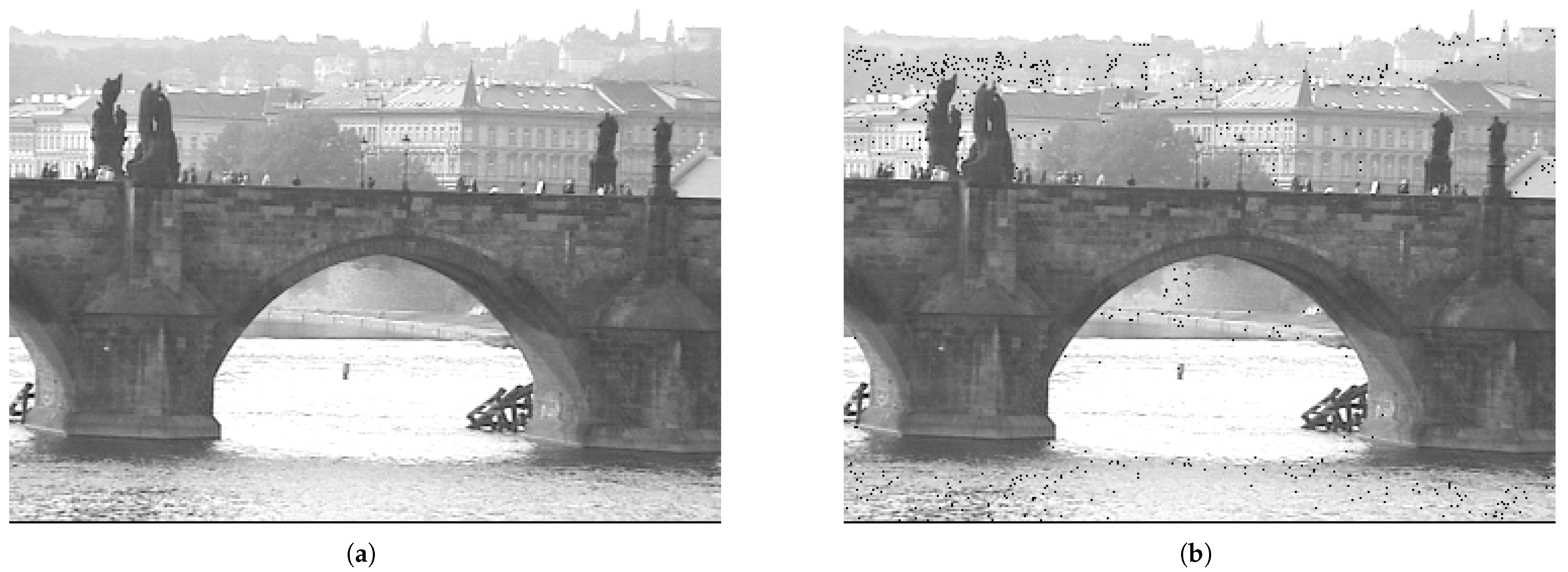

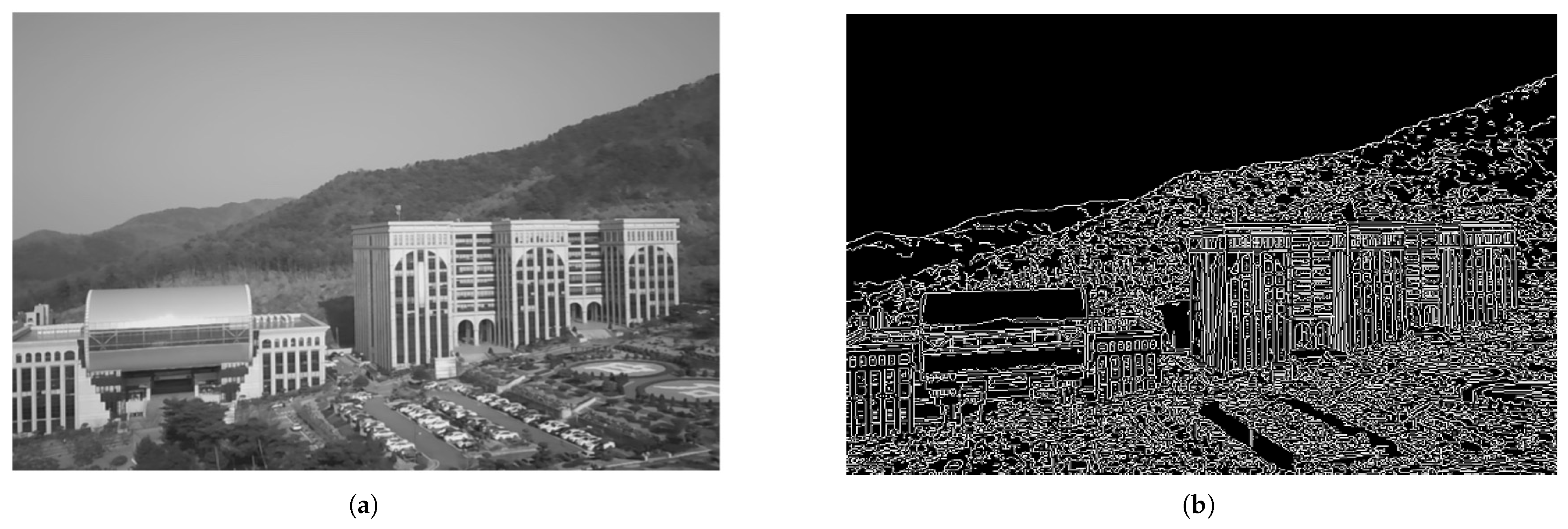

Figure 1 shows the original image and its altered version.

The purpose of this paper is to preserve and protect sensitive data from unauthorized access throughout their lifecycle while maintaining the ability to access and utilize the original image when necessary. By encoding sensitive objects, the system mitigates risks associated with unauthorized access or misuse while ensuring data integrity for authorized purposes. Annotating sensitive data in images is crucial for privacy protection and compliance with data regulations. In this process, to protect a sensitive object from exposure, such as privacy infringement, a protection technique involving secure transmission of the sensitive object is employed [7].

1.3. Contributions

In this work, we define a digital fingerprint as the output of a cryptographic hash function applied to a record of a data frame captured by a web camera. This definition captures the essential idea of uniquely identifying digital content using a cryptographic hash function. The digital fingerprint represents the unique value derived from a data frame and can originate from any device between edge devices and a server in a cloud. Its details are described in Section 2.2.

Watermarks are embedded marks that indicate the ownership of content and deter unauthorized copying or distribution [8]. An invisible watermark is embedded within the content in a way that is not readily visible. However, watermarking procedures introduce alterations to the original data, which may render such data unreliable for use as forensic or legal evidence. Our experiment therefore focuses primarily on feature extraction from the original image data.

AES (Advanced Encryption Standard) is a symmetric-key encryption algorithm widely used to secure data [9]. In AES, the same key is used for both encryption and decryption. AES is considered highly secure and resistant to various forms of cryptographic attacks. The main objective of this paper, image authenticity verification, is a key requirement in the selection of the applied technology. While AES provides block-based symmetric-key encryption and decryption functions, it is difficult to perform authenticity verification using AES alone. Additionally, AES is designed to encrypt the entire image and is not suitable for composing a fingerprint.

The determination of the authenticity of digital records must maintain the continuity of evidence storage or the linkage of digital records [10]. During investigations of incidents caused by intentional contamination or accidentally exposed data, these fingerprints can be used to identify the incidents. Additionally, the logger, acting as the data creator, generates situational information by extracting the object information included in the captured video. To maintain data reliability and support authenticity verification, a function that detects whether data have been modified by an unauthorized user is required [11].

Designing an encoding and decoding algorithm can help preserve sensitive data that could be misused in deep-fakes. It can also help verify the authenticity of the original data and detect any unauthorized modifications. This paper presents a privacy protection method based on XOR operation, which offers several advantages, including simple and efficient computation, reversibility, enhanced security through the use of keys, diverse applicability, and ease of integration with additional security techniques.

The contributions of this paper are as follows.

We design an XOR encoding scheme with a filter to securely and efficiently handle both encoding and decoding operations within an edge device.

We apply the fingerprint of an image as the value of a leaf node in the hash tree.

We evaluate the performance of the data delivery method using a prototype based on an edge device.

2. Related Works

To verify the integrity of video data, there are methods such as a hash function, hash tree, digital signature, and blockchain [12,13,14,15]. In this section, we discuss the methods applied in this paper.

2.1. Hash Function

A hash function is a function that takes a string of arbitrary length as an argument and computes a value of fixed length n. The resulting value is called the hash value of the given string [16]. A cryptographic hash function is a type of hash function whose properties, namely preimage resistance, second-preimage resistance, and collision resistance, make it difficult to find the relationship between the hash value and the original input value [16,17].

For large amounts of data, such as sets of images, ordered sequences of data blocks with variable sizes can be processed by generating hash values. Conventional techniques for computing a hash value over a sequence of data blocks involve concatenating the blocks in their specified order, treating the resulting concatenated data as a single input to a cryptographic hash function. As a result, the precise computation of the overall hash value requires the data blocks to be available in their original, sequential order.

SHA-256 (Secure Hash Algorithm 256-bit) is a member of the SHA-2 family and produces a 256-bit (32-byte) hash value. It has very strong collision resistance, which means that the probability of two different inputs producing the same hash value is extremely low. From the perspective of preimage resistance, SHA-256 is designed to be a one-way function: given a hash output, it is computationally infeasible to find an input that produces it. From the perspective of second-preimage resistance, given an input and its corresponding hash, it is computationally infeasible to find a different input that produces the same hash output.

SHA-3 (Secure Hash Algorithm 3) is the latest member of the Secure Hash Algorithm family, standardized by the National Institute of Standards and Technology (NIST). It is a cryptographic hash function designed to generate a fixed-size output (a hash) from an input of arbitrary size, supporting data integrity, security, and authentication. SHA-3 supports multiple output lengths, whereas SHA-256 produces a fixed-length output of 256 bits (32 bytes). SHA-3 tends to be slower than SHA-256 in terms of pure hashing speed due to the complexity of the Keccak construction.

SHA-256 is generally faster than SHA-3 in terms of raw performance and computation. It is highly optimized and widely used across many systems and applications. An important technique for confirming data integrity is to compute hash values for the data with a cryptographic hash function such as SHA-256, which maps an arbitrary binary string to a binary string of fixed size. A cryptographic hash function is a hash function designed so that inverting it is computationally infeasible.
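The fixed-size output and the block-ordering behavior described above can be illustrated with a minimal sketch using Python's standard `hashlib` module. The sample byte strings are hypothetical stand-ins for frame data and are not taken from the experiment.

```python
import hashlib

# Hypothetical stand-ins for the bytes of two video frames.
frame_a = b"frame-0001 pixel data"
frame_b = b"frame-0001 pixel datb"  # differs from frame_a in the last byte only

digest_a = hashlib.sha256(frame_a).hexdigest()
digest_b = hashlib.sha256(frame_b).hexdigest()
sha3_a = hashlib.sha3_256(frame_a).hexdigest()

# SHA-256 and SHA3-256 both return 256 bits, i.e. 64 hexadecimal characters.
print(len(digest_a), len(sha3_a))
# A one-byte change in the input produces a different digest.
print(digest_a != digest_b)

# Hashing ordered blocks incrementally equals hashing their concatenation,
# so the blocks must be supplied in their original order.
h = hashlib.sha256()
for block in (b"block-1", b"block-2", b"block-3"):
    h.update(block)
streamed = h.hexdigest()
concatenated = hashlib.sha256(b"block-1block-2block-3").hexdigest()
print(streamed == concatenated)
```

Because the incremental form depends on block order, supplying the same blocks in a different order would generally yield a different digest.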

Table 1 summarizes the key parameters and characteristics of SHA-256 and SHA-3 hash functions.

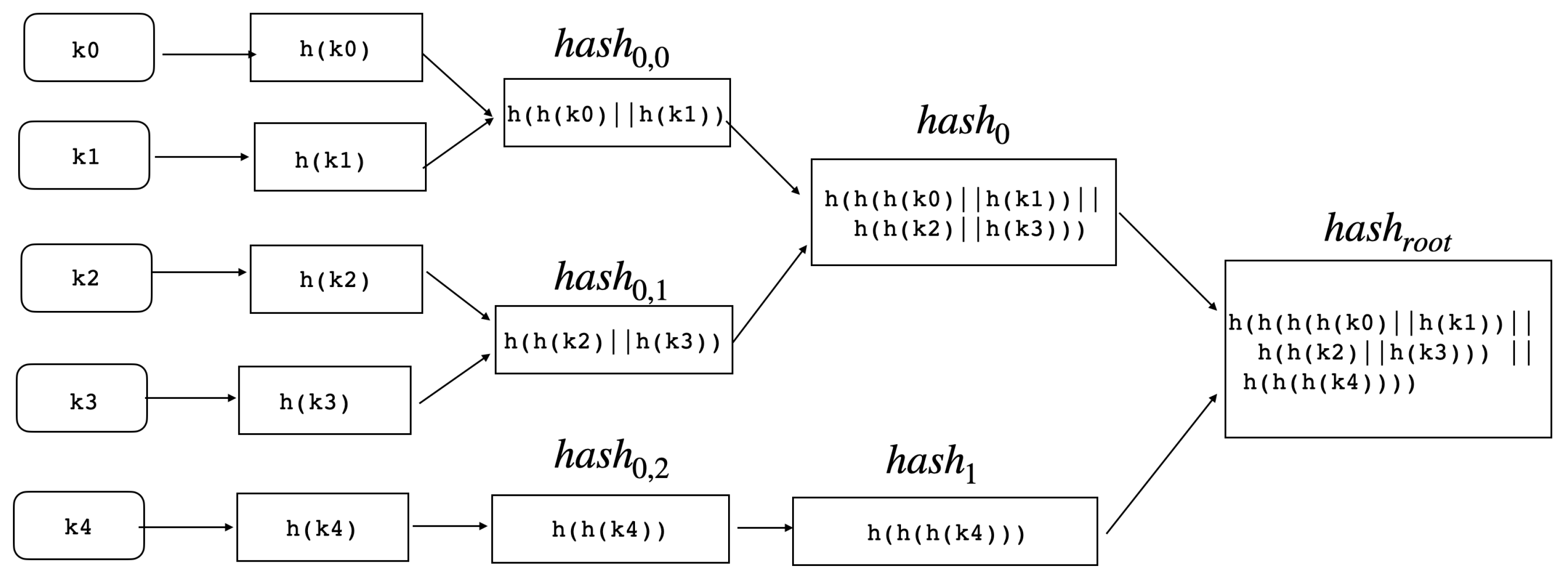

2.2. Hash Tree

A hash tree is a hierarchical data structure, where each leaf node represents a data block and is labeled with its corresponding cryptographic hash [18]. In a blockchain, a hash tree enables efficient data integrity verification without requiring the download of the entire blockchain. Each block contains a root hash, a cryptographic hash of all its transactions. The hash tree provides a tamper-proof way to summarize and verify the transactions within a block [19]. This allows for the efficient verification of whether a specific transaction is included in a block without downloading the entire block. For instance, a Merkle tree is a tree data structure where each leaf node is labeled with the cryptographic hash of a data block, and each non-leaf node is labeled with the cryptographic hash of its children’s labels. The hash tree used for integrity verification is created and used as a Merkle tree with a height of $O(\log n)$ and $O(n)$ nodes, for the given n verification target data. The Merkle tree is also used in blockchain as a technique to ensure that block data have not been changed or damaged since they were recorded [20]. This allows the efficient and secure verification of the contents of large data structures.

Figure 2 describes the structure of a hash tree whose leaves are five hash values. Each internal node is the result of hashing the concatenation of its two child values, and the topmost node is the root of the hash tree given its leaves.

To verify the data integrity in the proposed method, we apply the hash tree to find whether the data frame has been tampered with or not. In the case of receiving an image that has been tampered with, the part of the image with tampering is detected by the verification method using the hash tree.
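The following Python sketch illustrates how a root hash over five leaf hashes can be built and how a modified frame changes the root. It is a sketch under stated assumptions rather than the implementation used in this paper: the function names are hypothetical, and the rule of promoting an unpaired node unchanged to the next level is an assumption, since the handling of odd levels is not specified here.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Return the root of a binary hash tree over the given leaf hashes.

    Assumption: an unpaired node at the end of a level is promoted unchanged.
    """
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = list(leaves)
    while len(level) > 1:
        nxt = []
        # Hash the concatenation of each adjacent pair of nodes.
        for i in range(0, len(level) - 1, 2):
            nxt.append(sha256(level[i] + level[i + 1]))
        if len(level) % 2 == 1:
            nxt.append(level[-1])
        level = nxt
    return level[0]


# Five hypothetical frames, matching the five leaves of the example tree.
frames = [f"frame-{i}".encode() for i in range(5)]
leaves = [sha256(f) for f in frames]
root = merkle_root(leaves)

# Tamper with one frame and rebuild the tree on the verifier side.
tampered = list(frames)
tampered[3] = b"frame-3-modified"
tampered_leaves = [sha256(f) for f in tampered]
tampered_root = merkle_root(tampered_leaves)

print(root != tampered_root)  # a root mismatch signals an integrity violation
changed = [i for i, (a, b) in enumerate(zip(leaves, tampered_leaves)) if a != b]
print(changed)                # indices of the frames whose leaf hashes differ
```

Here the modified frame is located by scanning the leaves for simplicity; a verifier could instead descend from the root and follow only the mismatching child at each level.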

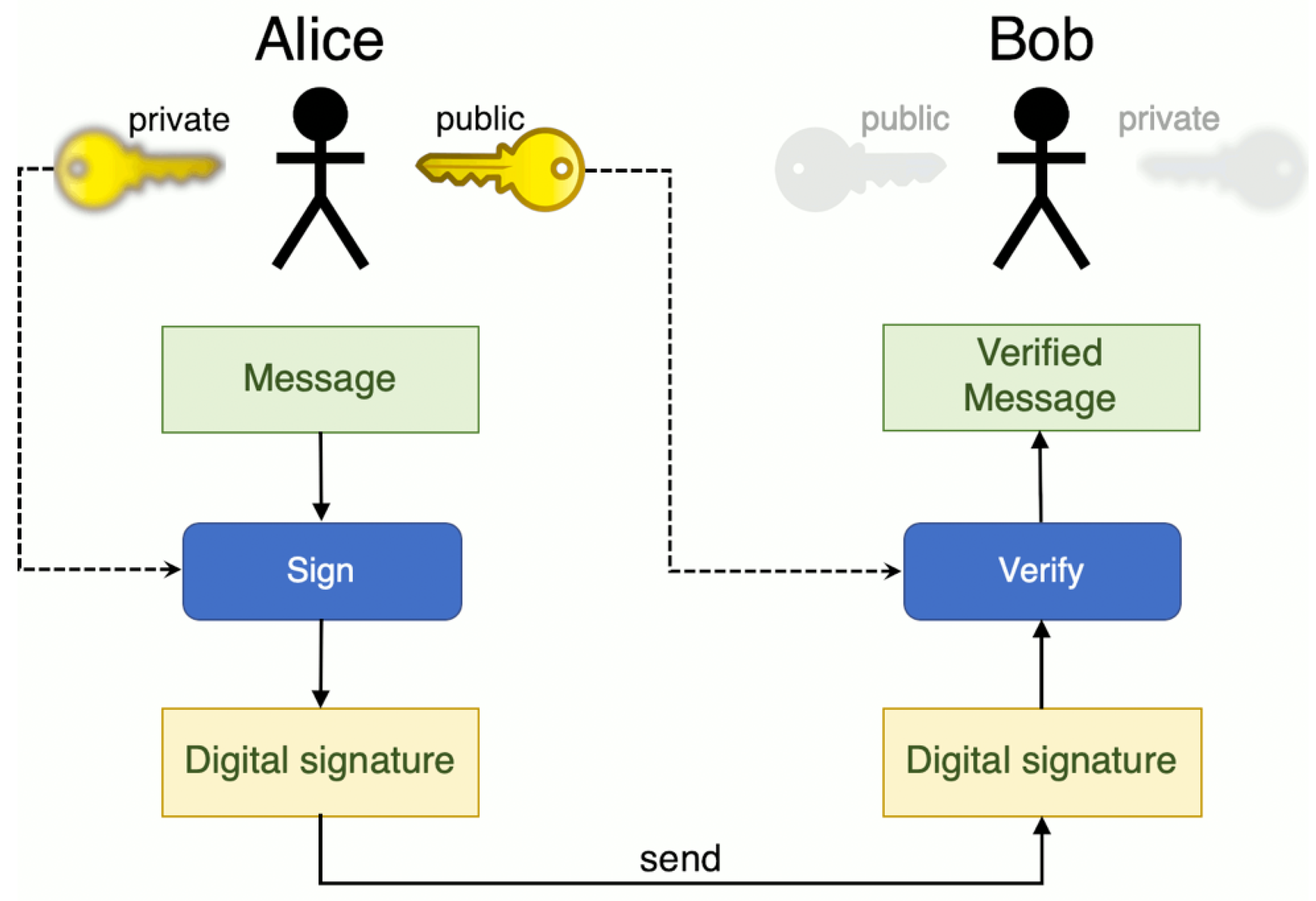

2.3. Digital Certificate

Public Key Infrastructure (PKI) is a framework based on the X.509 standard that provides a chain of trust. PKI uses public-key cryptography to authenticate users and systems [21]. It relies on digital certificates issued by trusted certificate authorities (CAs). PKI is a combination of policies, procedures, and technologies for the secure exchange of digital information online, using algorithms to protect messages and files and ensure delivery to the intended recipient. PKI authentication involves verifying the identity of users and systems by a trusted third-party certification authority. This centralized authority manages key generation, renewal, and revocation based on digital certificates. PKI employs two types of cryptographic keys: public and private keys. Together, these keys ensure the confidentiality, integrity, and authenticity of communications over the internet.

Figure 3 illustrates the process of signing and verifying a message between Alice and Bob. Alice generates a public–private key pair and sends the public key to Bob, who then uses it to verify the received message.
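The sign-and-verify exchange between Alice and Bob can be sketched with textbook RSA on deliberately tiny primes. This is an illustration of the principle only: the parameters are hypothetical and insecure, no padding is used, and the signature algorithm actually used with the key pair in this paper is not specified here.

```python
import hashlib

# Textbook RSA with tiny primes: for illustration only, NOT secure.
p, q = 61, 53
n = p * q                            # public modulus (3233)
e = 17                               # public exponent
d = pow(e, -1, (p - 1) * (q - 1))    # private exponent (Python 3.8+)


def digest_mod_n(message: bytes) -> int:
    # Reduce the SHA-256 digest into the range of the toy modulus.
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n


def sign(message: bytes) -> int:
    """Alice signs the message digest with her private exponent d."""
    return pow(digest_mod_n(message), d, n)


def verify(message: bytes, signature: int) -> bool:
    """Bob checks the signature with Alice's public key (n, e)."""
    return pow(signature, e, n) == digest_mod_n(message)


message = b"hash value of frame 1"
signature = sign(message)
ok = verify(message, signature)
forged = verify(message, (signature + 1) % n)  # altered signature
print(ok, forged)
```

Only the holder of the private exponent can produce a signature that verifies under the public key, which is what lets the receiver attribute the message to the sender.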

3. Methods

3.1. System Structure Design

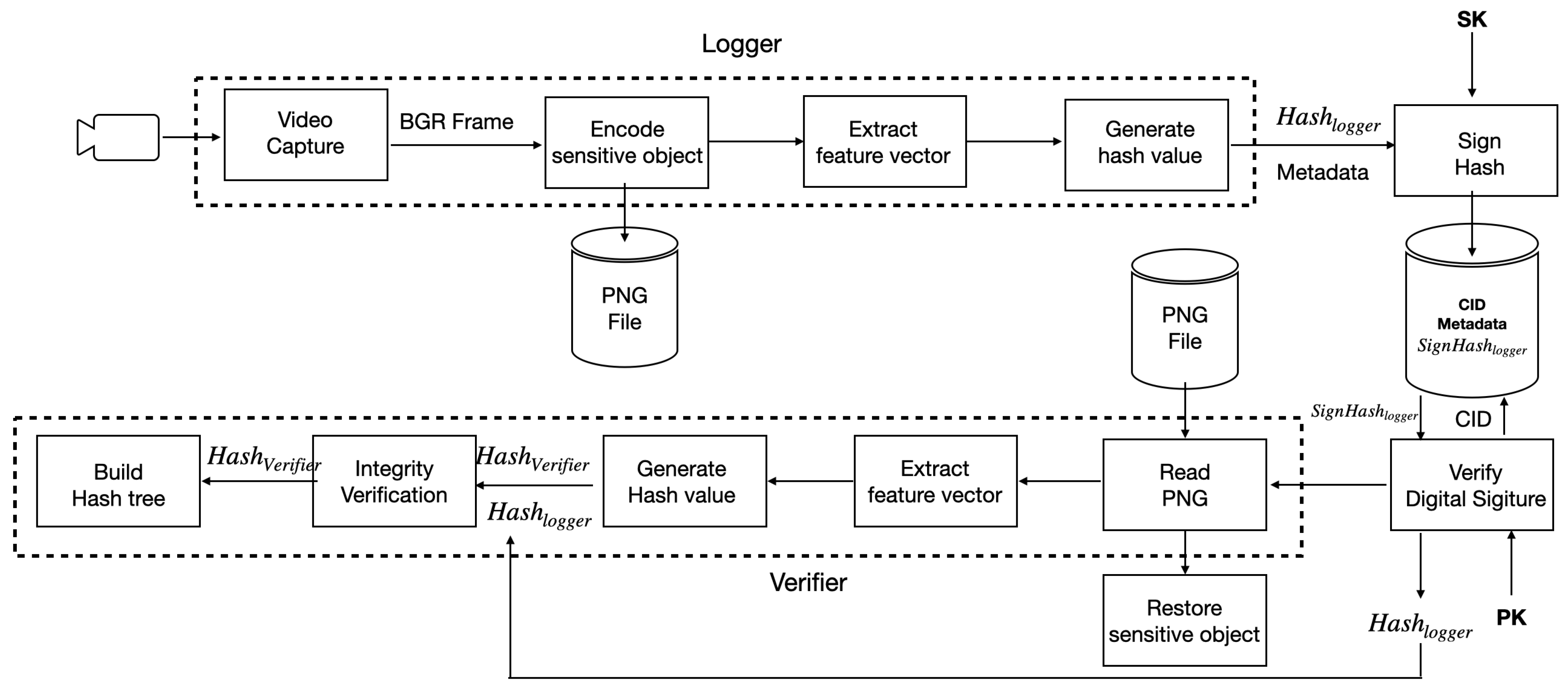

Figure 4 shows the software architecture associated with the primary functions of the logger and the verifier in the designed system. The logger generates metadata during thread-based concurrent video frame handling. The logger generates a hash value for each video frame, signs it, and stores it on the verifier. As shown in Figure 4, CID is a given video identifier. The hash value of the video frame and its signature are delivered to the verifier from the logger by the thread. The verifier performs hash verification, constructs a hash tree to verify future video changes, and performs hash tree verification. The verifier stores the integrity verification results in the repository. In the integrity verification process of Figure 4, the verified hash value obtained after verifying the video frame is passed as input to the build hash tree process, which generates the hash tree. The hash tree is then constructed in units of the number of nodes defined in the configuration. To deliver the verified hash value to the build hash tree process, a message queue, which is an inter-process communication (IPC) mechanism, is used.

For data lifecycle management, a logger plays a role as a software component that records events while a digital content producer captures an image. The producer then checks for the presence of a sensitive object within the image. If found, the sensitive object is encoded. The encoded object, along with related metadata, is securely delivered to a verifier. Finally, the original image is recovered through decoding. Further details are provided in Section 3.2.

The designed logger is used in an edge-based environment to extract features from an image for integrity verification. By extracting features at the edge, which is close to the data source, it ensures low latency in acquiring the information required for integrity checks and delivers it to the verifier, enabling real-time processing and facilitating the design of a distributed architecture. The system for verifying the integrity and authenticity of digital video data consists of a logger and a verifier. Listed below are the requirements for the logger and verifier.

The verifier receives video data from the logger, which is the module responsible for acquiring video data, stores them, and performs verification of the video data.

Communication between the logger and the verifier is based on Representational State Transfer (REST), using a request–response model, and data transmission and processing are carried out by defining message specifications in advance.

The logger and verifier operate as entities responsible for signing and verification.

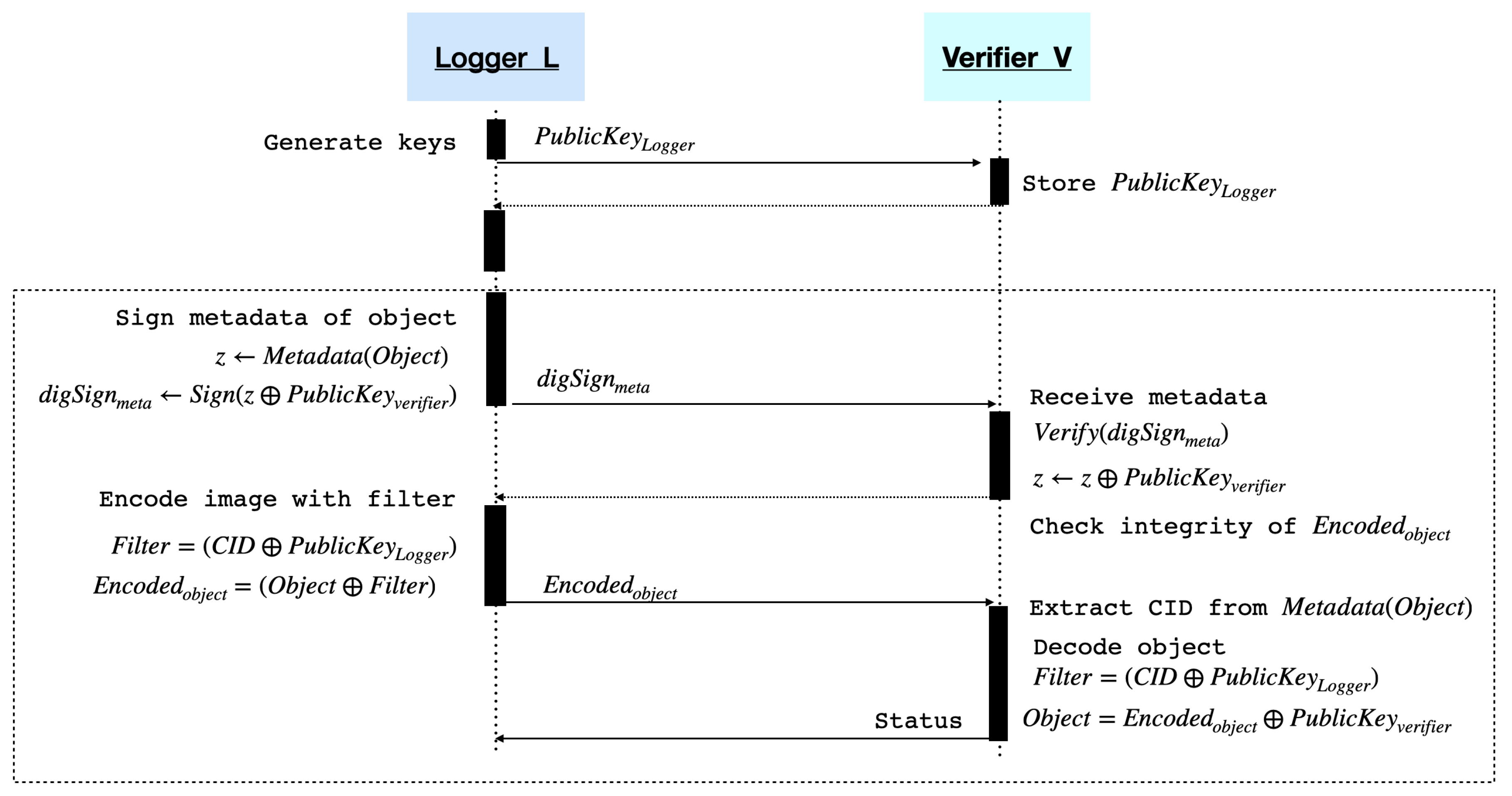

3.2. Delivery Scheme

The XOR operation is a simple and fast bitwise operation used to hide information. Because it is reversible, applying the XOR operation twice with the same key restores the original data. When metadata are transmitted, the XOR operation is used for encoding to conceal information, and it is also used for encoding sensitive objects. The filter for encoding sensitive objects uses a separately configured seed. The seed is computed using the CID of the original video data, along with a public key shared between the logger and the verifier. During verification, the verifier needs to obtain the logger’s public key to verify the hash value and decode the image with sensitive objects. This public key is received from the logger during the initial session establishment process. To ensure secure transmission of the public key, a secure channel can be established, or cryptographic techniques such as Multi-Party Computation (MPC) can be employed. This paper proposes the use of Shamir’s Secret Sharing scheme (SSS) [22] for secure public key transfer, enabling the verifier to reconstruct the public key. SSS is a cryptographic scheme for distributing a secret among multiple parties, requiring a certain number of shares to reconstruct the original secret. In this case, the secret is the public key:

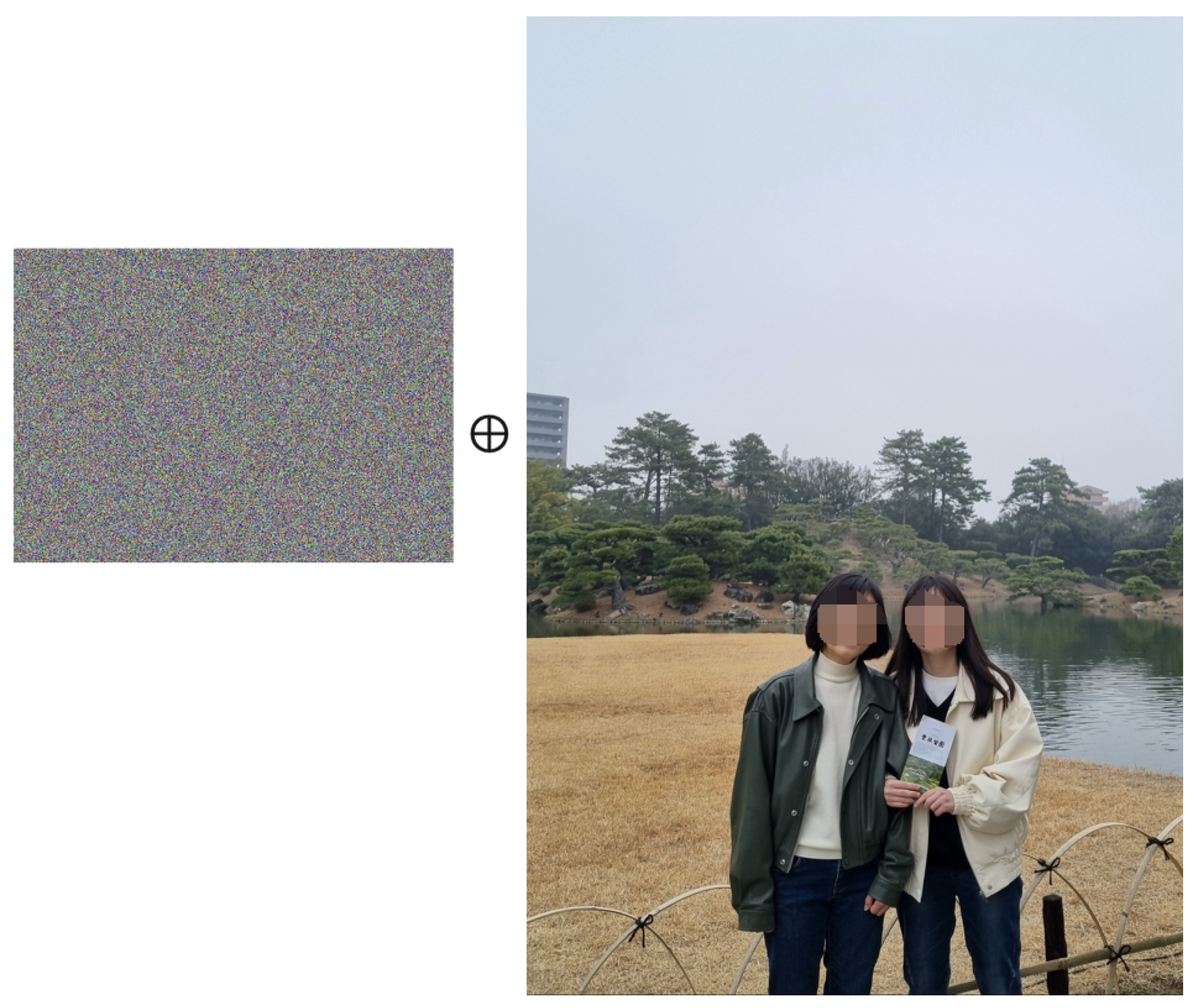

After detecting objects within the video frame, if a sensitive object is identified, it is encoded with the seed to prevent exposure, and the proposed transmission scheme is applied. The encoding works by combining the secret data with the given seed using XOR. The resulting pattern is then embedded into the video frame. To restore the encoded data, the same key is used to XOR the modified values, revealing the original information.
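The reversibility of the XOR filter can be illustrated with a short Python sketch. The seed derivation (SHA-256 over the CID followed by the public key) and the way the seed is stretched to the data length (hashing the seed with a counter) are assumptions made for this example, as are all function names and sample values.

```python
import hashlib


def derive_seed(cid: str, public_key_pem: bytes) -> bytes:
    """Assumed derivation: SHA-256 over the CID followed by the public key."""
    return hashlib.sha256(cid.encode("utf-8") + public_key_pem).digest()


def keystream(seed: bytes, length: int) -> bytes:
    """Stretch the seed to `length` bytes by hashing seed || counter (assumption)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(seed + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_filter(data: bytes, seed: bytes) -> bytes:
    """XOR data with the seed-derived filter; applying it twice restores data."""
    ks = keystream(seed, len(data))
    return bytes(a ^ b for a, b in zip(data, ks))


# Hypothetical CID, placeholder key material, and sensitive-object bytes.
seed = derive_seed("CID-0001", b"-----BEGIN PUBLIC KEY-----placeholder")
pixels = bytes(range(48))
encoded = xor_filter(pixels, seed)
decoded = xor_filter(encoded, seed)
print(decoded == pixels)
```

The same function serves for encoding and decoding, which is the property that keeps the operation cheap on an edge device.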

Figure 5 shows the encoded data. As shown in Figure 5, we use one of the images in the COCO dataset in this experiment [23].

The secure delivery of metadata enhances data privacy by configuring the PKI key pair used for signing as a filter and performing an XOR (exclusive OR) operation to verify authenticity [21,24]. A secure transmission scheme that transmits metadata after encoding them with a filter is expected to prevent privacy infringement within video data.

Figure 6 shows the logical structure of a secure delivery scheme. The security of the filter depends on the robustness of the seed. The filter used in XOR encoding is constructed using a public key and CID, which may introduce security vulnerabilities because the public key is fixed and the CID follows rules. Here, we strengthen the security of the system by utilizing SSS (Shamir’s Secret Sharing) to construct the public key for seed configuration, thereby preventing access to the public key by untrusted users. In Figure 6, a trusted validator stores the public key generated by the logger in the verifier using SSS.
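A minimal Python sketch of Shamir's Secret Sharing over a prime field is given below to show how a threshold of shares reconstructs a secret. The field prime, the (3, 5) threshold, and the function names are assumptions for illustration. A real public key is larger than this field, so it would need a larger prime or splitting into chunks.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; the field must be larger than the secret


def split_secret(secret: int, threshold: int, n_shares: int) -> list[tuple[int, int]]:
    """Split `secret` into `n_shares` points; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must lie in the field")
    if not 1 <= threshold <= n_shares:
        raise ValueError("threshold must be between 1 and n_shares")
    # Random polynomial of degree threshold - 1 with the secret as constant term.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    points = []
    for x in range(1, n_shares + 1):
        y = 0
        for c in reversed(coeffs):   # Horner evaluation of the polynomial at x
            y = (y * x + c) % PRIME
        points.append((x, y))
    return points


def recover_secret(points: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical 15-byte fragment standing in for public key material.
secret = int.from_bytes(b"public-key-part", "big")
shares = split_secret(secret, threshold=3, n_shares=5)
recovered = recover_secret(shares[:3])
recovered_other = recover_secret([shares[0], shares[2], shares[4]])
print(recovered == secret, recovered_other == secret)
```

Any three of the five shares reconstruct the same value, while fewer than three reveal nothing about it, which is why the shares can be distributed over separate paths.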

The verifier interprets the video and metadata received from the logger and stores them in a database. The stored video and metadata are then checked for integrity through the verifier. The integrity check is divided into two parts: authenticity verification through the validation of the signed hash value, and data integrity verification by comparing the hash value generated from the video with the received hash value.

3.3. Construction of Digital Fingerprint

Table 2 presents the fingerprint generation process for ensuring data integrity. Key generation and exchange are performed by the logger. The logger generates a secret key (SK) and a public key (PK), storing the secret key in a secure location and transmitting the public key to the verifier. The verifier uses the public key for subsequent operations. The logger generates a hash value for each video frame, signs it, and constructs a hash tree with 30 video frames, transmitting the root hash value. The verifier then conducts hash verification using the root hash, detecting any video frames with integrity violations.

3.4. Multi-Thread Logger

To ensure data integrity and authenticity, the logger creates digital fingerprints and signatures using hash functions. These are transmitted to a Video Management System (VMS) that is composed of a repository and a verifier. By collaborating with an object detection server, the logger extracts features from video data to construct a unique digital fingerprint. This fingerprint, along with the original data, is used for integrity verification in the VMS.

Thread safety is crucial when multiple threads access and modify shared resources concurrently. The logger runs multiple threads that exchange data through a queue, which supports their parallel processing. To ensure thread safety, the shared queue is locked with a mutex, and the lock is released after the thread has performed its designated function.
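The queue-based hand-off between threads can be sketched in Python with the standard `queue` and `threading` modules. The two-thread layout, the thread names, and the sentinel value are assumptions for illustration and do not reproduce the eight threads of the logger. Python's `queue.Queue` performs its own internal locking, so an explicit mutex is shown only for the separately shared results list.

```python
import queue
import threading

frame_queue = queue.Queue(maxsize=8)   # thread-safe queue shared by both threads
results = []
results_lock = threading.Lock()        # mutex guarding the shared results list


def capture(n_frames: int) -> None:
    """Producer: push hypothetical frame payloads into the queue."""
    for i in range(n_frames):
        frame_queue.put(f"frame-{i}".encode())
    frame_queue.put(None)              # sentinel: no more frames


def process() -> None:
    """Consumer: take frames from the queue until the sentinel arrives."""
    while True:
        frame = frame_queue.get()
        if frame is None:
            break
        with results_lock:             # lock, update, then release
            results.append(len(frame))


producer = threading.Thread(target=capture, args=(30,))
consumer = threading.Thread(target=process)
producer.start()
consumer.start()
producer.join()
consumer.join()
print(len(results))
```

The bounded queue also provides back-pressure: when the consumer falls behind, `put` blocks the producer instead of letting memory grow, which matters on memory-constrained edge devices.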

The functions of the logger are as follows:

Capturing an image: Video data are captured through a camera. The camera is connected via USB, and after reading the shape file, video capture is performed according to the shape information. The captured video data in this paper are in BGR format. BGR (Blue, Green, Red) is a color format where the color values are stored in the order of blue, green, and red.

Encoding sensitive objects: The BGR image is passed to the encoder with object detection. Encoded videos are stored in a separate folder. The file name for video storage is assigned a sequential number using the MAC address of the logger as a prefix for logger identification. Since the currently operating edge device of the logger does not have a real-time clock (RTC), sequential numbers representing logical time are used for information to determine the chronological order of file creation.

Extracting a feature vector: The BGR data of the captured video is converted to grayscale. Canny edge detection is used for constructing the feature vector of the video. A feature vector is a numerical representation of a video frame. The grayscale conversion is performed by calling the OpenCV library.

Making a hash value: Hashes are generated for the feature vectors. SHA-256 is a cryptographic hash function that generates a fixed-size 256-bit value, represented as a 64-character hexadecimal string. The hash value generated by SHA-256 serves as the digital fingerprint, ensuring the integrity of the data.

Signing the hash value: A digital signature, generated by signing the hash with a private key, confirms its origin from a specific logger.

Sending image and its metadata: Video data and metadata are transmitted to the VMS. The HTTP-based REST (Representational State Transfer) protocol is used for data transmission.
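The grayscale and hashing steps in the list above can be sketched in pure Python. This is a stand-in for the OpenCV calls used by the logger: the grayscale weights are the standard luma coefficients, the Canny edge detection step is omitted, and the four-pixel frame and function name are hypothetical.

```python
import hashlib


def bgr_to_gray(pixels: list[tuple[int, int, int]]) -> bytes:
    """Convert (B, G, R) pixels to 8-bit grayscale with standard luma weights."""
    return bytes(
        min(255, round(0.114 * b + 0.587 * g + 0.299 * r)) for b, g, r in pixels
    )


# Hypothetical 4-pixel frame in BGR order: blue, green, red, white.
frame = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255)]
gray = bgr_to_gray(frame)

# The feature bytes (here the grayscale values; the edge map is omitted)
# are hashed with SHA-256 to obtain the digital fingerprint.
fingerprint = hashlib.sha256(gray).hexdigest()
print(list(gray), len(fingerprint))
```

The fingerprint depends only on the feature bytes, so any change to a pixel that alters the extracted features changes the hash.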

3.5. Scheme for Encoding and Decoding Sensitive Objects

In this work, we encrypt sensitive data in transit and at rest. We use a designed encoding and decoding algorithm to protect data transferred and stored on servers in a cloud. When transferring data, we do not apply any cryptographic protocol like TLS/SSL designed to secure communication over a network. Instead of using the cryptographic protocol, a logger initiates a connection to the VMS server. The designed encoding and decoding algorithm consists of two phases, handshake and encoding:

(Handshake) The logger sends its digital certificate with a public key, part of a key pair in PKI, to the server. The server verifies the certificate to ensure that it is valid and issued by a trusted Certificate Authority (CA). If the certificate is valid, the server sends the logger a filter, which plays a role as a shared secret key.

(Encoding) All subsequent communication between the client and server is encrypted using the shared secret key. This encryption ensures that any transmitted data are unreadable to anyone who might intercept them.

In the proposed sensitive-data safe-transmission scheme, the object detection model detects an object in an image, and when a sensitive object is identified, the sensitive object is encoded to prevent exposure, and the proposed transmission scheme is applied. To ensure the safe delivery of sensitive objects, the PKI public key used for signatures is configured as a filter, and XOR is performed to strengthen the data-hiding function.

Data encoding is performed on sensitive objects within videos, such as human figures, using the YOLOv8 [25,26] object detection model. This paper does not cover the eight integrity check processes. The encoding and decoding processes are as follows:

The logger generates a public–private key pair based on PKI and transmits the public key to the verifier.

The logger obtains object information from video data using an object detection model.

Based on the object information of identified sensitive objects, the logger applies a filter to perform encoding.
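The last step above, applying the filter only to the detected region, can be sketched as follows. The image is modeled as a small list of grayscale rows, the bounding-box layout (x, y, width, height) and the cyclic repetition of the filter bytes are assumptions, and all values are hypothetical.

```python
def xor_region(image, box, key):
    """Return a copy of `image` with the pixels inside `box` XORed with `key`.

    image: list of rows of 0-255 integers; box: (x, y, width, height);
    key: non-empty bytes, repeated cyclically over the region (assumption).
    """
    x0, y0, w, h = box
    out = [row[:] for row in image]
    k = 0
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            out[y][x] ^= key[k % len(key)]
            k += 1
    return out


image = [[(10 * r + c) % 256 for c in range(6)] for r in range(4)]
box = (1, 1, 3, 2)                 # hypothetical detection: x, y, width, height
key = bytes([0x5A, 0xC3, 0x0F])    # hypothetical filter bytes

encoded = xor_region(image, box, key)
decoded = xor_region(encoded, box, key)
print(decoded == image)
```

Pixels outside the bounding box are left untouched, so only the sensitive object is obscured and the rest of the frame remains usable.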

4. Results

4.1. Experimental Setup

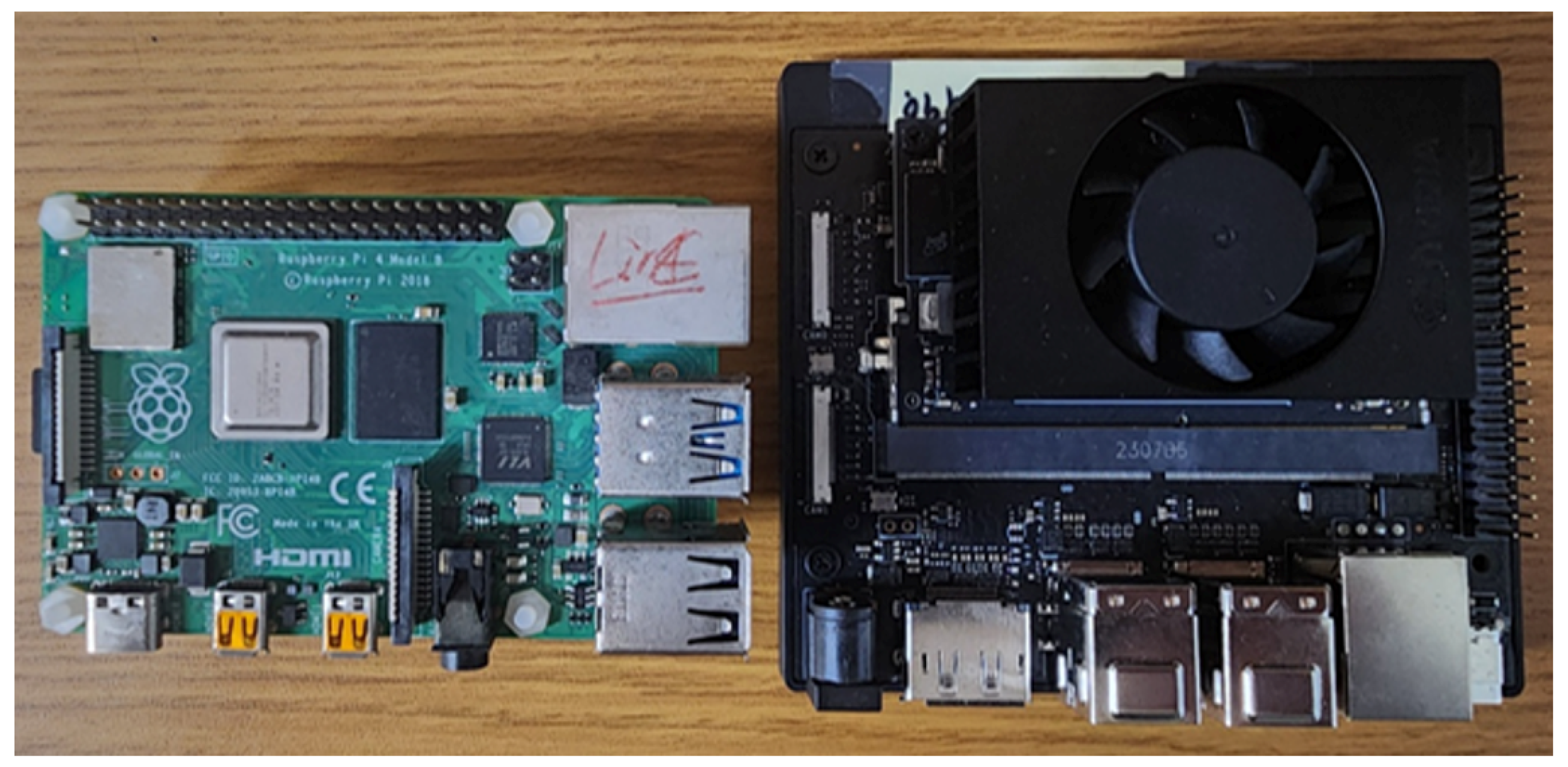

Embedded systems such as Raspberry Pi have less memory and lower processing speeds than general PCs. They are used to capture video frames in the edge device of a data creator. Therefore, memory savings and processing efficiency are essential considerations in application development for embedded systems, and this should be reflected in the implementation of the integrity verification function. Jetson Nano Orin is one of the embedded computing platforms developed by NVIDIA. The constructed hash tree is used to efficiently detect integrity violations in a data verifier. The Jetson Nano serves as an AI accelerator for object detection, employing the OpenSSL library for key generation, signing, and decryption. It utilizes the curl library to transmit video data via REST API.

Figure 7 illustrates the physical hardware configuration.

The experimental setup is shown in Table 3. Jetson Nano Orin is a compact system-on-module (SOM) from NVIDIA, designed for edge computing and AI applications. It is based on the NVIDIA Orin architecture with an ARM Cortex-A78AE CPU and Ampere GPU, and it runs JetPack 5.1.4-b17. The YOLOv8 model is used to detect objects in a video frame, and the object detector runs on the Jetson Nano Orin because it supports CUDA. The Raspberry Pi is a series of small, affordable, single-board computers (SBCs) developed by the Raspberry Pi Foundation; a Raspberry Pi 4 is used for running the logger, which uses a webcam connected via USB.

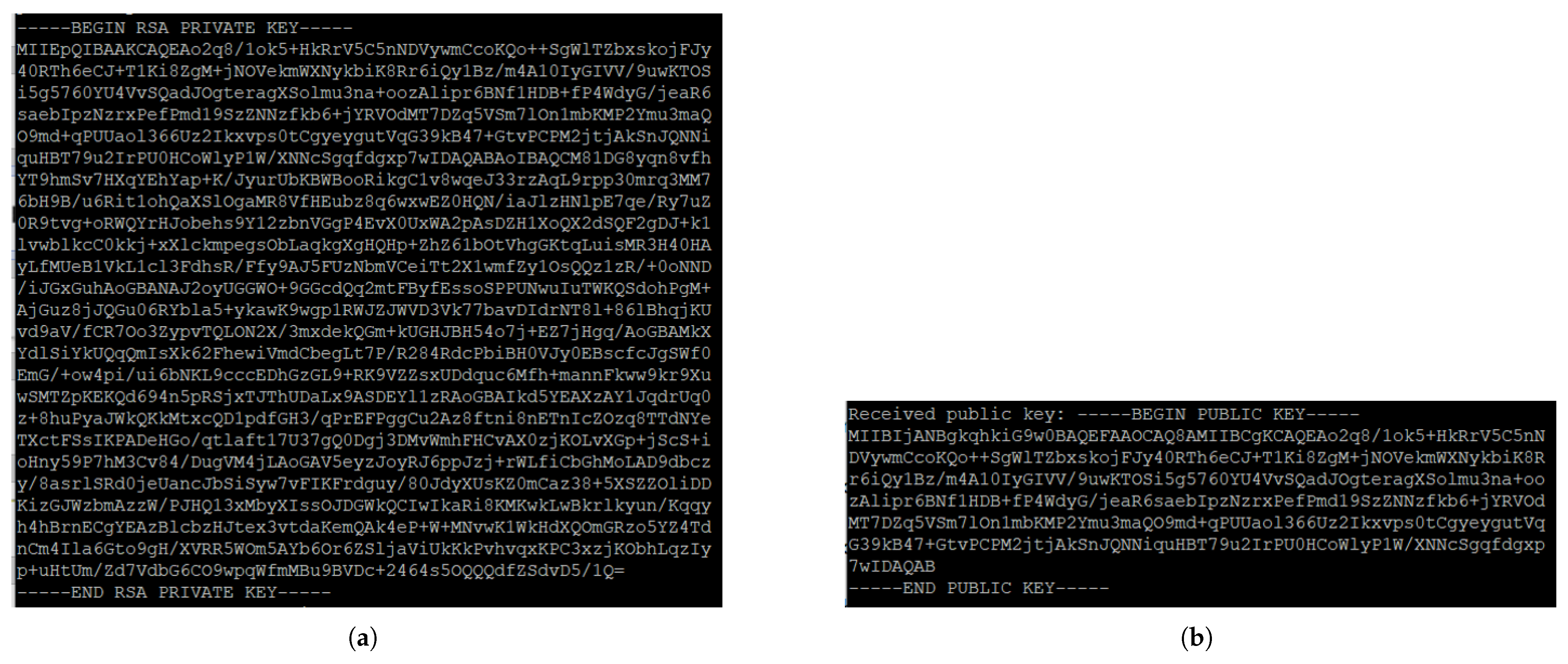

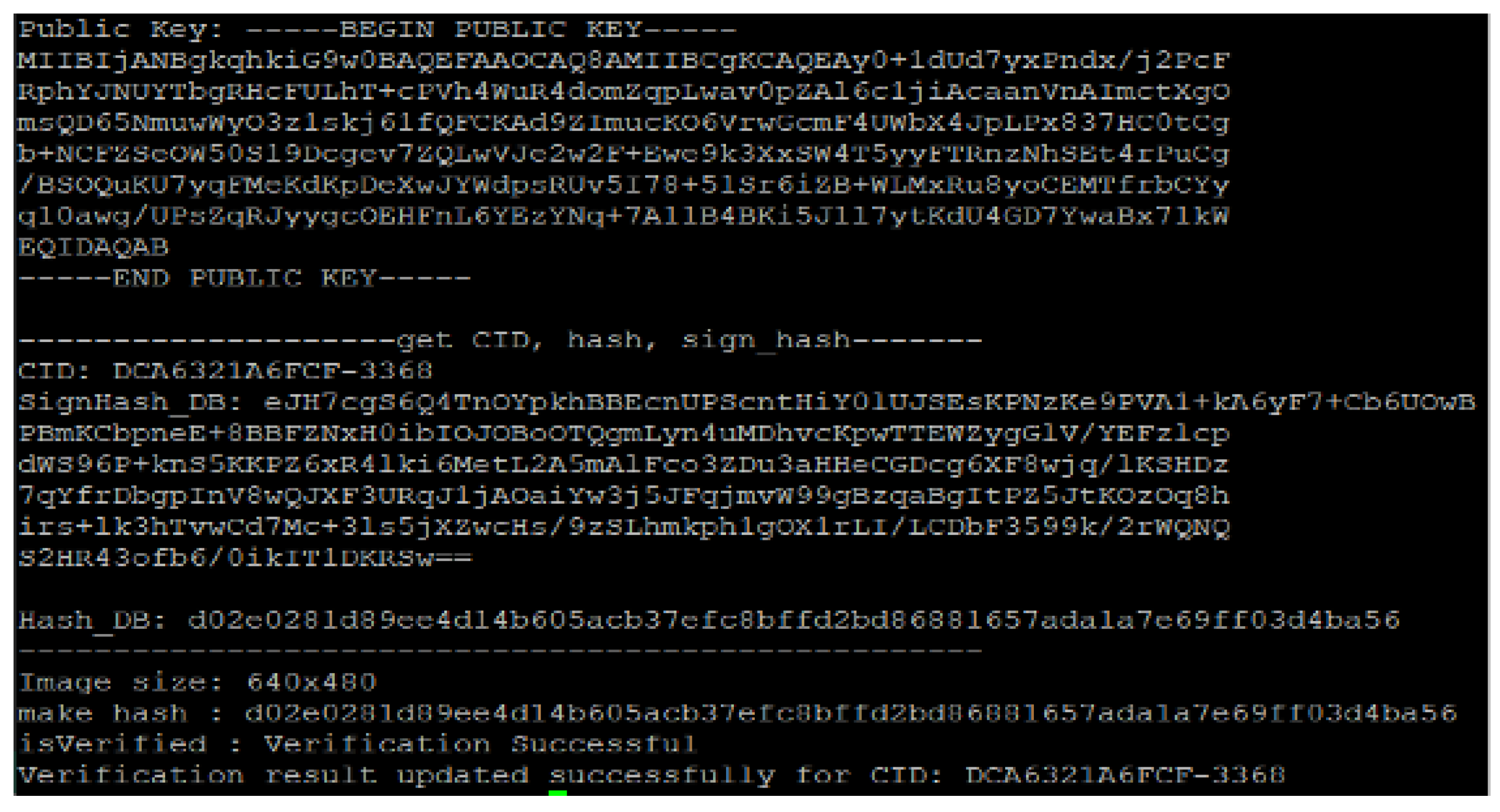

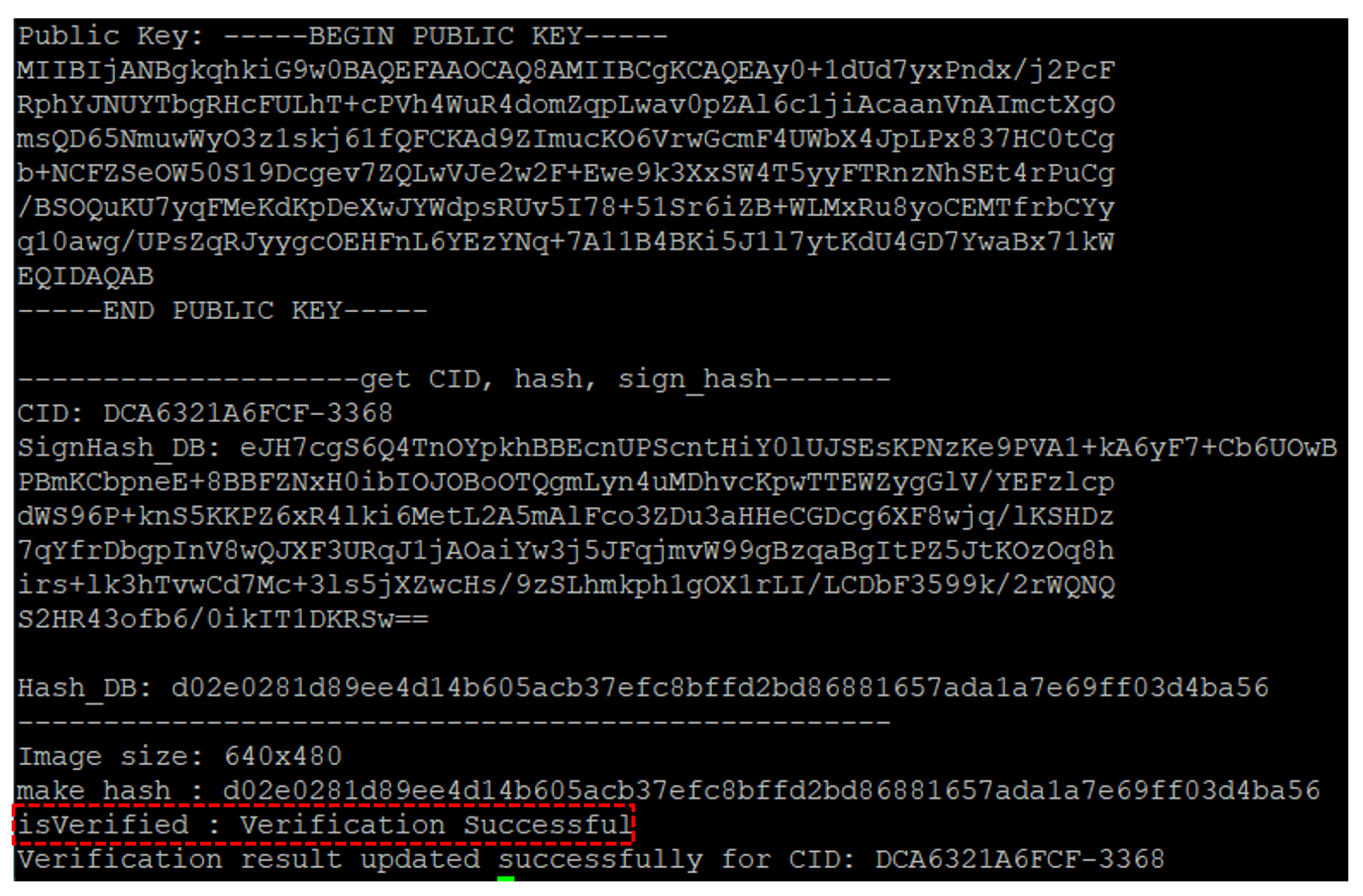

4.2. Result of a Generated Key Pair

In this experiment, a key pair with a public key and a private key is generated in a logger using the OpenSSL library, and the private key is maintained in the wallet of the logger. The public key associated with the generated private key is delivered to the verifier by the key exchange scheme. The key pair is in PEM format, which is used to store and transmit cryptographic keys. The PEM format is a text-safe format which is encoded using Base64. We apply the secure PEM format for transmitting the public key from the logger to the verifier over the network.

Figure 8 shows the key pair.

4.3. Result of Preprocessed Image

To extract features from threat-related images associated with Gemini, the images are first converted from the BGR format to grayscale. Then, Canny edge detection is applied to the converted grayscale images. This process is performed using the OpenCV library.

Figure 9a shows the image converted to grayscale, and Figure 9b shows the Canny edge detection image of the grayscale image.

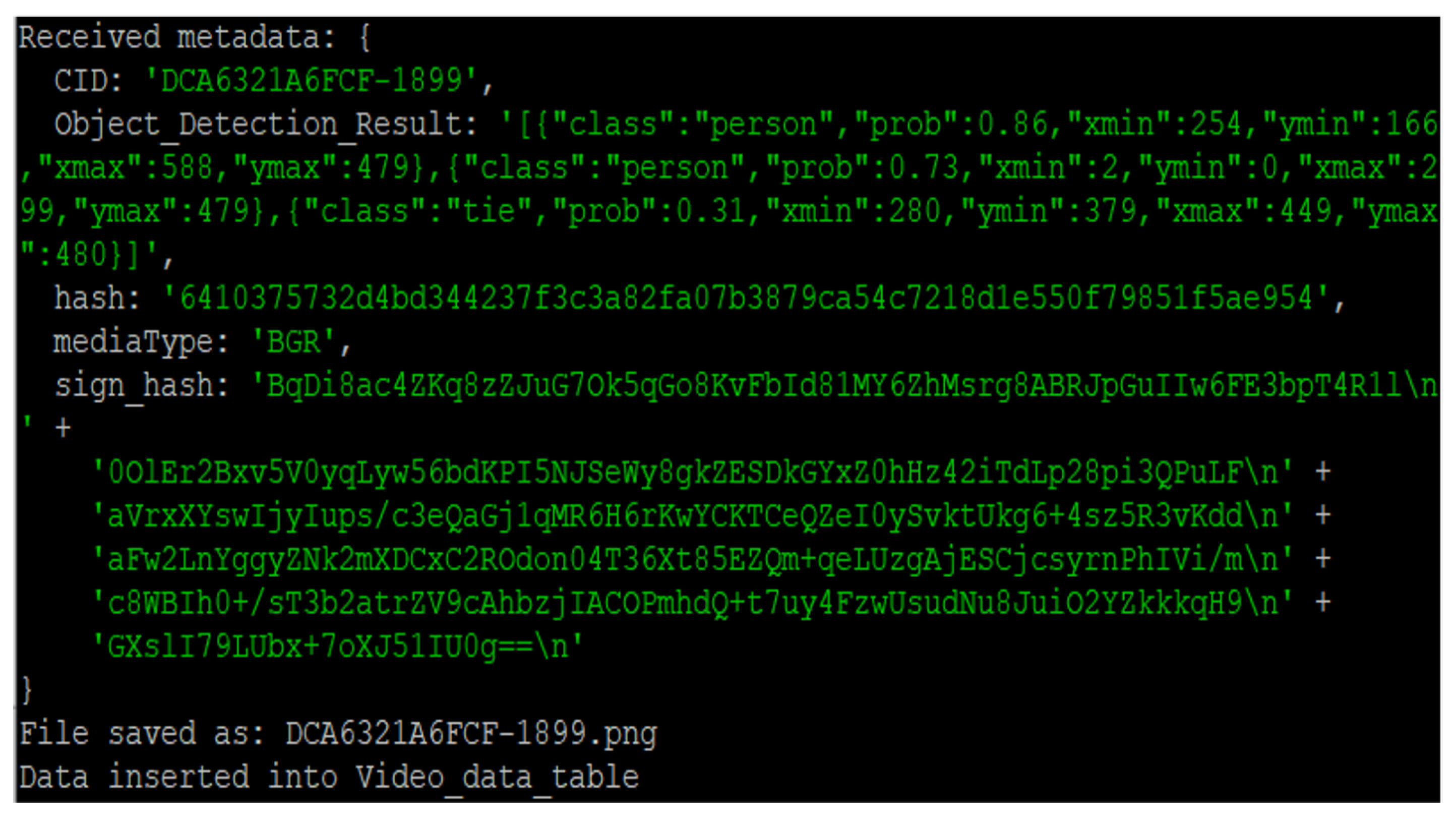

4.4. Result of Delivering Image and Its Metadata

The logger transmits metadata about images and videos to the Video Management System (VMS). In this process, the logger uses the HTTP-supporting curl library. Images are transferred using the POST method, and image-related metadata are formatted in JSON and also transmitted via POST.

Figure 10 shows the metadata transmitted to the VMS. The object detection information includes object labels and bounding box information.

The transmitted images are stored as files, and the metadata are stored in the database. Metadata are data that describe other data. The metadata are described in Table 4.

Figure 11 shows the message received by the server from the logger, which includes the CID (Content ID), the signed hash value formatted in Base64, a hash value, object detection results, and the media data format. The signed hash value is used to verify the fingerprint obtained after feature extraction on the subsequently received video. CID is a unique identifier for the content.
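A message of the kind described above can be sketched with Python's standard `json` and `base64` modules. The field names, the sample values, and the placeholder signature bytes are assumptions for illustration and are not the message specification used by the logger.

```python
import base64
import hashlib
import json

feature_vector = b"\x00\xff\x00\xff"          # hypothetical edge-map bytes
hash_hex = hashlib.sha256(feature_vector).hexdigest()
signature = b"placeholder-signature-bytes"    # stands in for a real signature

# Hypothetical field names; binary data is Base64-encoded to be JSON-safe.
message = {
    "cid": "CID-0001",
    "signed_hash": base64.b64encode(signature).decode("ascii"),
    "hash": hash_hex,
    "objects": [{"label": "person", "bbox": [120, 40, 64, 128]}],
    "media_format": "image/jpeg",
}
payload = json.dumps(message)                 # body of the POST request

# Receiver side: parse the JSON and decode the signature back to bytes.
received = json.loads(payload)
signature_back = base64.b64decode(received["signed_hash"])
print(signature_back == signature)
```

Base64 is needed because a raw signature is arbitrary binary data, which cannot be placed directly in a JSON string.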

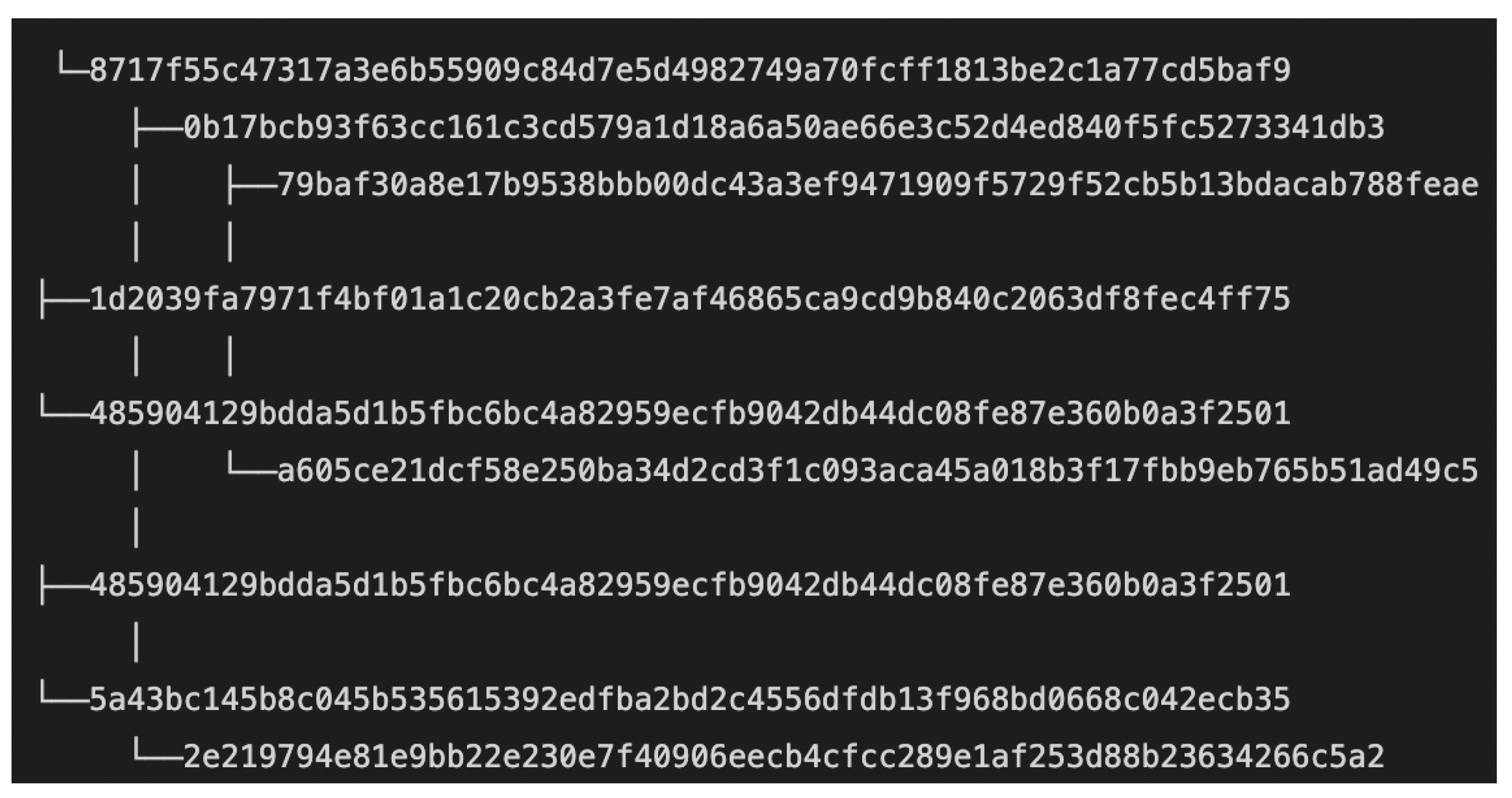

4.5. Result of Hash Tree and Verification

A hash tree is a data structure used for the efficient and secure verification of large datasets. The hash values generated by the logger are verified for integrity by the verifier, and the verified hash values are used as input for constructing a hash tree. The hash tree is used to verify the integrity of the data, which may be compromised due to external tampering or internal data management issues during the image storage process.

According to the designed data verification process flow, the verifier can detect whether data have been changed by using the hash value of the hash tree’s root node (root hash) delivered from the logger, which is the digital content creator.

Figure 12 shows a hash tree composed of four leaf nodes.

Figure 13 shows the processing results after verification using the hash tree’s root hash value, followed by integrity and authenticity verification.

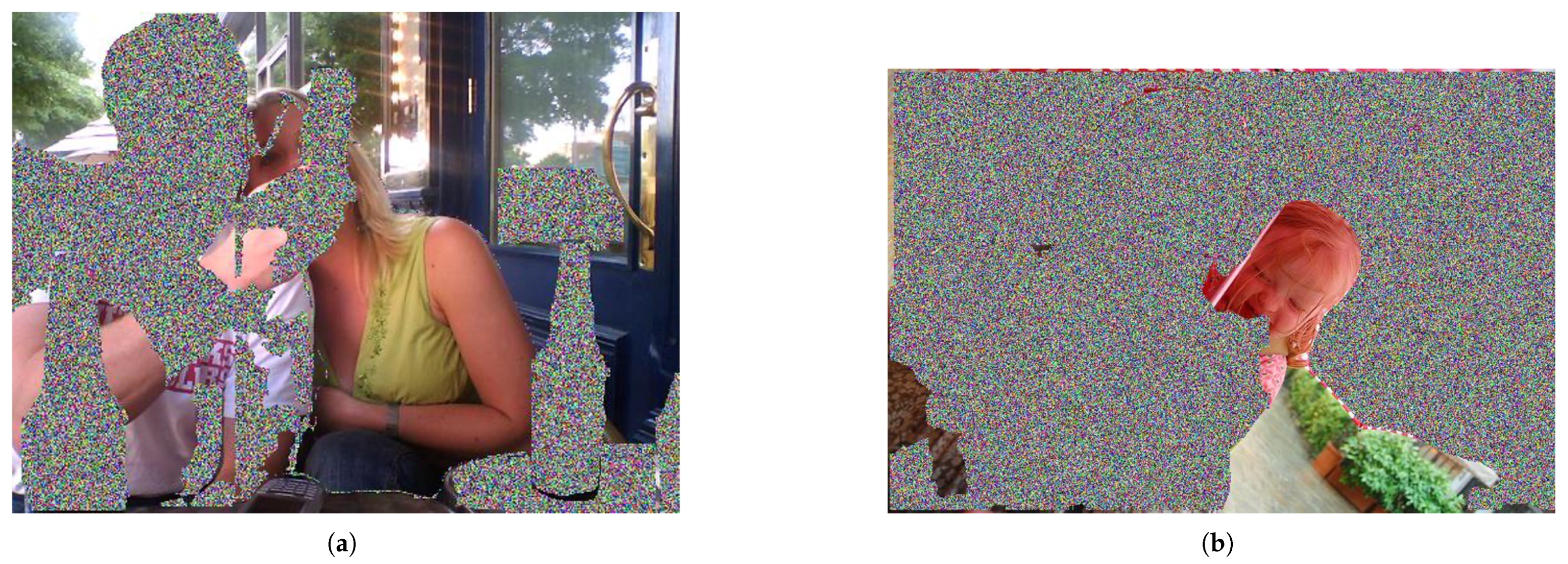

4.6. Result of Encoding Sensitive Object

Figure 14a shows an image processed by the object detector that interacts with the logger. The image contains sensitive information such as human objects. Therefore, object detection must be followed by information obfuscation of these objects.

Figure 14b presents the encoding results of the sensitive object by the logger, and the verifier recovers the sensitive object through XOR operation with the public key. The encoded image is used for integrity checking. Both the encoded image and the object information, including the contours of the sensitive object, are transmitted to the REST server using the POST method.

Performance evaluation indicates that the Jetson device is capable of real-time object detection, with an average processing time of 65 ms per image.

5. Performance Evaluation

5.1. Evaluation of Logger Threads

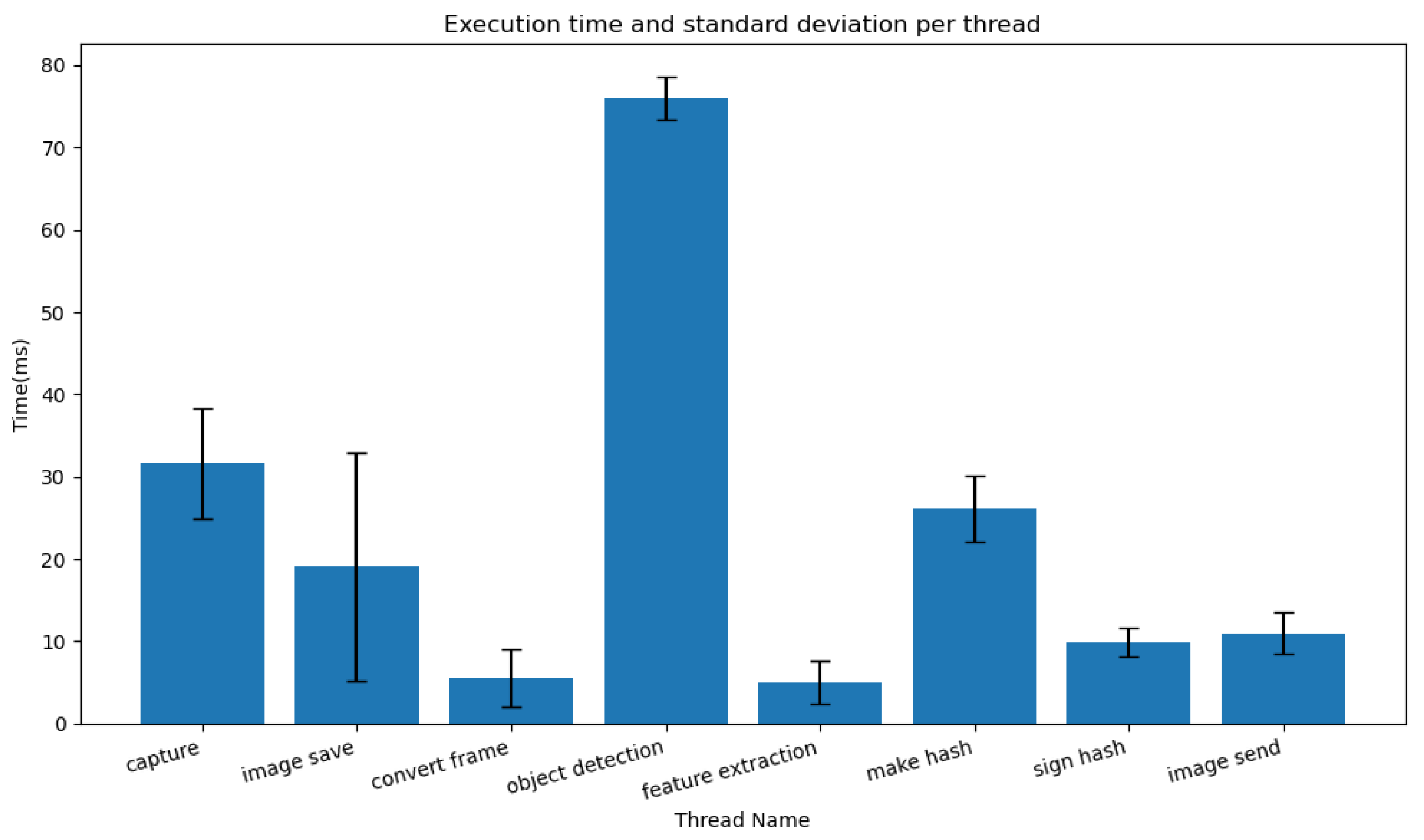

Table 5 presents the performance evaluation results of threads constituting the logger. The processing performance is compared by calculating the average execution time and standard deviation of the execution time for each individual thread. Among the total of eight threads, the object detection thread has the longest execution time at 76 ms. This thread is configured to operate using a GPU and includes the encoding time of sensitive objects.

Figure 15 presents the elapsed time and standard deviation for each thread within the logger. As shown in Figure 15, the image save thread exhibits a higher standard deviation in execution time compared to other threads, indicating high variability in its execution time. This variability arises from the write performance limitations inherent in storage media utilizing a microSD card. To reduce the observed standard deviation, a high-throughput storage medium should be considered. With an average execution time of 31.64 ms, the capture thread meets the 30 frames per second (FPS) performance specification.
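The 30 FPS claim for the capture thread follows from the per-frame time budget, which can be checked with one line of arithmetic:

```latex
T_{\text{frame}} = \frac{1000\ \text{ms}}{30} \approx 33.33\ \text{ms},
\qquad 31.64\ \text{ms} < 33.33\ \text{ms}
```

The measured average therefore leaves a margin of roughly 1.7 ms per frame for the capture thread.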

5.2. Evaluation of Object Detection and Encoding

The majority of the encoding time for sensitive objects is determined by the object detection time, which relies on the inference performance of the YOLOv8 model.

Table 6 illustrates the comparative performance of CPU and GPU for object detection, with the GPU demonstrating a considerable reduction in processing latency. In our experiments, we observed that object detection using a GPU was approximately 12.57 times faster than using a CPU for a set of 10 images. When the number of images was increased to 100, the GPU-based object detection was still significantly faster, at about 11.24 times the speed of the CPU. We also observed that the standard deviation of the object detection time for images was smaller when using a GPU compared to a CPU. This indicates that the object detection time remains consistent, leading to more stable and efficient processing. Our evaluation demonstrates that object detection utilizing GPU-accelerated Jetson equipment achieves an average processing time of 65 ms per image, indicating the feasibility of real-time operation.

The comparative object detection performance of CPU and GPU is evaluated using a statistical hypothesis test. The obtained p-value of 0.029, below the conventional significance level of 0.05, confirms a statistically significant improvement in object detection performance when using the GPU.

Figure 16 shows the performance of encoding images using CPU and GPU. As seen in Figure 16, the encoding time of the video using the GPU is, on average, 13.59 times faster than with the CPU. Additionally, the standard deviation is 0.05 ms, indicating consistent processing performance.

6. Discussion

Video data frames generated from data sources can be used to verify the cause of an accident when one occurs. To serve as forensic evidence, the integrity of the data derived from such evidence must be preserved. AI solutions such as machine learning are used routinely across many industries. To ensure the governance and trustworthiness of AI models, it is necessary to introduce AI TRiSM, which stands for AI Trust, Risk, and Security Management. For this reason, ensuring the integrity of training data rapidly and independently of the storage medium is becoming increasingly important.

The results of this experiment confirm that the XOR operation requires minimal time for encoding sensitive data during the image detection process. Furthermore, through the parallel processing of multiple threads, feature extraction, metadata construction, and data transmission could be performed without delay at 30 images per second. To mitigate security vulnerabilities, a filter employing XOR encoding will be designed, incorporating Hardware Security Modules (HSMs) for robust cryptographic key management. HSMs, as physical devices, provide high assurance of security for cryptographic operations, including the generation, storage, and management of cryptographic keys. Both the logger and the verifier interact with their respective HSMs to generate a random number, which serves as an input for the filter.
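To illustrate why XOR encoding is cheap and reversible, the following minimal Python sketch encodes and then restores the pixels inside one bounding box. It is a sketch under stated assumptions and not the implementation used in the experiment: the function name `xor_region`, the one-byte-per-pixel grayscale layout, and the use of `secrets.token_bytes` as a stand-in for the HSM-generated random number are all hypothetical.

```python
import secrets


def xor_region(image, box, key):
    """Return a copy of `image` with the pixels inside `box` XORed with `key`.

    image: list of bytes-like rows, one byte per grayscale pixel (assumed layout)
    box:   (x0, y0, x1, y1), with x1 and y1 exclusive
    key:   non-empty bytes, repeated cyclically over the region
    """
    if not key:
        raise ValueError("key must not be empty")
    x0, y0, x1, y1 = box
    out = [bytearray(row) for row in image]  # leave the input untouched
    k = 0
    for y in range(y0, y1):
        for x in range(x0, x1):
            out[y][x] ^= key[k % len(key)]
            k += 1
    return out


# Hypothetical 8x6 frame and a detected bounding box covering 4x3 pixels.
frame = [bytearray(range(8)) for _ in range(6)]
person_box = (2, 1, 6, 4)

# Stand-in for the random number obtained from an HSM (assumption).
key = secrets.token_bytes(12)

encoded = xor_region(frame, person_box, key)
decoded = xor_region(encoded, person_box, key)  # XOR with the same key restores the data
print("round trip restores the frame:", decoded == frame)
```

Because XOR is its own inverse, the verifier only needs the same filter value and bounding box to recover the original region, which is why the protection of that value matters more than the cost of the operation itself.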

The YOLOv8 model used in the experiment is designed for general-purpose object recognition. A limitation of this experiment is that YOLOv8 was used to detect sensitive objects without separate fine-tuning, and its detection performance was not evaluated on a separate dataset. As shown in Figure 17, false positives and false negatives occurred during the experiment, and these can lead to the exposure of sensitive objects. To address this issue, the model should be retrained and fine-tuned on a dataset acquired from the target environment to improve detection accuracy. The observed detection errors also indicate the need for specialized algorithms.

7. Conclusions

With the emergence of the Internet of Things, in which smartphones, CCTV, and sensors are connected, the amount of digital content generated by internet-connected devices is rapidly increasing, as is the demand for applications that use this content.

Digital materials are easy to change, so ensuring their integrity and authenticity is a prerequisite for using them. Integrity and authenticity verification confirms that digital data have been provided by the producer without any changes to their contents. The proposed integrity scheme verifies authenticity and integrity to confirm that a video frame was created and provided by a specific logger.

We designed a system for preserving data integrity based on a digital fingerprint generated from an XOR encoding scheme. The system operates on a logger within an edge device. This paper focuses on the privacy concerns associated with transmitting video data. By leveraging an object detection model running on a Jetson device, we developed a novel automated encoding technique to obfuscate sensitive objects within video data. The proposed method employs a public key filter to ensure the security of sensitive information and can be applied to a wide range of applications. Through quantitative performance analysis of GPU-accelerated object detection, we observed minimal variation in processing time, confirming the stability of the overall system performance.

Considering the scalability of the system, the verifier will be configured to operate as a container within a Kubernetes environment in a cloud-based infrastructure as part of future work. To handle large-scale hash data, we consider a lightweight hash tree. This lightweight hash tree is designed to perform compression on consecutive duplicate hash values.
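The design of the lightweight hash tree is left to future work, so the following Python sketch shows only one possible reading of the idea: consecutive duplicate hash values are collapsed into (hash, run length) pairs before a Merkle root is computed over the shorter leaf list. The function names, the four-byte run-length encoding, and the rule of duplicating the last node on odd levels are assumptions made for illustration.

```python
import hashlib
from itertools import groupby


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def compress_runs(hashes):
    """Collapse consecutive duplicate hashes into (hash, run_length) pairs."""
    return [(h, len(list(group))) for h, group in groupby(hashes)]


def merkle_root(leaves):
    """Compute a Merkle root, duplicating the last node on odd-sized levels."""
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def lightweight_root(frame_hashes):
    """Build the root over run-length-compressed leaves; also return the leaf count."""
    runs = compress_runs(frame_hashes)
    # Each leaf binds the hash value to its run length (assumed 4-byte big-endian).
    leaves = [sha256(h + count.to_bytes(4, "big")) for h, count in runs]
    return merkle_root(leaves), len(runs)


# Hypothetical stream in which a static scene yields repeated feature hashes.
frames = [b"scene-a"] * 3 + [b"scene-b"] + [b"scene-a"] * 2
frame_hashes = [sha256(f) for f in frames]

root, leaf_count = lightweight_root(frame_hashes)
print(f"{len(frame_hashes)} hashes compressed to {leaf_count} leaves")
print("root:", root.hex())
```

Only consecutive duplicates are merged, so the order of frames is still reflected in the root, and a repeated scene that reappears later forms a new leaf.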

A proof-of-concept prototype was developed to demonstrate the efficacy of the proposed encoding scheme. This prototype can be integrated between the sensor data acquisition module and the anomaly detection system within a nuclear power plant’s architecture. Deployed on an edge server, the prototype enhances data integrity, a critical non-functional requirement for mission-critical systems. Furthermore, it ensures the authenticity of data sources through digital signatures. In future work, the prototype will be extended to ensure the determination of digital record authenticity by maintaining the continuity of evidence storage and the linkage between digital records.

Author Contributions

Conceptualization, Y.-R.C. and Y.K.; methodology, Y.K.; software, Y.K.; formal analysis, Y.K.; writing—original draft preparation, Y.K.; writing—review and editing, Y.K.; visualization, Y.K.; supervision, Y.K.; project administration, Y.-R.C.; funding acquisition, Y.-R.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea through the Government (Ministry of Science and Information and Communications Technology) in 2022 under Grant RS-2022-00144000.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zimmermann, A.; Schmidt, R.; Jain, L.C. (Eds.) Architecting the Digital Transformation—Digital Business, Technology, Decision Support, Management; Springer: Cham, Switzerland, 2021; Volume 188. [Google Scholar] [CrossRef]

- Jaspin, K.; Selvan, S.; Sahana, S.; Thanmai, G. Efficient and Secure File Transfer in Cloud Through Double Encryption Using AES and RSA Algorithm. In Proceedings of the 2021 International Conference on Emerging Smart Computing and Informatics (ESCI), Pune, India, 5–7 March 2021; pp. 791–796. [Google Scholar] [CrossRef]

- Sivathanu, G.; Wright, C.P.; Zadok, E. Ensuring data integrity in storage: Techniques and applications. In Proceedings of the StorageSS, Fairfax, VA, USA, 11 November 2005; Atluri, V., Samarati, P., Yurcik, W., Brumbaugh, L., Zhou, Y., Eds.; ACM: New York, NY, USA, 2005; pp. 26–36. [Google Scholar]

- Voigt, P.; Bussche, A.V.D. The EU General Data Protection Regulation (GDPR): A Practical Guide, 1st ed.; Springer: Cham, Switzerland, 2017. [Google Scholar]

- Hjerppe, K.; Ruohonen, J.; Leppanen, V. The General Data Protection Regulation: Requirements, Architectures, and Constraints. In Proceedings of the 2019 IEEE 27th International Requirements Engineering Conference (RE), Jeju, South Korea, 23–27 September 2019; IEEE: Piscataway, NJ, USA, 2019. [Google Scholar] [CrossRef]

- Fotiou, N.; Xylomenos, G.; Thomas, Y. Data integrity protection for data spaces. In Proceedings of the 17th European Workshop on Systems Security, New York, NY, USA, 22 April 2024; EuroSec ’24. pp. 44–50. [Google Scholar] [CrossRef]

- Wu, C. Data privacy: From transparency to fairness. Technol. Soc. 2024, 76, 102457. [Google Scholar] [CrossRef]

- Wang, J.; Liu, G.; Lian, S. Security Analysis of Content-Based Watermarking Authentication Framework. In Proceedings of the 2009 International Conference on Multimedia Information Networking and Security, Wuhan, China, 18–20 November 2009; Volume 1, pp. 483–487. [Google Scholar] [CrossRef]

- Miller, F.P.; Vandome, A.F.; McBrewster, J. Advanced Encryption Standard; Alpha Press: Portland, OR, USA, 2009. [Google Scholar]

- Duranti, L.; Rogers, C. Trust in digital records: An increasingly cloudy legal area. Comput. Law Secur. Rev. 2012, 28, 522–531. [Google Scholar] [CrossRef]

- Geus, J.; Ottmann, J.; Freiling, F. Systematic Evaluation of Forensic Data Acquisition using Smartphone Local Backup. arXiv 2024, arXiv:2404.12808. [Google Scholar]

- Hansen, T.; Eastlake, D., 3rd. US Secure Hash Algorithms (SHA and HMAC-SHA). RFC 4634, 2006. Available online: https://www.rfc-editor.org/info/rfc4634 (accessed on 2 January 2025).

- Goldwasser, S.; Micali, S.; Rivest, R.L. A Digital Signature Scheme Secure Against Adaptive Chosen-Message Attacks. Siam J. Comput. 1988, 17, 281–308. [Google Scholar] [CrossRef]

- Swan, M. Blockchain: Blueprint for a New Economy; O’Reilly Media: Sebastopol, CA, USA, 2015. [Google Scholar]

- Gao, T.; Yao, Z.; Si, X. Data Integrity Verification Scheme for Fair Arbitration Based on Blockchain. In Proceedings of the 2024 4th International Conference on Blockchain Technology and Information Security (ICBCTIS), Huizhou, China, 9–11 August 2024; pp. 191–197. [Google Scholar] [CrossRef]

- Cherckesova, L.V.; Safaryan, O.A.; Lyashenko, N.G.; Korochentsev, D.A. Developing a New Collision-Resistant Hashing Algorithm. Mathematics 2022, 10, 2769. [Google Scholar] [CrossRef]

- Rogaway, P.; Shrimpton, T. Cryptographic Hash-Function Basics: Definitions, Implications, and Separations for Preimage Resistance, Second-Preimage Resistance, and Collision Resistance. In Proceedings of the Fast Software Encryption, 11th International Workshop, FSE 2004, Delhi, India, 5–7 February 2004; Revised Papers. Roy, B.K., Meier, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2004. Lecture Notes in Computer Science. Volume 3017, pp. 371–388. [Google Scholar] [CrossRef]

- Liu, H.; Luo, X.; Liu, H.; Xia, X. Merkle Tree: A Fundamental Component of Blockchains. In Proceedings of the 2021 International Conference on Electronic Information Engineering and Computer Science (EIECS), Changchun, China, 23–25 September 2021; pp. 556–561. [Google Scholar] [CrossRef]

- Belchior, R.; Vasconcelos, A.; Guerreiro, S.; Correia, M. A Survey on Blockchain Interoperability: Past, Present, and Future Trends. ACM Comput. Surv. 2021, 54, 168. [Google Scholar] [CrossRef]

- Merkle, R.C. A Digital Signature Based on a Conventional Encryption Function. In Proceedings of the A Conference on the Theory and Applications of Cryptographic Techniques on Advances in Cryptology, Santa Barbara, CA, USA, 16–20 August 1987; Springer: Berlin/Heidelberg, Germany, 1987. CRYPTO ’87. pp. 369–378. [Google Scholar]

- Bashiri, K.; Fluhrer, S.; Gazdag, S.L.; Geest, D.V.; Kousidis, S. Internet X.509 Public Key Infrastructure: Algorithm Identifiers for SLH-DSA. Internet-Draft Draft-Ietf-Lamps-X509-Slhdsa-03, Internet Engineering Task Force, 2024, Work in Progress. Available online: https://datatracker.ietf.org/doc/draft-ietf-lamps-x509-slhdsa/01/ (accessed on 25 February 2025).

- Shamir, A. How to share a secret. Commun. ACM 1979, 22, 612–613. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European conference on computer vision (ECCV), Zurich, Switzerland, 6–12 September 2014. [Google Scholar]

- Rivest, R.L.; Shamir, A.; Adleman, L. A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 1978, 21, 120–126. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar] [CrossRef]

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6. [Google Scholar] [CrossRef]

Figure 1.

(a) Original image, (b) modified image.

Figure 2.

Structure of hash tree.

Figure 4.

Overall system structure with logger and verifier.

Figure 5.

Encoded data using filter.

Figure 6.

Secure delivery of metadata and sensitive objects.

Figure 7.

Hardware devices: Raspberry Pi (left) and Jetson Nano Orin (right).

Figure 8.

(a) Private key, (b) public key.

Figure 9.

(a) Grayscale image, (b) transformed image by Canny edge detection.

Figure 10.

Generated metadata.

Figure 11.

Received message.

Figure 12.

Constructed hash tree.

Figure 13.

Result of verification.

Figure 14.

(a) Image with objects detected by YOLOv8, (b) encoded image with the human objects hidden.

Figure 15.

Elapsed time and standard deviation per logger thread.

Figure 16.

Elapsed time of encoding images.

Figure 17.

Examples of encoding with false positives (a) and false negatives (b).

Table 1.

Comparison of hash functions: SHA-256 and SHA-3.

| Feature | SHA-256 | SHA-3 |
|---|---|---|
| Standard | Part of SHA-2 family | NIST standard (Keccak algorithm) |
| Use case | Digital signatures | General-purpose hashing |
| Security | Considered secure | Considered secure |
| Processing time | Moderate | Slower than SHA-256 |
| Usability | Widely adopted | Relatively newer than SHA-256 |

Table 2.

Fingerprint generation procedure.

| Phase | Description | Remarks |
|---|---|---|
| 1 | Generate a key pair, pk and sk | |
| 2 | Transfer pk to verifier | |
| 3 | Generate a feature of given image | Canny edge detection |
| 4 | Generate a hash value | SHA-256 |
| 5 | Sign the hash value | |
| 6 | Build a hash tree | Merkle tree |

Table 3.

Experimental setup for the designed system.

| Type | HW/SW | Name | Specification |
|---|---|---|---|
| Object detector | HW | Jetson Nano Orin | 8 GB (memory) |
| Object detector | SW | OS | Ubuntu 20.04.6 LTS |
| Object detector | SW | Language | Python 3.8.10 |
| Object detector | SW | Libraries | PyTorch 2.1.0 |
| Object detector | SW | Libraries | Torchvision 0.16.1 |
| Object detector | SW | Libraries | YOLOv8 |
| Logger | HW | Raspberry Pi 4 | 8 GB (memory) |
| Logger | HW | WebCam | Logitech Webcam c270 |
| Logger | SW | OS | Raspbian |
| Logger | SW | Language | gcc 12 |
| Logger | SW | Libraries | OpenCV 4.10.0 |
| Logger | SW | Libraries | Curl 7.1 |
| Logger | SW | Libraries | OpenSSL 1.1.0 |

Table 4.

Metadata.

| Name | Description |
|---|---|
| CID | Unique ID of captured image |
| Hash | Hash value of a feature vector in character format |
| Sign hash | Digital signature of hash value |
| Object detection result | Information of object with bounding box |
| Media type | Media format like BGR |
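As an illustration of how the fields in Table 4 could be carried in a single record, the following Python sketch models the metadata as a dataclass and serializes it to JSON. The exact wire format is not given in this section, so the field types, the class and attribute names, the bounding-box layout, and all sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class DetectedObject:
    label: str          # class name reported by the detector
    box: List[int]      # assumed layout: [x0, y0, x1, y1] in pixels


@dataclass
class FrameMetadata:
    cid: str                        # CID: unique ID of the captured image
    hash: str                       # hash of the feature vector, as hex characters
    sign_hash: str                  # digital signature of the hash value
    objects: List[DetectedObject]   # object detection result with bounding boxes
    media_type: str                 # media format, e.g. BGR

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "FrameMetadata":
        raw = json.loads(text)
        raw["objects"] = [DetectedObject(**obj) for obj in raw["objects"]]
        return cls(**raw)


# Placeholder values for illustration only.
record = FrameMetadata(
    cid="frame-000001",
    hash="ab" * 32,
    sign_hash="cd" * 64,
    objects=[DetectedObject(label="person", box=[120, 40, 260, 380])],
    media_type="BGR",
)

message = record.to_json()
print(message)
print("round trip equal:", FrameMetadata.from_json(message) == record)
```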

Table 5.

Elapsed time of detecting object.

| No | Thread | Mean (ms) | Std Dev (ms) |
|---|---|---|---|
| 1 | Capture thread | 31.64 | 6.73 |
| 2 | Image save thread | 19.12 | 13.86 |
| 3 | Convert frame thread | 5.56 | 3.50 |
| 4 | Object detection thread | 76.00 | 2.62 |
| 5 | Feature extraction thread | 4.97 | 2.62 |
| 6 | Make hash thread | 26.10 | 3.99 |
| 7 | Sign hash thread | 9.89 | 1.76 |
| 8 | Image send thread | 11.04 | 2.51 |

Table 6.

Elapsed time of detecting object.

| No. of images | CPU mean | CPU std dev | GPU mean | GPU std dev |
|---|---|---|---|---|
| 10 | 8.67 | 1.09 | 0.69 | 0.04 |
| 30 | 34.99 | 0.10 | 1.97 | 0.05 |
| 60 | 50.07 | 0.12 | 3.91 | 0.06 |
| 100 | 72.49 | 5.24 | 6.45 | 0.05 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).