Development of an Augmented Reality Surgical Trainer for Minimally Invasive Pancreatic Surgery

Abstract

1. Introduction

- An innovative parallel robot designed specifically for minimally invasive pancreatic surgery;

- A real-time augmented reality environment for enhanced visualization and spatial guidance;

- An AI-driven force prediction model that replaces traditional physical sensors, thus reducing costs and improving adaptability to variable conditions;

- A high-fidelity haptic feedback algorithm to ensure realistic interactions.

2. Materials and Methods

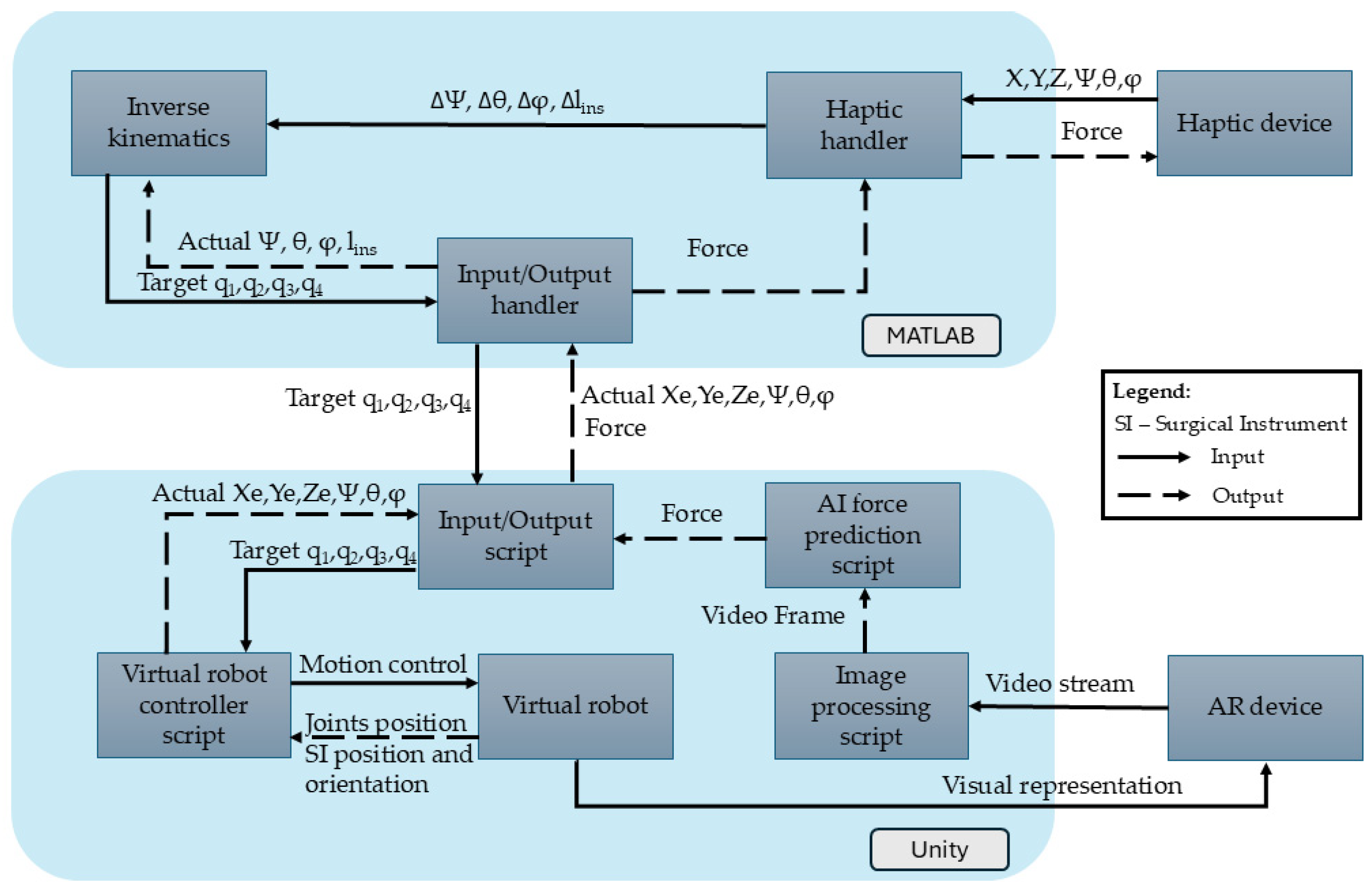

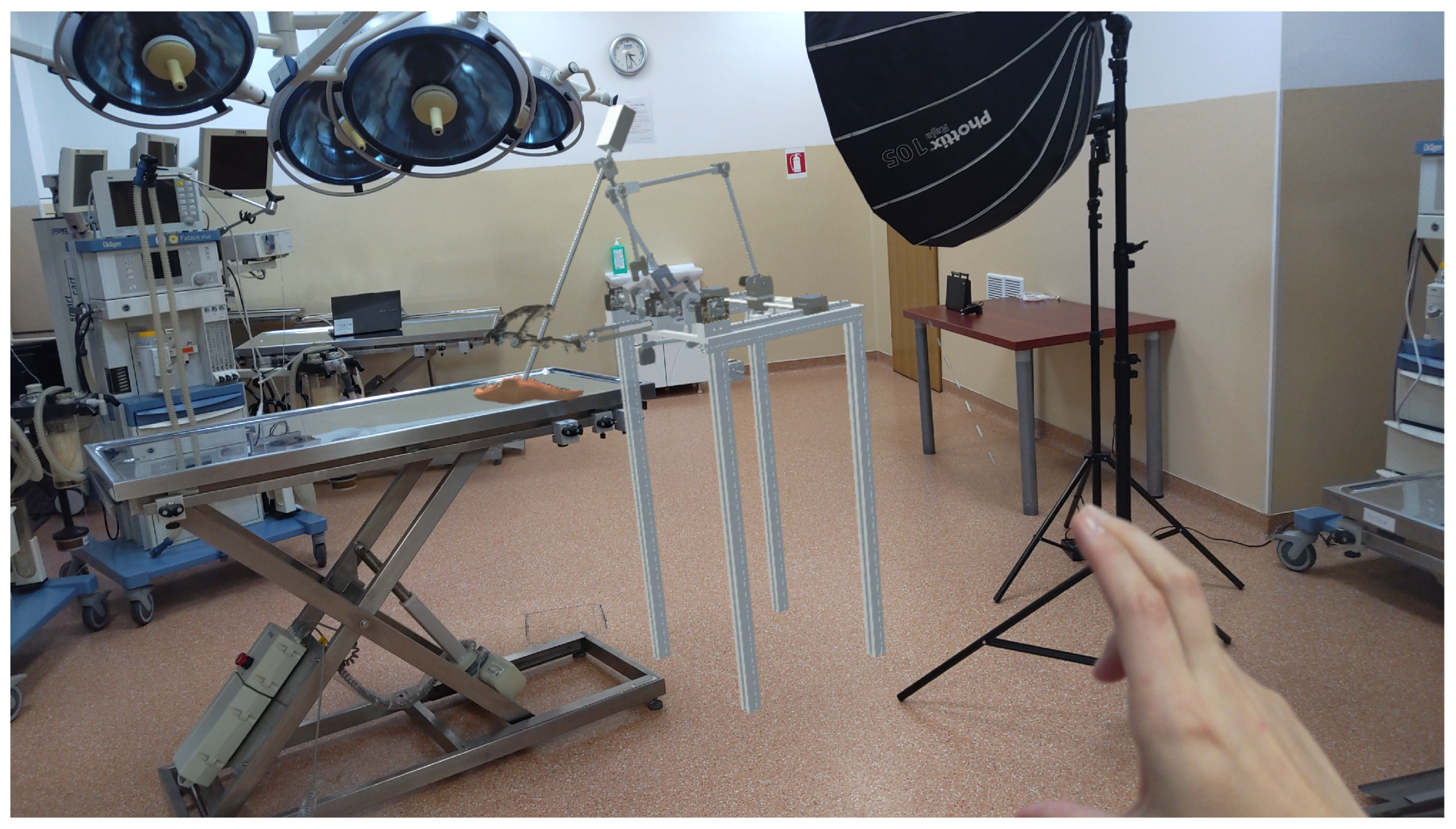

2.1. Simulator’s Architecture

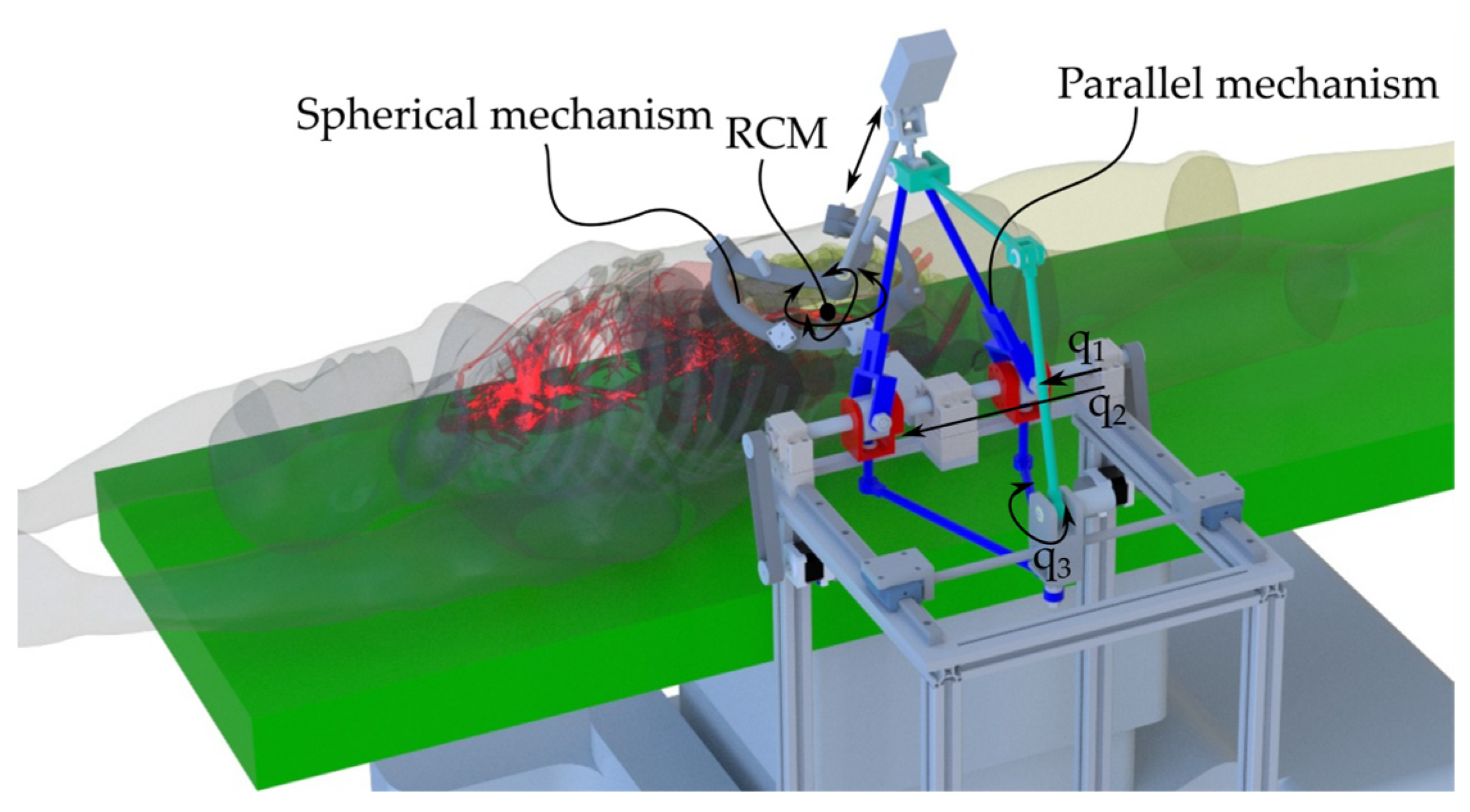

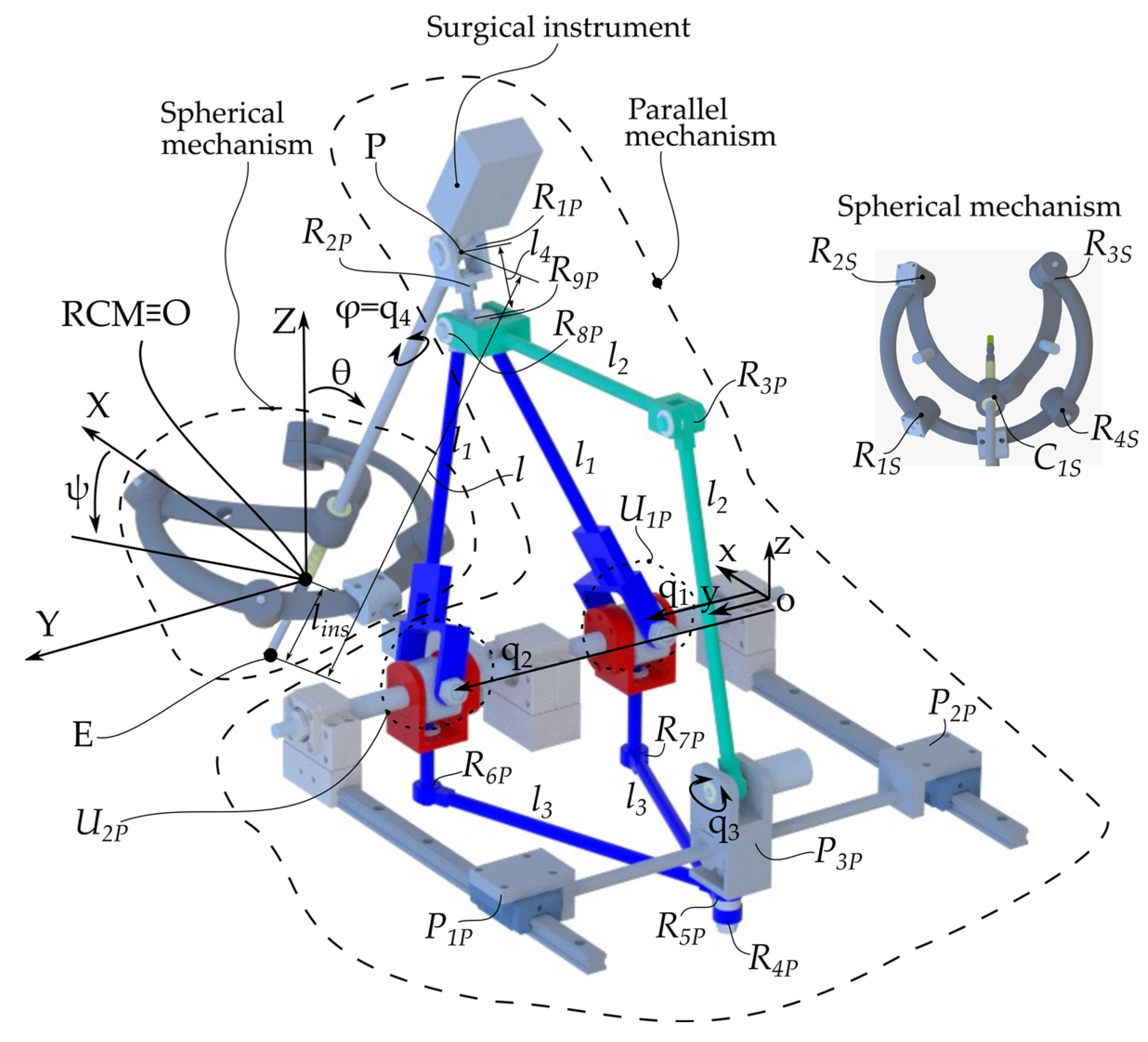

2.2. ATHENA Parallel Robot for Minimally Invasive Pancreatic Surgery

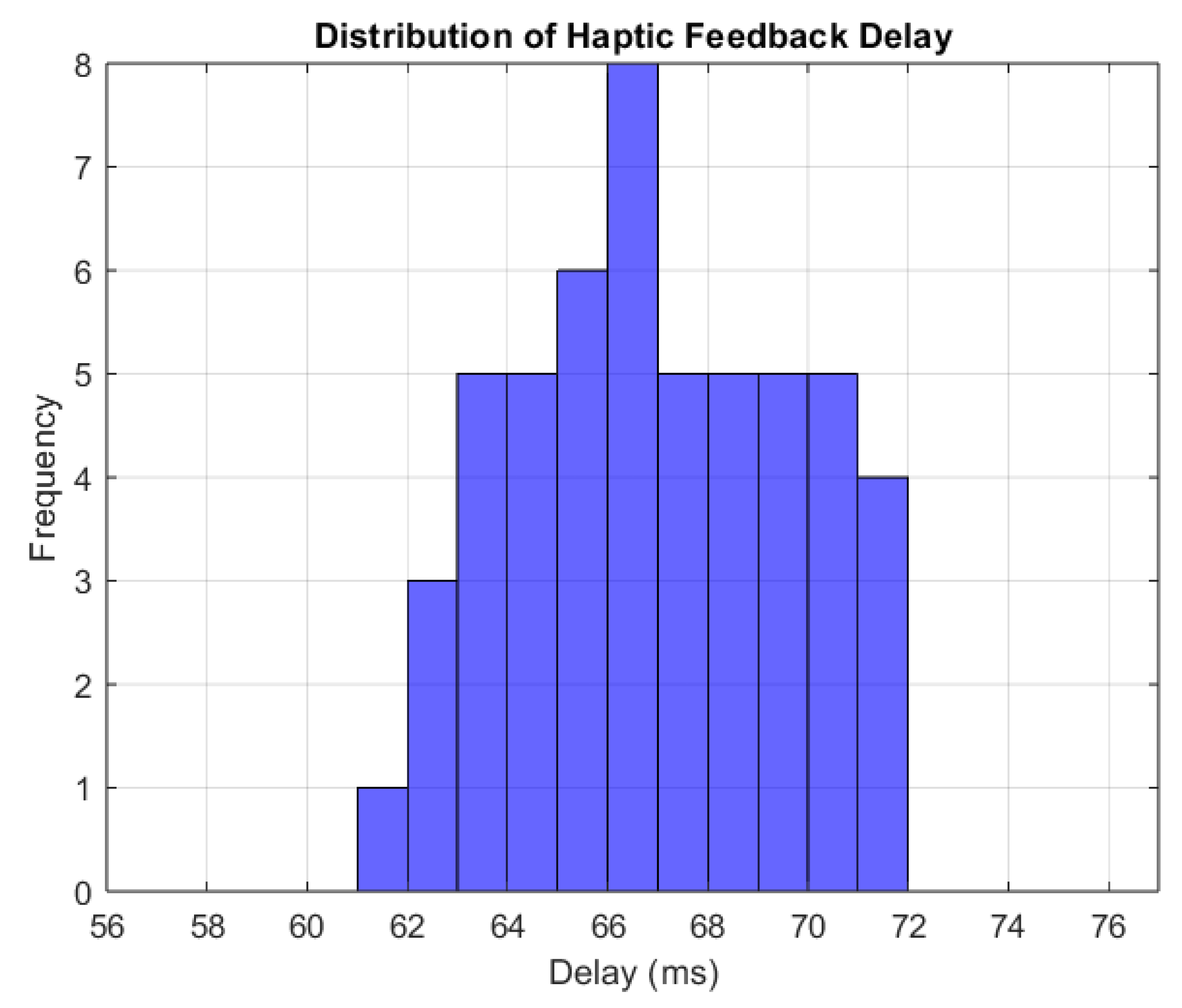

2.3. Haptic Device Integration

- Haptic Handler Module: Establishes communication with the haptic device and records its position (X, Y, Z), orientation (ψ, θ, φ), and gripper state. It also sets the forces along the X, Y, and Z axes of the Omega 7 haptic device.

- Inverse Kinematics Module: Takes the output of the Haptic Handler, namely the desired changes in orientation and position (Δψ, Δθ, Δlins, and Δφ) determined using Equation (1), and computes the new joint positions (q1, q2, q3, and q4) that replicate the user’s movements.

- Input/Output Handler: Transmits the computed joint positions (q1, q2, q3, and q4) to Unity via a .NET pipeline and receives the end-effector’s position and orientation from the virtual environment in Unity.

- Input/Output Script: Receives the joint positions from MATLAB R2024b to update the virtual robot and transmits the actual end-effector positions back to MATLAB.

- Virtual Robot Controller: Adjusts the joint positions based on the mathematical model, ensuring precise replication of the user’s haptic movements.

- Virtual Robot: The Unity object whose configuration is updated according to the supplied values of the active joints (q1, q2, q3, and q4).

- Image Processing Script: Processes the HoloLens camera stream by selecting every tenth frame and resizing it to meet the AI model’s input requirements.

- AI Force Prediction Script: Analyzes the images provided by the Image Processing Script to estimate the force applied at the end-effector, then sends the estimate back to the Haptic Handler in MATLAB R2024b, providing real-time force feedback to the user.
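The frame selection and resizing performed by the Image Processing Script can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the grayscale nested-list frame type, the nearest-neighbour resizing method, the 224 × 224 target size, and the choice to start counting at the first frame are all assumptions made for illustration, since the text only states that every tenth frame is kept and resized to the model's input size.

```python
from typing import Iterable, Iterator, List, Sequence, Tuple

# Hypothetical frame type: a grayscale image stored as rows of pixel values.
Frame = List[List[int]]


def every_nth(frames: Iterable[Frame], n: int = 10) -> Iterator[Frame]:
    """Yield frames 0, n, 2n, ... of the stream (assumed starting index: 0)."""
    if n < 1:
        raise ValueError("n must be >= 1")
    for index, frame in enumerate(frames):
        if index % n == 0:
            yield frame


def resize_nearest(frame: Sequence[Sequence[int]], out_h: int, out_w: int) -> Frame:
    """Resize by nearest-neighbour sampling (assumed; the method is not named in the text)."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def preprocess(
    frames: Iterable[Frame],
    n: int = 10,
    size: Tuple[int, int] = (224, 224),  # assumed model input size
) -> Iterator[Frame]:
    """Keep every n-th frame and resize it to the target (height, width)."""
    out_h, out_w = size
    for frame in every_nth(frames, n):
        yield resize_nearest(frame, out_h, out_w)
```

Writing the pipeline as generators means frames are processed as they arrive from the stream rather than buffered, which suits a real-time feedback loop. In practice, an image library's resize routine would likely replace `resize_nearest`.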

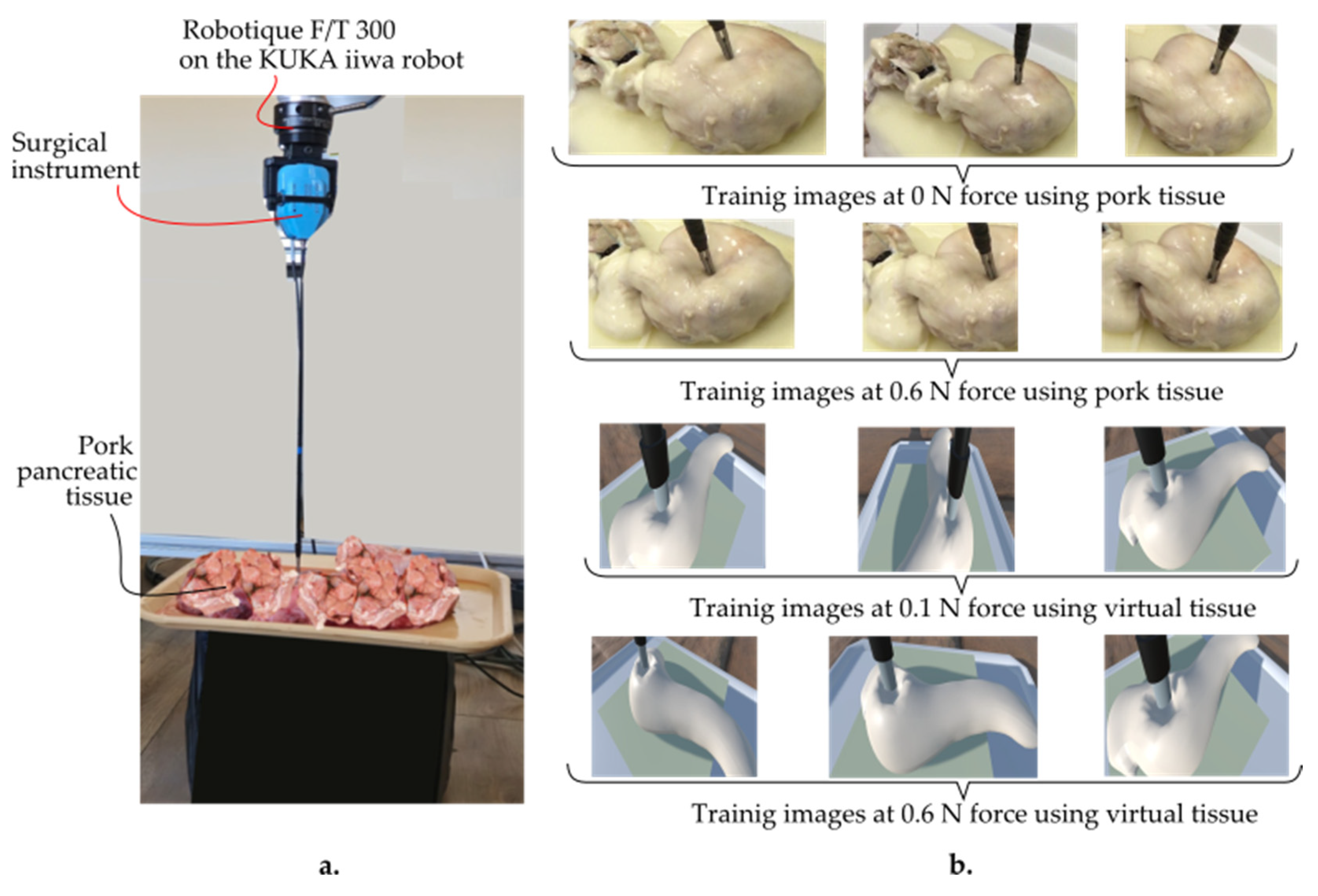

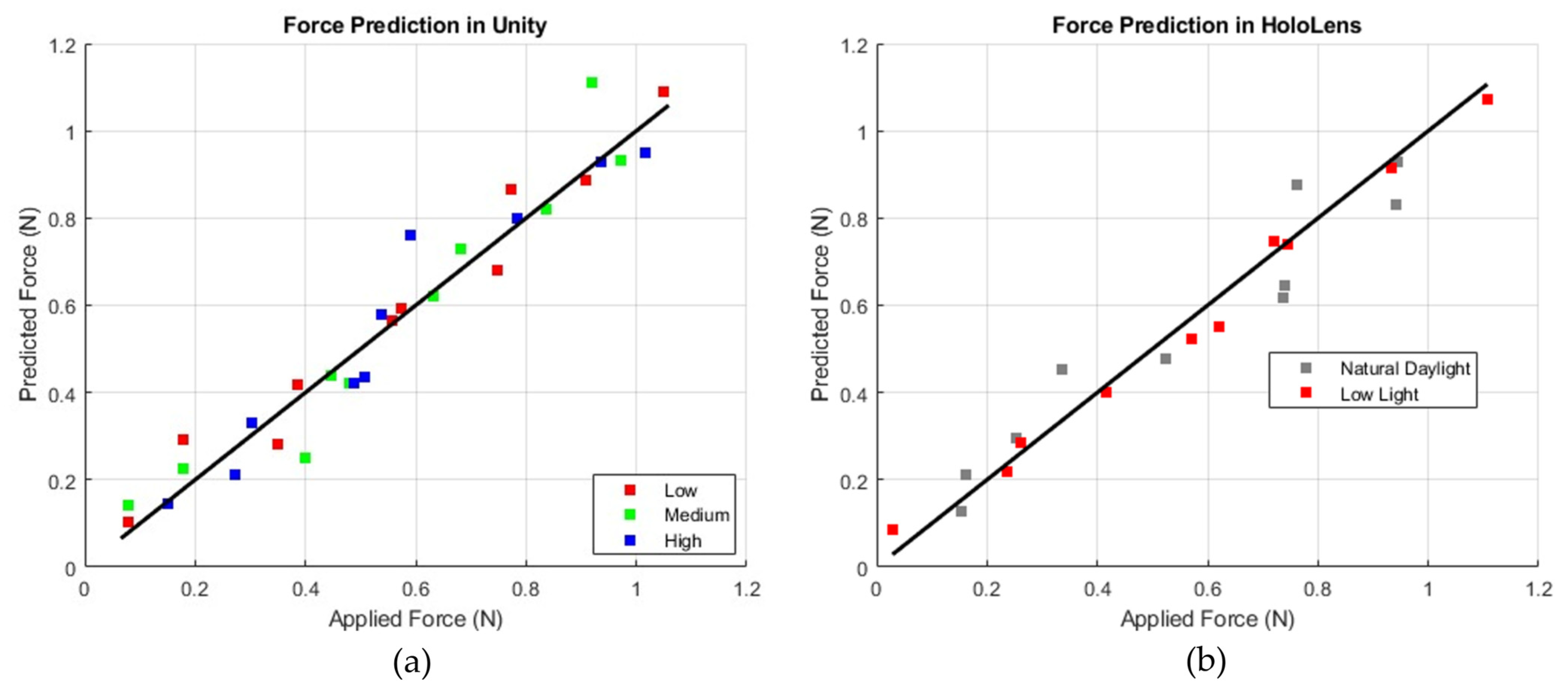

2.4. Force Prediction and Feedback

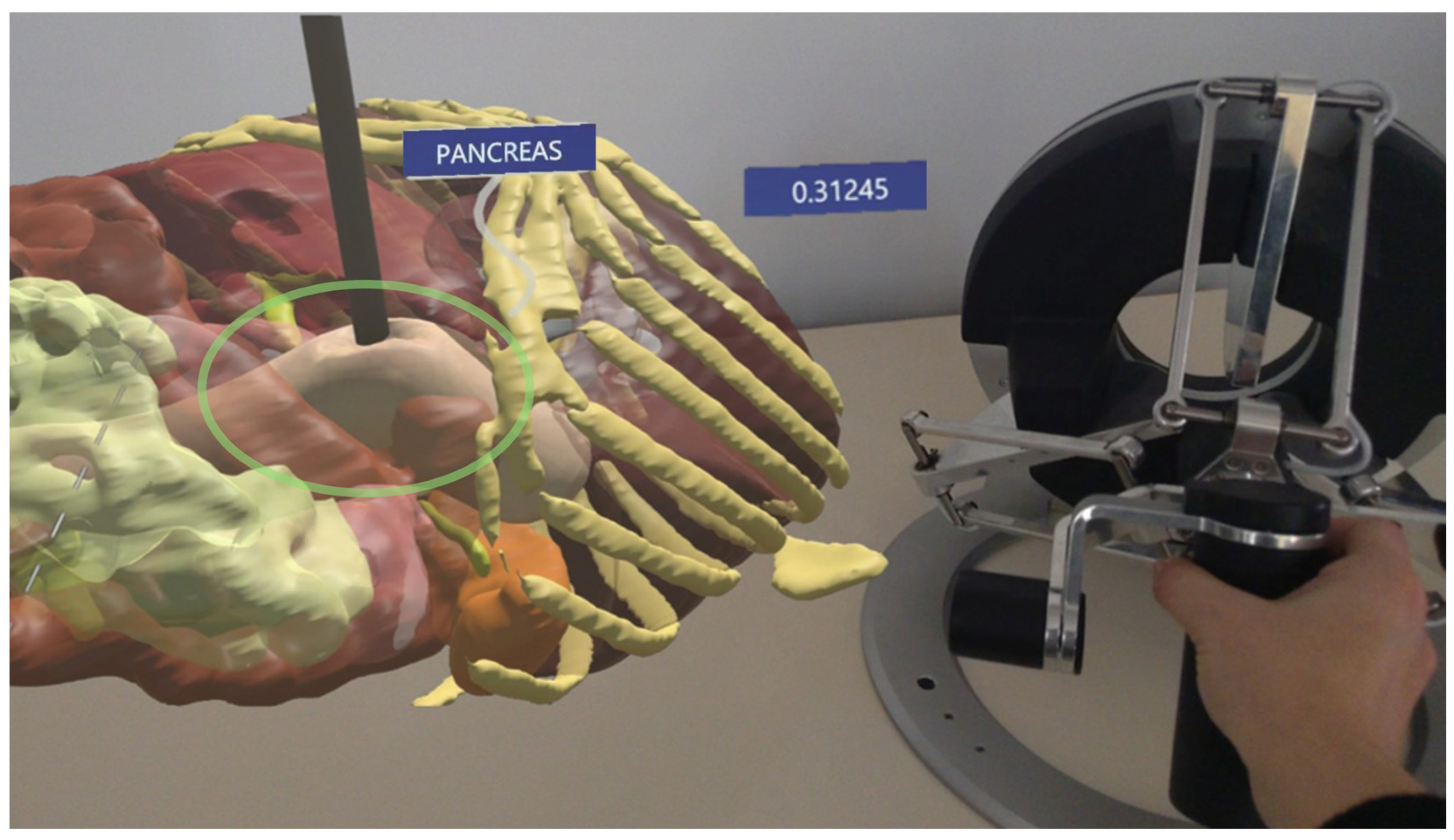

2.5. Augmented Reality Environment Integration

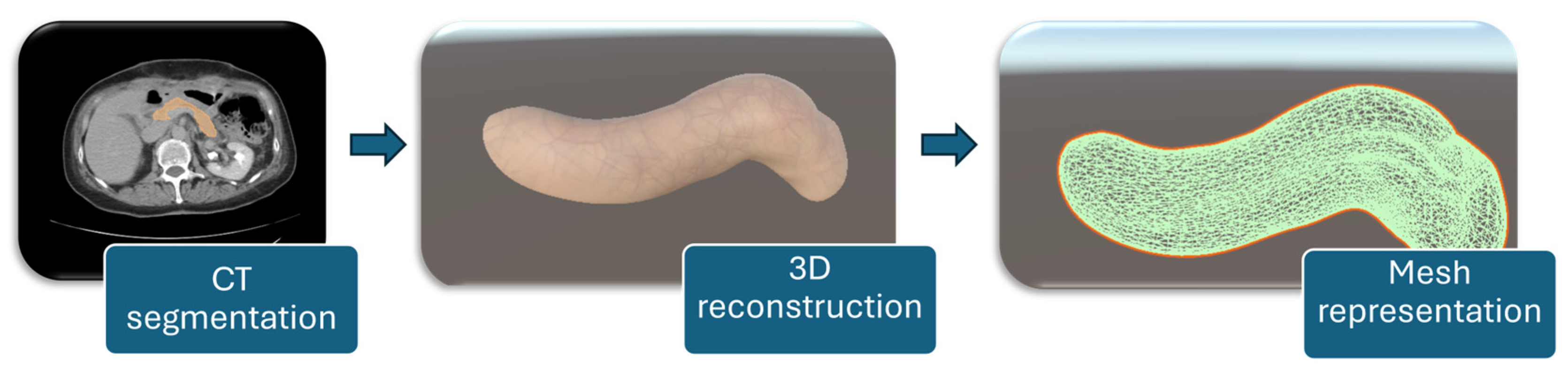

2.6. Pancreas Reconstruction and Setup

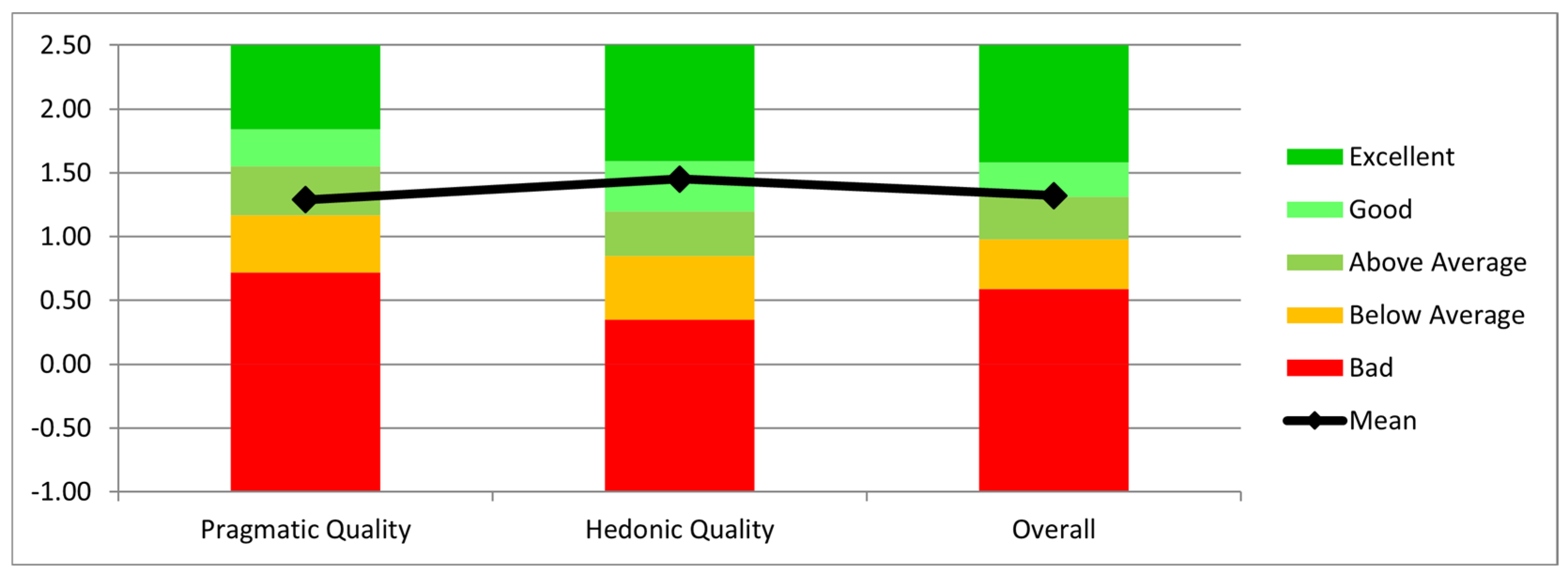

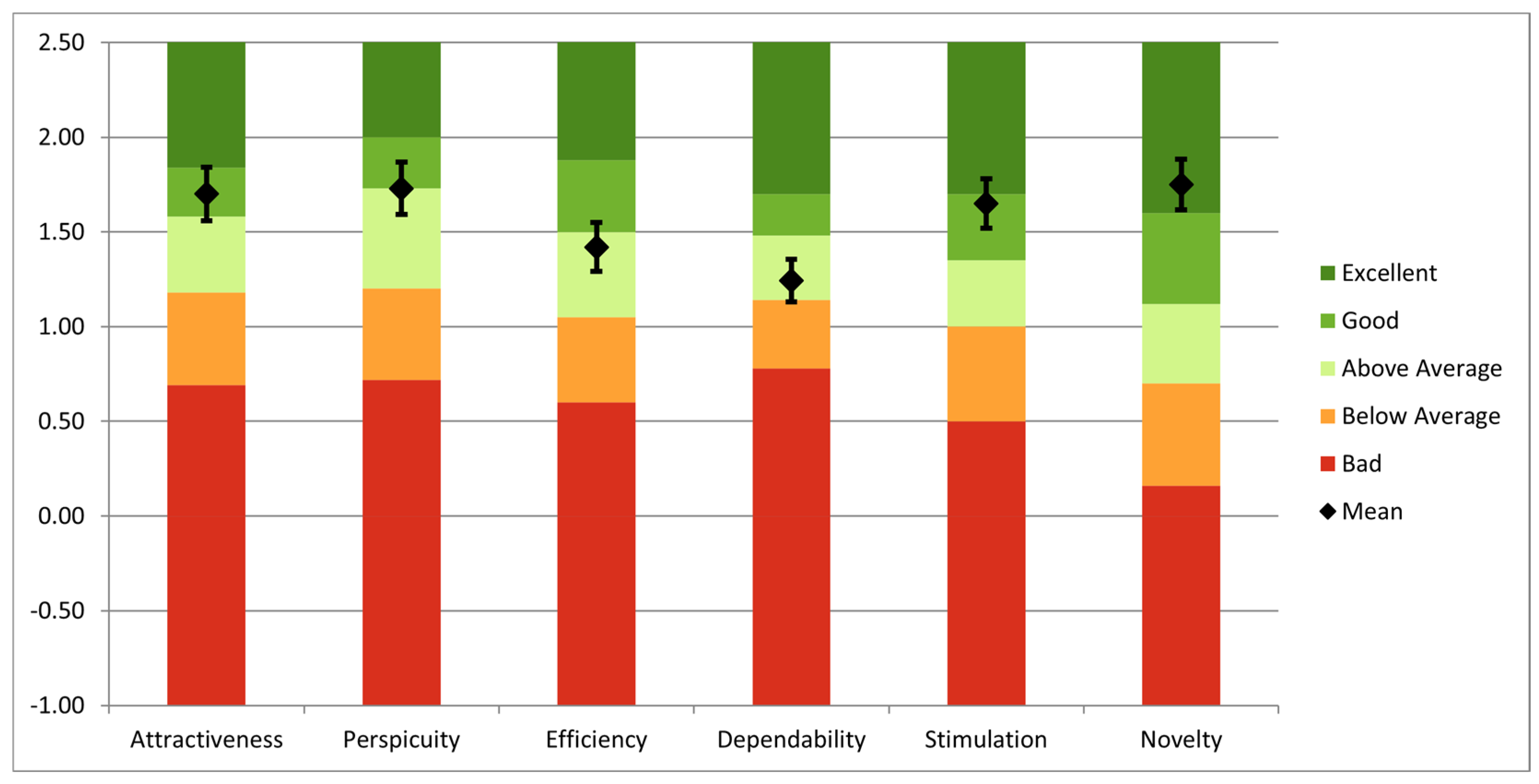

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Procedure Step | Medical Tasks |
|---|---|
| Step 1: Preplanning | Review the medical history. Plan the approach. Reconstruct the organ in 3D with AR visualization. Define the patient and robot positions. |
| Step 2: Preparation | Induce anesthesia and position the robot. Calibrate the instruments and prepare the tools. Perform CO2 insufflation and make the trocar incisions. |
| Step 3: Surgical task | Perform dissection, mobilization, and tissue resection. Access the pancreas and retract adjacent organs. Seal the pancreatic stump, restore the digestive tract through anastomosis if needed, achieve hemostasis, and insert drains. |
| Step 4: Instrument retraction | Remove the instruments, release the CO2, and undock the robot. Suture the incisions. |
| Step 5: Procedure finalizing | Transfer the patient to intensive care. Document the procedure and sterilize all equipment. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pisla, D.; Hajjar, N.A.; Rus, G.; Gherman, B.; Ciocan, A.; Radu, C.; Vaida, C.; Chablat, D. Development of an Augmented Reality Surgical Trainer for Minimally Invasive Pancreatic Surgery. Appl. Sci. 2025, 15, 3532. https://doi.org/10.3390/app15073532
