Abstract

Robot-assisted minimally invasive surgery offers advantages over traditional laparoscopic surgery, including greater precision and improved patient outcomes. However, its complexity requires extensive training, which has led to the development of simulators that still face challenges such as limited feedback and a lack of realism. This study presents an augmented reality-based surgical simulator tailored for minimally invasive pancreatic surgery, integrating an innovative parallel robot, real-time AI-driven force estimation, and haptic feedback. Using Unity and the HoloLens 2, the simulator offers a realistic augmented environment, enhancing spatial awareness and planning in surgical scenarios. A convolutional neural network (CNN) model predicts forces without physical sensors, achieving a mean absolute error of 0.0244 N. Tests indicate a strong correlation between applied and predicted forces, with a haptic feedback latency of 65 ms, suitable for real-time applications. Its modularity makes the simulator accessible for training and preoperative planning, addressing gaps in current robotic surgery training tools while setting the stage for future improvements and broader integration.

1. Introduction

Minimally invasive surgery (MIS) offers several advantages over open surgery, including smaller incisions, shorter recovery times, and improved patient outcomes. Robotic systems have been shown to enhance precision and stability, but they require substantial training because of indirect manipulation, limited tactile feedback, and high hand–eye coordination demands. Conventional training on animal models or cadavers raises ethical and financial concerns, prompting the rise of virtual surgical simulators as safer, more reproducible environments for skill development [1].

Early commercial robotic surgical simulators such as the da Vinci® Skills Simulator (dVSS) [2,3] and the Robotic Surgical Simulator (RoSS) [4] provided immersive tasks with real-time performance metrics. However, their high costs stimulated research into customizable, cost-effective platforms like CRESSim [5] or the simulator proposed by Alan et al. [6]. These alternatives offer greater flexibility in creating specialized training scenarios while maintaining lower financial barriers.

Despite considerable progress in this field, several areas still need development. Force feedback is of particular importance, as it remains a challenge for producing realistic and immersive surgical simulations, and many researchers have focused on overcoming this limitation.

Recent advances in surgical simulation have focused on various individual components that enhance realism and training efficacy. For instance, ref. [7] investigated the simulation of force feedback by modeling tissue interactions in spinal surgery simulators, demonstrating the challenges associated with accurately replicating tissue behavior. Similarly, ref. [8] developed a haptic feedback system integrated into a virtual reality framework for surgical planning, with an emphasis on interface design and tactile response. These studies highlight the importance of realistic force feedback, yet they are limited by their reliance on physical sensors, which are not only costly but also sensitive to environmental variations.

In the domain of augmented reality, research such as [9] has shown that AR can significantly enhance visual immersion and spatial awareness during surgical training. Commercial platforms like the da Vinci Skills Simulator and the Robotic Surgical Simulator also incorporate AR and haptic feedback elements. However, these systems typically employ traditional physical sensors for force measurement and are not specifically optimized for minimally invasive pancreatic surgery.

Despite these advancements, no existing work has yet integrated these individual technologies into a single, unified system that specifically addresses the challenges of replicating precise force interactions in pancreatic surgery.

To address these gaps, this research presents an innovative augmented reality-based simulator that not only determines the force exerted by an instrument on an organ but also visualizes it within the simulation and renders it as haptic feedback for the user.

The simulator is built around a parallel robot developed specifically for minimally invasive pancreatic surgery. The robot offers precision and stability, making it suitable for the requirements of this surgery and enhancing the simulator’s ability to replicate surgical scenarios.

The simulator features the following key innovations:

- An innovative parallel robot particularly designed for minimally invasive pancreatic surgery;

- A real-time augmented reality environment for enhanced visualization and spatial guidance;

- An AI-driven force prediction model that replaces traditional physical sensors, thus reducing costs and improving adaptability to variable conditions;

- A high-fidelity haptic feedback algorithm to ensure realistic interactions.

Following the introduction, the paper is structured as follows: Section 2 details the methodology for developing the simulator, covering the parallel robot design, the CNN-based force prediction model, and the integration of augmented reality and haptic feedback. Section 3 presents the results, focusing on system performance and user evaluations. Section 4 discusses the simulator’s contributions to surgical training, highlighting its benefits and areas for improvement. Finally, Section 5 concludes by summarizing the simulator’s potential for advancing robotic-assisted surgical training.

2. Materials and Methods

2.1. Simulator’s Architecture

Targeting minimally invasive pancreatic surgery, the simulator is designed to be used in Step 1 of the medical protocol in Table 1, which was developed in collaboration with hepato-pancreato-biliary surgeons. Using the simulator, surgeons can visualize and analyze the appropriate positioning of both the patient and the robot in the operating room.

Table 1.

Medical protocol for minimally invasive pancreatic surgery.

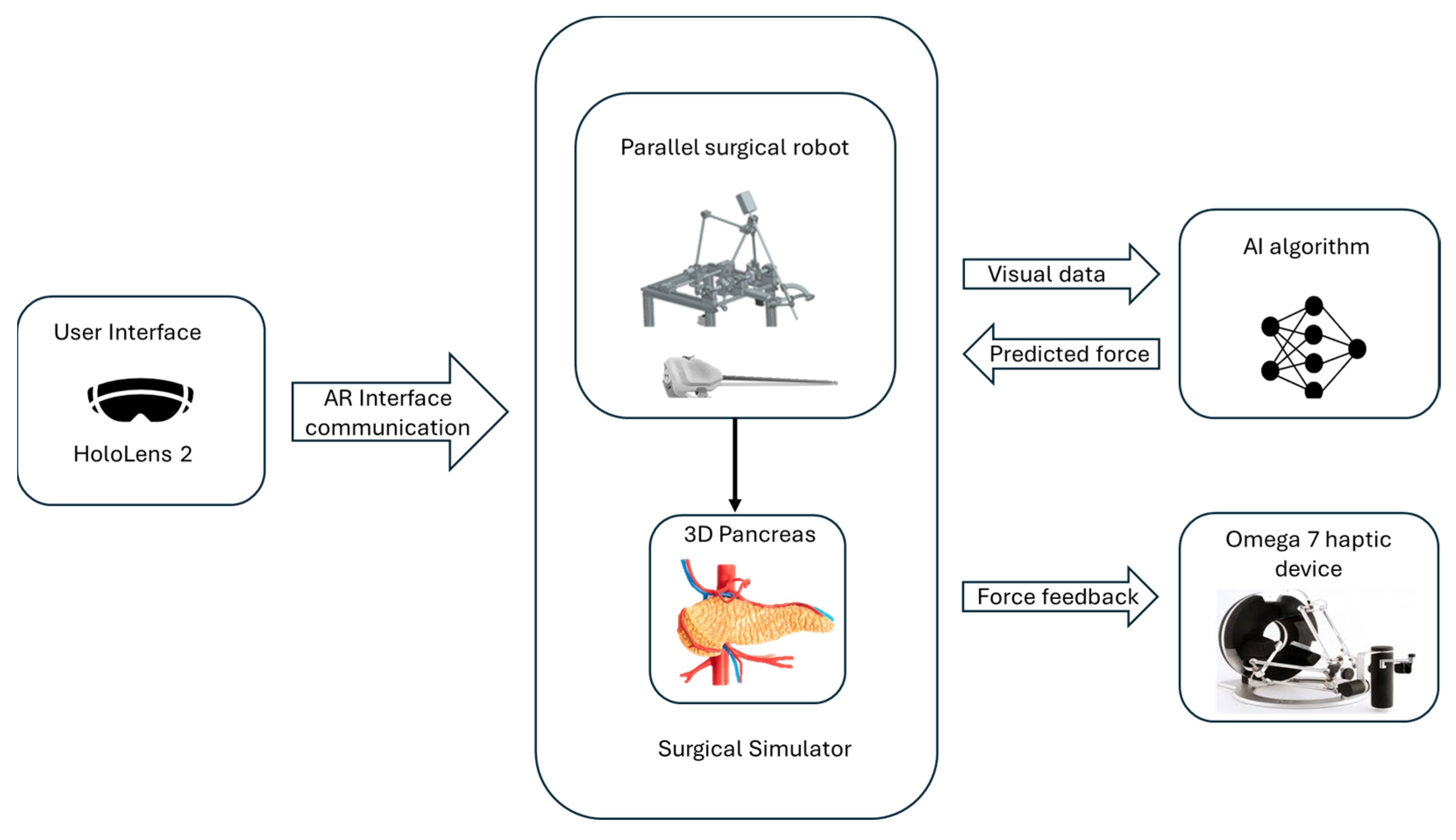

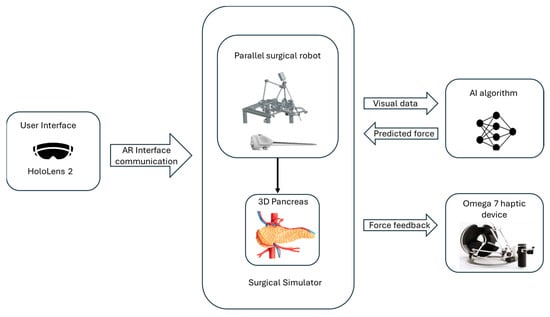

The simulator is built on a modular architecture that integrates several key components to provide a comprehensive training environment, as can be seen in Figure 1.

Figure 1.

The architecture of the simulator.

The surgical simulator integrates a surgical parallel robot developed for minimally invasive pancreatic surgery (detailed in Section 2.2) with an Omega 7 haptic device [10], which provides the simulator’s force feedback. The Omega 7 (provided by Force Dimension, Allée de la Petite Prairie 2, CH-1260 Nyon, Switzerland) is a high-precision force-feedback interface designed to provide realistic tactile sensations and precise control in virtual and robotic environments, featuring seven degrees of freedom for advanced simulation applications [11].

To predict the force applied during surgical procedures, the system uses a convolutional neural network (CNN) model. The trained model estimates the force by analyzing visual data from the simulation, without physical sensors, which allows the simulator to provide precise and dynamic force feedback during surgical procedures.

The simulator is developed in the Unity Engine (Editor version 2021.3.16f1), using its physics engine to simulate interactivity and physical behavior [12]. It targets AR environments, particularly the Microsoft HoloLens 2 device (provided by Microsoft Corporation, One Microsoft Way, Redmond, WA 98052-6399, USA) [13]. With HoloLens 2, users can visualize the 3D organs and the robot’s structure and interact with them in real time. Using spatial mapping, HoloLens 2 precisely determines the direction and movement of the surgical tools relative to the patient’s body and its internal structures. This integration supports the preoperative planning stage by enabling visualization of the anatomy and of the robot’s movements during the procedure. By simulating the elasticity of pancreatic tissue, the simulator provides users with feedback on the force applied during interactions.

2.2. ATHENA Parallel Robot for Minimally Invasive Pancreatic Surgery

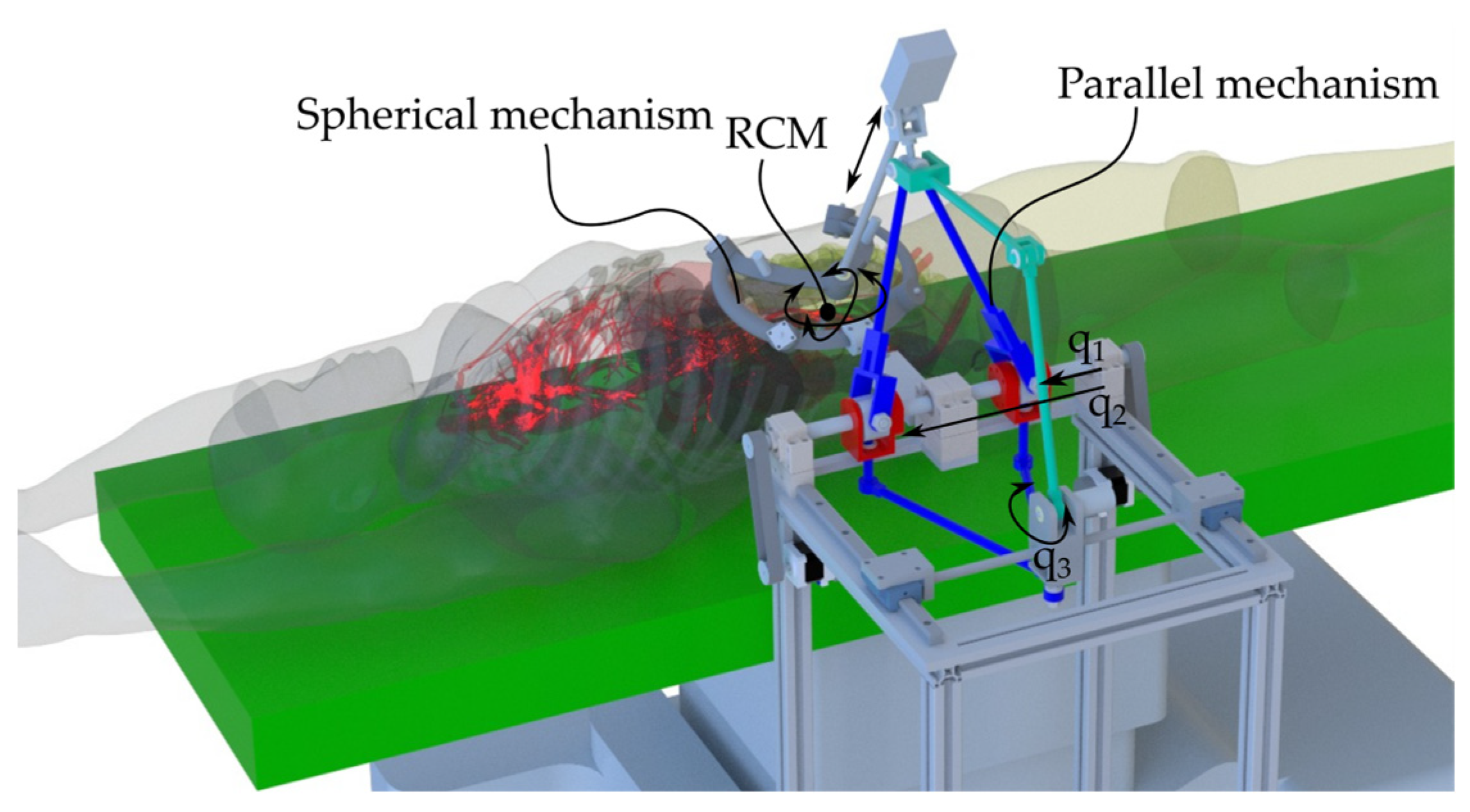

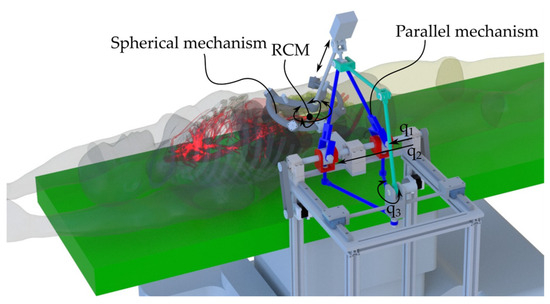

Designed as an assistant for the surgeon, the ATHENA robotic system provides precise control for accurate simulation of surgical procedures (Figure 2). It can act as an organ retractor or handle any other active surgical instrument used as a third surgical tool (e.g., the endoscopic camera), adjusting its orientation and position according to the requirements of the primary surgeon.

Figure 2.

ATHENA parallel robot for minimally invasive surgery in the medical environment.

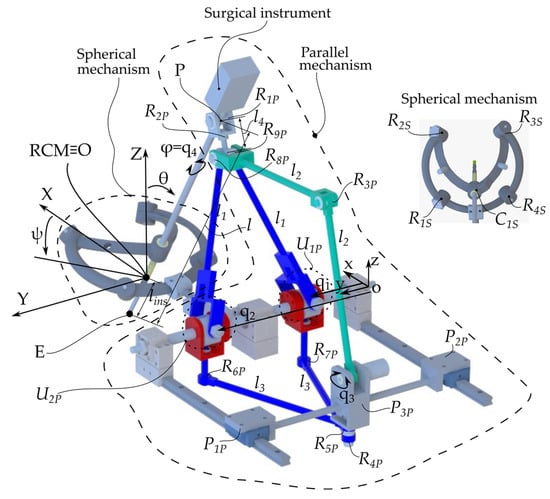

The ATHENA robot [14] has a modular architecture consisting of two parallel modules (Figure 3). The first module is a passive parallel Spherical Mechanism (SM) with an architecturally constrained Remote Center of Motion (RCM), which ensures that the surgical instrument always passes through the RCM. It has four degrees of freedom (DOFs): three orientations of the surgical instrument relative to the fixed coordinate system OXYZ (whose origin coincides with the RCM) and one translation along the instrument axis for insertion and retraction. This translation is possible because one of the revolute joints of the SM was replaced with a cylindrical joint, C1S; the other revolute joints of the SM are RiS, i = 1–4.
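Because the SM forces the instrument through the RCM at the origin of OXYZ, the instrument tip can be written as the insertion length times a unit direction set by two of the orientation angles, while the roll about the instrument axis leaves the tip in place. The minimal sketch below illustrates this under an assumed spherical convention (θ as inclination from −Z, ψ as azimuth about Z, lins measured from the RCM to the tip); the robot’s actual angle definitions are those of its own equations and may differ.

```python
import math


def tip_position(psi, theta, l_ins):
    """Tip E of an instrument pivoting about an RCM at the origin of OXYZ.

    Hypothetical convention (not taken from the ATHENA model): the instrument
    axis points along u = (sin(theta)cos(psi), sin(theta)sin(psi), -cos(theta)),
    and l_ins is the distance from the RCM to the tip. The roll angle phi spins
    the instrument about its own axis and therefore does not move E.
    """
    u = (math.sin(theta) * math.cos(psi),
         math.sin(theta) * math.sin(psi),
         -math.cos(theta))
    return tuple(l_ins * c for c in u)


def instrument_coords(x_e, y_e, z_e):
    """Inverse map: recover (psi, theta, l_ins) from a tip position E."""
    l_ins = math.sqrt(x_e ** 2 + y_e ** 2 + z_e ** 2)
    theta = math.acos(-z_e / l_ins)  # inclination from -Z, in [0, pi]
    psi = math.atan2(y_e, x_e)       # azimuth about Z, in (-pi, pi]
    return psi, theta, l_ins
```

Away from θ = 0, where ψ is undefined, every tip position maps back to a unique (ψ, θ, lins), which is why the two coordinate sets can describe the same instrument pose.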

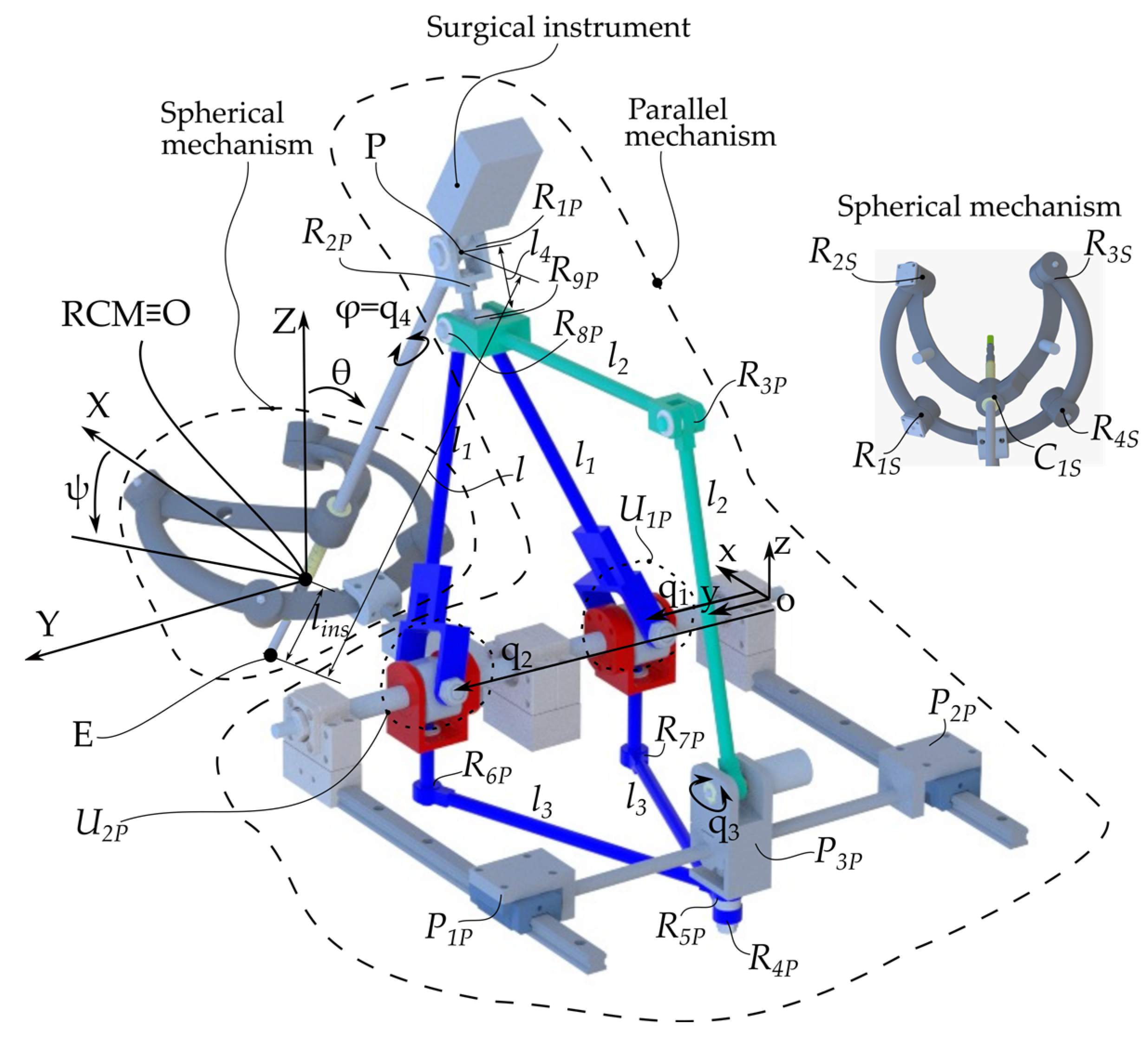

Figure 3.

ATHENA parallel robot kinematic scheme.

The second module is an active 4-DOF Parallel Mechanism (PM) with three kinematic chains. The first chain consists of the active prismatic joints q1 and q2, connected to the l1 links through the universal joints U1P and U2P; the l1 links are connected to each other through the passive revolute joint R9P. The second chain consists of the same active joints q1 and q2, connected to the l3 links through the R6P and R7P revolute prismatic joints; the l3 links are connected through the passive revolute joint R4P. This chain is connected to the third kinematic chain through the revolute joint R5P. The third chain is serial and consists of the active revolute joint q3 and the passive revolute joints R3P and R8P, connected through the l2 links. The surgical instrument is connected to the 4-DOF parallel module through a universal joint consisting of two passive revolute joints (R1P and R2P) intersecting at point P, the center of revolute joint R1P.

The parallel robot controls the 4 DOFs of the surgical instrument, which can be expressed either as the orientation angles ψ, θ, and φ together with the insertion length lins, or as the coordinates of point E(XE, YE, ZE) together with the orientation angle φ. These two sets of coordinates are related by the following equation:

Besides the geometrical parameters of the robot (l1–l4 and the surgical instrument’s length l), the coordinates of the mobile coordinate system oxyz in the fixed coordinate system OXYZ (l01, l02, and l03) are required.

The coordinates of point P are as follows:

The input–output equations of the ATHENA robot are presented in Equation (3), from which the inverse kinematics (the active joint coordinates q1, q2, q3, and q4) is derived:

where

2.3. Haptic Device Integration

The Omega 7 haptic device was integrated into the system to control the virtual ATHENA parallel robot and to provide the required force feedback to the user.

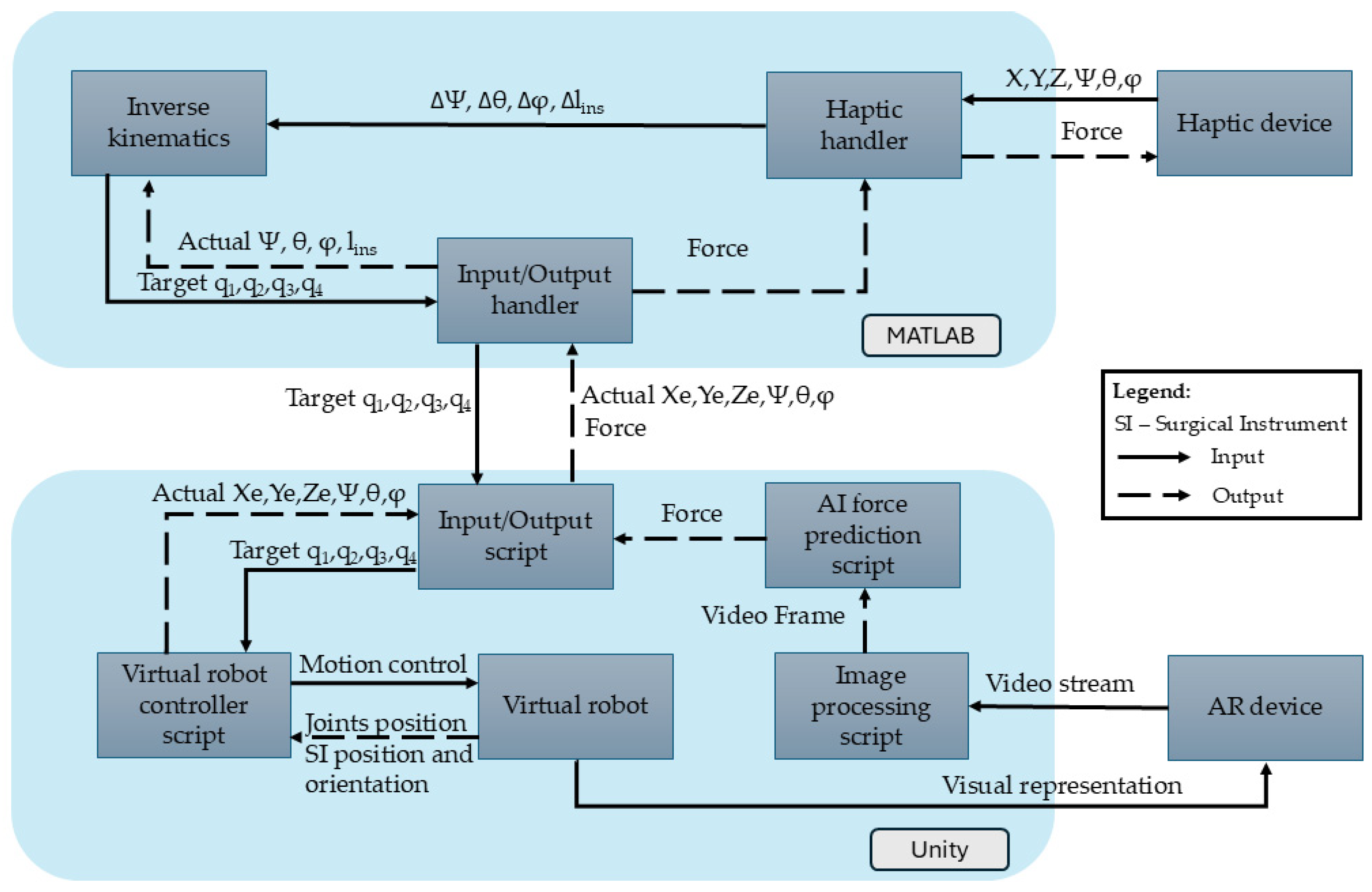

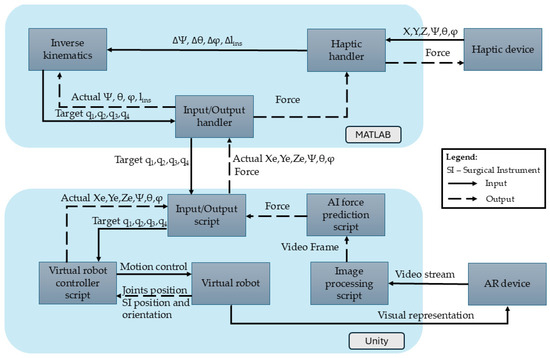

Omega 7 is a 7-DOF haptic device with hybrid architecture. It uses a Delta parallel architecture with 3 active DOFs used to provide force feedback. It also has a serial wrist with 3 passive DOFs used for orientation and a 1-DOF pinch used for the active function of the surgical instrument (i.e., gripping). The Omega 7 enables real-time control of the robot by continuously updating the end-effector position based on the user’s movements, generating the X, Y, Z, ψ, θ, and φ coordinates (Figure 4).

Figure 4.

Haptic device integration into AR-based simulator.

The positions of both the robot and the haptic device are continuously monitored and processed in MATLAB R2024b. The MATLAB application consists of three key modules:

- Haptic Handler Module—Establishes communication with the haptic device, recording its position (X, Y, Z), orientation (ψ, θ, φ), and gripper state. It also sets forces along the X, Y, and Z axes of the Omega 7 haptic device.

- Inverse Kinematics Module—Uses the output from the Haptic Handler, namely the desired change in orientation and position in terms of Δψ, Δθ, Δlins, and Δφ determined using Equation (1) to compute new joint positions (q1, q2, q3, and q4) that replicate the user’s movements.

- Input/Output Handler—Transmits the computed joint positions (q1, q2, q3, and q4) to Unity via a .NET pipeline and receives the end-effector’s position and orientation from the virtual environment in Unity.
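The wire format of the .NET pipeline between MATLAB and Unity is not specified here. Purely as a hypothetical illustration of such an exchange, the four active-joint values could travel in a fixed-size little-endian frame: a sequence number followed by q1 to q4 as 64-bit floats. The layout and field order below are assumptions, written in Python with the standard `struct` module rather than in MATLAB or C#.

```python
import struct

# Hypothetical frame layout: uint32 sequence number + four float64 joint values
# (q1, q2, q3, q4), little-endian; "<" also disables alignment padding.
JOINT_FRAME = struct.Struct("<I4d")


def pack_joints(seq, q):
    """Serialize one set of active-joint values (MATLAB -> Unity direction)."""
    if len(q) != 4:
        raise ValueError("expected exactly four joint values (q1..q4)")
    return JOINT_FRAME.pack(seq, *q)


def unpack_joints(frame):
    """Parse a frame back into (sequence number, [q1, q2, q3, q4])."""
    seq, *q = JOINT_FRAME.unpack(frame)
    return seq, q
```

A fixed frame size lets the receiver read exactly `JOINT_FRAME.size` bytes per update, and the sequence number makes dropped or out-of-order frames detectable.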

In the Unity environment, multiple scripts ensure accurate end-effector movement according to the haptic device’s inputs:

- Input/Output Script—Receives joint positions from MATLAB R2024B to update the virtual robot and transmits the actual end-effector positions back to MATLAB.

- Virtual Robot Controller—Adjusts joint positions based on the mathematical model, ensuring precise replication of the user’s haptic movements.

- Virtual Robot—The Unity object whose configuration is changed upon the provided values of the active joints (q1, q2, q3, and q4).

- Image Processing Script—Processes the images from the HoloLens camera stream, selects every tenth frame, and resizes it to meet the AI model’s input requirements.

- AI Force Prediction Script—Analyzes the images provided by the Image Processing Script for force prediction. The AI force prediction script estimates the applied force at the end-effector and sends the data back to the Haptic Handler in MATLAB R2024B, ensuring real-time force feedback for the user.
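The Image Processing Script’s two steps, keeping every tenth frame and resizing it to the network’s input size, can be sketched as follows. The sketch is written in Python for readability rather than as Unity code, uses nearest-neighbour resampling, and assumes a 348 × 348 input (the size of the fine-tuning images); the actual script’s resampling method and target size are not stated.

```python
def every_nth(frames, n=10):
    """Yield frames 0, n, 2n, ... of a camera stream (every n-th frame)."""
    for i, frame in enumerate(frames):
        if i % n == 0:
            yield frame


def resize_nearest(img, out_h=348, out_w=348):
    """Nearest-neighbour resize of an image stored as a list of pixel rows.

    The 348 x 348 default is an assumption based on the training image size.
    """
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]
```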

2.4. Force Prediction and Feedback

To identify the most suitable artificial neural network (ANN), a literature survey was performed in which multiple ANNs were analyzed with respect to their performance in processing real-time images, with the aim of minimizing network training time while preserving an output accuracy above 90%. The surveyed studies included networks combining RGB images and robot state data [15], a modified Inception ResNet V2 for force-feedback estimation [16], and RCNNs that integrate CNNs and LSTMs to capture spatial and temporal dynamics for tensile force estimation in robotic surgery [17].

The image-based force prediction model is integrated into a force-feedback system to estimate the forces applied to biological tissues. The model predicts interaction forces from visual input, and these predictions are then translated into haptic feedback for robotic control in minimally invasive procedures. Because it replaces traditional sensor-based methods, this approach eliminates the need for physical force sensors.

The model is based on EfficientNetV2B0, the smallest model in the EfficientNetV2 family, which is recognized for its low resource usage [18].

The network uses depth-wise separable convolutions to improve computational efficiency and a global average pooling (GAP) operation that reduces each feature map to a single scalar by averaging over all of its spatial locations. This avoids the large fully connected layers that would otherwise follow a flattened feature map. A dense layer with 1024 neurons is then added on top of the pooled features to help the model recognize more complex patterns.
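Global average pooling turns a stack of C feature maps of size H × W into a vector of C channel means, which a dense layer can then consume. The pure-Python sketch below uses nested lists in place of tensors and assumes a ReLU activation for the dense layer, which the description above does not specify; a deep learning framework would provide both operations as built-in layers.

```python
def global_average_pool(features):
    """features: C feature maps, each a list of H rows of W values -> C means."""
    pooled = []
    for fmap in features:
        total = sum(sum(row) for row in fmap)
        count = sum(len(row) for row in fmap)
        pooled.append(total / count)
    return pooled


def dense_relu(x, weights, bias):
    """Fully connected layer with an (assumed) ReLU; one weight row per neuron."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]
```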

To improve accuracy, the model also includes Squeeze-and-Excitation (SE) blocks, which enhance the network’s ability to focus on the most informative features; residual connections, which improve gradient flow, speed up training, and allow deeper networks with better performance; and dropout layers, which prevent overfitting.
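A Squeeze-and-Excitation block squeezes each channel to a scalar by global average pooling, passes the resulting vector through a small bottleneck (reduce, ReLU, expand, sigmoid), and rescales every channel by its gate. The sketch follows the original SE formulation on nested lists with hand-picked illustrative weights and no biases; EfficientNet-family implementations may use a different bottleneck activation.

```python
import math


def squeeze_excite(features, w_reduce, w_expand):
    """Squeeze-and-Excitation on C feature maps (each a list of rows).

    w_reduce: R x C bottleneck weights, w_expand: C x R weights (no biases).
    Returns the rescaled feature maps and the per-channel gates.
    """
    # Squeeze: one scalar per channel via global average pooling.
    s = [sum(map(sum, f)) / sum(len(r) for r in f) for f in features]
    # Excitation: bottleneck with ReLU, then sigmoid gates in (0, 1).
    z = [max(0.0, sum(w * v for w, v in zip(row, s))) for row in w_reduce]
    gates = [1.0 / (1.0 + math.exp(-sum(w * v for w, v in zip(row, z))))
             for row in w_expand]
    # Scale: multiply every value of channel c by its gate.
    scaled = [[[g * v for v in row] for row in f] for f, g in zip(features, gates)]
    return scaled, gates
```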

For this study, the model was initially trained on images of porcine pancreatic tissue.

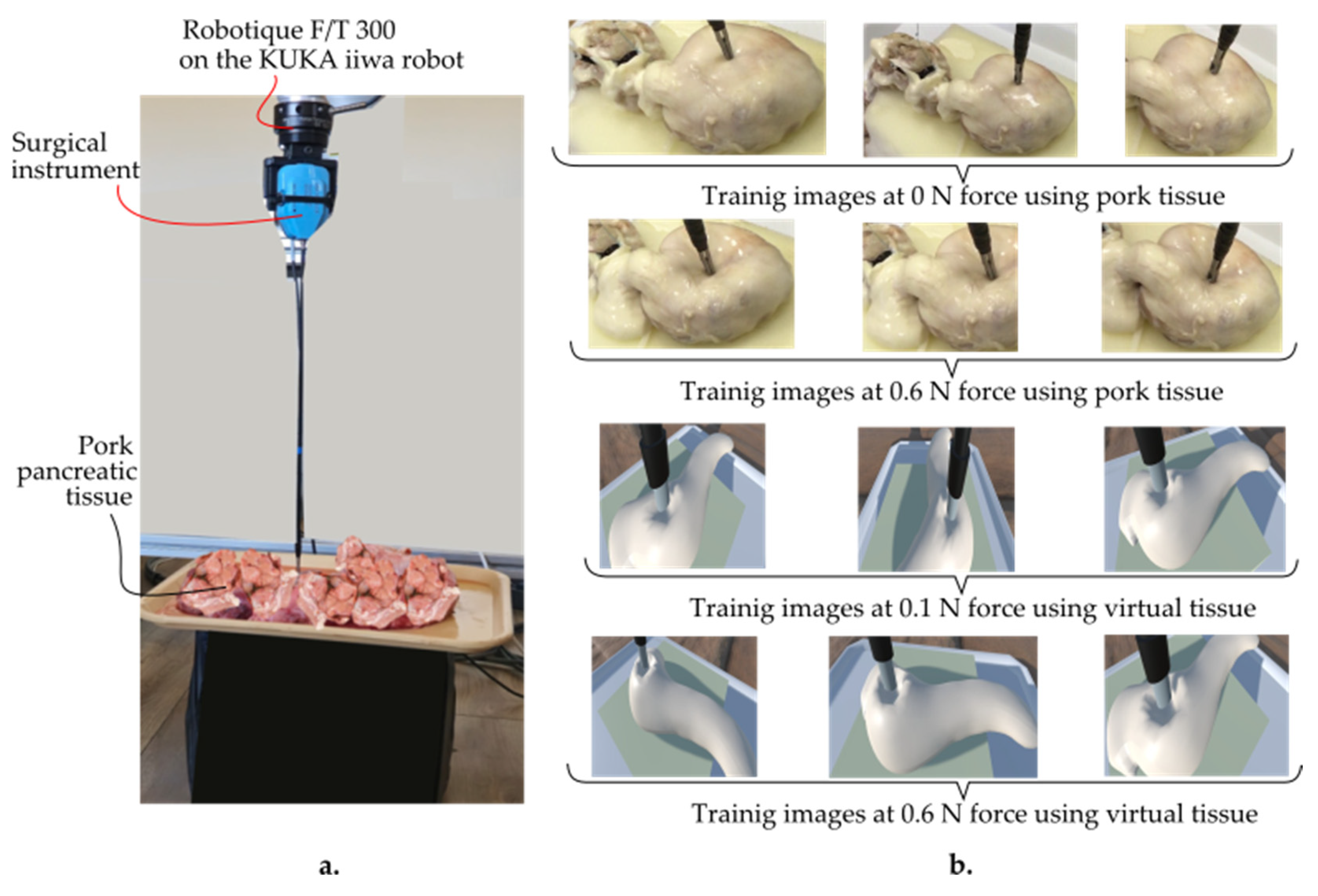

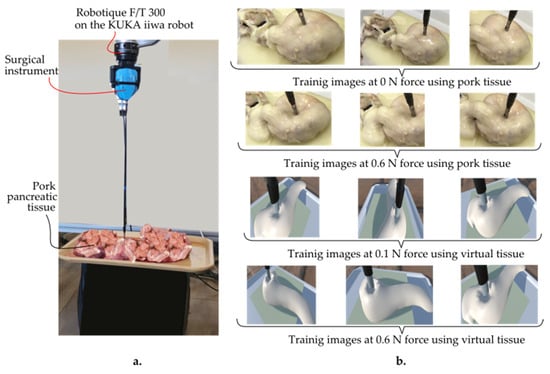

The data were collected by recording videos of the tissue response to applied forces ranging from 0 to 1 N, using a KUKA LBR iiwa 7 R800 robot (provided by KUKA AG, Zugspitzstraße 140, 86165 Augsburg, Germany) equipped with a Robotiq FT 300 force–torque sensor (provided by Robotiq Inc., 966 Chemin Olivier, Suite 500, Lévis, QC G7A 2N1, Canada) and an endoscopic camera (provided by Olympus Corporation, 3500 Corporate Parkway, Center Valley, PA 18034, USA) (Figure 5).

Figure 5.

(a) Training setup of the AR-based simulator. (b) Training images of porcine pancreatic tissue and virtual tissue at 0 N and 0.6 N, respectively, and at 0.1 N and 0.6 N from various camera angles.

The force sensor provided precise force measurements, while the camera captured HD images from multiple angles (frontal, lateral, and oblique) to ensure a diverse dataset. Each video lasted about 30 s, with the force held constant throughout. Frames were extracted from the videos using the OpenLabeling tool, yielding 2064 images, which were labeled with their corresponding force values and stored in a CSV file for model training.
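Pairing extracted frames with their measured force and writing them to a CSV file could look like the sketch below. The column names, file-name pattern and the assumption that every frame of a video inherits that video’s constant force are illustrative choices, not details of the OpenLabeling export.

```python
import csv
import io


def write_labels(videos, out):
    """Write one (image, force_N) row per extracted frame; return the row count.

    videos: mapping video_id -> (force_N, number_of_frames); the force is
    assumed constant over a recording, so every frame inherits it.
    out: any writable text stream (an open file or io.StringIO).
    """
    writer = csv.writer(out)
    writer.writerow(["image", "force_N"])
    rows = 0
    for video_id, (force_n, n_frames) in videos.items():
        for k in range(n_frames):
            writer.writerow([f"{video_id}_frame{k:05d}.png", f"{force_n:.1f}"])
            rows += 1
    return rows


def labels_csv_text(videos):
    """Convenience wrapper returning the CSV content as a string."""
    buf = io.StringIO(newline="")
    write_labels(videos, buf)
    return buf.getvalue()
```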

The resulting dataset was split into 60% for training, 20% for validation, and 20% for testing. The model predicts forces in the range of 0 to 1 N in increments of 0.1 N, as no visible tissue deformation was observed beyond this threshold.
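A reproducible 60/20/20 split, together with snapping force labels to the 0.1 N grid on [0, 1] N, can be sketched as follows. The seed, shuffling strategy and rounding rule are assumptions, since only the proportions and the increment are reported.

```python
import random


def split_60_20_20(items, seed=0):
    """Shuffle reproducibly and split into train/validation/test (60/20/20)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * 0.6)
    n_val = int(len(items) * 0.2)
    # The test set takes the remainder so no sample is lost to rounding.
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])


def snap_to_grid(force_n, step=0.1, lo=0.0, hi=1.0):
    """Clamp a force to [lo, hi] N and round it to the nearest step."""
    clamped = min(max(force_n, lo), hi)
    # The outer round() removes binary floating-point residue such as 0.6000000000000001.
    return round(round(clamped / step) * step, 10)
```

With 2064 images this yields 1238 training, 412 validation and 414 test samples, the remainder going to the test set.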

Training was performed with the PyTorch library (Python 3.13, torchvision 0.11.0) on a 12th Gen Intel® Core™ i9-12900K CPU, using a batch size of 32, a learning rate of 0.00001, and 50 epochs. On the test dataset, the model achieved a mean absolute error (MAE) of 0.0016 and a loss of 0.0011, meaning that the predicted forces deviated from the measured forces by about 0.0016 N on average.
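The MAE is the mean of the absolute differences between measured and predicted forces, MAE = (1/n) Σ |Fᵢ − F̂ᵢ|, so when forces are expressed in newtons the MAE is directly an average error in newtons. A minimal sketch:

```python
def mean_absolute_error(measured, predicted):
    """MAE in the units of the inputs (here newtons)."""
    if len(measured) != len(predicted) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(m - p) for m, p in zip(measured, predicted)) / len(measured)
```

For made-up measured forces [0.1, 0.5, 1.0] N and predictions [0.12, 0.49, 1.0] N, the function returns 0.01 N (up to floating-point rounding).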

Following the initial training, the model was adapted to process synthetic images generated in Unity as well. Specifically, the deformable virtual pancreatic tissue was subjected to known forces ranging from 0 to 1 N in Unity, and the resulting images were used for additional training. To keep the generated images as close to the originals as possible, the textures and lighting were reproduced for consistency. The final dataset contained 1831 images of 348 × 348 pixels.

The dataset was further augmented to increase the diversity of the training data, helping the model generalize better and avoid overfitting. The augmentations included random rotations, width and height shifts, shearing, zooming, horizontal flipping, and brightness adjustments.
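Two of the listed augmentations, horizontal flipping and brightness adjustment, are simple enough to show directly on a grayscale image stored as rows of 0–255 values. This is an illustrative sketch; the flip probability and brightness range are assumptions, and the geometric transforms (rotation, shifts, shear, zoom) would normally come from an image library. Because these transforms change the view rather than the tissue deformation, the force label passes through unchanged.

```python
import random


def hflip(img):
    """Mirror an image (list of pixel rows) left to right."""
    return [row[::-1] for row in img]


def adjust_brightness(img, factor):
    """Scale pixel intensities by factor and clip to the 0-255 range."""
    return [[min(255, max(0, round(v * factor))) for v in row] for row in img]


def augment(img, force_n, rng=None, p_flip=0.5, brightness=(0.8, 1.2)):
    """Randomly flip and re-light an image; the force label is returned unchanged."""
    if rng is None:
        rng = random.Random()
    if rng.random() < p_flip:
        img = hflip(img)
    img = adjust_brightness(img, rng.uniform(*brightness))
    return img, force_n
```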

Training was performed on the same hardware and software setup.

The Adam optimizer was used to train the model for 100 epochs with a batch size of 64 and a learning rate of 0.00001. To preserve previously learned characteristics while adjusting to the new environment, 25 layers of the model were frozen during this fine-tuning procedure.
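Freezing layers during fine-tuning means their parameters are excluded from the optimizer update. The toy sketch below applies the standard Adam update with the reported learning rate of 0.00001 to one scalar parameter per layer and skips the first 25 layers; which 25 layers were frozen, the moment coefficients (β1 = 0.9, β2 = 0.999) and ε = 1e-7 are assumptions here, and real layers hold tensors rather than scalars.

```python
import math


def new_adam_state(n_layers):
    """Step counter plus first (m) and second (v) moment estimates per layer."""
    return {"t": 0, "m": [0.0] * n_layers, "v": [0.0] * n_layers}


def adam_step(params, grads, state, lr=1e-5, frozen=25,
              beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update in place; layers with index < frozen are left untouched."""
    state["t"] += 1
    t = state["t"]
    for i, g in enumerate(grads):
        if i < frozen:  # frozen layer: keep the pretrained value
            continue
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g
        m_hat = state["m"][i] / (1 - beta1 ** t)  # bias correction
        v_hat = state["v"][i] / (1 - beta2 ** t)
        params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params
```

On the first step Adam’s bias-corrected ratio m̂/√v̂ equals the sign of the gradient, so each trainable parameter moves by almost exactly the learning rate, here 1e-5.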

The fine-tuned model performed well, achieving an MAE of 0.0244 N and a loss of 0.015.

The final trained model, originally saved in the Hierarchical Data Format version 5 (HDF5), was converted to the ONNX (Open Neural Network Exchange) format for easier deployment in Unity. The conversion preserved the model architecture, weights, and inference capabilities, ensuring compatibility. The ONNX model was then incorporated using Barracuda, Unity’s neural network inference library.

2.5. Augmented Reality Environment Integration

AR overlays holograms onto the real environment, creating an augmented experience. This technology is particularly useful in robotic surgery, where the surgeon’s information is limited to images from the endoscopic camera. In surgical simulators, AR offers several advantages. Its partially immersive nature allows better integration with real surgical environments and more realistic simulation of the actual operating room, and it improves spatial awareness and depth perception, giving a better understanding of the relationship between tool pathways and tissues. It also supports hand–eye coordination by preserving the user’s natural coordination, which eases the transition to real surgery [19].

HoloLens 2 was selected for this study because it has shown the most promising results across all evaluated parameters and has improved performance measures in surgical trainees [20].

The simulator was developed in Unity 3D, taking into account the AR framework, the physics engine, and high-quality graphics. Thanks to the NVIDIA PhysX physics engine integrated into Unity, the platform is particularly well suited to physical simulations of soft bodies such as organs [9].

An accurate representation of the anatomical structures and surgical tools within the virtual environment was essential to achieve a realistic simulation.

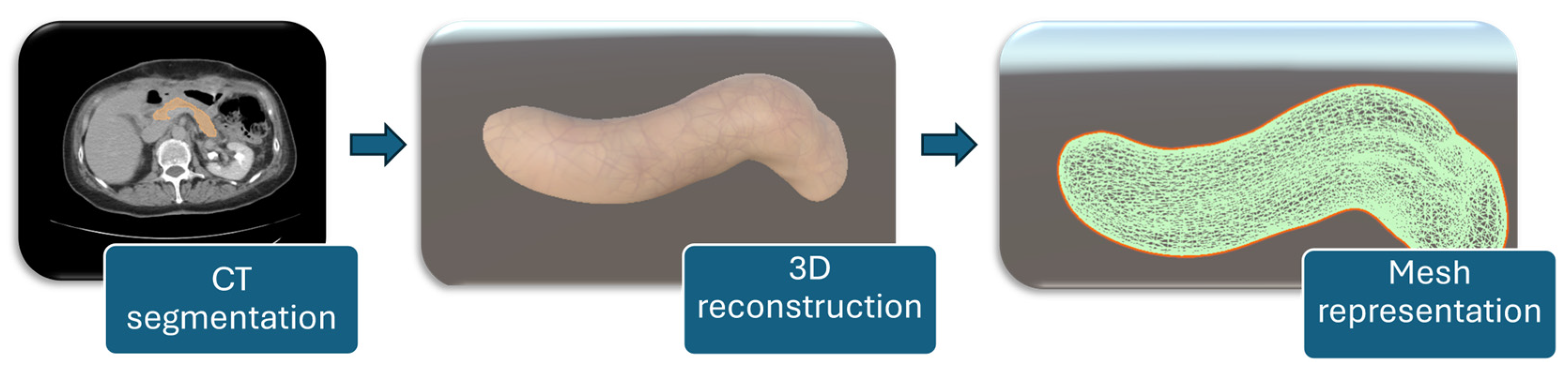

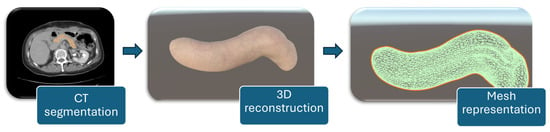

2.6. Pancreas Reconstruction and Setup

For a detailed representation of the soft tissue, the organ was reconstructed from CT scans. The process was carried out in 3D Slicer [21], where the target organ was segmented using the TotalSegmentator module [22], a deep learning-based tool that delineates the organ from the intensity patterns in the CT slices. After segmentation, the organ was reconstructed and exported as a 3D object. The object was then imported into Unity, where custom textures were applied to make it resemble porcine tissue more closely. As illustrated in Figure 6, the workflow for patient-specific pancreas modeling consists of CT segmentation of the organ, 3D reconstruction, and mesh representation for simulation purposes.

Figure 6.

Workflow for patient-specific pancreas modeling.

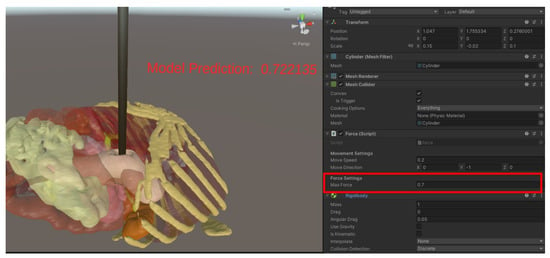

To approximate the biomechanical properties of the porcine pancreas in Unity, several techniques were implemented to simulate its key characteristics: Young’s modulus, Poisson’s ratio, and viscoelastic behavior.

A spring-based deformation model was developed to mimic the elastic modulus of the pancreas, reported as 1.40 ± 0.47 kPa [23]. The spring constants were set to represent a similar stiffness range, allowing the organ to deform when forces were applied and return to its original shape when they were removed.
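Treating a column of tissue with cross-section A and rest length L0 as a linear spring, Hooke’s law gives k = E·A/L0, one simple way to turn the reported modulus into a spring constant. The cross-section (1 cm²) and length (2 cm) below are hypothetical values chosen only for illustration, not parameters of the simulator.

```python
def spring_constant(youngs_modulus_pa, area_m2, rest_length_m):
    """Axial stiffness k = E * A / L0 (N/m) of a linear-elastic tissue column."""
    return youngs_modulus_pa * area_m2 / rest_length_m


def restoring_force(k, displacement_m):
    """Hooke's law: the spring pushes back against the displacement, F = -k * x."""
    return -k * displacement_m


# Hypothetical column: A = 1 cm^2, L0 = 2 cm; modulus 1.40 +/- 0.47 kPa [23].
for e_kpa in (1.40 - 0.47, 1.40, 1.40 + 0.47):
    k = spring_constant(e_kpa * 1e3, 1e-4, 0.02)
    print(f"E = {e_kpa:.2f} kPa -> k = {k:.2f} N/m")
```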

Poisson’s ratio of the pancreas, typically between 0.45 and 0.5 [24], was incorporated into the deformation model by simulating volume conservation during compression. In Unity, the Rigidbody component was used to apply forces along the z-axis to mimic compression, and its FreezePositionX and FreezePositionY constraints were enabled to prevent lateral movement. Restricting motion in the x and y directions in this way was intended to replicate the tissue’s resistance to lateral expansion under compression and to keep the volume constant during deformation. A mesh collider was added to detect collisions and drive the deformation of the mesh vertices: when forces are applied, the vertex positions are adjusted to reflect the interaction with the surgical instruments, producing realistic local deformations at the collision points.
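The link between Poisson’s ratio and volume conservation can be made explicit with a small-strain estimate. Under a uniaxial compressive strain ε_z with unconstrained lateral faces, the lateral strains are ε_x = ε_y = −ν ε_z, so the relative volume change is:

```latex
\frac{\Delta V}{V} \approx \varepsilon_x + \varepsilon_y + \varepsilon_z = (1 - 2\nu)\,\varepsilon_z ,
\qquad \nu = 0.45 \;\Rightarrow\; \frac{\Delta V}{V} \approx 0.1\,\varepsilon_z ,
\qquad \nu = 0.5 \;\Rightarrow\; \frac{\Delta V}{V} \approx 0 .
```

With ν between 0.45 and 0.5, the pancreas is therefore nearly incompressible: a 10% compression changes its volume by at most about 1%.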

A deformation failure threshold of 1 N was established, beyond which the mesh undergoes permanent modification: if the organ is subjected to forces exceeding this threshold, the mesh either ruptures or deforms permanently. Tissue failure at the 1 N threshold was simulated with custom scripts, which also measure strain over time to enforce these deformation limits.

To simulate the viscoelastic characteristics of the pancreas (relaxation times between 0.1 and 2 s), damping forces that increase with the deformation rate were applied. These forces imitate the time-dependent resistance of soft tissue to deformation, so that the organ resists rapid motions more than slow ones.
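A spring and a damper in parallel (a Kelvin–Voigt element) is one standard way to obtain a resisting force that grows with deformation rate, so that fast motions meet more resistance than slow ones; its retardation time is τ = c/k. The sketch below is an illustration, not the simulator’s scripts: the stiffness, the choice τ = 0.5 s from within the reported 0.1–2 s range, and the speeds are hypothetical.

```python
import math


def kelvin_voigt_force(k, c, x, x_dot):
    """Resisting force (N) of a spring k (N/m) and damper c (N*s/m) in parallel."""
    return -(k * x + c * x_dot)


def creep_displacement(force_n, k, tau, t):
    """Kelvin-Voigt creep under a constant load: x(t) = F/k * (1 - exp(-t/tau))."""
    return force_n / k * (1.0 - math.exp(-t / tau))


# Hypothetical parameters: stiffness K and retardation time TAU within 0.1-2 s.
K, TAU = 7.0, 0.5
C = K * TAU  # damping coefficient implied by tau = c / k

slow = kelvin_voigt_force(K, C, 0.01, 0.005)  # 1 cm indentation at 5 mm/s
fast = kelvin_voigt_force(K, C, 0.01, 0.05)   # same indentation at 50 mm/s
```

At the same 1 cm indentation, the faster motion produces the larger resisting force, which is the rate-dependent behaviour described above.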

Finally, time-dependent pressures were applied to replicate the stress relaxation observed in the pancreas during compression testing [24]: the stress in the organ was set to decrease over time.
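Stress relaxation under a held deformation is commonly modelled with a standard linear solid, whose stress decays exponentially from an instantaneous value σ0 towards a long-term value σ∞: σ(t) = σ∞ + (σ0 − σ∞)e^(−t/τ). The minimal sketch below, with hypothetical stresses and τ taken from the 0.1–2 s range, also gives a per-frame update suitable for a physics loop; it is not the simulator’s actual implementation.

```python
import math


def relaxed_stress(t, sigma_0, sigma_inf, tau):
    """Stress (Pa) at time t (s) after a step strain, standard-linear-solid form."""
    return sigma_inf + (sigma_0 - sigma_inf) * math.exp(-t / tau)


def step_relaxation(sigma, sigma_inf, tau, dt):
    """Per-frame update: apply the exact exponential decay over one step dt."""
    return sigma_inf + (sigma - sigma_inf) * math.exp(-dt / tau)
```

Because the per-frame update applies the exact exponential decay over each time step, repeated calls reproduce the closed-form curve regardless of frame rate.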

The parallel robot described in Section 2.2 was also imported into Unity. The robot was initially designed in Siemens NX, exported as STEP files, and converted to FBX files (a format supported by Unity).

Using Unity’s Configurable Joint component to model both the active and passive joints, the robot was set up to operate either with the haptic device or in a collaborative mode that allows manual control. The collaborative mode was implemented using the HoloLens 2 device.

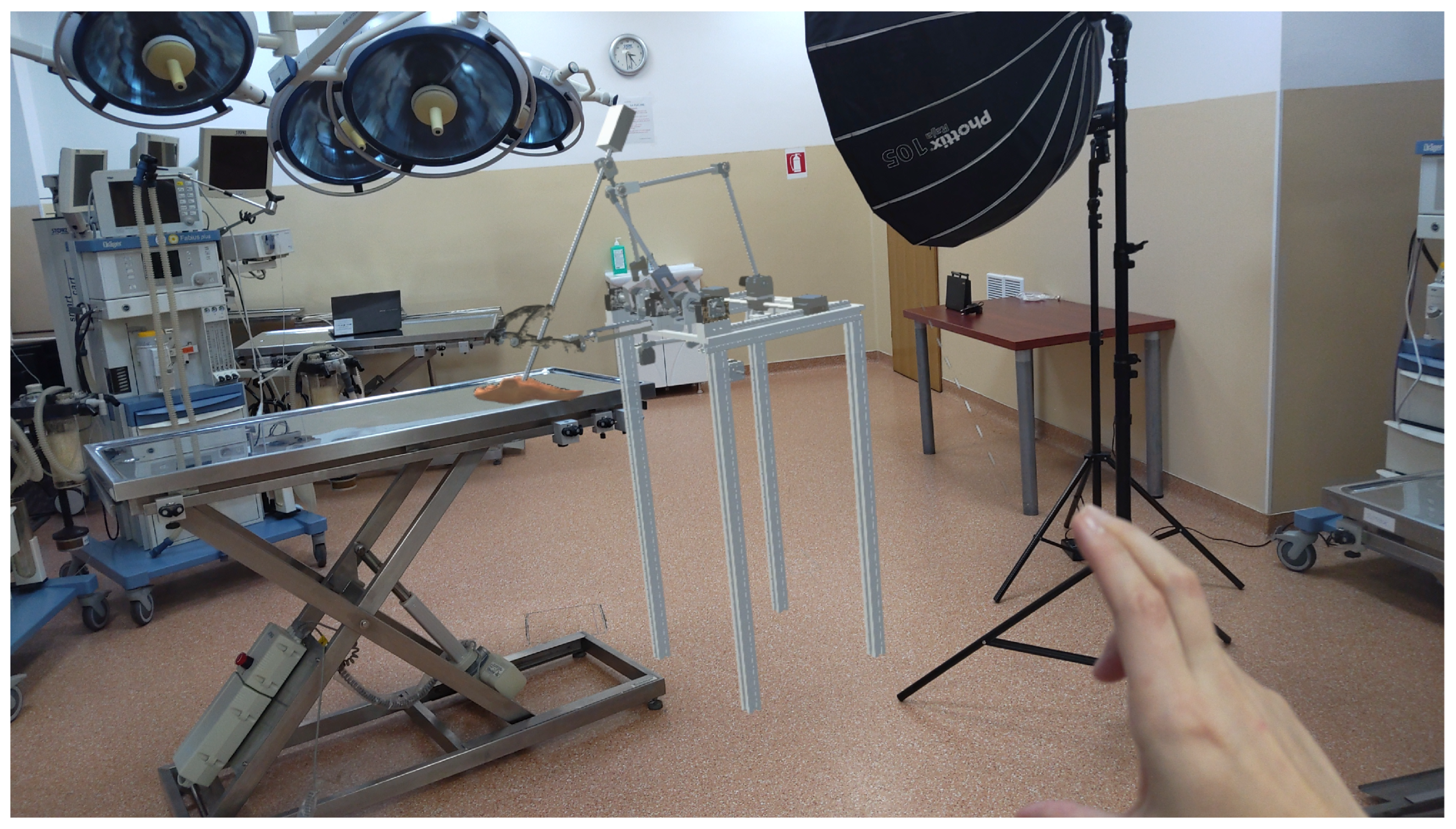

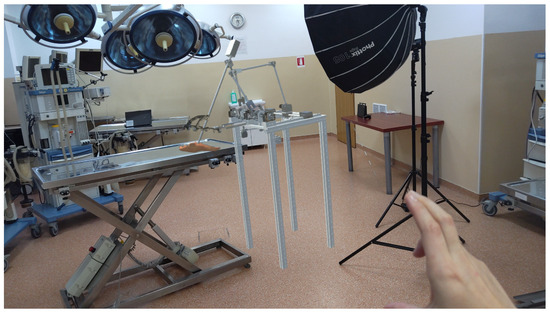

Integrating the robot as a hologram into the operating room (Figure 7) provides a powerful tool for both developers and medical professionals. With HoloLens 2, the robot is projected as a 3D hologram, enabling real-time visualization and interaction during surgical procedures. This allows developers to test and refine the robot’s functionality in a realistic, immersive environment without the need for physical prototypes. For medical professionals, the holographic representation improves their understanding of the robot’s movements and capabilities, facilitating more precise control and decision-making. Furthermore, this AR setup improves collaboration between the medical team and the robot, supporting the integration of robotic assistance during surgery and potentially contributing to better patient outcomes.

Figure 7.

Augmented reality with ATHENA parallel robot integrated into the operating room.

3. Results

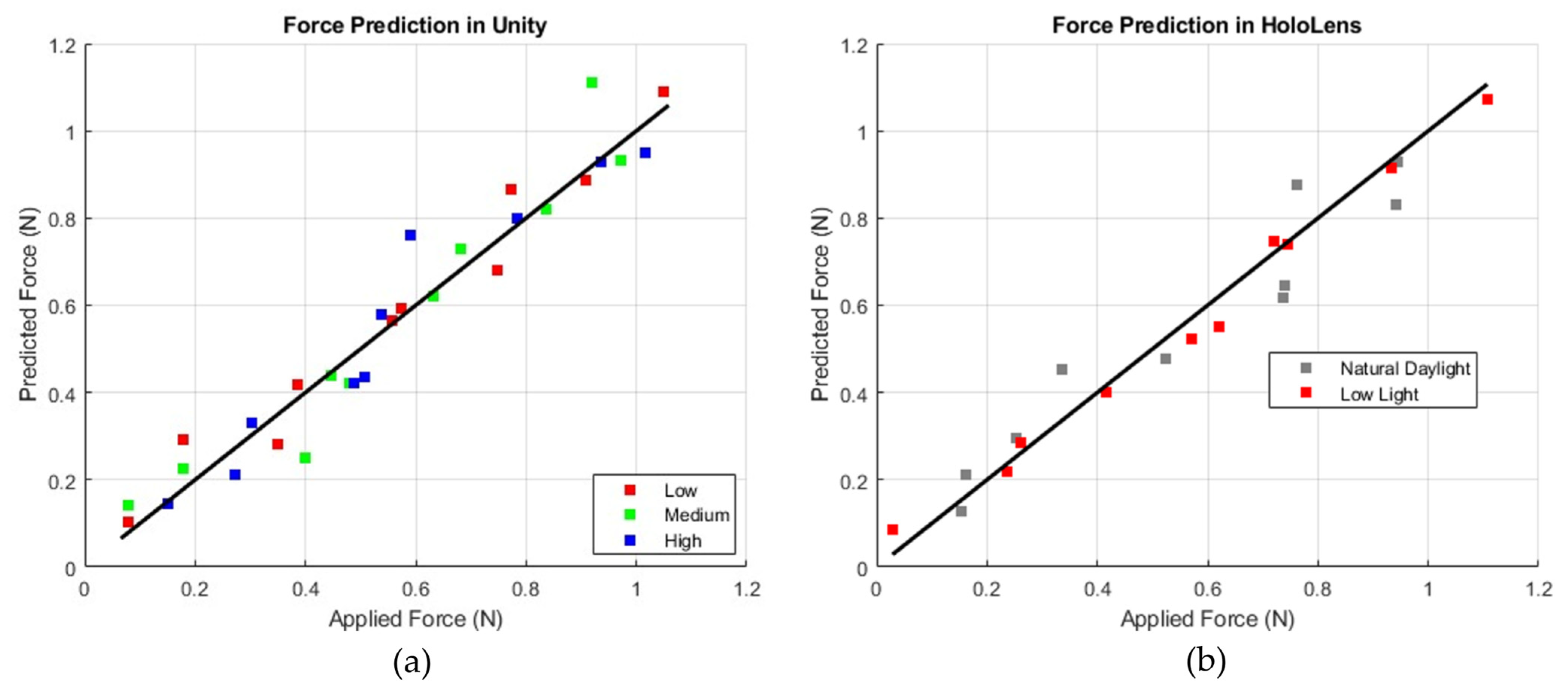

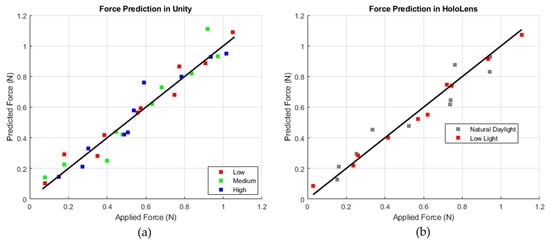

The simulator’s prediction accuracy was evaluated by applying known forces in Unity and comparing them to the forces predicted by the AI model (Figure 8). For each lighting condition (low, medium, and high brightness), forces ranging from 0.1 N to 1.0 N were incrementally applied, and the predicted forces were recorded.

Figure 8.

Force prediction in Unity.

The results indicated an average deviation of ±0.02 N and a maximum deviation of 0.045 N. For example, for an applied force of 0.5 N, the predicted forces fell within [0.48 N, 0.54 N] across all lighting conditions.

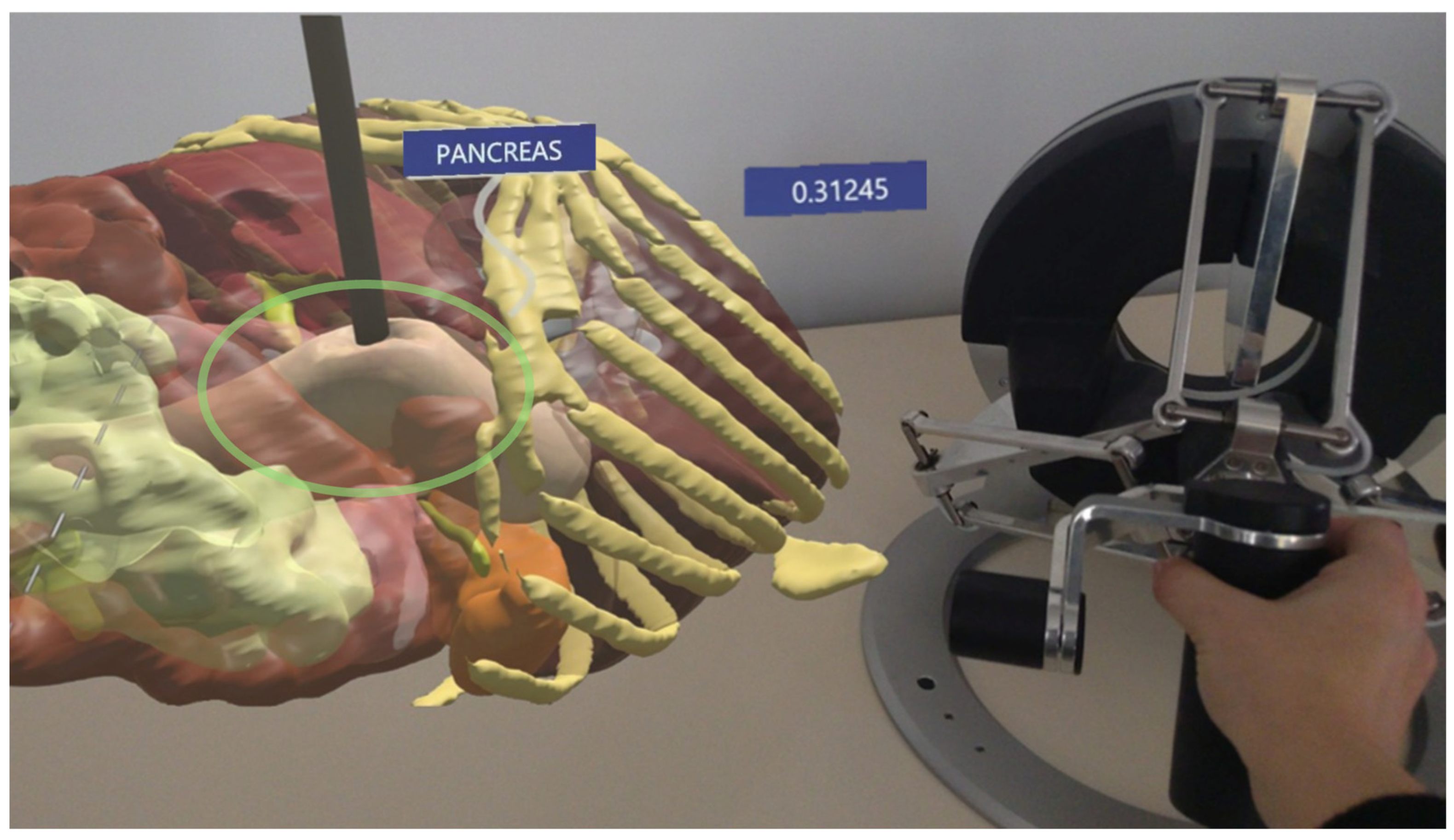

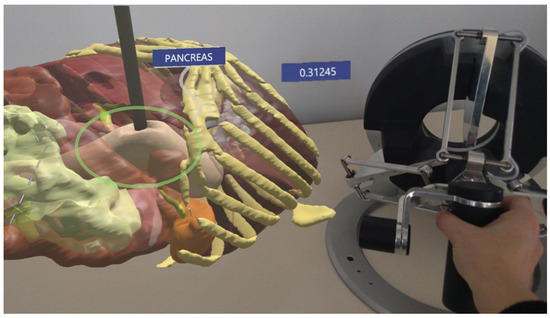

In HoloLens testing, similar validation was performed by simulating force interactions under two lighting environments: natural daylight and low light (Figure 9).

Figure 9.

The AR environment highlighting the force prediction in HoloLens: the hologram of the pancreas is on the left and the instrument pressing on the tissue and the haptic device are on the right.

Figure 10 plots the predicted force (N) against the applied force (N), with a diagonal line representing perfect agreement between the two. In the Unity plot, the data points for low, medium, and high brightness (red, green, and blue, respectively) remain consistently close to the diagonal, indicating stable accuracy across lighting conditions. In the HoloLens plot, with gray markers for natural daylight and red markers for low light, the low-light points lie closer to the diagonal, suggesting better prediction accuracy in low-light environments. Thus, while the Unity simulation performs consistently regardless of brightness, the HoloLens-based system performs best under low-light conditions, with slightly reduced accuracy in natural daylight.

Figure 10.

Comparison of force prediction performance: (a) Unity-based simulation with varying brightness levels and (b) HoloLens-based simulation under different lighting conditions (natural daylight and low light).

The simulator was also tested on unseen data, such as an instrument different from the one used in training. The deviation between predicted and applied forces increased slightly: over ten repetitions, the average deviation was 0.025 N, indicating that the model learned tissue deformation features and does not rely heavily on details such as the instrument used.

The simulator was also tested for the case in which the instrument applies a new force before the tissue has fully returned to its original shape. This test was repeated 20 times with different forces, and the results showed an average deviation of 0.08 N, suggesting that the model still offers acceptable precision but exhibits a hysteresis effect.

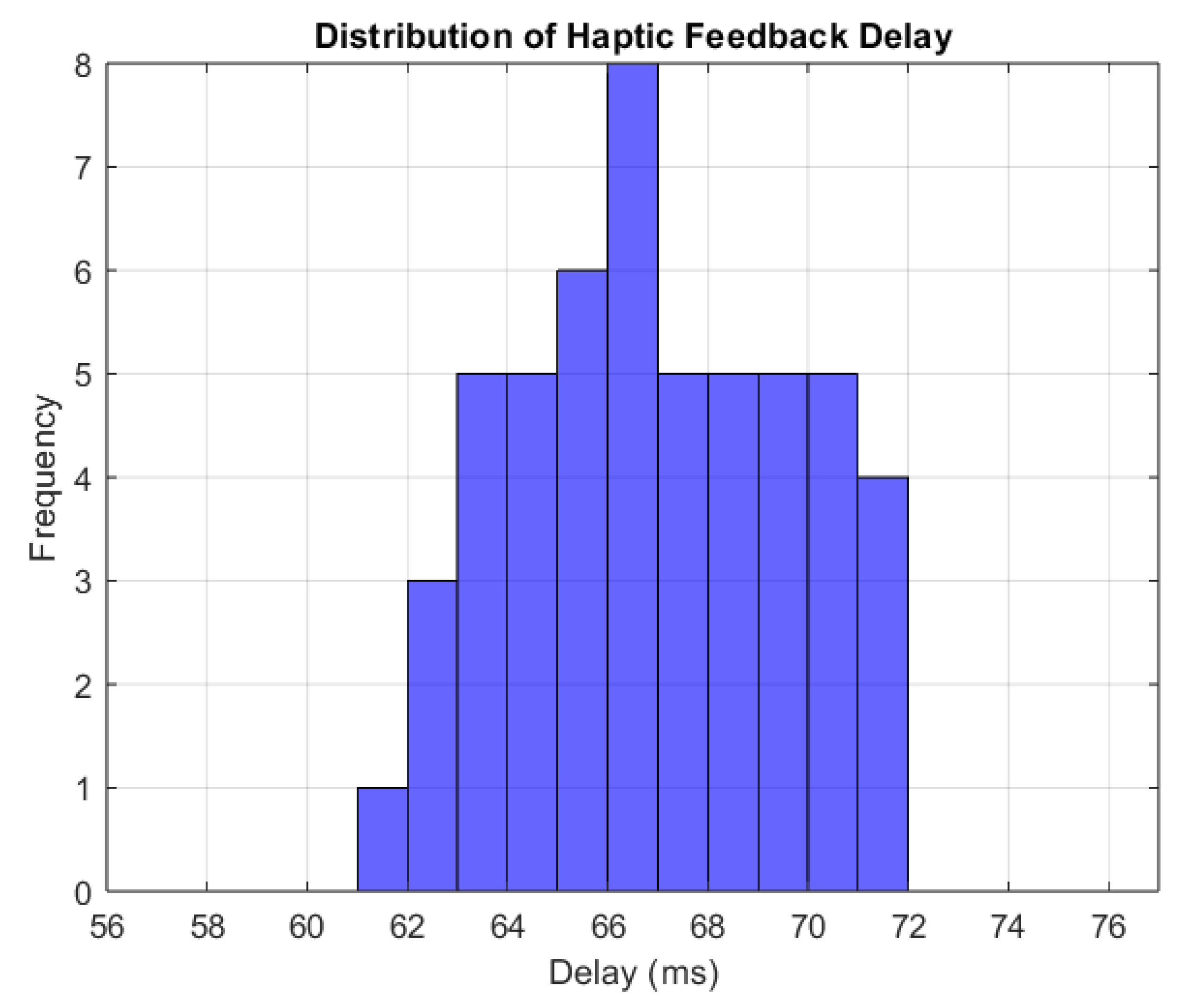

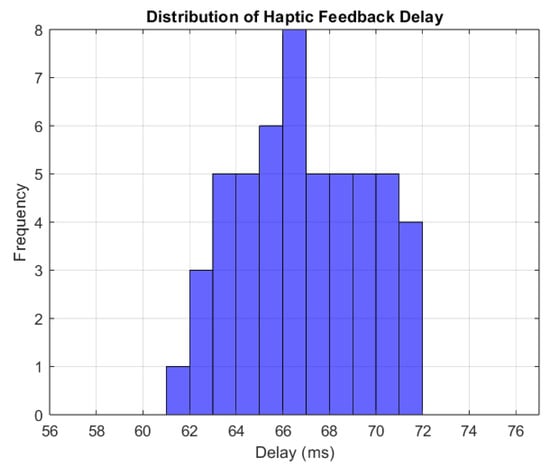

The time interval between force application and value perception by the Omega 7 haptic device was analyzed to assess the simulator’s real-time performance.

To measure the feedback propagation time consistently, multiple force applications on the tissue were simulated. For each applied force, the delay was measured 10 times and the 10 measurements were averaged to obtain a more reliable value. The histogram (Figure 11) shows delays ranging from 60 ms to 72 ms, with the most frequent values around 66 ms, suggesting that the feedback delay is stable, with only minor fluctuations. The average response time of 66 ms is considered adequate for real-time simulations [25].
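The measurement protocol (ten timed repetitions per applied force, then the mean) can be sketched with a monotonic high-resolution clock. `apply_force` and `wait_for_feedback` are hypothetical callables standing in for the simulator and the Haptic Handler; only the timing and averaging logic is illustrated.

```python
import statistics
import time


def mean_feedback_delay_ms(apply_force, wait_for_feedback, force_n, repetitions=10):
    """Average delay (ms) between applying a force and the haptic side receiving it.

    apply_force and wait_for_feedback are hypothetical hooks into the simulator;
    wait_for_feedback is expected to return once the haptic side has the value.
    """
    delays = []
    for _ in range(repetitions):
        start = time.perf_counter()
        apply_force(force_n)
        wait_for_feedback()
        delays.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(delays)
```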

Figure 11.

Distribution of haptic feedback delay.

Because the measured delay is within the threshold for real-time simulation, the haptic feedback is properly synchronized with the force application, and the system provides reliable, real-time interaction with the user.

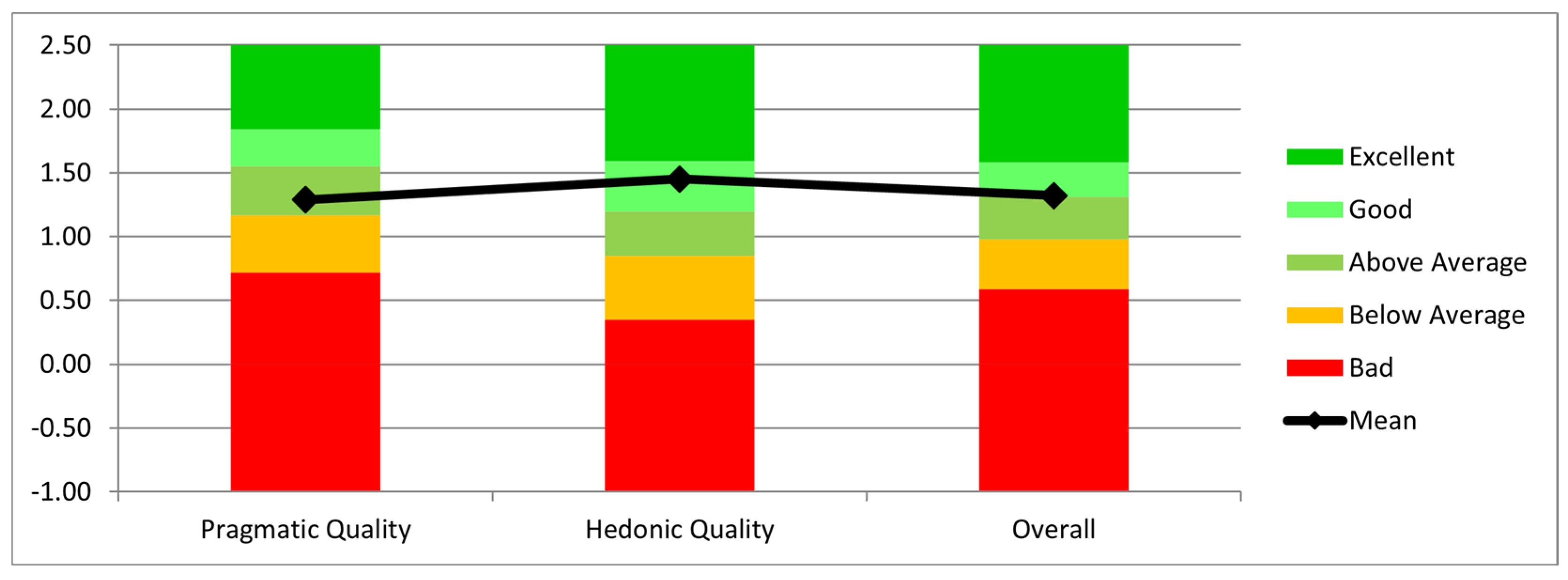

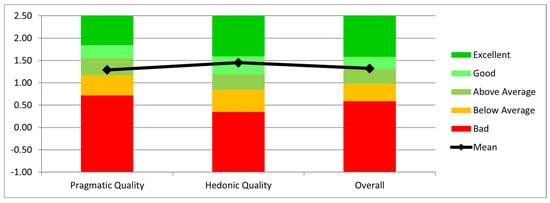

The collaborative aspect of the robot was tested by having users manually move the robot’s end-effector along a predefined trajectory.

The interaction was evaluated using the short version of the User Experience Questionnaire (UEQ-S) [26]. According to the results presented in Figure 12, users considered the system useful and efficient for the task, as evidenced by a mean Pragmatic Quality (practical, real-world applicability) score of 1.29, although they needed several repetitions to become familiar with the HoloLens 2 functionality. The mean Hedonic Quality (enjoyment) score was 1.45, suggesting that users found the interaction enjoyable and engaging. Finally, the overall mean score was 1.32, reflecting a positive user experience with the system.

Figure 12.

Evaluation of pragmatic quality, hedonic quality, and overall system performance.

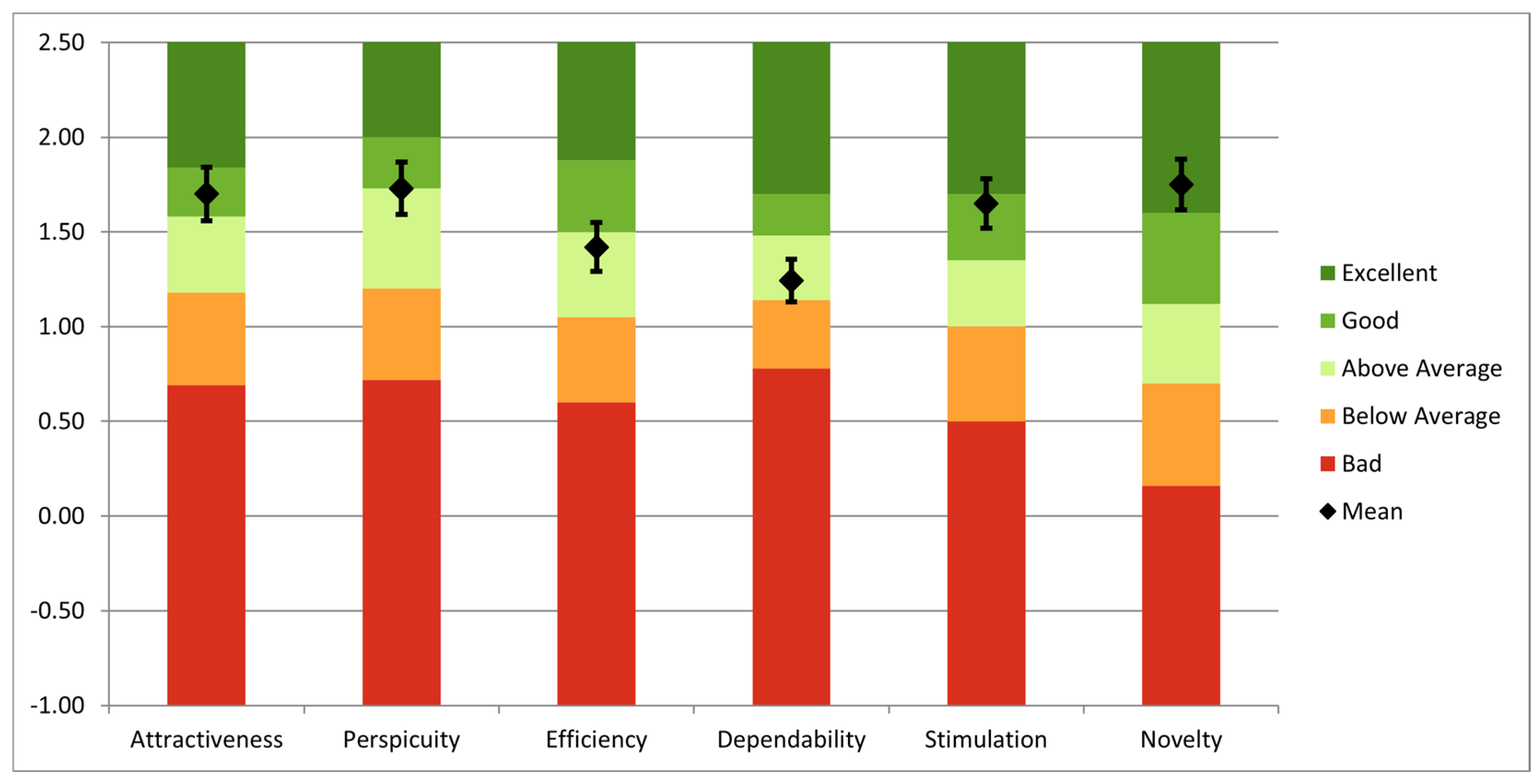

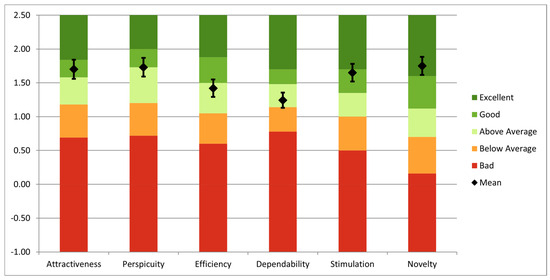

Using the full version of the UEQ, an overall analysis of the simulator was performed. The questionnaire was completed by fifteen users: three senior surgeons, four specialists, three residents, and five engineers with expertise in robotic-assisted surgery. All users had previously interacted with surgical simulators, and 12 of them had prior experience with AR technology. The findings highlight both strengths and opportunities for improvement (Figure 13). Novelty (1.75), perspicuity (1.73), and attractiveness (1.70) had the highest mean values, suggesting that users found the system creative, easy to understand, and visually appealing. Stimulation (1.65) also scored well, indicating that the simulator offers an enjoyable and motivating experience. However, the mean scores for efficiency (1.42) and dependability (1.24) were more modest, suggesting possible issues with task completion speed and system dependability.

Figure 13.

UEQ analysis of the entire system.
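To place the six long-UEQ scale means reported above on the questionnaire's own scale, the sketch below applies the interpretation commonly used with the UEQ: scale means lie between -3 and +3, values above +0.8 are read as a positive evaluation, values below -0.8 as negative, and values in between as neutral. The threshold constant and the `classify` helper are illustrative assumptions, not part of the study's analysis; only the six scores come from the text.

```python
# Interpretation commonly used with the UEQ: scale means lie in [-3, +3];
# above +0.8 is read as positive, below -0.8 as negative, in between as neutral.
NEUTRAL_BAND = 0.8


def classify(score: float) -> str:
    """Label a UEQ scale mean as positive, neutral, or negative."""
    if not -3.0 <= score <= 3.0:
        raise ValueError(f"UEQ scale means must lie in [-3, 3], got {score}")
    if score > NEUTRAL_BAND:
        return "positive"
    if score < -NEUTRAL_BAND:
        return "negative"
    return "neutral"


# Long-UEQ scale means reported for the simulator (n = 15).
reported = {
    "Attractiveness": 1.70,
    "Perspicuity": 1.73,
    "Efficiency": 1.42,
    "Dependability": 1.24,
    "Stimulation": 1.65,
    "Novelty": 1.75,
}

# Print scales from highest to lowest mean, with their interpretation.
for scale, score in sorted(reported.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{scale:<15}{score:+.2f}  {classify(score)}")
```

Under this reading, even the more modest efficiency and dependability scores still fall in the positive range.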

4. Discussion

Advanced training techniques can enhance the specific skills required for minimally invasive procedures. This work presents a simulator that offers a realistic training environment for minimally invasive pancreatic surgery. The system comprises a parallel robot, the Omega 7 haptic device by Force Dimension, the HoloLens 2 headset, and an AI-based force-prediction network, all integrated within the Unity 3D engine.

Parallel robots are known for their higher accuracy and stiffness compared to serial architectures [27,28,29]. At the same time, they are more challenging to implement in graphics engines, because their closed kinematic chains must be solved as a whole rather than animated joint by joint. The Omega 7, a well-known haptic device, was chosen primarily for its availability. The HoloLens 2 is a popular choice for AR applications due to its high-quality visualization, comfort, ergonomics, and spatial mapping capabilities. However, its price, limited battery life, and possible future lack of support may hinder its use in commercially available surgical training systems, and these factors must be considered in future development.
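To illustrate why a closed kinematic chain complicates animation in a graphics engine, the sketch below solves the inverse kinematics of a generic planar five-bar mechanism. This is a textbook example, not the authors' robot: given one end-effector position, the angle of each actuated link (and therefore the pose of every passive link) must be computed so that the loop stays closed, instead of each joint being driven independently as in a serial parent-child hierarchy. The base spacing `d`, link lengths `l1` and `l2`, and the elbow configuration chosen per leg are all hypothetical.

```python
import math


def leg_angle(base_x: float, px: float, py: float,
              l1: float, l2: float, elbow_sign: int) -> float:
    """Angle (rad) of the actuated link of one leg pinned at (base_x, 0).

    The proximal link has length l1; the distal link, which ends at the
    shared end-effector (px, py), has length l2.
    """
    dx, dy = px - base_x, py
    r = math.hypot(dx, dy)
    if r == 0.0 or r > l1 + l2 or r < abs(l1 - l2):
        raise ValueError("end-effector position is outside this leg's workspace")
    phi = math.atan2(dy, dx)
    # Law of cosines; clamp to guard against round-off just outside [-1, 1].
    cos_alpha = (l1 * l1 + r * r - l2 * l2) / (2.0 * l1 * r)
    alpha = math.acos(max(-1.0, min(1.0, cos_alpha)))
    return phi + elbow_sign * alpha


def five_bar_ik(px: float, py: float, d: float, l1: float, l2: float):
    """Solve both legs so the loop closes at (px, py); return angles and elbows."""
    a1, a2 = -d / 2.0, d / 2.0  # base pivots on the x-axis
    # +1 / -1 pick one of the two elbow configurations per leg (assumed here).
    theta1 = leg_angle(a1, px, py, l1, l2, +1)
    theta2 = leg_angle(a2, px, py, l1, l2, -1)
    elbow1 = (a1 + l1 * math.cos(theta1), l1 * math.sin(theta1))
    elbow2 = (a2 + l1 * math.cos(theta2), l1 * math.sin(theta2))
    return theta1, theta2, elbow1, elbow2


# Hypothetical geometry (arbitrary units), not the simulator's robot.
t1, t2, e1, e2 = five_bar_ik(0.0, 1.5, d=2.0, l1=1.0, l2=1.5)
print(f"theta1 = {math.degrees(t1):.1f} deg, theta2 = {math.degrees(t2):.1f} deg")
```

In an engine, the returned elbow positions would then be used to place the passive links, since they cannot be inferred from a single parent transform.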

An AI-based force prediction system is also integrated into the simulator, enabling real-time haptic feedback without physical force sensors. According to the results, the system predicts forces with a maximum deviation of 0.045 N and an average deviation of approximately ±0.02 N. With an average latency of 65 ms, the haptic feedback is suitable for real-time applications.
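The accuracy figures above (mean absolute error, maximum deviation, and the correlation between applied and predicted forces) can be computed from paired measurements as in the sketch below. The function name and the five sample pairs are hypothetical values chosen within the 0 to 1 N range; they are not the study's measurements.

```python
import math
from typing import Sequence


def force_error_metrics(applied: Sequence[float],
                        predicted: Sequence[float]) -> dict[str, float]:
    """Mean absolute error, maximum absolute deviation, and Pearson r (forces in N)."""
    n = len(applied)
    if n < 2 or n != len(predicted):
        raise ValueError("need two equal-length series with at least 2 samples")
    errors = [p - a for a, p in zip(applied, predicted)]
    mae = sum(abs(e) for e in errors) / n
    max_dev = max(abs(e) for e in errors)
    # Pearson correlation between applied and predicted forces.
    mean_a = sum(applied) / n
    mean_p = sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(applied, predicted))
    var_a = sum((a - mean_a) ** 2 for a in applied)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    if var_a == 0.0 or var_p == 0.0:
        raise ValueError("correlation is undefined for a constant series")
    r = cov / math.sqrt(var_a * var_p)
    return {"mae": mae, "max_dev": max_dev, "pearson_r": r}


# Hypothetical samples within the 0-1 N range; not the study's measurements.
applied = [0.2, 0.4, 0.6, 0.8, 1.0]
predicted = [0.22, 0.38, 0.63, 0.79, 0.96]
print(force_error_metrics(applied, predicted))
```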

While the simulator demonstrates promising results, several areas could be improved further. One is the sensitivity of the AI model to lighting conditions. The holograms projected by the HoloLens 2 are more vivid and easier to see in low light, where there is less interference from ambient light, which makes the system more effective in such conditions than in natural light. Additionally, the current force range of 1 N is tailored to a specific organ, leaving room to extend the simulator to a broader range of scenarios. Finally, when successive forces are applied without allowing the tissue to return to its original shape, a hysteresis effect is observed. This highlights the need for further refinement to account for time-dependent tissue behavior, which would enhance the realism and functionality of the simulator.
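As a minimal illustration of the time-dependent behavior described above, the sketch below uses a Kelvin-Voigt element (a spring of stiffness k in parallel with a damper of coefficient c). This is a textbook viscoelastic model swapped in for illustration, not the simulator's tissue model, and the parameter values are hypothetical rather than fitted to pancreatic tissue. When the rest interval between presses is short compared with the time constant τ = c/k, residual deformation accumulates and each press reaches a larger displacement, which is the kind of history dependence a refined model would need to capture.

```python
import math


def kelvin_voigt(x0: float, force: float, k: float, c: float, t: float) -> float:
    """Displacement after holding a constant force for t seconds.

    Solves c*dx/dt + k*x = force from x(0) = x0: the displacement relaxes
    exponentially toward force/k with time constant tau = c/k.
    """
    tau = c / k
    x_eq = force / k
    return x_eq + (x0 - x_eq) * math.exp(-t / tau)


def press_cycle(x_start: float, force: float, k: float, c: float,
                press_s: float, rest_s: float) -> tuple[float, float]:
    """One press (constant force) followed by a release (zero force)."""
    x_peak = kelvin_voigt(x_start, force, k, c, press_s)
    x_rest = kelvin_voigt(x_peak, 0.0, k, c, rest_s)
    return x_peak, x_rest


# Hypothetical parameters, not fitted to pancreatic tissue:
# k in N/mm, c in N*s/mm, so tau = c / k = 2 s; force in N.
K, C, FORCE = 0.5, 1.0, 0.8

x = 0.0
peaks = []
for _ in range(3):
    # The 0.5 s rest is much shorter than tau, so the tissue never fully recovers.
    peak, x = press_cycle(x, FORCE, K, C, press_s=1.0, rest_s=0.5)
    peaks.append(peak)
print([round(p, 3) for p in peaks])
```

With a rest of several τ (for example 20 s with these parameters), the residual displacement decays almost to zero and successive presses become effectively independent.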

A UEQ analysis revealed strong performance in novelty, perspicuity, and attractiveness, indicating that the system is innovative, easy to understand, and visually appealing. Stimulation also scored well, suggesting that the experience was enjoyable and motivating. However, lower scores for dependability and efficiency highlight areas for improvement, especially system reliability.

Compared with other simulators in the scientific literature, such as the da Vinci Skills Simulator (dVSS) and the Robotic Surgical Simulator (RoSS), this system addresses specific gaps. Conventional simulators rely on physical sensors and predefined tasks to generate haptic feedback, whereas this system combines visual data with AI-based force estimation, eliminating the need for additional sensors and making it more cost-effective and versatile.

The simulator’s modular architecture suggests that it could be integrated into more extensive surgical simulation frameworks. The AI technology and the modular robot might improve existing systems by increasing training realism for minimally invasive procedures. From an economic perspective, the simulator is a more cost-efficient option for training facilities, since it requires no physical force sensors and uses a relatively inexpensive AR device. AI-driven force prediction reduces reliance on costly hardware, potentially making surgical training tools more accessible.
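One way to picture the modularity described above is a feedback loop that depends only on two narrow interfaces, so that the force estimator or the haptic device can be replaced without touching the loop. The sketch below is a hypothetical Python illustration, not the simulator's Unity implementation: `ForceEstimator`, `HapticDevice`, `FeedbackLoop`, and the stand-in classes are invented names, the force is simplified to a single scalar magnitude, and the clamp uses the 1 N range mentioned in the discussion.

```python
from dataclasses import dataclass, field
from typing import Protocol, Sequence


class ForceEstimator(Protocol):
    """Any model that maps one camera frame to a force estimate in newtons."""

    def predict(self, frame: Sequence[float]) -> float: ...


class HapticDevice(Protocol):
    """Any device that can render a scalar force command in newtons."""

    def send_force(self, newtons: float) -> None: ...


@dataclass
class FeedbackLoop:
    estimator: ForceEstimator
    device: HapticDevice
    max_force_n: float = 1.0  # the simulator's current 1 N range

    def step(self, frame: Sequence[float]) -> float:
        """Estimate the force for one frame, clamp it, and forward it to the device."""
        estimate = self.estimator.predict(frame)
        command = min(max(estimate, 0.0), self.max_force_n)
        self.device.send_force(command)
        return command


# Stand-ins used only to exercise the loop; a real network or device driver
# would implement the same two methods.
class FixedEstimator:
    def __init__(self, value: float) -> None:
        self.value = value

    def predict(self, frame: Sequence[float]) -> float:
        return self.value


@dataclass
class RecordingDevice:
    sent: list[float] = field(default_factory=list)

    def send_force(self, newtons: float) -> None:
        self.sent.append(newtons)


device = RecordingDevice()
for value in (0.4, 1.3, -0.1):
    FeedbackLoop(FixedEstimator(value), device).step(frame=[])
print(device.sent)
```

Because the loop only calls `predict` and `send_force`, swapping in a different model or device is a matter of passing another object that provides those methods.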

Future work will focus on enlarging the training dataset, including different instruments, to improve the generalization of the simulator. In addition, advanced biomechanical models could make tissue behavior more realistic. The simulator's utility could be further increased through additional clinical validation and deeper integration with other surgical simulation systems.

5. Conclusions

This study introduces an augmented reality-based surgical simulator designed for robotic-assisted minimally invasive pancreatic surgery, integrating a novel parallel robot, AI-driven force prediction, and real-time haptic feedback. The AI model accurately estimates applied forces with a mean absolute error of 0.0244 N, while the force-feedback system, operating with a 65 ms latency, ensures real-time interaction. Compared to existing simulators, this system eliminates the need for physical force sensors, enhancing adaptability and improving spatial awareness in surgical training and procedural planning.

Future improvements will focus on expanding the AI training dataset for better generalization, refining biomechanical tissue modeling, and conducting further clinical validation. Overall, the system advances robotic-assisted surgery training by providing a highly realistic and interactive learning environment, addressing key limitations in current surgical simulation platforms.

Author Contributions

Conceptualization, D.P., N.A.H., G.R., B.G., A.C. and D.C.; data curation, N.A.H., A.C., C.R. and B.G.; formal analysis, D.P., N.A.H., D.C. and C.V.; funding acquisition, D.P.; investigation, D.P. and C.V.; methodology, D.P., G.R. and B.G.; project administration, D.P.; resources, C.V.; software, G.R.; supervision, D.P., C.V. and B.G.; validation, N.A.H., B.G., A.C. and C.R.; writing—original draft, G.R.; writing—review and editing, B.G., A.C. and C.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the project New smart and adaptive robotics solutions for personalized minimally invasive surgery in cancer treatment—ATHENA, which was funded by the European Union—NextGenerationEU and Romanian Government, under the National Recovery and Resilience Plan for Romania, contract no. 760072/23.05.2023, code CF 116/15.11.2022, through the Romanian Ministry of Research, Innovation and Digitalization, within Component 9, investment I8.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Bienstock, J.; Heuer, A. A review on the evolution of simulation-based training to help build a safer future. Medicine 2022, 101, e29503.

2. Da Vinci Simulator. da Vinci® Skills Simulator - Mimic Simulation. Available online: https://mimicsimulation.com/da-vinci-skills-simulator (accessed on 29 December 2024).

3. Gleason, A.; Servais, E.; Quadri, S.; Manganiello, M.; Cheah, Y.L.; Simon, C.J.; Preston, E.; Graham-Stephenson, A.; Wright, V. Developing basic robotic skills using virtual reality simulation and automated assessment tools: A multidisciplinary robotic virtual reality-based curriculum using the Da Vinci Skills Simulator and tracking progress with the Intuitive Learning platform. J. Robot. Surg. 2022, 16, 1313–1319.

4. Chen, R.; Rodrigues Armijo, P.; Krause, C.; SAGES Robotic Task Force; Siu, K.C.; Oleynikov, D. A comprehensive review of robotic surgery curriculum and training for residents, fellows, and postgraduate surgical education. Surg. Endosc. 2020, 34, 361–367.

5. Ou, Y.; Zargarzadeh, S.; Sedighi, P.; Tavakoli, M. A Realistic Surgical Simulator for Non-Rigid and Contact-Rich Manipulation in Surgeries with the da Vinci Research Kit. In Proceedings of the 2024 21st International Conference on Ubiquitous Robots (UR), New York, NY, USA, 24–27 June 2024.

6. Lefor, A.K.; Heredia Pérez, S.A.; Shimizu, A.; Lin, C.; Witowski, J.; Mitsuishi, M. Development and Validation of a Virtual Reality Simulator for Robot-Assisted Minimally Invasive Liver Surgery Training. J. Clin. Med. 2022, 11, 4145.

7. Chorney, H.V.; Forbes, J.R.; Driscoll, M. System identification and simulation of soft tissue force feedback in a spine surgical simulator. Comput. Biol. Med. 2023, 164, 107267.

8. Bici, M.; Guachi, R.; Bini, F.; Mani, S.F.; Campana, F.; Marinozzi, F. Endo-Surgical Force Feedback System Design for Virtual Reality Applications in Medical Planning. Int. J. Interact. Des. Manuf. 2024, 18, 5479–5487.

9. Misra, S.; Ramesh, K.T.; Okamura, A.M. Modeling of Tool-Tissue Interactions for Computer-Based Surgical Simulation: A Literature Review. Presence 2008, 17, 463.

10. Haptic Device. Available online: https://www.forcedimension.com/products/omega (accessed on 7 January 2025).

11. Tucan, P.; Vaida, C.; Horvath, D.; Caprariu, A.; Burz, A.; Gherman, B.; Iakab, S.; Pisla, D. Design and Experimental Setup of a Robotic Medical Instrument for Brachytherapy in Non-Resectable Liver Tumors. Cancers 2022, 14, 5841.

12. Unity. Available online: https://unity.com/ (accessed on 7 January 2025).

13. HoloLens 2 Device. Available online: https://www.microsoft.com/en-us/d/hololens2/91pnzzznzwcp?msockid=1d8aaf670ae2690e3d42bcff0b3b6880&activetab=pivot:overviewtab (accessed on 1 February 2025).

14. Vaida, C.; Birlescu, I.; Gherman, B.; Condurache, D.; Chablat, D.; Pisla, D. An analysis of higher-order kinematics formalisms for an innovative surgical parallel robot. Mech. Mach. Theory 2025, 209, 105986.

15. Chua, Z.; Jarc, A.M.; Okamura, A.M. Toward force estimation in robot-assisted surgery using deep learning with vision and robot state. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 12335–12341.

16. Sabique, P.V.; Pasupathy, G.; Kalaimagal, S.; Shanmugasundar, G.; Muneer, V.K. A Stereovision-based Approach for Retrieving Variable Force Feedback in Robotic-Assisted Surgery Using Modified Inception ResNet V2 Networks. J. Intell. Robot. Syst. 2024, 110, 81.

17. Jung, W.-J.; Kwak, K.-S.; Lim, S.-C. Vision-Based Suture Tensile Force Estimation in Robotic Surgery. Sensors 2021, 21, 110.

18. Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. arXiv 2021, arXiv:2104.00298.

19. Fu, J.; Rota, A.; Li, S.; Zhao, J.; Liu, Q.; Iovene, E.; Ferrigno, G.; De Momi, E. Recent Advancements in Augmented Reality for Robotic Applications: A Survey. Actuators 2023, 12, 323.

20. Suresh, D.; Aydin, A.; James, S.; Ahmed, K.; Dasgupta, P. The Role of Augmented Reality in Surgical Training: A Systematic Review. Surg. Innov. 2022, 30, 366–382.

21. Slicer 3D Program. Available online: https://www.slicer.org/ (accessed on 8 January 2025).

22. Akinci, T.; Berger, L.K.; Indrakanti, A.K.; Vishwanathan, N.; Weiß, J.; Jung, M.; Berkarda, Z.; Rau, A.; Reisert, M.; Küstner, T.; et al. TotalSegmentator MRI: Sequence-Independent Segmentation of 59 Anatomical Structures in MR images. arXiv 2024, arXiv:2405.19492. Available online: https://arxiv.org/abs/2405.19492 (accessed on 5 February 2025).

23. Carrara, S.; Ferrari, A.M.; Cellesi, F.; Costantino, M.L.; Zerbi, A. Analysis of the Mechanical Characteristics of Human Pancreas through Indentation: Preliminary In Vitro Results on Surgical Samples. Biomedicines 2023, 12, 91.

24. Rubiano, A.; Delitto, D.; Han, S.; Gerber, M.; Galitz, C.; Trevino, J.; Thomas, R.M.; Hughes, S.J.; Simmons, C.S. Viscoelastic properties of human pancreatic tumors and in vitro constructs to mimic mechanical properties. Acta Biomater. 2018, 67, 331–340.

25. Jin, M.L.; Brown, M.M.; Patwa, D.; Nirmalan, A.; Edwards, P.A. Telemedicine, telementoring, and telesurgery for surgical practices. Curr. Probl. Surg. 2021, 58, 100986.

26. User Experience Questionnaire. Available online: https://www.ueq-online.org/ (accessed on 12 January 2025).

27. Pisla, D.; Birlescu, I.; Vaida, C.; Tucan, P.; Pisla, A.; Gherman, B.; Crisan, N.; Plitea, N. Algebraic modeling of kinematics and singularities for a prostate biopsy parallel robot. Proc. Rom. Acad. Ser. A 2019, 19, 489–497.

28. Abdelaal, A.; Liu, E.J.; Hong, N.; Hager, G.D.; Salcudean, S.E. Parallelism in Autonomous Robotic Surgery. IEEE Robot. Autom. Lett. 2021, 6, 1824–1831.

29. Pisla, D.; Plitea, N.; Gherman, B.; Pisla, A.; Vaida, C. Kinematical Analysis and Design of a New Surgical Parallel Robot. In Computational Kinematics; Kecskeméthy, A., Müller, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2009.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).