Abstract

In this study, we have developed a humanoid nursing care robot with the goal of helping older people achieve various tasks such as assisted mobility, feeding, drinking, defecation, and bathing. The robot has the following features: (1) it uses an omnidirectional mobility mechanism that does not require space equivalent to a turning radius and carries no risk of the robot itself falling over, so it can be used safely even in the small spaces of a home; (2) it has two arms with the same structure as a human arm, which makes it possible to perform a wide variety of nursing care movements; (3) in addition to avoiding collisions with furniture and walls and emulating human mobility intelligence, it has the high level of intelligence required to understand the needs of the person receiving care and to determine appropriate actions. This article presents the development concept of the humanoid nursing care robot and the design and basic configuration of the prototype. Care provided by robots is expected not only to relieve labor shortages but also to improve the quality of care by taking into account the unique characteristics of each person requiring care, and quantitative management of nutritional intake is recognized as one way to improve that quality. This article therefore proposes a method that uses force sensors to enable a robot to pour water (beverages) quantitatively, and demonstrates its effectiveness through experiments on the humanoid nursing care robot.

1. Introduction

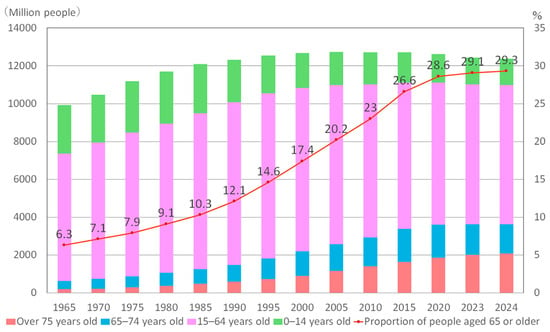

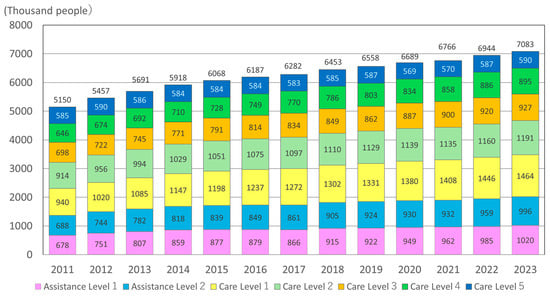

Japan is experiencing a rapid decline in birth rates and an aging population. The United Nations defines an older person as someone aged 65 or above. As shown in Figure 1, compared with 50 years ago (1975), the older adult population has quadrupled to 36.77 million, or approximately 29.5% of the total population (124.7 million) [1]. As shown in Figure 2, the number of older people certified as requiring support or nursing care is 6,766,000, approximately 18.4% of the older adult population [2]. However, the number of people who actually need support or nursing care far exceeds the 6,766,000 who are certified, and this number is likely to continue to increase. Caring for these individuals requires a large amount of labor.

Figure 1.

Changes in population and percentage of people aged 65 and over.

Figure 2.

Trends in the number of people certified in need of care (aged 65 and over).

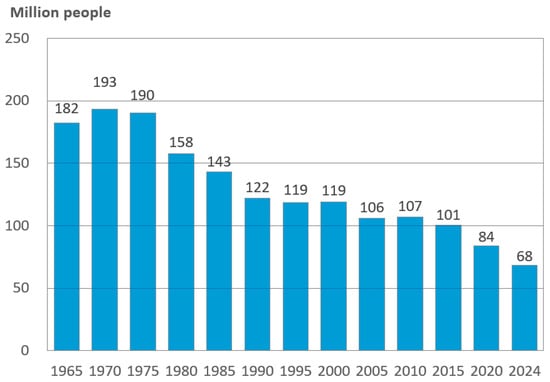

Regarding Japan’s birth rates, as shown in Figure 3, the number of births has been decreasing every year; in 2024, it was 684,000, roughly 36% of the figure 50 years earlier (1.9 million in 1975) [3]. Because some of these children will die before reaching working age, the number who eventually join the working-age population will be smaller still. If nursing care continues to rely solely on human resources, Japan will face a severe shortage of both working-age people and nursing care personnel.

Figure 3.

Changes in the number of births in Japan.

Japan is not the only country facing such social problems; many countries are affected by age-related demographic imbalances. The United Nations defines a society in which the proportion of people aged 65 or older exceeds 7% of the population as an “aging society”, a society in which the proportion exceeds 14% as an “aged society”, and a society in which the proportion exceeds 21% as a “super-aged society” [1,4]. According to the World Bank’s demographic data for 2023, 21 countries (Japan, Italy, Germany, France, Spain, etc.) are super-aged societies, 67 are aged societies, and 122 are aging societies. The five countries with the largest older populations are China, India, the United States, Japan, and the Russian Federation. As of 2020, the world’s older population was 739 million, representing 9.4% of the world’s population. In other words, the world as a whole already exceeds the 7% threshold for an aging society, and this proportion is predicted to reach 18.7% in 35 years [5].
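The three thresholds above form a simple classification rule. The sketch below applies it to the shares quoted in this section; the function name is illustrative, and each threshold is treated as strictly "exceeds", following the wording of the definitions.

```python
def classify_society(share_65_plus_percent: float) -> str:
    """Label a population by the share of people aged 65+ (7% / 14% / 21% thresholds)."""
    if share_65_plus_percent > 21:
        return "super-aged society"
    if share_65_plus_percent > 14:
        return "aged society"
    if share_65_plus_percent > 7:
        return "aging society"
    return "below the aging-society threshold"


# Shares quoted in the text: Japan ~29.5%, world (2020) 9.4%, world projection 18.7%.
for label, share in [("Japan", 29.5), ("World, 2020", 9.4), ("World, in 35 years", 18.7)]:
    print(f"{label}: {share}% -> {classify_society(share)}")
```

With these inputs, Japan falls in the super-aged band, the 2020 world average in the aging band, and the projected world share in the aged band.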

Although humans have evolved over approximately 7 million years, average life expectancy has doubled in just the past 100 years, and the global population is aging rapidly. As the number of older people increases, so does the number of those needing nursing care. In recent years, the world has faced the problem of so-called nursing care refugees, who are unable to obtain adequate nursing care. Therefore, various research and development efforts are underway to create and popularize welfare robots for older adult care (nursing care robots) as a breakthrough that can solve this difficult problem. Welfare refers to the state of happiness and well-being experienced by a person, and robots that can directly contribute to people’s happiness and well-being are collectively called welfare robots [6]. Nursing care robots are welfare robots that assist or care for older people who have difficulty performing the activities of daily living. However, unlike industrial robots, which handle objects, nursing care robots deal with people. As a result, bottleneck issues remain unresolved, and a nursing care robot that can rival a human caregiver has yet to be created. Most of the nursing care robots currently being researched and developed to assist or care for older people have a single function. Because space in individual homes is limited, it is difficult for younger family members to provide multiple robots to assist or care for the disabled older people in their lives. Moreover, the use of various nursing care robots is complicated and not standardized. Therefore, to truly play their role in society, nursing care robots need to have multiple functions and accomplish multiple assistive or nursing tasks. In this study, we develop a humanoid nursing care robot with the goal of helping older people perform various tasks such as assisted mobility, feeding, drinking, defecation, and bathing.

In this article, we first describe the current state of research on welfare robots that assist in the care of older people. Next, we describe the development concept, prototype design, and prototype fabrication of a humanoid nursing care robot. Furthermore, with the aim of improving the quality of caregiving, we propose a method for quantitatively pouring a drink using force-sensor measurements and demonstrate its effectiveness through verification experiments.

2. Welfare Robots for Older Person Care: About the Nursing Care Robot

Older persons who are unable to perform certain activities of daily living independently due to declining physical and cognitive functioning require assistance or care. Activities of daily living include mobility, eating, defecation, dressing, washing, bathing, and grooming. Three functions are necessary to maintain health and life: eating and drinking to maintain physical strength, eliminating waste and toxins from the body, and maintaining body hygiene through bathing. If these three functions are supported, older persons requiring care can lead comfortable lives. Welfare robots (nursing care robots) that assist or care for older people with difficulties in daily living can be broadly categorized into two types: those that directly assist or care for older people, and those that provide care indirectly by assisting caregivers. The nursing care robots discussed in this article are welfare robots that directly assist or care for older people with difficulties in activities of daily living. Research has been carried out mainly in the following areas:

- (a) Walking assistance for people with weakened muscle strength and balance

The physiological decline that accompanies aging manifests mainly as decreased limb flexibility, osteoporosis, and muscle atrophy, which substantially raise the risk of falls and fractures. When disease, muscle weakness, fractures, or falls weaken the standing function of older adults, walking becomes difficult and their range of activities shrinks; the resulting physical distress leads to mental depression, which further accelerates the decline of bodily functions, forming a vicious circle. To utilize the residual walking function of older people with weakened standing function and to prevent the complete loss of lower-limb walking function, walking assistance that utilizes the residual function of the lower limbs [7,8] and walking assistance that recognizes the intention of the person being helped [9,10] have been studied.

- (b) Mobility and self-care assistance for people with total walking disability

If people with total walking disability do not actively use their remaining upper-body motor functions, they may be confined to bed for long periods, which not only causes personal suffering but also places a burden on their families and society. To make use of upper-body functions and to assist with moving, transferring, and standing up, approaches include carrying or supporting the entire body during moving or transferring [11,12,13], mobility assistance [14,15], and support for autonomous upper-body work by people who cannot walk [16,17].

- (c) Dietary assistance and nutritional management

In the area of meal assistance and nutritional management, studies have been conducted on meal assistance using forks and chopsticks [18] and on meal assistance utilizing the residual function of the upper extremities [19]. Meal assistance robots can minimize the time and effort caregivers spend on dietary assistance and reduce the workload of care facilities. They can also enable older people who need meal assistance to eat independently, thus improving the quality of their dining experience.

- (d) Excretory assistance

To sustain life, people need to absorb nutrients from their diet and eliminate waste products from the body. Waste elimination is a prerequisite for nutrient absorption, making excretory functions essential for humans and animals. However, the major differences between humans and animals are that (A) the act of defecation is highly private and not carried out in public, and (B) there is no aversion to waste while it is in the human body, whereas once it is out of the body, it is regarded as alien and creates a strong feeling of disgust. These two points have become ingrained in the culture over time. The first point (A) is both a human rights protection issue and a human dignity issue, and the second point (B) is both a psychological issue and a difficult cleanliness issue. Therefore, assisted defecation behavior and the handling of excreta are considered to be the greatest difficulties in nursing, and they drain nursing staff’s energy. In recent years, due to the rapid increase in the number of older people with disabilities, research on excretory aids and excretory disposal has been emphasized, and a variety of excretory aids have been developed, but each has its own advantages and disadvantages, and the problem has essentially not been solved. For elimination support and health observations, a toilet bowl attached to a mobile robot [20], a fixed toilet seat for motorized rotation and rise support [21], and passive rise support [22] have been developed.

- (e) Assistance in changing clothes and personal hygiene

Assistance with changing clothes serves two purposes: hygiene and temperature control. Dressing assistance based on image processing and machine learning [23,24] and on dual-arm coordination [25] has been proposed. Hair-washing support robots [26], body-washing support robots [27], and bathing support robots [28] have been developed for maintaining physical hygiene. Although hairdressing itself is not directly related to life support, it improves older people’s motivation to live.

In summary, various nursing care robots have been developed to assist or provide care for older people with declining physical and cognitive functions, especially bedridden older people, in their day-to-day lives. However, most of these nursing care robots only have a single function. Due to the limited space in individual homes, it is difficult to provide multiple robots to assist or care for disabled older people, and the use of various nursing care robots is complicated and not standardized. Therefore, to truly play a role in society, assistive care robots need to have multiple functions and accomplish multiple assistive or nursing tasks. In this study, we propose a concept of designing a humanoid nursing care robot with the goal of achieving a number of nursing tasks, such as assisted mobility, feeding, drinking, defecation, and bathing.

3. Humanoid Nursing Care Robot

3.1. Development Concept

This study is based on the following development concepts: (1) the robot will be used at home; (2) it can perform as many nursing care actions as possible; (3) it has high intelligence. A home is generally small and contains many different pieces of furniture. In principle, a bipedal structure could be used to move around in a small environment; however, the stable support area of a bipedal structure is small, so there is a risk of the robot falling. Therefore, to enable use in a small environment as described in (1), we adopted an omnidirectional movement function that does not require space equal to a turning radius and poses no risk of the robot itself falling over. Human arms can perform a variety of tasks and are highly versatile; therefore, if a robot is given dual arms with the same structure as human arms, it can perform the wide variety of caregiving actions mentioned in (2). For (3), in addition to avoiding bumping into furniture and walls and achieving human mobility intelligence, a high level of intelligence is required to understand the intentions of the person in need of care and to determine appropriate actions. Therefore, the robot should be equipped with environmental measurement sensors and a voice function to communicate with the person requiring care. Moreover, for the nursing care robot to appear approachable and friendly to those who need care and those around them, it requires head movement and waist twisting functions, as well as human-friendly colors and a sleek structure. To realize these functions, the developed humanoid nursing care robot has 24 degrees of freedom (DOFs) (4 DOFs for omnidirectional movement + 7 DOFs per arm × 2 arms + 3 DOFs for the waist + 3 DOFs for the neck), internal sensors (displacement, speed, acceleration, and current), and external sensors (camera, range sensor, ultrasonic sensor, touch sensor, bumper sensors, force sensors, speakers, and microphones).
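The 24-DOF total can be checked by tallying the per-subsystem counts given in the breakdown above. The dictionary keys below are illustrative labels; the counts are the ones listed in this section.

```python
# Degree-of-freedom budget of the prototype, with counts as listed in Section 3.1.
dof_breakdown = {
    "omnidirectional movement": 4,
    "left arm": 7,
    "right arm": 7,
    "waist": 3,
    "neck": 3,
}

total_dof = sum(dof_breakdown.values())
for subsystem, dof in dof_breakdown.items():
    print(f"{subsystem:>26}: {dof}")
print(f"{'total':>26}: {total_dof}")
```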

3.2. Prototype Design and Prototyping

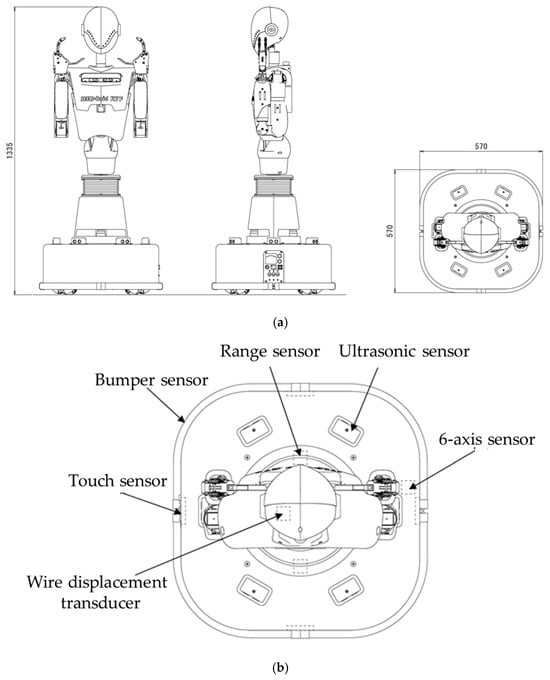

Figure 4 shows a prototype of the developed humanoid nursing care robot.

Figure 4.

Humanoid care robot.

This humanoid nursing care robot is equipped with omniwheels and various external sensors, enabling it to move freely while avoiding obstacles, even in a small home environment. In addition, its waist and neck are movable, and it can operate cooperatively and carry objects with its 7-DOF dual arms, allowing it to move in a human-friendly manner that appeals to people who need assistance or care.

3.2.1. Overall Design

Figure 5a shows the dimensions of the robot prototype (W 570 × D 570 × H 1335 mm), and Figure 5b shows the sensor arrangement. The internal frame is mainly made of aluminum, and the exterior cover is made of ABS plastic. The robot weighs approximately 90 kg and is powered by a fully sealed lead-acid battery. Two RGB-D cameras are mounted on the head and chest. Two speakers with built-in microphones are mounted around the head and ears. Three-axis force sensors are attached to both of the robot’s wrists. The wheels are original safety-type omniwheels with built-in brushless motors: the wheel diameter is 200 mm, the wheel motor capacity is 90 W/24 VDC, and the motor gear ratio is 21:1. The wheel motors are mounted inside the hubs of the four wheels, and each is equipped with a Hall sensor (approximately 1000 pulses/wheel revolution) and an encoder (43,008 pulses/wheel revolution). Two range sensors, with a measuring distance of 4 m and a measuring angle of 240°, are mounted on the front and rear wheels. As shown in Figure 5, six-axis sensors are installed to detect three-axis acceleration and three-axis angular velocity. Six ultrasonic sensors are installed at 60° intervals, with a measuring distance of 2 cm to 3 cm. Four bumper sensors are installed at 90° intervals, each with a detection force of 19 N, and four contact sensors are installed at 90° intervals, each with a detection force of 3 N. One wire displacement transducer is installed to track the electric cylinder.
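The wheel and encoder figures above translate into odometry resolution with a little arithmetic. The sketch below assumes no wheel slip and measures travel along each wheel's own drive direction; the constant names are illustrative. It also shows that 43,008 = 21 × 2048, which is consistent with, though not confirmed by the specifications, a 2048-count encoder on the motor shaft ahead of the 21:1 gear.

```python
import math

WHEEL_DIAMETER_MM = 200.0            # wheel diameter
GEAR_RATIO = 21                      # motor revolutions per wheel revolution
HALL_PULSES_PER_WHEEL_REV = 1000     # "approximately" 1000 in the specification
ENCODER_PULSES_PER_WHEEL_REV = 43_008


def travel_per_pulse_mm(pulses_per_wheel_rev: float) -> float:
    """Rim travel per pulse along the wheel's drive direction, assuming no slip."""
    return math.pi * WHEEL_DIAMETER_MM / pulses_per_wheel_rev


circumference_mm = math.pi * WHEEL_DIAMETER_MM                   # about 628.3 mm
encoder_mm = travel_per_pulse_mm(ENCODER_PULSES_PER_WHEEL_REV)   # about 0.0146 mm
hall_mm = travel_per_pulse_mm(HALL_PULSES_PER_WHEEL_REV)         # about 0.628 mm
encoder_pulses_per_motor_rev = ENCODER_PULSES_PER_WHEEL_REV / GEAR_RATIO

print(f"circumference: {circumference_mm:.1f} mm")
print(f"encoder: {encoder_mm:.4f} mm/pulse, Hall sensor: {hall_mm:.3f} mm/pulse")
print(f"encoder pulses per motor revolution: {encoder_pulses_per_motor_rev:.0f}")
```

The encoder therefore resolves rim motion roughly 43 times more finely than the Hall sensor, which suits fine positioning near furniture.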

Figure 5.

Prototype design: (a) robot dimensions; (b) sensor arrangement.

3.2.2. Lower Body Structure and Sensors

The lower body consists of an omnidirectional platform with four omnidirectional wheels. In the plane, it can move in any direction and orientation and turn with a zero turning radius, so this nursing care robot can move smoothly even in a small home. Six ultrasonic sensors measure nearby obstacles and passersby. The touch sensors detect contact with the lower body, and the bumper sensors detect when the robot hits walls, furniture, or other objects in the surrounding environment. Range finders installed at the front and rear of the robot measure the distance to surrounding obstacles in real time. Because they are laser range finders, they can be used not only for obstacle avoidance but also for navigation with simultaneous localization and mapping (SLAM).
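Equation (1) itself is not reproduced in this text, so the following is not the authors' formulation. It is a generic textbook model of a four-omniwheel platform, included to illustrate how a zero-turning-radius base maps a commanded planar velocity (vx, vy, ω) to individual wheel speeds. The 90° wheel layout and the 0.22 m centre-to-wheel distance are hypothetical; only the 100 mm wheel radius follows from the 200 mm wheel diameter given in Section 3.2.1.

```python
import math

WHEEL_RADIUS_M = 0.10        # half of the 200 mm wheel diameter
CENTER_TO_WHEEL_M = 0.22     # hypothetical distance from platform centre to each wheel
WHEEL_ANGLES_RAD = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]  # assumed 90-degree layout


def wheel_rates(vx: float, vy: float, omega: float, heading: float = 0.0) -> list[float]:
    """Inverse kinematics: world-frame platform velocity -> wheel angular rates (rad/s).

    Each wheel is assumed to drive tangentially to the circle on which it sits,
    with its free rollers absorbing motion along its axle.
    """
    # Rotate the world-frame velocity into the platform frame.
    bx = math.cos(heading) * vx + math.sin(heading) * vy
    by = -math.sin(heading) * vx + math.cos(heading) * vy
    rates = []
    for alpha in WHEEL_ANGLES_RAD:
        rim_speed = -math.sin(alpha) * bx + math.cos(alpha) * by + CENTER_TO_WHEEL_M * omega
        rates.append(rim_speed / WHEEL_RADIUS_M)
    return rates


def platform_velocity(rates: list[float]) -> tuple[float, float, float]:
    """Least-squares forward kinematics for the same symmetric layout (platform frame)."""
    rims = [WHEEL_RADIUS_M * r for r in rates]
    bx = -sum(math.sin(a) * u for a, u in zip(WHEEL_ANGLES_RAD, rims)) / 2
    by = sum(math.cos(a) * u for a, u in zip(WHEEL_ANGLES_RAD, rims)) / 2
    omega = sum(rims) / (4 * CENTER_TO_WHEEL_M)
    return bx, by, omega


# Spinning in place: every wheel turns at the same rate.
print(wheel_rates(0.0, 0.0, 1.0))
```

Commanding pure rotation (vx = vy = 0) gives all four wheels the same speed, which is the zero-turning-radius behaviour described above; `platform_velocity` inverts the mapping in the least-squares sense for this symmetric layout.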

3.2.3. Upper Body Structure and Sensors

The upper body comprises the head, waist, chest, and twin arms. The head can rotate via a two-DOF neck and is equipped with an RGB-D camera, a microphone, and stereo speakers, through which the robot and the person being assisted can communicate with each other. The waist has three DOFs and can rotate like a human waist. Each of the two manipulators consists of a six-DOF arm and a one-DOF end-effector. The chest is also equipped with an RGB-D camera to measure objects in the direction the torso faces. Having cameras on both the head and the chest provides more information about the environment and allows more appropriate actions when the head and chest face different directions.

3.2.4. Control

This nursing care robot controls its upper and lower body independently. While the robot is moving, the lower body performs the object-carrying task while the upper body maintains a constant posture. Conversely, when objects are grasped and handed over by controlling the posture of the upper body, the lower body is assumed to remain stationary. The advantage of this control method is that it enables stable movement and reliable object manipulation with a small amount of computation. The kinematic formulas are given in Equations (1)–(4). Equation (1) represents the kinematics of the lower body in the absolute coordinate system, Equations (2) and (3) represent the kinematics of the left and right manipulators, respectively, in the coordinate system relative to the lower body, and Equation (4) represents the kinematics of the manipulated object in the coordinate system relative to the lower body.

where

- : central coordinates and posture of the lower body;

- : omniwheel rotation angle;

- : coordinates and posture of the left-hand tip;

- : joint coordinates of the left arm and waist;

- : coordinates and posture of the right-hand tip;

- : joint coordinates of the right arm and waist;

- : position and posture of the manipulated object;

- : coordinates and posture of left and right fingers.

In the control sequence, the robot first moves its lower body, based on Equation (1), so that the object is within its manipulable range. Next, based on Equation (4), the tip positions and postures of the left and right manipulators are obtained from the position and posture of the manipulated object. Then, based on Equations (2) and (3), the joint coordinate values of the left and right manipulators and of the waist are obtained. Finally, the resulting joint angles of the left and right arms and of the waist are input to each servo system as target values. Consequently, the support movement is performed as desired by the person in need of care. The servo system of each joint is controlled with the highly practical proportional–integral (PI) control method.
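The paragraph above states that each joint servo uses PI control but gives no gains or plant model. The sketch below is a generic discrete-time PI loop driving a hypothetical first-order joint model; the gains (kp = 2, ki = 10), the 10 ms time step, and the 0.1 s time constant are made up for illustration and are not the robot's parameters.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """Discrete-time proportional-integral controller."""
    kp: float
    ki: float
    dt: float
    integral: float = 0.0

    def update(self, target: float, measured: float) -> float:
        error = target - measured
        self.integral += error * self.dt        # accumulate the integral term
        return self.kp * error + self.ki * self.integral


def simulate_joint(target: float, steps: int = 500, dt: float = 0.01, tau: float = 0.1) -> float:
    """Drive a hypothetical first-order joint model toward `target`; return the final angle."""
    controller = PIController(kp=2.0, ki=10.0, dt=dt)
    angle = 0.0
    for _ in range(steps):
        command = controller.update(target, angle)
        # Forward-Euler step of tau * d(angle)/dt = command - angle.
        angle += dt * (command - angle) / tau
    return angle


print(round(simulate_joint(1.0), 3))
```

The integral term is what removes the steady-state error: at rest the proportional term is zero, and the accumulated integral alone supplies the command that holds the joint at its target.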

3.3. Research on Understanding the Intentions of People Who Need Care

A person in need of nursing care who has difficulty walking cannot move freely around the room by themselves, so they stay in bed all day and become bedridden. Although food and drink are available in the refrigerator, bedridden people cannot go to the refrigerator to eat or drink by themselves outside the home helper’s visiting hours. In addition, although they have bedrooms, living rooms, kitchens, toilets, and bathrooms, they cannot use them as fully functional people do. Thus far, we have developed an algorithm for understanding the intentions of a bedridden person and confirmed its effectiveness using a prototype of the humanoid nursing care robot [29,30].

In [29], we proposed an algorithm for inferring objects and places based on physiological needs, such as hunger and thirst. We then conducted a confirmation experiment in which the humanoid care robot understood the physiological needs of a bedridden person and brought food from a table and milk from a refrigerator to the person’s bedside, while avoiding furniture and walls in a small home. In [30], the algorithm learned the preferences of each person in need of care during caregiving, enabling more appropriate care actions.

Care provided by robots is also expected not only to relieve labor shortages but also to improve the quality of care by taking into account the unique characteristics of each person in need of care. Quantitative management of nutritional intake is recognized as a way to improve the quality of care. We believe that if force sensors are attached to a two-armed robot, it may be possible to quantify the amount of drink poured or food taken naturally, as part of the pouring or serving process itself. In this article, we discuss the feasibility of quantifying nutritional intake and hydration by means of force sensors attached to a two-armed robot. Combined with the functions described in [29,30], this humanoid care robot enables us to understand the physiological needs of people requiring care and to manage their health quantitatively, bringing us one step closer to realizing a care robot that can perform a wide variety of care actions.

4. Realization of Quantitative Pouring Operations

Figure 6 shows the developed humanoid care robot helping a bedridden person to drink.

Figure 6.

Humanoid nursing care robot assisting with fluid intake.

The flow of assisting a bedridden person to drink is as follows:

- A person requiring care determines that they need to drink something [6,7];

- Take a pitcher containing a drink from the refrigerator and a cup from the cupboard or another location;

- Pour the drink into the cup;

- Hand the cup containing the drink to the person requiring care.

4.1. Equipped with Force Sensor

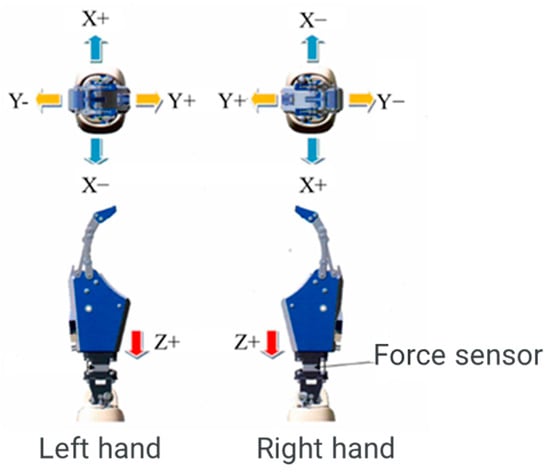

We propose a method for quantitatively pouring a drink in which the weight of the drink is measured using a force sensor mounted on the wrist of the nursing care robot’s hand, as shown in Figure 7.

Figure 7.

Force sensor.

The installed force sensor is a three-axis force sensor that can measure forces in the three axial directions of x, y, and z. It can measure large forces with a measurement range of ±250 N in the x and y directions, and 0 to 500 N in the z direction. The force sensor sampling period is 100 ms. The orientation of the force sensor is shown in Figure 8.
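Because the sensor reports force rather than mass, each z-axis sample has to be converted to grams before it can be compared with a pouring target. A minimal sketch, assuming the z axis is aligned with gravity while the hand is held level and that added load increases the reading (the actual sign convention is the one shown in Figure 8); the function names and example readings are hypothetical.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2


def grams_to_newton(mass_g: float) -> float:
    """Weight in newtons of a mass given in grams."""
    return mass_g / 1000.0 * STANDARD_GRAVITY


def fz_to_grams(fz_newton: float, tare_newton: float) -> float:
    """Mass in grams added to the hand since taring, from one z-axis sample.

    Assumes the sensor z axis is aligned with gravity and that added load
    increases the reading; both are assumptions for this sketch.
    """
    return (fz_newton - tare_newton) / STANDARD_GRAVITY * 1000.0


# Usage with made-up readings: tare with the empty cup, then read again after pouring.
tare = 2.0                                  # hypothetical reading with the empty cup (N)
after_pour = tare + grams_to_newton(150.0)  # hypothetical reading after pouring (N)
print(round(fz_to_grams(after_pour, tare), 1))
```

At this conversion, the 17 g resolution reported in Section 4.3 corresponds to about 0.167 N, and the 500 N upper limit of the z range to roughly 51 kg, so a cup of water uses only a small fraction of the sensor's range.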

Figure 8.

Force sensor orientation.

4.2. Realizing the Quantitative Pouring Operation of a Drink

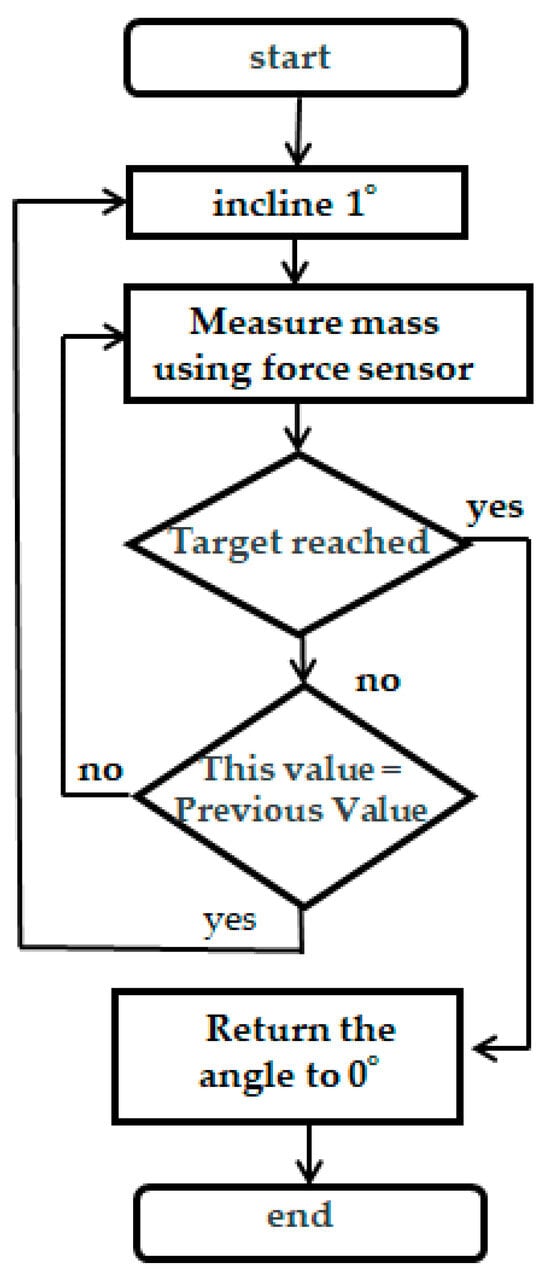

Figure 9 shows the flow of the operation of pouring a measured amount of drink into a cup from a pitcher, with the pitcher held in one hand and the cup in the other. The position of the hand holding the cup is adjusted so that the drink flows from the spout into the cup when the pitcher is tilted. The hand holding the pitcher is moved based on forward kinematics, whereas the hand holding the cup is moved based on inverse kinematics.

Figure 9.

Flow of the process of pouring a drink.

The flow of motion in Figure 9 consists of steps ① to ⑤:

- ① Tilt the hand holding the pitcher by 1°;

- ② Measure the mass held by the robot hand using its force sensor;

- ③ Determine whether the measured value has reached the target pouring amount. If it has, proceed to ⑤; if not, go to ④;

- ④ If the value measured this time is the same as the previous one, return to ① and tilt the hand by another 1°; otherwise, return to ② and measure again (the resolution of the force sensor is not considered here);

- ⑤ When the target value is reached, return the hand holding the pitcher to 0°.

Step ④ is included because the liquid released by the latest 1° tilt may not have finished flowing at the moment of measurement in ②. Therefore, at the force sensor’s sampling period of 100 ms, the current measured mass is compared with the previous one. If they differ, the liquid released by the last 1° tilt is judged to be still flowing, so the angle of the hand holding the pitcher is left unchanged; the robot waits for the pour at this angle to finish and returns to ②. If the current measured mass is the same as the previous one, the pour at this angle is judged to be complete, and the process returns to ①, where the hand holding the pitcher is tilted by another 1°.

4.3. Experiments and Results

We conducted a verification experiment in which the robot poured a set amount of water from a PET bottle into a cup, using the force sensor of the robot hand holding the cup for measurement.

Specifically, a PET bottle with a capacity of 650 g was filled with 300, 450, or 600 g of water, and the cup was held in one of the robot’s hands. The robot was operated to pour water from the PET bottle into the cup with a target pouring mass of 150 g, and the mass of the water poured into the cup was measured using a scale.

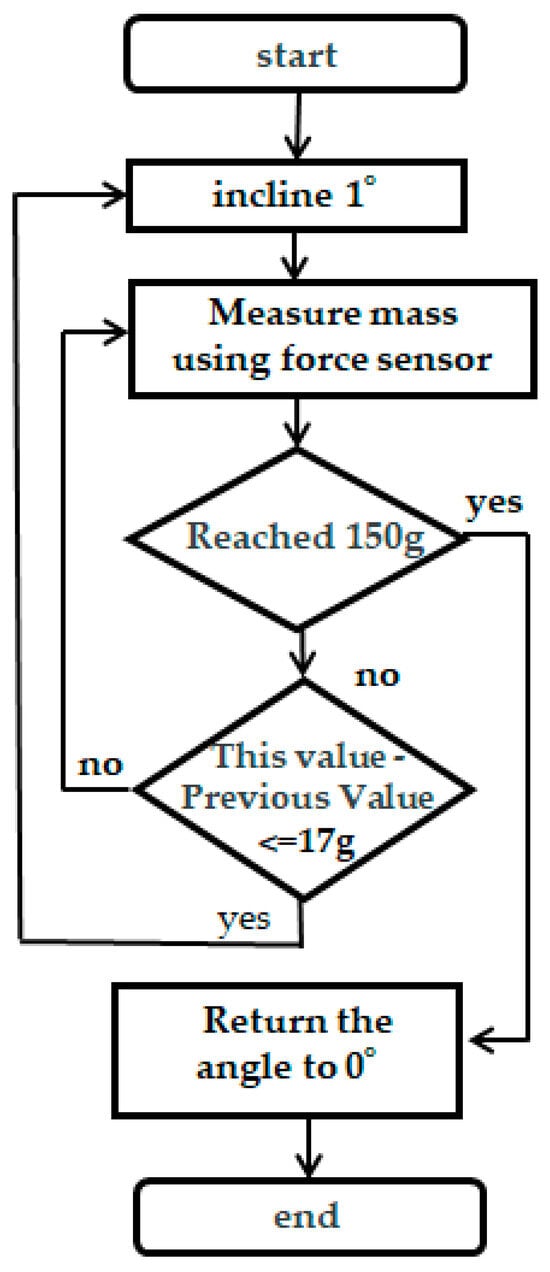

In the verification experiment, the robot was operated according to the flowchart shown in Figure 10; that is, the robot motion consisted of the following steps ① to ⑤. Because the resolution of the force sensor was only 17 g, step ④ compares successive measurements against this resolution rather than testing for exact equality.

Figure 10.

Flow of actions for pouring 150 g of water into a cup.

- ① Tilt the hand holding the PET bottle by 1°;

- ② Measure the mass of the water in the cup with the force sensor of the hand holding the cup;

- ③ Determine whether the measured amount of water in the cup has reached the target value of 150 g. If it has, proceed to ⑤; if not, go to ④;

- ④ Compare this measurement with the previous one. If the difference is greater than the 17 g resolution of the force sensor in the hand holding the cup, the water released by the last 1° tilt is judged to be still flowing, so the angle of the hand holding the PET bottle is left unchanged; the robot waits for the pour at this angle to finish and returns to ②. If the difference is smaller than or equal to 17 g, the pour at this angle is judged to be complete, and the process returns to ①, where the hand holding the PET bottle is tilted by another 1°;

- ⑤ Once the amount of water in the cup reaches the target value of 150 g, return the hand holding the PET bottle to 0°.
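The experiment replaces an exact-equality test in step ④ with a comparison against the 17 g sensor resolution. The decision made after each cup-side measurement (steps ③ and ④) can be sketched as a small pure function; the function name and return strings are illustrative.

```python
from typing import Optional

SENSOR_RESOLUTION_G = 17.0   # force-sensor resolution reported for the experiment
TARGET_G = 150.0             # pouring target in the experiment


def next_step(current_g: float, previous_g: Optional[float],
              target_g: float = TARGET_G,
              resolution_g: float = SENSOR_RESOLUTION_G) -> str:
    """Decide what follows a cup-side measurement (steps 3 and 4 of Figure 10)."""
    if current_g >= target_g:
        return "return bottle to 0 deg"      # step 5: target reached
    if previous_g is not None and abs(current_g - previous_g) <= resolution_g:
        return "tilt bottle 1 deg"           # step 1: change is within sensor resolution
    return "measure again"                   # step 2: water is judged to be still flowing


print(next_step(100.0, 90.0))    # change of 10 g, within resolution
print(next_step(120.0, 100.0))   # change of 20 g, still flowing
```

With this rule, any change of up to 17 g between two 100 ms samples is treated as settled, so a flow slower than about 17 g per sample cannot be distinguished from no flow, and the robot tilts the bottle further.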

Figure 11 shows the verification experiment of the robot pouring a certain amount of water from a PET bottle into a cup.

Figure 11.

Robot pouring 150 g of water into a cup.

Experiments to verify the robot’s behavior when pouring 150 g of water from PET bottles containing 300, 450, and 600 g into a cup were conducted three times each. The results of these experiments are presented in Table 1.

Table 1.

Results of pouring 150 g of water into a cup.

When the PET bottle was filled with 300 g of water, the average poured mass over the three trials with a target value of 150 g was 139.7 g. When it was filled with 450 g of water, the average was 140.3 g, and when it was filled with 600 g of water, the average was 143.3 g. The results of the validation experiment showed that the average for each condition was within approximately 10 g of the pouring target value; that is, the force sensor mounted on the robot wrist measured the poured mass with sufficient accuracy, and the quantitative pouring motion was realized well.
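The per-condition deviations can be recomputed directly from the three averages reported above; the per-trial values in Table 1 are not used. Names are illustrative.

```python
TARGET_G = 150.0

# Mean poured mass (g) per initial fill of the PET bottle (g), three trials each.
reported_means_g = {300: 139.7, 450: 140.3, 600: 143.3}


def deviations_from_target(means: dict[int, float], target: float = TARGET_G) -> dict[int, float]:
    """Signed deviation (mean minus target) for each initial fill, rounded to 0.1 g."""
    return {fill: round(mean - target, 1) for fill, mean in means.items()}


for fill, dev in deviations_from_target(reported_means_g).items():
    print(f"{fill} g fill: {dev:+.1f} g ({dev / TARGET_G:+.1%} of target)")
```

All three averages fall short of the 150 g target, by 6.7 to 10.3 g, or roughly 4.5% to 6.9% of the target.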

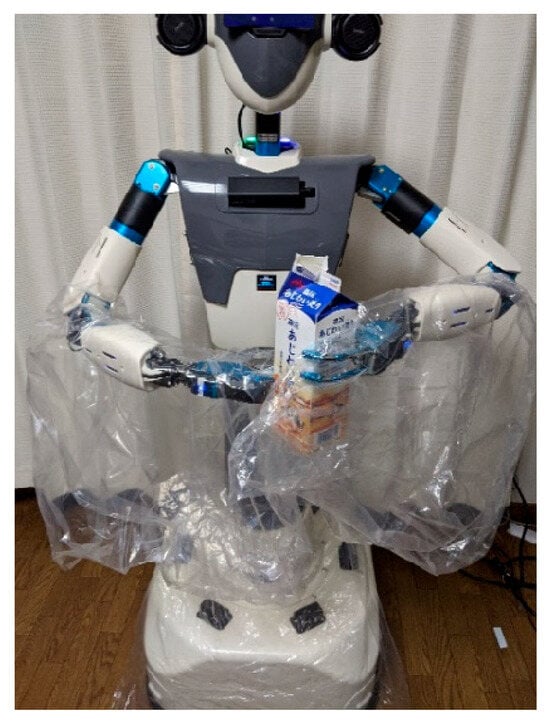

In addition, as shown in Figure 12, a verification experiment was conducted in which the robot used the force sensor of the hand holding the cup to pour a fixed amount of milk from a paper carton into the cup.

Figure 12.

Experimental scene of pouring milk in a fixed quantity.

Milk (300 g) was placed in a paper carton with a capacity of 1000 g, which the robot held in one hand while holding the cup in the other (Figure 12). The target value for pouring was set to 150 g, and the robot was operated to pour milk from the paper carton into the cup. The pouring motion was tested three times, and the experimental results are presented in Table 2. The average of the three pours was 160.7 g, and the error was within 15 g in all three cases.

Table 2.

Results of pouring 150 g of milk from a paper carton.

5. Conclusions and Future Research Directions

The world is facing the challenge of so-called care refugees, who are unable to obtain adequate care due to rapidly aging populations with low birthrates. Therefore, various research and development efforts are underway to create and popularize nursing care robots as a breakthrough that can solve this difficult problem. Until now, most nursing care robots for older people have had a single function. Due to the limited space in individual homes, it is difficult to provide multiple robots to assist or care for disabled older people in their lives, and the use of various nursing care robots is complicated and not standardized. Therefore, to truly play a role in society, nursing care robots need to have multiple functions and accomplish multiple assistive or nursing tasks. In this study, we have developed a humanoid nursing care robot with the goal of realizing various tasks such as assisted mobility, feeding, drinking, defecation, and bathing.

Moreover, robots are expected not only to relieve labor shortages but also to improve the quality of care by taking into account the unique characteristics of each person requiring care. Quantitative management of nutritional intake is considered a key factor in improving the quality of care. When done manually, such management takes time and requires placing food and drinks on a measuring device, which is difficult to implement in caregiving settings that already lack human resources. The idea behind this research was to attach a force sensor to the wrist of a nursing care robot to measure forces while it picks up and carries drinks and food, thereby quantifying intake and improving the quality of nursing care.

In this article, we described the development concept, prototype design, and fabrication of a humanoid nursing care robot. With the aim of improving the quality of caregiving, we proposed a method for quantitatively pouring a drink using force sensor measurements and demonstrated its effectiveness through verification experiments.

In this work, we focused on using the developed humanoid nursing care robot to help people requiring nursing care with eating. In the future, we plan to extend our research to the quantification of a variety of foods and to the use of this robot to assist caregivers with changing clothes, personal hygiene, and excretion care. We are confident that by continuing these steady efforts, we will be able to develop a humanoid nursing care robot that contributes to the field of nursing care.

Author Contributions

Conceptualization and methodology, S.W.; software, K.M.; validation, S.W. and K.M.; formal analysis, S.W.; investigation, S.W.; resources, S.W.; data curation, S.W. and K.M.; writing—original draft preparation, S.W.; writing—review and editing, S.W.; visualization, S.W.; supervision, S.W.; project administration, S.W.; funding acquisition, S.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the JSPS Grant-in-Aid for Scientific Research under Grant number 16K12503, the Canon Foundation, and the Casio Science Promotion Foundation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated during this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors would like to thank SATT SYSTEMS Ltd for its technical support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Statistics Bureau of Japan. Available online: https://www.stat.go.jp/data/topics/pdf/topics142.pdf (accessed on 17 December 2024).

- Cabinet Office, Government of Japan. Available online: https://www8.cao.go.jp/kourei/whitepaper/w-2024/html/zenbun/index.html (accessed on 17 December 2024).

- Ministry of Health, Labour and Welfare of Japan. Available online: https://www.mhlw.go.jp/toukei/list/81-1a.html (accessed on 17 December 2024).

- WHO. Available online: https://www.who.int/ (accessed on 17 December 2024).

- World Bank. Available online: https://www.worldbank.org/ext/en/home (accessed on 17 December 2024).

- Wang, S. Research on Welfare Robots: A Multifunctional Assistive Robot and Human–Machine System. Appl. Sci. 2025, 15, 1621.

- Li, L.; Foo, M.J.; Chen, J.; Tan, K.Y.; Cai, J.; Swaminathan, R.; Chua, K.S.G.; Wee, S.K.; Kuah, C.W.K.; Zhuo, H.; et al. Mobile Robotic Balance Assistant (MRBA): A gait assistive and fall intervention robot for daily living. J. Neuroeng. Rehabil. 2023, 20, 29.

- Fujimoto, A.; Matsumoto, N.; Jiang, Y.; Togo, S.; Teshigawara, S.; Yokoi, H. Gait Analysis based Speed Control of Walking Assistive Robot. In Proceedings of the IEEE International Conference on Intelligence and Safety for Robotics (ISR), Shenyang, China, 24–27 August 2018.

- Wang, Y.; Wang, S. A new directional-intent recognition method for walking training using an omnidirectional robot. J. Intell. Robot. Syst. 2017, 87, 231–246.

- Zhao, D.; Yang, J.; Okoye, M.-O.; Wang, S. Walking assist robot: A novel non-contact abnormal gait recognition approach based on extended set membership filter. IEEE Access 2019, 7, 76741–76753.

- Mukai, T.; Onishi, M.; Odashima, T.; Hirano, S.; Luo, Z. Development of the tactile sensor system of a human-interactive robot “RI-MAN”. IEEE Trans. Robot. 2008, 24, 505–512.

- Ding, M.; Ikeura, R.; Mori, Y.; Mukai, T.; Hosoe, S. Measurement of Human Body Stiffness for Lifting-Up Motion Generation Using Nursing-Care Assistant Robot—RIBA. In Proceedings of the 2013 IEEE SENSORS, Baltimore, MD, USA, 3–6 November 2013.

- Kato, K.; Yoshimi, T.; Aimoto, K.; Sato, K.; Itoh, N.; Kondo, I. Reduction of multiple-caregiver assistance through the long-term use of a transfer robot in a nursing facility. Assist. Technol. 2023, 35, 271–278.

- Ekanayaka, D.; Cooray, O.; Madhusanka, N.; Ranasinghe, H.; Priyanayana, S.; Buddhika, A.G.; Jayasekara, P. Elderly supportive intelligent wheelchair. In Proceedings of the 2019 Moratuwa Engineering Research Conference (MERCon), Moratuwa, Sri Lanka, 3–5 July 2019.

- Zhang, Z.; Liu, T.; Lan, Z.; Ding, H. Design and analysis of multi-posture electric wheelchair. In Proceedings of the 2024 World Rehabilitation Robot Convention (WRRC), Shanghai, China, 23–26 August 2024.

- Shen, B.; Wang, S. A User’s Steps Considered Motion Control Approach of an Intelligent Walking Training Robot. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA 2018), Changchun, China, 5–8 August 2018; pp. 1281–1286.

- Su, X.; Qing, F.; Chang, H.; Wang, S. Trajectory tracking control of human robots via adaptive sliding-mode approach. IEEE Trans. Cybern. 2024, 54, 1747–1754.

- Xu, P.; Chang, C.; Ren, Y.; Zhai, C.; Yu, H. Development of a novel and cost-effective meal assistance robot for self-feeding. In Proceedings of the 2024 World Rehabilitation Robot Convention (WRRC), Shanghai, China, 23–26 August 2024.

- Hou, Y.; Kiguchi, K. Generation of virtual tunnels for meal activity perception assist with an upper limb power assist exoskeleton robot. IEEE Access 2024, 12, 115137–115150.

- Toride, Y.; Sasaki, K.; Kadone, H.; Shimizu, Y.; Suzuki, K. Assistive Walker with Passive Sit-to-Stand Mechanism for Toileting Independence. In Proceedings of the 2021 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Delft, The Netherlands, 12–16 July 2021; pp. 12–16.

- Takahashi, Y.; Manabe, G.; Takahashi, K.; Hatakeyama, T. Simple Self-Transfer Aid Robotic System. In Proceedings of the 2003 IEEE International Conference on Robotics and Automation, Taipei, Taiwan, 14–19 September 2003.

- Wang, Y.; Wang, S. Development of an Excretion Care Robot with Human Cooperative Characteristics. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 6868–6871.

- Zhang, F.; Demiris, Y. Visual-tactile learning of garment unfolding for robot-assisted dressing. IEEE Robot. Autom. Lett. 2023, 8, 5512–5519.

- Tamei, T.; Matsubara, T.; Rai, A.; Shibata, T. Reinforcement learning of clothing assistance with a dual-arm robot. In Proceedings of the 11th IEEE-RAS International Conference on Humanoid Robots (Humanoids 2011), Bled, Slovenia, 26–28 October 2011; pp. 733–738.

- Zhu, J.; Gienger, M.; Franzese, G.; Kober, J. Do you need a hand?—A bimanual robotic dressing assistance scheme. IEEE Trans. Robot. 2024, 40, 1906–1919.

- Hirose, T.; Ando, T.; Fujioka, S.; Mizuno, O. Development of Head Care Robot Using Five-Bar Closed Link Mechanism with Enhanced Head Shape Following Capability. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013.

- Gu, Y.; Demiris, Y. VTTB: A visuo-tactile learning approach for robot-assisted bed bathing. IEEE Robot. Autom. Lett. 2024, 9, 5751–5758.

- Wang, W.; Ren, W.; Li, M.; Chu, P. A survey on the use of intelligent physical service robots for elderly or disabled bedridden people at home. Int. J. Crowd Sci. 2024, 8, 88–94.

- Yang, G.; Wang, S.; Yang, J. Desire-driven reasoning for personal care robots. IEEE Access 2019, 7, 75203–75212.

- Yang, G.; Wang, S.; Yang, J.; Shi, P. Desire-driven reasoning considering personalized care preferences. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 5758–5769.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).