1. Introduction

Motor behavior is regulated by the sensorimotor control system. This system integrates sensory input (i.e., visual, vestibular and somatosensory inputs) to execute motor commands, resulting in muscular responses [1]. Proprioception is a subsystem of the somatosensory system and is defined as the perception of joint and body movement as well as the position of the body or body segments in space [2]. For example, proprioception allows a person to localize a body segment without having to rely on visual input alone. Muscle spindles, Golgi tendon organs and other mechanoreceptors located in the skin and capsuloligamentous structures provide proprioceptive information to the central nervous system for central processing [3]. As postural control is also regulated by the sensorimotor control system, the development of postural control in children is related to the maturation of proprioception, more specifically lower limb proprioception. In other populations as well, difficulties in processing, integrating and (consciously) perceiving proprioceptive input can lead to impaired motor performance [4,5,6,7,8,9,10,11,12,13]. Training programs have been shown to improve proprioceptive function in various populations [14]. As such, it is important to measure proprioceptive function accurately in order to identify potential deficits as early as possible.

Different senses of proprioception can be measured, such as the ability to perceive joint position, movement (extent, trajectory and velocity), force level, muscle tension and weight [15]. The most commonly used and clinically applicable method to assess proprioception in the context of motor behavior is the Joint Position Reproduction (JPR) test, which assesses the sense of joint position [15,16,17]. The JPR test examines the ability to actively or passively reproduce a previously presented target joint position [18]. The joint angle set by the examiner is compared with the joint angle reproduced by the subject. The difference between the target and reproduced joint angles is defined as the angular error, i.e., the joint position reproduction error (JRE), and indicates proprioceptive accuracy (i.e., a measure of proprioceptive function). The JRE can be expressed as an absolute error, which considers only the magnitude of the error (regardless of its direction), or as a systematic error, which considers both the magnitude and the direction of the error.
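As a minimal illustration of these two definitions, the sketch below computes both error types for a single trial. It assumes the sign convention given in Section 2.5 (JRE = target angle minus reproduced angle, so a negative JRE means the reproduced angle was larger than the target); the function names and example angles are hypothetical.

```python
def systematic_jre(target_deg: float, reproduced_deg: float) -> float:
    """Signed error in degrees: keeps both magnitude and direction.

    Assumed convention: target minus reproduced, so a negative value means
    the reproduced angle was larger than the target angle.
    """
    return target_deg - reproduced_deg


def absolute_jre(target_deg: float, reproduced_deg: float) -> float:
    """Unsigned error in degrees: magnitude only, direction ignored."""
    return abs(systematic_jre(target_deg, reproduced_deg))


# Hypothetical knee trial: target 60 deg of flexion, reproduced at 64 deg.
print(systematic_jre(60.0, 64.0))  # -4.0
print(absolute_jre(60.0, 64.0))    # 4.0
```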

The gold standard for accurately measuring joint angles is laboratory-based optoelectronic three-dimensional motion capture. Unfortunately, the need to attach markers and the need for a high-tech laboratory hinder the routine collection of valuable high-quality data, because this approach is expensive and time-consuming. In addition, incorrect and inconsistent marker placement and soft tissue artifacts are common, and minimal or tight-fitting clothing is preferred to avoid these [19,20]. Recent advances in biomedical engineering have resulted in new deep-learning-based techniques that track body landmarks in simple video recordings with a high degree of automation and allow unobtrusive recordings in a natural environment. With these techniques, manually placed, skin-mounted markers can be replaced by automatically detected landmarks. In humans, the development and application of systems that estimate two- and three-dimensional human poses by tracking body landmarks without markers under a variety of conditions are increasing (e.g., Theia3D, DeepLabCut, Captury, OpenPose, Microsoft Kinect, Simi Shape). DeepLabCut is a free open-source toolbox that builds on a state-of-the-art pose-estimation algorithm to allow users to train a deep neural network to track user-defined body landmarks [21,22]. DeepLabCut distinguishes itself because it is free and allows users to train their own model or to extend and retrain a pre-trained model in which joints are annotated. Deep-learning-based techniques such as DeepLabCut make it possible to accurately measure sagittal knee and hip joint angles during walking and jumping with a high level of automation and without the need for markers or a laboratory. However, deep-learning-based techniques (including DeepLabCut) have not yet been validated against a marker-based motion capture system for measuring joint angles and proprioceptive function during JPR tests. To address this gap in the literature, this study aims to validate two-dimensional deep-learning-based motion capture (DeepLabCut) with respect to laboratory-based optoelectronic three-dimensional motion capture (Vicon motion capture system, gold standard) to assess lower limb proprioceptive function (joint position sense) by measuring the JRE during knee and hip JPR tests in typically developing children.

2. Materials and Methods

2.1. Study Design

A cross-sectional study design was used to validate two-dimensional deep-learning-based motion capture (DeepLabCut) for measuring the JRE during knee and hip JPR tests with respect to laboratory-based optoelectronic three-dimensional motion capture (Vicon motion capture system). This study is part of a larger project in which children performed the JPR tests three times per joint, on both the left and right sides, to assess proprioceptive function in typically developing children [23] and children with neurological impairments. The COSMIN reporting guidelines were followed [24].

2.2. Participants

Potential participants were included if they were (1) aged between five years, zero months and twelve years, eleven months, (2) born > 37 weeks of gestation (full-term) and (3) cognitively capable of understanding and participating in the assessment procedures. They were excluded if, according to a general questionnaire completed by the parents, they had (1) intellectual delays (IQ < 70), (2) developmental disorders (e.g., developmental coordination disorder, autism spectrum disorder or attention deficit hyperactivity disorder), (3) uncorrected visual or vestibular impairments and/or (4) neurological, orthopedic or other medical conditions that might impede the proprioceptive test procedure. The children were recruited from the researcher's lab environment, an elementary school (Vrije Basisschool Lutselus, Diepenbeek, Belgium), acquaintances and social media. Parents/caregivers gave written informed consent prior to the experiment for their child's participation and the analysis of the data. The study protocol (piloting and actual data collection) was in agreement with the Declaration of Helsinki and was approved by the Committee for Medical Ethics (CME) of Hasselt University (UHasselt), the CME of Antwerp University Hospital-University of Antwerp (UZA-UA) and the Ethics Committee Research of University Hospitals Leuven-KU Leuven (UZ-KU Leuven) (B3002021000145, 11 March 2022).

2.3. Experimental Procedure

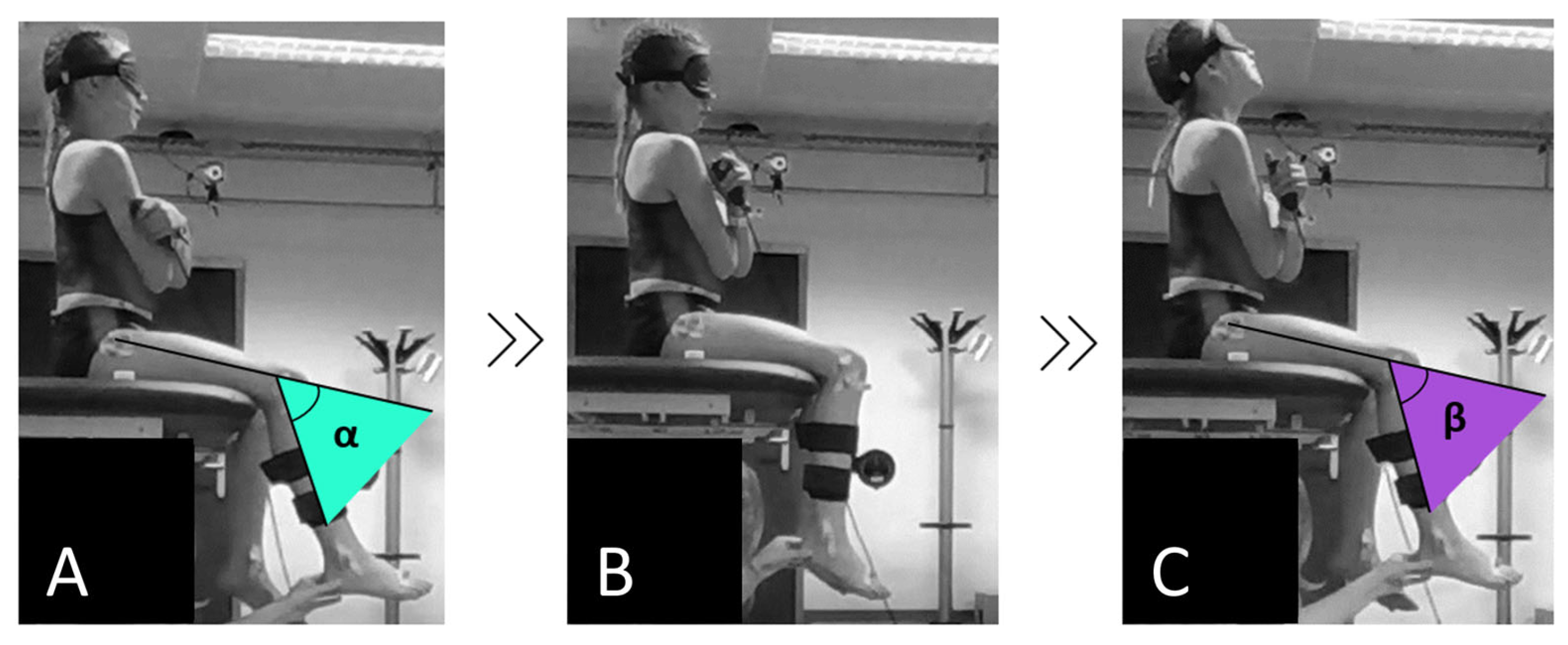

Proprioception (joint position sense) was assessed as the child's ability to re-identify a passively placed target position of the ipsilateral hip (90° of flexion) and knee (60° of flexion) according to the passive-ipsilateral JPR method (Figure 1). For the knee JPR test, children were seated blindfolded and barefoot on the table with their lower legs hanging relaxed and unsupported (90° of knee flexion). The upper leg was fully supported by the table (90° of hip flexion) and the hands were crossed on the chest. For the hip JPR test, the resting position was the same, except that the children sat on an inclined cushion on the table (without back support) to set the baseline hip joint angle at 70° of flexion. From the resting position, the examiner moved the child's limb either 30° toward knee extension, resulting in a final knee flexion angle of 60°, or 20° toward hip flexion, resulting in a final hip flexion angle of 90°, using an inclinometer attached distally to the moving segment. After the child had experienced and memorized this joint position for five seconds, the limb was passively returned to the start position. The examiner then moved the ipsilateral limb through the same range again, and the child was asked to re-identify the target joint position as accurately as possible by pressing a button synchronized with the motion capture software.

Depending on the region from which the child was recruited, testing was conducted at the Multidisciplinary Motor Centre Antwerp (M2OCEAN) (University of Antwerp) or the Gait Real-time Analysis Interactive Lab (GRAIL) (Hasselt University) by the same trained examiner using the same standardized protocol and instructions. This protocol was first tested on seven pilot participants before the actual data collection started.

For the current study, we focused on the concurrent validity of DeepLabCut markerless tracking with respect to three-dimensional (3D) Vicon optoelectronic motion capture to assess proprioceptive function. This required synchronized measurements of both systems. In the current study, 90 knee JPR test trials of 15 typically developing children and 126 hip JPR test trials of 21 typically developing children were screened for eligibility. Data collection was conducted between April 2022 and June 2023. Trials were eligible for the current study if the JPR tests were captured on video, if there was accurate tracking of the markers for the 3D movement analysis and if there was visibility of the shoulder, hip, knee and ankle joints of the child in the sagittal video recordings.

2.4. Materials and Software

Lower body 3D kinematics were collected with a 10-camera Vicon motion capture system (VICON, Oxford Metrics, Oxford, UK; 100 Hz) using the International Society of Biomechanics (ISB) lower limb marker model (26 markers) [25]. The 3D knee and hip flexion/extension joint angles were quantified in VICON Nexus software (version 2.12.1) using Euler angles.

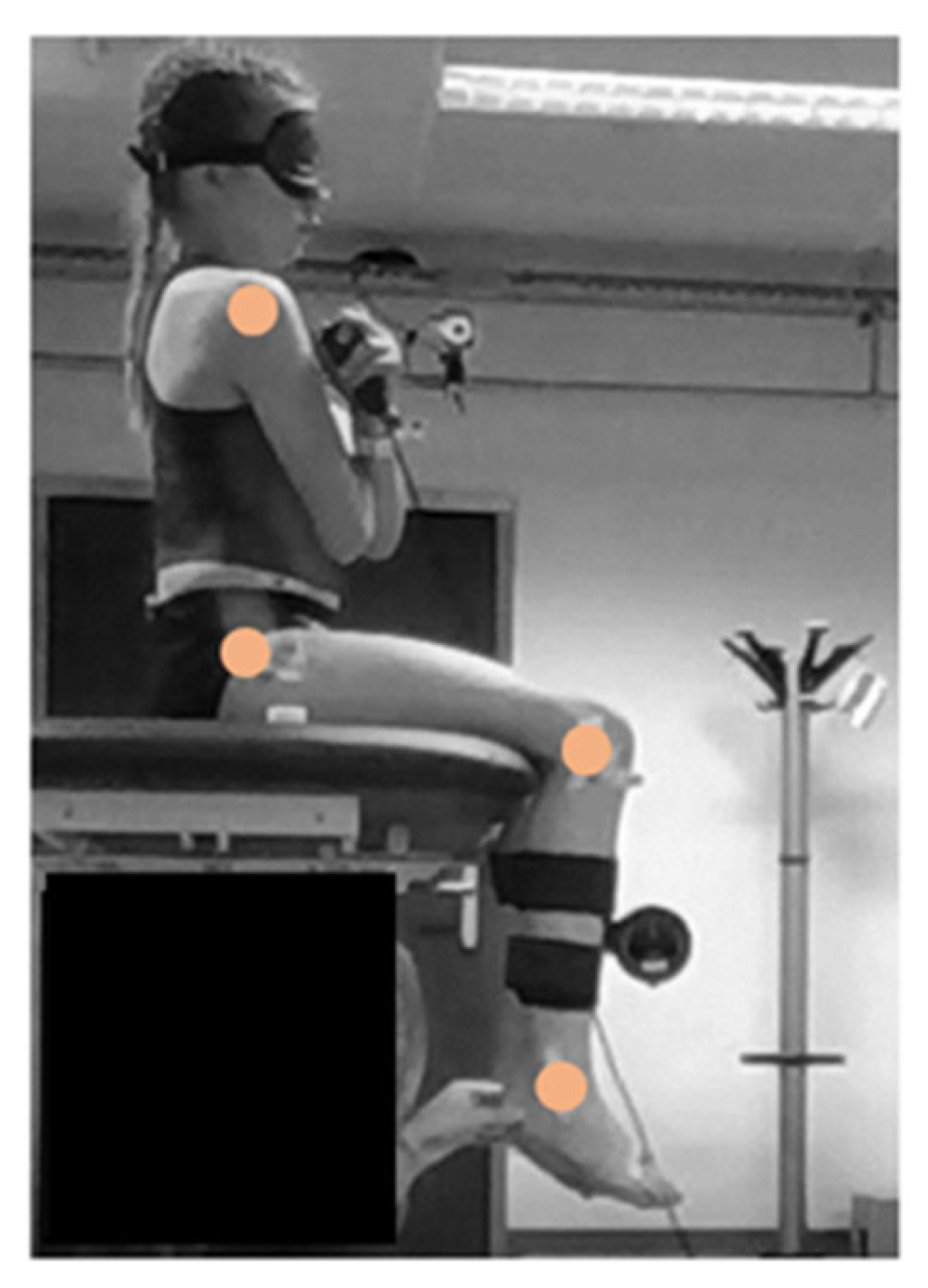

Two-dimensional (2D) pose estimation was performed with DeepLabCut (version 2.3.7), an open-source deep learning Python toolbox (https://github.com/DeepLabCut (accessed on 10 October 2023)) [21,22]. Anatomical landmarks (i.e., ankle, knee, hip and shoulder) were tracked in sagittal video recordings (Figure 2).

The resolution of the video recordings was 644 × 486 pixels at the M2OCEAN laboratory and 480 × 640 pixels at the GRAIL. The frame rate of the video recordings was 50 frames per second at both sites. Separate DeepLabCut neural networks were created for each joint and each side (left and right). The pretrained MPII human model of the DeepLabCut toolbox (ResNet101) was used to retrieve the 2D coordinates of these anatomical landmarks:

with an additional 30 manually labeled frames from eight videos of left knee JPR test trials of six different children (network 1);

with an additional 25 manually labeled frames from six videos of right knee JPR test trials of five different children (network 2);

with an additional 28 manually labeled frames from six videos of left hip JPR test trials of six different children (network 3); and

with an additional 26 manually labeled frames from nine videos of right hip JPR test trials of six different children (network 4).

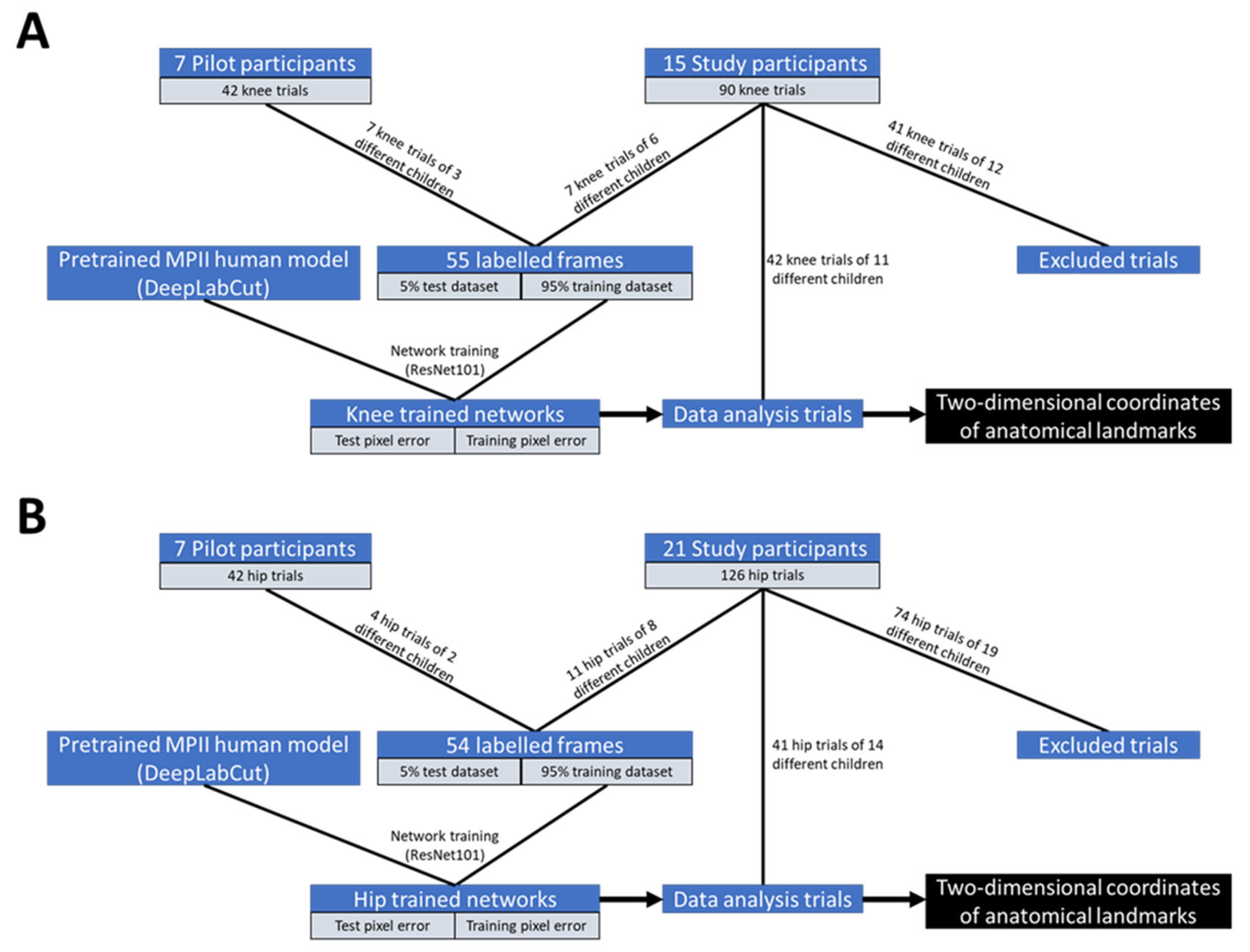

The labeled frames are a combination of frames from videos of the study and pilot participants (Table 1 and Table 2, Figure 3). These videos were used only for network training and were excluded from the statistical analyses. If one or more videos of a child were used for additional labeling to extend a neural network, all videos of that child were excluded from the statistical analyses (Tables S1 and S2). Frames were manually selected for labeling to cover a variety of appearances within the datasets (e.g., clothing and posture of the participant and examiner, background, light conditions). The labeled frames were randomly split into a training set (95%) and a test set (5%). The knee networks were trained for up to 520,000 iterations and the hip networks for up to 500,000 iterations, both with a batch size of eight. Only trials with confident predictions of the anatomical landmark coordinates were included in the analyses (i.e., prediction likelihood above a p-cutoff of 0.8).
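The likelihood-based inclusion rule could be sketched as follows. DeepLabCut returns an x coordinate, a y coordinate and a likelihood per landmark per frame; here each frame is assumed to be a plain dictionary of (x, y, likelihood) tuples, and the check is assumed to apply to the target and reproduction frames of a trial. Both the data layout and the helper names are hypothetical and are not part of the DeepLabCut API.

```python
P_CUTOFF = 0.8  # likelihood threshold used in the study

# Landmarks needed for each joint angle (Section 2.4)
KNEE_LANDMARKS = ("hip", "knee", "ankle")
HIP_LANDMARKS = ("shoulder", "hip", "knee")


def confident_frame(frame, landmarks, p_cutoff=P_CUTOFF):
    """True if every required landmark in this frame exceeds the cutoff.

    `frame` maps a landmark name to an (x, y, likelihood) tuple.
    """
    return all(frame[name][2] > p_cutoff for name in landmarks)


def keep_trial(frames, event_frames, landmarks, p_cutoff=P_CUTOFF):
    """Keep a trial only if predictions are confident at all event frames
    (assumed here: the target frame and the reproduction frame)."""
    return all(confident_frame(frames[i], landmarks, p_cutoff) for i in event_frames)


frames = [
    {"hip": (100, 200, 0.99), "knee": (180, 210, 0.95), "ankle": (185, 300, 0.90)},
    {"hip": (101, 200, 0.98), "knee": (181, 211, 0.60), "ankle": (186, 300, 0.92)},
]
print(keep_trial(frames, [0], KNEE_LANDMARKS))     # True
print(keep_trial(frames, [0, 1], KNEE_LANDMARKS))  # False (knee likelihood 0.60)
```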

The 2D knee flexion/extension joint angles were quantified by determining the angle between the upper and lower leg using trigonometric functions based on hip, knee and ankle joint coordinates via deep-learning-based motion capture. The 2D hip flexion/extension joint angles were quantified by determining the angle between the trunk and the upper leg using trigonometric functions based on shoulder, hip and knee joint coordinates via deep-learning-based motion capture.
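The angle calculation described above can be sketched with basic vector trigonometry. This is one possible implementation, not necessarily the one used in the study: the interior angle at the middle landmark is obtained from the cross and dot products of the two segment vectors via `atan2`, and flexion is assumed to be 180° minus that interior angle (so a straight limb gives 0°). Because the unsigned interior angle is used, hyperextension cannot be distinguished from flexion.

```python
import math


def interior_angle(a, vertex, b):
    """Angle in degrees at `vertex` between segments vertex->a and vertex->b.

    Points are (x, y) pixel coordinates; the result lies in [0, 180].
    """
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    # atan2(|cross|, dot) stays numerically stable near 0 and 180 degrees
    return math.degrees(math.atan2(abs(cross), dot))


def knee_flexion(hip, knee, ankle):
    """Assumed convention: 0 deg when thigh and shank are aligned."""
    return 180.0 - interior_angle(hip, knee, ankle)


def hip_flexion(shoulder, hip, knee):
    """Assumed convention: 0 deg when trunk and thigh are aligned."""
    return 180.0 - interior_angle(shoulder, hip, knee)


# Seated child: horizontal thigh, vertical shank (image y axis points down).
print(round(knee_flexion(hip=(100, 200), knee=(200, 200), ankle=(200, 300)), 1))  # 90.0
# Upright trunk above the hip, horizontal thigh.
print(round(hip_flexion(shoulder=(100, 50), hip=(100, 200), knee=(200, 200)), 1))  # 90.0
```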

2.5. Data Analysis

The time points of the target angles were determined by placing event markers in the VICON Nexus software. The time points of the reproduced angles were determined by event markers that were created in the same optoelectronic 3D motion capture software when the child pressed the button. These time points were also used to determine the target and reproduced angles via deep-learning-based motion capture. Data of the left and right sides were pooled.
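Because the event markers were set in the 100 Hz VICON recording while the videos were recorded at 50 frames per second, each event time has to be mapped to a video frame before the DeepLabCut angle can be read. The sketch below assumes that both recordings start at the same instant, with an optional hypothetical `offset_s` parameter for a known delay; the helper names and the nearest-frame rounding rule are illustrative assumptions.

```python
import math

VICON_HZ = 100.0  # marker-based capture rate (Section 2.4)
VIDEO_HZ = 50.0   # video frame rate at both sites


def vicon_to_video_frame(vicon_frame, offset_s=0.0):
    """Nearest video frame for a VICON event frame.

    `offset_s` is a hypothetical delay of the video start relative to the
    VICON start (0 assumes perfectly synchronized recordings).
    """
    t = vicon_frame / VICON_HZ - offset_s
    return math.floor(t * VIDEO_HZ + 0.5)  # round half up


def jre_from_events(video_angles, target_event, reproduced_event, offset_s=0.0):
    """Systematic JRE (target minus reproduced) from per-frame 2D angles."""
    target = video_angles[vicon_to_video_frame(target_event, offset_s)]
    reproduced = video_angles[vicon_to_video_frame(reproduced_event, offset_s)]
    return target - reproduced


# Hypothetical per-frame knee angles; events at VICON frames 250 and 400.
angles = [90.0] * 300
angles[125] = 60.0  # target position held
angles[200] = 63.0  # position when the button was pressed
print(jre_from_events(angles, 250, 400))  # -3.0
```

Since the left and right sides were pooled, JREs from both sides can simply be collected in a single list before the statistical analyses.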

The difference between the targeted and reproduced joint angle (i.e., JRE in degrees) was the outcome measure to validate deep-learning-based motion capture (DeepLabCut) with respect to laboratory-based 3D optoelectronic motion capture (gold standard) to assess proprioceptive function. Negative JREs corresponded to a greater reproduced angle compared to the targeted angle. Positive JREs corresponded to a smaller reproduced angle compared to the targeted angle.

2.6. Statistics

The normality of the residuals of the JRE differences between the systems was assessed by visually checking the Q-Q plot and histogram of the unstandardized residuals, by determining the skewness and kurtosis and by using the Shapiro–Wilk test.
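The skewness and kurtosis checks can be reproduced with the bias-adjusted sample formulas (G1 and G2); that these match the values SPSS reports is an assumption of this sketch. The Shapiro–Wilk test is not re-implemented here; in Python it is available as `scipy.stats.shapiro`. The residual values in the example are hypothetical.

```python
import math


def _central_moments(x):
    n = len(x)
    m = sum(x) / n
    m2 = sum((v - m) ** 2 for v in x) / n
    m3 = sum((v - m) ** 3 for v in x) / n
    m4 = sum((v - m) ** 4 for v in x) / n
    return n, m2, m3, m4


def skewness(x):
    """Adjusted Fisher-Pearson skewness G1 (requires n >= 3)."""
    n, m2, m3, _ = _central_moments(x)
    g1 = m3 / m2 ** 1.5
    return math.sqrt(n * (n - 1)) / (n - 2) * g1


def excess_kurtosis(x):
    """Bias-adjusted excess kurtosis G2 (requires n >= 4); 0 for a normal."""
    n, m2, _, m4 = _central_moments(x)
    g2 = m4 / m2 ** 2 - 3.0
    return ((n + 1) * g2 + 6.0) * (n - 1) / ((n - 2) * (n - 3))


residuals = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical, perfectly symmetric
print(skewness(residuals))                   # 0.0
print(round(excess_kurtosis(residuals), 6))  # -1.2
```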

For each DeepLabCut neural network, a training and a test pixel error were calculated. For the training pixel error, the locations of the manually labeled anatomical landmarks in the frames of the training dataset (i.e., 95% of all labeled frames) were compared with the locations predicted after training. For the test pixel error, the locations of the manually labeled anatomical landmarks in the frames of the test dataset (i.e., 5% of all labeled frames, which were not used for training) were compared with the locations predicted after training.
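Both pixel errors can be sketched as the mean Euclidean distance between manually labeled and predicted landmark positions, computed separately on the training and test frames. The averaging over all landmarks and frames, the data layout and the random 95/5 split helper are assumptions for illustration; DeepLabCut computes its own training and test errors during network evaluation.

```python
import math
import random


def split_frames(frame_ids, train_fraction=0.95, seed=0):
    """Hypothetical random split of labeled frames into training and test sets."""
    ids = list(frame_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(train_fraction * len(ids))
    return ids[:n_train], ids[n_train:]


def pixel_error(labeled, predicted, frame_ids):
    """Mean Euclidean distance (pixels) over all landmarks of the given frames.

    `labeled` and `predicted` map frame id -> {landmark: (x, y)}.
    """
    dists = [
        math.hypot(predicted[f][name][0] - x, predicted[f][name][1] - y)
        for f in frame_ids
        for name, (x, y) in labeled[f].items()
    ]
    return sum(dists) / len(dists)


labeled = {0: {"knee": (0.0, 0.0), "hip": (10.0, 10.0)}}
predicted = {0: {"knee": (3.0, 4.0), "hip": (10.0, 10.0)}}
print(pixel_error(labeled, predicted, [0]))  # 2.5
```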

To assess differences in systematic JREs between DeepLabCut and VICON, a linear mixed model was used with hip or knee JRE as the dependent variable, measurement system as a fixed factor and subject ID as a random factor. The best repeated covariance type was chosen based on the Akaike information criterion (AIC).

In addition, the best absolute error (i.e., the smallest absolute JRE across the three repetitions) was determined per subject and compared between VICON and DeepLabCut using a paired t-test, in order to validate a clinically applicable outcome measure. Furthermore, the linear correlation between the best absolute errors measured with DeepLabCut and VICON was assessed using Pearson's correlation coefficient.
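The per-subject outcome and the two statistics can be sketched with the Python standard library. The sketch computes only the test statistics; the p-values come from the t distribution (available in Python as `scipy.stats.ttest_rel` and `scipy.stats.pearsonr`). The repetition values in the example are hypothetical.

```python
import math
from statistics import mean, stdev


def best_absolute_error(jres):
    """Smallest absolute JRE across a subject's repetitions."""
    return min(abs(e) for e in jres)


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for x - y."""
    d = [a - b for a, b in zip(x, y)]
    return mean(d) / (stdev(d) / math.sqrt(len(d))), len(d) - 1


def pearson_r(x, y):
    """Pearson correlation coefficient (degrees of freedom n - 2)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical three repetitions per subject, per system.
vicon = [best_absolute_error(r) for r in ([-3.0, 1.5, 4.0], [2.0, -6.0, 5.0], [7.0, 3.5, -4.0])]
dlc = [best_absolute_error(r) for r in ([-2.5, 2.0, 3.0], [2.5, -5.0, 6.0], [6.0, 4.5, -3.0])]
print(vicon, dlc)
print(paired_t(vicon, dlc), pearson_r(vicon, dlc))
```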

Hip and knee Bland–Altman plots were created (based on the systematic JREs) with a 95% confidence interval for the mean difference between the systems. Proportional bias was assessed by testing, with linear regression analysis, whether the slope of the regression line fitted to the Bland–Altman plot differed significantly from zero.
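The Bland–Altman quantities and the proportional-bias check can be sketched as follows: the mean difference (bias) between the systems, its 95% confidence interval, the conventional 95% limits of agreement, and an ordinary least-squares regression of the differences on the pairwise means. The normal quantile 1.96 is an assumption of this sketch (a t quantile gives a slightly wider interval for small samples), the significance test of the slope is omitted, and the differences must not all be equal.

```python
import math
from statistics import mean, stdev

Z95 = 1.96  # normal approximation for 95% intervals


def bland_altman(a, b):
    """Bland-Altman summary for paired measurements a (e.g. VICON) and b (DeepLabCut)."""
    diffs = [x - y for x, y in zip(a, b)]
    means = [(x + y) / 2 for x, y in zip(a, b)]
    n = len(diffs)
    bias, sd = mean(diffs), stdev(diffs)
    half_ci = Z95 * sd / math.sqrt(n)

    # OLS of differences on means: a slope different from zero suggests proportional bias
    mx, my = mean(means), bias
    sxy = sum((m - mx) * (d - my) for m, d in zip(means, diffs))
    sxx = sum((m - mx) ** 2 for m in means)
    syy = sum((d - my) ** 2 for d in diffs)
    slope = sxy / sxx
    return {
        "bias": bias,
        "bias_ci": (bias - half_ci, bias + half_ci),
        "limits_of_agreement": (bias - Z95 * sd, bias + Z95 * sd),
        "slope": slope,
        "intercept": my - slope * mx,
        "r_squared": sxy ** 2 / (sxx * syy),
    }


# Hypothetical data where the difference grows with the mean (proportional bias)
print(bland_altman([2.0, 4.0, 6.0, 8.0], [1.0, 2.0, 3.0, 4.0]))
```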

Statistical analyses were performed with SPSS (v29) at α < 0.05.

3. Results

In total, 42 trials of knee JPR tests of 11 typically developing children (six girls, mean age 8.9 ± 1.0 years, mean BMI 17.0 ± 2.7 kg/m²), out of the 90 initially screened trials of 15 typically developing children, were included in the statistical analyses (Figure 3 and Table S1). Likewise, 41 trials of hip JPR tests of 14 typically developing children (six girls, mean age 9.0 ± 1.1 years, mean BMI 16.9 ± 2.5 kg/m²), out of the 126 initially screened trials of 21 typically developing children, were included in the statistical analyses (Figure 3 and Table S2).

Visual inspection of the Q-Q plot and histogram of the unstandardized residuals, the Shapiro–Wilk test results (knee: p = 0.91, hip: p = 0.31), and skewness (knee: 0.47, hip: 0.49) and kurtosis (knee: 1.03, hip: 0.98) values close to zero indicated that the data were normally distributed.

Training and test pixel errors of all DeepLabCut neural networks were below four pixels, which corresponds to errors below 2 cm (Table S3).

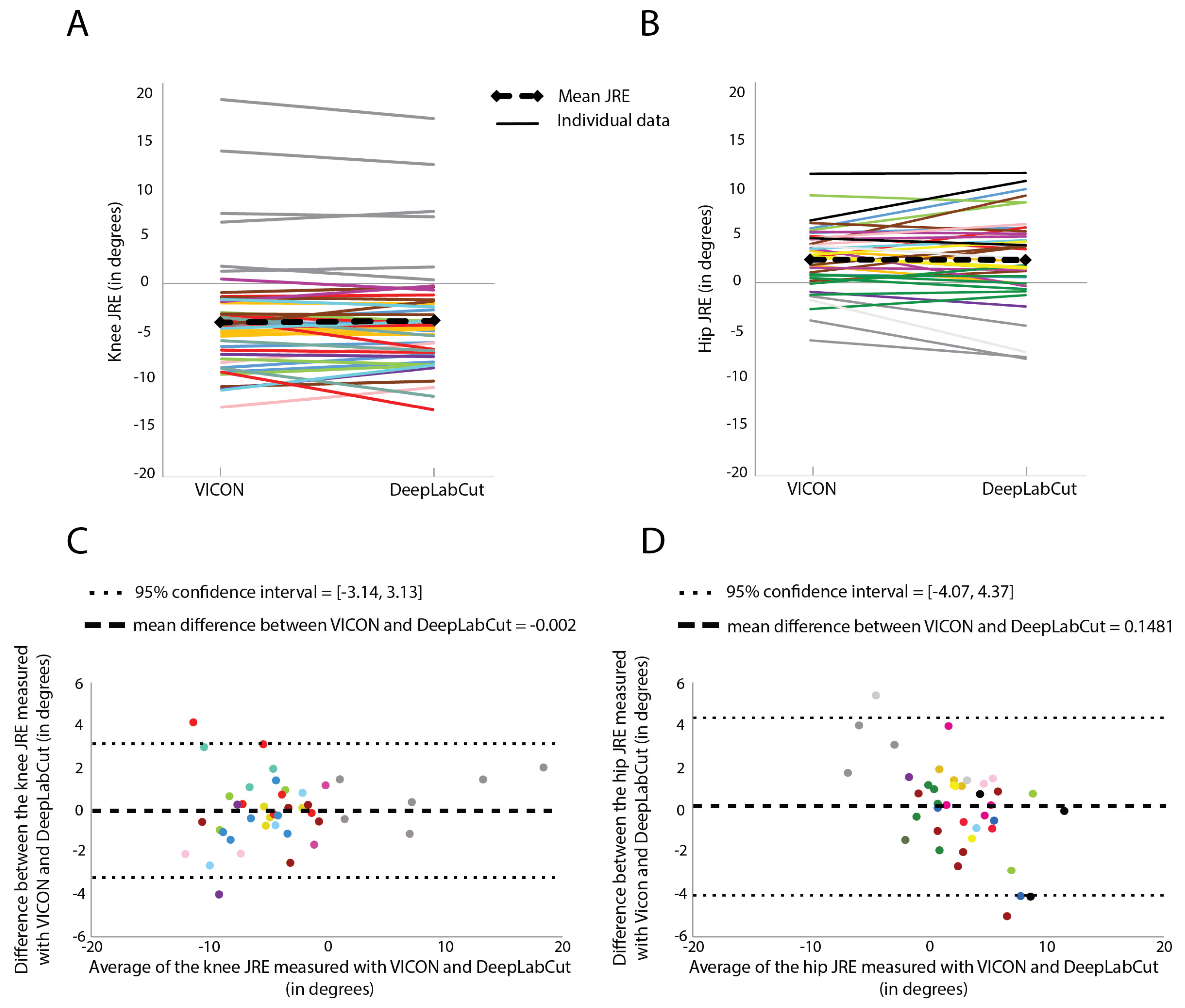

3.1. Knee JPR Tests

There was no significant difference between the knee systematic JREs measured with VICON (−3.65 degrees) and those measured with DeepLabCut (−3.69 degrees) (F = 0.09, p = 0.77, covariance structure = Toeplitz) (Figure 4). The best absolute errors measured with VICON (6.2 degrees) did not differ significantly from those measured with DeepLabCut (5.9 degrees) (t(10) = 0.22, p = 0.83). The best absolute errors measured with VICON were strongly correlated with those measured with DeepLabCut (r(9) = 0.92, p < 0.001).

The scatter of the differences was uniform (homoscedasticity), and the slope of the regression line fitted to the Bland–Altman plot did not differ significantly from zero (R² = 0.04, F(1, 40) = 1.76, p = 0.19).

3.2. Hip JPR Tests

There was no significant difference between the hip systematic JREs measured with VICON (−2.42 degrees) and those measured with DeepLabCut (−2.41 degrees) (F = 0.38, p = 0.54, covariance structure = compound symmetry) (Figure 4). The best absolute errors measured with VICON (3.4 degrees) did not differ significantly from those measured with DeepLabCut (4.2 degrees) (t(13) = −0.12, p = 0.90). The best absolute errors measured with VICON were strongly correlated with those measured with DeepLabCut (r(12) = 0.81, p < 0.001).

Proportional bias was present, as the slope of the regression line fitted to the Bland–Altman plot differed significantly from zero (R² = 0.31, F(1, 39) = 17.46, p < 0.001).

4. Discussion

The aim of this study was to validate two-dimensional deep-learning-based motion capture (DeepLabCut) with respect to laboratory-based optoelectronic three-dimensional motion capture (VICON, gold standard) to assess proprioceptive function (joint position sense) by measuring the JRE during knee and hip JPR tests in typically developing children. We validated the measurement of knee and hip systematic JREs with DeepLabCut with respect to VICON, and additionally validated the measurement of the best absolute JREs with DeepLabCut, a clinically applicable outcome measure for which the direction of the error (overshooting or undershooting) is not important.

To date, no study has reported the validity of a deep-learning-based motion capture system for the assessment of proprioception by measuring the JRE during knee and hip JPR tests. Deep-learning-based motion capture techniques (such as OpenPose, Theia3D, Kinect and DeepLabCut) have already been validated against marker-based motion capture systems for measuring joint kinematics and spatiotemporal parameters during walking, throwing or jumping in children with cerebral palsy [26] and healthy adults [27,28,29,30,31,32]. During walking, the average Root Mean Square (RMS) errors between markerless and marker-based tracking ranged from 3.2 to 5.7 degrees for the sagittal knee joint angle and from 3.9 to 11.0 degrees for the sagittal hip joint angle. These differences between the two systems are larger than the average absolute differences in systematic JREs between the two systems in the current study (knee: 1.2 degrees, hip: 1.7 degrees). The larger differences between the systems during walking compared with JPR tests could be explained by the higher movement speed of the body segments during walking. Higher movement speed increases the probability of image blurring, which may lead to inaccurate markerless tracking of the body landmarks [33].

Furthermore, the differences in JREs measured with the two systems can be considered negligible, because the average absolute JRE differences between the systems (knee: 1.2 degrees (systematic) and 0.9 degrees (best absolute); hip: 1.7 degrees (systematic) and 1.2 degrees (best absolute)) are smaller than the intersession standard error of measurement (SEM) values for the knee and hip JRE measured with VICON, which were previously determined in our sample of typically developing children (knee: 2.26 degrees, hip: 2.03 degrees) (submitted for publication; unpublished [23]). Further research is needed to assess the reliability of measuring the JRE with DeepLabCut.
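The comparison with the SEM amounts to checking whether the mean absolute between-system difference stays below the SEM of the respective joint. A minimal sketch using the SEM values reported above; the helper names and the per-trial JREs in the example are hypothetical.

```python
from statistics import mean

# Intersession SEM (degrees) of the VICON JRE, as reported above [23]
SEM_DEG = {"knee": 2.26, "hip": 2.03}


def mean_abs_difference(jre_vicon, jre_dlc):
    """Mean absolute difference (degrees) between paired JREs of two systems."""
    return mean(abs(v - d) for v, d in zip(jre_vicon, jre_dlc))


def within_sem(jre_vicon, jre_dlc, joint):
    """True if the average between-system difference is smaller than the SEM."""
    return mean_abs_difference(jre_vicon, jre_dlc) < SEM_DEG[joint]


print(within_sem([-4.0, -2.0, 1.0], [-3.0, -3.5, 2.0], "knee"))  # True
```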

In order to use DeepLabCut to assess proprioceptive function, it is important to unravel the potential cause of the proportional bias present in the hip Bland–Altman plot. The difference between the hip JRE measured with VICON and DeepLabCut was greater when the mean JRE of both systems deviated more from zero. More specifically, differences between the hip JRE measured with VICON and DeepLabCut greater than two degrees (i.e., the hip SEM) often corresponded to a relatively large VICON JRE of at least three degrees. However, large VICON JREs did not always correspond to large differences between the two systems. This suggests that the DeepLabCut JREs were measured inaccurately in some, but not all, children with relatively large VICON JREs of at least three degrees. A possible explanation is that the DeepLabCut neural networks were not optimally trained to predict the coordinates of the anatomical landmarks used to determine joint angles in some children with relatively large deviations of the reproduced angle from the target angle (i.e., large JREs). This may apply especially to the shoulder landmark when a loose-fitting shirt was worn; because the shoulder is used only to calculate the hip angle, this could explain why proportional bias was present only in the hip Bland–Altman plot. Proportional bias might be prevented by extending the neural network models with more frames labeled at the moment of reproduction in trials with a large JRE. Another reason for differences in JREs measured with VICON and DeepLabCut could be that the visual appearance (such as clothing and posture) of some participants differed from that of the participants whose videos were used for network training. This could lead to inaccurate DeepLabCut tracking of the joints for these participants.
Increasing the variation in appearance in the video footage used for network training (e.g., clothing and posture of the participant and examiner, background, light conditions) is expected to increase the accuracy of DeepLabCut tracking, potentially leading to smaller differences between the JREs measured with VICON and DeepLabCut. However, the effect of environmental factors (such as lighting and background) on DeepLabCut's performance still needs to be explored.

In the current study, the visual appearance also included the skin-mounted markers that are visible in the video footage. However, the markers were not used for labeling in DeepLabCut, as the marker locations differ from the DeepLabCut anatomical landmarks. Tracking the joints with DeepLabCut should also work without skin-mounted markers visible in the video footage. Ideally, however, if only markerless tracking (without marker-based tracking) is used in future work to determine joint angles, video footage of persons without skin-mounted markers should also be used for network training. Future work should also consider applying data augmentation methods (e.g., rotation, scaling, mirroring), as these increase dataset diversity without additional data collection and are therefore expected to improve the robustness and generalization of the deep learning model.
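To illustrate the suggested augmentation, the sketch below applies mirroring, scaling and rotation to landmark coordinates. In practice the same transform must also be applied to the image pixels, and a dedicated augmentation library would normally be used; this label-only version and its helper names are hypothetical. Because all three transforms preserve angles, the 2D joint angles computed from the augmented landmarks remain unchanged, so the labels stay valid.

```python
import math


def mirror(points, width):
    """Horizontal flip for an image `width` pixels wide (x -> width - 1 - x)."""
    return {k: (width - 1 - x, y) for k, (x, y) in points.items()}


def scale(points, factor, center):
    """Uniform scaling about `center`."""
    cx, cy = center
    return {k: (cx + factor * (x - cx), cy + factor * (y - cy)) for k, (x, y) in points.items()}


def rotate(points, degrees, center):
    """Rotation by `degrees` about `center` (standard 2D rotation matrix)."""
    th = math.radians(degrees)
    c, s = math.cos(th), math.sin(th)
    cx, cy = center
    return {
        k: (cx + c * (x - cx) - s * (y - cy), cy + s * (x - cx) + c * (y - cy))
        for k, (x, y) in points.items()
    }


labels = {"hip": (100.0, 200.0), "knee": (200.0, 200.0), "ankle": (200.0, 300.0)}
augmented = rotate(scale(mirror(labels, width=640), 1.1, (320, 240)), 10, (320, 240))
print(augmented)
```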

Furthermore, by extending the neural network models, fewer trials would be expected to be excluded because of low confidence in the predicted coordinates of the anatomical landmarks. However, the dataset of the current study was too limited to further extend the neural network models (with more variation in the labeled frames), because videos of trials used for network training were excluded from the statistical analyses. One reason for excluding trials was that one or more joints were not visible in the video recording. Some practice is required for the examiner to master a posture in which the participant's joints remain clearly visible in the video. The visibility of the participant's joints could be increased by using active proprioception assessment methods (e.g., active JPR assessment and the active movement extent discrimination assessment (AMEDA)). Active proprioception assessment methods would also increase the ecological validity of the assessment. However, active methods increase the risk of out-of-plane movements, which could cause differences between the JREs measured by the two systems: JREs based on two-dimensional joint angles will be inaccurate when a person does not move within a single plane. Differences in JREs between the two systems caused by out-of-plane movements cannot be resolved by extending the neural network models, but require the measurement of three-dimensional joint angles. In the current study, typically developing children performed passive JPR tasks while sagittal videos were recorded (i.e., a camera on a tripod was placed perpendicular to the movement direction, in line with the flexion-extension axis), which minimized the chance of out-of-plane movements.
When JPR tasks are performed in other populations (such as children with cerebral palsy) and/or under active testing conditions, more out-of-plane movements are expected. Therefore, measuring three-dimensional joint angles should be considered when out-of-plane movements are expected. The validity and reliability of DeepLabCut for measuring JREs based on three-dimensional joint angles still need to be assessed.

Further research is needed to assess the validity of DeepLabCut for measuring the JRE in joints other than the knee and hip (such as the ankle and upper extremity joints) and in other movement planes (such as the frontal plane, for joint adduction-abduction). Detecting proprioceptive deficits by assessing proprioceptive function is relevant not only in typically developing children, but also in athletes, older adults and people with disabilities. In these populations, poorer proprioception indicates a higher risk of sports injury [34], can increase the likelihood of falls and balance problems [35,36] and can negatively affect movement control [37,38,39,40,41,42]. Deep-learning-based motion capture techniques could identify these proprioceptive deficits and thereby facilitate the implementation of proprioception assessment in clinical and sports settings, as they require neither markers nor a laboratory setting.