Abstract

This review evaluates the application of artificial intelligence (AI), particularly neural networks, in diagnosing and staging periodontal diseases through radiographic analysis. Using a systematic review of 22 studies published between 2017 and 2024, it examines various AI models, including convolutional neural networks (CNNs), hybrid networks, generative adversarial networks (GANs), and transformer networks. The studies analyzed diverse datasets from panoramic, periapical, and hybrid imaging techniques, assessing diagnostic accuracy, sensitivity, specificity, and interpretability. CNN models like Deetal-Perio and YOLOv5 achieved high accuracy in detecting alveolar bone loss (ABL), with F1 scores up to 0.894. Hybrid networks demonstrate strength in handling complex cases, such as molars and vertical bone loss. Despite these advancements, challenges persist, including reduced performance in severe cases, limited datasets for vertical bone loss, and the need for 3D imaging integration. AI-driven tools offer transformative potential in periodontology by rivaling clinician performance, improving diagnostic consistency, and streamlining workflows. Addressing current limitations with large, diverse datasets and advanced imaging techniques will further optimize their clinical utility. AI stands poised to revolutionize periodontal care, enabling early diagnosis, personalized treatment planning, and better patient outcomes.

1. Introduction

Periodontitis is a disease affecting the supporting tissues of the tooth, characterized by microbial infection and a host-related inflammatory response, which results in loss of periodontal attachment. A second typical feature of the disease is marginal alveolar bone loss [1]. It is the sixth most prevalent disease in the world and the second most prevalent oral disease (after dental caries), with a worldwide prevalence of 10.8 percent, corresponding to 743 million cases [2]. The outcome of the disease, namely tooth loss, therefore has a decisive impact on the individual’s life and also represents a major public health problem.

In recent years, the study of this condition has evolved, leading to improved treatment techniques and more precise methods of classification and diagnosis [3]. As with other diseases affecting the oral cavity, prevention and early diagnosis are key to halting progression.

According to the new classification for staging and grading periodontal disease [4], radiographic visualization of alveolar bone loss (ABL) represents, after clinical assessment of attachment loss, a key parameter for diagnosing the early stages of disease. Periodontal probing, currently the gold standard for diagnosing early clinical attachment loss (CAL), is often unreliable when conducted by general or inexperienced dentists. The development of advanced imaging technologies and the integration of artificial intelligence-based decision-support systems could benefit clinical practice in the early diagnosis of stage I periodontitis [1].

Artificial intelligence (AI) is becoming increasingly widespread in various fields. In the medical and dental fields, this technology is mainly used to develop decision-support systems (DSS), which are intended to assist the clinician in evaluating a radiographic examination and, consequently, in formulating a diagnosis [5]. Among the various types of AI, we considered the subset of deep learning (DL) algorithms in this study. These models, which have become increasingly popular in recent years compared to other forms of AI, can learn complex information directly from the data provided to them and, on the basis of these data, build predictive models of varying accuracy that are potentially applicable to clinical practice. This applies especially to the segmentation of regions of interest (ROI), a crucial step in the workflow of AI algorithms for pathology classification and staging [6]. Recent developments in an alternative segmentation method using a mathematical model for biomedical applications are shown in [7]. Over the years, several types of these models, called neural networks, have been developed. Specifically, we can distinguish between convolutional neural networks (CNNs) [8], generative adversarial networks (GANs) [9], transformer neural networks [10], and hybrid neural networks [11]. All of these types of neural networks have been widely used in medicine for several years to aid in the diagnosis of a wide range of diseases [12,13]. More recently, neural networks have also been increasingly used in dental research, with promising results in several areas.

In cariology [14,15], a DL algorithm was applied to evaluate third molar lesions on orthopantomograms (OPTs). The proposed method achieved an accuracy of 87%, a sensitivity of 86%, and a specificity of 88%, proving quite accurate in detecting caries on third molars. In oral surgery [16,17,18,19,20], neural networks have been used to evaluate the relationship between the lower third molar and the mandibular canal and to predict mandibular nerve paresthesia after lower third molar surgery. Lo Casto et al. [16] compared two CNNs for evaluating the contact between the lower third molar roots and the mandibular canal. They also compared the networks’ performance with that of a dental student, who underperformed both CNNs. In oral medicine, Yang et al. [21] compared the diagnostic performance of a CNN with that of a group of oral surgeons and a group of general dentists. They used 1603 orthopantomograms divided into four groups (dentigerous cyst, ameloblastoma, keratocyst, no lesion) based on the histopathological report of the lesion. The results showed little difference between the diagnostic performance of the algorithm and that of clinicians [22,23]. In oral implantology, a pilot study by Takahashi et al. [24] evaluated whether a CNN could recognize, from radiographic images, the model of an implant placed in the bone. A total of 1282 orthopantomograms with implants of six different models were used, and the ability of the DL algorithm to detect the implants on the radiograph and discriminate between them was evaluated. The results were accurate but could be improved by training the CNN on a larger image dataset that includes other commercially available implant models. In dental traumatology, Fukuda et al. [25] used a CNN to assess vertical root fracture on OPTs. In endodontics, Hiraiwa et al. [26] studied the performance of a DL system in detecting, on orthopantomograms, the presence of a second distal root in the lower first molar. Comparing the performance of the system with that of two experienced radiologists, they found that the software was more accurate in assessing this anatomical variant. In orthodontics and gnathology, Lee et al. [27] applied a CNN to detect the bony changes underlying osteoarthritis of the temporomandibular joint on cone-beam computed tomography (CBCT) scans. Their results suggest that this model could serve as a diagnostic support tool in the future. Finally, another study by Lee et al. [28] employed a convolutional neural network to automatically identify cephalometric landmarks on lateral cephalograms, with the aim of reducing inter-operator variability and standardizing the cephalometric tracings underlying orthodontic treatment.

Several studies on artificial intelligence applications in periodontology have also been published in recent years, with results that differ depending on the task performed and the type of neural network used. This literature review aims to describe the current applications of artificial intelligence in periodontology. Specifically:

- A. To identify which of these types of neural networks (CNN; GAN; hybrid NN; transformer NN) are most widely used to date.

- B. To understand how these techniques can improve the early diagnosis of CAL and ABL in periodontal patients.

2. Materials and Methods

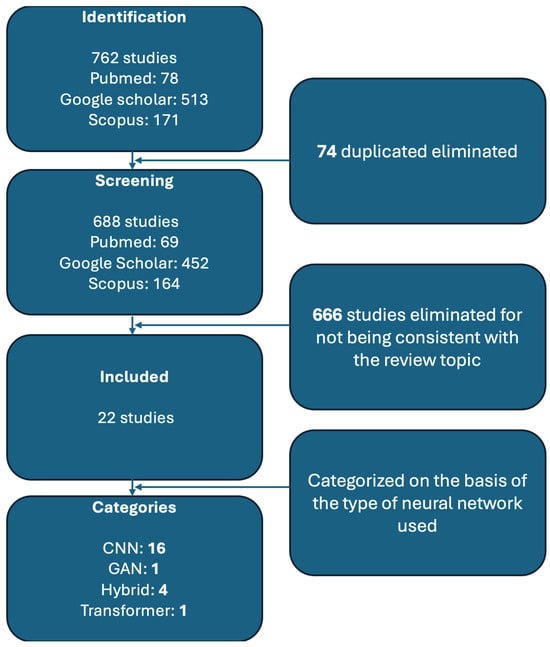

Various studies have been conducted in periodontology to determine whether alveolar bone loss can be detected by artificial intelligence. We performed a systematic search on PubMed, Google Scholar, and Scopus. The following keywords were used: “periodontitis” AND (“CNN” OR “Generative Adversarial Network” OR “Transformer Network” OR “Hybrid Network”) AND “alveolar bone loss”. A total of 762 studies were initially identified (PubMed = 78, Google Scholar = 513, Scopus = 171). A total of 74 duplicates were identified and removed, leaving 688 papers. The titles and abstracts of the remaining studies were reviewed to assess their relevance to the topic. Studies that did not focus on AI applications in periodontitis diagnosis and ABL assessment were excluded, as were studies that mentioned AI but did not use neural networks. At this stage, 666 studies were removed for not being consistent with the review topic. A full-text review of the remaining 22 studies was then performed, assessing methodological quality, dataset size, AI method used, and relevance to the clinical application of periodontal bone loss detection.

To be included in the review, studies had to meet the following criteria: publication date between 2017 and 2024; neural network employed; radiographic images used for detecting ABL or periodontal disease-related bone defects; and AI performance metrics reported. Studies were excluded if they met any of the following conditions: non-AI-based approach; non-relevant clinical application; or insufficient reporting of performance metrics. After final inclusion, the selected studies were categorized based on the type of neural network architecture used. The process of selecting, screening, and categorizing papers is represented in Figure 1.

Figure 1.

Flowchart describing the various stages of research.
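The record counts reported for each screening stage can be checked with a few lines of arithmetic. The sketch below is only an illustrative consistency check of the numbers given in the text; the function name `screening_flow` and the dictionary keys are hypothetical and not part of the original methodology.

```python
# Record counts as reported in the text for the database search.
identified = {"PubMed": 78, "Google Scholar": 513, "Scopus": 171}
duplicates_removed = 74
excluded_on_title_abstract = 666


def screening_flow(identified, duplicates_removed, excluded):
    """Return the number of records remaining after each screening stage."""
    total = sum(identified.values())
    after_deduplication = total - duplicates_removed
    full_text_reviewed = after_deduplication - excluded
    return {
        "identified": total,
        "after_deduplication": after_deduplication,
        "full_text_reviewed": full_text_reviewed,
    }


flow = screening_flow(identified, duplicates_removed, excluded_on_title_abstract)
print(flow)
```

The three resulting counts (762, 688, and 22) match the figures stated in the search description.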

In selecting the studies, we considered the type of radiographic images used, dataset size, image resolution, type of AI used, assessment of alveolar bone loss, reference test, and availability of code. The most commonly used images were OPTs (n = 12), followed by intraoral periapical radiographs (n = 10). The number of images in the datasets ranged from 12 to 103,909, and the resolution ranged from 128 × 128 up to 148 × 2276. The AI methods were convolutional neural networks (n = 16), hybrid networks (n = 4), a transformer network (n = 1), and a generative adversarial network (n = 1). Alveolar bone loss was assessed as a percentage (n = 6), as present or absent (n = 6), by stage (mild, moderate, and severe) (n = 7), or by CAL measurement (n = 3). Different solutions were chosen as reference tests and for creating the labels assigned to the images used in each study.

Table 1 presents an overview of these studies.

Table 1.

Current applications of AI in periodontology.

We divided the selected studies based on the type of artificial intelligence used: convolutional neural network; hybrid neural network; generative adversarial network; and transformer network.

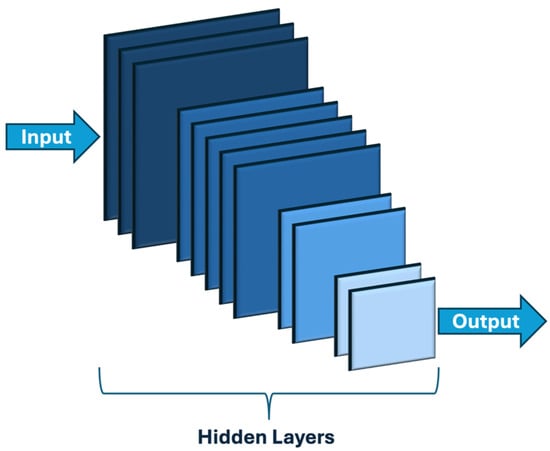

CNNs, whose structure is shown in Figure 2, are among the oldest and most popular DL models, mainly used for image recognition tasks through supervised learning.

Figure 2.

Convolutional neural network general structure.
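As a rough illustration of the operation that gives CNNs their name, the sketch below applies a single hand-picked filter to a tiny image patch and passes the result through a ReLU activation. It is a didactic example only: the patch, the kernel values, and the function names are assumptions, and in a real CNN the kernel weights are learned during supervised training rather than fixed by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding.

    Both arguments are lists of rows. As in most DL frameworks, the kernel
    is not flipped, so this is strictly a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Set negative responses to zero, keeping positive ones unchanged."""
    return [[max(0.0, value) for value in row] for row in feature_map]


# Toy 4 x 4 patch: dark on the left (0), bright on the right (1).
patch = [[0, 0, 1, 1] for _ in range(4)]
# Hypothetical kernel that responds to dark-to-bright vertical edges.
edge_kernel = [[-1, 1], [-1, 1]]

features = relu(conv2d_valid(patch, edge_kernel))
print(features)
```

The feature map is non-zero only in the column where the intensity changes, which is the kind of local pattern (for example, a bone margin) that stacked convolutional layers can learn to detect.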

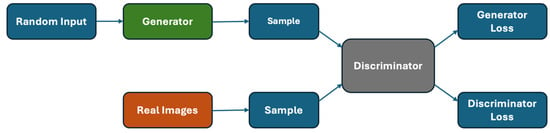

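GANs (Figure 3), introduced in 2014 [9], were among the first models used for generative AI. A GAN consists of two neural networks, a generator and a discriminator, that are trained in competition with each other: the generator produces synthetic samples, while the discriminator tries to distinguish them from real data.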

Figure 3.

Generative adversarial network general structure.

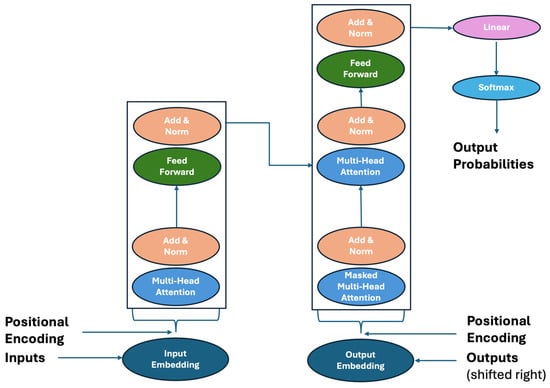

Transformer networks are a further evolution of neural networks, first introduced in 2017 by Google Brain [10]. To date, their use is mainly aimed at natural language processing (NLP), a field of computer science that aims to improve the ability of computers to recognize human language. Figure 4 shows their structure.

Figure 4.

Transformer neural network representation.

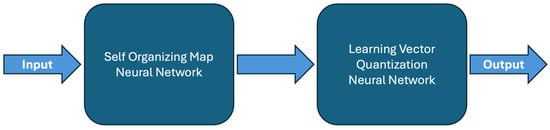

Hybrid neural networks (Figure 5) combine two different types of neural networks in their structure to take advantage of the benefits of both [11].

Figure 5.

Hybrid neural network structure.

2.1. Convolutional Neural Network (CNN)

Several studies have explored CNN-based approaches for detecting and quantifying periodontal bone loss (PBL) in panoramic and periapical radiographs. Krois et al. [29] developed a seven-layer CNN trained on 2001 image segments, which achieved an accuracy of 0.81 and outperformed human clinicians in specificity while slightly lagging in sensitivity. The study highlighted the potential of AI to standardize periodontal diagnostics, reducing inter-examiner variability. However, the model’s performance discrepancies between different tooth types underscored the need for further refinement, particularly for complex cases involving incisors. In comparison, Kim et al. [30] introduced DeNTNet, a multi-phase DL model incorporating transfer learning and region-of-interest (ROI) segmentation to enhance lesion detection and tooth classification. DeNTNet outperformed human clinicians with an F1 score of 0.75 versus 0.69, demonstrating that structured training approaches significantly impact model efficacy. The inclusion of an ablation study further reinforced the importance of each model component, particularly segmentation and knowledge-driven transfer learning, in optimizing diagnostic precision. Beyond basic CNN models, advanced architectures such as VGG-19, Mask R-CNN, and YOLO have been explored for periodontal assessment.

Lee et al. [31] employed a VGG-19-based CNN to predict the extraction probability of periodontally compromised teeth (PCT), achieving an accuracy of 81% for premolars and 76.7% for molars. The study revealed that tooth morphology influences diagnostic performance, with premolars exhibiting better predictive accuracy due to their simpler anatomical structure.

Meanwhile, Li et al. [32] introduced Deetal-Perio, a Mask R-CNN-based system integrating segmentation and alveolar bone loss (ABL) calculation, achieving F1 scores of 0.894 and 0.820 across two datasets. The study demonstrated that hybrid approaches combining segmentation and machine learning classifiers, such as XGBoost, could improve interpretability and diagnostic consistency. Similarly, YOLO-based models have gained traction for their real-time detection capabilities. Uzun Saylan et al. [33] applied YOLOv5 to detect ABL in panoramic radiographs, emphasizing its effectiveness in localized segmentation, particularly for maxillary incisors. The study highlighted the need for larger datasets and 3D imaging integration to further enhance precision.

Alotaibi et al. [34] extended CNN applications by using VGG-16 for classifying ABL severity in periapical radiographs, achieving 73% accuracy in binary classification but struggling with multi-class staging. This limitation suggests that while CNNs effectively detect PBL, they require additional enhancements to accurately determine disease severity. Another avenue of exploration involves super-resolution techniques for improving radiographic image quality. Moran et al. [35] assessed the use of SRCNN and SRGAN for enhancing resolution in periodontal radiographs, demonstrating improved contrast and edge definition. However, while these enhancements benefited visual assessment, they introduced inconsistencies in classification accuracy, with artifacts sometimes disrupting CNN performance. This finding underscores the necessity for balanced pre-processing techniques that enhance image quality without compromising diagnostic integrity.

In terms of automated measurement techniques, landmark-based approaches have been explored for PBL quantification.

Lin et al. [36] developed an automated system for localizing the cementoenamel junction (CEJ), alveolar crest (ALC), and apex (APEX), achieving 90% localization accuracy within ~0.44 mm deviation. More than half of the system’s ABL measurements deviated less than 10% from the ground truth, showcasing its potential for early diagnosis and treatment monitoring. Similarly, Tsoromokos et al. [37] employed a CNN model to estimate ABL as a percentage of root length, achieving moderate reliability (ICC = 0.601), with higher accuracy for non-molars (ICC = 0.763) but reduced performance in severe ABL cases. These studies emphasize the importance of precise anatomical landmark localization in automated periodontal assessment.
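To make the landmark-based measurement concrete, the following sketch computes bone loss as a percentage of root length from three landmark coordinates. It is a simplified illustration and not the method of any cited study: the function names, the use of straight-line distances between the CEJ, alveolar crest, and apex, and the example coordinates are all assumptions, and no physiological CEJ-to-crest offset is subtracted.

```python
import math


def distance(p, q):
    """Euclidean distance between two (x, y) points, e.g. in millimetres."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def abl_percentage(cej, alc, apex):
    """Bone loss as a percentage of root length.

    Assumption: bone loss is the CEJ-to-alveolar-crest distance and root
    length is the CEJ-to-apex distance, both measured as straight lines.
    """
    root_length = distance(cej, apex)
    if root_length == 0:
        raise ValueError("CEJ and apex coincide; root length is zero")
    return 100.0 * distance(cej, alc) / root_length


# Hypothetical landmark coordinates for one root surface.
cej = (10.0, 20.0)
alc = (10.0, 23.0)
apex = (10.0, 32.0)

print(abl_percentage(cej, alc, apex))  # 3 / 12 of the root length -> 25.0
```

Because the result depends directly on the three coordinates, small localization errors in any landmark propagate into the ABL percentage, which is why localization accuracy is reported alongside measurement deviation.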

Among the most innovative contributions is PDCNN, introduced by Kong et al. [38], which employs a two-stage CNN with anchor-free encoding for radiographic bone loss (RBL) diagnosis. PDCNN outperformed Faster R-CNN and YOLOv4, achieving an accuracy of 0.762 while maintaining real-time processing speeds (39.4 FPS). The model’s efficiency highlights the potential of optimizing detection algorithms for clinical applications, reducing the computational burden without sacrificing diagnostic accuracy.

Similarly, Chen et al. [39] integrated YOLOv5, VGG-16, and U-Net to develop a model capable of segmenting and measuring PBL across 8000 images. With an accuracy of 97.0% for RBL detection, the model surpassed human diagnostic performance but required further refinement to ensure robustness across diverse clinical settings.

Further extending DL applications, Bayrakdar et al. [40] utilized a U-Net model to detect horizontal and vertical bone loss, achieving strong performance for total (AUC = 0.951) and horizontal loss (AUC = 0.910) but lower for vertical loss (AUC = 0.733). The disparity in detection performance for different bone loss types underscores the challenge of developing models that generalize well across all clinical scenarios.
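For readers less familiar with the AUC values quoted above, the sketch below computes the area under the ROC curve from its rank-based definition: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The function name and the example labels and scores are illustrative assumptions and are unrelated to the data of the cited study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    `labels` holds 1 for bone loss present and 0 for absent; `scores` holds
    the model's predicted probabilities for the same cases.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present to compute AUC")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical predictions for six radiographs.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]

auc = roc_auc(labels, scores)
print(round(auc, 3))  # 8 of 9 positive-negative pairs are ranked correctly
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, so a drop from 0.910 to 0.733 indicates that considerably more positive and negative cases are ranked in the wrong order.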

In another study, Chen et al. [41] combined U-Net and Mask R-CNN to enable precise segmentation and keypoint localization for bone loss ratio measurement. Diagnostic accuracy was highest for stage III (97%) and lower for stages I and II. The model reached sensitivity, specificity, and F-measure values of 0.84, 0.88, and 0.81, respectively, suggesting it can effectively support periodontal diagnosis and reduce clinician workload. Similarly, Thanathornwong et al. [42] employed Faster R-CNN for detecting periodontally compromised teeth in panoramic radiographs, achieving a sensitivity of 0.84 and a specificity of 0.88, highlighting its potential in clinical decision support. However, as with many DL models, the study noted challenges in interpretability, a critical barrier to widespread clinical adoption.

Another noteworthy study by Ayyildiz et al. [43] compared various DL models for classifying periodontal disease stages, identifying DenseNet121 with global average pooling (GAP) and the minimum redundancy maximum relevance (mRMR) feature selection method as the most effective combination. Achieving a classification accuracy of 90.7%, this approach demonstrated the potential of feature selection techniques in optimizing model performance while reducing computational complexity.

Lastly, Lin et al. [44] applied Mask R-CNN and YOLOv8 for periodontal disease analysis, achieving 97.01% accuracy in tooth detection and high segmentation performance for teeth, bones, and crowns. Their study emphasized the importance of preprocessing techniques, such as CLAHE image enhancement, in improving model precision and reducing diagnostic time.

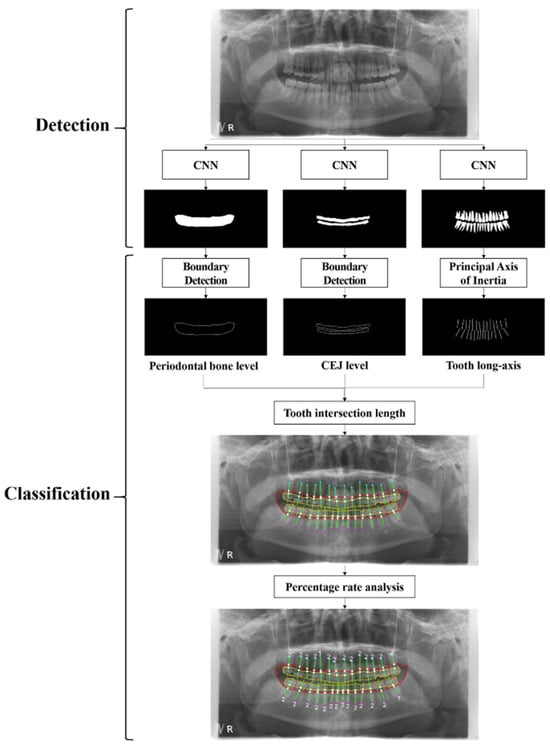

2.2. Hybrid Neural Network

Four studies used hybrid neural networks. Chang et al. [45] developed a hybrid DL method to diagnose periodontal bone loss and stage periodontitis using panoramic radiographs (Figure 6). DL was applied to detect the radiographic bone level and the CEJ level for the entire jaw, while a percentage-based analysis of bone loss relative to the tooth long axes enabled classification of periodontal bone loss in line with the 2017 World Workshop periodontitis staging criteria. The CNN identified bone and CEJ levels with a pixel accuracy of 0.92. Compared with radiologist diagnoses, the method achieved a Pearson’s correlation coefficient of 0.73 and an intraclass correlation value of 0.91 (p < 0.01), demonstrating high accuracy and reliability. While effective overall, staging of incisors and molars was less accurate because of their anatomical complexity. This hybrid framework shows promise for automatic, consistent periodontitis diagnosis and staging, although further research on larger datasets and complex cases is needed for broader clinical application.

Figure 6.

Complete process for detecting and categorizing periodontal bone loss using a hybrid framework that combines the traditional CAD technique with DL architecture. Reprinted from Ref. [45]. Copyright 2021, Scientific Reports. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY 4.0) license.
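The final step of such a pipeline, mapping a percentage of radiographic bone loss to a stage, can be sketched as a simple threshold rule. The cut-offs used below (less than 15% for stage I, 15% to 33% for stage II, and beyond the coronal third for stage III or IV) are the radiographic criteria of the 2017 classification as they are commonly summarized; they are stated here as an assumption, since the text does not list them, and the function name is hypothetical. Radiographic bone loss alone does not separate stage III from stage IV, so the sketch returns a combined label.

```python
def stage_from_rbl(percent_bone_loss):
    """Map radiographic bone loss (% of root length) to a periodontitis stage.

    Assumed thresholds: < 15% -> stage I, 15-33% -> stage II,
    > 33% -> stage III or IV. The function assumes periodontitis has
    already been diagnosed and only the stage remains to be assigned.
    """
    if not 0 <= percent_bone_loss <= 100:
        raise ValueError("bone loss must be a percentage between 0 and 100")
    if percent_bone_loss < 15:
        return "I"
    if percent_bone_loss <= 33:
        return "II"
    return "III/IV"


# Hypothetical per-tooth measurements; a patient is usually staged by the
# most severely affected tooth.
measurements = [8.0, 21.5, 40.2]
print([stage_from_rbl(m) for m in measurements])  # ['I', 'II', 'III/IV']
print(stage_from_rbl(max(measurements)))          # 'III/IV'
```

Because the stage boundaries are hard thresholds, a measurement error of a few percentage points near 15% or 33% is enough to change the assigned stage, which may partly explain why staging accuracy is lower for anatomically complex teeth.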

Xue et al. [46] presented a DL model to diagnose periodontal bone loss and stage periodontitis in dental panoramic radiographs. The study developed a CNN-based ensemble using YOLOv8, Mask R-CNN, and TransUNet models for tooth detection, segmentation, and bone loss measurement. It was tested on 320 radiographs with 8462 teeth collected between January 2020 and December 2023. The DL method demonstrated a periodontal bone loss degree deviation of 5.28%, a Pearson’s correlation coefficient of 0.832 (p < 0.001), and an intraclass correlation value of 0.806 (p < 0.001). The model showed an 89.45% diagnostic accuracy, closely aligning with professional diagnoses. The method holds promise as a reliable aid for dentists, especially in resource-limited settings, and supports consistent periodontal assessments.
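Agreement between model and clinician measurements is often summarized with Pearson’s correlation coefficient, as in the studies above. The following sketch shows how the coefficient is computed from paired measurements; the function name and the example values are illustrative assumptions and are not taken from any cited dataset.

```python
import math


def pearson_r(x, y):
    """Pearson's correlation coefficient between two paired samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two samples of equal length with n >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical bone loss percentages for the same teeth.
model_estimates = [10.0, 20.0, 30.0, 40.0]
clinician_values = [12.0, 18.0, 33.0, 41.0]

print(round(pearson_r(model_estimates, clinician_values), 3))
```

A high correlation shows that the two sets of measurements rise and fall together, but it does not rule out a systematic offset between them, which is why correlation is usually reported together with a deviation or intraclass correlation value.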

Ertas et al. [47] developed a machine learning-based decision system for staging and grading periodontitis using clinical and radiographic data. Algorithms such as decision trees, random forests, and support vector machines (SVM) were tested, achieving up to 98.6% accuracy. For image-only staging, a hybrid model of ResNet50 and SVM reached 88.2% accuracy. Despite lower accuracy in grading from images, the model offers a promising tool for aiding clinicians, especially for complex diagnoses, with plans for further optimization. Finally, Kim et al. [48] introduced an unsupervised few-shot learning model using a UNet architecture and a convolutional variational autoencoder (CVAE) for periodontal disease diagnosis in dental radiographs, addressing the scarcity of labeled data. In this framework, UNet extracts regions of interest, while the CVAE clusters features. Validation against traditional supervised learning and autoencoder-based clustering showed a significant improvement in diagnostic accuracy and efficiency: on three real-world validation datasets, the UNet-CVAE model achieved 14% higher accuracy than supervised models trained with 100 labeled images. This framework is promising for data-scarce clinical applications.

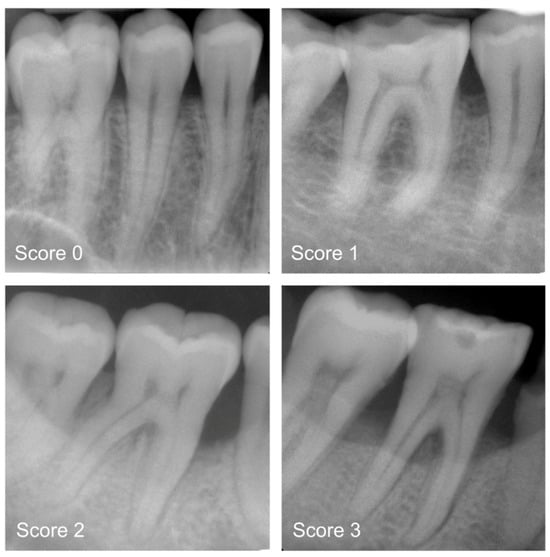

2.3. Transformer Neural Network

Only one study has incorporated transformer networks into periodontology. Dujic et al. [49] evaluated Vision Transformer networks for automated periodontal bone loss (PBL) detection in periapical radiographs, comparing five models (ViT-base, ViT-large, BEiT-base, BEiT-large, and DeiT-base) on accuracy (ACC), sensitivity (SE), specificity (SP), positive/negative predictive value (PPV/NPV), and area under the ROC curve (AUC). Using 21,819 anonymized radiographs labeled by dentists, the models achieved high diagnostic accuracy (83.4–85.2%) and AUC scores (0.899–0.918). Performance was best on mandibular teeth (94.1–96.7% accuracy for anterior teeth) and lower for maxillary posterior teeth (Figure 7). These Vision Transformers demonstrated promising diagnostic consistency, though further improvements are suggested, such as expanding datasets and incorporating precise region annotations for increased clinical applicability.

Figure 7.

Periapical radiograph pictures for the following categories: mild radiographic periodontal bone loss (Score 1), moderate radiographic periodontal bone loss (Score 2), severe radiographic PBL extending to the mid-third of the root and beyond (Score 3), and healthy periodontium (Score 0). Reprinted from Ref. [49]. Copyright 2023, Diagnostics. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY 4.0) license.
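The diagnostic metrics used throughout the reviewed studies (ACC, SE, SP, PPV, NPV, and the F1 score) all derive from the four cells of a binary confusion matrix. The sketch below shows these standard definitions; the function name and the example counts are hypothetical and do not come from any of the cited studies.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard binary diagnostic metrics from confusion-matrix counts.

    tp/fn: diseased cases correctly/incorrectly classified;
    tn/fp: healthy cases correctly/incorrectly classified.
    """
    def ratio(numerator, denominator):
        # Return NaN instead of failing when a metric is undefined.
        return numerator / denominator if denominator else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
    }


# Hypothetical test set: 100 radiographs with bone loss, 100 without.
metrics = diagnostic_metrics(tp=80, fp=10, tn=90, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Unlike sensitivity and specificity, PPV, NPV, and accuracy depend on the proportion of diseased cases in the test set, so values reported on datasets with different class balances are not directly comparable.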

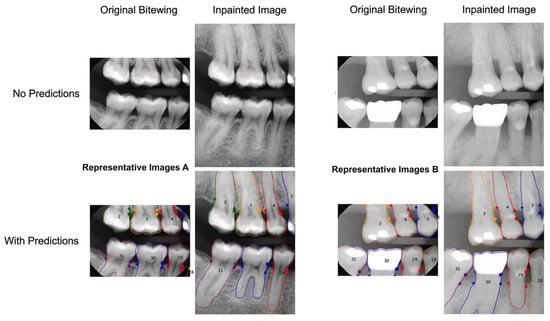

2.4. Generative Adversarial Network

GANs were used by Kearney et al. [50], who introduced a GAN inpainting method with partial convolutions to improve the prediction of clinical attachment levels (CAL) in periodontitis using dental radiographs (Figure 8). This approach compensates for the limited field of view of bitewing radiographs, which often miss anatomy critical for CAL assessment. The dataset, comprising 80,326 training images, 12,901 validation images, and 10,687 comparison images, was collected between 1 July 2016 and 30 January 2020. Statistical analyses, including mean bias error (MBE), mean absolute error (MAE), and Dunn’s pairwise test, were used to evaluate prediction accuracy. The results showed a significant improvement in CAL prediction accuracy with inpainted methods (MAE of 1.04 mm) compared to non-inpainted methods (MAE of 1.50 mm). The best-performing inpainted methods outperformed their non-inpainted counterparts, achieving a Dunn’s pairwise value of −63.89. The model demonstrated clinically acceptable accuracy within 1 mm, aiding in consistent periodontal disease diagnosis and treatment planning.

Figure 8.

Images A and B, which represent technique 1 without and with inpainting, respectively, show no radiographic bone loss and considerable radiographic bone loss. The original x-rays are displayed on the left, while the inpainted images with the outlines of the apical bone and teeth are displayed on the right. No predictions are shown in the top row, while forecasts are shown in the bottom row. The apical bone placements indicate CAL, while the points indicate the CEJ. Reprinted from Ref. [50]. Copyright 2022, Journal of Dentistry. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY 4.0) license.
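The two error measures used in this evaluation, mean bias error (MBE) and mean absolute error (MAE), can be illustrated with a short sketch. The function names and the example CAL values are assumptions chosen for illustration; they are not data from the cited study.

```python
def mean_bias_error(predicted, reference):
    """Average signed error; positive values indicate overestimation."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(p - r for p, r in zip(predicted, reference)) / len(predicted)


def mean_absolute_error(predicted, reference):
    """Average magnitude of the error, ignoring its direction."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


# Hypothetical CAL values in millimetres for four sites.
predicted_cal = [3.0, 4.5, 2.0, 6.0]
clinical_cal = [2.5, 5.0, 3.0, 5.0]

print(mean_bias_error(predicted_cal, clinical_cal))      # 0.0
print(mean_absolute_error(predicted_cal, clinical_cal))  # 0.75
```

In this example the over- and underestimates cancel, giving an MBE of zero despite an MAE of 0.75 mm, which is why the two measures are reported together: MBE reveals systematic bias, whereas MAE reflects the typical size of the error.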

3. Discussion

The integration of artificial intelligence (AI) into periodontology, particularly through neural networks, has marked a significant leap in the accuracy and efficiency of diagnosing periodontal diseases. AI systems have shown remarkable capabilities in detecting alveolar bone loss (ABL) and clinical attachment loss (CAL), two critical parameters for diagnosing and staging periodontal conditions [1,4,51]. The success of AI models like convolutional neural networks (CNNs), hybrid neural networks, and generative adversarial networks (GANs) reflects the transformative potential of these tools in modern dental practice.

For instance, Krois et al. [29] developed a seven-layer CNN that achieved 81% accuracy, sensitivity, and specificity in detecting ABL from panoramic radiographs, comparable to experienced dentists (76%). Similarly, Kim et al. [30] introduced DeNTNet, which employs transfer learning and Grad-CAM interpretability, achieving an F1 score of 0.75, outperforming the clinicians’ score of 0.69, and demonstrating superior sensitivity and specificity in complex imaging scenarios. The study by Li et al. [32] on Deetal-Perio demonstrated its capability to segment and number teeth while calculating ABL using Mask R-CNN, achieving F1 scores of 0.894 and 0.820 across two datasets. Its integration of clinical interpretability further highlights its utility in assisting clinicians. Uzun Saylan et al. [33] highlighted YOLOv5’s ability to perform localized segmentation, excelling at identifying bone loss in specific dental regions such as incisors, with high sensitivity and precision. In addition to CNN-based approaches, hybrid models have shown promise in combining the strengths of various AI methods. Chang et al. [45] introduced a hybrid DL system that achieved 92% pixel accuracy in identifying bone levels and staging periodontitis, showing strong correlations with expert diagnoses. This model, while effective overall, faced challenges in staging molars and incisors due to anatomical complexity. Advanced imaging techniques were further explored by Moran et al. [35], who used super-resolution CNNs to enhance radiograph visual clarity, improving bone loss identification and boosting diagnostic confidence. Chen et al. [39] demonstrated a hybrid model combining YOLOv5, VGG-16, and U-Net, achieving up to 97% accuracy in detecting radiographic bone loss, surpassing traditional clinician accuracy.

However, challenges remain, particularly in addressing anatomical variability, molars with complex root structures, and vertical bone loss. Bayrakdar et al. [40] reported reduced performance in detecting vertical bone loss (AUC = 0.733) compared to horizontal loss (AUC = 0.910), emphasizing the need for robust datasets and 3D imaging integration. Similarly, Tsoromokos et al. [37] reported moderate reliability (ICC = 0.601) in ABL estimation, with improved performance on non-molar teeth. These results illustrate the current strengths and limitations of artificial intelligence in periodontal diagnostics. Future efforts should prioritize creating larger, more diverse datasets, incorporating 3D imaging modalities such as cone-beam computed tomography (CBCT), and refining algorithms for complex cases. At present, studies that apply deep learning algorithms to CBCT scans are not widespread because this examination is not indicated for the diagnosis of periodontal disease, which instead relies on 2D imaging techniques such as intraoral bitewing radiographs [1,51,52]. Expanding interpretability tools and integrating clinical parameters will further enhance trust and usability among clinicians. The path forward involves several key developments. First, expanding datasets to include diverse patient demographics, bone and dental anatomical complexities, and advanced imaging modalities will be critical for refining AI algorithms. Many studies highlight the need for larger, multi-institutional datasets, and multicenter trials are needed to reduce bias and to test the stability and reliability of AI models. In addition, more advanced algorithmic developments are necessary to improve the recognition of complex cases, including severe periodontal disease and anatomically challenging structures. 
Current CNN-based models often struggle with such cases due to their limited ability to capture subtle variations in anatomical structures and disease progression. Refining these models by incorporating hybrid deep learning techniques, such as transformer-based architectures and multi-scale feature extraction methods, could enhance diagnostic performance by allowing the models to capture finer details and hierarchical features more effectively. Leveraging synthetic data generation and domain adaptation techniques may help compensate for the scarcity of severe cases in training datasets, improving model robustness. Methods like active learning, where models iteratively refine their learning process based on the most uncertain cases, could further enhance AI accuracy in challenging scenarios. Moreover, integrating multimodal data, including clinical records, intraoral scans, and patient history, could provide a more holistic understanding of disease progression, improving the model’s diagnostic precision [53].
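One common form of active learning is uncertainty sampling, in which the cases the model is least sure about are sent to an expert for labeling first. The sketch below ranks cases by the entropy of their predicted class probabilities; it is a minimal illustration of the selection step only, and the function names, image identifiers, and probability values are hypothetical.

```python
import math


def entropy(probabilities):
    """Shannon entropy of a predicted class distribution (natural log)."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)


def most_uncertain(predictions, k):
    """Return the identifiers of the k cases with the highest entropy.

    `predictions` maps a case identifier to the model's predicted
    probabilities over the classes (e.g. mild, moderate, severe).
    """
    ranked = sorted(
        predictions,
        key=lambda case_id: entropy(predictions[case_id]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical model outputs for three unlabeled radiographs.
predictions = {
    "img_a": [0.98, 0.01, 0.01],  # confident prediction
    "img_b": [0.40, 0.35, 0.25],  # close to uniform, highly uncertain
    "img_c": [0.70, 0.20, 0.10],
}

to_label = most_uncertain(predictions, k=2)
print(to_label)  # ['img_b', 'img_c']
```

In a full active learning loop, the selected cases would be annotated by a clinician, added to the training set, and the model retrained before the next selection round; entropy is only one of several possible uncertainty criteria.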

Second, the development of interpretable and user-friendly AI tools is vital for ensuring seamless integration into clinical practice. Models that offer clear explanations for their predictions not only foster trust among practitioners but also facilitate collaboration between AI and human expertise. In fact, model interpretability remains a key concern, as black-box AI systems pose challenges in clinical decision-making by limiting clinicians’ ability to understand and validate AI-driven diagnoses. A lack of transparency can reduce trust in AI-assisted tools, potentially hindering their adoption in real-world settings. To address this, techniques such as Grad-CAM visualizations, feature attribution methods, and hybrid approaches that integrate deep learning with traditional machine learning can enhance interpretability. These methods provide insight into model decision-making processes, allowing for more transparent and explainable AI applications in periodontal diagnostics. Additionally, the development of rule-based systems and attention mechanisms further aids in bridging the gap between AI predictions and clinical reasoning, fostering greater confidence among practitioners. Real-world deployment requires rigorous validation and regulatory approval, necessitating collaborations between AI researchers, dental clinicians, and regulatory bodies to ensure clinical efficacy and safety [54,55,56].
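To make the Grad-CAM technique mentioned above more concrete, the sketch below computes a class activation heatmap from the feature maps of one convolutional layer and the gradients of a class score with respect to those maps. It is a simplified illustration in plain Python, assuming the feature maps and gradients have already been extracted from a trained network; the function name and the toy values are hypothetical and do not reproduce the implementation of any reviewed model.

```python
def grad_cam(feature_maps, gradients):
    """Compute a Grad-CAM heatmap from one convolutional layer.

    feature_maps, gradients: lists of K channels, each an H x W list of floats.
    gradients holds d(class score)/d(feature map), obtained by backpropagation.
    """
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    # Channel weight = global average of that channel's gradients.
    weights = [
        sum(sum(row) for row in grad) / (height * width) for grad in gradients
    ]
    # Weighted sum of the feature maps across channels.
    heatmap = [[0.0] * width for _ in range(height)]
    for weight, fmap in zip(weights, feature_maps):
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += weight * fmap[i][j]
    # ReLU keeps only regions that increase the class score.
    heatmap = [[max(0.0, value) for value in row] for row in heatmap]
    # Normalize to [0, 1] so the map can be overlaid on the radiograph.
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[value / peak for value in row] for row in heatmap]
    return heatmap


# Toy example: two 2 x 2 channels with uniform positive and negative gradients.
toy_maps = [[[1.0, 0.0], [0.0, 2.0]], [[0.0, 3.0], [1.0, 0.0]]]
toy_grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
toy_heatmap = grad_cam(toy_maps, toy_grads)
print(toy_heatmap)
```

In practice, the resulting low-resolution map is upsampled to the size of the radiograph and displayed as a color overlay, so that a clinician can check whether the highlighted region corresponds to the alveolar crest or to an irrelevant structure.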

Retrospective study designs, while valuable for analyzing existing data, often fall short in capturing the variability and practical issues encountered in real-time clinical practice. These studies depend on historical data, which may not reflect the current clinical environment or the rapid advancements in medical technology and treatment protocols. As a result, findings from retrospective analyses might not fully account for the complexities and nuances of actual clinical scenarios, where patient conditions and treatment responses can vary significantly. This limitation can create a gap between study outcomes and their applicability in real-world settings, potentially affecting the generalizability and relevance of the research conclusions. To address these challenges, it is crucial to complement retrospective studies with prospective research and real-time data collection, ensuring that AI models and clinical insights are robust and adaptable to the ever-evolving landscape of healthcare. Interoperability and data quality pose further challenges for the application of AI in periodontology, and several strategies can be implemented to address them.

The need for an interoperable digital data infrastructure is another emerging problem in the application of AI in periodontology and, more broadly, in medicine. Electronic health records (EHRs) must be easily accessible for AI development, but interoperability problems create obstacles that prevent fair progress. Strong regulatory monitoring, consistent investment, and proactive industry cooperation are necessary to maximize AI’s influence in dentistry. AI-driven advancements will benefit patients, healthcare providers, and researchers equally if a safe, standardized digital architecture that facilitates smooth health data sharing is established [57]. A related challenge is flawed data in the development and implementation of AI models: even well-trained AI systems can produce erroneous outcomes if they are fed incorrect or biased input data. Focusing on data quality, enabling patient-initiated data corrections, and deploying automated error-correction systems could be key strategies to mitigate the risks associated with flawed data [54,58].

Lastly, exploring hybrid approaches that combine AI diagnostics with decision-support systems could revolutionize treatment planning [59,60]. Such systems could integrate multiple diagnostic parameters, such as radiographic findings, periodontal probing data, and patient risk factors, to provide comprehensive and personalized treatment recommendations. In this direction, AI based on increasingly high-performance algorithms is closely linked to precision medicine, including theranostics, nanotechnology, and biomedical imaging [61,62,63].

AI has proven to be a powerful tool in periodontology, offering high accuracy and efficiency in diagnosing and staging periodontal diseases. While challenges such as data imbalance, anatomical complexity, and reliance on 2D imaging persist, ongoing advancements in technology and research hold considerable promise. By addressing these limitations and refining AI tools, periodontology stands to benefit from the era of precision diagnostics and personalized care, ultimately improving patient outcomes and advancing dental medicine.

The integration of AI in healthcare, particularly in managing sensitive patient information, raises several ethical and privacy considerations. Data privacy and security are paramount, as AI systems handle vast amounts of sensitive patient data. Healthcare providers must implement robust protection measures to prevent unauthorized access and breaches, ensuring patient confidentiality. Additionally, informed consent plays a crucial role in maintaining trust and transparency; patients must be fully aware of how their data will be used, including the potential benefits and risks associated with AI-driven healthcare solutions. Another critical issue is bias and fairness, as AI systems can inadvertently reflect biases present in the training data. To ensure equitable treatment for all patients, it is essential to use diverse datasets and continuously monitor AI systems for biased outcomes. Furthermore, accountability and transparency in AI-driven decision-making are vital. Healthcare providers must ensure that AI systems operate transparently, enabling clinicians to understand and validate AI-generated recommendations. This fosters trust and facilitates AI integration into clinical practice. Lastly, regulatory compliance is essential to ensure that AI applications align with ethical standards and data protection laws, promoting responsible and ethical AI use in healthcare. By addressing these ethical and privacy concerns, healthcare providers can effectively harness AI’s potential to improve patient care while maintaining trust and safeguarding sensitive information.
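The continuous monitoring for biased outcomes described above can be sketched as a simple audit that compares sensitivity and specificity across patient subgroups. The example below is a minimal illustration under stated assumptions: the subgroup names, labels, and predictions are invented, and the function is hypothetical rather than part of any reviewed system.

```python
from collections import defaultdict


def subgroup_rates(records):
    """Compute sensitivity and specificity per subgroup.

    records: iterable of (group, true_label, predicted_label), labels 0 or 1.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, truth, predicted in records:
        if truth == 1:
            key = "tp" if predicted == 1 else "fn"
        else:
            key = "tn" if predicted == 0 else "fp"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        rates[group] = {
            # None marks a rate that is undefined for this subgroup.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return rates


# Invented audit data: (subgroup, reference diagnosis, model prediction).
audit = [
    ("group_A", 1, 1), ("group_A", 1, 1), ("group_A", 0, 0), ("group_A", 0, 0),
    ("group_B", 1, 1), ("group_B", 1, 0), ("group_B", 0, 0), ("group_B", 0, 1),
]
audit_rates = subgroup_rates(audit)
print(audit_rates)
```

A marked difference between subgroups, as in this invented example, would be a signal to review the composition of the training data before relying on the model for the underperforming group.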

4. Conclusions

The application of artificial intelligence (AI), particularly neural networks, in periodontology is revolutionizing periodontal disease diagnosis and management. Convolutional neural networks (CNNs), along with hybrid models, have demonstrated remarkable efficacy in detecting and staging alveolar bone loss (ABL) and clinical attachment loss (CAL). These systems often achieve diagnostic accuracy comparable to, and in some cases exceeding, that of human clinicians. Models like Deetal-Perio and YOLOv5 have proven their ability to segment teeth and assess ABL with high precision, while hybrid models enhance diagnostic accuracy in complex scenarios, such as molars and vertical bone loss.

These advancements highlight the potential for AI-driven decision-support systems to transform early diagnosis, standardize evaluations, and optimize treatment planning. By reducing inter-operator variability and increasing diagnostic consistency, AI tools promise to significantly improve care quality and patient outcomes. Additionally, tools like DeNTNet, which integrate interpretability features such as Grad-CAM, bridge the gap between AI and clinical applicability, fostering trust among practitioners.

However, realizing the full potential of AI in periodontology requires overcoming current limitations. Efforts should focus on expanding datasets, refining algorithms to handle complex diagnostic cases, and incorporating clinical parameters to provide a holistic approach. The integration of three-dimensional imaging modalities and further development of transparent, user-friendly tools are essential for gaining clinician trust and ensuring seamless adoption in dental practices.

As research and technology continue to advance, artificial intelligence systems will become indispensable in the field of periodontology. These tools not only aid in early diagnosis and enhance treatment planning but also contribute to better patient outcomes globally, shaping the future of periodontal care.

Author Contributions

Conceptualization, G.S. and A.C.; methodology, G.S. and A.C.; investigation, G.S., A.L.C. and A.C.; writing—original draft preparation, G.S.; writing—review and editing, G.S., V.B., M.A. and A.C.; visualization, G.S., A.L.C., V.B., M.A., A.T., D.D.R., A.C., G.S. and A.Y.; supervision, V.B., A.L.C. and A.C.; project administration, A.C.; funding acquisition, A.C. All authors have read and agreed to the published version of the manuscript.

Funding

In this research, Anthony Yezzi was funded by grant number W911NF-22-1-0267 from the Army Research Office.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Tonetti, M.S.; Greenwell, H.; Kornman, K.S. Staging and Grading of Periodontitis: Framework and Proposal of a New Classification and Case Definition. J. Clin. Periodontol. 2018, 45, S149–S161.

- Peres, M.A.; Macpherson, L.M.D.; Weyant, R.J.; Daly, B.; Venturelli, R.; Mathur, M.R.; Listl, S.; Keller Celeste, R.; Guarnizo-Herreño, C.C.; Kearns, C.; et al. Oral Diseases: A Global Public Health Challenge. Lancet 2019, 394, 249–260.

- Cholan, P.; Ramachandran, L.; Umesh, S.G.; Sucharitha, P.; Tadepalli, A.; Palanisamy, S. The Impetus of Artificial Intelligence on Periodontal Diagnosis: A Brief Synopsis. Cureus 2023, 15, e43583.

- Caton, J.G.; Armitage, G.; Berglundh, T.; Chapple, I.L.C.; Jepsen, S.; Kornman, K.S.; Mealey, B.L.; Papapanou, P.N.; Sanz, M.; Tonetti, M.S. A New Classification Scheme for Periodontal and Peri-Implant Diseases and Conditions—Introduction and Key Changes from the 1999 Classification. J. Clin. Periodontol. 2018, 45, S1–S8.

- Heo, M.S.; Kim, J.E.; Hwang, J.J.; Han, S.S.; Kim, J.S.; Yi, W.J.; Park, I.W. DMFR 50th Anniversary: Review Article. Artificial Intelligence in Oral and Maxillofacial Radiology: What Is Currently Possible? Dentomaxillofacial Radiol. 2020, 50, 20200375.

- Ali, M.; Benfante, V.; Cutaia, G.; Salvaggio, L.; Rubino, S.; Portoghese, M.; Ferraro, M.; Corso, R.; Piraino, G.; Ingrassia, T.; et al. Prostate Cancer Detection: Performance of Radiomics Analysis in Multiparametric MRI. In Proceedings of the Image Analysis and Processing—ICIAP 2023 Workshops, Udine, Italy, 11–15 September 2023; pp. 83–92.

- Corso, R.; Comelli, A.; Salvaggio, G.; Tegolo, D. New Parametric 2D Curves for Modeling Prostate Shape in Magnetic Resonance Images. Symmetry 2024, 16, 755.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Al-Sayegh, S.W. Hybrid Neural Network. In Proceedings of the 2006 IEEE International Joint Conference on Neural Network Proceedings, Vancouver, BC, Canada, 16–21 July 2006; pp. 763–770.

- Yuan, Y.; Zhang, X.; Wang, Y.; Li, H.; Qi, Z.; Du, Z.; Chu, Y.; Feng, D.; Hu, J.; Xie, Q.; et al. Multimodal Data Integration Using Deep Learning Predicts Overall Survival of Patients with Glioma. View 2024, 5, 20240001.

- Lyu, X.; Liu, J.; Gou, Y.; Sun, S.; Hao, J.; Cui, Y. Inside Front Cover: Development and Validation of a Machine Learning-based Model of Ischemic Stroke Risk in the Chinese Elderly Hypertensive Population (View 6/2024). View 2024, 5, 20240059.

- Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and Diagnosis of Dental Caries Using a Deep Learning-Based Convolutional Neural Network Algorithm. J. Dent. 2018, 77, 106–111.

- Vinayahalingam, S.; Kempers, S.; Limon, L.; Deibel, D.; Maal, T.; Hanisch, M.; Bergé, S.; Xi, T. Classification of Caries in Third Molars on Panoramic Radiographs Using Deep Learning. Sci. Rep. 2021, 11, 12609.

- Lo Casto, A.; Spartivento, G.; Benfante, V.; Di Raimondo, R.; Ali, M.; Di Raimondo, D.; Tuttolomondo, A.; Stefano, A.; Yezzi, A.; Comelli, A. Artificial Intelligence for Classifying the Relationship between Impacted Third Molar and Mandibular Canal on Panoramic Radiographs. Life 2023, 13, 1441.

- Zhu, T.; Chen, D.; Wu, F.; Zhu, F.; Zhu, H. Artificial Intelligence Model to Detect Real Contact Relationship between Mandibular Third Molars and Inferior Alveolar Nerve Based on Panoramic Radiographs. Diagnostics 2021, 11, 1664.

- Fukuda, M.; Ariji, Y.; Kise, Y.; Nozawa, M.; Kuwada, C.; Funakoshi, T.; Muramatsu, C.; Fujita, H.; Katsumata, A.; Ariji, E. Comparison of 3 Deep Learning Neural Networks for Classifying the Relationship between the Mandibular Third Molar and the Mandibular Canal on Panoramic Radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020, 130, 336–343.

- Karthikeyan, T.; Manikandaprabhu, P. A Novel Approach for Inferior Alveolar Nerve (IAN) Injury Identification Using Panoramic Radiographic Image. Biomed. Pharmacol. J. 2015, 8, 307–314.

- Kim, B.S.; Yeom, H.G.; Lee, J.H.; Shin, W.S.; Yun, J.P.; Jeong, S.H.; Kang, J.H.; Kim, S.W.; Kim, B.C. Deep Learning-Based Prediction of Paresthesia after Third Molar Extraction: A Preliminary Study. Diagnostics 2021, 11, 1572.

- Yang, H.; Jo, E.; Kim, H.J.; Cha, I.H.; Jung, Y.S.; Nam, W.; Kim, J.Y.; Kim, J.K.; Kim, Y.H.; Oh, T.G.; et al. Deep Learning for Automated Detection of Cyst and Tumors of the Jaw in Panoramic Radiographs. J. Clin. Med. 2020, 9, 1839.

- Cairone, L.; Benfante, V.; Bignardi, S.; Marinozzi, F.; Yezzi, A.; Tuttolomondo, A.; Salvaggio, G.; Bini, F.; Comelli, A. Robustness of Radiomics Features to Varying Segmentation Algorithms in Magnetic Resonance Images. In Proceedings of the Image Analysis and Processing—ICIAP 2022 Workshops, Lecce, Italy, 23–27 May 2022; pp. 462–472.

- Benfante, V.; Salvaggio, G.; Ali, M.; Cutaia, G.; Salvaggio, L.; Salerno, S.; Busè, G.; Tulone, G.; Pavan, N.; Di Raimondo, D.; et al. Grading and Staging of Bladder Tumors Using Radiomics Analysis in Magnetic Resonance Imaging. In Proceedings of the Image Analysis and Processing—ICIAP 2022 Workshops, Lecce, Italy, 23–27 May 2022; pp. 93–103.

- Takahashi, T.; Nozaki, K.; Gonda, T.; Mameno, T.; Wada, M.; Ikebe, K. Identification of Dental Implants Using Deep Learning—Pilot Study. Int. J. Implant. Dent. 2020, 6, 53.

- Fukuda, M.; Inamoto, K.; Shibata, N.; Ariji, Y.; Yanashita, Y.; Kutsuna, S.; Nakata, K.; Katsumata, A.; Fujita, H.; Ariji, E. Evaluation of an Artificial Intelligence System for Detecting Vertical Root Fracture on Panoramic Radiography. Oral Radiol. 2020, 36, 337–343.

- Hiraiwa, T.; Ariji, Y.; Fukuda, M.; Kise, Y.; Nakata, K.; Katsumata, A.; Fujita, H.; Ariji, E. A Deep-Learning Artificial Intelligence System for Assessment of Root Morphology of the Mandibular First Molar on Panoramic Radiography. Dentomaxillofacial Radiol. 2019, 48, 20180218.

- Lee, K.S.; Kwak, H.J.; Oh, J.M.; Jha, N.; Kim, Y.J.; Kim, W.; Baik, U.B.; Ryu, J.J. Automated Detection of TMJ Osteoarthritis Based on Artificial Intelligence. J. Dent. Res. 2020, 99, 1363–1367.

- Lee, J.H.; Yu, H.J.; Kim, M.J.; Kim, J.W.; Choi, J. Automated Cephalometric Landmark Detection with Confidence Regions Using Bayesian Convolutional Neural Networks. BMC Oral Health 2020, 20, 270.

- Krois, J.; Ekert, T.; Meinhold, L.; Golla, T.; Kharbot, B.; Wittemeier, A.; Dörfer, C.; Schwendicke, F. Deep Learning for the Radiographic Detection of Periodontal Bone Loss. Sci. Rep. 2019, 9, 8495.

- Kim, J.; Lee, H.S.; Song, I.S.; Jung, K.H. DeNTNet: Deep Neural Transfer Network for the Detection of Periodontal Bone Loss Using Panoramic Dental Radiographs. Sci. Rep. 2019, 9, 17615.

- Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Diagnosis and Prediction of Periodontally Compromised Teeth Using a Deep Learning-Based Convolutional Neural Network Algorithm. J. Periodontal Implant. Sci. 2018, 48, 114–123.

- Li, H.; Zhou, J.; Zhou, Y.; Chen, Q.; She, Y.; Gao, F.; Xu, Y.; Chen, J.; Gao, X. An Interpretable Computer-Aided Diagnosis Method for Periodontitis From Panoramic Radiographs. Front. Physiol. 2021, 12, 655556.

- Uzun Saylan, B.C.; Baydar, O.; Yeşilova, E.; Kurt Bayrakdar, S.; Bilgir, E.; Bayrakdar, İ.Ş.; Çelik, Ö.; Orhan, K. Assessing the Effectiveness of Artificial Intelligence Models for Detecting Alveolar Bone Loss in Periodontal Disease: A Panoramic Radiograph Study. Diagnostics 2023, 13, 1800.

- Alotaibi, G.; Awawdeh, M.; Farook, F.F.; Aljohani, M.; Aldhafiri, R.M.; Aldhoayan, M. Artificial Intelligence (AI) Diagnostic Tools: Utilizing a Convolutional Neural Network (CNN) to Assess Periodontal Bone Level Radiographically—A Retrospective Study. BMC Oral Health 2022, 22, 399.

- Moran, M.; Faria, M.; Giraldi, G.; Bastos, L.; Conci, A. Do Radiographic Assessments of Periodontal Bone Loss Improve with Deep Learning Methods for Enhanced Image Resolution? Sensors 2021, 21, 2013.

- Lin, P.L.; Huang, P.Y.; Huang, P.W. Automatic Methods for Alveolar Bone Loss Degree Measurement in Periodontitis Periapical Radiographs. Comput. Methods Programs Biomed. 2017, 148, 1–11.

- Tsoromokos, N.; Parinussa, S.; Claessen, F.; Moin, D.A.; Loos, B.G. Estimation of Alveolar Bone Loss in Periodontitis Using Machine Learning. Int. Dent. J. 2022, 72, 621–627.

- Kong, Z.; Ouyang, H.; Cao, Y.; Huang, T.; Ahn, E.; Zhang, M.; Liu, H. Automated Periodontitis Bone Loss Diagnosis in Panoramic Radiographs Using a Bespoke Two-Stage Detector. Comput. Biol. Med. 2023, 152, 106374.

- Chen, C.C.; Wu, Y.F.; Aung, L.M.; Lin, J.C.Y.; Ngo, S.T.; Su, J.N.; Lin, Y.M.; Chang, W.J. Automatic Recognition of Teeth and Periodontal Bone Loss Measurement in Digital Radiographs Using Deep-Learning Artificial Intelligence. J. Dent. Sci. 2023, 18, 1301–1309.

- Kurt-Bayrakdar, S.; Bayrakdar, İ.Ş.; Yavuz, M.B.; Sali, N.; Çelik, Ö.; Köse, O.; Uzun Saylan, B.C.; Kuleli, B.; Jagtap, R.; Orhan, K. Detection of Periodontal Bone Loss Patterns and Furcation Defects from Panoramic Radiographs Using Deep Learning Algorithm: A Retrospective Study. BMC Oral Health 2024, 24, 155.

- Chen, I.H.; Lin, C.H.; Lee, M.K.; Chen, T.E.; Lan, T.H.; Chang, C.M.; Tseng, T.Y.; Wang, T.; Du, J.K. Convolutional-Neural-Network-Based Radiographs Evaluation Assisting in Early Diagnosis of the Periodontal Bone Loss via Periapical Radiograph. J. Dent. Sci. 2024, 19, 550–559.

- Thanathornwong, B.; Suebnukarn, S. Automatic Detection of Periodontal Compromised Teeth in Digital Panoramic Radiographs Using Faster Regional Convolutional Neural Networks. Imaging Sci. Dent. 2020, 50, 169–174.

- Guler Ayyildiz, B.; Karakis, R.; Terzioglu, B.; Ozdemir, D. Comparison of Deep Learning Methods for the Radiographic Detection of Patients with Different Periodontitis Stages. Dentomaxillofacial Radiol. 2024, 53, 32–42.

- Lin, T.J.; Mao, Y.C.; Lin, Y.J.; Liang, C.H.; He, Y.Q.; Hsu, Y.C.; Chen, S.L.; Chen, T.Y.; Chen, C.A.; Li, K.C.; et al. Evaluation of the Alveolar Crest and Cemento-Enamel Junction in Periodontitis Using Object Detection on Periapical Radiographs. Diagnostics 2024, 14, 1687.

- Chang, H.J.; Lee, S.J.; Yong, T.H.; Shin, N.Y.; Jang, B.G.; Kim, J.E.; Huh, K.H.; Lee, S.S.; Heo, M.S.; Choi, S.C.; et al. Deep Learning Hybrid Method to Automatically Diagnose Periodontal Bone Loss and Stage Periodontitis. Sci. Rep. 2020, 10, 7531.

- Xue, T.; Chen, L.; Sun, Q. Deep Learning Method to Automatically Diagnose Periodontal Bone Loss and Periodontitis Stage in Dental Panoramic Radiograph. J. Dent. 2024, 150, 105373.

- Ertaş, K.; Pence, I.; Cesmeli, M.S.; Ay, Z.Y. Determination of the Stage and Grade of Periodontitis According to the Current Classification of Periodontal and Peri-Implant Diseases and Conditions (2018) Using Machine Learning Algorithms. J. Periodontal Implant. Sci. 2022, 53, 38.

- Kim, M.J.; Chae, S.G.; Bae, S.J.; Hwang, K.G. Unsupervised Few Shot Learning Architecture for Diagnosis of Periodontal Disease in Dental Panoramic Radiographs. Sci. Rep. 2024, 14, 23237.

- Dujic, H.; Meyer, O.; Hoss, P.; Wölfle, U.C.; Wülk, A.; Meusburger, T.; Meier, L.; Gruhn, V.; Hesenius, M.; Hickel, R.; et al. Automatized Detection of Periodontal Bone Loss on Periapical Radiographs by Vision Transformer Networks. Diagnostics 2023, 13, 3562.

- Kearney, V.P.; Yansane, A.I.M.; Brandon, R.G.; Vaderhobli, R.; Lin, G.H.; Hekmatian, H.; Deng, W.; Joshi, N.; Bhandari, H.; Sadat, A.S.; et al. A Generative Adversarial Inpainting Network to Enhance Prediction of Periodontal Clinical Attachment Level. J. Dent. 2022, 123, 104211.

- Tonetti, M.S.; Sanz, M. Implementation of the New Classification of Periodontal Diseases: Decision-Making Algorithms for Clinical Practice and Education. J. Clin. Periodontol. 2019, 46, 398–405.

- Woelber, J.; Fleiner, J.; Rau, J.; Ratka-Krüger, P.; Hannig, C. Accuracy and Usefulness of CBCT in Periodontology: A Systematic Review of the Literature. Int. J. Periodontics Restor. Dent. 2018, 38, 289–297.

- Ahmed, N.; Abbasi, M.S.; Zuberi, F.; Qamar, W.; Halim, M.S.B.; Maqsood, A.; Alam, M.K. Artificial Intelligence Techniques: Analysis, Application, and Outcome in Dentistry—A Systematic Review. BioMed Res. Int. 2021, 2021, 9751564.

- Feher, B.; Tussie, C.; Giannobile, W.V. Applied Artificial Intelligence in Dentistry: Emerging Data Modalities and Modeling Approaches. Front. Artif. Intell. 2024, 7, 1427517.

- Kundu, S. AI in Medicine Must Be Explainable. Nat. Med. 2021, 27, 1328.

- Reddy, S. Explainability and Artificial Intelligence in Medicine. Lancet Digit. Health 2022, 4, e214–e215.

- Mandl, K.D.; Gottlieb, D.; Mandel, J.C. Integration of AI in Healthcare Requires an Interoperable Digital Data Ecosystem. Nat. Med. 2024, 30, 631–634.

- Kristiansen, T.B.; Kristensen, K.; Uffelmann, J.; Brandslund, I. Erroneous Data: The Achilles’ Heel of AI and Personalized Medicine. Front. Digit. Health 2022, 4, 862095.

- Giaccone, P.; Benfante, V.; Stefano, A.; Cammarata, F.P.; Russo, G.; Comelli, A. PET Images Atlas-Based Segmentation Performed in Native and in Template Space: A Radiomics Repeatability Study in Mouse Models. In Proceedings of the Image Analysis and Processing—ICIAP 2022 Workshops, Lecce, Italy, 23–27 May 2022; pp. 351–361.

- Benfante, V.; Stefano, A.; Comelli, A.; Giaccone, P.; Cammarata, F.P.; Richiusa, S.; Scopelliti, F.; Pometti, M.; Ficarra, M.; Cosentino, S.; et al. A New Preclinical Decision Support System Based on PET Radiomics: A Preliminary Study on the Evaluation of an Innovative 64Cu-Labeled Chelator in Mouse Models. J. Imaging 2022, 8, 92.

- Basirinia, G.; Ali, M.; Comelli, A.; Sperandeo, A.; Piana, S.; Alongi, P.; Longo, C.; Di Raimondo, D.; Tuttolomondo, A.; Benfante, V. Theranostic Approaches for Gastric Cancer: An Overview of In Vitro and In Vivo Investigations. Cancers 2024, 16, 3323.

- Benfante, V.; Stefano, A.; Ali, M.; Laudicella, R.; Arancio, W.; Cucchiara, A.; Caruso, F.; Cammarata, F.P.; Coronnello, C.; Russo, G.; et al. An Overview of In Vitro Assays of 64Cu-, 68Ga-, 125I-, and 99mTc-Labelled Radiopharmaceuticals Using Radiometric Counters in the Era of Radiotheranostics. Diagnostics 2023, 13, 1210.

- Ali, M.; Benfante, V.; Di Raimondo, D.; Salvaggio, G.; Tuttolomondo, A.; Comelli, A. Recent Developments in Nanoparticle Formulations for Resveratrol Encapsulation as an Anticancer Agent. Pharmaceuticals 2024, 17, 126.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).