From Simulation to Validation in Ensuring Quality and Reliability in Model-Based Predictive Analysis

Abstract

1. Introduction

Related Work

2. Tools and Methods

- Regression Learner App—This is an interactive tool for designing, training and testing regression models. It supports pre-built models (linear, non-linear, trees, SVM, ensembles) [15].

- Statistics and Machine Learning Toolbox—This provides tools for designing models using various machine learning algorithms, including Support Vector Machines (SVMs), Decision Trees, and Gaussian Process Regression.

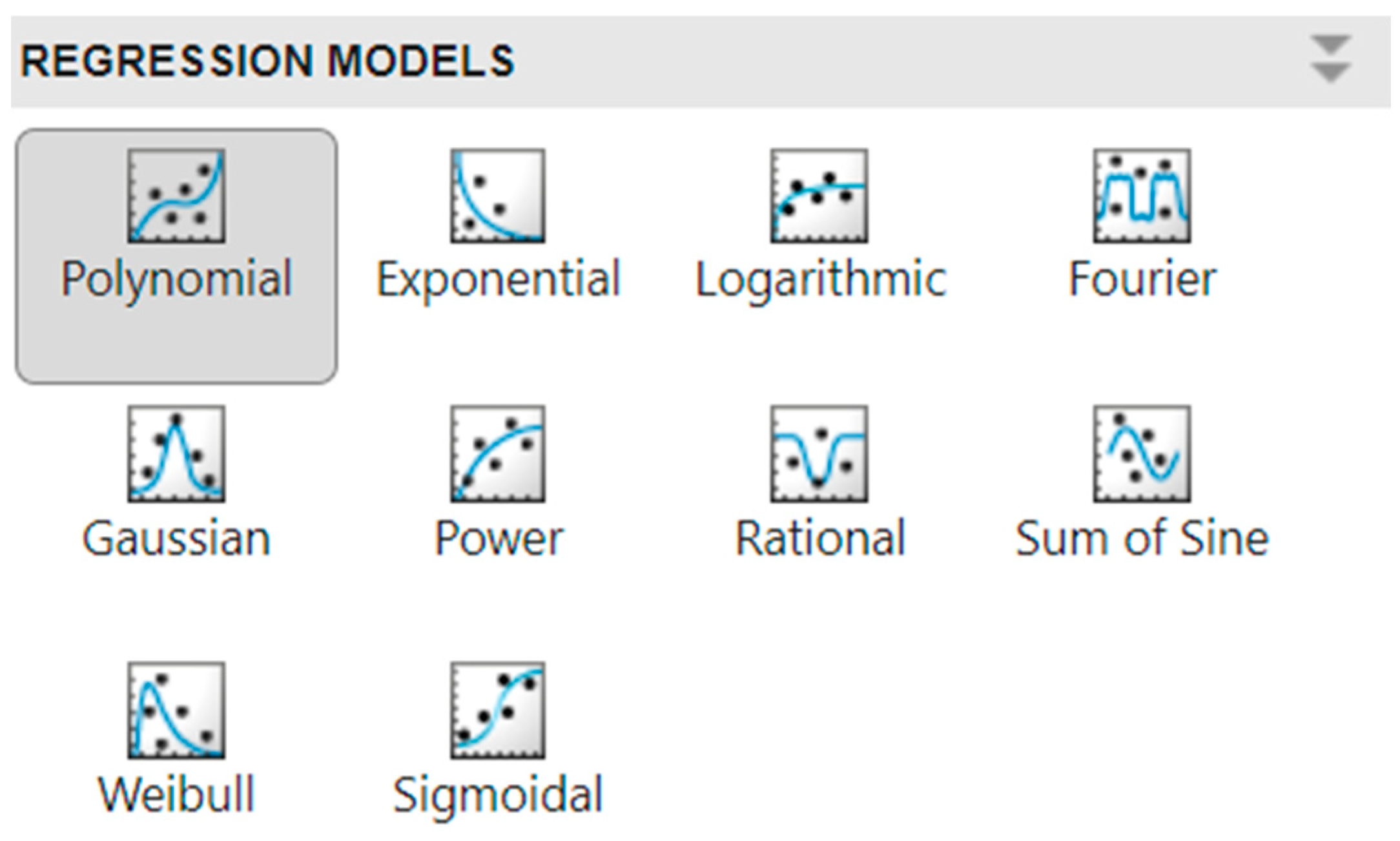

- Curve Fitting Toolbox—This allows data fitting, model visualisation, and automatic parameter optimisation.

2.1. Curve Fitter Development Environment

- An extensive collection of preset models, covering both linear and non-linear types, as well as the ability to develop custom models.

- Robust data visualisation tools to help users identify trends and anomalies in the data.

- Model selection tools that allow users to evaluate the performance of different models and select the most appropriate one for the given data.

- Various techniques for calculating model parameters, such as least squares regression, non-linear regression and maximum likelihood estimation.

- A toolbar that allows users to save the results of their analyses to variables in the MATLAB workspace and to files in various formats, such as Excel, CSV and LaTeX.
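Of the parameter estimation techniques listed above, least squares regression is the simplest to illustrate. The following is a minimal sketch in Python rather than MATLAB, assuming a straight-line model y ≈ a + b·x; the function name `fit_line` and the sample data are hypothetical and are not taken from the Curve Fitter environment.

```python
from statistics import fmean


def fit_line(x, y):
    """Ordinary least-squares fit of y ~ a + b*x; returns (a, b)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    x_bar, y_bar = fmean(x), fmean(y)
    # Sum of squared deviations of x and the cross-deviation sum.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical data lying exactly on y = 1 + 2x.
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.0, 3.0, 5.0, 7.0, 9.0]
intercept, slope = fit_line(x, y)
print(f"intercept={intercept:.3f}, slope={slope:.3f}")
```

Non-linear and maximum likelihood fits generally need an iterative optimiser and are not covered by this closed-form sketch.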

2.2. Model Assessment Metrics

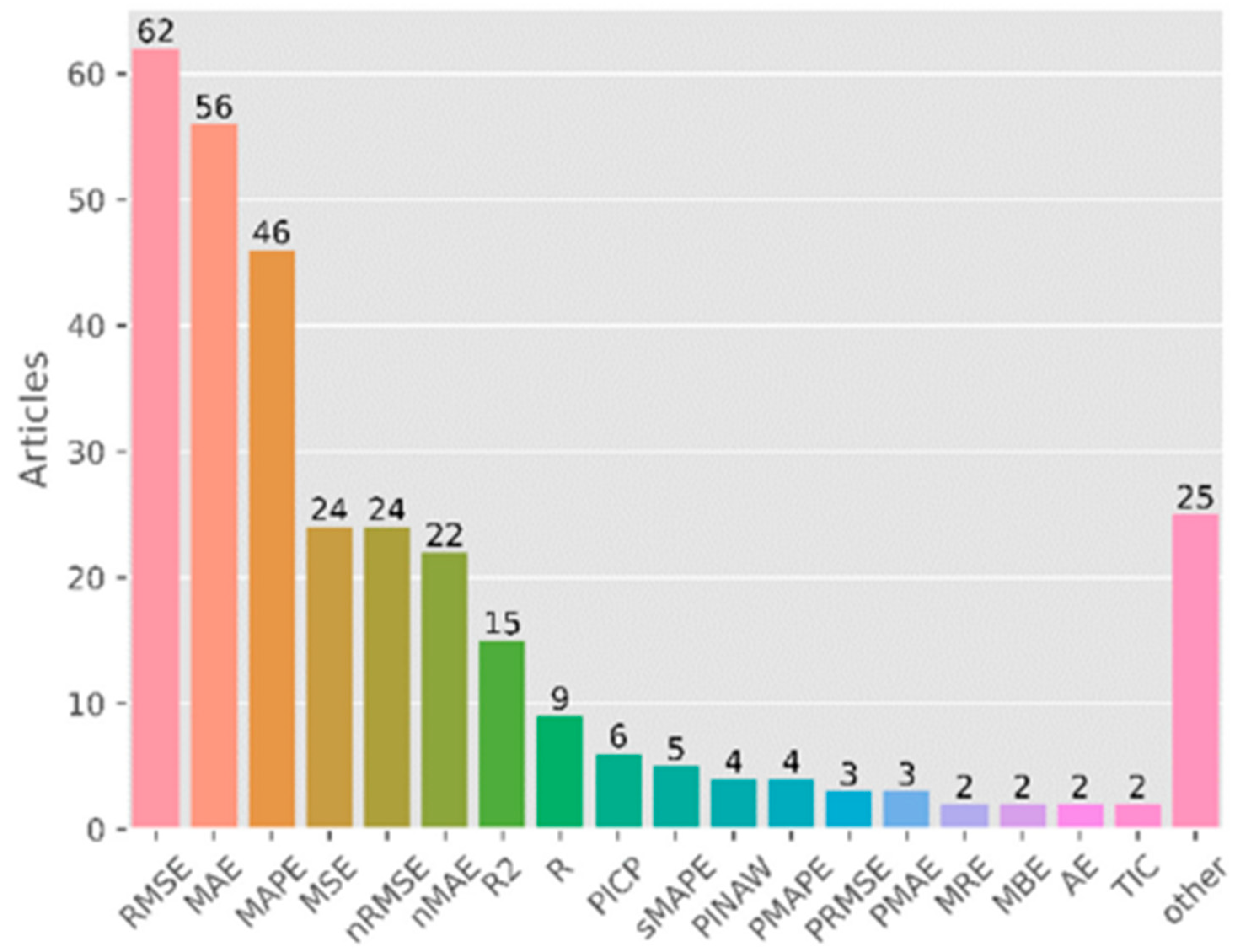

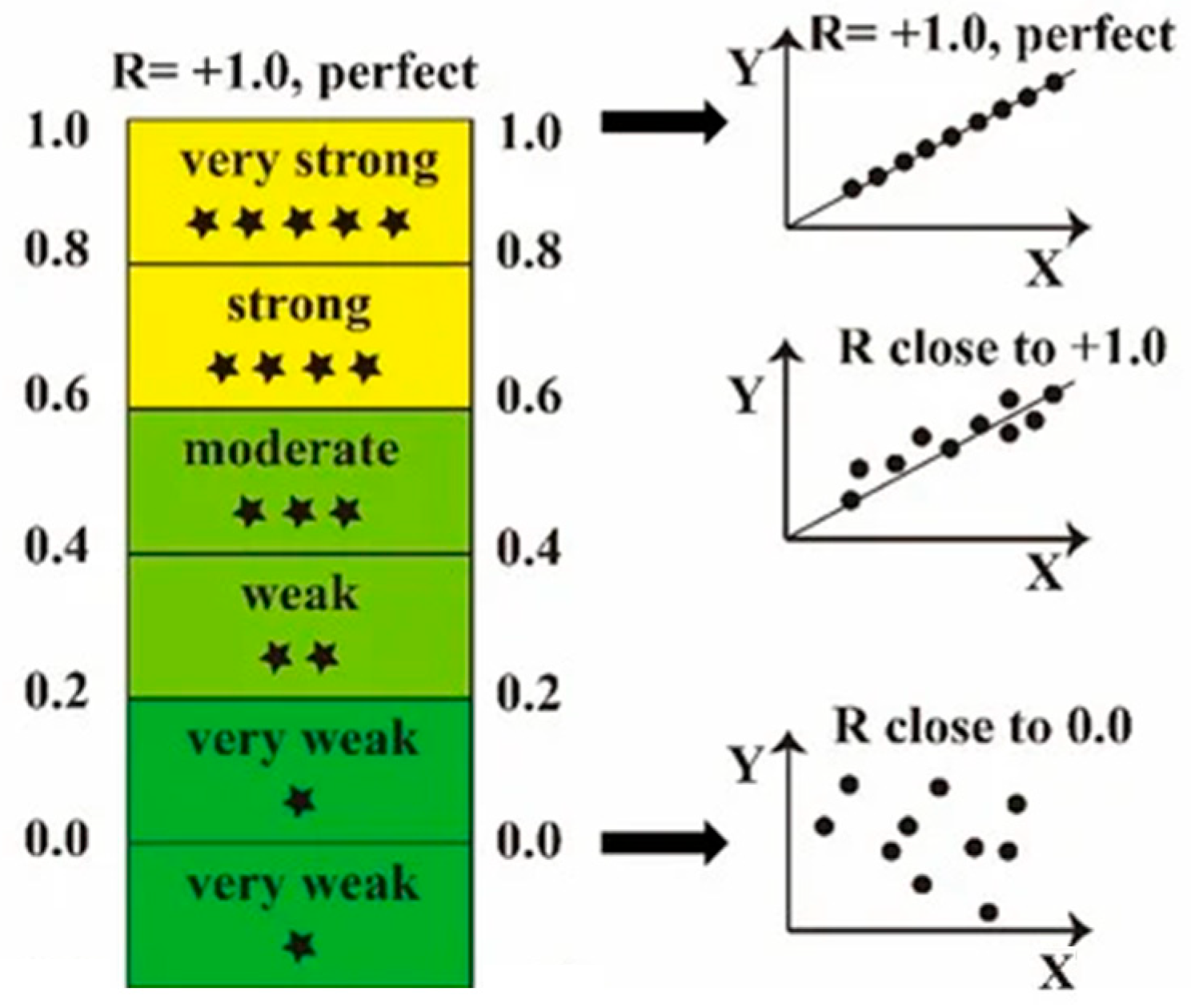

- R2—The coefficient of determination (R2) is frequently used to assess the goodness of fit of regression models (5). For a linear least-squares fit, R2 equals the square of the correlation between the actual and the predicted values [24]; more generally, it is the fraction of the variance in the response variable that the regression model explains [25]. A value of 1 indicates that the model accounts for all of the variation in the response, that is, the predicted and actual values coincide [15,26].

- The Sum of Squared Errors (SSE) is a statistical measure employed to assess the quality of a model or the difference between predicted outcomes and actual data. It is often used in regression, modelling, and machine learning to quantify model error.

- Root Mean Squared Error (RMSE) is the square root of the MSE and is therefore expressed in the same units as the target variable.

- Mean Squared Error (MSE)—The MSE indicates how close the regression line is to a set of points. It is the average of the squared differences between the predicted and actual values, so larger discrepancies carry greater weight. The smaller the MSE, the more accurate the prediction. MSE is expressed in the squared units of the target variable.

- Mean Absolute Error (MAE)—The Mean Absolute Error reflects the average discrepancy between the measured and forecasted values. The nearer the MAE value is to 0, the more accurate the prediction becomes [7].

- MAPE (Mean Absolute Percentage Error) indicates the accuracy of the forecasted values relative to the actual measured values, expressed as a percentage [7]. For the MAPE index, an effective model has a value of less than 14%.

- SMAPE (symmetric mean absolute percentage error).
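The metrics listed above can be computed directly from paired actual and predicted values. The following is a minimal sketch in Python, under several assumptions: the MSE divides by the number of observations n (some tools divide by the residual degrees of freedom instead), SMAPE uses one common variant with a range of 0 to 200%, and the function name `regression_metrics` and the sample values are hypothetical.

```python
import math


def regression_metrics(actual, predicted):
    """Return common error metrics for paired actual and predicted values."""
    n = len(actual)
    if n == 0 or n != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")

    errors = [a - p for a, p in zip(actual, predicted)]
    sse = sum(e * e for e in errors)       # Sum of Squared Errors
    mse = sse / n                          # assumption: divide by n
    rmse = math.sqrt(mse)                  # same units as the target
    mae = sum(abs(e) for e in errors) / n

    mean_actual = sum(actual) / n
    sst = sum((a - mean_actual) ** 2 for a in actual)
    r2 = 1.0 - sse / sst                   # undefined if all actual values are equal

    # MAPE requires every actual value to be non-zero.
    mape = 100.0 / n * sum(abs(e / a) for e, a in zip(errors, actual))
    # SMAPE (one common variant); undefined if an actual/predicted pair is 0 and 0.
    smape = 100.0 / n * sum(
        2.0 * abs(e) / (abs(a) + abs(p))
        for e, a, p in zip(errors, actual, predicted)
    )
    return {
        "R2": r2, "SSE": sse, "RMSE": rmse, "MSE": mse,
        "MAE": mae, "MAPE": mape, "SMAPE": smape,
    }


# Hypothetical measurements and model predictions.
actual = [2.0, 4.0, 6.0, 8.0]
predicted = [2.5, 3.5, 6.5, 7.5]
metrics = regression_metrics(actual, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because MAPE and SMAPE are reported here in percent, values from other tools that report them as fractions need to be rescaled before comparison.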

2.2.1. Akaike Information Criterion (AIC)
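As an illustration only, the AIC of a least-squares fit can be sketched in Python using the widely used Gaussian-error form AIC = n·ln(SSE/n) + 2k, with additive constants dropped. This form is an assumption: the exact formula and sample size behind the AIC values reported in this article are not stated here, so the sketch will not necessarily reproduce them. The function name and all numbers below are hypothetical.

```python
import math


def aic_least_squares(sse, n, k):
    """AIC for a least-squares fit, assuming Gaussian errors (constants dropped).

    sse: sum of squared errors, n: number of observations,
    k: number of estimated coefficients.
    """
    if n <= 0 or sse <= 0:
        raise ValueError("n and sse must be positive")
    return n * math.log(sse / n) + 2 * k


# Hypothetical candidate models: number of coefficients -> SSE, with n = 50.
n = 50
sse_by_k = {2: 12.0, 3: 6.0, 4: 5.9}
aic_by_k = {k: aic_least_squares(sse, n, k) for k, sse in sse_by_k.items()}
best_k = min(aic_by_k, key=aic_by_k.get)   # lower AIC is preferred
print(aic_by_k, "preferred k:", best_k)
```

In this hypothetical case the fourth coefficient reduces the SSE only slightly, so the penalty term 2k outweighs the gain and the three-coefficient model is preferred.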

2.2.2. Bayesian Information Criterion (BIC)
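The BIC differs from the AIC in its penalty term, which grows with the sample size. The sketch below, in Python, assumes the common Gaussian-error least-squares forms BIC = n·ln(SSE/n) + k·ln(n) and AIC = n·ln(SSE/n) + 2k, with additive constants dropped; it is not necessarily the formula used for the values reported in this article, and the function names and numbers are hypothetical.

```python
import math


def aic_least_squares(sse, n, k):
    """AIC for a least-squares fit under Gaussian errors (constants dropped)."""
    return n * math.log(sse / n) + 2 * k


def bic_least_squares(sse, n, k):
    """BIC for a least-squares fit under Gaussian errors (constants dropped)."""
    return n * math.log(sse / n) + k * math.log(n)


# Hypothetical candidates: number of coefficients -> SSE, with n = 200.
n = 200
sse_by_k = {3: 10.0, 4: 9.85}

aic = {k: aic_least_squares(sse, n, k) for k, sse in sse_by_k.items()}
bic = {k: bic_least_squares(sse, n, k) for k, sse in sse_by_k.items()}

best_by_aic = min(aic, key=aic.get)   # lower is preferred for both criteria
best_by_bic = min(bic, key=bic.get)
print("AIC prefers k =", best_by_aic, "| BIC prefers k =", best_by_bic)
```

With these hypothetical numbers the two criteria disagree: the small SSE improvement is enough to offset the AIC penalty of 2 per coefficient, but not the BIC penalty of ln(200) ≈ 5.3 per coefficient.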

3. Illustrative Study

- Data collection and preparation—Source data were cleaned and outliers removed prior to analysis. We assume that the data distribution is normal.

- Visualisation—Since the samples differ in textile fibre content, we used the graphical tools of the MATLAB environment to visualise the obtained data (Figure 3).

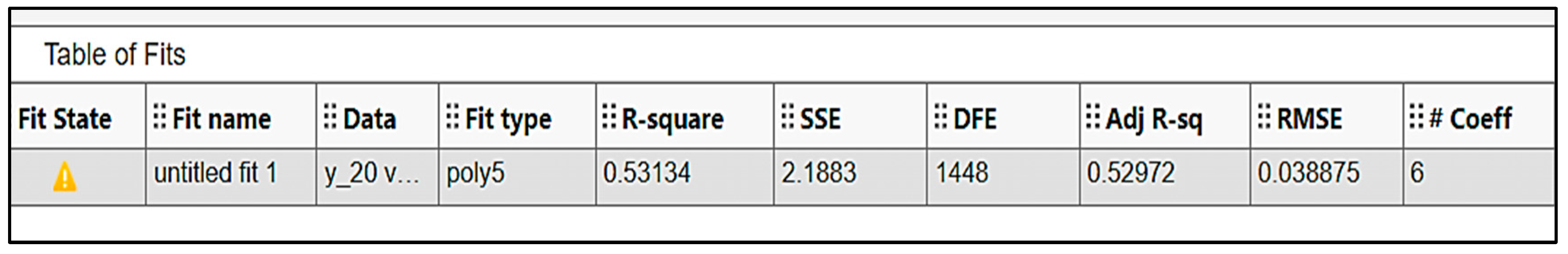

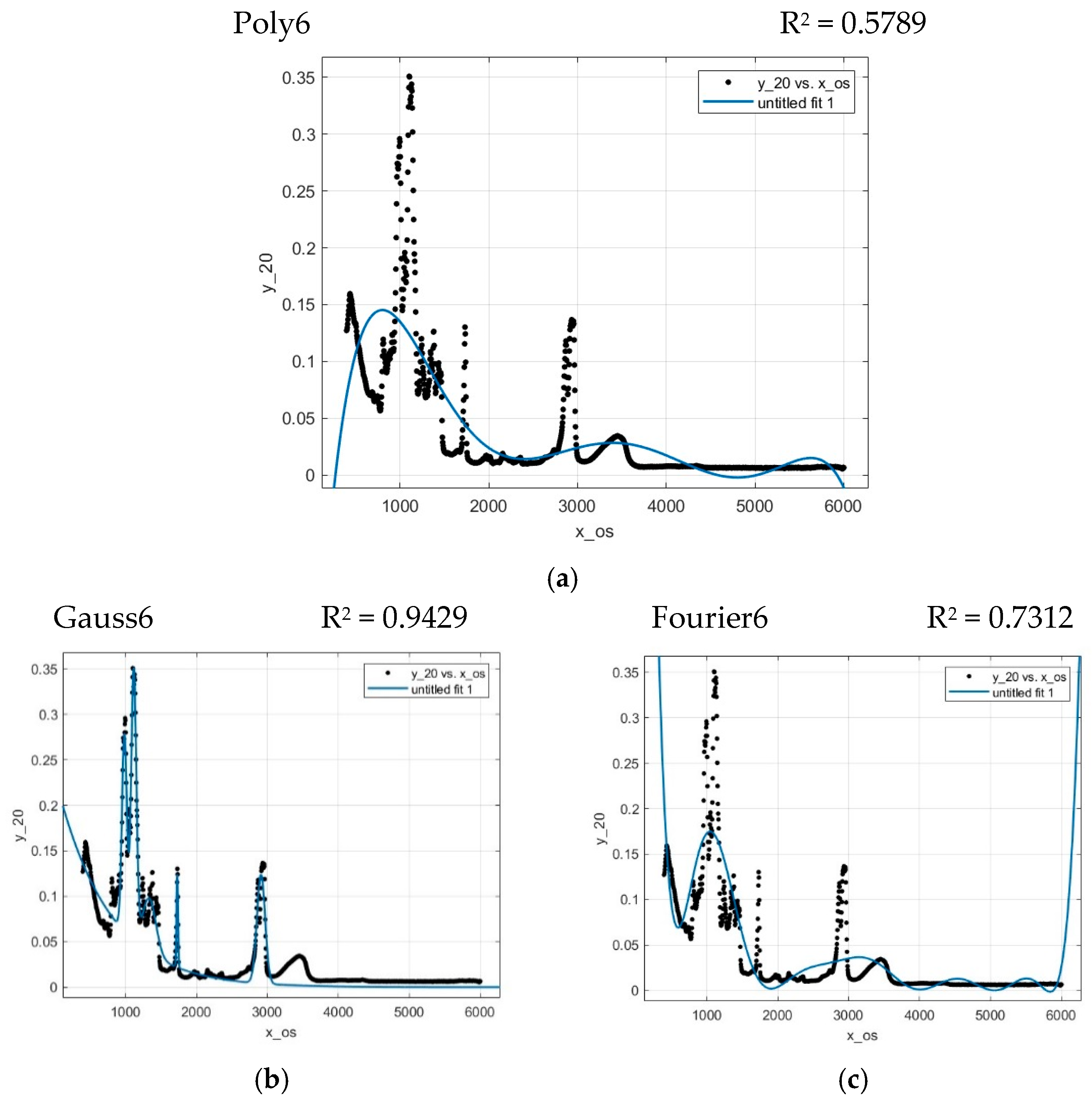

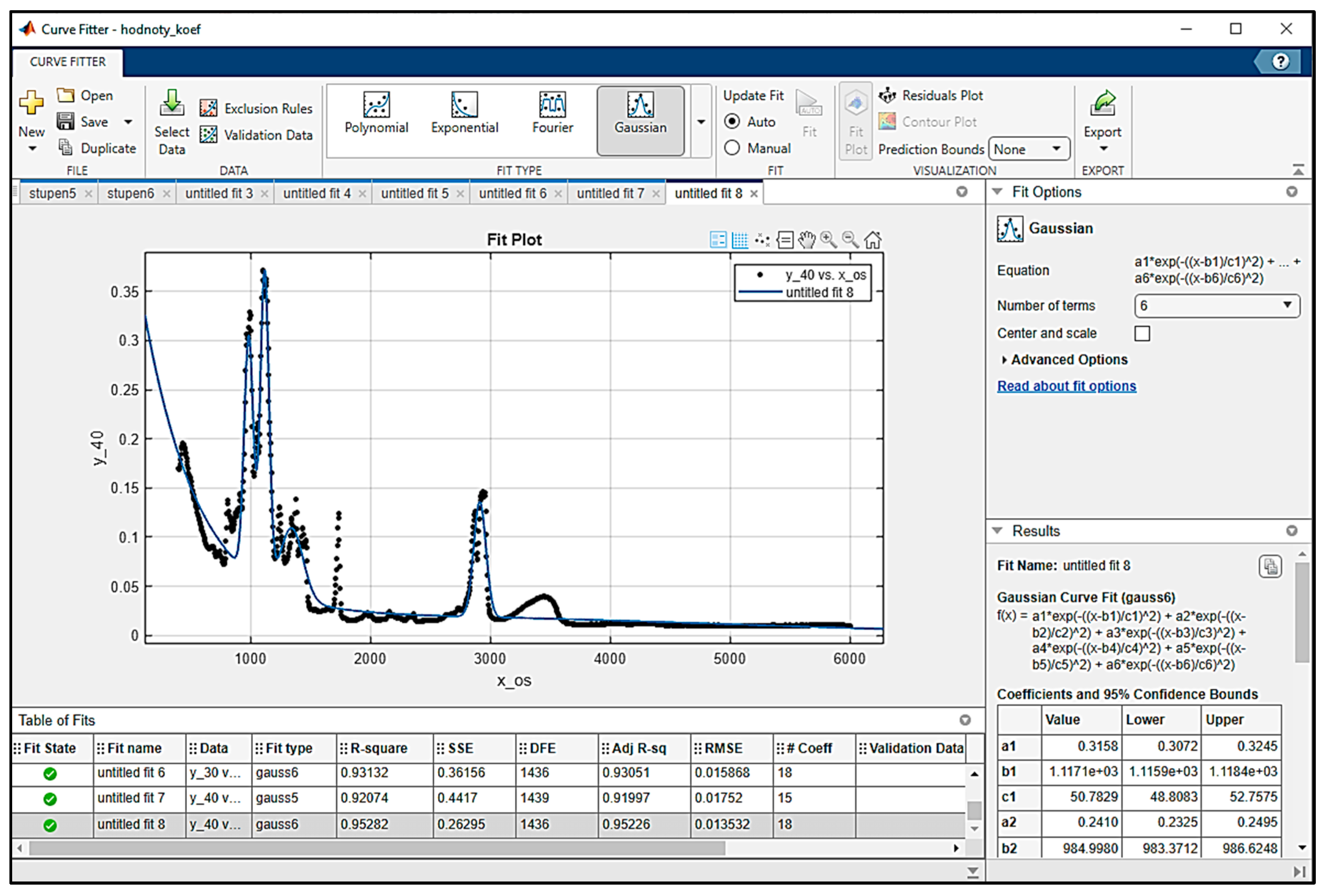

- Model design—Using the Curve Fitting Toolbox, we progressively analyse the suitability of each built-in function and select a suitable model based on the R2 value.

- Validation—Quality versus complexity. At this point, we not only analyse the quality of the model but also look for a balance between its quality and its complexity.
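One simple way to express the balance between quality and complexity is to choose the smallest model whose R2 lies within a fixed tolerance of the best R2. The Python sketch below applies this rule to the R2 values reported in the results table of this article. The rule itself, the tolerance of 0.01, and the function name are assumptions made for illustration and are not the authors' stated procedure.

```python
def simplest_adequate_model(r2_by_size, tolerance):
    """Return the smallest model size whose R2 is within `tolerance` of the best."""
    best_r2 = max(r2_by_size.values())
    adequate = [size for size, r2 in r2_by_size.items() if best_r2 - r2 <= tolerance]
    return min(adequate)


# R2 values from the results table, keyed by number of coefficients.
r2_by_coefficients = {4: 0.855315, 5: 0.929122, 6: 0.942937, 7: 0.94663}

# Hypothetical tolerance: accept a loss of at most 0.01 in R2.
chosen = simplest_adequate_model(r2_by_coefficients, tolerance=0.01)
print("chosen number of coefficients:", chosen)
```

A tighter tolerance favours the larger model, while a looser one favours the smaller, so the tolerance encodes how much accuracy one is willing to trade for simplicity.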

4. Results

- MAE—a lower value of this indicator means that the model is more accurate, because its predictions are closer to the actual values.

- MAPE expresses the error in the form of a percentage difference between the actual and predicted values, which allows easier comparison of different datasets or units. A lower value means a more accurate model.
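Since a lower value is better for each of these error metrics, the preferred model under each metric can be read off mechanically. The short Python sketch below does this for the RMSE, MAE and MAPE values from the results table of this article; the variable names are hypothetical, and the values are copied as reported, without rescaling.

```python
# Error metrics from the results table, keyed by number of coefficients.
results = {
    "RMSE": {4: 0.021555, 5: 0.015087, 6: 0.013537, 7: 0.013091},
    "MAE":  {4: 0.005992, 5: 0.006701, 6: 0.004885, 7: 0.007245},
    "MAPE": {4: 0.013936, 5: 0.014061, 6: 0.011719, 7: 0.013945},
}

# For each metric, pick the model size with the smallest value.
best_size = {
    metric: min(values, key=values.get) for metric, values in results.items()
}

for metric, size in best_size.items():
    print(f"{metric}: lowest for {size} coefficients")
```

The metrics do not agree: RMSE is lowest for the seven-coefficient model, whereas MAE and MAPE are lowest for the six-coefficient model, which is why a single metric is a weak basis for model selection.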

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Al-Marshadi, A.H.; Alharby, A.H.; Shahbaz, M.Q. Selecting the “true” regression model: A new ranking method. Adv. Appl. Stat. 2023, 87, 1–11.

2. Vagaská, A.; Gombár, M.; Straka, Ľ. Selected Mathematical Optimization Methods for Solving Problems of Engineering Practice. Energies 2022, 15, 2205.

3. Sargent, R.G. Verification, Validation, and Accreditation of Simulation Models. In Proceedings of the 2000 Winter Simulation Conference, Orlando, FL, USA, 10–13 December 2000; Volume 1, pp. 50–59.

4. Hošovský, A.; Piteľ, J.; Adámek, M.; Mižáková, J.; Židek, K. Comparative study of week-ahead forecasting of daily gas consumption in buildings using regression ARMA/SARMA and genetic-algorithm-optimized regression wavelet neural network models. J. Build. Eng. 2021, 34, 101955.

5. Pavlenko, I.; Piteľ, J.; Ivanov, V.; Berladir, K.; Mižáková, J.; Kolos, V.; Trojanowska, J. Using Regression Analysis for Automated Material Selection in Smart Manufacturing. Mathematics 2022, 10, 1888.

6. Hrehova, S.; Vagaska, A. Computer Models as Appropriate Tools in Elearning. In Proceedings of the INTED2017 Proceedings, Valencia, Spain, 6–8 March 2017; pp. 8871–8877.

7. Piotrowski, P.; Rutyna, I.; Baczyński, D.; Kopyt, M. Evaluation Metrics for Wind Power Forecasts: A Comprehensive Review and Statistical Analysis of Errors. Energies 2022, 15, 9657.

8. Tako, A.; Tsioptsias, N.; Robinson, S. Can we learn from simple simulation models? An experimental study on user learning. J. Simul. 2020, 14, 130–144.

9. Robinson, S. Exploring the relationship between simulation model accuracy and complexity. J. Oper. Res. Soc. 2022, 74, 1992–2011.

10. Priesmann, J.; Nolting, L.; Praktiknjo, A. Are complex energy system models more accurate? An intra-model comparison of power system optimization models. Appl. Energy 2019, 255, 113783.

11. Zhang, L. Analysis of the trade-off between accuracy and complexity of identified models of dynamic systems. Anal. Data Process. Syst. 2024, 94, 85–93.

12. Rohskopf, A.; Goff, J.; Sema, D.; Gordiz, K.; Nguyen, N.; Henry, A.; Thompson, A.; Wood, M. Exploring model complexity in machine learned potentials for simulated properties. J. Mater. Res. 2023, 38, 5136–5150.

13. Trojanová, M.; Hošovský, A.; Čakurda, T. Evaluation of Machine Learning-Based Parsimonious Models for Static Modeling of Fluidic Muscles in Compliant Mechanisms. Mathematics 2023, 11, 149.

14. Hrehova, S.; Husár, J.; Knapčíková, L. The Fuzzy Logic Predictive Model for Remote Increasing Energy Efficiency. Mob. Netw. Appl. 2023, 28, 1293–1305.

15. Rodríguez-Martín, M.; Fueyo, J.G.; Gonzalez-Aguilera, D.; Madruga, F.J.; García-Martín, R.; Muñóz, Á.L.; Pisonero, J. Predictive Models for the Characterization of Internal Defects in Additive Materials from Active Thermography Sequences Supported by Machine Learning Methods. Sensors 2020, 20, 3982.

16. Knapcikova, L.; Behunova, A.; Behun, M. Using a discrete event simulation as an effective method applied in the production of recycled material. Adv. Prod. Eng. Manag. 2020, 15, 431–440.

17. Greene, W.H. Econometric Analysis, 7th ed.; Pearson Education Limited: Edinburgh, UK, 2012; pp. 383–494.

18. Hsiao, C.-W.; Chan, Y.-C.; Lee, M.-Y.; Lu, H.-P. Heteroscedasticity and Precise Estimation Model Approach for Complex Financial Time-Series Data: An Example of Taiwan Stock Index Futures before and during COVID-19. Mathematics 2021, 9, 2719.

19. Belkin, M.; Hsu, D.; Mitra, P.P. Overfitting or Perfect Fitting? Risk Bounds for Classification and Regression Rules that Interpolate. arXiv 2018, arXiv:1806.05161.

20. Donald, S.; Lang, K. Inference with Difference-in-Differences and Other Panel Data. Rev. Econ. Stat. 2007, 89, 221–233.

21. Im, K.S.; Pesaran, M.; Shin, Y. Testing for unit roots in heterogeneous panels. J. Econ. 2003, 115, 53–74.

22. Adamczak, M.; Kolinski, A.; Trojanowska, J.; Husár, J. Digitalization Trend and Its Influence on the Development of the Operational Process in Production Companies. Appl. Sci. 2023, 13, 1393.

23. Lazár, I.; Husár, J. Validation of the serviceability of the manufacturing system using simulation. J. Effic. Responsib. Educ. Sci. 2012, 5, 252–261.

24. Jierula, A.; Wang, S.; OH, T.-M.; Wang, P. Study on Accuracy Metrics for Evaluating the Predictions of Damage Locations in Deep Piles Using Artificial Neural Networks with Acoustic Emission Data. Appl. Sci. 2021, 11, 2314.

25. Carrera, B.; Kim, K. Comparison Analysis of Machine Learning Techniques for Photovoltaic Prediction Using Weather Sensor Data. Sensors 2020, 20, 3129.

26. Asante-Okyere, S.; Shen, C.; Yevenyo Ziggah, Y.; Moses Rulegeya, M.; Zhu, X. Investigating the Predictive Performance of Gaussian Process Regression in Evaluating Reservoir Porosity and Permeability. Energies 2018, 11, 3261.

27. Trojanowski, P. Comparative analysis of the impact of road infrastructure development on road safety—A case study. Sci. J. Marit. Univ. Szczecin 2020, 63, 23–28.

28. Rajamanickam, V.; Babel, H.; Montano-Herrera, L.; Ehsani, A.; Stiefel, F.; Haider, S.; Presser, B.; Knapp, B. About Model Validation in Bioprocessing. Processes 2021, 9, 961.

29. St-Aubin, P.; Agard, B. Precision and Reliability of Forecasts Performance Metrics. Forecasting 2022, 4, 882–903.

30. Cavanaugh, J.E.; Neath, A.A. The Akaike information criterion: Background, derivation, properties, application, interpretation, and refinements. WIREs Comput Stat. 2019, 11, e1460.

31. Folz, B. Multiple Regression. Available online: https://www.youtube.com/watch?v=-BR4WElPIXg (accessed on 25 November 2024).

32. Marliana, R.R.; Suhayati, M.; Ningsih, S.B.H. Schwarz’s Bayesian Information Criteria: A Model Selection Between Bayesian-SEM and Partial Least Squares-SEM. Pak. J. Stat. Oper. Res. 2023, 19, 637–648.

33. Kronova, J.; Izarikova, G.; Trebuna, P.; Pekarcikova, M.; Filo, M. Application Cluster Analysis as a Support form Modelling and Digitalizing the Logistics Processes in Warehousing. Appl. Sci. 2024, 14, 4343.

34. Ondov, M.; Rosova, A.; Sofranko, M.; Feher, J.; Cambal, J.; Feckova Skrabulakova, E. Redesigning the Production Process Using Simulation for Sustainable Development of the Enterprise. Sustainability 2022, 14, 1514.

35. Mesarosova, J.; Martinovicova, K.; Fidlerova, H.; Chovanova, H.H.; Babcanova, D.; Samakova, J. Improving the level of predictive maintenance maturity matrix in industrial enterprise. Acta Logist. 2022, 9, 183–193.

36. Hrehova, S.; Knapčíková, L. Design of Mathematical Model and Selected Coefficient Specifications for Composite Materials Reinforced with Fabric from Waste Tyres. Materials 2023, 16, 5046.

37. Isametova, M.E.; Nussipali, R.; Martyushev, N.V.; Malozyomov, B.V.; Efremenkov, E.A.; Isametov, A. Mathematical Modeling of the Reliability of Polymer Composite Materials. Mathematics 2022, 10, 3978.

38. Filina-Dawidowicz, L.; Sęk, J.; Trojanowski, P.; Wiktorowska-Jasik, A. Conditions of Decision-Making Related to Implementation of Hydrogen-Powered Vehicles in Urban Transport: Case Study of Poland. Energies 2024, 17, 3450.

| Metric | 4 coefficients | 5 coefficients | 6 coefficients | 7 coefficients |
|---|---|---|---|---|
| R2 | 0.855315 | 0.929122 | 0.942937 | 0.94663 |
| adjR2 | 0.854916 | 0.928877 | 0.942700 | 0.946371 |
| SSE | 0.675565 | 0.330945 | 0.266441 | 0.249196 |
| RMSE | 0.021555 | 0.015087 | 0.013537 | 0.013091 |
| MSE | 0.000465 | 0.000228 | 0.000183 | 0.000171 |
| MAE | 0.005992 | 0.006701 | 0.004885 | 0.007245 |
| MAPE | 0.013936 | 0.014061 | 0.011719 | 0.013945 |
| SMAPE | 0.000442 | 0.000364 | 0.000343 | 0.000333 |

| Metric | 4 coefficients | 5 coefficients | 6 coefficients | 7 coefficients |
|---|---|---|---|---|
| R2 | 0.855315 | 0.929122 | 0.942937 | 0.94663 |
| adjR2 | 0.854916 | 0.928877 | 0.942700 | 0.946371 |
| AIC | −2223.45 | −2672.05 | −2806.93 | −2847.17 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hrehova, S.; Antosz, K.; Husár, J.; Vagaska, A. From Simulation to Validation in Ensuring Quality and Reliability in Model-Based Predictive Analysis. Appl. Sci. 2025, 15, 3107. https://doi.org/10.3390/app15063107
