1. Introduction

Generative Artificial Intelligence (GAI), a rapidly developing branch of artificial intelligence closely tied to natural language processing [1], has gained significant traction in education because of its ability to interpret complex inputs and generate human-like responses [2]. While GAI offers notable advantages, its integration into educational settings is not without challenges. For example, not all learners benefit equally from AI-assisted interactions, and excessive dependence on mechanized tools can weaken a sense of community and adversely affect academic performance [3]. As a result, researchers are increasingly focused on identifying effective strategies for applying GAI in technology-supported education and on understanding its impact on learning outcomes and learner attitudes [4]. Studying GAI’s effectiveness in education is further complicated by the diversity of pedagogical methods and the varied ways in which GAI tools are used.

Project-based learning (PBL), with its emphasis on diverse learning objectives, often requires students to complete authentic, complex projects that foster deep thinking. This process frequently requires the support of information and communication technology. As technology advances and resources are optimized, PBL has increasingly moved from traditional face-to-face settings to online environments [5]. However, this shift has introduced new challenges for online PBL, including a lack of personalized instruction [6], limited access to resources [7], and insufficient feedback mechanisms [8].

To address these challenges, integrating effective scaffolding tools becomes essential [9]. Intelligent aids such as GAI can provide scaffolding through features like instant feedback and personalized instruction, helping students better understand and master knowledge during the PBL process [10]. PBL therefore serves not only as an effective teaching strategy but also as a valuable setting for studying the advantages and limitations of GAI in learning. Through PBL, researchers can observe and analyze how GAI operates in real learning scenarios and how it affects students’ behaviors and learning effectiveness.

Given its emphasis on student initiative and practical engagement [11], PBL provides an ideal framework for assessing the educational value and applications of GAI. Some studies have begun examining this relationship. For instance, Ramos found that incorporating GAI enhances student engagement, teamwork, and professional skills in project-oriented learning [12]. However, research on integrating large language model (LLM) plug-ins into online PBL platforms remains limited, which is a critical gap in the literature.

To address this gap, this study pursues two primary objectives. First, we developed an online PBL platform integrated with a GAI-assisted plug-in to explore the interactions between students and GAI. Understanding these interactions is crucial for uncovering patterns and trends in learning behaviors that can inform the design and improvement of GAI-supported educational platforms [13]. Second, we constructed a GAI-PBL learning model and conducted experimental analyses of students’ learning behaviors across three groups. This approach allowed us to assess the impact of GAI on learners’ methods, cognitive processes, and learning effectiveness within the PBL framework.

The study seeks to answer the following research questions:

Can GAI facilitate PBL, and can PBL in turn provide a research avenue for studying GAI?

How does GAI influence students’ learning behaviors, cognitive skills, and learning effectiveness?

2. Literature Review

2.1. Challenges in Implementing PBL

Extensive research has highlighted the numerous benefits of PBL, such as fostering entrepreneurial skills by enhancing decision-making abilities, encouraging exploration and experimentation, promoting the exchange of ideas, and building trust among participants [14]. PBL has also been recognized as an effective approach for improving learners’ English language skills and other competencies [15].

Despite these advantages, the practical implementation of PBL faces several obstacles. One significant issue is educators’ hesitation to adopt PBL owing to concerns about its practicality and effectiveness. This reluctance is often driven by the complexity of applying PBL in real-world settings and the additional demands it places on teachers [16]. For students, PBL can be a double-edged sword. While it can cultivate critical skills and increase engagement, it requires substantial time management, self-discipline, and adequate foundational knowledge of the subject matter to be effective [17]. These demands may create barriers for learners, particularly those lacking prior experience with independent or self-directed learning.

Moreover, PBL is highly resource-dependent, which poses significant challenges in resource-constrained educational environments such as community colleges. Successful implementation of PBL often requires more time, preparation, and motivation from both educators and students than traditional teaching methods do [18]. In such settings, limited access to the necessary materials, technology, and institutional support may further restrict the viability of PBL as a pedagogical approach.

2.2. The Development of GAI in Conjunction with PBL

In recent years, the rapid advancement of GAI technology has significantly transformed traditional teaching paradigms, and its application in education has become increasingly widespread. Since OpenAI released ChatGPT (initially built on the GPT-3.5 large language model), the use of GAI in education has expanded to encompass several dimensions. These include fostering GAI literacy and enhancing digital competence among learners and educators [19], exploring effective methods of leveraging GAI in teaching and learning [20], analyzing its impact on students and teachers [21], and addressing its ethical and moral implications [22].

When integrating GAI with PBL, existing research has predominantly focused on two main areas. The first uses PBL as a framework for advancing AI education. For example, project-based curricula have been designed to cover diverse GAI topics and applications, emphasizing hands-on projects that deepen students’ understanding of AI theory and practice, and gamification strategies have been employed within PBL to improve learning outcomes in AI courses [23]. The second area explores the role of GAI as a pedagogical aid for optimizing PBL instruction. Studies in this area examine how GAI can improve classroom efficiency, provide personalized support, and enhance learning outcomes, for instance by using machine learning models that analyze dialogue data to assess student engagement and performance at scale.

While these studies underscore the value of integrating GAI with PBL, the research focus has been limited to these two mainstream trends. There remains a noticeable gap in exploring how GAI can be integrated more comprehensively into PBL, not merely as a tool for instruction or discipline-specific development but as an integral component of the PBL process itself. Specifically, the potential of GAI to function as a resource that supports deep learning and facilitates student-driven exploration within PBL environments remains underexplored.

Furthermore, there is a scarcity of research on leveraging GAI as a comprehensive learning platform that seamlessly integrates with PBL to create a student-centered, interactive, and highly personalized learning experience. Such an approach could revolutionize PBL by combining the strengths of GAI and project-based frameworks to foster more profound engagement, adaptability, and individualization in learning.

2.3. Selection of Interaction Behaviour Analysis Methods

In the initial screening phase of the study, several common pattern mining algorithms were compared, including, but not limited to, Lag Sequential Analysis (LSA), Generalized Sequential Patterns (GSP), Sequential PAttern Discovery using Equivalence classes (SPADE), and the Event Tree-based Mining Algorithm (EP-Tree). Each of these algorithms performs well within its intended domain of application. However, the central objective of this study is to examine the interaction behavior between learners and GAI, treated here as an intelligent assisted-instruction system or platform. Algorithm selection therefore emphasized sensitivity and accuracy in capturing and analyzing such interaction behaviors.

Whilst GSP [24], SPADE [25], and EP-Tree [26] are effective in general pattern mining tasks, they are less well suited to the requirements of this study in certain scenarios. For instance, GSP prioritizes global pattern mining over local interaction details [27], while SPADE can face computational efficiency challenges on large-scale datasets [28]. Additionally, although EP-Tree handles complex event sequences well, it may be less intuitive and less effective than LSA in capturing subtle time-lag effects.

More importantly, LSA has previously been used to investigate shared cognitive development in online collaborative learning [29] and to examine visual behavioral patterns in online learning activities [30]. Because the present study was likewise conducted on an online learning platform, we ultimately chose LSA as the research method.

LSA offers distinctive advantages in elucidating interdependencies in behavioral sequences and investigating behavioral patterns. However, it concentrates primarily on lagged relationships between behaviors [31], whereas the relationships among behaviors in authentic scenarios may be more intricate and diverse. Consequently, in addition to LSA, we employed a controlled experimental design and visual analysis methods as supplementary techniques, with the objective of capturing and analyzing the multidimensional relationships among behaviors more comprehensively.

It is noteworthy that, in contrast to previous studies that employ LSA to examine a range of aspects related to online learning, this research is distinctive in its focus on the interactional behaviors between learners and GAI. By capturing these specific behaviors, the study seeks to reveal how learners engage with GAI as an interactive partner, shedding light on their problem-solving processes and the role of GAI in facilitating learning within the context of PBL.

3. Methodology

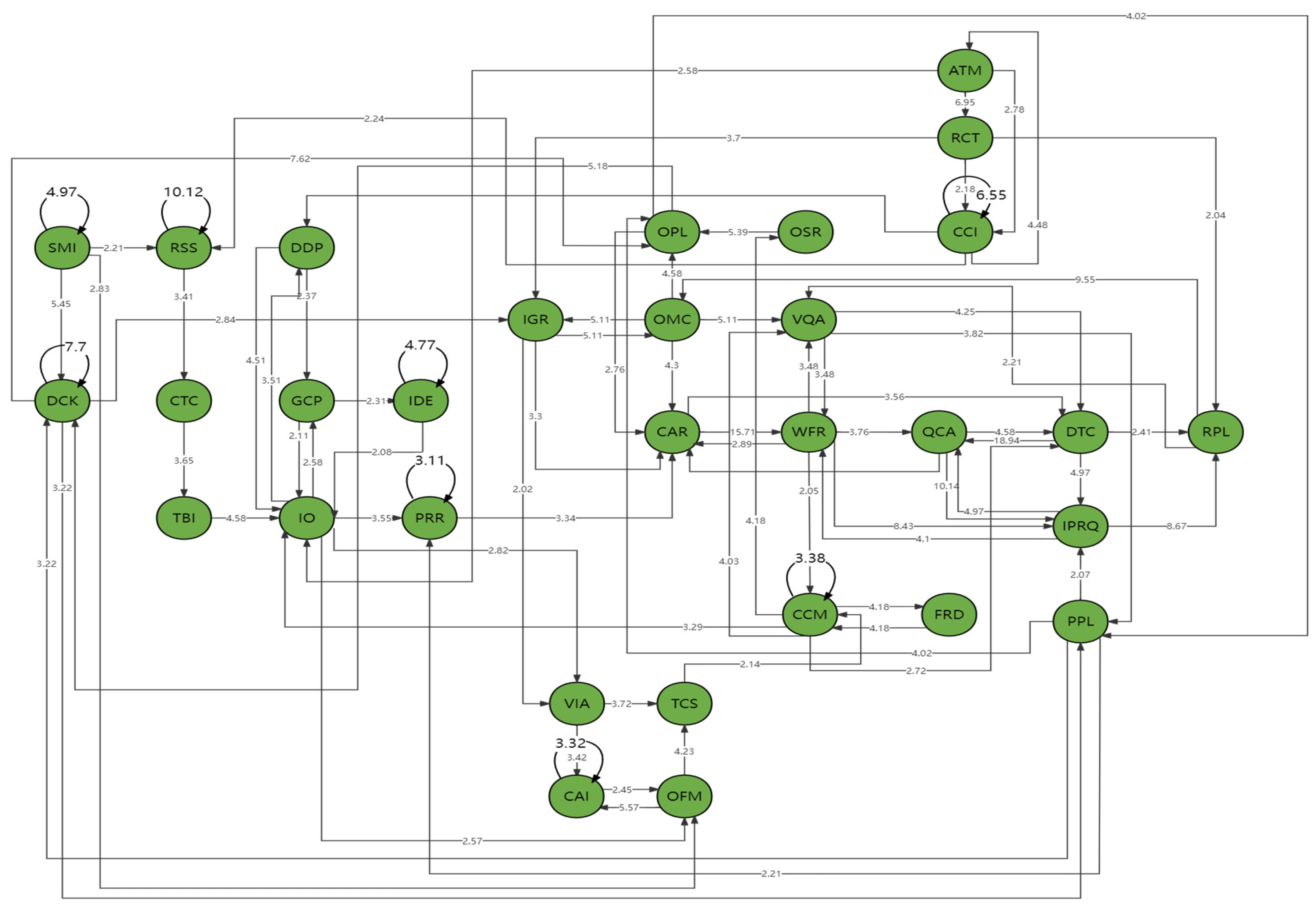

This study conducted a one-year PBL course at a university in Macau using a self-developed GAI-PBL online learning platform. Detailed records of students’ learning behaviors were collected throughout the course. These records formed the basis for three in-depth analyses aimed at understanding the characteristics of students’ learning behaviors, cognitive and thinking processes, and overall learning effectiveness in a GAI-assisted PBL environment. The behavioral data were analyzed primarily with Lag Sequential Analysis (LSA), supplemented by several other research methods; the structure of this analytical framework is shown in Figure 1.

As Figure 1 shows, the study was completed in three stages: Data Collection and Processing, Pattern Discovery, and Analytical Perspective.

At the Data Collection and Processing stage, we developed a GAI system integrated with the PBL process. The PBL-GAI platform, an online learning environment with an embedded GAI plug-in, served as the primary data source for this study. For data analysis, five sets of behavior recordings were randomly selected and independently reviewed by two experts to define and categorize student behaviors. A sequential behavior list was then developed, and the behaviors were labeled at regular intervals using GSEQ 5.1 software. We then carried out three sets of data analyses to examine the dynamic correlations, differences, and impact of students’ behavior during the learning process.

The first set of analyses employed Lag Sequential Analysis (LSA) to construct behavioral sequence diagrams that mapped learners’ interactions during the GAI-PBL process. Using GSEQ 5.1 software, a frequency table of behavioral transitions and an adjusted residual table were generated. The Z-score (adjusted residual) served as the key statistic for determining the significance of behavioral sequences: sequences exceeding a Z-score threshold of 1.96 were identified as significant, revealing non-random patterns and regularities in the learning process [32].
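The adjusted residual table can be sketched as follows. This is a minimal illustration, assuming lag-1 transitions and the standard adjusted-residual formula used in LSA: with observed count n_ij for behavior i followed by behavior j, row total r_i, column total c_j, and N transitions in total, the expected count is e_ij = r_i c_j / N and z_ij = (n_ij − e_ij) / sqrt(e_ij (1 − r_i/N)(1 − c_j/N)). GSEQ 5.1 offers further options (for example, how consecutive repeated codes are handled), so its numbers may differ from this sketch; the toy sequence is hypothetical.

```python
from collections import Counter
from math import sqrt


def transition_counts(codes):
    """Count lag-1 transitions (given behavior -> next behavior)."""
    return Counter(zip(codes, codes[1:]))


def adjusted_residuals(codes):
    """Adjusted residual (z) for every given->target pair of observed codes."""
    counts = transition_counts(codes)
    total = sum(counts.values())
    row_totals, col_totals = Counter(), Counter()
    for (given, target), n in counts.items():
        row_totals[given] += n
        col_totals[target] += n
    z_scores = {}
    for given in row_totals:
        for target in col_totals:
            expected = row_totals[given] * col_totals[target] / total
            variance = (expected
                        * (1 - row_totals[given] / total)
                        * (1 - col_totals[target] / total))
            if variance > 0:  # skip degenerate rows/columns
                observed = counts[(given, target)]  # 0 if never observed
                z_scores[(given, target)] = (observed - expected) / sqrt(variance)
    return z_scores


def significant_transitions(codes, threshold=1.96):
    """Keep transitions that occur significantly more often than chance."""
    return {pair: z for pair, z in adjusted_residuals(codes).items() if z > threshold}


example = ["WFR", "CAR", "VQA"] * 4  # hypothetical toy sequence of behavior codes
print(sorted(significant_transitions(example)))
# → [('CAR', 'VQA'), ('VQA', 'WFR'), ('WFR', 'CAR')]
```

Only positive residuals above the cutoff are kept, matching the text's focus on transitions that occur more often than expected by chance.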

The second analysis compared behavioral patterns from the first and second semesters in three randomly selected groups. We evaluated the frequency of behavioral occurrences and identified the top five most frequent behaviors in each semester, providing insights into how learners’ interactions evolved over time.
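The semester comparison amounts to ranking behavior codes by frequency and comparing the two top-five lists. The sketch below assumes each semester's coded behaviors are available as a flat list; the code counts in the usage line are hypothetical, and the "entered/dropped/retained" split is an illustrative way to summarize the change, not necessarily how the study reported it.

```python
from collections import Counter


def top_behaviors(codes, k=5):
    """The k most frequent behavior codes with their counts.

    Counter.most_common orders equal counts by first occurrence.
    """
    return Counter(codes).most_common(k)


def compare_semesters(first, second, k=5):
    """Summarize how the top-k behaviors changed between two semesters."""
    top_first = {code for code, _ in top_behaviors(first, k)}
    top_second = {code for code, _ in top_behaviors(second, k)}
    return {
        "entered": sorted(top_second - top_first),   # new in the later top k
        "dropped": sorted(top_first - top_second),   # fell out of the top k
        "retained": sorted(top_first & top_second),  # in both top-k lists
    }


# Hypothetical counts; "B1" stands for any other coded behavior.
sem1 = ["WFR"] * 6 + ["CAR"] * 5 + ["VQA"] * 4 + ["ATM"] * 3 + ["CCM"] * 2 + ["B1"]
sem2 = ["VQA"] * 7 + ["CCM"] * 6 + ["CAR"] * 5 + ["B1"] * 4 + ["ATM"] * 3 + ["WFR"]
print(compare_semesters(sem1, sem2))
```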

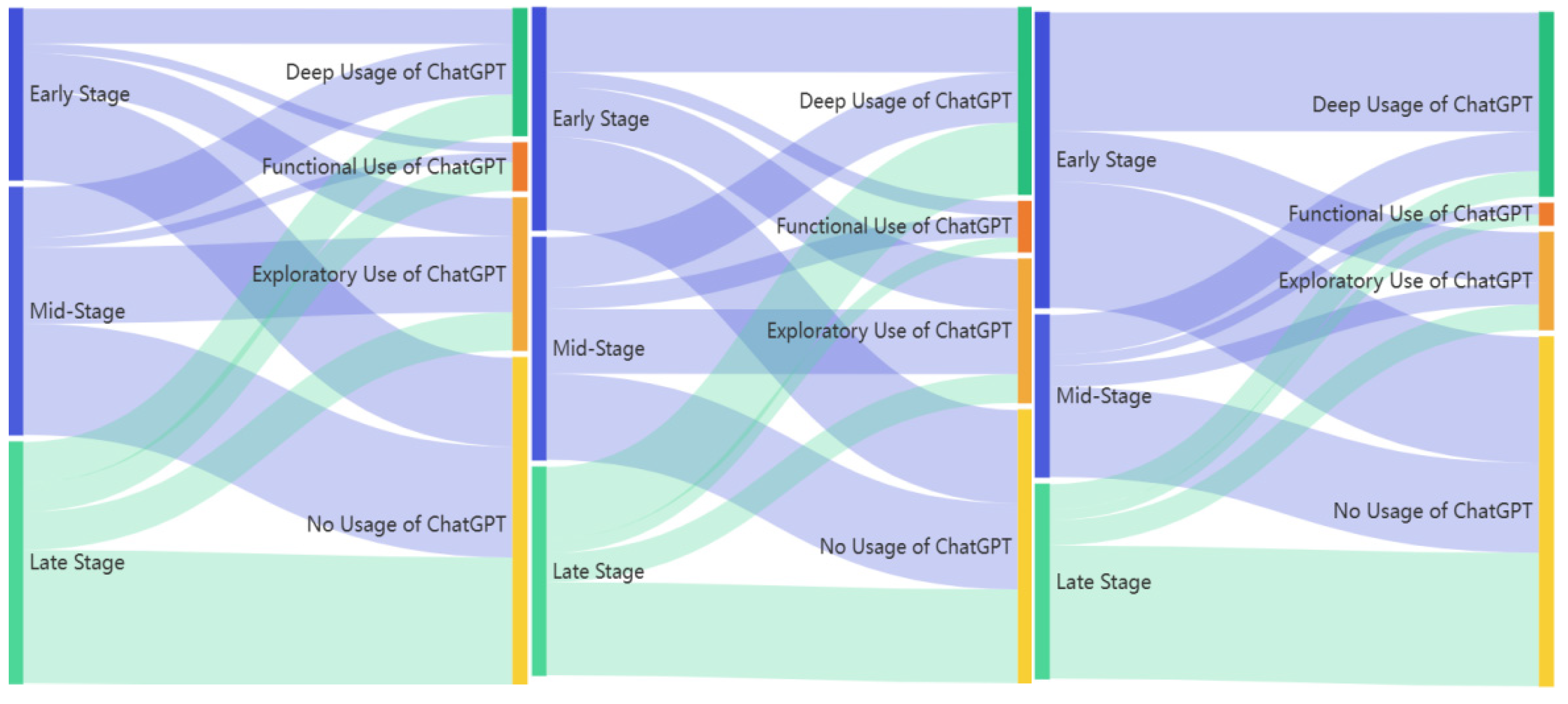

For the third analysis, learners were divided into high- and low-performance groups based on final semester grades. Grades were derived from evaluations of project completion, innovativeness, and process execution. Learning behaviors were further categorized based on their degree of GAI usage into four distinct types: “Deep Use of GAI”, “Functional Use of GAI”, “Exploratory Use of GAI”, and “No Use of GAI”.

Behavioral distributions across three stages of the project (early, middle, and late) were analyzed. Additionally, a focused study of the interaction processes between GAI and learners during the final session was conducted for each performance group. These interactions were evaluated across five dimensions: “Question Precision”, “Keyword Effectiveness”, “Dialogue Rounds”, “Question Innovativeness”, and “Question Complexity”.
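The stage-wise distribution can be sketched as the share of each GAI-use category within the early, middle, and late parts of a coded sequence. The text does not state how stage boundaries were drawn, so this sketch assumes, hypothetically, equal thirds of the sequence; the code-to-category mapping in the usage line is also hypothetical.

```python
from collections import Counter

CATEGORIES = (
    "Deep Use of GAI",
    "Functional Use of GAI",
    "Exploratory Use of GAI",
    "No Use of GAI",
)


def stage_distribution(codes, category_of):
    """Share of each GAI-use category in the early, middle, and late stages.

    Assumption: stages are equal thirds of the coded sequence.
    """
    n = len(codes)
    bounds = (0, n // 3, 2 * n // 3, n)
    distribution = {}
    for stage, start, end in zip(("early", "middle", "late"), bounds, bounds[1:]):
        counts = Counter(category_of[code] for code in codes[start:end])
        size = end - start
        distribution[stage] = {
            cat: counts[cat] / size if size else 0.0 for cat in CATEGORIES
        }
    return distribution


# Hypothetical codes: NG = no GAI use, EX = exploratory use, DP = deep use
mapping = {"NG": "No Use of GAI", "EX": "Exploratory Use of GAI", "DP": "Deep Use of GAI"}
print(stage_distribution(["NG", "NG", "EX", "EX", "DP", "DP"], mapping))
```

Running this separately on the high- and low-performance groups gives the side-by-side comparison described above.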

Meanwhile, in order to comprehensively assess the application value of GAI plug-ins in educational scenarios, we conducted a cost–benefit analysis of the collected data.

We analyzed costs in three dimensions (learning cost, technology deployment cost, and time cost) and, correspondingly, benefits in three dimensions (learning efficiency, learning quality, and innovation ability), in order to systematically assess the input–output relationship of GAI plug-ins in student learning.

At the Pattern Discovery stage, the primary objective was to identify meaningful patterns within the collected data, focusing on two key aspects: Significant Relationships and Behavioral Sequences. This phase aimed to uncover hidden structures and dynamics in student learning behaviors and their interactions with the GAI-enhanced PBL platform.

3.1. Participants

The study involved 21 PBL groups, each consisting of 5–6 university students enrolled in the Faculty of Education at a university in Macau. The course they took explored current hotspots in teaching and learning, and the learners already had knowledge and practical experience of project-based learning. Prior to the study, participants completed a two-week training program to ensure proficiency in using both the AI dialogue model and the PBL platform. Over the course of a year, participants worked in their groups in weekly sessions on the platform, completing a new PBL task each week. During these tasks, participants used the platform’s AI plug-in, and their activities were recorded via screen capture (excluding audio) to document their interactions and learning behaviors.

3.2. Procedure

The experiment commenced in September 2023 and spanned one academic year (two semesters). The implementation of the study followed a structured timeline:

Weeks 1–2 (Preparation Phase): During the first two weeks of the semester, students in the experimental group underwent training on the PBL-GAI platform and the GAI plug-in. Additionally, students were organized into learning groups, each consisting of 5–6 members.

Weeks 3–8 (Experimental Phase): The formal experimental phase began in week 3 and continued until week 8. Weekly PBL activities were conducted on the PBL-GAI platform, with each session lasting 3 h. The structure of each session was as follows:

1 h: Students engaged in a systematic study using the GAI user guide, facilitated by the instructor.

2 h: Under the instructor’s guidance, students worked in small groups to complete PBL tasks. Throughout these sessions, the instructor provided ongoing support to ensure effective learning.

Second Semester Weeks 1–6: The experimental design continued into the second semester, with PBL tasks continuing on a weekly basis, following the same schedule as in the first semester.

Week 7 (Final Assessment): The final assessment occurred in week 7, taking the form of an independent project task on the PBL-GAI platform. This task served as a comprehensive evaluation of the students’ learning. Upon completion, the experimental cycle was concluded, and a total of 13 weeks of continuous learning data were collected.

Throughout the experimental period, the researcher recorded various learning behavior indicators, including student login frequency, task completion, GAI usage, collaborative interaction, and other relevant metrics, via the backend system of the PBL-GAI platform. Additionally, the instructor’s guidance was documented through classroom observation sheets to ensure consistency and standardization in the experimental process.

4. Material

4.1. Online Platforms and Plug-Ins Used in the Experiment (GAI-PBL)

PBL emphasizes collaborative and practical learning, requiring students to actively engage in group discussions, problem definition, exploration, solution design, implementation, presentation, and reflection [33]. GAI, with its advanced capabilities in dialogue interaction [34], content generation, and intelligent recommendations, plays a pivotal role in supporting this process.

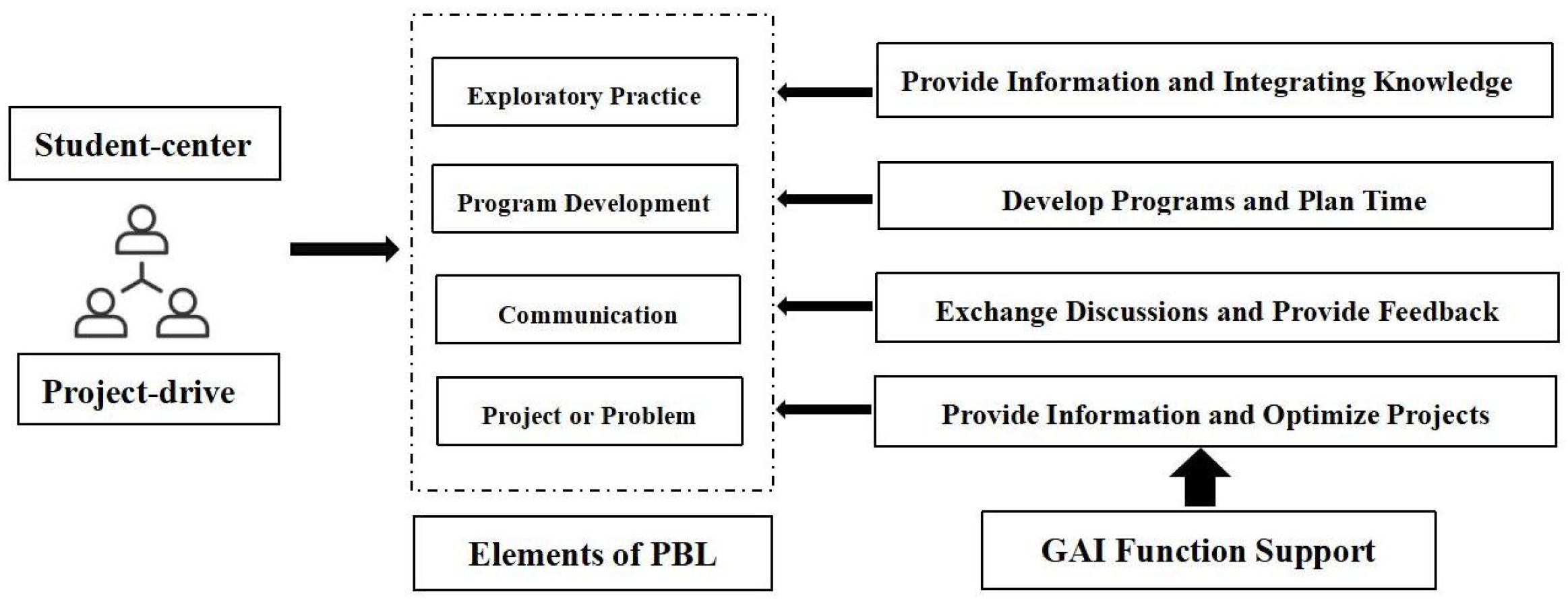

We developed a model to assess how GAI can enhance PBL by facilitating interactions and improving learner effectiveness across key stages of the learning process:

Group Discussions: GAI functions as a virtual discussion partner, enhancing or supplementing group discussions by creating a dynamic and continuous communication environment. It helps organize ideas, provide feedback, and foster collaboration;

Problem Definition and Exploration: GAI broadens learners’ research perspectives by offering background information and access to relevant case studies, aiding in the formulation of well-defined problems;

Solution Design and Implementation: GAI assists in drafting initial solutions, monitoring project progress, and refining designs based on iterative feedback. Its dynamic adaptability allows for on-the-fly adjustments to evolving project needs;

Results Presentation and Reflection: GAI supports learners by generating presentation materials and providing personalized suggestions for critical reflection and improvement.

This integration of GAI into PBL not only improves learning efficiency and quality but also creates a more flexible and enriched educational experience. By acting as a versatile collaborator, GAI helps cultivate innovative thinking and problem-solving skills among learners, aligning with the pedagogical goals of PBL.

After analyzing this GAI-PBL learning approach (as shown in

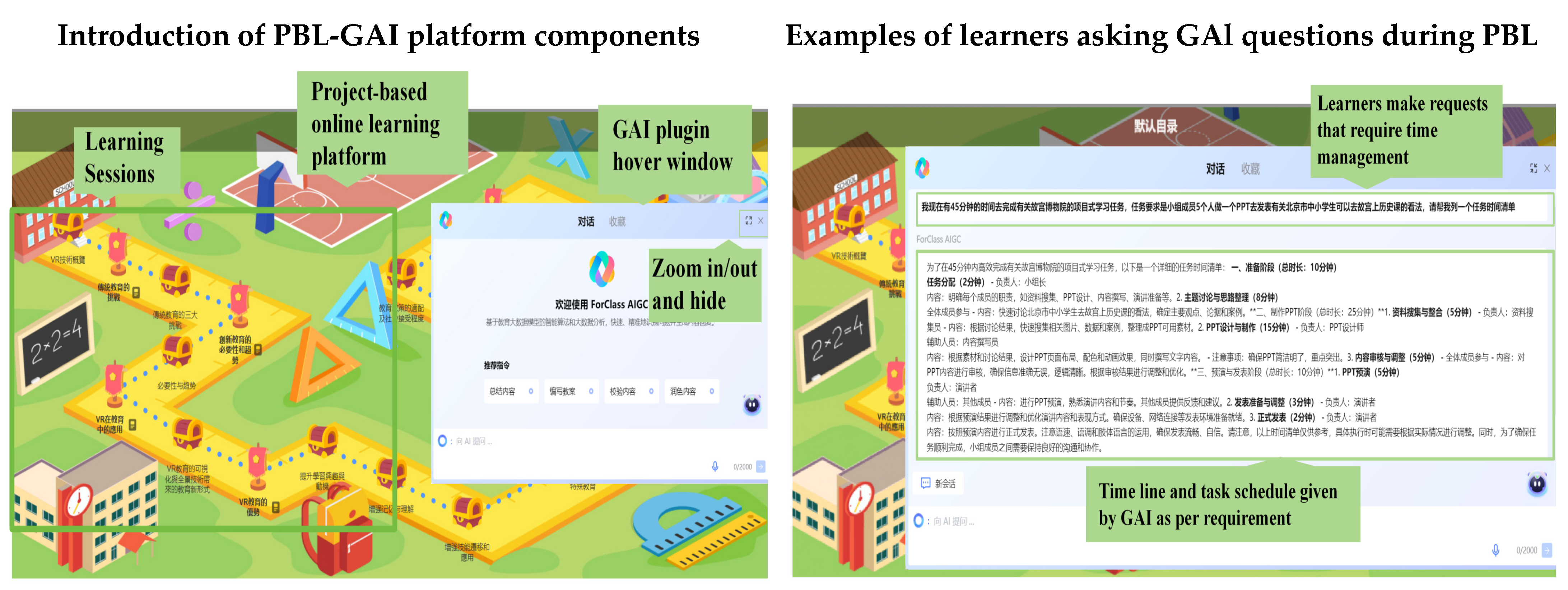

Figure 2) and modeling that learning, we found that the path was feasible. At the same time, to ensure the accuracy and reliability of learning outcomes, this study carefully evaluated the state-of-the-art GAI tools. We identified that variations in functionality, user interface design, and ease of use among these tools could significantly influence students’ learning experiences and experimental results. Based on this analysis, we developed a custom online PBL platform, the Nuclear Valley Future Course Platform, and integrated a dialogue plug-in called ForClass. This plug-in, built on the Educational Big Data Model AI (as shown in

Figure 3), is designed to support students throughout their PBL tasks. For example, learners can request the GAI to create detailed timelines for their projects, enabling streamlined task completion and project management (as shown in

Figure 3).

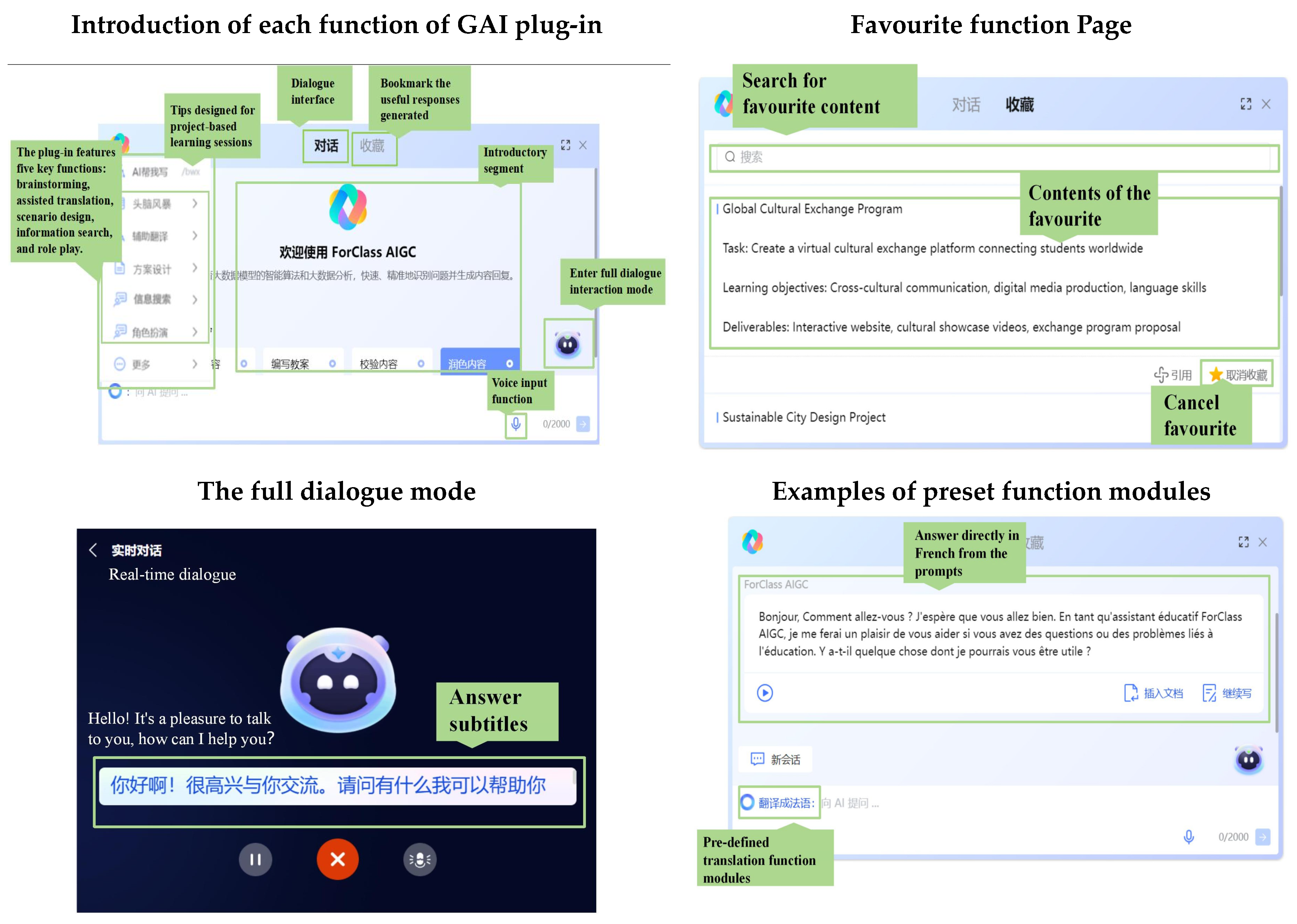

The ForClass plug-in, designed after a thorough analysis of the PBL learning process, is integrated into the PBL platform as a GAI tool. It not only incorporates the core functions of GAI but also improves upon them based on the specific needs of PBL, enhancing usability for students. This improvement is achieved through preset function modules and intelligent interaction designs tailored to PBL learning characteristics, making the plug-in more accessible and effective for project-based tasks.

The plug-in features five key functions: brainstorming, assisted translation, scenario design, information search, and role play. These functions are designed to be selected by learners at different stages of their PBL tasks. This modular setup ensures that the GAI can better understand learners’ needs and provide accurate, targeted information, while also saving time on information retrieval and analysis. The functions are implemented through the Prompts project, where pre-defined prompts correspond to each module’s characteristics within the PBL process. Learners can select prompts embedded in the platform to ask specific questions, facilitating more accurate and contextually relevant responses from the GAI. For example, in translation tasks, learners can input text without specifying the target language, as the system automatically defaults to French with the preset prompt: “Please translate the user input directly into French”.
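The preset-prompt mechanism can be sketched as a lookup from the selected module to a fixed instruction that is sent alongside the learner's raw input. This is a hypothetical illustration, not ForClass's implementation: only the translation prompt is quoted in the text, the other four prompt strings are placeholders, and the `role`/`content` message layout is a common chat-API convention assumed here.

```python
# Module names follow the text; only the translation prompt is quoted there.
# The other prompt strings are hypothetical placeholders.
PRESET_PROMPTS = {
    "brainstorming": "Help the user brainstorm ideas for the current project task.",
    "assisted translation": "Please translate the user input directly into French",
    "scenario design": "Help the user design a realistic scenario for the project.",
    "information search": "Find and summarize information relevant to the user's question.",
    "role play": "Play the role described by the user and stay in character.",
}


def build_messages(module, user_input):
    """Pair the selected module's preset prompt with the learner's raw input."""
    try:
        system_prompt = PRESET_PROMPTS[module]
    except KeyError:
        raise ValueError(f"unknown module: {module!r}") from None
    return [
        {"role": "system", "content": system_prompt},  # fixed, module-specific
        {"role": "user", "content": user_input},       # learner text, unchanged
    ]


# The learner types only the text; the target language comes from the preset.
print(build_messages("assisted translation", "Project timeline for week 3"))
```

Keeping the instruction in the preset rather than in the learner's message is what lets learners omit details such as the target language.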

Additionally, the ForClass plug-in includes a voice input feature, significantly lowering the entry barrier for users. This feature is particularly useful for mobile devices or situations requiring quick input, offering learners greater flexibility and convenience. Voice input improves interaction efficiency, enabling users to seamlessly engage with the platform in a variety of learning environments.

Based on user feedback, we also introduced a full dialogue mode that allows for real-time interaction between learners and the GAI. As shown in Figure 4, in this mode the GAI can be considered a dynamic participant in the PBL process. This feature enhances the collaborative nature of PBL by providing continuous, interactive support.

To further optimize the learning experience, the plug-in includes a content favorite function (see Figure 4), which allows learners to bookmark valuable responses generated by the GAI. This feature enables easy access to saved content from past interactions, helping students organize and retrieve important information more effectively. By building a personalized knowledge base, students can systematically accumulate and use learning resources, thus improving learning efficiency and effectiveness.

The ForClass plug-in is powered by advanced GAI technologies, with security and privacy as top priorities. All user interactions, including voice inputs and chat histories, are encrypted to ensure data protection and prevent unauthorized access.

4.2. Action Vocabulary Generation

This study extends the theoretical framework of the Flanders Interaction Analysis System (FIAS) to systematically evaluate how accurately behavioral coding captures teaching and learning behaviors in a PBL context [35]. Expert interviews were conducted to refine the coding framework, ensuring its theoretical relevance and practical applicability. Feedback from these interviews informed a careful revision of the coding system, improving its precision and usability.

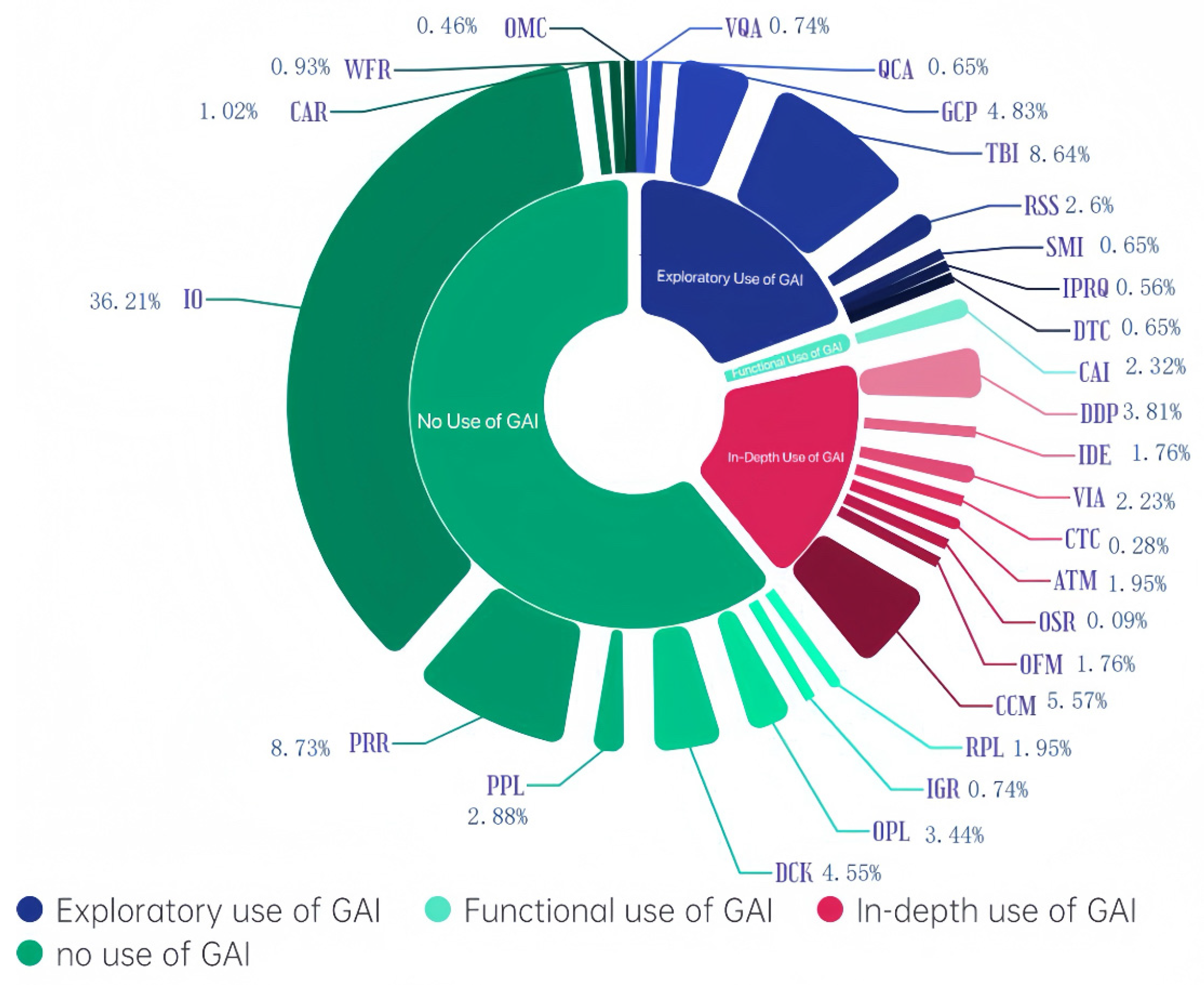

To validate and improve the coding framework, a back-to-back pre-coding procedure was employed. Two independent researchers coded the same sample videos to identify discrepancies in the recognition of teaching behaviors. This iterative process led to the definition of 31 distinct behaviors, categorized into four groups based on the degree of GAI utilization: “Deep Use of GAI”, “Functional Use of GAI”, “Exploratory Use of GAI”, and “No Use of GAI”.

Table 1 provides detailed descriptions and examples for each behavioral category.

The analysis of learning videos yielded a total of 1111 raw codes, which were subsequently subjected to reliability testing. The coded data were imported into SPSS 27.0 for inter-rater consistency evaluation, with kappa values exceeding 0.75 across all categories. This result demonstrates robust inter-rater reliability, ensuring the consistency and validity of the coded data for subsequent analysis.
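The text reports kappa values above 0.75 from SPSS 27.0 but does not name the statistic. Assuming it is Cohen's kappa for the two coders, the value is κ = (p_o − p_e) / (1 − p_e), where p_o is the observed share of items both coders labeled identically and p_e is the agreement expected from each coder's marginal code frequencies. A minimal sketch with hypothetical category labels:

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who coded the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over codes
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_expected == 1:
        # Both raters used one identical code throughout; kappa is undefined
        return float("nan")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical labels: DU/FU/EU/NU = deep/functional/exploratory/no use of GAI
coder_1 = ["DU", "DU", "FU", "EU", "NU", "FU"]
coder_2 = ["DU", "FU", "FU", "EU", "NU", "FU"]
print(round(cohens_kappa(coder_1, coder_2), 3))
```

Because kappa discounts chance agreement, it is a stricter check than raw percent agreement when some categories dominate the coded data.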

Behavior Abbreviations and Tagging

Each of the 31 identified behaviors was assigned a unique abbreviation to streamline the tagging and categorization process during analysis. This standardized approach facilitated efficient labeling and improved the clarity of the data, enabling the precise identification of patterns in students’ interactions with GAI during PBL activities.
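The tagging step can be sketched as a lookup table from behavior names to abbreviations, with checks that every abbreviation is unique and every recorded behavior has a code. Only the five name–code pairs that appear in Section 5.1 are included; the remaining codes from Table 1 are omitted, and the helper names are hypothetical.

```python
from collections import Counter

# Name-code pairs as they appear in Section 5.1; the rest of Table 1 is omitted.
ABBREVIATIONS = {
    "Referencing GAI Text": "ATM",
    "Varying Question Approaches": "VQA",
    "Continue Context Memory": "CCM",
    "Waiting for Responses": "WFR",
    "Interpreting and Filtering AIGC Answers": "CAR",
}


def check_unique(abbreviations):
    """Raise if two behaviors share an abbreviation."""
    counts = Counter(abbreviations.values())
    duplicates = sorted(code for code, n in counts.items() if n > 1)
    if duplicates:
        raise ValueError(f"duplicate abbreviations: {duplicates}")


def encode(events, abbreviations):
    """Convert recorded behavior names into a sequence of codes."""
    unknown = [event for event in events if event not in abbreviations]
    if unknown:
        raise ValueError(f"untagged behaviors: {unknown}")
    return [abbreviations[event] for event in events]


check_unique(ABBREVIATIONS)
print(encode(["Waiting for Responses", "Interpreting and Filtering AIGC Answers"], ABBREVIATIONS))
```

The resulting code sequence is the input format that lag sequential analysis works on.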

4.3. Classification of Behaviors Defined

The 31 identified behaviors were categorized into four distinct levels of GAI utilization, ranging from the most to the least extensive use:

Deep Use of GAI: Learners in this category demonstrated frequent and advanced use of GAI tools during PBL. This included tasks requiring sophisticated logical reasoning, conducting multi-round dialogues, and leveraging advanced functionalities for problem-solving and project enhancement;

Functional Use of GAI: This level reflects a moderate application of GAI, where learners utilized tools for specific functional purposes such as information retrieval, data organization, and completing foundational tasks;

Exploratory Use of GAI: Learners in this group engaged in limited or initial interactions with GAI, often experimenting with its capabilities for creative brainstorming or broadening their perspectives. This category represents a nascent stage of GAI engagement aimed at stimulating ideas and exploring possibilities;

No Use of GAI: This classification pertains to learners who did not interact with GAI tools during the PBL activities, relying instead on traditional approaches to learning and collaboration.

Figure 5 illustrates the distribution and proportional representation of these behavioral categories among all coded behaviors. The analysis highlights the varying degrees of GAI integration within the PBL framework, offering insights into the diverse ways learners interact with AI tools and their implications for learning effectiveness.

5. Results

This section presents the findings from three analyses conducted using the behavioral data collected on the GAI-PBL platform. The results provide a comprehensive overview of the impact of GAI on learners’ behavioral patterns, cognitive processes, and learning effectiveness.

The first analysis employed LSA to uncover temporal dependencies and sequential relationships between learner behaviors. LSA, which tests whether one coded behavior follows another more often than chance would predict, revealed significant regularities in students’ actions throughout the experiment. The probability of one behavior immediately following another showed clear patterns, indicating that the integration of GAI influenced learners’ approaches to task completion. These findings suggest that the use of GAI not only enhanced the structure of learning activities but also shaped students’ learning styles and strategies over time.

Long-Term Impact on Behavioral Changes

To assess the sustained influence of GAI on learners, we compared the most prominent behavioral features exhibited during the first semester with those observed in the second semester. This longitudinal comparison revealed marked changes in learners’ behavioral dimensions, particularly in those reflecting cognitive engagement and problem-solving approaches. The GAI-assisted environment appeared to encourage more sophisticated thinking processes, as evidenced by the evolution of behavioral patterns.

Moreover, a detailed examination of the most frequent behaviors in each semester further validated these observations. The shift in behavior frequencies between the two periods underscored GAI’s role in enhancing learners’ cognitive frameworks, reinforcing its impact on developing higher-order thinking skills.

Association Between GAI Utilization and Learning Effectiveness

The third set of analyses focused on the relationship between GAI utilization and learners’ performance. Participants were stratified into performance groups based on their final grades, which encompassed project completion, innovation, and process evaluation.

By analyzing the behavior distribution across different stages of learning (early, mid, and late), as well as the specific use of GAI tools during the final sessions, the study identified a strong correlation between the depth of GAI integration and learning effectiveness. Learners who exhibited frequent and advanced use of GAI—such as leveraging it for multi-round dialogues, critical reasoning, or innovative solutions—consistently outperformed their peers.

Cost–Benefit Comparison

The final set of analyses focused on assessing the cost–benefit relationship of GAI utilization within the PBL learning process. Participants were grouped based on their learning outcomes, which included metrics such as task completion efficiency, learning quality, and innovation performance.

The results highlight that the manner in which learners engaged with GAI, the frequency of its use, and their mastery of its functions were crucial factors in determining their performance outcomes. These findings underscore GAI’s transformative potential in enhancing learning effectiveness and emphasize the need for pedagogical strategies that promote deeper, more strategic use of AI tools.

5.1. GAI Impacts Learners’ Learning Methods

The impact of GAI on learners’ approaches to PBL was examined through LSA, revealing distinct behavioral patterns. The analysis provided insights into how GAI influenced learners’ interactions, critical thinking, and creative engagement during PBL tasks.

Figures 6, 7, 8, and 9 illustrate the findings, emphasizing key behavioral tendencies.

In the LSA diagram, the arrows indicate transitions from one behavior to the next, with the starting point of each arrow representing a specific behavior and the endpoint representing the subsequent behavior. The nodes on the diagram correspond to the specific behaviors exhibited by the learners during the learning process. Statistical significance (Z-scores) is visually represented on the graph, quantifying the significance of each behavioral transition.

The Z-score (adjusted residual) was used as the primary statistical indicator of significance for behavioral sequences in LSA. A Z-score greater than 1.96, the critical value at the 95% confidence level (α = 0.05), indicates that a behavioral transition occurs significantly more often than would be expected by chance (p < 0.05). Only sequences with Z-scores above 1.96 were retained, excluding random fluctuations.
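The link between the 1.96 cutoff and p < 0.05 can be checked directly: for a standard normal z, the two-tailed p-value is 2(1 − Φ(|z|)), which equals erfc(|z|/√2). The sketch below applies this to the Z-scores reported for the key sequences in Section 5.1.1; the helper names are illustrative.

```python
from math import erfc, sqrt

CRITICAL_Z = 1.96  # two-tailed critical value of the standard normal at alpha = 0.05


def two_tailed_p(z):
    """Two-tailed p-value of a standard normal z-score: 2 * (1 - Phi(|z|))."""
    return erfc(abs(z) / sqrt(2))


def is_significant(z, critical=CRITICAL_Z):
    """True when a transition occurs more often than chance at alpha = 0.05."""
    return z > critical


# Z-scores reported for the key sequences in Section 5.1.1
reported = {"CCM -> VQA": 4.03, "WFR -> VQA": 3.48, "WFR -> CAR": 15.71}
for pair, z in reported.items():
    print(f"{pair}: z = {z:.2f}, p = {two_tailed_p(z):.2g}, significant = {is_significant(z)}")
```

The comparison is one-directional (z > 1.96), so strongly negative residuals, which mark transitions that occur less often than chance, are not counted as significant sequences.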

First, we identified and classified all key behavioral sequences exhibited by the learners (as shown in Figure 6). From this dataset, we focused on the sequences most strongly linked to academic engagement and learning behaviors.

Figure 6 presents all significant behavioral sequences identified through LSA. The nodes represent specific learning behaviors, such as “Referencing GAI Text (RCT)” and “Varying Question Approaches (VQA)”, while the directed arrows between nodes indicate transitions between behaviors. The Z-score attached to each arrow measures the statistical significance of that transition. The sequences discussed below were selected for their relevance to the PBL framework and for what they reveal about how learners interact with the AI tool and complete their learning tasks.

Figure 6. Sequential transformation diagram of learner behavior (total).

5.1.1. High Frequency of Question Submission

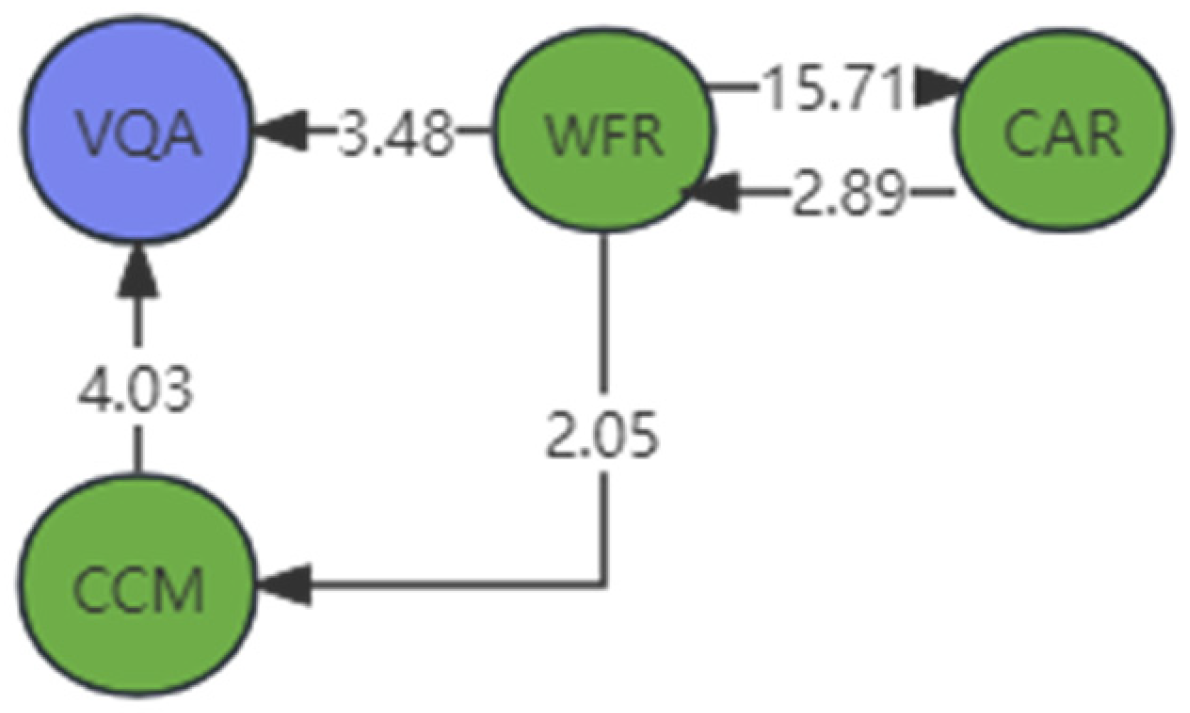

Learners frequently submitted questions during PBL tasks, as shown in Figure 7. This behavior shows how students used GAI’s contextual features, such as Continue Context Memory (CCM), to refine and adjust their questioning strategies, particularly after receiving initial responses from the system. This iterative questioning process involved three main phases: (1) interpreting the information provided by GAI, (2) filtering relevant content, and (3) formulating follow-up inquiries. These behaviors suggest that learners came to trust GAI’s capabilities and relied on it as a responsive resource to support their learning.

Figure 7. Behavioural sequence diagram (explain high frequency of question submission).

The key behavioral sequences include:

Continue Context Memory → Varying Question Approaches (CCM → VQA, Z-score = 4.03);

Waiting for Responses → Varying Question Approaches (WFR → VQA, Z-score = 3.48);

Waiting for Responses → Interpreting and Filtering AIGC Answers (WFR → CAR, Z-score = 15.71).

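These sequences reveal an adaptive interaction between learners and GAI: learners waited for a response, screened it, and then rephrased or redirected their questions. The strongest transition, WFR → CAR (Z-score = 15.71), shows that interpreting and filtering GAI answers followed the wait for a response far more often than chance would predict. Together, the sequences indicate that learners relied on GAI for iterative problem solving.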

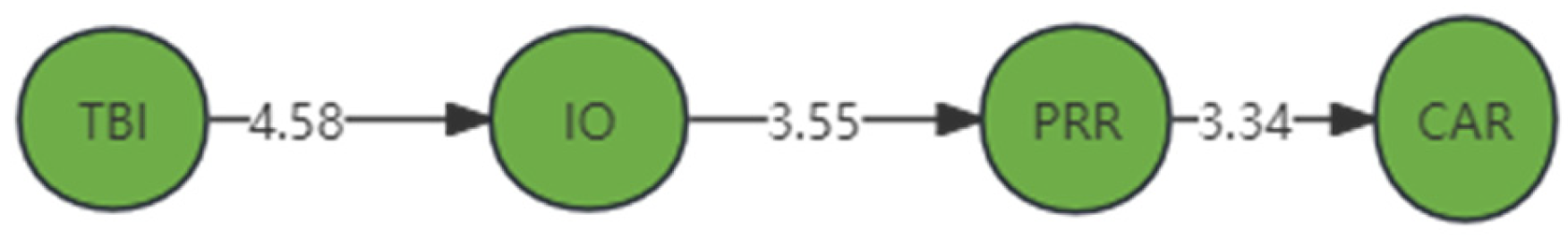

5.1.2. Elevated Levels of Critical Thinking

Figure 8 highlights learners’ critical thinking behaviors, particularly their assessment of GAI’s responses against project requirements. After requesting GAI assistance, students reviewed and analyzed its outputs to determine whether they aligned with PBL objectives. This critical evaluation indicates that learners prioritized their project goals and regarded GAI as a supplementary tool rather than the primary decision maker.

Figure 8. Behavioural sequence diagram (explain elevated levels of critical thinking).

The prominent behavioral sequences include:

Query Background Information → Idle Operation → Project Requirement Review & Replication → Interpreting and Filtering AIGC Answers (TBI → IO → PRR → CAR; TBI → IO, Z-score = 4.58; PRR → CAR, Z-score = 3.34).

These findings suggest that students employed GAI strategically to enhance their problem-solving process, reinforcing its role in fostering analytical thinking.

5.1.3. Creativity and Improvement Through Iterative Engagement

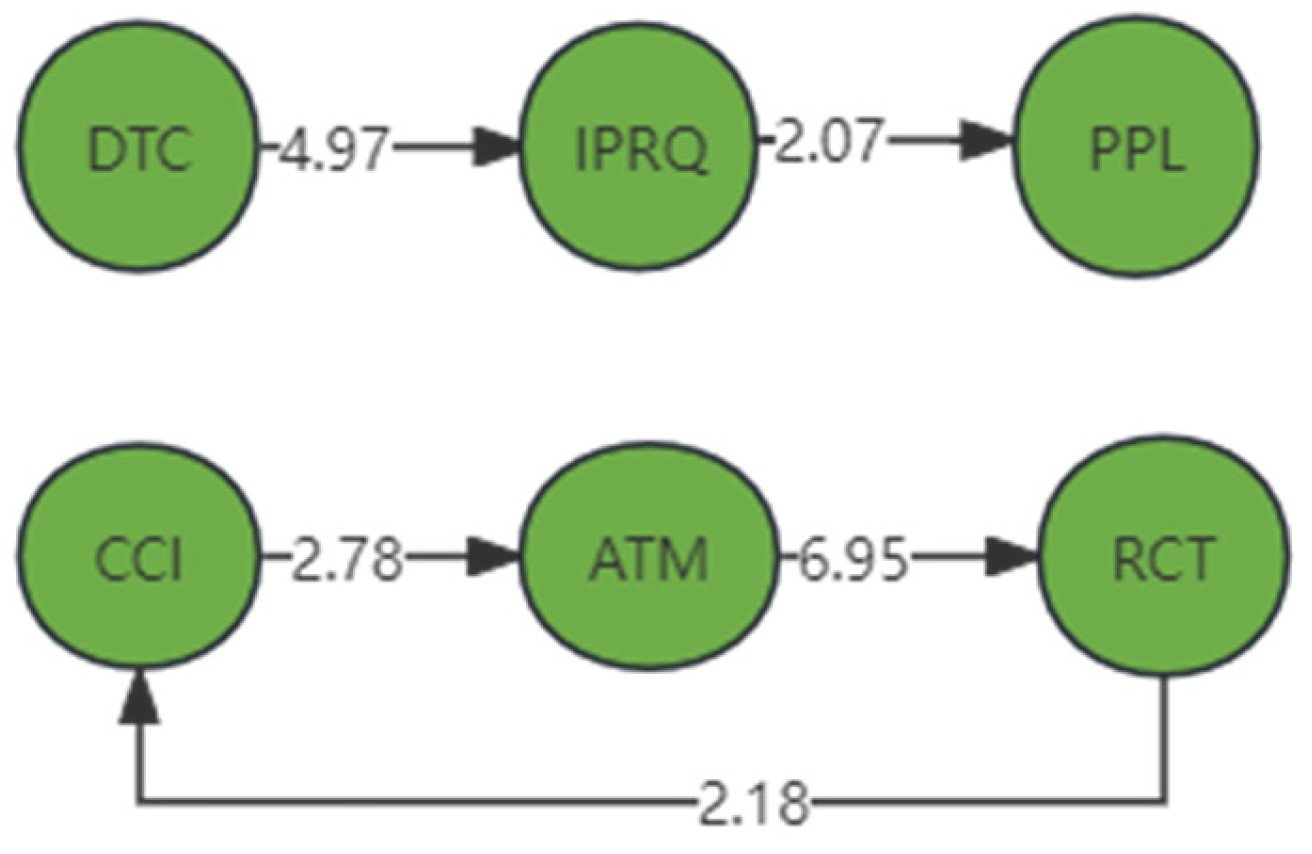

As shown in Figure 9, learners effectively used GAI to support creative endeavors in PBL tasks. For instance, they employed GAI to draft initial frameworks or content and then refined these outputs by integrating project-specific requirements. This cyclical use of GAI underscores its role in supporting iterative improvement and fostering innovative thinking.

Figure 9. Learner behavior diagram (explain creativity and improvement).

The significant behavioral sequences include:

Data Table Creation with GAI → Integrating Project Requirements in GAI Queries → Previewing PBL Content (DTC → IPRQ, Z-score = 4.97; IPRQ → PPL, Z-score = 2.07).

The sequence begins with students using GAI to create data tables (DTC), a foundational step in organizing project information. The high Z-score (4.97) for the transition to integrating project requirements (IPRQ) indicates that students deliberately tailored GAI outputs to specific project goals, a purposeful and goal-oriented use of the tool. The subsequent transition to previewing PBL content (PPL), weaker but still significant (Z-score = 2.07), suggests that students reviewed and refined their work after incorporating project requirements to check that the output met their expectations.

Creative Content Inspiration → Adjusting Text to Meet Requirements → Referencing GAI Text → Creative Content Inspiration (CCI → ATM, Z-score = 2.78; ATM → RCT, Z-score = 6.95).

The initial transition from creative content inspiration (CCI) to adjusting text to meet requirements (ATM) shows students using GAI-generated ideas as a starting point and then adapting them to project needs. The subsequent transition to referencing GAI text (RCT) has a high Z-score (6.95), showing that students actively incorporated GAI-generated reference material and highlighting GAI’s substantial role in supporting their work. The cyclical return to creative content inspiration (CCI) suggests that students used GAI not as a one-time tool but as an ongoing resource for iterative refinement and innovation.

These sequences indicate that learners not only relied on GAI to generate ideas but also improved its outputs through deliberate adjustments, an iterative approach that supports both creativity and problem solving.
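Multi-step sequences such as DTC → IPRQ → PPL are read from the diagram by chaining significant lag-1 transitions end to end. The Python sketch below illustrates that chaining on the transitions and Z-scores reported in this subsection. The function name `chains`, the maximum path length, and the "weakest link" summary are assumptions for illustration, not the study’s procedure; the return transition RCT → CCI is left out because no Z-score is reported for it.

```python
# Significant lag-1 transitions and Z-scores reported in this subsection.
EDGES = {
    ("DTC", "IPRQ"): 4.97,
    ("IPRQ", "PPL"): 2.07,
    ("CCI", "ATM"): 2.78,
    ("ATM", "RCT"): 6.95,
}


def chains(edges, max_len=4):
    """Enumerate maximal paths built by linking significant transitions end to end."""
    successors = {}
    for a, b in edges:
        successors.setdefault(a, []).append(b)
    targets = {b for _, b in edges}
    starts = [a for a in successors if a not in targets]  # codes that only begin chains

    paths = []

    def extend(path):
        # Follow outgoing significant transitions, never revisiting a code.
        nexts = [b for b in successors.get(path[-1], []) if b not in path]
        if not nexts or len(path) == max_len:
            paths.append(path)
            return
        for b in nexts:
            extend(path + [b])

    for start in starts:
        extend([start])
    return paths


for path in chains(EDGES):
    # A chain is only as strong as its least significant link.
    weakest = min(EDGES[(a, b)] for a, b in zip(path, path[1:]))
    print(" -> ".join(path), f"(weakest link Z = {weakest})")
```

The starting rule here (codes that never appear as a target) is a simplification: a closed loop with no entry point, such as CCI → ATM → RCT → CCI if all three links were significant, would need a different rule for choosing where to begin.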

In summary, the behavioral patterns observed indicate that GAI significantly influenced learners’ methods in problem-posing, critical thinking, and creative production within PBL.

Figures 7, 8 and 9 collectively illustrate how learners invested considerable effort in extracting, refining, and applying information from GAI to optimize their learning processes. These findings suggest that GAI effectively enhances the research and problem-solving phases of PBL, empowering students to develop more sophisticated and iterative learning approaches.

5.2. GAI’s Influence on Learners’ Perceptions and Strategies

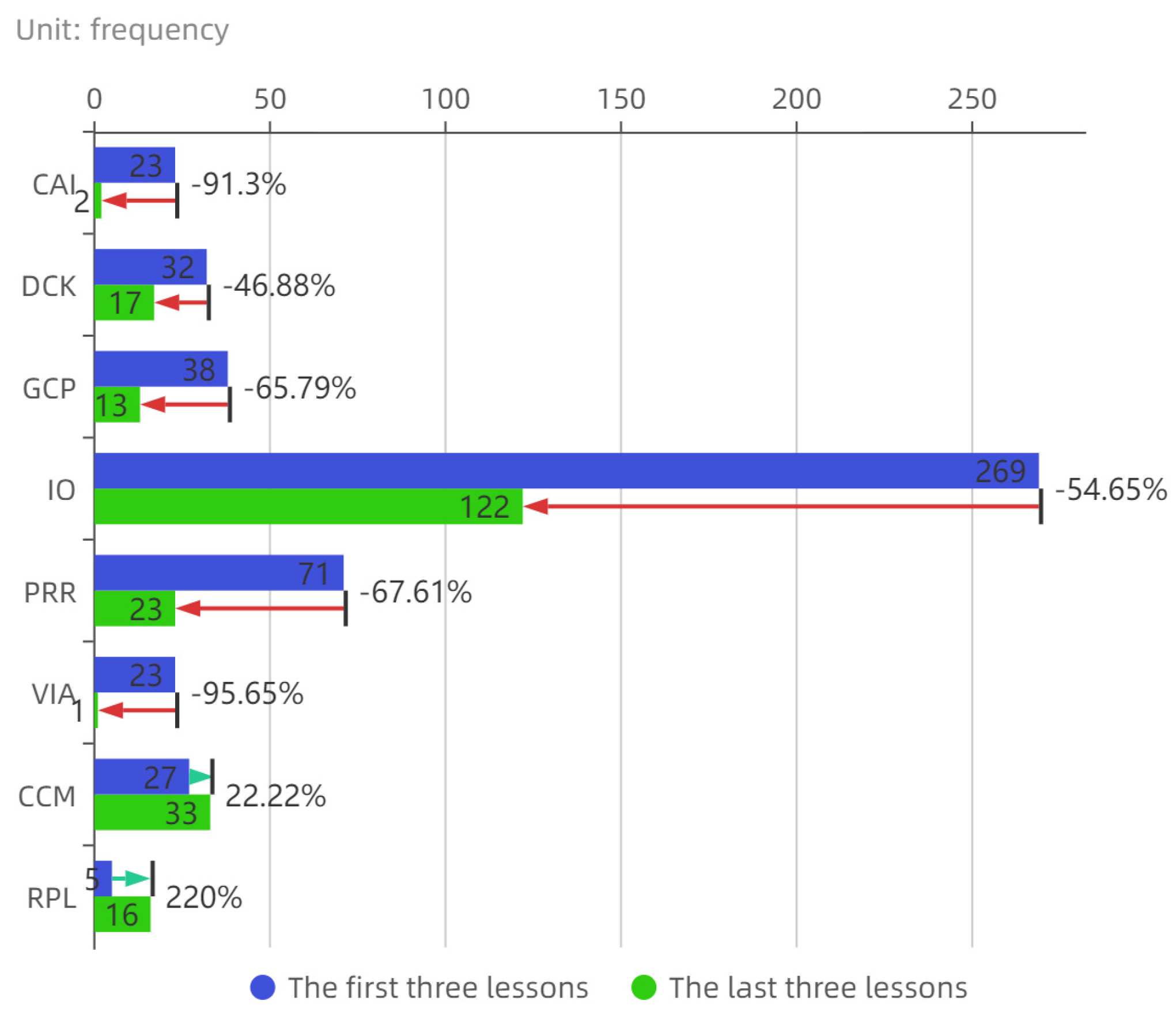

To assess the impact of GAI on learners’ perceptions and strategies, we examined behavioral trends across two semesters of PBL. By randomly selecting three PBL tasks from each semester, we analyzed changes in students’ behaviors and their frequency over time, as shown in Figure 10 and detailed in Table 2.

The following behavioral shifts across semesters were observed:

Decreased reliance on verification and integration: In the latter three sessions (second semester), behaviors related to verifying information accuracy (VIA) and combining GAI with other tools (CAI) dropped sharply, by 95.65% and 91.3%, respectively. This decline suggests that learners increasingly trusted GAI’s outputs and depended less on external validation or supplementary tools;

Reduced project requirement review: Project Requirement Review and Replication (PRR) behavior decreased by 67.61%, indicating that as students grew more accustomed to GAI and PBL, they needed to revisit project instructions less frequently. This reflects improved familiarity and confidence in navigating both the platform and the tasks;

Lower Idle Operation frequency: Students’ Idle Operation (IO) behavior, characterized by pauses and hesitation, decreased by 54.65%. This reduction points to increased proficiency and comfort with GAI over time, enabling learners to engage more seamlessly with the platform;

Changes in creative engagement: Creative behaviors, including Generate Conception or Plan (GCP) and Design Creative Key (DCK), decreased by 65.79% and 46.88%, respectively. Meanwhile, behaviors such as Continue Context Memory (CCM) increased, suggesting a shift toward relying on GAI’s outputs and memory features. Although this shift increased efficiency, it also points to a decline in learners’ independent creative contributions and hints at potential over-reliance on GAI.
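Each percentage above is a relative decrease between the two semesters’ behavior frequencies. Table 2’s raw counts are not reproduced in this section, so the Python sketch below uses hypothetical counts only to show the calculation, decrease = (f_first − f_second) / f_first × 100; the function name `percent_decrease` is illustrative.

```python
def percent_decrease(first, second):
    """Relative decrease (%) from the first-semester to the second-semester frequency.

    A negative result means the behavior became more frequent (as with CCM).
    """
    if first == 0:
        raise ValueError("first-semester frequency must be non-zero")
    return (first - second) / first * 100


# Hypothetical frequencies for one behavior code (not the study's data).
first_semester, second_semester = 40, 10
print(f"{percent_decrease(first_semester, second_semester):.2f}%")  # 75.00%
```

Because the denominator is the first-semester count, behaviors that were rare to begin with can show large percentage changes from small absolute differences, so the percentages are best read alongside the raw frequencies in Table 2.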

From Table 2, distinct differences emerged in learners’ frequent behaviors between the two semesters:

First Semester: Students primarily focused on foundational tasks such as generating initial ideas, reviewing project requirements, and making basic queries for background information. This suggests early-stage reliance on GAI for exploratory support and structural guidance;

Second Semester: With growing familiarity, students made more use of GAI’s contextual memory for continuous discussions and engaged more deeply with complex or uncertain project issues. Non-operational behavior (periods with little or no manual interaction) remained frequent but declined slightly, reflecting increased active engagement.

Over time, learners transitioned from cautious, exploratory use of GAI to a more integrated and strategic approach. Key observations include:

Increased trust and confidence: As learners gained experience, they placed greater trust in GAI, reducing verification efforts and relying more on its outputs for efficiency;

Shift from mechanical to strategic use: Early reliance on structured and mechanical interactions gave way to selective, purposeful engagement, leveraging GAI’s advanced features like contextual memory for complex tasks;

Transformation in cognitive approaches: The progression from external validation and repetitive behaviors to the deep integration of GAI in learning indicates a cognitive shift. This evolution reflects not only the tool’s usability but also its potential to shape learners’ problem-solving strategies and attitudes.

The findings demonstrate that GAI significantly influences learners’ perceptions of learning and their strategic behaviors in PBL. Over time, learners evolve from cautious users to confident, selective practitioners, highlighting GAI’s role in transforming both their cognitive engagement and approach to PBL.

5.3. The Effectiveness of PBL Influenced by Learners’ Use of GAI

The course uses a cumulative assessment system in which the final lesson’s task carries a high weight in the learner’s final grade. In addition, by the final lesson learners had completed a series of learning and practice tasks and had become proficient and consistent in using the course content, operational processes, and learning tools. This proficiency reduces errors caused by unfamiliar skills or misunderstandings, making the collected behavioral data more accurate and reliable. Consequently, the third set of analyses examined the distribution and categories of GAI-related behaviors demonstrated by learners in the high-, medium-, and low-performance groups during the PBL task of the final session. The behavioral patterns and their relationship to learning effectiveness are summarized as follows:

Table 3 presents the distribution of GAI behaviors among the performance groups.

High-performance learners’ GAI usage showed the following characteristics:

Effective problem definition and retrieval skills: High performers excelled in defining problems precisely, employing appropriate keywords, and generating innovative, task-relevant queries. These skills enhanced their ability to extract meaningful insights from GAI;

Iterative dialogues and effort investment: This group engaged in more dialogue rounds with GAI, reflecting their willingness to explore ideas comprehensively. They demonstrated a balance between the quantity and quality of interactions, maximizing the tool’s potential for detailed feedback and refinement.

The following challenges were faced by low-performance learners:

Deficiencies in problem definition: Low performers struggled with framing effective queries, which impacted the relevance and utility of GAI responses;

Reduced engagement over time: While their early-stage usage involved deep interactions, they were less consistent in maintaining meaningful dialogues during subsequent project stages.

The experiment highlights the influence of GAI on learning effectiveness, with notable variations across performance groups:

Enhanced learning for high performers: High-performing students benefited significantly from GAI by leveraging its advanced features for iterative problem-solving and deeper cognitive engagement;

Missed opportunities for low performers: The low-performance group’s declining engagement and inadequate problem-framing skills limited the effectiveness of GAI as a supportive tool.

To verify the experimental results more rigorously, two experts scored the behavioral performance of the different performance groups during their interactions with GAI (see Table 4 for details). The scoring was designed to explore, through multi-dimensional and fine-grained analysis, the potential correlation between the effectiveness of users’ interactions with GAI and their final performance.

The scoring covered the following dimensions:

Question Accuracy Score: This assesses the accuracy and relevance of the questions posed by users, gauging their ability to articulate their needs or queries in a clear and precise manner;

Keyword Effectiveness Score: This assesses whether the keywords used in users’ questions are appropriate and effective, and whether they help GAI interpret the user’s intention more accurately;

Conversation Round Score: This evaluation assesses the efficiency and patience of the user’s interaction with the GAI, based on the number of conversation rounds and the depth of dialogue between the two parties;

Innovative Questions Score: This assesses whether users pose innovative and exploratory questions, measuring their flexibility and creativity;

Question Complexity Score: This assesses the complexity of users’ questions, including their structure, rationale, and scope, to reflect users’ capacity for deep thinking and rigorous analysis.
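The section does not specify how the two experts’ ratings were combined. A minimal Python sketch, assuming that both experts rate every dimension on the same numeric scale and that the five dimensions are weighted equally, averages the experts per dimension and then sums across dimensions. The dimension keys, the 1 to 5 scale, and the example ratings are hypothetical.

```python
from statistics import mean

# Hypothetical keys for the five scoring dimensions listed above.
DIMENSIONS = (
    "question_accuracy",
    "keyword_effectiveness",
    "conversation_rounds",
    "innovative_questions",
    "question_complexity",
)


def composite_score(ratings_by_expert):
    """Average the experts on each dimension, then sum the dimension means.

    Assumption: equal weights and a shared rating scale for all dimensions.
    """
    per_dimension = {
        dim: mean(expert[dim] for expert in ratings_by_expert) for dim in DIMENSIONS
    }
    return per_dimension, sum(per_dimension.values())


# Hypothetical 1-5 ratings for one learner from two experts.
expert_a = dict(zip(DIMENSIONS, (4, 5, 3, 4, 4)))
expert_b = dict(zip(DIMENSIONS, (5, 4, 3, 4, 3)))
per_dim, total = composite_score([expert_a, expert_b])
print(per_dim)
print(total)  # 19.5
```

Keeping the per-dimension means alongside the total makes it possible to compare groups on each dimension separately, which is how the dimensions are discussed in the text.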

The expert scores indicate a significant correlation between a user’s mastery of GAI and their behavior and performance when interacting with it. In particular, users who posed questions accurately and effectively, used appropriate keywords, and demonstrated innovative thinking and in-depth analysis in the dialogue tended to achieve higher scores.

GAI significantly impacts the effectiveness of PBL by enhancing learners’ ability to engage in exploratory and functional behaviors. However, the degree of benefit depends on the learner’s skill in leveraging GAI’s capabilities. High-performing learners demonstrated consistent and strategic GAI use, which translated into superior project outcomes, whereas low-performing learners faced challenges that limited their progress. This underscores the importance of guiding learners in developing effective GAI interaction strategies to optimize its benefits across diverse performance groups.

5.4. Achieving a Higher Return on Investment Through the Use of GAI

To assess the cost-effectiveness and long-term feasibility of using the GAI plug-in, we randomly selected six learner groups and systematically measured the time they spent learning, debugging, and adapting to the GAI system. We calculated the average time investment for these groups. Additionally, to control for potential biases and isolate the impact of the GAI, these groups were asked to complete the same number of alternative tasks without using the plug-in as post-course assignments.

Our findings revealed the following:

Initially, students invested 3 to 5 h learning how to use the GAI plug-in, which included familiarizing themselves with its features, troubleshooting settings, and adjusting to the new learning process. Despite this initial time investment, the long-term benefits were significant. On average, students saved 2 to 3 h of study time per week by using the GAI plug-in. Over a 16-week semester, this resulted in total time savings of 32 to 48 h. This reduction not only alleviated the students’ academic load but also provided them with more time for in-depth learning, creative exploration, or leisure activities.
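The net time saving implied by these figures can be checked with a few lines of Python. The sketch uses only the numbers reported above (3 to 5 h of initial learning, 2 to 3 h saved per week, a 16-week semester) and assumes, as the text does, that the weekly saving applies to all 16 weeks; pairing the smallest saving with the largest setup cost (and vice versa) is a choice made here to bound the range.

```python
WEEKS = 16              # semester length reported in the text
SETUP_HOURS = (3, 5)    # initial time spent learning the plug-in
WEEKLY_SAVING = (2, 3)  # study time saved per week

gross_low, gross_high = (h * WEEKS for h in WEEKLY_SAVING)  # 32 h and 48 h
net_worst = gross_low - max(SETUP_HOURS)  # smallest saving, largest setup cost
net_best = gross_high - min(SETUP_HOURS)  # largest saving, smallest setup cost
break_even_weeks = max(SETUP_HOURS) / min(WEEKLY_SAVING)  # worst-case payback

print(f"gross: {gross_low}-{gross_high} h, net: {net_worst}-{net_best} h")
print(f"worst-case break-even: {break_even_weeks} weeks")
```

Under these figures the net saving is 27 to 45 h per semester, and even in the worst case the setup cost is recovered within three weeks.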

Additionally, as shown in previous analyses, the use of the GAI plug-in significantly enhanced students’ innovation and creativity. The system’s support for multiple rounds of dialogue, personalized feedback, and idea generation enabled students to complete tasks more efficiently, while simultaneously fostering the development of critical thinking and creative problem-solving skills.

6. Conclusions and Discussion

In the context of the rapid development of GAI and other large-scale models, these technologies have become central to academic and practical discussions, particularly regarding their application in education. Numerous studies indicate that the rational application of GAI can enhance both teaching efficiency and learning quality, yielding positive outcomes for education [36]. At the same time, with the widespread use of large language models, some countries have introduced policies banning GAI in academic assessments to prevent unfair advantages and dependency [37]. Hence, how to leverage GAI effectively in education remains a key topic for further exploration. We therefore analyzed the relationship between GAI and PBL to answer the first research question: Can GAI facilitate PBL and, at the same time, can PBL provide a research avenue for GAI?

To answer this question, this study combined GAI with PBL by designing and building a GAI-PBL online learning platform. Through a one-year experimental study on this platform, we not only verified the feasibility of using GAI to assist PBL and to address existing problems of PBL but also revealed the unique value of the PBL teaching model for exploring the effectiveness of GAI: it provides a fruitful research framework and paradigm. Specifically, characteristics of PBL such as information integration, cooperation, and communication provide a rich practical scenario for evaluating the mechanisms, effects, and optimization paths of GAI in the learning process.

Developing the GAI-PBL platform revealed several key alignments between the functional characteristics of GAI and the learning methods promoted by PBL. For instance, PBL emphasizes communication among students, and GAI, as a conversational model, gives learners opportunities for dialogue, making it possible for students to work together virtually. By operating multiple GAI models simultaneously, learners can form a PBL group and collaborate on project-based tasks. Furthermore, the platform overcomes many of PBL’s traditional limitations, thereby enhancing its potential for educational research.

Regarding the second question of how GAI influences students’ learning behaviors, cognitive skills, and learning outcomes, our study identifies several complex, interrelated phenomena. These findings underscore the potential value of GAI as a supportive tool in learning but also highlight some challenges and risks.

Firstly, the introduction of GAI has significantly transformed the learning process. Learners naturally incorporate GAI into their learning, producing a series of characteristic behavioral sequences. They are increasingly inclined to ask questions with the help of GAI, often after deeper analysis and contextualization, which suggests that GAI facilitates deep learning. GAI also stimulates learners’ critical thinking, prompting them to evaluate whether the answers provided meet their learning needs. In the context of PBL, direct access to external knowledge enriches students’ understanding and fosters new insights [38]. GAI’s immediate feedback and vast knowledge base allow learners to explore diverse perspectives quickly, promoting the development of critical thinking. Learners are encouraged to question, analyze, and assess the reliability and relevance of information, which is crucial in today’s information-rich environment. This process is vital for developing independent thinking and problem-solving abilities.

Furthermore, GAI has a profound impact on students’ cognitive processes. As the learning cycle progresses, students’ trust in GAI gradually grows. Compared with the first semester, students in the second semester were less inclined to consider and verify GAI’s answers carefully upon receipt. This suggests that over-reliance on GAI may weaken students’ willingness to engage in proactive thinking. Additionally, as the duration of GAI use increases, learners show fewer creative behaviors, such as Generate Conception or Plan (GCP) and Design Creative Key (DCK). Constant reliance on GAI’s immediate answers may inhibit students’ ability to explore multiple solutions, conduct in-depth analysis, and solve problems independently, thereby hindering the development of critical thinking and self-reflection skills. Nevertheless, with the assistance of GAI, learners became more efficient in PBL, and their learning strategies changed subtly as they evolved from cautious users to confident and selective practitioners. The influence of GAI on students’ thinking and learning strategies is therefore multidimensional and complex. On the one hand, long-term dependence may weaken students’ critical thinking and innovative capabilities; on the other hand, GAI enhances learning efficiency and moves learning strategies towards greater autonomy and efficiency. Weighing these long-term effects, we argue that the sustained use of GAI-assisted learning positively influences both the effectiveness and creativity of learners. While some challenges may arise during its use, the overall benefits, such as enhanced learning outcomes and innovation, appear to outweigh the potential drawbacks.
This long-term impact underscores the value of integrating GAI into educational practices, providing learners with a tool that not only supports their academic success but also promotes the development of essential 21st-century skills.

Finally, we found that the assistance provided by GAI can influence learners’ academic performance. High-performing groups generally excel at precisely defining problems, using appropriate keywords, and generating innovative, task-relevant queries. Expert ratings further confirmed a significant correlation between the effectiveness of users’ interactions with GAI and their final performance. Scores for question accuracy, keyword effectiveness, conversation rounds, innovative questions, and question complexity all reflect users’ behavioral characteristics and cognitive levels during their interactions with GAI. High-performing groups generally score well on these dimensions, whereas low-performing groups tend to score lower. Because the benefits of GAI depend on these interaction skills, issues of educational equity and ethics must also be considered when GAI is used in the learning process.

7. Limitations

Although this study elucidates the beneficial role of GAI in facilitating PBL, it is constrained by several factors. Firstly, the data were collected over a relatively short period of only one year. This timeframe may not capture potential long-term effects, such as technological fatigue or boredom arising from continued exposure to the same technological environment. Technological fatigue, a combined psychological and physiological response, refers to the decreased efficiency and discomfort that individuals experience as a result of increased cognitive load, distraction, and lack of effective rest during continuous interaction with technological interfaces [39]. In GAI educational applications, the frequent and prolonged use of tools such as ChatGPT, although theoretically able to provide students with instant feedback, personalized learning paths, and other benefits, may also increase students’ technological fatigue, which in turn may affect their capacity for in-depth learning, their efficiency of information processing, and even their motivation to continue learning.

Furthermore, the findings of this study have inherent limitations, arising primarily from constraints in data collection, the representativeness of the sample, and the analytical method employed. Despite the researchers’ efforts to ensure the precision and comprehensiveness of the data, relevant variables and contexts may not have been fully captured. This could limit the generalizability and depth of the conclusions and hinder the identification of additional behavioral patterns and insights. In addition, this study focuses on how GAI directly affects students’ specific learning behaviors, cognitive processes, and learning effectiveness; it does not analyze in depth the broader potential impact of GAI on students’ learning ecology, affective engagement, social interactions, and long-term motivation within the PBL framework.

Additionally, while LSA is a robust instrument for elucidating behavioral interactions, its inherent limitations restricted this study’s capacity to fully investigate the intricate relationships among these behaviors. Moreover, the study relied on a narrow range of research methods, which precluded a more comprehensive examination of the association between learners and GAI.