1. Introduction

With the recent boom in generative AI, artificial intelligence has become increasingly intertwined with daily life, sparking discussions on how to cultivate essential information technology skills. Consequently, programming has emerged as one of the core subjects in contemporary university education. Programming skills are considered fundamental in fields such as science, engineering, and business. However, mastering programming is a gradual process that cannot be achieved overnight. One of the significant challenges in teaching programming lies in providing effective individualized guidance and feedback to students, especially in classes with limited time and large student populations. In traditional teaching models, students often encounter various difficulties while writing programs. When questions arise, they typically must wait for class time to consult their instructor or seek help through an online teaching platform. This process can lead to interruptions and delays in learning. Furthermore, even when face-to-face consultations are available, not all students are willing to seek help, particularly those who are shy or lack confidence. These students may fall behind in their learning outcomes as a result.

Since 2018, studies have explored the use of virtual teaching assistants, which have received positive responses and high levels of student satisfaction. Virtual teaching assistants not only enhance student engagement but also positively impact students’ learning motivation [1]. From a teaching perspective, the ideal learning environment allows educators to meet students’ individual needs and provide one-on-one support [2]. However, achieving a balanced student-to-teacher ratio in general courses is challenging, making personalized guidance difficult to provide. To address this, some researchers have proposed integrating chatbots into formal learning environments. Chatbots can interact with students conversationally while providing knowledge-based assistance [3,4]. For example, Georgia State University implemented a chatbot named “Pounce” to improve graduation rates. By identifying 800 risk factors for dropout and utilizing predictive data analysis, the chatbot provided round-the-clock answers to students’ questions, especially benefiting those needing assistance outside of regular hours. Moreover, the chatbot offered personalized responses to students’ queries, effectively addressing obstacles they encountered in their studies. The program’s success was evidenced by 94% of students recommending the continued use of chatbots due to their instant, non-judgmental, and accessible responses [5]. Similarly, AI-based automated teaching agents have been developed to reduce the workload of instructors and teaching assistants in verbal interactive learning settings [6], and chatbots have been employed to help students resolve technical programming issues [7]. These examples highlight the dual benefits of addressing staff shortages in educational contexts while enhancing students’ learning experiences [8]. A survey conducted by Abdelhamid and Katz [9] further supports the effectiveness of chatbot services. Over 75% of the surveyed students reported previous use of chatbots or similar systems, with 71% acknowledging challenges in meeting with teaching assistants. Notably, more than 95% expressed that chatbots would be helpful in providing timely answers to their questions.

The emergence of generative AI has profoundly influenced the educational landscape. Numerous studies and discussions in the academic community have examined the application of generative AI in assisted learning [7,10,11]. Among these technologies, ChatGPT, developed by OpenAI, stands out as a highly advanced language model. ChatGPT is capable of generating human-like responses to diverse queries, making it an invaluable resource for students and educators. By offering instant and accurate answers, ChatGPT enables users to quickly grasp complex concepts. Its natural language conversational capabilities have also made it a popular teaching tool, facilitating interactive engagement between teachers and students [12]. Particularly, ChatGPT has shown significant potential in providing instant feedback and error correction guidance. While earlier studies have utilized automated feedback tools to offer immediate feedback on error identification and correction, these systems often lack the depth needed to support further learning [13]. Most feedback mechanisms in such systems provide only single-level responses or fixed feedback types tailored to specific errors, leaving students unable to address more complex problems effectively [14,15].

To address these challenges, this study integrated generative AI technology to develop ITS-CAL (Intelligent Tutoring System for Coding and Learning), a system designed to respond to students’ questions instantly and provide targeted guidance. The intelligent teaching assistant functionality leverages natural language processing technology to comprehend students’ inquiries and deliver appropriate responses. However, given that generative AI is capable of resolving most programming problems and even directly generating program code, directly providing hints that closely resemble correct answers risks fostering students’ dependency on the system. This dependence may lead to students simply submitting generative AI-provided code as their own, thereby undermining the original intent of the system as a tool for fostering critical thinking [16]. To address this concern, the study employed a step-by-step approach to delivering hints. Initially, the system provides a description of the process structure. If students are unable to write code based on this structure, the next step is to present the explanation using mathematical formulas, catering to students who may excel in numerical reasoning. If mathematical formulas still do not enable students to apply appropriate syntax to solve the problem, the system then provides pseudo code. As pseudo code is straightforward and easier to interpret, it helps students comprehend algorithmic logic and data structures. This multi-layered feedback mechanism allows the intelligent teaching assistant to alleviate the workload of instructors and teaching assistants while enabling students to receive immediate help, thereby enhancing learning continuity and efficiency.

Summarizing the research findings, the intelligent teaching system ITS-CAL, driven by an LLM, demonstrates significant application value in programming education. It enables the analysis and exploration of students’ learning behaviors, outcomes, and subjective experiences before and after its implementation. The following subsections elaborate on the three main research focuses:

Understanding students’ usage behaviors is fundamental to optimizing learning system functionalities. When using ITS-CAL, students may exhibit diverse patterns of interaction due to variations in their learning backgrounds, programming knowledge levels, and individual needs. For instance, some students may frequently rely on the Hint function to seek guidance, whereas others may prefer the Debug function for correcting programming errors. Analyzing these patterns provides insights into the distinct learning needs of various student groups, enabling the system’s functions to be refined to better address individual requirements. Furthermore, investigating the types and depth of questions posed by students offers a deeper understanding of their conceptual grasp and challenges during the learning process. For example, students with lower programming knowledge may tend to ask basic syntax-related questions, while those with intermediate or advanced knowledge levels might focus on topics like logic design or program integration. These insights not only reflect students’ learning stages but also aid in devising targeted strategies for learning assistance.

2. The Impact of System Accessibility on Learning Outcomes

Effective feedback is a critical mechanism in programming education, as real-time guidance can help students overcome challenges and enhance their problem-solving abilities. ITS-CAL emphasizes providing feedback that focuses on the technical correctness of students’ work without offering direct solutions. This approach is intended to encourage deep thinking and foster independent learning. However, the extent to which this model contributes to substantial improvements in students’ programming skills requires validation through rigorous empirical research. This research focus evaluates the impact of ITS-CAL’s feedback mechanisms on students’ learning outcomes.

3. Students’ Subjective Experiences and Feedback

Students’ subjective experiences significantly influence their long-term engagement with learning systems and their motivation to learn. If students perceive ITS-CAL as overly complex, unintuitive, or misaligned with their learning needs, their willingness to use the system may diminish, thereby limiting its educational effectiveness. Consequently, understanding students’ perceptions and experiences with ITS-CAL is crucial for assessing the system’s practicality and acceptability. Additionally, students’ feedback provides valuable insights into the system’s design. For example, students may express varying opinions on the effectiveness of specific functions, such as the Hint, Debug, and User-defined Question features. These perspectives not only help researchers understand the impact of each function on learning but also highlight areas for improvement, offering a foundation for future system optimization.

The remainder of this paper is organized as follows:

Section 2 presents related work on intelligent tutoring systems and AI-driven programming assistance.

Section 3 introduces the ITS-CAL system, detailing its design, architecture, and core functionalities.

Section 4 describes the experimental setup, participants, and evaluation methods, including how ITS-CAL was assessed during the programming exam.

Section 5 reports the results of the study, including student performance analysis, system usage patterns, and statistical tests.

Section 6 discusses the findings, implications, recommendations, and potential confounding factors.

Finally, Section 7 concludes the paper, notes the limitations of the study, and suggests directions for future research.

3. Intelligent Tutoring System for Coding and Learning

ITS-CAL (Intelligent Tutoring System for Coding and Learning), developed by our lab, is a programming learning assistance tool powered by large language model (LLM) technology. ITS-CAL is designed to provide students with technically accurate, adaptive, and supportive feedback while deliberately avoiding the provision of complete code solutions. This approach encourages students to engage in deeper problem solving and critical thinking. Below is a detailed introduction to the system’s design, architecture, and primary auxiliary functions.

3.1. System Architecture and Design

The architecture of ITS-CAL comprises three main components: the front-end interface, the back-end server, and the core LLM module. This design ensures high modularity and scalability.

The front-end interface, developed using the Vue.js framework, offers an intuitive and user-friendly experience, as shown in Figure 1. Key interface components include the following:

Question Area: located on the left, this area contains questions pre-designed by instructors.

Code Area: positioned on the right, students can write and edit their code after reviewing the questions.

Result Message Area: at the bottom right, this block displays system-generated messages, including compilation and execution results, as well as comparison outcomes with pre-designed test data.

Help Functions Area: on the lower left, this section provides access to three primary auxiliary functions:

- Hint: offers problem-solving guidance.
- Debug: assists in correcting code errors.
- User-defined Question: enables students to ask custom questions and receive tailored feedback.

Students submit their code by clicking the confirm button. The system then compiles and executes the code, comparing the outputs against multiple sets of pre-defined test data. A match with all test cases indicates the successful completion of the question; otherwise, it is marked as a failure. Students can view the execution and comparison results in the message block and make corrections or proceed to the next question as needed. The interface design emphasizes simplicity and efficiency to prevent distractions and support students’ focus. The layout includes distinct blocks for questions, code editing, results, and auxiliary functions to maintain a streamlined and organized experience.
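The pass/fail rule described above can be sketched as follows. This is only an illustration under stated assumptions, not the ITS-CAL implementation: the `TestCase` type, the `grade` function, and the whitespace-tolerant comparison in `normalize` are hypothetical names and choices introduced here, and the student program is represented as a plain Python callable rather than compiled code.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TestCase:
    """One pre-defined test: input text and the expected output text."""
    stdin: str
    expected: str


def normalize(text: str) -> str:
    # Assumption: surrounding blank lines and trailing spaces are ignored.
    return "\n".join(line.rstrip() for line in text.strip().splitlines())


def grade(program: Callable[[str], str], cases: List[TestCase]) -> bool:
    """Return True only if the program's output matches every test case."""
    for case in cases:
        try:
            actual = program(case.stdin)
        except Exception:
            return False  # a runtime error counts as a failed submission
        if normalize(actual) != normalize(case.expected):
            return False
    return True


def add_two_numbers(stdin: str) -> str:
    # Hypothetical student solution used only for this example.
    a, b = map(int, stdin.split())
    return f"{a + b}\n"


cases = [TestCase("1 2", "3"), TestCase("10 -4", "6")]
passed = grade(add_two_numbers, cases)
```

A single mismatching case is enough to mark the question as failed, which mirrors the all-or-nothing rule in the text.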

2. Back-End Server

The back-end server processes student requests and forwards them to the core LLM module for analysis and response generation. It also integrates a learning behavior data collection module and a database for storing the following information:

Submitted program code.

Execution results and comparison outcomes with test data.

Usage statistics of auxiliary functions, including the following:

- Number of times each function (Hint, Debug, User-defined Question) is selected.
- Timestamps for auxiliary function usage.
- Feedback messages generated by each auxiliary function.
- Content of custom questions submitted by students.

The back-end is built using a Node.js framework, ensuring efficient and stable data transmission.
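To make the logged information concrete, the sketch below models one usage record and a per-student count of function selections. It is written in Python purely for illustration (the actual back-end is Node.js), and every name in it (`HelpEvent`, its fields, `usage_counts`) as well as the sample data is hypothetical rather than taken from the ITS-CAL schema.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class HelpEvent:
    """One use of an auxiliary function (all field names are hypothetical)."""
    student_id: str
    function: str            # "hint", "debug", or "question"
    timestamp: datetime      # when the function was used
    feedback: str            # message returned to the student
    question_text: str = ""  # only filled for user-defined questions


def usage_counts(events: List[HelpEvent], student_id: str) -> Counter:
    """Count how often one student selected each auxiliary function."""
    return Counter(e.function for e in events if e.student_id == student_id)


# Made-up sample records for demonstration only.
events = [
    HelpEvent("s01", "hint", datetime(2024, 1, 8, 9, 5), "Start by reading the input."),
    HelpEvent("s01", "hint", datetime(2024, 1, 8, 9, 12), "Store the records in an array."),
    HelpEvent("s01", "debug", datetime(2024, 1, 8, 9, 30), "Check the variable's type."),
    HelpEvent("s02", "question", datetime(2024, 1, 8, 9, 41), "Which part is unclear?",
              question_text="How do I loop over an array?"),
]
counts = usage_counts(events, "s01")
```

Keeping the timestamp and feedback text on each record is what allows the later analyses of usage frequency and question content to be derived from the same log.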

3. Core LLM Module

ITS-CAL integrates GPT-4 as a natural language processing engine to generate educationally relevant feedback, but it does not directly generate executable program code for students. The compile functionalities of ITS-CAL were independently developed and are not derived from AI-generated code. Instead, GPT-4 serves as an assistance mechanism to interpret user queries and provide conceptual explanations, debugging guidance, and structured hints. Key features include the following:

Adaptive Feedback: the module generates technically accurate responses to student queries, avoiding the direct provision of answers to encourage deeper engagement.

Example Explanations: it provides relevant examples and learning directions while ensuring example code is unrelated to the specific question to prevent direct copying.

3.2. ITS-CAL Assistance Features

ITS-CAL provides three primary auxiliary functions: Hint, Debug, and User-defined Question. The detailed descriptions of these functions are as follows.

3.2.1. Hint

The Hint function assists students by offering solution directions and specific steps when they encounter problems they cannot solve independently. When students face challenging questions and lack any initial solution ideas, the Hint function provides step-by-step guidance based on the question’s content, accompanied by example code for explanation. To prevent students from directly copying the provided code, the examples are designed to be conceptually relevant but not directly applicable to the specific question.

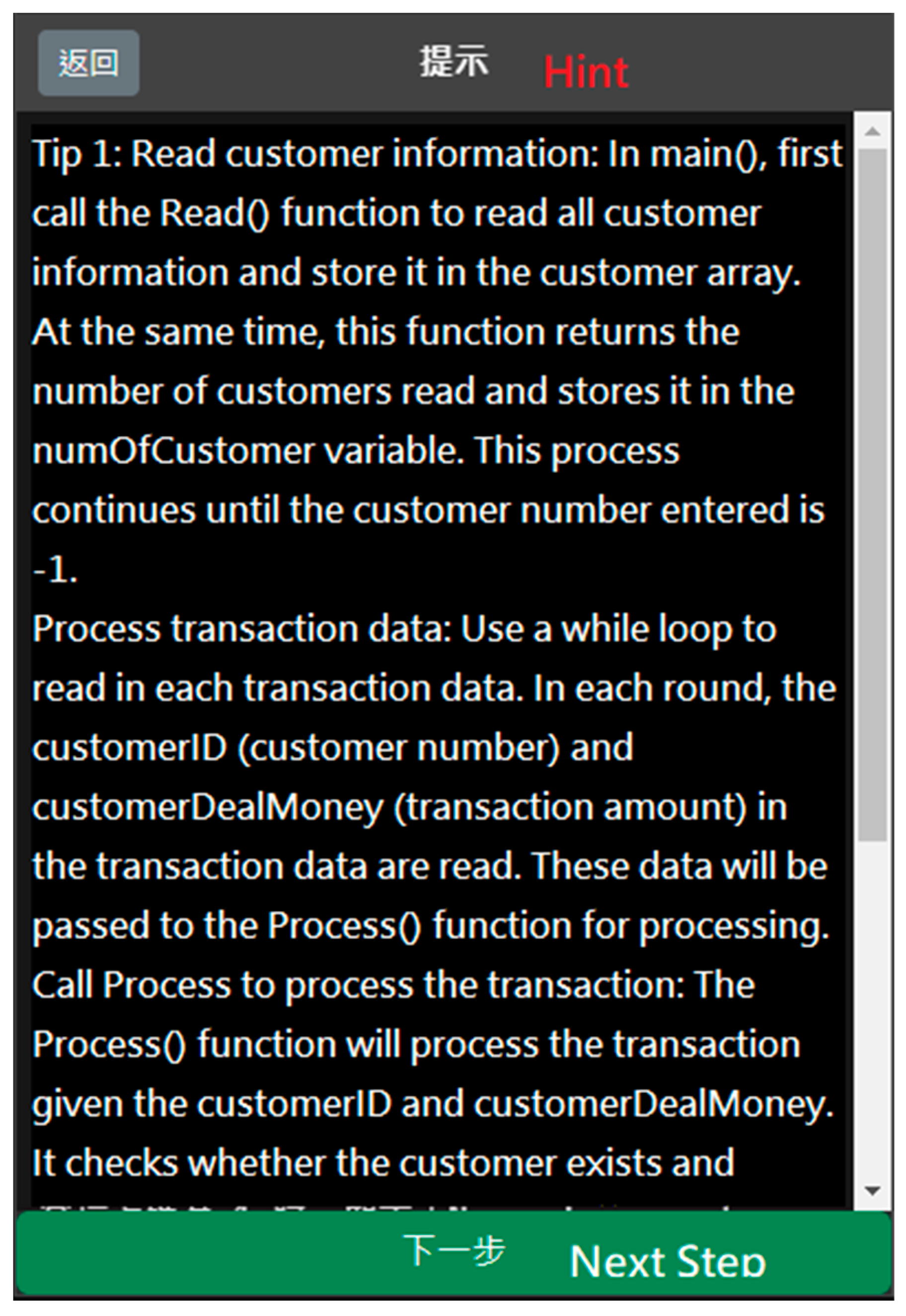

The design of the Hint function emphasizes guiding students to consider the problem-solving context through sequential descriptions and reference syntax. The system avoids providing a complete solution all at once, instead adopting a staged approach. For instance, as shown in Figure 2, for a question requiring the input of customer data, the Hint function might initially suggest that students use a “Read()” function to read and store all customer data into an array. If students cannot understand the initial hint, they can click the “Next” button to receive a second-level prompt, which offers more detailed guidance. This layered approach not only helps students solve the immediate problem but also fosters a deeper understanding of related concepts.
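The staged behavior of the “Next” button can be sketched as a small state machine. This is a minimal illustration, not the system's code: in ITS-CAL the hint texts are generated by the LLM, whereas here they are prepared strings, and the `HintSession` class, its fields, and the sample hints are hypothetical. The ordering of levels (process structure, then mathematical formulation, then pseudo code) follows the description in the Introduction.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HintSession:
    """Tracks which hint level a student has reached for one question."""
    hints: List[str]  # one prepared hint per level, least to most detailed
    level: int = -1   # -1 means no hint has been shown yet

    def next_hint(self) -> str:
        """Reveal the next, more detailed hint; stay on the last one afterwards."""
        if not self.hints:
            raise ValueError("at least one hint is required")
        if self.level < len(self.hints) - 1:
            self.level += 1
        return self.hints[self.level]


# Hypothetical hint texts for a "read customer data" question.
session = HintSession(hints=[
    "Process structure: read every customer record, then store them together.",
    "Formulation: for i = 1..n, records[i] = the i-th input line.",
    "Pseudo code: records <- empty array; for each line: append(records, line).",
])
first = session.next_hint()
second = session.next_hint()
third = session.next_hint()
repeat = session.next_hint()  # no deeper level exists, so the last hint repeats
```

Capping the level at the last prepared hint means repeated clicks never escalate to a full solution, which is the property the staged design is meant to protect.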

3.2.2. Debug

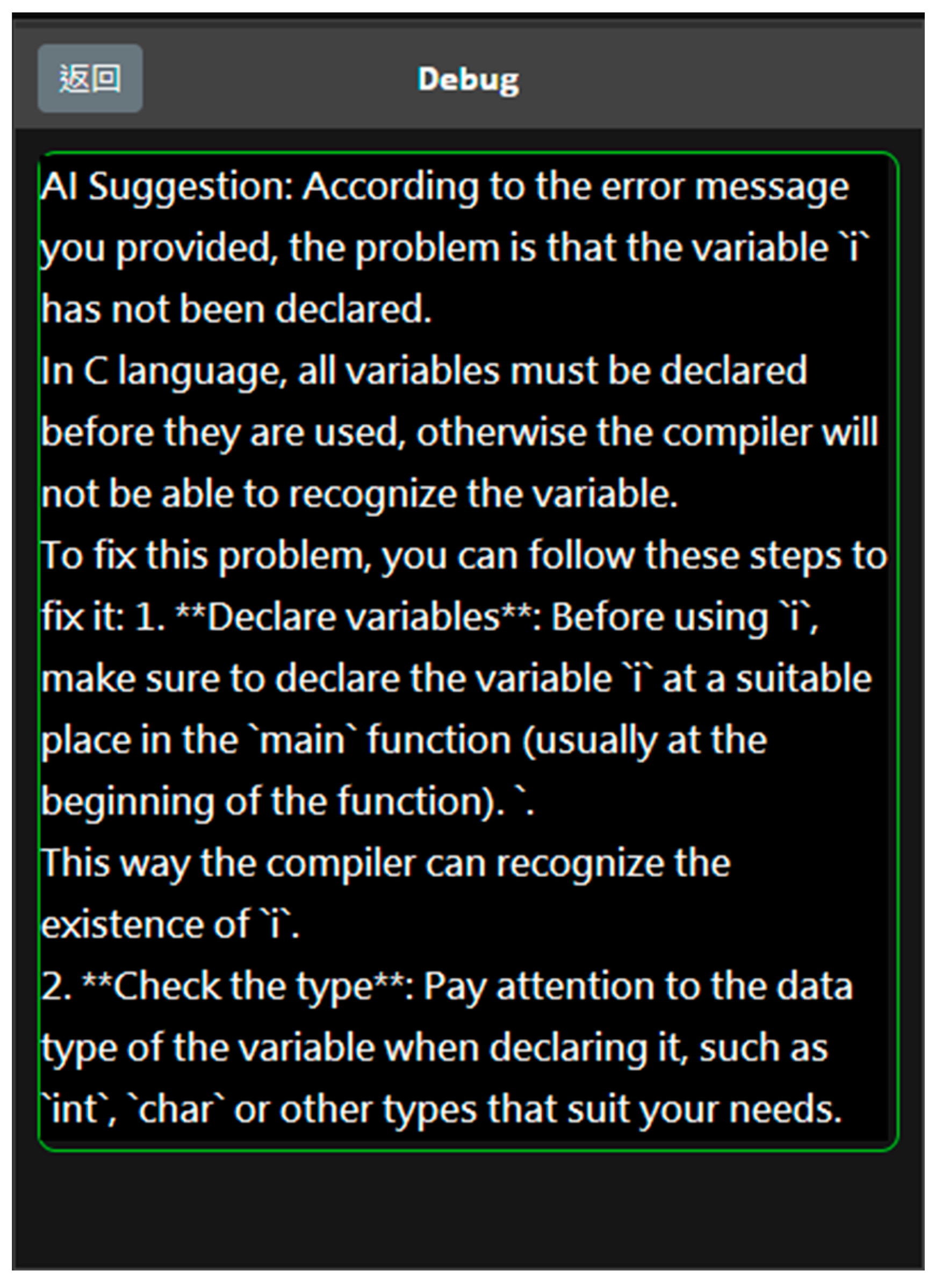

The Debug function is designed to help students identify and correct errors in their code. When compile-time or runtime errors occur, students often struggle to locate the root cause. The Debug function assists by analyzing the submitted code and error messages, classifying errors into categories such as syntax errors, logical errors, or execution issues. Based on the error type, the system provides detailed suggestions for corrections. For example, as illustrated in Figure 3, if a student declares a variable without specifying the correct data type, the system highlights the problematic code and suggests the correct declaration method. Additionally, sample code is provided to clarify the correct approach. The Debug function also employs a **staged assistance mode**, wherein the repeated use of the function yields progressively detailed guidance. This ensures that students receive timely and appropriate support throughout their debugging process while maintaining an opportunity for independent problem solving.

3.2.3. User-Defined Question

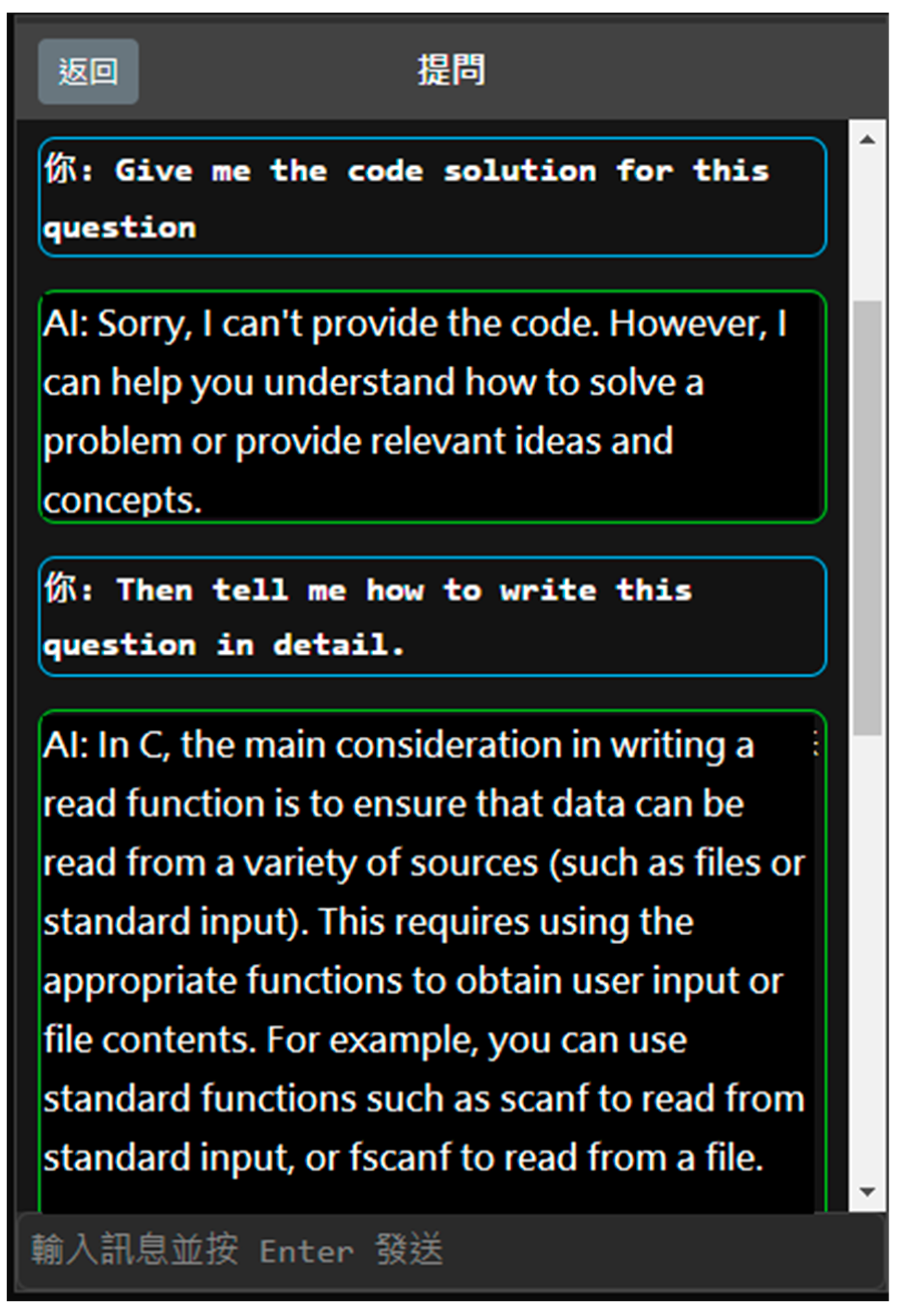

The User-defined Question function enables students to pose personalized questions to the system, offering flexible assistance tailored to their specific needs. Unlike preset question types, this feature encourages independent thinking and supports a broader range of queries. Students can inquire about basic syntax, logical design, or program optimization, and the system provides targeted responses based on the question’s content. To prevent over-reliance on the system, a guiding strategy is incorporated into the responses. Instead of directly providing complete program code, the system offers logical analyses and solutions. For instance, as shown in Figure 4, when a student asks for a direct code solution, the system encourages the student to reframe the question or focus on understanding the underlying logic. If the student pastes a question directly into the system, the response includes key concepts, methods for solving the problem, and example code that is not directly related to the question. This approach helps students build a better understanding of problem-solving strategies. Additionally, the User-defined Question function integrates an interactive learning mode with a feedback mechanism. If a student submits a vague or incomplete question, the system prompts the student to provide more details, thereby cultivating their ability to ask precise questions. This feature not only enhances problem-solving skills but also strengthens critical thinking and communication abilities.

4. Materials and Methods

4.1. Experimental Courses and Subjects

This study was conducted during the final exam of a university course titled “Introduction to Computer”. The participants included 39 students enrolled in the course, comprising 29 males and 10 females, all with a background in electrical engineering and information technology. Notably, the cohort included one senior repeating student (female) and two junior repeating students (male). Since one of the junior students (male) never attended classes and did not participate in the experiment, he was eventually excluded from the experimental data. Another freshman (male) stopped taking classes after the mid-term exam, so he was also excluded from the experimental data. Therefore, the final sample consisted of 35 freshmen, 1 junior, and 1 senior, with an average age of 18.

To further validate the adequacy of the sample size, a post hoc power analysis was conducted using the G*Power software (https://www.psychologie.hhu.de/arbeitsgruppen/allgemeine-psychologie-und-arbeitspsychologie/gpower, accessed on 16 December 2024). Given the study’s design, an effect size of 0.5, and a significance level of 0.05, the power of detecting meaningful differences in system usage and learning outcomes was calculated to be 54.07%. While a larger sample would be ideal for broader generalization, the analysis suggests that the current sample size provides sufficient statistical power to support the study’s conclusions. Although the sample is relatively small, it represents a complete cohort of students enrolled in the “Introduction to Computer Science” course at the department, ensuring consistency in the learning environment. Given that this study focuses on a controlled educational setting rather than a large-scale generalization, the findings provide valuable insights into ITS-CAL’s impact on student learning within this context. Additionally, prior studies in intelligent tutoring systems and AI-driven educational tools have utilized similar or smaller sample sizes to investigate learning behaviors and system effectiveness [19,35].

To explore the behavioral differences among students with varying knowledge levels when using the three auxiliary functions of the ITS-CAL system (Hint, Debug, and User-defined Question), a cluster analysis was performed based on the students’ course grades prior to the experiment. These grades were derived from two computer-based tests and one mid-term computer-based examination, all conducted in a closed-book format and lasting two hours each. Prior to applying K-means clustering, we conducted Hierarchical Clustering to determine the optimal number of clusters. Based on the dendrogram and agglomeration schedule, we observed distinct separation at three clusters, supporting our decision to set K = 3 in subsequent K-means clustering. To ensure that the clusters were significantly different, we conducted ANOVA (Analysis of Variance) tests and post hoc analyses. The results demonstrated statistically significant differences (p < 0.05) among clusters across all three selected assessments. These results confirm that the clusters represent meaningfully distinct groups based on programming performance (high, medium, and low knowledge levels), as shown in Table 1 and Table 2.

To assess the validity of the clustering model, we computed the silhouette score and explained variance ratio. The silhouette score, which measures how well students fit within their assigned clusters, was calculated as 0.3994. A score in this range indicates moderate cluster separability. Additionally, the explained variance ratio of 100% confirms that the selected features fully capture student performance differences. These validation metrics confirm that the clustering results are meaningful and provide a reasonable classification of students’ knowledge levels. Finally, to verify the stability of the classification, a cross-validation approach was applied. We randomly resampled 80% of the dataset multiple times and re-applied the clustering process. The results remained consistent across iterations, with an average cluster assignment stability of 39.84%, indicating that the clustering method has reasonable stability across resampling trials.
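For readers unfamiliar with the silhouette score, the sketch below computes the standard mean silhouette coefficient in plain Python. It assumes Euclidean distance, which the text does not state, and it uses made-up scores rather than the study's data; the function names are introduced here for illustration. For each student, `a` is the mean distance to the other members of the same cluster, `b` is the smallest mean distance to any other cluster, and the coefficient is `(b - a) / max(a, b)`.

```python
import math
from typing import Dict, List, Sequence


def euclidean(p: Sequence[float], q: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(p, q)))


def silhouette_score(points: List[Sequence[float]], labels: List[int]) -> float:
    """Mean silhouette coefficient over all points (needs at least 2 clusters)."""
    clusters = sorted(set(labels))
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    total = 0.0
    for i, (p, own) in enumerate(zip(points, labels)):
        # Mean distance from point i to the (other) members of each cluster.
        mean_dist: Dict[int, float] = {}
        for c in clusters:
            members = [q for j, (q, lab) in enumerate(zip(points, labels))
                       if lab == c and j != i]
            if members:
                mean_dist[c] = sum(euclidean(p, q) for q in members) / len(members)
        if own not in mean_dist:
            continue  # singleton cluster: coefficient is defined as 0
        a = mean_dist[own]
        b = min(d for c, d in mean_dist.items() if c != own)
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / len(points)


# Made-up scores on three assessments for six students in three clusters.
scores = [(90, 85, 88), (88, 90, 92), (60, 65, 58),
          (62, 60, 64), (30, 35, 28), (33, 30, 36)]
labels = [0, 0, 1, 1, 2, 2]
toy_silhouette = silhouette_score(scores, labels)
```

Values near 1 indicate tight, well-separated clusters, values near 0 indicate overlapping clusters, and negative values suggest misassigned points.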

The experimental design accounted for the potential use of external generative AI tools (e.g., ChatGPT) by students to directly obtain answers, which could hinder their opportunities for critical thinking. To mitigate this issue, the ITS-CAL system was embedded into the exam, with strict management and restrictions on its usage. To ensure fairness among students, the exam adopted a **“use deduction”** mechanism: each time the ITS-CAL system was used, the score would be progressively discounted by 5%, 10%, 15%, and so on, down to a minimum threshold of 50%. This scoring method was explicitly communicated to the students before the exam. Additionally, students were informed that the responses generated by the ITS-CAL system were produced by an AI language model, which could exhibit overconfidence or inaccuracies. As a result, students were required to critically evaluate the responses provided by the system.
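One possible reading of the “use deduction” rule is sketched below. It rests on assumptions that the text does not confirm: that the deduction grows by a fixed step with every use (five percentage points by default here), that it accumulates over the scored unit, and that the retained share never falls below 50%. The function names and parameters are hypothetical.

```python
def score_multiplier(uses: int, step_pct: int = 5, floor_pct: int = 50) -> float:
    """Fraction of the full score kept after `uses` help requests.

    Assumed schedule: each use removes another `step_pct` percentage points,
    and the kept share is never lower than `floor_pct` percent.
    """
    if uses < 0:
        raise ValueError("uses must be non-negative")
    kept_pct = max(100 - step_pct * uses, floor_pct)
    return kept_pct / 100


def final_score(raw_score: float, uses: int) -> float:
    """Apply the use deduction to a raw score."""
    return raw_score * score_multiplier(uses)


multipliers = [score_multiplier(n) for n in range(0, 13)]
```

Under these assumptions the floor is reached at the tenth use, so further requests carry no additional penalty.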

4.2. Experimental Procedure

The experiment was conducted in three main stages: the system familiarization stage, the examination and experimentation stage, and the post-test survey stage, spanning a total duration of three weeks.

One week prior to the experiment, the system familiarization phase was mandatory for all students, ensuring that every participant had an equal opportunity to learn how to use ITS-CAL, including its three primary functions: Hint, Debug, and User-defined Question. This session also covered the system’s limitations and usage rules. The training included both demonstration examples and hands-on exercises, allowing students to explore the features of the three functions through multiple interactions. The goal was to ensure that students could effectively use the system during the examination. Additionally, even after the mandatory training session, the ITS-CAL system remained accessible until the exam, giving students the flexibility to further familiarize themselves with the interface. No students reported any difficulties in using the system before or during the exam, nor did they request additional training time.

2. Examination and Experimentation Stage

One week after the familiarization phase, a two-hour final examination was conducted as the formal experimental phase. During the exam, students were allowed to use the ITS-CAL system but had to adhere to the pre-established usage restrictions and the “use deduction” scoring mechanism. Students were free to decide whether to utilize any of the three auxiliary functions, and the system recorded detailed usage behavior for each operation to support subsequent analysis.

The examination consisted of six questions. The first five questions focused on implementing specific functions, while the sixth question required students to integrate the functions from the previous questions and adjust parameters to produce the correct results. Each question included test data pre-defined by the instructor, divided into public data and hidden data. Public data helped students understand the problem requirements and expected execution results, whereas hidden data prevented students from tailoring their program logic to specific datasets, ensuring program versatility.

To ensure the content validity of the final exam, the test questions were collaboratively designed by three instructors with backgrounds in computer science and programming education. The exam has been administered for several years, with similar exam structures appearing in previous iterations of the course, ensuring its alignment with the learning objectives. The test covers key programming concepts that are commonly evaluated in prior programming education research, further reinforcing its validity as a learning assessment tool.

3. Post-Test Survey Stage

After completing the test, students were asked to fill out a questionnaire designed to assess their experience with the ITS-CAL system. The questionnaire covered aspects such as the practicality of the system’s functions, its ease of use, and its overall helpfulness during the learning process. In addition, test scores and system usage data were collected and combined with the students’ feedback for a comprehensive analysis.

4.3. Experimental Tools

This study systematically investigated the application of the ITS-CAL system in university programming education and students’ usage behavior using the following tools:

ITS-CAL served as the core tool of the experiment, providing three key functions: Hint, Debug, and User-defined Question. The system integrates an LLM-powered intelligent assistance module capable of generating responses that are accurate yet do not directly provide complete solutions. The system’s database logs comprehensive operation histories, including the following:

Number of times each function is used.

Duration of function usage.

Type of problems addressed.

These data provided the foundation for analyzing students’ usage behavior and the system’s impact on their learning.

2. System Usage Questionnaire

To evaluate students’ subjective experiences and perceptions of the ITS-CAL system, a questionnaire was designed, as summarized in Table 3. The questionnaire consisted of 27 questions:

Questions 1–26: A five-point Likert scale (ranging from “Strongly Agree” to “Strongly Disagree”) was used. For auxiliary functions, questions 19–26 included an additional “Not Used” option to account for students who did not utilize specific features.

Question 27: an open-ended question allowed for qualitative insights into students’ thoughts and experiences.

The questionnaire data were analyzed using the Statistical Package for the Social Sciences (SPSS), employing principal component analysis (PCA). Results of the KMO sampling adequacy test and Bartlett’s sphericity test confirmed the dataset’s suitability for factor analysis. PCA extracted common factors based on eigenvalues greater than 1, explaining a cumulative variance of 85.84%. The extracted factors were rotated using the orthogonal varimax (maximum variance) rotation method, enhancing the interpretability of the factor loadings. Each question aligned with the intended constructs of the study, with factor loadings exceeding 0.5, demonstrating good construct validity. Reliability analysis using Cronbach’s α yielded a coefficient of 0.869, indicating high internal consistency and scale reliability.
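As a reminder of what the reliability coefficient measures, the sketch below computes Cronbach’s α from its standard definition, α = k/(k − 1) · (1 − Σ item variances / variance of total scores), where k is the number of items. The study used SPSS; this plain-Python version, the function name, and the Likert responses are illustrative assumptions only, and the data are made up.

```python
from statistics import variance
from typing import List


def cronbach_alpha(responses: List[List[float]]) -> float:
    """Cronbach's alpha; responses[r][i] is respondent r's score on item i."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(n_items)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Made-up five-point Likert answers: five respondents, three items.
likert = [
    [5, 4, 5],
    [3, 3, 4],
    [4, 4, 4],
    [2, 3, 2],
    [5, 5, 4],
]
alpha = cronbach_alpha(likert)
```

The coefficient rises when items vary together across respondents, which is why a value such as the reported 0.869 is read as high internal consistency.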

5. Results

5.1. Overview of the Help Functions Using ITS-CAL

In this experiment, 36 students participated in the final exam using the ITS-CAL system. One freshman, classified in the low-knowledge cluster, was absent from the exam. The results, as summarized in Table 4, indicate differences in the frequency of usage among the three auxiliary functions: Hint, Debug, and User-defined Question.

The Hint function was the most frequently used, reflecting students’ tendency to seek guidance on solution directions when encountering difficulties. The Debug function was the second most used, primarily for addressing code errors. The User-defined Question function was used the least, likely due to its higher cognitive cost (e.g., requiring clear articulation of questions) or students’ limited familiarity with the feature. On average, each student used the ITS-CAL help functions 4.72 times during the exam, with an average pass rate of 37.50%. The total usage breakdown was as follows: 42.35% for Hint, 27.06% for Debug, and 30.59% for User-defined Question, indicating a preference for the simpler and more direct Hint function.

5.2. Usage Patterns of Students with Different Knowledge Levels

To further analyze behavior, students were divided into three groups based on their academic performance: high knowledge level, medium knowledge level, and low knowledge level. The usage patterns of ITS-CAL among these groups are presented in Table 5.

High-knowledge students: This group used the help functions an average of 2.88 times, predominantly focusing on the Hint and User-defined Question functions while rarely using the Debug function. Their higher problem-solving abilities allowed them to debug issues independently through system execution feedback, resulting in lower reliance on the Debug function. Instead, they utilized the Hint function for detailed adjustments and the User-defined Question function for addressing specific queries.

Medium-knowledge students: On average, this group used the help functions 4.89 times, with the Hint function being the most frequently used. This suggests a greater need for Hint function support during problem-solving, complemented by the User-defined Question function to address gaps in specific knowledge areas.

Low-knowledge students: This group exhibited the highest usage frequency, averaging 6 times per student, with a strong preference for the Hint function. Their minimal use of the Debug and User-defined Question functions suggests difficulties in understanding basic concepts and problem-solving logic. These challenges may have limited their ability to utilize more advanced features effectively.

5.3. Relationship Between Usage Frequency and Performance

To examine the relationship between ITS-CAL usage and student performance, we conducted a multiple linear regression analysis with exam performance as the dependent variable and ITS-CAL usage metrics (hint usage, debugging frequency, and question-asking frequency) as independent variables. The regression model was statistically significant (F(3, 32) = 154.026, p < 0.05), with an R² value of 0.935, indicating that 93.5% of the variance in student performance could be explained by ITS-CAL usage. These results suggest that ITS-CAL usage had a positive association with exam performance.
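As a consistency check on the reported statistics, the overall F statistic and the coefficient of determination are linked by a standard identity for ordinary least-squares models with an intercept. Assuming the reported model is of that form, with 3 predictors and 32 residual degrees of freedom, the two reported values agree:

```latex
R^2 = \frac{df_1 \, F}{df_1 \, F + df_2}
    = \frac{3 \times 154.026}{3 \times 154.026 + 32}
    = \frac{462.078}{494.078}
    \approx 0.935
```

Here $df_1 = 3$ is the number of predictors and $df_2 = 36 - 3 - 1 = 32$ follows from the 36 students who took the exam.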

Next, the relationship between usage frequency and test scores was analyzed, as shown in Table 6. The results reveal distinct patterns. Students who did not use ITS-CAL had an average pass rate of 56.77%. Among them, high-knowledge students achieved a 100% pass rate, medium-knowledge students had a pass rate of 50.83%, and low-knowledge students failed entirely. This indicates that high-knowledge students could perform well without ITS-CAL, whereas medium- and low-knowledge students had a greater dependency on the system.

Moderate usage (e.g., 2 times) correlated with the highest average pass rate of 72.22%, with high-knowledge students maintaining a 100% pass rate and low-knowledge students achieving a pass rate of 16.67%. This suggests that moderate ITS-CAL use effectively supports learning, particularly for medium-knowledge students, by compensating for their limited problem-solving abilities.

Excessive usage (e.g., 4 times) led to a significant decline in performance, with an average pass rate of 27.98%. Notably, the pass rate for high-knowledge students dropped to 0%, while medium-knowledge students achieved 47.92% and low-knowledge students dropped to 2.08%. This indicates that over-reliance on the system may impair independent problem-solving abilities.

When the total number of uses exceeded 10 times, the average pass rate declined further to 16.67% or lower. For students with 15 or more uses, the pass rate fell to 4.17%, predominantly in the low-knowledge group. This suggests that excessive reliance on ITS-CAL without effective problem-solving strategies may result in poor learning outcomes.

From a knowledge level perspective, high-knowledge students consistently maintained high pass rates, regardless of ITS-CAL usage, demonstrating their ability to tackle challenges independently. Medium-knowledge students showed a positive correlation between moderate ITS-CAL usage and improved performance, indicating that the system provided meaningful support. In contrast, low-knowledge students exhibited low pass rates across all usage levels, reflecting a need for additional support beyond ITS-CAL to improve their learning outcomes.
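The breakdown above amounts to grouping students by usage count and knowledge level and computing a pass rate per group. A minimal sketch of that tabulation follows. The records are hypothetical toy data, not the study's data, and the sketch assumes "pass rate" means the share of students in a group who passed; the study may define it differently (for example, as the share of test cases passed).

```python
from collections import defaultdict

# Hypothetical records: (knowledge_level, total_help_uses, passed_exam).
records = [
    ("high", 0, True),
    ("high", 2, True),
    ("medium", 0, False),
    ("medium", 2, True),
    ("medium", 4, False),
    ("low", 2, False),
    ("low", 6, False),
]


def pass_rate_by(records, key):
    """Return {group: pass rate in percent}, where key(level, uses) picks the group."""
    totals = defaultdict(lambda: [0, 0])  # group -> [number passed, number of students]
    for level, uses, passed in records:
        bucket = totals[key(level, uses)]
        bucket[0] += int(passed)
        bucket[1] += 1
    return {group: 100.0 * passed / count for group, (passed, count) in totals.items()}


# Pass rate per usage count, then per (knowledge level, usage count) cell.
by_usage = pass_rate_by(records, key=lambda level, uses: uses)
by_level_and_usage = pass_rate_by(records, key=lambda level, uses: (level, uses))

for uses in sorted(by_usage):
    print(f"{uses} uses: {by_usage[uses]:.2f}% pass rate")
```

Grouping by the pair of level and usage count keeps the two factors separate, which matters here because usage frequency and knowledge level are themselves correlated.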

To determine whether exam performance differed significantly among low-, medium-, and high-knowledge students, a one-way ANOVA was conducted. The results indicated a statistically significant difference among groups (F(2, 33) = 8.313, p < 0.05, η² = 0.335), suggesting that the knowledge level had a significant impact on student performance. Post hoc Tukey’s HSD tests further revealed that high-knowledge students performed significantly better than medium- and low-knowledge students (p < 0.05), and that medium-knowledge students outperformed low-knowledge students (p < 0.05). The 95% confidence intervals for mean exam scores were as follows:

High-knowledge group: [37.9694, 103.7181];

Medium-knowledge group: [33.6509, 61.3491];

Low-knowledge group: [2.5761, 26.1739].
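For a one-way ANOVA, the effect size η² can be recovered from the F statistic and its degrees of freedom, which gives a quick check on the reported values. The sketch below is a consistency check only, not the authors' analysis code; the function name is illustrative.

```python
def eta_squared_from_f(f_value: float, df_between: int, df_within: int) -> float:
    """Eta squared (SS_between / SS_total) implied by a one-way ANOVA F statistic."""
    # F * df_between equals SS_between / MS_within, so the ratio below
    # reduces to SS_between / (SS_between + SS_within).
    scaled_between = f_value * df_between
    return scaled_between / (scaled_between + df_within)


# Values reported in the text: F(2, 33) = 8.313.
eta_sq = eta_squared_from_f(8.313, df_between=2, df_within=33)
print(round(eta_sq, 3))
```

The result rounds to 0.335, matching the reported η². The degrees of freedom (2 and 33) also imply three groups and 36 students in total.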

5.4. System Usage Questionnaire Results

According to the results of the student questionnaire survey on the ITS-CAL system, most students expressed positive opinions regarding its functionality and its role in supporting learning. Approximately 60% of students agreed that ITS-CAL contributed to improving their programming skills and highly appreciated the system’s user-friendliness and convenience. Additionally, 48% of students found the system to be convenient during the learning process, emphasizing its humanized design. However, a smaller proportion of students reported challenges, particularly with the accuracy and clarity of the system’s responses. Specifically, 16% of students found the responses insufficiently accurate, while 8% felt that some response structures were overly general, causing confusion.

Hint Function: The Hint function received the highest recognition among the three features, with 40% of students stating that it was helpful in solving problems. However, 20% of students did not use this function, indicating potential limitations in its practicality and suggesting room for further enhancement.

Debug Function: The Debug function received comparatively lower evaluations, with only 29% of students indicating that it significantly assisted in solving program errors. Moreover, 33% of students did not use this feature, which may reflect a combination of design limitations and reduced need for debugging support during the exam.

User-defined Question Function: This function also exhibited relatively low usage. A total of 28% of students did not engage with it, and only 32% believed it provided helpful responses for their learning needs. These findings indicate the need for improvements in the design and user guidance associated with this function to encourage broader and more effective utilization.

2. Qualitative Feedback

Qualitative responses from students highlighted specific needs and expectations for ITS-CAL’s future development. Many students described the system as a “helpful and effective learning resource” and expressed satisfaction with its overall functionality. However, several areas for improvement were noted and are as follows:

Content of the Hint Function: some students remarked that the hints provided were too simplistic and lacked specific guidance for handling hidden test data.

Practicality of the Debug Function: a number of students felt that the Debug function’s responses were overly general and insufficiently detailed to address specific issues effectively.

Interface Design Suggestions: students recommended increasing the size of the code editing area to enhance usability and adding a “usage count” notification to the system to prevent unintentional overuse.

Deduction Mechanism: Some students criticized the scoring deduction mechanism as overly strict, suggesting that it might discourage them from using the system’s features. They expressed a desire for greater flexibility to explore ITS-CAL’s functionalities without excessively impacting their scores.

3. Overall Student Attitude and Recommendations

Overall, the survey results indicate that most students hold a positive attitude toward ITS-CAL, recognizing its value in supporting programming education. Nevertheless, several key areas require attention to optimize the system’s design and effectiveness:

Improving Accuracy and Clarity: enhancing the precision and detail of the Hint and Debug functions to better address students’ specific learning needs.

Optimizing Interface Design: expanding the code editing area and incorporating additional features, such as usage notifications, to improve the user experience.

Adjusting the Deduction Mechanism: balancing the scoring penalty to encourage greater exploration and use of ITS-CAL’s features without unduly penalizing students.

Enhancing User Guidance: providing clearer instructions and strategies to help students effectively utilize the User-defined Question function, thereby increasing its practical value.

6. Discussion

This study examines the application value of ITS-CAL, driven by a large language model (LLM), in programming education. Specifically, it analyzes the usage behavior patterns of students with different knowledge levels, the system’s impact on learning outcomes, and students’ subjective perceptions. By integrating quantitative data with qualitative feedback, the findings highlight ITS-CAL’s potential to provide effective learning assistance while identifying areas for improvement in functionality and user experience. Below is an in-depth discussion of the research questions, offering comprehensive insights and recommendations for future optimization.

6.1. RQ1: What Patterns Emerge When Students with Different Knowledge Levels Use ITS-CAL?

The results reveal significant differences in how students of varying knowledge levels utilize ITS-CAL. High-knowledge students used the system’s help functions an average of 2.87 times, primarily focusing on the Hint and User-defined Question functions. Their stronger problem-solving abilities allowed them to leverage the Hint function for fine-tuning and use the Question function to address specific issues. They rarely relied on the Debug function, as they were often capable of independently troubleshooting errors through program-generated feedback. Medium-knowledge students had the highest demand for the Debug function, using the system an average of 4.89 times. This group required substantial assistance with error correction during problem solving and appropriately utilized the Question function to fill knowledge gaps. Their relatively balanced use of all functions suggests a higher degree of dependence on the system compared to the high-knowledge group. Low-knowledge students exhibited the highest usage frequency, averaging six uses per student. They relied heavily on the Hint function, indicating difficulties in basic concepts and problem-solving logic. However, they used the Debug and User-defined Question functions less frequently, which may reflect challenges in utilizing these features effectively when faced with incomplete programs or insufficient understanding.

6.2. RQ2: What Is the Impact of ITS-CAL Feedback Content on Students’ Learning Outcomes?

The impact of ITS-CAL feedback on learning outcomes varied by usage frequency and knowledge level, displaying a stratified effect. Moderate usage (e.g., two uses) was associated with the highest average pass rate of 72.22%, particularly benefiting medium-knowledge students, whose pass rates increased significantly. This finding indicates that ITS-CAL effectively addresses knowledge gaps and enhances problem-solving skills for this group. Excessive usage (e.g., more than 3 times) resulted in declining pass rates, with students who used ITS-CAL 4 times achieving an average pass rate of only 27.98%. Excessive reliance on the system appears to weaken independent problem-solving abilities, particularly for low-knowledge students, whose pass rates remained consistently low even with frequent usage. High-knowledge students consistently maintained high pass rates regardless of usage frequency, indicating that ITS-CAL feedback served as a supplementary tool rather than a critical factor for their success. Conversely, low-knowledge students showed minimal improvement, highlighting the need for more targeted optimization to better support this group.

6.3. RQ3: What Are Students’ Perceptions of ITS-CAL?

The questionnaire and qualitative feedback suggest that most students view ITS-CAL positively, with approximately 60% agreeing that the system improves their programming skills. Students also praised its user-friendliness and convenience. However, several shortcomings were identified and are as follows:

Hint Function: students found the hints overly simplistic, particularly lacking guidance for handling hidden test data.

Debug Function: the debug responses were deemed too general, providing limited assistance for specific issues.

User-defined Question Function: this feature was perceived as costly to use, with room for improvement in meeting students’ needs effectively.

In addition, students expressed concerns about the deduction mechanism, with some suggesting that it discourages system usage. They recommended reducing the penalty’s weight to encourage greater freedom in exploring ITS-CAL’s features for learning. Other suggestions included adding a usage count reminder to prevent unintended overuse and optimizing the code editing interface for a better user experience.

6.4. Implications and Recommendations

Overall, students viewed ITS-CAL as a valuable supplementary tool, but improvements are necessary to enhance its functionality and user experience. Based on the findings, the proposed recommendations are as follows:

Enhance Feedback Precision: improve the specificity and depth of the Hint and Debug functions to better address diverse student needs, particularly for low-knowledge users.

Optimize User-defined Question Function: simplify the process of formulating questions and provide clearer guidance to encourage more effective utilization.

Revise the Deduction Mechanism: balance the scoring penalty to maintain motivation while preserving the system’s intended purpose as a learning aid.

Improve Interface Design: expand the code editing area and incorporate features such as usage notifications to enhance usability.

Targeted Support for Low-knowledge Students: develop additional features or strategies to address the specific challenges faced by students with low knowledge levels, such as tailored tutorials or scaffolding mechanisms.

These enhancements would further ITS-CAL’s potential as an effective tool for programming education, ensuring that it meets the diverse needs of students while fostering independent learning and critical thinking.

6.5. Potential Confounding Factors

While the use of external learning tools such as ChatGPT is a common concern in AI-assisted learning research, our experimental design explicitly addressed this issue by embedding ITS-CAL into the exam environment under strict supervision. As described in Section 4.1, students were only allowed to use ITS-CAL during the exam, and proctors strictly monitored their activities to prevent the use of external AI-based tools. This design ensured that students relied solely on ITS-CAL for assistance within the experimental setting. Outside of the exam, students had full access to other learning resources, but these were not included as a part of the controlled experiment. Therefore, while we acknowledge that students’ independent study time and prior exposure to AI tools before the exam could have influenced their learning outcomes, we can reasonably rule out the direct impact of external AI tools during the ITS-CAL usage period.

Another potential concern is whether the grade reduction mechanism discouraged students from using ITS-CAL, introducing a bias in system engagement. While this is a valid consideration, our statistical analysis suggests that students who engaged with the system did so despite the grade penalties, likely prioritizing their exam result. Moreover, because the final exam and questionnaire were designed and validated independently, the grading mechanism does not impact the validity or reliability of these instruments. Nonetheless, future studies could experiment with alternative incentive models (e.g., bonus score-based systems instead of penalties) to encourage engagement without discouraging usage.

7. Conclusions, Limitations, and Future Work

7.1. Conclusions

This study conducted an in-depth investigation into the application of ITS-CAL, powered by large language models (LLMs), in programming education. Through a comprehensive analysis of the usage behavior, learning outcomes, and subjective experiences of students with varying knowledge levels, the findings demonstrate the significant potential of ITS-CAL in providing immediate feedback, enhancing learning motivation, and improving educational outcomes. Additionally, the study highlights the differences in functional needs and usage patterns across different student groups.

Compared to previous research, this study underscores the innovative and practical value of ITS-CAL. While prior studies have primarily focused on the role of artificial intelligence as virtual teaching assistants or chatbots, they often overlooked the specific technical challenges students face in programming. By developing three auxiliary functions (Hint, Debug, and User-defined Question), ITS-CAL leverages the advanced language comprehension and generation capabilities of LLMs to provide enriched problem-solving support. Unlike traditional systems that offer single-level, fixed feedback, ITS-CAL introduces hierarchical feedback tailored to students’ individual needs, marking a significant advancement.

Past studies have noted limitations in chatbot responses, such as generalized feedback or insufficient guidance [15]. This study addresses these shortcomings through the design of ITS-CAL. For instance, the Debug function analyzes errors and provides example explanations, helping students develop a deeper understanding of the underlying issues. The Hint function avoids offering direct code solutions, instead encouraging guided problem solving, contrasting sharply with traditional chatbots that primarily deliver direct answers.

Furthermore, this study identifies distinct usage patterns among students with different knowledge levels, offering new insights that build upon the gaps in prior research. High-knowledge students tend to use the Hint and User-defined Question functions moderately, enhancing their problem-solving abilities. In contrast, low-knowledge students rely heavily on the Hint function but exhibit limited improvement in learning outcomes, indicating a need for additional, targeted support for this group. These findings highlight the importance of designing adaptive support mechanisms to address diverse student needs, providing a strong empirical foundation for the future development of LLM-driven educational systems.

The primary contribution of this study lies in the development of a programming learning system that combines the immediacy and flexibility of LLM technology with hierarchical feedback. The results demonstrate its potential to enhance students’ learning outcomes and optimize their educational experiences. Moving forward, these findings offer valuable guidance for improving intelligent teaching systems and underscore the need for continued innovation in leveraging artificial intelligence to support diverse learning environments.

7.2. Limitations and Future Work

The first key limitation of this study is the absence of a control group, which makes it challenging to isolate the direct impact of ITS-CAL on students’ learning outcomes. Without a comparison against students who did not use ITS-CAL, we cannot rule out the possibility that the observed improvements were influenced by external factors such as self-motivation, prior experience, or additional learning resources. Future studies should include a control group to better assess ITS-CAL’s effectiveness. Additionally, a longitudinal study could be conducted to track students’ learning progression over a longer period and evaluate the system’s long-term impact.

The second limitation of this study is that student learning was assessed solely through a final exam, without a pretest–posttest comparison or complementary performance-based evaluations. While the final exam provides a standardized measure of students’ programming proficiency, it does not capture individual learning progress or coding practices developed throughout the course. Future research should incorporate pretest–posttest comparisons to measure knowledge gains at an individual level. Additionally, alternative evaluation metrics such as code quality analysis, debugging efficiency, and project-based assessments could provide a more comprehensive picture of student learning. These additional measures would enhance the validity of the findings and offer deeper insights into the impact of ITS-CAL on programming skill development.

The third limitation of this study is that, while external tool usage was strictly controlled during the ITS-CAL evaluation, we did not measure students’ exposure to AI-assisted learning outside of the exam environment. Although proctors ensured that students did not use external generative AI tools (e.g., ChatGPT) during the exam, it remains possible that students’ prior experience with such tools influenced their overall programming ability. Future studies could incorporate survey-based self-reporting or tracking mechanisms to better understand students’ AI tool usage before the experiment. Additionally, comparative studies could be conducted to evaluate the effects of ITS-CAL under different learning conditions (e.g., with or without prior exposure to AI-assisted learning tools).