Abstract

The integration of automated tools in engineering education has the potential to improve student assessment by ensuring consistency and reducing instructor workload. This study introduces a Python-based automation tool designed to evaluate student Computer-Aided Design (CAD) submissions. The tool uses the CAD software API and the Open Cascade library to calculate model parameters. These parameters are compared against expected values from a solution file, and marks are assigned based on the deviation from those values. As a use case, seventy-five Solid Edge CAD files were assessed for geometric properties such as volume, surface area, and centre of gravity location to evaluate inter- and intra-marker reliability. The results showed perfect agreement, with a Cohen’s kappa of 1.0 for both inter- and intra-marker reliability. Furthermore, the automated tool reduced grading time by 89.7% compared to manual evaluation. These results demonstrate the potential of automation to improve marking efficiency, consistency, and objectivity in engineering education, and provide a foundation for further integration of such software. The Python-based automation script is openly available on GitHub.

1. Introduction

Computer-Aided Design (CAD) software has become an integral part of engineering education, allowing students to create and manipulate 3D models of parts and assemblies [1]. However, the assessment of student submissions in CAD courses can be time-consuming and labour-intensive for instructors [2]. This challenge is evident in courses using Solid Edge™ v2023, a popular CAD software package in mechanical engineering education. Automation in software engineering aims to partially or fully execute software engineering activities with minimal human intervention [3]. In the context of CAD education, automation can significantly streamline the assessment process, allowing instructors to focus on providing valuable feedback rather than repetitive tasks [4,5,6]. The application of automation techniques in educational settings has shown promising results in enhancing both teaching efficiency and student learning outcomes [7,8]. The increasing complexity of CAD models and the growing number of students in engineering programs have amplified the challenges faced by educators in providing timely and comprehensive feedback [3]. Traditional manual assessment methods often lead to inconsistencies in grading and delays in feedback delivery, which can hinder student progress and engagement [3]. Moreover, the time-intensive nature of manual grading limits instructors’ ability to focus on higher-order teaching activities, such as fostering critical thinking and problem-solving skills [3,9,10].

Automated assessment tools offer a solution to these challenges by providing rapid, consistent, and objective evaluations of student work [3,11]. By leveraging computational power, these tools can analyse complex geometric properties, adherence to design specifications, and other technical aspects of CAD models with high accuracy and speed [12,13,14]. This not only reduces the grading workload for instructors but also enables them to allocate more time to qualitative feedback and personalised instruction [4,15,16]. To address this need, a Python-based automation tool has been developed that facilitates the marking of student part file submissions. Python, with its extensive libraries and ease of use, is well suited for interacting with CAD software and automating repetitive tasks [17]. The tool leverages the software object model and Python’s capabilities to analyse student submissions efficiently. The choice of Python for this automation task is strategic. Python’s open-source nature, versatility, and rich ecosystem of libraries make it an ideal language for developing educational technology solutions. Its readability and relatively low learning curve also make it accessible to educators who may want to customise or extend the tool’s functionality. Furthermore, Python’s strong presence in data science and machine learning opens up possibilities for future enhancements, such as incorporating predictive analytics or adaptive learning features into the assessment process [17].

The integration of automated assessment tools in engineering education has been shown to improve the consistency and objectivity of grading processes [2,3]. Furthermore, such tools can provide students with immediate feedback, which is crucial for effective learning in technical disciplines. This aligns with established pedagogical principles that emphasise the importance of timely and detailed feedback in fostering student engagement and skill development [5,8,10]. Automated assessment tools also have the potential to transform the learning experience by enabling more frequent and formative assessments [6,16]. Instead of relying solely on summative evaluations at the end of a course, instructors can implement regular checkpoints throughout the learning process. This approach supports a more iterative and reflective learning cycle, allowing students to identify and address misconceptions or skill gaps early on [6,16,18]. The implementation of a marking automation tool could contribute to this growing body of research on educational technology in engineering. The work aligns with recent advancements in computer-aided assessment techniques for engineering education and reflects the broader trend of digital transformation in the field. As the engineering industry increasingly adopts automated design and analysis tools, exposing students to similar technologies in their educational journey becomes crucial. This approach not only enhances the efficiency of the educational process but also better prepares students for the realities of modern engineering practice [19]. By interacting with automated assessment tools, students gain familiarity with the type of software-driven workflows they are likely to encounter in their professional careers. This alignment between academic practices and industry trends can help bridge the often-cited gap between engineering education and professional requirements [19].

In this paper, the development and implementation of the marking automation tool are presented. The workflow by which the tool streamlines assessment is described: it automatically evaluates key aspects of student part files, such as geometric properties that reflect adherence to the design specification. The tool’s potential to enhance the learning experience by providing consistent and timely feedback to students is also discussed. By automating routine assessment tasks, instructors can dedicate more time to providing in-depth, qualitative feedback on complex design aspects that require human expertise. This shift in focus supports the development of critical thinking, creativity, and advanced problem-solving skills among students. The research contributes to the ongoing efforts to integrate advanced technologies into engineering education, aiming to prepare students for the increasingly digital and automated landscape of modern engineering practice [19]. Through this work, the aim is to bridge the gap between traditional CAD education methods and the evolving needs of the engineering industry, fostering a more efficient and effective learning environment for future engineers. The subsequent sections of this paper detail the methodology used in developing and testing the automation tool, present the results of its implementation, and discuss the implications of the findings for CAD education and engineering pedagogy. Potential future directions for this research are also explored, including the integration of machine learning techniques and the expansion of the tool’s capabilities to assess more complex design aspects.

2. Materials and Methods

2.1. Automation Tool Development

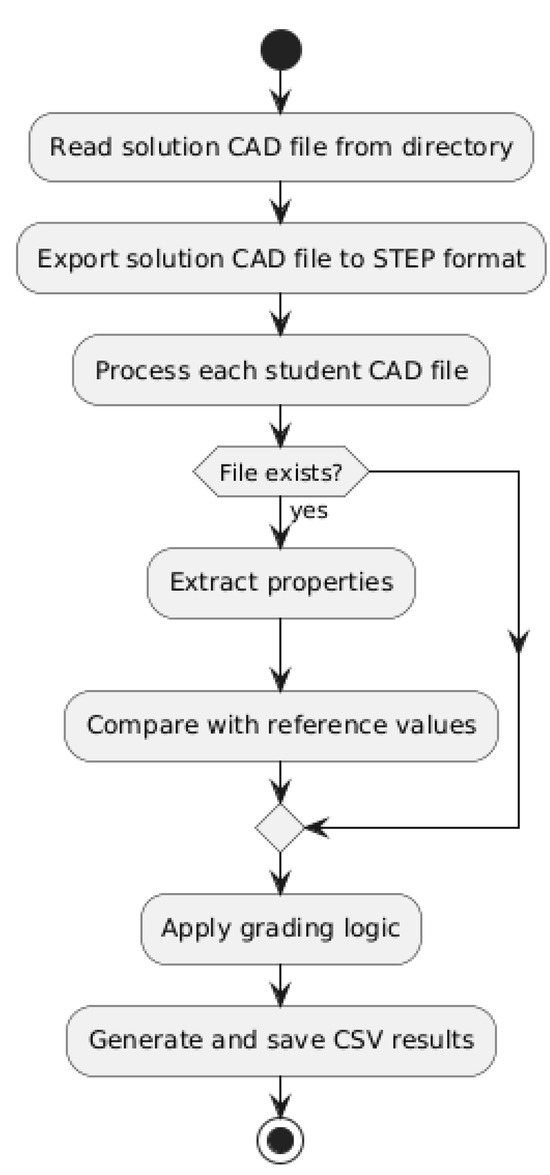

The methods employed in this study were designed to develop and evaluate a Python-based automation tool for assessing student CAD submissions. The tool was built using Python™ v3.9 and used the CAD software API to interact with CAD files. The workflow of the script (Figure 1) involved five steps: (1) Input Files and Setup: A solution file containing the expected parameters (P1 to Pn) was used as a reference. Student submissions were provided as CAD files located in a specified directory. The script required an output directory for intermediary files and generated a final CSV file summarising the marks for each submission. (2) Solution File Processing: The CAD solution file was exported to the STEP file format using a COM automation interface via the win32com.client module. The STEP file was then analysed using the Open Cascade (OCC) library to extract the expected parameters for comparison. (3) Student Submissions: Each CAD file in the submissions directory was exported and analysed in the same way as the solution file in Step 2. (4) Marking Scheme: Each property was evaluated against the expected value using a percentage difference method. (5) Output: Results, including calculated parameters and individual marks, were saved to a CSV file for quick review and further analysis. To ensure scalability and usability, the script processed all CAD files in the designated directory in a single run. Intermediate files, such as temporary STEP files and logs, were automatically deleted after processing to maintain a clean output directory.

Figure 1. Workflow of the Python-based automation tool for assessing student CAD submissions.
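The batch loop and CSV output of the workflow above can be sketched as follows. This is a minimal illustration rather than the published script: `run_batch`, its parameters, and the CSV column names are hypothetical, and `measure` is a stand-in for the STEP export and OCC analysis, which are not reproduced here. The `*.par` pattern assumes Solid Edge part files.

```python
import csv
from pathlib import Path
from typing import Callable, Dict, Optional

# Property name -> measured value (None when a property could not be extracted).
Properties = Dict[str, Optional[float]]


def run_batch(
    submission_dir: Path,
    output_csv: Path,
    expected: Dict[str, float],
    measure: Callable[[Path], Properties],
    mark: Callable[[Optional[float], float], int],
    pattern: str = "*.par",
) -> int:
    """Mark every file matching `pattern` and write one CSV row per file.

    `measure` is a hypothetical stand-in for the export-and-analyse step;
    `mark` maps (measured, expected) to an integer mark.
    Returns the number of files processed.
    """
    names = list(expected)
    header = ["file"] + names + [f"{n}_mark" for n in names] + ["total"]
    files = sorted(submission_dir.glob(pattern))
    with output_csv.open("w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(header)
        for cad_file in files:
            try:
                measured = measure(cad_file)
            except Exception:
                # Assumption: an unreadable file is recorded with no
                # properties (and so no marks) instead of stopping the run.
                measured = {}
            values = [measured.get(n) for n in names]
            marks = [mark(measured.get(n), expected[n]) for n in names]
            writer.writerow([cad_file.name] + values + marks + [sum(marks)])
    return len(files)
```

Passing `measure` and `mark` in as functions keeps the loop independent of any particular CAD package, so the same skeleton could in principle wrap a different export or analysis step.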

2.2. Solid Edge Use Case

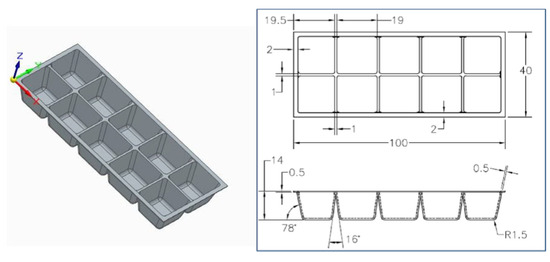

The tool was tested on a dataset of seventy-five Solid Edge 3D CAD part files with auto-generated ID filenames, adapted from previous university Year 1 Engineering class test submissions. The script was run on a standard laptop computer (Intel i5 processor, 4 cores, 8 GB RAM; Dell Technologies Inc., Texas, USA) with Solid Edge 2023 installed. The primary objective was to automate the evaluation of geometric properties, including volume (P1), surface area (P2), and the centre of gravity (CG) XYZ location (P3), and compare these metrics against expected values derived from a reference solution file. Full marks (5) were awarded if the deviation was ≤1%. Reduced marks (4 to 1) were assigned for deviations up to 80%. No marks were awarded for deviations above 80% or for missing properties. The maximum total score for each file was 15 marks, with 5 marks allocated to each of volume, surface area, and CG location with respect to the coordinate system (Figure 2). The development process included an analysis of the Solid Edge API to identify the most efficient methods for extracting geometric data. Python’s win32com.client module was used to access Solid Edge’s COM automation interface, allowing direct interaction with CAD files. The OCC library was then integrated into the workflow to analyse STEP files generated from Solid Edge part files. The script is freely available on GitHub (see ‘Data Availability Statement’).

Figure 2. Three-dimensional model and respective engineering drawing utilised for the analysis.
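The marking scheme for a single scalar property can be sketched as below. Only the 1% and 80% limits come from the text; the intermediate cut-offs (5%, 20%, 50%) and the names `BANDS`, `percent_deviation`, and `mark_property` are assumptions made for illustration. How the three CG coordinates are reduced to a single deviation is not stated in the text, so that step is left out.

```python
from typing import Optional

# (upper deviation limit in %, mark). The 1% and 80% limits are from the text;
# the 5%, 20% and 50% cut-offs are hypothetical placeholders.
BANDS = [(1.0, 5), (5.0, 4), (20.0, 3), (50.0, 2), (80.0, 1)]


def percent_deviation(measured: float, expected: float) -> float:
    """Absolute deviation of `measured` from `expected`, as a percentage."""
    if expected == 0:
        raise ValueError("expected value must be non-zero")
    return abs(measured - expected) / abs(expected) * 100.0


def mark_property(measured: Optional[float], expected: float) -> int:
    """Return a mark from 0 to 5 for one property; missing values score 0."""
    if measured is None:
        return 0
    deviation = percent_deviation(measured, expected)
    for upper_limit, mark in BANDS:
        if deviation <= upper_limit:
            return mark
    return 0  # deviation above 80%


# Example with hypothetical values: a volume 0.5% above the solution value.
print(mark_property(100.5, 100.0))  # 5
```

Summing `mark_property` over volume, surface area, and CG location would give the 15-mark total described above.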

Reliability assessments were conducted by comparing the automated tool’s marks with those assigned manually by an experienced instructor. Inter-marker reliability was measured by calculating Cohen’s kappa and percentage agreement between the automated and manual marks across all submissions [20,21]. To evaluate the intra-marker reliability of the automation script, the marks it assigned to identical submissions were compared using Cohen’s kappa and percentage agreement. The identical submissions were intentionally added to the dataset, were given different auto-generated ID filenames, and were only included for the intra-marker reliability calculation. Cohen’s kappa was calculated using standard formulae that accounted for observed agreement and chance agreement between markers [20,21]. Percentage agreement provided an additional measure of consistency by quantifying the proportion of identical scores assigned by both methods [20,21]. The efficiency of the tool was also assessed by comparing its processing time against manual grading time for the same dataset.
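For reference, the standard unweighted form of Cohen’s kappa is κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance. The sketch below implements that formula and percentage agreement in plain Python; the function names and the example marks are illustrative and are not taken from the study’s data or script.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def percentage_agreement(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Percentage of items given identical marks by both markers."""
    if len(a) != len(b) or not a:
        raise ValueError("mark lists must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a) * 100.0


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two markers rating the same items."""
    n = len(a)
    p_o = percentage_agreement(a, b) / 100.0
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: product of the two markers' marginal proportions,
    # summed over every mark category.
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1.0:
        # Both markers used one single category throughout; kappa is undefined.
        return math.nan
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical marks for five submissions (not the study's data).
manual = [5, 4, 5, 0, 3]
automated = [5, 4, 5, 0, 3]
print(cohens_kappa(manual, automated))          # 1.0
print(percentage_agreement(manual, automated))  # 100.0
```

Note that kappa is undefined when both markers assign the same single mark to every item, which is why the sketch returns NaN in that case instead of 1.0.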

3. Results

The Python-based automation tool showed perfect inter-marker and intra-marker reliability in the assessment of CAD files. Across all 75 files, the automated tool’s marks for volume, surface area, and CG location matched the manually assigned marks. This yielded a Cohen’s kappa of 1.0 and a 100% agreement rate, indicating perfect inter-marker reliability. Intra-marker reliability was equally robust. When processing identical files with distinct auto-generated ID filenames, the script produced the same geometric properties and assigned the same marks. This resulted in a Cohen’s kappa of 1.0 and a 100% agreement rate for intra-marker reliability, further illustrating the tool’s accuracy and reliability.

A notable advantage of the automation script was its substantial reduction in processing time. The automated tool completed the evaluation of all 75 CAD files in 22 min, a significant improvement over the manual marking process, which required 213 min (excluding breaks). This represents an 89.7% reduction in processing time, underscoring the considerable efficiency gains achieved through automation.
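The reported reduction follows directly from the two timings. The short check below reproduces it; the per-file averages are derived here for illustration and are not figures reported in the study.

```python
def percent_reduction(manual_min: float, automated_min: float) -> float:
    """Time saved by the automated run, as a percentage of the manual time."""
    return (manual_min - automated_min) / manual_min * 100.0


N_FILES = 75
MANUAL_MIN = 213.0     # manual marking time, excluding breaks
AUTOMATED_MIN = 22.0   # automated processing time

reduction = percent_reduction(MANUAL_MIN, AUTOMATED_MIN)
print(f"Reduction: {reduction:.1f}%")                                # Reduction: 89.7%
print(f"Manual: {MANUAL_MIN / N_FILES:.2f} min per file")            # Manual: 2.84 min per file
print(f"Automated: {AUTOMATED_MIN * 60 / N_FILES:.1f} s per file")   # Automated: 17.6 s per file
```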

4. Discussion

The findings of this study underscore the advantages of integrating automation into CAD education. The Python-based automation tool achieved perfect inter-marker and intra-marker reliability, indicating that it grades CAD submissions accurately and consistently. These results align with previous research emphasising the reliability of automated tools in educational contexts, particularly in minimising human error and bias in grading processes [12,13,14]. The development and implementation of this tool represent a step forward in enhancing CAD education. By streamlining the assessment process and providing consistent, objective feedback, the tool addresses key challenges in engineering education [4,10]. The perfect reliability and substantial time savings demonstrate the potential for automation to transform educational practices in technical fields. As the field moves forward, it is crucial to continue refining and expanding the capabilities of such tools while maintaining a balanced approach that values both technological efficiency and human insight. By doing so, the field can create more effective, engaging, and industry-relevant educational experiences for future engineers, preparing them for the challenges and opportunities of an increasingly digital and automated professional landscape [19].

The tool’s ability to achieve 100% agreement with manual assessments indicates that it can serve as a reliable substitute for traditional evaluation methods, enabling instructors to focus on more qualitative aspects of feedback [4,10]. This is particularly important in CAD education, where understanding the rationale behind design decisions often requires nuanced, human insight [1,9]. By automating the grading of objective metrics such as volume, surface area, and CG location, instructors can allocate more time to addressing complex design principles and fostering creative problem-solving skills among students. Another outcome of this study is the dramatic reduction in grading time. The automation tool’s ability to process 75 CAD files in 22 min, compared to 213 min for manual grading, represents a nearly 90% improvement in efficiency. This time-saving benefit is consistent with prior studies on the adoption of automation in education, which report similar enhancements in workflow efficiency [12,13,14]. Such improvements are particularly valuable in large classes, where the grading workload can be overwhelming for instructors. From a pedagogical perspective, the immediate feedback provided by the automation tool has the potential to significantly enhance student learning outcomes [12,13,14]. Prompt feedback is a critical component of effective teaching, allowing students to identify and address errors in their work more efficiently [12,13,14]. By incorporating this tool into the curriculum, instructors can provide students with detailed, timely evaluations, fostering a more iterative and reflective learning process. This aligns with learning theories which emphasise the importance of active engagement and self-assessment in skill development [4].

The implications of this research extend beyond education. The automation of CAD evaluation reflects broader trends in engineering practice, where automation and digital tools are increasingly used to streamline workflows and enhance productivity [19]. By familiarising students with these technologies, educators can better prepare them for the demands of the modern engineering workplace, bridging the gap between academic instruction and professional practice [19]. The implementation of automated assessment tools in CAD education also raises important considerations regarding the role of technology in shaping pedagogical approaches. While the efficiency gains are evident, it is crucial to maintain a balance between automated and human-led instruction. The tool’s ability to provide consistent and objective feedback on technical aspects of CAD models can be complemented by instructor-led discussions on design philosophy, problem-solving strategies, and industry best practices.

Furthermore, the integration of such automated tools into the curriculum necessitates a re-evaluation of assessment strategies. Traditional grading rubrics may need to be adapted to incorporate both automated and manual evaluation components, ensuring a comprehensive assessment of student skills [12]. This hybrid approach can lead to more nuanced and holistic evaluations of student work, potentially improving the overall quality of CAD education. The introduction of automated assessment tools can significantly impact student learning experiences. By providing rapid feedback, students can engage in more iterative design processes, fostering a culture of continuous improvement [6,16]. This aligns with the principles of formative assessment, where ongoing feedback is used to guide and enhance learning throughout the course [6,16]. Moreover, the consistency of automated feedback can help students develop a more systematic approach to CAD modelling. By repeatedly encountering objective evaluations of their work, students may become more attuned to the technical requirements and best practices in CAD. This can lead to improved self-assessment skills and a deeper understanding of CAD principles [1].

Despite the promising results, several challenges and limitations must be addressed for instructors and students to benefit fully from the tool. As students progress to more complex designs, the tool may need to evolve to handle advanced features. Its reliance on specific software versions, file formats, and APIs may pose challenges in maintaining long-term compatibility and accessibility. Expanding the tool to work with various CAD software packages, each with unique features and modelling approaches, presents a significant technical challenge but would enhance its utility in diverse educational settings. Additionally, while the tool excels in evaluating quantitative metrics, its capacity to assess more subjective aspects of design, such as creativity or aesthetic quality, remains limited. The focus was on geometric properties due to their standardisation. Checks for model tree quality, constrained sketches, and material properties could not be incorporated through the Solid Edge API, as .par files are a proprietary format. Other CAD software APIs may be able to incorporate these parameters. This highlights a limitation of proprietary software for generalisability and open science. The CG location relative to the coordinate system provides a proxy for checking that students can use the relationship features in Solid Edge.

To address these challenges and further enhance the capabilities of automated CAD assessment tools, several avenues for future research and development can be explored. Incorporating machine learning algorithms could enable the tool to assess more subjective aspects of design, such as aesthetics or ergonomics, expanding the scope of automated grading in CAD education [22]. Developing a more versatile tool that can interact with multiple CAD software platforms (e.g., SolidWorks, AutoCAD, or CATIA) would increase its applicability across different educational settings. Applying the automated marking approach to other engineering software, such as finite element analysis, signal processing, or multibody modelling packages, could extend automated grading further within engineering education [23,24,25]. Integrating features that support peer review and collaborative design processes could enhance the tool’s educational value [6,22]. Virtual reality [26], augmented reality [27], and generative artificial intelligence [28,29] provide an exciting opportunity for education enhancement in engineering, particularly around design and manufacturing [30,31]. Implementing adaptive learning algorithms could personalise the feedback and learning experience based on individual student performance and progress [32].

5. Conclusions

This study demonstrates the efficacy of a Python-based automation tool for assessing student CAD submissions, offering significant implications for the future of engineering education. The tool’s performance, characterised by perfect inter-marker and intra-marker reliability, coupled with a substantial reduction in grading time of nearly 90%, underscores its potential to enhance CAD education. By providing consistent and objective evaluations of geometric properties, the tool addresses repetitive tasks and key challenges in the assessment process, enhancing both efficiency and accuracy. The findings of this research highlight the transformative potential of automation in CAD education, particularly in its capacity to enhance grading efficiency, reliability, and feedback quality. This aligns with broader trends in educational technology, where automated assessment tools are increasingly recognised for their ability to streamline administrative tasks and provide timely, constructive feedback to students. The tool’s ability to rapidly process and evaluate CAD files not only alleviates the burden on instructors but also enables more frequent and detailed assessments, potentially leading to improved learning outcomes. Future work should focus on expanding the tool’s compatibility with other CAD platforms, which would significantly enhance its applicability across diverse educational settings. Additionally, exploring the integration of advanced algorithms to assess qualitative design aspects, such as creativity and aesthetic quality, represents an interesting next step in the evolution of automated CAD assessment. This development would bridge the gap between automated and human assessment, providing a more comprehensive evaluation of student work. By leveraging the capabilities of automation, educators can create a more effective and engaging learning environment that better prepares students for the realities of modern engineering practice. 
As these technologies continue to evolve, they have the potential to significantly enhance the quality and effectiveness of CAD teaching, ultimately producing graduates who are better equipped to meet the challenges of an increasingly digital and automated engineering landscape.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The automation script is available on GitHub: https://github.com/GTBiomech/CADMarkingAutomation (accessed on 10 January 2025).

Conflicts of Interest

The author declares no conflicts of interest.

References

1. Hamade, R.F.; Artail, H.A.; Jaber, M.Y. Evaluating the learning process of mechanical CAD students. Comput. Educ. 2007, 49, 640–661.

2. Bojcetic, N.; Valjak, F.; Zezelj, D.; Martinec, T. Automatized evaluation of students’ CAD models. Educ. Sci. 2021, 11, 145.

3. Messer, M.; Brown, N.C.; Kölling, M.; Shi, M. Automated grading and feedback tools for programming education: A systematic review. ACM Trans. Comput. Educ. 2024, 24, 1–43.

4. Hattie, J.; Timperley, H. The power of feedback. Rev. Educ. Res. 2007, 77, 81–112.

5. Deeva, G.; Bogdanova, D.; Serral, E.; Snoeck, M.; De Weerdt, J. A review of automated feedback systems for learners: Classification framework, challenges and opportunities. Comput. Educ. 2021, 162, 104094.

6. Shute, V.J. Focus on formative feedback. Rev. Educ. Res. 2008, 78, 153–189.

7. Moreno, R.; Bazán, A.M. Automation in the teaching of descriptive geometry and CAD. High-level CAD templates using script languages. IOP Conf. Ser. Mater. Sci. Eng. 2017, 245, 062040.

8. Serral, E.; De Weerdt, J.; Sedrakyan, G.; Snoeck, M. Automating immediate and personalized feedback taking conceptual modelling education to a next level. In Proceedings of the 2016 IEEE Tenth International Conference on Research Challenges in Information Science, Grenoble, France, 1–3 June 2016; pp. 1–6.

9. Hunter, R.; Rios, J.; Perez, J.M.; Vizan, A. A functional approach for the formalization of the fixture design process. Int. J. Mach. Tools Manuf. 2006, 46, 683–697.

10. Subheesh, N.P.; Sethy, S.S. Learning through assessment and feedback practices: A critical review of engineering education settings. EURASIA J. Math. Sci. Tech. Educ. 2020, 16, em1829.

11. Jaakma, K.; Kiviluoma, P. Auto-assessment tools for mechanical computer aided design education. Heliyon 2019, 5, e02622.

12. Company, P.; Contero, M.; Otey, J.; Plumed, R. Approach for developing coordinated rubrics to convey quality criteria in MCAD training. Comput. Aided Des. 2015, 63, 101–117.

13. Colombo, G.; Mosca, A.; Sartori, F. Towards the design of intelligent CAD systems: An ontological approach. Adv. Eng. Info. 2007, 21, 153–168.

14. Steudel, H.J. Computer-aided process planning: Past, present and future. Int. J. Prod. Res. 1984, 22, 253–266.

15. Ujir, H.; Salleh, S.F.; Marzuki, A.S.W.; Hashim, H.F.; Alias, A.A. Teaching Workload in 21st Century Higher Education Learning Setting. Int. J. Eval. Res. Educ. 2020, 9, 221–227.

16. Gikandi, J.W.; Morrow, D.; Davis, N.E. Online formative assessment in higher education: A review of the literature. Comput. Educ. 2011, 57, 2333–2351.

17. Machado, F.; Malpica, N.; Borromeo, S. Parametric CAD modeling for open source scientific hardware: Comparing OpenSCAD and FreeCAD Python scripts. PLoS ONE 2019, 14, e0225795.

18. Chappuis, S.; Stiggins, R.J. Classroom assessment for learning. Educ. Lead 2002, 60, 40–44.

19. Radcliffe, D.F. Innovation as a meta attribute for graduate engineers. Int. J. Eng. Educ. 2005, 21, 194–199.

20. Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates, Inc.: Hillsdale, NJ, USA, 1988.

21. Hallgren, K.A. Computing inter-rater reliability for observational data: An overview and tutorial. Tutor. Quant. Methods Psychol. 2012, 8, 23.

22. Singh, J.; Perera, V.; Magana, A.J.; Newell, B.; Wei-Kocsis, J.; Seah, Y.Y.; Strimel, G.J.; Xie, C. Using machine learning to predict engineering technology students’ success with computer-aided design. Comput. Appl. Eng. Educ. 2022, 30, 852–862.

23. Tierney, G.; Weaving, D.; Tooby, J.; Al-Dawoud, M.; Hendricks, S.; Phillips, G.; Stokes, K.A.; Till, K.; Jones, B. Quantifying head acceleration exposure via instrumented mouthguards (iMG): A validity and feasibility study protocol to inform iMG suitability for the TaCKLE project. BMJ Open Sport Exerc. Med. 2021, 7, e001125.

24. Tierney, G.J.; Simms, C. Predictive Capacity of the MADYMO Multibody Human Body Model Applied to Head Kinematics during Rugby Union Tackles. Appl. Sci. 2019, 9, 726.

25. Giudice, J.S.; Zeng, W.; Wu, T.; Alshareef, A.; Shedd, D.F.; Panzer, M.B. An analytical review of the numerical methods used for finite element modeling of traumatic brain injury. Ann. Biomed. Eng. 2019, 47, 1855–1872.

26. Soliman, M.; Pesyridis, A.; Dalaymani-Zad, D.; Gronfula, M.; Kourmpetis, M. The application of virtual reality in engineering education. Appl. Sci. 2021, 11, 2879.

27. Solmaz, S.; Alfaro, J.L.; Santos, P.; Van Puyvelde, P.; Van Gerven, T. A practical development of engineering simulation-assisted educational AR environments. Educ. Chem. Eng. 2021, 35, 81–93.

28. Regenwetter, L.; Nobari, A.H.; Ahmed, F. Deep generative models in engineering design: A review. J. Mech. Des. 2022, 144, 071704.

29. Tsai, M.L.; Ong, C.W.; Chen, C.L. Exploring the use of large language models (LLMs) in chemical engineering education: Building core course problem models with Chat-GPT. Educ. Chem. Eng. 2023, 44, 71–95.

30. Hunde, B.R.; Woldeyohannes, A.D. Future prospects of computer-aided design (CAD)—A review from the perspective of artificial intelligence (AI), extended reality, and 3D printing. Res. Eng. 2022, 14, 100478.

31. Nazir, A.; Azhar, A.; Nazir, U.; Liu, Y.F.; Qureshi, W.S.; Chen, J.E.; Alanazi, E. The rise of 3D Printing entangled with smart computer aided design during COVID-19 era. J. Manuf. Syst. 2021, 60, 774–786.

32. Pando Cerra, P.; Fernández Álvarez, H.; Busto Parra, B.; Castaño Busón, S. Boosting computer-aided design pedagogy using interactive self-assessment graphical tools. Comput. Appl. Eng. Educ. 2023, 31, 26–46.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).