4.3.1. Verification of Optimized Parameters

The hyperparameter configurations obtained through Optuna optimization must be validated on an independent dataset to ensure the reliability and stability of the optimized results. The model is retrained and evaluated using the dataset described in Section 3.1, with the pre-trained model reinitialized to verify the effectiveness of the optimized hyperparameters. The experimental setup follows the configuration outlined in Section 4.1.2. After replacing the empirical hyperparameters with those identified by Optuna (Table 8), the model is trained for 4000 steps, with checkpoints saved every 200 steps.

In low-resource settings, models are highly susceptible to overfitting due to the limited amount of training data. To mitigate this issue, this paper introduces a dual-metric early stopping strategy to improve training stability and prevent performance degradation.
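The text does not spell out the two metrics or the stopping rule at this point, so the following is only a minimal sketch of one plausible reading: validation loss and validation WER are both monitored, and training stops when neither has improved for a number of consecutive evaluations. The class name, the `patience` parameter, and the choice of metrics are assumptions made for illustration, not the configuration used in the experiments.

```python
class DualMetricEarlyStopping:
    """Hypothetical early stopper that watches two validation metrics.

    Assumption: lower is better for both metrics (validation loss and WER).
    Training stops only when NEITHER metric has improved for `patience`
    consecutive evaluations.
    """

    def __init__(self, patience=3):
        self.patience = patience
        self.best_loss = float("inf")
        self.best_wer = float("inf")
        self.bad_evals = 0  # consecutive evaluations without any improvement

    def update(self, val_loss, val_wer):
        """Record one evaluation; return True if training should stop."""
        improved = False
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            improved = True
        if val_wer < self.best_wer:
            self.best_wer = val_wer
            improved = True
        self.bad_evals = 0 if improved else self.bad_evals + 1
        return self.bad_evals >= self.patience


# Illustrative values only (not taken from the experiments).
stopper = DualMetricEarlyStopping(patience=2)
history = [(1.00, 50.0), (0.90, 51.0), (0.95, 49.0), (0.95, 49.5), (0.96, 50.0)]
decisions = [stopper.update(loss, wer) for loss, wer in history]
```

Requiring both metrics to stall before stopping is the more conservative choice; a stricter variant would stop as soon as either metric stops improving.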

To robustly evaluate the effectiveness of the LoRA fine-tuning strategies and hyperparameter optimization, repeated experiments with different random seeds were conducted on three low-resource languages (Ug, Kk, and Ky). Specifically, each configuration was trained independently with three distinct random seeds (42, 2025, and 12). The final WER was recorded for each run, and the mean, standard deviation (SD), and 95% confidence interval (CI) were calculated to ensure robust and reproducible results. The reported performance metrics are averages over the three seeds. The results are summarized in Table 9.
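As a rough illustration of how the mean, SD, and 95% CI can be obtained from three seed runs, the sketch below uses the sample standard deviation and a Student's t interval. The choice of a t-based interval is an assumption (the method used to compute the CI is not stated here), and the WER values in the usage lines are hypothetical.

```python
import math
import statistics

# Two-sided 95% critical values of Student's t, indexed by degrees of freedom.
T_CRIT_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776}


def summarize_wer(wers):
    """Return (mean, sample SD, (ci_low, ci_high)) for a small set of runs."""
    n = len(wers)
    mean = statistics.mean(wers)
    sd = statistics.stdev(wers)  # sample SD (n - 1 in the denominator)
    half_width = T_CRIT_95[n - 1] * sd / math.sqrt(n)
    return mean, sd, (mean - half_width, mean + half_width)


# Hypothetical final WERs (%) from three seeds, for illustration only.
mean, sd, ci = summarize_wer([34.0, 35.0, 36.0])
```

With only three runs the t critical value is large (4.303 for two degrees of freedom), so the interval is wide unless the runs agree closely.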

Experimental results show that after LoRA fine-tuning with parameters optimized via Optuna, the WER for all three languages was substantially lower than that of the baseline LoRA fine-tuning. Specifically, for Ug, the WER decreased from 55.58% to 34.60%, an absolute reduction of 20.98 percentage points; for Kk, it dropped from 30.81% to 24.35%, a reduction of 6.46 percentage points; and for Ky, it declined from 49.84% to 41.12%, a reduction of 8.72 percentage points. In addition, the small standard deviations and narrow 95% confidence intervals indicate that the results are stable and reproducible across repeated experiments.
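The reductions quoted for the three languages are differences between two WER values, that is, percentage points. A short sketch that recomputes them from the reported WERs and also derives the corresponding relative reductions (the function and variable names are illustrative):

```python
# Mean WER (%) before and after Optuna optimization, as reported in the text.
REPORTED_WER = {
    "Ug": (55.58, 34.60),
    "Kk": (30.81, 24.35),
    "Ky": (49.84, 41.12),
}


def wer_reduction(baseline, optimized):
    """Return (absolute reduction in points, relative reduction in %)."""
    absolute = baseline - optimized
    relative = 100.0 * absolute / baseline
    return absolute, relative


reductions = {lang: wer_reduction(b, o) for lang, (b, o) in REPORTED_WER.items()}
for lang, (absolute, relative) in reductions.items():
    print(f"{lang}: -{absolute:.2f} points ({relative:.1f}% relative)")
```

Reporting both figures avoids ambiguity, since a drop of 20.98 points from 55.58% is a much larger relative change than 20.98%.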

After completing the overall performance evaluation using multiple random seeds, this paper further analyzed the statistical significance of the optimal parameter configuration by performing more detailed statistical tests and examining the training process under a fixed random seed (seed = 42). Subsequently, a Wilcoxon signed-rank test [38] was conducted on the paired WER values between the baseline LoRA model and the parameter configuration obtained through Optuna + LoRA optimization on the test set. The Wilcoxon signed-rank test is a nonparametric paired test suitable for situations where the observed differences do not follow a normal distribution. The procedure involves calculating the differences between each pair of observations, as shown in Equation (8):

$$d_i = x_i - y_i, \quad i = 1, \dots, n \tag{8}$$

where $x_i$ and $y_i$ denote the WER of the $i$-th test sample under the baseline LoRA model and the Optuna + LoRA configuration, respectively, and $n$ is the number of paired samples.

Following the exclusion of samples with zero differences, the absolute values of the remaining differences were sorted in ascending order and assigned corresponding ranks. The original signs of the differences were then restored to determine positive and negative directions, yielding the positive rank sum and negative rank sum, respectively. The Wilcoxon signed-rank test uses the smaller of these two rank sums as the test statistic. Based on this statistic, the p-value is calculated to assess whether the difference between the two groups is statistically significant (p-value < 0.05).
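The procedure described above can be sketched in a few lines of standard-library Python. This is an illustrative implementation, not the code used in the study: it assigns average ranks to tied absolute differences and approximates the two-sided p-value with a normal distribution, without tie or continuity correction (for small samples an exact table is preferable). The input values are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, W, two-sided p) for paired samples x, y."""
    # Step 1: pairwise differences, excluding zero differences.
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Step 2: rank absolute differences in ascending order (average ranks for ties).
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # positions i..j share one average rank
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1

    # Step 3: restore signs and sum the ranks in each direction.
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    w_minus = sum(r for r, di in zip(ranks, d) if di < 0)
    w = min(w_plus, w_minus)  # test statistic: the smaller rank sum

    # Step 4: normal approximation to the null distribution of W.
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mu) / sigma
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, w_minus, w, p_value


# Hypothetical per-sentence error counts for two systems.
baseline = [10, 12, 9, 15, 8]
optimized = [8, 13, 9, 11, 5]
w_plus, w_minus, w, p = wilcoxon_signed_rank(baseline, optimized)
```

In this toy example one pair has a zero difference and is dropped, leaving four ranked differences; with so few pairs the test cannot reach significance at the 0.05 level.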

As shown in Table 10, experimental results on the test set indicate that LoRA fine-tuning under the optimal parameter configuration significantly outperforms baseline LoRA fine-tuning across all three low-resource languages (Ky, Ug, Kk) (p-value < 0.05). Sentence-level comparisons show that recognition accuracy improves for most sentences under the optimal parameter configuration, supporting the effectiveness and stability of the hyperparameter optimization. Furthermore, this paper examines the evolution of training-stage loss and WER to evaluate the model’s training process and convergence under the optimized parameters.

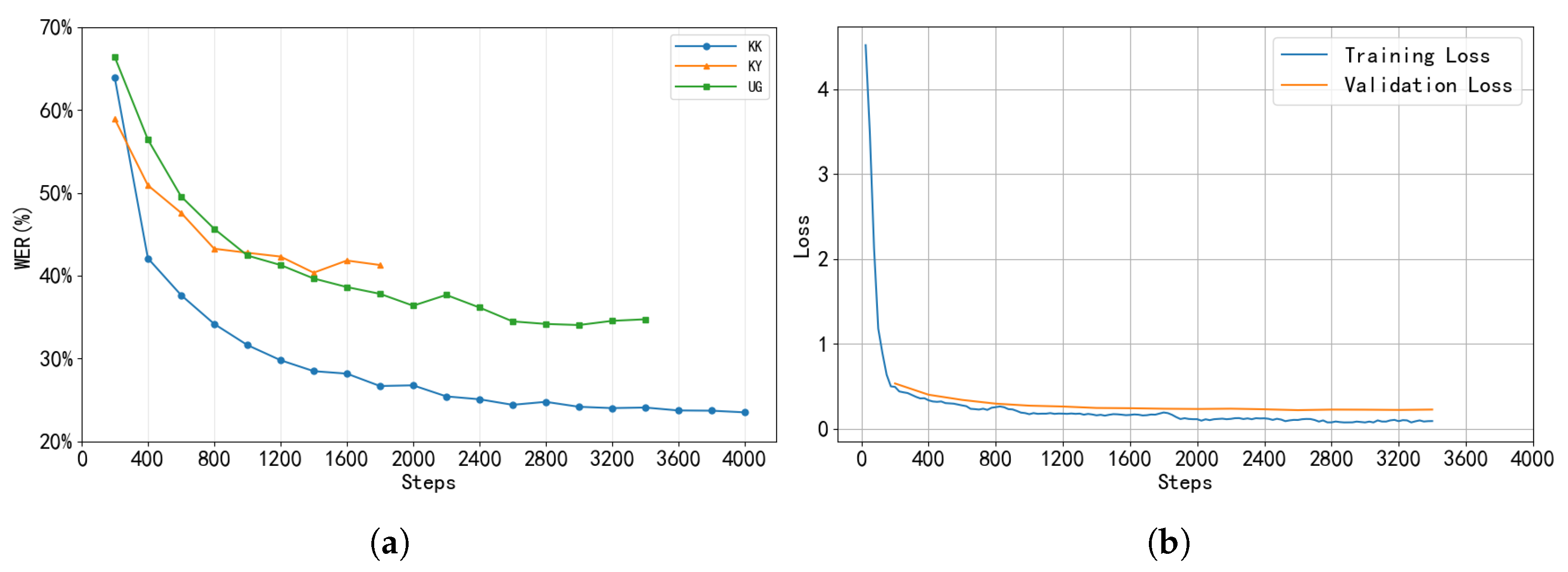

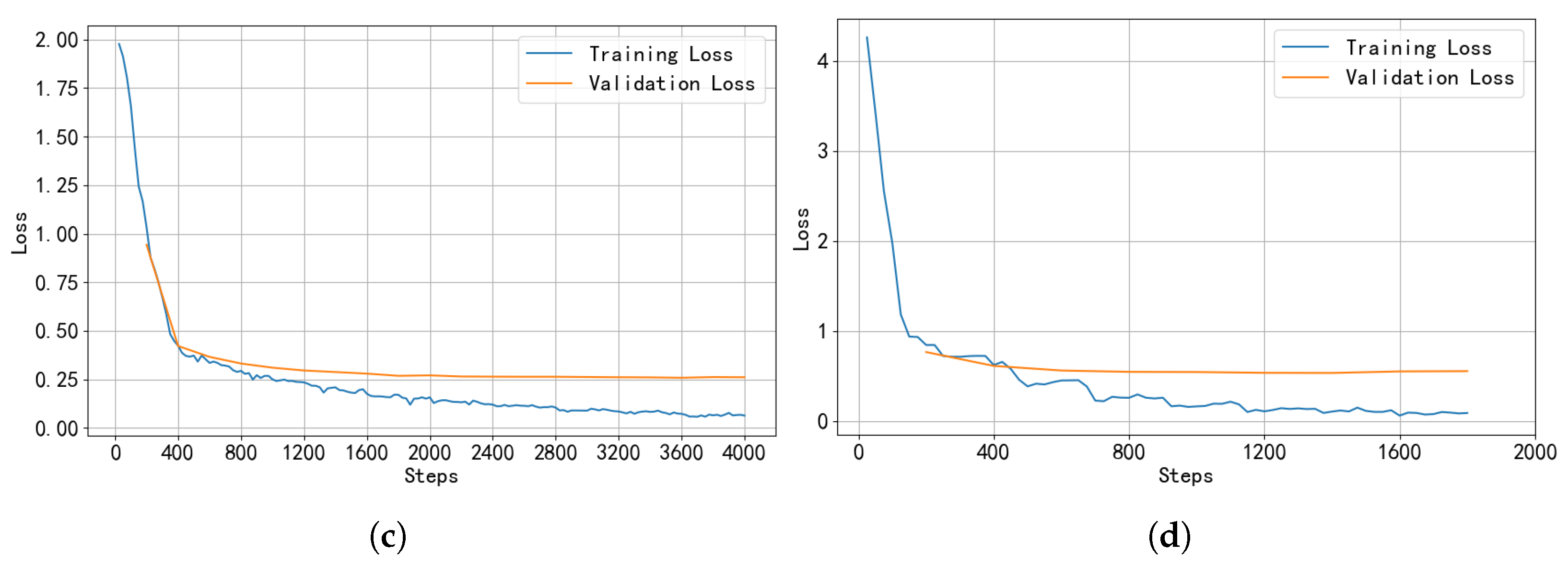

Figure 5 illustrates the training performance of three low-resource languages under optimized parameter settings.

Figure 5a shows the WER evolution for the three languages during training. The WER for all three languages demonstrates a clear downward trend, indicating that the model’s recognition capability for Turkic languages improves progressively. Specifically, Ug converged at 3000 steps with a WER of 34.04%; Ky converged at 1400 steps with a WER of 40.35%; and Kk converged at 3600 steps with a WER of 24.09%. These results demonstrate that the parameter combinations obtained via Optuna optimization effectively enhance the model’s recognition performance on these low-resource languages.

Figure 5b–d show the training and validation loss curves for Ug, Kk, and Ky, respectively. For Ug and Kk, the training and validation loss curves follow similar trends, showing no obvious signs of overfitting. In the case of Ky, the training and validation losses differ by approximately 0.5 but both converge to stable values, indicating that the model achieves good fit on both the training and validation sets. These results suggest that the dual-metric early stopping strategy adopted in this study helps the model converge stably.

4.3.2. Parameter Impact Analysis

In resource-constrained speech recognition tasks, model performance is particularly sensitive to hyperparameters. For each of the three languages, this study conducted 50 rounds of hyperparameter tuning experiments, with some trials prematurely terminated due to median pruning and thus excluded from subsequent parameter analysis. Upon aggregating the optimization results, certain trials exhibited abnormally high WER values, which could potentially skew assessments of the true contributions of individual hyperparameters. To achieve a robust sensitivity analysis, two metrics were introduced: Median WER and Median Absolute Deviation (MAD). Median WER serves as a measure of central tendency, is insensitive to extreme WER values, and accurately reflects model performance across the majority of samples. MAD functions as a robust measure of dispersion, quantifying the deviation of WER from the median. Compared with standard deviation, MAD demonstrates stronger resilience to outliers.

Table 11 presents the mean WER, median WER, and MAD across all experimental results for the three low-resource languages, reflecting the overall performance distribution and variability of the model under different hyperparameter combinations. Median WER and MAD provide more robust measures of central tendency and dispersion, effectively mitigating the influence of extreme WER values. Based on these metrics, outlier experiments were identified and removed using a criterion based on the median and MAD. After this cleaning process, 32, 37, and 34 complete experiments remained for Ug, Kk, and Ky, respectively, for subsequent analysis. The removal rate did not exceed 14%, so the overall trends are unlikely to be affected. Using the outlier-cleaned data, further analyses were conducted, including Optuna hyperparameter sensitivity analysis, parameter correlation analysis, and significance testing based on p-values. This approach makes the final analysis more robust and limits the influence of extreme outlier experiments.
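A minimal sketch of median/MAD-based outlier filtering is given below. The exact threshold used in the study is not recoverable from the text, so the multiplier `k` and the two-sided form of the rule are assumptions, and the WER values are hypothetical.

```python
import statistics


def median_and_mad(values):
    """Return (median, median absolute deviation) of a list of numbers."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    return med, mad


def remove_outliers(wers, k=3.0):
    """Keep trials whose WER lies within k * MAD of the median.

    `k` is a hypothetical threshold chosen for illustration only.
    """
    med, mad = median_and_mad(wers)
    return [w for w in wers if abs(w - med) <= k * mad]


# Hypothetical trial WERs (%): five typical trials and one diverged trial.
trial_wers = [30, 31, 32, 33, 34, 90]
med, mad = median_and_mad(trial_wers)
kept = remove_outliers(trial_wers)
```

Here the diverged trial barely moves the median or the MAD, which is why these statistics are preferred over the mean and standard deviation for this kind of screening.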

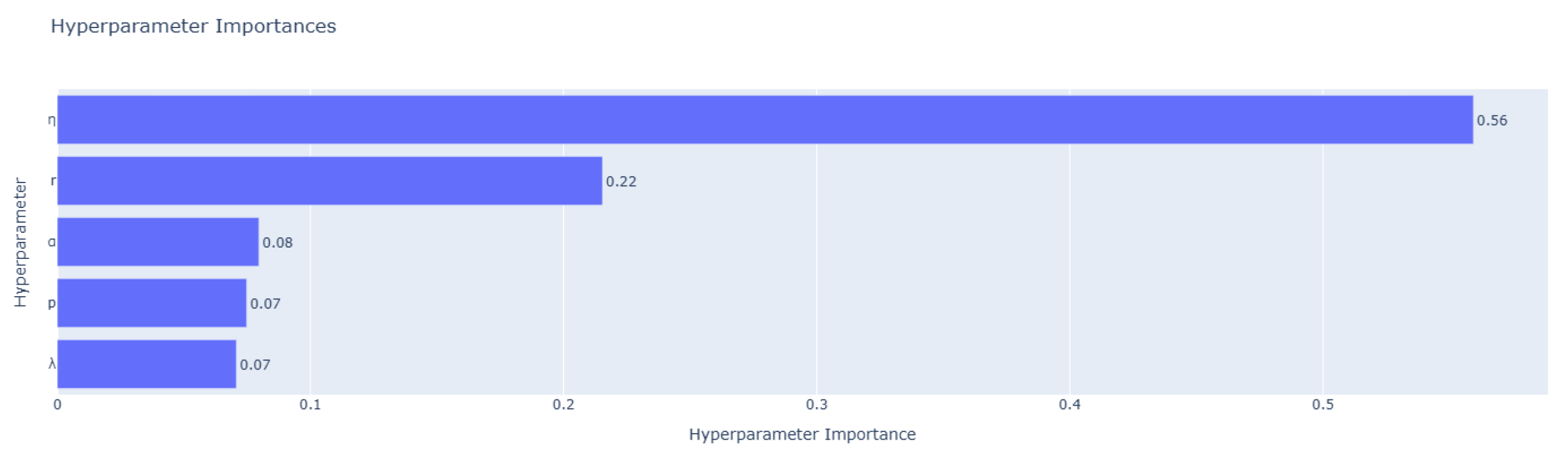

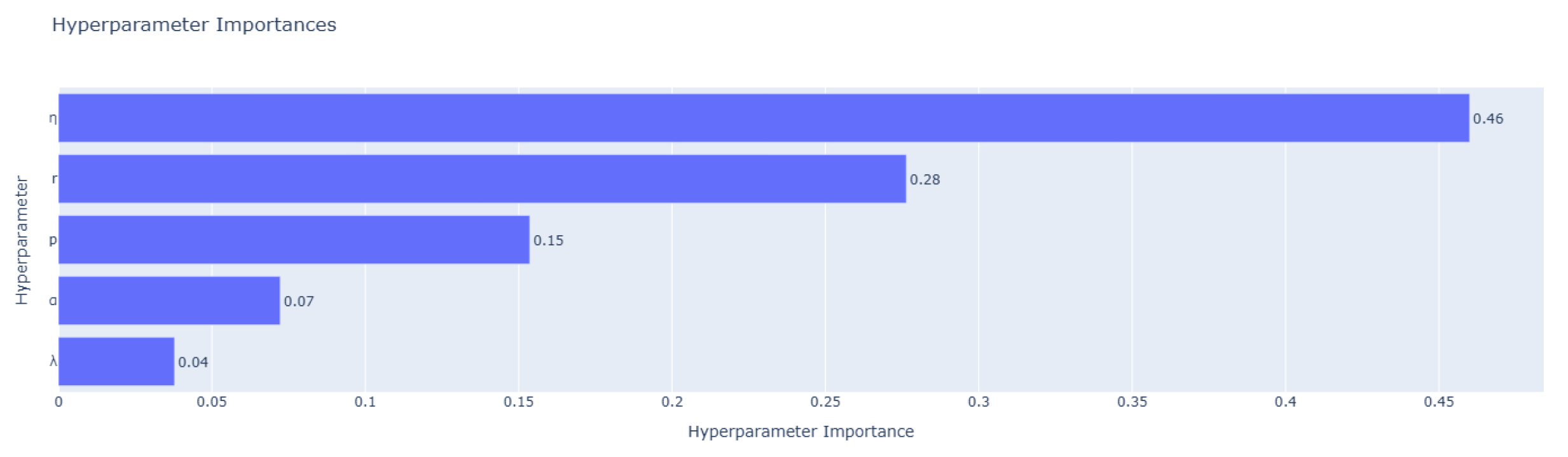

After removing outliers, this paper further utilizes Optuna’s built-in fANOVA method to evaluate hyperparameter importance. This approach constructs a random forest model to estimate each hyperparameter’s contribution to performance variance, thereby quantifying its true impact during optimization.

Figure 6, Figure 7 and Figure 8 show the hyperparameter sensitivity for Ug, Kk, and Ky, respectively.

For Ug and Ky, the hyperparameter has the greatest impact, followed by r, while the remaining parameters exert relatively minor effects. In contrast, Kk displays a more balanced sensitivity pattern. Although remains the primary contributor, the influence of r and p is noticeably higher, suggesting that performance in Kk depends more on the coordinated tuning of multiple hyperparameters. Overall, across all three low-resource languages, consistently has the most significant effect on model performance, followed by r. Sensitivity to p varies among languages, whereas the effects of and are comparatively weaker.

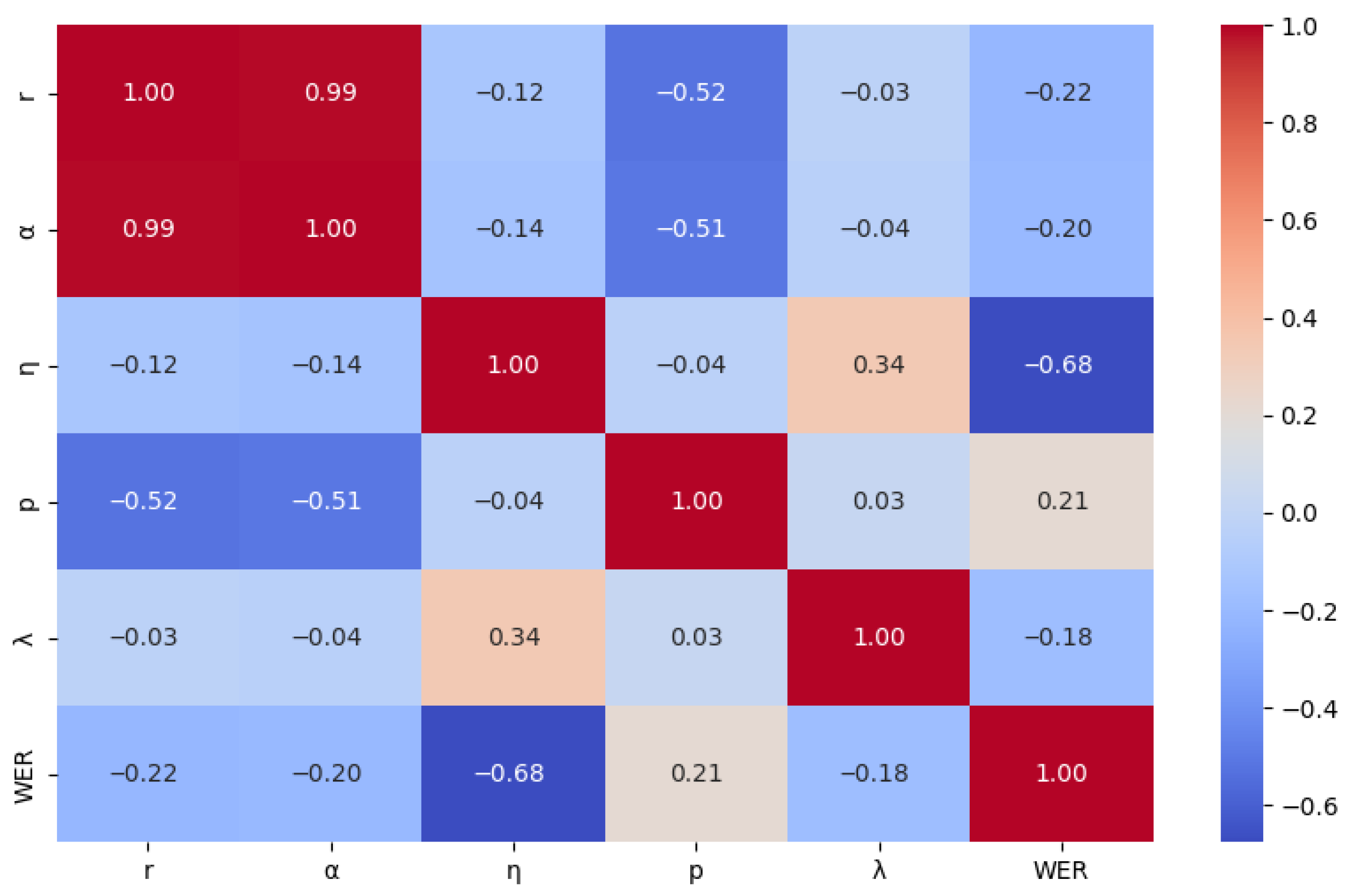

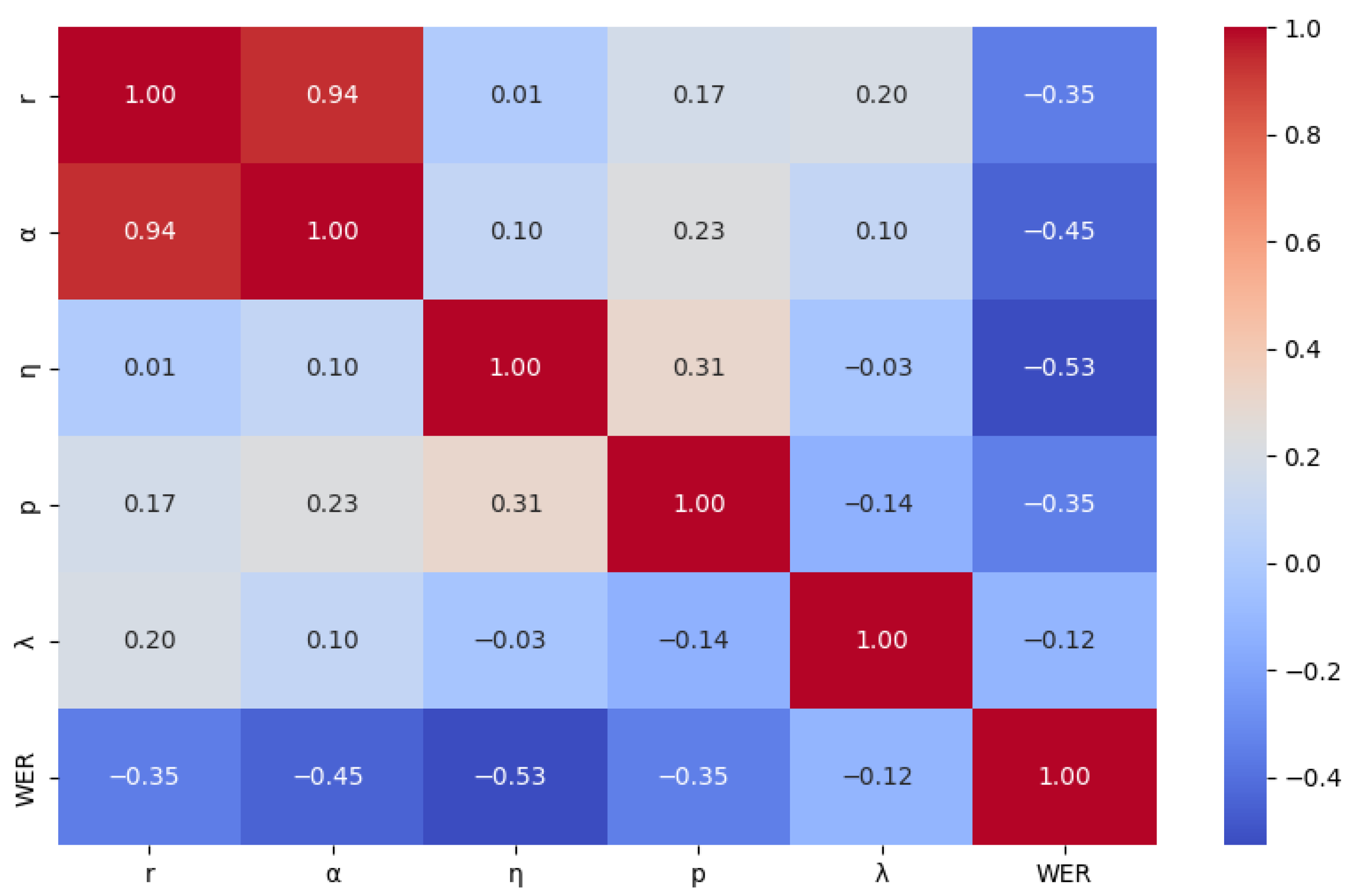

After completing the hyperparameter sensitivity analysis using fANOVA, this paper further validated the relationship between hyperparameters and model performance through statistical correlation and significance testing to ensure the robustness of the findings. Using the processed optimization data, a Pearson correlation coefficient matrix was constructed between hyperparameters and WER and visualized as a heatmap. The heatmap intuitively depicts the relationships through color intensity and numerical values: red indicates positive correlations in the range [0, 1], while blue indicates negative correlations in the range [−1, 0]. Darker colors represent stronger correlations.

Figure 9, Figure 10 and Figure 11 respectively show the correlation between hyperparameters and WER for Ug, Kk, and Ky. Across the three low-resource language datasets, r, , and exhibit negative correlations with WER, indicating that appropriately increasing these hyperparameters within their valid ranges can help reduce WER. In contrast, the effects of p and vary by language: p is positively correlated with WER in Ug and Ky but negatively correlated in Kk; shows a positive correlation in Ky but negative correlations in Ug and Kk. These results indicate that the model’s sensitivity to hyperparameters differs across languages.

To further validate the statistical significance of these trends, Pearson correlation significance tests were conducted for the three languages (significance level = 0.05). Figure 12 shows the p-value analysis of hyperparameters versus WER for each language. The results indicate that significantly affects WER across all three languages (p-value < 0.05). r and show significant correlations in Kk (p-value < 0.05) but not in Ug or Ky (p-value ≥ 0.05). p is significantly correlated in Kk and Ky (p-value < 0.05) but not in Ug, while is insignificant for all three languages (p-value ≥ 0.05), further confirming its unstable influence. Overall, this test provides statistical evidence for the linear impact of each hyperparameter on WER across languages, supporting the robustness of our conclusions. Notably, although Pearson primarily measures linear relationships, combining it with fANOVA’s nonlinear sensitivity analysis allows for a more comprehensive assessment of hyperparameter effects.
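For reference, the Pearson coefficient between a hyperparameter and WER, together with the t statistic on which its significance test is based, can be computed as sketched below. The data are hypothetical, and the p-value itself (obtained from a Student's t distribution with n − 2 degrees of freedom) is left to a statistics library.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_t_statistic(r, n):
    """t statistic for H0: no linear correlation (n - 2 degrees of freedom).

    Undefined for |r| = 1, where the denominator is zero.
    """
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical values of one hyperparameter and the resulting WER (%).
param_values = [1, 2, 3, 4, 5]
wer_values = [5, 3, 4, 2, 1]
r = pearson_r(param_values, wer_values)
t = correlation_t_statistic(r, len(param_values))
```

A negative `r` corresponds to the blue cells of the heatmaps: larger hyperparameter values go with lower WER. Whether that trend is significant depends on both `r` and the number of trials, which is what the t statistic captures.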

Within the hyperparameter search ranges defined in this study, and based on the preceding analysis of parameter influence, is the most critical hyperparameter for model training across the three low-resource languages and should be prioritized during optimization. The effects of r and are significant for certain languages and can serve as auxiliary adjustment parameters. The parameter p moderately affects the performance of Kk and Ky and can be tuned as needed. In contrast, shows unstable influence on model performance and can be kept at its default value. For the three experimental datasets, the recommended parameter ranges are: , , [2 × 10⁻⁴, 5 × 10⁻⁴]. In practical applications, further hyperparameter tuning should be performed experimentally, taking into account the specific characteristics of the dataset and validation set performance, to achieve optimal model performance and generalization.