Optimizing Sign Language Recognition Through a Tailored MobileNet Self-Attention Framework

Abstract

1. Introduction

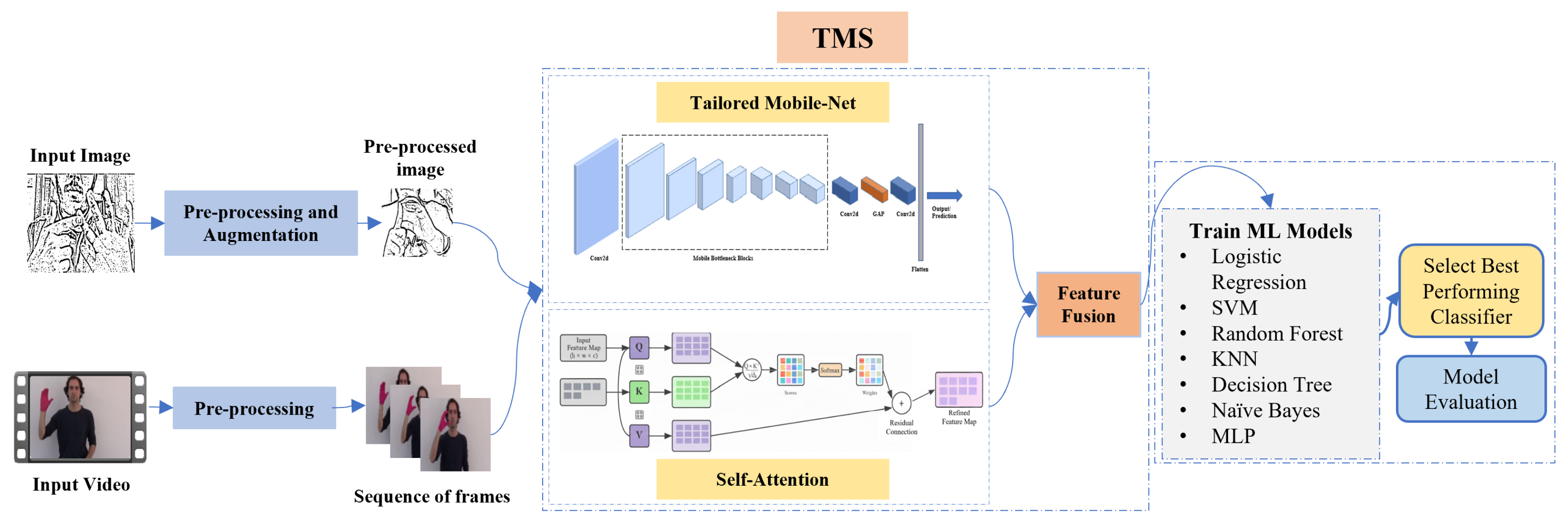

- Lightweight Framework (TMS): We propose the ‘Tailored MobileNet with Self-Attention’ (TMS) framework for efficient SLR on resource-constrained mobile devices. TMS combines a MobileNet backbone with a Self-Attention module that captures spatial and contextual features at low computational cost, which makes the framework suitable for real-world SLR applications where model size and inference speed are critical.

- Modular Classification: To support real-time deployment of SLR systems, we design a modular classification stage that can be paired with various machine learning classifiers. Systematic hyperparameter optimization using Grid Search and cross-validation identified the k-Nearest Neighbors (KNN) classifier as the best balance between accuracy and computational efficiency. Combining KNN with the TMS framework yields the TMSK model, which our experiments show to be effective for on-device use.

- Cross-Dataset Robustness: To address the generalization problem in SLR, we validate the framework on four image-based datasets and one video dataset and observe strong generalization across signing modalities, languages, and data types. This suggests that the model can be deployed in diverse real-world settings, such as multilingual communities and assistive technologies, where variability in sign language use is a major challenge.

2. Related Work

3. Materials and Methods

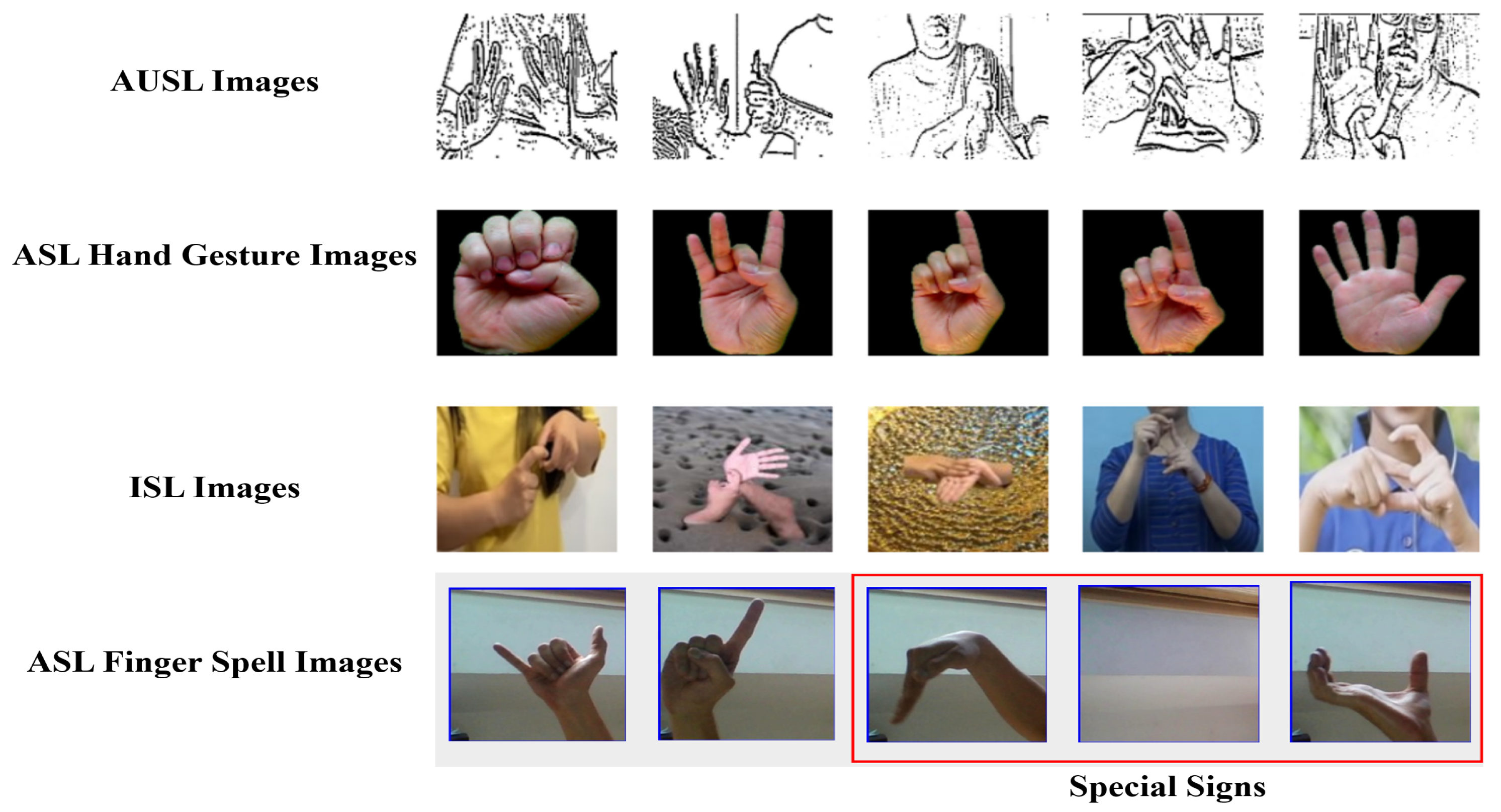

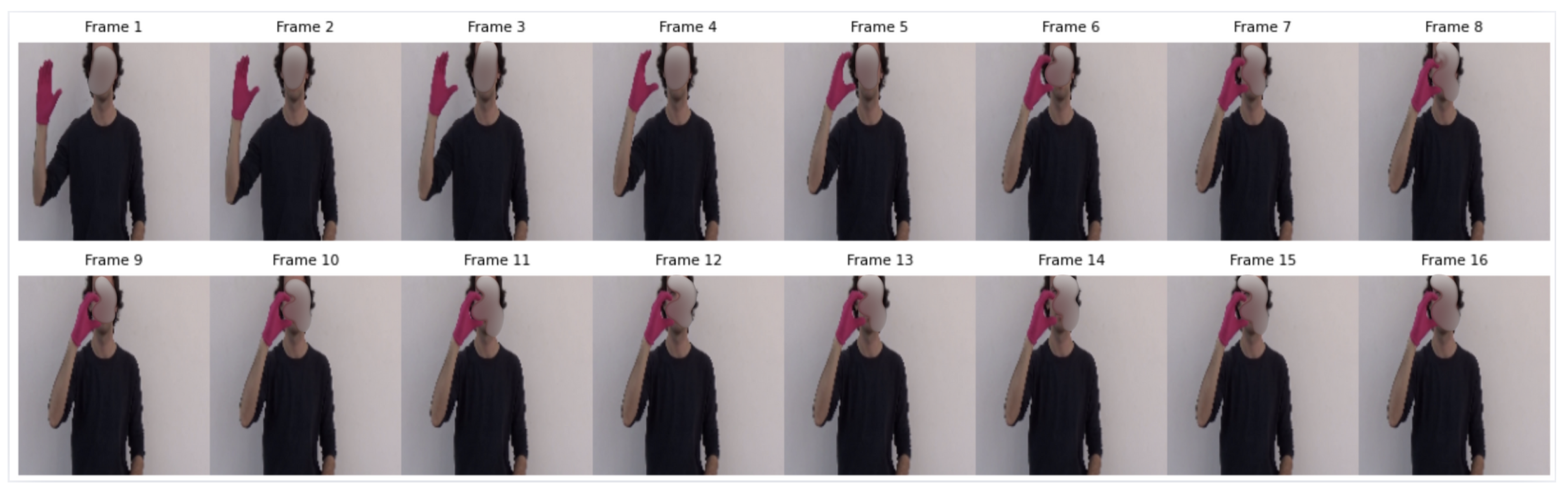

3.1. Datasets

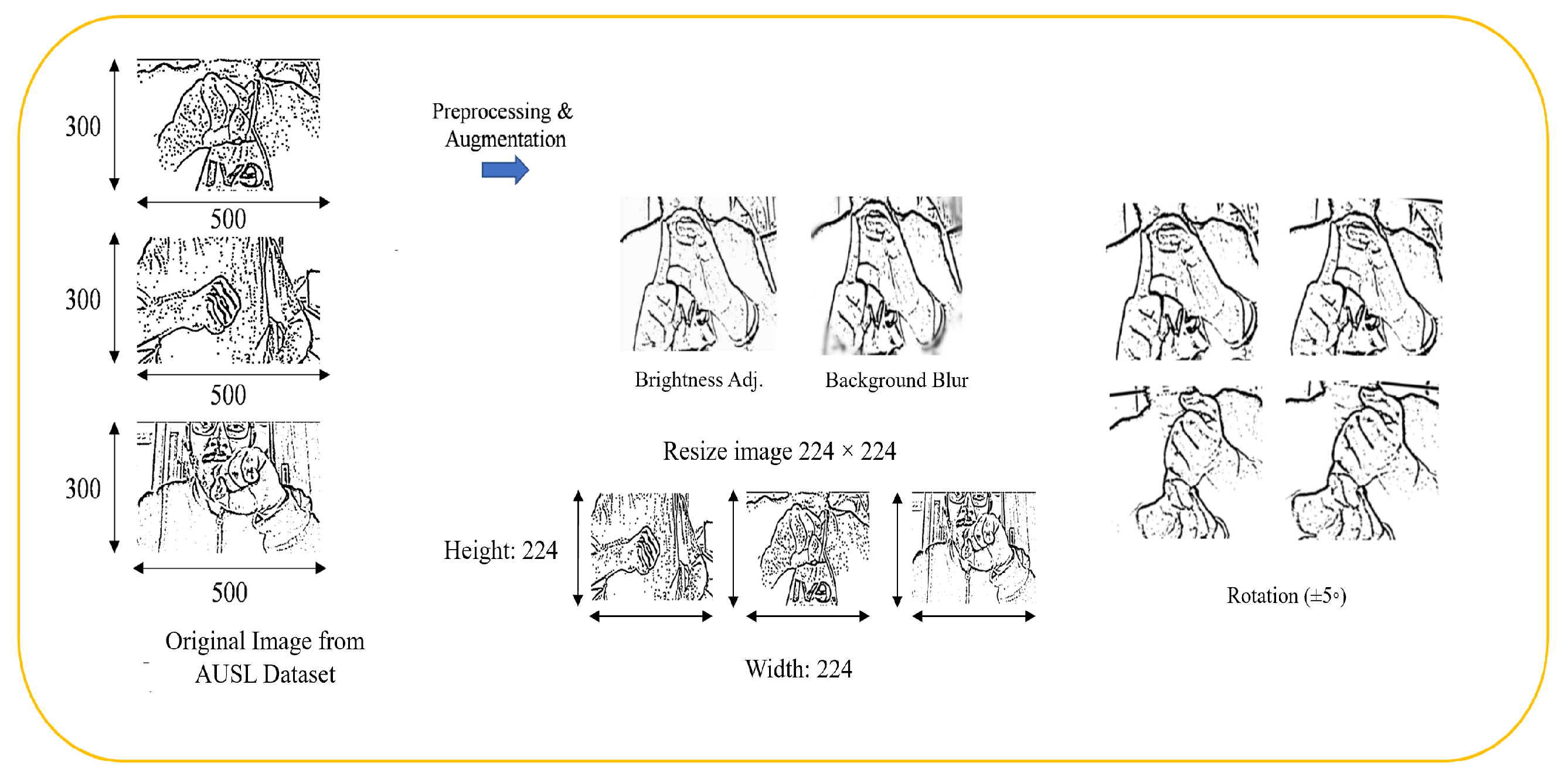

3.2. Preprocessing and Augmentation

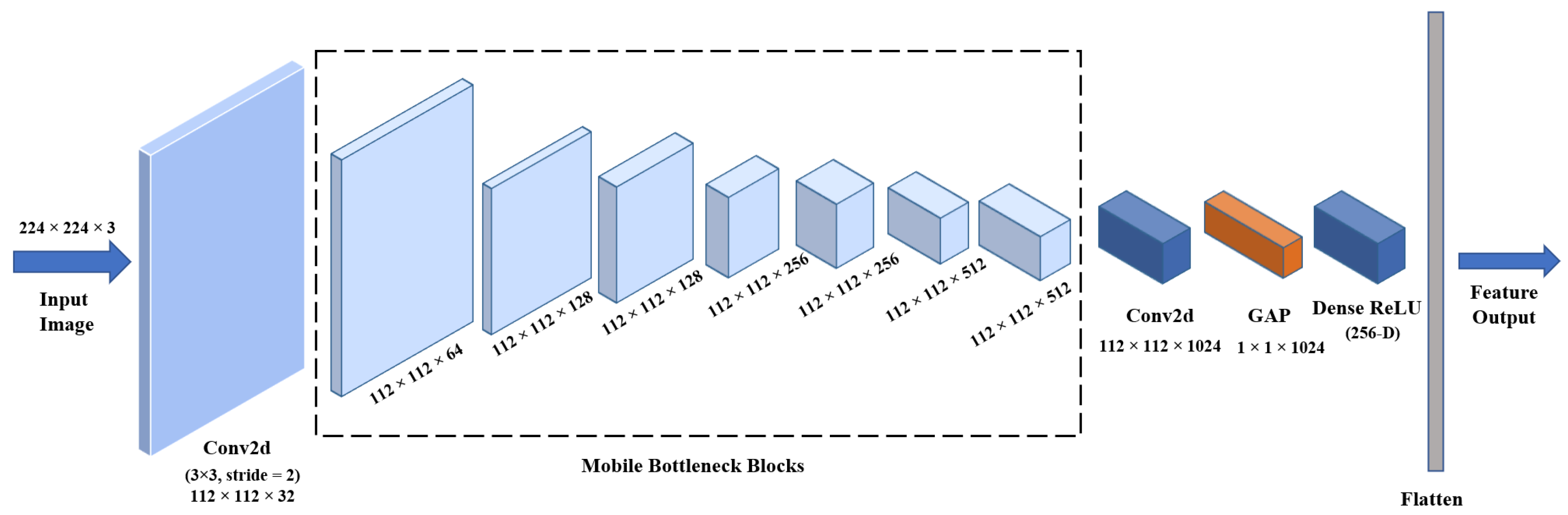

3.3. Tailored MobileNet Self-Attention Model (TMS)

3.3.1. Tailored MobileNet

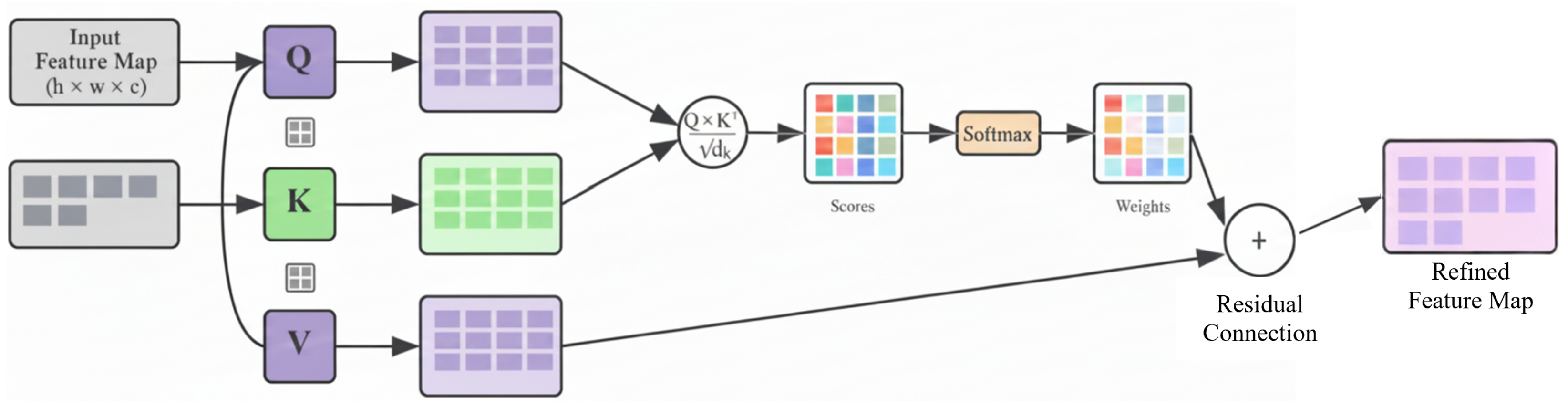

3.3.2. Self-Attention Block

3.3.3. Feature Fusion

3.4. Machine Learning Classifiers and Hyperparameter Optimization

4. Results

4.1. Evaluation Metrics

4.2. Experimental Setup

- Exp #1 establishes baseline performance on the large AUSL dataset;

- Exp #2–4 evaluate cross-dataset generalization;

- Exp #5 evaluates ability to generalize to special sign categories;

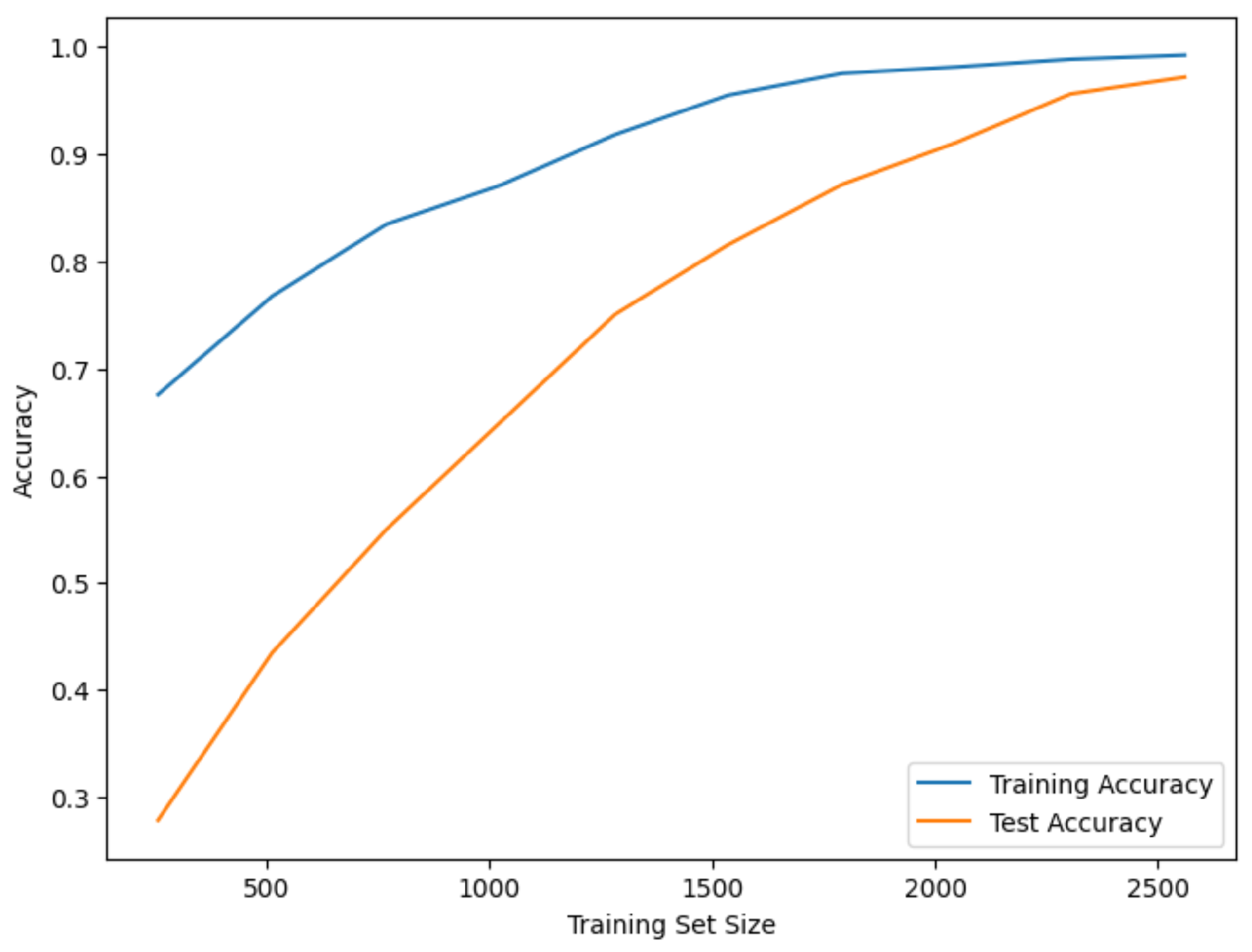

- Exp #6 assesses performance when training exclusively on limited, complex data.

4.3. Experiments on Image Datasets

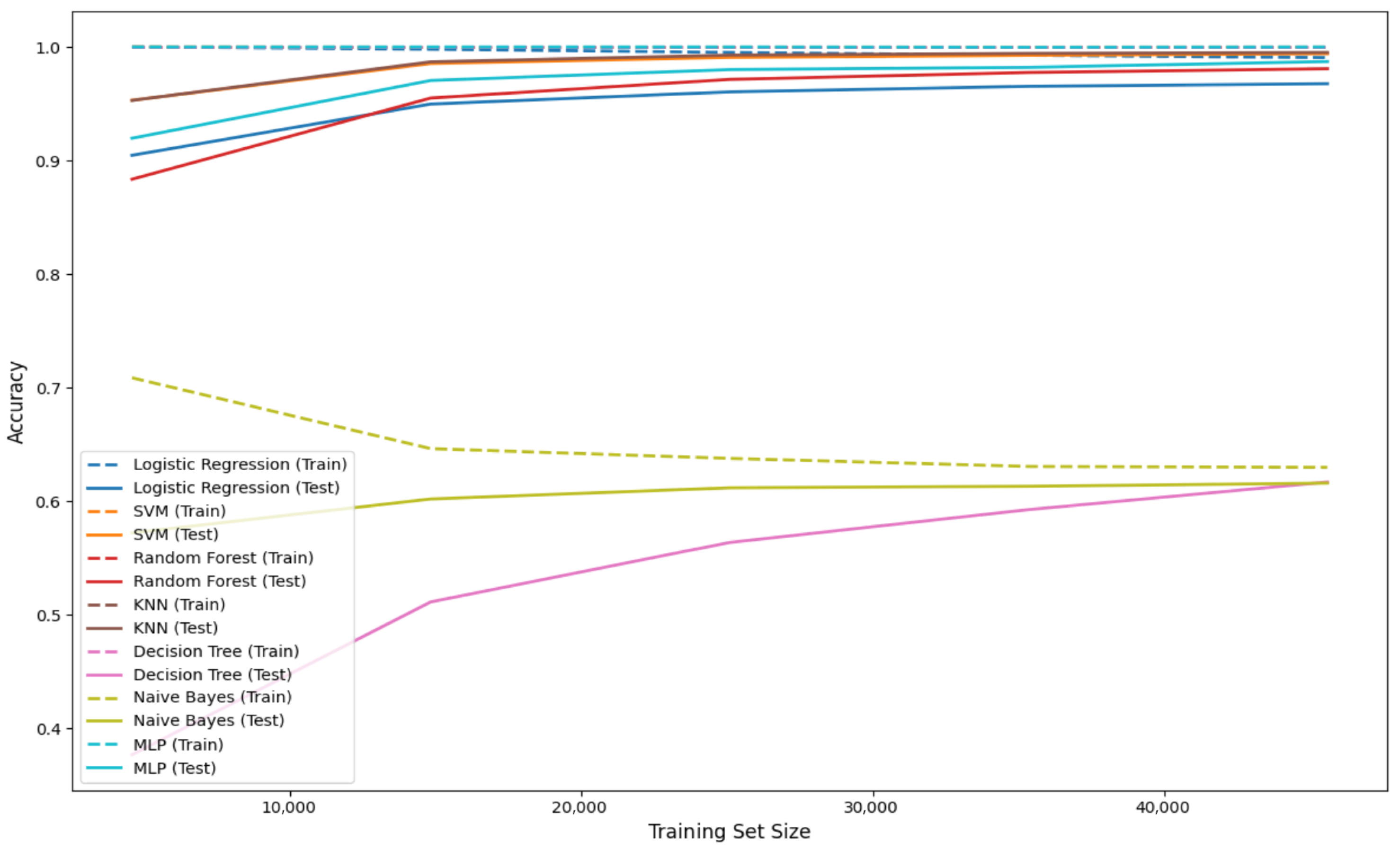

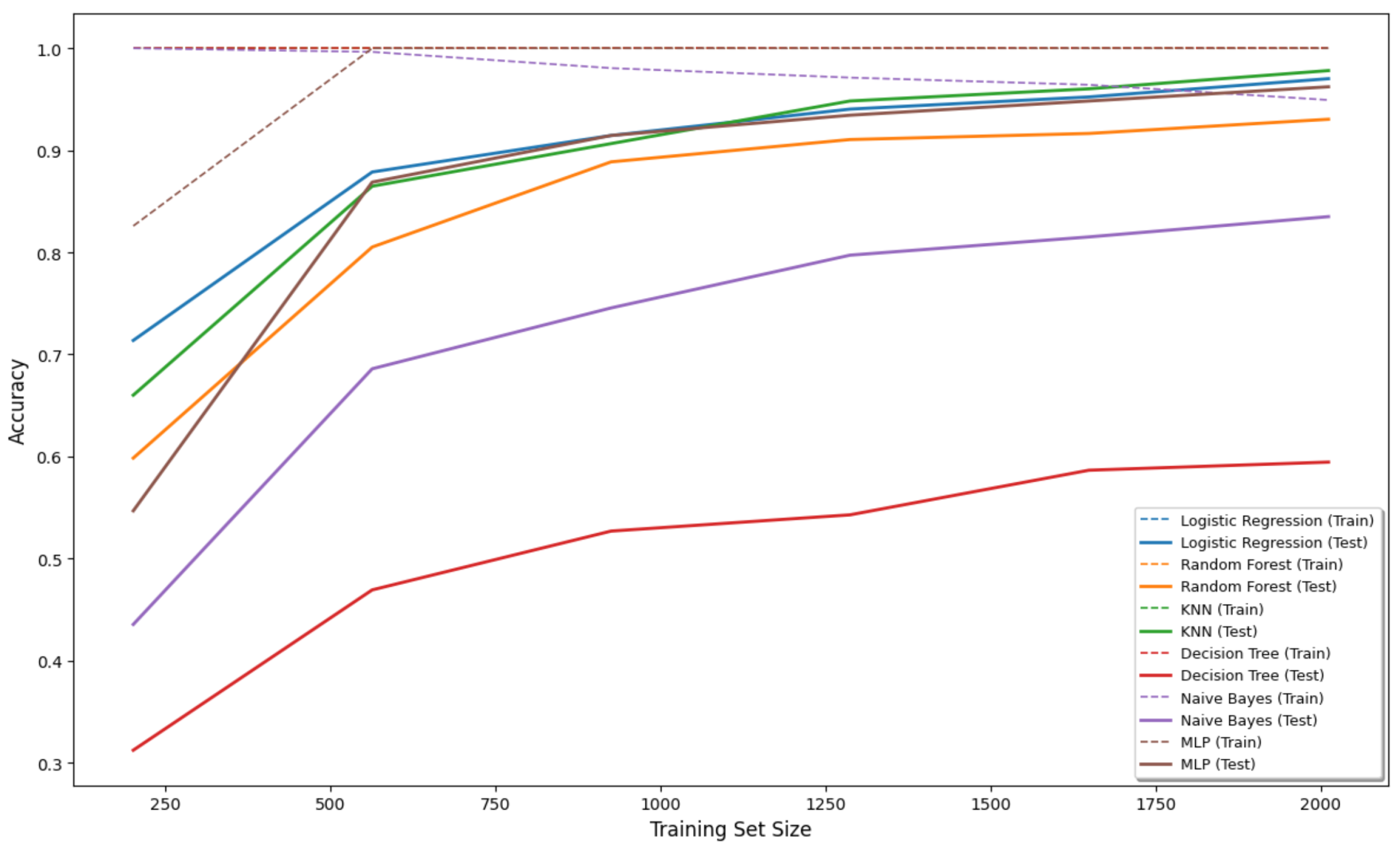

4.3.1. Classifier Comparison and Selection

| Classifier | Exp #1 P (%) | Exp #1 R (%) | Exp #1 F1 (%) | Exp #1 A (%) | Exp #1 Model Size | Exp #2 P (%) | Exp #2 R (%) | Exp #2 F1 (%) | Exp #2 A (%) | Exp #2 Model Size |
|---|---|---|---|---|---|---|---|---|---|---|
| SVM | 99.5 | 99.6 | 99.5 | 99.5 | 115.2 MB | 96.4 | 96.0 | 97.0 | 96.5 | 16.9 MB |
| Random Forest | 98.1 | 98.1 | 98.1 | 98.1 | 36.1 MB | 94.7 | 94.4 | 94.4 | 94.4 | 15.2 MB |
| Logistic Regression | 97.2 | 97.2 | 97.2 | 97.2 | 4.5 MB | 95.8 | 95.6 | 95.6 | 95.6 | 3.4 MB |
| Decision Tree | 63.1 | 63.2 | 63.1 | 63.1 | 6.5 MB | 61.8 | 60.4 | 60.0 | 60.4 | 3.9 MB |
| Naïve Bayes | 62.8 | 66.1 | 62.8 | 62.8 | 9 MB | 84.5 | 82.7 | 83.0 | 82.7 | 4.9 MB |
| MLP | 98.3 | 98.3 | 98.3 | 98.3 | 115.7 MB | 97.1 | 96.8 | 96.8 | 96.8 | 57.4 MB |
| KNN | 99.6 | 99.6 | 99.6 | 99.6 | 5 MB | 97.8 | 97.7 | 97.8 | 97.8 | 3.1 MB |

| Classifier | Hyperparameters |

|---|---|

| Logistic Regression | C: [0.01, 0.1, 1, 10], Solver: [lbfgs, liblinear] |

| SVM | C: [0.1, 1, 10], Kernel: [linear, rbf], Gamma: [scale, auto] |

| Random Forest | n_estimators: [100, 200, 300], max_depth: [10, 20, None], min_samples_split: [2, 5, 10] |

| KNN | n_neighbors: [1, 3, 5, 7, 9], Weights: [uniform, distance], Metric: [euclidean, manhattan] |

| Decision Tree | max_depth: [10, 20, None], min_samples_split: [2, 5, 10], Criterion: [gini, entropy] |

| Naive Bayes | var_smoothing: [1 , 1 , 1 ] |

| MLP | Activation: [relu, tanh], alpha: [0.0001, 0.001], learning_rate_init: [0.001, 0.01] |
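As a rough illustration of how the KNN row of the grid above could be searched, the following pure-Python sketch runs an exhaustive grid search with 5-fold cross-validation on toy two-dimensional points. The helper names (`knn_predict`, `cross_val_accuracy`, `grid_search`), the toy data, and the interleaved fold assignment are illustrative assumptions. The source does not state which library or fold scheme was used, and in practice the inputs would be TMS feature vectors rather than random points.

```python
import itertools
import math
import random
from collections import defaultdict

# KNN search space copied from the hyperparameter table above.
KNN_GRID = {
    "n_neighbors": [1, 3, 5, 7, 9],
    "weights": ["uniform", "distance"],
    "metric": ["euclidean", "manhattan"],
}


def distance(a, b, metric):
    """Euclidean or Manhattan distance between two feature tuples."""
    if metric == "euclidean":
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return sum(abs(x - y) for x, y in zip(a, b))


def knn_predict(train, query, n_neighbors, weights, metric):
    """Vote among the n_neighbors closest (features, label) training pairs."""
    nearest = sorted((distance(f, query, metric), label) for f, label in train)
    votes = defaultdict(float)
    for dist, label in nearest[:n_neighbors]:
        votes[label] += 1.0 if weights == "uniform" else 1.0 / (dist + 1e-12)
    return max(votes, key=votes.get)


def cross_val_accuracy(data, params, n_folds=5):
    """Mean accuracy over n_folds interleaved folds (assumed fold scheme)."""
    folds = [data[i::n_folds] for i in range(n_folds)]
    scores = []
    for i, held_out in enumerate(folds):
        train = [s for j, fold in enumerate(folds) if j != i for s in fold]
        correct = sum(knn_predict(train, f, **params) == y for f, y in held_out)
        scores.append(correct / len(held_out))
    return sum(scores) / n_folds


def grid_search(data, grid):
    """Try every combination in the grid and keep the best mean CV accuracy."""
    names = list(grid)
    best_params, best_score = None, -1.0
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = cross_val_accuracy(data, params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Toy data (hypothetical): two well-separated Gaussian blobs, 25 points each.
rng = random.Random(0)
data = [
    ((rng.gauss(center, 0.3), rng.gauss(center, 0.3)), label)
    for label, center in (("A", 0.0), ("B", 3.0))
    for _ in range(25)
]
rng.shuffle(data)

best_params, best_score = grid_search(data, KNN_GRID)
print(best_params, round(best_score, 3))
```

The KNN grid contains 5 × 2 × 2 = 20 combinations, so a 5-fold search evaluates 100 train/validation rounds for this classifier alone.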

| Fold No. | Exp #1 P (%) | Exp #1 R (%) | Exp #1 F1 (%) | Exp #1 A (%) | Exp #2, #3, #4 P (%) | Exp #2, #3, #4 R (%) | Exp #2, #3, #4 F1 (%) | Exp #2, #3, #4 A (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | 99.49 | 99.49 | 99.49 | 99.49 | 97.07 | 96.77 | 96.78 | 96.77 |
| 2 | 99.53 | 99.53 | 99.53 | 99.53 | 96.79 | 96.53 | 96.50 | 96.53 |
| 3 | 99.65 | 99.65 | 99.65 | 99.65 | 97.98 | 97.76 | 97.76 | 97.76 |
| 4 | 99.52 | 99.52 | 99.52 | 99.52 | 98.64 | 98.51 | 98.50 | 98.51 |
| 5 | 99.56 | 99.56 | 99.56 | 99.56 | 97.53 | 97.26 | 97.29 | 97.26 |
| Mean ± Std | 99.55 ± 0.05 | 99.55 ± 0.05 | 99.55 ± 0.05 | 99.55 ± 0.05 | 97.60 ± 0.66 | 97.37 ± 0.71 | 97.37 ± 0.71 | 97.37 ± 0.71 |
| CI | [99.48, 99.62] | [99.48, 99.62] | [99.48, 99.62] | [99.48, 99.62] | [96.78, 98.42] | [96.46, 98.25] | [96.46, 98.25] | [96.46, 98.25] |

| Fold No. | Exp #5 P (%) | Exp #5 R (%) | Exp #5 F1 (%) | Exp #5 A (%) | Exp #6 P (%) | Exp #6 R (%) | Exp #6 F1 (%) | Exp #6 A (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | 100.0 | 100.0 | 100.0 | 100.0 | 99.21 | 99.26 | 99.23 | 98.91 |
| 2 | 100.0 | 100.0 | 100.0 | 100.0 | 99.18 | 99.23 | 99.20 | 99.23 |
| 3 | 100.0 | 100.0 | 100.0 | 100.0 | 98.87 | 98.93 | 98.90 | 98.93 |
| 4 | 100.0 | 100.0 | 100.0 | 100.0 | 99.43 | 99.38 | 99.40 | 99.38 |
| 5 | 100.0 | 100.0 | 100.0 | 100.0 | 98.91 | 98.85 | 98.88 | 98.85 |
| Mean ± Std | 100 ± 0.0 | 100 ± 0.0 | 100 ± 0.0 | 100 ± 0.0 | 99.12 ± 0.22 | 99.13 ± 0.21 | 99.13 ± 0.21 | 99.13 ± 0.21 |
| CI | [100, 100] | [100, 100] | [100, 100] | [100, 100] | [98.87, 99.43] | [98.85, 99.38] | [98.85, 99.38] | [98.85, 99.38] |
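The per-fold summary rows can be recomputed from the fold values. The sketch below is an assumption about how the Exp #1 summary was derived: it uses the population standard deviation and a 95% Student's t interval with 4 degrees of freedom, a combination that reproduces the reported Exp #1 row. The source does not state its exact procedure, and `summarize_folds` and `T_CRIT_95_DF4` are illustrative names.

```python
import math
import statistics

# Two-sided 95% critical value of Student's t for 4 degrees of freedom (5 folds).
T_CRIT_95_DF4 = 2.776


def summarize_folds(scores):
    """Return (mean, population std, (ci_low, ci_high)) for five fold scores."""
    n = len(scores)
    mean = statistics.mean(scores)
    std = statistics.pstdev(scores)  # population std: an assumption, see lead-in
    half_width = T_CRIT_95_DF4 * std / math.sqrt(n)
    return mean, std, (mean - half_width, mean + half_width)


# Exp #1 accuracy per fold, copied from the table above.
exp1_accuracy = [99.49, 99.53, 99.65, 99.52, 99.56]
mean, std, (low, high) = summarize_folds(exp1_accuracy)
print(f"{mean:.2f} ± {std:.2f}, CI [{low:.2f}, {high:.2f}]")
```

The hard-coded critical value only applies to five folds; a different fold count would need the matching t value.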

4.3.2. Cross-Dataset Performance Analysis

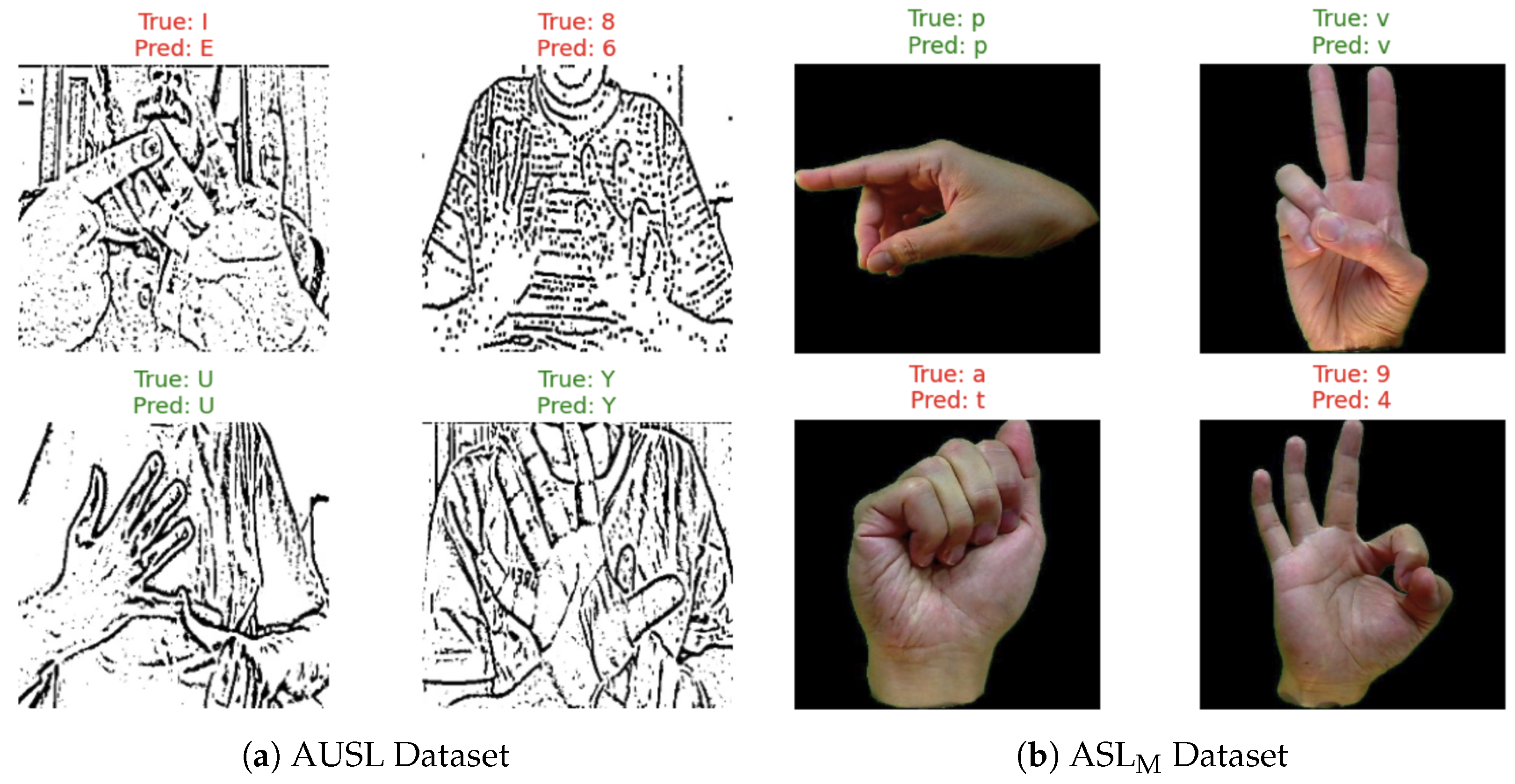

4.3.3. Error Analysis and Discussion

4.4. Performance Analysis on Video Dataset

5. Discussion

5.1. State of the Art Comparison

5.2. Computational Performance and Practical Deployment Analysis

5.3. Limitations of the Study

5.4. Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| ANN | Artificial Neural Network |

| AUSL | Australian Sign Language |

| ASLM | American Sign Language (Modified National Institute of Standards and Technology) |

| ASLF | American Sign Language Fingerspelling |

| CNN | Convolutional Neural Network |

| DT | Decision Tree |

| ISL | Indian Sign Language |

| KNN | K-Nearest Neighbor |

| LR | Logistic Regression |

| LSA64 | Argentinian Sign Language (64-Signs) |

| MLP | Multi-Layer Perceptron |

| RF | Random Forest |

| RNN | Recurrent Neural Network |

| SLR | Sign Language Recognition |

| SVM | Support Vector Machine |

| TMS | Tailored MobileNet Self-Attention |

| TMSK | Tailored MobileNet Self-Attention and KNN as classifier |

References

1. Green, J.; Hodge, G.; Kelly, B. Two decades of sign language and gesture research in Australia: 2000–2020. Lang. Doc. Conserv. 2022, 16, 32–78.
2. Rosa, M.D.; Bernardi, M.; Kleppe, S.; Walz, K. Hearing Loss: Genetic Testing. Genes 2024, 15, 178.
3. Miah, A.S.M.; Shin, J.; Al Mehedi Hasan, M.; Rahim, M.A.; Okuyama, Y. Rotation, Translation and Scale Invariant Sign Word Recognition Using Deep Learning. Comput. Syst. Sci. Eng. 2023, 44, 2521–2536.
4. Miah, A.S.M.; Hasan, M.A.M.; Shin, J.; Okuyama, Y.; Tomioka, Y. Multistage spatial attention-based neural network for hand gesture recognition. Computers 2023, 12, 13.
5. Shin, J.; Miah, A.S.M.; Hasan, M.A.M.; Hirooka, K.; Suzuki, K.; Lee, H.S.; Jang, S.W. Korean sign language recognition using transformer-based deep neural network. Appl. Sci. 2023, 13, 3029.
6. Miah, A.S.M.; Hasan, M.A.M.; Jang, S.W.; Lee, H.S.; Shin, J. Multi-stream general and graph-based deep neural networks for skeleton-based sign language recognition. Electronics 2023, 12, 2841.
7. Bencherif, M.A.; Algabri, M.; Mekhtiche, M.A.; Faisal, M.; Alsulaiman, M.; Mathkour, H.; Al-Hammadi, M.; Ghaleb, H. Arabic sign language recognition system using 2D hands and body skeleton data. IEEE Access 2021, 9, 59612–59627.
8. Ghadami, A.; Taheri, A.; Meghdari, A. A Transformer-Based Multi-Stream Approach for Isolated Iranian Sign Language Recognition. arXiv 2024, arXiv:2407.09544.
9. Abdolmalaki, A.; Ghaderzadeh, A.; Maihami, V. Recognition of Persian Sign Language Alphabet Using Gaussian Distribution, Radial Distance and Centroid-Radii. Recent Adv. Comput. Sci. Commun. Former. Recent Patents Comput. Sci. 2021, 14, 2171–2182.
10. Papadimitriou, K.; Potamianos, G.; Sapountzaki, G.; Goulas, T.; Efthimiou, E.; Fotinea, S.E.; Maragos, P. Greek sign language recognition for an education platform. Univers. Access Inf. Soc. 2025, 24, 51–68.
11. Obi, Y.; Claudio, K.S.; Budiman, V.M.; Achmad, S.; Kurniawan, A. Sign language recognition system for communicating to people with disabilities. Procedia Comput. Sci. 2023, 216, 13–20.
12. Rahim, M.A.; Miah, A.S.M.; Sayeed, A.; Shin, J. Hand gesture recognition based on optimal segmentation in human-computer interaction. In Proceedings of the 2020 3rd IEEE International Conference on Knowledge Innovation and Invention (ICKII), Kaohsiung, Taiwan, 21–23 August 2020; pp. 163–166.
13. Wali, A.; Shariq, R.; Shoaib, S.; Amir, S.; Farhan, A.A. Recent progress in sign language recognition: A review. Mach. Vis. Appl. 2023, 34, 127.
14. Cihan Camgoz, N.; Hadfield, S.; Koller, O.; Bowden, R. Subunets: End-to-end hand shape and continuous sign language recognition. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3056–3065.
15. Cheng, K.L.; Yang, Z.; Chen, Q.; Tai, Y.W. Fully convolutional networks for continuous sign language recognition. In Proceedings of the European Conference on Computer Vision. Springer, Glasgow, UK, 23–28 August 2020; pp. 697–714.
16. Al-Qurishi, M.; Khalid, T.; Souissi, R. Deep learning for sign language recognition: Current techniques, benchmarks, and open issues. IEEE Access 2021, 9, 126917–126951.
17. Pu, J.; Zhou, W.; Li, H. Iterative alignment network for continuous sign language recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 4165–4174.
18. Rajalakshmi, E.; Elakkiya, R.; Subramaniyaswamy, V.; Alexey, L.P.; Mikhail, G.; Bakaev, M.; Kotecha, K.; Gabralla, L.A.; Abraham, A. Multi-semantic discriminative feature learning for sign gesture recognition using hybrid deep neural architecture. IEEE Access 2023, 11, 2226–2238.
19. Rajalakshmi, E.; Elakkiya, R.; Prikhodko, A.L.; Grif, M.G.; Bakaev, M.A.; Saini, J.R.; Kotecha, K.; Subramaniyaswamy, V. Static and dynamic isolated Indian and Russian sign language recognition with spatial and temporal feature detection using hybrid neural network. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2022, 22, 1–23.
20. Tangsuksant, W.; Adhan, S.; Pintavirooj, C. American Sign Language recognition by using 3D geometric invariant feature and ANN classification. In Proceedings of the 7th 2014 Biomedical Engineering International Conference, Fukuoka, Japan, 26–28 November 2014; IEEE: New York, NY, USA, 2014; pp. 1–5.
21. Ragab, A.; Ahmed, M.; Chau, S.C. Sign language recognition using Hilbert curve features. In Image Analysis and Recognition, Proceedings of the 10th International Conference, ICIAR, Aveiro, Portugal, 26–28 June 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 143–151.
22. Miah, A.S.M.; Hasan, M.A.M.; Shin, J. Dynamic hand gesture recognition using multi-branch attention based graph and general deep learning model. IEEE Access 2023, 11, 4703–4716.
23. Natarajan, B.; Rajalakshmi, E.; Elakkiya, R.; Kotecha, K.; Abraham, A.; Gabralla, L.A.; Subramaniyaswamy, V. Development of an end-to-end deep learning framework for sign language recognition, translation, and video generation. IEEE Access 2022, 10, 104358–104374.
24. Dabwan, B.A.; Gazzan, M.; Ismil, O.A.; Farah, E.A.; Almula, S.M.; Ali, Y.A. Hand Gesture Classification for the Deaf and Mute Using the DenseNet169 Model. In Proceedings of the 2024 9th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 16–18 December 2024; IEEE: New York, NY, USA, 2024; pp. 933–937.
25. Urmee, P.P.; Al Mashud, M.A.; Akter, J.; Jameel, A.S.M.M.; Islam, S. Real-time Bangla sign language detection using Xception model with augmented dataset. In Proceedings of the 2019 IEEE International WIE Conference on Electrical and Computer Engineering (WIECON-ECE), Bangalore, India, 15–16 November 2019; IEEE: New York, NY, USA, 2019; pp. 1–5.
26. Morillas-Espejo, F.; Martinez-Martin, E. A real-time platform for Spanish Sign Language interpretation. Neural Comput. Appl. 2025, 37, 2675–2696.
27. Liu, J.; Xue, W.; Zhang, K.; Yuan, T.; Chen, S. TB-Net: Intra-and inter-video correlation learning for continuous sign language recognition. Inf. Fusion 2024, 109, 102438.
28. Koller, O.; Zargaran, S.; Ney, H.; Bowden, R. Deep sign: Enabling robust statistical continuous sign language recognition via hybrid CNN-HMMs. Int. J. Comput. Vis. 2018, 126, 1311–1325.
29. Ma, X.; Yuan, L.; Wen, R.; Wang, Q. Sign language recognition based on concept learning. In Proceedings of the 2020 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Dubrovnik, Croatia, 25–28 May 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.
30. Cui, R.; Liu, H.; Zhang, C. A deep neural framework for continuous sign language recognition by iterative training. IEEE Trans. Multimed. 2019, 21, 1880–1891.
31. Huang, J.; Zhou, W.; Li, H.; Li, W. Attention-based 3D-CNNs for large-vocabulary sign language recognition. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2822–2832.
32. Qambrani, S.A.; Dahri, F.A.; Bhatti, S.; Banbhrani, S.K. Auslan Sign Language Image Recognition Using Deep Neural Network. Soc. Sci. Rev. Arch. 2025, 3, 1762–1773.
33. Naglot, D.; Kulkarni, M. Real time sign language recognition using the leap motion controller. In Proceedings of the 2016 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–27 August 2016; IEEE: New York, NY, USA, 2016; Volume 3, pp. 1–5.
34. Chowdhury, P.K.; Oyshe, K.U.; Rahaman, M.A.; Debnath, T.; Rahman, A.; Kumar, N. Computer vision-based hybrid efficient convolution for isolated dynamic sign language recognition. Neural Comput. Appl. 2024, 36, 19951–19966.
35. Hama Rawf, K.M.; Abdulrahman, A.O.; Mohammed, A.A. Improved recognition of Kurdish sign language using modified CNN. Computers 2024, 13, 37.
36. Al Khuzayem, L.; Shafi, S.; Aljahdali, S.; Alkhamesie, R.; Alzamzami, O. Efhamni: A deep learning-based Saudi sign language recognition application. Sensors 2024, 24, 3112.
37. Kumari, D.; Anand, R.S. Isolated video-based sign language recognition using a hybrid CNN-LSTM framework based on attention mechanism. Electronics 2024, 13, 1229.
38. Abdul Ameer, R.; Ahmed, M.; Al-Qaysi, Z.; Salih, M.; Shuwandy, M.L. Empowering communication: A deep learning framework for Arabic sign language recognition with an attention mechanism. Computers 2024, 13, 153.
39. Saha, S.; Lahiri, R.; Konar, A.; Nagar, A.K. A novel approach to American sign language recognition using Madaline neural network. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; IEEE: New York, NY, USA, 2016; pp. 1–6.
40. Hariharan, U.; Devarajan, D.; Kumar, P.S.; Rajkumar, K.; Meena, M.; Akilan, T. Recognition of American sign language using modified deep residual CNN with modified canny edge segmentation. Multimed. Tools Appl. 2025, 84, 38093–38120.
41. Likhar, P.; Bhagat, N.K.; G N, R. Deep learning methods for Indian sign language recognition. In Proceedings of the 2020 IEEE 10th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 9–11 November 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.
42. Adaloglou, N.; Chatzis, T.; Papastratis, I.; Stergioulas, A.; Papadopoulos, G.T.; Zacharopoulou, V.; Xydopoulos, G.J.; Atzakas, K.; Papazachariou, D.; Daras, P. A comprehensive study on deep learning-based methods for sign language recognition. IEEE Trans. Multimed. 2021, 24, 1750–1762.
43. Mureed, M.; Atif, M.; Abbasi, F.A. Character Recognition of Auslan Sign Language using Neural Network. Int. J. Artif. Intell. Math. Sci. 2023, 2, 29–36.
44. Li, D.; Rodriguez, C.; Yu, X.; Li, H. Word-level deep sign language recognition from video: A new large-scale dataset and methods comparison. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1459–1469.
45. Baihan, A.; Alutaibi, A.I.; Alshehri, M.; Sharma, S.K. Sign language recognition using modified deep learning network and hybrid optimization: A hybrid optimizer (HO) based optimized CNNSa-LSTM approach. Sci. Rep. 2024, 14, 26111.
46. Tanni, K.F.; Islam, S.; Sultana, Z.; Alam, T.; Mahmood, M. DeepBdSL: A Comprehensive Assessment of Deep Learning Architectures for Multiclass Bengali Sign Language Gesture Recognition. In Proceedings of the 27th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 20–22 December 2024; IEEE: New York, NY, USA, 2024; pp. 2219–2224.
47. Kvanchiani, K.; Kraynov, R.; Petrova, E.; Surovcev, P.; Nagaev, A.; Kapitanov, A. Training strategies for isolated sign language recognition. arXiv 2024, arXiv:2412.11553.
48. Neto, G.M.R.; Junior, G.B.; de Almeida, J.D.S.; de Paiva, A.C. Sign Language Recognition Based on 3D Convolutional Neural Networks. In Image Analysis and Recognition; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); LNIP; Springer: Cham, Switzerland, 2018; Volume 10882, pp. 399–407.
49. Incel, O.D.; Bursa, S.Ö. On-device deep learning for mobile and wearable sensing applications: A review. IEEE Sens. J. 2023, 23, 5501–5512.
50. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.
51. Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.
52. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.
53. Sarhan, N.; Lauri, M.; Frintrop, S. Multi-phase fine-tuning: A new fine-tuning approach for sign language recognition. KI-Künstliche Intell. 2022, 36, 91–98.
54. Tajbakhsh, N.; Shin, J.Y.; Gurudu, S.R.; Hurst, R.T.; Kendall, C.B.; Gotway, M.B.; Liang, J. Convolutional neural networks for medical image analysis: Full training or fine tuning? IEEE Trans. Med. Imaging 2016, 35, 1299–1312.
55. Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? Adv. Neural Inf. Process. Syst. 2014, 27, 3320–3328.
56. Singh, R.K.; Mishra, A.K.; Mishra, R. Enhancing Sign Language Recognition: Leveraging EfficientNet-B0 with Transformer-based Decoding. Int. Res. J. Multidiscip. Scope (IRJMS) 2024, 5, 679–688.

| Dataset | # Classes | Samples per Group | Total Samples |

|---|---|---|---|

| AUSL | 36 | (0–9): 199 (A–Z): 1971 | 71,257 |

| ASL MNIST (ASLM) | 36 | (0–9): 70 (A–Z): 69 | 2515 |

| ASL Finger Spell (ASLF) | 29 | (SP-Signs): 3000 (A–Z): 300 | 16,800 |

| ISL | 23 | (A–Z, except H, J, Y): 30 | 694 |


| Component | FLOPs | GFLOPs | Overhead |

|---|---|---|---|

| Standard MobileNetV2 | 675,396,278 | 0.675 | - |

| Tailored MobileNet | 615,923,531 | 0.615 | - |

| Self-Attention Module | 103,218,824 | 0.103 | - |

| TMS | 719,242,355 | 0.718 | 0.16 |
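The GFLOPs column is the FLOPs count divided by 10^9, and the sketch below recomputes it together with two relative costs. The overhead definition used here (attention FLOPs relative to the tailored backbone) is an assumption, since the source does not define the Overhead column. It comes out near 0.17, close to the table's 0.16. The names `FLOPS`, `to_gflops`, `attention_overhead`, and `backbone_saving` are illustrative.

```python
# FLOPs per component, copied from the table above.
FLOPS = {
    "Standard MobileNetV2": 675_396_278,
    "Tailored MobileNet": 615_923_531,
    "Self-Attention Module": 103_218_824,
    "TMS": 719_242_355,
}


def to_gflops(flops):
    """Convert a raw FLOPs count to GFLOPs (10^9 FLOPs)."""
    return flops / 1e9


# Assumed definition: cost of the attention module relative to the tailored backbone.
attention_overhead = FLOPS["Self-Attention Module"] / FLOPS["Tailored MobileNet"]

# Relative FLOPs saved by tailoring the backbone versus standard MobileNetV2.
backbone_saving = 1 - FLOPS["Tailored MobileNet"] / FLOPS["Standard MobileNetV2"]

for name, flops in FLOPS.items():
    print(f"{name}: {to_gflops(flops):.3f} GFLOPs")
print(f"attention overhead: {attention_overhead:.3f}")
print(f"backbone saving: {backbone_saving:.3f}")
```

The printed GFLOPs values can differ from the table in the last digit, depending on whether the reported figures were rounded or truncated.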

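| Metric | Formula | Description |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | Proportion of correctly classified samples relative to total samples |
| Precision | $\frac{TP}{TP + FP}$ | Proportion of true positives among all positive predictions |
| Recall | $\frac{TP}{TP + FN}$ | Proportion of actual positives correctly identified |
| F1-Score | $\frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$ | Harmonic mean of precision and recall |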
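For a multi-class task such as sign recognition, the four metrics in the table above are typically computed per class and then averaged. The sketch below uses macro averaging, which is an assumption because the source does not say which averaging it applies. The function name `classification_report` and the toy labels are illustrative.

```python
def classification_report(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 over all labels."""
    pairs = list(zip(y_true, y_pred))
    labels = sorted(set(y_true) | set(y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    precisions, recalls, f1_scores = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (
            2 * precision * recall / (precision + recall)
            if precision + recall
            else 0.0
        )
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)

    n = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }


# Toy labels (hypothetical): one "B" sample is misread as "C".
y_true = ["A", "A", "B", "B", "C", "C"]
y_pred = ["A", "A", "B", "C", "C", "C"]
report = classification_report(y_true, y_pred)
print({name: round(value, 3) for name, value in report.items()})
```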

| Experiment | Training Data | Finetuning Data | Test Data |
|---|---|---|---|
| Exp #1 | AUSL (80%): 57,005 | – | AUSL (20%): 14,252 |
| Exp #2 | AUSL (80%): 57,005 | ASLM (80%): 2012 | ASLM (20%): 503 |
| Exp #3 | AUSL (80%): 57,005 | ASLM (80%): 2012 | ASLF (A–Z): 7800 |
| Exp #4 | AUSL (80%): 57,005 | ASLM (80%): 2012 | ISL: 694 |
| Exp #5 | AUSL (80%): 57,005 | ASLM (80%): 2012; ASLF (SP): 7200 | ASLF (SP): 1800 |
| Exp #6 | ISL (80%): 555 | – | ISL (20%): 139 |
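The split sizes in the table above follow from an 80/20 partition of each dataset. The sketch below checks them, assuming the training count is rounded down and the remainder goes to the test set. That rounding rule is an inference that matches every reported pair, not something the source states, and `split_counts` is an illustrative helper.

```python
def split_counts(total, train_percent=80):
    """Return (train, test) sizes, flooring the training share (assumed rule)."""
    train = total * train_percent // 100
    return train, total - train


# (total, reported train, reported test); the ASLF (SP) total is 7200 + 1800.
REPORTED = {
    "AUSL": (71_257, 57_005, 14_252),
    "ASLM": (2_515, 2_012, 503),
    "ASLF (SP)": (9_000, 7_200, 1_800),
    "ISL": (694, 555, 139),
}

for name, (total, train, test) in REPORTED.items():
    computed = split_counts(total)
    status = "matches" if computed == (train, test) else "differs"
    print(f"{name}: computed {computed}, reported {(train, test)} -> {status}")
```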

| Split | Videos | Frames/Video | Total Frames |

|---|---|---|---|

| Training (80%) | 2560 | 16 | 40,960 |

| Testing (20%) | 640 | 16 | 10,240 |

| Total | 3200 | 16 | 51,200 |

| Experiment | Test Dataset | P (%) | R (%) | F1 (%) | A (%) |
|---|---|---|---|---|---|
| Exp #1 | AUSL (Alphanumeric) | 99.6 | 99.6 | 99.6 | 99.6 |
| Exp #2 | ASLM (Alphanumeric) | 97.8 | 97.7 | 97.8 | 97.8 |
| Exp #3 | ASLF (Alphabetic) | 97.9 | 97.9 | 97.9 | 97.9 |
| Exp #4 | ISL (Alphabetic, except H, J, Y) | 91.7 | 91.7 | 91.7 | 91.7 |
| Exp #5 | ASLF (Special Signs) | 100.0 | 100.0 | 100.0 | 100.0 |
| Exp #6 | ISL (Alphabetic, except H, J, Y) | 99.3 | 99.3 | 99.3 | 99.3 |

| Experiment | Actual Sign | Prediction | Per-Class Error Rate (%) | Actual Sign | Prediction | Per-Class Error Rate (%) |
|---|---|---|---|---|---|---|
| Exp #1 | 6 | 7 | 1.3 | 4 | 2 | 2.3 |
| | C | D | 1.5 | M | N | 2.3 |
| | Z | 8 | 1.0 | X | D | 1.0 |
| Exp #2 | I | L | 2.3 | D | 1 | 1.5 |
| | M | N | 1.5 | - | - | - |
| Exp #3 | Y | I | 1.3 | G | U | 2.3 |
| | S | U | 3.3 | M | N | 2.0 |
| | E | I | 2.3 | J | G | 1.0 |
| Exp #4 | A | 0 | 1.3 | M | N | 3.2 |
| | E | C | 2.4 | W | V | 1.7 |
| | N | E | 1.2 | O | U | 2.5 |
| | X | D | 3.5 | K | L | 1.0 |
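A table of this kind can be derived from the true and predicted labels of a test set. The sketch below assumes that the per-class error rate is the share of a class's samples assigned to its most frequent wrong label; the source does not give the exact definition. `top_confusions` and the toy counts are hypothetical and are not taken from the paper's data.

```python
from collections import Counter


def top_confusions(y_true, y_pred):
    """Map each misclassified class to (most frequent wrong label, error rate in %)."""
    totals = Counter(y_true)
    wrong = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    result = {}
    # most_common() yields pairs by descending count, so the first hit per class wins.
    for (actual, predicted), count in wrong.most_common():
        if actual not in result:
            result[actual] = (predicted, 100.0 * count / totals[actual])
    return result


# Toy labels (hypothetical): 200 "M" samples, 3 of which are predicted as "N".
y_true = ["M"] * 200 + ["N"] * 200
y_pred = ["M"] * 197 + ["N"] * 3 + ["N"] * 200
confusions = top_confusions(y_true, y_pred)
print(confusions)
```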

| Category | Percentage (%) |

|---|---|

| Accurate predictions | 99.1 |

| Misclassified predictions | 0.9 |

| Model | Train/Test Dataset (Ratio %) | P (%) | R (%) | F1 (%) | A (%) |

|---|---|---|---|---|---|

| CNN [43] | AUSL (80:20) | 95.1 | 94.7 | 95.0 | 95.2 |

| DenseNet169 [24] | AUSL (80:20) | 96.8 | 96.7 | 96.6 | 96.7 |

| HMM + CL [29] | AUSL (80:20) | 92.4 | 91.5 | 92.4 | 92.4 |

| Xception [25] | AUSL (80:20) | 94.5 | 94.7 | 95.1 | 94.3 |

| TMSK | AUSL (80:20) | 99.6 | 99.6 | 99.6 | 99.6 |

| 3D-CNN [48] | LSA64 (80:20) | 93.5 | 94.1 | 93.7 | 93.9 |

| TMSK | LSA64 (80:20) | 99.1 | 99.1 | 99.1 | 99.1 |

| Madaline [39] | ASL (80:20) | 96.3 | 96.6 | 95.8 | 96.4 |

| MobileNet [56] | ASL (80:20) | 88.2 | 87.5 | 88.1 | 88.2 |

| ResNet-101 [41] | ASL (80:20) | 93.4 | 92.8 | 93.2 | 93.3 |

| ResNet50 [56] | ASL (80:20) | 82.3 | 83.1 | 81.7 | 82.5 |

| VGG16 [56] | ASL (80:20) | 83.0 | 82.6 | 83.1 | 83.0 |

| TMSK | AUSL+ASL (80:20) | 97.8 | 97.7 | 97.8 | 97.8 |

| Model | Dataset | P (%) | R (%) | F1 (%) | A (%) | Model Size (MB) | FLOPs | GFLOPs | Inf. Time (ms/FPS) |

|---|---|---|---|---|---|---|---|---|---|

| TMSK | AUSL | 99.6 | 99.6 | 99.6 | 99.6 | 5.00 | 719,242,355 | 0.718 | 2.48 ms/403.12 FPS |

| YOLOv8 | AUSL | 99.5 | 99.3 | 99.4 | 99.3 | 9.88 | 762,417,152 | 0.762 | 1.18 ms/848.31 FPS |

| MobileOne | AUSL | 99.7 | 99.7 | 99.7 | 99.7 | 16.76 | 1,050,999,296 | 1.051 | 5.15 ms/194.26 FPS |

| TMSK | LSA64 | 99.1 | 99.1 | 99.1 | 99.1 | 5.07 | 753,471,891 | 0.753 | 2.65 ms/377.36 FPS |

| YOLOv8 | LSA64 | 97.8 | 97.7 | 97.7 | 97.7 | 9.92 | 831,060,480 | 0.831 | 1.15 ms/863.11 FPS |

| MobileOne | LSA64 | 95.5 | 95.0 | 95.0 | 95.0 | 17.33 | 1,051,027,968 | 1.051 | 6.21 ms/160.85 FPS |
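The latency and throughput figures in the last column are two views of the same measurement, related by FPS ≈ 1000 / latency in milliseconds. The sketch below applies that relation to the AUSL rows and also expresses each model size relative to TMSK. The reported FPS values differ slightly from 1000 / latency, presumably because the latencies are rounded, which is an assumption. `ROWS` and `fps_from_latency` are illustrative names.

```python
# (model, size in MB, latency in ms) for the AUSL rows of the table above.
ROWS = [
    ("TMSK", 5.00, 2.48),
    ("YOLOv8", 9.88, 1.18),
    ("MobileOne", 16.76, 5.15),
]


def fps_from_latency(latency_ms):
    """Frames per second implied by a per-frame latency in milliseconds."""
    return 1000.0 / latency_ms


baseline_size = ROWS[0][1]  # TMSK model size
for model, size_mb, latency_ms in ROWS:
    size_ratio = size_mb / baseline_size
    print(
        f"{model}: {fps_from_latency(latency_ms):.1f} FPS, "
        f"{size_ratio:.2f}x the TMSK model size"
    )
```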

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qutab, I.; Po, L.; Rollo, F.; Naqvi, W. Optimizing Sign Language Recognition Through a Tailored MobileNet Self-Attention Framework. Appl. Sci. 2025, 15, 12622. https://doi.org/10.3390/app152312622
