1. Introduction

Endoscopic pituitary surgery is a standard minimally invasive technique for the resection of pituitary neuroendocrine tumors, offering clear advantages over traditional transcranial approaches. By providing surgeons with a magnified and illuminated view of the surgical field, the endoscope allows for precise tumor excision, reduced trauma to surrounding structures, and shorter recovery times for patients. Nevertheless, this procedure remains technically demanding due to the narrow endonasal corridor, limited maneuverability, and proximity to critical neurovascular structures. High variability in tumor characteristics and surgeon-dependent techniques further increases its complexity. All of these challenges require continuous situational awareness in the Operating Room (OR) and precise decision-making to ensure patient safety and surgical success.

In this context, Surgical Phase Recognition (SPR) systems have emerged as a promising component of computer-assisted surgery [1,2]. These systems automatically classify surgical videos into defined procedural phases, providing feedback in the OR to enhance intraoperative awareness, improving communication among surgical staff, and supporting surgical documentation of the procedure. Beyond workflow monitoring, SPR has potential applications in surgical training, quality control, and postoperative analysis [3].

Recent advances in Deep Learning (DL) have significantly improved SPR performance by enabling the automatic extraction of visual and temporal features from surgical videos, where convolutional and temporal neural networks have demonstrated strong capabilities in modeling the dynamics of complex surgical workflows [4,5,6,7]. However, most existing SPR approaches rely on fully Supervised Learning, requiring exhaustive frame-level annotations from expert surgeons and large volumes of labeled data [8,9,10]. Despite the efforts in recording endoscopic pituitary surgeries, for which there are few cases every year, the annotation process remains a major bottleneck, demanding intensive manual effort and specialized expertise. This challenge is amplified in pituitary surgery due to the high procedural complexity. In addition, strong illumination changes, blood and smoke artifacts, and frequent withdrawal of the endoscope for cleaning disrupt visual continuity and complicate automated analysis. To mitigate these challenges, this study explores a Semi-Supervised Learning (SSL) framework for SPR that substantially reduces annotation requirements while maintaining competitive performance. The proposed approach employs a Timestamp Supervision strategy [11], where only some representative frames per surgical phase are annotated, drastically reducing expert labeling effort. From these sparse annotations, pseudo-labels are created and propagated to unlabeled frames through an Uncertainty-Aware Temporal Diffusion (UATD) mechanism [12], which exploits temporal dependencies and model uncertainty to guide reliable label propagation across the complete surgical video.

The objective of this work is to demonstrate that an SSL strategy trained under timestamp-level supervision can achieve accurate and temporally consistent phase recognition in complex neurosurgical workflows such as endoscopic pituitary surgery. Using the PituPhase–SurgeryAI dataset [13], the largest publicly available collection of endoscopic pituitary surgeries to date, this study evaluates multiple spatial and temporal architectures for the task of SPR. In addition, the study investigates two complementary approaches for handling outside-body frames, which correspond to moments when the surgeon temporarily withdraws the endoscope from the patient to clean the lens, adjust instruments, or for other procedural reasons. The first approach explicitly identifies them as an independent phase and the second merges them with the preceding in-body phase. Previous studies have often discarded these frames [14], overlooking their potential impact on temporal continuity and model stability. By systematically comparing these two strategies, we aim to analyze how the explicit removal of non-intraoperative segments affects phase balance and temporal coherence. This dual perspective provides valuable insights into improving model robustness and workflow representation in complex endoscopic procedures. To the best of our knowledge, this is the first work to apply an Uncertainty-Aware SSL framework to Surgical Phase Recognition in the context of pituitary surgery, paving the way for scalable and annotation-efficient AI-assisted surgical analysis [7,12,13].

In summary, the novelty of this work resides in applying, for the first time, a previously published Timestamp Supervision strategy [11], involving the propagation of sparse annotations using an Uncertainty-Aware Temporal Diffusion mechanism [12], to the field of endoscopic pituitary surgery. This allows the methodology to be assessed in a surgical procedure with considerable variability among surgeons and patients, a large number of phases, and ambiguously defined transitions. In addition, this work systematically investigates two different approaches to dealing with outside-body frames, an aspect that has not been directly addressed before.

2. Background and Literature Review

Automatic surgical workflow analysis has become a rapidly evolving field within computer-assisted interventions. Early methods relied on handcrafted features and probabilistic models such as Hidden Markov Models (HMMs) to capture temporal dependencies between surgical phases [15,16]. Although these approaches achieved moderate success, their performance was limited by their inability to generalize across procedures with high temporal and visual variability.

The advent of Deep Learning has led to significant advancements in the field, particularly through Convolutional Neural Networks (CNNs) and Temporal Convolutional Networks (TCNs). Twinanda et al. (2017) introduced EndoNet [4], a CNN-LSTM hybrid architecture for laparoscopic cholecystectomy phase recognition. The dataset presented in that work, Cholec80, has become the benchmark for surgical phase recognition, although some authors (Kirtac et al. 2022) have shown that publicly available datasets often fail to capture the full variability encountered in real-world surgical procedures [3,17]. Subsequent works, such as MS-TCN [6], refined temporal modeling through multi-stage refinement and dilated convolutions, leading to more stable phase segmentation. More recently, attention-based methods such as Segment-Attentive Hierarchical Consistency (SAHC) enhance robustness by leveraging hierarchical representations that exploit multi-level contextual information to correct ambiguous frame-level predictions [8]. A review of recognition approaches is provided in a survey by Garrow et al. (2021) [1].

Despite these advances, most frameworks remain Fully Supervised, relying on dense frame-level annotations that are costly to obtain because producing them requires expert surgical knowledge. Self-Supervision is still in the very early stages of research within surgical computer vision [18]. Recent research has explored Weakly Supervised and Semi-Supervised alternatives to reduce annotation costs, including Timestamp Supervision and pseudo-labeling strategies [12], which achieved a 74% reduction in annotation time compared with full annotation. However, these methods have primarily been evaluated on well-structured procedures with clear phase boundaries, such as laparoscopic cholecystectomy, rather than on complex neurosurgical workflows. Very recently, Semi-Supervised approaches have been used in open surgical procedures, a domain in which video data are considerably scarcer [19].

In the context of pituitary surgery, the PitVis-2023 Challenge published a 25-video endoscopic pituitary dataset [14], and more recently, Lagares et al. (2025) introduced the PituPhase–SurgeryAI dataset [13], containing 44 videos, which currently represents the largest publicly available resource in this domain. Building on the second dataset, two studies have been published: González-Cebrián et al. (2025) proposed an attention-based hierarchical framework using the SAHC architecture to model temporal dependencies in pituitary surgery, while Centeno López et al. (2025) combined various SSL strategies with attention mechanisms, achieving a 50% reduction in annotation requirements without compromising performance [7,20]. However, none of these works incorporates temporal modeling within a Semi-Supervised Learning framework, representing a key limitation that the present study seeks to overcome.

Based on this dataset and previous research, we adapt an SSL approach that integrates uncertainty estimation and confidence-guided label propagation to enhance temporal learning from limited annotations. This approach narrows the gap between annotation efficiency and model performance and contributes to the broader goal of achieving robust, scalable, and clinically applicable surgical phase recognition.

3. Materials and Methods

The study relied on the PituPhase–SurgeryAI dataset, whose clinical and technical characteristics are first described. Preprocessing procedures and the strategy adopted for timestamp generation are then outlined, simulating a weakly supervised scenario. The Semi-Supervised Learning (SSL) framework is subsequently presented, including the use of confidence masks and the Uncertainty-Aware Temporal Diffusion (UATD) method for pseudo-label propagation. Finally, the different spatial and temporal architectures evaluated are introduced, together with the implementation details and evaluation metrics used to assess model performance.

3.1. Dataset

The study was conducted on the PituPhase–SurgeryAI dataset [13], the largest publicly available collection of endoscopic pituitary surgery videos. It consists of 44 surgical recordings performed at Hospital Universitario 12 de Octubre (Madrid, Spain), with a mean duration of 82 min per procedure. Videos were acquired at 25 fps with a resolution of 1920 × 1080 pixels. Informed consent was obtained from all patients and ethics approval was granted by the Ethics Committee of the Hospital (Date 16 March 2023, Approval No. 23/037). All patient-identifiable information was removed prior to the labeling process.

Phase annotations were provided by two expert neurosurgeons who reached a consensus to ensure reliability. Inter-annotator agreement, measured using Cohen’s Kappa coefficient, reached 87%.

The dataset defines seven surgical phases, listed in Table 1. A distinctive feature of this dataset is the inclusion of the outside-the-body phase, P0, which corresponds to moments when the endoscope is removed from the body for cleaning. This occurs frequently due to the limited surgical workspace and the presence of visual artifacts such as bleeding, fogging, or thermal vaporization, which compromise visibility. Consequently, the outside-the-body phase appears recurrently with variable duration and without a fixed temporal position, introducing additional variability into the surgical workflow.

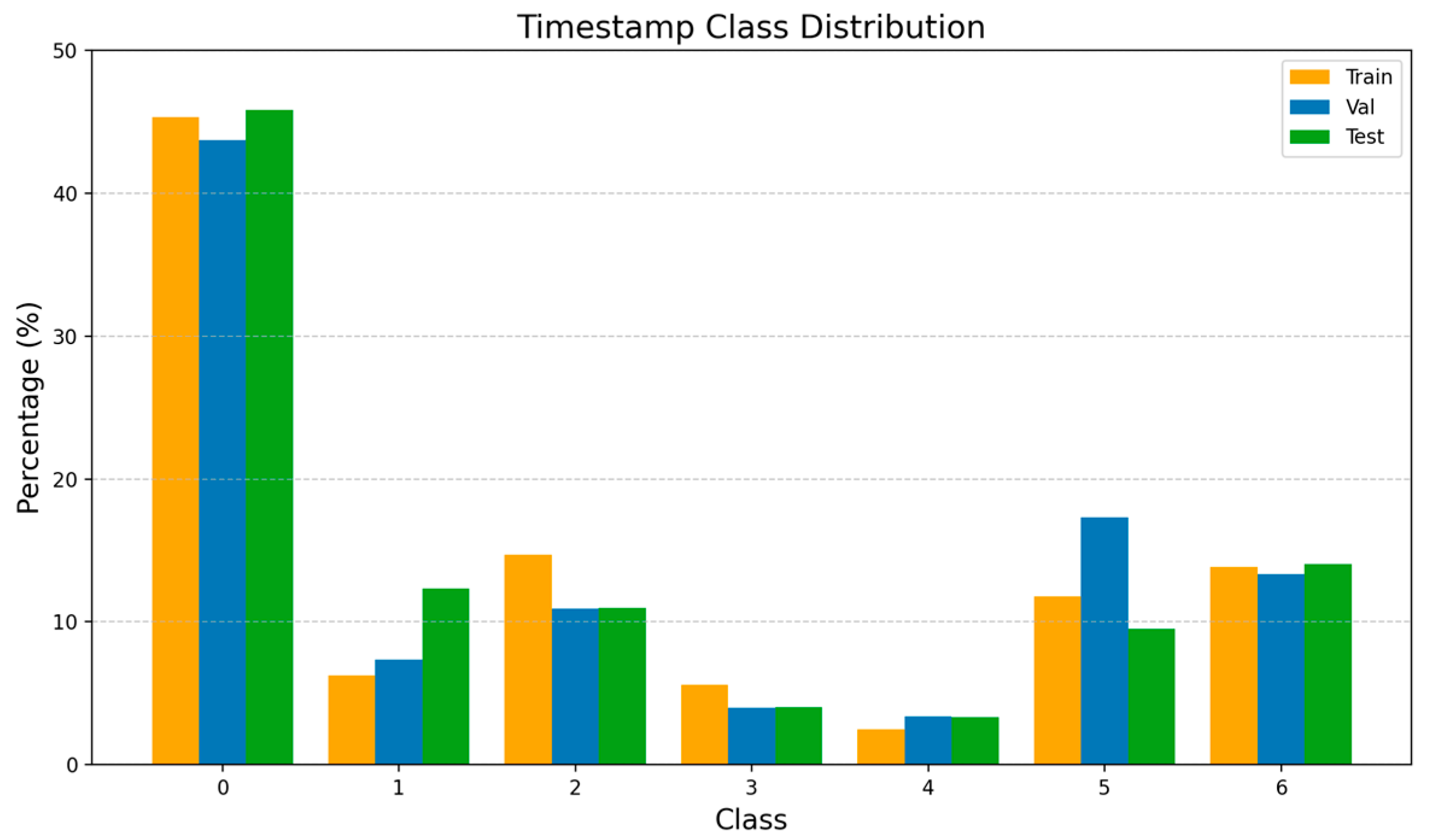

This variability also extends throughout the entire dataset. The PituPhase–SurgeryAI recordings combine limited inter-phase variance with substantial intra-phase variance across patients, which further complicates the learning process of the model. On the one hand, limited inter-phase variance arises because different phases often share similar anatomical regions and visual contexts, making it difficult to establish clear temporal boundaries. On the other hand, significant intra-phase variance is observed in frames within individual phases, driven by changes in illumination, camera angle and motion, blood or smoke artifacts, and surgeon-specific techniques. Furthermore, both video length and phase duration vary considerably across procedures, resulting in pronounced class imbalance in the dataset, as illustrated in Figure 1. These factors, combined with the intra- and inter-phase variability described above, make phase recognition particularly challenging and emphasize the need for robust temporal modeling capable of learning consistent representations under such heterogeneous conditions.

3.2. Timestamp Generation

To simulate weak supervision, only one representative frame per phase was randomly selected from each video, instead of using the full annotations available. In a practical setting, surgeons would manually select a small number of confident reference frames instead of labeling the entire sequence, reducing the time required. To better reflect realistic clinical annotation, frames were selected away from the very beginning and end of each phase, since these boundaries are often ambiguous and associated with uncertain transitions.

From this Timestamp Supervision strategy, the training set was reduced to an initial subset of 3373 timestamps, representing approximately 3% of the fully annotated dataset and simulating a more efficient annotation process.
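The sampling step described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' code: the function names, the `margin` fraction used to stay away from segment boundaries, and the fixed random seed are assumptions introduced here, and one timestamp is drawn per contiguous phase segment.

```python
import random
from itertools import groupby


def phase_segments(labels):
    """Return (phase, start, end) for each run of identical labels (end inclusive)."""
    segments, start = [], 0
    for phase, run in groupby(labels):
        length = len(list(run))
        segments.append((phase, start, start + length - 1))
        start += length
    return segments


def sample_timestamps(labels, per_segment=1, margin=0.1, seed=0):
    """Draw `per_segment` random frames from each segment, skipping a `margin`
    fraction of frames at both ends (assumed value; the boundaries are ambiguous)."""
    rng = random.Random(seed)
    timestamps = {}
    for phase, start, end in phase_segments(labels):
        pad = int((end - start + 1) * margin)
        candidates = range(start + pad, end - pad + 1)
        k = min(per_segment, len(candidates))
        for idx in rng.sample(candidates, k):
            timestamps[idx] = phase
    return dict(sorted(timestamps.items()))


# Toy frame-level annotation: P0, P1, P0 again, then P2.
toy_labels = [0] * 20 + [1] * 30 + [0] * 10 + [2] * 40
print(sample_timestamps(toy_labels))
```

Because phases such as P0 recur many times within a video, sampling per segment naturally yields more timestamps for recurrent phases than for phases that occur only once.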

Because only one frame was selected per phase in each video, the resulting timestamped dataset showed a strong predominance of the outside-the-body phase (P0), accounting for about 43% of all samples, as can be seen in Figure 2. This imbalance arises from the high frequency of P0 intervals throughout the procedure, when the endoscope is withdrawn for cleaning or obscured by smoke or blood.

To investigate the effect of annotation density, a second configuration was generated by selecting two representative frames per phase, doubling the proportion of labeled data (~6%) to evaluate whether this modest increase improved model stability and phase boundary detection. The same sampling criteria were maintained, excluding the first and last frames of each phase to avoid ambiguous transitions.

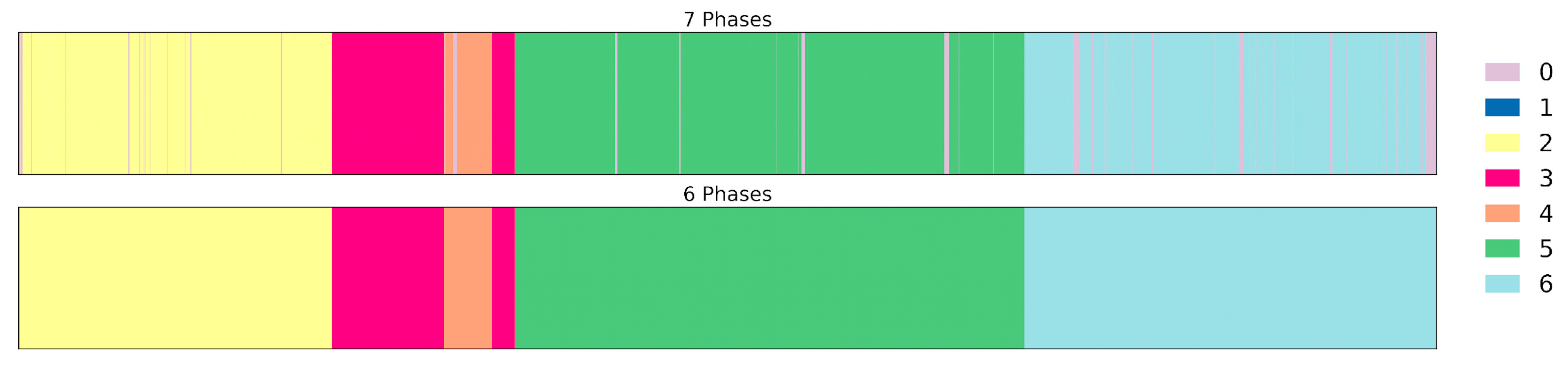

Despite this adjustment, P0 remained substantially overrepresented due to its recurrent nature. To mitigate this imbalance, an additional line of experimentation was conducted using a six-phase configuration, in which P0 was removed and its frames were reassigned to the preceding phase, as shown in Figure 3. This modification not only reduced the overall class imbalance in the timestamp dataset but also simplified the temporal structure of the surgical workflow, facilitating more stable learning.
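The relabeling used for the six-phase configuration can be illustrated with a short sketch. The function name is hypothetical, and the handling of P0 frames that occur before any in-body phase (they take the label of the first in-body phase) is an assumption made here, since the text does not specify that case.

```python
def merge_outside_body(labels, outside=0):
    """Reassign outside-the-body frames to the preceding in-body phase."""
    merged, previous = [], None
    for phase in labels:
        if phase == outside:
            # Inherit the last in-body phase seen so far (None if there is none yet).
            merged.append(previous)
        else:
            previous = phase
            merged.append(phase)
    # Assumption: leading P0 frames take the label of the first in-body phase.
    first = next((p for p in merged if p is not None), None)
    return [first if p is None else p for p in merged]


print(merge_outside_body([0, 1, 1, 0, 0, 2, 0, 3]))
```

After this step the label sequence contains only in-body phases, so every contiguous segment is longer and phase transitions occur only between intraoperative phases.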

3.3. Pseudo-Labels Generation

Reducing the annotated dataset to a very small fraction substantially decreases the time required from clinical experts to annotate but also limits the amount of labeled data available for training, which negatively impacts model performance. To address this limitation, pseudo-labels were incorporated. These are automatically generated annotations that extend the information provided by sparse timestamps to unlabeled frames, allowing the model to exploit a larger portion of the data.

For this purpose, the Uncertainty-Aware Temporal Diffusion (UATD) method was applied to propagate pseudo-labels from timestamped frames to unlabeled regions of the video. In this approach, model uncertainty was quantified using Monte Carlo Dropout (MCD) and defined as the variance of the softmax outputs across stochastic forward passes [12]:

$$\sigma^2 = \frac{1}{T}\sum_{t=1}^{T}\left(p_t - \bar{p}\right)^2$$

where $p_t$ represents the predicted probability vector at pass $t$, $T$ is the number of stochastic passes, and $\bar{p} = \frac{1}{T}\sum_{t=1}^{T} p_t$ is the mean prediction.
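As a rough illustration of this uncertainty estimate, the sketch below computes the per-class variance of the softmax outputs over several stochastic passes for a single frame. It uses plain Python rather than a deep learning framework, the function names are hypothetical, and reducing the variance vector to a single score by taking the variance of the predicted class is an assumption made for the example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mc_dropout_uncertainty(stochastic_logits):
    """`stochastic_logits` holds one list of class logits per dropout pass.

    Returns the mean prediction, the per-class variance, the predicted class,
    and the variance of that class (assumed scalar uncertainty score).
    """
    probs = [softmax(logits) for logits in stochastic_logits]
    n_passes, n_classes = len(probs), len(probs[0])
    mean = [sum(p[c] for p in probs) / n_passes for c in range(n_classes)]
    variance = [
        sum((p[c] - mean[c]) ** 2 for p in probs) / n_passes
        for c in range(n_classes)
    ]
    predicted = max(range(n_classes), key=mean.__getitem__)
    return mean, variance, predicted, variance[predicted]


# Passes that agree give near-zero uncertainty; passes that disagree do not.
stable = [[2.0, 0.5, 0.1]] * 5
unstable = [[2.0, 0.5, 0.1], [0.1, 2.0, 0.5], [0.5, 0.1, 2.0]]
print(mc_dropout_uncertainty(stable)[3], mc_dropout_uncertainty(unstable)[3])
```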

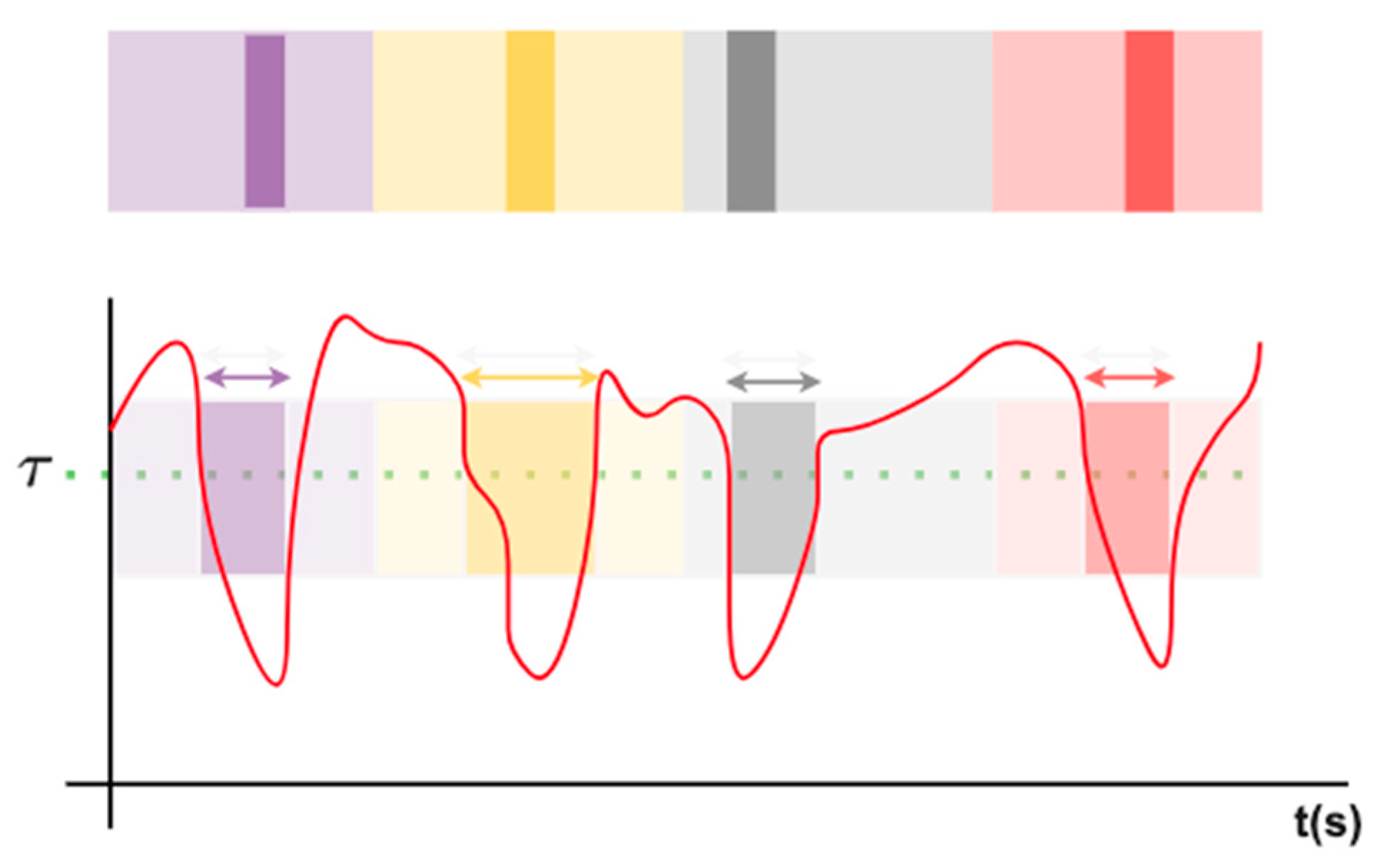

Figure 4 illustrates the UATD mechanism for pseudo-label propagation. For a given surgical video sequence over time, frames exhibiting low uncertainty (below the threshold τ) and high temporal consistency with neighboring frames were selected for label propagation. Pseudo-labels from timestamp-annotated frames were then diffused to adjacent unlabeled frames according to their temporal proximity and confidence weighting. This process ensured smooth and coherent phase transitions while suppressing noisy or ambiguous predictions near phase boundaries, thereby improving the temporal stability of model training.
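The propagation rule can be approximated with a simplified sketch. It is not the UATD implementation from [12]: here a label spreads outward from each timestamp, one frame at a time, and stops as soon as a frame's uncertainty reaches the threshold or its predicted phase differs from the timestamp label. The function name, the stopping rule, and the toy values are all assumptions.

```python
def diffuse_labels(timestamps, predictions, uncertainty, tau=0.1):
    """Spread timestamp labels to neighboring frames that are confident and consistent.

    timestamps:  {frame_index: phase} sparse annotations
    predictions: predicted phase per frame (from the current model)
    uncertainty: uncertainty score per frame (e.g., Monte Carlo Dropout variance)
    Frames that are never reached keep the value None (no pseudo-label).
    """
    n_frames = len(predictions)
    pseudo = [None] * n_frames
    for idx, phase in timestamps.items():
        pseudo[idx] = phase
    for idx, phase in timestamps.items():
        for step in (-1, 1):  # diffuse backward, then forward in time
            j = idx + step
            while (
                0 <= j < n_frames
                and pseudo[j] is None
                and uncertainty[j] < tau
                and predictions[j] == phase
            ):
                pseudo[j] = phase
                j += step
    return pseudo


toy_predictions = [1, 1, 1, 1, 2, 2, 2, 2]
toy_uncertainty = [0.01, 0.02, 0.03, 0.30, 0.40, 0.05, 0.02, 0.01]
print(diffuse_labels({1: 1, 6: 2}, toy_predictions, toy_uncertainty))
```

In this toy case the two frames around the phase boundary have high uncertainty, so they stay unlabeled and do not contribute noisy supervision.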

In parallel, confidence masks were employed to weigh the learning process, assigning greater importance to frames close to phase transitions, which are critical for accurate temporal segmentation. Together, UATD pseudo-labeling and confidence masks enabled the model to compensate for the reduced annotation effort and significantly improve training effectiveness.

3.4. Models

The complete learning pipeline considered two complementary modeling approaches: a spatial study to extract visual representations from the timestamp-labeled frames, followed by a temporal study to model phase transitions using the UATD method for pseudo-label propagation.

3.4.1. Spatial Study

The spatial study focused on the extraction of discriminative visual features from individual surgical frames. These features encode anatomical structures, surgical tools, and contextual cues essential for recognizing each phase. To this end, three convolutional neural network architectures were evaluated: ResNet-50 [21], Inception-v3 [22], and Xception [23], all pre-trained on the ImageNet dataset [24].

Each model was fine-tuned on the PituPhase–SurgeryAI dataset using timestamp-labeled frames. For preprocessing, frames were center-cropped and resized to 224 × 224 for ResNet-50 and 299 × 299 for Inception-v3 and Xception. Standard per-channel normalization was applied. ResNet-50 was trained using an Adam optimizer with learning rate = 5 × 10⁻⁷ and weight decay = 1 × 10⁻², with a batch size of 128 for a maximum of 100 epochs. A StepLR scheduler was used to halve the learning rate every 5 epochs (γ = 0.5, step_size = 5). A cross-entropy loss function weighted inversely to class frequency was used to mitigate the class imbalance that arose from P0.
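To make the two imbalance and scheduling ingredients concrete, the following sketch computes inverse-frequency class weights and the learning rate produced by a step schedule. It is written in plain Python for clarity; in PyTorch the equivalent pieces would be the `weight` argument of `torch.nn.CrossEntropyLoss` and `torch.optim.lr_scheduler.StepLR`. The exact weight normalization (total samples divided by the number of classes times the class count) is an assumption, since the text only states that weights are inverse to class frequency.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count) (assumed normalization)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * counts[c]) for c in sorted(counts)}


def step_lr(base_lr, epoch, step_size=5, gamma=0.5):
    """Learning rate after `epoch` epochs when it is multiplied by `gamma` every `step_size` epochs."""
    return base_lr * gamma ** (epoch // step_size)


# Toy timestamp labels in which class 0 (e.g., P0) dominates.
toy_labels = [0] * 6 + [1] * 3 + [2] * 1
print(inverse_frequency_weights(toy_labels))
print([step_lr(5e-7, epoch) for epoch in (0, 5, 10)])
```

With these weights, an error on a rare phase contributes more to the loss than an error on the dominant P0 class.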

For the Inception-v3 and Xception architectures, a slightly different training configuration was adopted. Instead of the weighted cross-entropy loss used for other models, both networks were trained using a Focal Loss formulation (γ = 2.0) with per-class weights computed inversely to class frequency. This loss function down-weights well-classified examples and focuses training on harder or underrepresented samples, improving model robustness. Optimization was also performed with Adam (learning rate = 5 × 10⁻⁵, weight decay = 1 × 10⁻²) but using a OneCycleLR scheduler that increased the learning rate up to 5 × 10⁻⁴ and then decayed it following a cosine annealing strategy (pct_start = 0.1).
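The down-weighting effect of the focal loss can be seen in a small single-sample sketch. It follows the standard formulation, in which the cross-entropy term is scaled by (1 − p)^γ, with p the probability assigned to the true class; the function name, the optional per-class weight dictionary, and the numerical clamp are illustrative assumptions rather than details taken from the study.

```python
import math


def focal_loss(probs, target, gamma=2.0, class_weights=None):
    """Focal loss for one sample: -w * (1 - p_t)^gamma * log(p_t)."""
    p_t = max(probs[target], 1e-12)  # clamp to avoid log(0)
    weight = 1.0 if class_weights is None else class_weights[target]
    return -weight * (1.0 - p_t) ** gamma * math.log(p_t)


easy = [0.9, 0.05, 0.05]  # confidently correct prediction for class 0
hard = [0.2, 0.5, 0.3]    # true class 0 receives low probability
for name, probs in (("easy", easy), ("hard", hard)):
    cross_entropy = focal_loss(probs, 0, gamma=0.0)
    focal = focal_loss(probs, 0, gamma=2.0)
    print(name, round(cross_entropy, 4), round(focal, 4))
```

Setting γ = 0 recovers the ordinary cross-entropy, which makes it easy to compare how strongly each sample is attenuated.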

In all experiments, early stopping was monitored through validation loss. The dataset was split into 60% training, 20% validation, and 20% test sets, preserving class distributions across subsets.

The feature vectors extracted from the penultimate layer of each network were later used as inputs to the temporal models described below. The spatial study thus provided the basis for identifying which CNN encoder produced the most representative embeddings of the surgical workflow.

3.4.2. Temporal Study

The temporal study addressed the modeling of sequential dependencies between frames to achieve temporally consistent phase recognition results. Two state-of-the-art temporal architectures were evaluated at this stage: the Multi-Stage Temporal Convolutional Network (MS-TCN) and the Segment-Attentive Hierarchical Consistency (SAHC) model.

The MS-TCN employs a stack of temporal convolutional layers with dilated convolutions and multiple refinement stages, progressively refining frame-level predictions. Its architecture enables the modeling of long-range temporal dependencies while preserving high temporal resolution, which is essential for endoscopic pituitary surgery, given its extended duration, with an average of approximately 82 min per procedure.

In contrast, the SAHC model introduces an attention-based hierarchical framework designed to capture dependencies at multiple temporal scales. Through segment-level attention modules, SAHC emphasizes temporally relevant features and enforces consistency across adjacent segments, which is particularly beneficial for transitions between surgical phases.

Both temporal models were trained for up to 30 epochs with an Adam optimizer, using a learning rate of 5 × 10⁻⁵ for SAHC and 1 × 10⁻³ for MS-TCN. The batch size was defined at the patient level, meaning that all videos belonging to the same patient were grouped within the same batch to preserve intra-patient temporal consistency and prevent data leakage across subjects. This strategy ensured that temporal dependencies were learned coherently within individual surgical cases while maintaining subject-level separation between training and validation sets. The input sequences consisted of frame-wise spatial embeddings obtained from the best-performing CNN.

In the UATD mechanism, two key hyperparameters were adjusted empirically through exploratory validation experiments: the uncertainty threshold (τ), which controlled the confidence required for pseudo-label propagation, and the class-balancing factor (β), which adjusted the weighting of underrepresented phases during training. Both parameters were explored within the ranges reported in the original Less is More implementation: τ ∈ {0.02, 0.1, 0.2} and β ∈ {0.9, 0.95, 0.98, 0.99}. Each combination was evaluated under identical training conditions to determine which values achieved the best performance on the validation set.
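The exhaustive search over the twelve (τ, β) combinations can be sketched as follows. The `evaluate` callback is hypothetical: it stands in for training a model with the given pair and returning its validation score, and the toy scoring function below is only a placeholder so that the example runs.

```python
from itertools import product

TAUS = (0.02, 0.1, 0.2)
BETAS = (0.9, 0.95, 0.98, 0.99)


def grid_search(evaluate, taus=TAUS, betas=BETAS):
    """Evaluate every (tau, beta) pair and return the best pair with all scores."""
    results = {(tau, beta): evaluate(tau, beta) for tau, beta in product(taus, betas)}
    best = max(results, key=results.get)
    return best, results


def toy_validation_score(tau, beta):
    """Placeholder score; a real run would train and validate a model here."""
    return beta - abs(tau - 0.1)


best_pair, all_scores = grid_search(toy_validation_score)
print(best_pair, len(all_scores))
```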

The selected configuration (τ = 0.1, β = 0.99) yielded the most stable and accurate results, consistent with the findings discussed in the reference study [12]. These values impose a relatively strict confidence threshold for pseudo-label propagation, ensuring that only highly reliable predictions are diffused (frames with lower uncertainty than the threshold), while appropriately compensating for class imbalance during training.

All models were implemented in PyTorch (https://pytorch.org/) and trained on a workstation with an NVIDIA RTX 3090 GPU and 64 GB of RAM. Although the two-stage training and Monte Carlo Dropout introduced additional computational overhead (the model has approximately 3.16 M parameters), this overhead was confined to the training phase. Once trained, the temporal model operates with standard forward passes, allowing for efficient inference, averaging approximately 10 s per test patient.

Together, the two approaches (spatial and temporal) allowed the system to capture both visual appearance and workflow dynamics, which are essential for surgical phase recognition.

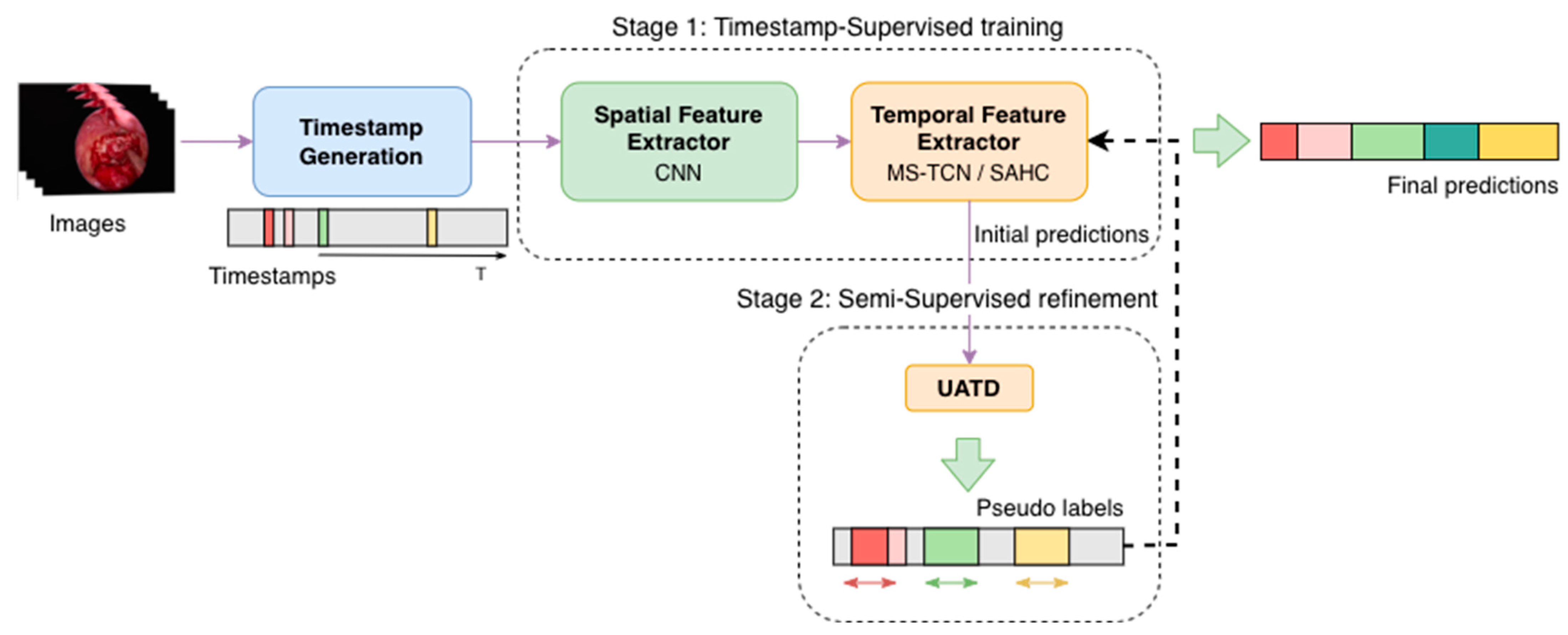

3.5. Training Procedure

The complete training procedure followed the two-stage SSL strategy proposed in the paper Less is More: Surgical Phase Recognition with Timestamp Supervision [12], adapted to the pituitary surgery context. The framework combined the spatial and temporal components described above into a unified training process composed of two consecutive phases, as illustrated in Figure 5.

In the first stage (i.e., Timestamp-Supervised training), only the timestamp-labeled frames were used for model training, while the remaining frames were left out. The spatial extractor was fine-tuned on these labeled samples to learn representative visual features for each surgical phase. The resulting embeddings were then used to train the temporal model and produce initial predictions, ensuring that temporal learning began from a stable and discriminative feature space.

In the second stage (i.e., Semi-Supervised refinement), these model predictions on unlabeled frames were leveraged to generate pseudo-labels using the Uncertainty-Aware Temporal Diffusion (UATD) mechanism. For each frame, multiple stochastic forward passes with Monte Carlo Dropout were performed to estimate predictive uncertainty. Frames with low uncertainty (below a threshold τ of 0.1) and high temporal consistency with neighboring frames were selected for propagation. These pseudo-labeled frames were then incorporated into the training set, allowing the model to be retrained jointly on both labeled and pseudo-labeled data in an iterative fashion.

Training was alternated between batches of labeled and pseudo-labeled samples to stabilize convergence. The optimization settings at this stage (learning rate, loss weighting, and number of epochs) were kept consistent with those described in Less is More [12] to ensure methodological comparability.

This two-step pipeline enabled the model to progressively learn temporal coherence from sparse supervision, refining its understanding of phase transitions as pseudo-labels were iteratively diffused across the video sequences. The final models were selected based on the best validation performance, achieving an optimal trade-off between accuracy, stability, and annotation efficiency.

Both the seven-phase and six-phase configurations were evaluated as two independent lines of experimentation, each trained using the same procedure to ensure a fair and consistent comparison of results.

3.6. Metrics

Model performance was assessed using standard classification and segmentation metrics: accuracy, precision, recall, and F1-score. All metrics, except for accuracy, were reported in their macro-averaged versions, ensuring equal weight for each class regardless of its frequency in the dataset. The Jaccard index, computed as the Intersection over Union (IoU), was additionally included for all temporal models after applying the UATD pseudo-label propagation method, to facilitate direct comparison with the results reported in the original Less is More study [12]. Additionally, 95% confidence intervals (CIs) were computed through bootstrapping with 50 iterations and sampling with replacement at the patient level (seven patients were drawn in each iteration). This evaluation strategy provided a more robust estimation of model performance while accounting for inter-patient variability.
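A minimal sketch of these metrics is given below, using only the Python standard library. The macro-averaged F1-score and Jaccard index follow their usual per-class definitions. The bootstrap is a simplified assumption: it resamples per-patient scores and takes percentile bounds of their mean, whereas the study may recompute each metric on the pooled frames of the resampled patients. Function names and toy values are hypothetical.

```python
import random


def macro_f1_and_jaccard(y_true, y_pred):
    """Macro-averaged F1-score and Jaccard index (IoU) over the classes in y_true."""
    classes = sorted(set(y_true))
    f1_scores, ious = [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        f1_scores.append(2 * tp / (2 * tp + fp + fn))
        ious.append(tp / (tp + fp + fn))
    return sum(f1_scores) / len(classes), sum(ious) / len(classes)


def bootstrap_ci(per_patient_scores, n_iter=50, sample_size=7, alpha=0.05, seed=0):
    """Percentile confidence interval from patient-level resampling with replacement."""
    rng = random.Random(seed)
    means = sorted(
        sum(rng.choices(per_patient_scores, k=sample_size)) / sample_size
        for _ in range(n_iter)
    )
    lower = means[int((alpha / 2) * n_iter)]
    upper = means[min(n_iter - 1, int((1 - alpha / 2) * n_iter))]
    return lower, upper


print(macro_f1_and_jaccard([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0]))
print(bootstrap_ci([0.55, 0.60, 0.62, 0.58, 0.70, 0.66, 0.59, 0.61, 0.64]))
```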

5. Discussion

The results demonstrate that Surgical Phase Recognition (SPR) in endoscopic pituitary surgery can be effectively addressed using a Semi-Supervised Learning (SSL) framework that relies on sparse timestamp annotations. Despite using less than 3% of the fully annotated data, the proposed method achieved competitive performance compared with traditional fully supervised approaches, confirming that limited yet well-distributed supervision can capture the essential spatio-temporal structure of complex neurosurgical workflows.

A clear performance gap was observed between the spatial and temporal models under the seven-phase configuration (Line 1 of experimentation). As shown in Table 2, spatial CNNs achieved limited performance when trained on the reduced timestamped dataset (3% of original data), with F1-scores ranging from 0.25 to 0.38 across architectures. Among the three, Xception ranked as the strongest spatial feature extractor, providing the most discriminative representations of surgical scenes. This behavior could be attributed to its depthwise separable convolutions, which allow the model to capture more complex spatial patterns that are relevant for the classification of surgical phases. It should be noted that, across all spatial studies conducted in Line 1, the use of two timestamps per phase (2Ts) improved results compared to a single timestamp (1Ts). This increase in annotation density provides the model with further information on phase characteristics without generating a significant annotation burden for neurosurgeons, as the annotation of timestamps remains efficient.

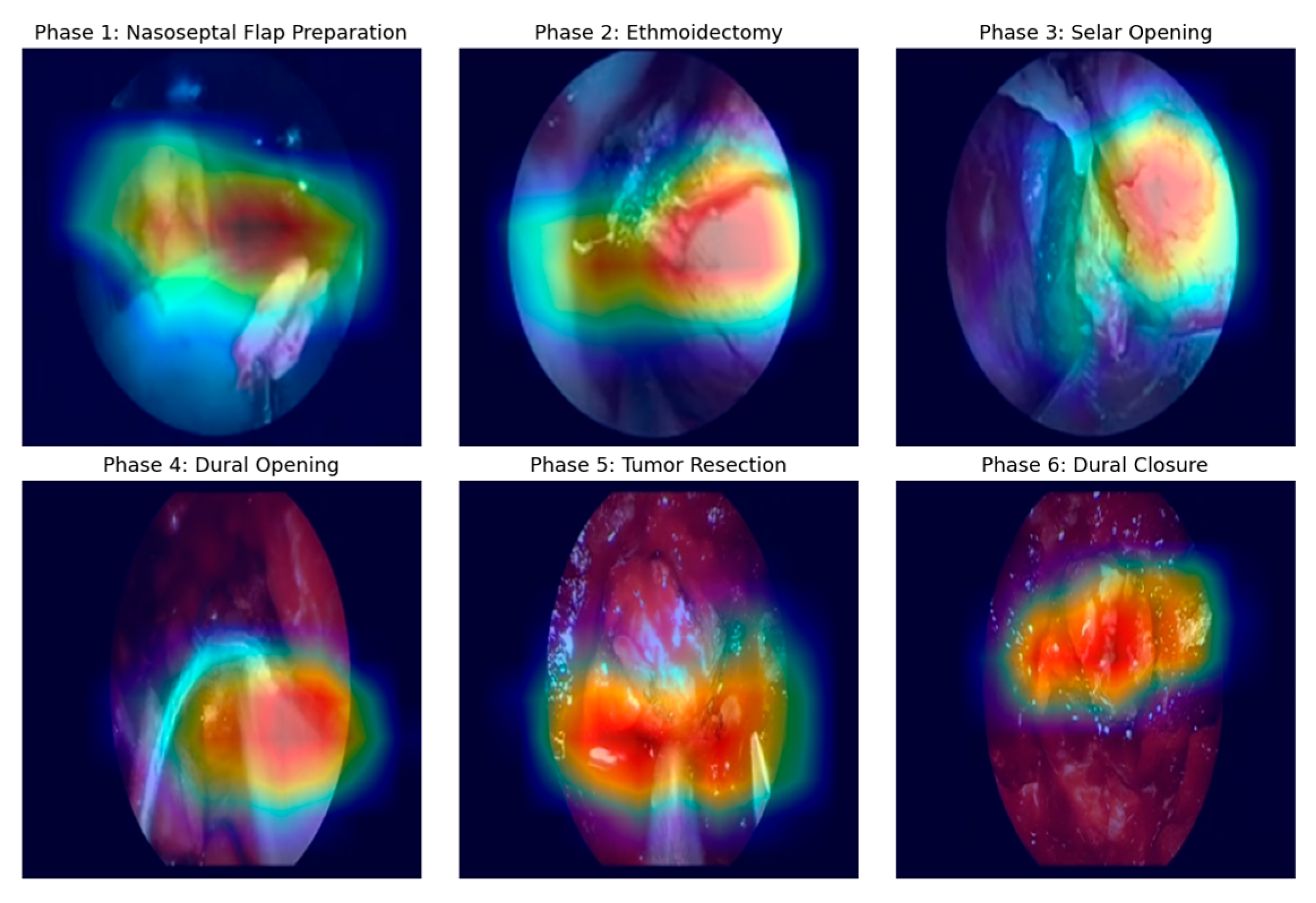

Given that Xception demonstrated superior performance, its classification mechanism was analyzed using Grad-CAM activation maps. Figure 7 displays these activations for one representative frame across phases P1-P6 and shows that Xception effectively concentrates attention on relevant regions of each frame (particularly the operative field and surgical instruments), allowing it to capture meaningful spatial cues for phase discrimination despite the limited supervision.

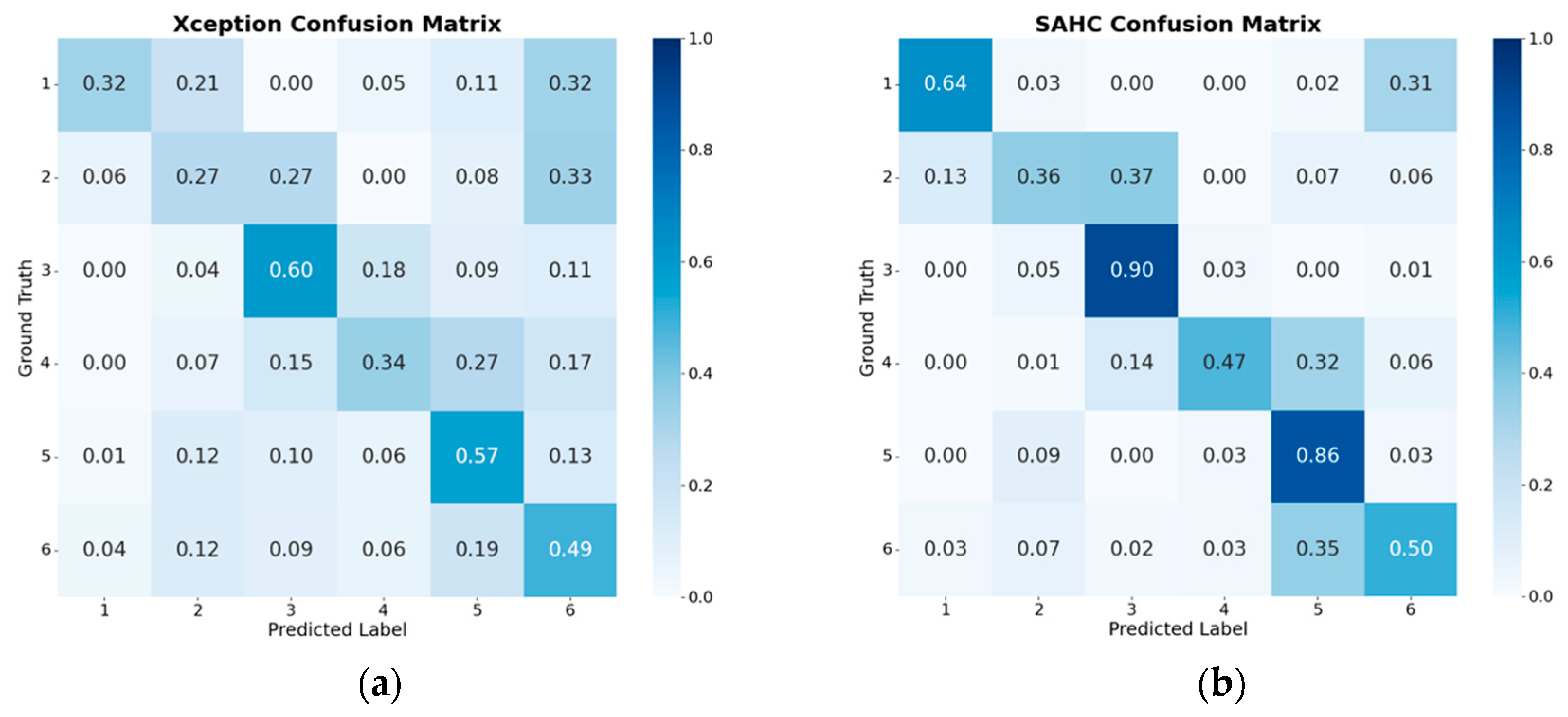

However, the confusion matrices in Figure 6 highlight the main limitation of spatial modeling: the Xception model alone (Figure 6a) exhibits considerable misclassification of phases, indicating overlap between adjacent phases and confusion during transitions, since visual differences are often subtle in pituitary surgery. In particular, the Tumor Resection (P5) and Dural Closure (P6) phases were among the most prone to confusion, as these are longer stages in the procedure and present high intra-class variability, with frames within the same phase showing markedly different visual appearances. At the same time, inter-class variability between consecutive phases is limited, leading to high visual similarity in transitions that further complicates discrimination.

Incorporating temporal modeling substantially improved performance, as reflected in the sharper diagonal values observed in the SAHC confusion matrix of Figure 6b. On average, recall increased from 0.43 in the Xception model to 0.62 in SAHC, showing consistent improvement across all phases: for instance, P1 nearly doubled its individual recall from 0.32 to 0.63 when temporal dependencies and pseudo-label propagation were considered. Misclassifications in the off-diagonal regions were also markedly reduced in the SAHC matrix (Figure 6b), confirming that the inclusion of the UATD mechanism mitigates the noise typically associated with pseudo-label refinement, as reported in [12]. By filtering predictions according to uncertainty, UATD reduced the propagation of ambiguous labels, especially near phase boundaries, and the introduction of confidence masks further strengthened this process by prioritizing frames with higher predictive reliability, contributing to smoother and more stable phase transitions.

Among temporal architectures, a clear difference was observed in phase recognition performance between MS-TCN and SAHC, as seen in the results reported in Table 3 and Table 4. The MS-TCN employs stacked dilated temporal convolutions with multiple refinement stages, progressively improving frame-level predictions while maintaining high temporal resolution, an essential property given the extended duration of pituitary surgeries (averaging 82 min per procedure). The SAHC model, in contrast, integrates a hierarchical attention mechanism that captures temporal dependencies at multiple scales and refines frame-level predictions, which proved particularly effective in this context. As a result, SAHC achieved better overall performance across different spatial encoders, particularly when incorporating the Xception network with two timestamps per phase, achieving an F1-score of 0.60 [0.55–0.65] compared to the 0.39 [0.37–0.41] obtained with the Multi-Stage TCN. The attention mechanism and hierarchical consistency constraints introduced by SAHC facilitated the modeling of phase dependencies across different temporal scales, which was particularly advantageous in this surgery, characterized by variable duration and different visual conditions across patients.

The comparative analysis between the two lines of experimentation (i.e., seven-phase versus six-phase configuration) offers additional insights into the influence of class structure on model performance. Removing the outside-the-body phase (P0) reduced class imbalances and improved the temporal consistency of predictions, yielding a modest but consistent performance gain across all five metrics (e.g., from an F1-score of 0.59 [0.54–0.64] in the seven-phase setup to 0.61 [0.53–0.68] in the six-phase configuration).

This improvement suggests that removing highly recurrent but semantically less informative segments, such as those of P0, helps the model focus on clinically meaningful transitions between intraoperative phases. However, this simplification also removes information about workflow interruptions, such as endoscope cleaning events, which could be valuable for real-time surgical workflow monitoring. Therefore, the choice between configurations should ultimately depend on the intended application—whether the goal is to maximize phase segmentation accuracy or to capture a comprehensive representation of the surgical process.

When comparing the best-performing model (Xception-SAHC with 3% labeled data) to the fully supervised SAHC benchmark reported in [7], comparable performance is observed: our SSL approach reaches an accuracy of 0.70 versus 0.71 for the fully annotated model, and an F1-score of 0.61 versus 0.68. These results are notable given the substantial reduction in manual annotation: the SSL model was trained using only ~3% of the labeled dataset, with labels subsequently extended to unlabeled frames through pseudo-label propagation with the UATD mechanism. This outcome indicates that the proposed framework can approach the performance of fully supervised models, nearly matching their accuracy and trailing by 0.07 in F1-score, while relying on a fraction of the annotation effort.

Notably, the Less is More study [12] reported that Timestamp Supervision could reduce annotation time by 74% compared with full frame-level labeling; the present work extends this paradigm further, achieving strong performance using only ~3% of labeled samples. In terms of performance levels, the proposed Xception-SAHC model achieved a Jaccard index of 0.46 [0.38–0.53] and an accuracy of 0.70 [0.63–0.76] in the six-phase configuration (excluding the outside-the-body phase P0). Although these values are below the 0.73 Jaccard index and 0.88 accuracy reported in the Less is More study [12] for the Cholec80 dataset, the comparison should be interpreted in light of the procedural context: the Cholec80 dataset comprises standardized laparoscopic cholecystectomy procedures, which exhibit lower variability and shorter duration, whereas the present work addresses a more complex and heterogeneous neurosurgical scenario. The results therefore underscore the capacity of SSL to generalize effectively in data-scarce conditions, offering a scalable, annotation-efficient, and clinically viable solution for surgical phase recognition in complex neurosurgical workflows.
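For readers less familiar with the frame-level metrics compared above, the sketch below computes accuracy, Jaccard index, and F1-score from two label sequences. It assumes macro-averaging over the phases present in the ground truth; this averaging scheme and the function name `phase_metrics` are assumptions for illustration and may differ from the evaluation protocol actually used in this study or in [12].

```python
from typing import Dict, List


def phase_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Frame-level accuracy plus macro-averaged Jaccard index and F1-score."""
    assert y_true and len(y_true) == len(y_pred)
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    jaccards, f1s = [], []
    for c in sorted(set(y_true)):  # only phases present in the ground truth
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        # tp + fn >= 1 because phase c occurs in y_true, so no division by zero.
        jaccards.append(tp / (tp + fp + fn))
        f1s.append(2 * tp / (2 * tp + fp + fn))

    return {
        "accuracy": accuracy,
        "jaccard": sum(jaccards) / len(jaccards),
        "f1": sum(f1s) / len(f1s),
    }


# Toy example with three phases and six frames.
metrics = phase_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0])
print(metrics)
```

Because the Jaccard index penalizes both false positives and false negatives within each phase, it is typically lower than accuracy on the same predictions, which is consistent with the gap between the two values reported above.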

Still, some limitations must be acknowledged. In this study, the random-sampling scheme proposed in the original paper [12] was adopted, in which timestamps are selected near the center of each phase while avoiding the ambiguous boundary regions. However, these randomly sampled timestamps may fail to capture the most representative visual states of each phase due to the specific characteristics of endoscopic pituitary surgery, and pseudo-labels may accumulate minor errors over long sequences despite the application of uncertainty filtering. This surgery is considerably longer (averaging approximately 80 min) than most reported laparoscopic procedures, and extended phases, such as the Tumor Resection phase (P5), are especially prone to propagation drift over time. Moreover, pituitary surgery presents substantial variability across patients and phases, a larger number of phases, and less clearly defined transitions. These factors make phase boundaries difficult to determine, even for expert neurosurgeons, which inherently limits model performance and can reduce the reliability of randomly selected timestamps. Nevertheless, Timestamp Supervision may help address this issue by encouraging the model to learn temporal patterns that better delineate phase transitions. Another limitation arises from the exclusion of the outside-the-body phase (P0) in the second part of the study: because this phase occurs frequently and unpredictably for brief intervals throughout the procedure, excluding it reduces the model’s applicability to uses such as real-time workflow monitoring.
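The sampling idea described above (random timestamps drawn from the central part of each phase, away from the boundaries) can be sketched as follows. This is an illustrative approximation rather than the exact procedure of [12]: the helper names, the `margin` fraction excluded at each end of a segment, and the choice to sample per contiguous segment are assumptions made here.

```python
import random
from typing import Dict, List, Tuple


def phase_segments(labels: List[int]) -> List[Tuple[int, int, int]]:
    """Return (phase, start, end) for each contiguous run; end is inclusive."""
    segments = []
    start = 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[start]:
            segments.append((labels[start], start, i - 1))
            start = i
    return segments


def sample_timestamps(
    labels: List[int],
    per_segment: int = 1,
    margin: float = 0.25,
    seed: int = 0,
) -> Dict[int, int]:
    """Pick random frames from the central window of every phase segment.

    margin is the (assumed) fraction of each segment skipped at both ends
    so that ambiguous boundary frames are never selected.
    """
    assert 0.0 <= margin < 0.5, "margin must leave a non-empty central window"
    rng = random.Random(seed)
    picked: Dict[int, int] = {}
    for phase, start, end in phase_segments(labels):
        skip = int((end - start + 1) * margin)
        candidates = list(range(start + skip, end - skip + 1))
        k = min(per_segment, len(candidates))
        for t in rng.sample(candidates, k):
            picked[t] = phase
    return picked


# Toy video: phase 1, then phase 2, then phase 1 again (20 frames in total).
video_labels = [1] * 8 + [2] * 4 + [1] * 8
stamps = sample_timestamps(video_labels, per_segment=1)
annotated_fraction = len(stamps) / len(video_labels)
print(stamps, annotated_fraction)
```

A surgeon-guided variant would simply replace the random draw with frames chosen by the annotator, leaving the rest of the pipeline unchanged.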

Future research will explore more informed timestamp-selection strategies to address these limitations. Recent studies on frame sampling propose methods such as normal-distribution-centered sampling within phases [25]. In practical clinical settings, however, random selection will be replaced with surgeon-guided frame selection: surgeons will choose one or two representative frames per phase, namely those they can identify with absolute confidence as belonging to a specific class, which will then serve as high-reliability anchor points for pseudo-label propagation. Such informed selection is expected to strengthen the initial supervision and improve temporal consistency. Moreover, because this framework was tested in a demanding and highly variable domain, we anticipate that it will perform better in surgical procedures with lower variability and more reproducible workflows, such as laparoscopic surgeries. Additionally, future studies will consider incorporating the outside-the-body phase (P0) into the learning framework to enhance the model’s robustness and deployment potential in real surgical environments.

From a clinical perspective, this framework highlights the feasibility of developing scalable and annotation-efficient surgical AI systems. By significantly reducing expert workload without compromising accuracy, the proposed approach could enable broader adoption of AI-driven workflow analysis in real surgical settings.

This work paves the way for the future development of more robust models for surgical workflow recognition by incorporating into surgical vision modern auto-, semi-, and self-supervision techniques, approaches that have already achieved remarkable success in other computer vision domains. Our results, together with recent research, suggest that it may soon be possible to develop a generic model, that is, a foundation model for surgical vision capable of leveraging large volumes of unlabeled video. With appropriate fine-tuning for specific tasks and procedures, such a model could support the development of adaptable solutions for surgical workflow recognition, intraoperative assistance, and surgical training, ultimately advancing the clinical translation of intelligent surgical systems.

6. Conclusions

The main contributions of this study are threefold. First, it demonstrates the effectiveness of a Semi-Supervised Learning (SSL) strategy for Surgical Phase Recognition in endoscopic pituitary surgery, a particularly complex procedure characterized by significant phase variability, limited working space, and frequent visual artifacts. Second, it evaluates two complementary strategies for handling outside-the-body frames, which occur when the endoscope is withdrawn for cleaning or adjustment. Third, it compares multiple spatial-temporal architectures, identifying the Xception-SAHC combination as the most effective for modeling both visual and temporal dependencies.

Our SSL framework, based on Timestamp Supervision and uncertainty-aware pseudo-label propagation, substantially reduces annotation requirements while maintaining robust performance. With less than 3% of annotated data, it achieves an F1-score of 0.60 [0.55–0.65], comparable to fully supervised methods. Additionally, using a six-phase configuration improves temporal stability by reducing the class imbalance introduced by recurrent outside-the-body frames.

Overall, these findings highlight uncertainty-guided SSL as a practical alternative to full supervision in challenging surgical scenarios. Its application could enable efficient labeling of complete surgical video datasets with minimal manual effort and provide the basis for downstream tasks such as decision support in the operating room, automatic surgical report generation, and preparation of educational material for medical students.