Low-Altitude Photogrammetry and 3D Modeling for Engineering Heritage: A Case Study on the Digital Documentation of a Historic Steel Truss Viaduct

Featured Application

Abstract

1. Introduction

2. Materials and Methods

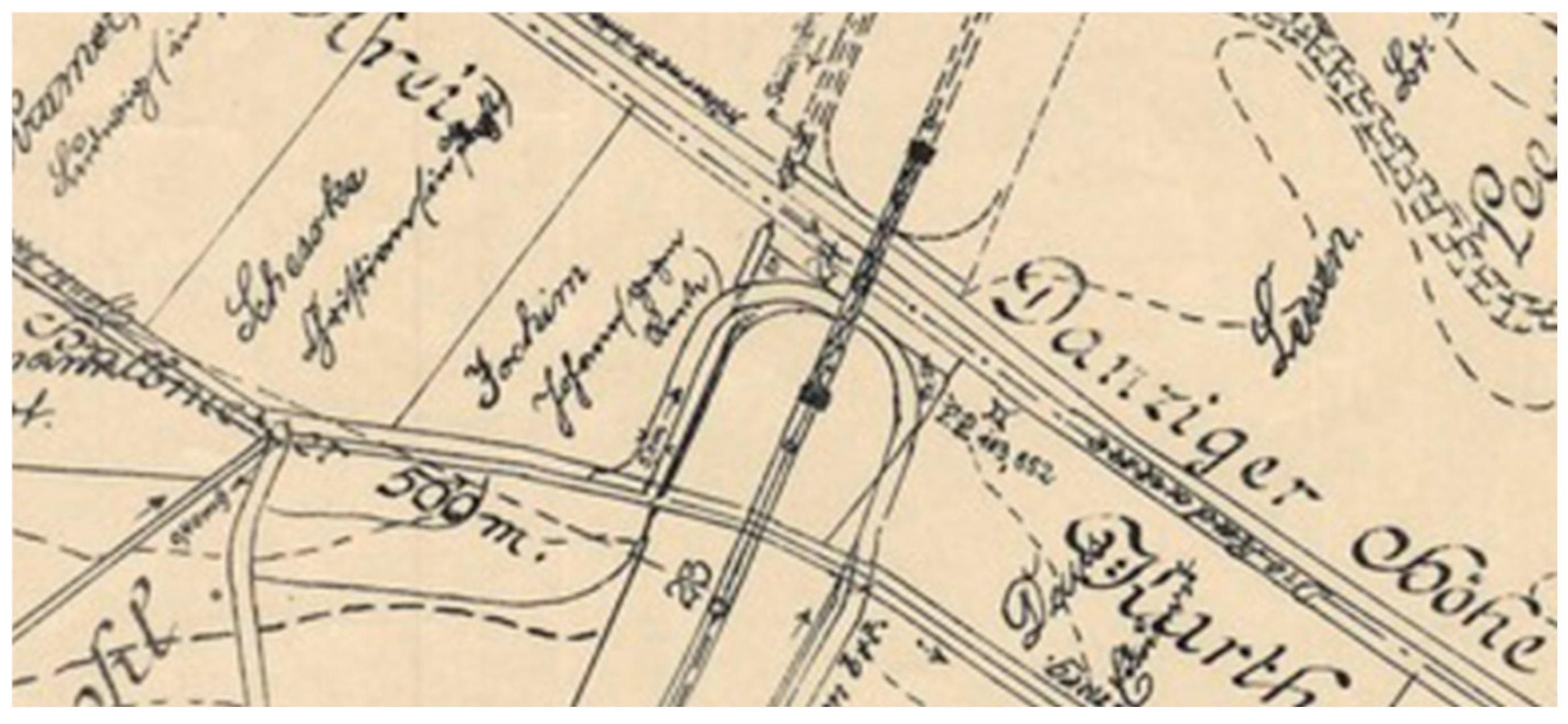

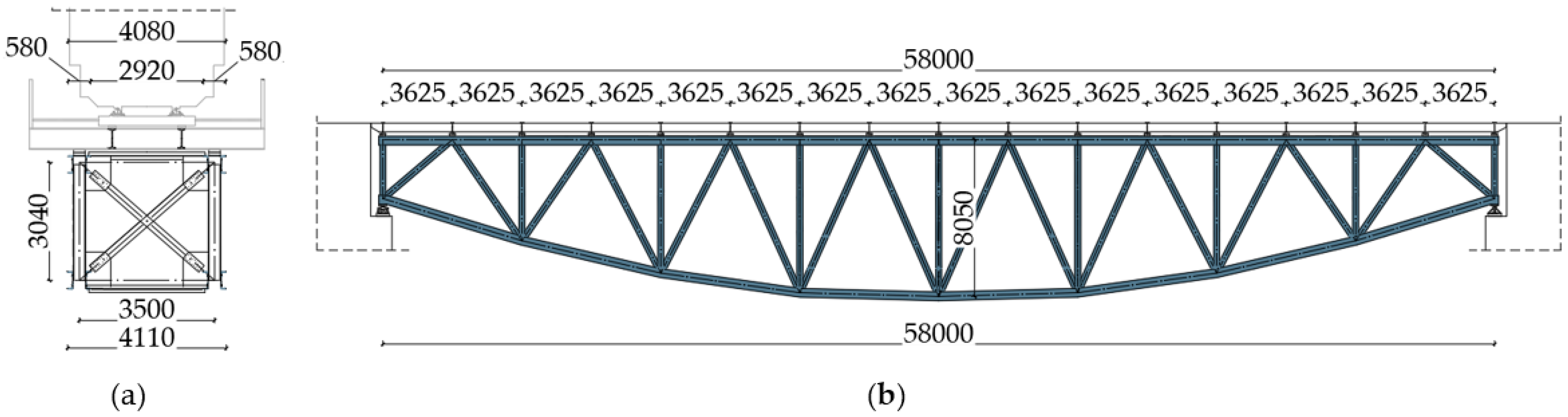

2.1. Bridge Description

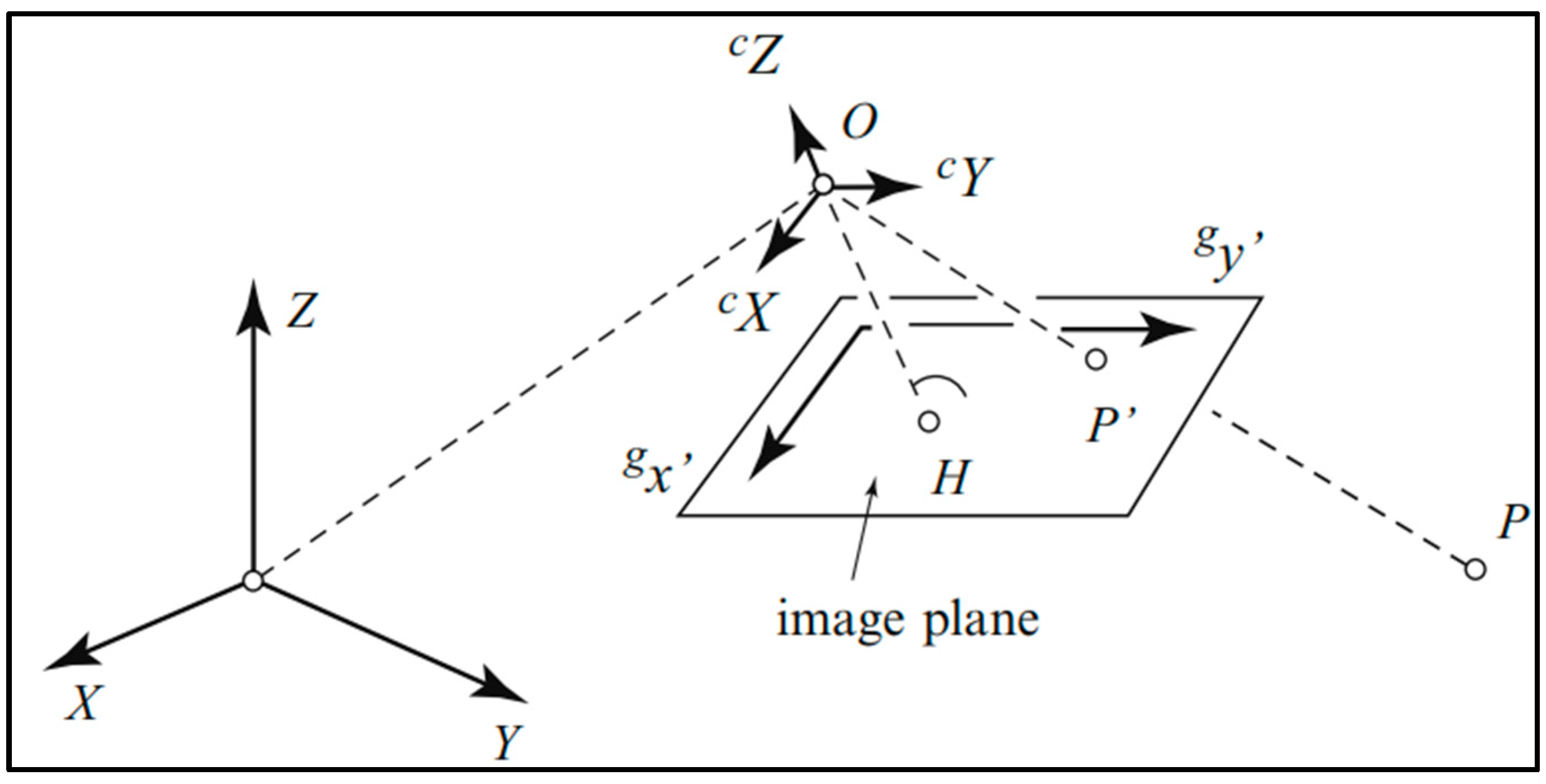

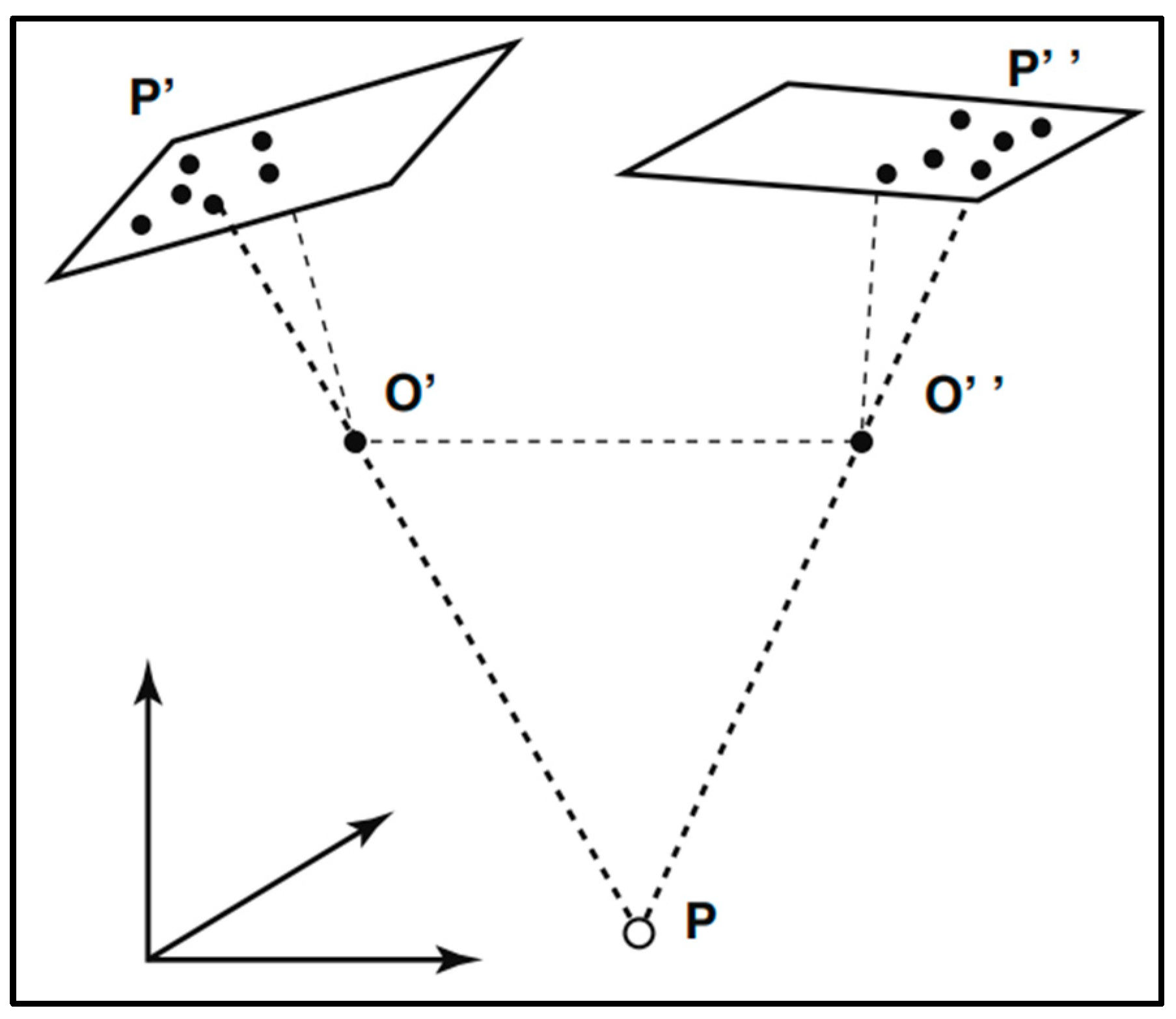

2.2. Theoretical Foundations of Photogrammetry

- f: The focal length represents the distance from the camera’s perspective center to the image plane.

- X0, Y0, Z0: The coordinates of the camera’s perspective center in the object coordinate system, defining the camera’s position in 3D space.

- R: The rotation matrix, a 3 × 3 matrix that specifies the camera’s orientation or attitude relative to the object coordinate system.
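For orientation, the parameters listed above are the ones that appear in the collinearity equations, which relate an object point $(X, Y, Z)$ to its image coordinates $(x, y)$. The form below is a common textbook statement and not necessarily the exact notation of this paper. It assumes the perspective center is written $(X_0, Y_0, Z_0)$, the principal point is $(x_0, y_0)$, and $r_{ij}$ are the elements of $R$ under the convention that $R$ rotates image-space vectors into the object coordinate system.

```latex
\begin{aligned}
x &= x_0 - f\,
  \frac{r_{11}(X - X_0) + r_{21}(Y - Y_0) + r_{31}(Z - Z_0)}
       {r_{13}(X - X_0) + r_{23}(Y - Y_0) + r_{33}(Z - Z_0)},\\[4pt]
y &= y_0 - f\,
  \frac{r_{12}(X - X_0) + r_{22}(Y - Y_0) + r_{32}(Z - Z_0)}
       {r_{13}(X - X_0) + r_{23}(Y - Y_0) + r_{33}(Z - Z_0)}.
\end{aligned}
```

Under the opposite rotation convention (object to image), the indices of $r_{ij}$ are transposed; the structure of the equations is otherwise unchanged.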

2.3. Advantages and Limitations of Photogrammetry

- Good Geometric Consistency: The accuracy of UAV photogrammetry is generally sufficient for documentation and monitoring purposes, although it is lower than that achieved with Terrestrial Laser Scanning (TLS). The method supports the generation of 3D models with high geometric fidelity, which is particularly important for the detailed documentation of historical sites and artifacts [41].

- Cost-Effectiveness: In comparison to active remote sensing methods like LiDAR, photogrammetry is significantly more cost-effective. The required equipment, primarily high-resolution digital cameras and consumer-grade UAVs, involves substantially lower initial investment and operating expenses [7].

- Non-Invasiveness: As a non-contact measurement technique, photogrammetry is an ideal solution for documenting fragile or delicate objects without the risk of physical damage [38].

- Versatility and Scalability: The technology is highly adaptable, enabling its application to objects of varying scales, from small artifacts to large-scale architectural structures. The integration of Unmanned Aerial Vehicles (UAVs) further extends the utility of this technology by allowing access to hard-to-reach areas [37,42].

- Integration: Photogrammetric data can be seamlessly integrated with other technologies, such as 3D printing, to create physical replicas for educational purposes or conservation efforts [43].

- Lighting and Image Quality: The technique relies heavily on clear images with good lighting and sharp contrast. Poor lighting, reflections, or motion blur can introduce significant errors and compromise the accuracy of the 3D model.

- Calibration: For precise spatial measurements, the cameras used must be accurately calibrated. Errors in camera calibration can lead to inaccuracies in the final 3D model, as the geometric relationship between the camera and the object is incorrectly defined.

- Image Geometry: The process can be challenged by complex objects or environments with hidden or obscured areas. Difficulty in acquiring images from appropriate perspectives can result in incomplete or distorted models.

- Computational Intensity: The processing of large datasets of high-resolution images is computationally demanding. It requires significant processing power and time, which can be a major constraint for large-scale projects.

- Object Properties: The method is less effective on objects with smooth, monochrome, or transparent surfaces (such as polished metal or glass). These surfaces lack the distinct feature points necessary for accurate image matching, which is a fundamental step in the photogrammetry workflow.

- Regulatory and Legal Constraints: The increasing reliance on UAVs for aerial photogrammetry introduces significant limitations derived from national and international flight regulations. Operators are subject to mandatory registration, specific pilot qualifications, and strict airspace restrictions defined by the relevant air navigation services. Non-compliance with these rules, including the requirements to hold mandatory civil liability (OC) insurance and to respect designated geographical zones, can lead to substantial financial penalties [44].

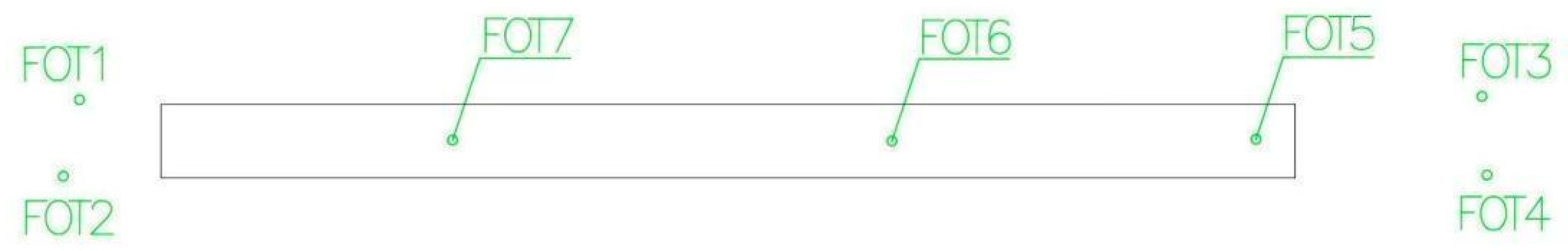

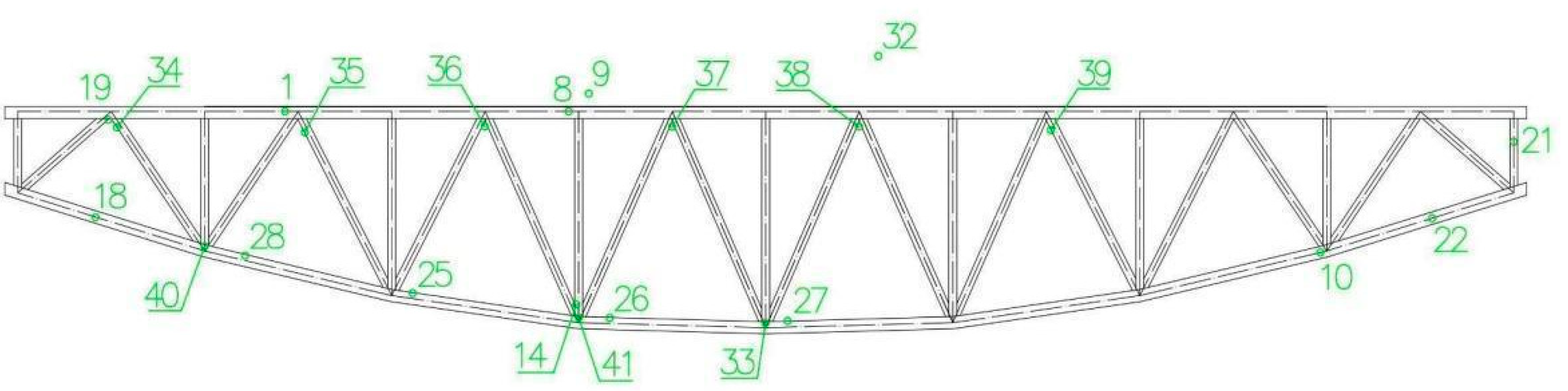

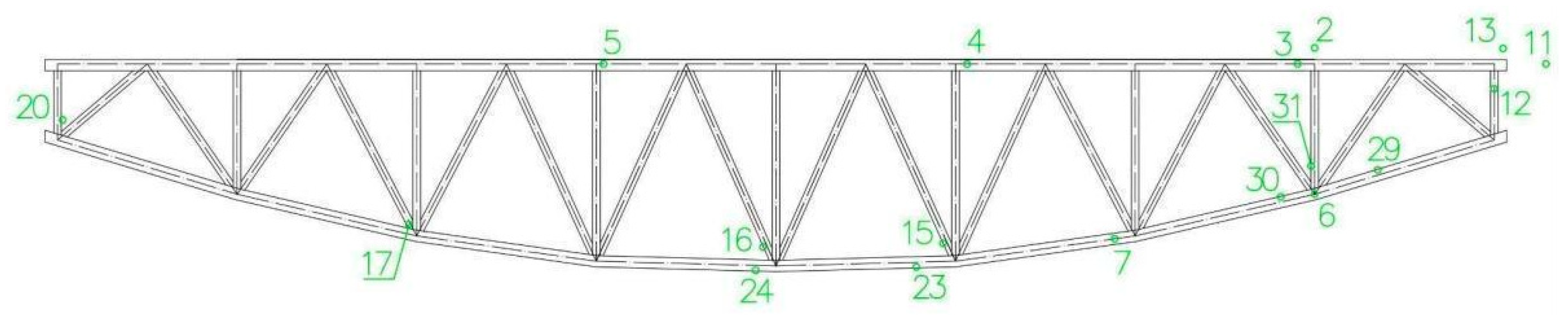

2.4. Geodetic Control Network Establishment

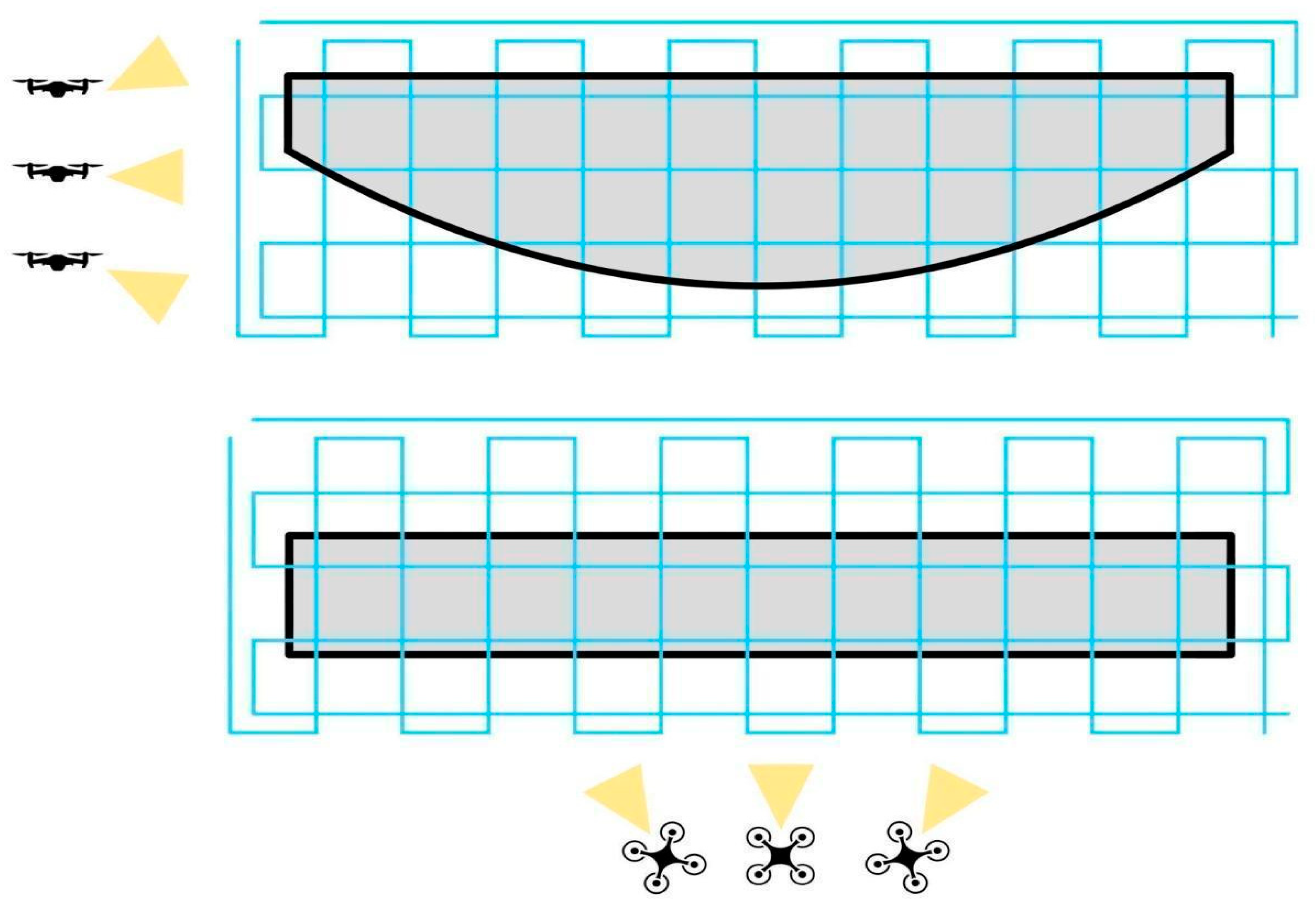

2.5. UAV Data Acquisition and Processing

2.6. Three-Dimensional Model Creation Methodology

3. Results

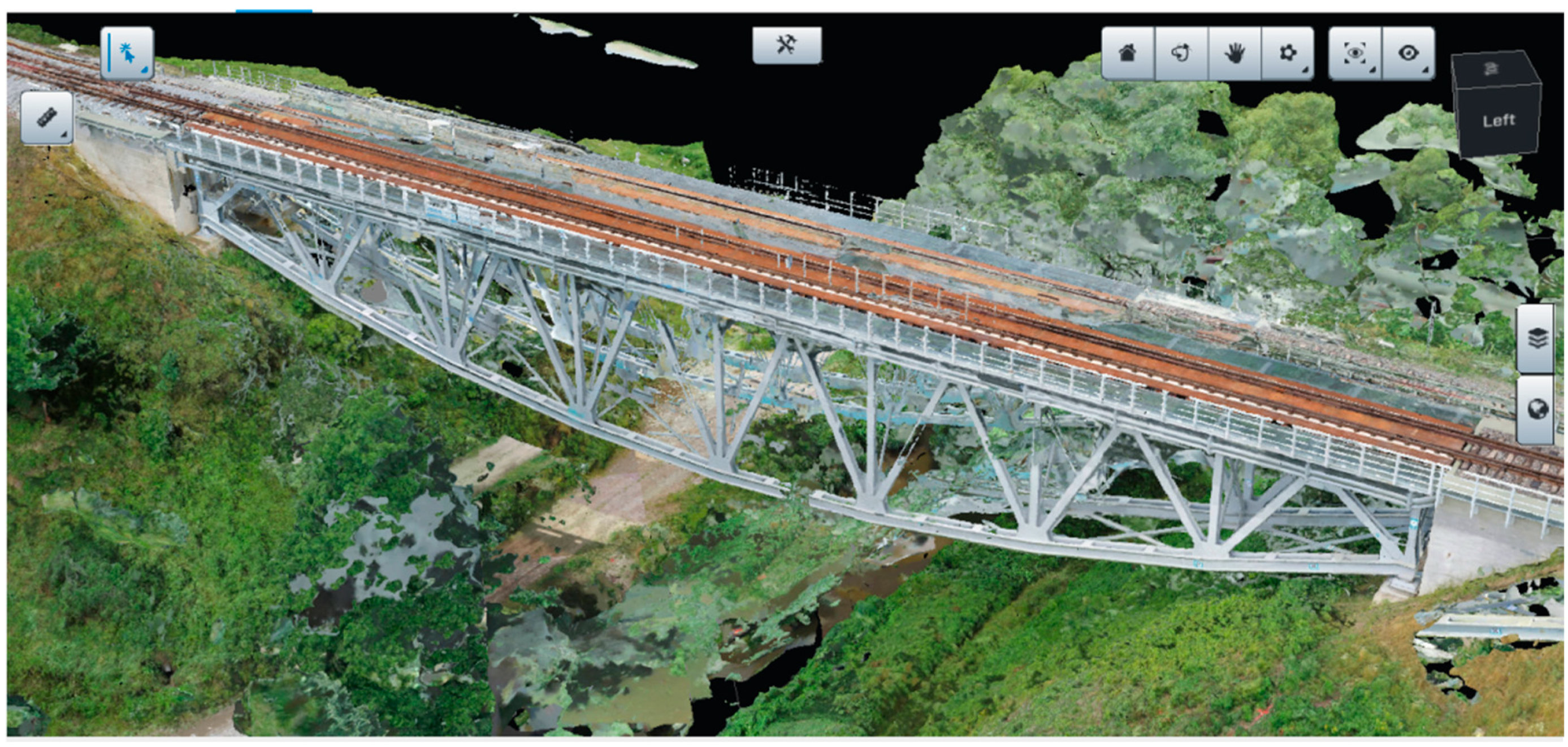

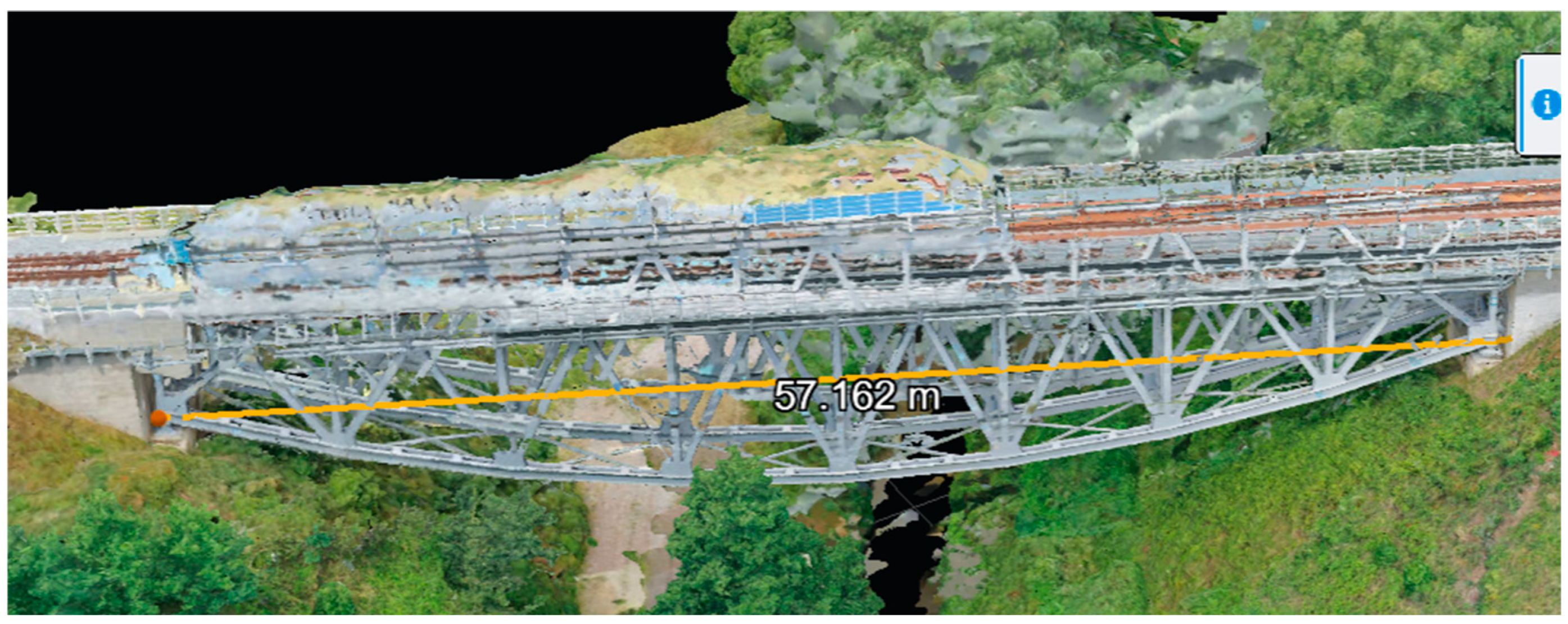

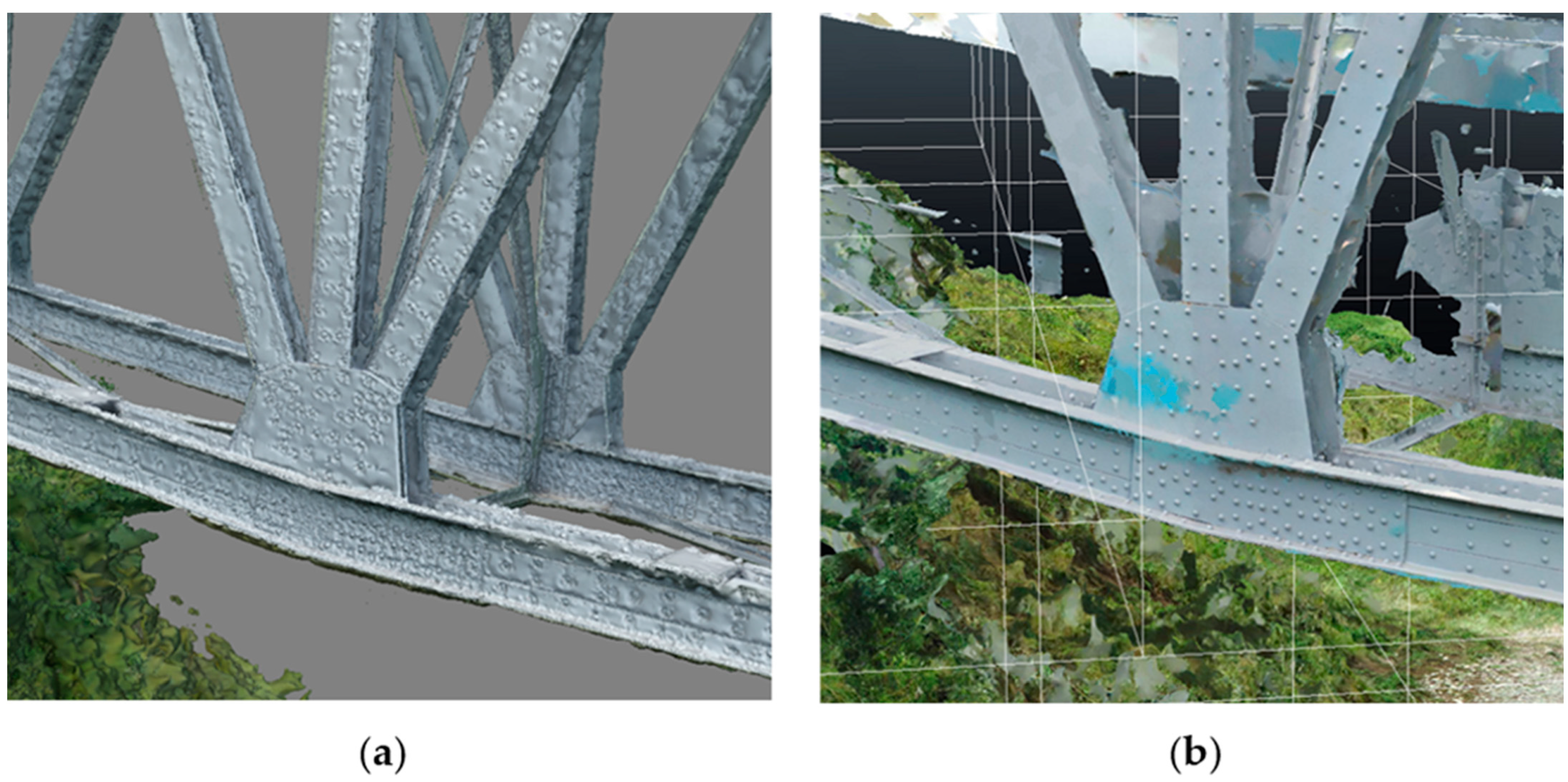

3.1. Results of the Bentley Model

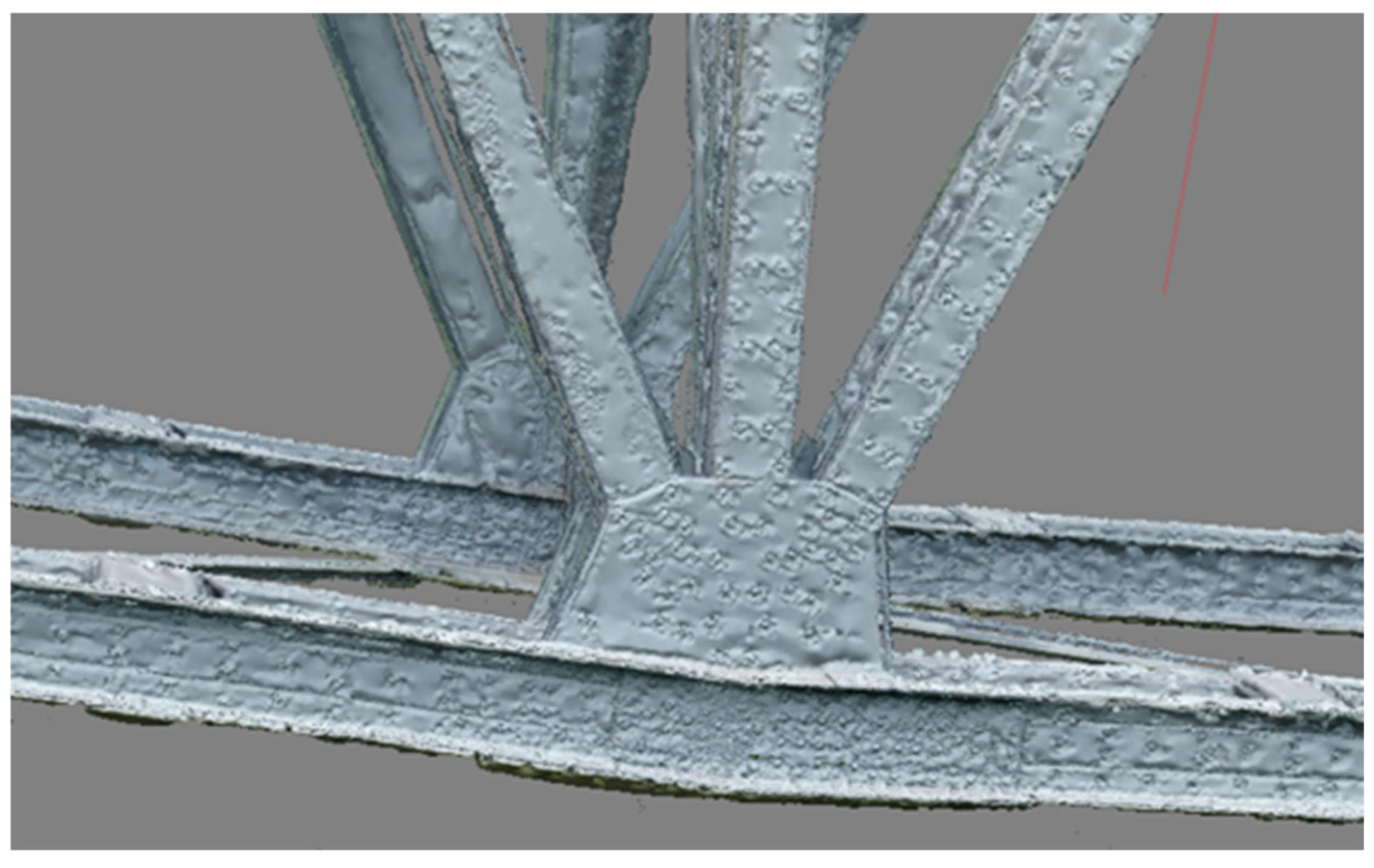

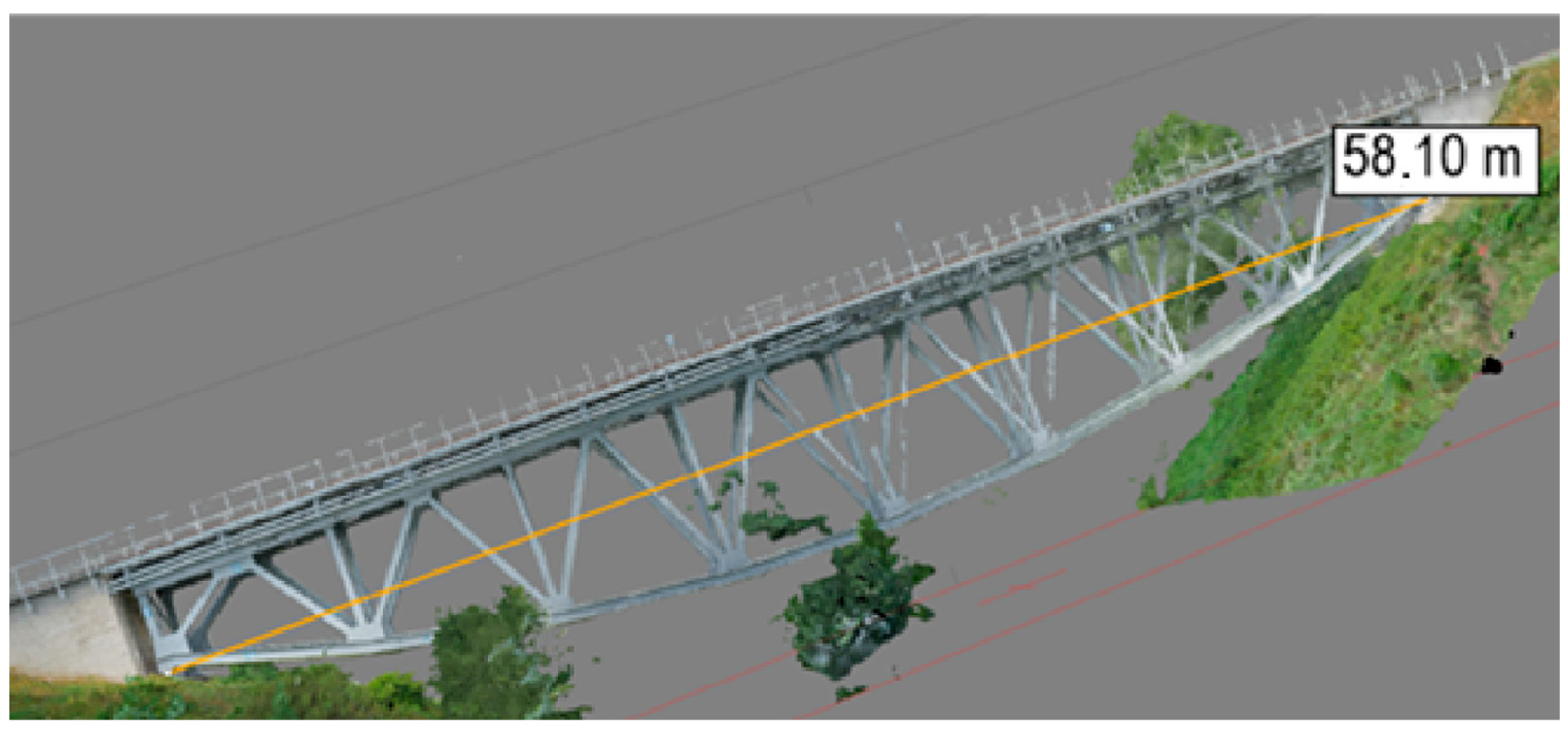

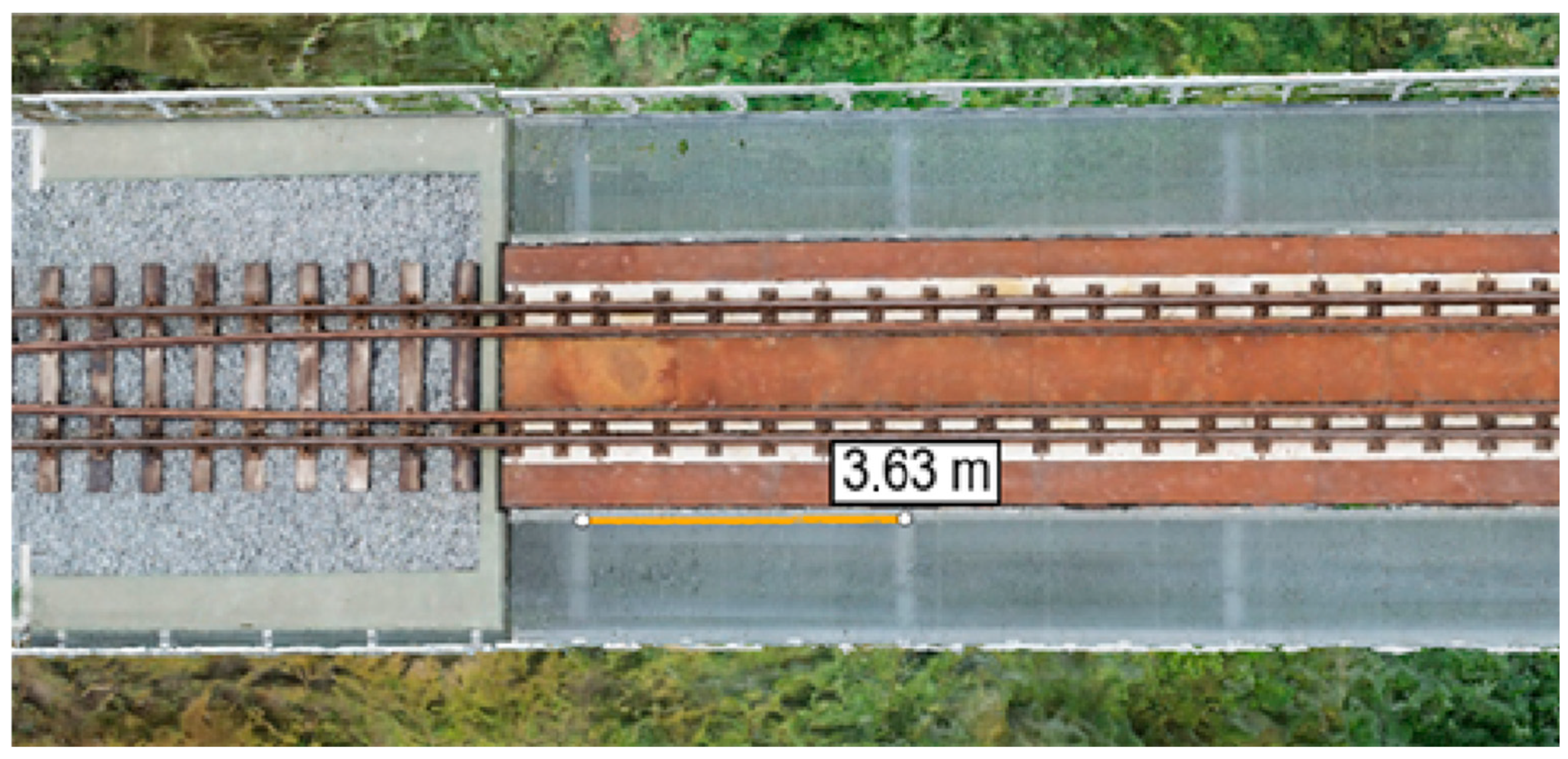

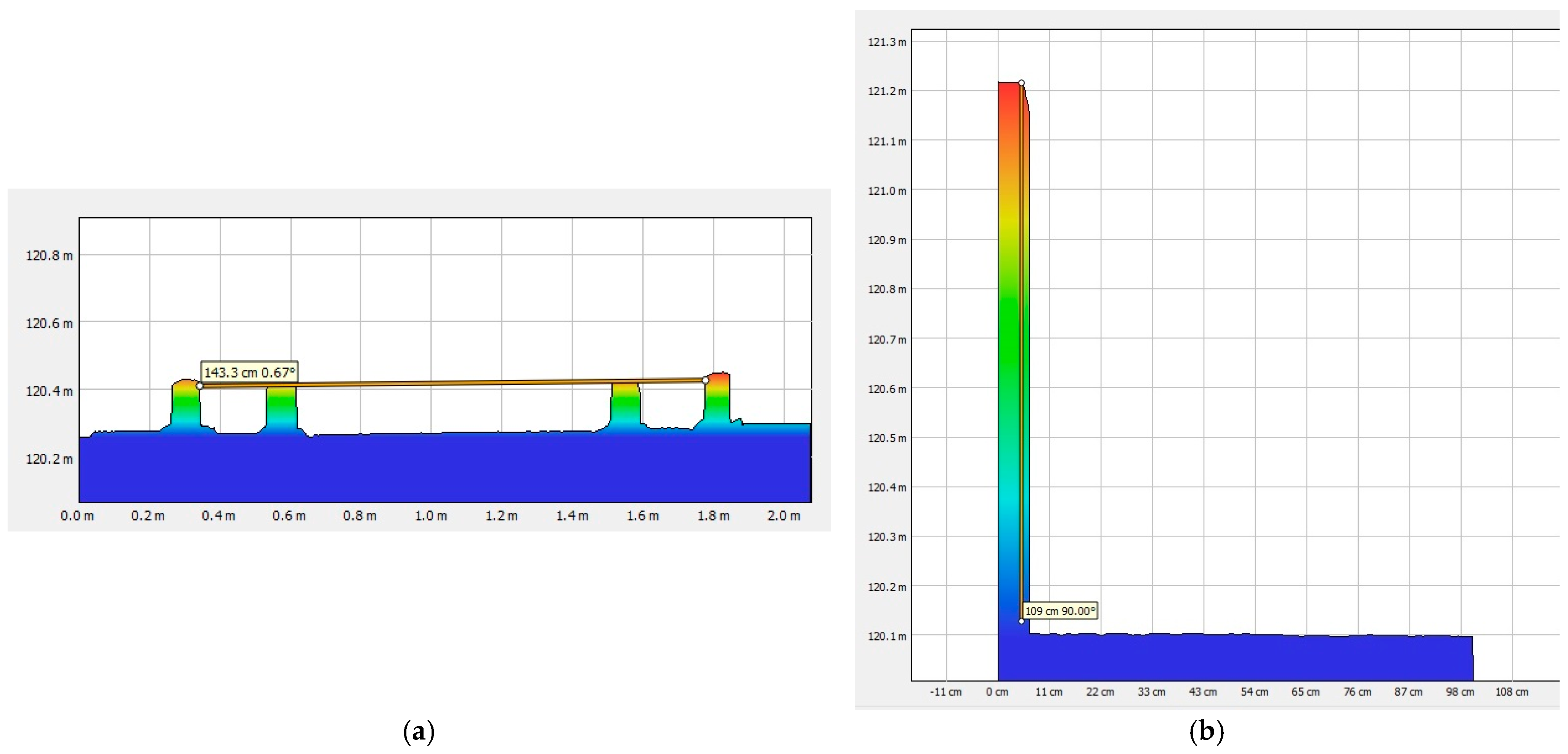

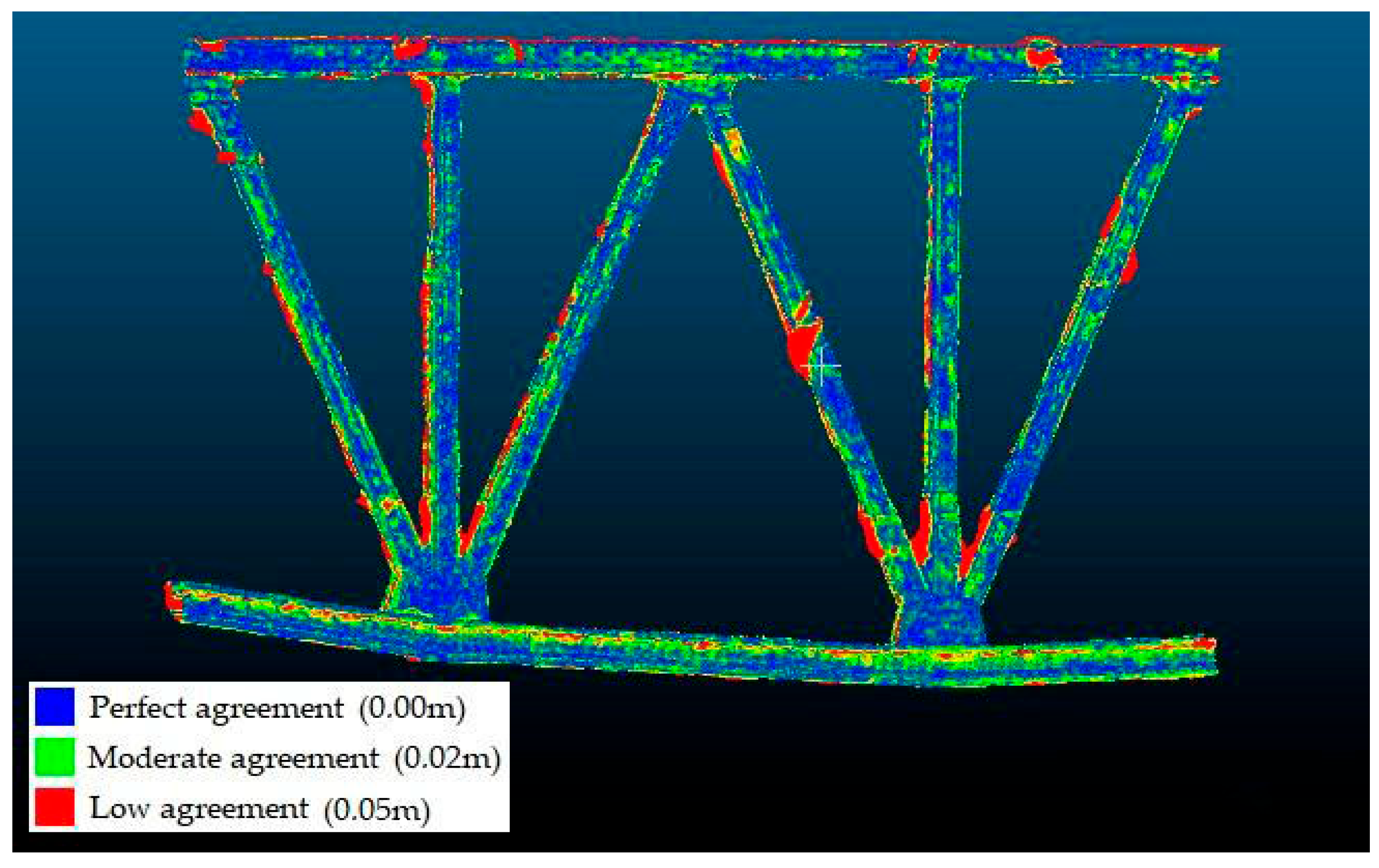

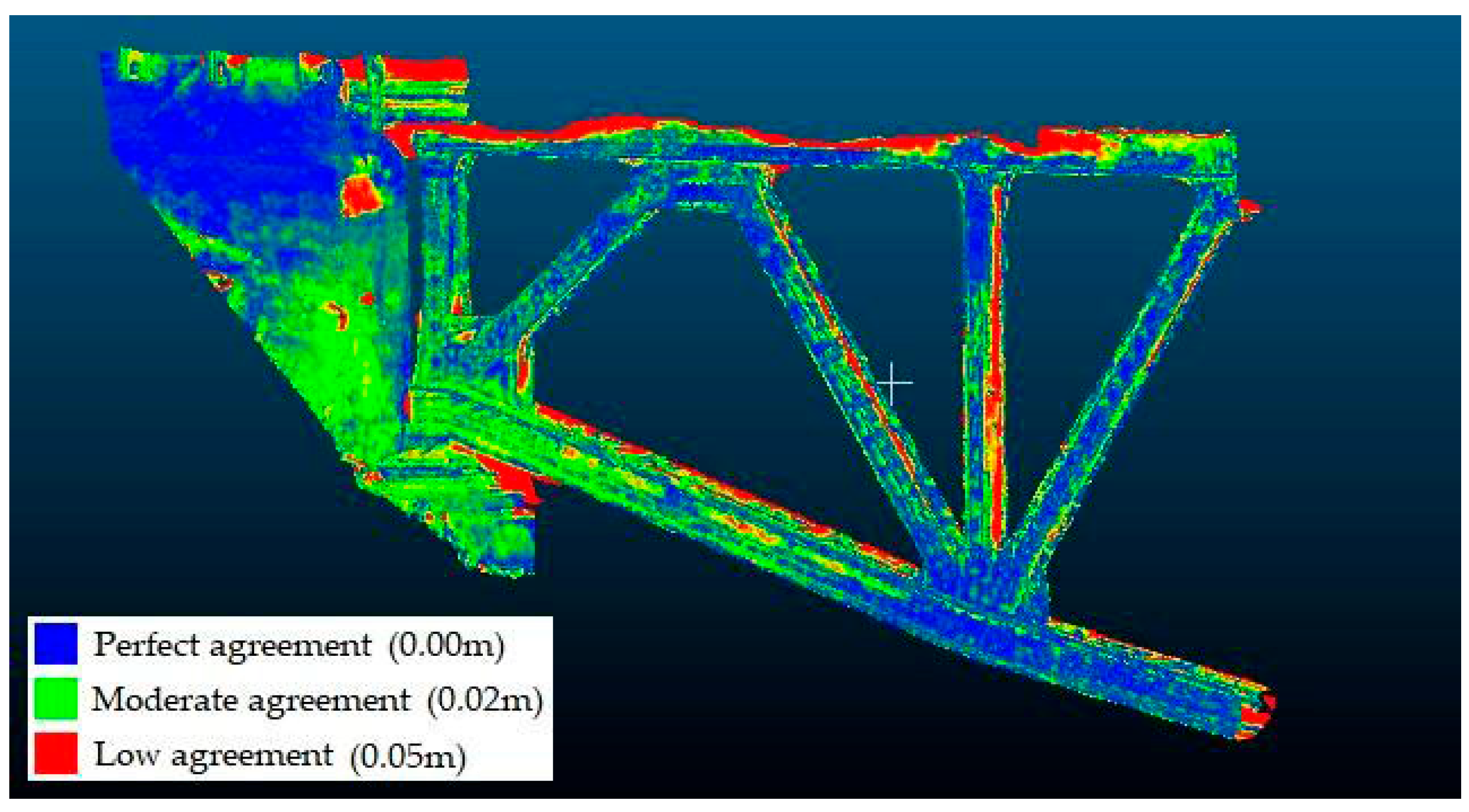

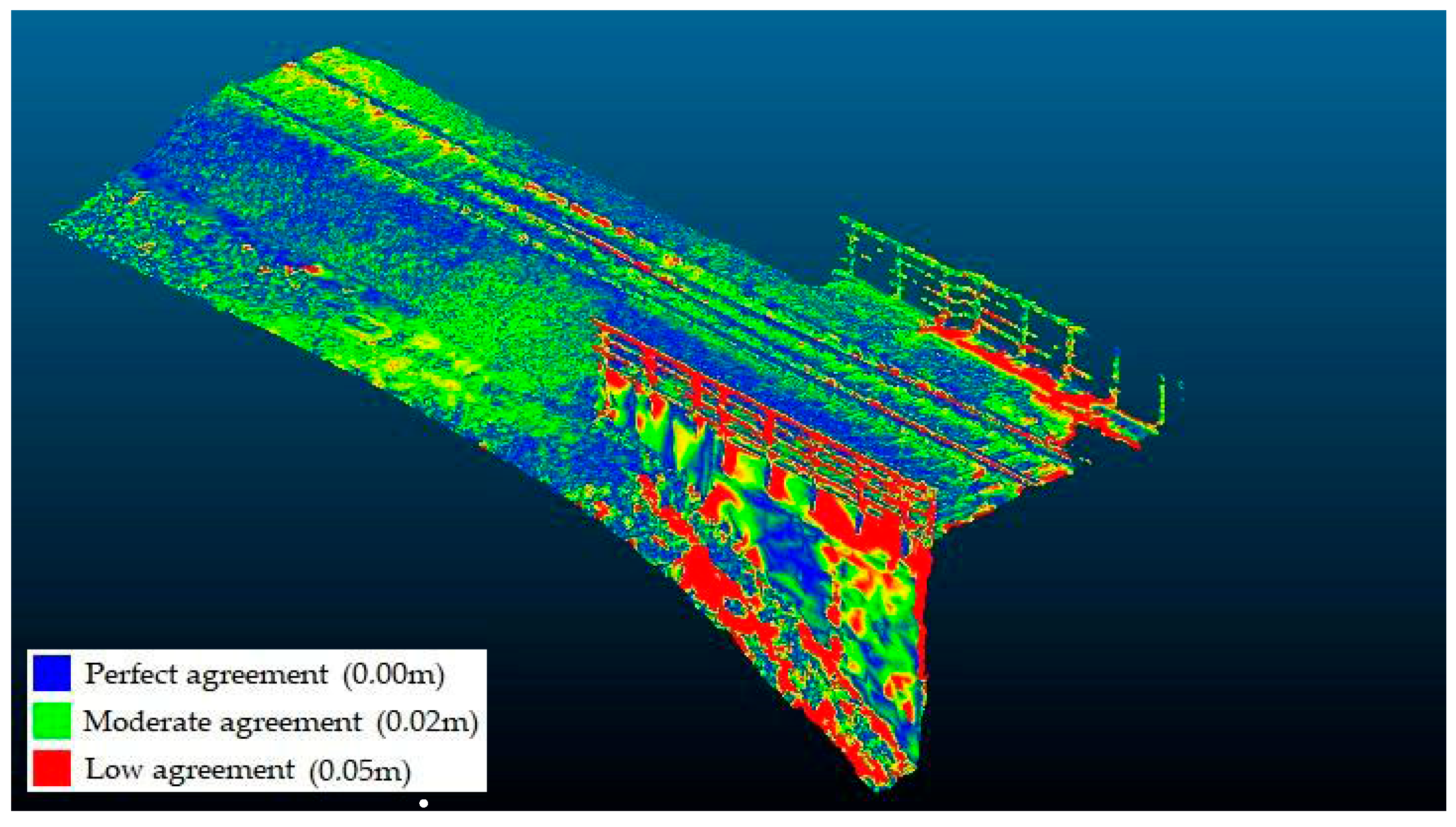

3.2. Results of the Agisoft Metashape Model

4. Discussion

4.1. Comparison of Photogrammetry 3D Models

4.2. Challenges of Photogrammetric Post-Processing

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 3D | Three-Dimensional |
| BBA | Bundle Block Adjustment |
| CMOS | Complementary Metal-Oxide Semiconductor |
| DLT | Direct Linear Transformation |
| GCP | Ground Control Point |
| GDOP | Geometric Dilution Of Precision |
| GIS | Geographic Information System |
| GNSS | Global Navigation Satellite System |
| LD | Linear Dichroism |
| MP | Megapixel |
| MVS | Multi-View Stereo |
| PL-2000 | Coordinate System 2000 |
| PL-EVRF2007-NH | European Vertical Reference Frame 2007 for Poland, Normal Height |
| SfM | Structure-from-Motion |
| TLS | Terrestrial Laser Scanning |
| UAV | Unmanned Aerial Vehicle |

Appendix A

| Number | X [m] | Y [m] | Z [m] |
|---|---|---|---|
| 1 | 6,021,882.57 | 6,528,774.50 | 119.61 |
| 2 | 6,021,881.19 | 6,528,769.42 | 121.01 |
| 3 | 6,021,881.67 | 6,528,771.98 | 119.62 |
| 4 | 6,021,893.00 | 6,528,777.25 | 119.68 |
| 5 | 6,021,906.64 | 6,528,783.58 | 119.71 |
| 6 | 6,021,880.77 | 6,528,770.77 | 113.57 |
| 7 | 6,021,888.56 | 6,528,774.62 | 111.67 |
| 8 | 6,021,892.52 | 6,528,779.14 | 119.67 |
| 9 | 6,021,892.83 | 6,528,781.68 | 121.11 |
| 10 | 6,021,918.70 | 6,528,792.41 | 113.68 |
| 11 | 6,021,868.77 | 6,528,763.73 | 119.94 |
| 12 | 6,021,874.67 | 6,528,767.64 | 117.97 |
| 13 | 6,021,874.60 | 6,528,766.36 | 120.98 |
| 14 | 6,021,892.31 | 6,528,780.11 | 111.96 |
| 15 | 6,021,894.87 | 6,528,777.07 | 111.95 |
| 16 | 6,021,901.41 | 6,528,780.11 | 111.55 |
| 17 | 6,021,914.47 | 6,528,786.17 | 112.57 |
| 18 | 6,021,875.79 | 6,528,772.43 | 115.05 |
| 19 | 6,021,876.26 | 6,528,772.62 | 118.42 |
| 20 | 6,021,926.84 | 6,528,792.25 | 116.86 |
| 21 | 6,021,925.66 | 6,528,795.59 | 118.07 |
| 22 | 6,021,922.65 | 6,528,794.19 | 115.07 |
| 23 | 6,021,895.32 | 6,528,777.76 | 110.78 |
| 24 | 6,021,901.73 | 6,528,780.73 | 110.56 |
| 25 | 6,021,887.31 | 6,528,777.30 | 111.67 |
| 26 | 6,021,894.08 | 6,528,780.46 | 110.78 |
| 27 | 6,021,900.47 | 6,528,783.22 | 110.57 |
| 28 | 6,021,881.50 | 6,528,775.08 | 113.21 |
| 29 | 6,021,878.88 | 6,528,769.62 | 114.53 |
| 30 | 6,021,882.75 | 6,528,771.42 | 113.34 |
| 31 | 6,021,881.30 | 6,528,770.77 | 115.12 |
| 32 | 6,021,902.66 | 6,528,786.15 | 122.62 |
| 33 | 6,021,899.45 | 6,528,782.99 | 110.78 |
| 34 | 6,021,876.82 | 6,528,772.55 | 118.19 |
| 35 | 6,021,883.24 | 6,528,775.60 | 118.31 |
| 36 | 6,021,889.76 | 6,528,778.59 | 118.37 |
| 37 | 6,021,896.30 | 6,528,781.64 | 118.43 |
| 38 | 6,021,902.90 | 6,528,784.67 | 118.44 |
| 39 | 6,021,909.52 | 6,528,787.75 | 118.44 |
| 40 | 6,021,879.74 | 6,528,773.83 | 113.67 |
| 41 | 6,021,892.90 | 6,528,779.94 | 111.10 |
| FOT1 | 6,021,862.81 | 6,528,760.64 | 119.18 |
| FOT2 | 6,021,858.10 | 6,528,766.58 | 119.34 |
| FOT3 | 6,021,947.79 | 6,528,800.58 | 119.83 |
| FOT4 | 6,021,944.60 | 6,528,805.21 | 119.95 |
| FOT5 | 6,021,925.66 | 6,528,793.42 | 120.37 |
| FOT6 | 6,021,903.92 | 6,528,783.32 | 120.31 |
| FOT7 | 6,021,885.55 | 6,528,774.84 | 120.25 |
| 1001 | 6,021,806.63 | 6,528,799.75 | 103.35 |
| 1002 | 6,021,893.54 | 6,528,779.18 | 103.22 |
| 1003 | 6,021,863.78 | 6,528,705.30 | 103.67 |
| 1004 | 6,021,923.10 | 6,528,734.32 | 103.70 |
| 1005 | 6,021,920.75 | 6,528,799.78 | 112.65 |

References

1. Geyik, M.; Tarı, U.; Özcan, O.; Sunal, G.; Yaltırak, C. A new technique mapping submerged beachrocks using low-altitude UAV photogrammetry, the Altınova region, northern coast of the Sea of Marmara (NW Türkiye). Quat. Int. 2024, 712, 109579.

2. Pan, Y.; Dong, Y.; Wang, D.; Chen, A.; Ye, Z. Three-Dimensional Reconstruction of Structural Surface Model of Heritage Bridges Using UAV-Based Photogrammetric Point Clouds. Remote Sens. 2019, 11, 1204.

3. Wang, Q.; Fang, N.; Zeng, Y.; Yuan, C.; Dai, W.; Fan, R.; Chang, H. Optimizing UAV-SfM photogrammetry for efficient monitoring of gully erosion in high-relief terrains. Measurement 2025, 256, 118154.

4. Dahal, S.; Imaizumi, F.; Takayama, S. Spatio-temporal distribution of boulders along a debris-flow torrent assessed by UAV photogrammetry. Geomorphology 2025, 480, 109757.

5. Sestras, P.; Badea, G.; Badea, A.C.; Salagean, T.; Roșca, S.; Kader, S.; Remondino, F. Land surveying with UAV photogrammetry and LiDAR for optimal building planning. Autom. Constr. 2025, 173, 106092.

6. Wu, S.; Feng, L.; Zhang, X.; Yin, C.; Quan, L.; Tian, B. Optimizing overlap percentage for enhanced accuracy and efficiency in oblique photogrammetry building 3D modeling. Constr. Build. Mater. 2025, 489, 142382.

7. Gruszczyński, W.; Matwij, W.; Ćwiąkała, P. Comparison of low-altitude UAV photogrammetry with terrestrial laser scanning as data-source methods for terrain covered in low vegetation. ISPRS J. Photogramm. Remote Sens. 2017, 126, 168–179.

8. Cho, J.; Jeong, S.; Lee, B. Optimal ground control point layout for UAV photogrammetry in high precision 3D mapping. Measurement 2025, 257, 118343.

9. Pepe, M.; Costantino, D. UAV photogrammetry and 3D modelling of complex architecture for maintenance purposes: The case study of the masonry bridge on the Sele River, Italy. Period. Polytech. Civ. Eng. 2021, 65, 191–203.

10. Zollini, S.; Alicandro, M.; Dominici, D.; Quaresima, R.; Giallonardo, M. UAV Photogrammetry for Concrete Bridge Inspection Using Object-Based Image Analysis (OBIA). Remote Sens. 2020, 12, 3180.

11. Olaszek, P.; Maciejewski, E.; Rakoczy, A.; Cabral, R.; Santos, R.; Ribeiro, D. Remote Inspection of Bridges with the Integration of Scanning Total Station and Unmanned Aerial Vehicle Data. Remote Sens. 2024, 16, 4176.

12. Tang, Z.; Peng, Y.; Li, J.; Li, Z. UAV 3D modeling and application based on railroad bridge inspection. Buildings 2024, 14, 26.

13. Ioli, F.; Pinto, A.; Pinto, L. UAV photogrammetry for metric evaluation of concrete bridge cracks. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 1025–1032.

14. Wang, X.; Demartino, C.; Narazaki, Y.; Monti, G.; Spencer, B.F. Rapid seismic risk assessment of bridges using UAV aerial photogrammetry. Eng. Struct. 2023, 279, 115589.

15. Yiğit, A.Y.; Uysal, M. Virtual reality visualisation of automatic crack detection for bridge inspection from 3D digital twin generated by UAV photogrammetry. Measurement 2025, 242, 115931.

16. Castellani, M.; Meoni, A.; Garcia-Macias, E.; Antonini, F.; Ubertini, F. UAV photogrammetry and laser scanning of bridges: A new methodology and its application to a case study. Procedia Struct. Integr. 2024, 62, 193–200.

17. Mohammadi, M.; Rashidi, M.; Mousavi, V.; Karami, A.; Yu, Y.; Samali, B. Quality evaluation of digital twins generated based on UAV photogrammetry and TLS: Bridge case study. Remote Sens. 2021, 13, 3499.

18. Mousavi, V.; Rashidi, M.; Mohammadi, M.; Samali, B. Evolution of digital twin frameworks in bridge management: Review and future directions. Remote Sens. 2024, 16, 1887.

19. Jürgen, H.; Michał, W.; Oliver, S. Use of unmanned aerial vehicle photogrammetry to obtain topographical information to improve bridge risk assessment. J. Infrastruct. Syst. 2018, 24, 04017041.

20. Graves, W.; Aminfar, K.; Lattanzi, D. Full-Scale Highway Bridge Deformation Tracking via Photogrammetry and Remote Sensing. Remote Sens. 2022, 14, 2767.

21. Shang, Z.; Shen, Z. Flight Planning for Survey-Grade 3D Reconstruction of Truss Bridges. Remote Sens. 2022, 14, 3200.

22. Pargieła, K. Optimising UAV Data Acquisition and Processing for Photogrammetry: A Review. Geomat. Environ. Eng. 2023, 17, 29–59.

23. Burdziakowski, P.; Szulwic, J.; Janowski, A.; Tysiąc, P.; Dawidowicz, A. Using UAV Photogrammetry to Analyse Changes in the Coastal Zone Based on the Sopot Tombolo (Salient) Measurement Project. Sensors 2020, 20, 4000.

24. Cano, M.; Pastor, J.L.; Tomás, R.; Riquelme, A.; Asensio, J.L. A New Methodology for Bridge Inspections in Linear Infrastructures from Optical Images and HD Videos Obtained by UAV. Remote Sens. 2022, 14, 1244.

25. Qi, Y.; Lin, P.; Yang, G.; Liang, T. Crack detection and 3D visualization of crack distribution for UAV-based bridge inspection using efficient approaches. Structures 2025, 78, 109075.

26. Dabous, S.A.; Al-Ruzouq, R.; Llort, D. Three-dimensional modeling and defect quantification of existing concrete bridges based on photogrammetry and computer aided design. Ain Shams Eng. J. 2023, 14, 12.

27. Rashidi, M.; Mousavi, V.; Perera, S.; Devitt, J. Bridge health monitoring through photogrammetry-based digital twins: A topological data analysis approach to missing bolts detection. Measurement 2025, 259, 119713.

28. Luo, K.; Kong, X.; Zhang, J.; Hu, J.; Li, J.; Tang, H. Computer Vision-Based Bridge Inspection and Monitoring: A Review. Sensors 2023, 23, 7863.

29. Dudek, M.; Lachowicz, Ł. Przegląd Specjalny Mostu Kolejowego w km 17+106 W Ramach Zadania pn. „Przygotowanie Linii Kolejowych nr 234 na Odcinku Kokoszki—Stara Piła Oraz nr 229 na Odcinku Stara Piła—Glincz Jako Trasy Objazdowej na Czas Realizacji Projektu „Prace na Alternatywnym Ciągu Transportowym Bydgoszcz—Trójmiasto, Etap I”; Technical Report; PKP Polskie Linie Kolejowe S.A.: Gdańsk, Poland, 2023; (material not publicly available).

30. Szukaj w Archiwach. Available online: https://www.szukajwarchiwach.gov.pl/jednostka/-/jednostka/37485097 (accessed on 13 October 2025).

31. Marín-Buzón, C.; Pérez-Romero, A.; López-Castro, J.L.; Ben Jerbania, I.; Manzano-Agugliaro, F. Photogrammetry as a New Scientific Tool in Archaeology: Worldwide Research Trends. Sustainability 2021, 13, 5319.

32. Borkowski, A.S.; Kubrat, A. Integration of Laser Scanning, Digital Photogrammetry and BIM Technology: A Review and Case Studies. Eng 2024, 5, 2395–2409.

33. Karami, A.; Menna, F.; Remondino, F. Combining Photogrammetry and Photometric Stereo to Achieve Precise and Complete 3D Reconstruction. Sensors 2022, 22, 8172.

34. Hellwich, O. Photogrammetric methods. In Encyclopedia of GIS; Shekhar, S., Xiong, H., Zhou, X., Eds.; Springer: Cham, Switzerland, 2017; pp. 1574–1580.

35. Borg, B.; Dunn, M.; Ang, A.; Villis, C. The application of state-of-the-art technologies to support artwork conservation: Literature review. J. Cult. Herit. 2020, 44, 239–259.

36. Tommasi, C.; Achille, C.; Fassi, F. From point cloud to BIM: A modelling challenge in the cultural heritage field. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 429–436.

37. Konstantakis, M.; Trichopoulos, G.; Aliprantis, J.; Gavogiannis, N.; Karagianni, A.; Parthenios, P.; Serraos, K.; Caridakis, G. An Improved Approach for Generating Digital Twins of Cultural Spaces through the Integration of Photogrammetry and Laser Scanning Technologies. Digital 2024, 4, 215–231.

38. Afaq, S.; Jain, S.K.; Sharma, N.; Sharma, S. Comparative assessment of 2D photogrammetry versus direct anthropometry in nasal measurements. Eur. J. Clin. Exp. Med. 2025, 23, 307–315.

39. Kingsland, K. Comparative analysis of digital photogrammetry software for cultural heritage. Digit. Appl. Archaeol. Cult. Herit. 2020, 18, e00157.

40. Cabral, R.; Oliveira, R.; Ribeiro, D.; Rakoczy, A.M.; Santos, R.; Azenha, M.; Correia, J. Railway bridge geometry assessment supported by cutting-edge reality capture technologies and 3D as-designed models. Infrastructures 2023, 8, 114.

41. Maboudi, M.; Backhaus, J.; Mai, I.; Ghassoun, Y.; Khedar, Y.; Lowke, D.; Riedel, B.; Bestmann, U.; Gerke, M. Very high-resolution bridge deformation monitoring using UAV-based photogrammetry. J. Civ. Struct. Health Monit. 2025, 15, 1–18.

42. Xing, Y.; Yang, S.; Fahy, C.; Harwood, T.; Shell, J. Capturing the Past, Shaping the Future: A Scoping Review of Photogrammetry in Cultural Building Heritage. Electronics 2025, 14, 3666.

43. Balletti, C.; Ballarin, M.; Vernier, P. Replicas in cultural heritage: 3D printing and the museum experience. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 55–62.

44. Ustawa z Dnia 24 Stycznia 2025 r. o Zmianie Ustawy—Prawo Lotnicze Oraz Niektórych Innych Ustaw. Dziennik Ustaw 2025, Poz. 179. Available online: https://isap.sejm.gov.pl/isap.nsf/DocDetails.xsp?id=WDU20250000179 (accessed on 3 November 2025).

| UAV | Sensor Width | Image Width | Focal Length | Pixel Size | GSD |
|---|---|---|---|---|---|
| DJI Phantom 4 Pro | 13.2 mm | 5472 px | 8.8 mm | 0.002412 mm | 0.82 cm/px |
| DJI Mavic 2 Pro | 13.2 mm | 5472 px | 10.3 mm | 0.002412 mm | 0.70 cm/px |
| DJI Mini 3 Pro | 9.8 mm | 4032 px | 6.7 mm | 0.002430 mm | 1.09 cm/px |
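The pixel size and GSD columns above follow from the sensor geometry. A minimal Python sketch of that arithmetic is given below, using the standard relation GSD = pixel size × flight height / focal length. The flight height is not stated in the table; the 30 m used here is an assumption, chosen because it is consistent with the tabulated GSD values. The names `Camera`, `gsd_cm_per_px`, and `ASSUMED_FLIGHT_HEIGHT_M` are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    """Sensor parameters taken from the table above."""
    name: str
    sensor_width_mm: float
    image_width_px: int
    focal_length_mm: float

    @property
    def pixel_size_mm(self) -> float:
        # Physical size of one pixel: sensor width divided by pixel count.
        return self.sensor_width_mm / self.image_width_px


def gsd_cm_per_px(camera: Camera, flight_height_m: float) -> float:
    """Ground sampling distance in cm/px for a nadir image.

    GSD = pixel_size * H / f. The millimetres cancel, leaving metres,
    which are converted to centimetres with the factor 100.
    """
    return camera.pixel_size_mm * flight_height_m * 100.0 / camera.focal_length_mm


# ASSUMPTION: flight height is not given in the table; 30 m is inferred
# as a value consistent with the tabulated GSDs.
ASSUMED_FLIGHT_HEIGHT_M = 30.0

CAMERAS = [
    Camera("DJI Phantom 4 Pro", 13.2, 5472, 8.8),
    Camera("DJI Mavic 2 Pro", 13.2, 5472, 10.3),
    Camera("DJI Mini 3 Pro", 9.8, 4032, 6.7),
]

if __name__ == "__main__":
    for cam in CAMERAS:
        gsd = gsd_cm_per_px(cam, ASSUMED_FLIGHT_HEIGHT_M)
        print(f"{cam.name}: pixel size {cam.pixel_size_mm:.6f} mm, GSD {gsd:.2f} cm/px")
```

Because GSD scales linearly with flight height, halving the assumed height would halve each GSD value.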

| Parameter | Bentley ContextCapture | Agisoft Metashape |
|---|---|---|
| Number of input images | 9274 | 11,212 |
| Processing time | 10 days | 4 days |
| Tie points used | 53 | 78 |
| Texture quality | Very high | High |
| Strengths | Robust automation, photorealistic texture | User control, detailed crack detection |
| Limitations | Long processing time, alignment errors | Hardware-demanding, challenges with repetitive geometry |

| Element | Archival Records [m] | Bentley ContextCapture [m] | Bentley ContextCapture Δ [%] | Agisoft Metashape [m] | Agisoft Metashape Δ [%] |
|---|---|---|---|---|---|
| Theoretical span Lt | 58.00 | 57.16 | 1.45 | 58.10 | 0.17 |
| Spacing crossbeams bc | 3.63 | 3.68 | 1.52 | 3.63 | 0.05 |
| Height of the truss girder hg | 8.04 | 8.09 | 0.50 | 8.21 | 1.99 |
| Track gauge gt | 1.435 | 1.446 | 0.77 | 1.433 | 0.14 |
| Railing height hr | 1.10 | 1.09 | 1.27 | 1.09 | 1.27 |
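The Δ columns in the table above can be read as the relative deviation of each model dimension from the archival value. The Python sketch below assumes the definition Δ = |model − archival| / archival × 100, which the table does not state explicitly; the function name `relative_deviation_percent` is illustrative. Only two rows are shown. Rows recomputed from the rounded tabulated dimensions may differ slightly from the printed Δ, presumably because the printed values were derived from unrounded measurements.

```python
def relative_deviation_percent(measured_m: float, reference_m: float) -> float:
    """Absolute deviation of a measured length from a reference length, in percent."""
    if reference_m == 0:
        raise ValueError("reference length must be non-zero")
    return abs(measured_m - reference_m) / abs(reference_m) * 100.0


# (archival, Bentley ContextCapture, Agisoft Metashape), all in metres,
# copied from the table above.
ROWS = {
    "Theoretical span Lt": (58.00, 57.16, 58.10),
    "Track gauge gt": (1.435, 1.446, 1.433),
}

if __name__ == "__main__":
    for element, (archival, bentley, metashape) in ROWS.items():
        d_bentley = relative_deviation_percent(bentley, archival)
        d_metashape = relative_deviation_percent(metashape, archival)
        print(f"{element}: Bentley {d_bentley:.2f} %, Metashape {d_metashape:.2f} %")
```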

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ciborowski, T.; Księżopolski, D.; Kuryłowicz, D.; Nowak, H.; Rocławski, P.; Stalmach, P.; Wałdowski, P.; Banas, A.; Makowska-Jarosik, K. Low-Altitude Photogrammetry and 3D Modeling for Engineering Heritage: A Case Study on the Digital Documentation of a Historic Steel Truss Viaduct. Appl. Sci. 2025, 15, 12491. https://doi.org/10.3390/app152312491
