Performance Analysis of Data-Driven and Deterministic Latency Models in Dynamic Packet-Switched Xhaul Networks

Abstract

1. Introduction

- A regression-based approach is proposed to predict maximum flow latencies in dynamic TSN-enabled packet-switched Xhaul networks.

- The performance of the proposed quantile regression (QR) predictor is evaluated and compared with deterministic worst-case (WC) latency estimations in a dynamic Xhaul scenario characterized by varying traffic loads and changing Xhaul flow configurations.

- The accuracy and applicability of the QR model, trained on a comprehensive data set generated under diverse network configurations and load conditions, are validated across a wide range of evaluation scenarios.

- The impact of data-driven latency prediction on overall network performance is analyzed, demonstrating its potential to enhance deterministic latency modeling in dynamic Xhaul operation.

2. Related Works

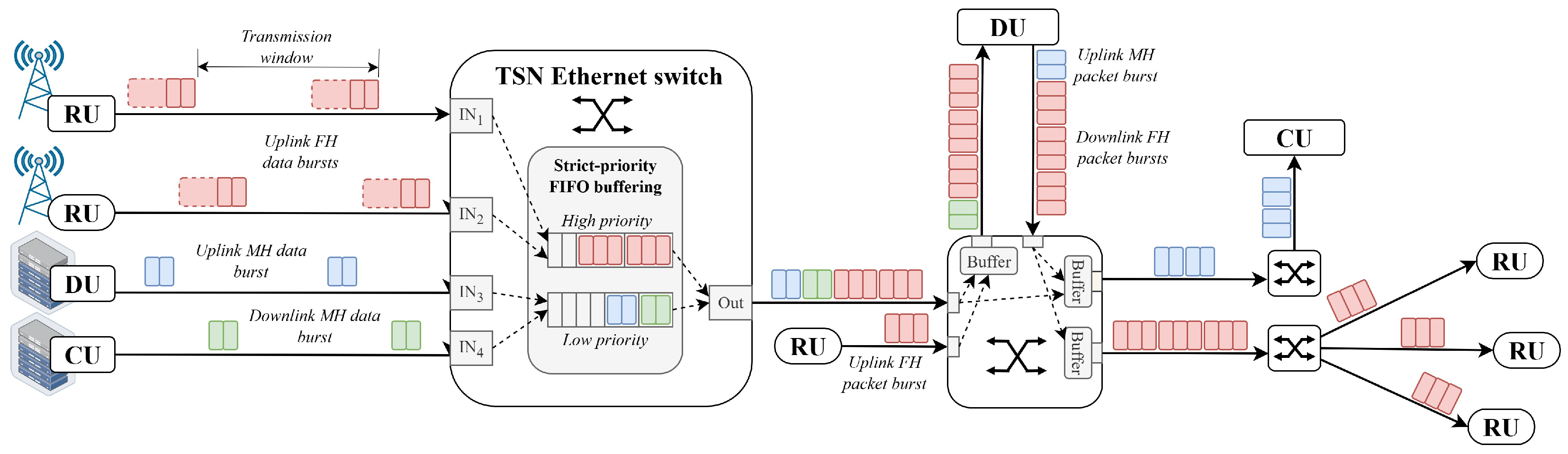

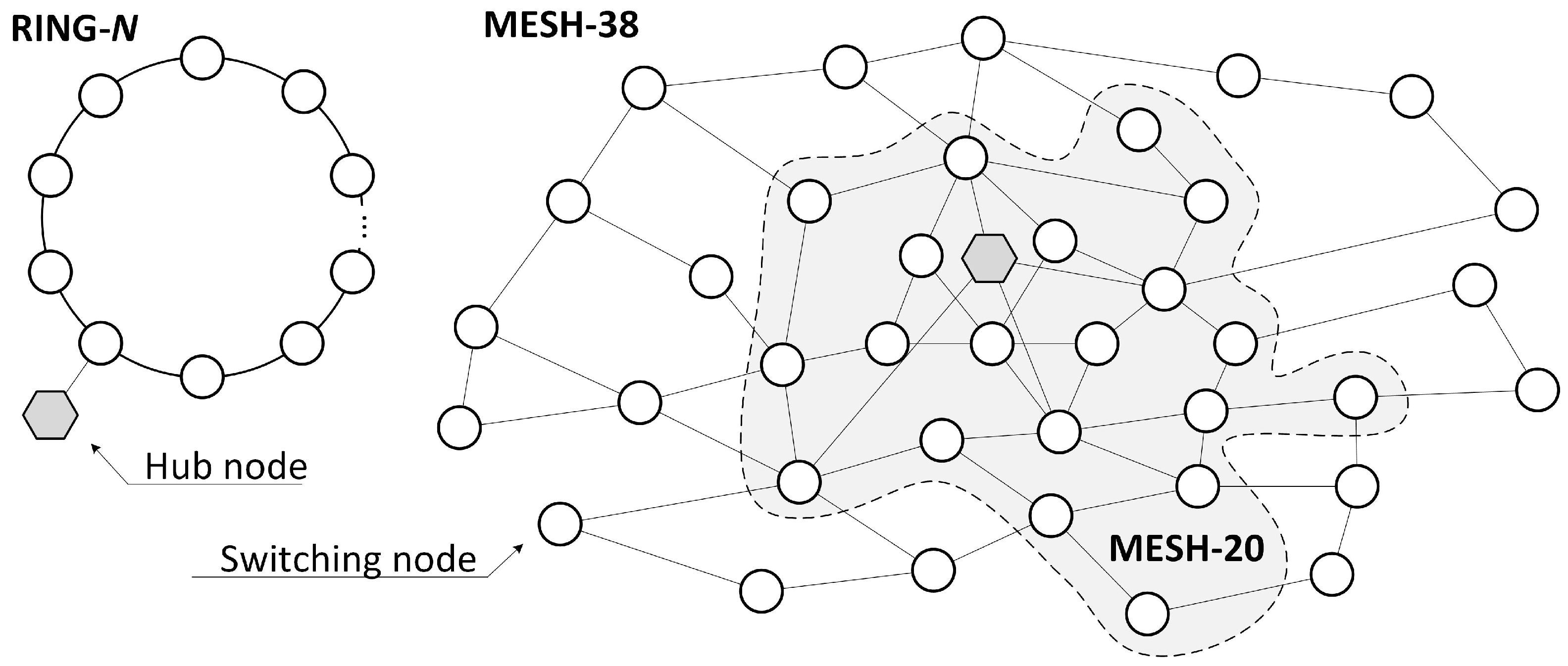

3. Network Model

4. Latency Models

4.1. Worst-Case Model

The worst-case queuing delay of a burst at a switch output port accounts for:

- bursts belonging to other flows of equal or higher priority that may be transmitted before the burst of the considered flow, and

- the largest burst of a lower-priority flow that may already be in transmission and is not preempted.
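The two contributions above can be illustrated per output port. This is a minimal sketch, not the exact WC formulation of the study. It assumes that each contending flow contributes one burst per hop, that a larger `priority` value means higher priority, and that per-hop delays simply add up along the path on top of a given static latency. `Burst`, `hop_wc_queuing_delay`, and `wc_latency` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Burst:
    flow: str          # flow identifier
    priority: int      # assumption: larger value = higher priority
    size_bits: float   # burst size in bits


def hop_wc_queuing_delay(own: Burst, contenders: list[Burst], rate_bps: float) -> float:
    """Worst-case queuing delay (s) of `own` at one output port of rate `rate_bps`."""
    # Bursts of other flows with equal or higher priority may all be sent first.
    ep_hp_bits = sum(b.size_bits for b in contenders
                     if b.flow != own.flow and b.priority >= own.priority)
    # Without preemption, at most one lower-priority burst (the largest) can block.
    lp_bits = max((b.size_bits for b in contenders if b.priority < own.priority),
                  default=0.0)
    return (ep_hp_bits + lp_bits) / rate_bps


def wc_latency(own: Burst, hops: list[tuple[list[Burst], float]], static_s: float) -> float:
    """Static latency plus worst-case queuing delay over all buffered hops (s)."""
    return static_s + sum(hop_wc_queuing_delay(own, c, r) for c, r in hops)


fh = Burst("fh1", priority=1, size_bits=100_000)
others = [Burst("fh2", 1, 200_000), Burst("mh1", 0, 300_000), Burst("mh2", 0, 500_000)]
# (200 + 500) kbit at 100 Gbit/s, i.e., about 7 us
print(hop_wc_queuing_delay(fh, others, 100e9))
```

Only the largest lower-priority burst enters the bound because, without preemption, at most one such burst can already occupy the link when the considered burst arrives.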

4.2. Quantile Regression Model

- Routing path characteristics: hop count, the number of buffered links along the path, and the static latency, representing the sum of propagation, store-and-forward, and transmission delays;

- Deterministic latency bound: the worst-case latency estimate, which serves as a baseline input linking the QR model to analytical latency bounds;

- Queuing-related indicators:

  - buffering contribution from equal-priority (EP) flows entering the same switch input port g. It captures the overlap between the reception delay of the burst of flow f at input port g and the transmission delay of a burst of another EP flow q at switch output port e. If the transmission delay of the burst of flow q does not exceed the reception delay of the burst of flow f, the burst of flow q is completely transmitted before the burst of flow f is fully received, and no buffering delay is introduced. Otherwise, only a partial buffering delay occurs, corresponding to the part of the transmission of the burst of flow q that extends beyond the reception of the burst of flow f, i.e., the difference between the two times;

  - buffering contribution from EP flows arriving from other input ports. It accounts for the longest EP burst from each such port and is calculated as the sum of their respective delay contributions;

  - aggregated buffering impact from higher-priority (HP) flows, calculated as the total delay induced by all such flows contending for transmission at the same output port.
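The three queuing-related indicators can be sketched for a single hop. This minimal sketch assumes burst sizes in bits and link rates in bit/s. The text above describes the effect of a single EP flow q on the same input port, so summing the same-port contributions over all such flows is an assumption. The function names are hypothetical.

```python
def ep_same_port(f_bits, in_rate_bps, ep_bits, out_rate_bps):
    """EP contribution (s) from flows entering the same input port g as flow f.

    A burst of EP flow q adds delay only if its transmission at output port e
    outlasts the reception of flow f's burst at input port g.
    """
    t_rec_f = f_bits / in_rate_bps
    # Assumption: contributions of all EP flows q on port g are summed.
    return sum(max(0.0, q_bits / out_rate_bps - t_rec_f) for q_bits in ep_bits)


def ep_other_ports(ep_bits_by_port, out_rate_bps):
    """EP contribution (s) from other input ports: longest EP burst per port, summed."""
    return sum(max(bits) / out_rate_bps for bits in ep_bits_by_port.values() if bits)


def hp_aggregate(hp_bits, out_rate_bps):
    """Total transmission time (s) of all HP bursts contending for output port e."""
    return sum(hp_bits) / out_rate_bps


# 100 kbit burst received over 25 Gbit/s (4 us). EP bursts sent at 100 Gbit/s take 5 us and 2 us.
print(ep_same_port(100_000, 25e9, [500_000, 200_000], 100e9))  # about 1e-06 s
```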

5. Data Generation

5.1. Main Assumptions

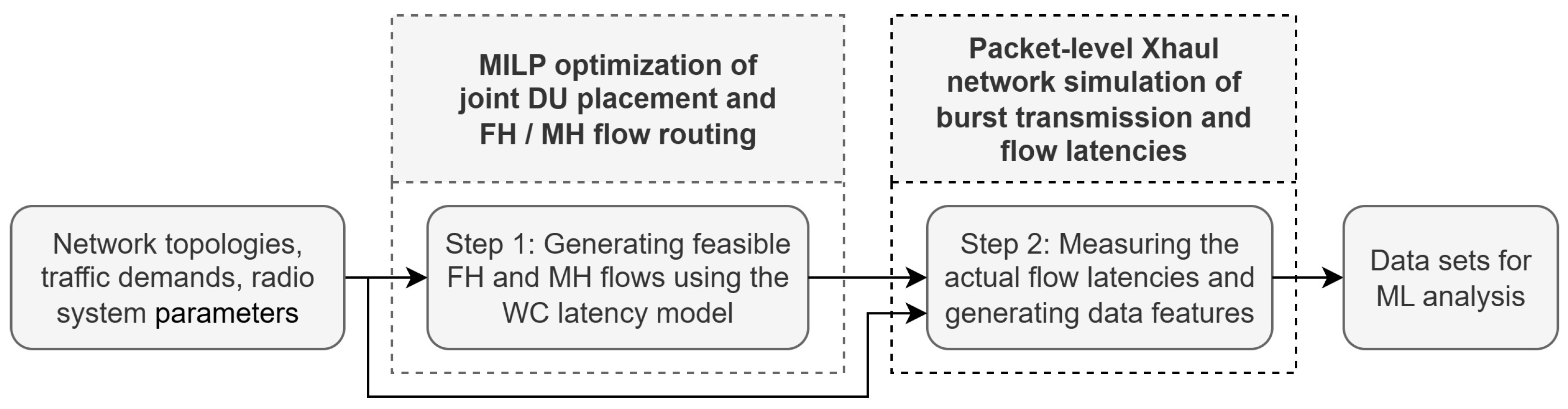

5.2. Data Generation Workflow

- Step 1: Generating feasible flows. Feasible FH and MH flows satisfying capacity and latency constraints were generated for each network scenario. For this purpose, the mixed-integer linear programming (MILP) optimization method discussed in Section 5.3 was applied, assuming maximum Xhaul flow bit-rates and latency estimation based on the WC model.

- Step 2: Generating data features. The goal of this step was to generate the data features, including the measured actual latencies, for each individual data flow produced in Step 1. To this end, packet-level Xhaul network simulations were executed for each network scenario, and the data were gathered as described in Section 5.4.
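The two steps can be organized as a loop that turns each scenario into labeled training samples. This is a minimal sketch. It assumes that the Step 1 solver and the Step 2 simulator are supplied as callables (in the study, these are the MILP of Section 5.3 and the packet-level simulations of Section 5.4). It also assumes that the label is the maximum measured delay of each flow, as defined in Section 5.4. All function and key names are hypothetical.

```python
from typing import Callable, Hashable, Iterable


def generate_dataset(
    scenarios: Iterable[Hashable],
    solve_feasible_flows: Callable[[Hashable], list],   # Step 1: feasible flows
    simulate: Callable[[Hashable, list], dict],         # Step 2: flow -> burst delays
    extract_features: Callable[[Hashable, object], dict],
) -> list[dict]:
    """Pair per-flow features with the measured maximum latency (the label)."""
    dataset = []
    for scenario in scenarios:
        flows = solve_feasible_flows(scenario)
        delays = simulate(scenario, flows)
        for flow in flows:
            record = dict(extract_features(scenario, flow))
            # Label: largest one-way delay over all simulated bursts of the flow.
            record["max_latency"] = max(delays[flow])
            dataset.append(record)
    return dataset


# Toy stand-ins for the solver, simulator and feature extractor.
data = generate_dataset(
    ["s1"],
    lambda s: ["a", "b"],
    lambda s, flows: {"a": [1.0, 3.0], "b": [2.0]},
    lambda s, f: {"scenario": s, "flow": f},
)
print(data)
```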

5.3. MILP Optimization

5.4. Network Simulations

- To introduce variability and avoid repetitive buffering patterns, the departure time of each burst (i.e., its offset relative to the start of the transmission window) is randomly modified every two transmission periods. Furthermore, the simulation enforces that all bursts complete their transmission within each two-window cycle, preventing temporal congestion caused by overlapping bursts from the same source.

- A store-and-forward switching mechanism without cut-through is assumed, meaning that each burst is fully received at the input port before transmission begins at the output port.

- Packet bursts are queued in a first-in–first-out (FIFO) manner and transmitted as complete units, without fragmentation or interleaving.

- Switches operate according to the strict-priority algorithm [11], which ensures that high-priority (HP) latency-sensitive FH bursts are always served before lower-priority (LP) MH bursts.

- Profile A of operation, as defined in [11], is applied, guaranteeing that an LP burst already being transmitted cannot be preempted by an HP burst.

- The latency of a flow, defined as the maximum one-way delay, is taken as the largest delay value measured among all bursts of that flow transmitted during the entire simulation.

- Each simulation transmits a predefined number of bursts, after which it terminates.
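The queuing behavior assumed above can be illustrated with a single output port. This is a simplified sketch, not the packet-level simulator used in the study. It assumes that a burst enters the port queue once it is fully received (store-and-forward), and that a larger priority value is served first. It also assumes FIFO order within a priority, no preemption of a burst already in transmission, and per-flow latency taken as the maximum over all of that flow's bursts. Only the delay at this one port is measured. `simulate_port` is a hypothetical name.

```python
import heapq


def simulate_port(bursts: list[tuple[float, int, str, float]],
                  rate_bps: float) -> dict[str, float]:
    """Max per-flow delay (s) at one strict-priority, non-preemptive output port.

    bursts: (fully_received_time_s, priority, flow, size_bits).
    """
    pending = sorted(bursts)           # ordered by reception time
    ready: list = []                   # heap keyed by (-priority, arrival, seq)
    latency: dict[str, float] = {}
    t, i, seq = 0.0, 0, 0
    while i < len(pending) or ready:
        if not ready and t < pending[i][0]:
            t = pending[i][0]          # link idle: jump to the next reception
        while i < len(pending) and pending[i][0] <= t:
            arr, prio, flow, size = pending[i]
            heapq.heappush(ready, (-prio, arr, seq, flow, size))
            seq, i = seq + 1, i + 1
        _, arr, _, flow, size = heapq.heappop(ready)
        t += size / rate_bps           # whole burst is sent, no preemption
        latency[flow] = max(latency.get(flow, 0.0), t - arr)
    return latency


# An HP (FH) burst arriving 1 us after an LP (MH) burst must wait for it to finish.
print(simulate_port([(0.0, 0, "mh", 10_000), (1e-6, 1, "fh", 2_000)], 1e9))
```

The sequence counter in the heap key preserves FIFO order among bursts of equal priority and arrival time. It also avoids comparing flow names.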

6. Evaluation Scenario

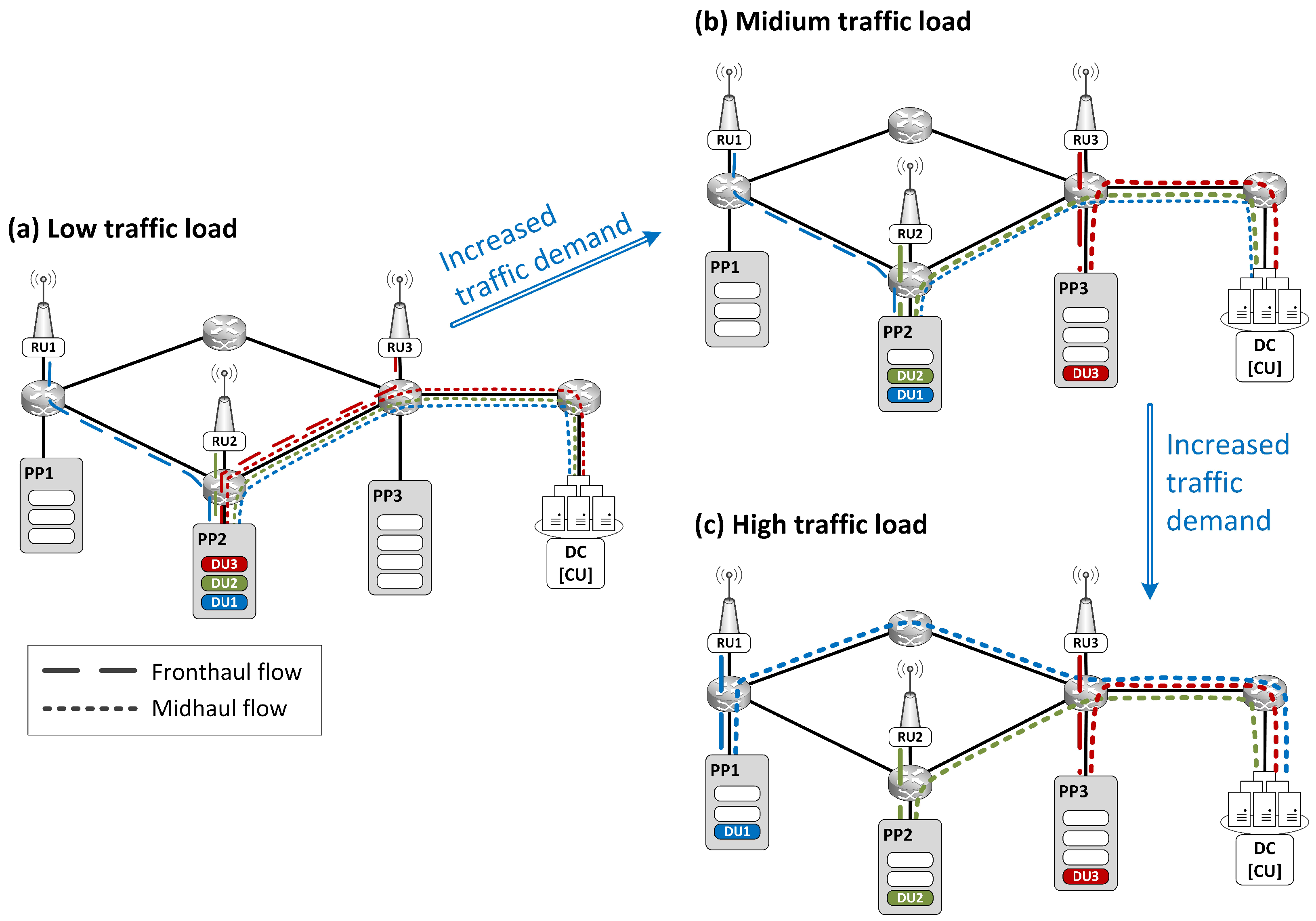

- Stage 1: Selection of PP nodes. In an offline preprocessing stage, a pair of PP nodes is determined for each RU by solving the MILP optimization model described in Section 5.3 for two extreme network load conditions, namely the minimum and maximum traffic levels.

- Stage 2: DU reallocation. During the network simulation, whenever a change in traffic load is detected, the system verifies whether the current DU allocation satisfies the transmission capacity and latency constraints of all flows. If these conditions are not met, an inactive node hosting the largest number of DUs is activated, and the corresponding DUs are reallocated to this node. Reallocation is performed only to the minimum extent required to restore feasibility, so that the number of active PP nodes remains as small as possible throughout the simulation.
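The greedy Stage 2 procedure can be sketched as follows, under several assumptions. The feasibility check is a stand-in that compares aggregated DU demand with a per-node capacity, whereas the study checks the transmission capacity and latency constraints of all flows. The Stage 1 pair is modeled as a minimum-load node (the initial host) and a maximum-load node (the fallback host). "An inactive node hosting the largest number of DUs" is read as the inactive fallback node that serves the most DUs on violating nodes; this reading is also an assumption. `DU`, `overloaded`, and `reallocate` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DU:
    ru: str
    pp_min: str      # Stage-1 PP node for the minimum load (initial host)
    pp_max: str      # Stage-1 PP node for the maximum load (fallback host)
    demand: float    # hypothetical capacity proxy at the current load


def overloaded(dus, host, capacity):
    """Stand-in feasibility check: PP nodes whose aggregated demand exceeds capacity."""
    load = {}
    for du in dus:
        load[host[du.ru]] = load.get(host[du.ru], 0.0) + du.demand
    return {node for node, value in load.items() if value > capacity[node]}


def reallocate(dus, host, active, capacity):
    """Greedy Stage-2 sketch: activate PP nodes only while a constraint is violated."""
    bad = overloaded(dus, host, capacity)
    while bad:
        # Group DUs on violating nodes by their still-inactive fallback node.
        cands = {}
        for du in dus:
            if host[du.ru] in bad and du.pp_max not in active:
                cands.setdefault(du.pp_max, []).append(du)
        if not cands:
            raise RuntimeError("no inactive PP node can restore feasibility")
        node = max(cands, key=lambda n: len(cands[n]))  # serves the most DUs
        active.add(node)
        for du in cands[node]:          # move DUs one at a time, stop once feasible
            host[du.ru] = node
            bad = overloaded(dus, host, capacity)
            if not bad:
                break
    return host, active


dus = [DU("ru1", "A", "B", 4.0), DU("ru2", "A", "B", 4.0), DU("ru3", "A", "C", 4.0)]
host = {du.ru: du.pp_min for du in dus}
host, active = reallocate(dus, host, {"A"}, {"A": 10.0, "B": 10.0, "C": 10.0})
print(host, active)  # only ru1 moves to B; node C stays inactive
```

Each loop iteration activates a previously inactive node, so the procedure terminates. Moving DUs one at a time mirrors the "minimum extent" rule.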

7. Results

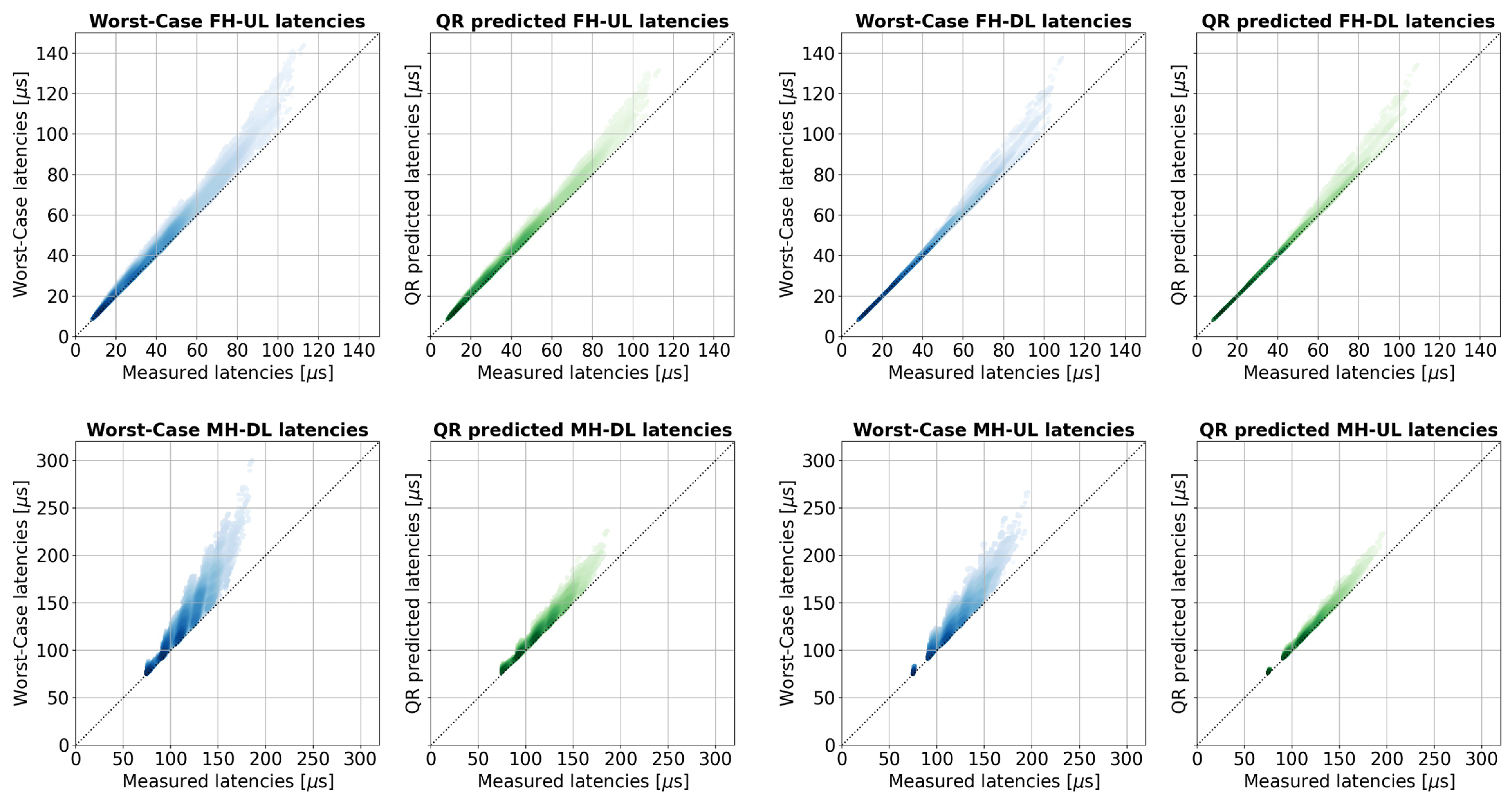

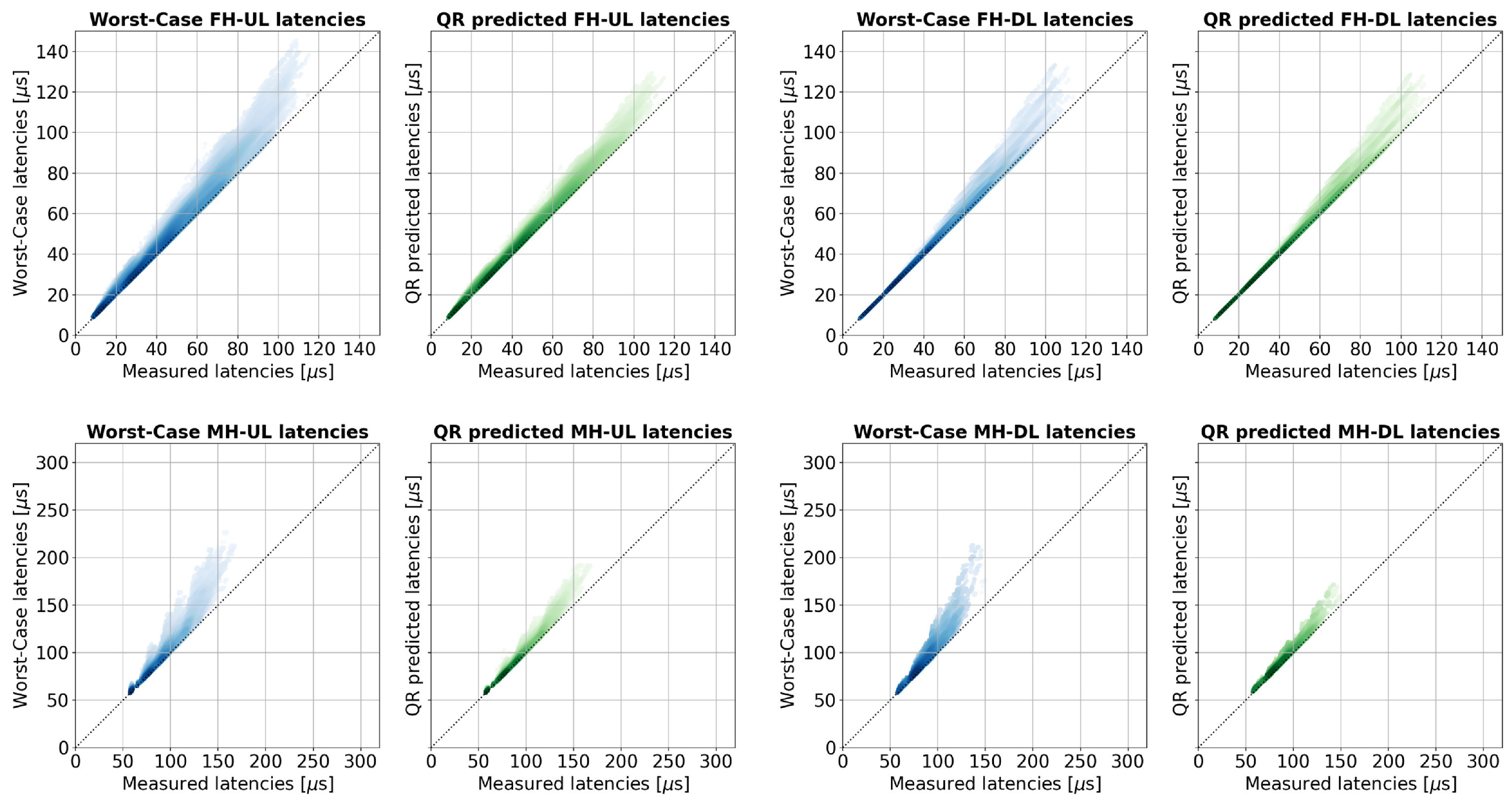

7.1. Validation of QR Model

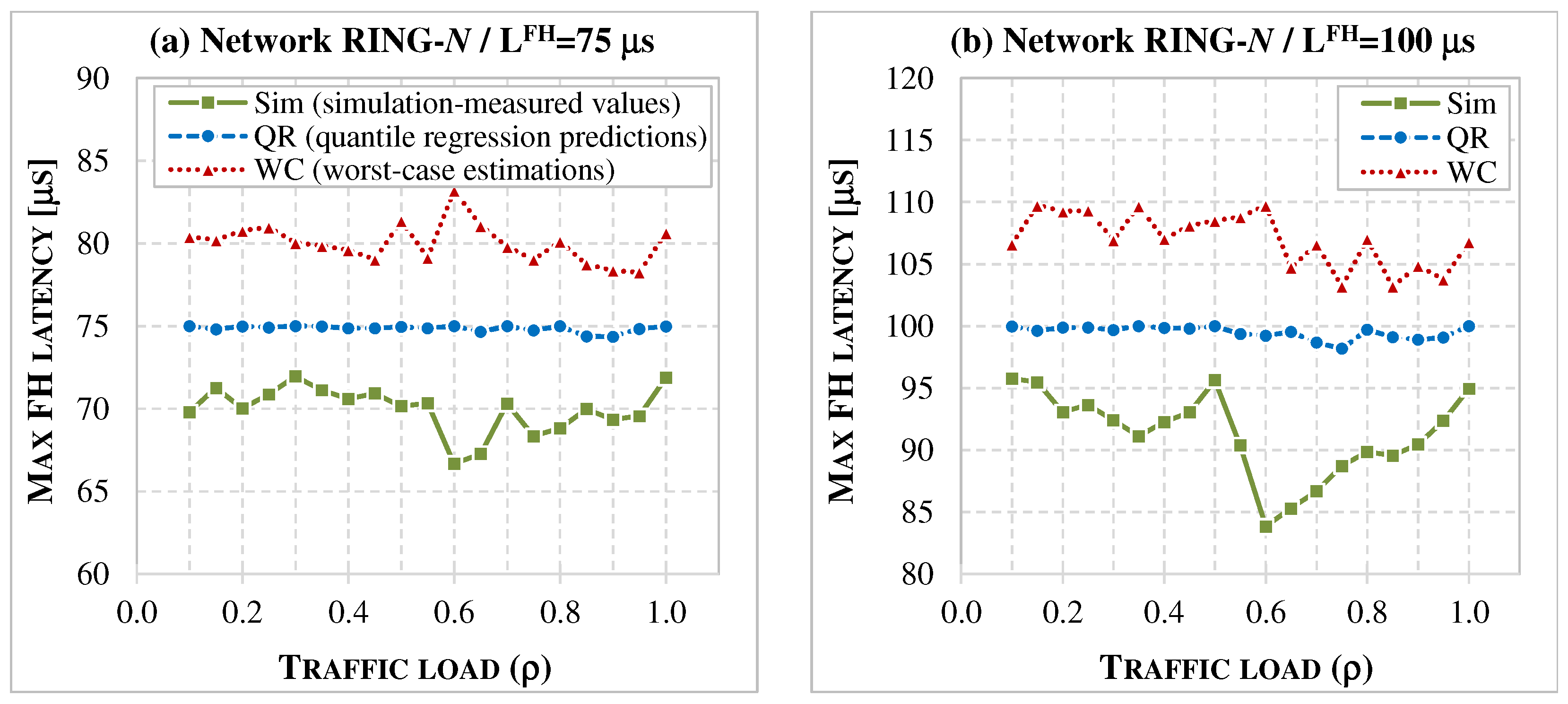

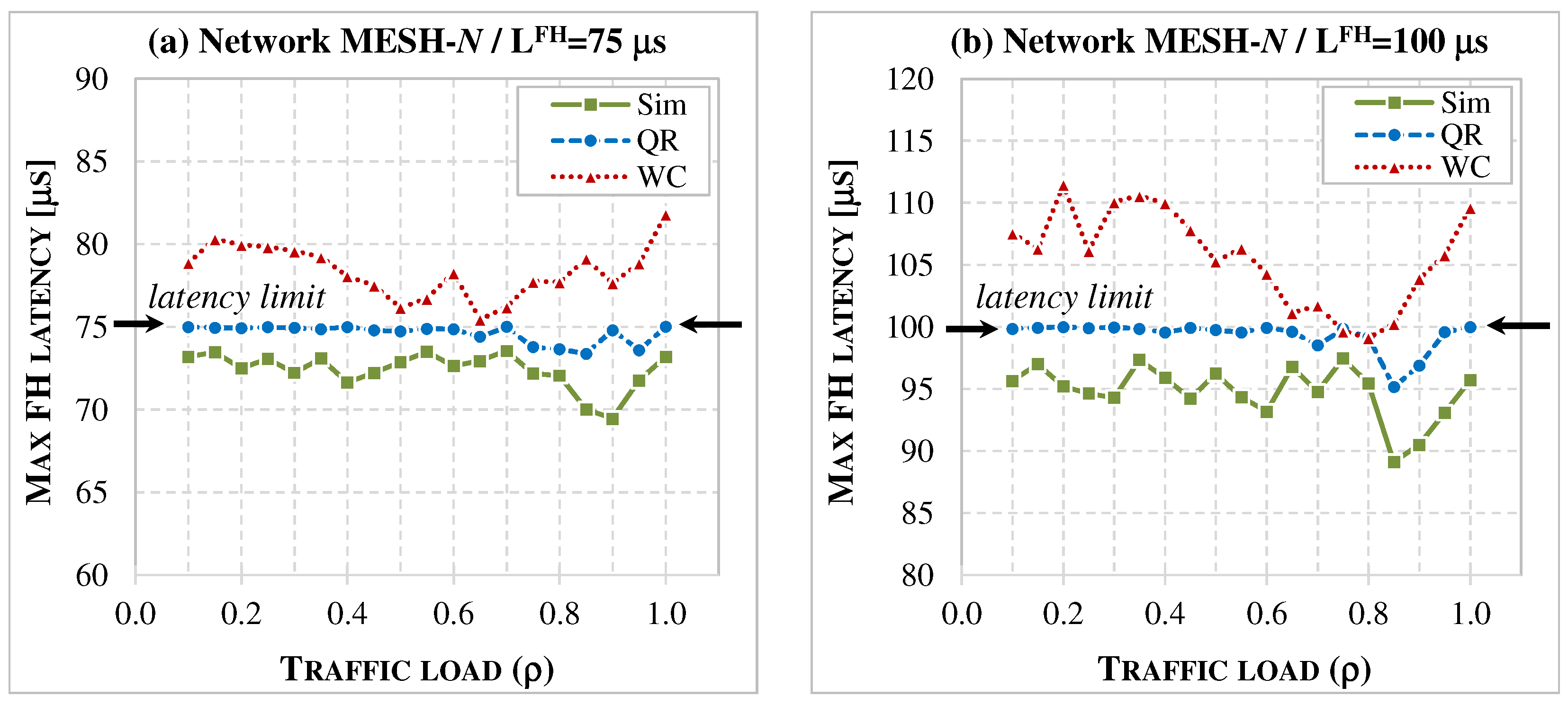

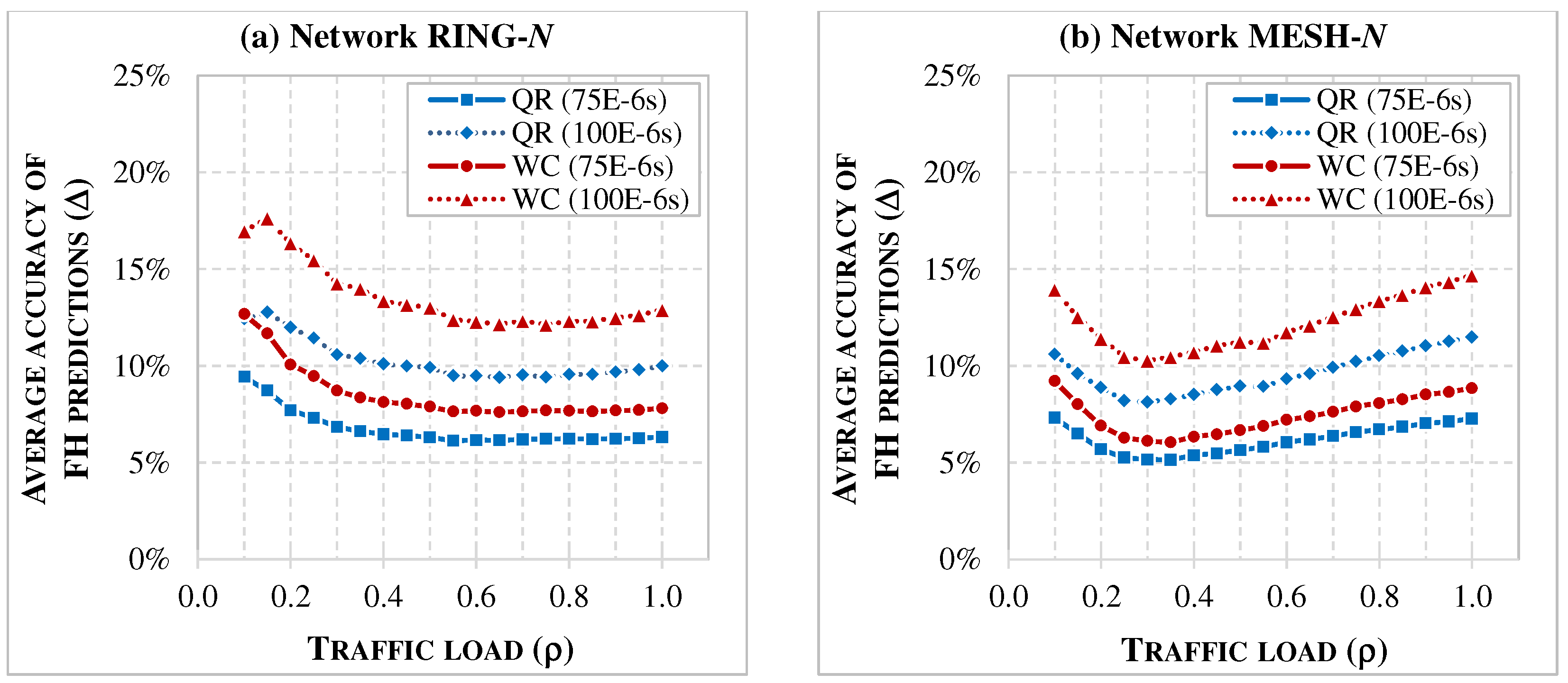

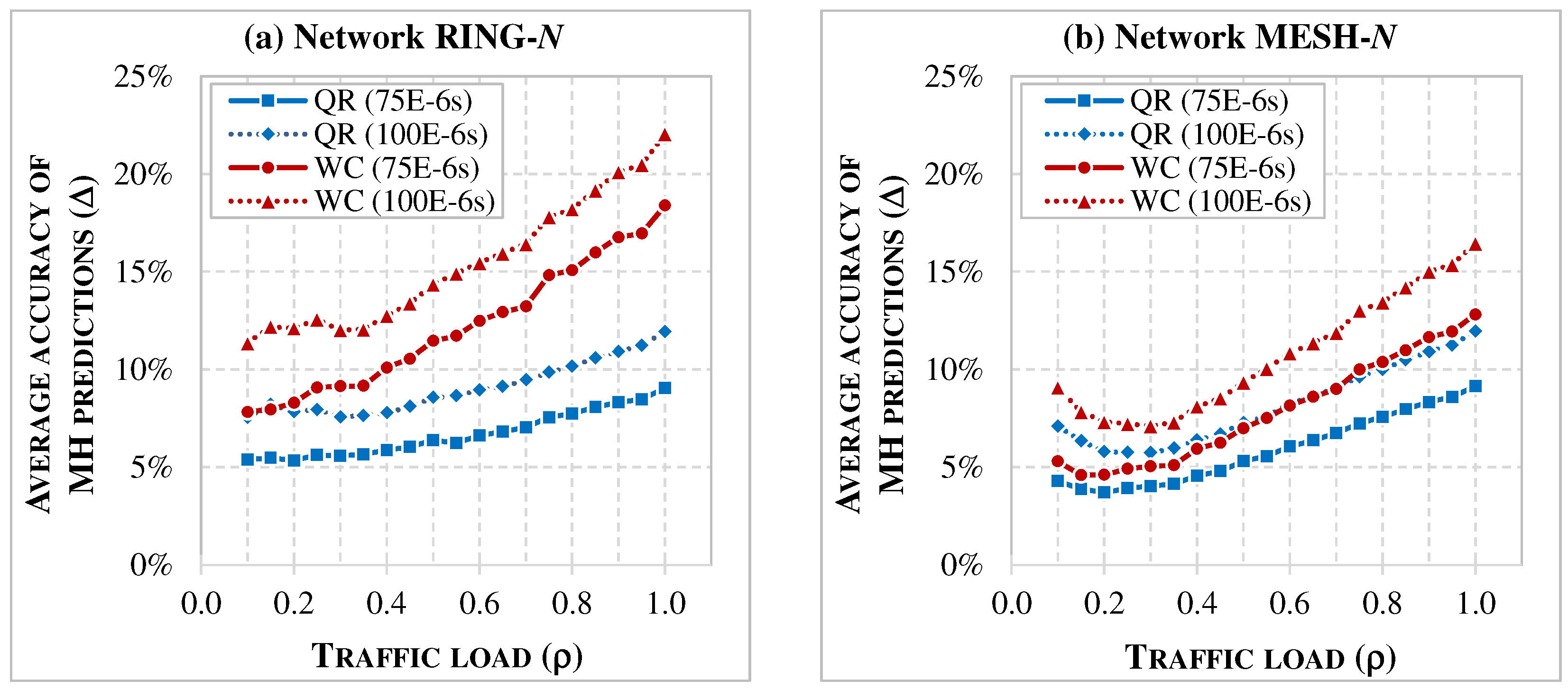

7.2. Accuracy of Latency Models

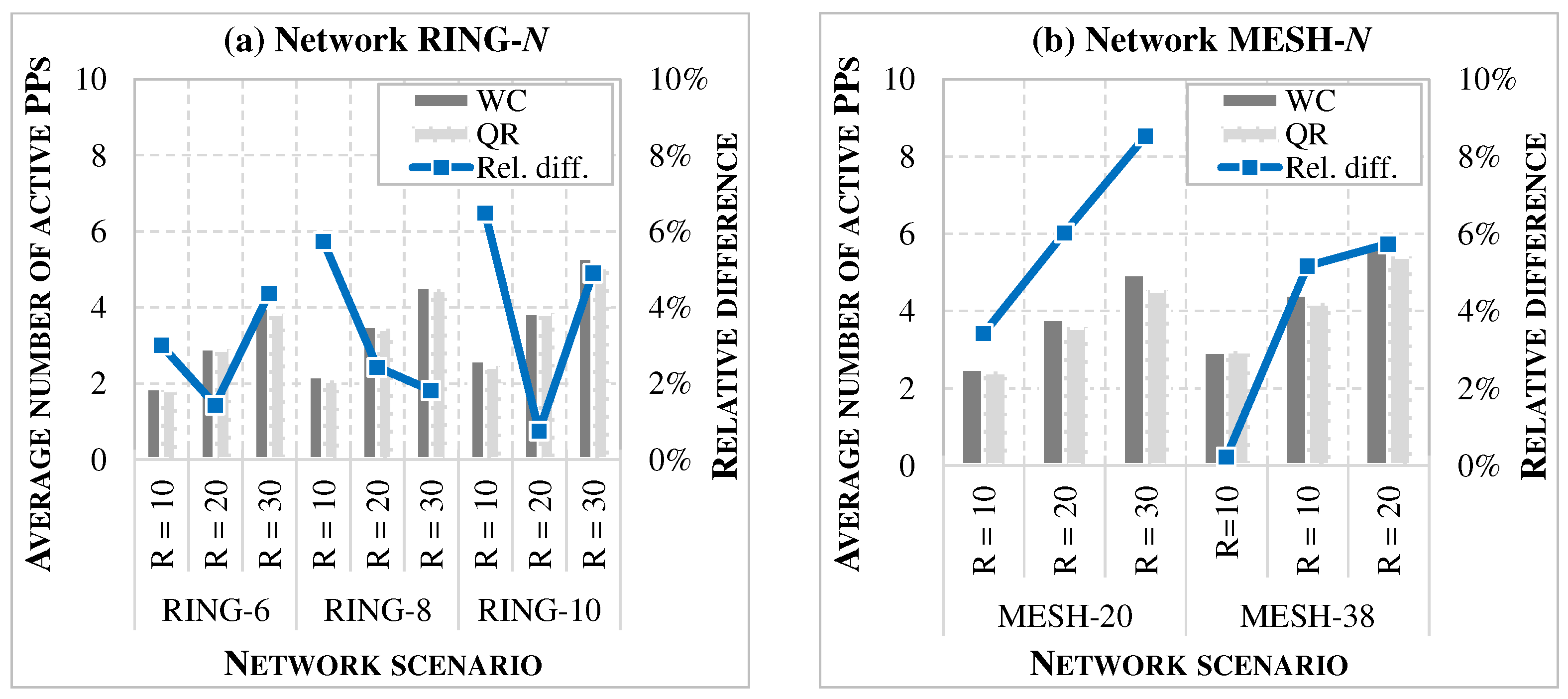

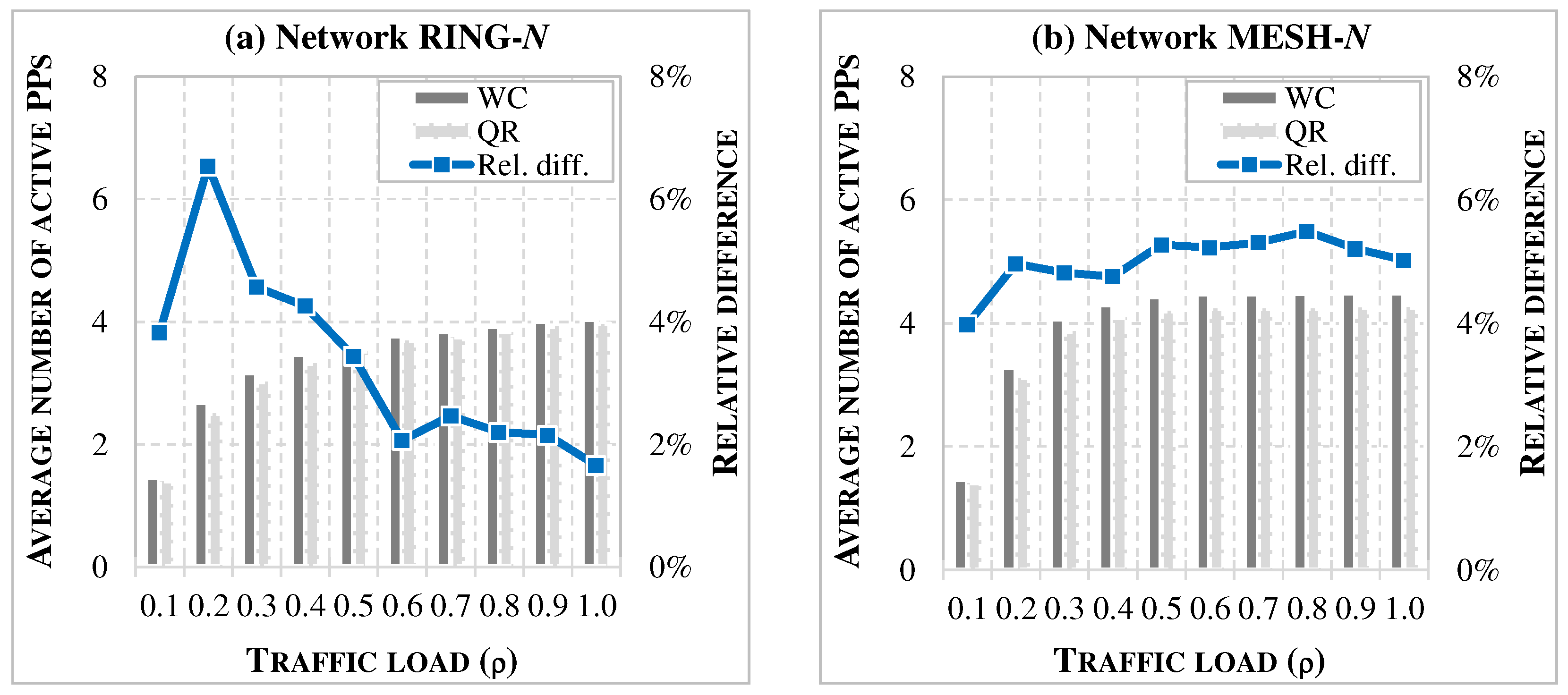

7.3. Impact on Network Performance

8. Discussion

- The comparison presented in Section 7 shows that deterministic WC estimation, while ensuring that latency limits are not violated, is overly conservative. WC values often exceed the actual latencies observed in simulations, which may lead to rejecting feasible configurations and allocating more network resources than necessary. In contrast, the QR-based predictions remain within acceptable limits and closely approximate the measured latencies, making them a more accurate approach for estimating maximum one-way delays.

- The accuracy analysis confirms that the QR model consistently reduces prediction errors for both FH and MH flows. Although the absolute error still reaches a few percent in some cases, it represents a clear improvement over the WC estimator, especially in mesh topologies and at higher traffic loads. The results also show that tighter latency limits improve the accuracy of both models and that the impact of numerology differs between FH and MH flows. These observations highlight the need for parameter-aware adaptation of predictors and motivate the exploration of more advanced ML models.

- At the network level, more accurate latency prediction translates directly into improved resource efficiency. When QR-based estimates are applied during network operation, the number of active processing nodes decreases by 1–11% on average, depending on the scenario. Although these gains may seem modest, they become significant in large-scale deployments, where even small efficiency improvements yield noticeable reductions in energy consumption and operational costs. Importantly, this effect is consistent across different network sizes, traffic loads, and RU configurations, confirming the robustness of the QR approach.

- An important consideration regarding the applicability of the QR model is the range of network sizes and traffic loads represented in the training data. In this study, the maximum traffic load level was limited to 1.0, corresponding to the full radio-flow bit-rate defined for each RU and therefore representing the intended maximum Xhaul traffic. Scenarios with loads above 1.0, i.e., exceeding the nominal RU capacity, were not considered. While the QR predictor may still produce reasonable outputs under such conditions, proper training for these extreme loads would require incorporating corresponding samples into the dataset. Similarly, although the training data cover a diverse set of topologies, including ring networks with up to 10 switches and mesh networks with up to 50 RUs, applying the model to significantly larger or structurally different networks would likely require extending the training dataset to ensure robust generalization.

- The QR model is well suited for packet-switched Xhaul networks because it focuses on conservatively predicting high-quantile latency values. In this study, the QR predictor incorporates worst-case latency estimations as one of its input features. If analogous WC estimations can be formulated for scenarios with additional traffic classes or more advanced QoS differentiation schemes, the same QR-based methodology could be extended to those settings as well. Extending the approach to multi-class priority scheduling or differentiated QoS policies therefore constitutes a promising direction for future research.

- While the evaluation considers a broad range of traffic loads and dynamically changing flow configurations, it does not explicitly address extreme operating conditions such as sudden traffic surges or link failures. The analysis assumes that traffic remains within the SLA-compliant maximum bit-rate levels defined for the flows. Nevertheless, the simulations include scenarios with varying routing configurations and time-varying loads, suggesting that the QR model may retain applicability in situations where flows must be rerouted following a link failure. A systematic investigation of such extreme network states, particularly those involving abrupt congestion spikes or partial network outages, remains an important topic for future research.

- An additional consideration concerns the computational complexity of the QR model in the context of dynamic network operation. Although the model is intended for real-time use, its training is performed entirely offline using large datasets that cover diverse traffic loads and routing configurations. For the mesh topology, training on the complete dataset of up to 1.44 million labeled samples, i.e., 360,000 samples for each of the four flow types (FH and MH, each in UL and DL), required approximately 1.5–2 h per flow type on a standard laptop. Once trained, the model can be deployed without further retraining, even under dynamically changing flow configurations. Inference is lightweight (sub-millisecond), as it involves only simple linear operations on features such as WC and other latency-related components, which are dynamically computed through iterative summation of per-link latency values along each flow's routing path. These properties make the QR predictor well suited for real-time network control. While a formal complexity analysis of the underlying optimization solver is beyond the scope of this work, the empirical results indicate that the approach is computationally efficient and practically deployable.
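The inference step described above reduces to summing per-link components along the path and evaluating a linear function. This is a minimal sketch. It assumes a linear QR model whose weights were fitted offline for one quantile level τ. The feature keys and weight values below are hypothetical. The pinball (quantile) loss is included because it is the standard objective minimized when fitting quantile regression.

```python
def pinball_loss(y_true, y_pred, tau):
    """Mean quantile (pinball) loss for quantile level tau in (0, 1)."""
    if not 0.0 < tau < 1.0:
        raise ValueError("tau must lie in (0, 1)")
    total = 0.0
    for y, q in zip(y_true, y_pred):
        r = y - q
        # Under-predictions (r > 0) are weighted by tau, over-predictions by 1 - tau.
        total += tau * r if r >= 0 else (tau - 1.0) * r
    return total / len(y_true)


def path_features(per_link, keys):
    """Sum per-link latency components along a routing path (hypothetical keys)."""
    return [sum(link[k] for link in per_link) for k in keys]


def qr_predict(weights, bias, x):
    """Linear QR inference: a single weighted sum of the path features."""
    return bias + sum(w * xi for w, xi in zip(weights, x))


keys = ("static_us", "wc_us")
links = [{"static_us": 10.0, "wc_us": 5.0}, {"static_us": 12.0, "wc_us": 3.0}]
x = path_features(links, keys)          # [22.0, 8.0]
print(qr_predict([1.0, 0.5], 0.5, x))   # 26.5
```

With τ close to 1, the loss penalizes under-prediction much more than over-prediction. This pushes the fitted model toward high-quantile, conservative latency estimates.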

- Overall, the obtained results show that applying the QR model in latency-sensitive packet-switched Xhaul networks improves efficiency without violating SLA constraints. By mitigating conservatism in WC estimates, the QR approach enables more effective use of network resources. These findings support the integration of data-driven latency predictors into future network control and management systems, including those operating within the O-RAN architecture, enabling proactive and automated network optimization.

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Alimi, I.A.; Teixeira, A.; Monteiro, P. Towards an Efficient C-RAN Optical Fronthaul for the Future Networks: A Tutorial on Technologies, Requirements, Challenges, and Solutions. IEEE Commun. Surv. Tutor. 2018, 20, 708–769.

2. Chih-Lin, I.; Li, H.; Korhonen, J.; Huang, J.; Han, L. RAN Revolution with NGFI (xhaul) for 5G. IEEE J. Lightw. Technol. 2018, 36, 541–550.

3. Abdullah, M.; Elayoubi, S.E.; Chahed, T.; Lisser, A. Performance Modeling and Dimensioning of Latency-Critical Traffic in 5G Networks. In Proceedings of the GLOBECOM 2023—2023 IEEE Global Communications Conference, Kuala Lumpur, Malaysia, 4–8 December 2023.

4. 1914.1-2019; IEEE Standard for Packet-Based Fronthaul Transport Networks. IEEE: New York, NY, USA, 2019.

5. Common Public Radio Interface: eCPRI V1.2 Requirements for the eCPRI Transport Network. 2018. Available online: https://www.cpri.info/downloads/Requirements_for_the_eCPRI_Transport_Network_V1_2_2018_06_25.pdf (accessed on 14 October 2025).

6. O-RAN Alliance. O-RAN Control, User and Synchronization Plane Specification, v18.0. Available online: https://www.o-ran.org/ (accessed on 14 October 2025).

7. Skocaj, M.; Conserva, F.; Grande, N.S.; Orsi, A.; Micheli, D.; Ghinamo, G.; Bizzarri, S.; Verdone, R. Data-driven Predictive Latency for 5G: A Theoretical and Experimental Analysis Using Network Measurements. In Proceedings of the 2023 IEEE 34th Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Toronto, ON, Canada, 5–8 September 2023.

8. Cai, Y.; Li, W.; Meng, X.; Zheng, W.; Chen, C.; Liang, Z. Adaptive Contrastive Learning Based Network Latency Prediction in 5G URLLC Scenarios. Comput. Netw. 2024, 240, 110185.

9. Zhang, L.; Fu, J.; He, Y.; Jiang, X. Toward Deterministic Wireless Communication: Latency Prediction Using Network Measurement Data. In Proceedings of the 2024 IEEE 35th International Symposium on Software Reliability Engineering Workshops (ISSREW), Tsukuba, Japan, 28–31 October 2024.

10. Klinkowski, M. Optimized Planning of DU/CU Placement and Flow Routing in 5G Packet Xhaul Networks. IEEE Trans. Netw. Serv. Manag. 2024, 21, 232–248.

11. 802.1CM-2018; IEEE Standard for Local and Metropolitan Area Networks—Time-Sensitive Networking for Fronthaul. IEEE: New York, NY, USA, 2018.

12. O-RAN Alliance. Xhaul Packet Switched Architectures and Solutions. Tech. Spec. v8.0. 2025. Available online: https://specifications.o-ran.org/specifications (accessed on 14 October 2025).

13. Bertin, E.; Crespi, N.; Magedanz, T. Shaping Future 6G Networks: Needs, Impacts, and Technologies; John Wiley & Sons: Hoboken, NJ, USA, 2021.

14. 802.1Qbv-2015; IEEE Standard for Local and Metropolitan Area Networks—Bridges and Bridged Networks—Amendment 25: Enhancements for Scheduled Traffic. IEEE: New York, NY, USA, 2015.

15. 802.1Qbu-2016; IEEE Standard for Local and Metropolitan Area Networks—Bridges and Bridged Networks—Amendment 26: Frame Preemption. IEEE: New York, NY, USA, 2016.

16. Chitimalla, D.; Kondepu, K.; Valcarenghi, L.; Tornatore, M.; Mukherjee, B. 5G Fronthaul–Latency and Jitter Studies of CPRI over Ethernet. J. Opt. Commun. Netw. 2017, 9, 172–182.

17. Perez, G.O.; Larrabeiti, D.; Hernandez, J.A. 5G New Radio Fronthaul Network Design for eCPRI-IEEE 802.1CM and Extreme Latency Percentiles. IEEE Access 2019, 7, 82218–82229.

18. Kleinrock, L. Queueing Systems Volume 1: Theory; John Wiley & Sons: Hoboken, NJ, USA, 1975.

19. Chughtai, M.N.; Noor, S.; Laurinavicius, I.; Assimakopoulos, P.; Gomes, N.J.; Zhu, H.; Wang, J.; Zheng, X.; Yan, Q. User and Resource Allocation in Latency Constrained Xhaul via Reinforcement Learning. J. Opt. Commun. Netw. 2023, 15, 219–228.

20. Boudec, J.Y.L.; Thiran, P. Network Calculus—A Theory of Deterministic Queuing Systems for the Internet; Lecture Notes in Computer Science (2050); Springer: Berlin/Heidelberg, Germany, 2001.

21. Zhang, J.; Wang, T.; Finn, N. Bounded latency calculating method, using network calculus. In Proceedings of the IEEE 802.1 Working Group Interim Session, Hiroshima, Japan, 14–17 January 2019.

22. Klinkowski, M.; Perello, J.; Careglio, D. Application of Linear Regression in Latency Estimation in Packet-Switched 5G xHaul Networks. In Proceedings of the 2023 23rd International Conference on Transparent Optical Networks (ICTON), Bucharest, Romania, 2–6 July 2023.

23. Koenker, R. Quantile Regression; Econometric Society Monographs; Cambridge University Press: Cambridge, UK, 2005.

24. Wan, T.; Ashwood-Smith, P. A performance Study of CPRI over Ethernet with IEEE 802.1Qbu and 802.1Qbv Enhancements. In Proceedings of the 2015 IEEE Global Communications Conference (GLOBECOM), San Diego, CA, USA, 6–10 December 2015.

25. Helm, M.; Carle, G. Predicting Latency Quantiles Using Network Calculus-assisted GNNs. In Proceedings of the GNNet '23: Proceedings of the 2nd on Graph Neural Networking Workshop 2023, Paris, France, 8 December 2023.

26. Zhao, L.; Pop, P.; Craciunas, S.S. Worst-Case Latency Analysis for IEEE 802.1Qbv Time Sensitive Networks Using Network Calculus. IEEE Access 2018, 6, 41803–41815.

27. Diez, L.; Alba, A.M.; Kellerer, W.; Agüero, R. Flexible functional split and fronthaul delay: A queuing-based model. IEEE Access 2021, 9, 151049–151066.

28. Koneva, N.; Sánchez-Macián, A.; Hernández, J.A.; Arpanaei, F.; González de Dios, O. On Finding Empirical Upper Bound Models for Latency Guarantees in Packet-Optical Networks. In Proceedings of the 2025 International Conference on Optical Network Design and Modeling (ONDM), Pisa, Italy, 6–9 May 2025.

29. Elgcrona, E. Latency Prediction in 5G Networks by Using Machine Learning. Master's Thesis, Lund University, Lund, Sweden, 2023.

30. Zinno, S.; Navarro, A.; Rotbei, S.; Pasquino, N.; Botta, A.; Ventre, G. A Lightweight Deep Learning Approach for Latency Prediction in 5G and Beyond. In Proceedings of the 2025 21st International Conference on Network and Service Management (CNSM), Bologna, Italy, 27–31 October 2025.

31. Agarwal, B.; Irmer, R.; Lister, D.; Muntean, G.M. Open RAN for 6G Networks: Architecture, Use Cases and Open Issues. IEEE Commun. Surv. Tutor. 2025.

32. Brik, B.; Chergui, H.; Zanzi, L.; Devoti, F.; Ksentini, A.; Siddiqui, M.S.; Costa-Pérez, X.; Verikoukis, C. Explainable AI in 6G O-RAN: A Tutorial and Survey on Architecture, Use Cases, Challenges, and Future Research. IEEE Commun. Surv. Tutor. 2025, 27, 2826–2859.

33. Adamuz-Hinojosa, O.; Zanzi, L.; Sciancalepore, V.; Garcia-Saavedra, A.; Costa-Pérez, X. ORANUS: Latency-tailored Orchestration via Stochastic Network Calculus in 6G O-RAN. In Proceedings of the IEEE INFOCOM 2024—IEEE Conference on Computer Communications, Vancouver, BC, Canada, 20–23 May 2024; pp. 61–70.

34. Garcia-Saavedra, A.; Costa-Perez, X.; Leith, D.J.; Iosifidis, G. Enhancing 5G O-RAN Communication Efficiency Through AI-Based Latency Forecasting. In Proceedings of the IEEE INFOCOM 2025—IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), London, UK, 19 May 2025; pp. 1–2.

35. Klinkowski, M. Optimization of Latency-Aware Flow Allocation in NGFI Networks. Comput. Commun. 2020, 161, 344–359.

36. Klinkowski, M. Latency-Aware DU/CU Placement in Convergent Packet-Based 5G Fronthaul Transport Networks. Appl. Sci. 2020, 10, 7429.

37. Common Public Radio Interface: eCPRI V1.2 Interface Specification. 2018. Available online: https://www.cpri.info/downloads/eCPRI_v_1_2_2018_06_25.pdf (accessed on 14 October 2025).

38. Esmaeily, A.; Mendis, H.V.K.; Mahmoodi, T.; Kralevska, K. Beyond 5G Resource Slicing with Mixed-Numerologies for Mission Critical URLLC and eMBB Coexistence. IEEE Open J. Comm. Soc. 2023, 4, 727–747.

39. Koenker, R.; Bassett, G.J. Regression Quantiles. Econometrica 1978, 46, 33–50.

40. Skocaj, D.; Dincic, M.; Bennesby, T.; Vukadinovic, V. TAILING: Tail Distribution Forecasting of Packet Delays Using Quantile Regression Neural Networks. In Proceedings of the ICC 2023—IEEE International Conference on Communications, Rome, Italy, 28 May–1 June 2023.

41. ITU-T Technical Report. Transport Network Support of IMT-2020/5G. 2018. Available online: https://www.itu.int/hub/publication/t-tut-home-2018/ (accessed on 14 October 2025).

42. Khorsandi, B.M.; Raffaelli, C. BBU location algorithms for survivable 5G C-RAN over WDM. Comput. Netw. 2018, 144, 53–63.

43. Lagen, S.; Giupponi, L.; Hansson, A.; Gelabert, X. Modulation Compression in Next Generation RAN: Air Interface and Fronthaul Trade-offs. IEEE Comm. Mag. 2021, 59, 89–95.

44. 3GPP. Study on New Radio Access Technology: Radio Access Architecture and Interfaces. Tech. Rep. TR 38.801, v14.0.0. 2017. Available online: https://portal.3gpp.org/desktopmodules/Specifications/SpecificationDetails.aspx?specificationId=3056 (accessed on 14 October 2025).

45. IBM. CPLEX Optimizer. Available online: https://www.ibm.com/products/ilog-cplex-optimization-studio (accessed on 14 October 2025).

46. Varga, A. OMNeT++ Discrete Event Simulator. Available online: https://omnetpp.org/ (accessed on 14 October 2025).

47. Innovative Optical Wireless Network Global Forum. PoC Reference of Mobile Fronthaul over APN (ver. 2.0). 2024. Available online: https://iowngf.org/wp-content/uploads/2025/02/IOWN-GF-RD-PoC_Reference_of_MFH_over_APN-2.0.pdf (accessed on 14 October 2025).

| Link | Bit-Rate [Gbit/s] | Length [km] |
|---|---|---|
| switch–RU | 25 | |
| switch–switch | 100 | |
| switch–PP | 400 | |
| switch–hub | 400 | |

| Network | Numerology | Model | Score (FH, UL) | Score (FH, DL) | Score (MH, UL) | Score (MH, DL) |
|---|---|---|---|---|---|---|
| RING-N | 1 | WC | 0.952 | 0.990 | 0.633 | 0.012 |
| | | QR | 0.980 | 0.993 | 0.952 | 0.864 |
| | 2 | WC | 0.956 | 0.991 | 0.823 | 0.516 |
| | | QR | 0.986 | 0.995 | 0.972 | 0.929 |
| MESH-N | 1 | WC | 0.960 | 0.990 | 0.754 | 0.381 |
| | | QR | 0.984 | 0.993 | 0.944 | 0.873 |
| | 2 | WC | 0.918 | 0.980 | 0.884 | 0.480 |
| | | QR | 0.974 | 0.991 | 0.963 | 0.853 |

| Network | Latency limit [μs] | Numerology | WC, FH | WC, MH | QR, FH | QR, MH | Absolute difference, FH | Absolute difference, MH |
|---|---|---|---|---|---|---|---|---|
| RING-N | 75 | 1 | 7% | 14% | 6% | 8% | 1% | 6% |
| | | 2 | 10% | 10% | 7% | 5% | 3% | 5% |
| | 100 | 1 | 13% | 19% | 11% | 12% | 2% | 7% |
| | | 2 | 14% | 12% | 10% | 7% | 4% | 5% |
| MESH-N | 75 | 1 | 6% | 8% | 5% | 6% | 1% | 2% |
| | | 2 | 9% | 7% | 7% | 5% | 2% | 2% |
| | 100 | 1 | 10% | 12% | 9% | 9% | 2% | 3% |
| | | 2 | 14% | 9% | 11% | 7% | 4% | 2% |

| Network | Latency limit [μs] | Load 0.1 | Load 0.2 | Load 0.3 | Load 0.4 | Load 0.5 | Load 0.6 | Load 0.7 | Load 0.8 | Load 0.9 | Load 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| RING-N | 75 | 4% | 3% | 3% | 4% | 3% | 2% | 2% | 3% | 3% | 2% |
| | 100 | 4% | 11% | 6% | 5% | 4% | 2% | 3% | 2% | 1% | 2% |
| MESH-N | 75 | 3% | 3% | 5% | 5% | 6% | 6% | 6% | 6% | 6% | 6% |
| | 100 | 5% | 8% | 4% | 4% | 4% | 4% | 4% | 4% | 4% | 4% |

| Network | Numerology | Load 0.1 | Load 0.2 | Load 0.3 | Load 0.4 | Load 0.5 | Load 0.6 | Load 0.7 | Load 0.8 | Load 0.9 | Load 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| RING-N | 1 | 6% | 5% | 4% | 4% | 3% | 1% | 1% | 2% | 3% | 2% |
| | 2 | 1% | 9% | 5% | 4% | 4% | 3% | 4% | 3% | 1% | 1% |
| MESH-N | 1 | 5% | 2% | 2% | 3% | 5% | 4% | 4% | 4% | 4% | 3% |
| | 2 | 3% | 9% | 10% | 8% | 7% | 8% | 8% | 8% | 8% | 8% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Klinkowski, M.; Więcek, D. Performance Analysis of Data-Driven and Deterministic Latency Models in Dynamic Packet-Switched Xhaul Networks. Appl. Sci. 2025, 15, 12487. https://doi.org/10.3390/app152312487
