1. Introduction

In recent years, video-based content has grown explosively, driving demand for live streaming services with ever-higher quality expectations. Supporting such real-time streaming requires both high-performance computing and efficient network management. At the same time, interest in virtual content has expanded across industries such as entertainment and gaming, where visual effects (VFX) and motion capture (MoCap) are widely used to create immersive experiences and digital characters [1]. More recently, commercially viable technologies have emerged that combine these elements, enabling the 3D reconstruction of real people and their integration into diverse virtual environments [2].

Two primary technologies support realistic 3D human modeling. The first, volumetric capture, uses arrays of cameras to generate high-resolution 3D meshes and textures. While this method produces highly detailed results, it requires extensive camera setups, significant processing power, and high bandwidth, making it impractical for real-time applications. The second, motion capture (MoCap), focuses on recording movement rather than texture. MoCap can be implemented with wearable sensors or camera-based systems, producing less data and enabling faster processing, though at the cost of realistic texture reconstruction and visual fidelity.

Volumetric methods are generally infrastructure-heavy and not optimized for real-time applications [3], whereas camera-based MoCap systems are more scalable and better suited for interactive use cases [4]. Such systems have been increasingly applied in gaming, online avatars, and virtual presenters [5]. They offer immersive experiences while protecting user privacy by abstracting personal identity.

The rapid progress of computer vision and artificial intelligence (AI) has significantly advanced MoCap technology [6]. Historically, marker-based systems dominated in gaming and entertainment, requiring performers to wear sensor-equipped suits or rely on specialized cameras. Recent developments in deep learning, particularly convolutional neural networks (CNNs), have enabled posture estimation using only RGB cameras. This approach has broadened accessibility, reduced hardware dependence, and expanded adoption across multiple fields.

As the demand for precise motion tracking grows, 3D human pose estimation has become a key research area. Methods are commonly categorized into multi-view and single-view models, with multi-view approaches achieving higher accuracy by integrating inputs from multiple cameras [7]. These advancements have opened new opportunities for interactive, immersive environments.

Recent trends in digital cultural heritage have increasingly emphasized immersive, interactive, and data-driven methods for documenting and disseminating cultural assets. IoT–XR–based frameworks have been explored for large-scale reconstruction, restoration support, and intelligent guided-tour experiences, demonstrating how emerging technologies can broaden public access to cultural heritage [8, 9]. In parallel, studies on virtual and metaverse-based museums have examined user trust, engagement, and social cognition in digitally mediated heritage experiences [10, 11, 12].

At the same time, research on camera-based posture analysis has expanded rapidly in both digital-ergonomics and cultural-heritage applications. OpenPose-style 2D keypoint pipelines are widely used in low-cost, multi-camera settings for quantifying human movement, while depth-enabled systems such as ZED provide geometric cues that improve pose disambiguation in heritage documentation and motion analysis tasks. These technological developments collectively highlight the growing need for real-time, high-fidelity, and culturally contextualized 3D representations of intangible heritage—particularly traditional performing arts—motivating the direction of the present study.

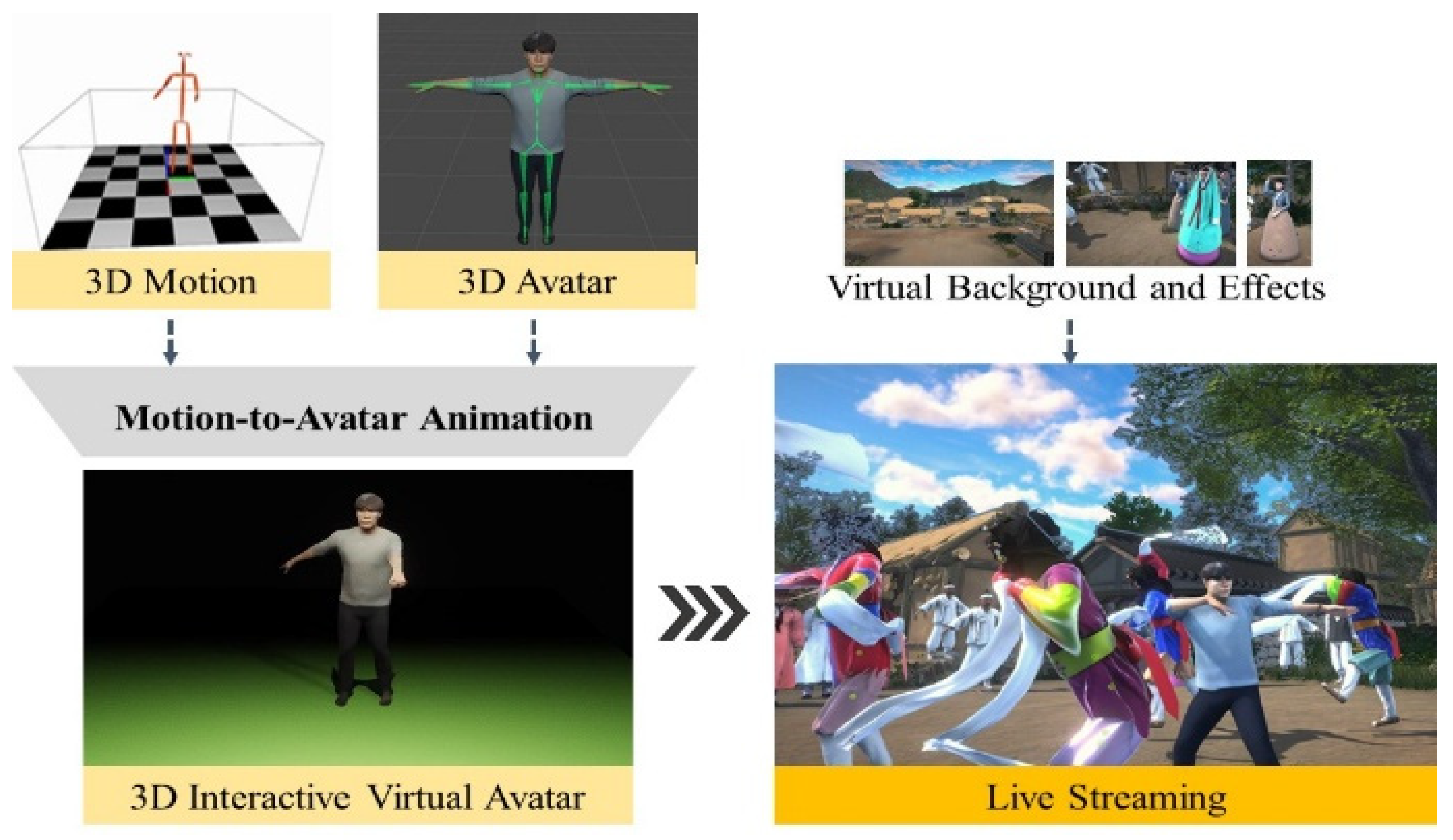

This study presents a real-time live streaming platform for digitizing and animating traditional performing arts, demonstrated through Korean traditional dance (Figure 1). The platform supports multi-performer scenarios and leverages a distributed edge computing infrastructure. It integrates multi-view 3D pose estimation with 3D coordinate synthesis, enabling accurate, coherent tracking of multiple dancers in dynamic environments. Reconstructed motion data are mapped onto pre-modeled, rigged 3D avatars, generating real-time animated virtual performances that can be enriched with virtual effects and cultural backdrops.

Despite these technological advances, applying real-time 3D human digitization to intangible cultural heritage, especially multi-performer traditional dance, remains technically challenging. Specifically, this study addresses the following key challenges: (1) latency and bandwidth constraints imposed by real-time multi-camera 3D motion capture and streaming; (2) multi-performer robustness, including occlusion handling, depth ambiguity resolution, and identity consistency during complex ensemble choreography; and (3) cultural authenticity, ensuring that reconstructed movements, costumes, and symbolic gestures faithfully preserve the characteristics of traditional performances.

These points define the technical and cultural challenges that our framework seeks to address. In summary, our main contributions are as follows:

We propose a lightweight, edge-based platform that integrates AI-driven pose estimation with avatar-based animation, validated through demonstrations of Korean traditional dance.

We achieve bandwidth-efficient real-time streaming, reducing raw HD video transmission (~1 Gbps) to skeleton-based motion data (~64 Kbps) while maintaining 60 fps rendering quality.

We ensure high realism and interactivity through multi-device synchronization across a distributed edge network, enabling smooth, immersive delivery of cultural performances in digital form.

3. Proposed Framework

The proposed real-time streaming platform, extended and restructured from our prior work [

24,

25] and illustrated in

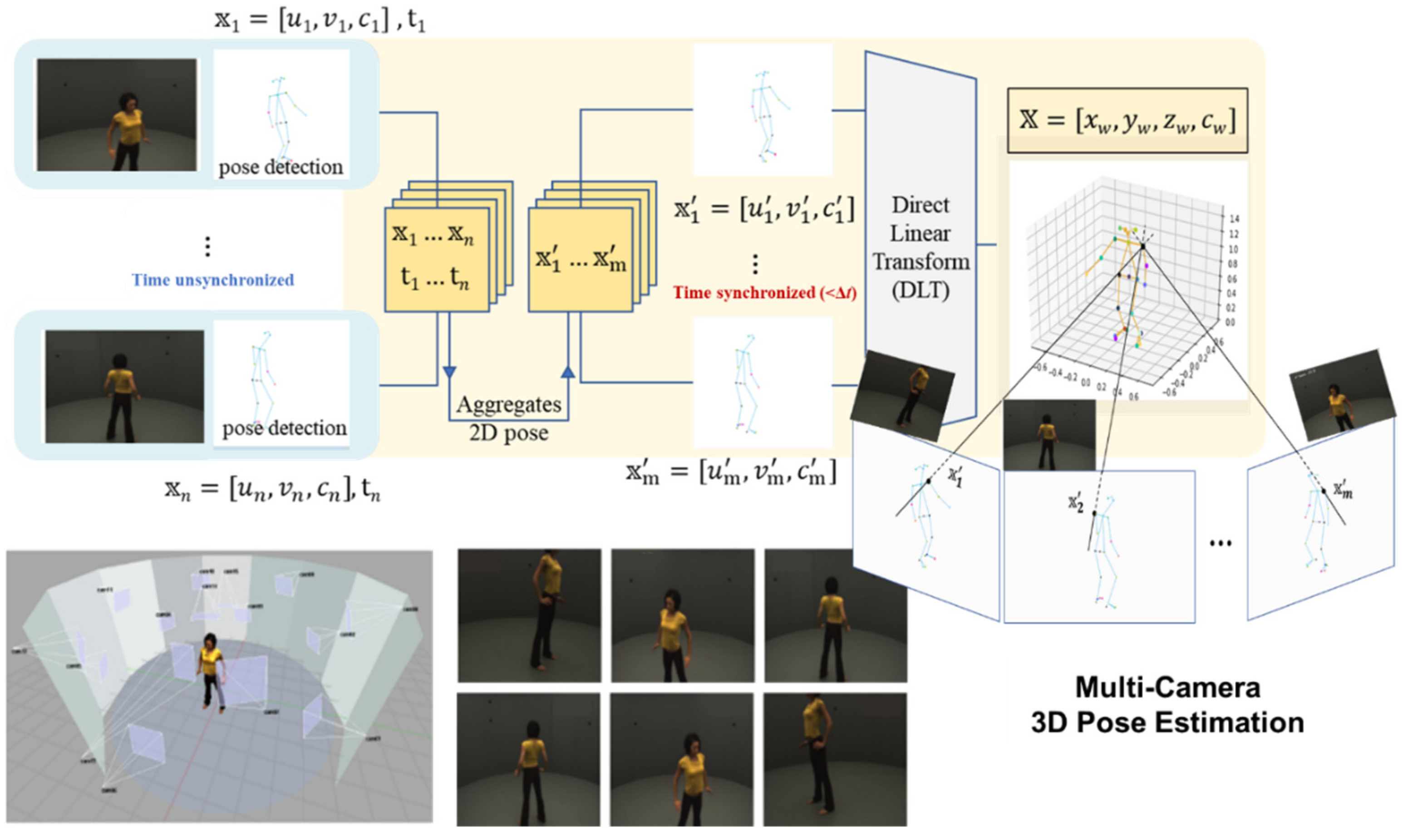

Figure 2, comprises two primary components: a motion capture system that acquires real-time 3D motion data from performers using multiple synchronized cameras, and a 3D reconstruction system that integrates pre-modeled character avatars. The platform generates and streams 3D content by combining real-time motion data with pre-built 3D character models.

Unlike our previous work [25], which focused on technical performance evaluation of multi-view 3D pose estimation and synchronization accuracy, the present study extends that validated backbone toward a real-time, interactive platform for cultural-heritage applications. In particular, the system newly integrates edge-based lightweight inference, skeleton-only data transmission for bandwidth reduction, and real-time avatar retargeting with physics-based simulation, enabling expressive reproduction of traditional performances in virtual environments.

The motion capture system consists of multiple cameras connected to edge AI devices and a central server. Edge devices detect 2D human poses and extract appearance features from RGB images, transmitting only this lightweight data to the central server. Because no raw images are transmitted, network bandwidth requirements are typically on the order of tens of kbps.
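To make the bandwidth argument concrete, the Python sketch below estimates the bitrate of a keypoint-only stream. The packet layout (a float64 timestamp, a uint8 camera ID, and 17 COCO-style keypoints stored as float32 (u, v, c) triples) and the 720p comparison resolution are assumptions chosen for illustration, not the platform's actual wire format.

```python
import struct

# Hypothetical packet layout (an assumption, not the authors' format):
# little-endian float64 timestamp, uint8 camera id, then K keypoints as
# (u, v, c) float32 triples. K = 17 assumes a COCO-style skeleton.
NUM_KEYPOINTS = 17
PACKET_FORMAT = "<dB" + "f" * (3 * NUM_KEYPOINTS)


def pack_pose(timestamp, camera_id, keypoints):
    """Serialize one person's 2D pose from one camera into bytes."""
    flat = [value for kp in keypoints for value in kp]
    return struct.pack(PACKET_FORMAT, timestamp, camera_id, *flat)


def bitrate_bps(packet_bytes, fps, num_cameras=1, num_people=1):
    """Bits per second to send one packet per person, per camera, per frame."""
    return packet_bytes * 8 * fps * num_cameras * num_people


def raw_video_bps(width, height, fps, bytes_per_pixel=3):
    """Bits per second for uncompressed 24-bit RGB video."""
    return width * height * bytes_per_pixel * 8 * fps


packet = pack_pose(12.345, 0, [(100.0, 200.0, 0.9)] * NUM_KEYPOINTS)
print(len(packet))                                      # 213 bytes
print(bitrate_bps(len(packet), fps=60))                 # 102240 bps, one camera
print(bitrate_bps(len(packet), fps=60, num_cameras=8))  # 817920 bps, eight cameras
print(raw_video_bps(1280, 720, fps=60))                 # 1327104000 bps
```

Under these assumptions a single camera needs roughly 51 kbps at 30 fps or 102 kbps at 60 fps per person, and eight cameras stay below 1 Mbps per performer, while uncompressed 720p60 video exceeds 1 Gbps. A more compact encoding (for example, 16-bit coordinates) would reduce the keypoint stream further.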

At the central server, 2D pose data are organized by timestamp, grouped by geometric similarity, and reconstructed into 3D coordinates through multi-view geometric triangulation. Compared with conventional centralized systems, this architecture is better suited for real-time applications because deep learning tasks are distributed across edge devices, substantially reducing network traffic.

The platform employs pre-modeled 3D avatars representing the target characters. Each avatar, composed of 3D meshes and texture data, is animated in real time by combining it with motion data from the capture system. The resulting avatars mimic the performer’s movements naturally within a virtual environment. Animated avatars are synchronized with background effects and rendered as part of the live streaming output.

Models for 3D motion capture [6, 7, 13, 14] achieve high accuracy in monocular 3D pose estimation through deep temporal learning, while models for avatar generation [20, 21, 22, 23] demonstrate advanced neural rendering for photorealistic avatars. However, these methods are generally optimized for offline reconstruction or single-person settings, limiting their applicability to real-time multi-performer cultural scenarios.

In contrast, our proposed hybrid system integrates the efficiency of keypoint-based motion capture with the realism of pre-scanned avatars, achieving low latency and high fidelity suitable for live streaming of traditional performances. The comparative positioning of these approaches is summarized in Table 1, which situates the proposed method between the motion-capture-based and avatar-generation-based paradigms.

4. System Implementation

The proposed platform is a real-time live streaming system that integrates three key components: (1) edge AI-based 3D motion capture, (2) 3D avatar creation, and (3) real-time motion-to-avatar animation. It uses a distributed network of cameras and edge processors to extract human motion, which is combined with pre-modeled avatars to generate and stream realistic virtual performances.

To clarify the computational workflow, the edge devices do not perform model splitting or distributed inference in the strict sense. Instead, each edge node independently executes lightweight 2D pose estimation on its paired camera stream and transmits only the detected 2D keypoints to the central server. Because multi-view fusion is performed only once at the server side, this design naturally distributes the computational load: the edge devices handle dense per-frame inference, while the server performs triangulation, identity matching, and final 3D reconstruction. This architecture significantly reduces server-side processing requirements and removes the need for transmitting high-bandwidth video streams, enabling stable real-time operation even with multiple performers.

The following subsections describe the implementation details and specific roles of each subsystem.

4.1. Edge AI-Based 3D Motion Capture

To maintain focus on the cultural-heritage application, we provide here only a conceptual overview of the multi-view synchronization, PDJ evaluation, and depth-aided identity matching modules. The complete algorithmic specifications are detailed in our prior study [25], on which this system directly builds.

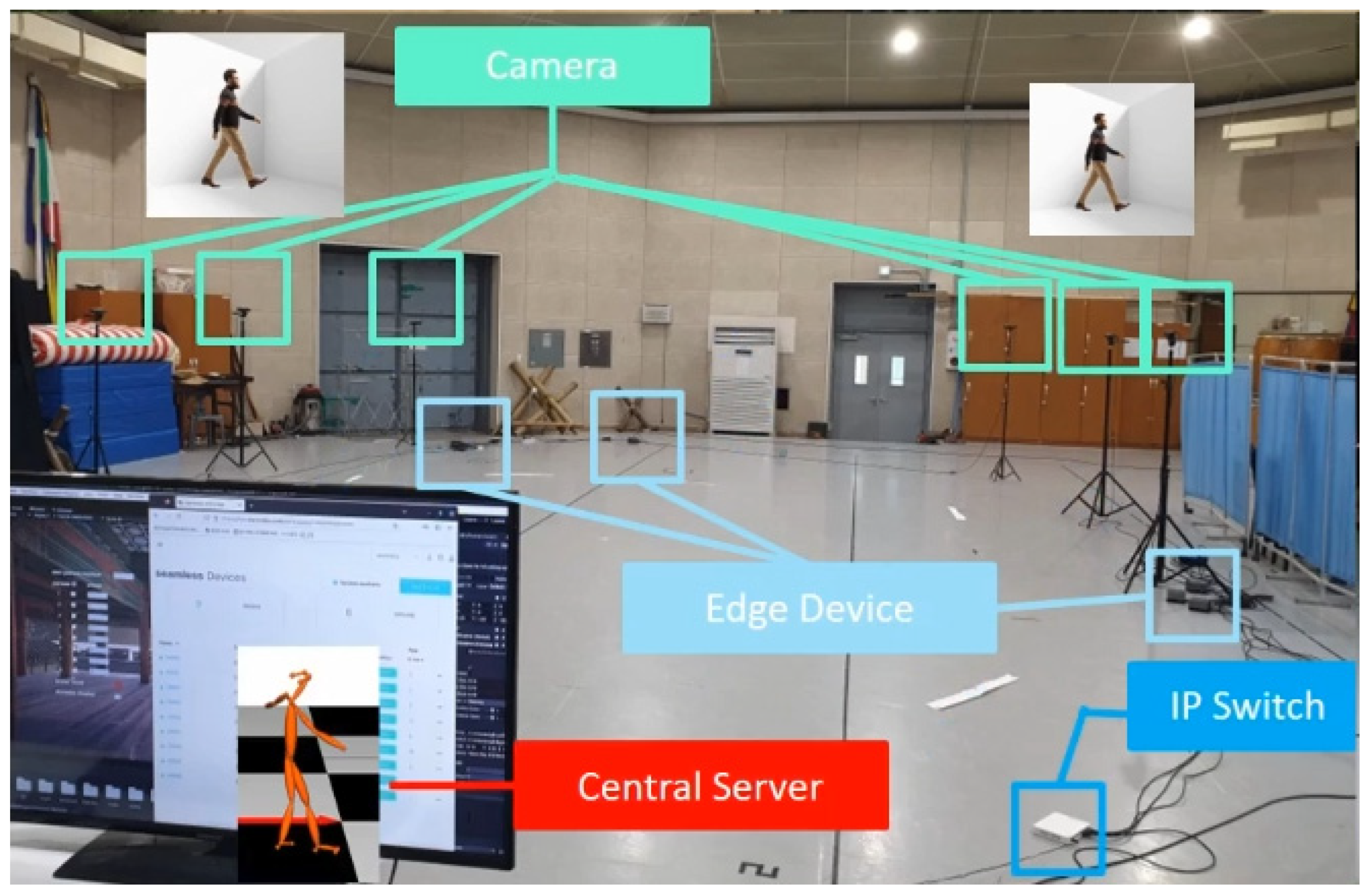

Each edge device independently performs 2D human pose estimation on its respective image input and forwards the resulting data to a centralized server, as illustrated in Figure 3. The n-th edge device transmits its 2D pose $\mathbf{p}_n$ and timestamp $t_n$ to the central server, where $\mathbf{p}_n$ consists of the 2D keypoint coordinates $\{u_n, v_n\}$ and confidence scores $\{c_n\}$ from the n-th camera. The central server collects $\{\mathbf{p}_1, \ldots, \mathbf{p}_N\}$, a set of 2D keypoint coordinates synchronized by timestamp, forming a synchronized group of 2D poses. It then reconstructs a 3D pose $\mathbf{P}_w = \{x_w, y_w, z_w\}$ with confidence score $\{c_w\}$ by applying geometric triangulation to this set of 2D human poses via the DLT method [6].
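The DLT step can be illustrated with a minimal NumPy sketch. It assumes that each camera is calibrated with a known 3×4 projection matrix and, as an optional heuristic not stated in the text, scales each view's equations by its confidence score. The two-camera setup in the usage lines is synthetic and purely illustrative.

```python
import numpy as np


def triangulate_dlt(projections, points_2d, weights=None):
    """Triangulate one 3D point from N >= 2 views with the linear DLT method.

    projections: list of 3x4 projection matrices, one per camera
    points_2d:   list of (u, v) pixel coordinates of the same keypoint
    weights:     optional per-view confidences used to scale each view's rows
                 (an illustrative heuristic, not necessarily the authors')
    """
    if len(projections) < 2:
        raise ValueError("DLT needs at least two views")
    if weights is None:
        weights = [1.0] * len(projections)
    rows = []
    for P, (u, v), w in zip(projections, points_2d, weights):
        P = np.asarray(P, dtype=float)
        # Each view gives two linear equations in the homogeneous point X.
        rows.append(w * (u * P[2] - P[0]))
        rows.append(w * (v * P[2] - P[1]))
    A = np.stack(rows)
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


# Synthetic example: two identity-intrinsic cameras 1 unit apart along x.
K = np.eye(3)
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
X_true = np.array([0.5, 0.2, 4.0, 1.0])
uv = [(p @ X_true)[:2] / (p @ X_true)[2] for p in (P1, P2)]
print(triangulate_dlt([P1, P2], uv))  # approximately [0.5 0.2 4. ]
```

With noisy detections from more than two views, the same linear system becomes overdetermined and the SVD returns the least-squares estimate, which is one reason multi-view triangulation tolerates occlusion in individual views.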

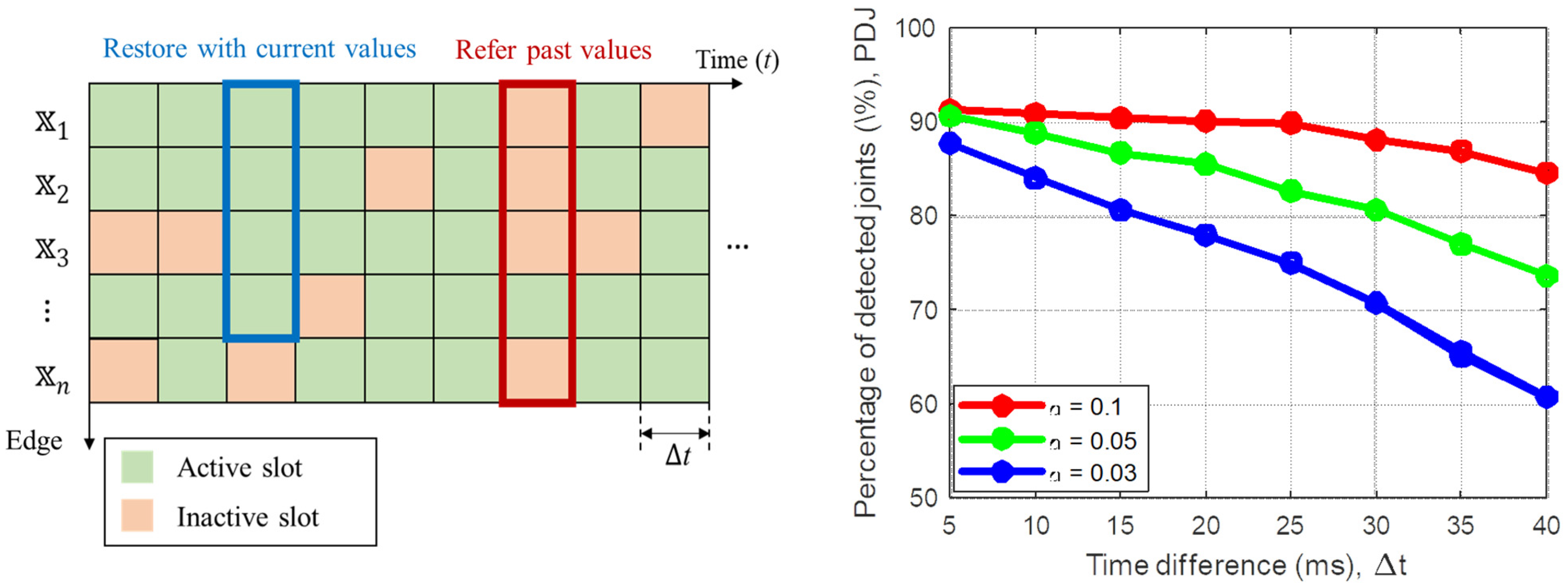

Synchronization of 2D poses from multiple edge devices is necessary because timestamps from independent devices are never perfectly aligned. To address this, data arriving within an acceptable error range Δt are considered co-occurring. In Figure 4 (left), green marks data received within the acceptable time window for each device, and red marks data falling outside it. DLT reconstruction requires at least two views; if fewer than two are received, the previous frame's values are used. In practice, this case is rare in the proposed distributed system because of the number of edge devices. The performance of the time synchronization algorithm is evaluated using the percentage of detected joints (PDJ) [8]:

$$\mathrm{PDJ} = \frac{1}{K}\sum_{i=1}^{K} B\left(\frac{d_i}{D} < \sigma\right) \times 100\%,$$

where $d_i$ is the Euclidean distance between the i-th predicted keypoint and the corresponding ground-truth keypoint, $D$ denotes the Euclidean length of the diagonal of the human body's 3D bounding box, $\sigma$ is a distance threshold used to verify the estimation accuracy, $K$ is the total number of keypoints, and $B(\cdot)$ is a Boolean function returning 1 if the condition is true and 0 otherwise.
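As a rough illustration, PDJ for a single 3D pose could be computed as below (returned as a fraction rather than a percentage). Interpreting the body-size normalizer as the diagonal of the ground-truth 3D bounding box is our assumption, and the four toy joints are invented for the example.

```python
import numpy as np


def pdj(pred, gt, sigma):
    """Fraction of joints whose error, normalized by body size, is below sigma.

    pred, gt: (K, 3) arrays of predicted and ground-truth joint positions
    sigma:    threshold on the normalized error
    The normalizer is taken as the diagonal of the ground-truth 3D bounding
    box (an assumption about how the body size is measured).
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    d = np.linalg.norm(pred - gt, axis=1)                 # per-joint error
    diag = np.linalg.norm(gt.max(axis=0) - gt.min(axis=0))
    return float(np.mean(d / diag < sigma))               # mean of the Boolean test


gt = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, 1.7], [0.4, 0.0, 1.0], [-0.4, 0.0, 1.0]])
pred = gt + np.array([[0.01, 0, 0], [0, 0, 0.05], [0.3, 0, 0], [0, 0, 0]])
print(pdj(pred, gt, sigma=0.05))  # 0.75: the 0.3 m error on joint 3 fails the test
```

Raising the threshold makes the criterion more lenient, which is how the curves in Figure 4 (right) can be shifted by adjusting detection performance.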

An appropriate Δt can be determined from the computer simulation results shown on the right side of Figure 4, which plot the PDJ against Δt for eight edge devices, with detection performance adjusted via the distance threshold. The simulations show that setting Δt to approximately 33 ms for a fixed 30 fps configuration achieves a PDJ of around 80%, whereas for a 60 fps configuration a Δt of about 20 ms yields a PDJ of nearly 90%. In practical field deployments, the output interval Δt should be configured according to factors such as the number of cameras and edge devices, edge processing speed, and network conditions, among other system parameters.
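The windowing and fallback rule described above might be sketched as follows. The message type `PoseMsg`, the helper names, and the choice of a half-open window (t_out − Δt, t_out] that keeps only the newest message per camera are illustrative assumptions rather than the exact algorithm of [25].

```python
from dataclasses import dataclass, field


@dataclass
class PoseMsg:
    camera_id: int
    timestamp: float                               # seconds, edge-side clock
    keypoints: list = field(default_factory=list)  # [(u, v, c), ...]


def collect_window(messages, t_out, delta_t):
    """Newest message per camera with t_out - delta_t < timestamp <= t_out."""
    latest = {}
    for msg in messages:
        if t_out - delta_t < msg.timestamp <= t_out:
            kept = latest.get(msg.camera_id)
            if kept is None or msg.timestamp > kept.timestamp:
                latest[msg.camera_id] = msg
    return sorted(latest.values(), key=lambda m: m.camera_id)


def views_for_frame(messages, t_out, delta_t, previous_views):
    """DLT needs at least two views; otherwise reuse the previous frame's views."""
    views = collect_window(messages, t_out, delta_t)
    return views if len(views) >= 2 else previous_views


msgs = [PoseMsg(0, 0.990), PoseMsg(1, 1.005), PoseMsg(2, 0.950), PoseMsg(0, 0.975)]
current = views_for_frame(msgs, t_out=1.010, delta_t=0.033, previous_views=[])
print([m.camera_id for m in current])   # [0, 1]
fallback = views_for_frame(msgs, t_out=1.010, delta_t=0.008, previous_views=current)
print(fallback is current)              # True: only camera 1 is inside 8 ms
```

A wider window admits more views per output frame (higher PDJ in the simulations) at the cost of mixing detections that are further apart in time, which is the trade-off behind choosing Δt per deployment.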

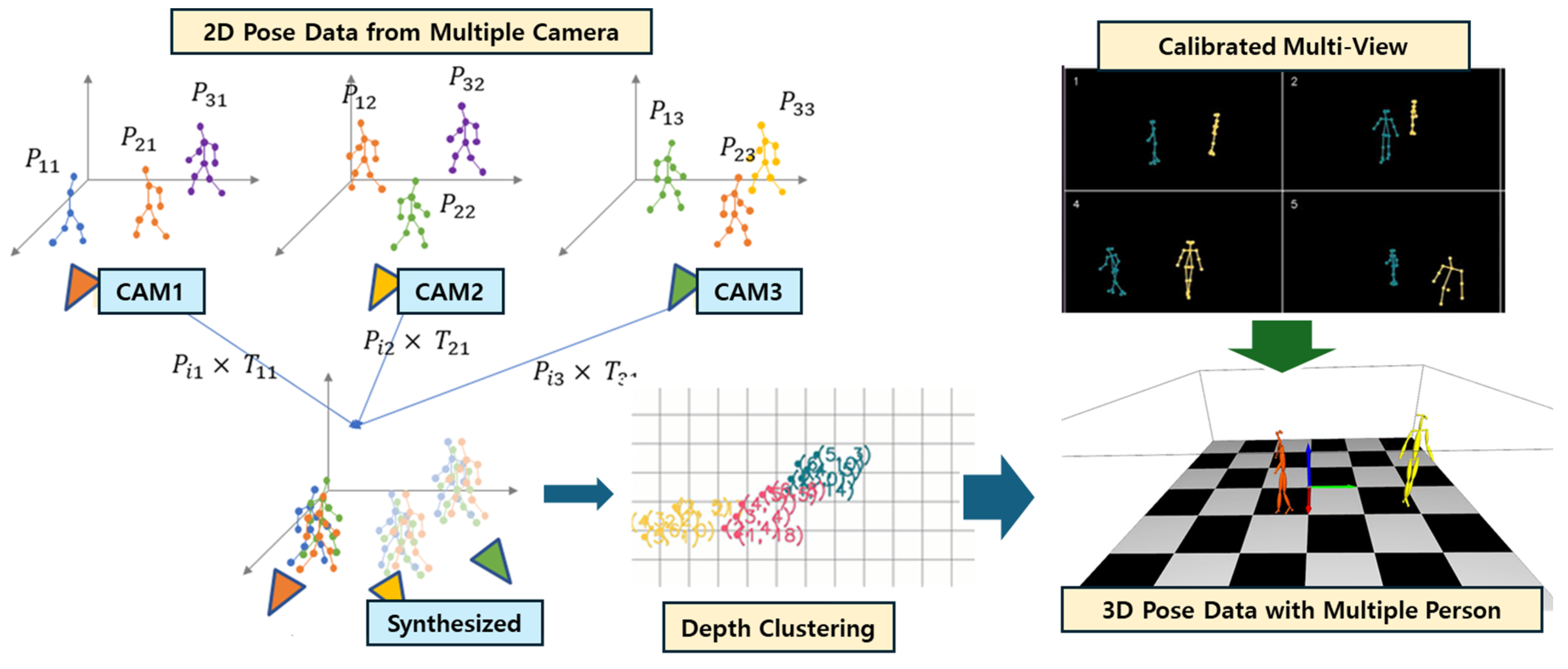

To distinguish multiple individuals, the central server matches 2D poses across views using depth-sensing results. The calibrated cameras are aligned to a common world plane, and the 2D poses from all views are grouped with a clustering algorithm, such as agglomerative clustering, that minimizes the Euclidean distances between their depth-based positions. Each resulting cluster corresponds to the 2D poses of the same individual across multiple views. Person matching is performed for every frame, as illustrated in Figure 5.
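A minimal sketch of cross-view person matching is shown below, assuming each detection has already been reduced to a 2D ground-plane position using depth. It applies centroid-linkage agglomerative clustering with a distance cutoff and forbids merging two detections from the same camera; the cutoff, the linkage choice, and the same-camera constraint are assumptions for illustration.

```python
import numpy as np


def cluster_people(positions, camera_ids, max_dist):
    """Group per-view detections into people by agglomerative clustering.

    positions:  (M, 2) ground-plane positions, one per detection
    camera_ids: camera index of each detection
    max_dist:   stop merging once the closest allowed pair is farther apart
    """
    pos = np.asarray(positions, dtype=float)
    clusters = [[i] for i in range(len(pos))]
    while True:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                cams_a = {camera_ids[i] for i in clusters[a]}
                cams_b = {camera_ids[i] for i in clusters[b]}
                if cams_a & cams_b:
                    continue  # one camera cannot see the same person twice
                d = np.linalg.norm(pos[clusters[a]].mean(axis=0)
                                   - pos[clusters[b]].mean(axis=0))
                if d <= max_dist and (best is None or d < best[0]):
                    best = (d, a, b)
        if best is None:
            return clusters
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]  # merge b into a (b > a)
        del clusters[b]


# Two people seen by three cameras; detections 0, 1, 4 are one person.
positions = [(0.0, 0.0), (0.05, 0.02), (1.5, 0.0), (1.48, 0.03), (0.02, -0.03)]
camera_ids = [0, 1, 0, 1, 2]
print(sorted(sorted(c) for c in cluster_people(positions, camera_ids, 0.3)))
# [[0, 1, 4], [2, 3]]
```

The same-camera constraint keeps two nearby dancers seen by one camera in separate clusters, which matters in tight ensemble formations.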

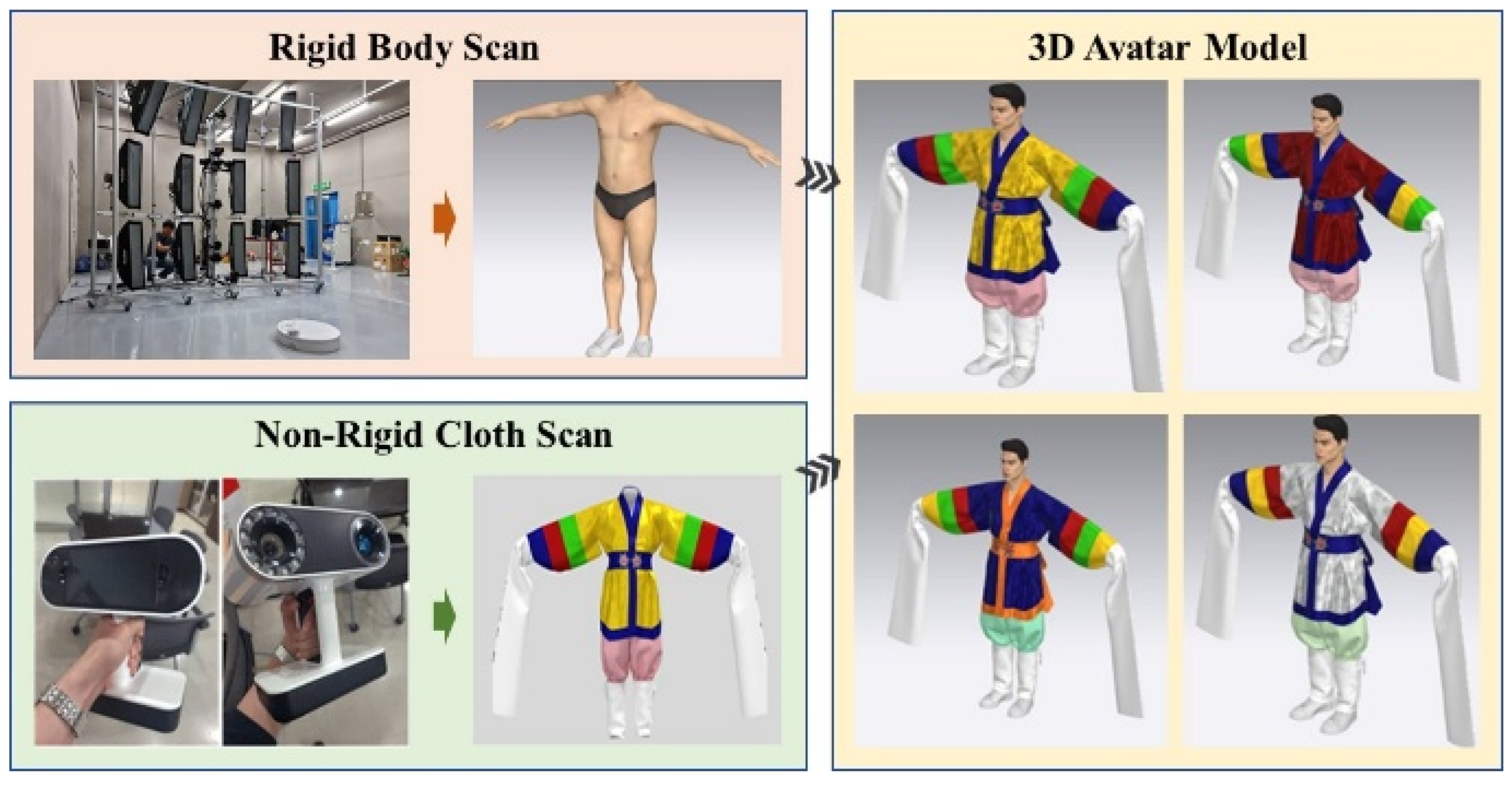

4.2. 3D Scan-Based Avatar Creation

The 3D reconstruction system, shown in Figure 6, uses multiple 8K DSLR cameras together with handheld scanners (Artec Leo) to capture high-resolution models of the performer, including the rigid body, clothing, and props. This system produces high-quality human model data with both precision and realism. Each component (body, costume, and props) is scanned individually. The subsequent processing includes cleanup, retopology, and rigging, so that data quality remains comparable to that of the real subject. Rigging allows the 3D model to integrate seamlessly with motion capture data, enabling dynamic and natural animation.

For soft-body components such as clothing, reconstructed models are simulated over the rigid body base. These non-rigid elements respond to captured motion data and are animated in real time using a physics engine, which calculates realistic fabric behavior within the virtual environment. This approach enhances visual realism and allows lifelike interactions between the avatar and its surroundings. While physical simulation is not the primary focus of this study, our implementation leverages Unity’s built-in physics material system to achieve real-time animation effects.

4.3. Real-Time Motion-to-Avatar Animation

The real-time motion-to-avatar animation module maps 3D skeletal data from the motion capture system onto the pre-modeled and rigged 3D avatars. This process generates natural, real-time animations for multiple performers simultaneously. To enhance realism, an inverse kinematics (IK) library is used: from the estimated 3D positions of key body parts such as the hands, feet, and elbows, the system infers plausible positions and rotations for the remaining joints, enabling smooth and physically plausible movement of multi-joint structures.

To satisfy real-time constraints, the IK solver operates within the rendering loop and is executed immediately after receiving updated 3D joint positions from the motion capture module. We adopt Unity’s lightweight IK library, which performs local joint optimization with a bounded number of iterations (typically 1–2 per frame). This design keeps the computation within a 3–5 ms per-frame budget, ensuring that avatar retargeting consistently runs at 60 fps without interrupting the overall streaming pipeline.
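To illustrate what bounded-iteration IK looks like, the sketch below uses FABRIK (Forward And Backward Reaching Inverse Kinematics), a well-known iterative solver. It is a stand-in chosen for illustration, not the Unity library used by the platform, and the shoulder-elbow-wrist chain and target are hypothetical.

```python
import numpy as np


def fabrik(joints, target, iterations=2, tol=1e-3):
    """FABRIK on a single chain with a fixed root and preserved bone lengths.

    joints: (J, 3) positions from root to end effector
    target: desired end-effector position
    iterations: hard cap on solver passes, mirroring a per-frame budget
    """
    p = np.array(joints, dtype=float)
    target = np.asarray(target, dtype=float)
    lengths = np.linalg.norm(np.diff(p, axis=0), axis=1)
    root = p[0].copy()
    reach = np.linalg.norm(target - root)
    if reach >= lengths.sum():
        # Unreachable target: stretch the chain straight toward it.
        direction = (target - root) / reach
        for i in range(1, len(p)):
            p[i] = p[i - 1] + lengths[i - 1] * direction
        return p
    for _ in range(iterations):
        # Backward pass: pin the end effector to the target.
        p[-1] = target
        for i in range(len(p) - 2, -1, -1):
            d = p[i] - p[i + 1]
            p[i] = p[i + 1] + lengths[i] * d / np.linalg.norm(d)
        # Forward pass: re-anchor the root and restore bone lengths.
        p[0] = root
        for i in range(1, len(p)):
            d = p[i] - p[i - 1]
            p[i] = p[i - 1] + lengths[i - 1] * d / np.linalg.norm(d)
        if np.linalg.norm(p[-1] - target) < tol:
            break
    return p


arm = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.6, 0.0, 0.0)]  # shoulder, elbow, wrist
print(fabrik(arm, target=(0.3, 0.3, 0.0)))
```

Capping the number of passes bounds the per-frame cost regardless of how far the target moves, at the price of occasionally leaving a small residual error that the next frame continues to reduce.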

To maintain consistency between captured motions and assigned avatars, each 3D joint sequence is assigned a unique ID during the motion capture stage. This ID ensures that motion data is correctly mapped to the corresponding avatar, preventing model-switching artifacts during animation.
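One simple way to keep IDs stable between frames, so that each skeleton keeps driving the same avatar, is greedy nearest-neighbor matching on a root joint. The `IdTracker` class, the 0.5 m jump limit, and the avatar names are hypothetical, and the platform's actual ID assignment may differ. Tracks not observed in a frame are simply dropped in this sketch, which a production system would likely handle more carefully.

```python
import itertools

import numpy as np


class IdTracker:
    """Assign persistent IDs to per-frame 3D skeletons by nearest root joint."""

    def __init__(self, max_jump=0.5):
        self.max_jump = max_jump           # max root displacement per frame (m)
        self.tracks = {}                   # id -> last root position
        self._next_id = itertools.count()

    def update(self, roots):
        roots = [np.asarray(r, dtype=float) for r in roots]
        # All (distance, detection, track) pairs, closest first.
        pairs = sorted(
            (float(np.linalg.norm(r - prev)), i, tid)
            for i, r in enumerate(roots)
            for tid, prev in self.tracks.items()
        )
        ids = [None] * len(roots)
        used = set()
        for dist, i, tid in pairs:
            if dist > self.max_jump:
                break
            if ids[i] is None and tid not in used:
                ids[i] = tid
                used.add(tid)
        for i in range(len(roots)):
            if ids[i] is None:
                ids[i] = next(self._next_id)  # new performer enters the scene
        self.tracks = {tid: roots[i] for i, tid in enumerate(ids)}
        return ids


avatars = {0: "avatar_A", 1: "avatar_B"}  # hypothetical ID-to-avatar binding
tracker = IdTracker()
print(tracker.update([(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]))   # [0, 1]
ids = tracker.update([(2.1, 0.0, 0.0), (0.05, 0.0, 0.0)])   # detection order swapped
print(ids, [avatars[i] for i in ids])  # [1, 0] ['avatar_B', 'avatar_A']
```

Because the avatar lookup is keyed on the tracked ID rather than on detection order, a change in the order in which skeletons arrive does not swap avatars between performers.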

As illustrated in Figure 7, virtual backgrounds and additional effects can be integrated during rendering. The final output delivers an immersive and visually rich 3D performance experience from dynamic camera perspectives, allowing end users to engage with the content with enhanced realism and spatial interaction.

5. Experimental Results

5.1. Environment Setup

We developed a real-time demonstration environment to validate the feasibility of the proposed platform. Since this study focuses on practical functionality in a live demonstration, standard pose-estimation benchmark datasets were not used; instead, results were obtained with performers recruited to perform in a real-world setting. As shown in Table 2 and Figure 8, the demonstration setup enabled real-time visualization of 3D pose estimation results on a display screen. Eight RGB cameras were connected to eight edge devices, each performing 2D pose estimation on single-view RGB images and outputting results with corresponding timestamps. All edge devices were linked to a central server via an IP switch over an Ethernet network. Because only pose data, not raw image data, were transmitted, network traffic was limited to a few hundred kbps. The central server aggregated the 2D pose data from all edge devices and reconstructed 3D poses at a constant rate of 60 fps. The process was monitored on-site in real time using GUI software (Unity 2023). The measured latency between the live scene and the GUI display was approximately 200 ms, providing sufficient responsiveness for an interactive experience.

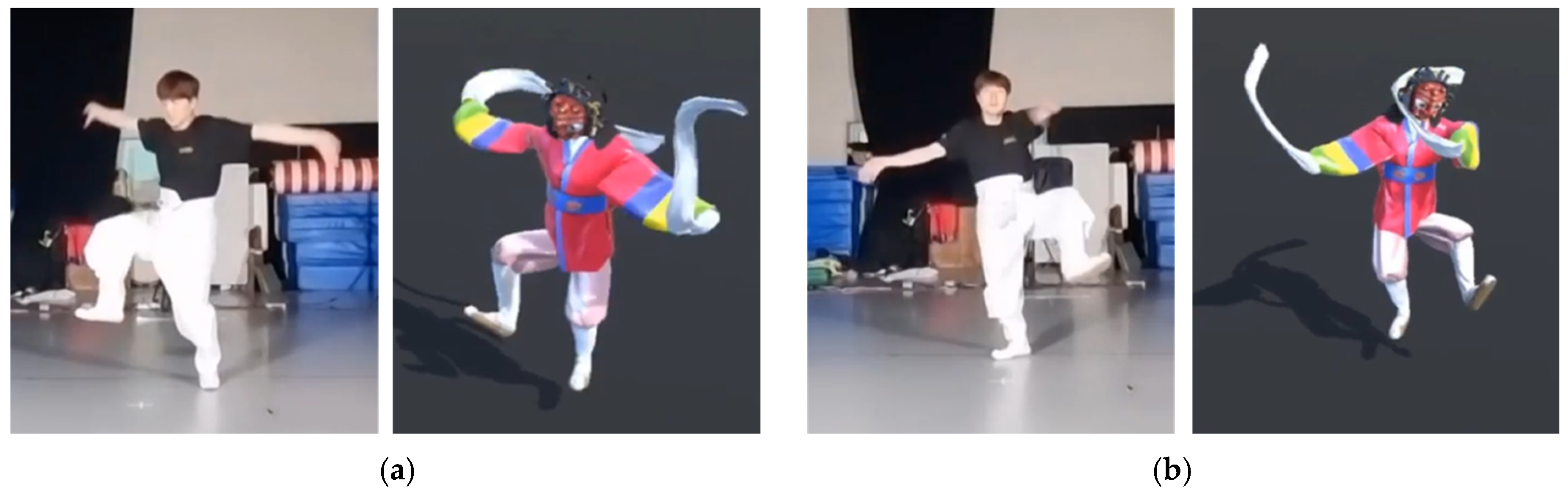

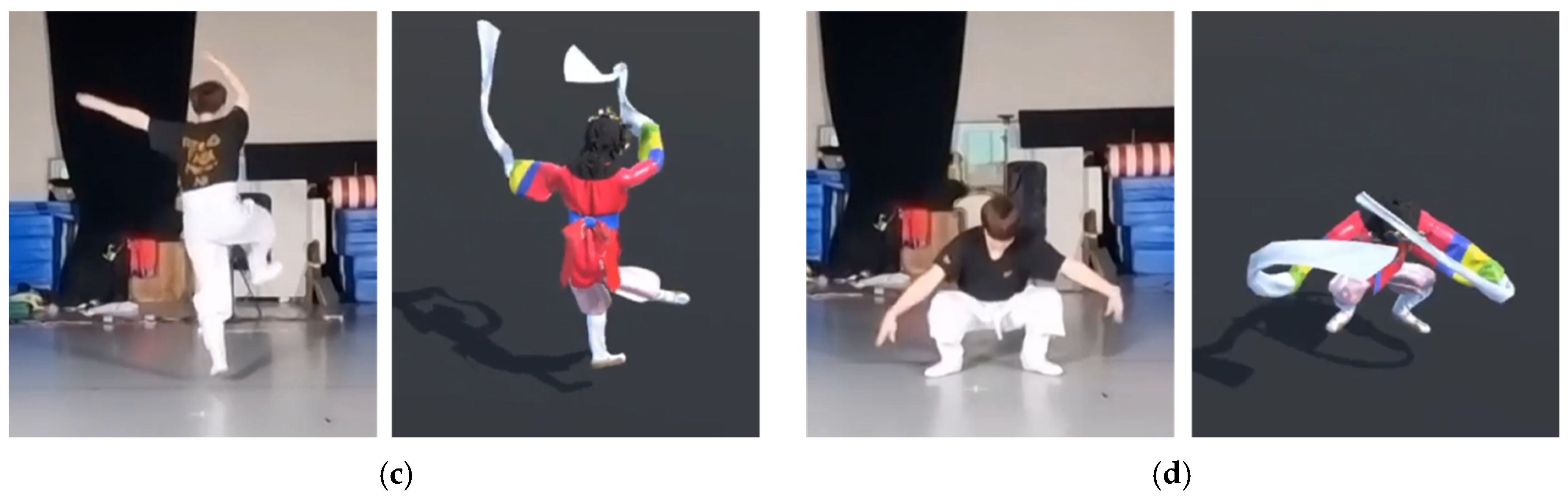

5.2. Real-Time Demonstration

An experiment was conducted with a dancing performer to evaluate whether various human actions could be reproduced in real time within the implemented demonstration environment. The goal was to determine whether the pre-modeled virtual avatar could accurately replicate the performer’s movements while providing an interactive experience. The results are shown in Figure 9. The avatar demonstrated high responsiveness and accurately expressed actions such as standing, looking forward, looking back, and sitting, confirming its suitability as part of a real-time live streaming platform.

Additional experiments involved multiple performers, with a group of eight individuals. A key objective was to assess whether the system could effectively handle occlusions between performers. Real-time virtual content was also generated with integrated background elements, illustrating the platform’s potential for expansion into broader content services, as shown in Figure 10.

The developed technology performs AI-based 3D performance generation by estimating performer poses and synthesizing 3D positions using only multi-camera RGB images. Compared with conventional motion capture systems, it may be slightly more costly and exhibit marginally lower fidelity, but it offers significant advantages in usability. Compared with volumetric systems, its visual restoration quality may be slightly lower, but it excels in cost efficiency and real-time processing. Considering factors such as mobility, responsiveness, and economic feasibility, this technology demonstrates strong potential for commercialization in real-time virtual performance content creation, including the preservation and digital presentation of traditional performances.

5.3. Performance Analysis

To contextualize the performance of the proposed framework, we compare it against two representative state-of-the-art approaches: ConvNeXtPose [18], a recent single-view 3D human pose estimation model, and GaussianAvatar [21], an advanced avatar-generation pipeline based on Gaussian Splatting. These models represent the dominant paradigms in (1) learning-based 3D pose estimation and (2) high-fidelity digital human reconstruction, respectively.

Table 3 summarizes the key differences in terms of input modality, latency, accuracy, real-time feasibility, and streaming capability.

Our system differs significantly from ConvNeXtPose, which supports real-time inference on a single GPU but lacks a full real-time, multi-view, multi-performer, streaming-capable pipeline. ConvNeXtPose operates on single-view RGB images and provides only inference-level performance; it does not include multi-camera synchronization, triangulation, identity preservation, or avatar retargeting, which are essential for ensemble dance capture. In contrast, the proposed framework performs distributed inference across synchronized edge devices, reconstructs multi-view 3D skeletons, and streams animation-ready motion data to the server in real time.

When compared with GaussianAvatar, our system provides complementary strengths. GaussianAvatar excels at generating high-quality static digital humans but relies on offline reconstruction, with reconstruction times on the order of several minutes. Although its rendering performance is high, the overall pipeline cannot support real-time cultural performance streaming or multi-performer interaction. The proposed system, by contrast, focuses on real-time operation: it achieves approximately 200 ms end-to-end latency, supports up to eight performers simultaneously, and maintains a stable 60 fps streaming rate. The current ~200 ms latency is adequate for real-time streaming, and future work will explore further reduction through network and pipeline optimization.

In terms of accuracy, the proposed system achieves 94.6–96.1% PDJ, which is comparable to modern learning-based approaches. Although ConvNeXtPose provides competitive accuracy in single-view scenarios, it generally underperforms in multi-person or multi-view conditions because it lacks geometric triangulation. Meanwhile, GaussianAvatar does not provide joint-level accuracy metrics because its objective is appearance reconstruction rather than pose accuracy. The accuracy of our method benefits from the multi-view triangulation module developed in prior work, which improves tolerance to occlusion—particularly important in Korean traditional dance, where wide sleeves, props, and overlapping arm motions are common. While commercial MoCap systems may show slightly higher accuracy, our multi-view RGB pipeline demonstrated stable performance for real-time streaming, with minor gaps arising mainly from sleeve occlusion and overlapping silhouettes. The measured PDJ scores indicate that the accuracy is sufficient for ensemble dance reconstruction, and further refinement under heavy occlusion is planned for future work.

Finally, the streaming capability of the proposed pipeline distinguishes it from both baselines. By transmitting only lightweight 2D keypoints from each edge device, the system maintains network bandwidth below 1 Mbps per performer, enabling stable real-time streaming even in multi-performer conditions. Neither ConvNeXtPose nor GaussianAvatar provides an end-to-end streaming architecture, making our method more suitable for live cultural-heritage performances, remote exhibitions, or virtual museum applications.

5.4. Technical Implications for Cultural-Heritage Preservation

The proposed framework offers several technical advantages that contribute directly to the preservation and digital transmission of intangible cultural heritage, particularly in the context of traditional Korean performing arts. Unlike conventional 3D capture pipelines—which primarily focus on single-performer motion acquisition or offline reconstruction—our system integrates real-time multi-view synchronization, multi-performer 3D motion reconstruction, and volumetric avatar–based retargeting to preserve cultural expressions with higher fidelity and contextual richness.

First, the real-time multi-view pipeline enables the preservation of embodied motion semantics, including subtle rhythmic variations, expressive upper-body gestures, and choreographic structures that define traditional dance idioms. Such motion-level information is often lost or simplified in single-view or offline systems, especially in performances involving wide sleeves, long silhouettes, or overlapping arm trajectories. By resolving depth ambiguity through synchronized triangulation, the system captures motion dynamics with greater robustness to occlusion—a critical requirement for documenting dances that incorporate large costume elements or handheld props.

Second, the framework allows the preservation of ensemble interactions, which are central to many traditional dance forms. Multi-performer tracking supports the reconstruction of group spacing, formation changes, and relational body movements, all of which constitute essential components of intangible cultural heritage. Existing pipelines seldom address these multi-person spatiotemporal relationships due to their reliance on offline volumetric processing or monocular pose estimation.

Third, the integration of high-fidelity volumetric avatar scans ensures that culturally meaningful costume characteristics—such as sleeve length, garment flow, and the silhouette of traditional attire—are reflected in the final virtual performance. Through physics-based simulation and avatar retargeting, the system preserves both the motion and the esthetic symbolism conveyed by traditional costumes.

Finally, the lightweight streaming architecture enables low-bandwidth, real-time dissemination of reconstructed performances, making the captured heritage accessible in remote or distributed environments such as museums, education centers, and virtual exhibitions. This contributes not only to archival preservation but also to broader public engagement and re-experiencing of traditional performances in immersive digital formats.

In the demonstrations conducted for this study, eight trained performers from a Korean traditional dance program participated in the evaluation. Their height range (158–176 cm) and experience levels (5–12 years) provided a realistic representation of typical ensemble choreography. The recorded sequences included basic steps, sleeve-driven arm motions, and short excerpts from commonly practiced dance routines. During these sessions, the system maintained stable performance, achieving an ID-matching accuracy of 97.2%, a frame-drop rate below 1.8%, and PDJ scores consistent with those presented in Section 5.3. These results indicate that the proposed pipeline remains reliable even in occlusion-prone multi-performer settings characteristic of traditional dance.

5.5. Cultural Authenticity and Ethical Considerations

To ensure that the proposed framework not only captures motion data but also preserves the cultural authenticity of traditional Korean performances, several measures were incorporated throughout the digitization and animation process. First, costumes and props—key elements that carry symbolic meaning in many traditional dance forms—were digitized using high-resolution 3D scanning. This allowed the system to faithfully reproduce the geometry, texture, and visual characteristics of garments such as long sleeves, layered fabrics, and ornamental accessories, all of which play an essential role in shaping the expressive qualities of Korean dance.

Second, culturally meaningful gestures were reviewed in close collaboration with professional traditional dancers. Particular attention was given to subtle but symbolically significant motions, including sleeve trajectories, fingertip articulation, and ritualized arm poses. During the retargeting stage, these gestures were prioritized to prevent semantic distortion, and motion sequences with cultural importance were archived together with metadata annotations. This ensures that the captured material can serve not only as a visual reproduction but also as a contextually interpretable cultural record for future researchers, curators, and educators.

From an ethical standpoint, all performers provided written informed consent prior to participating in the data acquisition process, and an anonymized version of the consent form has been submitted according to journal requirements. The collected data are used strictly for research and educational purposes, and participants retain the right to request data deletion or restricted access. Data ownership and usage conditions were discussed and agreed upon in advance, ensuring that the digitization of intangible cultural heritage respects both individual rights and the broader cultural value of the archived material.

6. Conclusions

The demand for live video streaming continues to grow, bringing increasing data traffic, rising network costs, and the technical challenge of delivering real-time performance. At the same time, interest in virtual content, such as VFX and motion capture, is expanding, creating new opportunities for immersive entertainment and interactive live experiences. This study presented a real-time live streaming framework that integrates motion capture and 3D reconstruction technologies. The developed platform leverages AI-based 3D performance generation, estimating human poses and synthesizing 3D positions using only multi-camera image input. The system was demonstrated with both single- and multi-performer scenarios, including traditional Korean dance, highlighting its potential for preserving and digitally presenting intangible cultural heritage in an interactive format.

While the system may incur slightly higher implementation costs and marginally lower accuracy compared to conventional commercial motion capture systems, it provides substantial advantages in usability, flexibility, and real-time responsiveness. Similarly, although reconstruction fidelity may not fully match that of volumetric systems, the platform excels in cost efficiency and practical real-time performance. Considering its scalability, responsiveness, and economic feasibility, the proposed framework offers a promising solution for commercializing real-time virtual performance content, with applications ranging from entertainment and education to the preservation and dissemination of traditional performing arts.

Looking ahead, two technical extensions represent promising directions for future work. First, integrating a lightweight real-time feedback interface—allowing performers to monitor their reconstructed motions during capture—could further enhance interactivity and improve motion fidelity. Second, adopting higher-level predictive models such as temporal networks or reinforcement-learning-based motion smoothing may yield more stable trajectories for complex or dynamic choreography, provided such methods can be incorporated without compromising real-time performance. These extensions will be explored as part of the next phase of system refinement.