1. Introduction

In today’s industry, manufacturers face the challenge of delivering high external variety to meet growing demands for customization [1]. This variety, while enhancing competitiveness, increases product complexity, understood here as the number of different product or component variants a company offers [2]. In the academic literature, product variety has been found to influence all types of costs across all life cycle phases [3,4,5]. It manifests in longer sales processes [6], more demanding engineering work [7], and less efficient production [8] and procurement [9].

To address this, research has focused on product architecture, defined by Fixson [10] as a comprehensive description of a bundle of product characteristics, including the number and type of components as well as the interfaces between them. A related concept is the product platform, which Ulrich [11] described as the set of assets shared by a family of products. Within these structures, modularity (the clustering of functions into modules with standardized interfaces) has been suggested as a way to reduce internal variety while still supporting external variety [12].

Studies indicate that modular product development can bring benefits such as reduced lead times [13], lower inventory levels [14], improved purchasing conditions [15], less sales cannibalization [16], and faster quotation and specification in the order process [15,17]. Yet, despite broad recognition of these advantages, industry adoption remains limited [12]. This is partly due to a lack of robust quantitative frameworks capable of measuring modularization effects across full product programs and organizational processes [3,12,18,19].

Design decisions made in product development are critical, influencing up to 70% of life-cycle costs according to some sources [20], and they shape how products are manufactured, sold, and serviced [10]. These decisions are costly to reverse [21,22], yet their broader impacts remain poorly understood [23,24]. A key challenge is the lack of structured data across the value chain, which makes it difficult to link product characteristics to activities such as engineering, procurement, or production preparation [25]. Traditional accounting schemes primarily emphasize direct labor and direct materials while overlooking overhead and cross-functional costs [26]. As a result, decision-makers often lack a holistic view, risking choices that seem efficient in isolation but generate complexity elsewhere in the organization [8,27].

Existing methods for evaluating modularization exhibit recurring limitations. Early frameworks mapped modularity’s benefits qualitatively, while later approaches introduced cost models such as activity-based costing (ABC) [18,28] and time-driven activity-based costing (TDABC) [3,26]. Yet these often emphasize production costs, rely on static comparisons, or neglect overhead and cross-functional activities. Life-cycle-oriented models [29,30] and complexity assessments [31] provide broader perspectives but depend heavily on historical data, subjective estimates, or incomplete links between activities and product structures. As a result, managers still lack robust, data-driven tools capable of dynamically capturing how modular product architectures affect costs and complexity across the assortment, life cycle, and company value chain [14,32].

To address this gap, this study develops and applies a data-driven framework for evaluating the effects of modular product architectures on company operations. The framework integrates qualitative process understanding with quantitative data extraction and analysis, operationalized through a structured methodology that combines semi-structured interviews, transactional data from enterprise resource planning (ERP) systems, and model-based simulations. In doing so, it identifies high-impact product areas where modularization can deliver the greatest time savings across relevant departments. Unlike traditional accounting schemes, which primarily capture direct labor and material, the framework enables the allocation of new cost pools such as engineering hours, procurement hours, and production preparation hours, overheads that were previously untraceable in the case company. Grounded in principles from TDABC [33], complexity management [2,4], and theory on the decomposition of technical systems [34], it constitutes a novel methodological synthesis that links activities throughout the company value chain directly to product and component complexity. By connecting data at the lowest possible level (e.g., components) while enabling bottom-up aggregation to modules, subsystems, or complete products or projects, the framework provides a flexible and dynamic basis for evaluating product structures across different configurations and supporting comprehensive comparisons at any desired level of detail [5,21,29]. The framework is validated through a case study of a company producing highly customized engineer-to-order (ETO) equipment, demonstrating its potential to inform design decisions, increase transparency regarding the impact of customizations, and support strategic product architecture planning.

2. State of the Art

A range of frameworks has been proposed to assess the economic and organizational consequences of modular product architectures, yet most suffer from limitations in scope, quantification, or generalizability. Early work emphasized qualitative mappings of modularity’s benefits, while more recent studies have sought to incorporate cost models and performance metrics.

Modularity, in this context, is characterized by functional independence, standardized interfaces, and defined interaction rules between components. These properties enable parallel development and product flexibility but also create opposing cost effects that are difficult to generalize due to interdependencies [14]. For instance, oversized modules may raise unit costs through higher material and labor requirements [15], yet these can be offset by economies of scale [35] and learning effects [36]. Likewise, modular product development often demands higher upfront engineering investment, but this can be compensated by reduced design effort for future variants [8]. Such trade-offs illustrate why the economic consequences of modularization remain complex to quantify and why more data-driven approaches are needed.

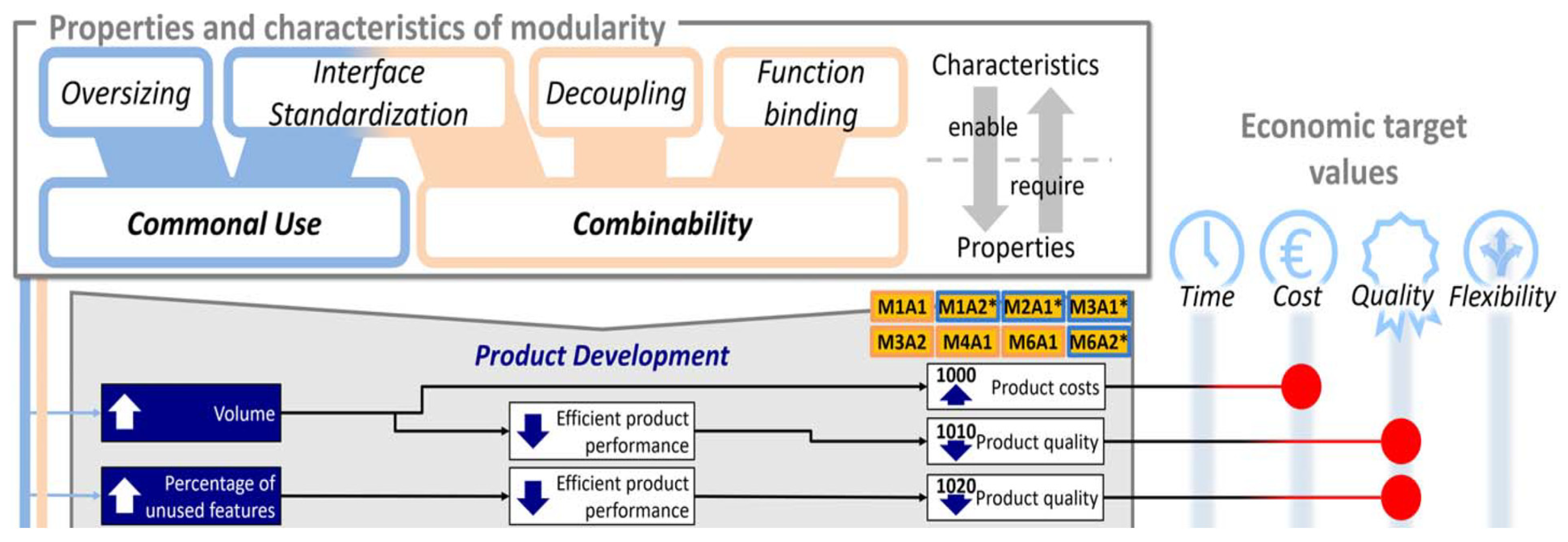

One prominent line of research is the Impact Model Framework (IMF) developed in [7] and later refined in [37]. The IMF connects modularity’s five generic design properties [38] with over 70 effect chains, linking them qualitatively to strategic outcomes such as cost, quality, and flexibility, as shown in Figure 1. More recent refinements [17,32] use model-based systems engineering approaches and add key performance indicators (KPIs) and various calculation methods, including TDABC and the critical path method [39], among others, extending the framework’s ability to address quantifiable effects dynamically across life cycle phases. However, the framework does not address integration with company processes and activities at different levels of the product structure (e.g., component, subassembly, or full product). This lack of insight limits the ability to identify which design decisions or parts of the product architecture generate additional costs through increased process complexity across the value chain [2].

Several attempts have been made to adapt ABC to modularization. Since individual product variants draw unevenly on resources across different functions in the value chain [28], traditional volume-based accounting methods with fixed overhead allocations will inevitably produce distorted cost figures [27]. ABC was developed to capture these cost differences more accurately by linking expenses to the activities that generate them [40]. A further advantage of activity-based approaches is their ability to trace resource consumption through activities tied to specific product configurations or modular design decisions, as demonstrated by the authors discussed in the following paragraph.

Thyssen et al. [18] used ABC to explore how far costs could rise before modular alternatives became uncompetitive, though the analysis mainly captured inventory effects and neglected uneven cost distributions across product variants. Park and Simpson [28,41] extended ABC to product families, introducing the concept of cost modularization. Later works highlighted its potential to restructure activity costs around modular design decisions [42]. While these studies advanced the link between activities and design, they largely restricted their focus to production costs and proved resource-intensive [43,44], motivating later adoption of TDABC for greater scalability [33,45]. Ripperda and Krause [3] and Mertens [26] integrated TDABC into modularization frameworks, offering more direct quantification of process costs. However, the approach in [3] is based on static “AS-IS” versus “TO-BE” comparisons. Since market conditions evolve over time, firms continuously introduce customizations in response [46]. Consequently, reliance on static inputs limits the framework’s ability to capture the effects of such ongoing changes [47]. Mertens [26] provides an elegant theoretical link between economic theory and modularization but does not clearly map the impact of variety on activities or address overhead costs across different product-structure levels.

Other frameworks highlight life cycle perspectives. Altavilla and Montagna [29] combined case-based reasoning, ABC, and parametric analysis to assess design impacts on life cycle costs, while Maisenbacher et al. [30] applied life cycle costing to platform alternatives. Altavilla and Montagna [29] highlight the importance of abstraction levels and propose a dynamic, adaptable model that incorporates the full life cycle. However, their reliance on historical cost data for similar products restricts applicability in heterogeneous, low-volume ETO contexts, where the product assortment may span a broad spectrum (from tanks to conveyors to lifts and so on) and sales frequencies are too low to support valid comparisons even when products are similar [48]. The fundamental limitation lies in the assumption of repeatable design and production cycles. In ETO systems, each order may constitute a one-off configuration [12], making it difficult to establish valid empirical baselines. Hence, life-cycle models must rely on estimation based on key design parameters rather than historical data, as commonality between orders may be too low, or orders too far apart, to justify using cost data from previous projects. Maisenbacher et al. [30], in turn, fail to link activities to the product structure, reducing their ability to identify specific effects of product and component commonality and variety on company processes [49]. Similarly, Wang and Zhang [50] quantified modularization effects in satellite production, but the link between customization, activities, and costs remained weak. Siddique and Adupala [51] also proposed the Product Family Architecture Index (PFAI); however, its emphasis on material-related factors left broader overhead impacts largely unaddressed.

Alternative methods such as target costing extensions [52] and complexity assessments [31] introduce valuable perspectives but still lack fine-grained connections between product variety, overhead activities, and resource consumption. Target costing provides useful managerial benchmarks but not predictive cost allocations [53], while the assessment in [31] relies solely on subjective employee estimates rather than combining them with transactional data.

Overall, the state of the art demonstrates a clear shift from qualitative mappings toward quantifiable, data-driven methods. Yet, as summarized in Table 1, most existing frameworks either focus narrowly on production costs, overlook overhead functions, fail to dynamically link product variety and modularity to company processes across the value chain, or are unsuitable for low-commonality contexts. This gap motivates the development of frameworks such as the one proposed in this study, which combines TDABC with product structures, product variety, and structured data integration to capture the systemic, quantifiable impacts of modular architectures in complex ETO environments.

Conceptually, the framework extends previous TDABC-based modularization approaches by linking product architecture complexity directly to organizational resource consumption. While earlier models applied TDABC within predefined functional domains, the present framework integrates decomposition theory and complexity management to connect cost drivers to architectural attributes rather than static activities. This shift from a function-centered to a structure-centered representation of cost causality advances theoretical understanding of how modular architectures propagate complexity-induced costs across the value chain and product assortment.

3. Methodology

The proposed framework provides a systematic basis for evaluating the impact of modularization across an entire product program. Instead of focusing on individual product variants, it combines qualitative process knowledge with quantitative data to trace how product variation affects activities and resource consumption across the value chain. A key feature is its ability to integrate data from multiple sources and represent them at different levels of granularity. This allows aggregation from individual components to modules, subsystems, complete products, or entire projects [5,21]. By linking operational data with process insights, the framework enables data-driven decision-making and supports transparent evaluations of trade-offs between customization, complexity, and efficiency [54].

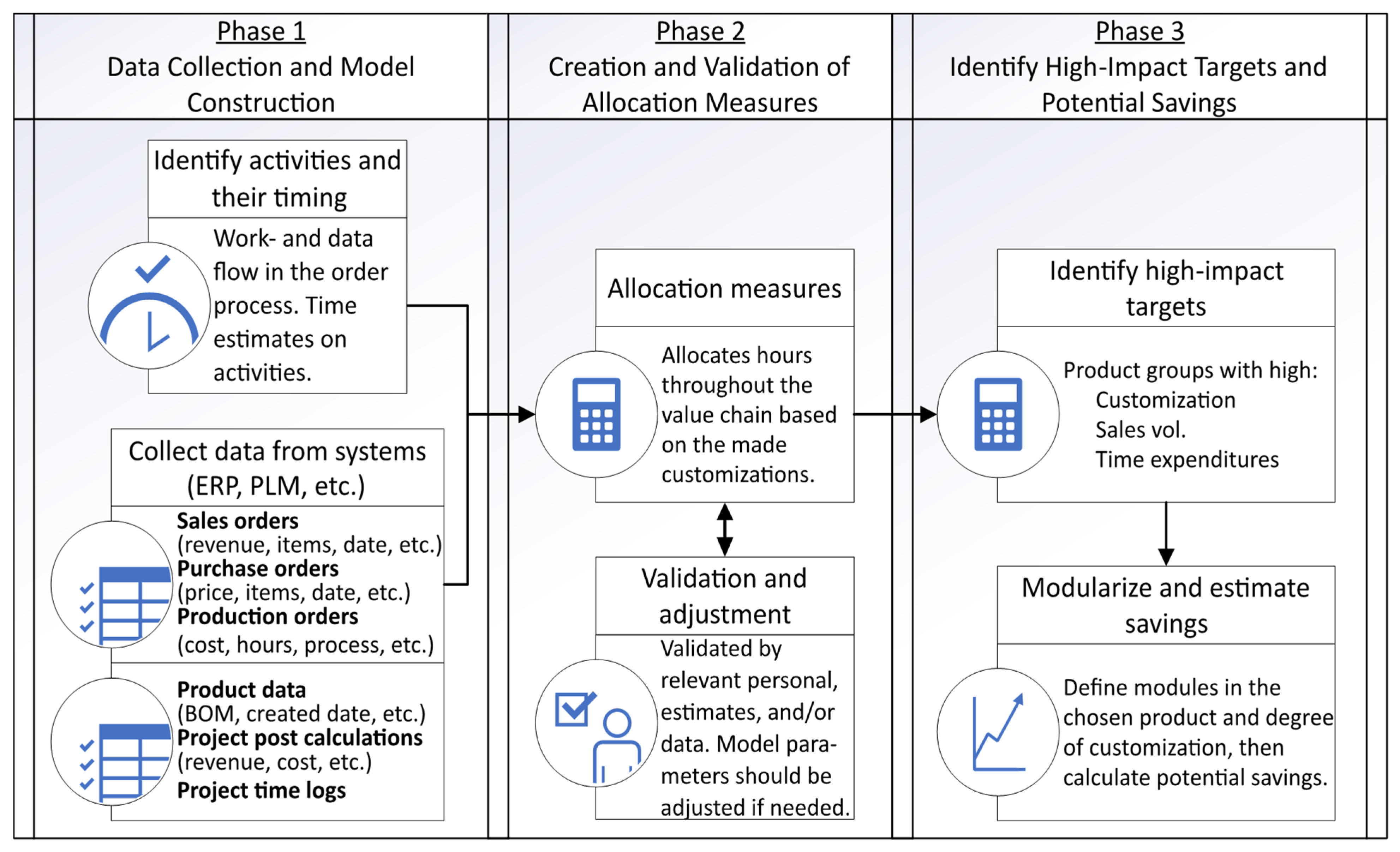

The framework is operationalized through a structured, three-phase methodology typical of case research [55], as seen in Figure 2:

- (1) Data collection and model construction
- (2) Creation and validation of allocation measures
- (3) Identify high-impact targets and potential savings

Each phase involves multiple iterations—for example, during data collection and model construction, it may become necessary to identify and integrate additional ERP tables or data sources before the model can be finalized. However, once a phase reaches completion (e.g., the data model is fully constructed), the subsequent phase can proceed with minimal need to revisit earlier steps. In this sense, each phase is considered closed once it is sufficiently complete to enable progression to the next.

3.1. Phase 1—Data Collection and Model Construction

The process begins with a scoping exercise aimed at understanding the company’s product program, key processes, and main sources of product and process complexity. This is achieved through:

- Semi-structured interviews [56] with stakeholders across multiple functions (e.g., sales, engineering, production, procurement, installation, after-sales, accounting, IT). These should be performed by multiple investigators, as convergence of observations increases confidence in the findings [55].
- Interviews are designed to capture:
    - Typical workflows and activity sequences in the order fulfillment process, as found in methodologies like TDABC [33]
    - Types of data used and generated at each stage
    - Information handovers between departments
    - Estimates of time spent on recurring activities

This phase applies the principles of TDABC, where time estimates for activities are used to allocate resource consumption. According to its developers, the method is easy to manage, can be integrated with transactional ERP and customer relationship management systems, and can scale to millions of transactions [33]. Additionally, this phase should build a shared process map that highlights where customizations and handovers occur and where product or part variety affects the process. These points indicate where standardization or modularization could have the largest effect, since modularity can turn the negative cost effects of variety into positive ones, at least on a qualitative basis [3]. The operational understanding gained here is then aligned with actual transactional and master data from the company’s information systems. Gathering data from multiple sources within the company can increase the reliability and validity of the findings [55].
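The TDABC logic referred to above can be illustrated with a minimal Python sketch: a department’s capacity cost rate is its cost of capacity supplied divided by its practical capacity, and each activity’s duration follows a simple linear time equation in its cost drivers. The class and function names, the driver `order_lines`, and all figures are hypothetical placeholders rather than data from the case company.

```python
from dataclasses import dataclass


@dataclass
class Department:
    """A resource pool whose cost is allocated by time (hypothetical figures)."""
    name: str
    cost_of_capacity: float      # cost of resources supplied per period (currency units)
    practical_capacity_h: float  # practical capacity per period, in hours

    @property
    def capacity_cost_rate(self) -> float:
        # TDABC capacity cost rate: cost per hour of practical capacity
        return self.cost_of_capacity / self.practical_capacity_h


def activity_time_h(base_min: float,
                    drivers: dict[str, float],
                    increments_min: dict[str, float]) -> float:
    """Linear time equation: base time plus a per-unit increment for each driver.

    Inputs are in minutes; the result is returned in hours.
    """
    minutes = base_min + sum(increments_min.get(name, 0.0) * value
                             for name, value in drivers.items())
    return minutes / 60.0


# Hypothetical example: a procurement department and one purchase order
procurement = Department("procurement", cost_of_capacity=450_000.0, practical_capacity_h=4_500.0)
po_hours = activity_time_h(base_min=10.0, drivers={"order_lines": 4}, increments_min={"order_lines": 5.0})
po_cost = po_hours * procurement.capacity_cost_rate
print(f"{po_hours:.2f} h -> {po_cost:.2f}")
```

In an application of the framework, the time-equation parameters would come from the interview estimates and the driver values from the transactional tables; the sketch only shows how the two combine.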

Data Sources

Relevant datasets are identified and extracted from enterprise databases (e.g., ERP or product lifecycle management systems). These typically include:

- Fact tables: Tables where each row represents a discrete business event or measurement, typically identified by foreign keys to dimensions [57,58] (e.g., production orders, purchase order lines, sales transactions, time logs, post-calculation records). These tables store quantitative, event-driven data such as hours, costs, quantities, or revenues.
- Dimension tables: Tables where each row represents a distinct entity or descriptive attribute set, uniquely identified by a primary key (e.g., item master data, project master data, bill of material (BOM) structures, product specifications), which is used by fact tables to establish relationships [58]. These hold relatively stable or slowly changing attributes that provide context for analyzing the facts.

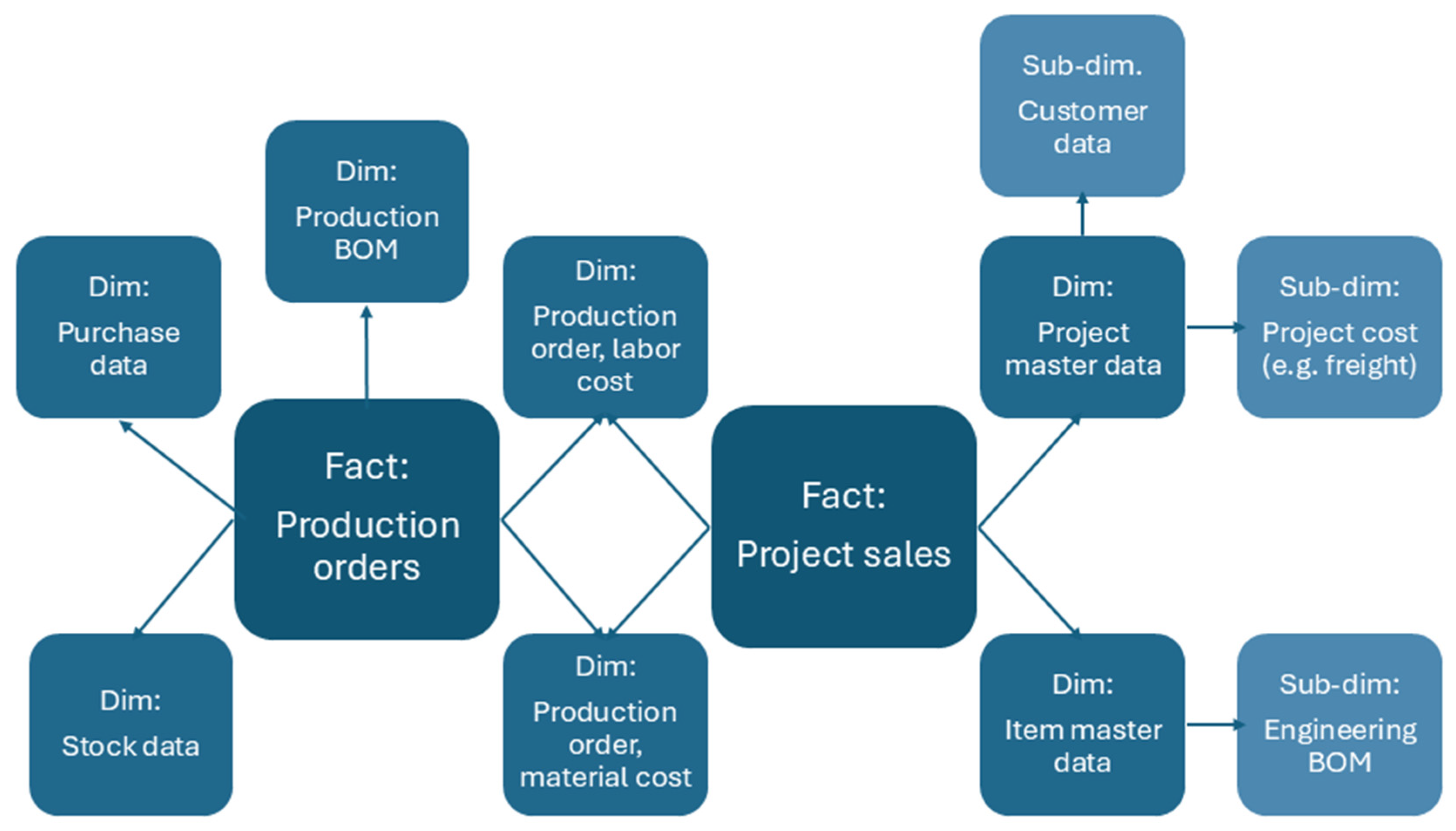

The relevant tables are then organized following a relational data warehouse design, in which multiple fact tables are linked through a shared set of normalized product, project, and BOM dimensions, as found in star, snowflake, or starflake schemas [58,59], as seen in Figure 3. Data architectures may vary greatly between companies depending on their existing data setup. Snowflake and starflake schemas are particularly beneficial when the data sources are complex, the hierarchies (component, module, product, etc.) are deep, and the dimension tables are large [60].
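The sketch below shows, under stated assumptions, how such a schema lets facts be aggregated along a normalized product hierarchy. It uses Python’s built-in `sqlite3` module and an in-memory database; the table and column names (`dim_module`, `dim_item`, `dim_project`, `fact_purchase_line`) and all rows are hypothetical and far simpler than a real ERP extract. The item dimension is normalized into a separate module dimension, which is what makes it snowflake-like.

```python
import sqlite3

# In-memory database with a small snowflake-style schema (hypothetical names)
con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE dim_module  (module_id TEXT PRIMARY KEY, product_group TEXT);
CREATE TABLE dim_item    (item_id TEXT PRIMARY KEY,
                          module_id TEXT REFERENCES dim_module(module_id));
CREATE TABLE dim_project (project_id TEXT PRIMARY KEY, year INTEGER);
CREATE TABLE fact_purchase_line (
    line_id    INTEGER PRIMARY KEY,
    project_id TEXT REFERENCES dim_project(project_id),
    item_id    TEXT REFERENCES dim_item(item_id),
    quantity   REAL,
    cost       REAL
);
""")
con.executemany("INSERT INTO dim_module VALUES (?, ?)",
                [("M-SHELL", "Drying drum"), ("M-BURNER", "Drying drum"), ("M-BAG", "Filter")])
con.executemany("INSERT INTO dim_item VALUES (?, ?)",
                [("I1", "M-SHELL"), ("I2", "M-BURNER"), ("I3", "M-BAG")])
con.executemany("INSERT INTO dim_project VALUES (?, ?)", [("P1", 2019), ("P2", 2021)])
con.executemany("INSERT INTO fact_purchase_line VALUES (?, ?, ?, ?, ?)",
                [(1, "P1", "I1", 2, 1000.0), (2, "P1", "I3", 1, 500.0),
                 (3, "P2", "I2", 1, 800.0), (4, "P2", "I3", 4, 2000.0)])

# Aggregate facts up the normalized item -> module -> product group hierarchy,
# restricted to projects within a chosen period
rows = con.execute("""
    SELECT m.product_group, COUNT(*) AS n_lines, SUM(f.cost) AS total_cost
    FROM fact_purchase_line AS f
    JOIN dim_item    AS i ON f.item_id = i.item_id
    JOIN dim_module  AS m ON i.module_id = m.module_id
    JOIN dim_project AS p ON f.project_id = p.project_id
    WHERE p.year BETWEEN 2018 AND 2023
    GROUP BY m.product_group
    ORDER BY m.product_group
""").fetchall()
print(rows)
```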

3.2. Phase 2—Creation and Validation of Allocation Measures

The data model estimates departmental time expenditures attributable to specific products or modules. It enables the direct allocation of overhead cost pools to the product structure, a feature not possible in traditional volume-based costing [26,27]. For each overhead function of interest (e.g., engineering, procurement, production preparation, sales, or others), the model generates allocation measures by combining:

- Time estimates from interviews
- Transactional records from relevant tables (e.g., BOM changes, purchase order counts, items specified in sales orders)

The model applies a hierarchical product breakdown based on the theory of decomposition of technical systems [34], linking data from interviews and transactional records at the lowest possible level (e.g., components) while allowing bottom-up aggregation to modules, subsystems, or full products or projects. This structure ensures flexibility in evaluating products across different configurations and supports comprehensive comparisons at any desired level of detail [5,21].
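A minimal sketch of the bottom-up aggregation, assuming the product breakdown is available as a tree whose leaves carry departmental hours already allocated at component level. The `Node` structure and the example hours are hypothetical; the sketch also ignores BOM quantities and treats a component shared by several modules as separate occurrences, both simplifications a real model would need to revisit.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One element of the product breakdown (hypothetical structure)."""
    name: str
    level: str                                                 # e.g. "component", "module", "product"
    own_hours: dict[str, float] = field(default_factory=dict)  # hours linked directly to this node
    children: list["Node"] = field(default_factory=list)


def rollup(node: Node) -> dict[str, float]:
    """Aggregate departmental hours bottom-up from the leaves to `node`."""
    totals = dict(node.own_hours)
    for child in node.children:
        for dept, hours in rollup(child).items():
            totals[dept] = totals.get(dept, 0.0) + hours
    return totals


c1 = Node("shell plate", "component", {"engineering": 2.0, "procurement": 0.5})
c2 = Node("support ring", "component", {"engineering": 1.5})
c3 = Node("burner unit", "component", {"engineering": 4.0, "procurement": 1.0})
shell = Node("shell module", "module", children=[c1, c2])
burner = Node("burner module", "module", children=[c3])
drum = Node("drying drum", "product", children=[shell, burner])

print(rollup(shell))  # module level
print(rollup(drum))   # product level
```

Because the same function is applied at every node, the breakdown can be evaluated at whichever level (component, module, product) a given comparison requires.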

Validation

Where time logs exist (e.g., engineering hours), estimates are validated by:

- Comparing predicted vs. reported hours
- Accounting for known uncertainty in reported values
- Excluding projects with implausibly low or zero hours to avoid skewing statistics

Validation results are summarized across projects, products, or orders. It may be necessary to categorize projects or products, for instance by size, functionality, or processes, to ensure comparability and to reveal where the model performs well and where it requires refinement. The purpose of the model is not to deliver exact cost figures but to provide approximate, directional insights that support decision-making in early stages, as emphasized by previous works [11].
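The validation steps and the categorization of projects can be sketched as follows. The relative error is taken as |predicted − reported| / reported; the default margin (±20%) and the exclusion of projects with fewer than 10 reported hours or zero predicted hours follow the thresholds reported for the case in Section 4.3, while the function name, project records, and size labels are invented for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class ProjectHours(NamedTuple):
    project_id: str
    predicted: float   # model estimate
    reported: float    # hours from time logs
    category: str      # e.g. size class (hypothetical labels)


def validate_by_category(projects, margin=0.20, min_reported=10.0):
    """Share of estimates within +/- margin and mean relative error per category.

    Projects with reported hours below `min_reported` or zero predicted hours
    are excluded before any statistics are computed.
    """
    errors = defaultdict(list)
    for p in projects:
        if p.reported < min_reported or p.predicted <= 0:
            continue
        errors[p.category].append(abs(p.predicted - p.reported) / p.reported)
    return {
        cat: {
            "n": len(errs),
            "share_within": sum(e <= margin for e in errs) / len(errs),
            "mean_rel_error": sum(errs) / len(errs),
        }
        for cat, errs in errors.items()
    }


sample = [
    ProjectHours("P1", 110, 100, "large"),
    ProjectHours("P2", 150, 100, "large"),
    ProjectHours("P3", 40, 5, "small"),    # excluded: fewer than 10 reported hours
    ProjectHours("P4", 0, 50, "small"),    # excluded: zero predicted hours
    ProjectHours("P5", 30, 20, "small"),
    ProjectHours("P6", 90, 100, "large"),
]
print(validate_by_category(sample))
```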

When applying the model in different contexts (e.g., companies), some adaptation is to be expected, as each company has distinct processes, data structures, and priorities [14]. In the case presented here, the critical measure was engineering hours, but in other companies a different KPI (e.g., on-site installation hours) may attract the most interest, and the model should be adapted accordingly. Results are validated against multiple sources, such as relevant personnel at the company, time logs, and reference measures such as the total annual work hours of a full-time employee, team, or department. Using multiple sources enables triangulation, which can strengthen construct validity [55].

Despite these validation steps, certain sources of bias and uncertainty remain. Reported time logs may not fully capture all hours spent on specific activities, and interview-based estimates are subject to recall and interpretation bias [61]. Differences in data granularity between ERP tables, project documentation, and time logs can also introduce inconsistencies in allocation results. To mitigate these effects, the study applies triangulation across multiple data sources and cross-verifies key figures with company personnel, thereby increasing the robustness of the results [55].

3.3. Phase 3—Identify High-Impact Targets and Potential Savings

Once allocation measures are in place, the model is used to simulate the impact of introducing modular architectures. The aim at this stage is to identify product areas with the greatest potential for savings through modularization. Specifically, attention is directed toward product groups or subsystems characterized by:

- High customization levels [62], indicating substantial design variety and engineering effort
- High frequency in sales orders [63], ensuring that standardization or automation efforts will have tangible impact
- High cumulative time expenditures, reflecting resource-intensive variety
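One simple way to combine the three criteria, offered here as an assumption rather than the paper’s scoring rule, is to treat cumulative time expenditure as order frequency multiplied by the average customization-driven hours per order and to rank product groups by that value, optionally dropping rarely sold groups. The group names and figures below are invented and do not correspond to the case company’s confidential data.

```python
from dataclasses import dataclass


@dataclass
class ProductGroup:
    name: str
    orders: int               # sales orders containing the group (frequency)
    avg_custom_hours: float   # average customization-driven hours per order, all departments

    @property
    def cumulative_hours(self) -> float:
        # Frequency x customization effort = cumulative time expenditure
        return self.orders * self.avg_custom_hours


def rank_targets(groups, top_n=3, min_orders=1):
    """Rank product groups by cumulative hours, ignoring rarely sold groups."""
    eligible = [g for g in groups if g.orders >= min_orders]
    return sorted(eligible, key=lambda g: g.cumulative_hours, reverse=True)[:top_n]


groups = [
    ProductGroup("A", orders=40, avg_custom_hours=60.0),
    ProductGroup("B", orders=30, avg_custom_hours=50.0),
    ProductGroup("C", orders=45, avg_custom_hours=10.0),
    ProductGroup("D", orders=10, avg_custom_hours=80.0),
]
for g in rank_targets(groups, top_n=2):
    print(g.name, g.cumulative_hours)
```

Group C illustrates the second criterion on its own: it is sold often, but its low customization effort keeps its cumulative hours small.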

Once the high-impact targets are identified, the product’s structure should be decomposed into modules based on functions, customer requirements, or manufacturing constraints [64]. Several methods exist for achieving this, including those proposed by Lehnerd and Meyer [65], Krause [66], Ericsson and Erixon [36], Simpson et al. [67], and Harlou [64].

Once the BOMs for the modules are determined, the model can carry out its calculations. However, this is a very detailed level of information, and in development projects it may not be available until late in the design process, particularly since development times for modular product architectures are known to be long [68,69]. Alternatively, an architecture can be simulated based on the existing product structures by assuming substitution where module or subassembly variants are very similar in functionality, customer requirements, or technical requirements. This allows early-stage estimation of an architecture’s impact on the value chain when available information is sparse [70].
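The substitution approach can be sketched as follows, assuming that the first occurrence of a variant incurs its full design hours and every later occurrence only a flat reuse effort. Mapping a variant onto a similar standard variant then turns some new designs into reuses, and the difference from the baseline approximates the time saving. The function name, variant IDs, and hour values are hypothetical.

```python
def engineering_hours(order_lines, design_hours, reuse_hours, substitution=None):
    """Total hours for a sequence of ordered variants.

    The first occurrence of a variant costs its full design hours; later
    occurrences are treated as reuse. `substitution` maps a variant onto the
    standard variant assumed to replace it in the simulated architecture.
    """
    substitution = substitution or {}
    seen = set()
    total = 0.0
    for variant in order_lines:
        variant = substitution.get(variant, variant)
        if variant in seen:
            total += reuse_hours
        else:
            total += design_hours[variant]
            seen.add(variant)
    return total


orders = ["V1", "V2", "V3", "V1"]              # variants in chronological order (hypothetical)
design = {"V1": 40.0, "V2": 35.0, "V3": 50.0}  # hours to engineer each variant the first time
baseline = engineering_hours(orders, design, reuse_hours=5.0)
scenario = engineering_hours(orders, design, reuse_hours=5.0, substitution={"V2": "V1"})
print(baseline, scenario, baseline - scenario)
```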

With modules identified and BOMs defined, the model can estimate the potential departmental time savings for each module and in total. The model can also show the effects of increased variety or customization throughout the value chain. The output supports strategic modularization decisions by indicating where standardization could yield the greatest benefit within the scope of the analysis.

3.4. Novelty and Practical Application of the Methodology

The developed methodology integrates qualitative process understanding with quantitative data extraction and analysis, combining semi-structured interviews, transactional ERP data, and model-based simulations to identify high-impact product areas where modularization can deliver the greatest time savings across the value chain. Building on TDABC, decomposition theory, and complexity management, the methodology operationalizes a structured framework that links product variety and complexity in order processes to resource consumption at the component level, while supporting aggregation to modules, subsystems, complete products, and entire projects. This flexibility allows decision-makers to evaluate modularization effects at multiple levels of decomposition—an ability rarely achieved in existing frameworks. Moreover, by integrating data from diverse sources and representing them at varying levels of granularity, the methodology incorporates a data model that enables data-driven, quantifiable measures to evaluate alternative proposals, prioritize resources, and improve planning capabilities. As demonstrated in the case company, the methodology not only identifies where modularization can yield the greatest impact but also supports early estimation of time expenditures, enhances transparency regarding customization-driven costs, and enables more informed pricing and product architecture decisions.

4. Case Study Application

To demonstrate the practical applicability of the approach, this section presents its application in the case company and outlines the key findings from the analysis. The results are structured to first introduce the company background, then describe the data sources, model construction, and the development and validation of allocation measures, before presenting the identified high-impact targets and potential savings. All results and interpretations were reviewed and validated with relevant stakeholders at the case company, ensuring both the accuracy of the findings and their practical relevance for decision-making.

4.1. Case Company Background

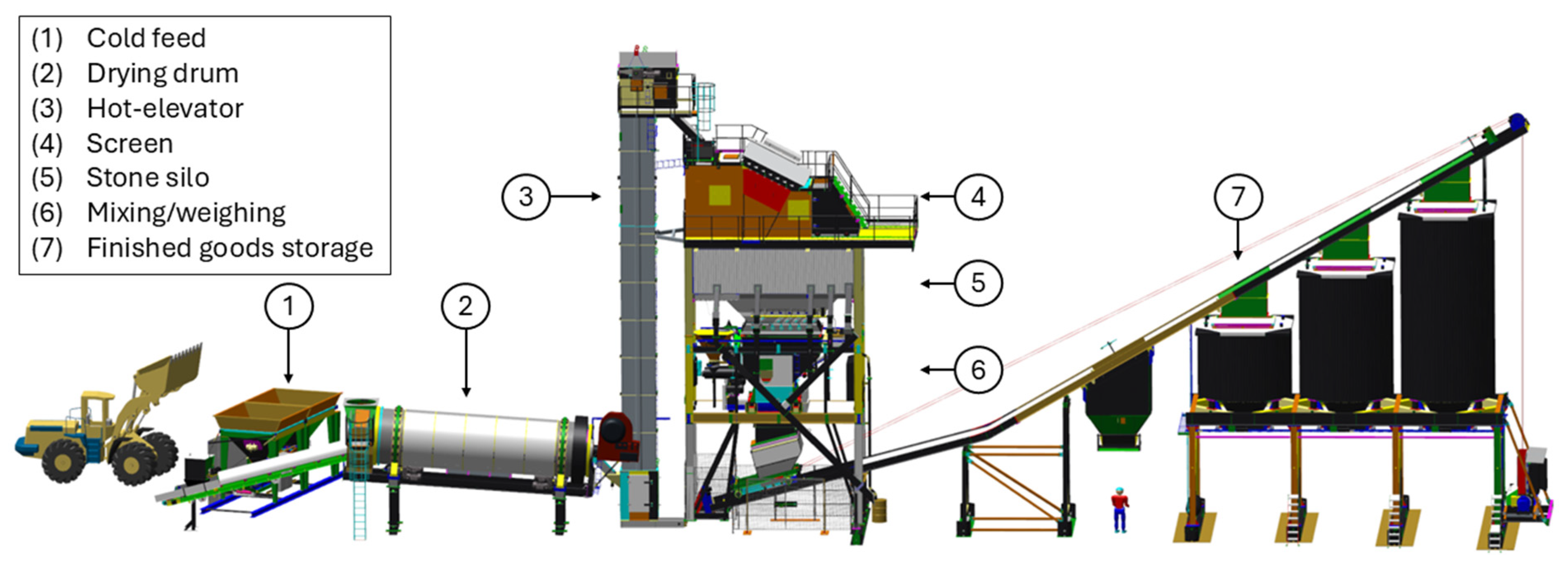

The case company manufactures production equipment for asphalt manufacturing plants. Their portfolio spans the full process—from cold feed systems handling sand, pebbles, and rocks to heated silos storing finished asphalt for transport.

A typical plant contains 10–14 major machines, along with conveyor belts, pipes, stairs, and elevators. The most essential plant components are shown in Figure 4; stairways, pipes, tanks, and other less critical machinery have been omitted to provide a clearer overview. The process starts with cold feed bins loading aggregates onto conveyors, followed by drying in a drum at approximately 300 °C, where recycled asphalt may be added. Exhaust gases are filtered, and the heated material is moved by a hot elevator to the mixing tower, where bitumen, filler, and optional additives (e.g., colorants, fibers) are combined. The tower typically includes industrial screens, stone silos, and a mixing/weighing section. Once mixed, the asphalt can be stored in heated silos.

Most components within the plant are heated, which increases the engineering complexity—particularly regarding lubricants for moving parts, wear resistance, heating systems, and power requirements. As a result, only engineers with many years of industry experience are typically qualified to carry out this work.

Although the company prefers greenfield projects (without the constraints of existing structures, processes, or legacy systems), these are often secured by larger competitors. The company instead focuses on retrofitting new equipment into existing plants, an approach that demands high engineering effort and results in a highly customized, ETO workflow. Each project is tailored to customer needs, and almost no two orders are identical. This variety, combined with reliance on specialized expertise, has become a bottleneck. The company is therefore exploring modularization to reduce design and development time, targeting the subsystems and product areas where savings would be most impactful.

4.2. Phase 1—Data Collection and Model Construction

Two primary data sources supported the analysis:

- (1) Semi-structured interviews: with staff from sales, engineering, production, procurement, installation, after-sales services, and accounting.
- (2) SQL database extracts: covering sales, production, product configuration, procurement, and project cost calculations.

A total of 22 interviews were conducted with 15 individuals, representing upper, middle, and operational levels. Interview durations ranged from 45 to 75 min.

Table 2 summarizes participants by role and number of interviews.

The SQL data provided broad coverage, including production orders, purchase orders, sales lines/orders, project post-calculation records, BOM structures, and time logs. The model for this case used two main fact tables:

- Item fact table: contained data such as creation date, the number of BOMs the item appears in, and average purchase order quantities for all components, subsystems, machines, and plants.
- Project fact table: included revenue, cost, customer details, and time expenditure per project.

These fact tables were linked to multiple transaction tables, summarized in Table 3.
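As an illustration of how item-level fields such as the number of BOMs an item appears in and its average purchase order quantity might be derived from transactional records, the following sketch works on plain Python tuples. The input format and the function name are assumptions; the sketch counts only direct parents (a multi-level count would require walking the BOM tree) and omits the creation date.

```python
def build_item_facts(bom_lines, po_lines):
    """Derive per-item facts from BOM and purchase order lines.

    bom_lines: (parent_id, child_id) pairs from BOM structures
    po_lines:  (item_id, quantity) pairs from purchase order lines
    """
    parents = {}
    for parent, child in bom_lines:
        parents.setdefault(child, set()).add(parent)
    quantities = {}
    for item, qty in po_lines:
        quantities.setdefault(item, []).append(qty)
    facts = {}
    for item in sorted(set(parents) | set(quantities)):
        qtys = quantities.get(item, [])
        facts[item] = {
            "n_boms": len(parents.get(item, ())),                   # BOMs the item appears in
            "avg_po_qty": sum(qtys) / len(qtys) if qtys else None,  # average purchase quantity
        }
    return facts


bom = [("DRUM-A", "SHELL"), ("DRUM-A", "BURNER"), ("FILTER-A", "FAN"), ("DRUM-B", "SHELL")]
po = [("SHELL", 2), ("SHELL", 4), ("FAN", 1)]
for item, row in build_item_facts(bom, po).items():
    print(item, row)
```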

One of the “project post-calculation” tables (specifically the one linking item-, production-, and purchase IDs to project IDs) was created by manually extracting material requirements for individual projects from the company’s ERP system, followed by additional formatting and data transformation before the data could be used in the model. These manual and somewhat labor-intensive tasks naturally limited the scope of included projects. As a result, that table covers projects from 2018 through 2023, while the remaining tables contain data from 2005 (when the ERP system was implemented) through 2023. Since this is the only table in which it is possible to track which of the critical identifiers belong to which projects, the results presented in this paper are likewise limited to that subset of projects.

To ensure data quality, the manually extracted records were cross-checked against other company data sources, including production and purchase orders as well as project calculation sheets prepared by sales personnel. Furthermore, key personnel involved in the selected projects—such as engineers, sales representatives, and project managers—reviewed and verified the analyses and results based on the extracted data.

4.3. Phase 2—Creation and Validation of Allocation Measures

Four departmental time measures were applied in the case:

- -

Estimated engineering hours in development and design

- -

Estimated procurement hours

- -

Estimated production preparation hours

- -

Estimated sales hours

The company did not record procurement, production preparation, or sales hours. These measures could therefore only be validated indirectly, using approximate activity times reported during interviews and by presenting results to relevant employees.

Engineering hours were of particular interest. The company’s engineering-hour logs enabled more direct validation, though both managers and employees noted their approximate nature. A ±20% margin was used when comparing estimates to reported values. Projects with fewer than 10 reported engineering hours, as well as those with zero predicted hours, were excluded.

Validation results (Table 4) showed that the model performed best for large projects, with accuracy dropping for smaller ones. Across all projects, 51% of estimates fell within the ±20% margin. Poorer performance (overestimation) was linked to projects dominated by product groups where the engineering task is outsourced (e.g., tanks and conveyor belts). Excluding these projects improved the results: the proportion within the margin rose across all size categories, and the average relative error (excluding outliers) fell from 27% to 18%.
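The exclusion of projects dominated by outsourced product groups can be expressed as a filter on each project’s share of predicted engineering hours. Defining “dominated” as more than half of the predicted hours (`max_share=0.5`) is an assumption made for this sketch; the function names and project data are invented.

```python
def outsourced_share(hours_by_group, outsourced):
    """Fraction of a project's predicted engineering hours in outsourced product groups."""
    total = sum(hours_by_group.values())
    if total == 0:
        return 0.0
    return sum(h for g, h in hours_by_group.items() if g in outsourced) / total


def exclude_outsourced_dominated(projects, outsourced, max_share=0.5):
    """Keep projects whose outsourced share does not exceed `max_share` (assumed cut-off)."""
    return [pid for pid, hours in projects.items()
            if outsourced_share(hours, outsourced) <= max_share]


projects = {
    "P1": {"drying drum": 80.0, "tank": 20.0},
    "P2": {"tank": 60.0, "conveyor belt": 30.0, "drying drum": 10.0},
}
print(exclude_outsourced_dominated(projects, outsourced={"tank", "conveyor belt"}))
```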

In production preparation, engineers’ BOMs are checked for errors and supplemented with missing standard parts (e.g., screws, bolts). Supplier pricing and project schedules are also prepared. These tasks are only needed for new or modified BOMs, which are frequent due to the ETO nature of the company. Purchase orders are usually also issued at this stage.

Model estimates reflected the observed variation. Most purchase orders took 10–40 min (87% of purchase orders), except for large or complex ones involving multiple vendors, which could take several hours. Production preparation typically took up to approximately 30 min (54% of production orders), whereas BOMs repeated from earlier projects required only 5 min; these occurred in about 21% of cases.
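TDABC typically expresses such observations as time equations, in which the time an activity consumes depends on a few drivers. The following is a minimal sketch under stated assumptions. All constants are hypothetical values loosely based on the interview figures above: 30 min for a new or changed BOM, 5 min for a repeated one, and the midpoint of the 10–40 min range for a standard purchase order. The per-vendor increment is invented purely for illustration, because the text only says that complex orders can take several hours. This is not the case company's calibrated model.

```python
# Hypothetical parameters, loosely based on the interview figures quoted in the text
PREP_NEW_OR_CHANGED_BOM_MIN = 30.0          # "typically up to approx. 30 min"
PREP_REPEATED_BOM_MIN = 5.0                 # BOM repeated from an earlier project
PO_STANDARD_MIN = 25.0                      # assumed midpoint of the 10-40 min range
PO_EXTRA_PER_ADDITIONAL_VENDOR_MIN = 60.0   # invented; text only says "several hours"


def production_prep_minutes(repeated_bom: bool) -> float:
    # Time equation with a single binary driver: is the BOM a repeat?
    return PREP_REPEATED_BOM_MIN if repeated_bom else PREP_NEW_OR_CHANGED_BOM_MIN


def purchase_order_minutes(n_vendors: int) -> float:
    # Base time plus an increment for every vendor beyond the first
    return PO_STANDARD_MIN + PO_EXTRA_PER_ADDITIONAL_VENDOR_MIN * max(n_vendors - 1, 0)


def expected_prep_minutes(share_repeated: float) -> float:
    # Expected preparation time per production order, given the share of repeated BOMs
    return (share_repeated * PREP_REPEATED_BOM_MIN
            + (1.0 - share_repeated) * PREP_NEW_OR_CHANGED_BOM_MIN)


avg_prep = expected_prep_minutes(0.21)  # about 24.75 min with the 21% repeat share from the text
```

Modeling each department's work as a small function of drivers, such as repeated BOMs or vendor count, is what allows the allocation to respond when a design change alters those drivers.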

Estimates of sales hours were uncertain. Of the projects covered in both the interviews and the data sample, nine could be used for validation. Five matched reasonably well, while for the other four the model estimates were roughly double the times reported in interviews. Given the small sample and poor agreement, the estimated sales hours should be considered unreliable.

4.4. Phase 3—Identify High-Impact Targets and Potential Savings

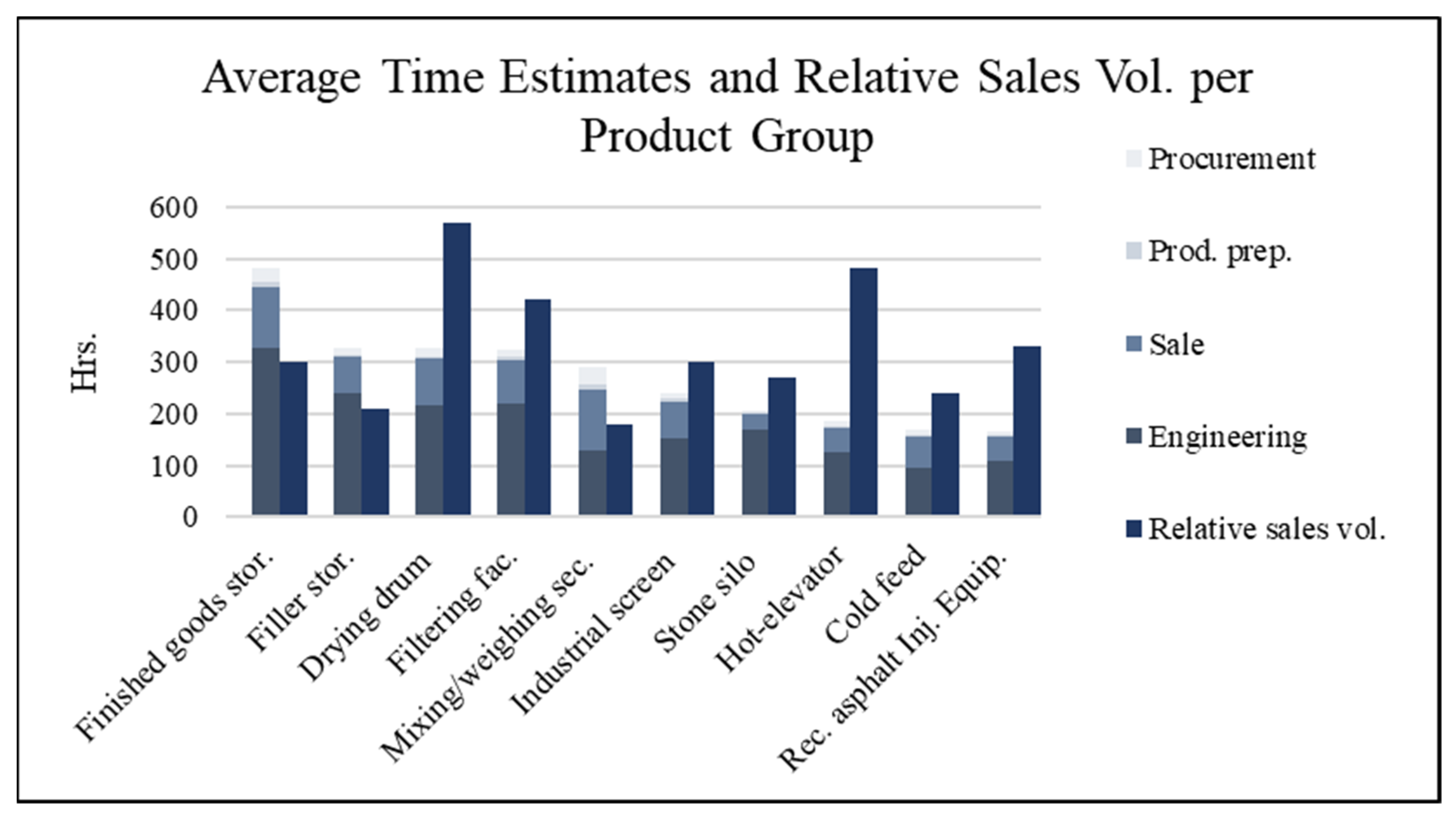

The model aims to provide insight into how the customization of machines in projects affects the company's value chain, which may also indicate where modularization would be particularly beneficial. Here, this is expressed as the number of hours each department is expected to spend. These hours can readily be translated into monetary values using the salaries of the relevant employees if desired. Applying the model to the product groups in the company's portfolio shows which product groups, on average, attract the most work due to customization, as seen in Figure 5. The figure also shows the relative sales volume per product group; the actual sales figures are hidden because they are confidential.
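The following minimal sketch shows one way to aggregate the allocated hours per product group and convert them into costs. The record layout, group names, hourly rates, and all numbers are hypothetical. Average hours are computed per unit sold, so units without customization effort still count in the denominator.

```python
from collections import defaultdict


def average_hours_per_unit(records, units_sold):
    """records: iterable of (product_group, department, hours) allocated by the model.
    units_sold: dict product_group -> number of units sold in the sample."""
    totals = defaultdict(lambda: defaultdict(float))
    for group, dept, hours in records:
        totals[group][dept] += hours
    return {
        group: {dept: h / units_sold[group] for dept, h in depts.items()}
        for group, depts in totals.items()
    }


def hours_to_cost(avg_hours, hourly_rates):
    # Translate department hours into money using (hypothetical) hourly salary rates
    return {
        group: sum(h * hourly_rates[dept] for dept, h in depts.items())
        for group, depts in avg_hours.items()
    }


# Hypothetical allocation records and rates
records = [
    ("group A", "engineering", 200.0),
    ("group A", "procurement", 10.0),
    ("group A", "engineering", 100.0),
    ("group B", "engineering", 20.0),
]
units_sold = {"group A": 2, "group B": 1}
avg = average_hours_per_unit(records, units_sold)
cost = hours_to_cost(avg, {"engineering": 80.0, "procurement": 60.0})
```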

The analysis of product groups (Figure 5) revealed:

- The drying drum had both the highest sales volumes and significant customization-related overhead, making it a strong candidate for savings through modularization. The same applies to the filtering facilities, although their sales volume is slightly lower.
- Hot elevators also sold in high volumes but required relatively little customization effort.
- Finished goods storage incurred the highest average customization costs but had lower sales volumes than the previous two.
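One simple heuristic for combining the two dimensions discussed above is to weight the average customization hours per unit by relative sales volume. This approximates the total customization-related workload a product group generates. It is an illustrative assumption, not a scoring rule stated in the study, and the figures below are hypothetical because the case company's volumes are confidential.

```python
def rank_by_expected_overhead(groups):
    """groups: dict name -> (relative_sales_volume, avg_customization_hours_per_unit).
    Returns group names sorted by volume-weighted hours, highest first."""
    scores = {name: volume * hours for name, (volume, hours) in groups.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical figures
groups = {
    "group A": (1.0, 60.0),  # high volume, high effort
    "group B": (0.9, 10.0),  # high volume, low effort
    "group C": (0.3, 90.0),  # low volume, highest effort per unit
}
ranking = rank_by_expected_overhead(groups)  # group A, then group C, then group B
```

The group with the highest effort per unit does not necessarily rank first. A high-volume group with substantial overhead can dominate, which mirrors the reasoning applied to the drying drum.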

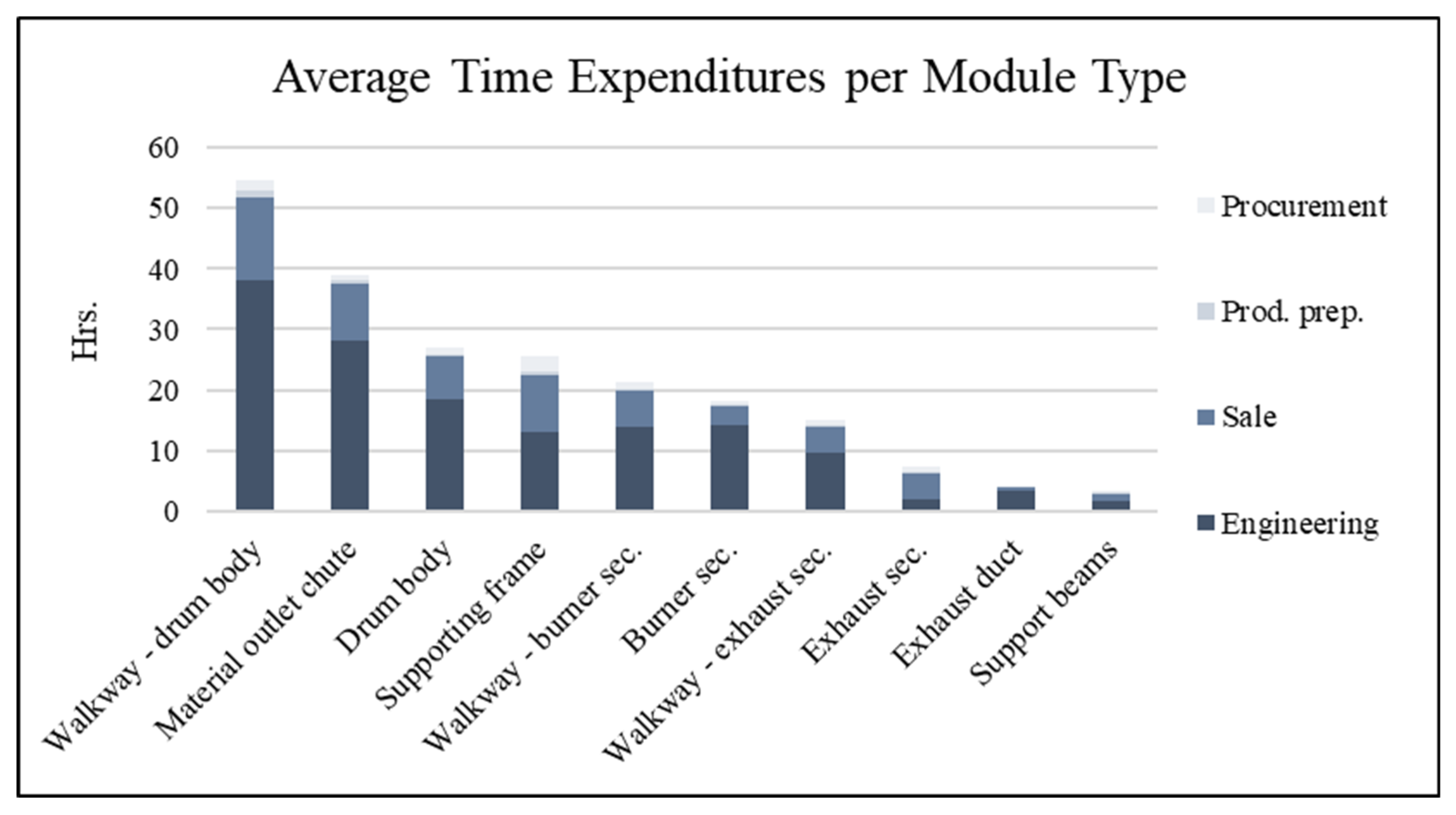

Using the drying drum as an example, we can break it down into modules to identify where overhead hours are concentrated (Figure 6).

- Walkways scored high for potential savings but are often heavily customized in brownfield projects, limiting further standardization potential.
- The material outlet chute, drum body, and supporting frame also ranked high. However, the supporting frame's high score was driven mainly by sales hours, a less reliable measure in this case, whereas the burner section showed strong potential for reducing engineering hours.
- The supporting frame attracts the most purchase hours. This suggests that standardizing its purchased parts (e.g., electric motors), where possible across modules and perhaps even across product groups, could yield substantial savings. Some savings would come from reduced procurement hours. Larger savings could arise if lot sizes increase significantly, through economies of scale and reduced stock levels. However, such effects are not yet included in the model.

Proposed Modular Architecture

Based on the variants found in the data sample used in this study, the following module variant reductions were proposed, maintaining coverage of approximately 80% of customer orders:

- From 15 to 4 material outlet chute variants
- From 13 to 8 drum body variants
- From 11 to 8 burner section variants
- From 10 to 4 supporting frame variants
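The study does not state how the retained variants were chosen. One simple way to derive such reductions is shown below as an assumption, not as the authors' procedure: rank a module's variants by order frequency and keep the most frequent ones until the target share of orders is covered. When each order uses exactly one variant of the module, this greedy choice yields the smallest set of variants that reaches the target. The order data are hypothetical.

```python
from collections import Counter


def variants_for_coverage(order_variants, target=0.80):
    """order_variants: the variant used in each historical order for one module.
    Returns the retained variants and the share of orders they cover."""
    counts = Counter(order_variants)
    total = sum(counts.values())
    if total == 0:
        return [], 0.0
    kept, covered = [], 0
    for variant, n in counts.most_common():  # most frequent variants first
        if covered / total >= target:
            break
        kept.append(variant)
        covered += n
    return kept, covered / total


# Hypothetical chute variants in ten past orders
orders = ["chute-1"] * 6 + ["chute-2"] * 2 + ["chute-3", "chute-4"]
kept, coverage = variants_for_coverage(orders)  # two variants cover 80% of orders
```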

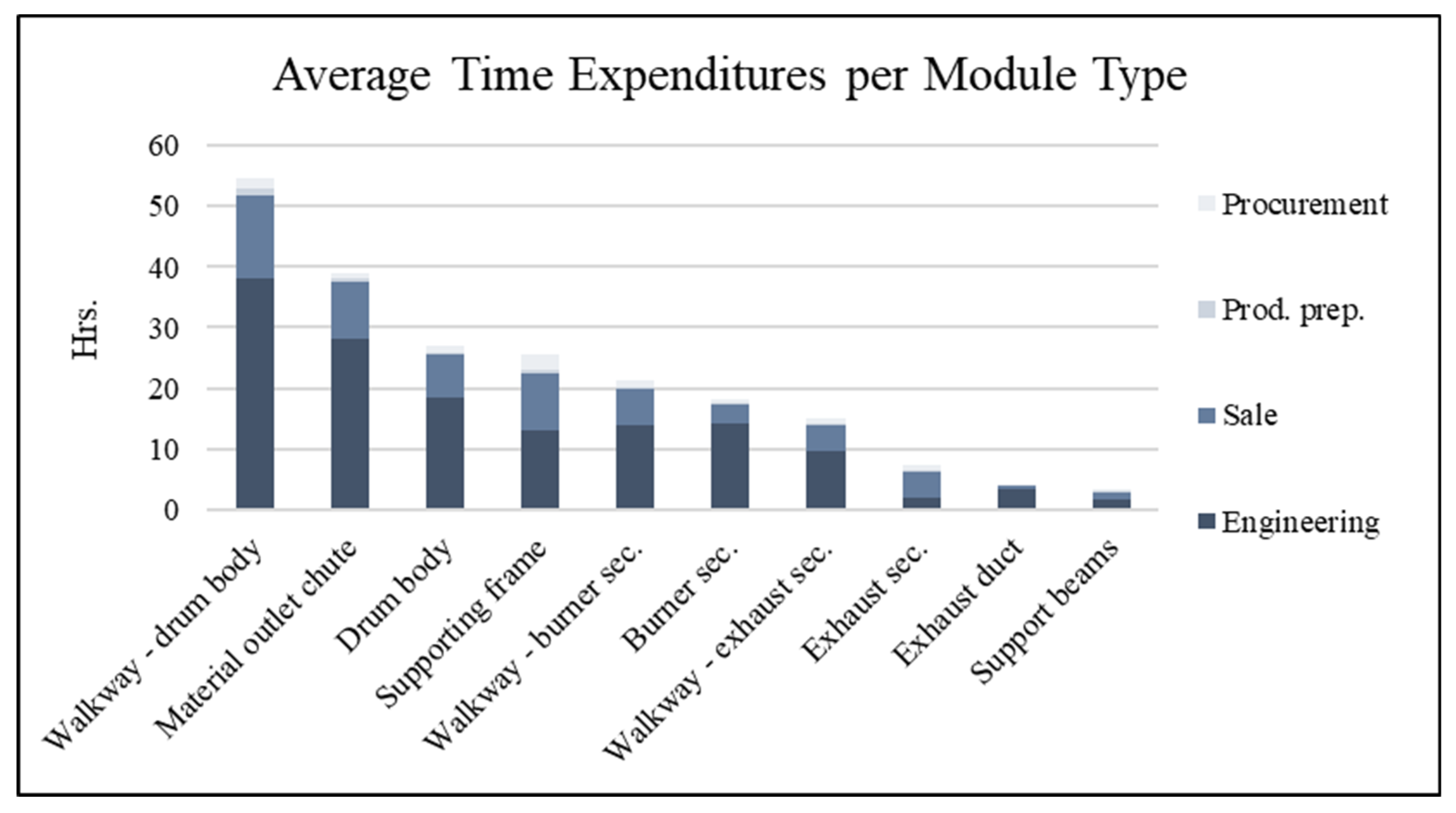

Under this scenario, customization of these modules would be unnecessary in most cases. Figure 7 shows the expected average time savings for each module individually and in total. The model estimates that engineering could save approximately 62 h per drying drum. This is a reduction of 29% compared with the 216 h the engineering department spends on average designing a drying drum. The largest savings come from the material outlet chute and the drum body. Sales hours would also likely fall, as specification and pricing could be based on predefined module configurations. As already discussed, the exact figure the model gives for sales may not be accurate, but many studies point to such effects as expected outcomes of modularization [7,14]. Procurement could benefit from larger lot sizes and reduced order frequencies [15,69], resulting in an average reduction of 2.3 h allocated per drying drum. Similarly, production preparation time would decrease by approximately 2 h per drying drum. According to the conducted interviews, BOM checks and supplier price updates would then only be required for customized BOMs.
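The figures quoted above can be checked directly. Summing the three departments, and leaving out sales hours because they were found unreliable, gives the approximate combined saving per drying drum:

$$
\frac{62\ \text{h}}{216\ \text{h}} \approx 0.287 \approx 29\,\%, \qquad
\Delta t_{\text{eng+proc+prep}} \approx 62 + 2.3 + 2 = 66.3\ \text{h per drying drum}
$$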

Beyond demonstrating reductions in time expenditures, these results also highlight where in the product portfolio product variety and modularization may have the greatest impact along the company’s value chain. This can help decision-makers prioritize resources to maximize benefits and provide data-driven, quantifiable measures for evaluating alternative proposals.

In the case company, it was further noted that the tool could support estimating time expenditures for incoming projects and enhance planning capabilities within the engineering department. It also offers insights into the magnitude of cost differences between projects with high and low degrees of customization, which may inform pricing decisions, according to the company’s head of sales.

As demonstrated in this case study, the model enables analyses at multiple levels of decomposition—e.g., project, product, module, or any intermediate level—which is rarely observed in existing modularity frameworks. This flexibility stems from the novel integration of TDABC with theories on the decomposition of technical systems.
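The bottom-up aggregation behind this multi-level analysis can be pictured as a tree whose nodes (project, product, module, component) carry the hours allocated directly to them. The hours at any level are the node's own hours plus those of all its descendants. The sketch below is a minimal illustration with hypothetical names and numbers, not the study's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    hours: dict[str, float] = field(default_factory=dict)  # hours allocated directly, by department
    children: list[Node] = field(default_factory=list)


def rollup(node: Node) -> dict[str, float]:
    # Own hours plus the recursively aggregated hours of every child node
    total = dict(node.hours)
    for child in node.children:
        for dept, h in rollup(child).items():
            total[dept] = total.get(dept, 0.0) + h
    return total


# Hypothetical structure: project -> product -> modules
chute = Node("material outlet chute", {"engineering": 20.0})
body = Node("drum body", {"engineering": 30.0, "procurement": 1.0})
drum = Node("drying drum", children=[chute, body])
project = Node("project", {"sales": 10.0}, [drum])

product_level = rollup(drum)     # engineering 50 h, procurement 1 h
project_level = rollup(project)  # adds the 10 sales hours booked at project level
```

Because the same function applies at every node, the analysis can stop at whichever level of decomposition is of interest.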

5. Discussion

The proposed methodology provides a data-driven way to quantitatively link product variety and complexity with overhead activities across the value chain. It enables the direct allocation of overhead resources to the product structure, a capability not possible in traditional volume-based costing [26,27]. Rather than seeking exact cost figures, the methodology delivers robust estimates with predictive value, highlighting directional trends in overhead resources that would otherwise remain hidden in conventional accounting systems [27]. Even without full precision, such directional insights help managers recognize where resource consumption is disproportionately high, prioritize which product areas to standardize, and assess trade-offs between customization and efficiency. The case study shows that the framework can support informed decisions in early design and portfolio planning stages, when detailed data are rarely available. In doing so, the framework does not replace traditional accounting but extends it by showing how overhead resources can be linked to customizations or architectural changes. This allows decision-makers in early design phases, portfolio management, and modularization initiatives to experiment with alternative product architectures more systematically, rather than relying on “gut feel” [11].

The case study illustrates this potential by identifying high-impact subsystems, such as the drying drum, where reducing the number of module variants could cut engineering hours by approximately 29% while also reducing procurement and production preparation times. These results echo prior research on modularity regarding development time, economies of scale, and inventory reduction [13,14,15,65]. The model goes further by quantifying such effects at multiple levels of decomposition (from components to modules to full products), thus providing a richer foundation for strategic decisions [29].

Compared with previous quantitative frameworks, such as ABC, TDABC, and the IMF, the proposed approach advances the field in several respects. Whereas ABC- and TDABC-based models typically focus on allocating resources to activities at a single organizational level [5], the present framework dynamically links overhead costs to the product structure itself, allowing effects of product variety and modularity to be traced across components, subsystems, and projects. This integration of hierarchical product decomposition with time-based cost allocation and ERP data structures therefore represents a step toward operationalizing modularity's systemic impacts in measurable, company-specific terms.
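The time-driven logic referred to here follows the standard TDABC formulation, sketched below in generic notation. The symbols are ours and hypothetical, and this is not necessarily the exact set of equations used in this study. For each department $d$, the capacity cost rate $r_d$ is the cost of capacity supplied, $C_d$, divided by the practical capacity in hours, $T_d$. A time equation estimates the time $t_{d,k}$ that a cost object $k$ (e.g., a module or project) consumes, based on its activity drivers $x_{i,k}$ and time coefficients $\beta_{d,i}$. The cost assigned to $k$ is then the sum of rate times time over all departments:

$$
r_d = \frac{C_d}{T_d}, \qquad
t_{d,k} = \beta_{d,0} + \sum_{i=1}^{n} \beta_{d,i}\, x_{i,k}, \qquad
c_k = \sum_{d} r_d\, t_{d,k}
$$

Because $t_{d,k}$ is defined per cost object, the cost object can in principle be any node of the product hierarchy rather than a single organizational unit.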

The study illustrates the importance of evaluating impacts at different levels of decomposition. While high-level results pointed to product groups with significant customization effort, the module-level breakdown revealed more targeted opportunities for intervention, such as the material outlet chute and burner section. This bottom-up aggregation capability is rarely addressed in existing frameworks [29], yet it is essential in providing the proposed methodology with the needed flexibility, versatility, and dynamic nature [21].

The case study further showed that the framework can serve not only as a retrospective analysis tool but also as a forward-looking decision support system. By estimating time expenditures associated with different levels of customization, the model enables scenario analyses of alternative modular architectures even in early design stages. This potential for early-stage evaluation is particularly relevant given that design decisions made upstream determine a large share of life-cycle costs [20].
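A scenario analysis of this kind can be sketched as an expected-value calculation per module. If a share of orders can be served by a predefined variant, those orders incur standard-variant hours and the remainder incur customization hours. The function names, hours, and coverage values below are hypothetical. The calculation also ignores effects such as economies of scale, which the text notes are not yet modeled.

```python
def expected_module_hours(custom_hours, standard_hours, coverage):
    """Expected hours for one module when `coverage` is the share of orders
    served by a predefined variant (hypothetical model)."""
    return coverage * standard_hours + (1.0 - coverage) * custom_hours


def scenario_hours(modules):
    """modules: dict name -> (custom_hours, standard_hours, coverage)."""
    return sum(expected_module_hours(*params) for params in modules.values())


# Hypothetical module data: the baseline has no predefined variants (coverage 0)
baseline = {"chute": (30.0, 5.0, 0.0), "frame": (20.0, 4.0, 0.0)}
modular = {"chute": (30.0, 5.0, 0.8), "frame": (20.0, 4.0, 0.8)}
saving = scenario_hours(baseline) - scenario_hours(modular)  # roughly 32.8 h per product
```

Comparing a baseline with an alternative architecture in this way only requires per-module hour estimates and an assumed coverage. This is why such comparisons can be made before detailed designs exist.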

Another important aspect is the adaptability of the framework across different industrial contexts. As highlighted in the methodology, the underlying model is not confined to engineering hours, even though this was the critical measure in the case. In other settings, functions such as on-site installation or after-sales may represent the most critical bottlenecks, requiring the model to be adapted accordingly. This flexibility strengthens its value as a decision-support tool, since it allows companies to tailor the analysis to their specific strategic priorities and resource constraints [14,17]. Future research could explore applications in other firms to evaluate savings in different departments or KPIs, depending on their unique circumstances.

Beyond the specific case examined here, the underlying logic of the framework is broadly transferable to other engineering-to-order and project-based industries where product variation drives overhead activities. Sectors such as machinery, construction equipment, and customized process plants share similar challenges in linking design variety to resource consumption across functions. Even in more standardized, assemble-to-order contexts, the framework could be adapted to quantify engineering change impacts or procurement complexity associated with product variety. The key requirement is access to basic transactional data such as BOMs, production or service orders, and time records (either from time logs or employee estimates), from which activity drivers can be derived. Consequently, while the specific measures applied in this case (engineering hours) may differ elsewhere, the methodological principle of linking process data to product structures remains applicable across a wide range of industrial settings. Future work should investigate the framework's generalizability and the adaptations required for other settings.

At the same time, data limitations shaped the analysis. Missing project identifiers in sales orders required a workaround that linked BOM items to sales lines, capturing project size but not actual workload. In addition, a manually compiled post-calculation table restricted the dataset to a subset of projects. These shortcomings highlight the critical role of data integration and suggest that more robust ERP structures are a prerequisite for replicability and accuracy. These limitations inevitably influence the precision and generalizability of the findings. For instance, the absence of reliable sales-hour estimates eliminates the possibility of assessing how product or architectural changes affect sales-related workloads in the case. As a result, any decision-support insights regarding potential efficiency gains or bottlenecks within the sales department are lost, and this gap may also contribute to the underrepresentation of certain product types or project sizes in the overall analysis. The restricted project subset in the manually compiled table also limits the ability to capture longer-term trends or exceptional cases. Likewise, reliance on interview-based time estimates introduces subjective elements that can affect the magnitude—but not the overall direction—of the results. Consequently, while the estimated savings and workload distributions should be interpreted as indicative rather than absolute values, the relative patterns observed across subsystems and activities remain robust, as they are supported by multiple data sources and cross-validation with company personnel.

Overall, this study contributes to bridging the gap between theoretical modularization benefits and their practical quantification in industry. By combining TDABC with complexity management, structured data integration, and hierarchical product decomposition, the proposed framework advances the state of the art in three ways:

1. It quantifies overhead activities often ignored in existing models.
2. It enables analysis across product groups and product structure levels.
3. It promotes rapid, fact-based experimentation with product architectures.

Together, these capabilities provide a systematic foundation for evaluating modularization impacts across the value chain and support more transparent, data-driven decision-making in complex engineering-to-order environments.

Future Work

Future research should extend and refine the framework in three main directions.

First, the model should be expanded to capture product development and R&D activities. In the case company, innovations are developed through customer projects, which provides only partial insight into the true engineering effort. Because R&D structures vary widely across firms, the framework cannot yet address pure product-development scenarios. As modular product development often entails high upfront investments and extended development times [11,71], including this dimension would enhance the applicability of the framework.

Second, the economic scope could be broadened by incorporating additional effects such as economies of scale, inventory reductions, and lead-time improvements into the data model. These factors are central to companies' financial performance [72] and would make the framework more comprehensive in evaluating the full economic impact of modular product architectures.

Third, the framework could be developed toward greater automation and predictive capability. The current framework already predicts potential time savings from standardizing the product structure, but these predictions rest on modeled relationships between product variety and overhead resource consumption. Further development could enable automated extraction and integration of ERP and engineering data, allowing near real-time updates of cost allocations as new projects or configurations are introduced. With sufficient data maturity, the model could also support predictive analytics (estimating the resource impacts of design changes) and, ultimately, AI-based optimization of module portfolios [73].

Advancing these directions would transform the framework from a diagnostic tool into a proactive decision-support system, enhancing both its analytical precision and its practical relevance across industries seeking to balance customization, complexity, and cost efficiency.

6. Conclusions

This study introduced a data-driven framework for quantifying the effects of modular product architectures, advancing beyond existing approaches that often emphasize production costs or rely on static comparisons. By integrating time-driven activity-based costing with theories of complexity management and hierarchical product decomposition, the framework establishes explicit links between product structures, complexity, and overhead activities such as engineering, procurement, production preparation, and sales.

The contribution is threefold. First, the framework captures cross-functional overheads that traditional volume-based costing methods typically overlook [26], thereby providing a more complete representation of modularization's impact. Second, it enables analysis at multiple levels of product decomposition (from components to modules, products, and projects), offering decision-makers a flexible tool to evaluate product structures across configurations and portfolios. Third, it allows scenario testing in early design phases. This supports rapid, fact-based exploration of alternative architectures and informs strategic product and portfolio decisions before costly commitments are made.

Application in an ETO context with high product complexity demonstrated the framework’s practical relevance. The results showed substantial potential for reducing engineering hours and improving transparency in how customization costs are distributed across the value chain. The framework delivers directional insights that strengthen decision-making in portfolio management, pricing, and modularization initiatives. Overall, the study contributes a novel, data-driven framework that bridges the gap between the theoretical benefits of modularization and their quantification in industrial practice.

Future research should extend the economic scope by incorporating development time, economies of scale, and inventory effects, thereby broadening applicability across industries. For practitioners, the framework offers a structured way to identify high-impact product areas, prioritize modularization efforts, and better align architectural decisions with strategic business objectives.