1. Introduction

In Industry 5.0 and Zero Defect Manufacturing (ZDM), human–robot collaboration (HRC) is becoming increasingly important [1,2,3]. Where possible, robots should relieve humans of repetitive and burdensome tasks [4,5]. This naturally raises the question of how best to organize HRC. According to [6], trust, usability, and acceptance are key requirements for the successful introduction of HRC. For humans and robots to work together effectively, workplaces often need to be redesigned accordingly [7]. Important criteria in this context include safety and anxiety, fear of surveillance, concepts for user guidance, and achieving the highest possible level of acceptance among the workforce. According to [7,8,9], different forms of human–robot interaction (HRI) exist, such as collaboration, synchronization, cooperation, and co-existence, depending on the particular capabilities of the robotic systems. Based on data reported by [7], in 2021, 32.5% (113) of 348 publications in collaborative robotics addressed human–robot collaboration, but only 3.7% (13) considered task allocation.

1.1. Ergonomics-Oriented Manufacturing Scheduling

In a human-centered approach to manufacturing (as proposed in the context of Industry 5.0), the assignment of work, in particular in human–robot and human–system collaborative settings, requires human factors to be considered in planning and scheduling. This includes incorporating worker ergonomics into scheduling and balancing productivity with workers’ well-being. Human factors, such as fatigue, physical workload, and skill proficiency, become part of the optimization criteria used to schedule the workload of both workers and robotic systems. In [10], an approach to human-centered workforce scheduling is presented. In [11], a dynamic scheduling concept for a hybrid flow shop [12] (similar to the approach outlined in this paper) is developed based on a self-organizing multiagent system (MAS) and reinforcement learning. This scheduling approach considers worker fatigue and skill levels. Human fatigue is also addressed in [13], where the presented task scheduling approach plans micro-breaks during job cycles to avoid the accumulation of fatigue. In [14], worker fatigue and productivity are addressed with a simulation-based approach that focuses on task scheduling in the context of human–robot collaboration. The digital panopticon [15] describes continuous, persistent data collection that makes workers feel constantly visible and leads them to discipline themselves.

In the work presented in this paper (see Section 2.2), human initiative is regarded as a basic principle of the scheduling approach developed. Task allocation is driven by human initiative and paced according to worker preferences and ergonomic needs. This is expected to minimize the human discomfort that is frequently reported in collaborative human–robot activities, while still allowing effective human–robot collaboration.

1.2. Agent-Based Manufacturing Scheduling

Agent-based manufacturing scheduling has seen considerable advancements. As pointed out in [16], a MAS approach allows the distribution of scheduling control among autonomous agents, with the goal of enhancing flexibility, scalability, and responsiveness in complex production settings. Effects such as reduced production makespan and improved overall performance under disturbance conditions are reported in [17]. The work of [18] considers a make-to-stock problem in the construction industry. A combination of functional and physical manufacturing system decomposition is employed to obtain agent types; e.g., a schedule agent controls schedule generation (functional agent), and machine agents represent physical machines on the shop floor. For schedule generation, a heuristic algorithm has been developed. A job shop scheduling problem (JSSP) in the context of re-manufacturing is solved in [19]. The JSSP is extended with the scheduling of autonomous guided vehicles (AGVs) that transport material between machines. The extended JSSP is solved with constraint programming. The scheduler component is embedded in an agent-based hybrid control architecture comprising centralized and decentralized components.

Recent work integrates MASs with AI methods, such as embedding deep reinforcement learning to enable schedule optimization in a flexible job shop integrating AGVs [20]. A dynamic JSSP is tackled in [21], with stochastic process times and machine breakdowns as dynamic events. Scheduling policies are trained with multiagent reinforcement learning, employing a knowledge graph that represents dynamic machines in a factory. The knowledge graph encodes machine-specific job preference information and has been trained with historical manufacturing records.

In this paper, an agent-based scheduling approach is used, which considers a final end-of-line inspection scenario to achieve a zero-defect situation for products leaving a multistation work cell. A particular scheduling problem arises from this scenario due to properties immanent to Zero Defect Manufacturing (ZDM).

1.3. Zero Defect Manufacturing

ZDM is a quality management method that maintains both product and process quality at a level of zero defect output to ensure that defective products do not leave a production facility [22]. ZDM is made possible through the ongoing digitalization of manufacturing environments, using data-driven and artificial intelligence (AI) approaches in line with Industry 4.0 concepts. ZDM is an important means of achieving sustainability goals in manufacturing and aligning them with Industry 5.0 objectives. An early proposal for ZDM was made in [23]. In [24], it is stated that a zero defect output in manufacturing is achieved through corrective, preventive, and predictive techniques. The authors outline a ZDM framework that defines four basic activities for achieving ZDM, namely, detecting or predicting defects (Detect, Predict), followed by repair activities (Repair), as well as implementing measures to prevent failure situations (Prevent). In this model, Detect and Predict identify quality issues and are regarded as the triggering strategies, whereas Repair and Prevent are considered actions to remedy or prevent a defect. This model proposes three so-called ZDM strategy pairs: Detect–Repair, Detect–Prevent, and Predict–Prevent. Modern data-driven approaches may go beyond a pure Detect–Repair activity and use the data to improve production after defect detection (Detect–Prevent). In particular, predicting potential defects before they occur (Predict–Prevent) relies on modern AI-driven methods (see, for example, [25]). According to the work of [3], humans are now regarded as assets rather than sources of error. However, human-centric aspects of ZDM have received little attention and are often overlooked.

1.4. Research Gap and Contributions

This paper addresses the following research question: ‘How to implement a human-centric approach to task scheduling in an end-of-line quality assurance situation with human–robot collaboration?’ None of the existing work provides a comprehensive answer to that question. Approaches to task scheduling in HRC scenarios prevalently address assembly use cases, avoiding the dynamic character of quality assurance, where important information such as the number of rework cycles is not available for scheduling in advance. Moreover, existing HRC approaches rarely unify multistation resource sharing, human-initiated task assignment, and agent-based scheduling.

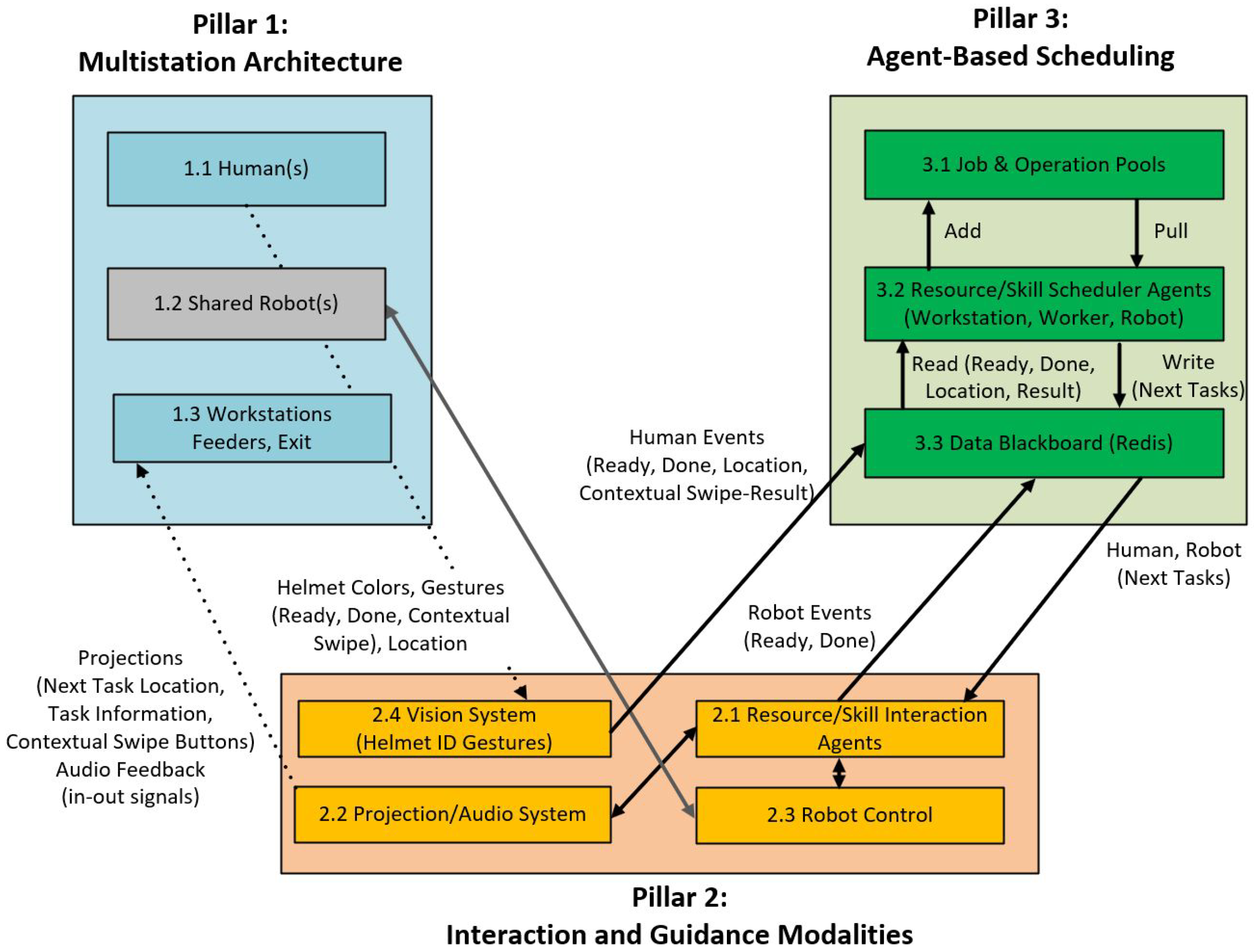

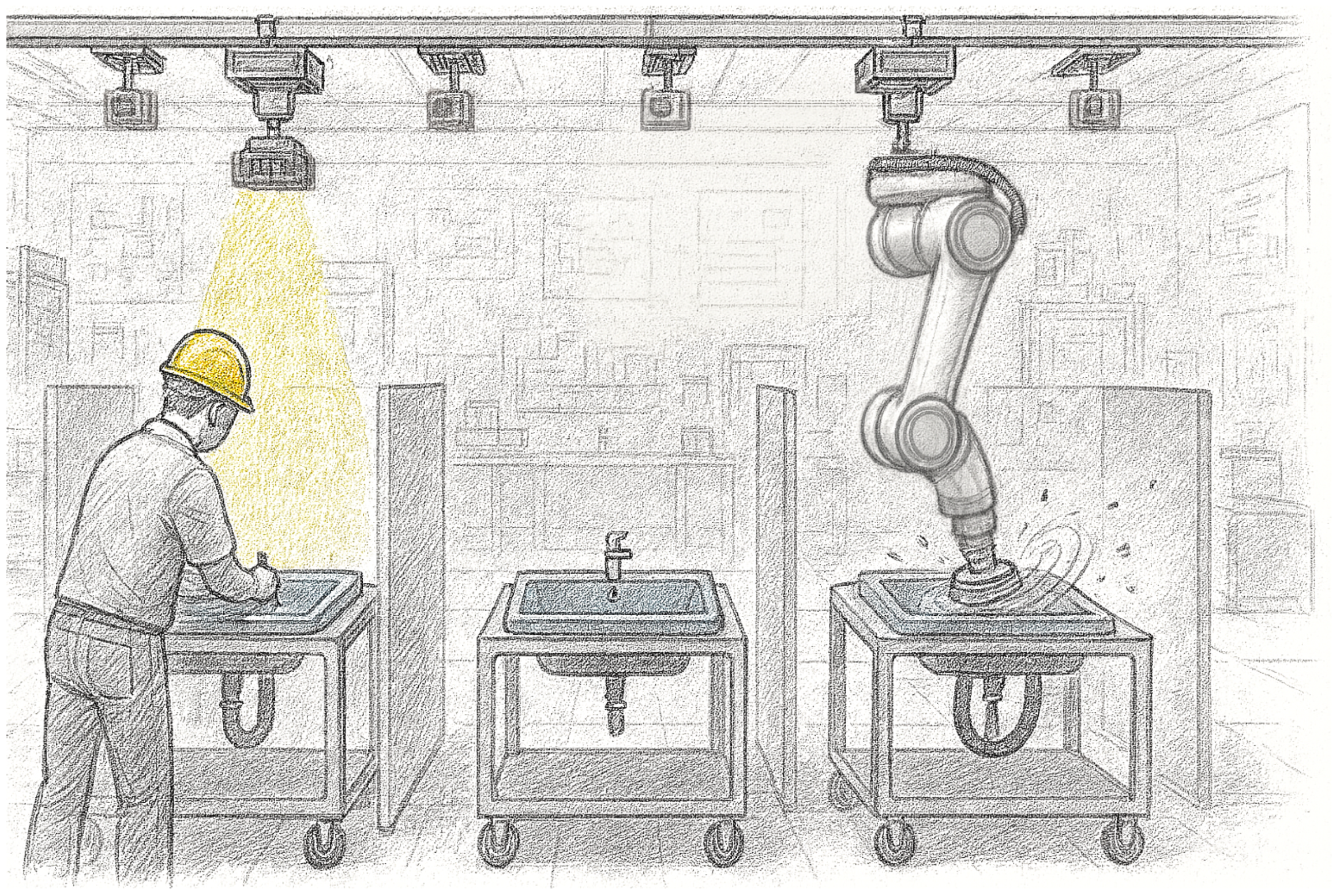

Addressing the above research question, a Human–Robot Multistation System (HRMS) framework has been implemented as a lab setup to address ZDM problems with a human–robot collaborative scheduling approach. The ZDM scenario considered is the end-of-line quality inspection of products with possible repair activities, in order to satisfy a zero defect requirement for a particular production process. In terms of the model proposed by [22,24], the ZDM approach implemented with our HRMS framework can be categorized as a Detect–Repair strategy, where repetitive inspection and rework tasks are assigned to teams of human workers and robots. One or more workstations are operated flexibly by both humans and robots. Close interaction between human workers and robots is enabled through a multiagent scheduling approach integrated with intelligent perception and interaction systems, allowing alternating or collaborative task execution in a multistation setting.

The HRMS framework builds on prior research [26,27]. In [26], the agent-based approach to task scheduling used in the framework is described, and in [27] that scheduling approach is evaluated in simulation, focusing on scalability and performance aspects. The simulation model used for evaluation implements the ZDM scenario shown in this paper, and it realizes a virtual HRMS environment with up to three workers, two robots, and six workstations. The work presented in this paper extends the prior research with a real-world, laboratory-scale implementation of the HRMS concept, integrating the agent-based scheduler from [26] and introducing a human-initiated human–robot interaction scheme.

The contributions of this paper can be summarized as follows:

1. Conceptual contribution: We define and introduce the Human–Robot Multistation System (HRMS) as a novel framework for flexible human–robot collaboration. HRMS generalizes existing human–robot work cells towards multistation environments with shared robotic resources.

2. Integration and validation of scheduling concepts for human-driven work assignments: Building on our previous work on agent-based scheduling [26,27], we integrate the scheduling mechanism into the HRMS framework and validate it in a ZDM laboratory setting.

3. Interaction contribution: We introduce a human-initiated human–robot interaction scheme that integrates gesture recognition, helmet-based identity detection, and projection-based task guidance for the HRMS context, enabling workers to initiate, control, and coordinate tasks intuitively across multiple stations.

4. Laboratory proof-of-concept: We implement a real-world HRMS demonstrator with a gantry-style robot and two human workstations and conduct a pilot study assessing technical feasibility, task performance, and user experience.

The remainder of this paper is organized as follows. Section 2 introduces the HRMS concept as a novel integration of prior research streams, and Section 2.2 presents the task scheduler as a central enabler of HRMS. Section 3 describes the laboratory demonstrator as the first proof-of-concept implementation, followed by a user study assessing task performance and worker experience. Section 4 describes our findings in detail, Section 5 discusses the methods and results, and Section 6 concludes the paper.

3. Materials and Methods

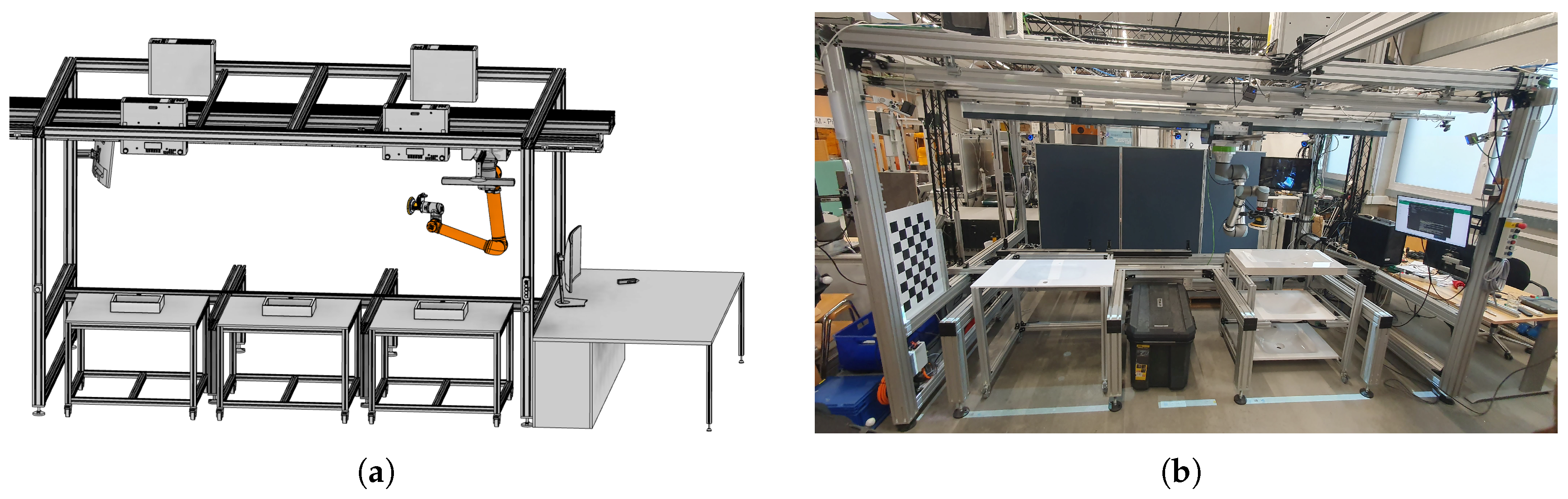

For the laboratory HRMS demonstrator, a setup with two workstations and one robot mounted on an overhead gantry was built, which allows humans to carry out inspection and rework activities in collaboration with the robot. Our HRMS laboratory setup is depicted in Figure 7a,b. It provides space for up to six workstations that can be flexibly arranged; the current study used two of them.

In the real-world implementation, the following hardware components are integrated:

Ceiling-mounted robotic system: A UR10e collaborative robotic arm (Universal Robots A/S, Odense, Denmark), mounted at a height of 1.9 m above the floor on a 4 m linear axis (OH-au2mate, Ponitz, Germany), forms a hybrid system with a simplified gantry-style configuration that enables access to all workstations (driving speed of the robot: ≈).

Projection system: LCD laser projectors PT-MZ880 and PT-VMZ51S (Panasonic Corporation, Osaka, Japan) provide visualization and projection of task-relevant information for the workstations, with each projector capable of serving two workstations. For the pickup/delivery station, a cost-effective Epson EF-11 (Seiko Epson Corporation, Suwa, Japan) is used, providing sufficient brightness for this use case.

Wide-angle cameras: Multiple Genie Nano cameras (Teledyne DALSA Inc., Waterloo, ON, Canada) with a 2/3″ sensor and a short focal length lens are used for scene understanding.

For marking defect positions, six OptiTrack PrimeX 13W cameras (NaturalPoint, Inc., Corvallis, OR, USA) were additionally integrated during the project to enable marker-based tracking of a handheld 3D pen (tracked 6-DoF stylus) [33]. These hardware components are integrated with their corresponding systems in the overall architecture; the systems are interconnected via Redis, enabling perception, projection, and robotic control.

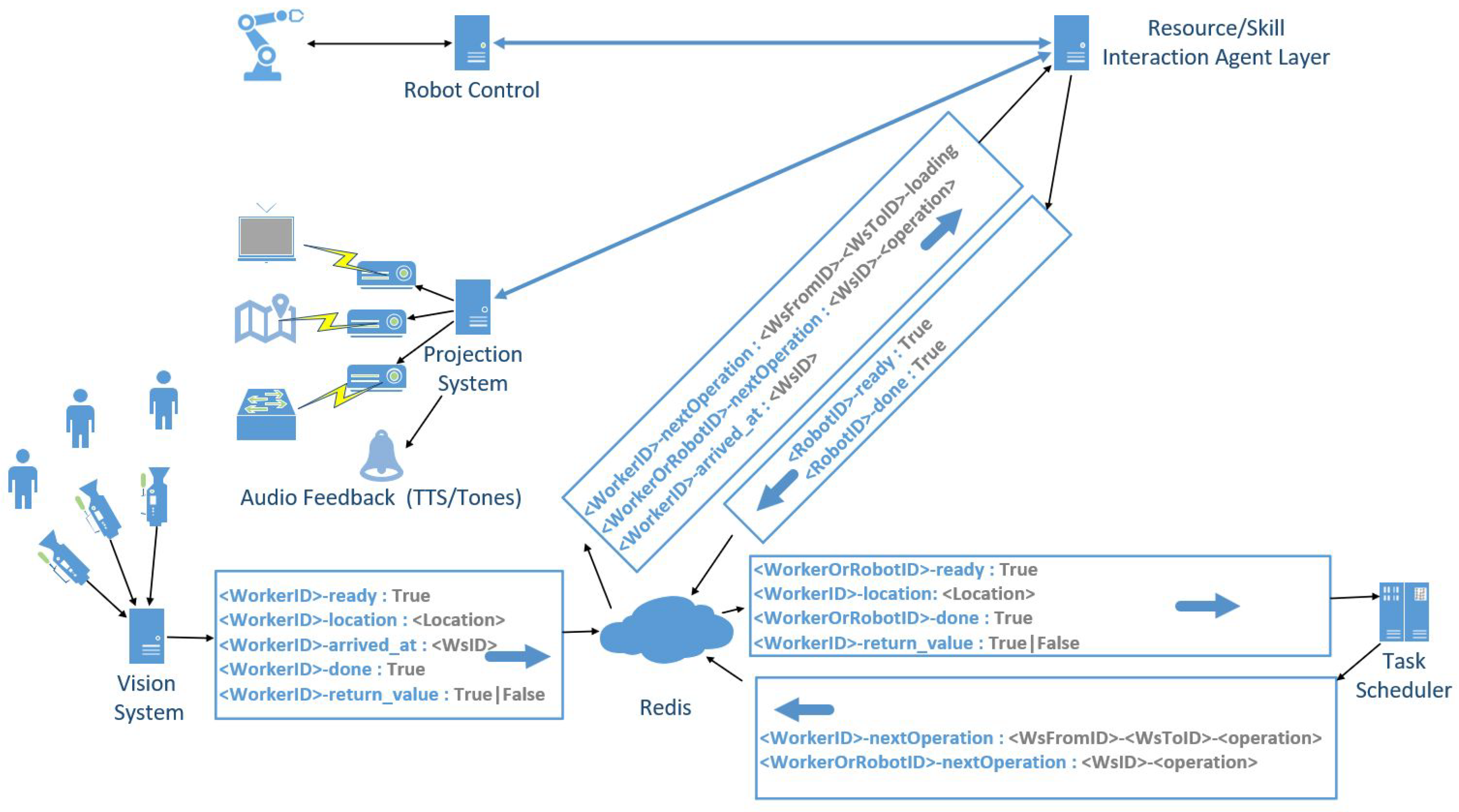

Figure 8 shows an overview of the system architecture and the Redis topic-based communication, indicating which systems publish and subscribe to specific keys, excluding the OptiTrack defect position marking. The systems are configured to delete the entries once the corresponding events have been processed.
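The publish, process, and delete pattern described above can be illustrated with a minimal sketch. It is not the authors' implementation: an in-memory dictionary stands in for Redis, and the key names (`gesture:ws1`, `task:ws2`) and the handler are hypothetical.

```python
from typing import Callable, Dict, List


class EventStore:
    """In-memory stand-in for a key-value store such as Redis (illustrative only)."""

    def __init__(self) -> None:
        self._entries: Dict[str, str] = {}

    def publish(self, key: str, value: str) -> None:
        """A producing system writes an event under a key."""
        self._entries[key] = value

    def consume(self, prefix: str, handler: Callable[[str, str], None]) -> int:
        """Handle every entry whose key starts with `prefix`, then delete it."""
        keys = [k for k in self._entries if k.startswith(prefix)]
        for key in keys:
            handler(key, self._entries[key])
            del self._entries[key]  # removed once the event has been processed
        return len(keys)

    def pending(self) -> List[str]:
        """Keys that no consumer has processed yet."""
        return sorted(self._entries)


# Hypothetical events: a gesture at workstation 1 and a task for workstation 2.
store = EventStore()
store.publish("gesture:ws1", "ready")
store.publish("task:ws2", "load")

seen = []
handled = store.consume("gesture:", lambda key, value: seen.append((key, value)))
```

After the call, only the unprocessed `task:ws2` entry remains pending, which mirrors the idea that each system clears the entries it has handled.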

The resource/skill agent layer acts as the execution bridge between the task scheduler and the physical systems. The layer runs software agents for each worker and robot, responsible for controlling the UR10e on the linear axis, equipped with a Mirka AIROS 650CV robotic sanding unit (150 mm pad diameter, 5 mm orbit)—also suitable for polishing applications (Mirka Ltd., Jeppo, Finland). Moreover, the resource/skill agent layer generates appropriate task guidance information through the projection system described in [

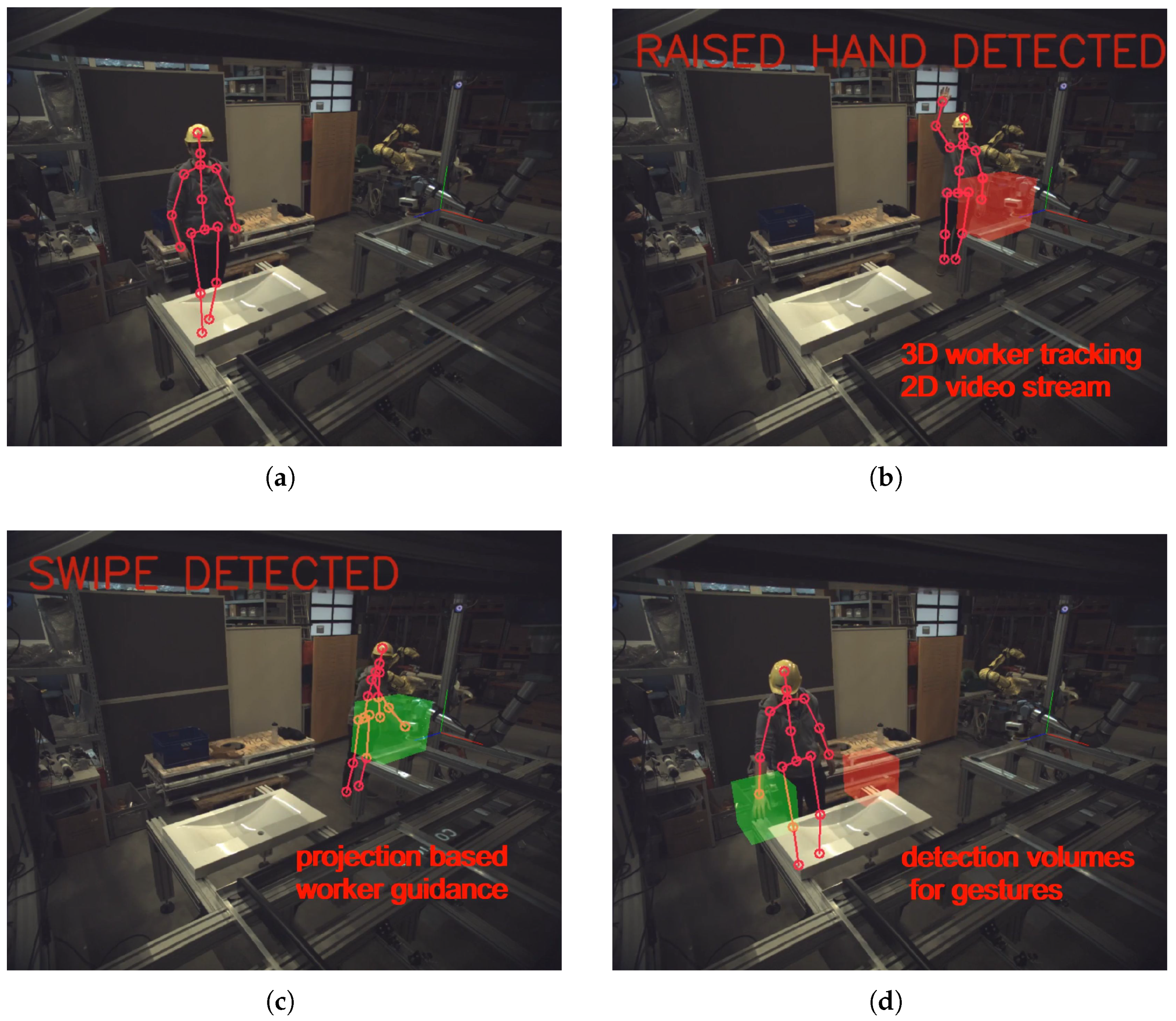

34]. For the vision system, gesture recognition using wide-angle cameras was implemented as an interaction modality. Humans wearing helmets are identified and the corresponding helmet color is used in the projections on the workstation to which they are assigned for tasks. Both tasks could benefit from previous work [

35,

36]. The assignment of tasks occurs only for helmet-wearing humans (

Figure 9a) who actively signal ‘Ready’ by raising a hand, reflecting a human-initiative approach. Three types of gestures are supported (

Figure 9b–d): hand raising to signal to be ‘Ready’ for the next task, swipe gestures to confirm task completion (‘Done’), and contextual swipes to answer yes/no questions, e.g., whether an inspection was acceptable. The task scheduler, described in detail in

Section 2.2, coordinates the consistent execution of the process plans among all participants.

Following initial tests, two issues were observed: workers could not reliably tell whether their gestures were correctly interpreted, and sometimes forgot to raise a hand to request a new task after completing the previous one. To address this, an audio feedback module was added to the projection system. The module subscribes to the same Redis topics as the visual cues and emits brief text-to-speech messages or tones on events. Specifically, the system now plays in- and out- tones for ‘Ready’ and ‘Done’ events to confirm that the vision system has recognized the workers’ gestures and remind workers to raise a hand to request the next task.

3.1. Task and Interaction Specification for HRMS

Unlike conventional single-station HRC setups, the HRMS is characterized by multiple stations, shared robotic resources, human-initiative task allocation, and perception-driven events. This requires a precise specification of resources, preconditions, projections, and tasks to ensure consistent coordination across the perception, projection, scheduling, and robotic subsystems. To implement the process plan in Figure 2, the individual steps were detailed with respect to the required resources, dependencies, projections, and tasks assigned to humans and robots. Table 1 summarizes these specifications. For each step, it lists (i) the resources (Human, Robot, Workstation, Feeder/Exit), (ii) the preconditions (e.g., human wearing helmet in color, human signaled ‘Ready’ (raised hand) for task, robot ready, flag set if the previous inspection was signaled as Ok or Nok), (iii) the projected cues (project color on feeder, workstation or exit, contextual swipe detection), and (iv) the task actions. The preconditions reflect the principle of human-initiative interaction (e.g., human signaled ‘Ready’), while projections provide context-sensitive SAR support at the stations.
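One way to picture such a step specification is as a small record whose preconditions gate the start of the step. The sketch below is illustrative only and assumes a simple boolean state: the field names, flag names (`helmet_detected`, `human_ready`, `robot_ready`, `inspection_nok`), and the two example steps are hypothetical and are not taken from Table 1.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StepSpec:
    """One process step: who and what it needs, and what is projected."""
    name: str
    actor: str                 # "human" or "robot"
    resources: List[str]       # e.g., Human, Robot, Workstation, Feeder/Exit
    preconditions: List[str]   # flags that must all be true before the step starts
    projection: str            # cue shown at the station


def is_enabled(step: StepSpec, state: Dict[str, bool]) -> bool:
    """A step may start only if every precondition flag is set in the state."""
    return all(state.get(flag, False) for flag in step.preconditions)


# Hypothetical human step: requires a detected helmet and a raised hand.
load = StepSpec(
    name="load",
    actor="human",
    resources=["Human", "Workstation", "Feeder"],
    preconditions=["helmet_detected", "human_ready"],
    projection="helmet color on feeder and workstation",
)

# Hypothetical robot step: requires a free robot and a 'Nok' inspection result.
polish = StepSpec(
    name="polish",
    actor="robot",
    resources=["Robot", "Workstation"],
    preconditions=["robot_ready", "inspection_nok"],
    projection="polishing regions on workpiece",
)

state = {"helmet_detected": True, "human_ready": False, "robot_ready": True}
```

With this state, neither step is enabled: the worker has not yet signaled ‘Ready’, and no inspection result has been flagged. Setting `human_ready` to true enables the load step, which reflects the human-initiative principle.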

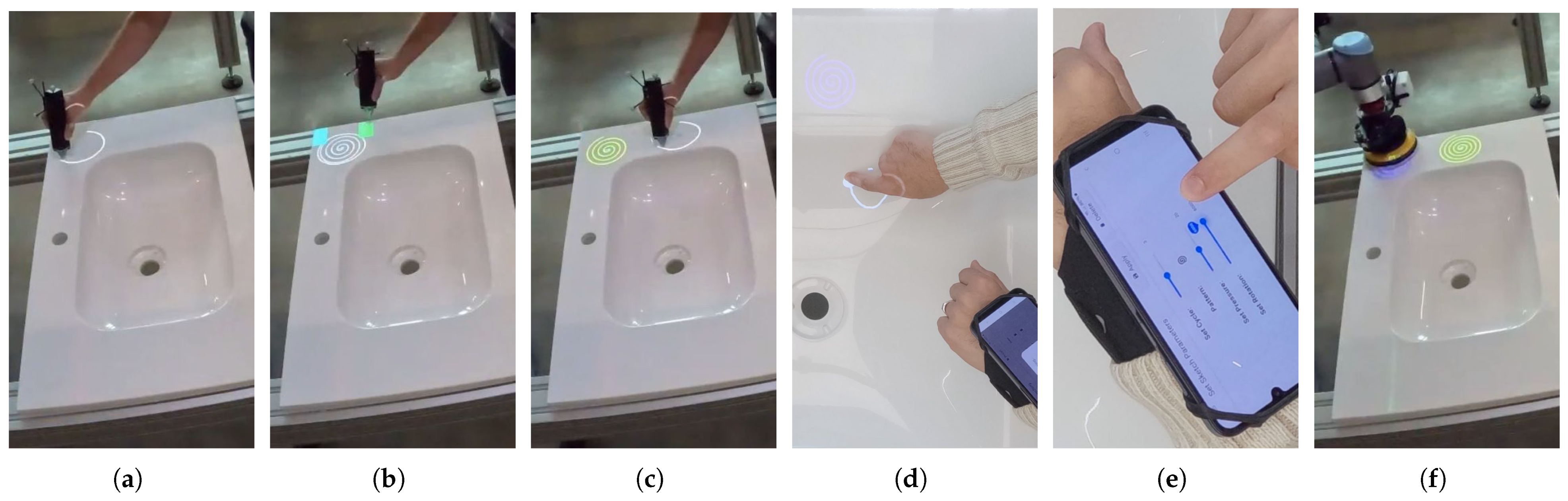

An illustrative example of the process-step loading is shown in Figure 10a–d. The helmet-wearing worker first signals ‘Ready’ (Figure 10a) and then is guided to load a basin from a feeder (Figure 10b) into a workstation (Figure 10c). During insertion of the basin into the workstation, the text ‘load’ is displayed at the basin (Figure 10d). After successful insertion of the basin into the workstation, the end of the load task is confirmed by a swipe gesture (Figure 10e). Additionally, Figure 10f shows the Ok/Nok display for contextual swipe detection to ask for the result of the inspection task, which always follows the load task.

For defect marking, we build on prior work: 3D positions can be specified either with a 3D pen [37,38] (Figure 11a–c) or by freehand finger drawing using SketchGuide [39,40] (Figure 11d,e). For our lab study, we used the previously developed 3D-pen-based interface because it is integrated with the existing OptiTrack setup and requires no additional sensors, minimizing setup time. After a worker defines and confirms regions on the workpiece, the projection mapping visualizes the suggested polishing regions on the workpiece. Finally, after the worker has confirmed all work regions, the scheduler assigns the polishing task to the robot. In the next step of the process, the confirmed regions are translated into robot paths, and the robot executes the polishing accordingly (see Figure 11f), following the projection-based approach described in [37]. The inspection–setup–polish cycle repeats until no defects remain.

3.2. Study Design

We conducted an observational within-subjects pilot study of a human–robot collaboration (HRC) workflow with five target users (n = 5; PROFACTOR employees) in the laboratory setup described above. The study was designed as a formative pilot in a safety-critical setting to establish technical feasibility, interaction flow, and failure modes under controlled laboratory conditions. For safety and rapid iteration, we recruited internal volunteers to enable a low-risk first validation of the system. The age of the participants (4 males, 1 female) ranged from 22 to 42. Only one participant had no robotic experience. Recruitment followed an internal call; participation was not compensated. Inclusion criteria were normal or corrected-to-normal vision and the ability to stand and manipulate small parts. The exclusion criterion was direct prior participation in designing the evaluated HRMS prototype. All participants had to execute two jobs (Job A, Job B) together with the polishing robot with the help of the HRMS. This allowed us to compare Job A and Job B with respect to learning effects. For the task scheduler, we used only the SPA heuristics. All sessions began with a briefing/informed consent and a safety induction. The participants then received scripted, standardized instructions on the study procedure (task sequence, timing, permitted interactions, pause/stop rules) and completed a practice trial. An emergency stop was within reach, and predefined stop criteria were applied.

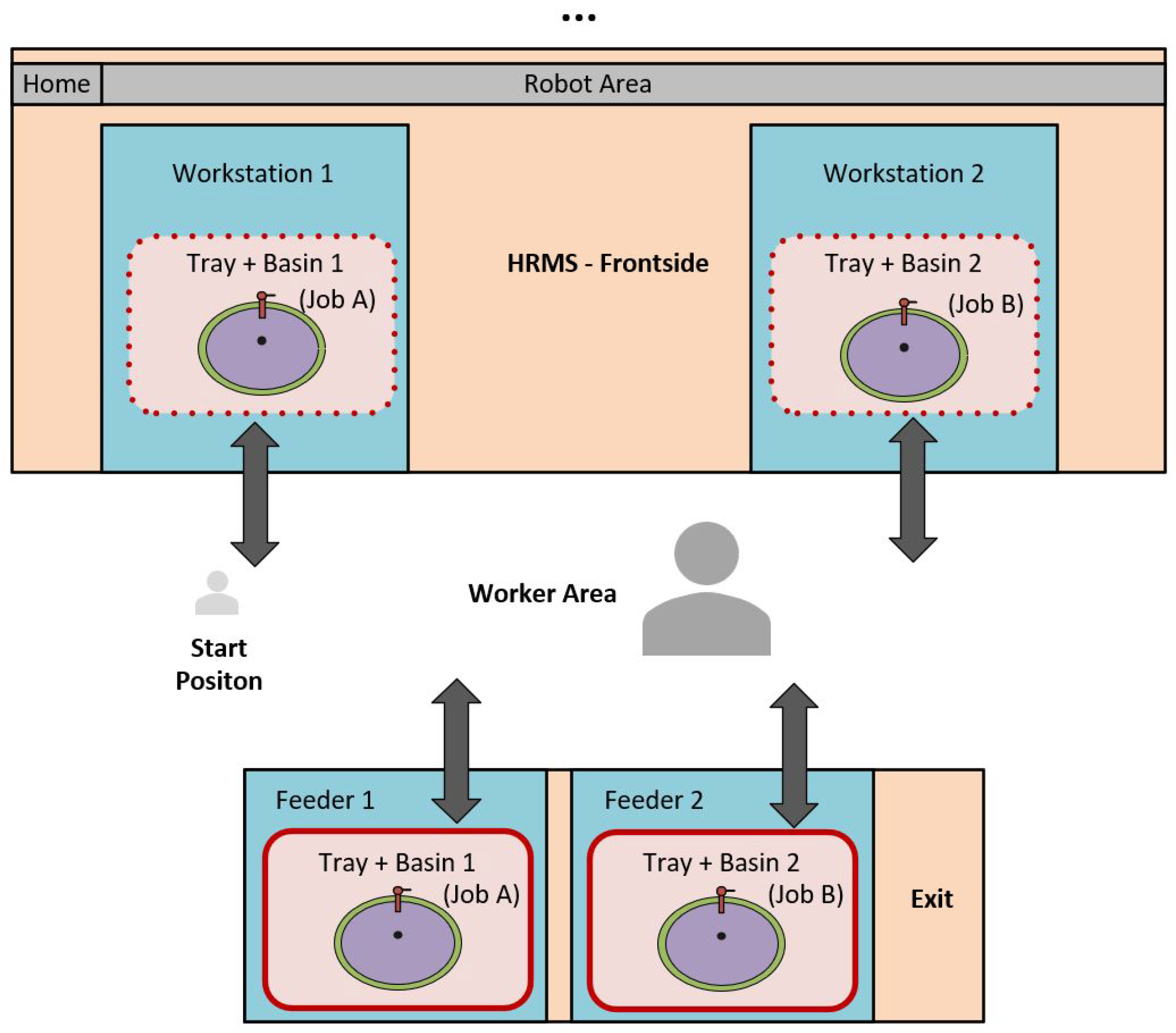

For the two jobs, participants first had to fetch a basin on a tray from a feeder and transport it to an appropriate workstation at the HRMS. They then had to work together with the polishing robot, as defined by the process plan given in Figure 2, until no more defects were found. Finally, the basin had to be transported to the exit area. The session ended after both jobs were completed. Figure 12 shows the placement of workstations, feeders, and jobs in the laboratory. A video illustrating the task setup of the pilot study is provided in [41].

For defects, we created a single issue (defect) with a removable marker on each basin. The participants were told to mark these issues with the 3D pen so that the robot could polish them. This guaranteed that only one rework cycle occurred, as the robot polished the removable mark away in the first cycle. For each session, performance-related metrics were recorded by analyzing the videos taken. Job throughput time was measured from the start signal (first confirmed user event) to each job completion. The following errors were recorded: (E1) safety-critical/procedural errors (for example, the tray was not secured to the base with the safety clips before the robot started polishing, the basin was fetched from or moved to the wrong station, …); (E2) the need for intervention by the study management (an instruction had to be given so that the process could continue); (E3) system misbehavior (for example, button not clickable, correct gesture not recognized); and (E4) technical interruptions/stop events (e.g., emergency stop). The analyses are descriptive. For continuous metrics, we report the mean ± SD and 95% CI per job (A/B) and overall. For count measures, we report totals (and percentages). Inferential tests are not reported.

After the session, the participants completed the System Usability Scale (SUS) [42], the Godspeed Questionnaire Series (GQS) [43] to capture robot-related perceptions (Anthropomorphism, Animacy, Likeability, Perceived Intelligence, Perceived Safety), and the RAW NASA Task Load Index (NASA-TLX) to estimate human workload [44,45]. The German translations followed established sources: SUS [46], GQS [47], and NASA-TLX [48]. To obtain feasible improvement ideas, we added brief post-task open-ended prompts (for example, ‘The first change you would make’, ‘Lack of feedback’, ‘Moments with low perceived safety’).

3.3. Statistical Analysis

Given the pilot sample (n = 5), analyses were descriptive. Continuous outcomes are summarized as mean ± SD with two-sided 95% CIs computed via bias-corrected and accelerated (BCa) bootstrap. Unless stated otherwise, we used 10,000 resamples with participant-level resampling. For job-specific metrics (Job A, Job B) we report mean ± SD and 95% CIs based on participant-level resampling. For the overall time, we first compute a within-subject average for each participant and then obtain its BCa 95% CI by applying the same participant-level bootstrap to the set.
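To make the interval construction concrete, the following is a minimal pure-Python sketch of a BCa bootstrap for the mean with participant-level resampling. It is not the authors' R code (the `boot` package was used in the study); the function name, the clamping of the bias-correction share, and the example values are assumptions made for illustration, and the data are hypothetical rather than the study measurements.

```python
import random
from statistics import NormalDist, mean


def bca_ci(data, stat=mean, n_resamples=10_000, alpha=0.05, seed=1234):
    """Two-sided BCa bootstrap confidence interval for stat(data)."""
    data = list(data)
    n = len(data)
    rng = random.Random(seed)
    norm = NormalDist()
    theta_hat = stat(data)

    # Resample participants with replacement and recompute the statistic.
    boots = sorted(stat(rng.choices(data, k=n)) for _ in range(n_resamples))

    # Bias correction z0 from the share of replicates below the estimate
    # (clamped so that the normal quantile stays finite).
    share = sum(b < theta_hat for b in boots) / n_resamples
    share = min(max(share, 1 / (n_resamples + 1)), n_resamples / (n_resamples + 1))
    z0 = norm.inv_cdf(share)

    # Acceleration from leave-one-out (jackknife) estimates.
    jack = [stat(data[:i] + data[i + 1:]) for i in range(n)]
    jack_mean = mean(jack)
    num = sum((jack_mean - j) ** 3 for j in jack)
    den = 6.0 * sum((jack_mean - j) ** 2 for j in jack) ** 1.5
    accel = num / den if den else 0.0

    def adjusted_quantile(p):
        # Shift the nominal percentile by bias correction and acceleration.
        z = norm.inv_cdf(p)
        level = norm.cdf(z0 + (z0 + z) / (1.0 - accel * (z0 + z)))
        index = min(max(int(level * n_resamples), 0), n_resamples - 1)
        return boots[index]

    return adjusted_quantile(alpha / 2), adjusted_quantile(1 - alpha / 2)


# Hypothetical per-participant times in seconds (not the study data).
times = [200.0, 220.0, 235.0, 250.0, 270.0]
low, high = bca_ci(times)
```

For the overall time, the same function would be applied to the set of within-subject averages, one value per participant. With n = 5, only a limited number of distinct resamples exists, so intervals of this kind are best read as rough indications.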

Questionnaire scores are treated as continuous summaries; scale ranges are SUS (0–100), GQS (1–5; higher is better), and RAW NASA-TLX (0–100; higher indicates greater workload).

Implementation details: analyses were performed in R (v4.0.3, 2020-10-10) using the boot package (v1.3-25); random seed 1234. We did not perform hypothesis tests and do not draw confirmatory inferences. Adjective mapping for SUS [49] and RAW NASA–TLX [50] was used only as orientation. This pilot serves to explore feasibility and signaling.

3.4. Protocol Notes and Rationale

We (a) normalized Godspeed Safety anchors (safe on the right) and added anchor instructions to avoid reverse-coding; (b) moved NASA–TLX Performance to the end and labeled anchors; and (c) avoided concurrent/retrospective think-aloud in favor of brief post-task open-ended prompts to minimize interference in a safety-critical setting. The overall GQS is computed as the simple unweighted mean of the subscales. Counts (e.g., error classes E1–E4) are reported as raw frequencies (and percentages) without CIs.

4. Results

In view of the pilot design (n = 5), outcomes for technical performance and usability were summarized descriptively (mean ± SD; 95% BCa CIs); see Section 3.3 for statistical details. Questionnaire outcomes are reported in Table 2 and performance metrics in Table 3. Details are as follows:

4.1. System Usability (SUS)

Overall usability was a mean SUS score of 70.5 (see Table 2 for the SD and 95% CI). CIs are computed as specified in Section 3.3. This is typically interpreted as ‘OK’ bordering ‘Good’ [49]. Interpretive bands are used for orientation only.
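For orientation, the standard SUS scoring rule can be sketched as follows. The function name and the example responses are illustrative assumptions; only the scoring rule itself (ten items rated 1 to 5, odd items contributing the rating minus 1, even items contributing 5 minus the rating, and the sum scaled by 2.5) is the established procedure.

```python
def sus_score(responses):
    """Standard SUS score (0-100) from ten item ratings on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item ratings")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each rating must lie between 1 and 5")

    total = 0
    for item, rating in enumerate(responses, start=1):
        if item % 2 == 1:
            total += rating - 1   # odd items are positively worded
        else:
            total += 5 - rating   # even items are negatively worded
    return total * 2.5


# Hypothetical single-participant responses (not study data).
neutral = sus_score([3] * 10)
best = sus_score([5, 1] * 5)
```

A participant who answers every item with the neutral midpoint obtains a score of 50, and the most favorable response pattern yields 100. The study-level value is the mean of such per-participant scores.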

4.2. User Perceptions (Godspeed, 1–5)

Overall GQS was 3.2 ± 0.8 (95% CI in Table 2). Subscale means (SD) and the corresponding 95% CIs for Anthropomorphism, Animacy, Likeability, Perceived Intelligence, and Perceived Safety are given in Table 2. CIs were computed as specified in Section 3.3.

4.3. Workload (NASA-TLX, RAW)

The mean, SD, and 95% CI of the RAW NASA-TLX total are given in Table 2. CIs were computed as specified in Section 3.3. The total is typically interpreted as a moderate, ‘Somewhat high’ workload [50]. Totals used the standard RAW-TLX computation (unweighted mean of the six dimensions).

Dimension means (0–100; lower = less load) were Mental 38, Physical 29, Temporal 46, Performance 45 (higher = worse performance), Effort 30, and Frustration 34. Temporal (46) and Performance (45) were highest; Physical (29) was lowest.
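The RAW-TLX computation is a plain unweighted mean of the six dimension ratings, as the short sketch below shows. The function and variable names are illustrative. Applying it to the rounded dimension means listed above gives only an approximate group-level figure, since the per-participant ratings are not reproduced here and rounding may shift the result slightly.

```python
DIMENSIONS = ("Mental", "Physical", "Temporal", "Performance", "Effort", "Frustration")


def raw_tlx(ratings):
    """RAW NASA-TLX: unweighted mean of the six dimension ratings (0-100)."""
    missing = [d for d in DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"missing dimensions: {missing}")
    return sum(ratings[d] for d in DIMENSIONS) / len(DIMENSIONS)


# Rounded dimension means as listed in the text (group level, not raw data).
reported_means = {
    "Mental": 38,
    "Physical": 29,
    "Temporal": 46,
    "Performance": 45,
    "Effort": 30,
    "Frustration": 34,
}

approx_total = raw_tlx(reported_means)
print(approx_total)  # 37.0
```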

4.4. Performance Metrics

Mean throughput time was 232.5 ± 46.5 s overall, with Job A at 258.4 ± 46.8 s and Job B at 206.6 ± 31.7 s (95% CIs in Table 3). CIs were computed as specified in Section 3.3. To compare Job A and Job B throughput time (paired data, n = 5), we used the exact Wilcoxon signed-rank test. We report p-values for both the directed and two-sided alternatives (V = 15; one-sided (A > B) p = 0.031; two-sided p = 0.063). The Hodges–Lehmann (HL) estimator indicated that Job A exceeded Job B by a median of 53.0 s (95% BCa CI = [35.5, 72.0]), consistent with the Wilcoxon signed-rank test, although with considerable uncertainty due to n = 5. However, because Job A experienced systematic disadvantages due to scheduling interactions with Job B (a longer block when switching from A→B; for details, see Section 5), the throughput times cannot be interpreted as evidence of a learning effect.
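The exact p-values can be checked by enumerating all sign patterns of the ranks. The sketch below is a generic illustration rather than the analysis script used in the study; it assumes n non-zero differences without tied absolute values, and the function name is hypothetical.

```python
from itertools import product


def exact_signed_rank_p(v_obs, n):
    """Exact p-values for the Wilcoxon signed-rank statistic V.

    V is the sum of the ranks of the positive differences. Under the null
    hypothesis, each of the 2**n sign patterns is equally likely.
    Returns (one-sided p for 'greater', two-sided p).
    """
    max_v = n * (n + 1) // 2
    counts = [0] * (max_v + 1)
    for signs in product((0, 1), repeat=n):
        v = sum(rank for rank, positive in zip(range(1, n + 1), signs) if positive)
        counts[v] += 1

    total = 2 ** n
    p_greater = sum(counts[v_obs:]) / total
    p_less = sum(counts[: v_obs + 1]) / total
    p_two_sided = min(1.0, 2 * min(p_greater, p_less))
    return p_greater, p_two_sided


p_one, p_two = exact_signed_rank_p(15, 5)
print(p_one, p_two)  # 0.03125 0.0625
```

With n = 5, V = 15 is the largest attainable value, meaning all five differences favor the same direction. The one-sided p-value of 1/32 is therefore the smallest this test can produce at this sample size, which is why the two-sided value cannot fall below 0.0625.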

4.5. Errors

We instructed all participants to lock the wheels after placing the basin in the workstation. This step was forgotten in only one out of ten cases (E1). Most errors concerned the handling of swipe gestures. In total, there were five situations in which participants forgot to carry out the final swipe gesture (E2), and in one case the user asked whether a swipe gesture was necessary (E2). One participant forgot to take the 3D pen (E2), and one participant forgot to raise a hand and could not proceed (E2); several other participants raised their hands only after noticing that no new job had appeared for them for a while. Among the system errors (E3), the most serious was an incorrect configuration of the green button on the basin at workstation 1: the location in space where the gesture was expected appeared to be about 20 cm further to the right than the green projection suggested. Pressing the red button on workstation 1 was sometimes not recognized by the system. Most users used their whole right or left hand for the button gesture; only two preferred to use a single finger, which also worked. At workstation 2, one participant had to repeat the swipe gesture seven times before the system recognized it correctly. A technical restart (E4) was necessary when the system did not assign new tasks due to blocked gesture recognition.

In total, E2 errors (procedural/intervention) occurred seven times for Job A and one time for Job B, while E3 errors (system misbehavior) were counted three times for Job A and three times for Job B. No Job A and only one Job B were completed without errors. In total, only 10% of the jobs were completed without errors.

Across five E2 paired observations, the second condition produced fewer errors in 3/5 pairs, with two ties and none worse. To compare E2 for Job A and Job B (paired data, n = 5), we used the exact Wilcoxon signed-rank test with ties excluded (effective n = 3). We reported p-values for both the directed and two-sided alternatives: (V = 6; one-sided (A > B) p = 0.125; two-sided p = 0.250). The HL estimate (ties excluded) indicated that Job A exceeded Job B by a median of two errors (95% BCa CI = [1.0, 2.75]), which is consistent with the Wilcoxon test, but represents only weak evidence given the very small effective sample size (n = 3).
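The Hodges–Lehmann estimate for paired data is the median of all Walsh averages, that is, the pairwise means of the differences including each difference with itself. The following sketch shows the computation on hypothetical differences; the values and the function name are assumptions for illustration and are not the study data.

```python
from itertools import combinations_with_replacement
from statistics import median


def hodges_lehmann(diffs):
    """Hodges-Lehmann estimate for paired differences (median of Walsh averages)."""
    walsh = [(a + b) / 2 for a, b in combinations_with_replacement(diffs, 2)]
    return median(walsh)


# Hypothetical paired differences, Job A minus Job B (not the study data).
example_diffs = [40.0, 50.0, 60.0]
estimate = hodges_lehmann(example_diffs)
print(estimate)  # 50.0
```

When ties are excluded, as in the E2 comparison, the zero differences are dropped before the Walsh averages are formed, so the estimate describes only the pairs that differ.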

4.6. Open-Ended Post-Task Feedback + Observed Issues from the Videos

Thematic coding (descriptive counts; no inferential statistics) yielded three most recurrent themes: (i) improve the audio signal’s sound level (3/5 mentions), (ii) improve the display output (3/5), and (iii) reduce unnecessary gestures (for example, after configuring the task, users prefer to mark failures immediately without the additional ‘inspection’ step) (3/5). The videos showed that participants sometimes started to perform tasks before the display on the basin showed the corresponding step text. Three of the five users used our internal central debugging monitor to find out which gestures they were expected to perform; this would not work in multi-user settings. Two users appeared to get nervous when they heard the robot moving. In addition, the sound of the moving robot prevented participants from hearing the in/out audio signals.

5. Discussion

The HRMS implemented in the laboratory works well for the most part. For example, it consistently recognized gestures to determine whether people had arrived at their workstations or not. The task scheduler worked well in the laboratory—the SPA algorithm always scheduled the tasks closest to the participants. In tests, the robot arm sometimes obscured the cameras, making gesture recognition more difficult. One finding is that, where possible, at least two cameras should always be used to cover each relevant area in order to achieve good detection.

Our objectives were technical feasibility, usable task guidance, and human-initiative scheduling in a multistation ZDM cell. The pilot confirms feasibility under lab conditions but also reveals recurrent task-level failures (notably E2/E3) that currently limit robust operation and must be addressed before field deployment.

Despite the very narrow testing conditions in our laboratory, all test subjects were able to recognize where their next task was located because of the flashing LED light on the projectors. And there was not a single wrong transport. Nevertheless, although the light from the inexpensive EPSON projector used in the tests at the feeder/storage locations was sufficient, a more powerful projector should be used in the future.

The SUS mean of 70.5, which can be rated as ‘OK’ and close to the ‘Good’ range, indicates basic acceptability despite remaining systemic errors, with room to improve confirmation flows and cue clarity, consistent with participant comments.

A more detailed comparison of the throughput times for Job A and Job B, based on a subsequent review of the session videos, revealed that Job A was systematically disadvantaged by the sequence of tasks. After the operator switched from Job A to Job B, once the robot had started the polish task for Job A, the SPA algorithm kept the operator at Job B, resulting in a longer blockage of Job A. When the operator later returned to Job A, once the robot had started the polish task for Job B, only short finishing tasks were required for Job A, so Job B was not comparably blocked. This asymmetry between jobs, caused by their sequence and interdependence, distorts the time measurements. Accordingly, the observed throughput time differences between Job A and Job B (including the Wilcoxon tests and the HL estimate) should not be interpreted as a learning or acceleration effect. They reflect planning/interaction effects rather than changes in participants’ performance.

The fact that only one out of ten jobs could be completed entirely error-free was due to the still excessive number of system errors. Thus, addressing these specific failure modes and confirming usability and throughput in larger and more diverse samples remain essential next steps. The contextual swipe generally worked well, regardless of whether users pressed the button with their entire hand or with just one finger, except for the green buttons that were incorrectly set on workstation 1. Contextual swipe recognition occasionally underperformed because changing laboratory conditions for other activities required repeated, time-consuming recalibration of the vision system, which led to problems on the day of the tests. Most E3 problems may become avoidable if a stable, calibrated setup is ensured. The swipe gesture, on the other hand, needs to be significantly revised. In many cases, it did not work as desired, or users forgot to perform it altogether, which accounts for more than half of the E2 errors. The problem is that, unlike for the contextual swipe, the projector does not display any information indicating that a swipe gesture is expected. Replacing the swipe gesture with a simple contextual button could lead to better results in the medium term.

Currently, error marking is not sufficiently integrated. For example, there is no signal to indicate when all errors have been marked. Instead, the system listens for a final swipe gesture while marking can be performed with the 3D pen. A major change in the architecture may become necessary to remedy this situation, also because users would have preferred to perform the inspection and configure tasks in a single step.

The E2 data (procedural/intervention) consistently favor fewer errors in the second condition (no pair worsened), but inference is weak due to the very small effective sample size (n = 3) and many ties and floor effects. The exact tests and the BCa interval point in the same direction, yet they are not significant, so small or null effects cannot be ruled out. Practically, the trend is encouraging but preliminary.

On the NASA–TLX, Physical Demand received the lowest score, likely because the trays simplify basin handling. Temporal Demand was comparatively higher, indicating some perceived time pressure. A comparable Performance score suggests that some participants were not fully satisfied with their own performance. This perception may be partly attributable to system-related issues. For example, one participant had to repeat the swipe gesture seven times. Although this was not the fault of the participant but a technical limitation, such incidents may have contributed to lower perceived performance in this pilot. Moderate RAW NASA-TLX scores point to cognitive/temporal load from confirmations and ambiguous cues. Reducing confirmation steps and increasing the frequency of cues are expected to reduce perceived workload. Scheduling tasks based on proximity and clear signaling in the workplace can also reduce unnecessary walking and shifts in attention.
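As a reminder of how a RAW NASA-TLX value is obtained, the sketch below computes the unweighted mean of the six subscale ratings, assuming each subscale is rated on a 0 to 100 scale. The dictionary keys, the function name, and the example ratings are hypothetical and are not data from this study.

```python
SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings):
    """Unweighted (RAW) NASA-TLX: mean of the six subscale ratings (0-100 each)."""
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    for name in SUBSCALES:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"{name}: rating must be between 0 and 100")
    return sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)


# Hypothetical participant with low physical and higher temporal demand.
example = {
    "mental_demand": 40,
    "physical_demand": 10,
    "temporal_demand": 55,
    "performance": 45,
    "effort": 35,
    "frustration": 35,
}
print(round(raw_tlx(example), 1))
```

Because the RAW variant omits the pairwise weighting step, each subscale contributes equally, so a single elevated subscale such as Temporal Demand shifts the overall score by only one sixth of its change.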

In current tests, only a single low-cost central speaker was used to generate the acoustic in- and out-signals. As a result, user feedback criticized the low volume. Future use of industrial ceiling speakers, for example, could improve this. Some of these devices also have microphones, which would enable voice input.

Regarding the Godspeed questionnaire, Perceived Safety was the main focus. The Perceived Safety score averaged 3.5 (SD = 0.9), suggesting generally favorable perceptions; interpretation remains descriptive given the pilot design. The robot’s driving noise may have contributed to the signs of nervousness observed in the video recordings, which raises the question of whether the noise level can be reduced. Accordingly, for safety reasons and as part of standard commissioning, the maximum permissible speed should be confirmed. If necessary, the driving speed has to be reduced, which will also reduce the noise level.

The small sample size (n = 5) reflects the exploratory objective of identifying system and interaction problems at an early stage; the study is not intended to support general conclusions. The laboratory environment at a single location may also introduce location-specific effects (calibration, special features of the facility).

Related work shows that effective HRC requires specially tailored workplace adjustments [7]. Our HRMS meets these requirements through projection-based guidance, camera calibration procedures, and agent-based schedules that adjust access and workflow. The scheduler and the implemented task allocation are designed to take into account changes in HRMS actors and tasks. For our use case, for example, the basins could be delivered by AGVs that dock under the trays and position them at the workstations, which would also reduce E1 errors (tray not secured before polishing). Similarly, human inspection and error marking could be preceded by robotic AI-based pre-inspection and defect detection, followed by targeted human checks. More broadly, the HRMS concept may extend beyond ZDM to production processes requiring HRC, e.g., assembly processes.

For future industrial applications of the HRMS framework, several issues require consideration. In real-world manufacturing settings, carefully positioned cameras and sensor fusion across the multi-camera system will be essential for the camera-based gesture recognition system to function properly. In multi-robot settings, it might be necessary to introduce scheduling constraints specifying which workstations are accessible to a robot. Depending on the installed multi-robot system and the HRMS topology, such a constraint can be dynamic if the accessibility of workstations is influenced by other robots. In larger HRMS topologies, an extended projection system will be required to ensure intuitive worker guidance, e.g., by adding projected arrows on the shop floor.
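One way such a dynamic accessibility constraint could be expressed is sketched below. It assumes that each robot has a static set of reachable workstations and that a workstation becomes temporarily inaccessible while another robot occupies it. The robot and workstation identifiers and the function name are hypothetical, and occupancy is only one of several possible sources of dynamic inaccessibility.

```python
def accessible_stations(robot, static_access, occupied_by):
    """Return the workstations the scheduler may currently assign to `robot`.

    static_access: maps each robot to the set of stations it can reach at all.
    occupied_by:   maps a station to the robot currently occupying it, if any.
    """
    return {
        station
        for station in static_access[robot]
        # A station stays accessible if it is free or held by the robot itself.
        if occupied_by.get(station) in (None, robot)
    }


# Hypothetical topology: two robots sharing workstation ws2.
static_access = {"r1": {"ws1", "ws2"}, "r2": {"ws2", "ws3"}}
occupied_by = {"ws2": "r2"}

print(sorted(accessible_stations("r1", static_access, occupied_by)))
print(sorted(accessible_stations("r2", static_access, occupied_by)))
```

A scheduler would re-evaluate this set whenever occupancy changes, so the constraint follows the state of the other robots rather than being fixed at configuration time.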

Future Work

In the next phase, we aim to eliminate as much of the detected system misbehavior as possible and to extend the interaction beyond gestures with multimodal inputs and safety-rated confirmation. We plan to assess either safety-rated tactile skin (AIRSKIN; Blue Danube Robotics GmbH, Vienna, Austria) or on-robot proximity sensing (Roundpeg; Aschheim/Munich, Germany) as an added layer for speed-and-separation monitoring, to evaluate the effects on cycle time, safety margins, and user acceptance, and to verify compliance with ISO 10218 [51, 52] and ISO 13849 [53] within the HRMS.

Currently, the laboratory setup recognizes only yellow helmets and is thus not capable of multi-human operation. Updating the system accordingly and testing scalability with multiple humans is on the near-term roadmap. Expected challenges include reliably recognizing different helmet colors under a wide variety of lighting conditions. The use of multiple speakers at workstations could lead to signals, such as the in- and out-tones played for ‘Ready’ and ‘Done’ events, being misunderstood by workers at other stations. To enable human-initiative scheduling, workers must reliably know whether the system has already scheduled them. Whether alternatives to loudspeaker output are necessary, or whether projected instructions in different colors work, can only be determined in concrete multi-human tests.

Simulation KPIs (e.g., jobs per hour) measured in our previous simulation runs are not comparable to the lab setting because of the simplified lab setup and different operation times. We plan to extract operation-time distributions from the videos and use them to calibrate the simulation, which will allow predictions for HRMS configurations with multiple humans and robots and more than two workstations.

After implementing the necessary system improvements identified in this pilot, we plan to conduct a larger study with an externally recruited, more diverse participant sample to increase statistical power and narrow the confidence intervals. The primary outcomes will be SUS and job throughput time. Secondary outcomes will include error rates (E1–E4), NASA-TLX, and Godspeed Safety.

6. Conclusions

We asked, ‘How to implement a human-centric approach to task scheduling in an end-of-line quality assurance situation with human–robot collaboration?’ We answered this by specifying and building the Human–Robot Multistation System framework that integrates (i) multistation resource sharing, (ii) human-initiated task allocation via gestures and identity, (iii) projection-based guidance, and (iv) agent-based scheduling, and by validating technical feasibility in a ZDM lab demonstrator.

A gantry-style laboratory demonstrator for the final inspection process in a ZDM use case was realized and evaluated in an exploratory pilot (n = 5) focused on ergonomics and other human-factor outcomes. SUS, GQS, RAW NASA–TLX, and brief open-ended comments were collected to capture usability, perceptions, workload, and improvement ideas. The study confirms the technical feasibility of HRMS under laboratory conditions while still exposing recurrent task-level failures. Addressing these specific failure modes and confirming usability and throughput in larger, more diverse samples remain essential next steps.

Quantified outcomes were SUS 70.5 ± 22; overall GQS 3.2 ± 0.8; RAW NASA-TLX 37 ± 16.3; mean job throughput time 232.5 ± 46.5 s; and errors in 9/10 jobs (E1 = 1, E2 = 8, E3 = 6, E4 = 1; 1/10 jobs error-free), which should be addressed in future work. In simulation, a proximity-aware shortest-path heuristic reduced walking distance by up to 70% versus FIFO without reducing productivity.

Usability was acceptable, with a SUS mean of 70.5 (interpreted as ‘OK’ nearing ‘Good’), and perceived workload was moderate. Observational analyses and participant feedback identified concrete opportunities to streamline confirmations, strengthen visual and audio feedback, and clarify projected cues at the workstations. Procedure- and recognition-related errors revealed where robustness, calibration routines, and interaction details should be refined to support better task execution.

Specific recommendations for industrial applications are to use carefully positioned and routinely calibrated multi-camera vision, provide redundant visual/audio feedback with reliable input modalities (e.g., buttons for critical confirmations), employ proximity-aware scheduling to reduce walking, and ensure adequate projector coverage at stations.

The work is preliminary by design. The single-site laboratory setting, small sample size, and the specific gantry configuration limit generality to other HRMS topologies and field conditions. All inferences are descriptive.