A Novel WaveNet Deep Learning Approach for Enhanced Bridge Damage Detection

Abstract

1. Introduction

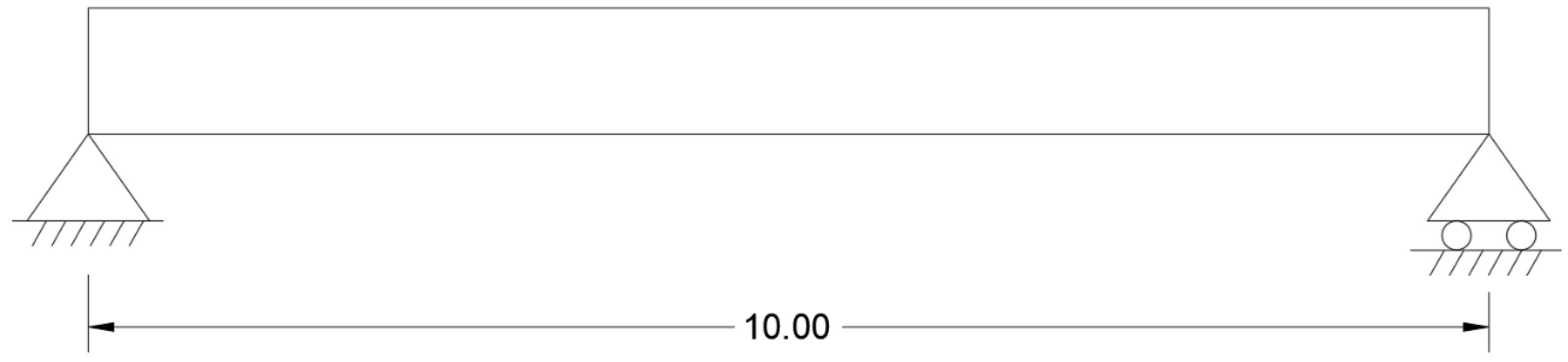

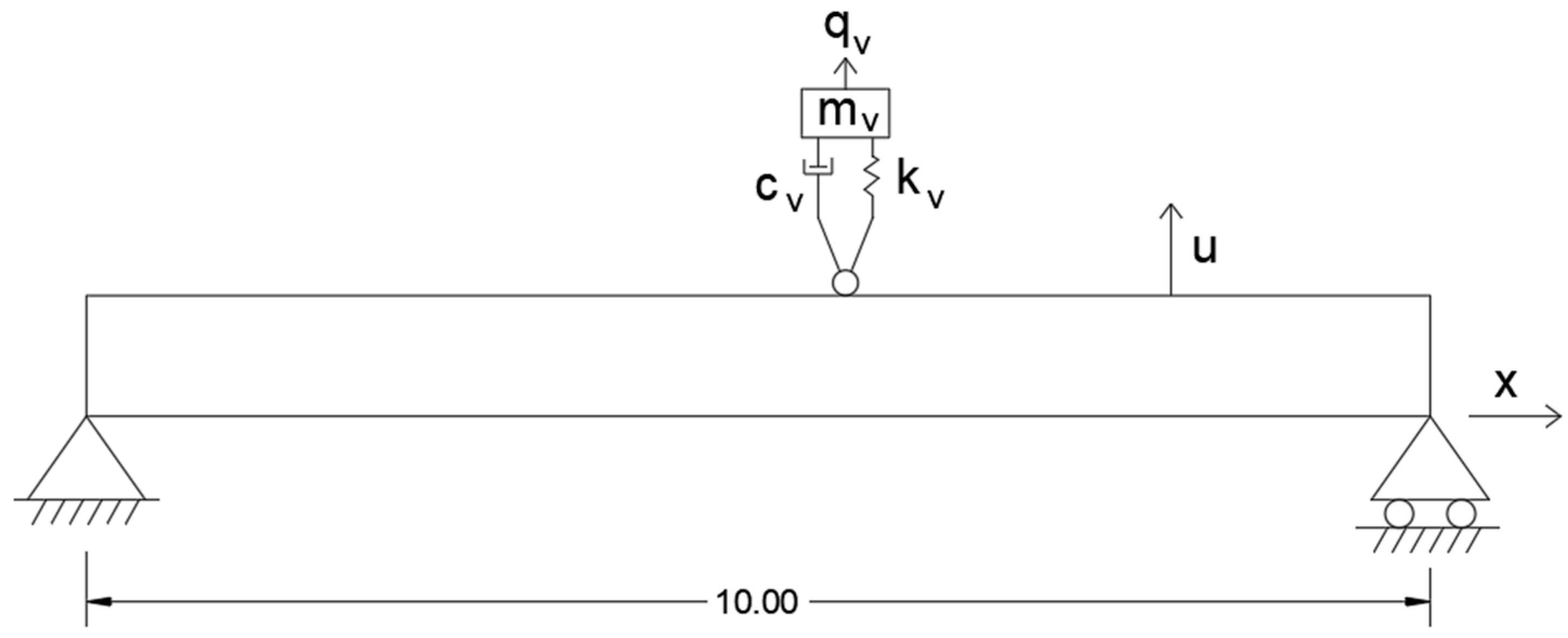

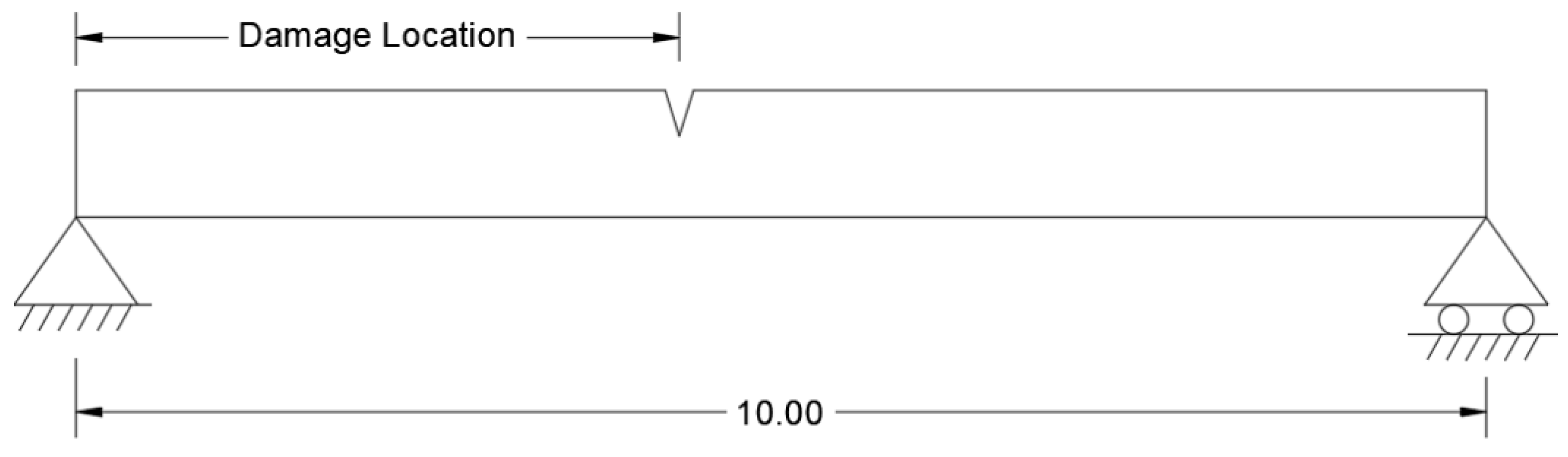

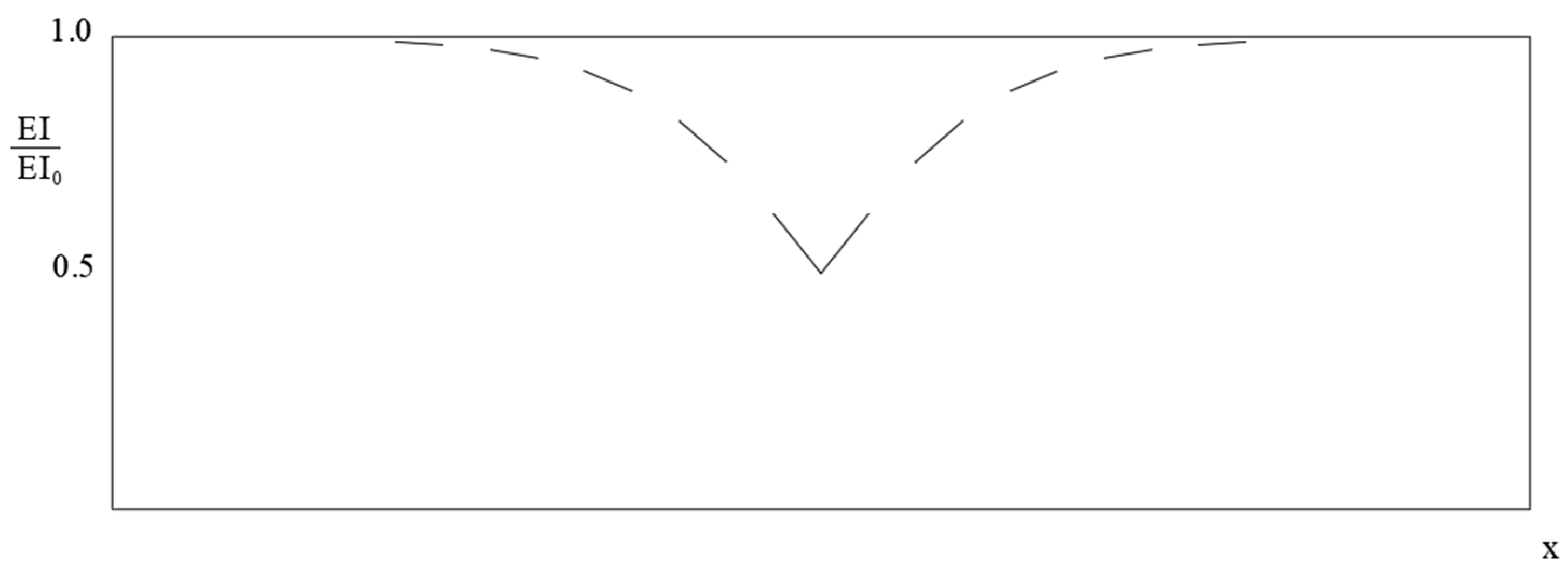

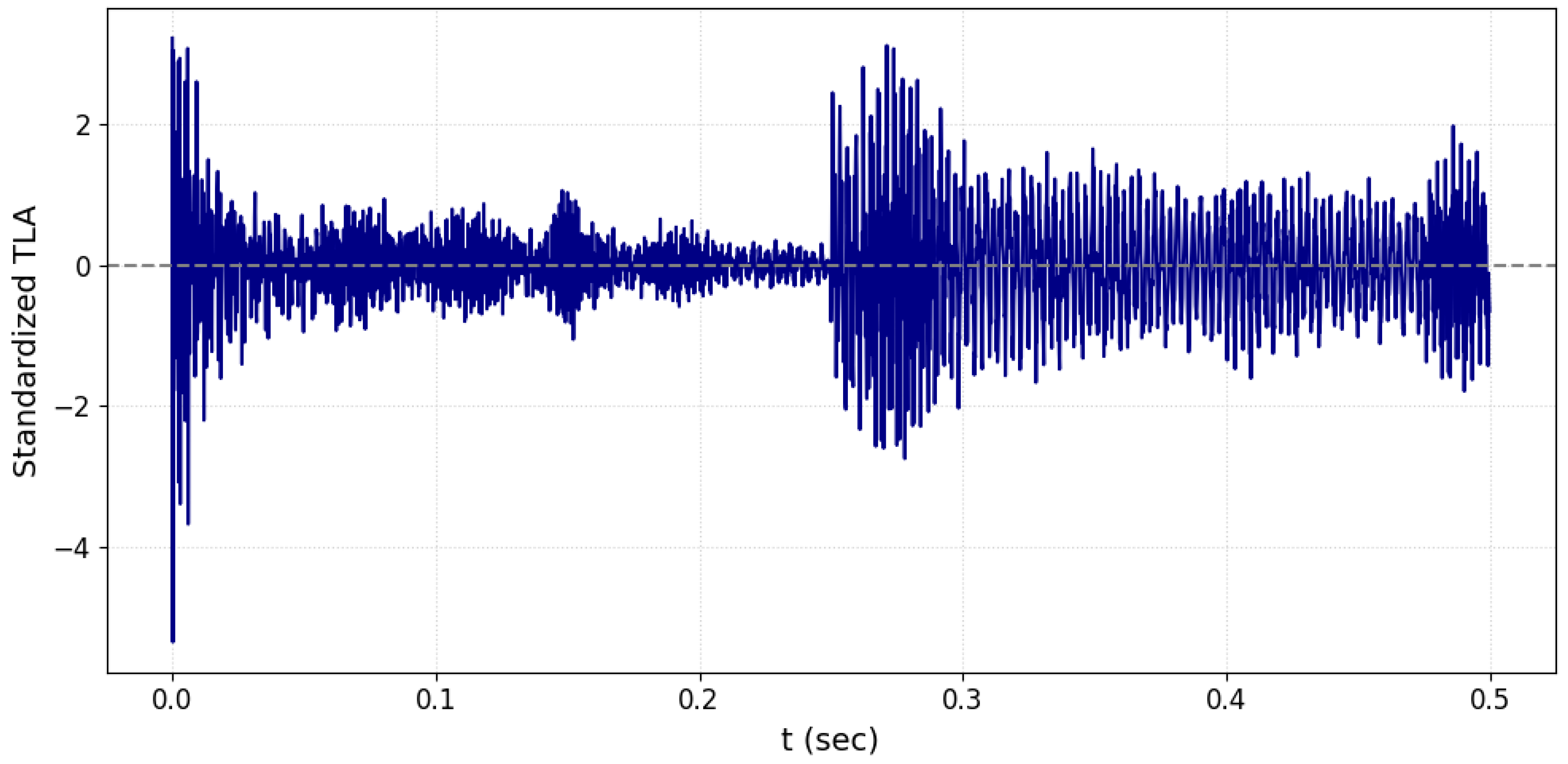

2. Bridge and Sensor Description

3. Methodology

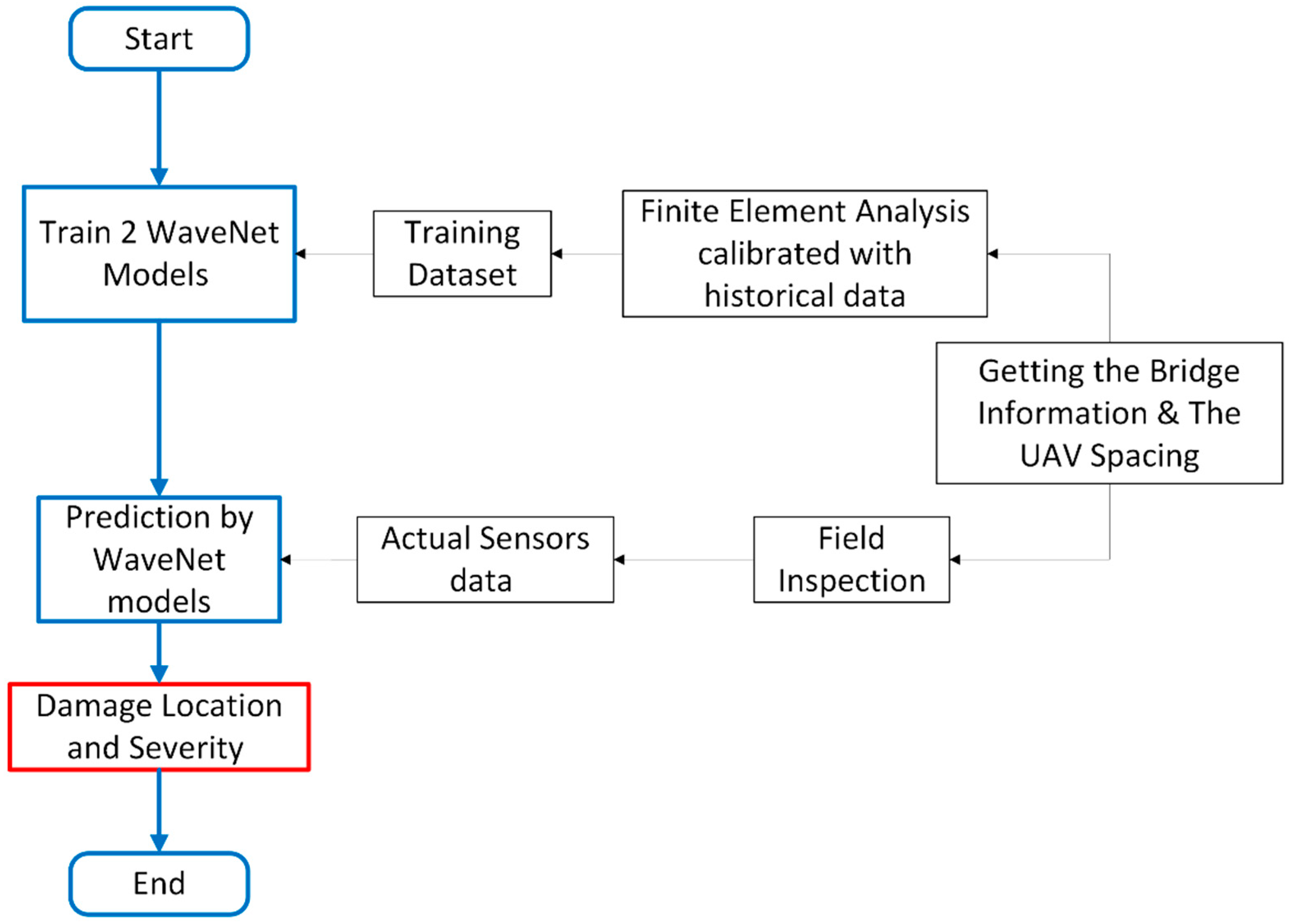

3.1. Proposed Framework

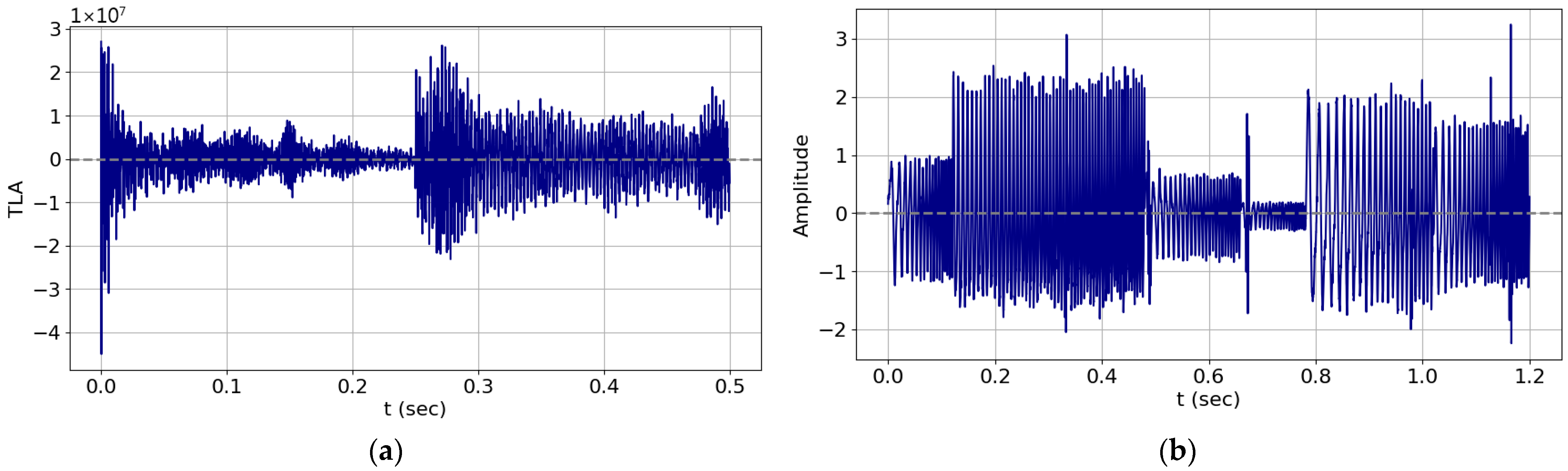

3.2. Dataset

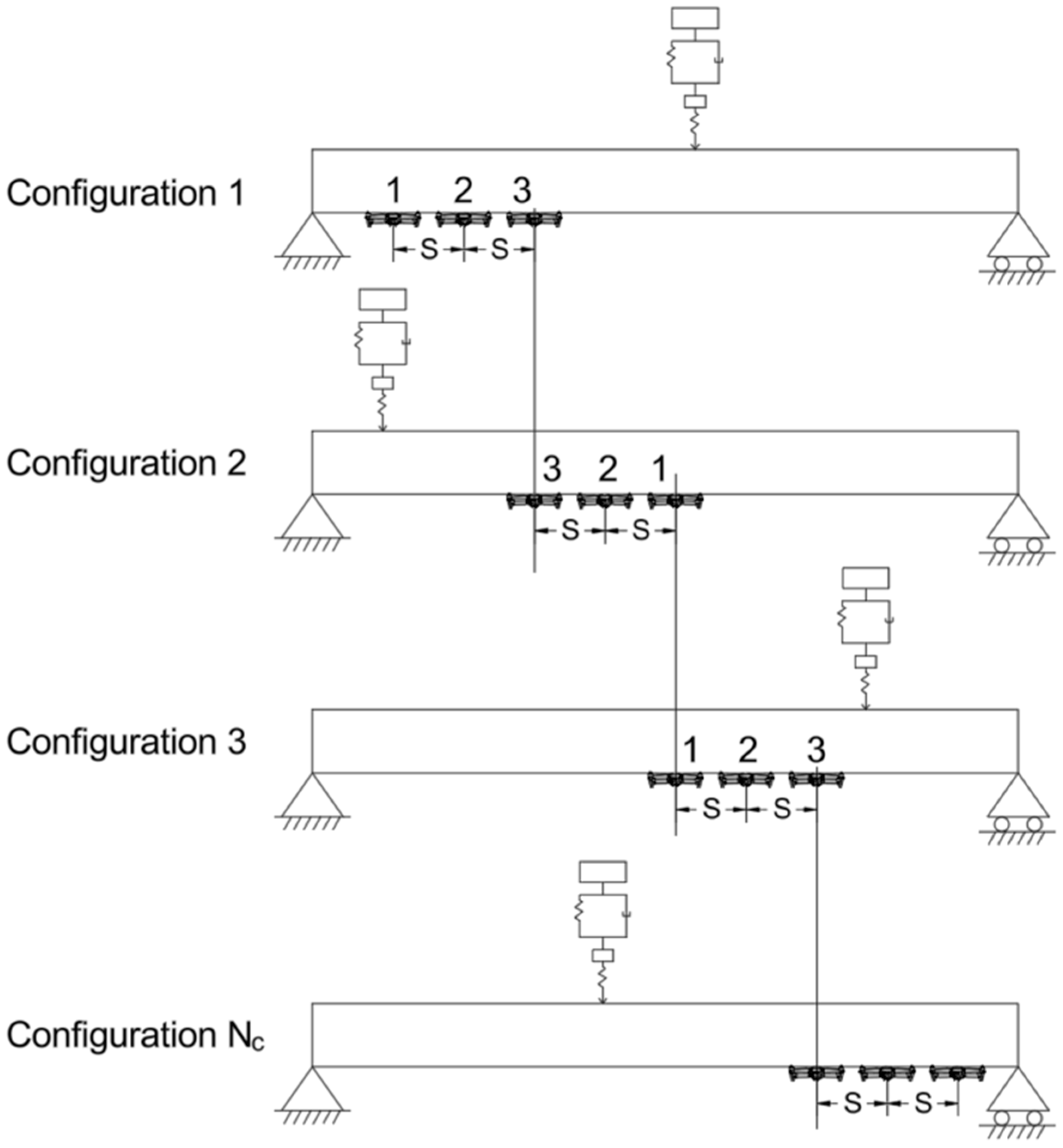

3.3. UAV Sensor Deployment

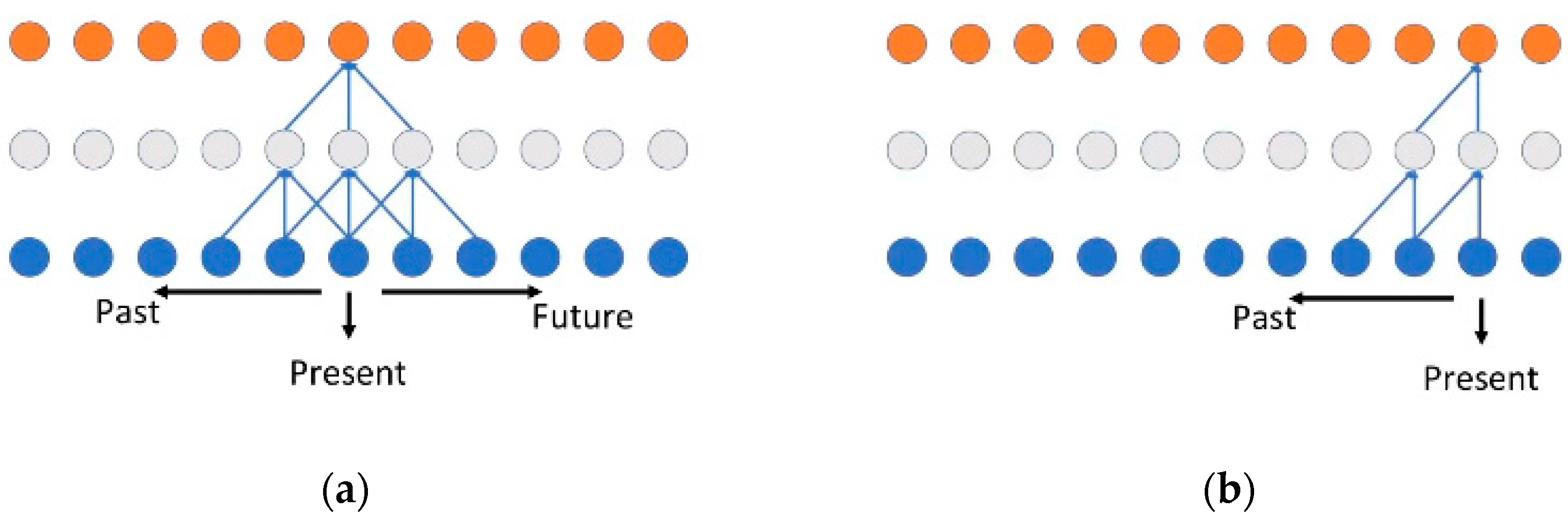

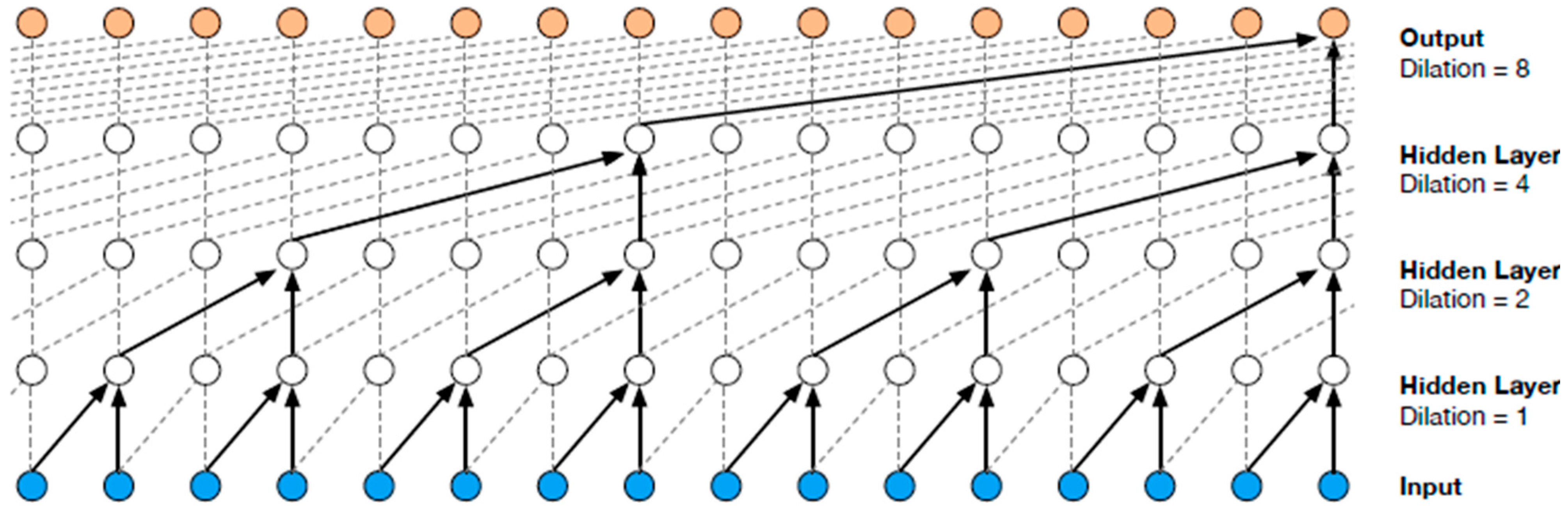

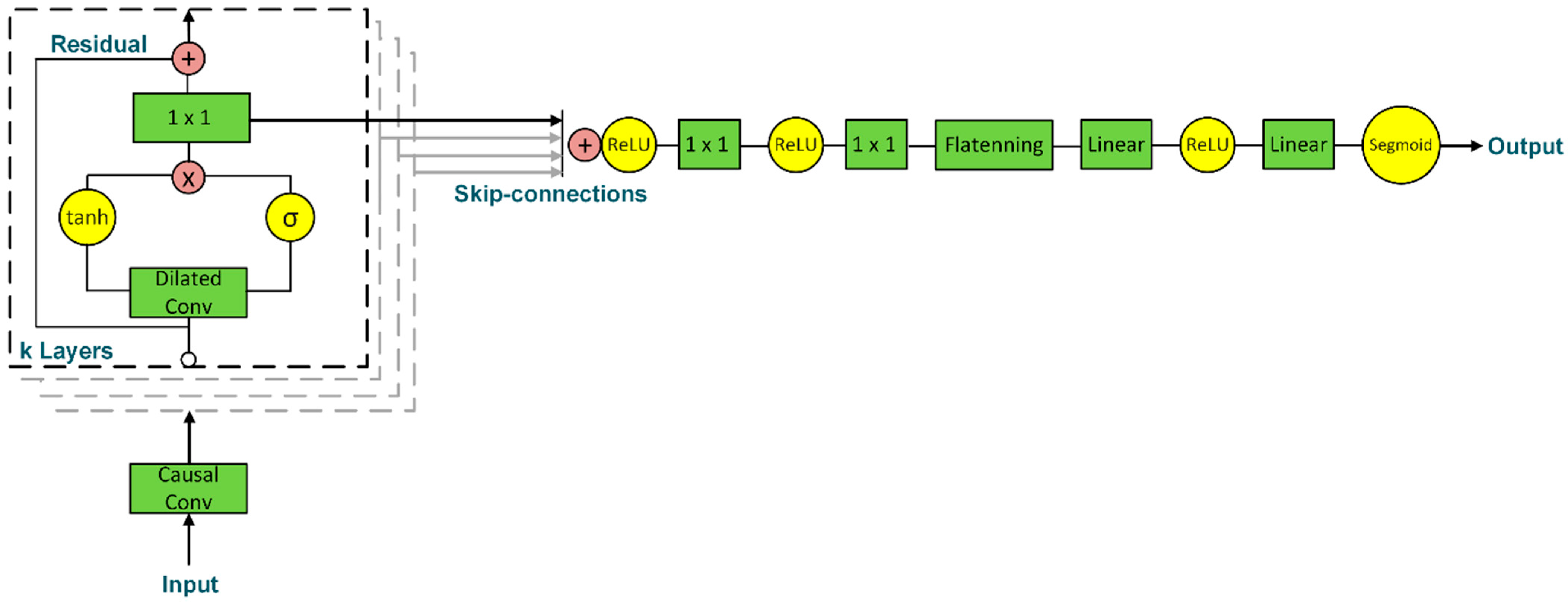

3.4. WaveNet Models

3.4.1. Severity Model

3.4.2. Location Model

3.4.3. Adaptation to Structural Health Monitoring

3.5. Training Procedure

4. Analysis Results

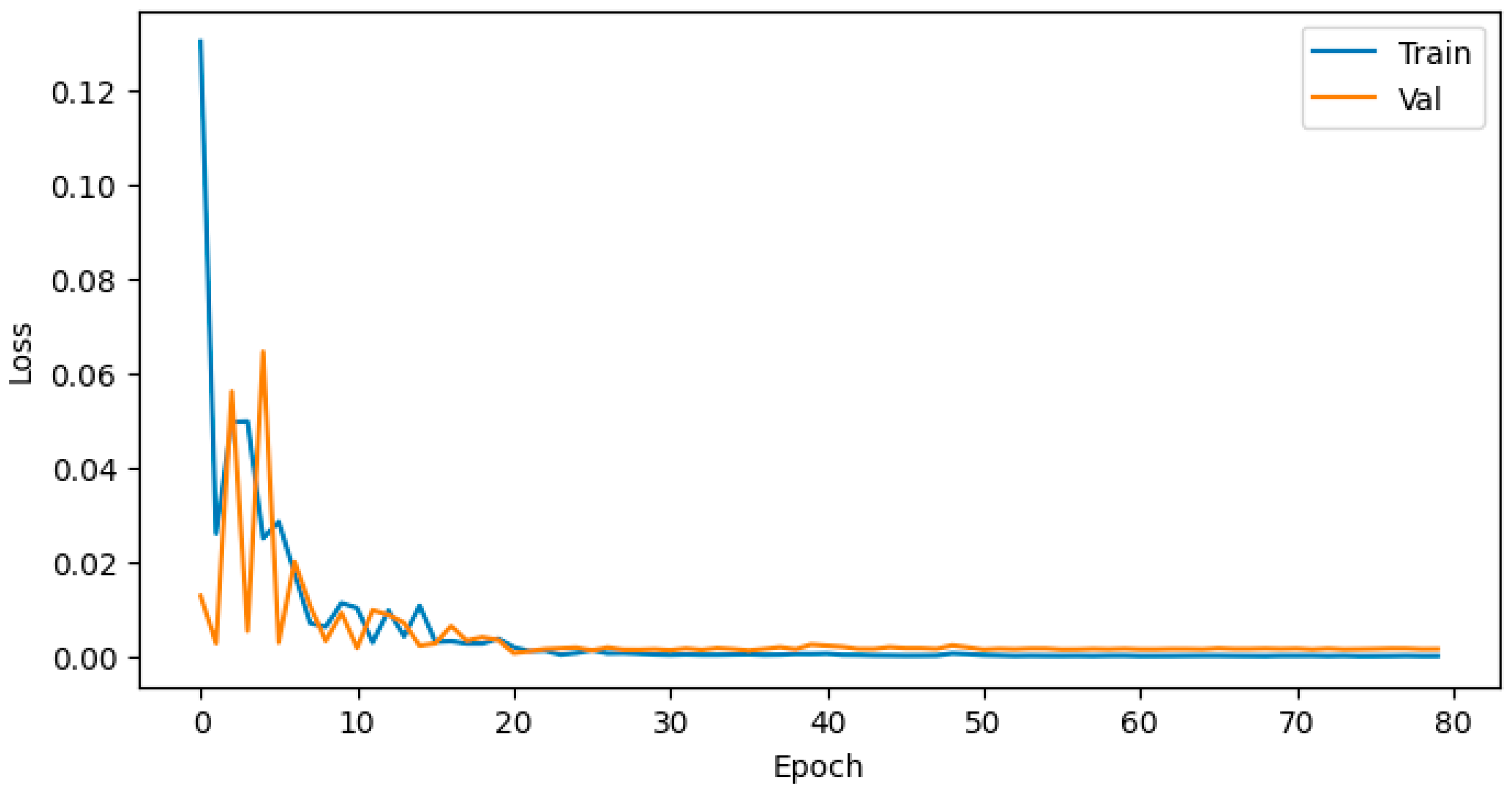

4.1. Training Evaluation

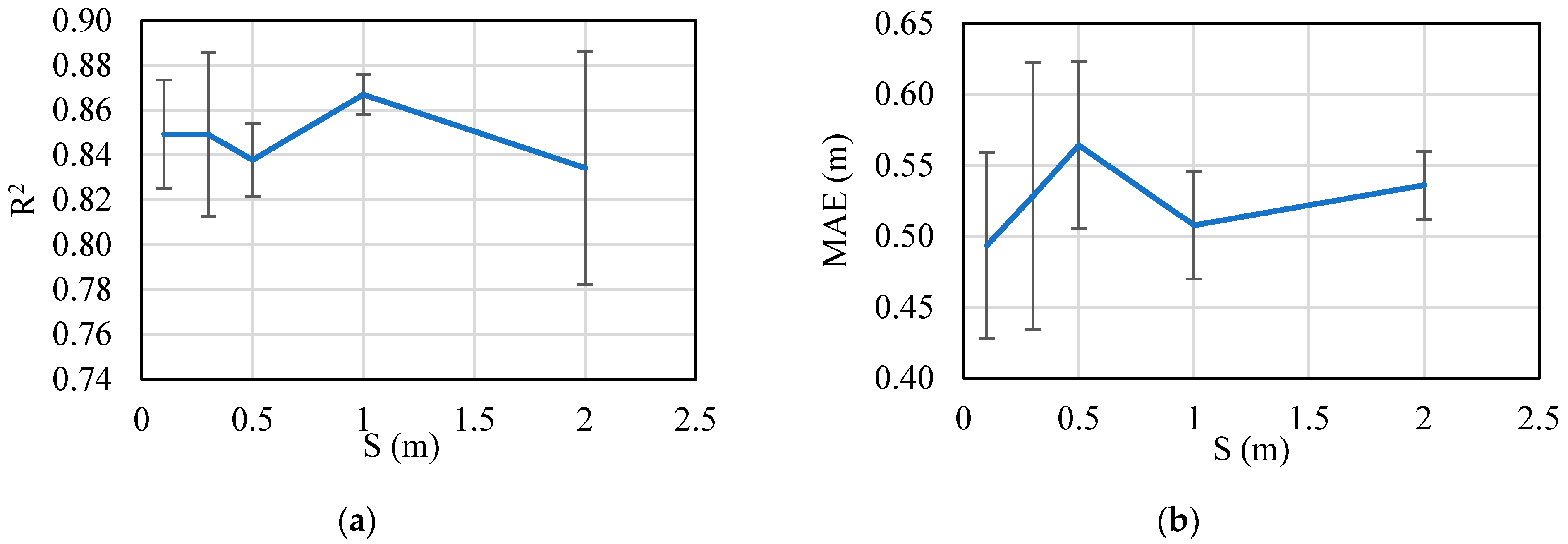

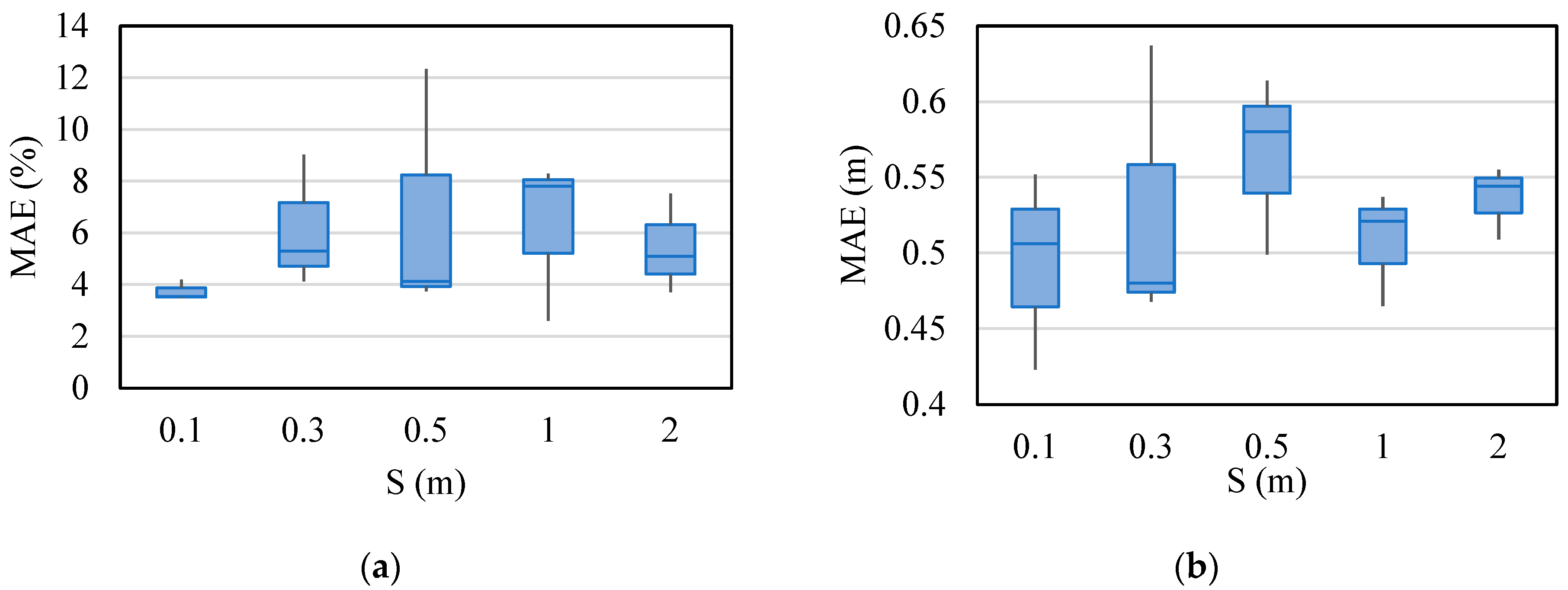

4.2. Test Metrics

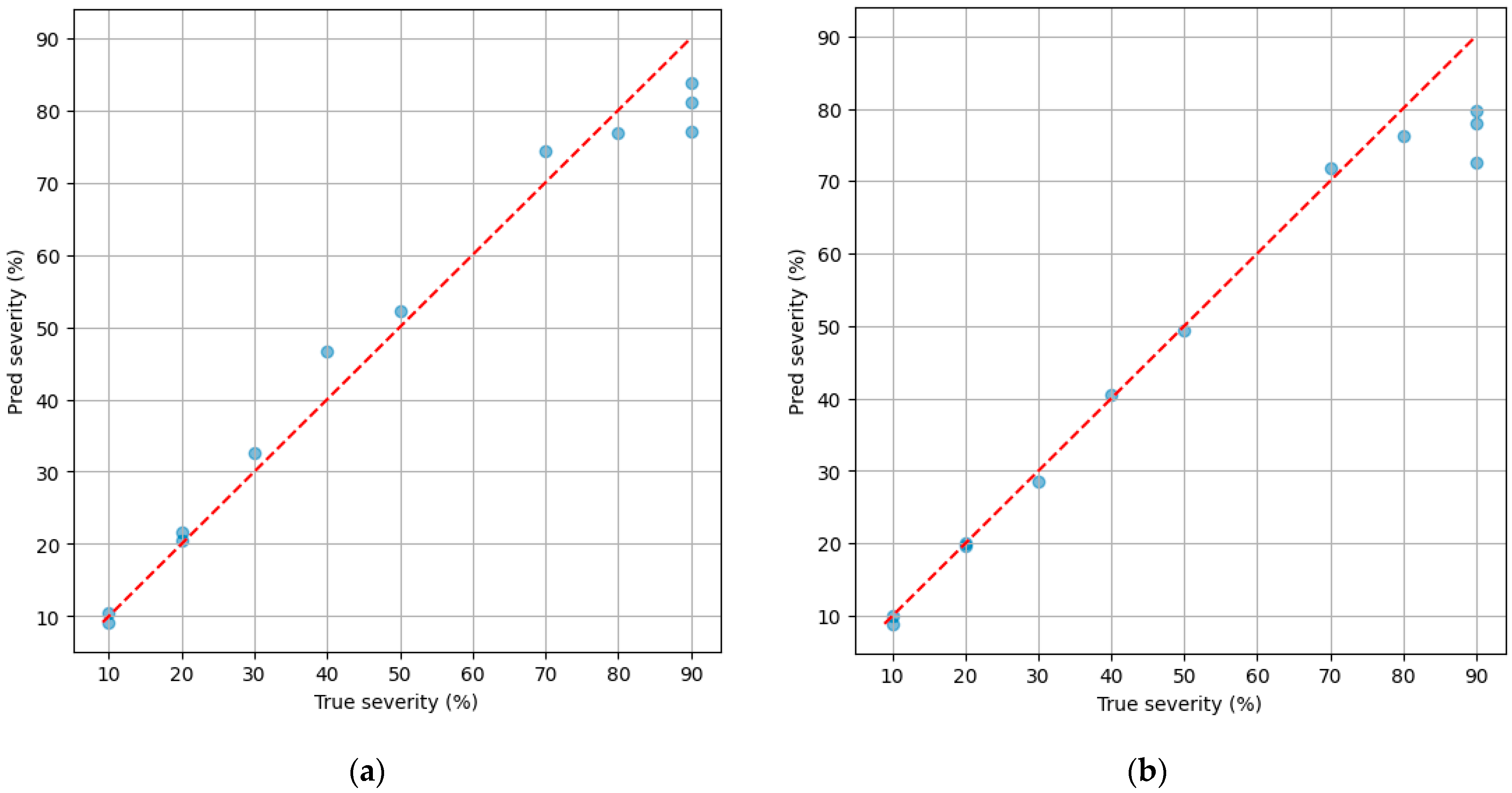

4.3. Damage Prediction

5. Summary and Discussion of Results

6. Conclusions and Recommendations

6.1. Conclusions

- The severity model performed best with dense to moderately dense UAV layouts. While S = 0.1 m achieved the highest accuracy during training, the practical optimum S = 0.5 m yields near-target means and the lowest SD among seeds. This balance between information richness and noise control ensures strong robustness during both training and testing.

- The location model achieved its highest accuracy at S = 1.0 m, with the highest R² and the lowest MAE, P90, and P95. This spacing provides enough spatial resolution to capture local gradients without amplifying noise or redundancy.

- For the location model, coarse spacing (S = 2.0 m) underfits and misses local features, while denser spacings (S ≤ 0.3 m) can overfit or become noise-sensitive. The intermediate S = 1.0 m therefore minimizes both bias and SD for localization.

6.2. Recommendations

- The WaveNet framework could be applied to bridges of other dimensions and conditions, depending on the availability of suitable bridge datasets.

- The proposed WaveNet framework may be compared with other DL models (e.g., CNN, LSTM, GRU) on the same dataset to quantify relative accuracy, convergence speed, and stability.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Acronym | Definition | Acronym | Definition |
|---|---|---|---|
| 1-D | One-Dimensional | API | Application Programming Interface |
| CNN | Convolutional Neural Network | DL | Deep Learning |
| EEG | Electroencephalogram | FE | Finite Element |
| GB | Gigabyte | GRU | Gated Recurrent Unit |
| LSTM | Long Short-Term Memory | MAE | Mean Absolute Error |
| MEMS | Micro-electromechanical System | NumPy | Numerical Python |
| RC | Reinforced Concrete | ReLU | Rectified Linear Unit |
| RNN | Recurrent Neural Network | SD | Standard Deviation |
| SHM | Structural Health Monitoring | SWA | Stochastic Weight Averaging |
| TLA | Temporal Laplacian Acceleration | UAV | Unmanned Aerial Vehicle |


| Component | Location Model | Severity Model |
|---|---|---|
| Input channels | Variable with S: 4, 9, 19, 33, 99 | Variable with S: 4, 9, 19, 33, 99 |
| Residual/Skip channels | 64/64 | 128/128 |
| Kernel size | 3 | 3 |
| Stacks × Layers per stack | 3 × 8 | 3 × 1 |
| Dilation schedule | 1, 2, 4, …, 2⁷ | 1 |
| Receptive field | 1533 | 9 |
| Dropout in the residual block | 0.075 | 0 |
| Optimizer | AdamW | AdamW |
| Learning rate | 1 × 10⁻⁵ | 1 × 10⁻³ |
| Weight decay | 5 × 10⁻⁴ | 0 |
| Batch size | 8 | 8 |
| Epochs | 150 | 80 |
| Training loss function | Smooth L1 (β = 0.075) | MAE |
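
The receptive fields listed in the table can be checked with a short calculation. The sketch below assumes that each residual layer is a dilated convolution with kernel size 3, that the dilation schedule 1, 2, 4, … restarts in every stack, and that one undilated kernel-3 input convolution precedes the stacks. That last assumption is not stated in the table; it is introduced here only because it reproduces the reported values. The function name `receptive_field` is hypothetical.

```python
def receptive_field(kernel_size, stacks, layers_per_stack, input_conv=True):
    """Receptive field (in samples) of stacked dilated 1-D convolutions.

    Assumes dilations double within a stack (1, 2, 4, ...) and restart in
    each stack. `input_conv` adds one undilated convolution of the same
    kernel size before the stacks (an assumption, not stated in the table).
    """
    dilations = [2 ** i for i in range(layers_per_stack)] * stacks
    # Each layer widens the field by (kernel_size - 1) * dilation samples.
    field = 1 + (kernel_size - 1) * sum(dilations)
    if input_conv:
        field += kernel_size - 1
    return field


if __name__ == "__main__":
    print(receptive_field(3, stacks=3, layers_per_stack=8))  # location model
    print(receptive_field(3, stacks=3, layers_per_stack=1))  # severity model
```

Under these assumptions the calculation gives 1533 samples for the location model and 9 samples for the severity model, matching the table.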

| S (m) | P90 (m) | P95 (m) | % ≤ 0.5 m |
|---|---|---|---|
| 0.1 | 1.84 ± 0.09 | 2.16 ± 0.15 | 80.5 ± 4.8 |
| 0.3 | 1.96 ± 0.25 | 2.15 ± 0.25 | 75.0 ± 8.3 |
| 0.5 | 1.93 ± 0.08 | 2.21 ± 0.13 | 69.4 ± 12.7 |
| 1.0 | 1.64 ± 0.22 | 1.98 ± 0.08 | 77.8 ± 4.8 |
| 2.0 | 2.00 ± 0.43 | 2.22 ± 0.49 | 75.0 ± 14.4 |
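
As a rough illustration of how metrics of this kind could be computed, the sketch below treats P90 and P95 as the 90th and 95th percentiles of the absolute location error and the last column as the share of predictions within 0.5 m of the true location. These definitions are assumptions, since the table itself does not spell them out. The function name `localization_metrics` and the sample values are hypothetical and are not data from the study.

```python
import numpy as np


def localization_metrics(predicted_m, true_m, tolerance_m=0.5):
    """Return P90 and P95 of the absolute error (m) and the % within tolerance."""
    predicted = np.asarray(predicted_m, dtype=float)
    true = np.asarray(true_m, dtype=float)
    errors = np.abs(predicted - true)
    return {
        "p90": float(np.percentile(errors, 90)),
        "p95": float(np.percentile(errors, 95)),
        "pct_within": float(100.0 * np.mean(errors <= tolerance_m)),
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only; not data from the study.
    true_m = [5.0] * 10
    predicted_m = [5.1, 4.8, 5.3, 4.6, 5.6, 6.0, 3.5, 7.0, 2.5, 8.0]
    print(localization_metrics(predicted_m, true_m))
```

Repeating the calculation for each training seed and summarizing the results as mean ± SD would give entries in the same form as the table.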

| S (m) | Predicted Location (m) | Predicted Severity (%) |
|---|---|---|
| 0.1 | 5.233 ± 0.138 | 14.00 ± 0.97 |
| 0.3 | 5.037 ± 0.061 | 13.58 ± 0.64 |
| 0.5 | 4.983 ± 0.163 | 14.57 ± 0.27 |
| 1.0 | 5.092 ± 0.054 | 14.02 ± 0.39 |
| 2.0 | 5.046 ± 0.130 | 14.40 ± 0.69 |
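
The stability comparison behind the spacing recommendations can be reproduced from the table. The sketch below assumes that each entry is a mean ± SD across training seeds, and it copies the tabulated values into a dictionary. The names `results` and `most_stable_spacing` are hypothetical.

```python
# Mean and SD of the predictions, copied from the table above.
# Assumption: "±" denotes the SD across training seeds.
results = {
    0.1: {"location": (5.233, 0.138), "severity": (14.00, 0.97)},
    0.3: {"location": (5.037, 0.061), "severity": (13.58, 0.64)},
    0.5: {"location": (4.983, 0.163), "severity": (14.57, 0.27)},
    1.0: {"location": (5.092, 0.054), "severity": (14.02, 0.39)},
    2.0: {"location": (5.046, 0.130), "severity": (14.40, 0.69)},
}


def most_stable_spacing(table, quantity):
    """Return the spacing S (m) whose prediction has the smallest SD."""
    return min(table, key=lambda s: table[s][quantity][1])


if __name__ == "__main__":
    print(most_stable_spacing(results, "severity"))
    print(most_stable_spacing(results, "location"))
```

On the tabulated values this selects S = 0.5 m for severity and S = 1.0 m for location, in line with the conclusions. The ranking uses SD only. It does not check how close each mean is to the true damage state, because the target values are not given in this table.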

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Turkomany, M.; AbdelLatef, A.I.; Uddin, N. A Novel WaveNet Deep Learning Approach for Enhanced Bridge Damage Detection. Appl. Sci. 2025, 15, 12228. https://doi.org/10.3390/app152212228
