FE-WRNet: Frequency-Enhanced Network for Visible Watermark Removal in Document Images

Abstract

1. Introduction

- We construct a new dataset, TextLogo, which comprises background images containing dense textual content overlaid with diverse watermarks that exhibit variations in color, texture, and edge characteristics. By encompassing a broad range of watermark types, TextLogo fills a critical gap in document-image dewatermarking benchmarks.

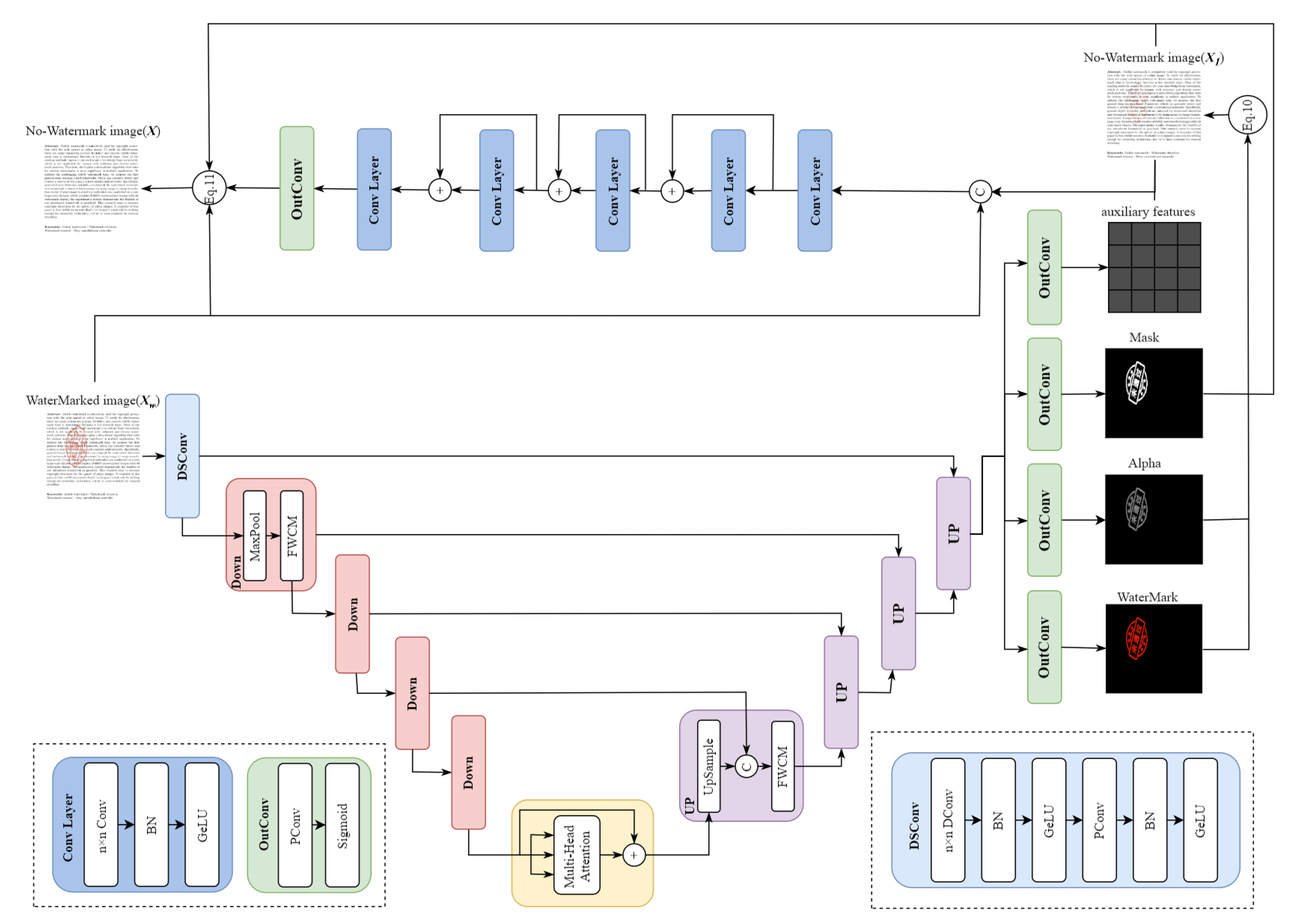

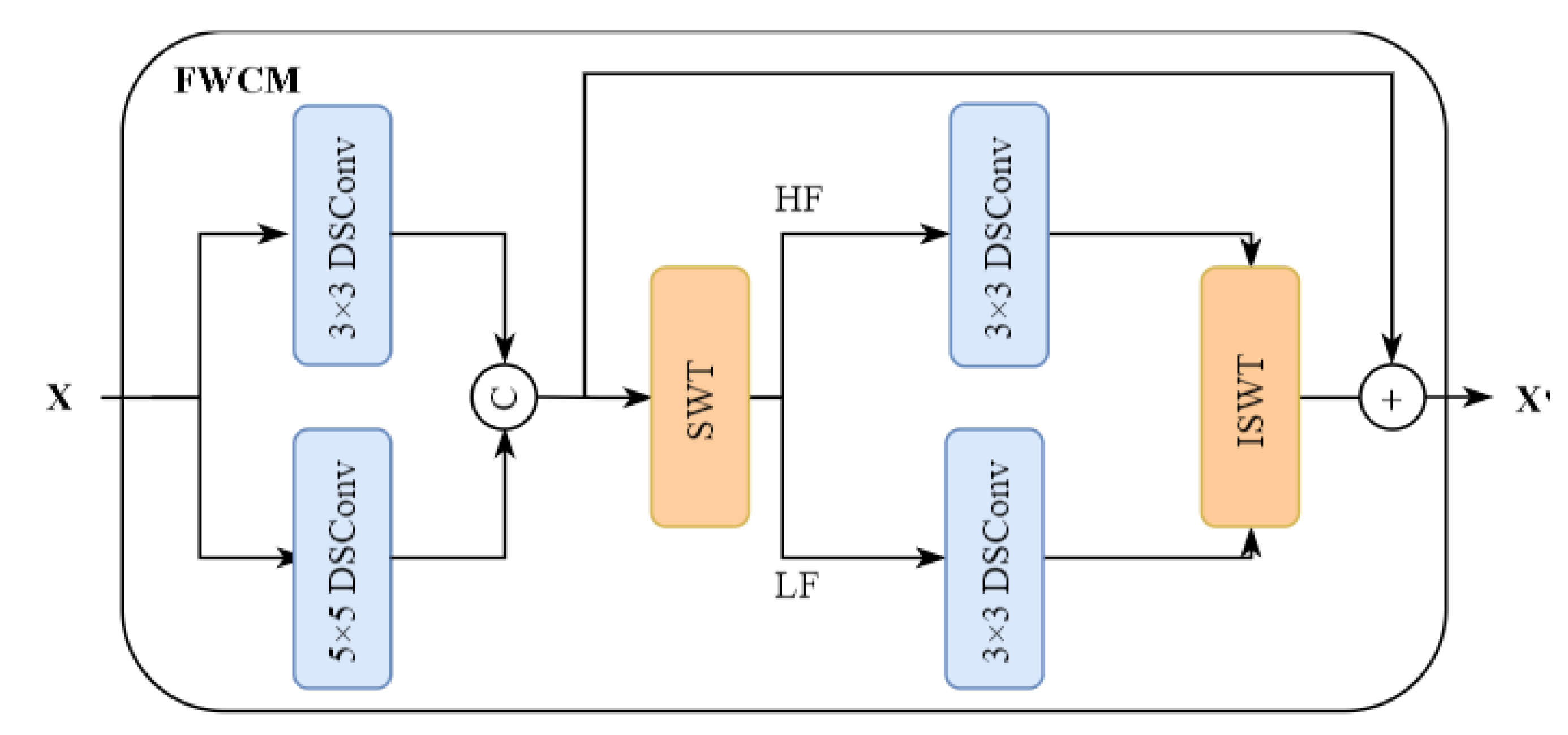

- Building on TextLogo, we propose FE-WRNet, a frequency-enhanced watermark removal network. At its core is the FWCM submodule, which operates jointly in the spatial domain and the discrete wavelet domain to better capture watermark edges and structure. In addition, we design a hybrid loss defined over the spatial and wavelet domains to strengthen the model’s sensitivity to fine details and edges.

- Dataset Sufficiency. Does a document-centric benchmark covering multiple layouts and 30 heterogeneous watermark styles (TextLogo) reveal the challenges of document watermark removal better than natural-image benchmarks?

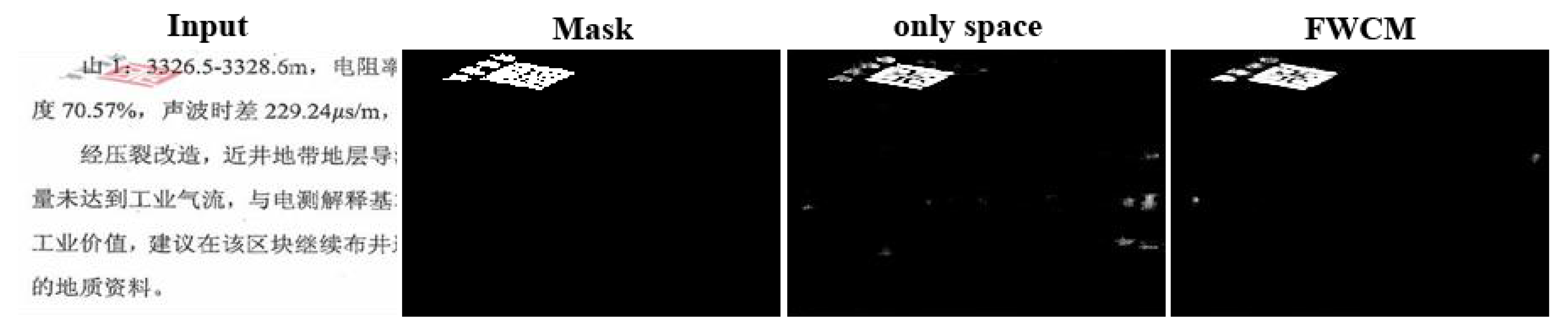

- Representation and Architecture. Does the proposed FWCM, which fuses spatial cues with wavelet subbands, localize and remove watermarks more accurately than a spatial feature extractor alone?

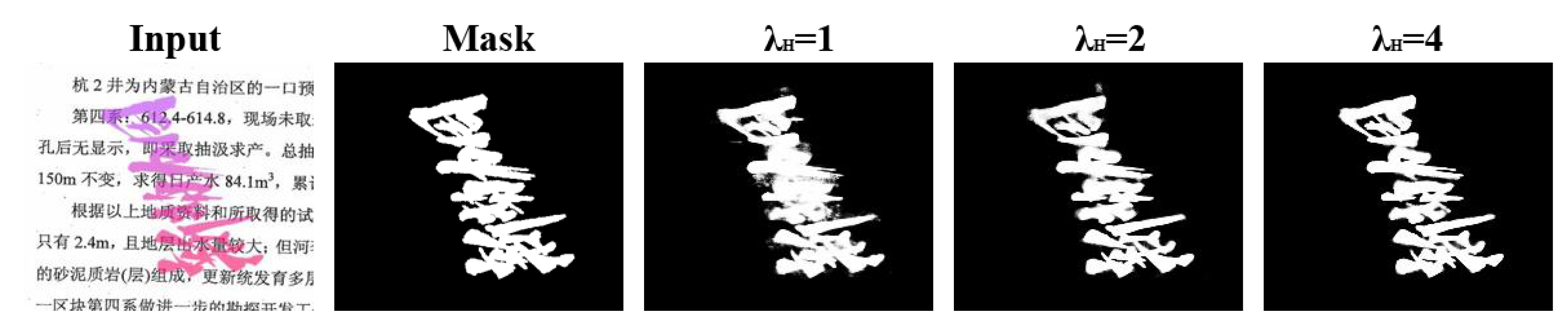

- Loss Design. Does emphasizing high-frequency subbands in the pixel loss (by using a coefficient >1) sharpen mask boundaries and improve perceptual quality without compromising low-frequency color harmony?

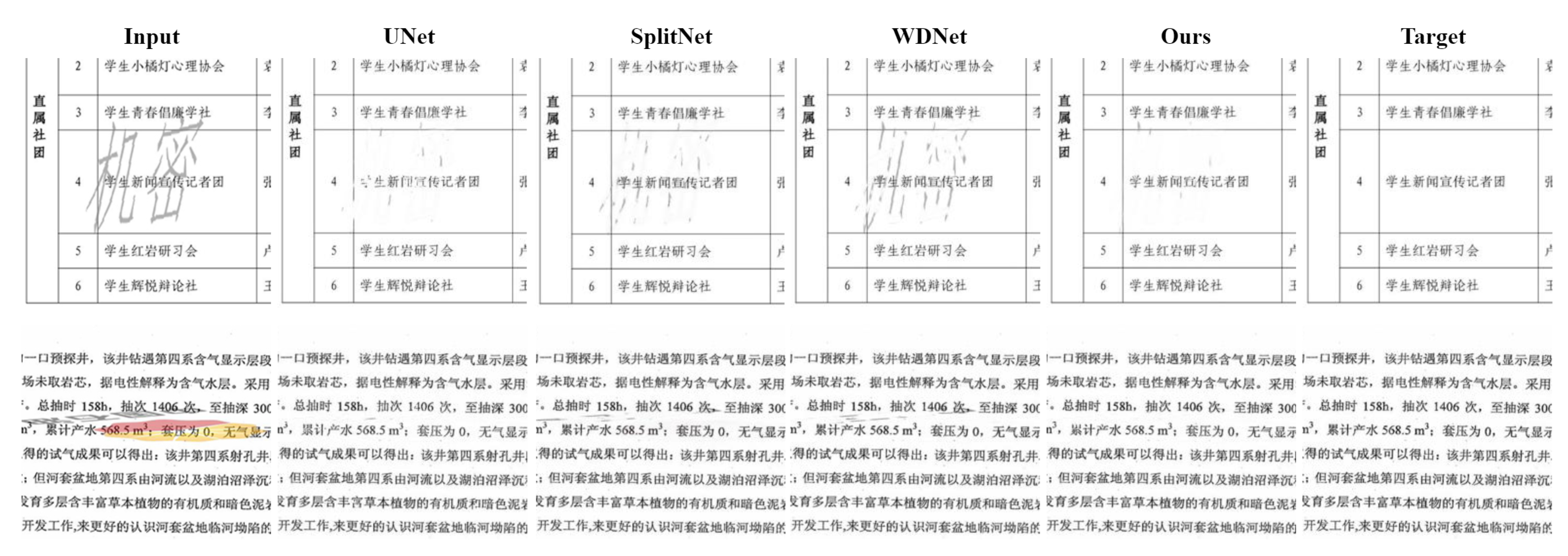

- Accuracy–Efficiency Trade-off. Compared with UNet, SplitNet, and WDNet, can FE-WRNet achieve superior scores on TextLogo with fewer inference FLOPs while remaining competitive on CLWD?

2. Related Work

2.1. Visible Watermark Removal

2.2. Document Image Restoration

2.3. Related Vision Tasks: Deraining and Defogging

2.4. Datasets for Watermark Removal

3. Method

3.1. TextLogo Dataset

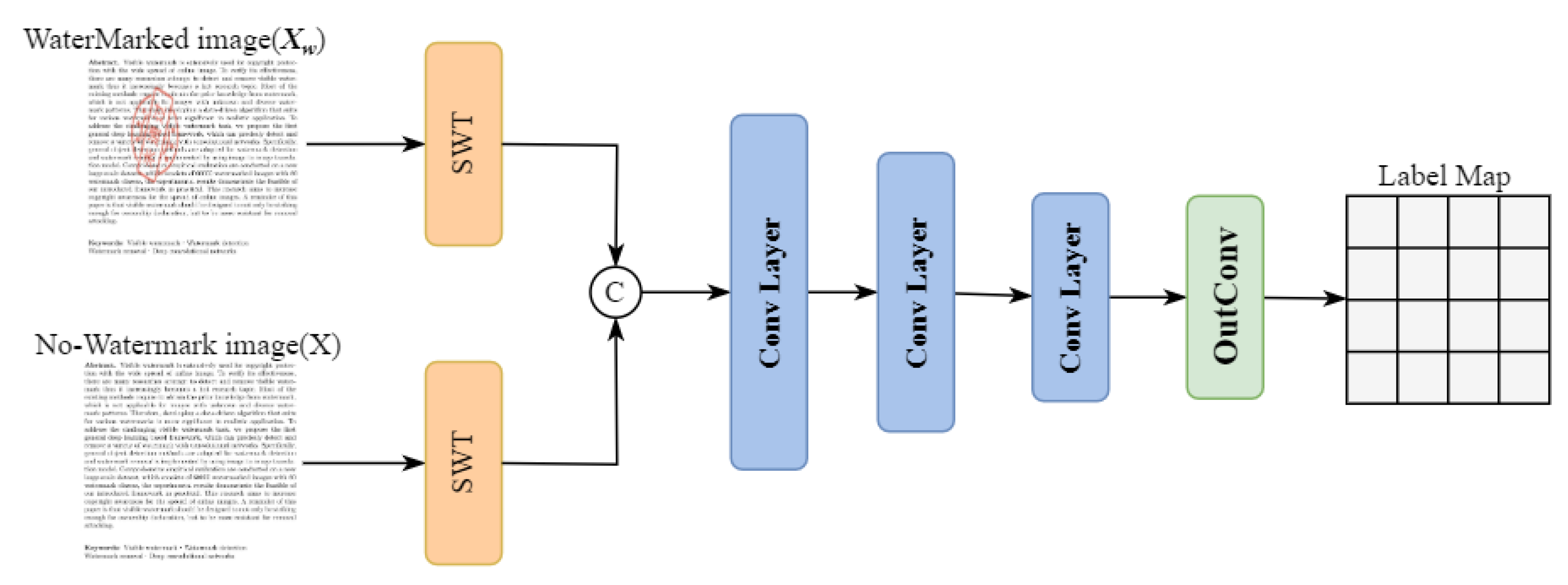
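TextLogo is described as dense-text document backgrounds overlaid with watermarks that vary in color, texture, and edge characteristics. A common way to synthesize such training pairs is standard alpha compositing, J = αW + (1 − α)I, which also yields a watermark mask that can serve as a localization target. The sketch assumes that model; the function name `composite_watermark` and the `opacity` parameter are hypothetical, and the source does not state that TextLogo was generated this way.

```python
import numpy as np


def composite_watermark(background, watermark, alpha_mask, opacity=0.6):
    """Blend a watermark into a document image with standard alpha compositing.

    background: (H, W, 3) float array in [0, 1], the clean document page.
    watermark:  (H, W, 3) float array in [0, 1], watermark color/texture layer.
    alpha_mask: (H, W) float array in [0, 1], watermark shape (1 = watermark pixel).
    opacity:    hypothetical global transparency factor in [0, 1].

    Returns (watermarked image, per-pixel alpha). The alpha map can be used
    as a soft ground-truth mask for watermark localization (an assumption).
    """
    alpha = np.clip(alpha_mask * opacity, 0.0, 1.0)[..., None]  # (H, W, 1)
    watermarked = alpha * watermark + (1.0 - alpha) * background
    return watermarked, alpha[..., 0]


if __name__ == "__main__":
    page = np.ones((64, 64, 3))   # white page
    page[8:10, 4:60] = 0.0        # dark "text lines"
    page[20:22, 4:60] = 0.0
    logo = np.zeros((64, 64, 3))
    logo[..., 0] = 1.0            # solid red watermark layer
    mask = np.zeros((64, 64))
    mask[16:48, 16:48] = 1.0      # square logo region
    image, alpha = composite_watermark(page, logo, mask, opacity=0.5)
    print(image.shape, alpha.max())
```

Varying the watermark layer, mask shape, and opacity per sample is one way to obtain the color, texture, and edge diversity the dataset description emphasizes.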

3.2. FE-WRNet
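The FWCM is described as operating jointly in the spatial and discrete wavelet domains. As a rough illustration of that idea only, the following NumPy sketch pairs a pointwise spatial branch with a single-level Haar wavelet branch, re-weights the high-frequency subbands, returns them to the spatial domain with the inverse transform, and fuses both branches with a 1×1 projection plus a residual connection. The Haar basis, the per-subband gains `hf_gain`, and all names (`haar_dwt2`, `haar_idwt2`, `fwcm_like_block`) are assumptions for illustration, not the authors' FWCM.

```python
import numpy as np


def haar_dwt2(x):
    """Single-level orthonormal 2D Haar DWT of a (C, H, W) array (H, W even)."""
    a, b = x[:, 0::2, 0::2], x[:, 0::2, 1::2]
    c, d = x[:, 1::2, 0::2], x[:, 1::2, 1::2]
    return ((a + b + c + d) / 2.0,   # LL: low-frequency approximation
            (a + b - c - d) / 2.0,   # LH: top-minus-bottom detail (horizontal edges)
            (a - b + c - d) / 2.0,   # HL: left-minus-right detail (vertical edges)
            (a - b - c + d) / 2.0)   # HH: diagonal detail


def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2, returning a (C, 2h, 2w) array."""
    ch, h, w = ll.shape
    x = np.empty((ch, 2 * h, 2 * w), dtype=ll.dtype)
    x[:, 0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[:, 0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    x[:, 1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    x[:, 1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x


def fwcm_like_block(x, w_spatial, hf_gain, w_fuse):
    """Hypothetical spatial/wavelet fusion block (illustrative, not the paper's FWCM).

    x:         (C, H, W) feature map, H and W even.
    w_spatial: (C, C) weights of a 1x1 convolution in the spatial branch.
    hf_gain:   (3,) gains for the LH, HL, HH subbands (stand-in for a learned
               wavelet-domain branch).
    w_fuse:    (C, 2C) weights of the 1x1 convolution that fuses both branches.
    """
    # Spatial branch: pointwise (1x1) convolution followed by ReLU.
    spatial = np.maximum(np.einsum("oc,chw->ohw", w_spatial, x), 0.0)
    # Wavelet branch: decompose, re-weight high-frequency subbands, reconstruct.
    ll, lh, hl, hh = haar_dwt2(x)
    freq = haar_idwt2(ll, hf_gain[0] * lh, hf_gain[1] * hl, hf_gain[2] * hh)
    # Fuse the concatenated branches and add a residual connection.
    fused = np.einsum("oc,chw->ohw", w_fuse, np.concatenate([spatial, freq], axis=0))
    return x + fused
```

With unit gains the wavelet branch reconstructs its input exactly, so whatever it contributes beyond an identity path comes from re-weighting the LH, HL, and HH subbands, which carry most of the edge information.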

3.2.1. Watermark Localization

3.2.2. Watermark Removal

3.2.3. Image Restoration

3.3. Discriminator

3.4. Loss Function
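The hybrid loss is described as combining spatial- and wavelet-domain terms, with high-frequency subbands weighted by a coefficient greater than 1. A minimal sketch, assuming L1 distances, a single-level orthonormal Haar transform, and equal weights for the spatial and wavelet terms (none of which the text specifies), is shown here. `hf_coef` defaults to 2 because that value gave the best PSNR, SSIM, and LPIPS among the coefficients 1, 2, and 4 in the coefficient ablation table; all function and parameter names are hypothetical.

```python
import numpy as np


def haar_dwt2(x):
    """Single-level orthonormal 2D Haar DWT of a (C, H, W) array (H, W even)."""
    a, b = x[:, 0::2, 0::2], x[:, 0::2, 1::2]
    c, d = x[:, 1::2, 0::2], x[:, 1::2, 1::2]
    return ((a + b + c + d) / 2.0,   # LL: low-frequency approximation
            (a + b - c - d) / 2.0,   # LH: horizontal edges
            (a - b + c - d) / 2.0,   # HL: vertical edges
            (a - b - c + d) / 2.0)   # HH: diagonal detail


def hybrid_loss(pred, target, hf_coef=2.0, w_spatial=1.0, w_wavelet=1.0):
    """Hypothetical spatial + wavelet-domain L1 loss.

    pred, target: (C, H, W) arrays. hf_coef > 1 up-weights errors in the
    LH, HL and HH subbands relative to the LL subband.
    """
    spatial = np.mean(np.abs(pred - target))
    p_bands, t_bands = haar_dwt2(pred), haar_dwt2(target)
    ll_err = np.mean(np.abs(p_bands[0] - t_bands[0]))
    hf_err = sum(np.mean(np.abs(p - t)) for p, t in zip(p_bands[1:], t_bands[1:]))
    return w_spatial * spatial + w_wavelet * (ll_err + hf_coef * hf_err)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = rng.random((3, 16, 16))
    i, j = np.indices((16, 16))
    offset = target + 0.1                      # uniform error: only LL changes
    edges = target + 0.1 * (-1.0) ** (i + j)   # checkerboard error: only HH changes
    for name, pred in (("offset", offset), ("edges", edges)):
        print(name, hybrid_loss(pred, target, hf_coef=1.0), hybrid_loss(pred, target, hf_coef=4.0))
```

In this example the uniform offset scores about 0.3 for both coefficients, while the checkerboard error rises from about 0.3 to 0.9 as `hf_coef` goes from 1 to 4. Low-frequency color shifts are thus penalized independently of the coefficient, whereas errors along sharp transitions are amplified, which is the intended bias toward crisp watermark boundaries.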

3.5. Summary

4. Experiment

4.1. Experimental Settings

4.2. Ablation Study

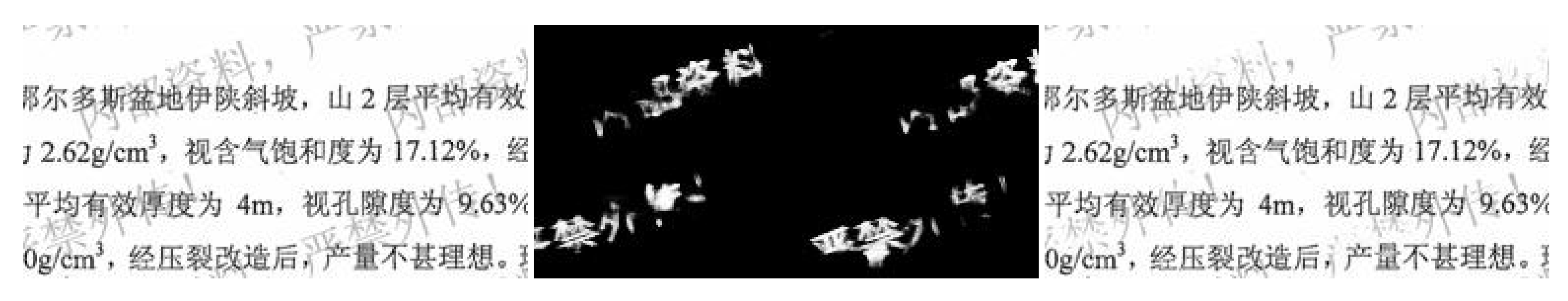

4.2.1. Analysis of the High-Frequency Penalty Coefficient

4.2.2. Analysis of the FWCM

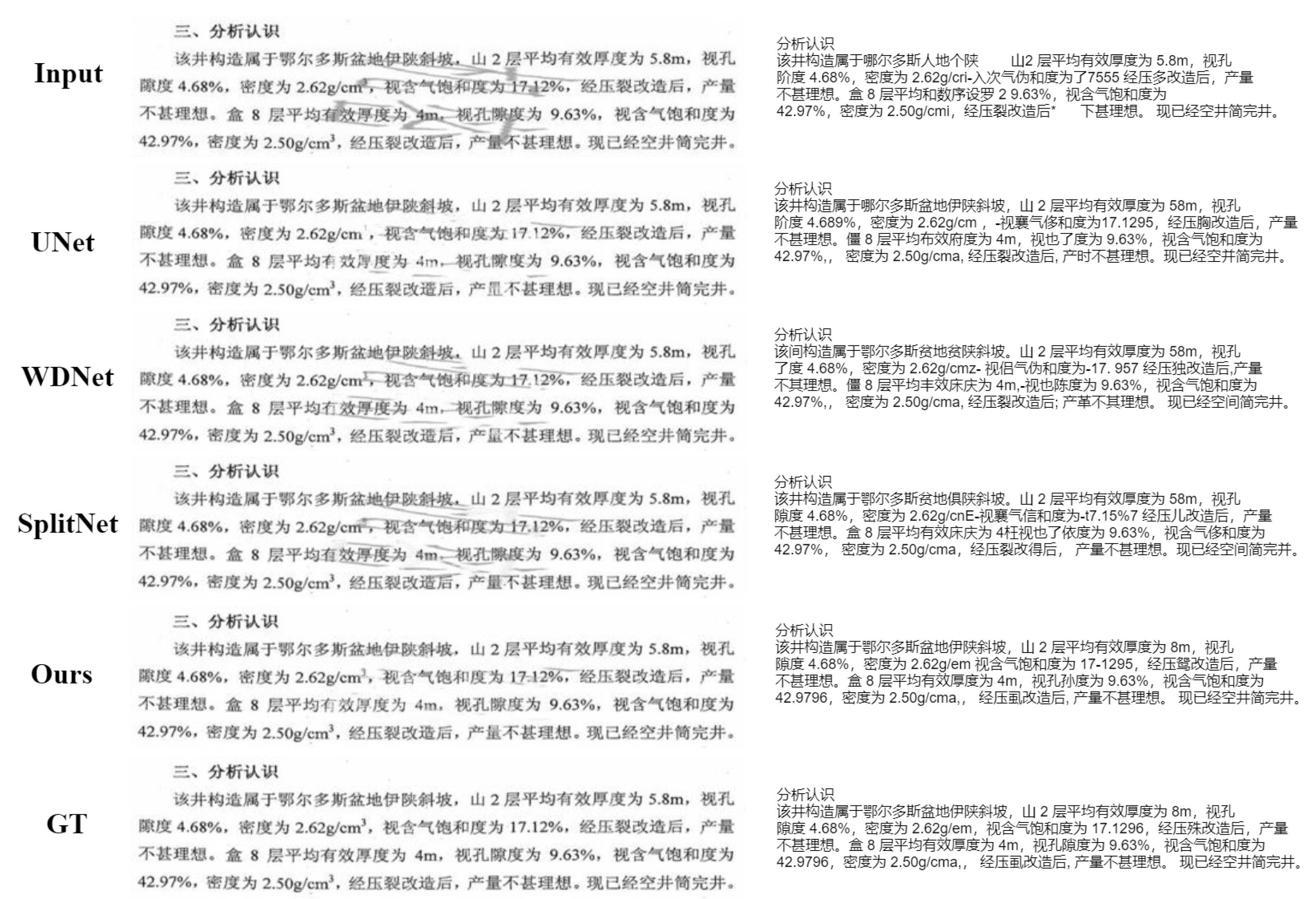

4.3. Comparison with Other Watermark Removal Models

4.3.1. Comparisons on TextLogo

4.3.2. Comparisons on CLWD

4.3.3. Application Test

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Ablation on the high-frequency penalty coefficient.

| High-frequency coefficient | PSNR ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|
| 1 | 37.1172 | 0.9876 | 0.0118 |
| 2 | 37.5532 | 0.9897 | 0.0086 |
| 4 | 37.2040 | 0.9887 | 0.0097 |

Ablation on the FWCM: spatial-only feature extraction versus FWCM.

| Configuration | PSNR ↑ | SSIM ↑ | LPIPS ↓ |
|---|---|---|---|
| Spatial only | 36.1617 | 0.9850 | 0.0140 |
| FWCM | 37.5532 | 0.9897 | 0.0086 |

Comparison with other watermark removal models on TextLogo.

| Models | PSNR ↑ | SSIM ↑ | LPIPS ↓ | RMSE ↓ | FLOPs ↓ |
|---|---|---|---|---|---|
| UNet | 36.3627 | 0.9882 | 0.0096 | 4.4597 | 524.92G |
| SplitNet | 36.5808 | 0.9874 | 0.0106 | 4.3012 | 681.44G |
| WDNet | 36.7202 | 0.9872 | 0.0114 | 4.2350 | 560.28G |
| Ours | 37.5532 | 0.9897 | 0.0086 | 3.8511 | 422.98G |

Comparison with other watermark removal models on CLWD.

| Models | PSNR ↑ | SSIM ↑ | LPIPS ↓ | RMSE ↓ | FLOPs ↓ |
|---|---|---|---|---|---|
| UNet | 31.2305 | 0.9534 | 0.0612 | 7.9124 | 131.24G |
| SplitNet | 34.3812 | 0.9688 | 0.0437 | 5.8872 | 170.36G |
| WDNet | 31.0243 | 0.9520 | 0.0638 | 8.5043 | 140.06G |
| Ours | 31.9011 | 0.9578 | 0.0566 | 7.8075 | 105.74G |
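The accuracy–efficiency research question can be checked directly against the two model-comparison tables. The short script copies their GFLOPs and PSNR values, assuming (from their order and the stated research question) that the first comparison table reports TextLogo and the second CLWD, and computes FE-WRNet's ("Ours") relative FLOPs saving and PSNR difference against each baseline. The names `RESULTS` and `compare_to` are illustrative.

```python
# (GFLOPs, PSNR in dB) copied from the two model-comparison tables.
RESULTS = {
    "TextLogo": {
        "UNet": (524.92, 36.3627),
        "SplitNet": (681.44, 36.5808),
        "WDNet": (560.28, 36.7202),
        "Ours": (422.98, 37.5532),
    },
    "CLWD": {
        "UNet": (131.24, 31.2305),
        "SplitNet": (170.36, 34.3812),
        "WDNet": (140.06, 31.0243),
        "Ours": (105.74, 31.9011),
    },
}


def compare_to(dataset, baseline, ours="Ours"):
    """Return (% fewer FLOPs, PSNR difference in dB) of `ours` versus `baseline`."""
    flops_base, psnr_base = RESULTS[dataset][baseline]
    flops_ours, psnr_ours = RESULTS[dataset][ours]
    return 100.0 * (1.0 - flops_ours / flops_base), psnr_ours - psnr_base


if __name__ == "__main__":
    for dataset in RESULTS:
        for baseline in ("UNet", "SplitNet", "WDNet"):
            saving, gain = compare_to(dataset, baseline)
            print(f"{dataset} vs {baseline}: {saving:.1f}% fewer FLOPs, {gain:+.2f} dB PSNR")
```

On TextLogo this gives about 19%, 38%, and 25% fewer FLOPs than UNet, SplitNet, and WDNet, with PSNR gains of 1.19, 0.97, and 0.83 dB. On CLWD the FLOPs savings are nearly the same, and FE-WRNet exceeds UNet and WDNet in PSNR but trails SplitNet by about 2.48 dB.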

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, Z.; Zhang, Y.; Yan, J.; Wei, X.; Xian, W.; Mao, Q.; Qin, Y.; Gao, T. FE-WRNet: Frequency-Enhanced Network for Visible Watermark Removal in Document Images. Appl. Sci. 2025, 15, 12216. https://doi.org/10.3390/app152212216
