1. Introduction

Cold chain logistics is a critical supply chain activity that ensures the transportation and storage of temperature-sensitive products within specified temperature ranges throughout the entire process from production to the end consumer. Products such as food, pharmaceuticals, biologicals, and chemicals require controlled environments to maintain quality and safety. In this context, the cold chain is regarded not only as a logistical solution but also as a strategic infrastructure for public health, consumer confidence, and economic sustainability. Especially during crisis periods such as the global pandemic, the challenges faced in vaccine logistics have clearly revealed the fragility of this system and the urgent need for improvement [1].

However, current cold chain systems still suffer from shortcomings such as centralized data structures, manual processes, and limited transparency. The collection of critical environmental data such as temperature, humidity, and location throughout the supply chain is largely left to the discretion of logistics providers. These data are stored in centralized databases, which creates a risk of alteration [2] and makes product safety and transparency difficult to assure [3].

With the advancement of technology, digital innovations are increasingly being employed to fill this gap. Recently, models that fundamentally transform cold chain monitoring have been proposed through the integration of the Internet of Things (IoT) and blockchain technologies. IoT enables real-time monitoring of environmental parameters like temperature, humidity, and vibration, whereas blockchain provides tamper-proof, decentralized, and transparent storage of such data [4]. As a result, it becomes possible to provide both authorities and end users with reliable and traceable information about product conditions [5].

The literature extensively discusses how environmental data collected via IoT devices can be utilized in decentralized systems, focusing on technical aspects such as energy efficiency, data security, and synchronization. Several studies confirm that blockchain–IoT integration enhances traceability, although scalability and security remain open challenges. In particular, the long-term data collection by low-power devices with limited energy resources, the uninterrupted transmission of this data, and its real-time synchronization with the system are among the major challenges. Wisessing and Vichaidis [6] highlighted the energy limitations, low bandwidth, and delays in data synchronization encountered in IoT-based cold chain solutions and demonstrated that such technical bottlenecks can lead to significant data losses at the endpoints of the chain.

Therefore, this study aims to address the persistent challenges of centralized data structures, limited transparency, and the lack of cross-organizational trust in cold chain logistics. Specifically, it proposes a permissioned blockchain framework based on Hyperledger Fabric, integrated with IoT sensors, to ensure reliable and tamper-proof recording of environmental data across producers, carriers, and retailers. The main contributions of this study are as follows: (i) development of a multi-organizational blockchain network architecture ensuring role-based and secure collaboration; (ii) implementation of an IoT-integrated data acquisition and API layer for real-time environmental monitoring; (iii) design of a consumer-oriented web interface enabling blockchain-backed product traceability; and (iv) experimental evaluation of system scalability and performance using Hyperledger Caliper. Together, these contributions provide a comprehensive solution that bridges technical and managerial gaps in blockchain-enabled cold chain logistics.

The remainder of this paper is structured as follows. Section 2 reviews the related literature, Section 3 describes the proposed methodology, Section 4 presents the performance analysis, Section 5 discusses the findings, and Section 6 concludes the study with future research directions.

2. Related Works

In the context of cold chain logistics, blockchain- and IoT-based solutions have been explored in the literature across various sectors and modeled according to different application domains. Within this scope, the existing studies are categorized here into four main groups based on their thematic similarity: blood and vaccine cold chains, food supply chains, industrial and pharmaceutical logistics, and integrated system architectures.

Blood and vaccine cold chain applications are among the most frequently studied areas of blockchain-based traceability systems. For instance, Kim et al. [7] proposed a model that enables tracking of blood components at every stage and ensures secure data transmission across the supply chain. The study emphasizes the strengths of data integrity, system transparency, and proper sequencing of operations. In contrast, Asokan et al. [8] proposed a simpler architecture to reduce system complexity, placing emphasis on reliability and data immutability. The study by Kim et al. [9], notable for its user-centered design, aimed to facilitate system usability for both donors and recipients. Nevertheless, most of these studies provide limited detail regarding authorization policies, cross-chain interoperability, and performance metrics. In the context of vaccine supply chains, studies such as [1,2] stand out in the literature. In addition, Arsheen and Ahmad [10] introduced ImmuneChain, a vaccine cold chain framework built on Hyperledger Fabric with RAFT consensus and ABAC-based authorization, providing secure and transparent vaccine traceability.

In the ImmuneChain framework, access control is implemented through an attribute-based access control (ABAC) approach. In this model, users’ access privileges are determined by the verification of predefined attributes. While attribute-based control provides high flexibility, it also increases administrative complexity as the number of attributes grows.

In the proposed study, a role-based access control (RBAC) model is adopted for managing permissions. This approach organizes authorization through predefined roles assigned to each organization and simplifies the administration of access policies. Each role performs operations corresponding only to its defined responsibilities, and when a new organization joins the network, the authorization process is completed merely by assigning the appropriate role. This structure enables more consistent and scalable management of privilege delegation and identity handling across multi-stakeholder cold chain environments. The Membership Service Provider (MSP) and Organizational Unit (OU) components in the Hyperledger Fabric architecture inherently support this model, ensuring that role-based access control is seamlessly integrated throughout the system. Consequently, the proposed framework provides a reliable solution for permissioned blockchain environments, where institutional roles are explicitly defined and access control is maintained with architectural coherence.

Notably, Sreenu et al. [2] proposed a cyber-physical system architecture that enables the transmission of environmental data, such as temperature monitoring, to the blockchain. Mendonça et al. [1] integrated sensor data into the blockchain via the Ethereum network and proposed a global system for healthcare logistics. However, both studies addressed performance, energy consumption, and data security only as secondary considerations. Similarly, while Zeng et al. [11] proposed a solution targeting the tracking of emergency medical products, the issues of system sustainability and potential performance bottlenecks were not sufficiently addressed.

In the fields of industrial and pharmaceutical logistics, the use of permissioned blockchain systems such as Hyperledger Fabric has become prominent. Within this scope, Rehan et al. [12] present a framework based on the Hyperledger architecture to enhance data integrity and transparency across organizations in the supply chain. Although access control, identity management, and data integrity mechanisms are carefully designed in the study, performance testing remains limited. The study in [13] specifically aims to monitor authorized transactions on the blockchain. This structure is valuable in terms of regulatory compliance and internal auditing needs. Another study [14], focusing on pharmaceutical counterfeiting, proposes a rather simple verification mechanism. While this simplifies the model, it is potentially limiting when faced with the complexity of real-world supply chains. Additionally, Kutybayeva et al. [15] aim to contribute to decision support systems by integrating blockchain with big data platforms, thereby introducing a novel approach grounded in data analytics.

In studies focusing on the food supply chain, concepts such as quality assurance, consumer trust, and food safety are more prominently emphasized. Wisessing and Vichaidis [6] proposed a framework that enables temperature data collected via IoT sensors to be recorded on the Sawtooth blockchain, thereby providing consumers with verifiable information about the environmental conditions of the product. However, issues such as system scalability and the assurance of data accuracy were addressed only superficially. There are also studies that approach blockchain as a tool for certification, allowing the verification of food authenticity and quality information through the chain [5]. Several studies [16,17] have been evaluated particularly within the context of producer–consumer trust relationships, focusing on tracking the history within the chain to enhance transparency. Nonetheless, critical system-level issues like security, authentication, and access authorization were largely overlooked.

Lastly, examples of integrated system architectures in which blockchain operates in conjunction with IoT, RFID, big data, or other digital monitoring systems are relatively limited. Wang et al. [18] present a model that directly links RFID data with the blockchain, achieving item-level alignment between physical and digital records. This particularly allows the digital verification of systems that provide physical traceability within the cold chain. Some studies have focused on real-time data sharing by integrating IoT sensors with blockchain technology [6,11]. However, critical parameters such as system security, data encryption levels, and energy consumption per transaction are generally not discussed in detail in these systems. A comprehensive evaluation of these systems in terms of scalability and performance optimization has not been conducted.

The comparative characteristics of blockchain- and IoT-based cold chain solutions are summarized in Table 1. The studies are organized chronologically, reflecting the gradual transition from earlier conceptual approaches to more recent, implementation-oriented research. While most existing works provide conceptual models or scenario-driven demonstrations, they typically lack full implementation and performance evaluation. In contrast, the proposed study contributes not only a complete implementation on Hyperledger Fabric but also a systematic performance assessment using Hyperledger Caliper, thereby addressing one of the most critical gaps identified in the literature.

The literature summarized in Table 1 reveals that integrating blockchain technology into cold chain systems offers significant benefits in terms of product safety, transaction transparency, and chain integrity. However, most existing studies either focus on specific application domains or remain limited to conceptual system proposals. Moreover, aspects such as systematic access control, cross-organizational transaction security, data analytics integration, and performance evaluation have either been overlooked or addressed only superficially.

This study introduces a model designed to bridge these gaps, offering a Hyperledger Fabric-based framework that securely logs environmental data from IoT devices, facilitates access-controlled and role-specific data sharing among multiple organizations, and allows for the assessment of network performance. This integrated approach, which has few examples in the existing literature, aims to offer a unique and comprehensive contribution by considering both technical infrastructure and institutional requirements.

3. Methodology and Implementation

Cold supply chain applications require monitoring of products in terms of temperature, humidity, and environmental conditions, along with maintaining auditable historical records. In such systems, it is crucial that multiple stakeholders can operate on a secure, controllable, and tamper-proof data infrastructure. To meet these requirements, this study employs Hyperledger Fabric, a permissioned blockchain platform designed for enterprise-level solutions. The choice of Fabric over alternatives such as Ethereum or Sawtooth is motivated by its performance and architectural advantages. Prior studies have shown that Fabric achieves lower latency and higher throughput in private networks compared to Ethereum [20], while its modular consensus mechanisms allow flexibility and scalability across diverse scenarios [21]. Moreover, Fabric’s permissioned model and channel-based privacy controls are particularly suited for multi-stakeholder supply chains where selective data sharing is required [22].

Owing to its modular and customizable architecture, Fabric enables secure data sharing among multiple organizations and is architecturally well-suited for high-sensitivity applications such as cold supply chains. As emphasized by Ravi et al. [23], Fabric’s channel mechanism and modular consensus frameworks provide selective transparency and privacy, allowing producers, carriers, and retailers to collaborate on the same network while safeguarding sensitive business information.

The Fabric network operates based on three fundamental components:

- Peer nodes, which store and process product information, environmental sensor data, and historical records;
- Orderer nodes, which sequence all transactions and assemble them into blocks;
- Certificate Authority (CA) services, which define user and node identities specific to each organization.

Each organization manages identity through its own CA service, and these identities are introduced to the network via the MSP infrastructure. This allows role-based authentication and authorization of all network participants. Such MSP-based identity management, combined with chaincode automation, enforces secure access while preserving transaction integrity through cryptographic signatures [23].

This structure not only enables collaboration among diverse actors, such as producers, carriers, and retailers, on the same blockchain network, but also ensures data privacy and role-based access using channels and private data collections. The architectural flexibility offered by Hyperledger Fabric provides a reliable and adaptable infrastructure for cold supply chain applications.

3.1. System Architecture and Application Configuration

Hyperledger Fabric provides a permissioned blockchain framework characterized by its modular consensus protocols, execute–order–validate transaction flow, and MSP for identity management, ensuring both transparency and data privacy in distributed environments [24]. The system developed in this study is configured on a multi-organizational blockchain network based on Hyperledger Fabric. The application enables three distinct organizations, representing the production, transportation, and retail stages of the cold supply chain, to collaborate on the same network. Each organization is independently positioned within the network with its own peer nodes, MSP, CA service, and chaincode infrastructure.

The organizations are integrated through a shared channel, allowing all transactions on the network to be observable and verifiable by all parties. Each peer node executes transactions on the channel using its own chaincode and ledger components and can access only the data it is authorized to view. The chaincode handles operations such as recording product identifiers on the blockchain, processing sensor data with timestamps, and querying historical records.

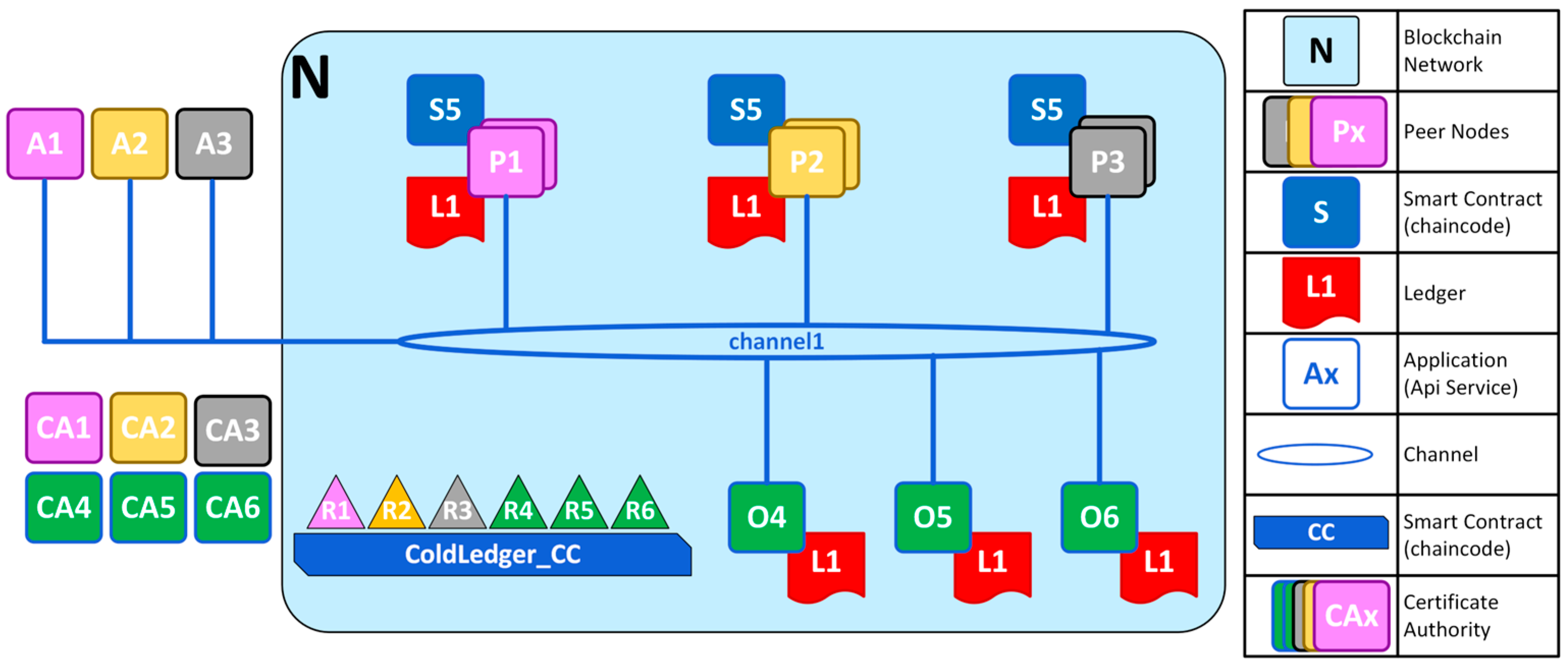

The general structure of the system architecture is presented in Figure 1. In this architecture, the peer nodes of each organization are identified through their respective CA services, and instances of the chaincode are executed concurrently on each peer. Although the organizations are connected to the same channel, they can only operate on the data within their own scope of authorization. This architecture enables secure collaboration among multiple organizations while also fulfilling the requirements of data privacy and transaction integrity.

According to the cold supply chain model illustrated in Figure 1, P1 represents the producer organization, P2 the transporter organization, and P3 the retailer organization. Each organization is configured with its own peer nodes, CA services (CA1–CA6), Representational State Transfer (REST) API applications (A1–A3), and chaincode instances. All organizations communicate through a shared channel.

Leveraging the distributed architecture, chaincode operations and the submission of environmental sensor data can only be performed by authorized organizations; each organization securely executes the transaction and record flows specific to its role within the system. For instance, the producer organization records the initial RFID identification and production temperature of the product; the transporter organization writes temperature and humidity values related to shipment onto the blockchain, while the retailer records post-delivery verification and inspection data into the system. Consequently, data from each stage of the chain is stored on the network in an integrated, auditable, and immutable manner.

3.2. Chaincode Design and Functional Components

The chaincode developed in this study is designed to ensure the secure and authorized recording of environmental conditions throughout the cold supply chain process. Functioning as a smart contract within the Hyperledger Fabric architecture, the chaincode enables the execution, validation, and storage of transactions recorded on the blockchain. In the context of the application, the goal is to record each product’s temperature, humidity, and location data onto the blockchain at relevant transport stages, ensuring these records are accessible in an auditable manner.

The developed chaincode operates with organization-specific authorization mechanisms and permits only predefined roles to perform specific operations. With this structure, for instance, the producer organization can register product identifiers, while the transporter organization is allowed to write only environmental condition data to the blockchain. This role-based transaction control is enforced through attributes defined within user identities.

To illustrate the functionality of the chaincode, the logEnvironmentData function, defined as shown in Listing 1, is one of the key components responsible for recording the environmental data of a product, measured at a specific location, onto the blockchain. This function takes temperature, humidity, and timestamp values along with a LocationID; combines them into an EnvironmentLog structure; and permanently records the data on the ledger using a unique key.

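Listing 1. Role-based environmental data logging on the blockchain.

    // logEnvironmentData records one environmental reading on the ledger.
    // Expected args: locationID, temp, humidity, timestamp.
    // Requires the fmt and encoding/json packages plus the Fabric shim and
    // peer (aliased sc) packages imported by the surrounding chaincode file.
    func (s *SmartContract) logEnvironmentData(APIstub shim.ChaincodeStubInterface, args []string) sc.Response {
        if len(args) != 4 {
            return shim.Error("Expecting 4 arguments: locationID, temp, humidity, timestamp")
        }

        log := EnvironmentLog{
            LocationID: args[0],
            Temp:       args[1],
            Humidity:   args[2],
            Timestamp:  args[3],
        }

        // Unique key: one ledger entry per location and timestamp.
        key := fmt.Sprintf("ENV_%s_%s", log.LocationID, log.Timestamp)

        // Serialize the record; do not ignore a marshalling failure.
        logAsBytes, err := json.Marshal(log)
        if err != nil {
            return shim.Error("Failed to serialize environment data: " + err.Error())
        }

        if err := APIstub.PutState(key, logAsBytes); err != nil {
            return shim.Error("Failed to log environment data: " + err.Error())
        }
        return shim.Success(nil)
    }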

This structure enables environmental data collected via IoT sensors during transportation to be recorded immutably on the blockchain with timestamps, establishing a reliable and traceable foundation for subsequent stages of the cold chain. In addition, the system uses private data collections to keep sensitive information that must be shared only among specific organizations separate from the ledger visible to all channel members.

This structure applies role-based access control (RBAC) based on user roles defined within the MSP framework of Hyperledger Fabric. Each transaction type is executed only by the role authorized to perform it. For instance, the producer can register product information, while the carrier can record temperature and humidity data. Compared to the ABAC model, this approach reduces administrative complexity and facilitates the integration of new participants into the system.
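The role-to-operation mapping described above can be sketched as a simple lookup table. This is an illustration under stated assumptions, not the authors' chaincode: the role names and the function names registerProduct and recordInspection are hypothetical, and only logEnvironmentData appears in the paper. In real chaincode the caller's role would be read from the client identity (for example via the client identity helpers shipped with the Fabric chaincode libraries) rather than passed in as a string.

```go
package main

import "fmt"

// permissions maps each role to the chaincode functions it may invoke.
// Role names and two of the function names are illustrative assumptions.
var permissions = map[string]map[string]bool{
	"producer": {"registerProduct": true},
	"carrier":  {"logEnvironmentData": true},
	"retailer": {"recordInspection": true},
}

// authorize returns nil when the role may call the function and an
// error otherwise. Unknown roles are rejected because the lookup on a
// missing map entry yields false.
func authorize(role, function string) error {
	if permissions[role][function] {
		return nil
	}
	return fmt.Errorf("role %q is not permitted to call %s", role, function)
}

func main() {
	fmt.Println(authorize("carrier", "logEnvironmentData")) // <nil>
	fmt.Println(authorize("carrier", "registerProduct"))    // permission error
}
```

Onboarding a new organization then amounts to assigning it one of the existing roles, which is the administrative simplification the RBAC model is meant to provide.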

The deployment of the chaincode was carried out in accordance with Hyperledger Fabric’s Lifecycle procedures; all organizations installed the chaincode, approved the defined version, and completed the commit process at the channel level. All chaincode operations are executed exclusively by organization nodes that support TLS-enabled communication and are authenticated.

3.3. API Layer and System Integration

An API layer has been implemented to ensure that the developed system is accessible and usable by external users or third-party applications. This layer was developed based on REST principles in a Node.js environment and integrated into the distributed network architecture as a Docker container. The API server securely transmits transaction requests from the client to the chaincode and returns the results of those transactions to the client while ensuring that responses

The primary role of the API layer is to enable clients to invoke chaincode functions and manage identity authentication within the network. Network configuration files, organizational identity information, and certificates are defined through a connection profile, while user authorization is handled using Fabric’s wallet structure. Once a transaction is endorsed, ordered, and committed to the ledger, the API layer returns an ACK to confirm its immutable recording, allowing clients to distinguish between successful and failed operations.

Data writing operations, such as product registration or environmental data submission, are triggered through HTTP POST requests. The API server forwards these to the appropriate peer and channel for invocation. Data retrieval operations, such as accessing a product’s environmental history or location-based records, are handled through HTTP GET requests that invoke chaincode query functions. Both write and query requests follow a unified handling pattern to ensure consistent client interaction.

User authentication and role-based control are enforced via the MSP and CA mechanisms in Hyperledger Fabric. Each user is validated through their digital certificate, and only predefined roles are granted access to specific chaincode functions, ensuring secure and role-based data access.

The developed API structure provides a secure and extensible middleware layer that enables integration of the system with web applications, mobile apps, or IoT devices. On-chain transaction security is maintained, while a decentralized control model is established for user interaction.

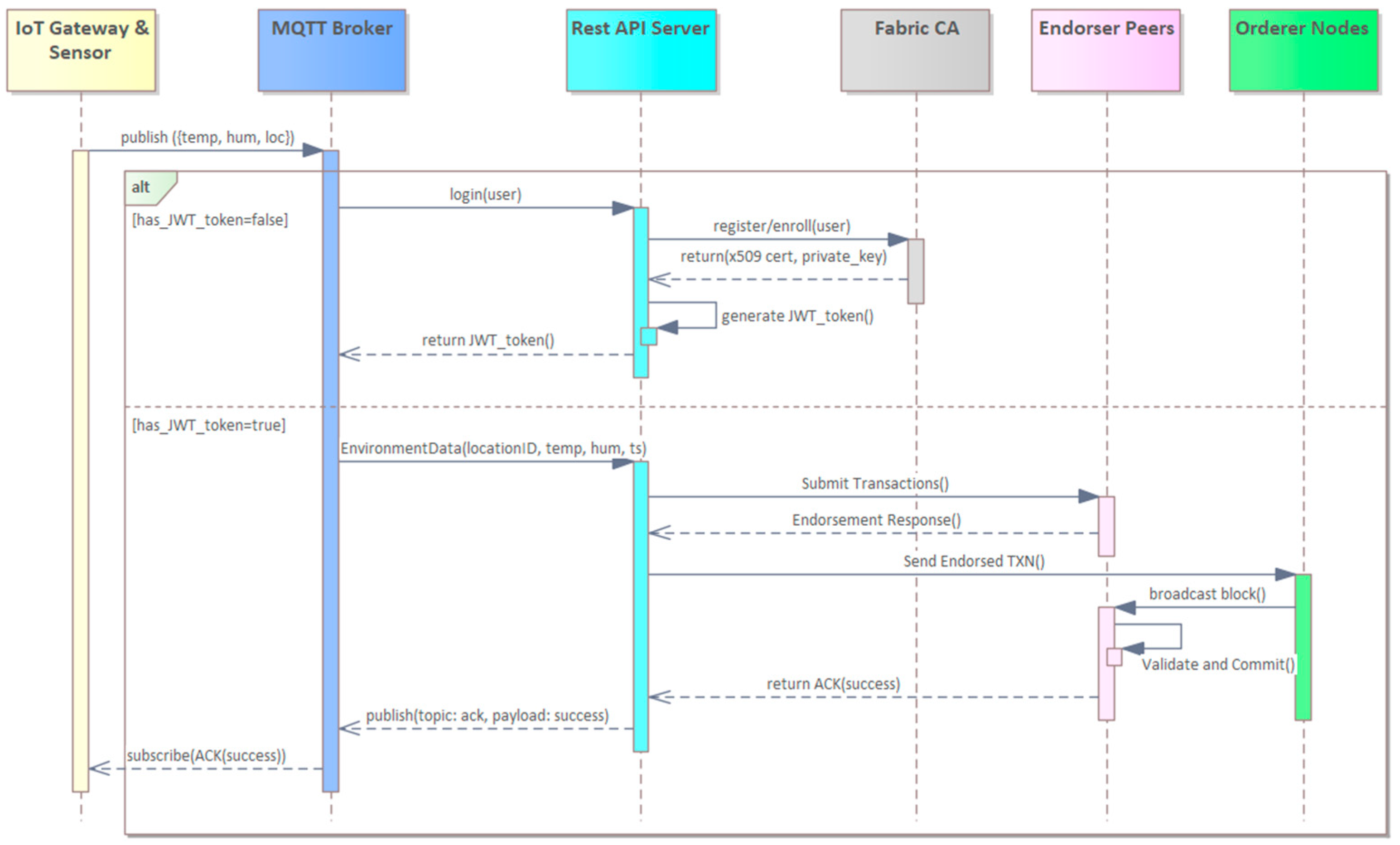

Figure 2 illustrates the step-by-step process of transmitting sensor data to the Hyperledger Fabric network via the REST API. This sequence highlights how authentication, data transmission, and chaincode invocation operate in temporal order, without redundant validation steps that could increase latency.

As shown in

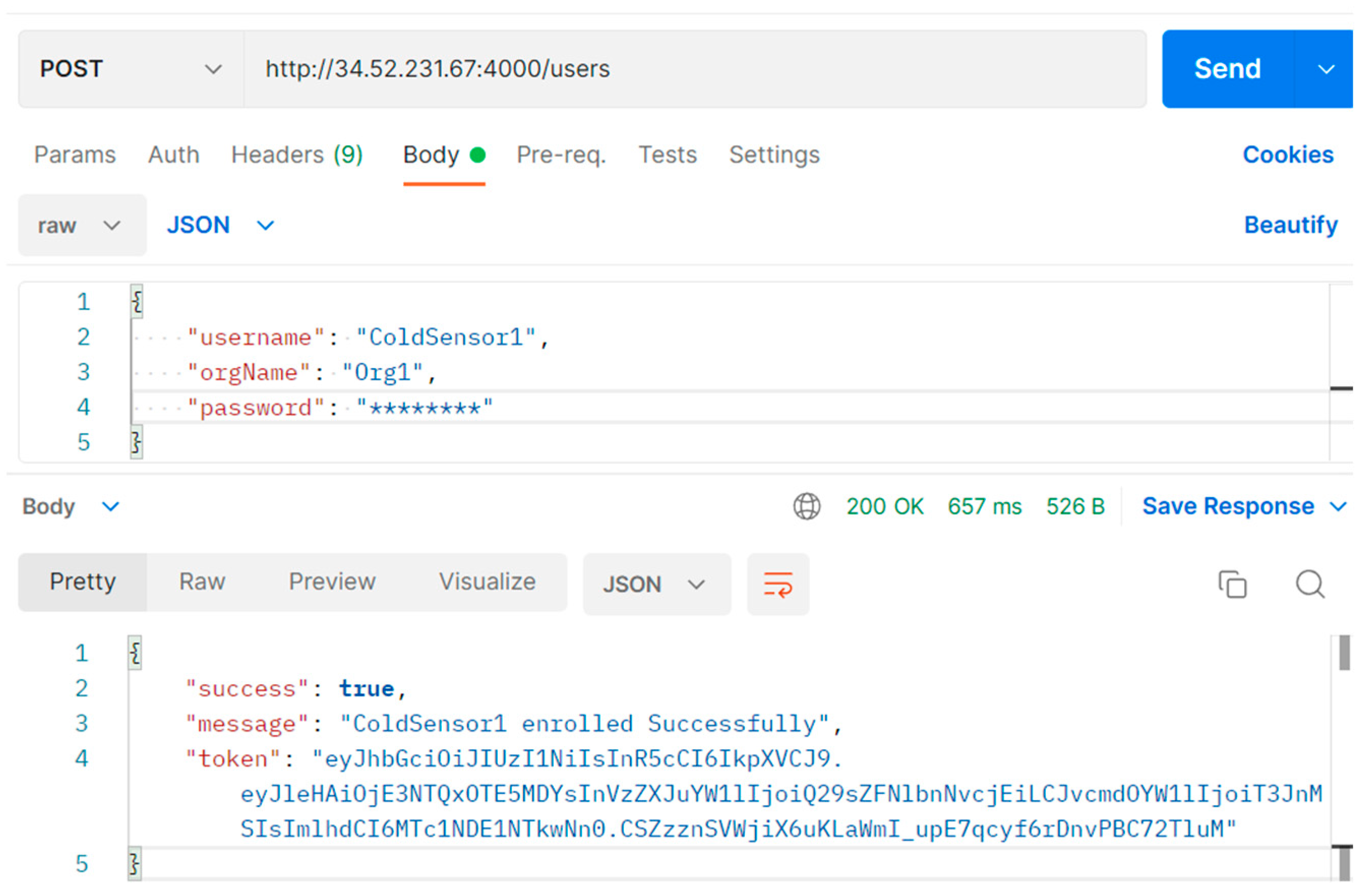

Figure 2, temperature, humidity, and location data from IoT sensors are first transmitted via an MQTT broker. If not linked to a verified user, A JSON Web Token (JWT)-based authentication process is initiated through the API server. During this process, Fabric CA registers and enrolls the user, generating digital credentials (x.509 certificate and private key). A JWT is then created and returned to the client for authenticated interactions. This process is detailed in

Figure 3, highlighting the flow from enrollment to token-based authorization.

As illustrated in Figure 3, the API server requires a valid enrollment ID and secret registered with Fabric CA. Upon successful verification through the CA’s enrollment function, a signed JWT is generated and returned to the client, ensuring that only authenticated users can submit data to the blockchain.

Following JWT verification, the user submits environmental data (Location ID, temperature, humidity, timestamp) to the API server. The server invokes the corresponding chaincode function, and the transaction is:

1. Endorsed by peer nodes;
2. Sent to orderer nodes for block inclusion;
3. Validated and immutably committed to the ledger, after which an ACK is returned to the client.

Through this architecture, each environmental data entry is securely stored in an auditable and tamper-proof manner, ensuring integrity and compliance with each organization’s authorized privileges.

3.4. Integration of IoT and Blockchain in the Cold Chain System

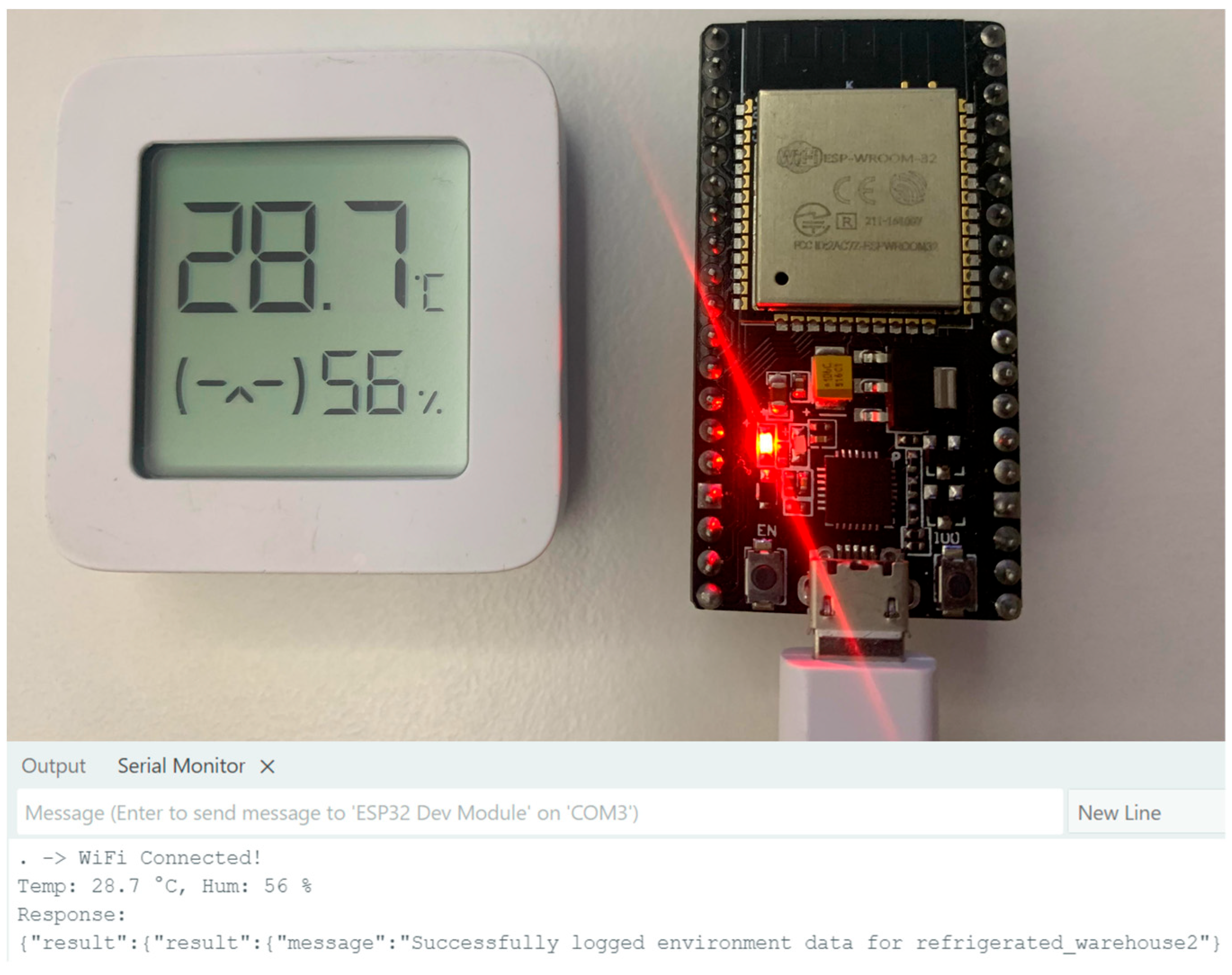

Real-time monitoring of environmental variables such as temperature and humidity plays a pivotal role in ensuring product safety and quality throughout cold chain logistics. In this context, Bluetooth Low Energy (BLE) sensors and ESP32 microcontrollers with low-power wireless communication capabilities were employed to experimentally validate blockchain-based data transmission. The sensor periodically transmits environmental data to a gateway via the MQTT protocol, after which the data is routed to the processing layer within the system architecture.

The environmental sensing module was configured using a low-power Bluetooth Low Energy (BLE 4.2) temperature–humidity sensor offering an accuracy of ±0.3 °C for temperature and ±2% for relative humidity, with a resolution of 0.1 °C and 1%, respectively. The ESP32-based gateway establishes a Bluetooth connection with the sensor, retrieves these periodic readings, and forwards them to the API layer via the MQTT protocol. The sampling interval was set to one reading per minute to achieve a balance between temporal precision and power efficiency. To minimize energy consumption during transportation, the ESP32 gateway operates in a deep-sleep mode, waking up for approximately five seconds every sixty seconds to receive and transmit data. This configuration enables uninterrupted monitoring over extended cold chain durations, even in energy-limited environments.

During transportation, a continuous power supply to the sensor and gateway nodes cannot be guaranteed, which is why the ESP32-based gateway follows the duty-cycled deep-sleep profile described above (about 5 s awake in every 60 s) to collect readings from the BLE sensor and forward them via MQTT.

Experimental measurements showed that the ESP32 drew an average current of about 85 mA in active mode and 0.014 mA in deep-sleep mode. Weighted over the 60 s duty cycle (5 s active, 55 s asleep), this gives an average current of approximately (85 mA × 5 s + 0.014 mA × 55 s) / 60 s ≈ 7.1 mA. With a 2000 mAh Li-ion battery, this corresponds to roughly 2000 mAh / 7.1 mA ≈ 282 h, or about 11.7 days of continuous operation under ideal conditions. Considering real-world variations in temperature, signal strength, and transmission intervals, the effective operation time typically ranges between 7 and 10 days. These results confirm that the proposed low-power design is suitable for long-duration cold chain monitoring and significantly reduces maintenance requirements related to power supply during transportation.
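The battery-life estimate follows from a time-weighted average of the two operating modes. The short program below reproduces that arithmetic from the figures reported above; the function names are introduced here for illustration, and the calculation ignores battery self-discharge and the current drawn by the BLE sensor, as the ideal-conditions figure does.

```go
package main

import "fmt"

// averageCurrentmA returns the time-weighted mean current over one duty
// cycle: activeS seconds in active mode, the rest of periodS asleep.
func averageCurrentmA(activemA, sleepmA, activeS, periodS float64) float64 {
	return (activemA*activeS + sleepmA*(periodS-activeS)) / periodS
}

// runtimeDays converts battery capacity and mean current to days of operation.
func runtimeDays(capacitymAh, avgmA float64) float64 {
	return capacitymAh / avgmA / 24
}

func main() {
	avg := averageCurrentmA(85, 0.014, 5, 60)
	fmt.Printf("average current: %.1f mA\n", avg)                    // average current: 7.1 mA
	fmt.Printf("ideal runtime: %.1f days\n", runtimeDays(2000, avg)) // ideal runtime: 11.7 days
}
```

Because the active phase dominates the average, halving the awake time or doubling the sampling period would roughly double the ideal runtime, whereas further reducing the deep-sleep current would have almost no effect.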

As illustrated in Figure 4, the sensor’s temperature and humidity readings are initially sent to an MQTT broker and subsequently forwarded to the REST API server using an authenticated device identity. The system is designed to accept data exclusively from authorized devices. Accordingly, each device must possess a valid JWT to transmit data. Upon initial connection or token expiration, the device triggers an identity verification process to request a new JWT.

Following successful JWT authentication, the device transmits environmental parameters such as temperature, humidity, location, and timestamp to the REST API endpoint. The API server then forwards this data to the appropriate chaincode function, which logs it as a transaction on the Hyperledger Fabric network. The transaction is first endorsed by peer nodes, subsequently ordered by the orderer nodes, and ultimately committed to the ledger in an immutable manner. This architecture ensures that, despite the continuous flow of data from devices, system resources are accessed solely by authorized entities. Consequently, the system maintains decentralized identity management while delivering a secure and reliable data processing infrastructure.

3.5. Consumer-Level Access and Web Interface Design

A two-level web interface architecture has been implemented on top of the developed API server to facilitate system interaction. The first level enables authorized actors such as producers, carriers, and retailers to access and monitor the system. Authentication and authorization protocols are employed to protect this level, restricting each organization’s access to its designated data domain.

At the second level, the system provides an unauthenticated public interface for open data access. This interface was developed to support the system’s transparency objective and represents one of the core contributions of this study. Consumers access the interface by scanning the product’s QR code, which initiates a query for the environmental records linked to the product, such as temperature and humidity, including their temporal and spatial attributes. These queries are executed via chaincode functions such as “QueryEnvironmentLogsByProductID” and are exposed to the public through REST API endpoints.

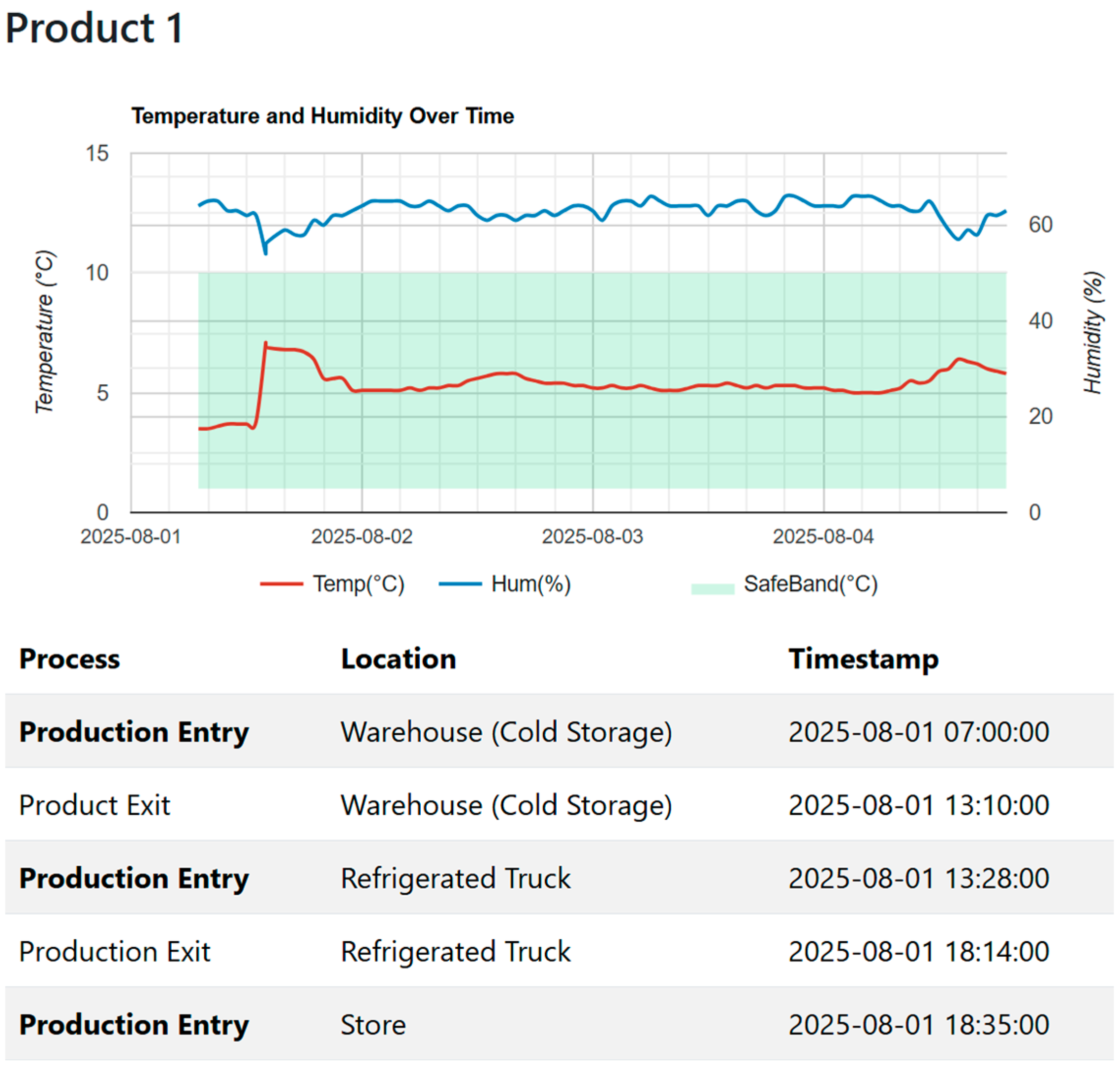

The final functionality of the user interface is completed with a web-based visualization module that provides product-level traceability. As shown in Figure 5, this module graphically presents the historical temperature and humidity values of each product over time. By scanning the QR code on the product, users can access and verify the environmental conditions encountered at each logistics point from production to retail via the blockchain.

In the system, a predefined permissible temperature range (SafeBand) is assigned to each product record and visualized as a green zone on the graph in Figure 5. Through graphical monitoring, users can easily determine whether the product has remained within the defined limits during transportation. As illustrated, the temperature and humidity of the sample product remained within the safe range throughout its transportation, indicating that no violations occurred along the chain.
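The SafeBand check that the graph conveys visually can also be expressed programmatically. The sketch below flags readings that fall outside a permissible temperature range. The type and field names, the 2 to 8 °C band, and the sample readings are assumptions chosen for illustration; the paper does not specify the band values or the record layout.

```go
package main

import "fmt"

// SafeBand is the permissible temperature range for a product (inclusive).
type SafeBand struct {
	MinC, MaxC float64
}

// Reading is one timestamped temperature measurement.
type Reading struct {
	Timestamp string
	TempC     float64
}

// violations returns every reading whose temperature lies outside the band.
func violations(band SafeBand, readings []Reading) []Reading {
	var out []Reading
	for _, r := range readings {
		if r.TempC < band.MinC || r.TempC > band.MaxC {
			out = append(out, r)
		}
	}
	return out
}

func main() {
	band := SafeBand{MinC: 2, MaxC: 8} // hypothetical band
	history := []Reading{
		{"2025-01-15T10:00:00Z", 4.1},
		{"2025-01-15T10:01:00Z", 8.6}, // excursion above the band
		{"2025-01-15T10:02:00Z", 5.0},
	}
	for _, v := range violations(band, history) {
		fmt.Printf("out of range at %s: %.1f °C\n", v.Timestamp, v.TempC)
	}
}
```

An empty result corresponds to the situation shown for the sample product, where every reading stays inside the green zone.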

The web interface is built on React and is integrated with the REST API layer to facilitate user interaction. When a user scans a QR code, the client interface sends an HTTP GET request containing the identifier to the API server. This request triggers a chaincode query for the corresponding product ID. The API server forwards the query to the Hyperledger Fabric network via an authorized peer node and returns the resulting environmental data, such as temperature, humidity, and location, to the client. All data are securely stored on the Hyperledger Fabric distributed ledger in accordance with predefined identity and access control policies. This architecture ensures that the information presented to the user is immutably recorded on the ledger, providing a significantly higher level of data integrity and trust compared to centralized systems. Beyond its graphical role, the web interface serves as the final verification layer linked directly to on-chain records in the permissioned blockchain system.

The public query functionality reinforces the system’s transparency principle and enables immutable verification of historical data for sensitive products transported under cold chain conditions. It ensures blockchain-backed traceability, allowing consumers to examine the environmental conditions experienced by a product throughout its logistics lifecycle. Moreover, the design sustains secure, role-constrained inter-organizational processes, thereby enabling traceability without undermining system-level confidentiality or integrity.

4. Performance Analysis

To evaluate the performance of the Hyperledger Fabric-based system, experimental measurements were conducted in a multi-virtual machine (VM) environment. The primary focus of these measurements was to assess transaction latency, throughput (transactions per second, TPS), and scalability behavior. During the experiments, the Hyperledger Caliper benchmarking tool was employed, and performance metrics were obtained based on tests performed under varying transaction send rates.

Three primary test scenarios were implemented during the benchmarking process. First, open-type transactions, which involve one read and one write operation, were executed and measured. Second, transfer-type transactions, characterized by more complex read–write combinations, were tested to evaluate their impact on latency. Third, query-type transactions, which only retrieve existing ledger records, were measured to characterize read-only workloads. This experimental study was designed to observe how the Fabric platform performs under both low- and high-volume transaction models involving simple and complex operations.

Table 2 presents the architectural configuration of the Hyperledger Fabric network used in the experiments and the Caliper benchmark parameters applied during the throughput and latency evaluation.

The performance of the system under different transaction types and send rates is presented in Table 3. Three primary transaction categories were evaluated in this study. Open transactions represent simple scenarios consisting of a single read and a single write operation. Transfer transactions, in contrast, involve more complex read–write combinations and are designed to model processes such as product handovers along the supply chain. Query transactions are limited to retrieving existing ledger records without performing write operations, thereby imposing a lower computational burden on the system. These three transaction types were selected to capture system behavior under varying workload conditions.

The results indicate that, under varying workloads, the average latency of open transactions ranges between 2 and 5 s, while throughput increases with higher send rates. This demonstrates that the system provides a scalable structure for simple read–write scenarios. Transfer transactions showed higher latency and lower throughput than open transactions. Nevertheless, their latency growth did not follow an exponential trend, which indicates that the proposed system maintains stable behavior under increasing transaction loads. Query transactions were the most efficient, delivering 442 TPS and 818 TPS at send rates of 500 and 1000 TPS, with average latencies of 0.21 s and 0.26 s, respectively. This result validates the system’s strong performance in read-only workloads.

Moreover, as shown in Table 3, no transaction failures were recorded in any of the scenarios. This finding confirms that the proposed system not only delivers high performance but also ensures reliability and transaction correctness. The fact that latency increases remained controlled and predictable suggests that the system is suitable for practical deployment even on modest hardware configurations. Consequently, the critical requirements of cold chain applications, such as rapid and trustworthy verification of product history and environmental conditions, can be effectively fulfilled.

In particular, the query transactions demonstrated near-linear scalability when the send rate was increased from 500 to 1000 TPS: throughput rose from 442 to 818 TPS, while average latency increased only from 0.21 to 0.26 s. This observation indicates that read-only operations in the proposed architecture can efficiently utilize network and computational resources without introducing bottlenecks. The results therefore confirm that the system can sustain high query workloads with stable latency, ensuring consistent responsiveness even under heavy load conditions.
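The arithmetic behind this scalability claim can be made explicit with a short calculation. The throughput, send-rate, and latency values below are the query results reported in the text; the helper names and the "delivered fraction" metric (throughput divided by send rate) are introduced here for illustration only.

```python
def delivered_fraction(throughput_tps: float, send_rate_tps: float) -> float:
    """Share of the offered load that the network actually processed."""
    return throughput_tps / send_rate_tps


def relative_change(old: float, new: float) -> float:
    """Relative change from old to new, e.g. 0.25 means +25%."""
    return (new - old) / old


# Query-transaction results quoted in the text.
low = {"send_rate": 500, "throughput": 442, "latency_s": 0.21}
high = {"send_rate": 1000, "throughput": 818, "latency_s": 0.26}

for run in (low, high):
    frac = delivered_fraction(run["throughput"], run["send_rate"])
    print(f"send rate {run['send_rate']} TPS: {frac:.1%} of offered load delivered")

tput_gain = relative_change(low["throughput"], high["throughput"])
latency_gain = relative_change(low["latency_s"], high["latency_s"])
print(f"throughput change: {tput_gain:+.1%}")   # +85.1%
print(f"latency change:    {latency_gain:+.1%}")  # +23.8%
```

Doubling the send rate therefore raises throughput by about 85% while the delivered fraction drops from 88.4% to 81.8%. The latency rise of about 24% in relative terms corresponds to only 0.05 s in absolute terms.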

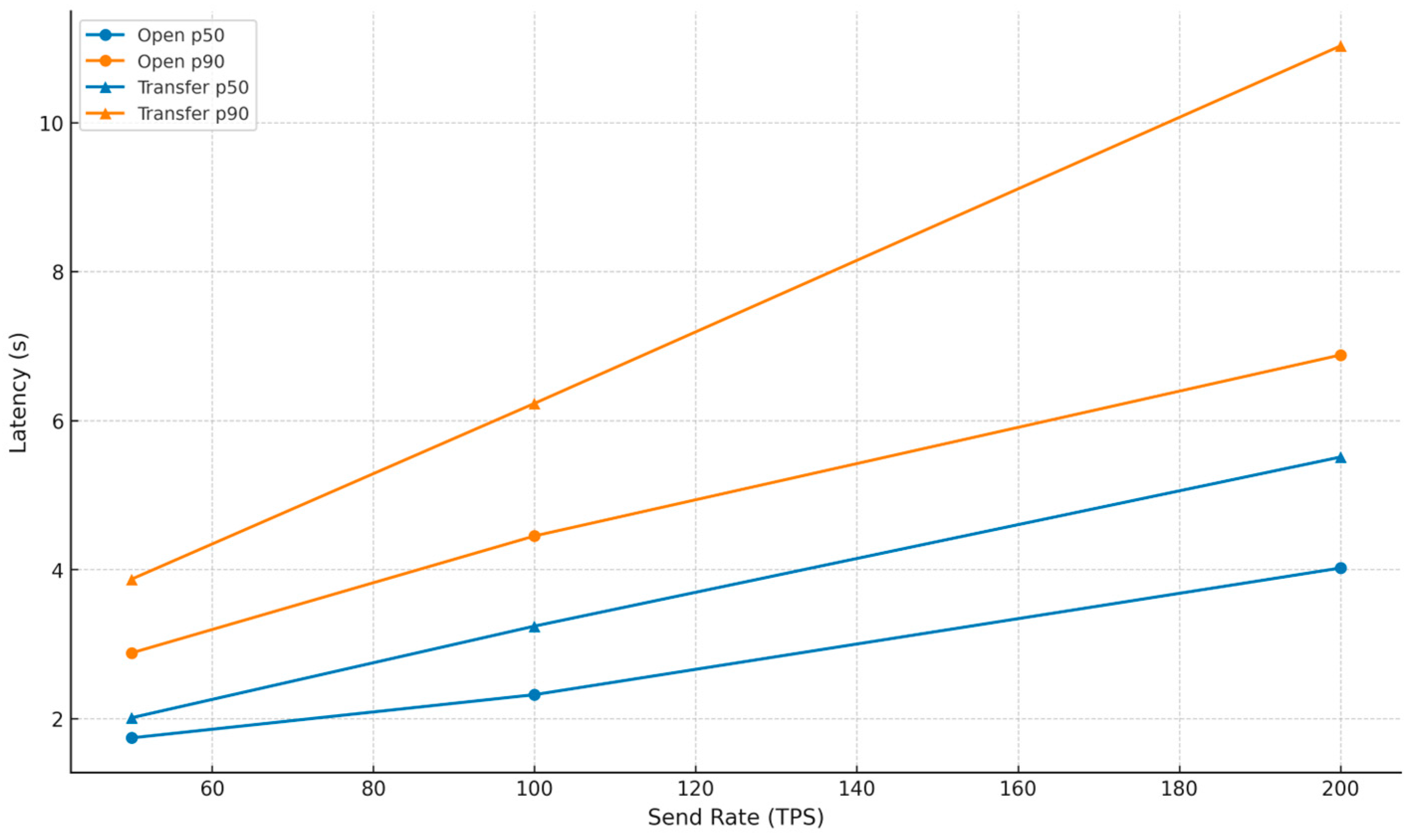

Figure 6 compares the latency distributions of open and transfer transactions based on the p50 and p90 metrics. The p50 value (median latency) represents the point below which 50% of all transactions are completed, reflecting the typical user experience. The p90 value indicates that 90% of transactions finish within this limit, capturing the system’s tail latency under heavy workloads. Taken together, these metrics provide a comprehensive understanding of both typical and extreme-case system behavior under stress.
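As a small worked example of these two metrics, the sketch below computes p50 and p90 from a list of per-transaction latencies. It uses the nearest-rank definition, which is an assumption made here; the benchmarking tool may use a different interpolation method, and the sample latencies are illustrative rather than measured.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the interval (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Illustrative latencies in seconds; not taken from the paper's results.
latencies_s = [1.8, 2.0, 2.1, 2.2, 2.3, 2.4, 2.6, 2.9, 3.4, 5.0]

p50 = percentile(latencies_s, 50)
p90 = percentile(latencies_s, 90)
print(f"p50 = {p50} s, p90 = {p90} s")  # p50 = 2.3 s, p90 = 3.4 s
```

In this sample the single 5.0 s outlier leaves p50 and p90 unchanged, which is why percentiles describe typical and tail behavior more robustly than the mean alone.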

In real-world cold chain scenarios, the network structure naturally expands over time as new organizations or additional peer nodes are incorporated. Therefore, it is critical to evaluate how the proposed system responds not only to varying transaction loads but also to an increased number of peer nodes. To this end, two scalability experiments were conducted: intra-organizational scaling and network-wide scaling.

Table 4 presents the results of horizontal scaling applied solely to the retailer organization (Org3). In this scenario, the other organizations were kept constant, while the number of peers in Org3 was increased from two to four and then to eight. The objective was to observe how the growth of peer nodes within a single organization affects overall network performance. The results indicate that increasing the number of peers within the same organization enables more efficient workload distribution and reduces network latency. Query transactions achieved up to a 20% reduction in latency and a 12% improvement in throughput, demonstrating that horizontal scaling within an organization helps balance resource utilization and enhances performance.

Table 5 summarizes the system-wide scaling scenario, in which all organizations were configured with four peer nodes each. In this configuration, every peer was deployed on a separate virtual machine (12 peer VMs in total, in addition to the orderer VMs). This setup eliminated CPU sharing among peers, resulting in a fully distributed (multi-host) architecture. The experiment measured the effect of peer expansion on system-wide latency and throughput. The results show a 15–27% decrease in average latency and a 9–19% increase in throughput across transaction types. Notably, transfer transactions exhibited approximately 16% lower latency, indicating that the distributed peer configuration effectively balanced computational and endorsement workloads. Furthermore, the observed reductions in the p50 and p90 latency percentiles confirm that the system maintained stable and predictable response times even under high transaction loads.

As shown in Table 6, the proposed framework provides clear advantages over previous blockchain-based cold chain systems in both scalability and transaction performance. Earlier studies, such as [1] and the Cyber-Physical Ecosystem [2], were mainly conceptual or simulation-based and lacked detailed scalability evaluation. Their single-host or limited setups resulted in high latency and restricted throughput due to non-optimized consensus mechanisms. Similarly, the Kubernetes-based system in [12] primarily focused on network orchestration and IoT connectivity rather than blockchain performance, showing a transaction delay of up to 48 s. In contrast, the proposed system operates on a distributed Hyperledger Fabric network that processes real IoT data under multi-host conditions. Using the Raft consensus with three orderers, it achieves a stable throughput of 818 TPS and an average latency of 0.26 s, demonstrating robust and scalable performance under varying workloads. Additional scalability experiments further verified that the system maintains high efficiency and stable latency even as the network size and peer count increase.

Beyond quantitative performance improvements, the proposed network also delivers qualitative enhancements in access control and system governance. While ImmuneChain [10] applied an ABAC policy to increase flexibility, it did not validate scalability in multi-organizational environments. The RBAC configuration adopted here ensures clearer administrative boundaries and simplified permission management across organizations. Furthermore, unlike earlier frameworks that used simulated data or single-channel setups, the proposed architecture integrates real IoT data and has been benchmarked on multiple virtual machines using Hyperledger Caliper. The results confirm consistent efficiency among distributed peers and show that the proposed approach effectively bridges the gap between prototype-level models and deployable blockchain–IoT systems for cold chain logistics.

The results demonstrate that, as the send rate increases, the latency of both open and transfer transactions rises; however, this growth follows a linear and predictable trend rather than an exponential surge. Open transactions consistently exhibit lower p50 and p90 values, whereas transfer transactions incur higher delays. The increase in p90 values particularly highlights the additional resource demands imposed by more complex operations. These findings confirm that the proposed system maintains a stable latency profile even as transaction loads grow, and that performance remains within acceptable limits under high-stress scenarios. The scalability analysis presented in Table 4 and Table 5 further supports this observation by demonstrating consistent performance gains under both local and network-wide peer expansion.

5. Discussion

The findings from the performance and scalability experiments provide valuable insights into both the technical and managerial dimensions of the proposed blockchain-based cold chain system. Technically, the results confirm that Hyperledger Fabric sustains high throughput and stable latency even under increasing transaction loads and multi-peer configurations. Query transactions reached up to 818 TPS with average latency below 0.3 s, while open and transfer transactions exhibited predictable, near-linear latency growth as the send rate increased. These results demonstrate that the proposed architecture can reliably support real-time cold chain operations without performance degradation, validating its practicality for deployment in production environments.

Scalability experiments further showed that intra-organizational and system-wide peer expansion improved throughput by up to 19% and reduced latency by up to 27%. This indicates that horizontal scaling can be applied effectively in Hyperledger Fabric networks to accommodate growing operational demands. The consistent p50 and p90 latency behavior across all workloads underscores the robustness and predictability of the network, two essential attributes for mission-critical logistics systems where response times directly influence product safety and regulatory compliance.

From a managerial perspective, these technical findings translate into clear operational advantages. Stable system performance ensures timely data synchronization among stakeholders, minimizing errors caused by delayed or missing updates. This enables managers to make informed, real-time decisions regarding inventory control, temperature deviations, and transportation scheduling based on blockchain-verified data. The integration of IoT sensors with blockchain further enhances end-to-end visibility throughout the supply chain, allowing early detection of anomalies such as temperature excursions and supporting proactive risk management. Automating data collection and verification through a permissioned blockchain reduces manual interventions, strengthens traceability, and lowers administrative overhead.

While recent studies have proposed blockchain-based cold-chain management frameworks focusing on data transparency and efficiency [25], these approaches remain largely limited to prototype implementations without predictive or adaptive intelligence. In contrast, emerging research on AI/ML-driven capacity forecasting in cold-chain logistics [26] highlights the growing potential of integrating predictive analytics into blockchain systems, a direction envisioned as part of our future research.

In addition to performance and scalability aspects, recent studies emphasize that integrating blockchain with IoT introduces both significant opportunities and new security challenges related to data integrity, privacy, and interoperability [27]. As Industry 5.0 moves toward large-scale, human-centric automation, ensuring trust and resilience across interconnected IoT ecosystems has become increasingly critical. In this regard, permissioned frameworks such as Hyperledger Fabric are recognized as suitable platforms for applications demanding strict identity management, access control, and regulatory compliance. Similar research in healthcare and industrial IoT contexts further demonstrates that combining lightweight cryptography and post-quantum algorithms can strengthen Fabric’s resilience against emerging cyber threats and privacy attacks [28,29]. These insights suggest that while blockchain significantly enhances transparency and traceability in distributed IoT environments, addressing the security overhead and interoperability constraints remains a key direction for future research.

In addition, the role-based access control (RBAC) model simplifies network governance by clearly defining organizational responsibilities, minimizing authorization errors, and facilitating scalability as new participants join. Combined with the consumer-facing web interface, which reinforces transparency and customer trust, the proposed system links technological performance with practical managerial benefits. The Hyperledger Fabric architecture thus not only achieves strong computational efficiency but also delivers managerial value in terms of visibility, accountability, and operational reliability across the cold chain ecosystem.

6. Conclusions

This study presents a comprehensive Hyperledger Fabric-based solution to address the challenges of centralized data structures, lack of transparency, and trust issues in cold chain logistics. While most existing works in the literature focus narrowly on specific application domains and address authorization policies, access control, and performance evaluation only superficially, the proposed model integrates both the technical infrastructure and institutional requirements, thereby providing a unique contribution.

The developed system enables secure collaboration among three organizations representing production, transportation, and retail stages within the same blockchain network. Environmental data, including temperature, humidity, and location collected from IoT devices, are immutably recorded on the blockchain through role-based access control and authorization mechanisms. Moreover, these records are made transparently and verifiably accessible to end consumers through a web-based interface, ensuring that the environmental conditions encountered by products throughout the logistics cycle can be fully monitored with blockchain-backed trust.

Experimental performance evaluations conducted using Hyperledger Caliper demonstrated that the system provides high reliability and efficiency under varying workloads. Query transactions achieved a throughput of 442 TPS at a 500 TPS send rate and 818 TPS at a 1000 TPS send rate, with corresponding average latencies of 0.21 and 0.26 s. Open transactions exhibited scalable performance, whereas transfer transactions incurred higher latency; however, the latency growth remained predictable rather than exponential. Most notably, no transaction failures were observed in any of the scenarios.

In addition to the baseline performance analysis, scalability experiments were conducted to evaluate the effect of increasing peer nodes across the network. The results demonstrated that throughput improved by as much as 19%, while the average latency decreased by up to 27% as the network expanded. These findings confirm that the proposed Hyperledger Fabric-based architecture provides a scalable and balanced infrastructure capable of sustaining high performance even as the network size and peer density grow.

In conclusion, the proposed model operates stably even on modest hardware configurations and provides a robust infrastructure that supports product safety, transaction transparency, and data integrity. With these capabilities, the system offers a practical and scalable solution to meet the critical requirements of cold chain logistics, particularly the rapid and reliable verification of product history and environmental conditions. The RBAC approach adopted in this study aligns naturally with the permissioned architecture of Hyperledger Fabric, simplifying authorization management across multi-stakeholder cold chain environments and offering lower operational complexity compared to ABAC-based models.

In future work, the developed system will be adapted to real-world scenarios, aiming to predict cold-chain disruption risks in advance through AI-supported analyses. In addition, potential performance and resource management issues that may arise during network scaling will be anticipated, and the system’s adaptability will be enhanced accordingly. We anticipate that these studies will further strengthen both the technical robustness and the operational applicability of the proposed blockchain-based framework.