Abstract

The advancement of neural-based models has driven significant progress in modern code intelligence, accelerating the development of intelligent programming tools such as code assistants and automated software engineering systems. This study presents a comprehensive and systematic survey of neural methods for programming tasks within the broader context of software development. Guided by six research questions, this study synthesizes insights from more than 250 scientific papers, the majority of which were published between 2015 and 2025, with earlier foundational works (dating back to the late 1990s) included for historical context. The analysis spans 18 major programming tasks, including code generation, code translation, code clone detection, code classification, and vulnerability detection. The survey systematically examines the development and evolution of neural approaches, the datasets employed, and the performance evaluation metrics adopted in this field. It traces the progress of neural techniques from early code modeling approaches to advanced Code-specific Large Language Models (Code LLMs), emphasizing their advantages over traditional rule-based and statistical methods. A taxonomy of evaluation metrics and a categorized summary of datasets and benchmarks reveal both progress and persistent limitations in data coverage and evaluation practices. The review further distinguishes neural models designed for natural languages from those designed for programming languages, highlighting the structural and functional characteristics that influence model performance. Finally, the study discusses emerging trends, unresolved challenges, and potential research directions, underscoring the transformative role of neural-based architectures, particularly Code LLMs, in enhancing programming and software design activities and shaping the future of AI-driven software development.

1. Introduction

Artificial intelligence (AI) and its subfields, such as machine learning (ML), deep learning (DL), and generative AI, have become integral to modern life by improving the efficiency and quality of everyday tasks. DL-based natural language processing (NLP) enables automation that can exceed human capabilities in some settings [1], including language-related tasks such as autocompletion, long-form text prediction [2], and multilingual translation. Neural networks are the fundamental building blocks of these advances and can therefore be considered key drivers of AI-based automation worldwide. Applications of neural network-based language models, such as ChatGPT [3], showcase these improvements in NLP. However, programming, which involves writing structured instructions to solve computational problems, remains a challenging domain because of its strict syntax, logical complexity, and the difficulty of semantic analysis. For decades, tasks such as writing new code, translating or refactoring existing code, and debugging have been performed manually or with rigid tools, which often struggle to keep up with dynamic and evolving project requirements. This complexity has driven interest in neural approaches for programming tasks, as data-driven models can learn intricate patterns and generalize more effectively to novel problems. Recently, state-of-the-art (SOTA) NLP models, such as OpenAI’s Generative Pre-trained Transformer (GPT) models [3], have been adapted for programming language (PL) tasks [4], and Code-specific Large Language Models (Code LLMs) have been tailored for code-related tasks [5]. These models reduce traditionally time-consuming manual work such as code editing, comment writing, and debugging, and enable automation across a range of programming tasks, including source code translation [6,7], code generation [5], summarization [8], automatic code editing [9], decompilation [10], and code similarity detection [11].

Despite the active use of neural-based NLP techniques in code-related tasks, comprehensive analyses of the rapid and evolving integration of neural methods into programming-focused applications remain scarce. Although previous surveys have examined research on neural methods for programming tasks, they often have a limited scope: they focus on specific tasks [12,13], cover only a narrow subset of neural network-based techniques [14], emphasize general AI/ML methods with minimal attention to neural techniques [15], or concentrate on broader software engineering (SE) applications with insufficient focus on programming-centric tasks. Some reviews [16,17,18] classify the applications of neural models on code into three categories (code generation models, representational models, and pattern mining or code understanding models) and compare their usage in NLP and programming tasks, whereas others analyze the role of neural models in code understanding by distinguishing between sequence-based and graph-based approaches. However, many surveys [19,20,21] omit discussion of neural methods specifically tailored to programming tasks, overlooking the challenges these methods face in capturing the complex syntactic and semantic structures of code and the logical and idiomatic constraints unique to each programming language.

Given the limitations of previous surveys [22,23,24,25,26], such as their task-specific focus (e.g., code summarization and program repair), and the omission of critical discussions [27,28], researchers may struggle to identify open gaps in research on neural networks applied to programming tasks. To address this, our survey consolidates the findings of previous surveys and provides a comprehensive review of existing studies, bridging research gaps and guiding future investigations. In summary, this survey makes the following contributions:

- Review existing survey papers on neural methods for programming tasks.

- Compare neural methods applied to natural languages (NLs) and PLs.

- Compare rule-based, statistical, and neural methods for code-related tasks.

- Review 18 programming tasks and outline future research directions for each.

- Examine datasets and benchmarks used in these tasks.

- Analyze performance evaluation metrics for neural models in the reviewed papers.

- Summarize key findings from the reviewed papers on neural models applied to source code.

- Discuss major challenges in applying neural-based models for programming tasks.

- Explore the role of LLMs in programming.

- Identify research gaps and propose future research directions.

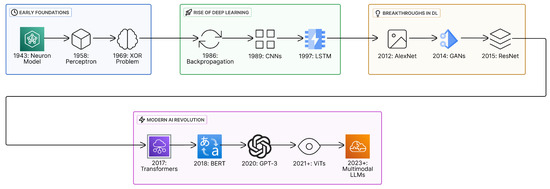

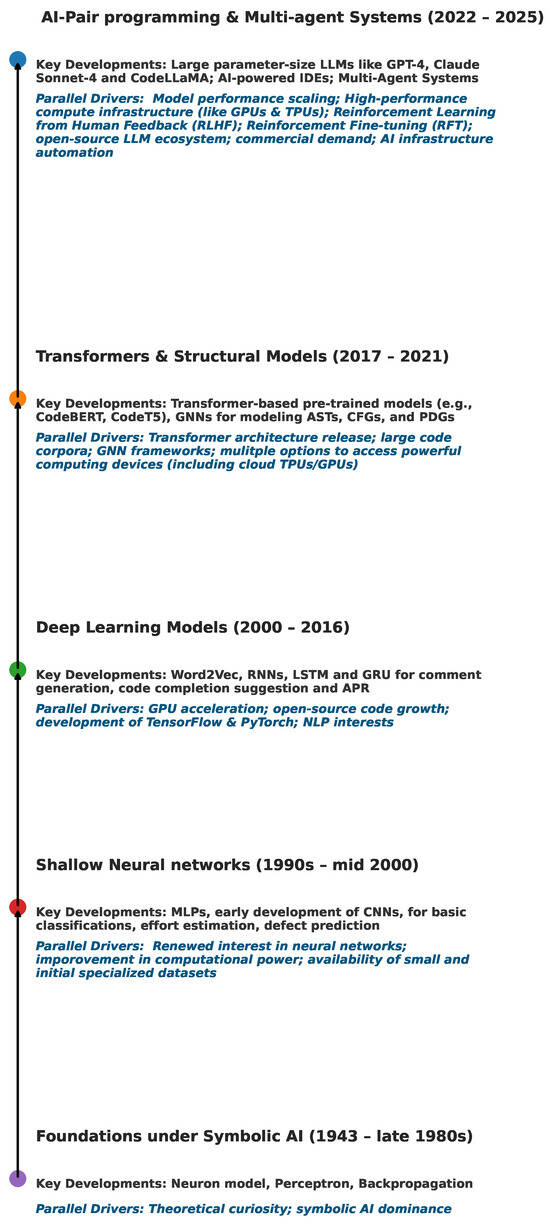

This survey serves as a roadmap for researchers interested in applying neural networks to programming languages and programming tasks, highlighting the evolution of neural networks from early single-layer models [29] to modern large-scale architectures [30], as depicted in Figure 1. By providing this historical perspective, we contextualize recent achievements and outline directions for future research. To the best of our knowledge, no existing survey is dedicated solely to neural methods while comprehensively tracing their application to programming tasks, from early developments to the latest advancements in Code LLMs trained primarily on code. To maintain consistency and clarity, we use the term programming tasks to refer to the various code-related activities discussed in this study. Although the literature employs a range of terms for similar or overlapping concepts, such as SE tasks [14], coding tasks [31], code-related tasks, and programming language tasks [32], we adopt a unified terminology to reduce ambiguity. However, given the breadth and interdisciplinary nature of these tasks, which often involve source code, bug reports, and natural language artifacts, we occasionally use the term SE tasks when appropriate.

Figure 1.

Advancements of neural networks.

Our research scope concentrates on neural methods applied specifically to programming-related SE, as many other SE activities are already covered by general-purpose NLP and deep learning surveys. To our knowledge, this work is therefore the first comprehensive and systematic review dedicated exclusively to how neural approaches have been applied within programming-centric SE domains. To guide this study, we pose the following research questions:

- RQ1: How do neural approaches compare to rule-based and statistical methods in the context of programming tasks?

- RQ2: What is the current landscape of datasets and benchmarks for neural methods in programming tasks, and what are the critical gaps?

- RQ3: Which evaluation metrics best capture model performance on code, both syntactically and functionally, and where do standard NLP metrics fall short?

- RQ4: What roles do LLMs (e.g., GPT-4, LLaMA, Claude) play in programming tasks?

- RQ5: What are the main bottlenecks in scaling and deploying neural-based programming solutions to real-world codebases, and how can they be addressed?

- RQ6: How have neural methods for programming evolved, and which model- and system-level advances have driven this progression?

Finally, our survey follows established systematic review practices: we defined a set of search queries and inclusion/exclusion criteria, and applied consistent paper collection procedures, as described in Section 3. Our methodology is based on standard guidelines for systematic literature reviews in software engineering (e.g., Brereton et al. [33] and Kitchenham and Charters [34]). We extend these practices with quantitative and qualitative trend analyses to improve comprehensiveness and rigor and to support the reproducibility of our review.

To facilitate the discussion, the rest of the paper is structured as follows. Section 2 reviews existing surveys. Section 3 outlines the paper selection process. Section 4 compares the neural models used for NLs and PLs. Section 5 presents neural methods for 18 programming tasks. Section 6 reviews the datasets and evaluation metrics. Section 7 compares neural methods with traditional methods such as rule-based and statistical methods. Section 8 presents answers to the research questions and outlines future research directions, while Section 9 concludes.

2. Related Works

This section reviews prior surveys examining AI approaches in programming and SE, particularly the role of neural methods in programming-related tasks. While some works broadly address AI in SE, they often overlook both the substantial gains and the critical technical challenges specific to neural methods in code-focused applications. Al-Hossami and Shaikh [27] present a taxonomy of AI methods applied to source code and conversational SE systems, ranging from traditional neural models such as Multilayer Perceptrons, Recurrent Neural Networks (RNNs), and Long Short-Term Memory (LSTM) networks to Transformers. They categorize the conversational systems into open-domain and task-oriented dialogue systems, referencing datasets such as CoNaLa, CodeSearchNet, and other GitHub-derived corpora. Similarly, Watson et al. [35] review the use of DL across SE tasks, including defect prediction, program comprehension, and bug fixing. They identify key neural architectures, such as autoencoders, Siamese networks, encoder–decoder models, and CNNs, and common metrics such as accuracy, F1-score, Mean Reciprocal Rank (MRR), Recall@k, and Bilingual Evaluation Understudy (BLEU), while noting limitations such as weak reproducibility, preprocessing inconsistencies, and overfitting risks across the reviewed works.
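
Because MRR and Recall@k recur as ranking metrics throughout the surveyed work, a minimal sketch of how they are commonly computed may help. It assumes that each query has exactly one relevant item and that `ranks` holds the 1-based position of that item in the returned list, or `None` if it was not retrieved; the function names are illustrative, not taken from any reviewed paper.

```python
from typing import Optional, Sequence


def mean_reciprocal_rank(ranks: Sequence[Optional[int]]) -> float:
    """Average of 1/rank over queries; a query whose item was not retrieved contributes 0."""
    if not ranks:
        return 0.0
    return sum(1.0 / r if r is not None else 0.0 for r in ranks) / len(ranks)


def recall_at_k(ranks: Sequence[Optional[int]], k: int) -> float:
    """Fraction of queries whose single relevant item appears within the top k results."""
    if not ranks:
        return 0.0
    return sum(1 for r in ranks if r is not None and r <= k) / len(ranks)


ranks = [1, 3, None, 2]  # hypothetical ranks for four code-search queries
print(mean_reciprocal_rank(ranks))
print(recall_at_k(ranks, k=2))
```

For these ranks, MRR is (1 + 1/3 + 0 + 1/2) / 4 ≈ 0.458 and Recall@2 is 0.5. When a query has several relevant items, Recall@k is usually defined as the fraction of relevant items found in the top k, which this sketch does not cover.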

Cao et al. [36] survey explainable AI in SE, classifying methods by portability (model-agnostic or model-specific), timing (ante hoc or post hoc), and scope (local or global). The datasets they cover include Devign (Deep Vulnerability Identification via Graph Neural Networks), the National Vulnerability Database (NVD), StackOverflow, and CodeSearchNet. They highlight explainable AI’s stronger presence in security and defect prediction, its underutilization in early SE phases, and concerns over inconsistent evaluations and the lack of standard baselines. The review in [37] emphasizes encoder–decoder architectures in code modeling and generation, exploring a wide array of code representations. Similarly, Samoaa et al. [17] systematically map how DL techniques model code using token, tree, graph, and hybrid representations across classification, clone detection, and summarization tasks, and advocate standardized benchmarks with industry-level evaluation. Hou et al. [38] discuss the dominance of decoder-only and encoder–decoder architectures (e.g., GPT-3/4, Codex, and CodeT5) and review publicly available datasets such as HumanEval and Mostly Basic Programming Problems (MBPP). They emphasize the role of prompt engineering and parameter-efficient tuning techniques such as Low-Rank Adaptation (LoRA), prefix-tuning, and adapters, while noting that most models are validated only on academic datasets. Also focusing on LLMs, Fan et al. [39] raise concerns about hallucinations, weak evaluation frameworks, and the lack of rigorous metrics for assessing generated code and other artifacts.

More narrowly scoped reviews target specific programming tasks. Akimova et al. [24] review studies on software defect prediction that use RNNs, LSTMs, Tree-LSTMs, GNNs, and Transformer-based models. While neural approaches outperform classical methods, challenges remain in benchmark standardization, class imbalance, and metric selection. Wu et al. [40] analyze vulnerability detection methods, comparing RNN-based and GNN-based models, and stress the need for better code embeddings, representation standards, and interpretability. Xie et al. [25] organize code search research into three phases: query semantic modeling, code semantic modeling, and semantic matching. Their findings show that pre-trained Transformers such as CodeBERT and multi-view inputs significantly improve retrieval, although they note high training costs and blurred task boundaries with clone detection. Dou et al. [41] empirically evaluate LLMs for code clone detection, comparing open-source (e.g., LLaMA, Vicuna, and Falcon) and proprietary models (e.g., GPT-3.5 and GPT-4) against traditional tools such as SourcererCC and NiCad. Using benchmarks such as BigCloneBench and CodeNet (Java, C++, and Python), they examine clone Types 1–4 through zero-shot prompts, chain-of-thought prompting, and embedding-based methods (e.g., text-embedding-ada-002 and CodeBERT). LLMs notably outperform classical tools on Type-3/4 clones, especially in Python, and GPT-4 remains robust under multi-step chain-of-thought prompting. Limitations include prompt design constraints, the absence of few-shot settings, potential overlap between training and evaluation data, and computational resource restrictions.

Zhong et al. [42] offer a taxonomy of neural program repair, reviewing automatic program repair (APR) techniques that use code representations such as the abstract syntax tree (AST) and control flow graph (CFG) to extract context, and that employ neural architectures, such as encoder–decoder, tree-based, and graph-based models, to generate correct or plausible bug-fixing patches. Similarly, Huang et al. [43] trace the evolution of APR from search-based to constraint-based, template-based, and learning-based techniques, covering models from RNNs and Transformers to encodings integrated with static analysis, evaluated on benchmarks such as Defects4J and QuixBugs. Zhang et al. [22] also review learning-based APR tools that frame bug fixing as neural translation, identifying gaps such as limited support for multi-hunk fixes and inadequate benchmark standardization. Wang et al. [44] provide a broad survey of LLMs in software testing and repair, noting that decoder-only and encoder–decoder models such as GPT-3, Codex, Text-to-Text Transfer Transformer (T5), CodeT5, BART, and LLaMA often outperform traditional techniques. However, they caution about benchmark data leakage, privacy risks, and the computational cost of real-world deployment.

Le et al. [28] classify data-driven software vulnerability assessment into five themes and their subthemes, examining a range of AI techniques: traditional ML such as Support Vector Machines (SVMs) and random forests, deep learning such as Siamese neural networks and GNNs, and knowledge graphs. They also examine data sources such as ExploitDB and the Common Vulnerability Scoring System (CVSS), and recommend robust validation strategies and evaluation metrics such as the Matthews Correlation Coefficient (suited to imbalanced data), Mean Absolute Error, and Root Mean Square Error. With a similar focus, Xiaomeng et al. [23] review ML-based static code analysis for vulnerability detection. Their work spans traditional models such as SVMs through deep learning models (CNNs, RNNs, and LSTMs), emphasizing reduced manual effort while highlighting issues such as class imbalance and poor generalizability across projects and languages.
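
To illustrate why the Matthews Correlation Coefficient is recommended for imbalanced data, the following sketch computes it from a binary confusion matrix and contrasts it with accuracy. The function names and the toy counts (10 vulnerable and 990 non-vulnerable samples) are hypothetical, and returning 0.0 when the denominator is zero is a common convention assumed here.

```python
import math


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def matthews_corrcoef(tp: int, tn: int, fp: int, fn: int) -> float:
    """MCC in [-1, 1]; returns 0.0 when any row or column total is zero (undefined case)."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0
    return (tp * tn - fp * fn) / denom


# Hypothetical detector that labels every sample as non-vulnerable.
tp, tn, fp, fn = 0, 990, 0, 10
print(accuracy(tp, tn, fp, fn))           # 0.99
print(matthews_corrcoef(tp, tn, fp, fn))  # 0.0
```

Accuracy rewards the trivial majority-class detector with 0.99, whereas MCC reports no correlation at all between its predictions and the true labels.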

Zakeri-Nasrabadi et al. [26] provide a taxonomy of code similarity techniques covering token-based, tree-based, graph-based, metric-based, and learning-based approaches. They assess benchmarks such as BigCloneBench and metrics such as precision, recall, and F1, noting the dominance of languages such as Java and C++ and reproducibility limitations caused by tools that are not openly accessible. Katsogiannis et al. [45] survey neural approaches for Text-to-SQL translation, reviewing architectures such as seq2seq models, grammar-guided decoders, and GNNs for schema linking. While language models enhanced by pre-training show strong accuracy on benchmarks such as WikiSQL and Spider, challenges remain in scalability and generalization to new domains. Grazia and Pradel [46] present a comprehensive review of code search research spanning three decades. They categorize query mechanisms, ranging from NL queries and code snippets to formal patterns and input/output examples, and discuss indexing strategies, retrieval methods, and ranking techniques. Their survey addresses real-world search behaviors and emphasizes open challenges, such as supporting version history, cross-language search, and more robust evaluation frameworks.

Zheng et al. [47] review 149 studies, comparing LLMs trained or fine-tuned on code, such as Codex, CodeLLaMA, and AlphaCode, against general-purpose LLMs on the HumanEval and Automated Programming Progress Standard (APPS) benchmarks. Their findings show that code-specific models typically outperform general models on metrics such as Pass@k and BLEU. Zan et al. [48] evaluate 27 LLMs for NL-to-code tasks and identify three key performance drivers: model size, access to premium datasets, and expert fine-tuning. However, they note an overemphasis on short Python snippets, which limits generalization. Wong et al. [49] survey the impact of LLMs trained on “Big Code” in integrated development environments (IDEs), examining their ability to support tasks such as code autocompletion and semantic comprehension, and caution about drawbacks such as model bias, security concerns, and latency that may hinder real-time use. Similarly, Zheng et al. [50] focus on Transformer-based pre-trained language models and LLMs with at least 0.8B parameters across seven core tasks, such as test generation, defect detection, and code repair. These models perform well on syntax-aware tasks but struggle with semantic understanding. Their review also underscores fragmented benchmarks, limited error analysis, and insufficient attention to ethical and cost-related concerns. Xu and Zhu [51] examine pre-trained language models such as CodeBERT, GraphCodeBERT, and CodeT5 in depth, detailing their distinct learning objectives and their use of input structures such as ASTs and data-flow graphs (DFGs). However, they note issues including scarce multilingual datasets and underexplored graph-based techniques.
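
Since Pass@k is the headline metric on HumanEval- and APPS-style benchmarks, the sketch below shows the unbiased estimator commonly used for it: generate n samples per problem, count the c samples that pass the unit tests, and estimate the probability that at least one of k randomly drawn samples passes. The function names are hypothetical, and running the generated code against the tests is assumed to happen elsewhere.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: 1 - C(n - c, k) / C(n, k)."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, each given as (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


print(pass_at_k(10, 2, 1))
print(pass_at_k(10, 2, 5))
print(benchmark_pass_at_k([(10, 2), (10, 0), (10, 10)], k=1))
```

With 2 correct samples out of 10, pass@1 is about 0.2 and pass@5 is 1 − 56/252 ≈ 0.78. Drawing more samples than k reduces the variance of the estimate compared with generating exactly k samples per problem.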

Some surveys have also explored the application of AI and its subfields across the SE life cycle. Crawford et al. [21] review the integration of AI into software project management, tracing its progression from early expert systems and rule-based planners to contemporary neural network applications. Their findings highlight AI’s value in improving estimation accuracy, risk management, and analytics for agile workflows. However, persistent challenges remain, including data privacy concerns and the lack of interpretability in AI models, both of which hinder stakeholder trust and limit industry adoption. Batarseh et al. [52] map AI methods to five SE phases, finding active prototyping in testing and requirements engineering but limited industrial validation and evaluation standardization. Durrani et al. [19] take a broad perspective, surveying AI across SE phases. However, their general treatment of SE tasks and overreliance on quantitative summaries mined with AI tools (e.g., dimensions.ai) obscure the distinctive advances of neural methods for programming. Their focus on older models such as random forests overlooks the transformative capacity of modern neural models, which now support even complex program generation and logical reasoning.

In contrast, our survey concentrates on programming-related SE tasks. While some documentation or design tasks may benefit from lightly fine-tuned general NLP models, we focus on how neural-based architectures are designed to understand, generate, and interact with source code. The limitations of prior surveys—such as narrow benchmarks, limited real-world validation, and fragmented methodologies—underscore the need for a unified, code-centric view of modern neural-based applications in SE. Table 1 compares existing survey and review articles. It outlines each prior work’s main focus, key strengths, identified limitations, and basis of validation, allowing readers to quickly compare how existing approaches relate to ours. Based on our review of previous survey studies, we observe that prior works on the application of neural methods and general AI/ML techniques for software engineering and programming tasks can be broadly categorized into four groups as summarized in Table 2.

Table 1.

Summary of previous survey works’ methodologies, strengths, weaknesses, and robustness.

Table 2.

High-level taxonomy of previous survey papers in the field.

Our survey differs from prior reviews in several key ways. Unlike earlier works that focus narrowly on specific deep learning techniques or isolated SE subdomains, our survey systematically covers most major families of neural methods across a broad range of programming-centric SE tasks. Additionally, we compare the application of neural methods to PLs and NLs, highlighting representative models used in each context. Furthermore, we explicitly compare rule-based, statistical, and neural approaches, offering a holistic understanding of where and how neural models outperform earlier paradigms, as detailed in Section 7. Overall, our survey integrates qualitative synthesis and quantitative analysis, examining over 250 papers across 18 distinct programming task domains. We also provide trend-based insights on publication growth, dataset and benchmark usage, and model performance metrics, as described in Section 6.

3. Scientific Paper Selection Process

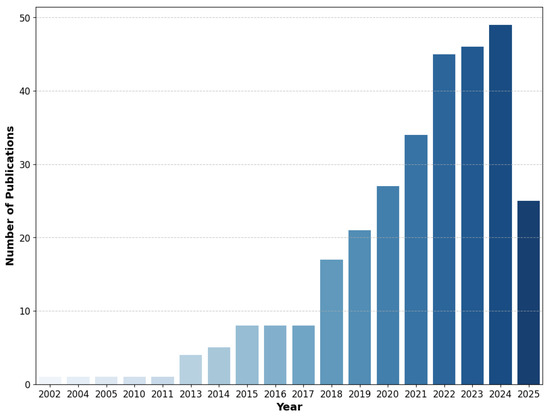

Our paper selection process is visually summarized in Figure 2. In this study, we have collected and analyzed more than 250 research papers, exploring their primary objectives, contributions, and future research directions. Most of these works were published between 2015 and 2025, covering a decade of intensive research on neural methods for programming tasks. A few earlier foundational studies, dating back to the late 1990s, were also included to provide historical continuity and contextualize recent advances as depicted in Figure 3.

Figure 2.

Paper selection process.

Figure 3.

A yearly highlight summary of publications on neural methods for code considered in this survey.

Our investigation examines various applications of neural methods in programming tasks, including advanced code representation techniques that enhance automation in software development [59]. Table 3 provides an overview of the digital sources in which these papers were published, while Figure 3 shows a significant increase in publications within this domain in recent years.

Table 3.

Distribution of collected papers by source.

The methodology for selecting research papers in this survey aligns with established approaches, such as the one employed in [60]. We manually defined key phrases and search terms related to various programming tasks to retrieve relevant articles from online digital libraries, focusing on search phrases that involve neural approaches in the context of programming and programming-centric SE. Specifically, we frequently combined terms such as “neural methods”, “neural models”, and “neural networks” with common prepositions, followed by the phrases “programming tasks”, “programming”, and “software engineering”, or by specific code-related task names. The primary search patterns are shown below:

- (“neural methods” | “neural models” | “neural networks”) (“in” | “for”) (“programming tasks” | “programming” | “software engineering”)

- (“neural methods” | “neural models” | “neural networks”) (“in” | “for” | “on” | “and”) [specific task]

where “|” stands for “or”, and [specific task] is a placeholder for areas such as code translation, code generation, code clone detection, code summarization, program synthesis, and other programming-related tasks.
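
The two search patterns can be expanded mechanically into concrete query strings. The following sketch does this with a Cartesian product; the task list is only the illustrative subset named above, and joining the terms into one plain phrase is an assumption, since each digital library has its own query syntax.

```python
from itertools import product
from typing import List

METHOD_TERMS = ["neural methods", "neural models", "neural networks"]
GENERAL_PREPS = ["in", "for"]
GENERAL_TARGETS = ["programming tasks", "programming", "software engineering"]
TASK_PREPS = ["in", "for", "on", "and"]
# Illustrative subset of the [specific task] placeholder.
TASKS = ["code translation", "code generation", "code clone detection",
         "code summarization", "program synthesis"]


def expand(*groups: List[str]) -> List[str]:
    """Return one query phrase per combination of terms (Cartesian product of the groups)."""
    return [" ".join(combo) for combo in product(*groups)]


general_queries = expand(METHOD_TERMS, GENERAL_PREPS, GENERAL_TARGETS)
task_queries = expand(METHOD_TERMS, TASK_PREPS, TASKS)

print(len(general_queries), len(task_queries))  # 18 60
print(general_queries[0])  # neural methods in programming tasks
```

The first pattern yields 18 phrases and the second yields 60 for these five tasks, which shows how quickly the query set grows as task names are added.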

We also applied inclusion and exclusion criteria to refine our selection. Only studies written in English were considered. Furthermore, we specifically included studies that explore the application of neural methods within programming-centric software engineering tasks. Conversely, studies focusing on the reverse, such as applying software engineering techniques to improve AI systems or those discussing neural methods without a clear application to software or programming tasks, were excluded from our review. After the initial collection, we refined the selection by filtering articles based on specific attributes to ensure alignment with our research objectives and focus. The paper selection criteria predominantly include the following aspects:

- Breakthrough research and SOTA approaches: Most selected articles focus on SOTA neural network architectures, including Transformer-based neural networks, encoder–decoder models, and other deep learning frameworks [61]. These studies represent cutting-edge advances in neural-based code processing.

- Recent publications and citation-based filtering: A significant portion of the papers were published recently. To ensure that we include impactful research articles, we adopt a citation-based filtering approach as in [60]. However, for studies published between 2022 and 2025, we prioritize topical relevance over citation counts.

We made this exception for 2022 to 2025 so that impactful but recently published studies would not be excluded. Many papers from this period were initially released as preprints on platforms such as arXiv and had not yet accumulated substantial citations, even though they were later accepted by peer-reviewed venues in 2024 and 2025. Relying on citation metrics could therefore have under-represented valuable studies that were still gaining visibility. Notably, key contributions, such as OpenAI’s GPT-4 and various iterations of Meta’s LLaMA models, were disseminated via arXiv rather than formal publication channels [62,63,64], reflecting a broader trend toward early sharing in the AI community. To fairly represent the most relevant and innovative research in this evolving landscape, we prioritized the relevance and impact of contributions over citation numbers. During filtering, we first reviewed each paper’s abstract for relevance; if the abstract was insufficient to judge relevance, we further examined the introduction, model architecture and research approach, experimental setup, and discussion sections.

To further illustrate the thematic relationships within the reviewed literature, Figure 4 presents a keyword co-occurrence network generated from a bibliometric analysis of the collected papers. The network shows that keywords such as code, source code, learning, neural, and language models form the core of the research landscape and are strongly associated with programming tasks such as code translation, code generation, and code summarization. These interlinked clusters reflect the growing convergence of neural methods and neural networks with code understanding, code generation, and software engineering automation.

Figure 4.

Keyword co-occurrence network of reviewed publications.

4. Neural Methods for Natural Languages vs. Programming Languages

Recent developments in large-scale NLP models have revolutionized human language processing [1,65]. Given the structural and lexical similarities between programming and natural languages, researchers have adapted neural-based NLP techniques to PLs [66]. PLs are essential tools for operating computing devices, enabling programmers to develop applications and systems. Unlike NLs, which evolve through speech and cultural influences, PLs are deliberately designed to serve as a medium of communication between humans and machines. While NLs are analyzed through the norms and grammars established by their speakers, PLs are governed solely by the syntax, semantics, and grammar rules defined by their designers, which makes code processing a specialized application domain of NLP techniques. As such, neural-based methods for PLs have benefited from advances in NLP-driven text processing. However, neural approaches tailored to PLs must account for significant differences between PLs and NLs.

When applying neural methods to PLs, the following opportunities stand out:

- Simplified Linguistic Rules: PLs avoid challenges like pronunciation, accents (e.g., UK vs. US English), writing styles (e.g., formal vs. informal) [67], and text writing direction (e.g., Arabic vs. English), making automation easier than in NLs.

- Abundant Open-Source Code Corpora: Platforms such as GitHub and coding competition sites offer large collections of code written in individual PLs, providing abundant monolingual data that improve neural model performance on language-specific programming tasks.

On the other hand, there are also some pitfalls in using neural methods for PLs as shown below:

- Limited Learning Modes: Unlike NLs, PLs are learned solely through reading and writing, preventing neural-based automation from leveraging voice or speech processing [68].

- Grammar Sensitivity: PLs are highly syntax dependent; minor errors, such as incorrect indentation in Python or misplaced semicolons in compiled languages, can prevent an entire program from parsing or compiling.

The most notable distinction between PLs and NLs therefore lies in syntax and ambiguity: PLs are designed to be unambiguous and deterministically parsable, whereas NLs often exhibit ambiguity due to diverse dialects and informal usage. This syntactic rigidity means that code tokens follow strict formal rules, such as matching braces and consistent indentation, that are not typical considerations in NLP tasks. As a result, code tends to be more predictable than human language, since developers frequently reuse established idioms and avoid non-standard constructs. Consequently, neural models for code often integrate structural representations, such as ASTs or program graphs, rather than processing flat token sequences alone. For instance, GraphCodeBERT [69] enhances a Transformer-based architecture by incorporating DFGs alongside the token sequence to capture variable dependencies and value propagation within the code.
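
To make the notion of a data-flow graph concrete, the sketch below extracts simple "value comes from" edges from straight-line Python code using the standard `ast` module. It is an illustration in the spirit of GraphCodeBERT's DFG, not its actual extraction pipeline: control flow, scopes, and attribute accesses are ignored, and the function name is hypothetical. The last lines also show the grammar sensitivity discussed above, since a single missing indent makes the parser reject the whole snippet.

```python
import ast
from typing import Dict, List, Tuple


def data_flow_edges(source: str) -> List[Tuple[str, int, int]]:
    """Return (variable, def_line, use_line) edges for straight-line code.

    Simplifications: top-level statements are processed in order, every read in a
    statement is resolved before that statement's writes, and control flow is ignored.
    """
    tree = ast.parse(source)  # raises SyntaxError on malformed code
    last_def: Dict[str, int] = {}
    edges: List[Tuple[str, int, int]] = []
    for stmt in tree.body:
        names = [n for n in ast.walk(stmt) if isinstance(n, ast.Name)]
        for n in names:  # reads: link each use to the most recent definition
            if isinstance(n.ctx, ast.Load) and n.id in last_def:
                edges.append((n.id, last_def[n.id], n.lineno))
        for n in names:  # writes: record the new definition site
            if isinstance(n.ctx, ast.Store):
                last_def[n.id] = n.lineno
    return edges


snippet = "x = 1\ny = x + 2\nx = x * y\nprint(x)\n"
print(data_flow_edges(snippet))
# [('x', 1, 2), ('x', 1, 3), ('y', 2, 3), ('x', 3, 4)]

try:
    data_flow_edges("if True:\nprint(x)\n")  # body of the if statement is not indented
except SyntaxError as err:  # IndentationError is a subclass of SyntaxError
    print(type(err).__name__)  # IndentationError
```

The edge ('x', 3, 4) links the final `print(x)` to the redefinition on line 3 rather than to line 1, which is exactly the kind of value-propagation signal a flat token sequence does not expose directly.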

While general-purpose neural language models such as BERT [70] have been fine-tuned for both code and NL domains, models trained explicitly for code typically include additional architectural components. These may involve embedding ASTs or data-flow structures with structure-aware neural encoders, or using specialized attention masks. Some models also augment input representations with tokens specific to programming constructs, such as language keywords or data types. By contrast, neural models in NLP often rely on shallow parsing and focus on linguistic challenges such as co-reference resolution and semantic interpretation.

Hence, in this context, we review some prominent NLP models and compare them with their counterparts trained for PLs. For example, Salesforce's CodeT5 model [71] was developed from Google's T5 model. Both follow an encoder–decoder architecture and rely on subword tokenization (a SentencePiece vocabulary for T5 and a code-specific byte-level BPE tokenizer for CodeT5). However, while T5 was pre-trained on the C4 corpus, CodeT5 was pre-trained on CodeSearchNet and fine-tuned on CodeXGLUE tasks, enabling it to process both NL and source code. T5's software stack has since evolved into the T5X and SeqIO libraries. Similarly, CodeT5 has been extended into CodeT5+, incorporating objectives such as instruction tuning, text-code matching, span denoising, causal language model pre-training, and contrastive learning. Inspired by such developments, large multilingual corpora combining natural and programming language tokens are being introduced in the field [72].
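Subword tokenizers such as Byte Pair Encoding (BPE) let these models split rare identifiers into reusable pieces. The sketch below shows the classic BPE merge-learning loop on a hypothetical miniature corpus of identifiers; it is an illustration of the algorithm only, not the tokenizer actually shipped with T5 or CodeT5, and `learn_bpe` is a hypothetical helper.

```python
from collections import Counter


def learn_bpe(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn BPE merge rules; each word starts as a tuple of single characters."""
    vocab = Counter(tuple(w) for w in words)  # symbol sequence -> frequency
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        # Rewrite every word, fusing each occurrence of the best pair.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges


print(learn_bpe(["get", "get", "get", "set", "getter"], 2))  # [('e', 't'), ('g', 'et')]
```

After two merges, the frequent prefix `get` is almost a single unit, so an unseen identifier such as `getter` is represented with fewer, more meaningful pieces.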

Additionally, models derived from BERT have demonstrated strong performance in both NLP and programming tasks. For instance, cuBERT [73] utilizes standard Python tokenization with Word2Vec-based fine-tuning for code classification. CoCLUBERT [74] enhances code clustering through objectives such as Deep Robust Clustering, CoCLUBERT-Triplet, and CoCLUBERT-Unsupervised, outperforming its cuBERT baseline. Microsoft's CodeBERT [75] combines bimodal Masked Language Modeling (MLM) over NL–code pairs with a replaced token detection objective that can also exploit unimodal data. Several CodeBERT-derived models, such as GraphCodeBERT [69], UniXcoder [76], and LongCoder [77], target specific challenges, for example, data flow (GraphCodeBERT) and long code inputs (LongCoder).
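The masking step behind bimodal MLM can be sketched as follows. This minimal example assumes BERT's commonly used recipe (select about 15% of tokens; replace 80% of those with a mask token, 10% with a random token, and keep 10% unchanged) and a CodeBERT-style `[CLS] NL [SEP] code [EOS]` layout; CodeBERT's exact settings may differ, and `mask_tokens` is a hypothetical helper.

```python
import random

SPECIAL = {"[CLS]", "[SEP]", "[EOS]"}  # never masked
MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """BERT-style masking: returns (corrupted, labels); labels[i] is None where no loss is computed."""
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if tok in SPECIAL or rng.random() >= mask_prob:
            corrupted.append(tok)
            labels.append(None)
            continue
        labels.append(tok)  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            corrupted.append(MASK)  # 80%: replace with the mask token
        elif r < 0.9:
            corrupted.append(rng.choice(vocab))  # 10%: replace with a random token
        else:
            corrupted.append(tok)  # 10%: keep the original token
    return corrupted, labels


nl = "return the largest element".split()
code = "def f ( xs ) : return max ( xs )".split()
tokens = ["[CLS]", *nl, "[SEP]", *code, "[EOS]"]  # bimodal NL-PL input
vocab = sorted(set(nl + code))
corrupted, labels = mask_tokens(tokens, vocab, seed=0)
print(corrupted)
```

Because masked positions can fall in either segment, the model is pushed to use the NL description when reconstructing code tokens and vice versa.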

Cross-lingual pre-training and fine-tuning techniques [78] have further enhanced the adaptability of PL models. A key example is the unsupervised neural machine translation (NMT) approach introduced in [65], which employs cross-lingual masked language model pre-training, Denoising Autoencoding (DAE), and Back-Translation (BT). This approach forms the backbone of Facebook's (now Meta) TransCoder models, which were designed for code translation [79].
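The DAE objective can be illustrated with a noise function in the spirit of those used in unsupervised NMT: tokens are randomly dropped or masked and then locally shuffled, and the model is trained to reconstruct the original sequence. The probabilities, the shuffle window, and the helper name `add_noise` below are illustrative assumptions, not the settings of [65] or TransCoder.

```python
import random


def add_noise(tokens, drop_prob=0.1, mask_prob=0.1, shuffle_k=3, seed=None, mask="<mask>"):
    """Corrupt a token sequence for denoising auto-encoding (DAE).

    The model is trained to reconstruct the original `tokens` from the output.
    """
    rng = random.Random(seed)
    kept = []
    for tok in tokens:
        r = rng.random()
        if r < drop_prob:
            continue  # drop the token entirely
        kept.append(mask if r < drop_prob + mask_prob else tok)  # maybe mask it
    # Local shuffle: sort positions by i + U(0, shuffle_k + 1), so tokens move only
    # a few positions; the stable sort keeps the original order on exact ties.
    keys = [i + rng.uniform(0, shuffle_k + 1) for i in range(len(kept))]
    order = sorted(range(len(kept)), key=lambda i: keys[i])
    return [kept[i] for i in order]


src = "for ( int i = 0 ; i < n ; i ++ )".split()
print(add_noise(src, seed=1))
```

Local shuffling, rather than a full permutation, keeps the corrupted input close enough to valid code that reconstruction remains learnable.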

Furthermore, the code summarization work in [8] introduced mechanisms such as copy attention, improving the quality of generated summaries. Unlike the NLP text summarization model presented in [80], which relies on a vanilla Transformer and is evaluated with Recall-Oriented Understudy for Gisting Evaluation (ROUGE) scores, the code summarization model is evaluated with multiple metrics, including BLEU, Metric for Evaluation of Translation with Explicit ORdering (METEOR), and ROUGE.
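Copy attention matters for code because summaries often reuse identifiers that are missing from the output vocabulary. A common pointer-generator-style formulation mixes a generation distribution with the attention weights over source tokens; the exact variant used in [8] may differ, and the probabilities and helper name below are hypothetical.

```python
def copy_distribution(p_vocab, attention, source_tokens, p_gen):
    """Mix generating and copying:
    P(w) = p_gen * P_vocab(w) + (1 - p_gen) * (attention mass on source positions holding w).
    """
    final = {w: p_gen * p for w, p in p_vocab.items()}
    for weight, tok in zip(attention, source_tokens):
        final[tok] = final.get(tok, 0.0) + (1.0 - p_gen) * weight
    return final


p_vocab = {"returns": 0.5, "the": 0.3, "score": 0.2}  # decoder's vocabulary distribution
source = ["getMaxScore", "(", ")"]  # code tokens; getMaxScore is out of vocabulary
attention = [0.7, 0.2, 0.1]  # attention over source positions (sums to 1)
final = copy_distribution(p_vocab, attention, source, p_gen=0.6)
print(round(final["getMaxScore"], 2))  # 0.28
```

Without the copy term, `getMaxScore` would have zero probability; with it, the identifier becomes one of the most likely next words.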

A key distinction between neural models for NLs and PLs lies in their training data. Unlike NL corpora, source code cannot be treated as plain text, because whitespace, punctuation, and identifiers carry syntactic meaning. Therefore, adapting an NLP method to PLs requires specialized preprocessing, tokenization, and model architecture. These differences help explain why directly transferring NLP models to programming tasks frequently leads to suboptimal results. Neural architectures tailored for programming tasks, through the incorporation of structural features such as ASTs, control and data flow analysis, and type information, tend to outperform their general-purpose counterparts on tasks such as code completion, summarization, and bug detection.
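The gap between plain-text and code-aware preprocessing is easy to see with Python's standard `tokenize` module. The sketch below compares naive whitespace splitting with a lexer that keeps line ends and indentation as explicit tokens; the `<NEWLINE>`/`<INDENT>`/`<DEDENT>` spellings are an arbitrary choice for illustration.

```python
import io
import tokenize

SOURCE = "def f(a, b):\n    return a+b\n"


def code_tokens(source: str) -> list[str]:
    """Lex Python source with the standard-library tokenizer, keeping layout as explicit tokens."""
    layout = {tokenize.NEWLINE: "<NEWLINE>", tokenize.INDENT: "<INDENT>", tokenize.DEDENT: "<DEDENT>"}
    out = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type in layout:
            out.append(layout[tok.type])  # indentation and line ends are syntax in Python
        elif tok.type not in (tokenize.NL, tokenize.COMMENT, tokenize.ENDMARKER):
            out.append(tok.string)
    return out


print(SOURCE.split())  # ['def', 'f(a,', 'b):', 'return', 'a+b']
print(code_tokens(SOURCE))
```

Whitespace splitting glues identifiers to punctuation and discards the indentation that defines the function body, whereas the lexer yields the units the language grammar actually uses.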

To complement the conceptual discussions above, Table 4 provides a comparative summary of neural models designed for natural and programming languages. It distinguishes their representative tasks, data sources, and evaluation metrics. The comparison highlights how PL models (e.g., CodeBERT and CodeT5) extend NL counterparts (e.g., BERT and T5) by incorporating structural information such as ASTs and Data Flow Graphs (DFGs), which enable improved performance in code-related tasks while introducing new challenges in cross-language generalization and semantic correctness.

Table 4.

Comparative perspective on neural models for natural and programming languages.

5. Neural Methods for Programming and Code-Centric SE Tasks

In this section, we examine neural methods for programming-centric SE tasks in fine-grained detail, covering the research focus, outcomes, and future directions for each task. Table 5 summarizes the 18 programming tasks, including their main objectives with respect to neural methods and their open research issues. In selecting these tasks, we prioritized those most frequently addressed in the literature, capturing widely studied areas with consistent terminology. Tasks mentioned under variant names (e.g., code recommendation vs. code completion, vulnerability prediction vs. vulnerability detection, and static/dynamic analysis vs. code analysis) were mapped to our taxonomy. Unlike some prior surveys, such as the review by Durrani et al. [19], whose analysis relies on automated indexing tools such as Dimensions.ai, our review is based on rigorous manual literature collection and researcher-driven analysis. By covering 18 distinct programming tasks, our approach highlights the multidimensional contributions of neural methods to programming. We also emphasize the evolving capabilities of the neural models that underpin code assistant tools such as GitHub Copilot, which have not yet been sufficiently acknowledged in recent surveys such as [19].

5.1. Source Code Translation

Source code translation can be viewed in two ways. First, it refers to migrating outdated source code to a newer version of the same PL [81]. Second, it involves translating source code from one PL to another [7,79]. In a broader sense, code translation can encompass shifts between programming paradigms (e.g., declarative to imperative or procedural to object-oriented). It may also involve porting Application Programming Interfaces (APIs) from one language to another [82]. Although early traditional code translation tools have limitations, they are cost-effective compared to manual translation and benefit businesses across various domains. Source code translation has applications in multiple fields, including high-tech software production and cybersecurity. Its application areas include, but are not limited to, the following:

- Platform- or application-specific optimizations for high-performance computing applications [83], especially when transitioning software from outdated to modern languages.

- Upgrading software to secure, up-to-date, and well-documented PLs to enhance long-term maintainability and compliance.

- Facilitating the innovation of new PLs by simplifying legacy code migration.

- Advanced program analysis and verification [84].

Existing source code translation methods can be broadly classified into three categories: rule-based, statistical, and neural approaches. In addition, manual translation can be a viable option when only a few lines of code are involved. The same categorization also applies to other programming tasks, such as code generation, code search, and code summarization.

Table 5.

An overall summary of programming tasks using neural models, their objectives, and corresponding open research issues.

| Task | Objective | Open Research Issues |
|---|---|---|
| Automatic Code Edit | Automatically modifies code. | Stable benchmark datasets for evaluation. |
| Code Analysis | Evaluates the structural and runtime behavior of source code. | Need for multiple representations for efficiency. |
| Code Authorship and Identification | Uses neural models to attribute code to developers. | Coding style variability, multi-language authorship, and identification of AI-generated code. |
| Code Change Detection | Tracks commit updates in large-scale software development. | Potential IDE integration with push notifications. |
| Code Classification | Categorizes code based on syntax, semantics, and algorithms. | Need to expand classification criteria. |
| Code Clone Detection | Identifies duplicate or near-duplicate code snippets to maintain software quality. | Need to distinguish code clone detection from code similarity detection. |
| Code Completion | Enhances productivity by auto-completing blocks of code. | Privacy and security concerns. |
| Code Generation | Automates code creation using code assistants. | Ensuring correctness and execution reliability. |
| Code Modeling and Representation | Uses various representations for better source code understanding. | Designing optimal representation strategies remains an open issue. |
| Code Search and Retrieval | Enables retrieval of relevant code snippets. | Safety and security of AI-retrieved code remain a concern. |
| Code Similarity Detection | Identifies duplicate code using neural networks. | Dataset reliability. |
| Code Summarization | Generates human-readable descriptions of code. | Evaluation relies heavily on NLP metrics. |
| Code Vulnerability Detection | Uses various neural methods to detect security flaws. | Persistent false positives; need to integrate neural methods with static/dynamic analysis. |
| Comment Generation | Generates descriptive comments for code. | Need for multilingual support. |
| Decompilation | Converts machine-level code into high-level source code. | Need for better generalization across languages. |
| Program Repair and Bug Fix | Improves program debugging and efficiency. | Integrating neural methods with program verification systems for runtime bugs. |
| Program Synthesis | Converts NL instructions into code using neural models. | Evaluation benchmarks remain an open issue. |
| Source Code Translation | Migrates code across versions of the same language or translates it between languages [85]. | Ensuring semantic and functional equivalence between source and translated programs. |

Manual code translation involves an expert rewriting code in the target language while ensuring that the translated program meets the same functional requirements as the source. While effective for small functions or short code snippets, this approach is impractical for larger codebases because it is time-consuming, error-prone, and inefficient.

Rule-based source code translation methods [86,87], also known as conventional methods, mainly rely on the AST of the program's source code [7]. However, their implementations vary considerably.

In [83], the source code's AST is converted to an eXtensible Markup Language (XML) representation, and user-defined rules are applied to optimize code translations based on platform-specific features. This research developed the Xevolver tool, built on top of the ROSE compiler infrastructure, which uses XML ASTs to enable code modifications between the source and target languages. Another example is the OP2-Clang tool [88], which utilizes the Clang/LLVM AST matcher to optimize parallel code generation. In [89], a rule-based AST technique is applied to security and code optimization of Java programs and delivered as an Eclipse plug-in.
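The core idea shared by these tools, walking the source AST and applying one hand-written rule per node type, can be sketched in a few lines. The example below is a minimal illustration, not a reconstruction of Xevolver or OP2-Clang: it translates a tiny, hypothetical subset of Python (function definitions, returns, and arithmetic) into JavaScript using Python's standard `ast` module, and `to_js` is a hypothetical helper.

```python
import ast

# One hand-written rule per supported operator; everything else is rejected.
BIN_OPS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*", ast.Div: "/"}


def to_js(node: ast.AST) -> str:
    """Translate a tiny Python subset (functions, returns, arithmetic) to JavaScript."""
    if isinstance(node, ast.Module):
        return "\n".join(to_js(stmt) for stmt in node.body)
    if isinstance(node, ast.FunctionDef):
        params = ", ".join(a.arg for a in node.args.args)
        body = "\n".join("  " + to_js(stmt) for stmt in node.body)
        return f"function {node.name}({params}) {{\n{body}\n}}"
    if isinstance(node, ast.Return) and node.value is not None:
        return f"return {to_js(node.value)};"
    if isinstance(node, ast.BinOp) and type(node.op) in BIN_OPS:
        return f"({to_js(node.left)} {BIN_OPS[type(node.op)]} {to_js(node.right)})"
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Constant) and type(node.value) in (int, float):
        return repr(node.value)
    raise NotImplementedError(f"no rule for {type(node).__name__}")


print(to_js(ast.parse("def area(w, h):\n    return w * h / 2\n")))
```

Any construct without a rule raises an error, which mirrors why rule-based translators scale poorly: every language feature and idiom needs its own manually written rule.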

Statistical methods in source code translation draw from techniques used in statistical machine translation (SMT) for NLP [90]. These approaches treat source code as sequences of lexical tokens and apply statistical language models to map these tokens between source and target languages. For instance, in [90], the source code is modeled using SMT, achieving high BLEU scores when translating Java to C#. Similarly, in [91], a phrase-based language model is applied to translate C# to Java, using a parallel corpus of 20,499 method pairs. In [92], the Semantic LAnguage Model for Source Code (SLAMC) incorporates semantic information, such as token roles, data types, scopes, and dependencies, to improve SMT-based code translation quality.
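A toy version of phrase-based translation helps show how such systems map token sequences. The sketch below performs monotone (no reordering) dynamic-programming decoding over a phrase table; the Java-to-C# entries and probabilities are hypothetical, and unlike real SMT systems it uses no target language model and no reordering model.

```python
import math

# Hypothetical phrase table: Java phrase -> [(C# phrase, translation probability)].
PHRASE_TABLE = {
    ("System", ".", "out", ".", "println"): [(("Console", ".", "WriteLine"), 0.9)],
    ("boolean",): [(("bool",), 0.9)],
    ("String",): [(("string",), 0.7), (("String",), 0.3)],
}


def translate(src, table, max_len=5, copy_prob=0.01):
    """Monotone phrase-based decoding: best[i] = best-scoring translation of src[:i]."""
    best = [(-math.inf, [])] * (len(src) + 1)
    best[0] = (0.0, [])
    for i in range(1, len(src) + 1):
        for j in range(max(0, i - max_len), i):
            if best[j][0] == -math.inf:
                continue
            phrase = tuple(src[j:i])
            options = table.get(phrase, [])
            if not options and i - j == 1:
                options = [(phrase, copy_prob)]  # unknown single token: copy it unchanged
            for target, prob in options:
                score = best[j][0] + math.log(prob)
                if score > best[i][0]:
                    best[i] = (score, best[j][1] + list(target))
    return best[-1][1]


print(" ".join(translate("String s = name ;".split(), PHRASE_TABLE)))
print(" ".join(translate("System . out . println ( s ) ;".split(), PHRASE_TABLE)))
```

Multi-token phrases let the decoder map `System.out.println` to `Console.WriteLine` as a unit, which a word-by-word mapping could not express.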

Neural networks, particularly neural language models, have greatly advanced source code translation. Many neural language models rely on fine-tuning to excel in code-related tasks; for example, CodeBERT and CodeT5 have achieved SOTA performance when fine-tuned for such tasks [71,75].

Facebook's TransCoder [79] employs unsupervised learning to translate between Python, Java, and C++, combining cross-lingual masked language model pre-training on monolingual code with DAE and back-translation (BT). Its self-trained extension, TransCoder-ST [93], uses automatically generated unit tests to filter out incorrect translations, helping preserve semantics. Unit tests play a crucial role not only in code translation but also in a wide range of programming tasks; they are essential for self-supervised neural models to validate the functional correctness of their predictions [94]. PLBART [95] is another versatile model that performs well on multiple code-related tasks, including code translation. It outperforms models such as RoBERTa and CodeBERT in Java-to-C# translation, as measured by BLEU and CodeBLEU [96].

Despite this progress, challenges remain. Traditional NLP metrics such as BLEU fail to capture the semantic correctness of code, because variations in coding style can yield low scores despite identical functionality. Effective evaluation must consider both the syntax and the semantics of code. Additionally, code translation is often treated as a secondary task alongside others rather than as a primary target. A dedicated data pipeline and specialized neural models are needed to correct the defects that arise during code translation and to address its unique complexities.
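The mismatch between BLEU and functional correctness can be demonstrated directly. The sketch below uses an add-one-smoothed sentence-level BLEU (a simplification; scores from standard toolkits will differ) to compare a reference function with a functionally equivalent rewrite and with a one-character buggy variant, and then executes all three. The snippets and helper names are hypothetical.

```python
import math
import re
from collections import Counter

REFERENCE = "def total(xs):\n    s = 0\n    for x in xs:\n        s += x\n    return s\n"
EQUIVALENT = "def total(xs):\n    return sum(xs)\n"  # same behavior, different style
BUGGY = REFERENCE.replace("s += x", "s -= x")  # one-character change, wrong behavior


def lex(code):
    return re.findall(r"\w+|[^\w\s]", code)  # identifiers/numbers, or single symbols


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference, hypothesis, max_n=4):
    """Add-one-smoothed sentence BLEU (a simplification of corpus-level BLEU)."""
    ref, hyp = lex(reference), lex(hypothesis)
    log_p = 0.0
    for n in range(1, max_n + 1):
        hyp_counts, ref_counts = ngrams(hyp, n), ngrams(ref, n)
        matched = sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())  # clipped matches
        total = max(len(hyp) - n + 1, 0)
        log_p += math.log((matched + 1) / (total + 1)) / max_n
    brevity = 1.0 if len(hyp) > len(ref) else math.exp(1 - len(ref) / len(hyp))
    return brevity * math.exp(log_p)


def run_total(code, arg):
    namespace = {}
    exec(code, namespace)  # toy, trusted snippets only
    return namespace["total"](arg)


for name, hyp in [("equivalent", EQUIVALENT), ("buggy", BUGGY)]:
    print(name, round(sentence_bleu(REFERENCE, hyp), 2), run_total(hyp, [1, 2, 3]))
# equivalent 0.24 6
# buggy 0.87 -6
```

The correct rewrite scores far lower than the buggy variant, which is exactly why execution-based checks are needed alongside n-gram metrics.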

5.2. Code Generation

Automated code generation supports a wide range of programming tasks with diverse requirements [97]. Some methods generate code from documentation text, such as the docstrings of Python functions [98], while others integrate back-translation heuristics.

CodeT5 [71], a code model built on top of Google’s T5 model [99], incorporates NL and PL tokens, enabling bimodal (NL-PL) and PL-only training for task-specific code generation and understanding.

ASTs play a key role in structured code generation. Semantic parsing based on the Abstract Syntax Description Language [100], Neural Attribute Machines [101], and other works have leveraged AST-based structural representations of programs for code generation. Another approach [102] performs API-driven Java program generation using combinatorial techniques.
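One reason AST-based generation is attractive is that assembling a tree and then rendering it to text guarantees syntactically well-formed output. The sketch below illustrates only that principle, not the ASDL parser or Neural Attribute Machines: it fills a skeleton tree with hand-built nodes using Python's standard `ast` module (`ast.unparse` requires Python 3.9+), and `make_getter` is a hypothetical helper.

```python
import ast


def make_getter(field: str) -> str:
    """Assemble `def get_<field>(self): return self.<field>` as an AST, then unparse it."""
    module = ast.parse("def placeholder(self):\n    pass\n")  # skeleton tree
    func = module.body[0]
    func.name = f"get_{field}"
    func.body = [
        ast.Return(
            value=ast.Attribute(value=ast.Name(id="self", ctx=ast.Load()), attr=field, ctx=ast.Load())
        )
    ]
    ast.fix_missing_locations(module)  # new nodes need line/column info
    return ast.unparse(module)  # requires Python 3.9+


print(make_getter("width"))
```

In a neural AST decoder, the model would choose which node to expand at each step, while the tree structure itself rules out syntax errors such as unbalanced brackets.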

CodeT, short for CODE generation with generated Tests [103], generates test cases alongside candidate programs and uses their execution results to select among the candidates, although passing model-generated tests alone does not guarantee correctness. Execution-based assessment with stronger adequacy criteria, such as mutation score evaluation [104], offers a more reliable way to verify the correctness of model-generated code.
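The basic execution-based filtering step can be sketched as follows: run each candidate program against a set of test cases and rank candidates by how many tests they pass. This is a simplified stand-in, not CodeT's actual ranking procedure; the candidates, tests, and entry-point name `solve` are hypothetical, and real systems must sandbox untrusted model output.

```python
def pass_count(source: str, tests, entry: str = "solve") -> int:
    """Execute one candidate program and count how many (args, expected) tests it passes."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # WARNING: sandbox untrusted model output in practice
        fn = namespace[entry]
    except Exception:
        return 0  # syntax error or missing entry point: nothing passes
    passed = 0
    for args, expected in tests:
        try:
            if fn(*args) == expected:
                passed += 1
        except Exception:
            pass  # a runtime error counts as a failed test
    return passed


def rank_candidates(candidates, tests):
    """Order candidates by the number of tests they pass (stable for ties)."""
    return sorted(candidates, key=lambda src: pass_count(src, tests), reverse=True)


CANDIDATES = [
    "def solve(a, b):\n    return a - b\n",  # wrong operator
    "def solve(a, b):\n    return a + b\n",  # correct
    "def solve(a, b)\n    return a + b\n",  # syntax error (missing colon)
]
TESTS = [((1, 2), 3), ((0, 0), 0), ((-1, 5), 4)]
print([pass_count(c, TESTS) for c in CANDIDATES])  # [1, 3, 0]
```

The wrong candidate still passes the `(0, 0)` test, a small reminder that weak tests let incorrect programs through.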

Although improvements have been made over time, generated code often requires manual modification, and ensuring error-free execution remains an ongoing challenge [105]. Code LLMs have substantially increased the efficiency of automated code generation [106,107]; however, executability issues persist.

5.3. Comment Generation

Code comments in general-purpose programming languages provide a high-level understanding of source code written by developers. Neural models can generate descriptive comment texts for a given program [108].

An empirical study in [109] evaluates T5 and n-gram models on code comment completion. In [110], a model is trained on source code together with its comments written in Japanese and distinguishes between code and comments through procedure learning; it uses an LSTM for comment generation and leverages problem statements written in NL to improve program understanding. The study in [111] applies NMT models to automatic comment generation, but the reported performance is suboptimal.

In [108], the Deep Code Comment Understanding and Assessment (DComment) model is proposed to understand and classify generated comments based on their quality. Some studies frame the task as code-to-comment translation [112] and evaluate the quality of the generated comments with NLP metrics. However, such evaluation remains problematic, as highlighted in Section 6.2.

Most comment generation models produce English-based comments, which can be a barrier for non-English-speaking programmers. Developing models that can generate comments in a multilingual fashion could enhance collaboration in software development.

5.4. Decompilation

Decompilation reverses compilation, converting machine-level code (binary or assembly code) into high-level source code. Traditional rule-based methods are costly to develop and offer limited performance, prompting the exploration of neural alternatives. The research in [10] trains RNNs on machine code compiled from C, an approach later extended into a two-phase method [113]: generating code snippet templates and then populating them with appropriate identifier and variable values.

Neutron [114] applies attention-based NMT techniques to decompilation, while BTC (Beyond the C) [115] offers a platform-independent neural decompiler supporting multiple languages, including Go, Fortran, OCaml, and C. The study in [116] explores the intermediate representations (IR) of programs with seq2seq Transformers for decompilation.

Most existing tools remain rule-based and limited to specific languages, which restricts their effectiveness. While a one-size-fits-all decompiler may be infeasible, future research should leverage efficient neural models to improve the generalization of decompilation across diverse languages.

5.5. Code Search and Retrieval

Developing software from scratch is time-consuming, so software engineers often integrate open-source code, which in turn requires robust search tools for effective code retrieval. While traditional search engines excel at NL queries, they struggle to retrieve relevant code snippets accurately. Neural methods offer a promising alternative.

Code-Description Embedding Neural Network (CODEnn) [117] is a deep learning-based code search model that leverages RNN-based sequence embeddings to jointly represent source code and descriptions. CARLCS-CNN, short for Co-Attentive Representation Learning Code Search-CNN [118], improves search accuracy with a co-attention mechanism and CNNs. The Code-to-Code Search Across Languages (COSAL) model proposed in [119] enables cross-lingual code search using static and dynamic analysis, while Deep Graph Matching and Searching (DGMS) [120] employs Relational Graph Convolutional Networks (RGCNs) and attention mechanisms for unified text-code graph matching.
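The retrieval step shared by joint-embedding models such as CODEnn can be sketched as follows: embed the query and every snippet into the same vector space and rank snippets by cosine similarity. In this sketch, the learned neural encoders are replaced by a hypothetical bag-of-sub-tokens stand-in (splitting camelCase and snake_case identifiers), so it shows the ranking mechanics only, not learned semantics; the snippets are hypothetical.

```python
import math
import re
from collections import Counter


def subtokens(text: str) -> list[str]:
    """Split words and identifiers (camelCase, snake_case) into lowercase sub-tokens."""
    parts = []
    for word in re.findall(r"[A-Za-z]+", text):
        parts += re.findall(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])", word)
    return [p.lower() for p in parts]


def embed(text: str) -> Counter:
    # Stand-in encoder: a bag of sub-tokens. CODEnn-style models would instead
    # use trained neural encoders so that code and descriptions share one space.
    return Counter(subtokens(text))


def cosine(u: Counter, v: Counter) -> float:
    dot = sum(u[k] * v[k] for k in u)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def search(query: str, snippets: list[str], top_k: int = 1) -> list[str]:
    q = embed(query)
    return sorted(snippets, key=lambda s: cosine(q, embed(s)), reverse=True)[:top_k]


SNIPPETS = [
    "def read_file(path):\n    with open(path) as f:\n        return f.read()",
    "def sortList(items):\n    return sorted(items)",
    "def send_http_request(url):\n    return urllib.request.urlopen(url)",
]
print(search("sort a list", SNIPPETS))
```

A learned encoder would additionally match queries such as "order the elements" to `sortList`, which this lexical stand-in cannot do.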

Traditional information retrieval techniques have also been enhanced with neural approaches. For instance, the study in [121] integrates Word2Vec into retrieval tasks. The Multi-Modal Attention Network (MMAN) in [122] utilizes LSTM, Tree-LSTM, and Gated Graph Neural Networks (GGNNs) to capture syntactic and semantic information. In [123], the authors combine CNNs and joint embeddings for Stack Overflow queries. The Multi-Programming Language Code Search (MPLCS) model proposed in [124] extends retrieval across multiple PLs, benefiting low-resource PLs.

AI-driven tools such as Microsoft Copilot and ChatGPT [3] have transformed code search by retrieving relevant snippets from NL prompts. Unlike traditional search engines, ChatGPT avoids returning irrelevant search results, but it may still return non-executable code. Future work could integrate SE tools with neural models to retrieve code that executes without errors, enhancing usability and reliability in software development.

5.6. Code Completion

IDEs with auto-completion features significantly enhance software development productivity [125]. Various neural methods have been explored for code completion and related tasks. In [126], LSTMs with attention mechanisms were used for code completion and other coding tasks. The study in [66] proposes a pre-trained Transformer-based model with multitask learning to predict code tokens and their types, which is then fine-tuned for code completion.

Microsoft’s IntelliCode Compose [127] is a cloud-based tool that suggests entire lines of code with correct syntax. This model provides monolingual embeddings while leveraging multilingual knowledge, benefiting low-resource programming languages. Additionally, it enhances privacy by preventing exposure of sensitive information, addressing a key limitation in prior auto-completion tools.

Meta’s research work in [128] demonstrates the effectiveness of transfer learning for code auto-completion. Their approach is built on top of the GPT-2 and BART Transformer-based models, and applies auto-regressive and DAE objectives to improve predictions.

A critical concern in code completion is privacy and security, as noted in [127]. Furthermore, since prompts used for code completion often have similar structures, generated programs risk sharing similar designs, potentially introducing common vulnerabilities. Future research should address these concerns by enhancing model diversity and privacy safeguards in automated code completion. Additionally, most current code auto-completion assistants struggle to retain long-range context from earlier parts of the code written by the programmer. As a result, they often suggest generic and repetitive line completions. To enable more intelligent, context-aware assistance, code completion systems must capture long-range dependencies, potentially across an entire file or even multiple files within a project. Such capabilities are critical to providing seamless and relevant code suggestions.

5.7. Automatic Code Edit

Editing and fixing awkward parts of code structures are routine yet time-consuming tasks for programmers. These repetitive activities consume significant effort, but recent neural models aim to automate them [129].

CodeEditor [130] is a pre-trained code editing model that shows improved performance and generalization in code-editing tasks. Its approach consists of three stages: collecting code snippets from repositories such as GitHub, generating inferior versions of the code (versions that differ somewhat from the ground truth) for pre-training, and evaluating the pre-trained model under three settings, namely fine-tuning, few-shot, and zero-shot.

The multi-modal NMT-based code editing engine MODIT [9] processes code in three steps: preprocessing, token representation via an encoder–decoder attention mechanism, and output generation. The preprocessing phase integrates three input modalities, namely the code location, contextual information, and commit messages, which guide the editing process.

A major challenge in this field is the lack of stable benchmark datasets for source code editing. The rapidly growing demand for software compounds this issue, as modern applications span very large codebases, making manual code editing tedious. To be effective and scalable in complex coding environments, automatic code-editing models should be trained on diverse and representative source code corpora. Addressing this gap is crucial for advancing automated code editing techniques.

5.8. Code Summarization

Programmers frequently read source code written by others, which requires a detailed understanding of each program. Automating this process through code summarization, that is, generating human-readable descriptions of code snippets, can significantly improve efficiency. Neural methods have shown great success in this area.

M2TS, short for Multi-Scale Multi-Modal Approach Based on Transformer for Source Code Summarization [131], combines AST structures and token semantics through cross-modality fusion to improve code summarization. Another model in [132] applies reinforcement learning to a triplet of code representations (CFG, AST, and plain text) to improve summary generation.

In [133], a hybrid model combines token sequences (encoded by a c-encoder) with semantic graphs (encoded by a g-encoder) for richer context. The structural information in code has also inspired research such as AST-Trans [134], which linearizes ASTs into sequences through traversal, thereby reducing computational costs. In [135], the model predicts action words from code blocks, identifying their intent and job class to enhance summarization.
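To feed a tree into a sequence model, it must first be linearized. The sketch below shows one common, lossless option, a bracketed pre-order traversal in which every subtree becomes `( label children )`, using Python's standard `ast` module; AST-Trans's exact linearization and its attention scheme differ, and `linearize` is a hypothetical helper.

```python
import ast


def linearize(node: ast.AST) -> list[str]:
    """Bracketed pre-order traversal: each subtree becomes '(' label children ')'."""
    label = type(node).__name__
    if isinstance(node, ast.Name):
        label += f":{node.id}"
    elif isinstance(node, ast.Constant):
        label += f":{node.value!r}"
    tokens = ["(", label]
    for child in ast.iter_child_nodes(node):
        if not isinstance(child, ast.expr_context):  # skip Load/Store markers
            tokens += linearize(child)
    return tokens + [")"]


print(" ".join(linearize(ast.parse("a + 1", mode="eval"))))
# ( Expression ( BinOp ( Name:a ) ( Add ) ( Constant:1 ) ) )
```

The brackets preserve the parent-child structure, but the sequence grows quickly with tree size, which is the cost that more compact linearizations and restricted attention aim to reduce.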

Despite significant progress in code summarization, evaluating code summarization models remains challenging. NLP-based metrics fail to capture source code semantics and can therefore produce misleading results. Research into evaluation metrics tailored to source code is essential for accurate and meaningful assessments of code summarization models.

5.9. Code Change Detection

Tracking updates in large-scale software projects is challenging, especially when development is distributed across multiple teams that each commit and update frequently. Neural methods have been introduced to help programmers manage code changes efficiently.

CORE, short for COde REview engine [136], employs LSTM models with multi-embedding and multi-attention mechanisms to review code changes, effectively capturing semantic modifications. Another model, CC2Vec [137], represents code changes by analyzing modifications across updated source code files. It preprocesses change patches by tokenizing modified code blocks and constructing a code vocabulary. Structural information from added and removed code is then processed via a Hierarchical Attention Network for better representation.
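The kind of preprocessing that precedes a change-representation model can be sketched with Python's standard `difflib`: separate the added and removed lines of a change, tokenize them, and build a vocabulary. This is a simplified illustration under the assumption of a single-file, line-level diff; CC2Vec's actual pipeline operates on patch hunks across files and is not reproduced here, and `change_tokens` is a hypothetical helper.

```python
import difflib
import re


def lex(line: str) -> list[str]:
    return re.findall(r"\w+|[^\w\s]", line)


def change_tokens(old: str, new: str):
    """Split a change into tokenized added and removed lines, plus their joint vocabulary."""
    added, removed = [], []
    for line in difflib.ndiff(old.splitlines(), new.splitlines()):
        tag, text = line[:2], line[2:]
        if tag == "+ ":
            added.append(lex(text))
        elif tag == "- ":
            removed.append(lex(text))
        # "  " (unchanged) and "? " (intraline hint) lines are ignored
    vocab = sorted({tok for toks in added + removed for tok in toks})
    return added, removed, vocab


old = "x = load()\nprint(x)\n"
new = "x = load()\nlog(x)\n"
added, removed, vocab = change_tokens(old, new)
print(added, removed)  # [['log', '(', 'x', ')']] [['print', '(', 'x', ')']]
```

Keeping added and removed lines apart lets a downstream network, such as a hierarchical attention network, learn what was introduced versus what was taken away.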

IABLSTM (short for Impact Analysis with Attention Bidirectional Long Short-Term Memory) [138] utilizes a Bidirectional Long Short-Term Memory (Bi-LSTM) with an attention mechanism to detect source code changes. It identifies differences between the original and modified code using cosine similarity, leveraging AST paths and vectorized representations. Another approach in [139] applies NMT techniques to track meaningful code modifications in pull requests, aiding collaborative development.

Despite these efforts, code change detection remains an active research area. A promising direction is integrating these models with push notification systems within IDEs to provide real-time alerts about modifications. This would improve efficiency in team-based software development by keeping developers informed seamlessly.

5.10. Code Similarity Detection

The rising demand for software applications has led to increased source code duplication, where the same code is reused across projects. Such duplication can undermine originality, infringe on intellectual property rights, and raise privacy concerns. Neural methods have emerged as promising solutions for detecting code similarity.

The model in [11] applies NLP techniques to code similarity detection. It preprocesses source code through stemming, segmentation, embedding, and feature extraction, generating vectorized representations of code pairs, and then computes similarity scores using cosine distance. Another approach [140], focusing on Scratch, a visual PL, uses a Siamese Bi-LSTM model to capture syntactic and semantic code similarities, with the Manhattan distance used to measure similarity between Scratch files.
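The two similarity measures mentioned above behave differently once code has been encoded as vectors. The sketch compares cosine similarity with `exp(-L1 distance)`, a common way to turn the Manhattan distance into a similarity in Siamese LSTM models; whether [140] uses exactly this form is an assumption, and the vectors are hypothetical stand-ins for encoder outputs.

```python
import math


def cosine_similarity(u, v):
    """Angle-based similarity in [-1, 1]; ignores vector magnitude."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms if norms else 0.0


def manhattan_similarity(u, v):
    """exp(-L1 distance), as in Manhattan-LSTM-style Siamese networks; 1.0 means identical."""
    return math.exp(-sum(abs(a - b) for a, b in zip(u, v)))


h1 = [0.2, 0.5, 0.1]  # hypothetical encoder output for snippet A
h2 = [0.4, 1.0, 0.2]  # same direction, twice the magnitude
print(round(cosine_similarity(h1, h2), 3), round(manhattan_similarity(h1, h2), 3))  # 1.0 0.449
```

Cosine similarity treats the two vectors as identical because they point the same way, while the Manhattan-based score also penalizes the difference in magnitude, so the choice of metric shapes what the encoder is trained to encode.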

Cross-language code similarity detection is another active research area. The approach in [141] transforms source code of multiple PLs into control flow charts, applying graph similarity detection techniques to compare them.

A major challenge in code similarity detection research is the reliability of datasets. Open-source repositories may contain default IDE-generated templates, leading to unintentional similarities. Future research should focus on differentiating manually written and machine-generated code to enhance code similarity detection. Addressing these challenges is crucial for maintaining ethical and professional standards in software development.

5.11. Program Synthesis

Ideally, computer-literate end users should be able to interact with machines using clear NL instructions. However, for this to be possible, intelligent systems are needed to convert NL instructions into executable code. Program synthesis [142,143] addresses this challenge by developing models that automatically generate programs based on user-defined instructions.

PATOIS [144] is a program synthesis model that incorporates code idioms through structural components called probabilistic Tree Substitution Grammars (pTSGs). Its encoder embeds NL specifications, while its decoder generates token representations of ASTs. LaSynth [145] is an input–output-based program synthesis model that focuses on compiled languages such as C and integrates latent execution representations. During training, its loss function combines a latent executor loss and a token prediction loss. The model also generates input–output pairs to improve supervised program synthesis.

CodeGen [146] is a Code LLM from Salesforce that employs an auto-regressive approach similar to GPT models, predicting each code token from the preceding context. Its accompanying Multi-Turn Programming Benchmark factorizes problems into multi-turn prompts, and the authors study how program synthesis performance scales with model and data size.

However, evaluation benchmarks for program synthesis models remain an open challenge. Some studies propose synthetic datasets [147], while others generate domain-specific input–output examples [148]. Further research is needed to establish standardized and versatile evaluation benchmarks, ensure the executability of code generated by program synthesis, and develop appropriate performance evaluation metrics.

5.12. Code Modeling and Representation

This part of our survey presents existing approaches to code representation and modeling in neural-based programming tasks [149]. Neural models for programming tasks require robust code representation and modeling techniques [150], as these representations directly influence how the models interpret and process source code.

Several studies have explored different code representation techniques. In [151], source code is represented as pairwise paths between AST nodes, offering a generalizable approach. Another work [152] employs IR and contextual flow graphs (XFGs) to enhance semantic code representation. Graph-based methods have also gained attention in code representation research: message passing and grammar-based methods in [153] represent code semantics structurally, and GraphCodeBERT [69] leverages data flow structures to model relationships between variables and computational flow. The open-vocabulary neural language model (Open Vocab NLM) in [154] integrates Gated Recurrent Units (GRUs) with sub-word tokenization, enabling it to process billions of tokens dynamically. AST-based approaches are extended in [155], where ST-trees mitigate long-range dependency issues using bidirectional GRUs. Flow2Vec [156] converts code into low-dimensional vector representations using high-order proximity embeddings. CodeDisen [157] leverages a variational autoencoder to disentangle syntax and semantics across PLs. SPT-code [158] incorporates code sequences, ASTs, and NL descriptions for tasks such as summarization, completion, bug fixing, translation, and code search. Another model in [116] aligns embeddings of different PLs for code translation by augmenting source code tokens with their IR.
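Pairwise AST paths can be made concrete with a short sketch in the style of path-contexts: for every pair of leaves (identifiers and constants), record the node types on the path up from the first leaf to their lowest common ancestor and down to the second. This simplified version uses Python's standard `ast` module, imposes no path-length limit, and stops before any hashing or embedding step; it is not the implementation of [151], and `path_contexts` is a hypothetical helper.

```python
import ast
from itertools import combinations


def leaf_token(node):
    """Identifier or constant leaves; None for inner nodes."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.arg):
        return node.arg
    if isinstance(node, ast.Constant):
        return repr(node.value)
    return None


def path_contexts(source):
    """Return (leaf, path, leaf) triples for every pair of AST leaves."""
    leaves = []

    def walk(node, ancestors):
        ancestors = ancestors + [node]
        token = leaf_token(node)
        if token is not None:
            leaves.append((token, ancestors))
            return
        for child in ast.iter_child_nodes(node):
            walk(child, ancestors)

    walk(ast.parse(source), [])
    contexts = []
    for (tok1, path1), (tok2, path2) in combinations(leaves, 2):
        k = 0  # length of the shared prefix (compared by node identity)
        while k < min(len(path1), len(path2)) and path1[k] is path2[k]:
            k += 1
        up = [type(n).__name__ for n in reversed(path1[k:])]  # leaf 1 up to below the common ancestor
        down = [type(n).__name__ for n in path2[k:]]  # below the common ancestor down to leaf 2
        common = type(path1[k - 1]).__name__
        contexts.append((tok1, "↑".join(up + [common]) + "↓" + "↓".join(down), tok2))
    return contexts


for context in path_contexts("x = y + 1"):
    print(context)
```

Each triple captures a syntactic relationship (for example, that `y` and `1` are operands of the same `BinOp`) independently of surface formatting, which is what makes such paths generalize across coding styles.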

This survey highlights a wide range of representations, from raw source code tokens, ASTs, and CFGs to IR and DFGs. Although combining several representations often improves code comprehension, systematic research is still needed to determine which combinations work best. Neural code modeling depends on robust, task-specific representation strategies. Exploring how alternative representations can be combined effectively could therefore significantly broaden research in this field.

5.13. Code Classification

Understanding and identifying source code across multiple PLs requires grasping the objectives and distinguishing features of programs. Traditional methods, such as manual cross-checking or predefined rules, can be effective but are impractical for large-scale projects, especially for developers unfamiliar with multiple PLs. Code classification addresses these challenges efficiently by categorizing source code according to syntax, semantics, or other defined characteristics.

In [159], Multinomial Naive Bayes (MNB) is used as the classifier and Term Frequency-Inverse Document Frequency (TF-IDF) is employed for feature extraction. This method classifies code snippets from 21 PLs using the Stack Overflow corpus and identifies specific language versions. Deep learning has further improved classification accuracy. For example, Reyes et al. [160] employ an LSTM with word embeddings and dropout regularization, outperforming traditional classifiers. The research in [161] uses CNNs to classify source code across 60 PLs, achieving high F1 scores. A CNN-based approach in [162] predicts source code categories from algorithmic structures rather than keywords, using an online judge system as its corpus. In [163], the authors leverage topic modeling and JavaParser preprocessing to identify code block functionalities for Java code classification. Beyond standard code classification tasks, Barr et al. [164] combine deep learning with combinatorial graph analysis to detect and classify code vulnerabilities, using code2vec and LSTM embeddings. The work in [165] classifies semantic units of machine learning code using an LSTM-GRU with attention mechanisms and applies MixUp augmentation to overcome data scarcity.
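The TF-IDF plus Multinomial Naive Bayes pipeline described for [159] can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the authors' implementation. The following elements are all assumptions:

- the regular-expression tokenizer,
- the smoothed IDF formula,
- the use of summed TF-IDF weights as fractional counts inside Naive Bayes,
- the four toy training snippets.

In practice, a library such as scikit-learn (`TfidfVectorizer` with `MultinomialNB`) would be the usual choice.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(code: str) -> list:
    # Identifiers/keywords as whole tokens; every other non-space character on its own.
    return re.findall(r"[A-Za-z_]\w*|\S", code)


class TfidfMultinomialNB:
    def fit(self, snippets, labels, alpha: float = 1.0):
        docs = [Counter(tokenize(s)) for s in snippets]
        n_docs = len(docs)
        df = Counter(tok for doc in docs for tok in doc)
        # Smoothed inverse document frequency: rarer tokens receive larger weights.
        self.idf = {t: math.log((1 + n_docs) / (1 + c)) + 1 for t, c in df.items()}
        self.vocab = set(df)
        self.classes = sorted(set(labels))
        counts = Counter(labels)
        self.log_prior = {c: math.log(counts[c] / n_docs) for c in self.classes}
        # Sum TF-IDF weights per class and token; NB treats them as fractional counts.
        mass = {c: defaultdict(float) for c in self.classes}
        for doc, label in zip(docs, labels):
            for tok, tf in doc.items():
                mass[label][tok] += tf * self.idf[tok]
        self.log_lik = {}
        for c in self.classes:
            total = sum(mass[c].values()) + alpha * len(self.vocab)  # Laplace smoothing
            self.log_lik[c] = {t: math.log((mass[c][t] + alpha) / total) for t in self.vocab}
        return self

    def predict(self, snippet: str) -> str:
        doc = Counter(t for t in tokenize(snippet) if t in self.vocab)  # unseen tokens ignored

        def score(c):
            return self.log_prior[c] + sum(
                tf * self.idf[t] * self.log_lik[c][t] for t, tf in doc.items())

        return max(self.classes, key=score)


if __name__ == "__main__":
    train = [  # hypothetical toy corpus
        ("def add(a, b):\n    return a + b", "Python"),
        ("import os\nprint(os.getcwd())", "Python"),
        ("public static int add(int a, int b) { return a + b; }", "Java"),
        ('System.out.println("hi");', "Java"),
    ]
    clf = TfidfMultinomialNB().fit([s for s, _ in train], [y for _, y in train])
    print(clf.predict("def greet(name):\n    print(name)"))
    print(clf.predict("System.out.println(x);"))
```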

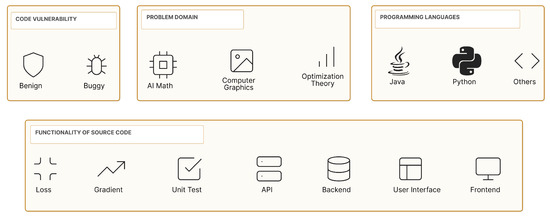

The code classification methods reviewed here fall into four broad categories, as shown in Figure 5: algorithm- or problem-domain-based, language-based, functionality-based, and vulnerability-based. Additional criteria, such as programming paradigm (imperative, declarative, functional, or object-oriented), could further supplement code classification models. Future research should expand these classification taxonomies to provide a comprehensive basis for code classification.

Figure 5.

Types of source code classification.

5.14. Code Vulnerability Detection

Source code vulnerabilities arise when programming errors and security flaws remain unpatched, which makes automated detection essential for mitigating risks. In [166], CNN and RNN models are used to extract feature representations and detect vulnerabilities in C and C++ code.

The Automated Vulnerability Detection framework based on Hierarchical Representation and Attention Mechanism (AVDHRAM) proposed in [167] structures source code into five hierarchical levels: program, function, slice, statement, and token. Its primary focus, however, is statement-level analysis enhanced by attention mechanisms. Mao et al. [168] introduce a Bi-LSTM model that serializes ASTs and incorporates attention mechanisms to classify vulnerable functions more accurately. The Transformer-based detector in [169] uses fine-grained code slices to analyze function calls, pointers, and expressions. In [170], three deep learning models are combined with multiple code representations to improve vulnerability detection.
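The role of attention in statement-level detectors such as [167] and [168] can be illustrated with a small NumPy sketch. Each statement embedding receives a relevance score, a softmax turns the scores into weights, and the weighted sum feeds a classifier. The embeddings, the context vector `u`, and the logistic-regression head below are hypothetical placeholders; in a trained model they would all be learned. This sketch uses simple dot-product scoring, and the exact attention form in the cited works may differ.

```python
import numpy as np


def statement_attention(statements: np.ndarray, u: np.ndarray):
    """Pool statement embeddings of shape (n_statements, d) into one vector of shape (d,).

    Returns the pooled vector and the per-statement attention weights, which can
    be read as each statement's relevance to the prediction.
    """
    scores = statements @ u                # relevance score per statement
    scores = scores - scores.max()         # numerical stability before exp
    weights = np.exp(scores)
    weights = weights / weights.sum()      # softmax over statements
    return weights @ statements, weights


def vulnerability_probability(pooled: np.ndarray, w: np.ndarray, b: float) -> float:
    """Logistic-regression head on the pooled function vector."""
    return float(1.0 / (1.0 + np.exp(-(pooled @ w + b))))


if __name__ == "__main__":
    # Three hypothetical statement embeddings; the second stands in for a
    # suspicious statement and is the one most aligned with u.
    stmts = np.array([[0.1, 0.0], [2.0, 1.0], [0.0, 0.2]])
    u = np.array([1.0, 1.0])
    pooled, weights = statement_attention(stmts, u)
    print(weights.round(3), vulnerability_probability(pooled, np.array([1.0, 1.0]), -1.0))
```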

Graph-based models, such as the one in [171], employ gated graph neural networks (GGNNs) and inductive learning, building code slice graphs from functions associated with Common Vulnerabilities and Exposures (CVE) entries. In this work, an attention layer captures relationships among graph nodes, which improves vulnerability detection. Wang et al. [172] combine gated graph networks with control flow analysis to distinguish benign from buggy code. They address data scarcity by drawing training data from open-source repositories.
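The gated graph neural networks used in [171] and [172] update each node by aggregating messages from its neighbours and passing them through a GRU-style gate. The NumPy sketch below shows one common formulation of that propagation step for a single edge type, followed by a mean readout into a graph vector. The toy four-node code-slice graph, the random weights, and the number of steps are assumptions. Real systems typically use multiple edge types (for example, control and data dependencies) and learned parameters.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def ggnn_propagate(h, adj, p, steps=2):
    """Gated propagation over a graph with a single edge type.

    h:   (n_nodes, d) initial node states (e.g. embedded statements)
    adj: (n_nodes, n_nodes) with adj[v, u] = 1 if there is an edge u -> v
    p:   dict of (d, d) weight matrices
    """
    for _ in range(steps):
        m = adj @ (h @ p["W_msg"])                        # sum of incoming messages
        z = sigmoid(m @ p["W_z"] + h @ p["U_z"])          # update gate
        r = sigmoid(m @ p["W_r"] + h @ p["U_r"])          # reset gate
        h_new = np.tanh(m @ p["W_h"] + (r * h) @ p["U_h"])
        h = (1.0 - z) * h + z * h_new                     # GRU-style interpolation
    return h


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 8
    names = ["W_msg", "W_z", "U_z", "W_r", "U_r", "W_h", "U_h"]
    params = {k: rng.normal(scale=0.3, size=(d, d)) for k in names}
    # Hypothetical code-slice graph with edges 0 -> 1 -> 2 and 0 -> 3.
    adj = np.zeros((4, 4))
    for u, v in [(0, 1), (1, 2), (0, 3)]:
        adj[v, u] = 1.0
    h = ggnn_propagate(rng.normal(size=(4, d)), adj, params)
    graph_vector = h.mean(axis=0)  # simple mean readout; a classifier would consume this
    print(graph_vector.shape)
```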

Despite notable progress in this area, vulnerability detection models still suffer from false positives and limited generalization to unseen flaws. Identifying vulnerabilities alone is insufficient; classifying them by severity, type, and potential impact is also crucial for comprehensive vulnerability management. Integrating neural models with static and dynamic program analysis or formal verification [173] could improve robustness, and future research should evaluate such integrations experimentally.

5.15. Code Analysis

Source code analysis involves multiple stages and serves various purposes and applications [174]. Prior research has explored different attributes for code quality assessment. In [175], static and dynamic properties of CFGs are used for code analysis. Ramadan et al. [176] analyze code by comparing the execution speed of programs based on their AST structures: they construct code pairs and identify the snippet that executes faster.
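As an example of a static CFG property (not necessarily one used in [175]), McCabe's cyclomatic complexity is M = E - N + 2P. Here E is the number of edges, N the number of nodes, and P the number of connected components. The sketch below computes M from an edge list using a small union-find. It is a generic illustration rather than the feature set of [175], and the node labels in the usage examples are hypothetical.

```python
from typing import Hashable, Iterable, Optional, Tuple


def cyclomatic_complexity(edges: Iterable[Tuple[Hashable, Hashable]],
                          nodes: Optional[Iterable[Hashable]] = None) -> int:
    """McCabe's M = E - N + 2P for a control flow graph given as an edge list."""
    edges = list(edges)
    node_set = set(nodes) if nodes is not None else set()
    node_set.update(n for edge in edges for n in edge)
    parent = {n: n for n in node_set}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in edges:  # connectivity ignores edge direction
        parent[find(a)] = find(b)
    components = len({find(n) for n in node_set})
    return len(edges) - len(node_set) + 2 * components


if __name__ == "__main__":
    if_else = [("entry", "cond"), ("cond", "then"), ("cond", "else"),
               ("then", "exit"), ("else", "exit")]
    while_loop = [("entry", "cond"), ("cond", "body"), ("body", "cond"), ("cond", "exit")]
    print(cyclomatic_complexity(if_else), cyclomatic_complexity(while_loop))
```

Both example graphs contain a single decision point, so each has complexity 2, while a straight-line chain of statements has complexity 1.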

A dynamic program analysis algorithm proposed in [177] leverages AST and CFG representations and stores contextual information in translation units. In [178], a hybrid analysis tool combines historical and structural analysis. It features a Java-based parser, a Python-based Code History Miner, and an interactive interface. GENESISP, introduced in [179], integrates source and binary code data for Debian-based environments and employs 13 analysis tools. Although it is unclear whether GENESISP relies on neural methods, its user interface facilitates data collection and analysis of open-source software.

Although current research in source code analysis is promising, most neural models rely heavily on AST representations. ASTs capture the structural aspects of code but do not fully convey its functional and semantic information. Integrating additional representations, such as CFGs or data flow graphs, alongside AST information could therefore make source code analysis more robust.

5.16. Code Authorship Identification

With growing concerns over intellectual property in software development, accurate code authorship identification is essential for protecting innovation and ownership rights [180]. Several studies have explored neural approaches to this problem.

Abuhamad et al. [181] introduce a CNN-based method that uses word embeddings and TF-IDF representations and achieves promising authorship attribution results on the Google Code Jam (GCJ) dataset. Kurtukova et al. [182] propose a hybrid neural network combining a CNN-bidirectional GRU (C-BiGRU), LSTM, Bi-LSTM, and other models. The models are trained on vectorized datasets and then applied to anonymized source code for authorship identification. Expanding on this work, [180] tackles more complex real-world scenarios such as multi-language authorship attribution, coding style variations, and identification of AI-generated code. Omi et al. [183] introduce a model that identifies multiple contributors to a single codebase by converting code snippets into AST paths and using ensemble classifiers. A CNN-based model combined with a KNN classifier is presented in [184], with an emphasis on explainable authorship attribution.
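The KNN step in approaches such as [184] assigns an unseen snippet to the author of its nearest labelled neighbours in feature space. In the minimal sketch below, a handful of hand-crafted layout and naming features stand in for the learned CNN features of [184]. The feature choice, the two hypothetical authors ("alice" and "bob"), and their snippets are assumptions made purely for illustration.

```python
import math
import re
from collections import Counter


def style_features(code: str) -> list:
    """A few layout and naming habits, each expressed as a rate in [0, 1]."""
    lines = code.splitlines() or [""]
    n = len(lines)
    idents = re.findall(r"[A-Za-z_]\w*", code)
    k = max(len(idents), 1)
    return [
        sum(ln.startswith("\t") for ln in lines) / n,                  # tab indentation
        sum(ln.strip().startswith(("#", "//")) for ln in lines) / n,   # comment lines
        sum(not ln.strip() for ln in lines) / n,                       # blank lines
        sum("_" in i.strip("_") for i in idents) / k,                  # snake_case names
        sum(bool(re.fullmatch(r"[a-z]+[A-Z]\w*", i)) for i in idents) / k,  # camelCase names
    ]


def knn_author(query: str, labelled: list, k: int = 1) -> str:
    """labelled: list of (code, author) pairs; majority vote among the k nearest."""
    q = style_features(query)
    ranked = sorted((math.dist(q, style_features(code)), author) for code, author in labelled)
    votes = Counter(author for _, author in ranked[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    labelled = [
        ("# sum values\ndef total_sum(values_list):\n    return sum(values_list)\n", "alice"),
        ("def totalSum(valuesList):\n\treturn sum(valuesList)\n", "bob"),
    ]
    print(knn_author("def maxValue(itemList):\n\treturn max(itemList)\n", labelled))
```

Hand-crafted rates like these are easy to inspect, which echoes the explainability goal of [184]. However, they are also easy to evade and are much weaker than learned representations.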

A key challenge in authorship attribution is the variability of coding styles across PLs. Programmers often write in multiple styles, which complicates identification. Moreover, IDEs generate code templates, such as ASP.NET MVC scaffolding in Microsoft Visual Studio, and AI assistants can produce large amounts of code. Both make it difficult to distinguish programmer-written from machine-generated code. Although progress has been made, future work should account for evolving programming practices, mixed data sources, and auto-generated code to achieve robust authorship attribution and identification.

5.17. Program Repair and Bug Fix

Troubleshooting errors and fixing bugs in source code are daily challenges for every programmer. Handling these tasks manually in large codebases is cumbersome, which motivates automated tools. ENCORE (Ensemble Learning using Convolution Neural Machine Translation for Automatic Program Repair) [185] uses a seq2seq encoder–decoder architecture with an attention mechanism and ensemble learning. It follows three stages: input representation (tokenization), training of ensemble models, and patch validation. ENCORE repairs code errors in four PLs and shows potential to extend to more. Liu et al. [186] address the lexical and semantic gaps between bugs and their corresponding fixes through lexical and semantic correlation matching. They combine this matching with focal loss to handle the class imbalance between buggy and non-buggy samples.
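Focal loss, which Liu et al. [186] combine with correlation matching, down-weights easy, well-classified examples so that training concentrates on hard ones. This helps when buggy samples are rare. A minimal binary version is FL(p_t) = -α_t (1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class. The defaults γ = 2 and α = 0.25 come from the original focal-loss paper by Lin et al. on object detection; they are assumptions here, not values reported in [186].

```python
import math
from typing import Sequence


def binary_focal_loss(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Focal loss for one example; p = predicted P(buggy), y = 1 if buggy else 0."""
    p_t = p if y == 1 else 1.0 - p              # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    p_t = min(max(p_t, 1e-12), 1.0)             # avoid log(0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def mean_focal_loss(probs: Sequence[float], labels: Sequence[int], **kw) -> float:
    """Average focal loss over a batch of predictions."""
    return sum(binary_focal_loss(p, y, **kw) for p, y in zip(probs, labels)) / len(labels)


if __name__ == "__main__":
    # An easy example (p = 0.95) versus a hard one (p = 0.3), both truly buggy.
    print(binary_focal_loss(0.95, 1), binary_focal_loss(0.3, 1))
```

With γ = 0 and α = 0.5, the loss reduces to half the ordinary binary cross-entropy. Raising γ shrinks the contribution of the easy example by the factor (1 - p_t)^γ.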

While most SOTA approaches fine-tune pre-trained NLP models for programming tasks, Jiang et al. [187] pre-train a model purely on PL data from large codebases and then fine-tune it for APR. Their approach introduces a code-aware beam search strategy to enforce syntax constraints and uses sub-word tokenization to mitigate out-of-vocabulary (OOV) issues. CIRCLE (Continual Repair aCross Programming LanguagEs) [188] is a cross-language APR model. It extends T5-based pre-trained models with continual learning to repair code across multiple PLs, improving adaptability and generalization.

In real-world software development, bugs are often fixed only after a failure has occurred. Integrating self-healing mechanisms [189] with bug detection and fault localization [190] could transform APR by preventing data loss or system crashes before they happen. Despite their promise, LLMs and Code LLMs struggle with runtime bugs that were not seen during training. Addressing this requires coupling LLMs with external validation systems that provide automatic feedback on invalid patches. Ongoing research on patch validation and refinement [189] paves the way for end-to-end APR frameworks that support the entire software development life cycle.

5.18. Code Clone Detection

Most modern software applications rely heavily on code reuse throughout development. While this practice accelerates development and improves productivity, it can also cause code bloat and degrade software quality; these issues are collectively referred to as code clone problems. Code clone detection models address them. For instance, [191] proposes a CNN-based model with two convolutional and pooling layers and evaluates it on the BigCloneBench dataset.

Zhang et al. [192] design a clone detection method that aligns similar functionalities between source code snippets even when their structures differ. The model uses sparse reconstruction and attention-based alignment and detects clones from the resulting similarity scores. Zeng et al. [193], in contrast, focus on computational efficiency and significantly reduce runtime. They use a weighted Recursive Autoencoder (RAE) and process source code in two phases. The first phase, feature extraction, generates program vectors. The second phase detects clones via Approximate Nearest Neighbor Search (ANNS), with Euclidean distance as the similarity metric. Functional clone detection, which identifies code fragments that are functionally equivalent but implemented differently, is explored in [194]. This hybrid model represents source code with sequential tokens, syntactic ASTs, and structural CFGs and is evaluated on Java clone corpora. Recent research has also expanded to cross-language clone detection, as in [195], which identifies clones across multiple PLs.
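The second phase of the approach in [193] searches for program vectors that lie close together under Euclidean distance, and it can be sketched with NumPy. For clarity, this sketch performs exact all-pairs search, which is quadratic in the number of programs. Approximate nearest-neighbour libraries such as FAISS or Annoy are the usual way to scale it. The vectors and the distance threshold are hypothetical stand-ins for the RAE-generated program vectors, not values from [193]. The function name `find_clone_pairs` is likewise hypothetical.

```python
import numpy as np


def find_clone_pairs(vectors: np.ndarray, threshold: float) -> list:
    """Return index pairs (i, j), i < j, whose Euclidean distance is below `threshold`.

    Exact O(n^2) search; an ANNS index would replace this step for large corpora.
    """
    sq = (vectors ** 2).sum(axis=1)
    # ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b, computed for all pairs at once.
    d2 = sq[:, None] + sq[None, :] - 2.0 * vectors @ vectors.T
    dist = np.sqrt(np.clip(d2, 0.0, None))  # clip tiny negative rounding errors
    i, j = np.triu_indices(len(vectors), k=1)
    mask = dist[i, j] < threshold
    return list(zip(i[mask].tolist(), j[mask].tolist()))


if __name__ == "__main__":
    # Four hypothetical program vectors forming two near-duplicate groups.
    vecs = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.0, 5.05]])
    print(find_clone_pairs(vecs, threshold=0.5))
```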

The terms code clone detection and code similarity detection sometimes overlap, but they serve distinct purposes. Code clone detection can be considered a subset of code similarity detection, yet many studies use the two terms interchangeably, which can confuse readers. Future research should distinguish these tasks clearly while developing effective clone detection strategies for large-scale projects. Finally, applying LLMs or Code LLMs to code clone detection holds promise for improving accuracy and scalability. Future research can deliver robust solutions by adopting up-to-date methodologies and expanding cross-language clone detection capabilities. Such work can also clarify the distinction between the two tasks and thereby improve software quality and maintainability.
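The distinction can be made concrete with the widely used clone taxonomy. Type-1 clones are identical apart from layout and comments, and Type-2 clones additionally differ only in identifiers or literals. Similarity detection, by contrast, produces a graded score. The sketch below uses Python's standard `tokenize` module to check for Type-1 and Type-2 clones by comparing normalized token sequences, and it contrasts that check with a Jaccard similarity score over the same tokens. The normalization rules and example snippets are our assumptions. Type-3 and Type-4 (semantic) clones require far more than token comparison.

```python
import io
import keyword
import token
import tokenize

_SKIP = {tokenize.COMMENT, tokenize.NL, tokenize.ENDMARKER}


def normalized_tokens(code: str, abstract: bool = True) -> list:
    """Token sequence without comments or layout; optionally abstract names and literals."""
    out = []
    for tok in tokenize.generate_tokens(io.StringIO(code).readline):
        if tok.type in _SKIP:
            continue
        if tok.type in (tokenize.NEWLINE, tokenize.INDENT, tokenize.DEDENT):
            out.append(token.tok_name[tok.type])  # keep block structure, drop widths
        elif abstract and tok.type == tokenize.NAME and not keyword.iskeyword(tok.string):
            out.append("ID")
        elif abstract and tok.type in (tokenize.NUMBER, tokenize.STRING):
            out.append("LIT")
        else:
            out.append(tok.string)
    return out


def clone_type(a: str, b: str):
    """Return 'Type-1', 'Type-2', or None (not a clone under these checks)."""
    if normalized_tokens(a, abstract=False) == normalized_tokens(b, abstract=False):
        return "Type-1"
    if normalized_tokens(a) == normalized_tokens(b):
        return "Type-2"
    return None


def token_similarity(a: str, b: str) -> float:
    """Graded Jaccard similarity of abstracted token sets, in [0, 1]."""
    sa, sb = set(normalized_tokens(a)), set(normalized_tokens(b))
    return len(sa & sb) / len(sa | sb) if sa | sb else 1.0


if __name__ == "__main__":
    a = "def add(x, y):\n    return x + y  # sum\n"
    b = "def add(x, y):\n  return x+y\n"        # layout and comment differ
    c = "def plus(a, b):\n    return a + b\n"   # identifiers renamed
    d = "def add(x, y):\n    return x * y\n"    # different operation
    print(clone_type(a, b), clone_type(a, c), clone_type(a, d), token_similarity(a, d))
```

The last pair is not a clone under either rule, yet it still receives a high similarity score. Conflating the two tasks obscures exactly this gap.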

To offer a deeper analytical view, we further grouped the neural methods used across programming tasks by architectural family and learning paradigm. These families broadly include sequence-based models (e.g., RNNs, LSTMs, and GRUs), Transformer-based large language models (e.g., CodeBERT, CodeT5, and PLBART), and graph-based neural networks (e.g., GGNN and RGCN). Each family has distinct strengths and limitations. Sequence-based models capture short-range dependencies well but struggle with long-range context. Transformer-based architectures handle long-range dependencies effectively but demand extensive computational resources and training data. Graph-based approaches model code structure more explicitly but often generalize poorly because of their task-specific designs. Table 6 summarizes the comparison of these method families in the context of programming-centric SE tasks.

Table 6.

Comparison of major neural method families applied to programming and SE tasks.

6. Data, Benchmarks, and Evaluation Metrics

6.1. Datasets and Benchmarks