A New Method for Detecting Plastic-Mulched Land Using GF-2 Imagery

Abstract

1. Introduction

2. Materials and Methods

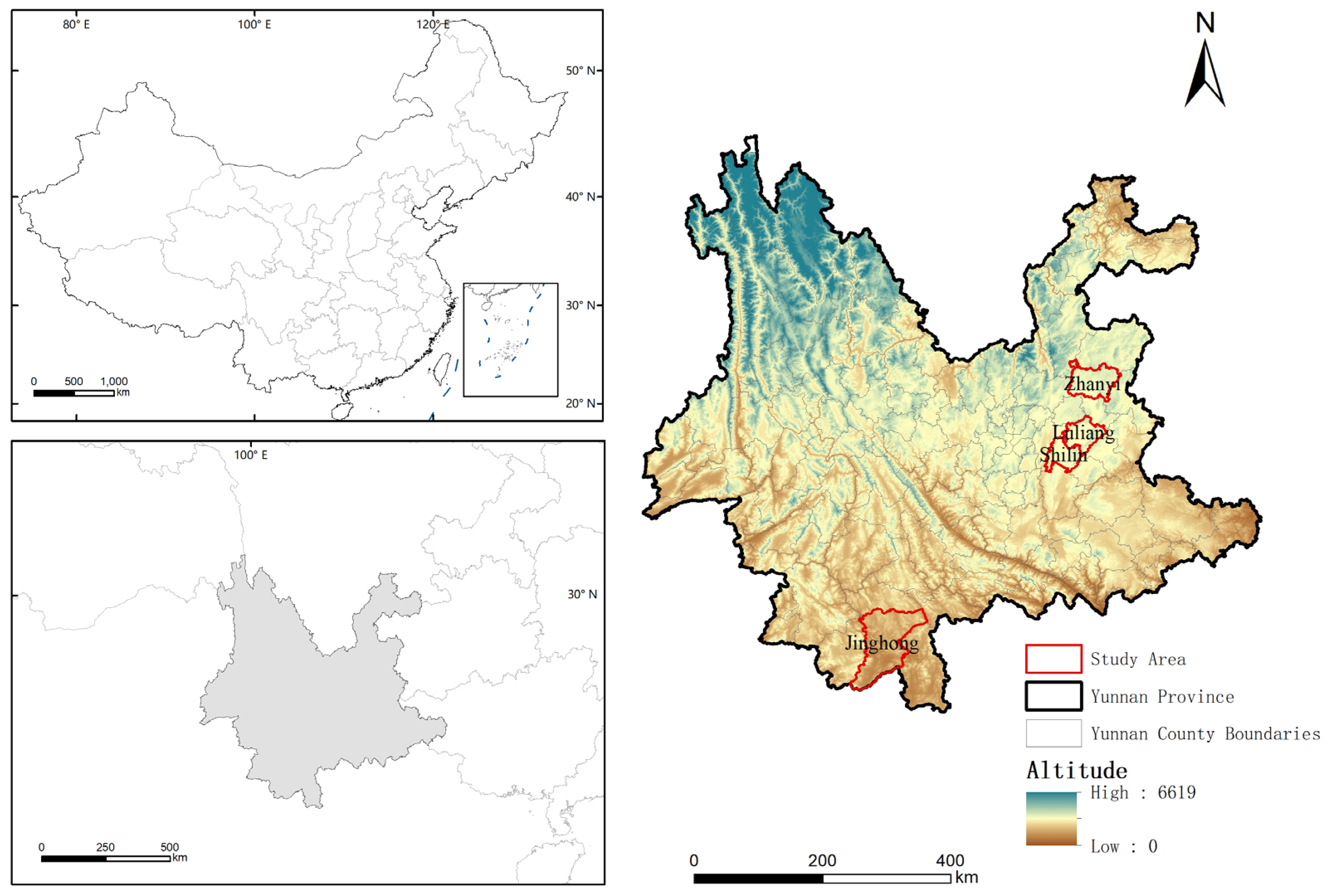

2.1. Overview of the Study Area

2.2. Image Acquisition and Preprocessing

2.3. Technical Route

2.4. Fusion Algorithm

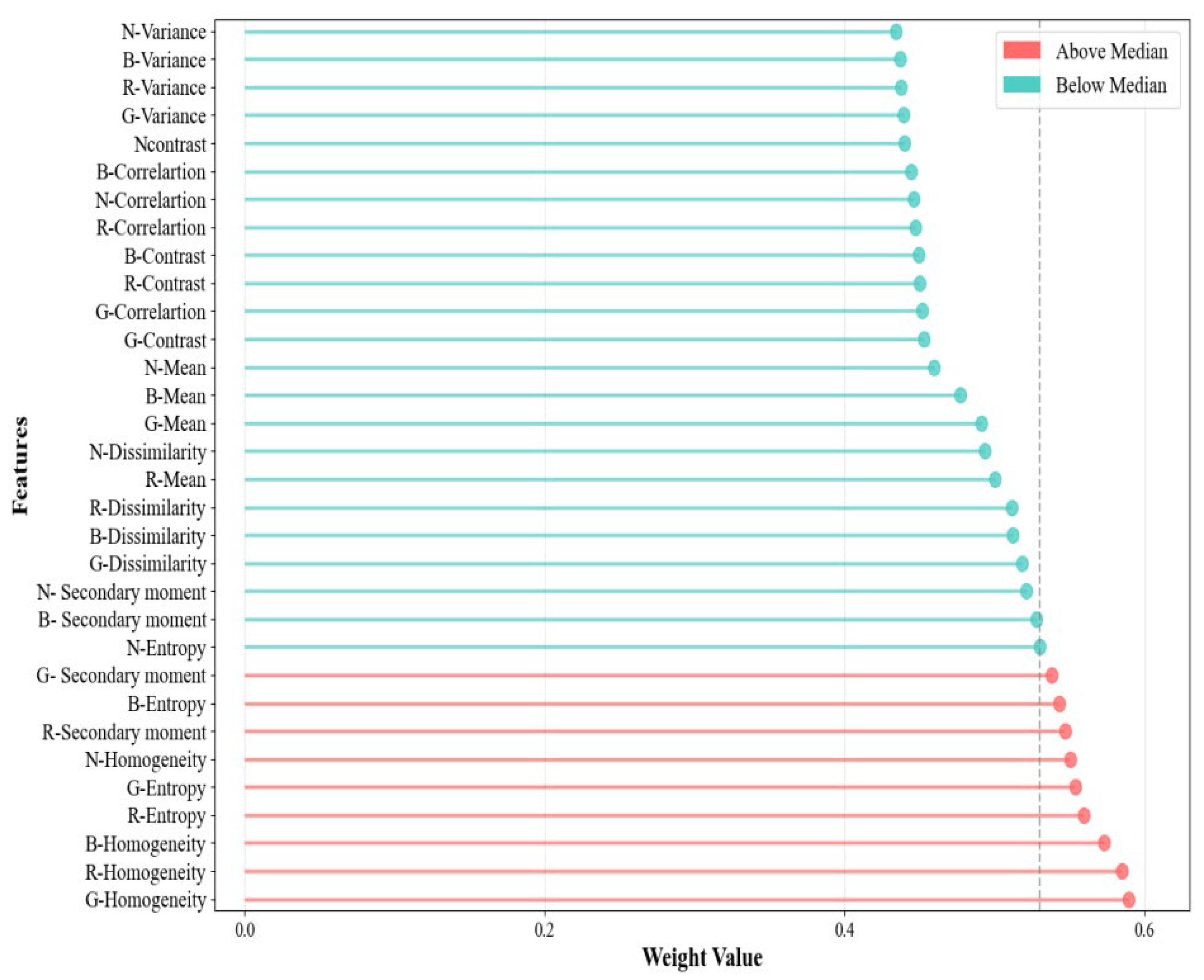

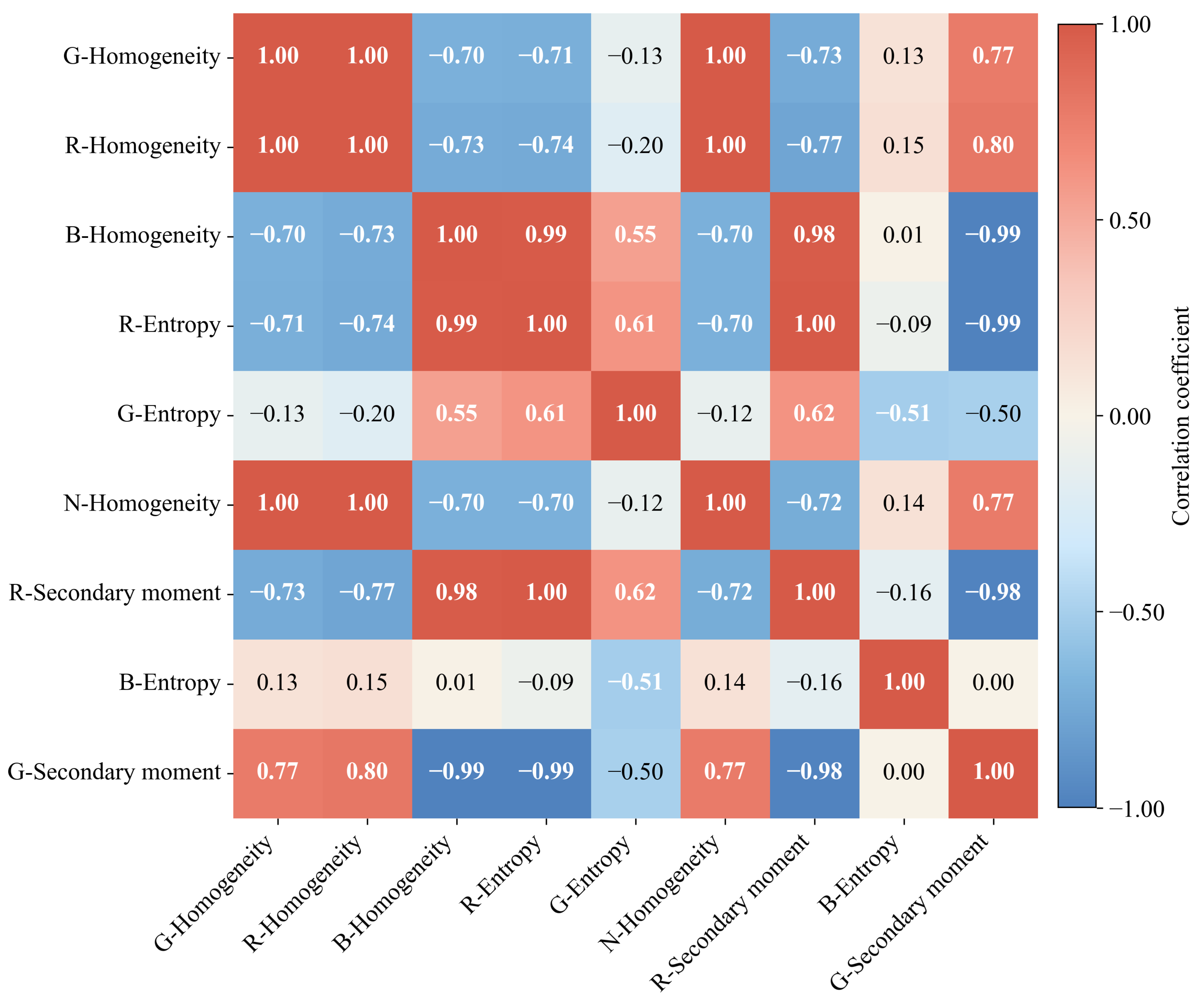

2.5. Texture Feature Calculation and Optimal Feature Selection

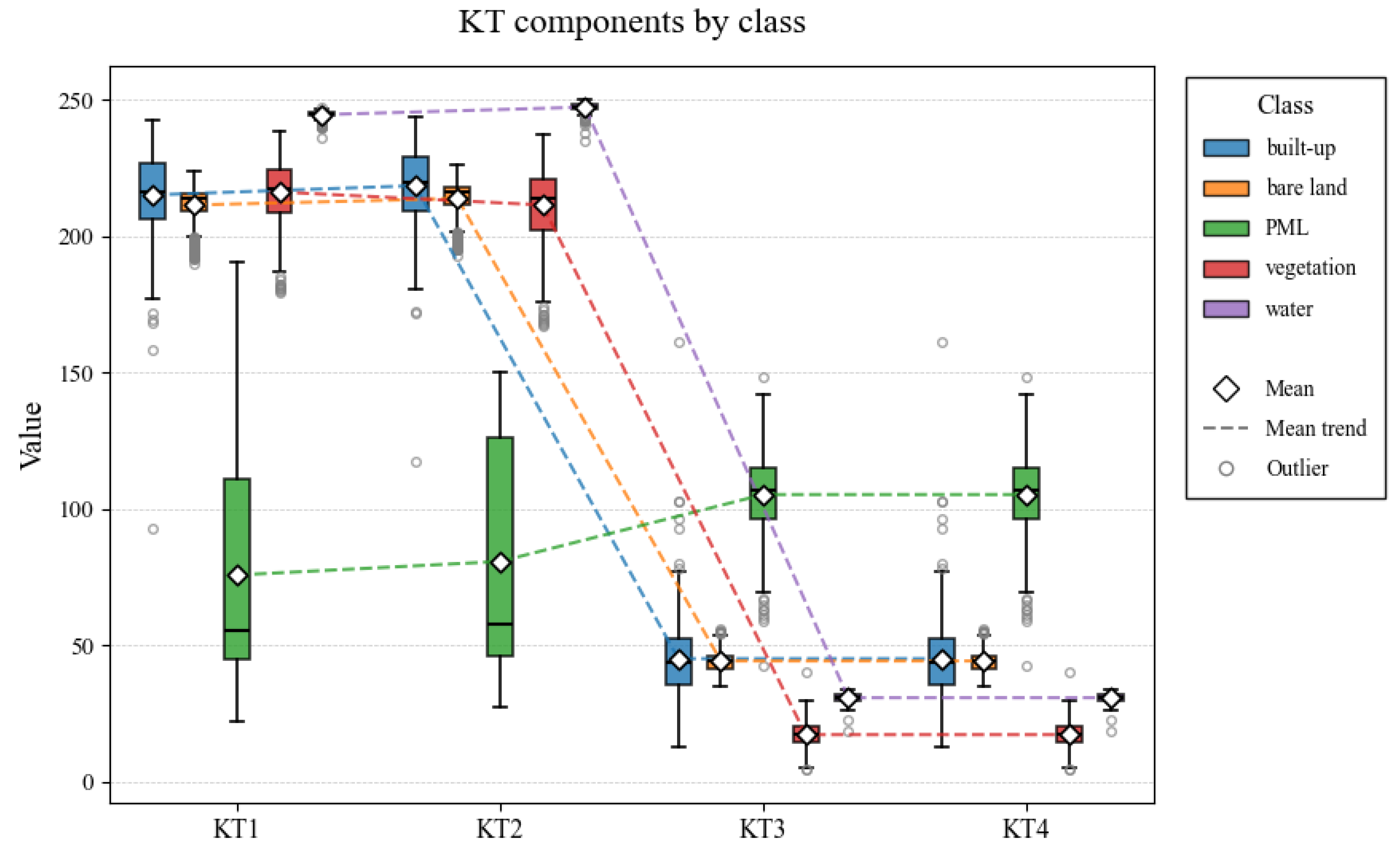

2.6. K-T Transform

2.7. Image Segmentation

3. Results

3.1. Classification Feature Selection Results

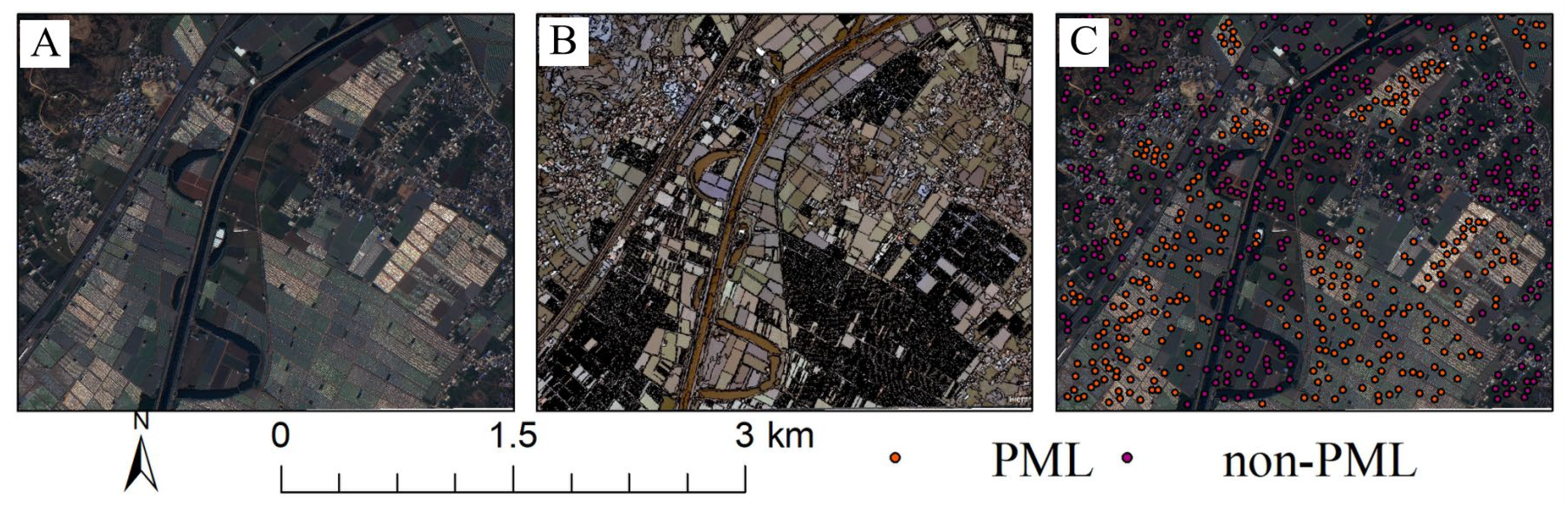

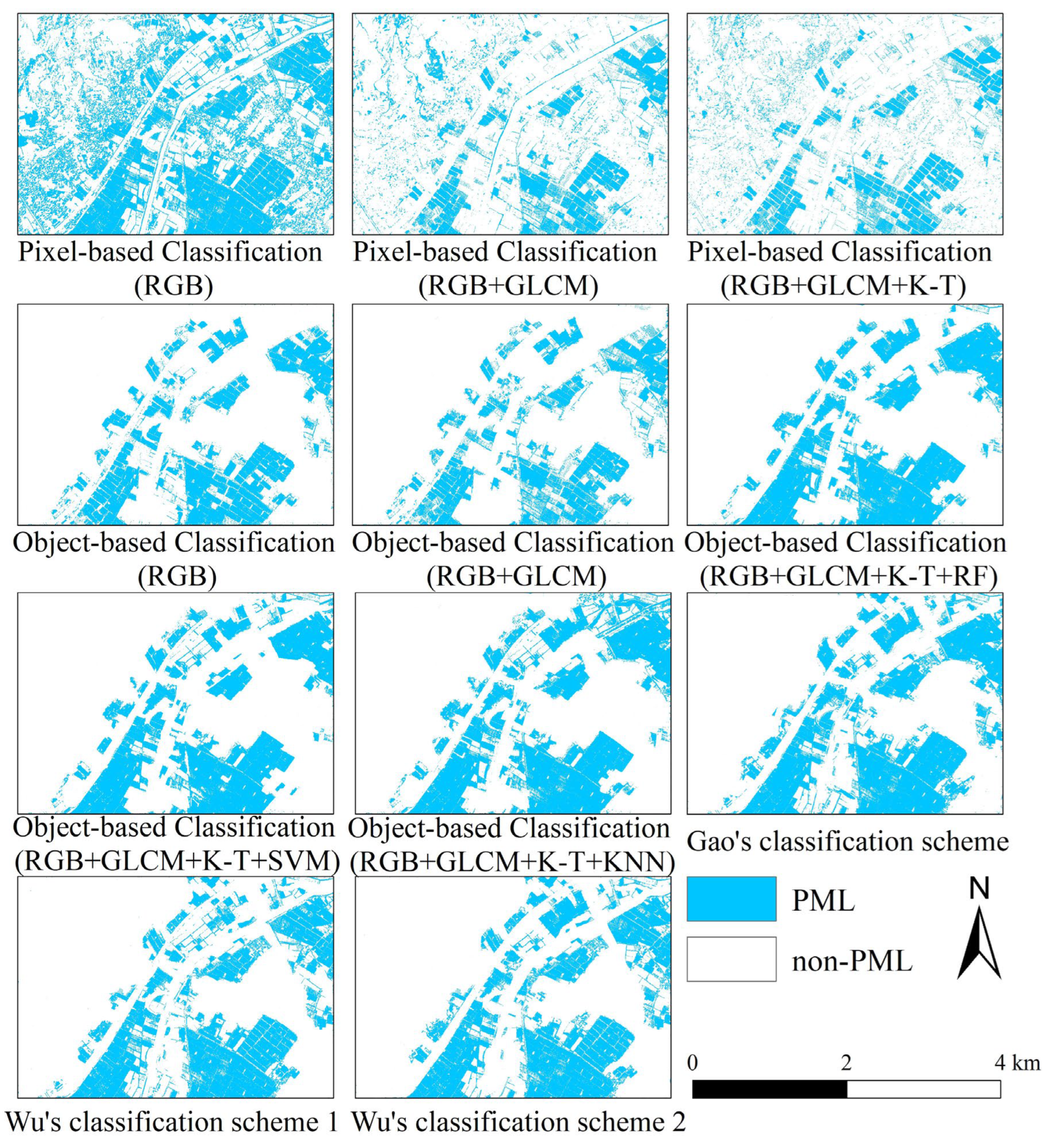

3.2. Classification Results

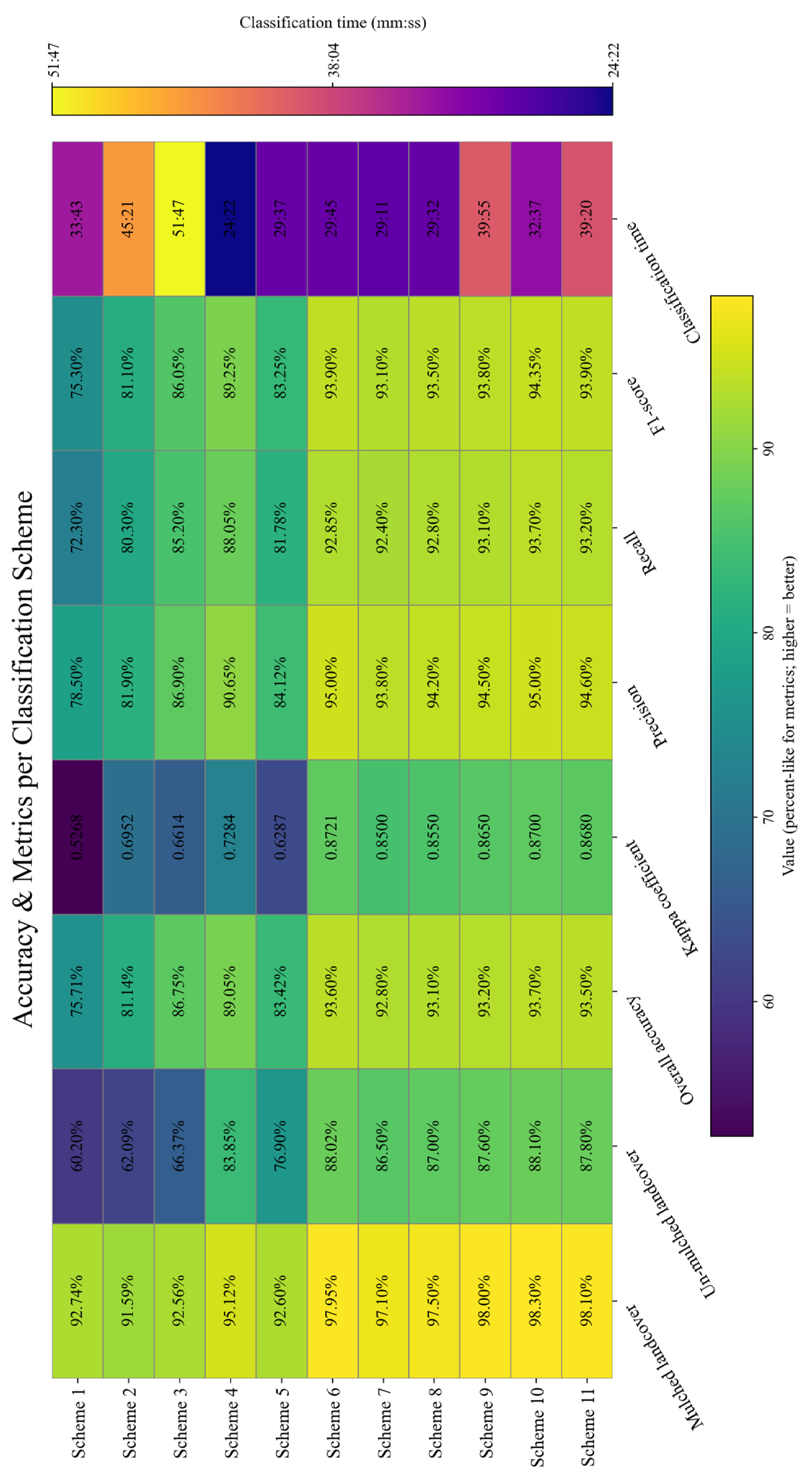

3.3. Accuracy of Different Classification Schemes

4. Discussion

4.1. Rationality Demonstration of Method

4.2. Uncertainties and Future Needs

5. Conclusions

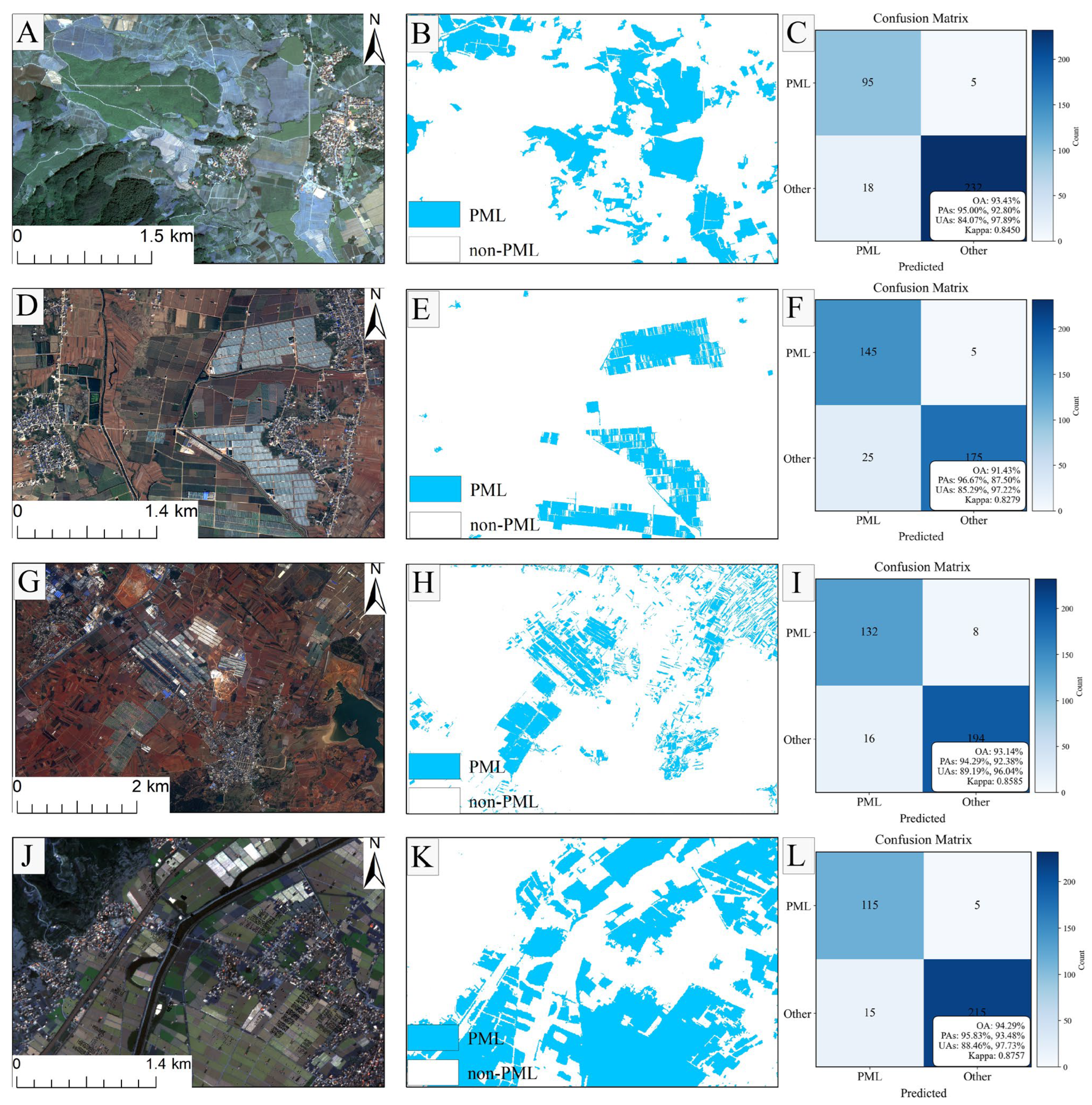

- This study demonstrates that plastic-mulched land (PML) can be accurately identified using object-based classification of GF-2 fused imagery, integrating the original spectral bands, optimized texture features, and the second component of the K-T transformation. The method proved transferable across different regions of Yunnan Province, achieving classification accuracies of 93.60% in Luliang County, 93.14% in Zhanyi County, 94.29% in Shilin District, and 93.43% in Jinghong City, all above 93%. An accuracy assessment carried out in November in the Luliang area achieved 94.29%, indicating that the method is also temporally stable.

- The second component of the K-T transformation, derived from GF-2 imagery using transformation coefficients from IKONOS data, effectively enhanced the spectral representation of PML and provided clear separability from other land-cover types. Incorporating this component as a classification feature substantially improved the accuracy of PML identification.
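
The object-based integration of features summarized in these conclusions can be illustrated with a minimal sketch. It assumes that a segmentation step has already assigned every pixel a segment label, and it averages per-pixel features (four spectral bands, one texture value, and the K-T second component) within each segment to give one feature vector per object. The function name `object_features`, the toy values, and the choice of mean aggregation are illustrative assumptions, not the authors' implementation.

```python
from collections import defaultdict

def object_features(labels, features):
    """Average per-pixel feature vectors within each segment.

    labels   -- segment id for each pixel (hypothetical segmentation output)
    features -- per-pixel feature vectors, same length as labels
    Returns {segment_id: mean feature vector}.
    """
    if len(labels) != len(features):
        raise ValueError("labels and features must have the same length")
    sums = {}
    counts = defaultdict(int)
    for seg, vec in zip(labels, features):
        if seg not in sums:
            sums[seg] = [0.0] * len(vec)
        for i, value in enumerate(vec):
            sums[seg][i] += value
        counts[seg] += 1
    return {seg: [s / counts[seg] for s in total] for seg, total in sums.items()}

# Toy data (not from the study): 4 pixels in 2 segments.
# Each vector is [blue, green, red, nir, texture, kt2].
labels = [0, 0, 1, 1]
features = [
    [0.10, 0.12, 0.11, 0.30, 0.5, 0.20],
    [0.12, 0.14, 0.13, 0.34, 0.7, 0.24],
    [0.30, 0.32, 0.31, 0.36, 0.1, 0.02],
    [0.32, 0.34, 0.33, 0.38, 0.3, 0.04],
]
objects = object_features(labels, features)
```

Each resulting vector could then be passed to a classifier, so that the decision is made per object rather than per pixel.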

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zeng, L.S.; Zhou, Z.F.; Shi, Y.X. Environmental Problems and Control Ways of Plastic Film in Agricultural Production. Appl. Mech. Mater. 2013, 295–298, 2187–2190.

- Zhang, Q.-Q.; Ma, Z.-R.; Cai, Y.-Y.; Li, H.-R.; Ying, G.-G. Agricultural Plastic Pollution in China: Generation of Plastic Debris and Emission of Phthalic Acid Esters from Agricultural Films. Environ. Sci. Technol. 2021, 55, 12459–12470.

- Wei, Z.; Cui, Y.; Li, S.; Wang, X.; Dong, J.; Wu, L.; Yao, Z.; Wang, S.; Fan, W. A Novel Two-Step Framework for Mapping Fraction of Mulched Film Based on Very-High-Resolution Satellite Observation and Deep Learning. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4406214.

- Yang, H.; Hu, Z.; Wu, F.; Guo, K.; Gu, F.; Cao, M. The Use and Recycling of Agricultural Plastic Mulch in China: A Review. Sustainability 2023, 15, 15096.

- Jiménez-Lao, R.; Aguilar, F.J.; Nemmaoui, A.; Aguilar, M.A. Remote Sensing of Agricultural Greenhouses and Plastic-Mulched Farmland: An Analysis of Worldwide Research. Remote Sens. 2020, 12, 2649.

- Yan, C.; Liu, E.; Shu, F.; Liu, Q.; Liu, S.; He, W. Review of Agricultural Plastic Mulching and Its Residual Pollution and Prevention Measures in China. J. Agric. Resour. Environ. 2014, 31, 95–102.

- Espí, E.; Salmerón, A.; Fontecha, A.; García, Y.; Real, A.I. Plastic Films for Agricultural Applications. J. Plast. Film. Sheeting 2006, 22, 85–102.

- Dai, J.; Hu, C.; Flury, M.; Huang, Y.; Rillig, M.C.; Ji, D.; Peng, J.; Fei, J.; Huang, Q.; Xiong, Y.; et al. National Inventory of Plastic Mulch Residues in Chinese Croplands From 1993 to 2050. Glob. Change Biol. 2025, 31, e70297.

- Qi, R.; Jones, D.L.; Li, Z.; Liu, Q.; Yan, C. Behavior of Microplastics and Plastic Film Residues in the Soil Environment: A Critical Review. Sci. Total Environ. 2020, 703, 134722.

- Wanner, P. Plastic in Agricultural Soils—A Global Risk for Groundwater Systems and Drinking Water Supplies?—A Review. Chemosphere 2021, 264, 128453.

- Li, Z.; Zhang, R.; Wang, X.; Chen, F.; Lai, D.; Tian, C. Effects of Plastic Film Mulching with Drip Irrigation on N2O and CH4 Emissions from Cotton Fields in Arid Land. J. Agric. Sci. 2014, 152, 534–542.

- Berger, S.; Kim, Y.; Kettering, J.; Gebauer, G. Plastic Mulching in Agriculture—Friend or Foe of N2O Emissions? Agric. Ecosyst. Environ. 2013, 167, 43–51.

- Wang, S.; Fan, T.; Cheng, W.; Wang, L.; Zhao, G.; Li, S.; Dang, Y.; Zhang, J. Occurrence of Macroplastic Debris in the Long-Term Plastic Film-Mulched Agricultural Soil: A Case Study of Northwest China. Sci. Total Environ. 2022, 831, 154881.

- Kumar, M.; Xiong, X.; He, M.; Tsang, D.C.W.; Gupta, J.; Khan, E.; Harrad, S.; Hou, D.; Ok, Y.S.; Bolan, N.S. Microplastics as Pollutants in Agricultural Soils. Environ. Pollut. 2020, 265, 114980.

- Hayes, D.G.; Wadsworth, L.C.; Sintim, H.Y.; Flury, M.; English, M.; Schaeffer, S.; Saxton, A.M. Effect of Diverse Weathering Conditions on the Physicochemical Properties of Biodegradable Plastic Mulches. Polym. Test. 2017, 62, 454–467.

- Steinmetz, Z.; Wollmann, C.; Schaefer, M.; Buchmann, C.; David, J.; Tröger, J.; Muñoz, K.; Frör, O.; Schaumann, G.E. Plastic Mulching in Agriculture. Trading Short-Term Agronomic Benefits for Long-Term Soil Degradation? Sci. Total Environ. 2016, 550, 690–705.

- Van Schothorst, B.; Beriot, N.; Huerta Lwanga, E.; Geissen, V. Sources of Light Density Microplastic Related to Two Agricultural Practices: The Use of Compost and Plastic Mulch. Environments 2021, 8, 36.

- Uzamurera, A.G.; Wang, P.-Y.; Zhao, Z.-Y.; Tao, X.-P.; Zhou, R.; Wang, W.-Y.; Xiong, X.-B.; Wang, S.; Wesly, K.; Tao, H.-Y.; et al. Thickness-Dependent Release of Microplastics and Phthalic Acid Esters from Polythene and Biodegradable Residual Films in Agricultural Soils and Its Related Productivity Effects. J. Hazard. Mater. 2023, 448, 130897.

- Panno, S.V.; Kelly, W.R.; Scott, J.; Zheng, W.; McNeish, R.E.; Holm, N.; Hoellein, T.J.; Baranski, E.L. Microplastic Contamination in Karst Groundwater Systems. Groundwater 2019, 57, 189–196.

- Li, J.; Liu, H.; Paul Chen, J. Microplastics in Freshwater Systems: A Review on Occurrence, Environmental Effects, and Methods for Microplastics Detection. Water Res. 2018, 137, 362–374.

- Lebreton, L.C.M.; Van Der Zwet, J.; Damsteeg, J.-W.; Slat, B.; Andrady, A.; Reisser, J. River Plastic Emissions to the World’s Oceans. Nat. Commun. 2017, 8, 15611.

- Lu, L.; Di, L.; Ye, Y. A Decision-Tree Classifier for Extracting Transparent Plastic-Mulched Landcover from Landsat-5 TM Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4548–4558.

- Yang, D.; Chen, J.; Zhou, Y.; Chen, X.; Chen, X.; Cao, X. Mapping Plastic Greenhouse with Medium Spatial Resolution Satellite Data: Development of a New Spectral Index. ISPRS J. Photogramm. Remote Sens. 2017, 128, 47–60.

- Zhang, P.; Du, P.; Guo, S.; Zhang, W.; Tang, P.; Chen, J.; Zheng, H. A Novel Index for Robust and Large-Scale Mapping of Plastic Greenhouse from Sentinel-2 Images. Remote Sens. Environ. 2022, 276, 113042.

- Ma, X.; Shuai, Y.; Latipa, T. An Adaptive Index for Mapping Plastic-Mulched Crop Fields from Landsat-8 OLI Images. Int. J. Digit. Earth 2025, 18, 2538818.

- Fu, C.; Cheng, L.; Qin, S.; Tariq, A.; Liu, P.; Zou, K.; Chang, L. Timely Plastic-Mulched Cropland Extraction Method from Complex Mixed Surfaces in Arid Regions. Remote Sens. 2022, 14, 4051.

- Levin, N.; Lugassi, R.; Ramon, U.; Braun, O.; Ben-Dor, E. Remote Sensing as a Tool for Monitoring Plasticulture in Agricultural Landscapes. Int. J. Remote Sens. 2007, 28, 183–202.

- Hasituya; Chen, Z.; Wang, L.; Wu, W.; Jiang, Z.; Li, H. Monitoring Plastic-Mulched Farmland by Landsat-8 OLI Imagery Using Spectral and Textural Features. Remote Sens. 2016, 8, 353.

- Hasituya; Chen, Z. Mapping Plastic-Mulched Farmland with Multi-Temporal Landsat-8 Data. Remote Sens. 2017, 9, 557.

- Dong, X.; Li, J.; Xu, N.; Lei, J.; He, Z.; Zhao, L. A Novel Phenology-Based Index for Plastic-Mulched Farmland Extraction and Its Application in a Typical Agricultural Region of China Using Sentinel-2 Imagery and Google Earth Engine. Land 2024, 13, 1825.

- Lu, L.; Xu, Y.; Huang, X.; Zhang, H.K.; Du, Y. Large-Scale Mapping of Plastic-Mulched Land from Sentinel-2 Using an Index-Feature-Spatial-Attention Fused Deep Learning Model. Sci. Remote Sens. 2025, 11, 100188.

- Koc-San, D. Evaluation of Different Classification Techniques for the Detection of Glass and Plastic Greenhouses from WorldView-2 Satellite Imagery. J. Appl. Remote Sens. 2013, 7, 073553.

- Luo, Q.; Liu, X.; Shi, Z.; Qu, R.; Zhao, W. Study on Plastic Mulch Identification Based on the Fusion of GF-1 and Sentinel-2 Images. Geogr. Geo Inf. Sci. 2021, 37, 39–46.

- Lu, L.; Hang, D.; Di, L. Threshold Model for Detecting Transparent Plastic-Mulched Landcover Using Moderate-Resolution Imaging Spectroradiometer Time Series Data: A Case Study in Southern Xinjiang, China. J. Appl. Remote Sens. 2015, 9, 097094.

- Agüera, F.; Aguilar, F.J.; Aguilar, M.A. Using Texture Analysis to Improve Per-Pixel Classification of Very High Resolution Images for Mapping Plastic Greenhouses. ISPRS J. Photogramm. Remote Sens. 2008, 63, 635–646.

- Wu, J.; Liu, X.; Bo, Y.; Shi, Z.; Fu, Z. Plastic greenhouse recognition based on GF-2 data and multi-texture features. Trans. Chin. Soc. Agric. Eng. 2019, 35, 173–183.

- Gao, M.; Jiang, Q.; Zhao, Y.; Yang, W.; Shi, M. Comparison of plastic greenhouse extraction method based on GF-2 remote-sensing imagery. J. China Agric. Univ. 2018, 23, 125–134.

- Yan, Z.; Chen, Z.; Dorjsuren, A.; Tuvdendorj, B. Mapping Exposure Duration of Plastic-Mulched Farmland Using Object-Scale Spectral Indices and Time Series Sentinel-2 Data. Int. J. Appl. Earth Obs. Geoinf. 2025, 143, 104782.

- Feng, Q.; Niu, B.; Chen, B.; Ren, Y.; Zhu, D.; Yang, J.; Liu, J.; Ou, C.; Li, B. Mapping of Plastic Greenhouses and Mulching Films from Very High Resolution Remote Sensing Imagery Based on a Dilated and Non-Local Convolutional Neural Network. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102441.

- Sun, W.; Chen, B.; Messinger, D.W. Nearest-Neighbor Diffusion-Based Pan-Sharpening Algorithm for Spectral Images. Opt. Eng. 2014, 53, 013107.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Ali, S.A.; Parvin, F.; Vojteková, J.; Costache, R.; Linh, N.T.T.; Pham, Q.B.; Vojtek, M.; Gigović, L.; Ahmad, A.; Ghorbani, M.A. GIS-Based Landslide Susceptibility Modeling: A Comparison between Fuzzy Multi-Criteria and Machine Learning Algorithms. Geosci. Front. 2021, 12, 857–876.

- Kauth, R.J.; Thomas, G.S. The Tasselled Cap—A Graphic Description of the Spectral-Temporal Development of Agricultural Crops as Seen by LANDSAT. Mach. Process. Remote. Sensed Data 1976, 1, 41–51.

- Fei, X.; Zhang, Z.; Gao, X. Study on IKONOS data fusion based on Tasseled Cap transformation. Comput. Eng. Appl. 2008, 44, 233–240.

- Yang, W.; Zhang, Y.; Yin, X.; Wang, J. Construction of ratio build-up index for GF-1 image. Remote Sens. Land Resour. 2016, 28, 35–42.

- Zhou, J.; Zhu, J.; Zuo, T. A tasseled cap transformation for IKONOS images. Mine Surveying 2006, 1, 60–70.

- Dial, G.; Bowen, H.; Gerlach, F.; Grodecki, J.; Oleszczuk, R. IKONOS Satellite, Imagery, and Products. Remote Sens. Environ. 2003, 88, 23–36.

- Li, Z.; Shen, H.; Li, H.; Xia, G.; Gamba, P.; Zhang, L. Multi-Feature Combined Cloud and Cloud Shadow Detection in GaoFen-1 Wide Field of View Imagery. Remote Sens. Environ. 2017, 191, 342–358.

- Zhang, R.; Jia, M.; Wang, Z.; Zhou, Y.; Wen, X.; Tan, Y.; Cheng, L. A Comparison of Gaofen-2 and Sentinel-2 Imagery for Mapping Mangrove Forests Using Object-Oriented Analysis and Random Forest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4185–4193.

- Hu, R.; Wei, M.; Yang, C.; He, J. Taking SPOT5 remote sensing data for example to compare pixel-based and object-oriented classification. Remote Sens. Technol. Appl. 2012, 27, 366–371.

- Shen, X.; Guo, Y.; Cao, J. Object-Based Multiscale Segmentation Incorporating Texture and Edge Features of High-Resolution Remote Sensing Images. PeerJ Comput. Sci. 2023, 9, e1290.

| K-T Transform Component | Blue Band | Green Band | Red Band | Near-Infrared Band |
|---|---|---|---|---|
| 1. Brightness component | 0.326 | 0.509 | 0.560 | 0.567 |
| 2. Greenness component | −0.311 | −0.356 | −0.325 | 0.819 |
| 3. Wetness component | −0.612 | −0.312 | 0.722 | −0.081 |
| 4. The 4th component | −0.650 | 0.719 | −0.243 | −0.031 |
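
A minimal sketch of how such coefficients can be applied to a four-band pixel follows. It assumes each component is a weighted sum of the blue, green, red, and near-infrared values, and it assumes the orientation in which the first component carries the all-positive weights (0.326, 0.509, 0.560, 0.567) and the second carries (−0.311, −0.356, −0.325, 0.819). The function name `kt_transform` and the sample reflectances are hypothetical and are not taken from the study.

```python
# K-T weights, one row per component; columns are (blue, green, red, nir).
# Orientation is an assumption: component 1 is taken as the all-positive row.
KT_WEIGHTS = [
    [0.326, 0.509, 0.560, 0.567],     # component 1
    [-0.311, -0.356, -0.325, 0.819],  # component 2
    [-0.612, -0.312, 0.722, -0.081],  # component 3
    [-0.650, 0.719, -0.243, -0.031],  # component 4
]

def kt_transform(pixel):
    """Return the four K-T components for one (blue, green, red, nir) pixel."""
    if len(pixel) != 4:
        raise ValueError("expected four band values")
    return [sum(w * v for w, v in zip(row, pixel)) for row in KT_WEIGHTS]

# Hypothetical reflectances, not measurements from the study.
vegetation = (0.04, 0.08, 0.05, 0.45)
bright_surface = (0.30, 0.32, 0.33, 0.35)

kt2_vegetation = kt_transform(vegetation)[1]
kt2_bright = kt_transform(bright_surface)[1]
```

Under this orientation the second component contrasts the near-infrared band against the three visible bands, so the vegetation-like toy pixel scores about 0.31 while the bright, spectrally flat one falls slightly below zero. How real plastic-mulched pixels score depends on their measured reflectances.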

| Satellite | Blue Band | Green Band | Red Band | Near-Infrared Band |
|---|---|---|---|---|
| IKONOS [49] | 445–516 nm | 506–595 nm | 632–698 nm | 757–853 nm |
| GF-1 [50] | 450–520 nm | 520–590 nm | 630–690 nm | 770–890 nm |
| GF-2 [51] | 450–520 nm | 520–590 nm | 630–690 nm | 770–890 nm |
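
One way to quantify how closely the GF-2 bands match the IKONOS bands is the fraction of each GF-2 wavelength range that falls inside the corresponding IKONOS range. The sketch below computes that fraction from the band limits in the table above. The overlap metric and the function name `overlap_fraction` are illustrative assumptions, not a step described in the source.

```python
# Band ranges in nm, copied from the table above.
IKONOS = {"blue": (445, 516), "green": (506, 595), "red": (632, 698), "nir": (757, 853)}
GF2 = {"blue": (450, 520), "green": (520, 590), "red": (630, 690), "nir": (770, 890)}

def overlap_fraction(band_a, band_b):
    """Fraction of band_a's width that lies inside band_b."""
    low = max(band_a[0], band_b[0])
    high = min(band_a[1], band_b[1])
    width = band_a[1] - band_a[0]
    return max(0, high - low) / width

# Share of each GF-2 band covered by the matching IKONOS band.
overlaps = {name: overlap_fraction(GF2[name], IKONOS[name]) for name in GF2}
```

With these ranges the GF-2 green band is fully contained in the IKONOS green band, the blue and red bands overlap by roughly 94% and 97%, and the near-infrared band by about 69%.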

| Scheme | Classification Combinations |
|---|---|
| 1 | Pixel-based classification–RGB |
| 2 | Pixel-based classification–RGB + GLCM |
| 3 | Pixel-based classification–RGB + GLCM + KT |
| 4 | Object-oriented classification–RGB |
| 5 | Object-oriented classification–RGB + GLCM |
| 6 | Object-oriented classification–RGB + GLCM + KT (RF) |
| 7 | Object-oriented classification–RGB + GLCM + KT (SVM) |
| 8 | Object-oriented classification–RGB + GLCM + KT (KNN) |
| 9 | Object-oriented classification–Spectral & Shape feature + GLCM + VI (Gao) |
| 10 | Object-oriented classification–RGB + NIR + NDVI + PSI + LBP (Wu 1) |
| 11 | Object-oriented classification–RGB + NIR + NDVI + GLCM + LBP + PSI (Wu 2) |
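
Several of these schemes add GLCM (gray-level co-occurrence matrix) texture features. As a minimal illustration of what such a feature measures, the sketch below builds a normalized, symmetric co-occurrence matrix for horizontally adjacent pixels in a small quantized image and derives contrast and homogeneity from it. The offset, the number of gray levels, the toy images, and the two statistics are assumptions; the text here does not state which GLCM parameters or features were selected.

```python
def glcm(image, levels):
    """Normalized symmetric GLCM for horizontally adjacent pixels."""
    counts = [[0.0] * levels for _ in range(levels)]
    for row in image:
        for left, right in zip(row, row[1:]):
            counts[left][right] += 1
            counts[right][left] += 1  # count both directions for symmetry
    total = sum(sum(r) for r in counts)
    if total == 0:
        raise ValueError("image needs at least two columns")
    return [[c / total for c in r] for r in counts]

def contrast(p):
    """Sum of p[i][j] * (i - j)^2; large when neighbors differ strongly."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))

def homogeneity(p):
    """Sum of p[i][j] / (1 + (i - j)^2); large when neighbors are similar."""
    n = len(p)
    return sum(p[i][j] / (1 + (i - j) ** 2) for i in range(n) for j in range(n))

# Toy 4-level images (hypothetical): a uniform patch and a striped patch.
smooth = [[1, 1, 1, 1], [1, 1, 1, 1]]
striped = [[0, 3, 0, 3], [0, 3, 0, 3]]

smooth_contrast = contrast(glcm(smooth, 4))
striped_contrast = contrast(glcm(striped, 4))
striped_homogeneity = homogeneity(glcm(striped, 4))
```

The uniform patch has zero contrast, while the striped patch, whose neighbors always differ by three gray levels, has a contrast of 9 and a homogeneity of 0.1.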

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, S.; Zheng, S.; Chen, C.; Liu, S.; Dao, J.; Xu, C.; Wang, J. A New Method for Detecting Plastic-Mulched Land Using GF-2 Imagery. Appl. Sci. 2025, 15, 11978. https://doi.org/10.3390/app152211978
