MFFN-FCSA: Multi-Modal Feature Fusion Networks with Fully Connected Self-Attention for Radar Space Target Recognition

Abstract

1. Introduction

- (1)

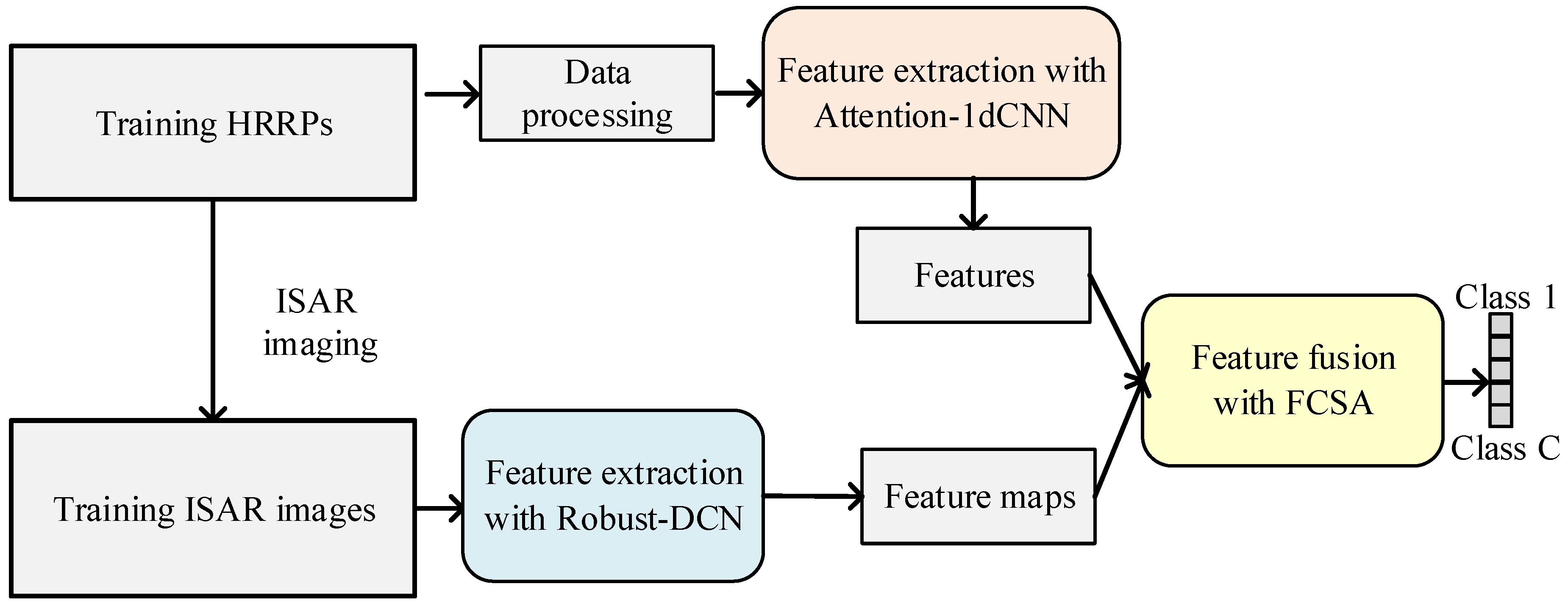

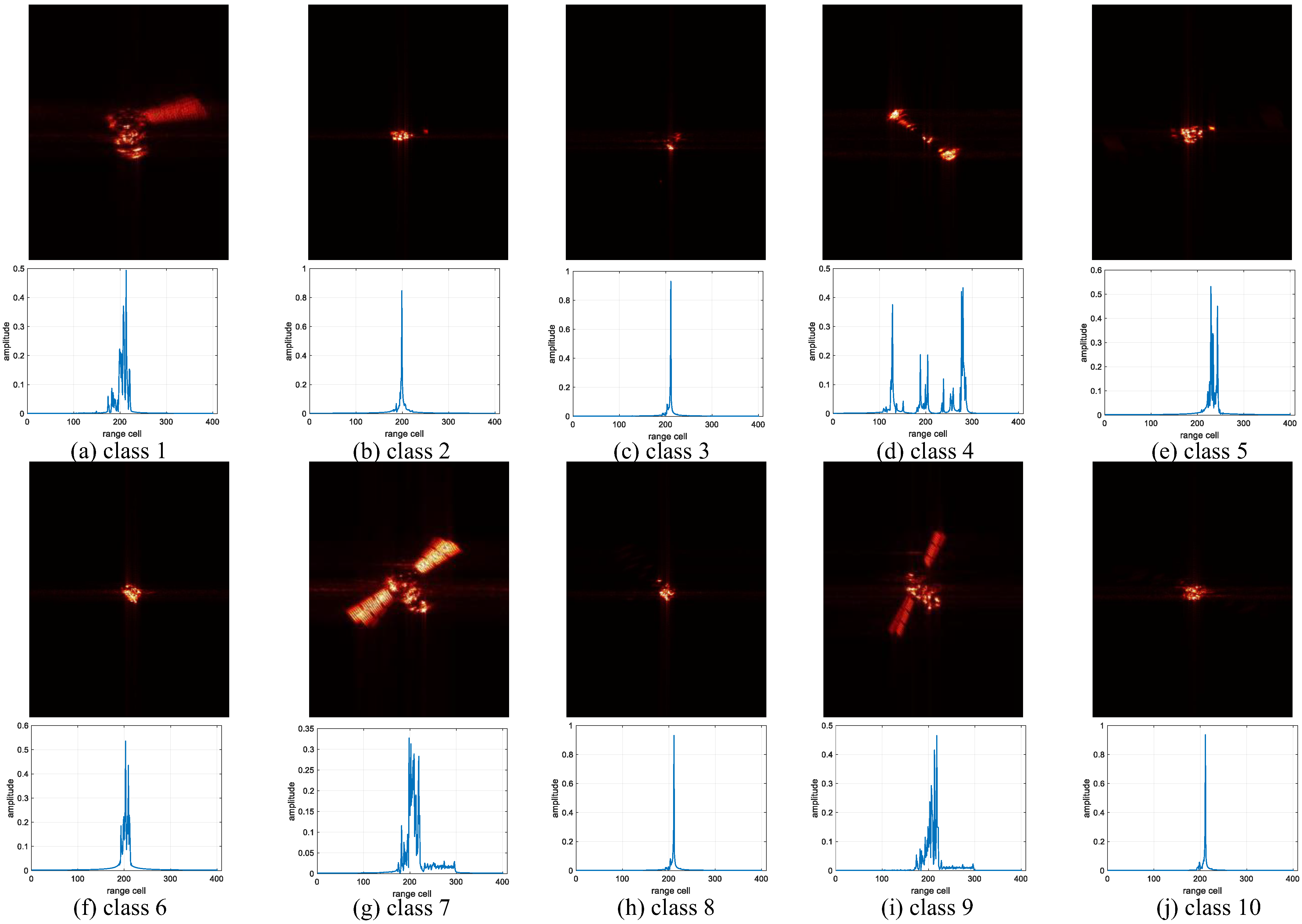

- The proposed model effectively leverages multi-modal radar data of space targets to deliver highly accurate recognition performance. Specifically, one-dimensional HRRPs capture precise radial structural signatures of the targets, while two-dimensional ISAR images provide detailed cross-range scattering distributions.

- (2)

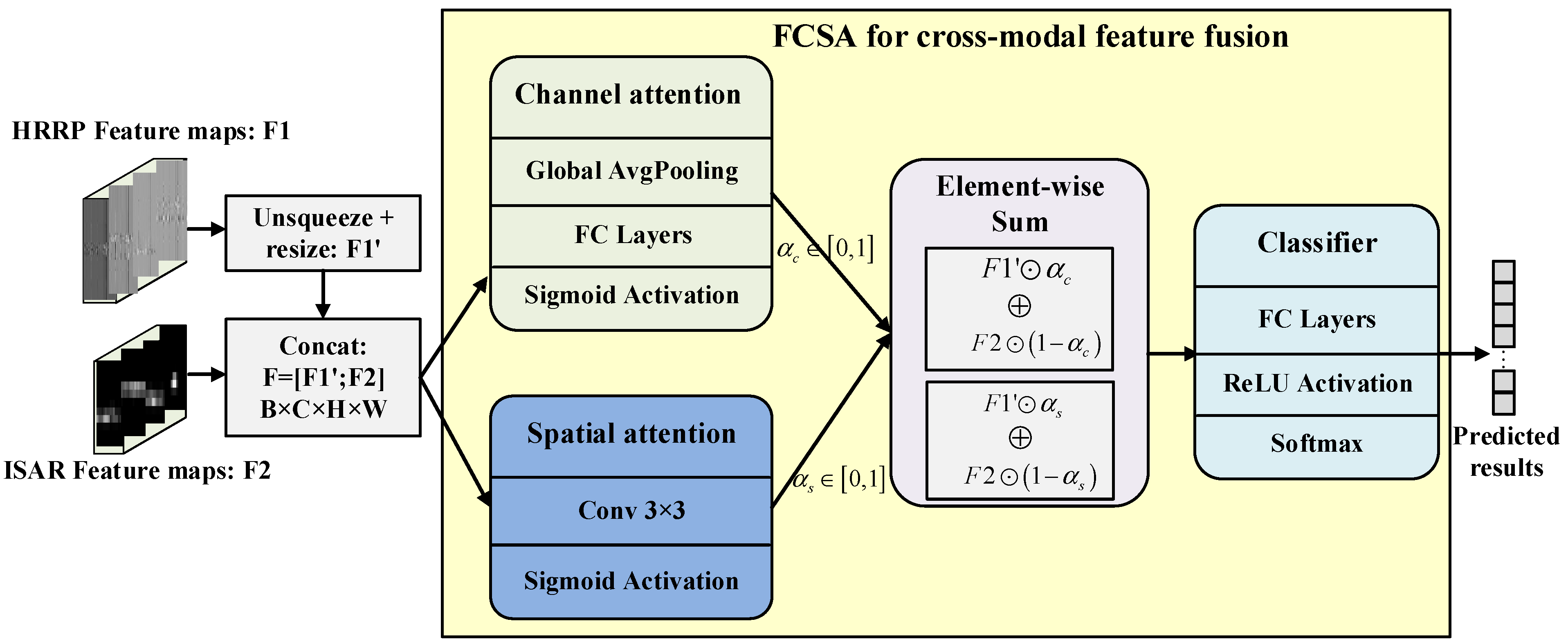

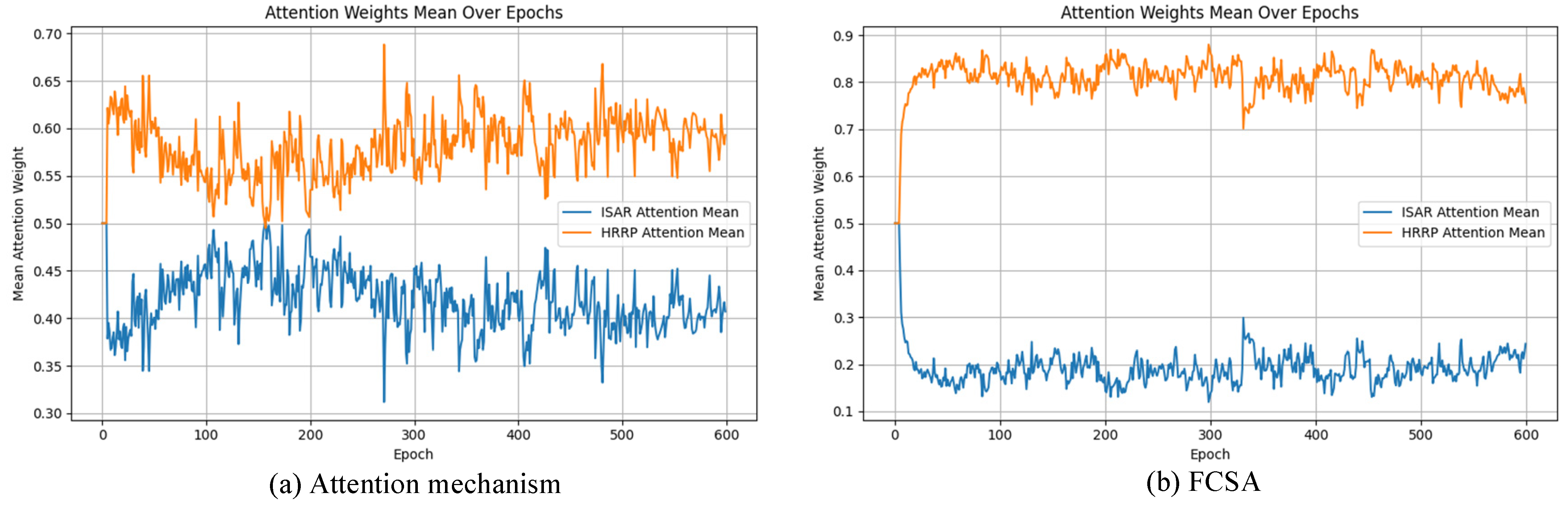

- A novel FCSA mechanism is designed in our model to dynamically integrate cross-modal features of HRRPs and ISAR images by fine-grained spatial–channel interdependency, which enables learning more comprehensive feature representations by leveraging complementary information from the heterogeneous radar modalities.

- (3)

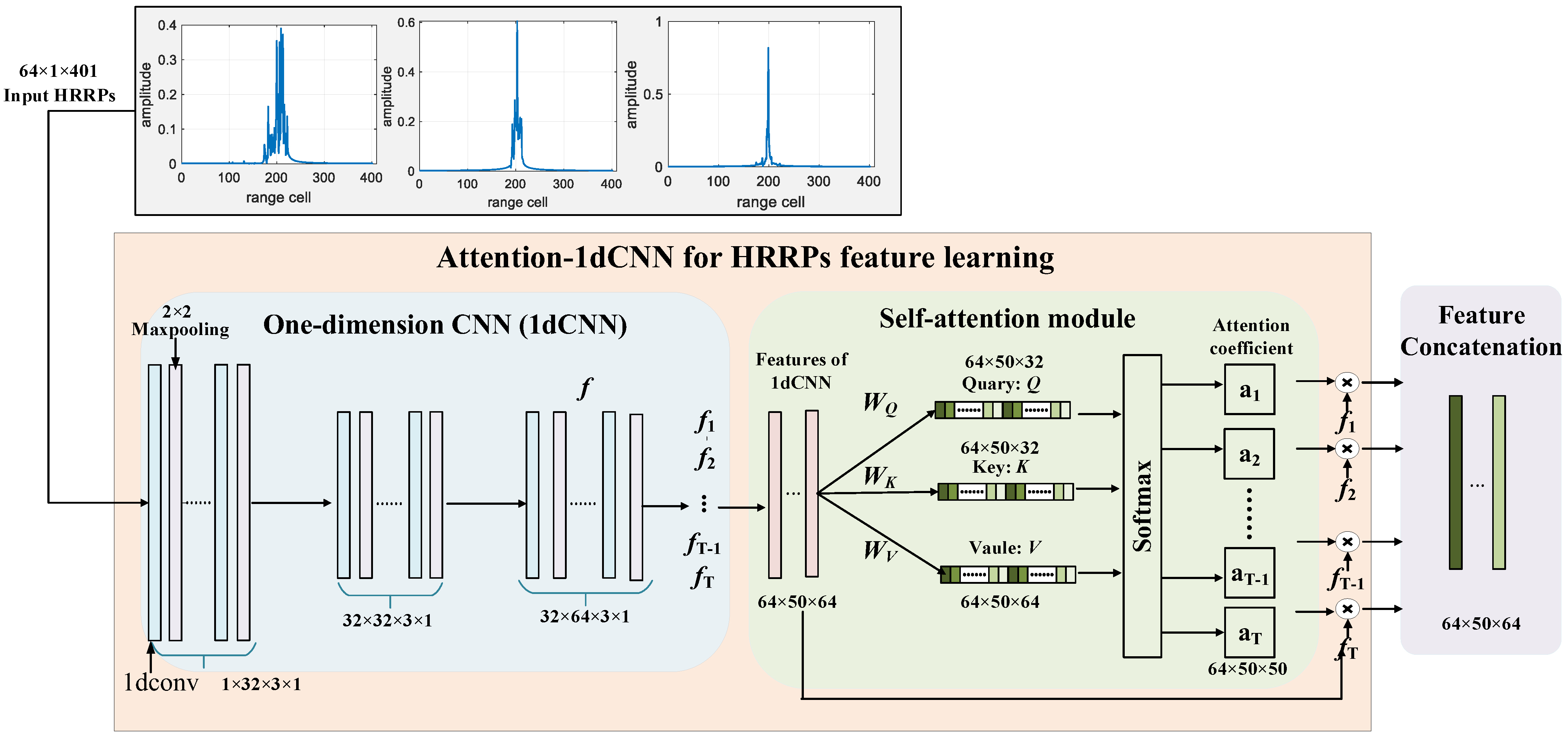

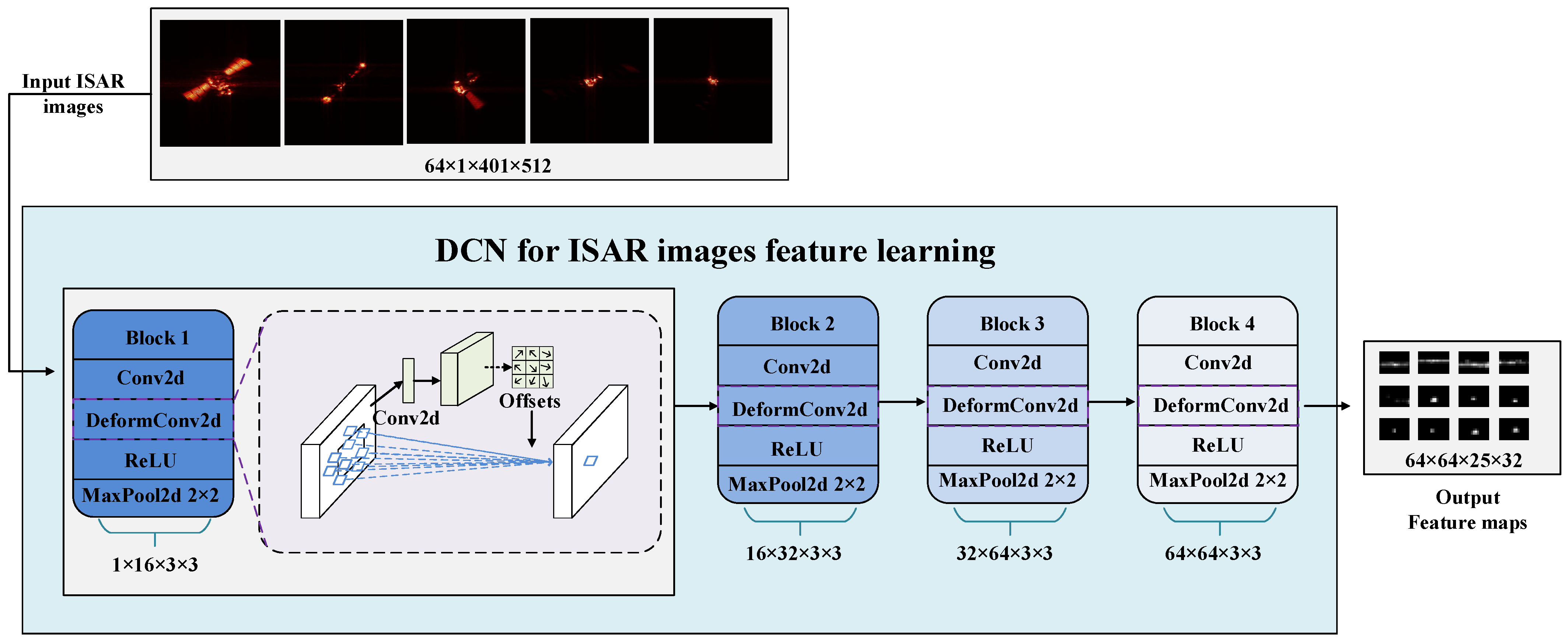

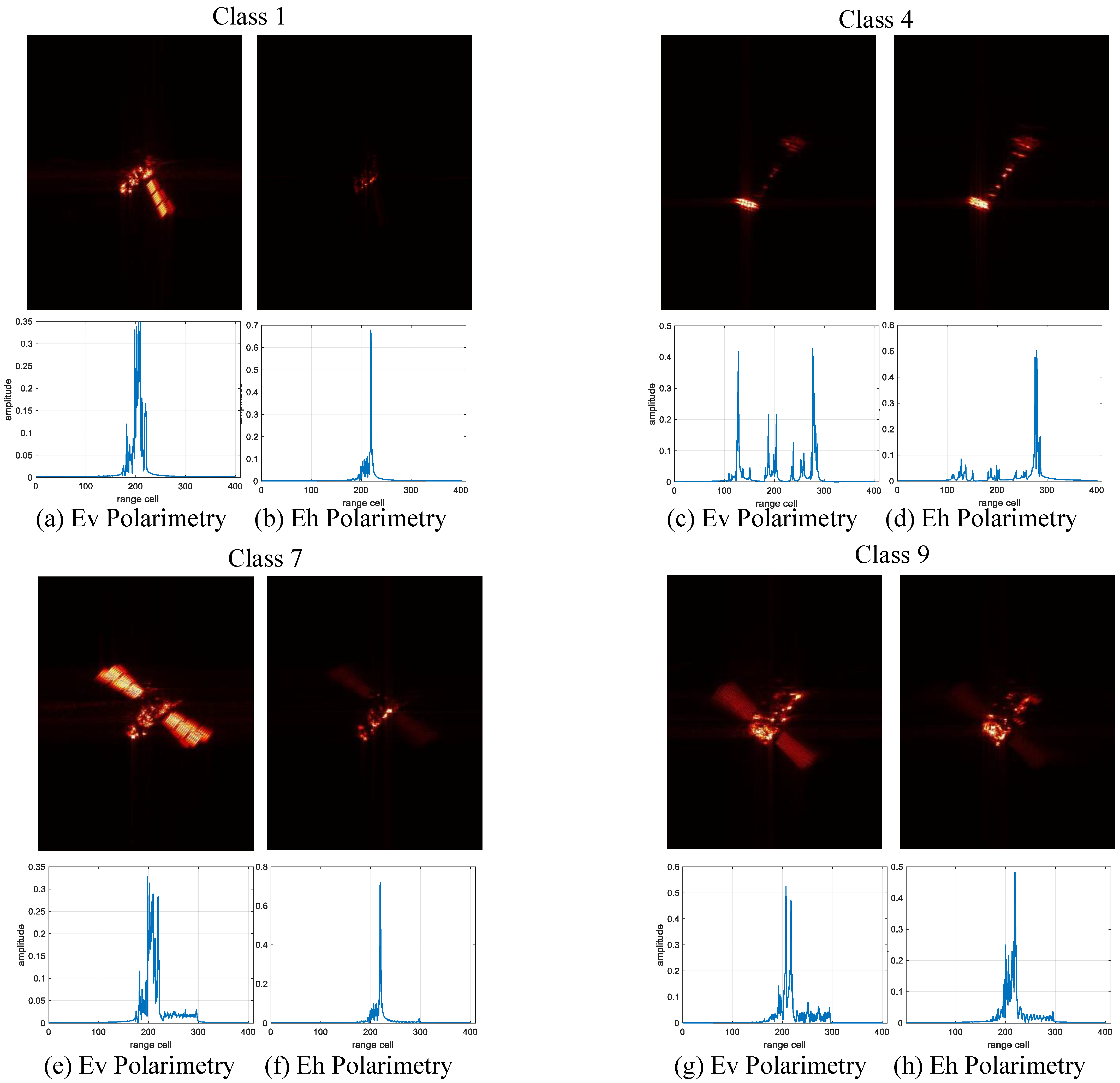

- A HRRP branch with an attention-1dCNN is proposed to learn one-dimensional structural signatures, and an ISAR branch with a robust-DCN is developed to learn robust geometric variation features of space targets, which can make use of the modalities’ radar data to learn representative features. Evaluations validate the exceptional generalization capabilities of our model under the few-shot learning and polarization variation scenarios.
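To make the idea of fully connected cross-modal attention in contribution (2) concrete, the following is a minimal, hypothetical NumPy sketch. It is not the authors' FCSA. It assumes the HRRP branch outputs `L` range-cell tokens with `C` channels and the ISAR branch outputs a `C × H × W` feature map. The ISAR map is flattened into `H·W` spatial tokens and concatenated with the HRRP tokens, so that every token attends to every other token across both modalities. A sigmoid channel gate then models channel interdependency. The function names `softmax` and `cross_modal_self_attention` are illustrative, and the random projection matrices stand in for learned weights.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_modal_self_attention(hrrp_feat, isar_feat, rng):
    """Hypothetical fusion sketch (not the paper's FCSA).

    hrrp_feat: (L, C) features from a 1-D HRRP branch.
    isar_feat: (C, H, W) feature map from a 2-D ISAR branch.
    Returns a fused (C,) descriptor.
    """
    C = hrrp_feat.shape[1]
    # Flatten the ISAR map into H*W spatial tokens of dimension C.
    isar_tokens = isar_feat.reshape(C, -1).T            # (H*W, C)
    tokens = np.concatenate([hrrp_feat, isar_tokens])   # (L + H*W, C)

    # Random projections stand in for learned query/key/value weights.
    Wq, Wk, Wv = (rng.standard_normal((C, C)) / np.sqrt(C) for _ in range(3))
    Q, K, V = tokens @ Wq, tokens @ Wk, tokens @ Wv
    # Fully connected attention: each token attends to all tokens of both modalities.
    attn = softmax(Q @ K.T / np.sqrt(C), axis=-1)       # (N, N), rows sum to 1
    spatial_out = tokens + attn @ V                     # residual connection

    # Channel interdependency: sigmoid gate computed from pooled channel statistics.
    channel_stats = spatial_out.mean(axis=0)            # (C,)
    Wc = rng.standard_normal((C, C)) / np.sqrt(C)
    gate = 1.0 / (1.0 + np.exp(-(channel_stats @ Wc)))  # (C,), values in (0, 1)
    return channel_stats * gate                         # fused (C,) descriptor

# Usage with hypothetical shapes: 32 range cells, 16 channels, 8x8 ISAR map.
rng = np.random.default_rng(0)
hrrp = rng.standard_normal((32, 16))
isar = rng.standard_normal((16, 8, 8))
fused = cross_modal_self_attention(hrrp, isar, rng)
print(fused.shape)  # (16,)
```

Concatenating the tokens before attention, rather than attending within each modality separately, is what lets HRRP range cells and ISAR pixels weight one another directly. A trained model would typically add multiple heads, normalization, and a classifier head on top of the fused descriptor.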

2. Proposed Methods

2.1. Attention-1dCNN for HRRPs

2.2. Robust-DCN for ISAR Images

2.3. Feature Fusion Networks with FCSA

2.4. Training and Test Procedures

3. Results

3.1. Data Description and Settings

3.2. Recognition Results

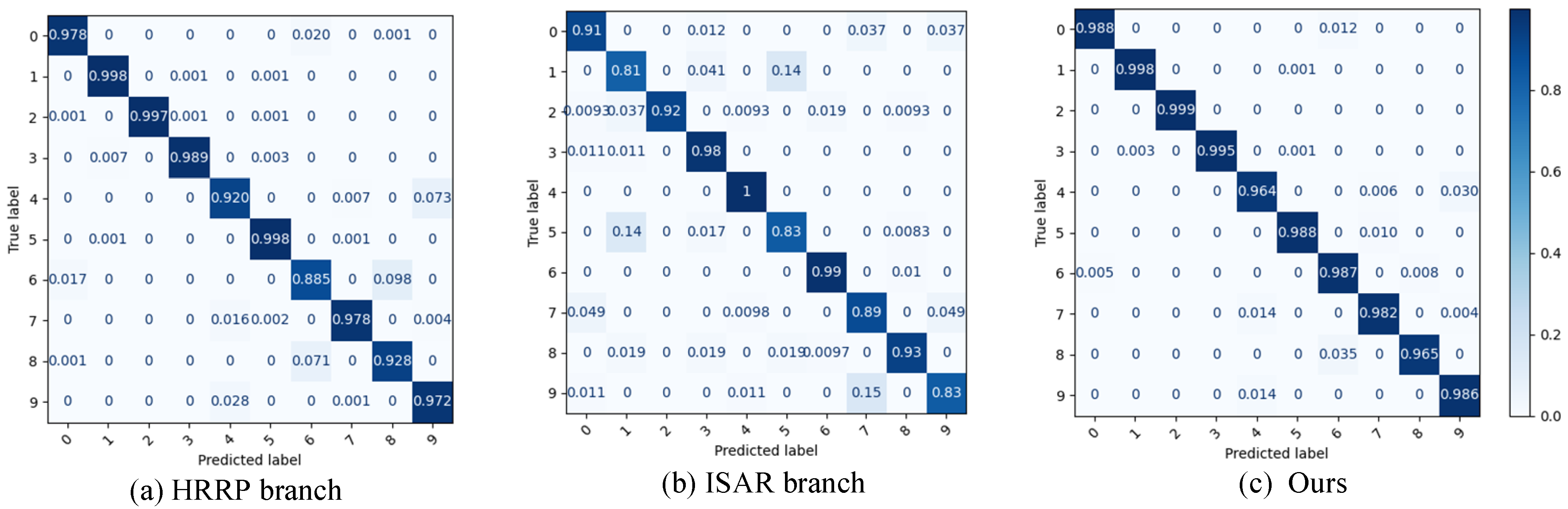

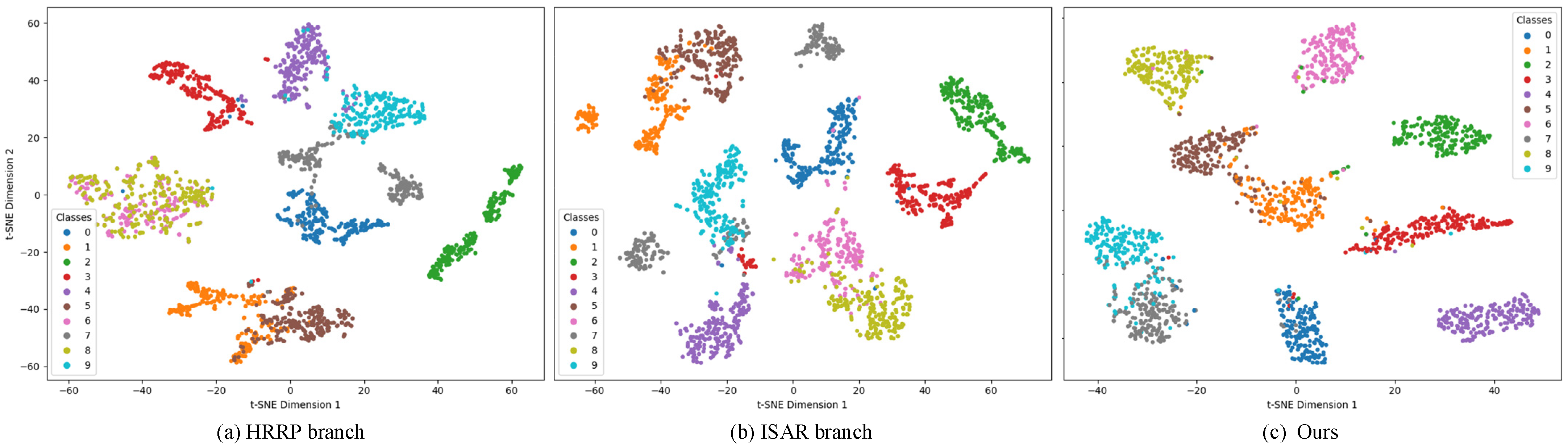

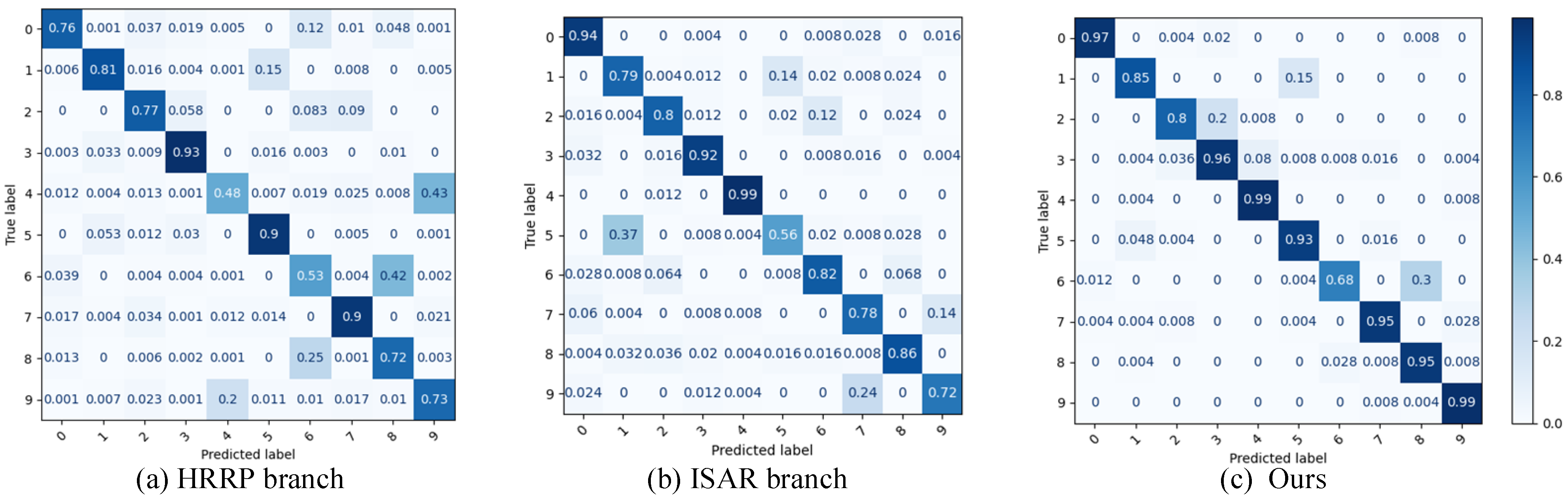

3.2.1. Target Recognition Performance

3.2.2. Ablation Study of Fusion Strategies

3.3. Model Generalization Analysis

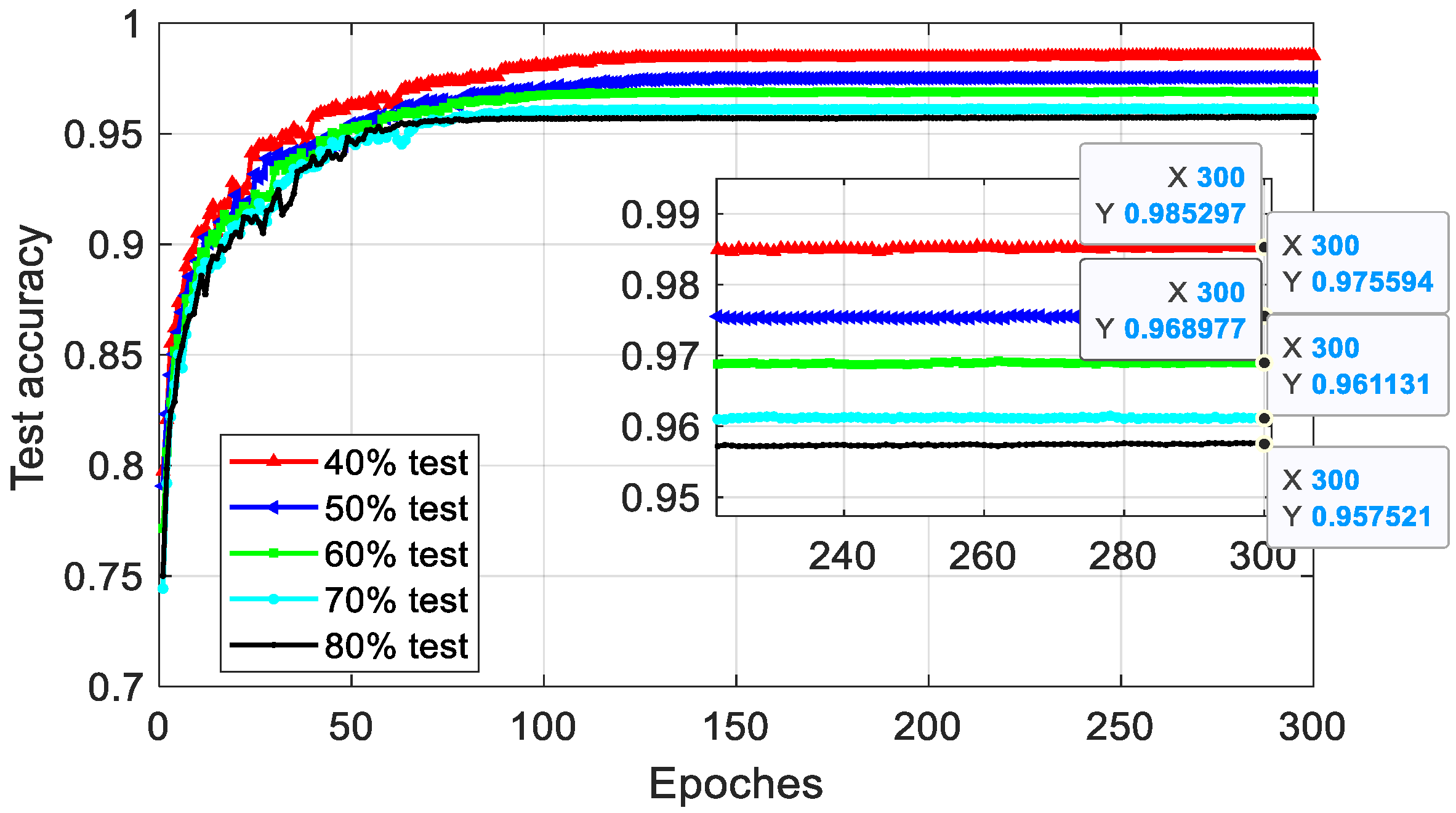

3.3.1. Few-Shot Results

3.3.2. Polarimetry Variations Results

3.4. Discussion

3.4.1. Analysis of Results and Implications

3.4.2. Limitations and Future Work

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Luo, Y.; Zhang, Q.; Yuan, N.; Zhu, F.; Gu, F. Three-dimensional precession feature extraction of space targets. IEEE Trans. Aerosp. Electron. Syst. 2014, 50, 1313–1329.

2. Shi, Y.; Du, L.; Guo, Y. Unsupervised Domain Adaptation for SAR Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6372–6385.

3. Ezuma, M.; Anjinappa, C.K.; Semkin, V.; Guvenc, I. Comparative analysis of radar-cross-section-based UAV recognition techniques. IEEE Sens. J. 2022, 22, 17932–17949.

4. Persico, A.R.; Clemente, C.; Pallotta, L.; De Maio, A.; Soraghan, J. Micro-Doppler classification of ballistic threats using Krawtchouk moments. In Proceedings of the IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.

5. Du, C.; Xie, P.; Zhang, L.; Ma, Y.; Tian, L. Conditional prior probabilistic generative model with similarity measurement for ISAR imaging. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4013205.

6. Wang, J.; Liu, Z.; Ran, L.; Xie, R. Feature extraction method for DCP HRRP-based radar target recognition via m−χ decomposition and sparsity-preserving discriminant correlation analysis. IEEE Sens. J. 2020, 20, 4321–4332.

7. Li, C.; Li, Y.; Zhu, W. Semisupervised space target recognition algorithm based on integrated network of imaging and recognition in radar signal domain. IEEE Trans. Aerosp. Electron. Syst. 2024, 60, 506–524.

8. Li, H.; Li, X.; Xu, Z.; Jin, X.; Su, F. MSDP-Net: A Multi-Scale Domain Perception Network for HRRP Target Recognition. Remote Sens. 2025, 17, 2601.

9. Malmgren-Hansen, D. A convolutional neural network architecture for Sentinel-1 and AMSR2 data fusion. IEEE Trans. Geosci. Remote Sens. 2021, 59, 1890–1902.

10. Deng, J.; Su, F. Deep Hybrid Fusion Network for Inverse Synthetic Aperture Radar Ship Target Recognition Using Multi-Domain High-Resolution Range Profile Data. Remote Sens. 2024, 16, 3701.

11. Li, G.; Sun, Z.; Zhang, Y. ISAR target recognition using Pix2pix network derived from cGAN. In Proceedings of the International Radar Conference (RADAR), Toulon, France, 23–27 September 2019; pp. 1–4.

12. Yang, Q.; Wang, H.; Fan, L.; Li, S. A Category–Pose Jointly Guided ISAR Image Key Part Recognition Network for Space Targets. Remote Sens. 2025, 17, 2218.

13. Yang, L.; Wang, H.; Zeng, Y.; Liu, W.; Wang, R.; Deng, B. Detection of Parabolic Antennas in Satellite Inverse Synthetic Aperture Radar Images Using Component Prior and Improved-YOLOv8 Network in Terahertz Regime. Remote Sens. 2025, 17, 604.

14. Tian, B. Review of high-resolution imaging techniques of wideband inverse synthetic aperture radar. J. Radars 2020, 9, 765–802.

15. Lin, Z.; Ji, K.; Leng, X.; Kuang, G. Squeeze and Excitation Rank Faster R-CNN for Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 751–755.

16. Bai, X.; Zhou, X.; Zhang, F.; Wang, L.; Xue, R.; Zhou, F. Robust pol-ISAR target recognition based on ST-MC-DCNN. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9912–9927.

17. Liao, L.; Du, L.; Chen, J.; Cao, Z.; Zhou, K. EMI-Net: An end-to-end mechanism-driven interpretable network for SAR target recognition under EOCs. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5205118.

18. Bickel, S.H. Some invariant properties of the polarization scattering matrix. Proc. IEEE 1965, 53, 1070–1072.

19. Chen, J.; Xu, S.; Chen, Z. Convolutional neural network for classifying space target of the same shape by using RCS time series. IET Radar Sonar Navig. 2018, 12, 1268–1275.

20. Song, J.; Wang, Y.; Chen, W.; Li, Y.; Wang, J. Radar HRRP recognition based on CNN. J. Eng. 2019, 2019, 7766–7769.

21. Zhao, Q.; Principe, J.C. Support vector machines for SAR automatic target recognition. IEEE Trans. Aerosp. Electron. Syst. 2001, 37, 643–654.

22. Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; pp. 559–599.

23. Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

24. Wang, C.; Zhang, L.; Wei, W.; Zhang, Y. When Low Rank Representation Based Hyperspectral Imagery Classification Meets Segmented Stacked Denoising Auto-Encoder Based Spatial-Spectral Feature. Remote Sens. 2018, 10, 284.

25. Xi, Y.; Dechen, K.; Dong, Y.; Miao, W. Domain-aware generalized meta-learning for space target recognition. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5638212.

26. Zhang, Y.; Yuan, H.; Li, H.; Chen, J.; Niu, M. Meta-learner-based stacking network on space target recognition for ISAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 12132–12148.

27. Liu, J.; Xing, M.; Tang, W. Visualizing Transform Relations of Multilayers in Deep Neural Networks for ISAR Target Recognition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7052–7064.

28. Zhang, Y.; Wang, Z.H.; Liu, T.; Xie, Y.; Luo, Y. Space target recognition based on radar network systems with BiGRU-transformer and dual graph fusion network. IEEE Trans. Radar Syst. 2024, 2, 950–965.

29. Dong, J.; She, Q.; Hou, F. HRPNet: High-dimensional feature mapping for radar space target recognition. IEEE Sens. J. 2024, 24, 11743–11758.

30. Gao, H.; Zhang, Y.; Chen, Z.; Li, C. A multiscale dual-branch feature fusion and attention network for hyperspectral images classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8180–8192.

31. Wu, Y.; Guan, X.; Zhao, B.; Ni, L.; Huang, M. Vehicle detection based on adaptive multimodal feature fusion and cross-modal vehicle index using RGB-T images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8166–8177.

32. Zhang, P.; Zhou, Z.; Huang, H.; Yang, Y.; Hu, X.; Zhuang, J.; Tang, Y. Hybrid integrated feature fusion of handcrafted and deep features for Rice blast resistance identification using UAV imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 7304–7317.

33. Wu, X.; Cao, Z.H.; Huang, T.Z.; Deng, L.J.; Chanussot, J.; Vivone, G. Fully-Connected Transformer for Multi-Source Image Fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 2071–2088.

34. Ma, T.; Zhou, L.; Li, J. Space object recognition method based on wideband radar RCS data. J. Ordnance Equip. Eng. 2024, 45, 275–282.

35. Wang, S.; Yu, J.; Lapira, E.; Lee, J. A modified support vector data description based novelty detection approach for machinery components. Appl. Soft Comput. 2013, 13, 1193–1205.

36. Ding, J.; Chen, B.; Liu, H.; Huang, M. Convolutional neural network with data augmentation for SAR target recognition. IEEE Geosci. Remote Sens. Lett. 2016, 13, 364–368.

37. Hu, J.; Shen, L.; Albanie, S. Squeeze-and-excitation networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

38. Cao, X.; Ma, H.; Jin, J.; Wan, X.; Yi, J. A Novel Recognition-Before-Tracking Method Based on a Beam Constraint in Passive Radars for Low-Altitude Target Surveillance. Appl. Sci. 2025, 15, 9957.

| Data Type | Pre-Processing Method / Input | Accuracy |
|---|---|---|
| One-dimensional data | Original signals | 0.845 ± 0.0108 |
| One-dimensional data | IFT | 0.930 ± 0.0137 |
| One-dimensional data | WT + IFT | 0.956 ± 0.0161 |
| One-dimensional data | WT + IFT + log | 0.975 ± 0.0085 |
| One-dimensional data | HRRPs | 0.9643 ± 0.0062 |
| Two-dimensional data | ISAR images | 0.9101 ± 0.0078 |
| Our fusion method | HRRPs + ISAR images | 0.9852 ± 0.0021 |
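The one-dimensional pre-processing chain compared above (WT, IFT, log) can be sketched as follows. This is a hypothetical illustration rather than the paper's pipeline. It assumes the "original signals" are complex frequency-domain echoes (for example, stepped-frequency samples). Under that assumption, WT is a one-level Haar wavelet soft-threshold denoising applied separately to the real and imaginary parts, IFT converts the echo into a range profile, and log is a dB compression. The function names, the Haar wavelet, the single decomposition level, and the threshold value are all assumptions.

```python
import numpy as np

def haar_denoise(x, thresh):
    """One-level Haar wavelet soft-threshold denoising of a real, even-length 1-D signal."""
    a = (x[0::2] + x[1::2]) / np.sqrt(2)                  # approximation coefficients
    d = (x[0::2] - x[1::2]) / np.sqrt(2)                  # detail coefficients
    d = np.sign(d) * np.maximum(np.abs(d) - thresh, 0.0)  # soft-threshold the details
    out = np.empty_like(x)
    out[0::2] = (a + d) / np.sqrt(2)                      # inverse Haar transform
    out[1::2] = (a - d) / np.sqrt(2)
    return out

def preprocess_echo(echo, thresh=0.1, eps=1e-12):
    """Hypothetical WT + IFT + log chain for a complex frequency-domain echo."""
    # WT: denoise the real and imaginary parts independently.
    den = haar_denoise(echo.real, thresh) + 1j * haar_denoise(echo.imag, thresh)
    # IFT: frequency samples -> range profile magnitude.
    hrrp = np.abs(np.fft.ifft(den))
    # log: compress the dynamic range (dB) so weak scatterers remain visible.
    return 20 * np.log10(hrrp + eps)

# Two synthetic point scatterers at range bins 5 and 20 (amplitudes 1.0 and 0.5).
N = 64
k = np.arange(N)
echo = np.exp(-2j * np.pi * k * 5 / N) + 0.5 * np.exp(-2j * np.pi * k * 20 / N)
profile = preprocess_echo(echo, thresh=0.0)  # thresh=0 disables denoising
print(int(np.argmax(profile)))  # 5
```

The log step is the one that matters most for a CNN input. Without it, the strongest scatterer dominates the profile, and scatterers 6 dB or more weaker contribute little to the learned features.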

| Methods | Accuracy |
|---|---|
| CNN | 0.892 ± 0.0065 |
| SVM | 0.907 ± 0.0096 |
| MLP | 0.924 ± 0.0069 |
| VGG16 | 0.8846 ± 0.0044 |
| ResNet50 | 0.8950 ± 0.0052 |
| CNN-LSTM | 0.975 ± 0.0064 |
| EMI-Net [17] | 0.9794 ± 0.0042 |
| PIM-FF [38] | 0.9812 ± 0.0085 |
| Ours | 0.9852 ± 0.0021 |

| Category | Fusion Strategy | Accuracy |
|---|---|---|
| Statistical functions | max | 0.9685 ± 0.0059 |
| Statistical functions | mean | 0.9702 ± 0.0077 |
| Union functions | Cat(·) | 0.9807 ± 0.0091 |
| Union functions | Cat(max, mean) | 0.9783 ± 0.0052 |
| Attention | Attention mechanism [37] | 0.9814 ± 0.0064 |
| Attention | FCSA | 0.9852 ± 0.0021 |
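The baseline fusion strategies in the ablation above can be sketched on two branch feature vectors. The sketch below is hypothetical and makes three assumptions: both branches output `(C,)` vectors of equal length, which the element-wise max and mean require; "Cat" denotes concatenation; and the "Attention mechanism [37]" row is approximated by squeeze-and-excitation-style gating over the concatenated channels. The function `fuse`, its `strategy` strings, and the `reduction` ratio are illustrative names, and random weights stand in for learned ones.

```python
import numpy as np

def fuse(f_hrrp, f_isar, strategy, rng=None, reduction=4):
    """Hypothetical baseline fusion of two (C,) branch feature vectors."""
    stacked = np.stack([f_hrrp, f_isar])                 # (2, C)
    if strategy == "max":                                # statistical: element-wise max
        return stacked.max(axis=0)                       # (C,)
    if strategy == "mean":                               # statistical: element-wise mean
        return stacked.mean(axis=0)                      # (C,)
    if strategy == "cat":                                # union: concatenation
        return np.concatenate([f_hrrp, f_isar])          # (2C,)
    if strategy == "cat_max_mean":                       # union: Cat(max, mean)
        return np.concatenate([stacked.max(axis=0), stacked.mean(axis=0)])  # (2C,)
    if strategy == "se":
        # Squeeze-and-excitation-style gating over the concatenated channels.
        z = np.concatenate([f_hrrp, f_isar])             # (2C,)
        if rng is None:
            rng = np.random.default_rng(0)
        hidden = max(1, z.size // reduction)             # bottleneck width
        W1 = rng.standard_normal((hidden, z.size)) / np.sqrt(z.size)
        W2 = rng.standard_normal((z.size, hidden)) / np.sqrt(hidden)
        s = 1.0 / (1.0 + np.exp(-(W2 @ np.maximum(W1 @ z, 0.0))))  # ReLU, then sigmoid
        return z * s                                     # channel-reweighted (2C,)
    raise ValueError(f"unknown strategy: {strategy}")

a = np.array([1.0, -2.0, 3.0, 0.5])
b = np.array([0.0, 4.0, 1.0, 0.5])
print(fuse(a, b, "max").tolist())   # [1.0, 4.0, 3.0, 0.5]
print(fuse(a, b, "mean").tolist())  # [0.5, 1.0, 2.0, 0.5]
```

The statistical functions discard which modality each value came from, while concatenation preserves it. This is consistent with the union functions scoring above max and mean in the table. Gating methods go a step further by learning data-dependent channel weights.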

| Methods / Percentage of Training Samples | 40% | 50% | 60% | 70% | 80% |
|---|---|---|---|---|---|
| WT + IFT + log | 0.927 | 0.9468 | 0.9705 | 0.9713 | 0.975 |
| HRRPs | 0.9375 | 0.9461 | 0.9539 | 0.9605 | 0.9643 |
| ISAR images | 0.8903 | 0.8977 | 0.9014 | 0.9068 | 0.9101 |
| Ours | 0.9575 ± 0.0084 | 0.9611 ± 0.0051 | 0.9689 ± 0.0044 | 0.9755 ± 0.0037 | 0.9852 ± 0.0021 |
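The few-shot table varies the percentage of training samples from 40% to 80%. One common way to build such splits is a class-stratified draw, so that every target class keeps the same proportion of training samples. The sketch below assumes that the percentage is taken per class and that the remaining samples form the test set. The helper `stratified_split` and the three-class label array are hypothetical.

```python
import numpy as np

def stratified_split(labels, train_fraction, rng):
    """Draw `train_fraction` of each class for training; the rest become test indices."""
    labels = np.asarray(labels)
    keep = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)                       # indices of this class
        n = max(1, int(round(train_fraction * idx.size)))         # at least one per class
        keep.append(rng.choice(idx, size=n, replace=False))
    train = np.sort(np.concatenate(keep))
    test = np.setdiff1d(np.arange(labels.size), train)            # complement, sorted
    return train, test

# Hypothetical dataset: three classes with 100 samples each.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1, 2], 100)
for frac in (0.4, 0.5, 0.6, 0.7, 0.8):
    train, test = stratified_split(labels, frac, rng)
    print(frac, train.size, test.size)
```

Stratifying keeps the class balance fixed as the training set shrinks. This way, an accuracy drop at 40% reflects less data per class rather than a change in class proportions.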

| Methods | HRRPs | ISAR Images | Ours |
|---|---|---|---|
| Accuracy | 0.7506 | 0.8188 | 0.9076 |

| Method | Calculation (Orders of Magnitude) | Test Time (s) |
|---|---|---|
| VGG16 | | 0.0531 |
| Attention-1dCNN | | 0.0057 |
| Robust-DCN | | 0.0293 |
| Our method | | 0.0372 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liao, L.; Jiang, Y.; Zhang, G.; Liu, Z. MFFN-FCSA: Multi-Modal Feature Fusion Networks with Fully Connected Self-Attention for Radar Space Target Recognition. Appl. Sci. 2025, 15, 11940. https://doi.org/10.3390/app152211940


Liao, Leiyao, Yunda Jiang, Gengxin Zhang, and Ziwei Liu. 2025. "MFFN-FCSA: Multi-Modal Feature Fusion Networks with Fully Connected Self-Attention for Radar Space Target Recognition" Applied Sciences 15, no. 22: 11940. https://doi.org/10.3390/app152211940
