Abstract

Small-object detection (SOD) remains an important and growing challenge in computer vision and underpins many applications, including autonomous vehicles, aerial surveillance, medical imaging, and industrial quality control. Small objects, which occupy only a few pixels, lose discriminative features during deep neural network processing, making them difficult to disentangle from background noise and other artifacts. This survey presents a comprehensive and systematic review of the SOD advancements between 2023 and 2025, a period marked by the maturation of transformer-based architectures and a return to efficient, realistic deployment. First, we applied the PRISMA methodology, yielding 112 seminal works in the field that form a robust foundation for this study. Second, we present a critical taxonomy of the developments since 2023, arranged in five categories: (1) multiscale feature learning; (2) transformer-based architectures; (3) context-aware methods; (4) data augmentation enhancements; and (5) advancements to mainstream detectors (e.g., YOLO). Third, we describe and analyze the evolving SOD-centered datasets and benchmarks and establish the importance of evaluating models fairly. Fourth, we contribute a comparative assessment of state-of-the-art models, evaluating not only accuracy (e.g., the average precision for small objects (AP_S)) but also important efficiency metrics (FPS, latency, parameters, GFLOPs) across standardized hardware platforms, including edge devices. We further use data-driven case studies in the remote sensing, manufacturing, and healthcare domains to bridge academic benchmarks and real-world performance. Finally, we summarize practical guidance for practitioners, including a model selection decision matrix, scenario-based playbooks, and a deployment checklist.
The goal of this work is to synthesize the recent progress, identify the primary limitations in SOD, and outline open research directions, including the potential future role of generative AI and foundation models, to address the long-standing data and feature representation challenges that have limited SOD.

1. Introduction

1.1. Background and Motivation

Object detection is a core computer vision task that involves identifying and localizing objects in an image, and it has achieved considerable success with the advent of deep learning [1]. However, the performance of general detectors declines rapidly for small objects [2]. Because this weakness directly affects many high-stakes, real-world applications, SOD has emerged as an important area of focus within object detection. For instance, the successful completion of search and rescue, precision agriculture, and infrastructure inspection tasks from aerial imagery collected via unmanned aerial vehicles (UAVs) depends heavily on SOD [3]. In autonomous vehicles, recognizing a distant pedestrian, another vehicle, or road debris relies directly on SOD [4]. In industrial manufacturing, small-defect detection is critical to maintaining the quality assurance of products, while in medical imaging, the detection of microscopic lesions could be the difference between life and death [5,6].

Despite its importance, SOD remains a very difficult problem. Small objects are hard to detect reliably because only a few pixels of information reach the model and because deep convolutional neural networks dilute their features. This has stimulated a growing body of literature on dedicated architectures, training strategies, and data handling and processing approaches for SOD. The years 2023–2025 have been especially active, characterized by the maturation of attention mechanisms and the consolidation of transformer architectures, renewed exploration of multiscale feature fusion, and an increased emphasis on efficiency for deployment on resource-constrained edge devices. This survey builds on this latest evolution with a systematic organization and critical assessment of the recent advancements to produce an accessible overview for both researchers and practitioners navigating this complex space.

1.2. What Are Small Objects?

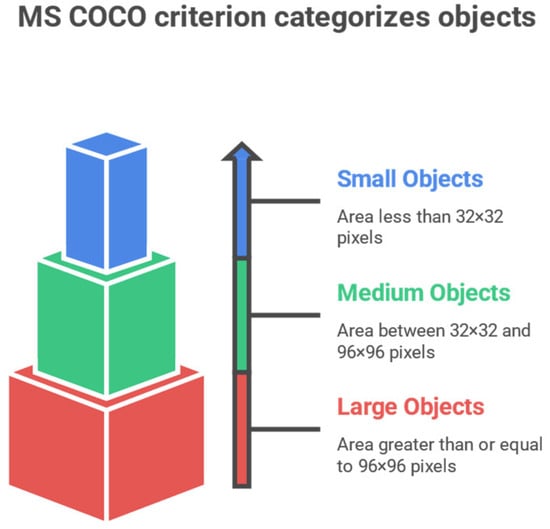

A formal and standardized definition of “small” is vital, both for defining the problem and for making appropriate comparisons between methods. The most commonly adopted definition comes from the widely referenced MS COCO (Microsoft Common Objects in Context) benchmark, which categorizes objects based on their pixel area in the image:

- Small objects: Area < 32 × 32 pixels;

- Medium objects: 32 × 32 pixels ≤ area < 96 × 96 pixels;

- Large objects: Area ≥ 96 × 96 pixels [7].

Figure 1 demonstrates these three categories.

Figure 1. MS COCO criterion for object categorization.

This definition, based on an absolute pixel count, has set a standard, and the average precision for small objects (AP_S) is a primary metric for evaluating small-object detection performance [8]. However, “small” can also have a relative meaning: an object’s size can be considered in relation to the image size or to other objects in the scene, since relative size also contributes to detection difficulty. For example, an object that occupies less than 0.1% of the total image area could be considered relatively small, regardless of its pixel count [9]. This survey mainly references the COCO definition for consistency and comparability, but the relative object scale remains an important factor in detection and identification difficulty.
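The two notions of "small" discussed above can be contrasted in a few lines of Python. This is a minimal sketch, not an official COCO implementation: the function names are hypothetical, and the area is approximated by the bounding-box width times height, which is an assumption made here for simplicity.

```python
def coco_size_category(box_w: float, box_h: float) -> str:
    """Classify a box by absolute pixel area using the MS COCO thresholds."""
    area = box_w * box_h  # assumption: box area stands in for object area
    if area < 32 * 32:
        return "small"
    if area < 96 * 96:
        return "medium"
    return "large"


def is_relatively_small(box_w, box_h, img_w, img_h, max_fraction=0.001):
    """Relative criterion: the box covers less than 0.1% of the image area."""
    return (box_w * box_h) / (img_w * img_h) < max_fraction


# A 40x40 box is "medium" by the absolute rule, yet relatively small in a 4K frame.
print(coco_size_category(40, 40))               # medium
print(is_relatively_small(40, 40, 3840, 2160))  # True
```

The example shows why the two criteria can disagree: the same box changes category depending on whether the image resolution is taken into account.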

1.3. What Are the Major Challenges in Small-Object Detection?

The difficulty of detecting small objects is derived from the combination of several factors that general object detectors are not necessarily designed to account for, including the following:

- Feature information loss: Deep neural networks almost always incorporate successive pooling layers or strided convolutions that intentionally reduce the spatial resolution while capturing a hierarchy of increasingly semantic features. This does not typically pose a problem for larger objects; however, the fine-grained spatial information and weak features of small objects are generally lost in the deeper, lower-resolution layers, rendering them indistinguishable from the background [10].

- Scale mismatch/imbalance: Small objects often appear alongside large objects in the same scene, leading to a serious scale variation problem. Feature pyramid networks (FPNs) and their variants attempt to remedy this problem, but fusing feature representations of vastly different resolutions without diminishing small-target representations remains an active area of research. Furthermore, many datasets exhibit class imbalance, in which small-object classes are far less frequent than large-object classes, which biases training [11].

- Low signal-to-noise ratios: Because of their small pixel count, small objects carry less signal than larger objects and are thus more susceptible to noise, blur, and other image degradations. Their appearance may be easily confused with background textures or sensor noise, leading to high rates of false positives and false negatives [8].

- Ambiguity in context: While context is critical for object detection, it can sometimes be counterproductive for small objects. For example, in dense scenes, such as crowds or cluttered aerial views, small objects may lie close to one another in a shared context, where they may be occluded or difficult to localize as individual instances [12].

- The annotation problem: The manual annotation of small objects is labor-intensive, expensive, and error-prone. All bounding-box annotations are subject to labeling noise, but small-object labels suffer from both a higher error rate and greater inconsistency in how labels are generated [13].

These are distinct challenges that require solutions beyond incremental improvements to general detection algorithms.
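The feature information loss described in the first item can be made concrete with simple arithmetic. The sketch below assumes strides of 8, 16, and 32, which are typical of feature pyramid levels but are not taken from any specific surveyed model; the function name is hypothetical.

```python
def footprint_on_feature_map(object_side_px: float, stride: int) -> float:
    """Side length, in feature-map cells, that an object spans at a given stride."""
    return object_side_px / stride


# Assumed strides; a 16 px object shrinks to half a cell at stride 32.
for stride in (8, 16, 32):
    cells = footprint_on_feature_map(16, stride)
    print(f"stride {stride:>2}: a 16 px object spans {cells:.2f} cells per side")
```

Once an object spans less than one cell per side, its features are mixed with those of the surrounding background inside a single activation.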

1.4. Survey Scope and Contributions

This survey considers the notable contributions to small-object detection published between January 2023 and September 2025. We chose this period to examine recent trends, with particular emphasis on the use of Vision Transformers, improved image augmentation practices, and deployment efficiency.

The contributions of this paper are four-fold:

- Systematic and reproducible methodology: We employed PRISMA to conduct a systematic literature search and screening process that was comprehensive, measurable, and reproducible [14] to reduce selection bias and simultaneously provide a substantial, evidence-based foundation for our conclusions.

- Critical taxonomy of contemporary methods: We have generated a critical taxonomy that classifies contemporary SOD approaches into five distinct yet coherent categories: (1) multiscale feature learning; (2) transformer networks; (3) context-aware methods; (4) data augmentation; (5) architectural improvements in standard detectors. This systematic approach affords a critical perspective on the progression of these efforts and helps clarify how contemporary SOD methods solve the original challenges.

- Comprehensive quantitative and qualitative examination: We not only summarize previously reported results but also provide a master comparison table that compiles accuracy and efficiency data on key benchmarks. Moreover, we discuss the qualitative strengths and weaknesses of different methods, as well as the datasets and evaluation protocols that have contributed most to the field to date.

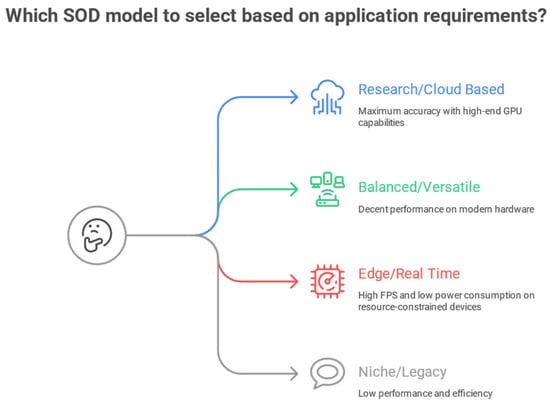

- Practical recommendations for practitioners: We acknowledge the gap that often exists between the academic understanding of a topic and how it is applied in practice. Therefore, we include a dedicated section of practical suggestions, including a decision matrix for model selection based on application constraints (accuracy vs. latency), scenario-based playbooks for common SOD use cases, and deployment checklists.

1.5. Paper Overview

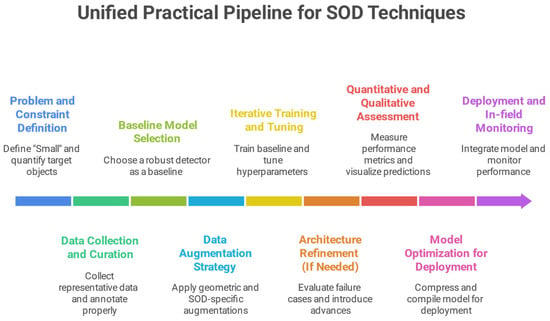

The remainder of this survey is organized as follows: In Section 2, we detail the systematic PRISMA methodology employed for the literature search, screening, and selection. In Section 3, we present our critical taxonomy of contemporary SOD approaches. In Section 4, we discuss the most common datasets used to train SOD models and evaluate model performance. In Section 5, we discuss evaluation metrics and benchmarking protocols. In Section 6, we provide an exhaustive comparative quantitative analysis of the state-of-the-art models to date. In Section 7, we evaluate the implementations and performance on edge and/or resource-constrained hardware. In Section 8, we present data-driven case studies of key SOD applications. In Section 9, we discuss key concepts around environmental adaptation, multisource data, and data fusion. In Section 10, we present a conceptual map of the field and a unified practical pipeline. In Section 11, we provide practical guidance for model selection and deployment. In Section 12, we discuss the limitations to date and outline promising future research directions. Finally, we conclude the paper in Section 13.

2. Methodology

We adopted the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 statement (Supplementary Materials) as a methodological framework to prepare a comprehensive, unbiased, and reproducible review of the recent literature on small-object detection [15]. PRISMA provides a structured, evidence-based guideline for conducting systematic reviews, and it is not uncommon in computer vision literature reviews as a means of ensuring rigor and transparency [16,17]. Our methodology followed a mixed-method, multistage process covering the search strategy, inclusion/exclusion criteria, study screening, and data extraction, as described below. The review protocol was not registered in any international database.

2.1. Search Strategy

Our literature search targeted all relevant peer-reviewed articles published from 1 January 2023 to 15 September 2025. We searched the following five major academic databases, which offer excellent coverage of the computer science and engineering literature, together with arXiv for preprints:

- IEEE Xplore;

- ACM Digital Library;

- SpringerLink;

- ScienceDirect (Elsevier);

- Scopus;

- arXiv (for preprints of the most notable top-tier conference articles and journal articles).

To maximize the retrieval sensitivity, we developed a composite search query by combining keywords from three core concept groups: (1) the object of interest; (2) the core task; (3) the enabling technology. The final query was adapted to the syntax of each database and was structured as follows:

(“small object” OR “tiny object” OR “low resolution object” OR “fine-grained object”) AND (“detection” OR “localization” OR “recognition”) AND (“deep learning” OR “convolutional neural network” OR “CNN” OR “transformer” OR “vision transformer” OR “attention” OR “YOLO”)

The search was performed in September 2025. To ensure an inclusive search, we also conducted backward reference searching (i.e., reading the reference lists of any included articles) to locate studies that may have been missed in the primary database search.
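The structure of the query above (OR within each concept group, AND across groups) can be sketched programmatically. This is only an illustration of how such a query might be assembled before adapting it to each database; the dictionary keys and function names are hypothetical, and the terms are copied from the query above.

```python
CONCEPT_GROUPS = {
    "object": ["small object", "tiny object", "low resolution object", "fine-grained object"],
    "task": ["detection", "localization", "recognition"],
    "technology": ["deep learning", "convolutional neural network", "CNN",
                   "transformer", "vision transformer", "attention", "YOLO"],
}


def or_group(terms):
    """Join quoted terms with OR inside one pair of parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(groups):
    """AND together one OR-group per concept."""
    return " AND ".join(or_group(terms) for terms in groups.values())


query = build_query(CONCEPT_GROUPS)
print(query)
```

Keeping the concept groups in a single structure makes it straightforward to regenerate the query in a database-specific syntax and to document exactly which terms were used.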

2.2. Inclusion/Exclusion Criteria

We established strict inclusion and exclusion criteria before beginning the screening process to focus the review on relevant, high-quality research.

Inclusion Criteria:

- Timeframe: We screened for articles published or preprinted between 1 January 2023 and 15 September 2025.

- Main contribution: The main contribution of the article needed to be a novel method, dataset, benchmark, or literature review that addresses the small-object detection problem.

- Methodological requirement: The article needed to be based on a deep learning method or methods.

- Evaluation requirement: The method needed to have been quantitatively evaluated on at least one public benchmark dataset (e.g., MS COCO, DOTA, VisDrone, or SODA-D).

- Language and publication type: The article needed to be in English and published as a full-length conference paper or journal article, or as a technical preprint on arXiv.

Exclusion Criteria:

- Articles published outside the timeframe;

- Works in which small-object detection was only a minor component rather than a main goal (e.g., general object tracking or image segmentation);

- Works based on classical (i.e., non-deep-learning) computer vision methods;

- Articles that do not have any quantitative evaluation or are purely theoretical;

- Short papers, abstracts, posters, tutorials, and articles that are not in English;

- Patents and book chapters.

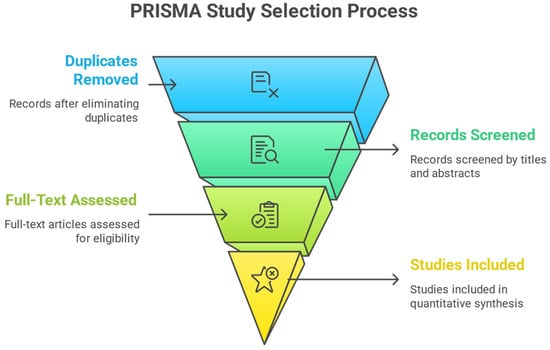

2.3. Screening and Data Extraction (PRISMA)

Screening was performed in three phases by two independent reviewers to reduce bias, and any disagreements were discussed with a third reviewer.

- Initial de-duplication: All records returned by the databases were combined, and duplicates were removed using a reference manager.

- Title and abstract screening: The titles and abstracts of the remaining articles were screened based on our inclusion/exclusion criteria, and any articles that were clearly not relevant were excluded in this screening.

- Full-text review: The full texts of the articles that were retained after the title and abstract screening were gathered and read in detail to verify their relevancy and make the final decision on their inclusion.

For each of the 112 studies included in the review, we extracted key information onto a structured data sheet. The extracted data fields included the author(s), year of publication, proposed method/model name, core technique/idea, backbone architecture, datasets used for evaluation, key performance metrics (AP, AP_S, AP_50, AR_S), reported efficiency metrics (FPS, latency, params, GFLOPs), and testing hardware. This structured data extraction served as the basis for the quantitative synthesis and comparative analysis presented in Section 6. Figure 2 visualizes the PRISMA flow from record identification to final inclusion.

Figure 2. PRISMA study selection process.
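The structured data sheet described above can be pictured as one record per included study. The sketch below is a hypothetical schema, not the authors' actual extraction sheet: the class name, field names, and units (milliseconds, millions of parameters) are assumptions, and metric values are left empty rather than invented.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class StudyRecord:
    """One row of a hypothetical extraction sheet."""
    authors: str
    year: int
    method_name: str
    core_technique: str
    backbone: str
    datasets: List[str] = field(default_factory=list)
    # Accuracy metrics (None when a paper does not report them).
    ap: Optional[float] = None
    ap_s: Optional[float] = None
    ap_50: Optional[float] = None
    ar_s: Optional[float] = None
    # Efficiency metrics; units are assumptions of this sketch.
    fps: Optional[float] = None
    latency_ms: Optional[float] = None
    params_m: Optional[float] = None
    gflops: Optional[float] = None
    hardware: Optional[str] = None

    def missing_fields(self) -> List[str]:
        """Names of fields the source paper did not report."""
        return [name for name, value in vars(self).items() if value is None]


# Placeholder entry; all values here are illustrative, not extracted data.
record = StudyRecord(
    authors="(placeholder)", year=2024, method_name="ExampleDet",
    core_technique="multiscale fusion", backbone="(placeholder)",
    datasets=["VisDrone"],
)
print(record.missing_fields())
```

Tracking unreported fields explicitly helps when results from different papers are later compared, because gaps in efficiency reporting are common.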

2.4. Quality and Bias Assessment

While formal quality scoring (e.g., AMSTAR 2) is more common in medical reviews, we drew on the principles of such systems to evaluate the quality and bias in the included computer vision papers. Each paper was assessed on whether it (1) was clear about its contribution; (2) made a technically sound contribution; (3) gave enough detail about the experimental method for it to be potentially reproduced; (4) compared with relevant and contemporary methods under fair experimental conditions (e.g., same dataset, same backbone, same training schedule); and (5) defined metrics clearly and reported results without ambiguity.

This assessment helped us interpret comparable results in the overall analysis and identify studies whose claims required more critical scrutiny.

2.5. Quantitative Synthesis

The final step in our methodology was to synthesize all of the extracted quantitative data to provide a meta-view of the state of the art in SOD. We gathered the performance and efficiency metrics from the included papers and present them in a master comparison table (see Section 6.1). To facilitate a meaningful comparison between the methods, we organized the results according to the benchmark datasets (e.g., MS COCO, VisDrone) and, where possible, contextualized the efficiency metrics by reporting the type of hardware used (e.g., NVIDIA A100 or RTX 4090). This quantitative synthesis enables a direct comparison between the methods across domains and identifies the overarching trends, accuracy vs. speed tradeoffs, and SOD performance frontiers. Our rigorous and transparent methodology lends credibility and utility to the findings presented in the survey.

3. Methods (2023–2025)—Critical Taxonomy

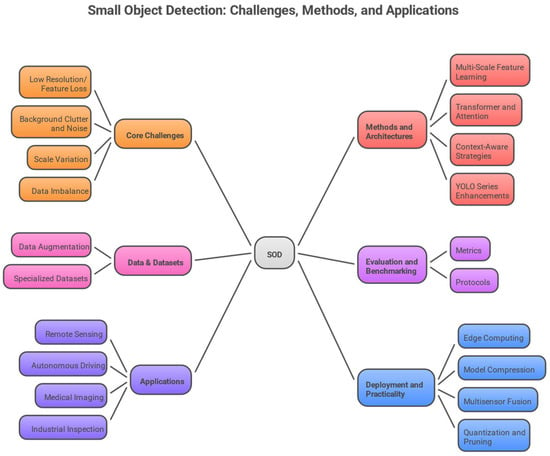

The period from 2023 to 2025 has seen a swift acceleration in method innovation aimed at small-object detection (SOD) tasks. Overall, this body of work builds on the existing tradition of general object detection methods; more recently, however, the focus has shifted to methods that address the information loss, ambiguous contexts, and scale disparity associated with small-object detection. This section offers a critical taxonomy of the most significant methods of this period. The taxonomy contains five subsections, organized around key themes: (i) multiscale feature learning; (ii) transformer-based methods; (iii) context-based methods; (iv) data augmentation; (v) improvements in a widely used class of methods: the YOLO series. Each subsection presents a critical overview of the methods, including a description of the ideas, important developments, and critical tradeoffs, providing readers with a structured overview of the recent SOD landscape. Figure 3 summarizes how each method family in the taxonomy mitigates the core small-object challenges and links each family to its targeted pain point.

Figure 3. SOD challenge mitigation.

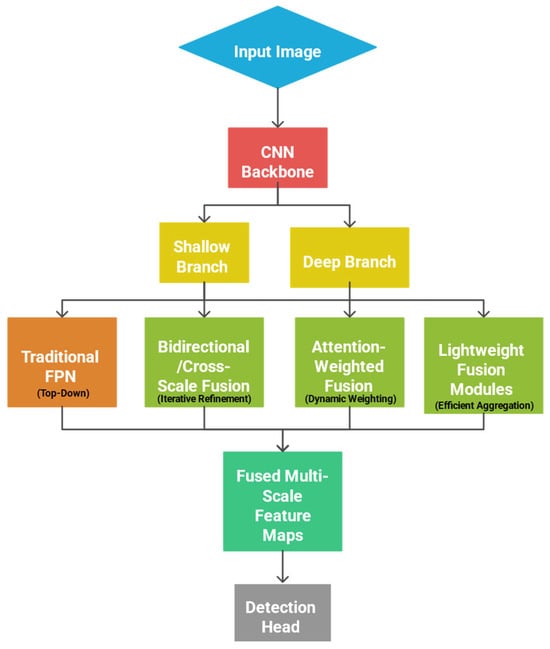

3.1. Multiscale Feature Learning and Fusion

Multiscale feature learning remains a foundational aspect of contemporary SOD methods and is based on the understanding that features acquired at different layers of the network preserve different levels of semantic and spatial information. Features from shallow layers preserve the high-resolution spatial information necessary for locating small objects, while deeper layers capture the rich semantic information needed for classification. The hard problem in multiscale feature learning is how to fuse multiscale representations into a single feature map that is spatially precise and semantically strong enough to locate small objects. The work from 2023–2025 moves past the simple feature pyramid network (FPN) toward more complex yet efficient topological designs.

Innovations have tended to focus on three main areas of multiscale feature learning: (1) establishing better information flow across scales, (2) rethinking how to fuse features, and (3) advancing multiscale learning topologies.

First, and most importantly, the goal is to enhance the information flow across scales. Typical top–down FPN paths can dilute high-resolution features from the shallow layers when they are fused with semantically strong but spatially coarse features from the deep layers [18]. To counter this, the Bidirectional Feature Pyramid Network (BiFPN) and related methods introduce bidirectional or cross-scale connection pathways that combine features from shallow and deep layers more directly and refine them iteratively across the entire pyramid. For example, the HSF-DETR model introduces additional structures, such as a Multi-Perspective Context Block (MPCBlock) and the Multi-Scale Path Aggregation Fusion Block (MSPAFBlock), to enable efficient multiscale feature processing for small objects in challenging, multiperspective UAV imagery [19]. Others have developed a Scale Sequence Feature Fusion methodology that explicitly sequences and fuses layers to help preserve scale-specific information and improve performance under the YOLO framework [20].
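To make the baseline concrete, the following NumPy sketch implements the plain top-down pathway that the methods above try to improve on. It is a simplified illustration under stated assumptions: all levels already share the same channel count (a real FPN uses 1x1 lateral convolutions for this), the 3x3 smoothing convolutions are omitted, and the function names are hypothetical.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) feature map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def top_down_fuse(features):
    """Fuse maps ordered shallow -> deep, each level half the size of the previous.

    Assumes equal channel counts across levels (no lateral convolutions).
    """
    fused = [features[-1]]                      # start from the deepest level
    for lateral in reversed(features[:-1]):
        fused.append(lateral + upsample2x(fused[-1]))
    return fused[::-1]                          # back to shallow -> deep order


rng = np.random.default_rng(0)
c3, c4, c5 = (rng.standard_normal((8, s, s)) for s in (32, 16, 8))
p3, p4, p5 = top_down_fuse([c3, c4, c5])
print(p3.shape, p4.shape, p5.shape)  # (8, 32, 32) (8, 16, 16) (8, 8, 8)
```

Information here flows only from deep to shallow levels, which is the limitation that bidirectional and cross-scale designs address by adding paths in the opposite direction.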

Second, the fusion mechanisms themselves have also evolved. Rather than relying on simple element-wise addition or concatenation, newer methods employ more sophisticated attention-based or dynamically weighted fusion. This allows the network to learn, from the input, how strongly to weight each scale so that the most informative multiscale features dominate the fused representation, which improves classification precision for the detected objects. For instance, attention has been incorporated directly into the fusion process to refine the aggregation of multiscale features and thereby improve the detection accuracy in cluttered images where small objects are present [21]. Similarly, the PARE-YOLO model incorporates attention-based multiscale fusion, reweighting multiscale features to support the categorization of detected objects in aerial imagery [22]. Figure 4 shows how features flow from the backbone into shallow and deep layers and are fused via four families (the traditional top–down FPN, bidirectional/cross-scale fusion, attention/dynamically weighted fusion, and lightweight modules), converging into fused multiscale maps that feed the detection head, thereby bridging advanced fusion mechanisms with the shift toward lightweight, efficient fusion.

Figure 4. Feature flow.
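One well-known form of dynamically weighted fusion is the fast normalized fusion used in BiFPN, in which each input map receives a non-negative weight and the weights are normalized by their sum. The sketch below illustrates that rule only; it is not the fusion module of any specific model surveyed here, and in a trained network `raw_weights` would be learnable parameters rather than constants.

```python
import numpy as np


def fast_normalized_fusion(inputs, raw_weights, eps=1e-4):
    """Weighted sum of same-shaped maps; weights are ReLU-clipped, then normalized."""
    w = np.maximum(np.asarray(raw_weights, dtype=float), 0.0)
    w = w / (w.sum() + eps)
    return sum(wi * x for wi, x in zip(w, inputs))


a = np.ones((4, 16, 16))    # stand-in for a shallow, high-resolution map
b = np.zeros((4, 16, 16))   # stand-in for a resized deep map
out = fast_normalized_fusion([a, b], raw_weights=[3.0, 1.0])
print(round(float(out.mean()), 3))  # 0.75
```

Compared with plain addition, the learned weights let the network decide how much each scale contributes at each fusion node at negligible extra cost.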

Third, there is a growing shift toward lightweight, effective fusion modules. The recognition that complicated fusion networks may incur significant computational costs has led researchers to investigate lighter-weight solutions. The SEMA-YOLO model is a representative example of this approach. SEMA-YOLO introduces a framework that not only enhances shallow-layer features in YOLOv5 but also develops a multiscale adaptation module that improves tiny-object detection without adding any significant computational burden [23]. Others have used fusion blocks that balance accuracy against efficiency, achieving consistent improvements across detection tasks at real-time inference speeds that are practical for real-world applications [24]. This represents an important tradeoff. Complicated, densely connected fusion networks typically produce the best accuracy; however, such models need more flexible architectures and lower computational requirements to be trained and deployed realistically. Continued research in the area aims to find the balance between the expressiveness of multiscale features and the computational cost.

3.2. Transformer-Based Models and Attention Mechanisms

The development of transformer models and attention mechanisms, which originated in natural language processing, has become a major trend in computer vision and has implications for SOD [25]. The main component of a transformer is the self-attention mechanism, which models long-range dependencies between pixels or image patches. This is important because self-attention allows the network to learn global contextual information that can be crucial when distinguishing small objects. Research during 2023–2025 has transitioned from using general-purpose Vision Transformers (ViTs) to developing specialized transformer-based object detectors and hybrid models designed around SOD-specific issues.
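The self-attention mechanism described above can be written compactly. The following NumPy sketch shows single-head scaled dot-product self-attention over a set of patch tokens; the projection matrices are random stand-ins for learned weights, and the token count and dimension are arbitrary choices for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over (N, D) patch tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # (N, N): every patch vs. every patch
    weights = softmax(scores, axis=-1)
    return weights @ v, weights


rng = np.random.default_rng(0)
n_patches, dim = 16, 32
tokens = rng.standard_normal((n_patches, dim))
# Random projections stand in for learned parameters.
w_q, w_k, w_v = (rng.standard_normal((dim, dim)) * dim ** -0.5 for _ in range(3))
out, attn = self_attention(tokens, w_q, w_k, w_v)
print(out.shape, attn.shape)
```

The (N, N) attention matrix is what gives each patch access to the whole image, and it is also why the cost grows quadratically with the number of patches.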

One branch of research focuses on adapting the detection transformer (DETR) framework and its variants for SOD. While standard DETR models perform well, they can struggle with small-object detection due to the coarse resolution of the feature maps fed into the encoder and the slow training convergence associated with Hungarian matching. Recent works such as ACD-DETR and HSF-DETR present significant alterations to improve the performance in this area [19,26]. ACD-DETR is based on RT-DETR and proposes several innovations, including a Multiscale Edge-Enhanced Feature Fusion module to better capture the fine-grained boundary details of small objects in UAV images [26]. HSF-DETR adopts a hyperscale fusion design in a transformer framework to efficiently process features from various perspectives [19]. These works illustrate that redesigning the feature fusion and attention modules around the DETR framework can measurably improve the small-object detection performance.

In addition to end-to-end transformer detectors, a more common and typically more pragmatic strategy is to introduce transformer-based components into existing CNN systems. This hybrid approach preserves the advantages of CNNs regarding inductive bias and feature extraction while also benefiting from the global context modeling of transformers. Self-attention modules are commonly inserted into the backbone or neck of a detector to improve feature representation [27]. For example, researchers have proposed a multihead mixed self-attention strategy that augments feature maps before passing them to the detection heads, steering the model toward regions of the image that may contain small objects [6]. Another line of recent research investigates specific transformer variants, such as the CSWin transformer, which is particularly suitable for UAV image object detection owing to the efficiency of its cross-shaped window self-attention mechanism [28].

Additionally, transformer-based detection heads are emerging as a potential alternative to traditional anchor-based or anchor-free heads. For example, the PARE-YOLO model replaces the traditional YOLO detection head with a transformer-based RT-DETR head to leverage the transformer’s capacity for set prediction, removing the need for hand-tuned anchors and non-maximum suppression (NMS) [22]. This could reduce the number of steps in the detection pipeline and ultimately yield more robust predictions, as the model can reason globally over all objects in an image simultaneously. However, transformer-based approaches are not a cure-all: they can be demanding in terms of computation and training data, and some studies indicate that they may still struggle with extremely small objects compared with highly optimized CNN-based systems [18]. An open question is which attention mechanisms can be lightweight and efficient while still providing the advantages of global context modeling without a prohibitive computational burden.
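For reference, the post-processing step that set-prediction heads aim to remove can be sketched as greedy NMS. This is a generic textbook version written in plain Python, not the implementation of any particular detector; the 0.5 IoU threshold and the example boxes are illustrative assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Keep the highest-scoring box, drop boxes overlapping a kept one, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two 10x10 boxes only 3 px apart already exceed the threshold (IoU is about 0.54).
boxes = [(0, 0, 10, 10), (3, 0, 13, 10), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores))  # [0, 2]
```

The example hints at why NMS is delicate for small objects: two distinct, closely spaced tiny objects can overlap enough for one of them to be suppressed.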

3.3. Context-Aware Detection Strategies

Small objects often do not hold enough intrinsic visual information to support reliable detection and classification on their own. Hence, leveraging the context is especially important. Context-aware detection strategies explicitly model the relationship between an object and its surroundings to improve detection accuracy. Recent progress has focused on more sophisticated approaches to processing and leveraging local and global contextual cues.

A well-known approach is to expand the receptive field of the network in regions where a small object is suspected. This can be accomplished by changing the model architecture, e.g., via dilated convolutions, which expand the receptive field without increasing the number of parameters or losing spatial resolution. For example, the MDSF-YOLO model includes a multiscale dilated sequence fusion network to efficiently incorporate more contextual information around suspected objects [18]. The model derives features with varying dilation rates from its feature maps, which allows it to process the area surrounding the object at multiple scales, thereby helping distinguish the object from background clutter.
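The claim that dilation enlarges the receptive field without adding parameters follows from the standard effective-kernel-size relation, k_eff = k + (k - 1)(d - 1). The short sketch below evaluates it for a 3x3 kernel; the dilation rates are illustrative and are not the rates used by MDSF-YOLO.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Spatial extent covered by a dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


# Illustrative dilation rates; the weight count stays at 3 * 3 = 9 throughout.
for d in (1, 2, 3, 5):
    k_eff = effective_kernel_size(3, d)
    print(f"dilation {d}: 3x3 kernel covers {k_eff}x{k_eff} pixels with 9 weights")
```

Running branches with several dilation rates in parallel therefore samples the surroundings of an object at several scales for the parameter cost of ordinary 3x3 convolutions.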

A more powerful approach moves beyond the exclusive use of a large receptive field and explicitly models the relationships between objects and scenes. For example, a model can learn that a “boat” (small object) is probably on “water” (context), or that “pedestrians” on a sidewalk are likely to be near a “crosswalk”. Historically, this has been achieved through graphical models; deep learning approaches, however, achieve it implicitly through attention and pooling across the global feature space. Transformer-based models are inherently good at this, given that the self-attention mechanism learns dependencies between distant image patches, effectively modeling object–context and object–object relationships across the image [21].

Other methods instead create multilevel contextual feature maps and then fuse these with the main detection features. For example, a global context module can use global average pooling to summarize the entire scene and then broadcast and fuse that summary onto the fine-grained feature maps, so that each detection site has a higher-level understanding of the entire scene to help resolve ambiguity. If it is known that an image was taken from an aerial perspective over a maritime scene, then the prior probability that a small white speck is a boat as opposed to a car increases substantially. Recent research has focused on making this process more dynamic. As opposed to using one static context vector, there has been a push toward models that adaptively construct context representations for each region of the image, allowing for more nuanced context specific to the area of interest [29].
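The static variant of this idea, a single scene descriptor broadcast to every location, can be sketched in NumPy. This is a minimal illustration: fusing by channel concatenation is an assumption of the sketch (other designs add or gate the context instead), and the function name is hypothetical.

```python
import numpy as np


def add_global_context(feature_map):
    """Append a globally pooled scene descriptor to every spatial location.

    feature_map: (C, H, W) array. Returns a (2C, H, W) array.
    """
    c, h, w = feature_map.shape
    context = feature_map.mean(axis=(1, 2))                       # global average pool -> (C,)
    broadcast = np.broadcast_to(context[:, None, None], (c, h, w))
    return np.concatenate([feature_map, broadcast], axis=0)       # assumed fusion: concat


rng = np.random.default_rng(0)
fm = rng.standard_normal((8, 20, 20))
out = add_global_context(fm)
print(out.shape)  # (16, 20, 20)
```

Every location receives the same context vector here, which is exactly the limitation that adaptive, region-specific context modules are designed to overcome.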

3.4. Data Augmentation and Generation

The performance of any detection model is directly tied to the quality and availability of the training data. This is a central challenge for SOD, where small objects are underrepresented in the training set, creating class imbalance and poor generalization. Data augmentation methods artificially enlarge training datasets, and from 2023 to 2025, the field has grown from standard augmentation methods toward fully generative methods.

Traditional augmentation methods (random cropping, scaling, flipping, color jitter, etc.) are still standard practice. One of the more effective for object detection is mosaic augmentation, which combines four training images into one, thereby forcing the model to learn to detect objects in different contexts and at varied scales [30]. However, these methods are still limited by the diversity (or lack thereof) of the training dataset. A more powerful alternative is to use generative models: Generative Adversarial Networks (GANs) and, more recently, diffusion models have shown great promise in generating novel, synthetic training data.
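The bookkeeping behind mosaic augmentation is mostly a matter of shifting each image's boxes into the combined canvas. The sketch below covers only a simplified case, assumed here for clarity: four equally sized tiles placed in a fixed 2x2 grid, without the random center, rescaling, and cropping that practical implementations typically add. The function name is hypothetical.

```python
def mosaic_boxes(per_image_boxes, tile_w, tile_h):
    """Shift boxes from four same-sized tiles into a 2x2 mosaic canvas.

    per_image_boxes: four lists of (x1, y1, x2, y2) boxes, ordered
    top-left, top-right, bottom-left, bottom-right.
    """
    offsets = [(0, 0), (tile_w, 0), (0, tile_h), (tile_w, tile_h)]
    merged = []
    for boxes, (dx, dy) in zip(per_image_boxes, offsets):
        merged.extend((x1 + dx, y1 + dy, x2 + dx, y2 + dy) for x1, y1, x2, y2 in boxes)
    return merged


boxes = [[(10, 10, 20, 20)], [(5, 5, 15, 15)], [], [(0, 0, 8, 8)]]
print(mosaic_boxes(boxes, tile_w=320, tile_h=320))
# [(10, 10, 20, 20), (325, 5, 335, 15), (320, 320, 328, 328)]
```

If the mosaic is later resized back to the network input size, every object shrinks accordingly, which is one reason the technique increases the number of small training instances.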

GAN-based augmentation has been examined for several years: a generator network learns to produce realistic images of objects that can be used to augment the dataset [31,32]. The generator may create entire scenes or, more usefully for SOD, instances of small objects that are then pasted into scenes on backgrounds that make them appear realistic. This yields a “copy–paste” augmentation method that permits exact control over the quantity, location, and scale of the small objects in the training dataset, which helps alleviate class imbalance. GANs are also useful for synthesizing new views of an object or for performing style transfer, in which an existing image is rendered as if the scene had been captured in different weather conditions [33,34].
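
The copy–paste step itself is simple once an object patch is available. The sketch below assumes the patch (whether generated or cropped) is already given as an array; the function name `paste_small_object` is hypothetical, and blending at the patch border is omitted.

```python
import numpy as np

def paste_small_object(scene, patch, top_left):
    """Paste an (h, w, 3) patch into a copy of the scene at (x, y).

    Returns the augmented image and the new box as (x1, y1, x2, y2).
    """
    x, y = top_left
    h, w = patch.shape[:2]
    if x < 0 or y < 0 or y + h > scene.shape[0] or x + w > scene.shape[1]:
        raise ValueError("patch does not fit inside the scene")
    out = scene.copy()                 # leave the original scene untouched
    out[y:y + h, x:x + w] = patch      # hard paste, no edge blending
    return out, (x, y, x + w, y + h)

scene = np.zeros((64, 64, 3), dtype=np.uint8)
patch = np.ones((6, 4, 3), dtype=np.uint8)      # a 4-wide, 6-tall toy object
augmented, box = paste_small_object(scene, patch, top_left=(10, 20))
print(box)  # (10, 20, 14, 26)
```

Because the caller chooses `top_left` and the patch size, the number, location, and scale of the pasted instances are fully controlled.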

In recent years, diffusion models have exhibited significant promise and often surpass GANs in image quality and diversity [35]. Unlike GANs, diffusion models are trained by progressively adding noise to an image and learning to reverse this process. At generation time, the trained model starts from pure noise and iteratively denoises it into a realistic image. For SOD, a diffusion model may be used to generate entire synthetic images with a plausible arrangement of small objects, or to augment existing images by in-painting or modifying backgrounds [33,36]. Such synthetic training data are especially valuable for rarely captured classes and for scenes that are dangerous or difficult to capture in the real world. For instance, one might generate synthetic aerial or medical images that contain the relevant small objects or defects [37].
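
To make the noising process concrete, the sketch below implements only the forward (noise-adding) step in the common DDPM-style closed form, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The linear schedule and its endpoints are assumptions chosen for illustration; the learned denoiser that reverses the process is not shown.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors for an assumed linear noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(x0, t, alpha_bar, rng):
    """Sample x_t directly from x_0 at step t; also return the noise used."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
image = rng.uniform(-1.0, 1.0, size=(3, 32, 32))   # toy image scaled to [-1, 1]
noisy, eps = add_noise(image, t=500, alpha_bar=alpha_bar, rng=rng)
print(noisy.shape)
```

A denoising network would be trained to predict `eps` from `noisy` and `t`; at the last step of this schedule almost none of the original signal remains, which is why sampling can start from pure noise.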

Despite the apparent promise of generative augmentation, we should not dismiss the challenges. The foremost concern is the “domain gap” between synthetic and real data. If the generated images are not sufficiently realistic, then the model may overfit to artifacts of the generation process, resulting in poor performance on real-world data. Ensuring the semantic consistency and physical plausibility of generated scenes is an ongoing research topic. Nevertheless, generative models, and diffusion models in particular, represent a promising route to addressing the data scarcity problem that characterizes much of SOD [30].

3.5. Architectural Improvements for Mainstream Detectors (e.g., YOLO Series)

The YOLO (You Only Look Once) family of detectors has remained highly popular in both academia and practice due to its balance of speed and accuracy. The timeframe from 2023 to 2025 has seen continued evolution within the YOLO family (e.g., YOLOv8, YOLOv9), and many of the enhancements have direct ties to SOD [19]. Generally, these enhancements are not radical redesigns but refinements of the architecture and training strategy that target difficult-to-detect objects.

One of the most prevalent trends has been the addition of detection heads that operate at higher resolution. For example, YOLOv3 has three detection heads that operate at strides of 8, 16, and 32. In later YOLO revisions and custom SOD variants, a fourth head has been introduced that operates at a stride of 4 (the P2 layer) and processes significantly higher-resolution feature maps (e.g., 160 × 160 for a 640 × 640 input). This is a simple way of making predictions from features that have undergone less downsampling, which preserves the fine details needed to localize very small objects [23]. This change is arguably one of the most direct and effective ways to improve the SOD performance of the YOLO family.
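
The arithmetic behind the stride-4 head is easy to check. The short sketch below (helper names are hypothetical) computes the feature-map side length for each stride and how many grid cells an object of a given pixel width spans.

```python
def feature_map_sizes(input_size=640, strides=(4, 8, 16, 32)):
    """Side length of the square feature map seen by each detection head."""
    return {stride: input_size // stride for stride in strides}

def cells_covered(object_px, stride):
    """Number of grid cells an object of the given width spans at this stride."""
    return object_px / stride

print(feature_map_sizes())   # {4: 160, 8: 80, 16: 40, 32: 20}
print(cells_covered(12, 4))  # 3.0
print(cells_covered(12, 8))  # 1.5
```

A 12-pixel-wide object spans three cells at stride 4 but only one and a half at stride 8, which illustrates why the P2 head retains more localization detail for very small objects.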

Another important area of improvement is the neck of the network, which performs the feature fusion. Recent YOLO models have benefitted from the transition from traditional feature pyramid networks (FPNs) to more sophisticated alternatives, such as Path Aggregation Networks (PANets) and Bidirectional FPNs (BiFPNs), which facilitate better information flow between high-resolution features and high-semantic-level features. Recent models such as SOD-YOLO have proposed additional neck improvements to strengthen the small-object representation in aerial images [38]. Neck improvements commonly take the form of added cross-scale connections or attention mechanisms (for example, spatial attention) that help the network focus on the most informative features.

The basic building blocks of the backbone and neck have also changed considerably. For example, a network may replace standard convolutions with more efficient convolution variants or embed attention mechanisms in a custom core block. An example is LS-YOLO, which includes a self-attention mechanism and an adapted region scaling loss to improve the small-object detection performance in intelligent transportation [27]. Such designs can be combined with other custom blocks, such as the Fusion_Block designed for YOLOv8n, which improves feature fusion with little impact on efficiency, enabling deployment on devices with very little computational power [24].

Combining these architectural improvements with advanced training methods, including improved loss functions (e.g., Wise-IoU, EMA-GIoU) and/or data augmentations, can produce strongly optimized detectors [22], and the result is often a steady improvement in the performance vs. computation tradeoff. For the user, the YOLO ecosystem is a mature and user-friendly SOD pipeline. Its modular design allows researchers to insert and experiment with new components, such as transformer heads or custom fusion modules, and thereby advance small-object detection across a wide range of applications, such as aerial, fixed-camera, or medical imaging [6,38].

4. Datasets for SOD

The performance and generalization capabilities of small-object detection models hinge primarily on the quality, diversity, and size of the datasets used for training and evaluation. Between 2023 and 2025, SOD research has continued to build upon general-purpose datasets. More importantly, the development of well-curated, domain-specific datasets that better represent the practical problems posed in SOD has become a significant direction for the field. This section describes the datasets that are relevant to current SOD research, organizes them by scope and application domain, and addresses the ongoing issues in dataset curation.

4.1. General-Purpose Datasets with Small Objects

Although not specifically developed for SOD, a number of large-scale, general-purpose object detection datasets contain a significant proportion of small-object instances and thus serve as de facto benchmarks for evaluating model robustness across object scales:

- COCO (Common Objects in Context): COCO is still the most influential benchmark for general object detection. It defines “small” objects as those with an area of less than 32 × 32 pixels [9] and is highly challenging, with 80 object categories, dense scenes, and substantial size variation across object classes. Its metric for small objects, the average precision for small objects (AP_S), is the standard indicator of a model’s ability to accurately detect small objects. Most landmark advances in SOD report results on the COCO test-dev or validation sets as a measure of generalizability.

- LVIS (Large Vocabulary Instance Segmentation): LVIS expands the COCO challenge with a larger vocabulary of over 1200 categories that follow a long-tailed distribution. This adds the challenge of detecting small objects that also occur rarely in the training data. For SOD research, LVIS is important for examining how small-object detection interacts with category rarity and object scale. Recent work on zero-shot and few-shot models has only begun to explore the detection of small objects from categories that are rare or absent in the training dataset [39].

- Objects365: Objects365 offers a larger scale than COCO, with 365 categories, over 600,000 images, and more than 10 million bounding boxes. Its scale and diversity make it an extraordinary resource for pretraining: a model can be pretrained on this general-purpose dataset and then fine-tuned on a more specific SOD dataset. The massive number of instances, which include a large number of small objects, helps increase the robustness and generalizability of the learned features and mitigates the risk of overfitting to the biases of a smaller dataset [40].
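
The COCO size convention mentioned above can be expressed as a small helper. This is a simplified sketch: the 32 × 32 threshold for small objects comes from the text, the 96 × 96 boundary between medium and large follows the standard COCO convention, and the handling of areas exactly on a boundary is an assumption of this example.

```python
def coco_size_bucket(area_px: float) -> str:
    """Assign an object to a COCO-style size bucket from its pixel area."""
    if area_px < 32 ** 2:
        return "small"
    if area_px < 96 ** 2:
        return "medium"
    return "large"

for width, height in [(20, 20), (50, 50), (100, 120)]:
    print((width, height), coco_size_bucket(width * height))
```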

4.2. Specialized SOD Datasets (2023–Current)

General-purpose datasets fail to capture the unique challenges of real-world SOD, which is why the research community has been developing a growing number of datasets that specifically target small or tiny objects:

- SODA-D: This dataset was specifically developed for detecting small objects in driving scenes and uses 2K–4K-resolution images captured from a vehicle’s point of view. It covers nine common traffic-related categories (e.g., pedestrian, cyclist, and traffic sign), and its scenes feature dense small objects in complex, cluttered urban environments, representing a realistic application of autonomous driving [41].

- SODA-A: SODA-A is the aerial counterpart of SODA-D [39] and focuses on small objects in aerial images. The top-down, bird’s-eye perspective of this type of dataset increases the difficulty of detection because vehicles, pedestrians, and boats appear significantly smaller in remote sensing and UAV surveillance imagery. SODA-A provides a useful benchmark for the research and development of aerial detection models.

- PKUDAVIS-SOD: This dataset is intended for salient object detection (also abbreviated as SOD), which is related to but not synonymous with small-object detection and is sometimes referred to in the context of supersets of detection [42]. Although its primary goal is the detection of visually prominent objects, this dataset contains images with small, salient objects that showcase the interaction of saliency and small scale and force a model to identify an object based on not only its size but also its contextual prominence.

- Sod-UAV: This dataset is geared toward small-object detection from unmanned aerial vehicles (UAVs) and provides images taken from low-altitude flights, which is a typical operational case, as most UAVs are limited to less than 400 feet [43]. The Sod-UAV dataset contains a diverse range of small objects of interest for surveillance and monitoring, such as people and vehicles, within different environment types. Test datasets such as Sod-UAV are key to validating models for realistic deployment scenarios for aerial platforms.

4.3. Domain-Specific Datasets (Aerial, Medical, Maritime/Underwater, IR/Thermal)

The most challenging, and often the most practically important, SOD applications arise in domain-specific settings with very specific data characteristics. Recently, there has been growing momentum in curating datasets that account for these domain specifics.

- Aerial and remote sensing: This is one of the most active domains for SOD. DOTA (Dataset for Object Detection in Aerial Images) and DIOR (Dataset for Object Detection in Optical Remote Sensing Images) are the primary datasets in this domain and feature a wide range of object categories, extreme image resolution (often gigapixels), and extreme variation in object scale. Tiny-object detection is essential for traffic monitoring, urban design and planning, and security surveillance, and models built for this domain must handle rotational variance, dense clutter, and complex backgrounds [44,45].

- Medical imaging: In the medical imaging domain, SOD is important in the identification of small-scale pathologies, such as microaneurysms in retinal fundus images, small polyps in colonoscopy images, and small cancerous lesions in radiology scans used for cancer staging. Datasets in this domain are often private due to patient confidentiality and serve as training data in the development of clinical decision support systems. This is a highly valuable research area; however, it has its own challenges, including poor contrast, ambiguous object boundaries, and high intraclass variation [46].

- Maritime and underwater surveillance: Detecting small objects such as debris, buoys, small vessels, or even people who have fallen overboard, whether from a ship or an aerial platform, has important implications for maritime safety. Underwater datasets pose additional challenges, including poor visibility, light scattering (backscatter), and color distortion. This domain forces models to perform robustly under severe environmental degradation [47].

- IR/thermal imagery: Thermal sensors are essential for detection in low-light or adverse weather conditions, and datasets based on IR camera sensor data are applicable for pedestrian detection, wildlife monitoring, and industrial inspection. Small objects in thermal imagery lack color and texture information, which forces models to rely solely on thermal signatures and shapes; these cues can be ambiguous and depend strongly on the environment [48].

4.4. Curation and Annotation Challenges

Despite the growth in the number of available datasets, dataset curation and annotation remain key bottlenecks to advancing the field:

- High annotation costs: The manual annotation of small objects is laborious, time-consuming, and subject to user error. Annotating small objects requires great attention to detail, often zooming in enough to accurately and precisely place a bounding box at the pixel level. This substantial annotation cost limits the number and scale of manually annotated datasets that can support SOD [2].

- Annotation ambiguity and inconsistency: The boundaries of small, blurry, and low-resolution objects are often poorly defined, leading to inconsistent labels between annotators. This label noise can disrupt training, particularly for models that are sensitive to precise object localization [49].

- Class imbalance: Most datasets naturally contain fewer small objects than larger objects, and thus this area of study must address the class imbalance problem. The imbalance may arise not only between individual categories but also between objects of varying scales, causing an inherent bias toward better detection of larger instances [50].

- Limited dataset diversity: Many of the current datasets used for SOD are collected under specific conditions (e.g., certain weather conditions or regions). To assess the robustness and generalization of SOD models in real-world situations, we need datasets that include diversity across weather, lighting, season, and sensor types. One area of ongoing research is the use of generative AI and simulation platforms to increase dataset diversity through synthetically generated data; however, the domain gap between synthetic and real data remains an open issue [51].

5. Benchmarks and Experimental Protocols

The scientific community should have standardized benchmarks and rigorous experimental protocols to ensure that the research on small-object detection is measurable, reproducible, and meaningful. A solid evaluation framework allows for a fair comparison of the different methods and, ultimately, trustworthy insights into their respective strengths and weaknesses [4]. In the years 2023–2025, the importance of the accuracy, transparency, and reproducibility of these evaluations has also been emphasized [43,52]. This section covers the main components of a fair and thorough SOD benchmark: evaluation metrics, fair experimental protocols, and the need for systematic ablation studies.

5.1. Evaluation Metrics

Evaluating the performance of an SOD model relies on metrics established by existing benchmarks. The general object detection metrics below can be used to evaluate the SOD performance, but they must be interpreted with small objects specifically in mind.

- Primary accuracy metric (AP_S): The most important SOD metric is the average precision for small objects (AP_S), as defined by the COCO evaluation protocol. This metric averages the precision over ten IoU (Intersection over Union) thresholds (0.50–0.95 in steps of 0.05) for objects with areas less than 32 × 32 pixels [9]. The AP_S value measures the ability of a model to both classify and localize small objects correctly, which makes it the primary measure of the SOD performance.

- Associated recall metrics (AR_S): In addition to the AP_S, the average recall for small objects (AR_S) has also been used to interpret the SOD performance. The AR_S measures the fraction of all ground-truth small objects detected by the model, averaged over IoU thresholds. A high AR_S is critical for tasks in which the missed detection of a small object could have severe consequences (e.g., medical diagnosis or security) [53].

- General performance metrics (mAP, AP_50, AP_75): While the AP_S is the primary metric of interest, we also provide the overall mAP (averaged over all object sizes), AP_50 (AP at IoU = 0.5), and AP_75 (AP at IoU = 0.75), which are useful for the overall context so that we know whether improvements in the small-object performance come at the expense of the medium- or large-object detection performance. Ideally, a well-rounded model improves the AP_S without degrading the performance at other object scales [9].

- Efficiency/resource consumption metrics: For models deployed in near-real-time settings, such as on edge devices, accuracy metrics alone are not a complete measure. A complete benchmark suite must also report the following efficiency metrics:

  - Parameters (Params): The number of trainable parameters is the primary measure of model size and is indicative of the memory footprint required to store the model (usually reported in millions (M)). Lightweight models favor small parameter counts [54,55].

  - GFLOPs (giga floating-point operations): This metric measures the computational complexity of a model, quantified by the number of operations (commonly counted as multiply–accumulates), in billions, required for a forward pass on a single image. GFLOPs are intended to provide a measure of computational cost that is agnostic regarding the underlying hardware platform [56,57].

  - Inference speed (FPS): The inference speed is measured in frames per second, which is the number of images processed per second. FPS is a valuable real-time measure; however, it is critical that it is reported with the actual GPU or CPU that was used for testing (e.g., NVIDIA A100, RTX 4090, Jetson Orin) [44,54].

  - Latency: The latency is the time required to process a single image (usually given in milliseconds (ms)). At a batch size of 1, it is the inverse of FPS, but it is typically more relevant to applications that require immediate responses. The latency should be measured for the full pipeline, including preprocessing and postprocessing, to reflect the complete system [45,58].
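
To illustrate how IoU thresholds enter the accuracy metrics above, the following sketch computes a simplified small-object recall averaged over the ten COCO thresholds. It is an illustration only: the official COCO evaluation additionally performs one-to-one matching ranked by confidence and caps the number of detections, and the function names here are hypothetical.

```python
import numpy as np

def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def small_object_recall(gt_boxes, pred_boxes, thresholds=None):
    """Recall on small ground-truth boxes, averaged over IoU thresholds.

    Simplification: a ground-truth box counts as detected if any prediction
    overlaps it by at least the threshold (no one-to-one matching).
    """
    if thresholds is None:
        thresholds = np.linspace(0.5, 0.95, 10)   # 0.50, 0.55, ..., 0.95
    small = [g for g in gt_boxes if (g[2] - g[0]) * (g[3] - g[1]) < 32 ** 2]
    if not small:
        return float("nan")
    best = np.array([max((iou(g, p) for p in pred_boxes), default=0.0) for g in small])
    return float(np.mean([(best >= t).mean() for t in thresholds]))

gts = [(0, 0, 10, 10), (50, 50, 60, 60)]   # two small ground-truth objects
preds = [(0, 0, 10, 10)]                   # only the first one is detected
print(small_object_recall(gts, preds))     # 0.5
```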

5.2. Fair Protocols

Having fair and unbiased comparisons is necessary for any credible study. A fair experimental protocol means providing the same conditions to models during training and evaluation.

- All models should be trained consistently: All models should be compared on the same training dataset with the same number of epochs or iterations. The optimizer (e.g., AdamW, SGD), learning rate schedule, and data augmentation pipeline should remain consistent across models unless the goal is to compare these choices specifically. Models should also be trained with an established baseline recipe (e.g., the MMDetection or YOLOv8 baseline training configuration), and any deviations should be reported and explained [59].

- Input resolution should be consistent: The input resolution has a substantial impact on the detection accuracy, especially for small objects, as well as on the computational cost. Models should be evaluated at a consistent input resolution (e.g., 640 × 640 or 1280 × 1280). If a method requires a certain input resolution or is able to function at a variable input resolution, then the method should be compared with baselines that were evaluated at that same input resolution [58].

- Report hardware/software environment: Because the performance metrics (e.g., FPS and latency) are highly dependent on the testing environment, the hardware (e.g., GPU model, CPU, RAM) and software (e.g., CUDA version, deep learning framework (e.g., PyTorch or TensorFlow), library versions) stacks used for evaluation must be reported. This transparency is essential for reproducing results [60].

- Open-source implementation: The most reliable approach to reproducible research is the public release of the source code and pretrained model weights for the proposed method [43], allowing others to verify and build upon the results. The use of platforms such as GitHub for sharing code and experimental configurations is common practice and a sign of quality research [60]. Comprehensive benchmarks that provide toolkits for standardized evaluations can help in this regard [52,61].
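
A minimal timing harness in the spirit of these protocols might look as follows. It is a sketch under stated assumptions: `benchmark` is a hypothetical helper, the workload is a stand-in for a real detector, and only the Python version and machine type are recorded, whereas a full report would also list the GPU, driver, and framework versions.

```python
import platform
import statistics
import time

def benchmark(fn, sample, warmup=5, runs=30):
    """Time fn(sample) and return latency, FPS, and basic environment details."""
    for _ in range(warmup):              # warm-up runs are excluded from timing
        fn(sample)
    times_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(sample)                       # for GPU models, synchronize the device
        times_ms.append((time.perf_counter() - start) * 1000.0)  # before reading the clock
    mean_ms = statistics.fmean(times_ms)
    return {
        "latency_ms_mean": mean_ms,
        "latency_ms_median": statistics.median(times_ms),
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
        "python": platform.python_version(),
        "machine": platform.machine(),
    }

# Stand-in workload; a real benchmark would call the full detection pipeline,
# including preprocessing and postprocessing.
report = benchmark(lambda n: sum(range(n)), 100_000)
print(report)
```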

5.3. Systematic Ablations

Ablation studies are necessary for methodological papers, as they aim to dissect a proposed model and quantify the contribution of each individual component comprising it. A thorough ablation study demonstrates a genuine understanding of the model behavior and provides evidence for the design choices made.

- Component contributions: When a new method consists of multiple novel components (e.g., a new attention mechanism, a feature fusion module, and a new loss function), ablation studies should be carried out in a systematic process, starting with a strong baseline and adding each component one by one. The incremental improvement in the AP_S and any relevant metric should be reported for each stage, clearly showing which components contributed to the performance improvement [62].

- Hyperparameter sensitivity: Most models include important hyperparameters that impact performance, and systematic ablations should show the model sensitivity to these hyperparameters. For example, if the new loss function has a weighting term, then the performance should be assessed across a representative range of values. This also provides practical guidance for others wanting to implement or adapt the method [63].

- Baseline model: The baseline used in an ablation study is important, and it should be an established, strong model (e.g., YOLOv8, RT-DETR). Reporting improvements over a weak or outdated baseline can be misleading. The purpose of ablation studies is to present clearly how the proposed innovations add genuine value to existing state-of-the-art architectures.
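
The cumulative ablation procedure described above can be organized as a small loop. In this sketch, `cumulative_ablation` is a hypothetical helper and `toy_evaluate` is a placeholder standing in for a full train-and-evaluate run; the scores it returns are made-up numbers used only to exercise the code, not experimental results.

```python
def cumulative_ablation(components, evaluate, baseline_name="baseline"):
    """Add components one at a time and record the score and gain at each stage."""
    active = []
    previous = evaluate(tuple(active))            # score of the bare baseline
    rows = [(baseline_name, previous, 0.0)]
    for name in components:
        active.append(name)
        score = evaluate(tuple(active))
        rows.append((f"+ {name}", score, score - previous))
        previous = score
    return rows

# Placeholder evaluator with made-up gains (NOT real AP_S values).
TOY_GAINS = {"attention": 1.0, "fusion": 2.0, "loss": 0.5}

def toy_evaluate(active):
    return 20.0 + sum(TOY_GAINS[c] for c in active)

for stage, score, gain in cumulative_ablation(["attention", "fusion", "loss"], toy_evaluate):
    print(f"{stage:<12} score={score:5.1f} gain={gain:+.1f}")
```

Because components can interact, the order in which they are added affects the per-stage gains, so reporting a second ordering or leave-one-out results is a useful complement.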

Table 1 provides a comprehensive quantitative comparison of the key small-object detection models from 2023 to 2025, detailing their performances on the COCO dataset and focusing on the tradeoff between the small-object detection accuracy (AP_S) and various efficiency metrics, such as the model size (Params), computational complexity (GFLOPs), and inference speed (FPS). The data were synthesized from multiple referenced papers to offer a standardized view.

Table 1.

Comprehensive, quantitative comparison of key small-object detection models from 2023 to 2025.

By following these strict standards and experimental protocols, the SOD research community can foster a culture of transparency, reproducibility, and meaningful development while ensuring that any new method is both novel and demonstrably effective.

6. Comparative Quantitative Analysis

This section offers a systematic quantitative analysis of the state-of-the-art small-object detection models from 2023 to 2025. Drawing on performance information reported in peer-reviewed publications and standardized benchmarks, we depict the landscape as a whole, focusing on the balance between the detection accuracy (primarily the AP_S, the average precision for small objects) and the computational cost. Quantifying this balance helps researchers determine which models are better suited to particular deployment scenarios, from high-performance cloud services to resource-constrained edge devices.

6.1. Master Comparison Table

To make a fair and direct comparison, we present the key SOD model performance metrics on the COCO test-dev dataset in Table 1. This table is a resource for researchers, providing a summary of the accuracy (AP_S) and efficiency metrics. Unless otherwise noted for edge deployments, all efficiency metrics are based on testing on high-performance GPUs (e.g., NVIDIA A100 or RTX 4090) to represent the peak performance. However, differences in implementation details and/or testing environments between studies may also affect the reported performances across models.

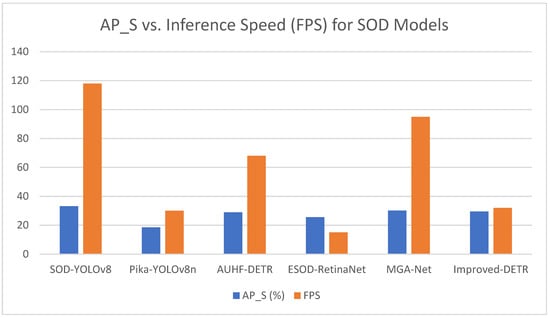

6.2. Visual Performance Summaries

To better illustrate the accuracy–efficiency tradeoffs, we have visualized the data from Table 1. Scatter plots are helpful here, as they offer quick representations of the performance vs. cost tradeoffs that result from architectural design choices (Figure 5).

Figure 5.

Visual performance summaries: AP_S vs. inference speed (FPS).

6.3. Trend and Significance Analysis

Overall, the quantitative data provide insight into various key trends and changes in small-object detection from 2023 to 2025:

- Hybrid architectures lead the way: The highest-performing models (e.g., SOD-YOLOv8) follow a clear trend of augmenting existing high-performing CNN-based backbones (such as those in the YOLO series) with additional modules. These changes often comprise multiscale feature fusion, attention mechanisms, and/or loss functions designed specifically for small objects [24,56]. This hybrid approach exploits the powerful feature extraction capabilities of CNNs while using more sophisticated mechanisms to preserve and enhance fine-grained features, leading to large AP_S increases.

- The emergence of efficient transformers: Although the cost of transformer-based architectures once prohibited their deployment for real-time detection, architectures such as RT-DETR and, more specifically, AUHF-DETR show that transformers are now a viable option. AUHF-DETR combines a low parameter count and low GFLOPs with competitive detection accuracy [54]. These gains, achieved through combinations of lightweight backbones, spatial attention, and architectural optimizations, make such models acceptable options for deployment in UAVs and embedded devices. Similarly, efficient DETR variants are being developed for autonomous driving applications [57].

- Accuracy–efficiency tradeoff remains key: The visualizations make it clear that there is no single “best” model, as the best model depends on the application.

  - High-accuracy cases: For applications that require the highest level of accuracy and when computational resources are available (e.g., processing offline satellite imagery), the SOD-YOLOv8 model is the best overall, as it achieves the highest AP_S, albeit with the highest latency and GFLOPs values [56].

  - Real-time cases: For high-framerate applications such as robotics and surveillance, models such as YOLOv8-S or domain-specific, efficient architectures such as ORSISOD-Net [55] are preferable, as they prioritize FPS at the cost of some small-object accuracy.

  - Edge-constrained cases: Models must be efficient and lightweight for deployment on resource-limited drones and embedded devices. AUHF-DETR is a suitable model for the edge-constrained use case, as it combines low GFLOPs and a low parameter count with high FPS, making it usable under strict latency constraints [32].

- High-resolution processing is effective: The small-object detection performance is closely tied to the image resolution. ESOD highlights the efficient processing of high-resolution images, as downsampling destroys the information needed to detect small objects [58]. This approach typically reduces the FPS; however, it preserves the high accuracy achievable with native, high-resolution inputs, which is important for applications in remote sensing and quality inspection.
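
The scenario-based reasoning above amounts to selecting from the accuracy–efficiency Pareto front under a deployment constraint. The sketch below illustrates this with entirely hypothetical models and numbers (they are not taken from Table 1); `pareto_front` and `pick_model` are illustrative helper names.

```python
def pareto_front(models):
    """Names of models not dominated in both AP_S and FPS by another model."""
    front = []
    for m in models:
        dominated = any(
            o["ap_s"] >= m["ap_s"] and o["fps"] >= m["fps"]
            and (o["ap_s"] > m["ap_s"] or o["fps"] > m["fps"])
            for o in models
        )
        if not dominated:
            front.append(m["name"])
    return front

def pick_model(models, min_fps):
    """Most accurate model that meets the frame-rate requirement, if any."""
    eligible = [m for m in models if m["fps"] >= min_fps]
    return max(eligible, key=lambda m: m["ap_s"])["name"] if eligible else None

# Hypothetical entries for illustration only.
candidates = [
    {"name": "model_a", "ap_s": 30.0, "fps": 20.0},
    {"name": "model_b", "ap_s": 25.0, "fps": 60.0},
    {"name": "model_c", "ap_s": 24.0, "fps": 50.0},   # dominated by model_b
    {"name": "model_d", "ap_s": 18.0, "fps": 120.0},
]
print(pareto_front(candidates))            # ['model_a', 'model_b', 'model_d']
print(pick_model(candidates, min_fps=30))  # model_b
```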

In summary, the quantitative evaluation highlights that the field is continuing to mature along two parallel tracks: (1) extending accuracy capabilities via complex and resource-intensive models, and (2) engineering label-efficient and lightweight models for practical deployment. Most advancements bridge the two tracks and create models that provide both accuracy and efficiency, especially by combining the best aspects of CNNs and transformers.

7. Edge and Resource-Constrained Evaluation

As previously mentioned, the deployment of small-object detection (SOD) models goes beyond the typical research setting wherein high-performance computing is available. Many of the most critical use cases, such as autonomous drones, portable medical diagnosis tools, and on-platform industrial monitoring, require fast performances on edge hardware with limited resources. The period from 2023 to 2025 has driven a consistent emphasis on evaluating and optimizing SOD models for deployment on hardware with limited computational capability, memory, and energy budgets. In this section, we discuss the common testbeds, optimization strategies, and deployment considerations unique to this SOD research and development stage.

7.1. Testbeds and Setup

The evaluation of SOD models on edge devices requires standardized hardware platforms to guarantee the comparability and reproducibility of the performance metrics. There is a range of embedded systems on the market; however, the NVIDIA Jetson family has emerged as the de facto standard for academic and industrial research because of the balance of its performance, power efficiency, and robust software ecosystem (CUDA, TensorRT).

Dominant Hardware Platforms

In the recent literature on SOD and general object detection, models are consistently evaluated on a few key devices:

- NVIDIA Jetson AGX Orin: This high-performance module is often cited as the preferred hardware for demanding edge applications requiring heavy parallel processing capabilities and is often used to validate the real-time inference capabilities of complex models in applications such as aerial segmentation and precision agriculture [64,65,66].

- NVIDIA Jetson Orin Nano: This variant is more power-efficient and is a common testbed for lightweight algorithms implementing low-cost edge intelligence [67,68], and it is often evaluated in contrast to other popular single-board computers.

- NVIDIA Jetson Xavier Series: This platform was common for real-time object detection research prior to the advent of the Orin series and continues to be a cited benchmark for legacy platforms [69].

- Raspberry Pi 5: Its GPU is limited compared with those of the Jetson series, but the platform is noted for its CPU performance in common tests [67]. Its accessibility keeps it relevant in some surveillance and monitoring use cases, particularly those that rely on CPU-bound inference or heavily optimized models.

Standardized Evaluation Environment

A typical evaluation involves deploying the trained SOD model on the Jetson device under a Linux-based operating system (e.g., as provided by the NVIDIA JetPack SDK). The first class of metrics covers detection quality (mAP, AP_S); for edge deployment, however, the more decisive metrics describe inference performance: speed (FPS), latency (ms), power consumption (W), and memory footprint. Tools such as jtop (from the jetson-stats package) are commonly used to monitor real-time resource consumption during inference. To enable fair comparisons, researchers typically report the specific Jetson module, the JetPack version, the inference framework (e.g., PyTorch, TensorFlow), and any acceleration libraries layered on top (e.g., NVIDIA TensorRT).
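As a rough illustration of how the latency and FPS figures above might be collected, the following sketch times an arbitrary inference callable with warm-up runs excluded. The names `benchmark` and `dummy_infer`, the warm-up count, and the synthetic frames are assumptions made for illustration; they do not come from any of the surveyed works, and a real measurement on a GPU would additionally need device synchronization before each clock read.

```python
import statistics
import time


def benchmark(infer, inputs, warmup=5):
    """Time `infer` over `inputs`; the first `warmup` inputs are run untimed."""
    for x in inputs[:warmup]:
        infer(x)

    latencies_ms = []
    for x in inputs[warmup:]:
        start = time.perf_counter()
        infer(x)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    mean_ms = statistics.mean(latencies_ms)
    ordered = sorted(latencies_ms)
    return {
        "runs": len(latencies_ms),
        "mean_latency_ms": mean_ms,
        # Simple index-based estimate of the 95th-percentile latency.
        "p95_latency_ms": ordered[int(0.95 * (len(ordered) - 1))],
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


def dummy_infer(frame):
    # Hypothetical stand-in for a detector forward pass.
    return sum(v * v for v in frame)


frames = [[float(i)] * 2000 for i in range(55)]
report = benchmark(dummy_infer, frames)
print(report)
```

Reporting a tail latency alongside the mean is a design choice here: real-time applications are usually constrained by the slowest frames rather than by the average.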

7.2. Practical Optimizations

Deploying a deep learning model on an edge device rarely means running the trained architecture as is; instead, a series of optimization techniques is typically applied and evaluated systematically to close the gap between high accuracy and the limitations of edge hardware. These techniques aim to minimize the model size, reduce the computational cost, and decrease the inference latency, usually at the price of a small, manageable loss in detection accuracy.

7.2.1. Quantization

Quantization is the technique of reducing the numerical precision of a model’s weights (the parameters used to compute predictions) and/or its activations (the values generated during predictions). Low-precision representations typically require less memory and, on suitable hardware, can exploit dedicated acceleration. Common conversions are FP32 (32-bit float) to FP16 (16-bit float) and FP32 to INT8 (8-bit integer).

- FP16 quantization: Converting from FP32 to FP16 is usually the first step toward faster inference and training. It is often the easiest and fastest path to improved inference on modern GPUs (including those on the Jetson platform), with very little cost to accuracy [70]. This is typically accomplished with the NVIDIA TensorRT optimization engine.

- INT8 quantization: More aggressive quantization to INT8 can provide the greatest performance gains, particularly on edge devices optimized for integer arithmetic. It does, however, require an intermediate calibration step with a representative dataset to derive the scaling factors that map the floating-point value range to the 8-bit integer range. Several studies have successfully quantized models to INT8 for inference, making quantization a powerful tool for efficient deployment to edge devices [67]. In one study on classifying weeds from an agricultural drone, INT8 quantization yielded a ~100× increase in inference speed (five frames/second) without a decrease in accuracy [66].
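The calibration step described above can be sketched in a few lines. This is a minimal example that assumes symmetric, per-tensor quantization with a max-absolute-value calibrator; production toolchains may use other calibrators and per-channel scales, and the function names and sample values here are illustrative assumptions rather than any library’s API.

```python
def calibrate_scale(calibration_values, num_bits=8):
    """Derive a symmetric scale from representative (calibration) values."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits=8):
    """Map floats to integers, clamping values outside the calibrated range."""
    qmax = 2 ** (num_bits - 1) - 1
    qmin = -qmax - 1
    return [max(qmin, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Map integers back to approximate float values."""
    return [q * scale for q in q_values]


# Hypothetical calibration data; the largest magnitude sets the scale.
calibration = [-2.54, -1.0, 0.0, 0.5, 1.27, 2.0]
scale = calibrate_scale(calibration)

# The last weight lies outside the calibrated range and is clamped.
weights = [-2.54, -0.30, 0.0, 0.5, 3.0]
q = quantize(weights, scale)
recovered = dequantize(q, scale)
print(q, recovered)
```

The clamped final value shows why the calibration set needs to be representative: anything outside the observed range saturates, which is one source of the accuracy loss that INT8 deployment has to manage.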

7.2.2. Pruning

Model pruning is the deliberate removal of redundant parameters (individual weights or entire neurons/filters) from a trained neural network according to their importance. The intent is to yield a smaller or sparser model that requires fewer computations and/or less memory, thereby allowing faster inference.

- Unstructured vs. structured pruning: Unstructured pruning removes individual weights, typically those with the smallest magnitudes, which yields sparse weight matrices that need dedicated hardware or libraries to run efficiently. Structured pruning instead removes entire channels, filters, or layers, producing a smaller but still dense model that runs on standard hardware without special support.

- Strategic pruning: More advanced methods prune layers according to their estimated contributions to the underlying task, for example, through the “minimal pruning of important layers and the extreme pruning of less” significant layers [66]. Whatever is retained must preserve the essential feature extraction capacity, particularly for small objects.
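The difference between the two pruning families can be illustrated with a toy weight matrix in which each row stands for one filter. The sketch below assumes magnitude-based criteria (absolute value for individual weights, L1 norm for filters), which are common choices but not necessarily those used in the cited studies; all names and values are hypothetical.

```python
def unstructured_prune(weights, sparsity):
    """Zero the smallest-magnitude fraction `sparsity` of individual weights.

    Ties at the threshold are pruned too, so slightly more weights than
    requested may be removed.
    """
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(sparsity * len(flat))
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def structured_prune(weights, keep):
    """Keep the `keep` rows (filters) with the largest L1 norm, in original order."""
    norms = [sum(abs(w) for w in row) for row in weights]
    ranked = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])
    return [weights[i][:] for i in kept]


filters = [
    [0.9, -0.8, 0.7],
    [0.05, -0.02, 0.01],
    [-0.6, 0.04, 0.5],
    [0.03, 0.2, -0.01],
]

sparse = unstructured_prune(filters, sparsity=0.5)   # same shape, half zeros
dense_small = structured_prune(filters, keep=2)      # fewer rows, still dense
print(sparse)
print(dense_small)
```

The unstructured result keeps its original shape and only saves computation if the runtime can skip zeros, whereas the structured result is a genuinely smaller dense matrix.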

7.2.3. Knowledge Distillation

Knowledge distillation (KD) is a model compression method in which a smaller “student” model is trained to mimic the behavior of a larger, more accurate “teacher” model. Rather than being trained solely on the ground-truth labels, the student is also trained to match the output distributions (soft labels) of the teacher. The knowledge transferred in this way is sometimes termed “dark knowledge” and can allow a compact student to outperform the same model trained from scratch on the same data. KD has been promoted as a key technique for building compact models for edge devices [71].
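A minimal sketch of the soft-label objective follows, assuming the classic formulation in which a hard-label cross-entropy term is combined with a temperature-scaled KL divergence between teacher and student distributions. The temperature, the weighting factor `alpha`, and the example logits are illustrative assumptions; the sketch covers classification logits only, and detection-specific distillation (e.g., of box regression or feature maps) is not shown.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """alpha * CE(student, label) + (1 - alpha) * T^2 * KL(teacher_T || student_T)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps the soft-term gradients on a comparable scale.
    return alpha * hard + (1 - alpha) * temperature ** 2 * kl


teacher = [4.0, 1.0, 0.0]          # hypothetical teacher logits
student_close = [3.5, 1.2, 0.1]    # student that roughly agrees
student_far = [0.0, 1.0, 4.0]      # student that disagrees

loss_close = distillation_loss(student_close, teacher, label=0)
loss_far = distillation_loss(student_far, teacher, label=0)
print(loss_close, loss_far)
```

A higher temperature flattens both distributions, so the student also receives signal from the teacher’s relative ranking of the non-target classes rather than from the top prediction alone.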

7.2.4. Low-Rank Decomposition

Low-rank techniques approximate the dense weight matrices of a network’s layers with smaller, low-rank matrices. A large matrix is replaced by the product of two (or more) smaller matrices, which reduces both the total number of parameters and the cost of the associated matrix multiplications. Low-rank decomposition is one of the methods used in lightweight detection algorithms for edge intelligence [68].
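The parameter savings can be made concrete with simple arithmetic: an m × n matrix has mn parameters, while a rank-r factorization into an m × r and an r × n matrix has r(m + n). The sketch below uses a hypothetical 1024 × 1024 layer and rank 64 purely for illustration, together with a tiny rank-1 matrix that factors exactly. In practice the factors are often obtained with a truncated singular value decomposition, which is not shown here.

```python
def dense_params(m, n):
    """Parameter count of a dense m x n weight matrix."""
    return m * n


def low_rank_params(m, n, r):
    """Parameter count of an (m x r) @ (r x n) factorization."""
    return r * (m + n)


def matmul(a, b):
    """Plain-Python matrix product for small illustrative matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


# A 3x4 rank-1 matrix stored as 3 + 4 = 7 numbers instead of 12.
u = [[1.0], [2.0], [3.0]]
v = [[1.0, 0.0, -1.0, 2.0]]
w = matmul(u, v)

# Hypothetical layer size and rank, chosen only to show the arithmetic.
m, n, r = 1024, 1024, 64
ratio = dense_params(m, n) / low_rank_params(m, n, r)
print(w)
print(ratio)
```

The same counts apply to multiply-accumulate operations per input vector, so the compression ratio and the approximate compute saving coincide for a fully connected layer.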

7.3. Deployment Case Notes

The successful deployment of SOD on edge devices involves thinking holistically about the model, the optimization techniques, and the hardware on which the model will eventually run. Studies published in 2024 and 2025 offer several evidence-based findings worth noting:

- Integrated optimization pipelines: The best deployments often combine different optimization techniques. For example, a model can be pruned to eliminate redundant parameters and then quantized to INT8 with an engine such as NVIDIA TensorRT for maximum acceleration on a Jetson AGX Orin [66].

- Hardware–software codesign: The model architecture and optimization strategy should be chosen with the target hardware in mind. Jetson devices have consistently proven to be suitable choices because they combine powerful GPUs with built-in support for optimization libraries such as TensorRT [65].

- Application-specific tradeoffs: The acceptable tradeoff between accuracy and speed is highly dependent on the application. For instance, inference speed is prioritized in emergency safe-landing systems for drones, where a quick and reliable response is needed even at the expense of some detection accuracy [64]. Conversely, medical diagnostic tools favor maximum accuracy and tolerate somewhat higher latency. Lightweight models built for specific tasks, such as identifying the best palm fruit on harvesting machinery, are designed around the particular constraints of those applications [72].

Overall, evaluating and optimizing SOD models to accommodate resource-constrained environments is an active, maturing area of interest. By adopting standardized testbeds, such as the NVIDIA Jetson series, and applying a combination of quantization, pruning, and additional compression techniques, researchers are closing the gap between high-performing SOD models and the practical demands of deploying them in real time at the edge.

8. Applications and Data-Driven Case Studies

Research on small-object detection (SOD) methodologies has been driven and validated by applications in varied, high-impact domains. The reliable detection of small objects is a critical enabling technology across remote sensing and vegetation monitoring, autonomous systems, and medical diagnosis. Here, we summarize a series of data-driven case studies from the 2023–2025 period and highlight representative problems.

8.1. Remote Sensing and Aerial Imagery

Unmanned aerial vehicles (UAVs), or drones, are commonplace data collection platforms that provide unparalleled aerial perspectives of the Earth. However, because UAV imagery is captured from high altitude with a wide field of view, the objects of interest (people, vehicles, or agricultural anomalies) typically appear as small or tiny targets. SOD is therefore a critical component of drone scene analysis [1].

Key Application Areas:

- Search and rescue and surveillance: Drones are regularly utilized for monitoring large areas. SOD models enable small-target detection (e.g., individuals or vehicles) from aerial imagery, which is important for emergency response and security. Specialized architectures and algorithms, such as the proposed SOD-YOLO, are rapidly being developed to improve the small-object detection performance in UAV scene images [38].

- Precision agriculture: Drones equipped with SOD models can support weed monitoring, pest detection, and crop health assessment [5]. For example, when drones identify small insects or the early onset of blight on leaves from a distance, interventions can be targeted to reduce costs and environmental impacts [1]. Researchers have developed specialized SOD models, such as DEMNet, for detecting small instances of tea leaf blight in slightly blurry UAV images in support of industry quality control [73].

- Infrastructure inspection: Drones are used to efficiently inspect large-scale infrastructure (e.g., wind turbines and power transmission lines) while keeping inspectors safe. SOD is necessary for detecting small defects (e.g., small cracks, small corrosion areas, and damage from flying particles) on wind turbine blades [7,74]. Detecting these defects relies on UAV imagery captured by small quadcopters under varying weather and lighting conditions.

Case Study: SOD-YOLO for UAV Imagery

A significant problem in UAV-based detection is that object detectors trained on general-purpose datasets usually perform poorly on the small, cluttered objects often found in aerial views. In response, some researchers have proposed modifications to existing mainstream architectures. For example, SOD-YOLO, an enhancement of YOLOv8, was developed to improve small-object detection specifically in UAV application scenarios [38]. The enhancement adds detection heads and an attention mechanism designed for fine-grained features, and consistent improvements in recall and precision for small objects were observed relative to baseline models on UAV-specific datasets. Data collected with DJI drones have become common in the benchmarking of these models [28].

8.2. Autonomous Driving and Maritime Surveillance

In transportation and surveillance, the timely detection of distant or small objects directly affects safety and operational performance.

Autonomous Driving

Self-driving cars rely on a combination of sensors to perceive their surroundings. The timely detection of small objects, including distant pedestrians, traffic signs, and roadway debris, is critical for safety functions. Because self-driving cars can travel at high speeds, developing robust SOD models that process high-resolution sensor data in real time for long-range situational awareness is a time-critical challenge for object detection researchers [1].

Maritime Surveillance

Maritime surveillance is one of the most important application areas of SOD, which is used to detect small vessels, buoys, debris, and individuals overboard. These objects may occupy only a few pixels against a vast, dynamic background of water.

- Drone and ship-based detection: Both UAVs and ship-based camera systems are used for surveillance. Detecting other drones or small boats from these platforms is a key application for security and situational awareness [47].

- Challenges: The maritime environment poses specific challenges, such as wave clutter, sun glare, and weather conditions that easily obscure or mimic small objects. Models need to be robust to these conditions.

8.3. Industrial and Manufacturing Defects

In the modern era of manufacturing, the focus is on the elimination of defects using automated quality control processes, and SOD algorithms are a primary part of the vision-based inspection systems for small defects on product surfaces.

- Defect targets: Defects include scratches, cracks, pinholes, and contamination on the surfaces of materials such as semiconductors, textiles, and metals. Because these defects are small and sometimes subtle, human inspectors have difficulty detecting them consistently over long durations in manufacturing environments.

- Operational benefits: SOD systems based on computer vision offer objective, repeatable, and high-throughput inspection. This is especially relevant in wind turbine manufacturing, where automated deep learning-based quality control systems are now used to identify small defects and imperfections in the blades prior to deployment [65]. The cost of failure and maintenance later on is far greater than the cost of identifying potential issues early, which makes proactive inspection worthwhile. However, these detection models must achieve high accuracy and precision: false positives can result in the needless disposal of good products, while false negatives compromise quality.

8.4. Medical Imaging and Agriculture

The basic purpose of applying SOD in both medical imaging and agriculture is the recognition of small but significant features in complex biological environments.

Medical Imaging