Research on Landslide Displacement Prediction Using Stacking-Based Machine Learning Fusion Model

Abstract

1. Introduction

- (1) How can a dynamic weight fusion mechanism be constructed that adaptively balances the accuracy and stability of the model?

- (2) How much can the fusion model improve prediction accuracy and stability compared with advanced single models (such as TCN and XGBoost) and traditional models (such as ARIMA)?

- (3) Can the model effectively capture sudden displacement changes triggered by external factors such as heavy rainfall?

2. Base Models

2.1. Support Vector Regression (SVR)

2.2. Extreme Gradient Boosting (XGBoost)

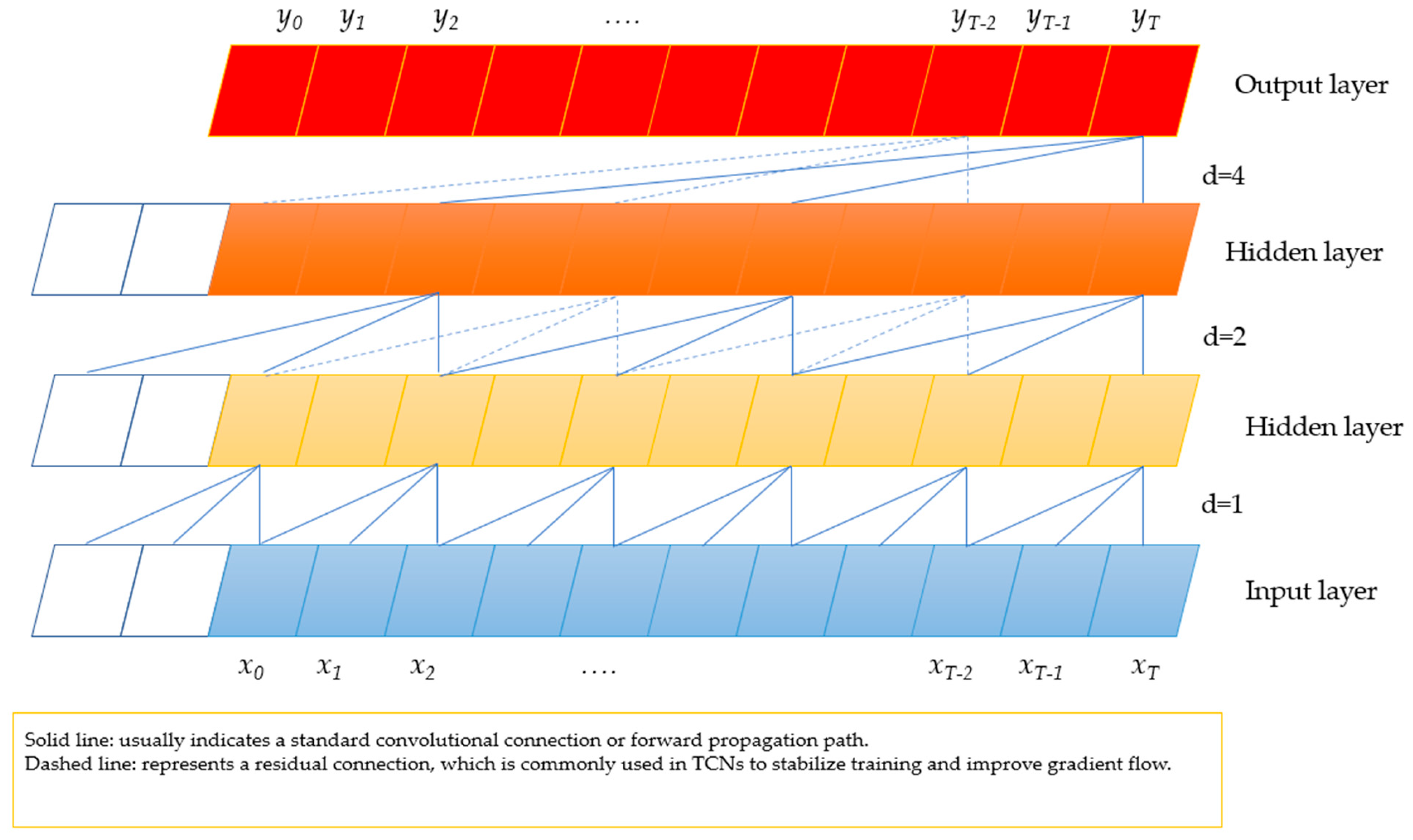

2.3. Temporal Convolutional Network (TCN)

2.4. Bayesian Optimization

- (1) Build the prior: use a Gaussian process as a surrogate model of the objective function.

- (2) Choose an acquisition function: commonly used acquisition functions include expected improvement (EI), upper confidence bound (UCB), and probability of improvement (PI).

- (3) Optimize the acquisition function: in each iteration, the next evaluation point is determined by optimizing the acquisition function.

- (4) Update the surrogate model: evaluate the objective at the selected point and update the Gaussian process with the new observation.
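The four steps above can be sketched with NumPy for a one-dimensional search. This is a minimal sketch, not the configuration used in this study: the objective `(x - 0.3) ** 2` is a hypothetical stand-in for a validation error as a function of one rescaled hyperparameter, and the RBF kernel, its length scale, the noise term, the candidate grid, and the EI form for minimization are all assumptions.

```python
import math
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    # Squared-exponential covariance between two sets of 1-D points
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise=1e-4, length_scale=0.2):
    # Steps (1) and (4): GP surrogate conditioned on all observations so far
    y_mean = y_obs.mean()
    k_oo = rbf_kernel(x_obs, x_obs, length_scale) + noise * np.eye(len(x_obs))
    k_qo = rbf_kernel(x_query, x_obs, length_scale)
    mu = y_mean + k_qo @ np.linalg.solve(k_oo, y_obs - y_mean)
    v = np.linalg.solve(k_oo, k_qo.T)
    var = 1.0 - np.sum(k_qo * v.T, axis=1)  # prior variance is 1 for this kernel
    return mu, np.sqrt(np.maximum(var, 1e-12))

def expected_improvement(mu, sigma, best, xi=0.01):
    # Step (2): EI for minimization, using the standard normal CDF and PDF
    z = (best - mu - xi) / sigma
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best - mu - xi) * cdf + sigma * pdf

def bayes_opt(objective, n_init=3, n_iter=15, seed=0):
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 201)  # candidate points (rescaled hyperparameter)
    x_obs = rng.choice(grid, size=n_init, replace=False)
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):
        mu, sigma = gp_posterior(x_obs, y_obs, grid)
        ei = expected_improvement(mu, sigma, y_obs.min())
        ei[np.isin(grid, x_obs)] = -np.inf  # never re-evaluate a point
        x_next = grid[np.argmax(ei)]        # step (3): maximize the acquisition
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    best = int(np.argmin(y_obs))
    return x_obs[best], y_obs[best]
```

Calling `bayes_opt(lambda x: (x - 0.3) ** 2)` should return a point near 0.3 after 18 evaluations. In practice the objective would wrap training and validating an SVR for a given hyperparameter setting, and the search space would usually be multi-dimensional and log-scaled.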

2.5. Random Forest (RF)

- Data randomness: a different training subset is generated for each tree through bootstrap sampling.

- Feature randomness: when each node is split, k features (usually $k=\sqrt{m}$ or $k=\log_2 m$) are randomly selected from all m features to form a feature subset, and the optimal split point is chosen from this subset.
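The two sources of randomness above can be illustrated with NumPy. This is a minimal sketch of the sampling mechanics only, not a full random forest: the function names are hypothetical, the choice $k=\lfloor\sqrt{m}\rfloor$ (or $\lfloor\log_2 m\rfloor$) is assumed, and splits are scored by total squared error, the usual regression criterion.

```python
import numpy as np

def bootstrap_indices(n_samples, rng):
    # Data randomness: draw n row indices with replacement for one tree
    return rng.integers(0, n_samples, size=n_samples)

def node_feature_subset(m, rng, rule="sqrt"):
    # Feature randomness: choose k of the m features afresh at every split
    k = int(np.sqrt(m)) if rule == "sqrt" else int(np.log2(m))
    return rng.choice(m, size=max(1, k), replace=False)

def best_split_on_subset(X, y, features):
    # Pick the (feature, threshold) pair with the lowest total squared error,
    # searching only the candidate features drawn for this node
    best_j, best_t, best_sse = None, None, np.inf
    for j in features:
        for t in np.unique(X[:, j])[:-1]:
            left, right = y[X[:, j] <= t], y[X[:, j] > t]
            sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
            if sse < best_sse:
                best_j, best_t, best_sse = int(j), float(t), sse
    return best_j, best_t, best_sse
```

A tree would call `bootstrap_indices` once and `node_feature_subset` plus `best_split_on_subset` at every node. Because each bootstrap sample contains only about 63% of the distinct rows, the remaining out-of-bag rows can serve as a built-in validation set.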

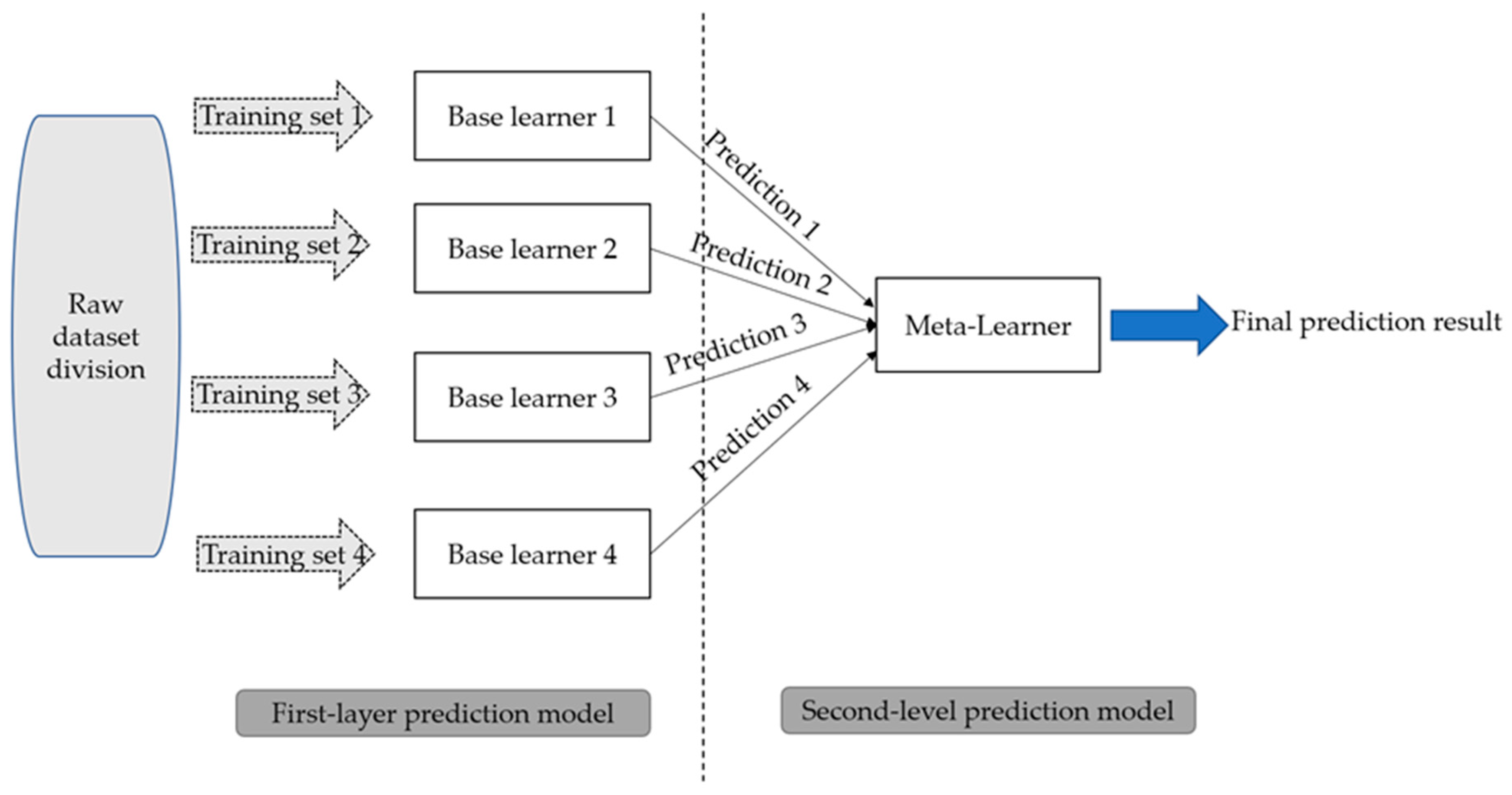

2.6. Stacking Model

- Layer 1 (base learners): multiple different base models (e.g., SVR, XGBoost, RF, and TCN) are trained independently on the training set $D_{\text{train}}$. These base models then predict the validation set $D_{\text{val}}$, and their predictions form a new feature matrix $Z_{\text{val}}$. Predictions $Z_{\text{test}}$ for the test set are generated at the same time.

- Layer 2 (meta-learner): the validation-set predictions $Z_{\text{val}}$ and the corresponding true labels $Y_{\text{val}}$ form a new training set for the meta-model, which is usually either a simple linear model (such as ridge regression) or a more expressive model (such as XGBoost). Finally, the trained meta-model takes $Z_{\text{test}}$ as input to produce the final predictions for the test set.
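The two layers above can be sketched with NumPy on a toy regression problem. To keep the example self-contained, two simple stand-ins (ordinary least squares and a k-nearest-neighbour average) play the role of the base learners instead of SVR, XGBoost, RF, and TCN, and ridge regression, one of the meta-learner options named above, forms the second layer. The data, split sizes, and function names are hypothetical, and no dynamic weighting is applied here.

```python
import numpy as np

def fit_ols(X, y):
    # Stand-in base model 1: ordinary least squares with intercept
    A = np.c_[np.ones(len(X)), X]
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda Xq: np.c_[np.ones(len(Xq)), Xq] @ coef

def fit_knn(X, y, k=5):
    # Stand-in base model 2: k-nearest-neighbour average on the first feature
    def predict(Xq):
        d = np.abs(Xq[:, None, 0] - X[None, :, 0])
        nn = np.argsort(d, axis=1)[:, :k]
        return y[nn].mean(axis=1)
    return predict

def fit_ridge(Z, y, lam=1e-3):
    # Meta-learner: closed-form ridge regression (intercept not penalized)
    A = np.c_[np.ones(len(Z)), Z]
    reg = lam * np.eye(A.shape[1])
    reg[0, 0] = 0.0
    coef = np.linalg.solve(A.T @ A + reg, A.T @ y)
    return lambda Zq: np.c_[np.ones(len(Zq)), Zq] @ coef

def stack(base_fitters, X_tr, y_tr, X_val, y_val, X_te):
    models = [fit(X_tr, y_tr) for fit in base_fitters]   # Layer 1: train on D_train
    Z_val = np.column_stack([m(X_val) for m in models])  # meta-features Z_val
    Z_te = np.column_stack([m(X_te) for m in models])    # meta-features Z_test
    meta = fit_ridge(Z_val, y_val)                       # Layer 2: fit on (Z_val, Y_val)
    return meta(Z_te), Z_te

def rmse(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))

rng = np.random.default_rng(1)
X = rng.uniform(0.0, 3.0, size=(300, 1))
y = np.sin(2.0 * X[:, 0]) + 0.5 * X[:, 0] + rng.normal(0.0, 0.1, 300)
idx = rng.permutation(300)
tr, va, te = idx[:180], idx[180:240], idx[240:]
pred, Z_te = stack([fit_ols, fit_knn], X[tr], y[tr], X[va], y[va], X[te])
```

The key design point is that the meta-learner is fitted on predictions for data the base models did not see during training, so it learns how much to trust each model rather than rewarding whichever one overfits. For a displacement time series, the training, validation, and test sets would be split chronologically rather than by random permutation.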

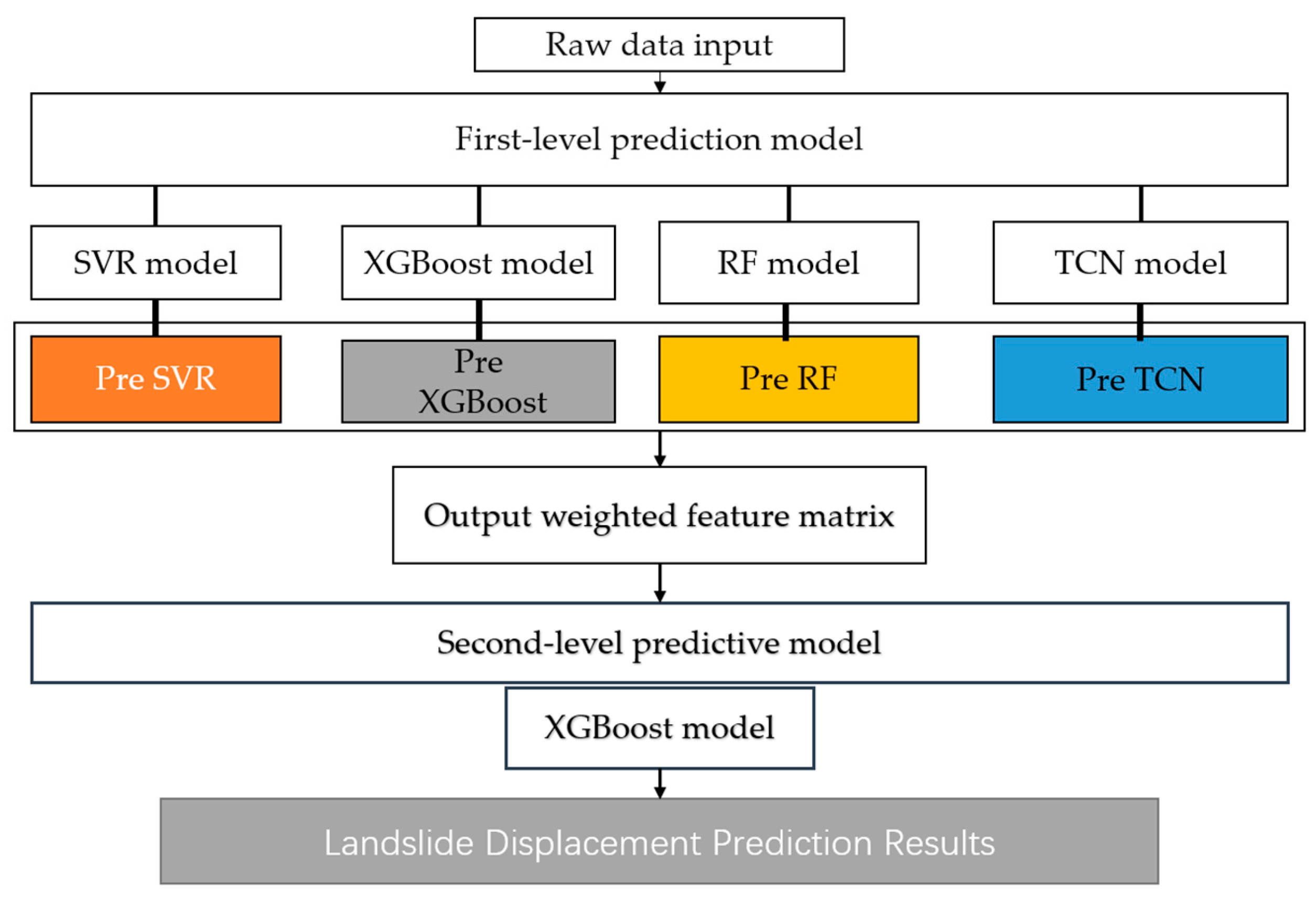

3. Model Fusion Based on the Stacking Model

3.1. Dynamic Weighting Mechanism

3.2. Stacking Framework Overview

- (1)

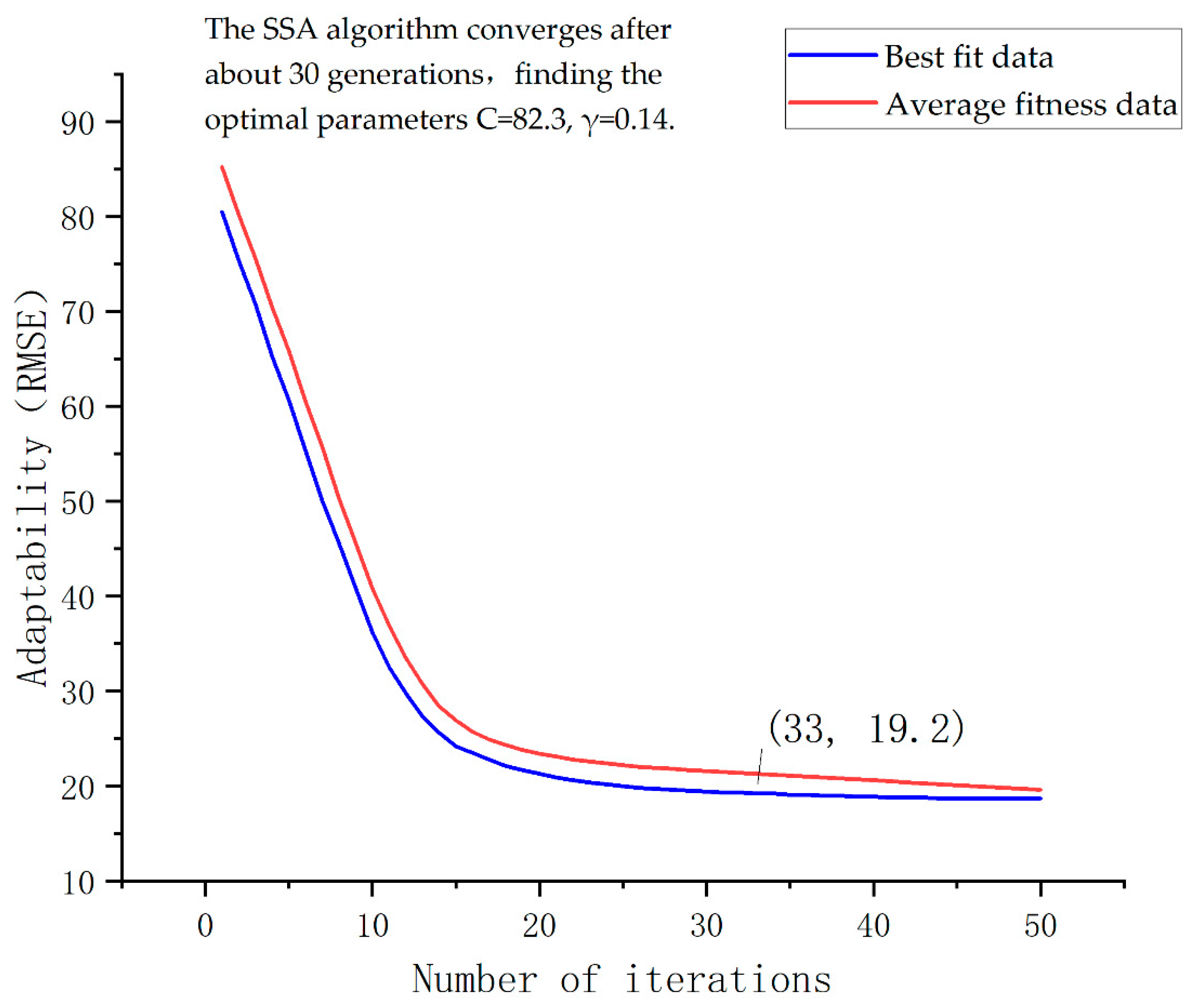

- Base model selection and hyperparameter optimization: SVR, XGBoost, TCN, and random forest algorithms with large differences and complementary performance are selected as the basic prediction model of the first layer. The hyperparameters of the SVR model are optimized by Bayesian optimization, and the hyperparameters of other models are set according to pre-experiments and empirical settings.

- (2)

- Independent training of base models: Using the divided training set data, each foundation model is trained independently to ensure that each model can fully learn the features of the displacement sequence.

- (3)

- Dynamic weight calculation and meta-feature construction: The trained foundation models are applied to the validation set to obtain their respective prediction results. Based on the prediction accuracy (RMSE) and stability (variance) of each model on the validation set, the corresponding fusion weight is calculated by using the dynamic weight formula. The predictions of each foundation model are multiplied by their dynamic weights to obtain weighted predicted values, and these weighted predictions are combined into a new meta-feature dataset.

- (4)

- Meta-model training and fusion prediction: The XGBoost meta-model of the second layer is trained by taking the newly generated meta-feature dataset as the input and the real displacement value of the corresponding validation set as the target. The meta-model learns how to optimally integrate the weighted predictions of each basic model to form the final stacking fusion prediction model.
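Step (3) can be sketched as follows. The dynamic weight formula itself is not given in this section, so the rule below is an assumption: each weight blends the normalized inverse RMSE (accuracy) and the normalized inverse error variance (stability), mixed by the balance coefficient α. The function names and the use of residual variance as the stability measure are hypothetical.

```python
import numpy as np

def dynamic_weights(rmse, var, alpha=0.6, eps=1e-12):
    # Hypothetical rule: alpha * normalized inverse RMSE (accuracy)
    # + (1 - alpha) * normalized inverse variance (stability)
    inv_r = 1.0 / (np.asarray(rmse, dtype=float) + eps)
    inv_v = 1.0 / (np.asarray(var, dtype=float) + eps)
    w = alpha * inv_r / inv_r.sum() + (1.0 - alpha) * inv_v / inv_v.sum()
    return w / w.sum()  # already sums to 1; renormalize against rounding

def weighted_meta_features(preds, y_true, alpha=0.6):
    # preds: (n_samples, n_models) base-model predictions on the validation set
    resid = preds - y_true[:, None]
    rmse = np.sqrt((resid ** 2).mean(axis=0))  # accuracy of each model
    var = resid.var(axis=0)                     # stability: variance of its errors
    w = dynamic_weights(rmse, var, alpha)
    return preds * w, w                         # scale each column by its weight
```

With the RMSE and variance values from the weight table, `dynamic_weights([42.5, 30.2, 34.8, 31.5], [55.0, 25.3, 32.7, 28.9])` ranks the models XGBoost > TCN > RF > SVR, the same order as the reported weights, although the individual values differ because the formula here is assumed. The weighted matrix returned by `weighted_meta_features` would then be the input to the second-layer meta-model.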

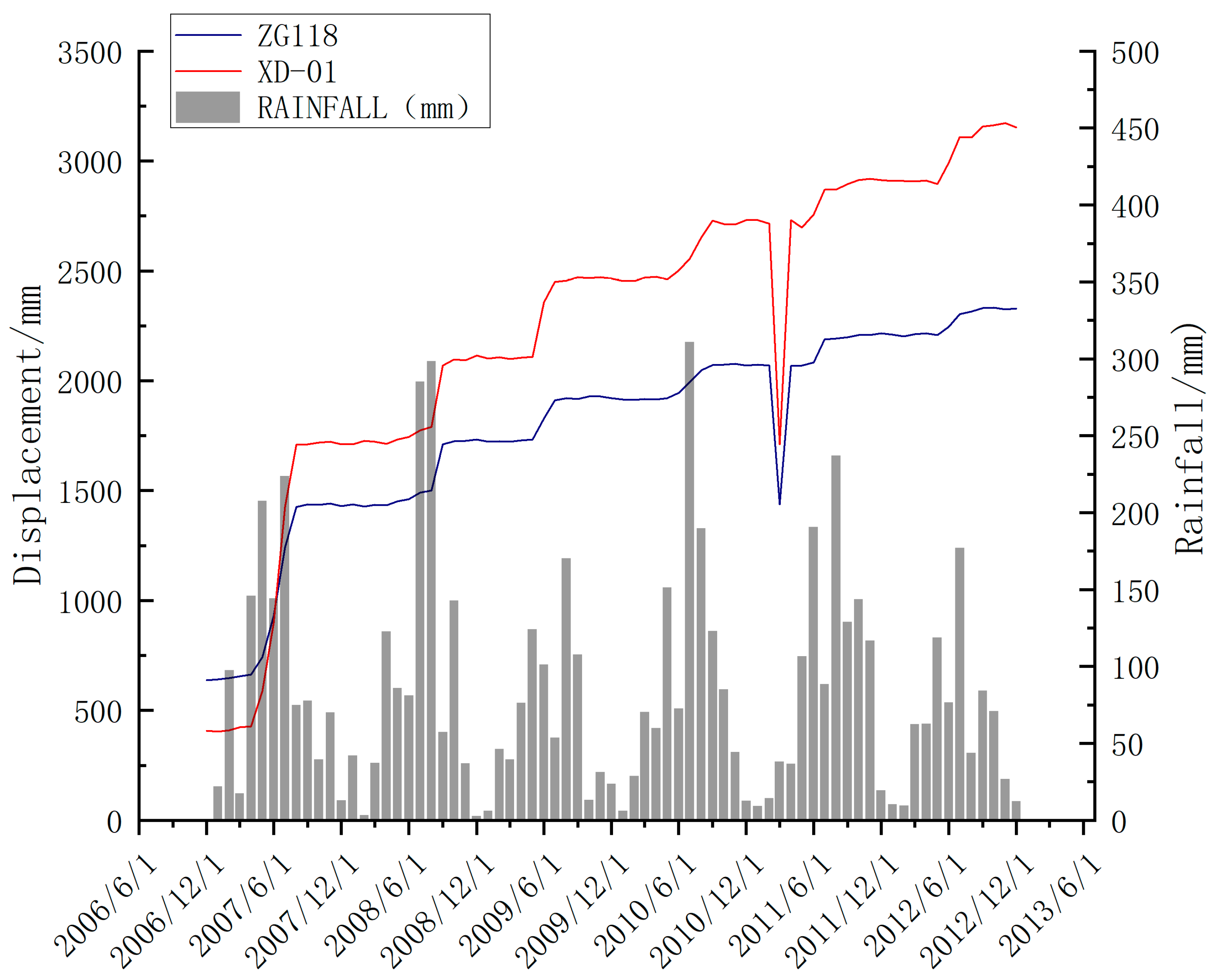

4. Displacement Prediction for the Baishui River Landslide

4.1. Overview of Engineering Geology

4.2. Data Preprocessing

4.2.1. Outlier Detection Using the Grubbs Criterion
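For reference, the Grubbs criterion flags the observation farthest from the sample mean when its studentized deviation exceeds a critical value derived from Student's t distribution, and the test is repeated until no outlier remains. The sketch below assumes a two-sided test at α = 0.05 and computes the t quantile numerically (Simpson integration plus bisection) so that only NumPy is needed; the function names are hypothetical and this is not necessarily the exact procedure applied to the monitoring data.

```python
import math
import numpy as np

def t_cdf(t, df, steps=4000):
    # Student-t CDF: 0.5 plus Simpson integral of the density over [0, |t|]
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)
    x = np.linspace(0.0, abs(t), steps + 1)
    pdf = c * (1.0 + x ** 2 / df) ** (-(df + 1) / 2)
    h = abs(t) / steps
    area = h / 3 * (pdf[0] + pdf[-1] + 4 * pdf[1:-1:2].sum() + 2 * pdf[2:-1:2].sum())
    return 0.5 + math.copysign(area, t)

def t_ppf(p, df):
    # Upper quantile (p > 0.5) of Student's t by bisection on t_cdf
    lo, hi = 0.0, 1000.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def grubbs_critical(n, alpha=0.05):
    # Two-sided Grubbs critical value for a sample of size n
    t = t_ppf(1.0 - alpha / (2 * n), n - 2)
    return (n - 1) / math.sqrt(n) * math.sqrt(t ** 2 / (n - 2 + t ** 2))

def grubbs_outliers(x, alpha=0.05):
    # Repeatedly test the point farthest from the mean; return original indices
    values, index = [float(v) for v in x], list(range(len(x)))
    flagged = []
    while len(values) > 2:
        arr = np.asarray(values)
        dev = np.abs(arr - arr.mean())
        j = int(np.argmax(dev))
        s = arr.std(ddof=1)
        if s == 0 or dev[j] / s <= grubbs_critical(len(arr), alpha):
            break
        flagged.append(index.pop(j))
        values.pop(j)
    return flagged
```

For a monthly displacement series, the test would typically be applied to the monthly increments rather than the cumulative values, since a steadily growing cumulative curve is not a sample from a single distribution.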

4.2.2. Cubic Spline Interpolation Correction
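Assuming the spline correction here means cubic (third-order) spline interpolation, flagged values can be replaced by refitting a spline through the retained points and evaluating it at the flagged times. The sketch below builds a natural cubic spline (zero second derivative at both ends, an assumed boundary condition) with NumPy; the function names are hypothetical.

```python
import numpy as np

def natural_cubic_spline(xk, yk):
    # Solve for second derivatives M_i with natural boundary M_0 = M_n = 0
    xk, yk = np.asarray(xk, float), np.asarray(yk, float)
    n = len(xk) - 1
    h = np.diff(xk)
    A, rhs = np.zeros((n + 1, n + 1)), np.zeros(n + 1)
    A[0, 0] = A[n, n] = 1.0
    for i in range(1, n):
        A[i, i - 1], A[i, i], A[i, i + 1] = h[i - 1], 2.0 * (h[i - 1] + h[i]), h[i]
        rhs[i] = 6.0 * ((yk[i + 1] - yk[i]) / h[i] - (yk[i] - yk[i - 1]) / h[i - 1])
    M = np.linalg.solve(A, rhs)

    def evaluate(x):
        # Locate the interval [x_i, x_{i+1}] and apply the cubic on it
        x = np.asarray(x, float)
        i = np.clip(np.searchsorted(xk, x) - 1, 0, n - 1)
        hi, a, b = h[i], xk[i + 1] - x, x - xk[i]
        return ((M[i] * a ** 3 + M[i + 1] * b ** 3) / (6.0 * hi)
                + (yk[i] / hi - M[i] * hi / 6.0) * a
                + (yk[i + 1] / hi - M[i + 1] * hi / 6.0) * b)
    return evaluate

def spline_fill(t, y, bad):
    # Fit the spline to the retained points only and re-estimate the flagged ones
    t, out = np.asarray(t, float), np.asarray(y, float).copy()
    keep = np.setdiff1d(np.arange(len(out)), bad)
    s = natural_cubic_spline(t[keep], out[keep])
    out[bad] = s(t[bad])
    return out
```

For example, with a linear series `y = 2t + 1` at `t = 0, ..., 7` and a spurious value at index 3, `spline_fill(t, y, [3])` restores the value 7.0, because a natural spline through collinear points is the line itself.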

5. Landslide Displacement Prediction

5.1. Experimental Design and Model Training

5.1.1. Data Division and Feature Engineering

5.1.2. Base Model Parameter Settings

5.1.3. Stacking Fusion Mechanism

5.2. Forecast Results and Analysis

5.2.1. Model Performance Indicators

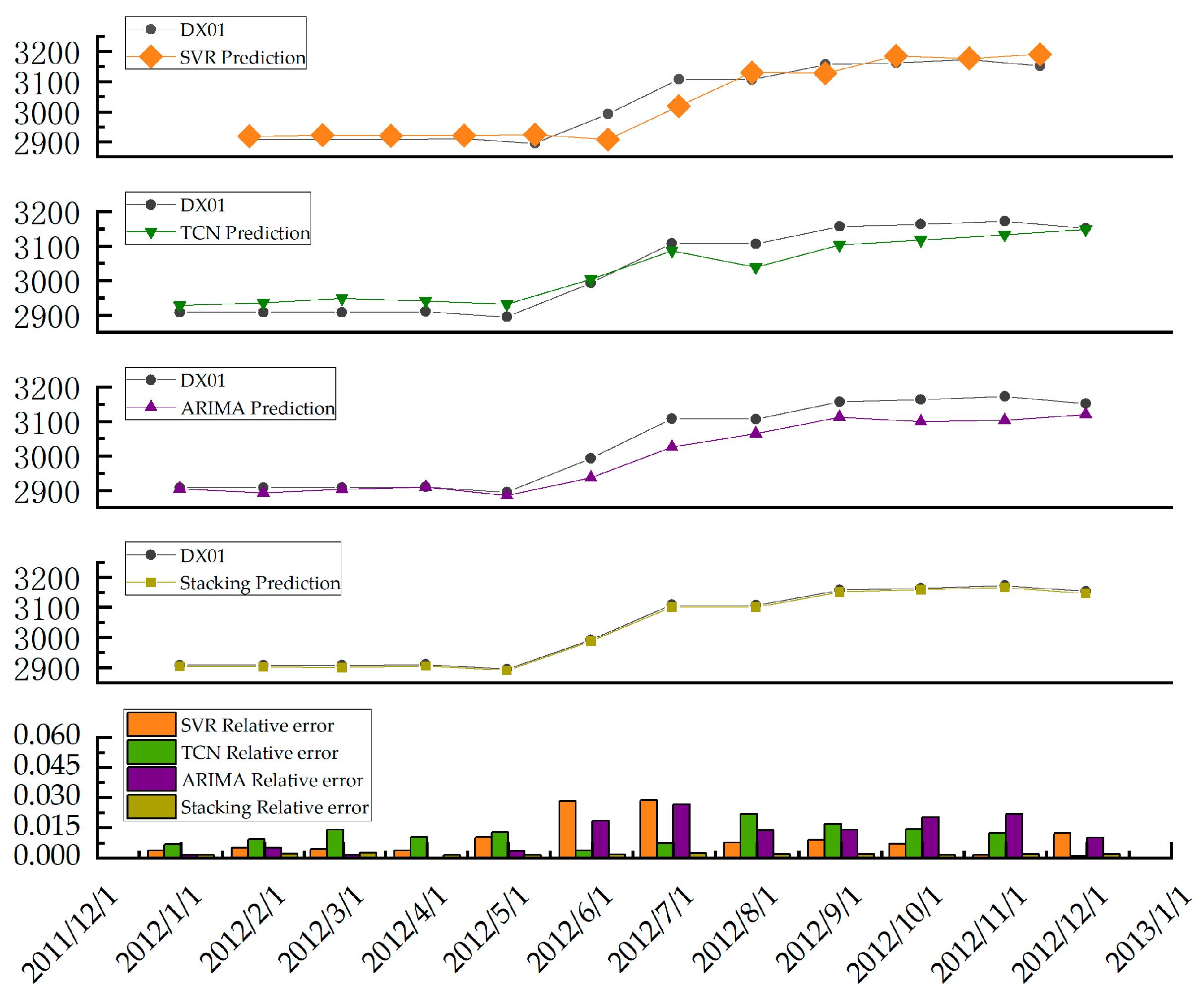

5.2.2. Comparison of Prediction Curves

5.3. Comparative Experiments and Discussions

6. Conclusions

6.1. Research Conclusions

- (1)

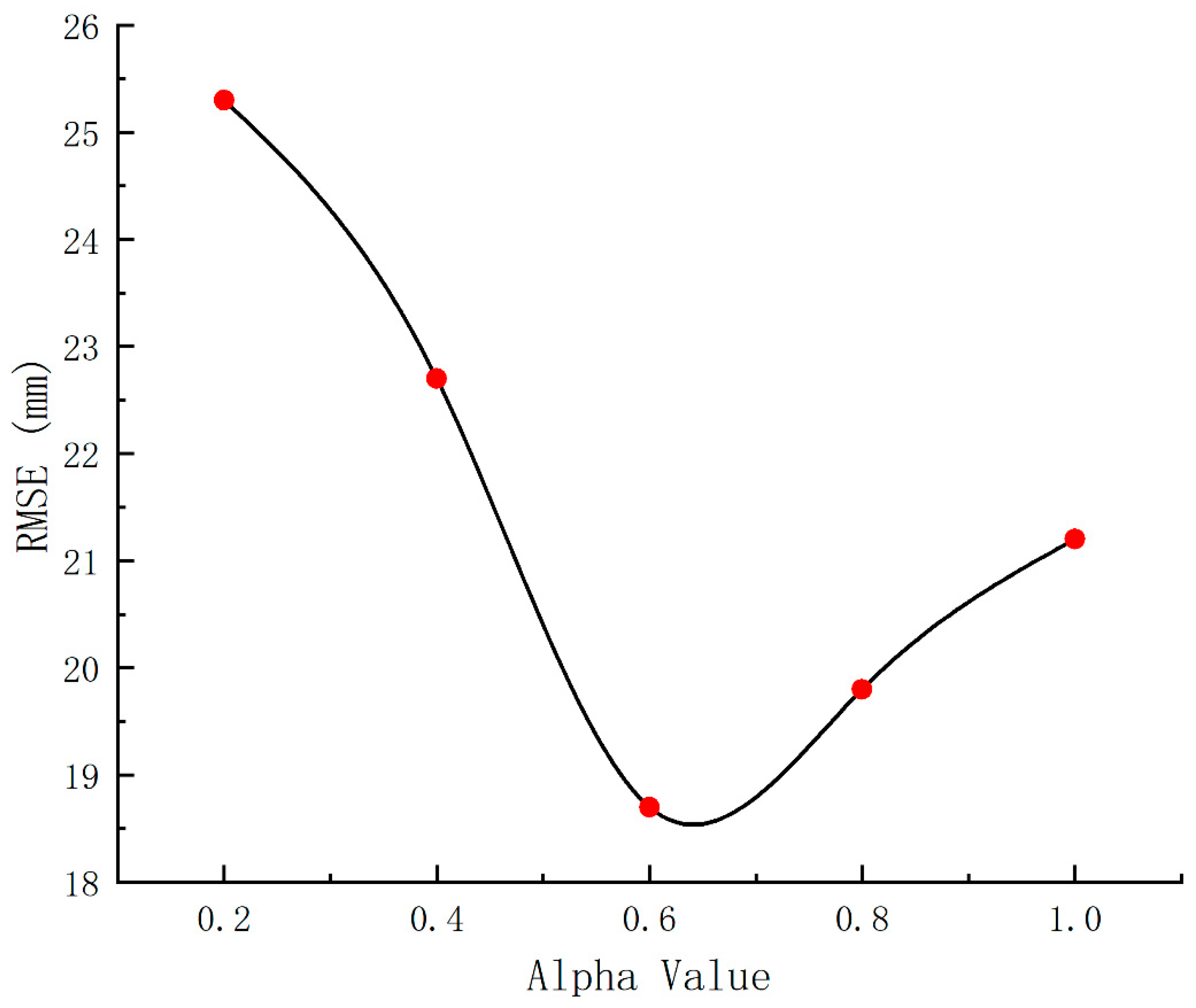

- A high-precision dynamic fusion framework was proposed as follows: by introducing a dynamic weight allocation mechanism centered on prediction accuracy (RMSE) and stability (variance), and setting an accuracy–stability balance coefficient (α = 0.6), an adaptive weighted stacking model was successfully constructed. This framework effectively addresses the lag in response of traditional static fusion methods during displacement mutation periods, significantly enhancing the predictive model’s adaptability to complex working conditions.

- (2)

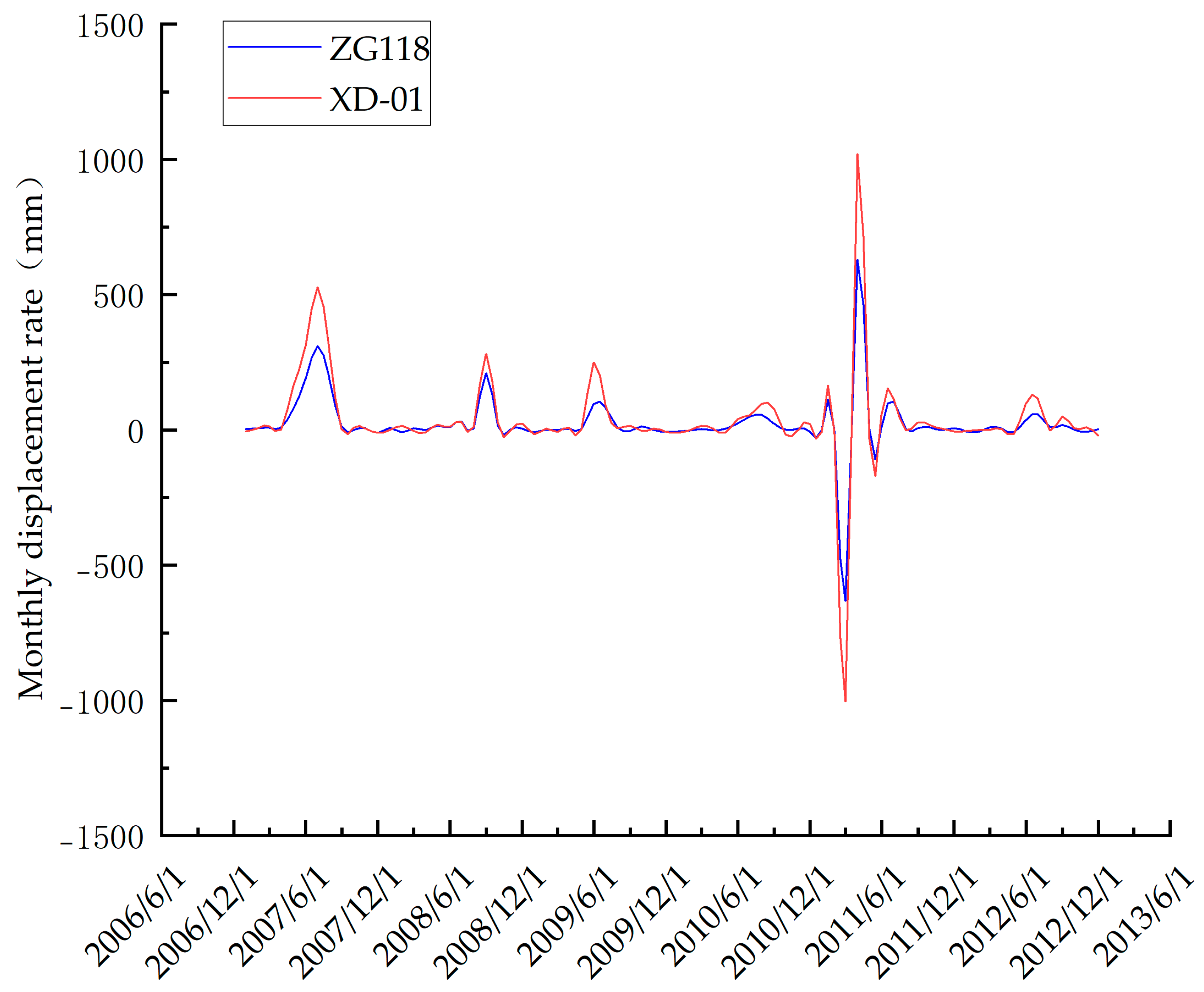

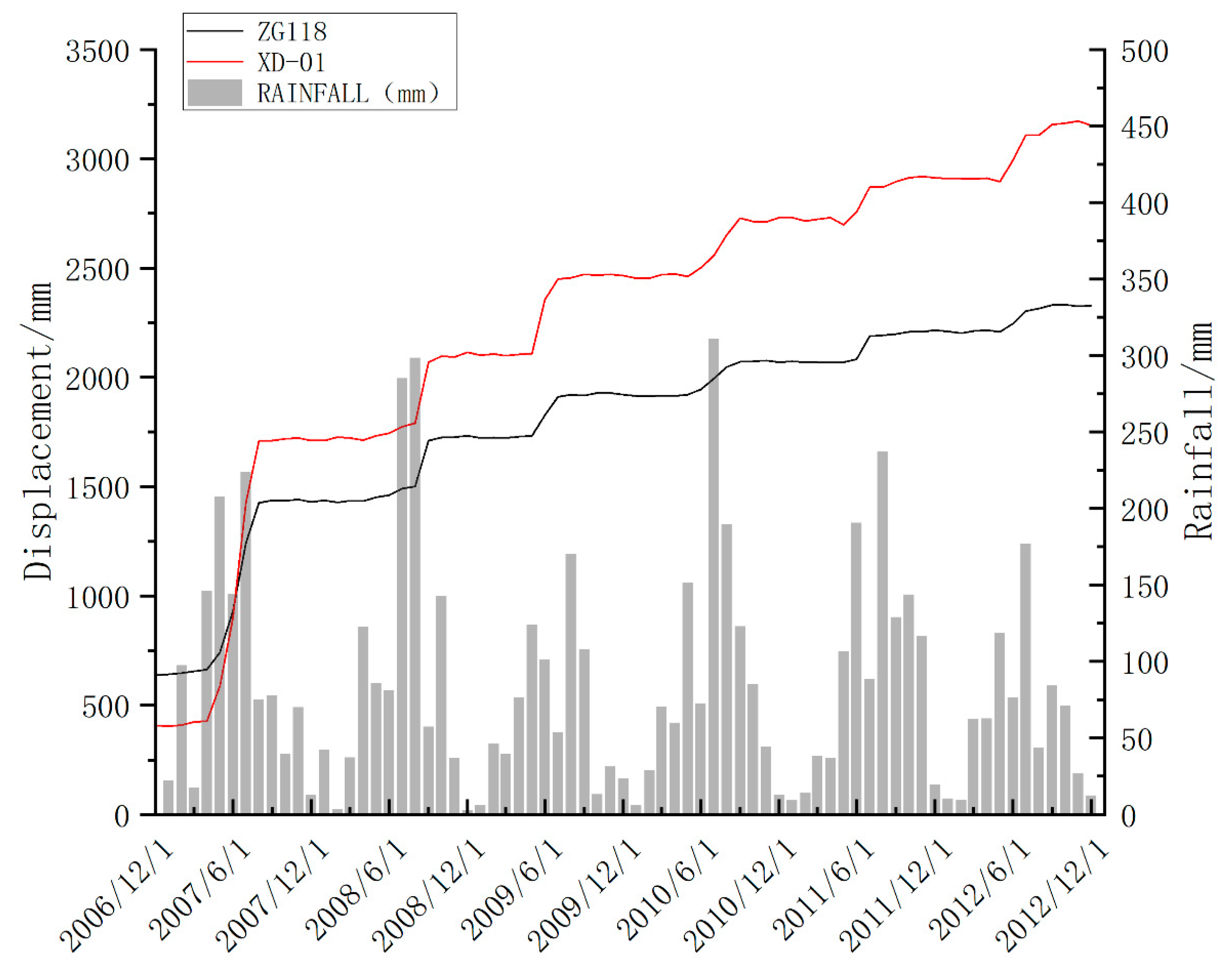

- The model’s excellent predictive performance has been validated: in an empirical study of a typical stacked-layer landslide (Baishui River landslide), the hybrid model demonstrated a significant advantage. On the test set at monitoring point XD-01, its R2 reached 0.9613, approximately 6.5% higher than the best single model (XGBoost); its RMSE (18.73 mm) and MAE (19.23 mm) decreased by 51.2% and 42.0%, respectively, compared to the TCN model. Notably, the model accurately captured the displacement surge (ΔF = 125.3 mm) triggered by heavy rainfall in July 2011, with an error of less than 5%, fully demonstrating the strong capability of multi-model collaboration in characterizing complex displacement trends and abrupt changes.

- (3)

- Effectively complementing the advantages of heterogeneous models: This stacking framework organically integrates the anti-overfitting characteristics of SVR, XGBoost, TCN, and random forest. This “ensemble of ensembles” strategy provides a more reliable technical tool for monthly-scale landslide disaster early warning in the Three Gorges Reservoir area.

6.2. Practical Application Challenges and Prospects

- (1) Real-time data and quality assurance: model performance depends on high-quality, real-time displacement and rainfall data streams. Practical applications require stable, reliable automated monitoring and data transmission links, as well as more robust algorithms to cope with the more frequent data loss and noise of field environments.

- (2) Model generalization and data diversity: this study validated the model on a single case, the Baishui River landslide. An important direction for future work is to incorporate more landslide cases with different geomechanical conditions and longer monitoring records to further verify and improve the model's generality and robustness.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

List of Abbreviations

| Abbreviation | Definition |
|---|---|
| SVR | Support vector regression |
| XGBoost | Extreme gradient boosting |
| TCN | Temporal convolutional network |
| RF | Random forest |
| RMSE | Root mean square error |
| MAE | Mean absolute error |


| Time | ZG118 | XD-1 | Rainfall |
|---|---|---|---|
| 2010/11 | 2076.4 | 2710.5 | 12.9 |
| 2010/12 | 2069.2 | 2731.6 | 9.4 |
| 2011/1 | 2073.4 | 2731.5 | 14.5 |
| 2011/2 | 2069.7 | 2715.7 | 38.4 |
| 2011/3 | 2068.6 | 2723.8 | 36.9 |
| 2011/4 | 2067.4 | 2731.8 | 106.7 |
| 2011/5 | 2070.1 | 2696.6 | 190.7 |

| Base Model | RMSE (mm) | Variance (mm²) | $w_i$ (α = 0.6) |
|---|---|---|---|
| SVR | 42.5 | 55.0 | 0.16 |
| XGBoost | 30.2 | 25.3 | 0.34 |
| RF | 34.8 | 32.7 | 0.24 |
| TCN | 31.5 | 28.9 | 0.26 |

| Model | R² | RMSE (mm) | MAE (mm) |
|---|---|---|---|
| SVR | 0.8743 | 40.8986 | 30.8392 |
| TCN | 0.8457 | 38.4565 | 33.1624 |
| Stacking | 0.9613 | 18.7329 | 19.2326 |

| Model | R² | RMSE (mm) | MAE (mm) |
|---|---|---|---|
| SVR | 0.8743 | 40.8986 | 30.8392 |
| ARIMA | 0.8685 | 41.0858 | 32.0194 |
| TCN | 0.8457 | 38.4565 | 33.1624 |
| Stacking | 0.9613 | 18.7329 | 19.2326 |
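The indicators in the tables above follow the standard definitions of R², RMSE, and MAE, which can be computed with NumPy as below. The function names are hypothetical, and the relative-reduction helper simply expresses the percentage changes quoted in the conclusions.

```python
import numpy as np

def r2_score(y_true, y_pred):
    # Coefficient of determination: 1 - SS_res / SS_tot
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = ((y_true - y_pred) ** 2).sum()
    ss_tot = ((y_true - y_true.mean()) ** 2).sum()
    return float(1.0 - ss_res / ss_tot)

def rmse(y_true, y_pred):
    # Root mean square error, in the units of the data (mm here)
    e = np.asarray(y_true, float) - np.asarray(y_pred, float)
    return float(np.sqrt((e ** 2).mean()))

def mae(y_true, y_pred):
    # Mean absolute error, in the units of the data (mm here)
    e = np.asarray(y_true, float) - np.asarray(y_pred, float)
    return float(np.abs(e).mean())

def relative_reduction(baseline, model):
    # Percentage by which `model` lowers an error metric relative to `baseline`
    return 100.0 * (baseline - model) / baseline
```

For example, `relative_reduction(33.1624, 19.2326)` gives about 42.0, the MAE reduction of the stacking model relative to TCN.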

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Hu, A.; Wang, Y.; Wu, H.; Qiu, D. Research on Landslide Displacement Prediction Using Stacking-Based Machine Learning Fusion Model. Appl. Sci. 2025, 15, 11747. https://doi.org/10.3390/app152111747
