Optical FBG Sensor-Based System for Low-Flying UAV Detection and Localization

Abstract

1. Introduction

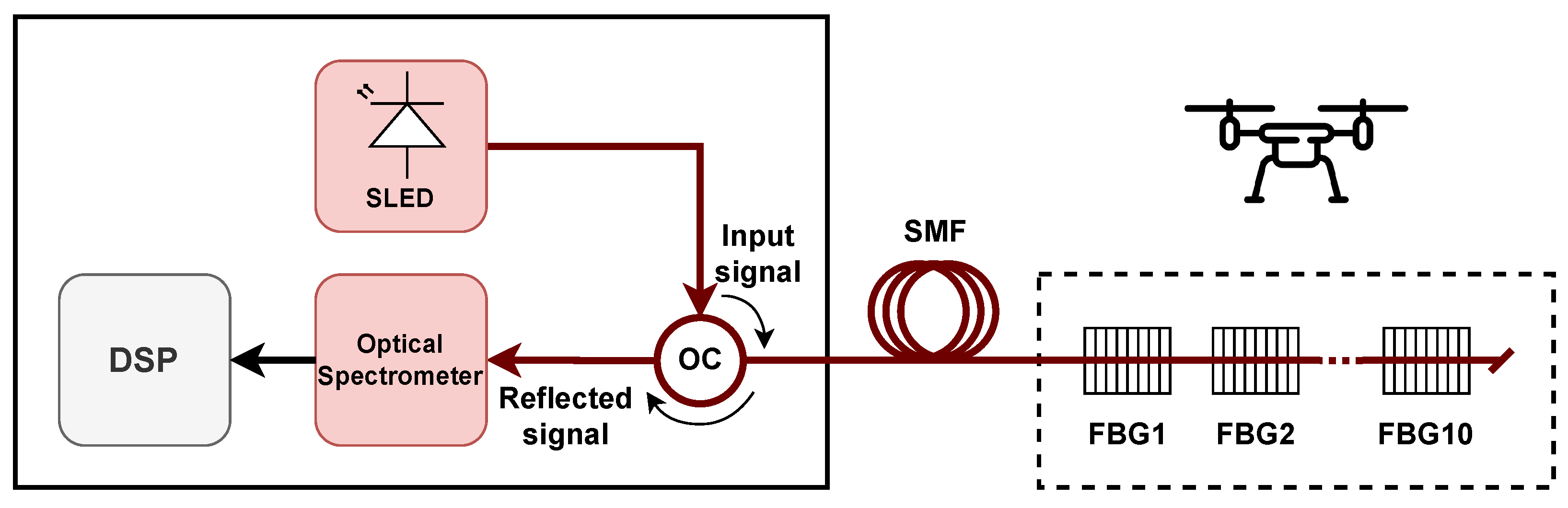

2. Methods for the Analysis of UAV Downwash

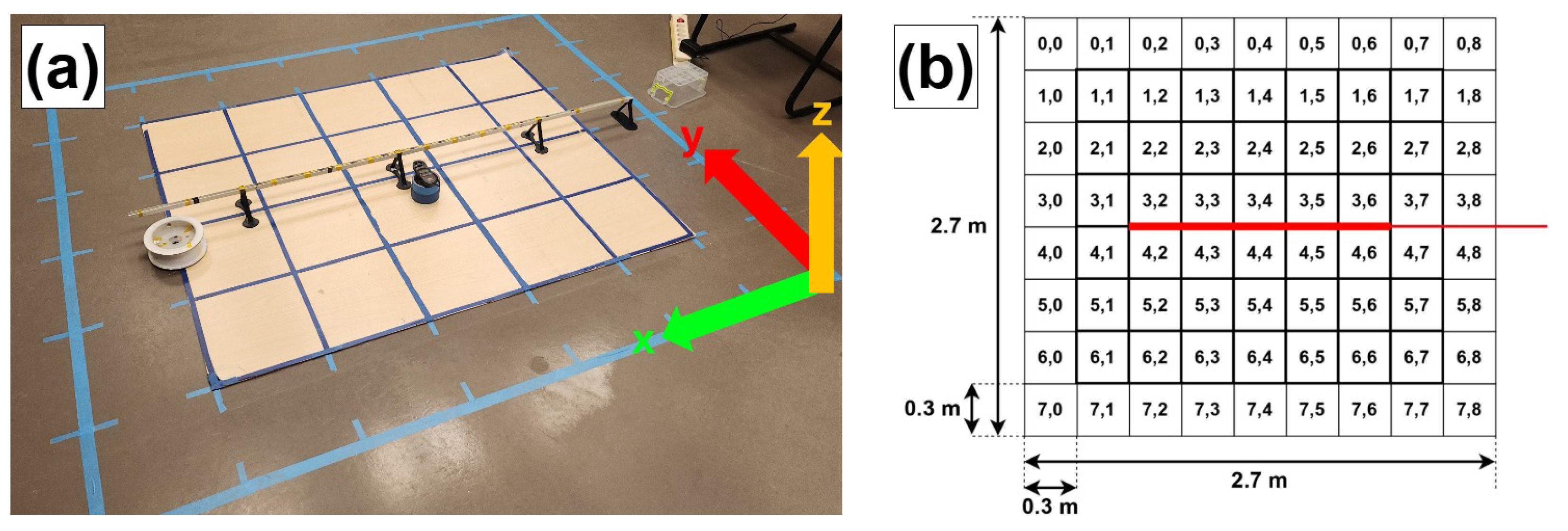

3. Experimental Setup

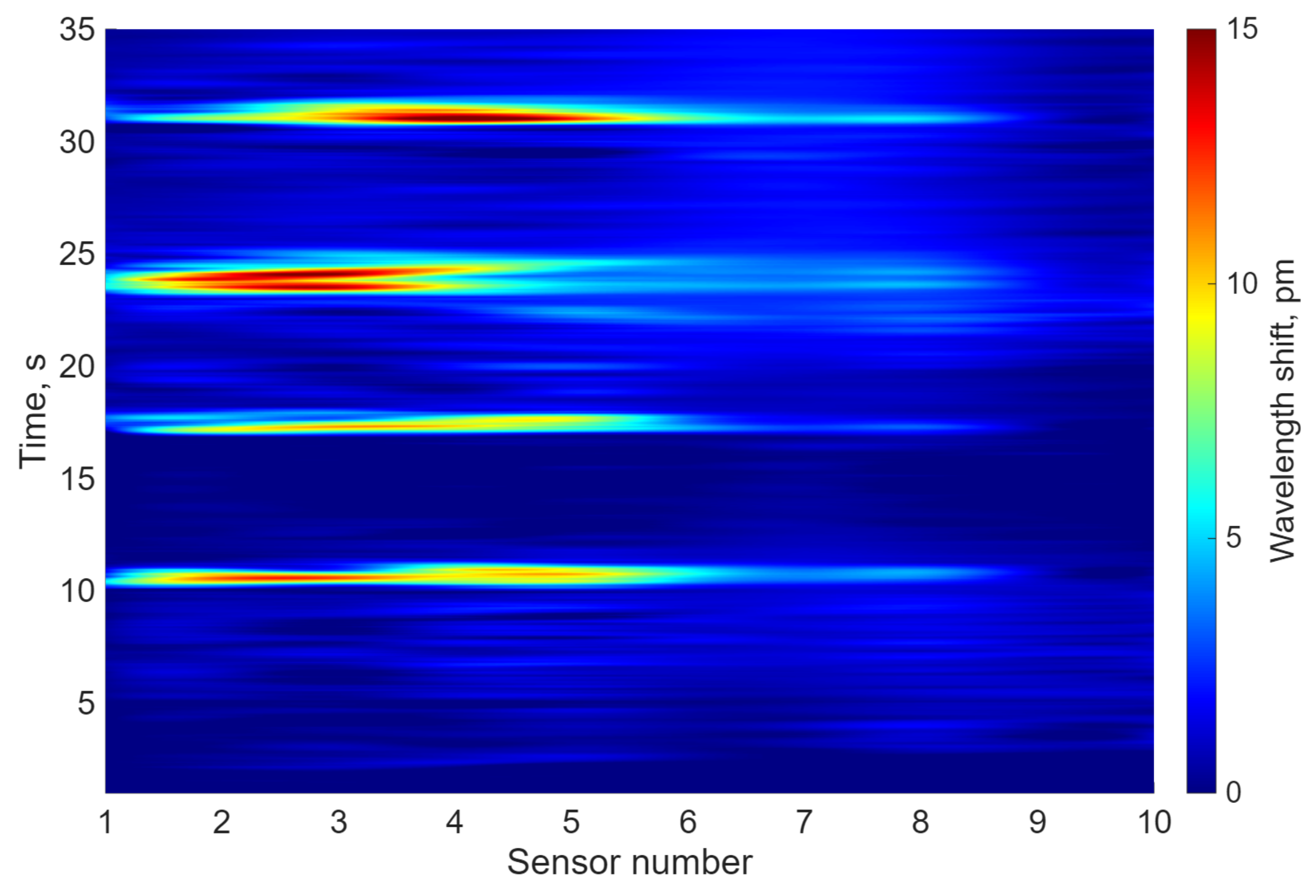

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| CNN | convolutional neural network |
| C-UAS | counter-unmanned aerial systems |
| DAS | distributed acoustic sensing |
| DSP | digital signal processor |
| EM | electromagnetic |
| EMI | electromagnetic interference |
| FBG | fiber Bragg grating |
| FMCW | frequency-modulated continuous wave |
| FWHM | full width at half-maximum |
| LiDAR | light detection and ranging |
| OC | optical circulator |
| PC | personal computer |
| RCS | radar cross-section |
| RF | radio frequency |
| ROF | radio-over-fiber |
| SLED | superluminescent light-emitting diode |
| SMF | single-mode optical fiber |
| SRSL | suppression ratio of side-lobes |
| UAV | unmanned aerial vehicle |

References

1. Kiss, B.; Ballagi, Á.; Kuczmann, M. Overview Study of the Applications of Unmanned Aerial Vehicles in the Transportation Sector. Eng. Proc. 2024, 79, 11.

2. Sivakumar, M.; Tyj, N.M. A Literature Survey of Unmanned Aerial Vehicle Usage for Civil Applications. J. Aerosp. Technol. Manag. 2021, 13, e4021.

3. Khan, M.A.; Menouar, H.; Eldeeb, A.; Abu-Dayya, A.; Salim, F.D. On the Detection of Unauthorized Drones—Techniques and Future Perspectives: A Review. IEEE Sensors J. 2022, 22, 11439–11455.

4. Semenyuk, V.; Kurmashev, I.; Lupidi, A.; Alyoshin, D.; Kurmasheva, L.; Cantelli-Forti, A. Advances in UAV detection: Integrating multi-sensor systems and AI for enhanced accuracy and efficiency. Int. J. Crit. Infrastruct. Prot. 2025, 49, 100744.

5. Mandal, S.; Chen, L.; Alaparthy, V.; Cummings, M.L. Acoustic Detection of Drones through Real-time Audio Attribute Prediction. In Proceedings of the AIAA Scitech 2020 Forum, Orlando, FL, USA, 6–10 January 2020.

6. Akbal, E.; Akbal, A.; Dogan, S.; Tuncer, T. An automated accurate sound-based amateur drone detection method based on skinny pattern. Digit. Signal Process. 2023, 136, 104012.

7. Lim, J.; Joo, J.; Kim, S.C. Performance Enhancement of Drone Acoustic Source Localization Through Distributed Microphone Arrays. Sensors 2025, 25, 1928.

8. Liu, L.; Sun, B.; Li, J.; Ma, R.; Li, G.; Zhang, L. Localization of UAVs Using Acoustic Signals Collected by Distributed Acoustic-Electric Sensors. In Proceedings of the 2025 IEEE 15th International Conference on Signal Processing, Communications and Computing (ICSPCC), Hong Kong, 18–21 July 2025; pp. 1–5.

9. Tejera-Berengue, D.; Zhu-Zhou, F.; Utrilla-Manso, M.; Gil-Pita, R.; Rosa-Zurera, M. Analysis of Distance and Environmental Impact on UAV Acoustic Detection. Electronics 2024, 13, 643.

10. Fang, J.; Li, Y.; Ji, P.N.; Wang, T. Drone Detection and Localization Using Enhanced Fiber-Optic Acoustic Sensor and Distributed Acoustic Sensing Technology. J. Light. Technol. 2023, 41, 822–831.

11. Chen, J.; Li, H.; Ai, K.; Shi, Z.; Xiao, X.; Yan, Z.; Liu, D.; Ping Shum, P.; Sun, Q. Low-Altitude UAV Surveillance System via Highly Sensitive Distributed Acoustic Sensing. IEEE Sensors J. 2024, 24, 32237–32246.

12. Fang, J.; Li, Y.; Ji, P.N.; Wang, T. Remote Drone Detection and Localization with Fiber-Optic Microphones and Distributed Acoustic Sensing. In Proceedings of the 2022 Optical Fiber Communications Conference and Exhibition (OFC), San Diego, CA, USA, 6–10 March 2022; pp. 1–3.

13. Takano, H.; Nakahara, M.; Suzuoki, K.; Nakayama, Y.; Hisano, D. 300-Meter Long-Range Optical Camera Communication on RGB-LED-Equipped Drone and Object-Detecting Camera. IEEE Access 2022, 10, 55073–55080.

14. Zitar, R.A.; Al-Betar, M.; Ryalat, M.; Kassaymeh, S. A review of UAV Visual Detection and Tracking Methods. arXiv 2023, arXiv:2306.05089.

15. Liu, Z.; An, P.; Yang, Y.; Qiu, S.; Liu, Q.; Xu, X. Vision-Based Drone Detection in Complex Environments: A Survey. Drones 2024, 8, 643.

16. Seidaliyeva, U.; Ilipbayeva, L.; Utebayeva, D.; Smailov, N.; Matson, E.T.; Tashtay, Y.; Turumbetov, M.; Sabibolda, A. LiDAR Technology for UAV Detection: From Fundamentals and Operational Principles to Advanced Detection and Classification Techniques. Sensors 2025, 25, 2757.

17. Gong, J.; Yan, J.; Li, D. Radar Challenges and Solutions for Drone Detection. In Proceedings of the 2025 26th International Radar Symposium (IRS), Hamburg, Germany, 21–23 May 2025; pp. 1–8.

18. Larrat, M.; Sales, C. Classification of Flying Drones Using Millimeter-Wave Radar: Comparative Analysis of Algorithms Under Noisy Conditions. Sensors 2025, 25, 721.

19. Khawaja, W.; Ezuma, M.; Semkin, V.; Erden, F.; Ozdemir, O.; Guvenc, I. A Survey on Detection, Classification, and Tracking of UAVs Using Radar and Communications Systems. IEEE Commun. Surv. Tutor. 2025.

20. Gong, J.; Yan, J.; Kong, D.; Li, D. Introduction to Drone Detection Radar with Emphasis on Automatic Target Recognition (ATR) technology. arXiv 2023, arXiv:2307.10326.

21. Deng, M.; Ma, Y.; Wang, C. Design of Airborne Target Detection System with Fusion of Multi-Sensors. In Proceedings of the 2025 5th International Conference on Artificial Intelligence and Industrial Technology Applications (AIITA), Xi’an, China, 28–30 March 2025; pp. 330–334.

22. He, T.; Hou, J.; Chen, D. Multimodal UAV Target Detection Method Based on Acousto-Optical Hybridization. Drones 2025, 9, 627.

23. Mehta, V.; Dadboud, F.; Bolic, M.; Mantegh, I. A Deep Learning Approach for Drone Detection and Classification Using Radar and Camera Sensor Fusion. In Proceedings of the 2023 IEEE Sensors Applications Symposium (SAS), Ottawa, ON, Canada, 18–20 July 2023; pp. 1–6.

24. Jajaga, E.; Rushiti, V.; Ramadani, B.; Pavleski, D.; Cantelli-Forti, A.; Stojkovska, B.; Petrovska, O. An Image-Based Classification Module for Data Fusion Anti-drone System. In Proceedings of the International Conference on Image Analysis and Processing—ICIAP 2022 Workshops; Mazzeo, P.L., Frontoni, E., Sclaroff, S., Distante, C., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 422–433.

25. Frid, A.; Ben-Shimol, Y.; Manor, E.; Greenberg, S. Drones Detection Using a Fusion of RF and Acoustic Features and Deep Neural Networks. Sensors 2024, 24, 2427.

26. Lee, H.; Han, S.; Byeon, J.I.; Han, S.; Myung, R.; Joung, J.; Choi, J. CNN-Based UAV Detection and Classification Using Sensor Fusion. IEEE Access 2023, 11, 68791–68808.

27. Alhussein, A.N.D.; Qaid, M.R.T.M.; Agliullin, T.; Valeev, B.; Morozov, O.; Sakhabutdinov, A. Fiber Bragg Grating Sensors: Design, Applications, and Comparison with Other Sensing Technologies. Sensors 2025, 25, 2289.

28. Tang, Z.; Ma, H.; Qu, Y.; Mao, X. UAV Detection with Passive Radar: Algorithms, Applications, and Challenges. Drones 2025, 9, 76.

29. Akinyemi, T.O.; Omisore, O.M.; Lu, G.; Wang, L. Toward a Fiber Bragg Grating-Based Two-Dimensional Force Sensor for Robot-Assisted Cardiac Interventions. IEEE Sensors Lett. 2022, 6, 5000104.

30. He, X.L.; Wang, D.H.; Wang, X.B.; Xia, Q.; Li, W.C.; Liu, Y.; Wang, Z.Q.; Yuan, L.B. A Cascade Fiber Optic Sensors for Simultaneous Measurement of Strain and Temperature. IEEE Sensors Lett. 2019, 3, 3502304.

31. Mihailov, S.J.; Ding, H.; Szabo, K.; Hnatovsky, C.; Walker, R.B.; Lu, P.; De Silva, M. High Sensitivity Fiber Bragg Grating Humidity Sensors Made With Through-the-Coating Femtosecond Laser Writing and Polyimide Coating Thickening. IEEE Sensors Lett. 2024, 8, 5000404.

32. Jelbuldina, M.; Korganbayev, S.; Seidagaliyeva, Z.; Sovetov, S.; Tuganbekov, T.; Tosi, D. Fiber Bragg Grating Sensor for Temperature Monitoring During HIFU Ablation of Ex Vivo Breast Fibroadenoma. IEEE Sensors Lett. 2019, 3, 5000404.

33. Chourasia, R.K.; Katti, A. Bragg Fibers: From Optical Properties to Applications; Springer Nature: Cham, Switzerland, 2024.

34. Kok, S.P.; Go, Y.I.; Wang, X.; Wong, M.L.D. Advances in Fiber Bragg Grating (FBG) Sensing: A Review of Conventional and New Approaches and Novel Sensing Materials in Harsh and Emerging Industrial Sensing. IEEE Sensors J. 2024, 24, 29485–29505.

35. Braunfelds, J.; Haritonovs, E.; Senkans, U.; Kurbatska, I.; Murans, I.; Porins, J.; Spolitis, S. Designing of Fiber Bragg Gratings for Long-Distance Optical Fiber Sensing Networks. Model. Simul. Eng. 2022, 2022, 8331485.

36. Vaiano, P.; Carotenuto, B.; Pisco, M.; Ricciardi, A.; Quero, G.; Consales, M.; Crescitelli, A.; Esposito, E.; Cusano, A. Lab on Fiber Technology for biological sensing applications. Laser Photonics Rev. 2016, 10, 922–961.

37. Yermakov, O.; Zeisberger, M.; Schneidewind, H.; Kim, J.; Bogdanov, A.; Kivshar, Y.; Schmidt, M.A. Advanced fiber in-coupling through nanoprinted axially symmetric structures. Appl. Phys. Rev. 2023, 10, 011401.

38. Braunfelds, J.; Senkans, U.; Skels, P.; Janeliukstis, R.; Porins, J.; Spolitis, S.; Bobrovs, V. Road Pavement Structural Health Monitoring by Embedded Fiber-Bragg-Grating-Based Optical Sensors. Sensors 2022, 22, 4581.

39. Senkans, U.; Silkans, N.; Spolitis, S.; Braunfelds, J. Comprehensive Analysis of FBG and Distributed Rayleigh, Brillouin, and Raman Optical Sensor-Based Solutions for Road Infrastructure Monitoring Applications. Sensors 2025, 25, 5283.

40. Kozlov, V.; Kharchevskii, A.; Rebenshtok, E.; Bobrovs, V.; Salgals, T.; Ginzburg, P. Universal Software Only Radar with All Waveforms Simultaneously on a Single Platform. Remote Sens. 2024, 16, 1999.

41. Zhang, H.; Qi, L.; Wu, Y.; Musiu, E.M.; Cheng, Z.; Wang, P. Numerical simulation of airflow field from a six–rotor plant protection drone using lattice Boltzmann method. Biosyst. Eng. 2020, 197, 336–351.

42. Wen, S.; Han, J.; Ning, Z.; Lan, Y.; Yin, X.; Zhang, J.; Ge, Y. Numerical analysis and validation of spray distributions disturbed by quad-rotor drone wake at different flight speeds. Comput. Electron. Agric. 2019, 166, 105036.

43. Halim, M.N.A.; Fung, K.V.; Marwah, O.M.F.; Rahim, M.Z.; Saleh, S.J.M.; Hassan, S. CFD simulation on airflow behavior of quadcopter fertilizing drone for pineapple plantation. AIP Conf. Proc. 2023, 2530, 040009.

44. Shouji, C.; Alidoost Dafsari, R.; Yu, S.H.; Choi, Y.; Lee, J. Mean and turbulent flow characteristics of downwash air flow generated by a single rotor blade in agricultural drones. Comput. Electron. Agric. 2021, 190, 106471.

45. Ghirardelli, M.; Kral, S.T.; Müller, N.C.; Hann, R.; Cheynet, E.; Reuder, J. Flow Structure around a Multicopter Drone: A Computational Fluid Dynamics Analysis for Sensor Placement Considerations. Drones 2023, 7, 467.

46. Parra, P.H.G.; Angulo, M.V.D.; Gaona, G.E.E. CFD Analysis of two and four blades for multirotor Unmanned Aerial Vehicle. In Proceedings of the 2018 IEEE 2nd Colombian Conference on Robotics and Automation (CCRA), Barranquilla, Colombia, 1–3 November 2018; pp. 1–6.

47. Lahitani, A.R.; Permanasari, A.E.; Setiawan, N.A. Cosine similarity to determine similarity measure: Study case in online essay assessment. In Proceedings of the 2016 4th International Conference on Cyber and IT Service Management, Bandung, Indonesia, 26–27 April 2016; pp. 1–6.

48. Zhang, R.; Xu, Z.; Gou, X. ELECTRE II Method Based on the Cosine Similarity to Evaluate the Performance of Financial Logistics Enterprises under Double Hierarchy Hesitant Fuzzy Linguistic Environment. Fuzzy Optim. Decis. Mak. 2023, 22, 23–49.

| Ref. | Year | Type of UAV | Rotor Diameter, m | Assessment Environment | Intended Application | Result |
|---|---|---|---|---|---|---|
| [41] | 2020 | Custom six-rotor drone. | 0.381 | Lattice Boltzmann method (LBM) fluid simulation and indoor anemometer measurements. | Improvement of plant protection with agricultural drones. | Confirmed that LBM simulation results are within 95% of the measured airflow components. |
| [42] | 2019 | M234-AT type four-rotor drone. | 0.766 | Lattice Boltzmann fluid simulation and wind tunnel experiment. | Accurate positioning of the pesticide spray nozzle. | Airflow vortices and downwash velocities analyzed, and optimal spray parameters confirmed. |
| [44] | 2021 | Single rotor blade of Xrotor Pro X8 CCW HOBBY-WING. | 0.740 | Indoor setup with an anemometer at different points below the rotor. | Better understanding of the downwash generated by a single rotor in agricultural drones. | Distributions of the velocity components found; vorticity and turbulence intensities evaluated. |
| [43] | 2022 | Computer-generated four-rotor PINEXRI-20 equivalent. | 0.737 | Ansys Fluent software for mesh analysis. | Optimization of spray system design and location for agriculture. | Downwash velocity distributions found and reviewed. |
| [45] | 2023 | Foxtech D130 X8 four-rotor drone. | 0.710 | Ansys Fluent software and open-air measurements. | Drone turbulence study to counter propeller flow interference in wind measurements. | Airflow velocity components analyzed with computational fluid dynamics; open-air measurements used to find the best sensor placement for accurate wind measurements. |
| [46] | 2018 | CAD-generated six-rotor drone. | N/A | Ansys Fluent software for mesh analysis. | Identification of high-turbulence areas below the drone. | Four- and two-blade configurations compared; vortex, helicity, and turbulence evaluated. |
| This paper | 2025 | DJI Avata. | 0.074 | Indoor setup with FBG sensors integrated into the optical fiber. | Detection and localization of drones passing over the FBG sensor array. | Drone-induced downwash measured; localization of the drone passing over the FBG sensor array achieved, as well as flight altitude detection with up to 90% accuracy. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Murans, I.; Zveja, R.K.; Ortiz, D.; Andrejevs, D.; Krumins, N.; Novikova, O.; Khobzei, M.; Tkach, V.; Samila, A.; Kopats, A.; et al. Optical FBG Sensor-Based System for Low-Flying UAV Detection and Localization. Appl. Sci. 2025, 15, 11690. https://doi.org/10.3390/app152111690


Chicago/Turabian Style: Murans, Ints, Roberts Kristofers Zveja, Dilan Ortiz, Deomits Andrejevs, Niks Krumins, Olesja Novikova, Mykola Khobzei, Vladyslav Tkach, Andrii Samila, Aleksejs Kopats, et al. 2025. "Optical FBG Sensor-Based System for Low-Flying UAV Detection and Localization." Applied Sciences 15, no. 21: 11690. https://doi.org/10.3390/app152111690

APA Style: Murans, I., Zveja, R. K., Ortiz, D., Andrejevs, D., Krumins, N., Novikova, O., Khobzei, M., Tkach, V., Samila, A., Kopats, A., Sics, P. E., Ipatovs, A., Braunfelds, J., Migla, S., Salgals, T., & Bobrovs, V. (2025). Optical FBG Sensor-Based System for Low-Flying UAV Detection and Localization. Applied Sciences, 15(21), 11690. https://doi.org/10.3390/app152111690