OGS-YOLOv8: Coffee Bean Maturity Detection Algorithm Based on Improved YOLOv8

Abstract

1. Introduction

2. Related Work

- Introducing ODConv: We introduce ODConv, a multidimensional dynamic attention mechanism that improves computational efficiency and strengthens the model’s ability to capture fine-grained features of coffee beans.

- Designing the lightweight CSGSPC module: We analyze and address the “information silo” problem of partial convolution. The proposed GS-PConv applies a novel batch shuffle operation that considerably reduces computational complexity while maintaining effective information flow between channels, avoiding the accuracy degradation often associated with conventional lightweight approaches.

- Proposing the Inner-FocalerIoU loss function: We present the Inner-FocalerIoU loss function to address sample imbalance across the maturity stages in the dataset. By concentrating the regression process on hard and minority samples, it improves the model’s detection accuracy.

3. Materials and Methods

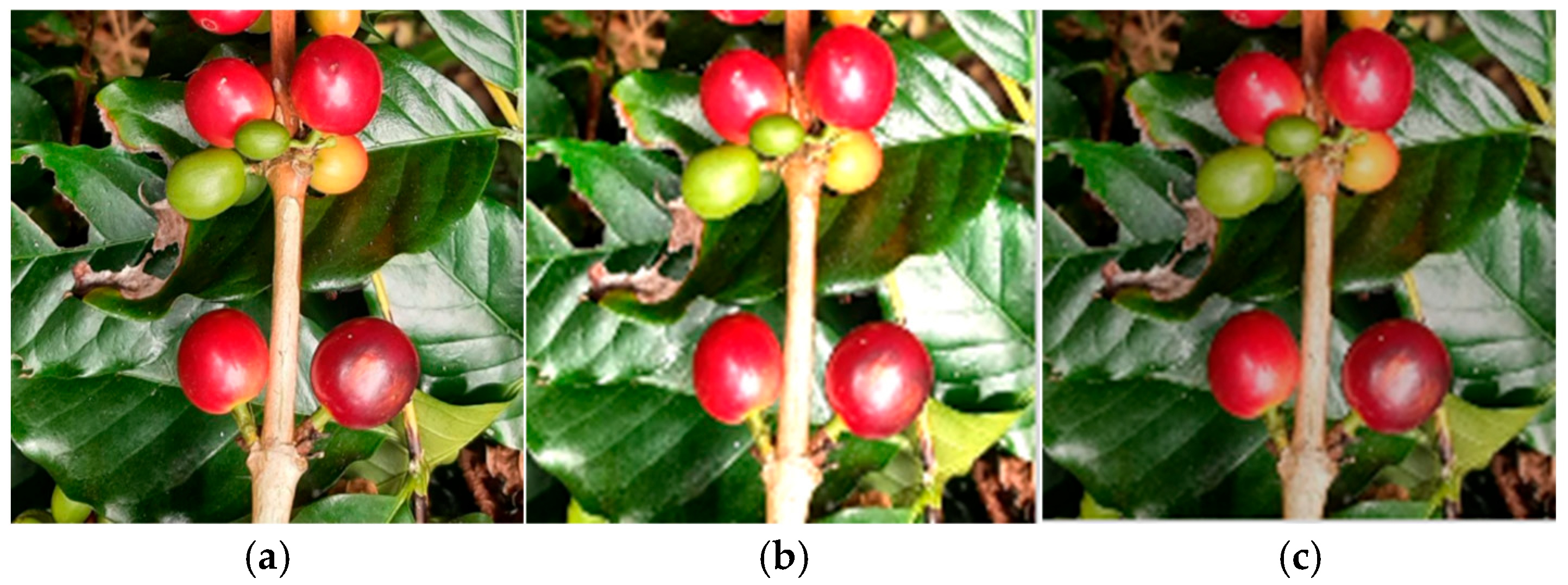

3.1. Dataset Construction

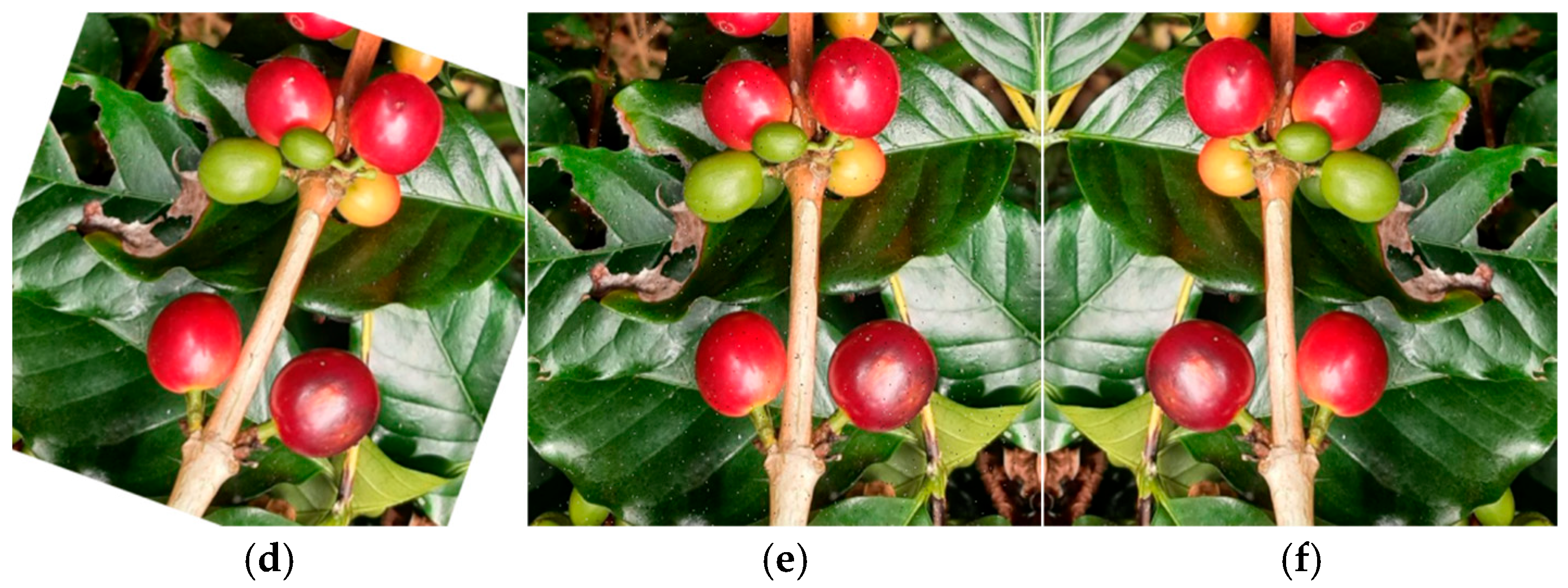

3.2. OGS-YOLOv8 Network Structure

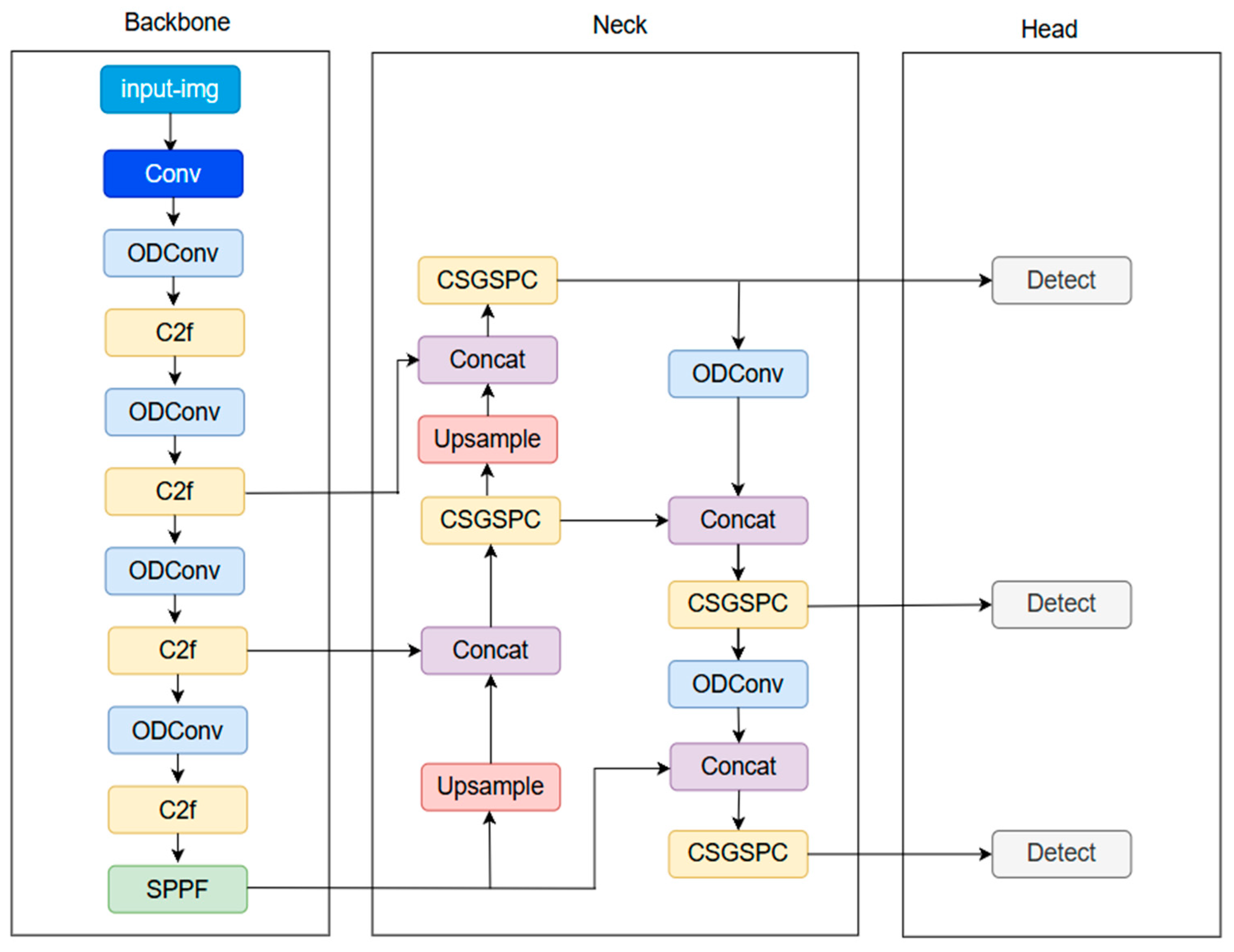

3.3. Omni-Dimensional Dynamic Convolution

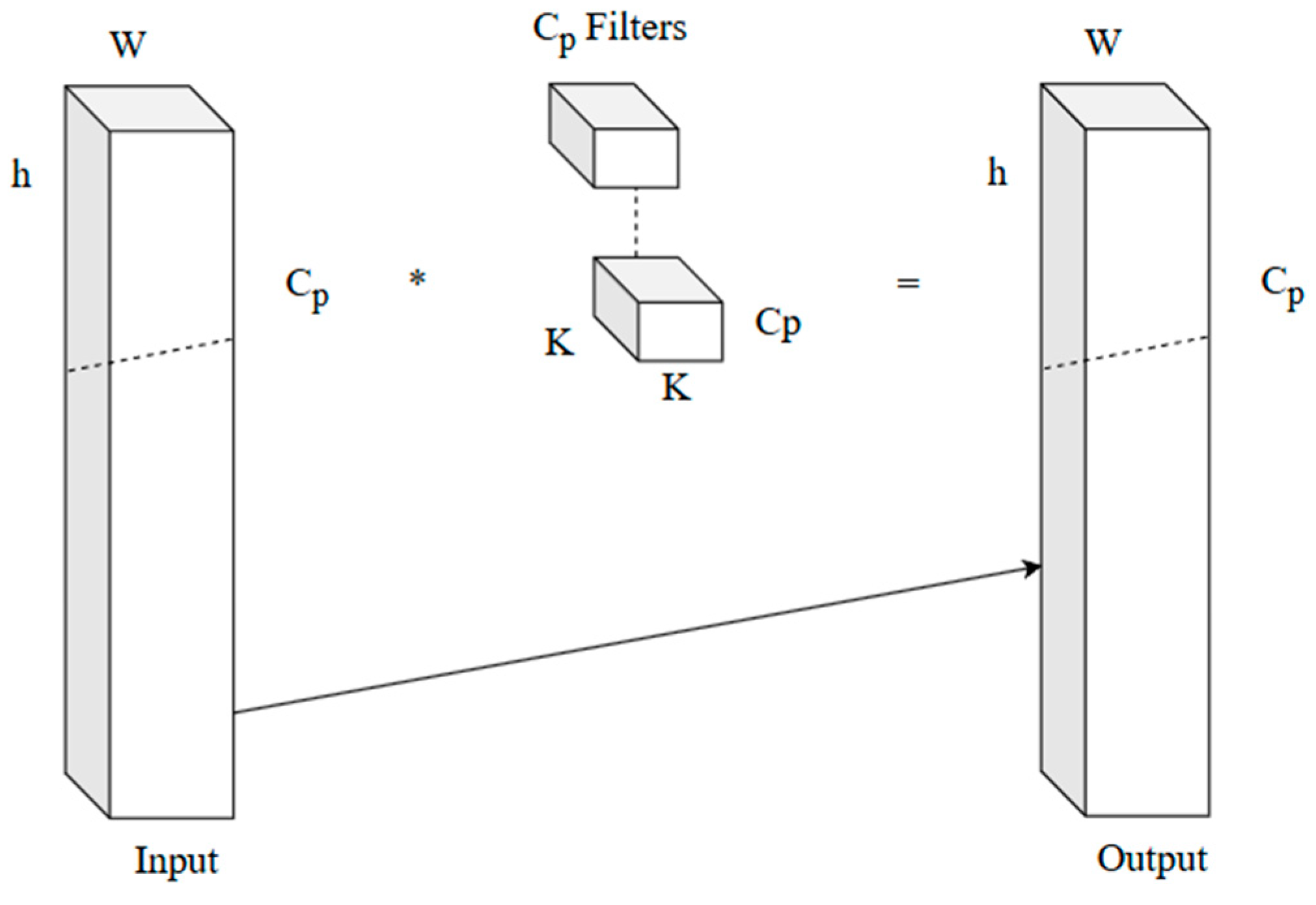

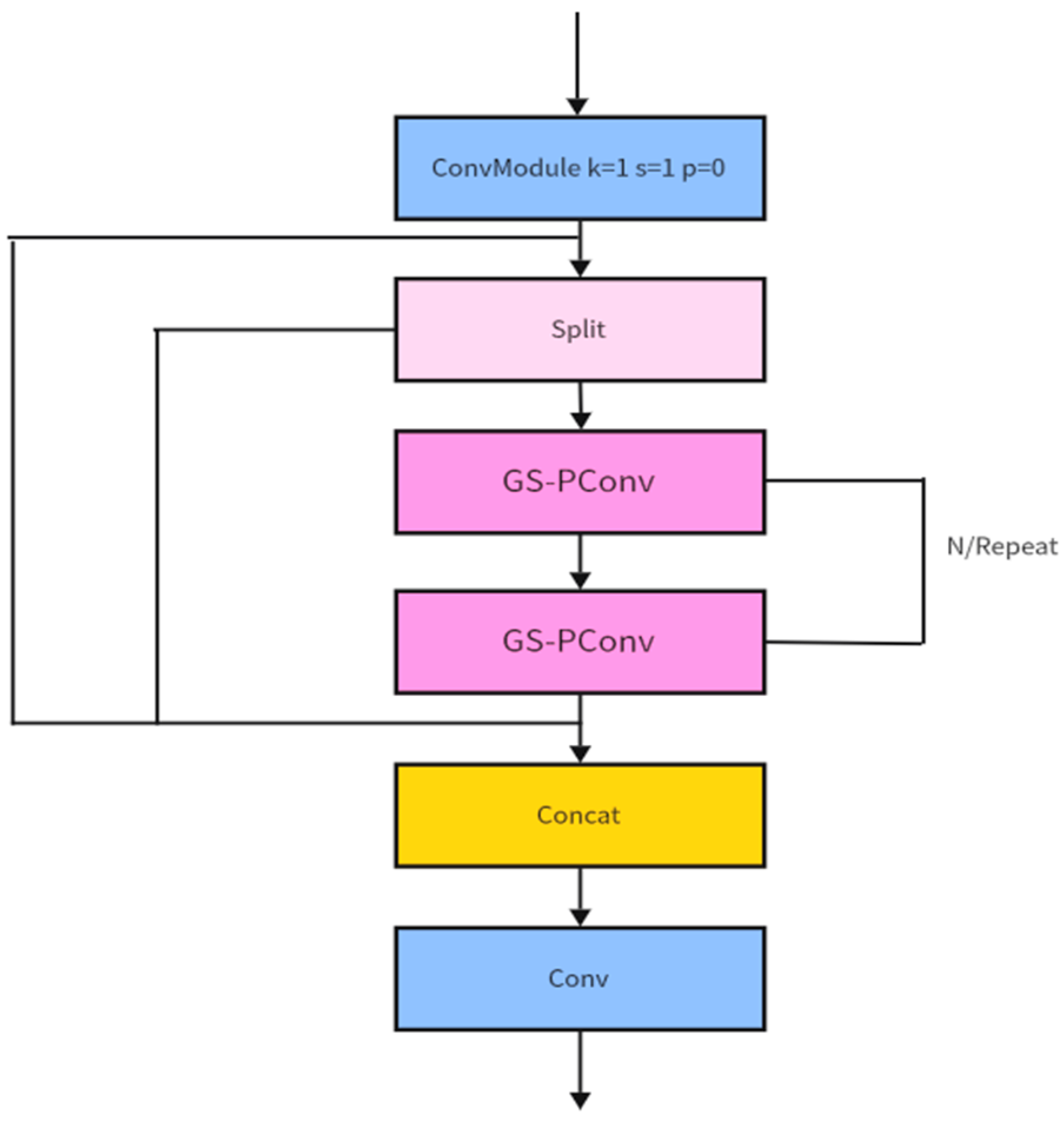
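
For orientation, the omni-dimensional dynamic convolution operation can be written compactly as below. This follows the notation of the original ODConv formulation rather than equations from this article, so the symbols are assumptions introduced here for illustration.

$$
y = \left( \alpha_{w1} \odot \alpha_{f1} \odot \alpha_{c1} \odot \alpha_{s1} \odot W_1 + \dots + \alpha_{wn} \odot \alpha_{fn} \odot \alpha_{cn} \odot \alpha_{sn} \odot W_n \right) * x
$$

Here $x$ is the input feature map, $W_i$ is the $i$-th of $n$ candidate kernels, and $*$ denotes convolution. The four attention terms are computed from $x$: $\alpha_{wi}$ is a scalar weight for the whole kernel, while $\alpha_{si}$, $\alpha_{ci}$, and $\alpha_{fi}$ rescale $W_i$ along its spatial, input-channel, and output-channel dimensions, respectively.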

3.4. Convolutional Split Group-Shuffle Partial Convolution

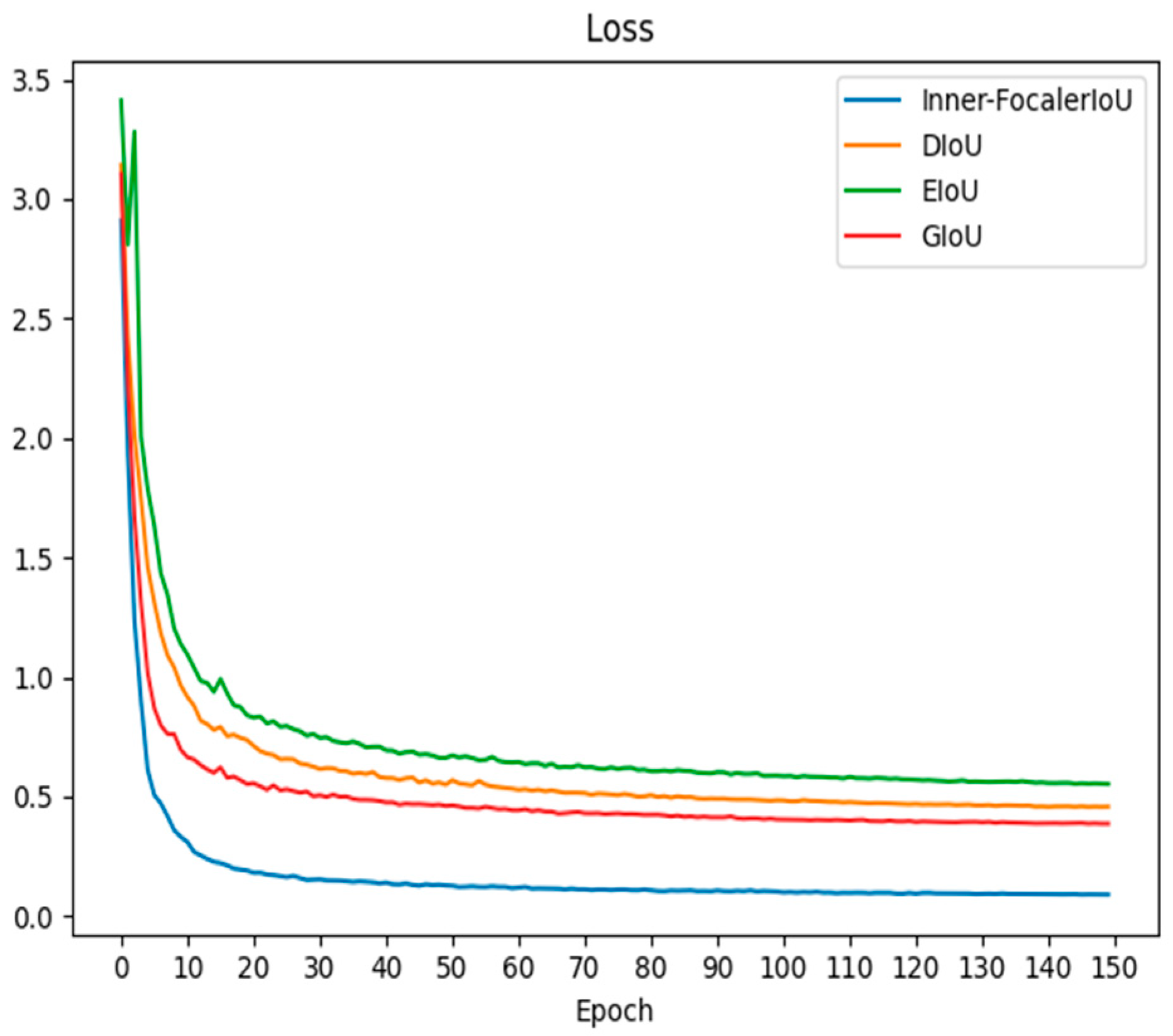
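
The “information silo” problem of partial convolution can be illustrated with a small index-tracking sketch. It is not the authors’ GS-PConv: it assumes a partial convolution that applies the spatial convolution only to the leading fraction of channels, and it uses a ShuffleNet-style channel shuffle as a stand-in for the shuffle operation. The function names `channel_shuffle` and `convolved_channels` and all parameter values are hypothetical.

```python
def channel_shuffle(order, groups):
    """ShuffleNet-style shuffle of a list of channel ids.

    The list is viewed as a (groups, n // groups) grid, transposed,
    and flattened again.
    """
    per_group = len(order) // groups
    rows = [order[g * per_group:(g + 1) * per_group] for g in range(groups)]
    return [rows[g][i] for i in range(per_group) for g in range(groups)]


def convolved_channels(num_channels, conv_fraction, layers, groups=None):
    """Return the original channel ids that reach the spatial conv at least once.

    Assumption: each partial-conv layer convolves only the first
    `conv_fraction` of the channels and passes the rest through unchanged.
    If `groups` is given, a channel shuffle follows every layer.
    """
    order = list(range(num_channels))
    n_conv = int(num_channels * conv_fraction)
    seen = set()
    for _ in range(layers):
        seen.update(order[:n_conv])  # channels touched by this layer
        if groups is not None:
            order = channel_shuffle(order, groups)
    return seen


plain = convolved_channels(8, 0.25, layers=3)
shuffled = convolved_channels(8, 0.25, layers=3, groups=2)
print("without shuffle:", sorted(plain))
print("with shuffle:   ", sorted(shuffled))
```

Without the shuffle, the same leading channels are convolved in every layer and the remaining channels never are; with the shuffle, different channels rotate into the convolved slice, which is the kind of cross-channel information flow the module is meant to provide.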

3.5. Inner-FocalerIoU Loss Function
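
A minimal sketch of how such a loss could be assembled is given below. It assumes the combination is formed by applying the linear interval remapping of Focaler-IoU to the auxiliary-box IoU of Inner-IoU; the exact composition used in this article is not stated here, so treat this as an illustration only. The box format (centre x, centre y, width, height), the function names, and the values of `ratio`, `d`, and `u` are all hypothetical choices.

```python
def inner_iou(box_a, box_b, ratio=1.0):
    """IoU of auxiliary boxes obtained by scaling each box about its centre.

    Boxes are (x_center, y_center, width, height). With ratio == 1.0 this
    reduces to the ordinary IoU.
    """
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    a_hw, a_hh = aw * ratio / 2, ah * ratio / 2  # scaled half extents of box a
    b_hw, b_hh = bw * ratio / 2, bh * ratio / 2  # scaled half extents of box b
    inter_w = max(0.0, min(ax + a_hw, bx + b_hw) - max(ax - a_hw, bx - b_hw))
    inter_h = max(0.0, min(ay + a_hh, by + b_hh) - max(ay - a_hh, by - b_hh))
    inter = inter_w * inter_h
    union = aw * ah * ratio ** 2 + bw * bh * ratio ** 2 - inter
    return inter / union if union > 0 else 0.0


def focaler(iou, d=0.0, u=0.95):
    """Linearly remap IoU from [d, u] to [0, 1], clamping outside the interval."""
    return min(1.0, max(0.0, (iou - d) / (u - d)))


def inner_focaler_iou_loss(pred, target, ratio=0.8, d=0.0, u=0.95):
    """Assumed combination: 1 - focaler(inner_iou). Parameter values are illustrative."""
    return 1.0 - focaler(inner_iou(pred, target, ratio), d, u)


# Two 2x2 boxes offset by one unit along x.
print(inner_iou((0, 0, 2, 2), (1, 0, 2, 2), ratio=1.0))
print(inner_focaler_iou_loss((0, 0, 2, 2), (1, 0, 2, 2)))
```

In this sketch, a `ratio` below 1 shrinks the auxiliary boxes and the interval `[d, u]` selects which IoU range contributes gradient, which is how the regression can be steered toward harder samples.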

4. Results

4.1. Environment and Parameter Adjustment

4.2. Model Evaluation Metrics

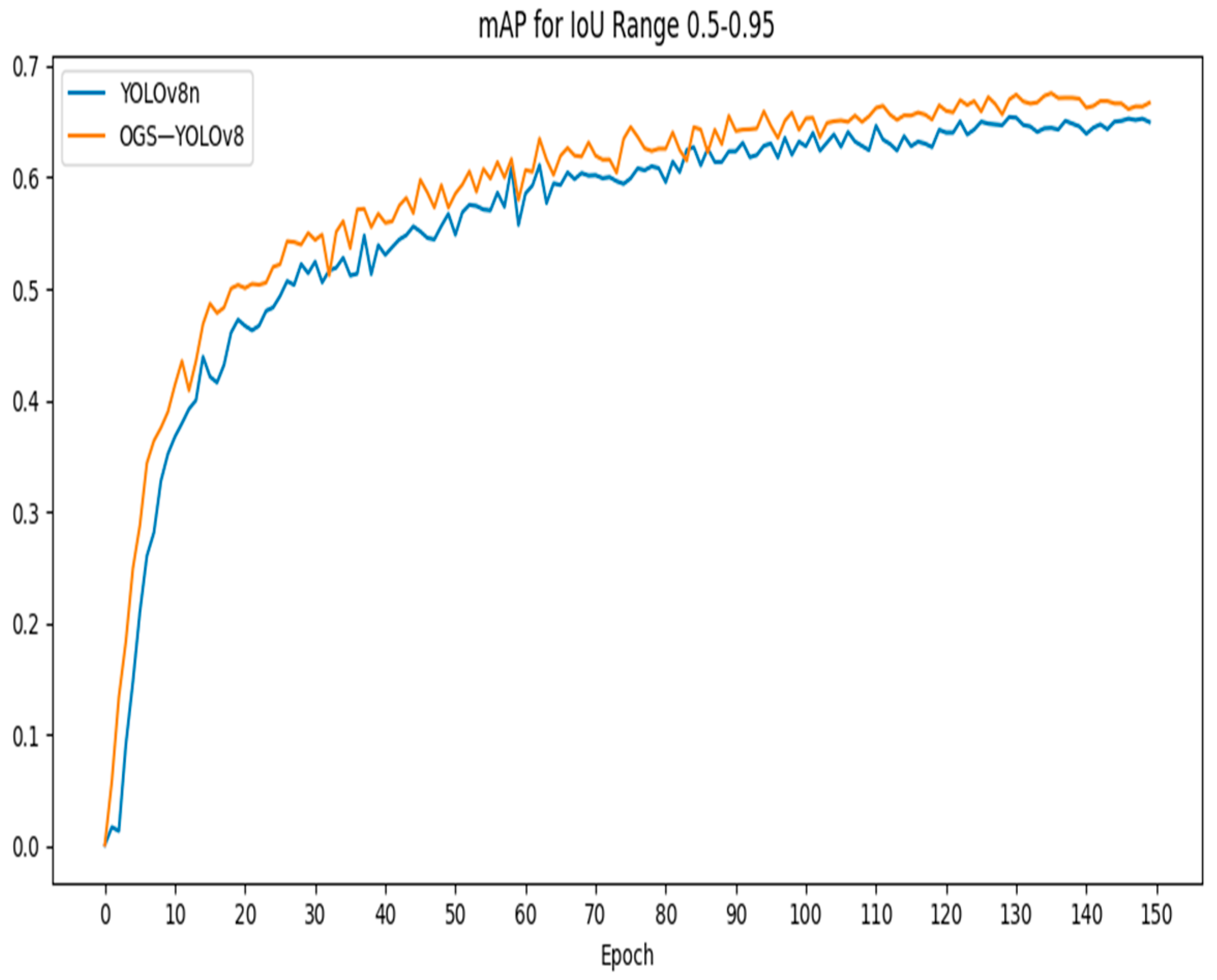

4.3. Model Training Process and Comparison

4.4. Comparison of Different Dynamic Convolutions

4.5. Comparison of Different Lightweight Convolutions

4.6. Comparison of Different Loss Functions

5. Discussion

5.1. Improved Model Ablation Experiment

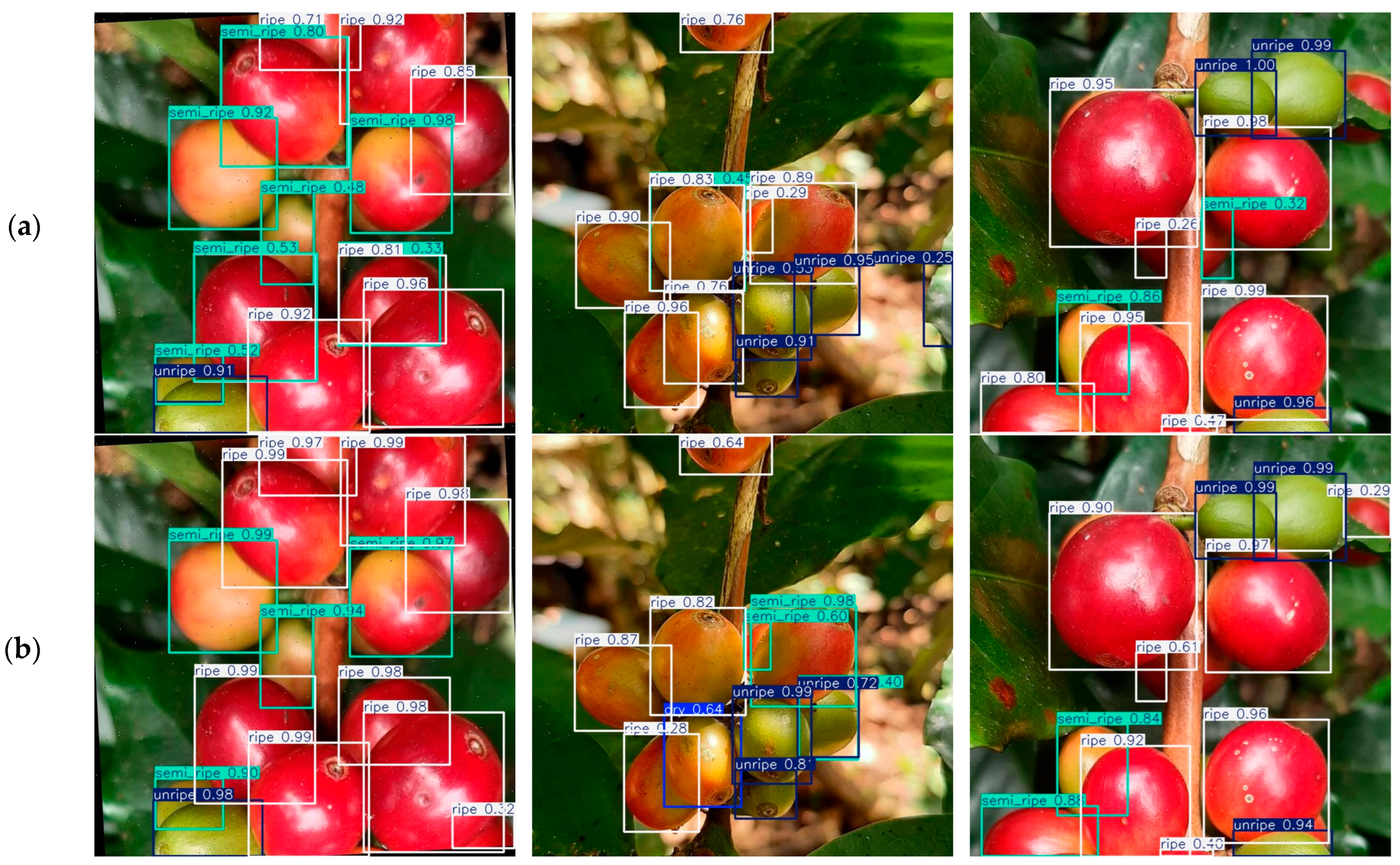

5.2. Model Comparison Experiment

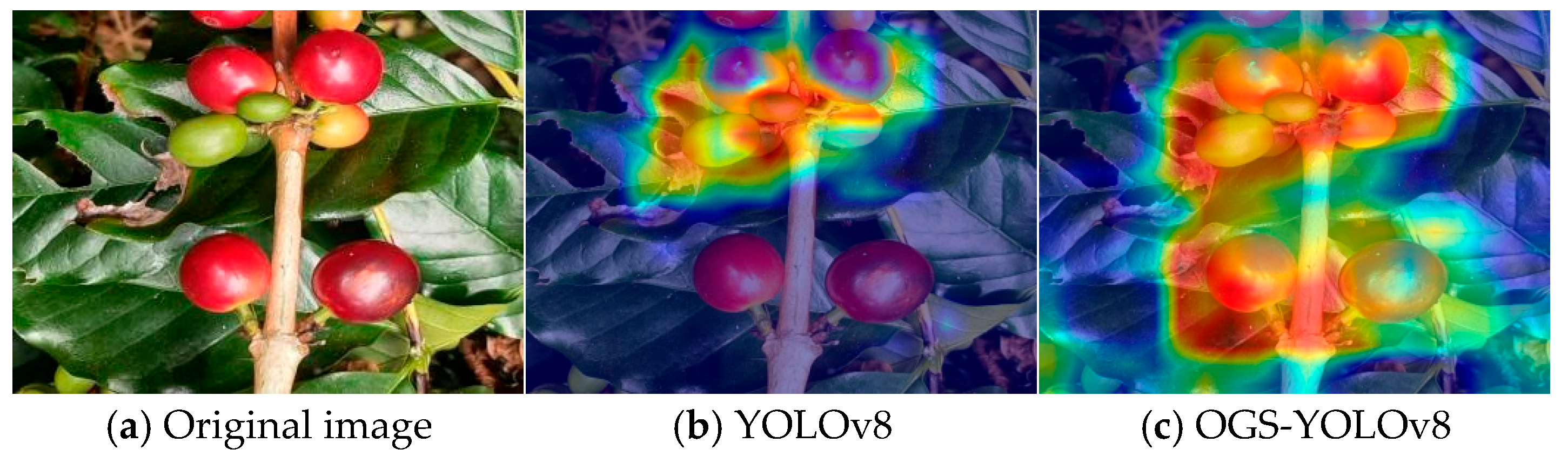

5.3. Feature Heatmap Visualization

5.4. Application Value and Limitations

6. Conclusions

6.1. Model Proposal and Improvement

6.2. Future Research Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Number of Image Samples | Immature | Partially Dried | Dry | Maturity | Overmaturity |
|---|---|---|---|---|---|---|
| Training set | 1768 | 16,406 | 1859 | 583 | 2688 | 413 |
| Validation set | 500 | 3885 | 589 | 152 | 1250 | 213 |
| Test set | 255 | 2727 | 197 | 90 | 370 | 63 |
| Total | 2523 | 23,018 | 2645 | 825 | 4308 | 689 |
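
The class imbalance that motivates the loss design can be read directly from the training-set row of the table above. The short sketch below computes each class’s share of labeled instances and the ratio between the largest and smallest class; the variable names are illustrative.

```python
# Instance counts per class, copied from the training-set row of the table.
train_counts = {
    "Immature": 16406,
    "Partially Dried": 1859,
    "Dry": 583,
    "Maturity": 2688,
    "Overmaturity": 413,
}

total = sum(train_counts.values())
shares = {name: count / total for name, count in train_counts.items()}
imbalance_ratio = max(train_counts.values()) / min(train_counts.values())

for name, share in shares.items():
    print(f"{name:16s} {share:6.1%}")
print(f"largest / smallest class: {imbalance_ratio:.1f}x")
```

Roughly three quarters of the training instances are immature beans, and the largest class outnumbers the smallest by a factor of about 40.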

| Model | mAP@0.5/% | Precision/% | F1-Score/% | FLOPs/G | Inference Time/ms |
|---|---|---|---|---|---|
| YOLOv8n | 72.8 | 66.3 | 68.6 | 8.2 | 6.7 |
| ODConv-YOLOv8n | 74.1 | 70.4 | 70.5 | 6.9 | 9.2 |
| AKConv-YOLOv8n | 69.9 | 64.4 | 66.0 | 7.4 | 9.4 |
| SPD-Conv-YOLOv8n | 72.2 | 69.6 | 70.1 | 7.4 | 9.8 |
| DSConv-YOLOv8n | 70.6 | 68.9 | 69.2 | 9.5 | 10.1 |

| Model | mAP@0.5/% | Precision/% | F1-Score/% | FLOPs/G | Inference Time/ms |
|---|---|---|---|---|---|
| YOLOv8n | 72.8 | 66.3 | 68.6 | 8.2 | 6.7 |
| GS-PConv-YOLOv8n | 72.2 | 67.2 | 69.3 | 7.0 | 8.3 |
| FasterBlock-YOLOv8n | 72.5 | 64.3 | 68.9 | 7.6 | 9.9 |
| DualConv-YOLOv8n | 71.9 | 66.5 | 68.6 | 8.1 | 9.8 |
| HetConv-YOLOv8n | 71.6 | 65.0 | 67.2 | 6.6 | 15.6 |

| Model | ODConv | GS-PConv | Inner-FocalerIoU | mAP@0.5/% | Precision/% | F1-Score/% | FLOPs/G | mAP@0.5–0.95/% |
|---|---|---|---|---|---|---|---|---|
| YOLOv8n | × | × | × | 72.8 | 66.3 | 68.6 | 8.2 | 67.0 |
| Model 1 | √ | × | × | 74.1 | 70.4 | 70.1 | 6.9 | 68.2 |
| Model 2 | × | √ | × | 72.2 | 67.2 | 69.3 | 7.0 | 66.1 |
| Model 3 | × | × | √ | 73.6 | 68.8 | 70.6 | 8.1 | 67.9 |
| Model 4 | √ | √ | × | 74.3 | 72.0 | 71.5 | 6.0 | 67.7 |
| Model 5 | √ | × | √ | 74.3 | 66.4 | 71.1 | 6.9 | 68.0 |
| Model 6 | × | √ | √ | 72.0 | 68.7 | 69.5 | 7.2 | 65.4 |
| OGS-YOLOv8 | √ | √ | √ | 76.0 | 73.7 | 71.8 | 6.0 | 69.2 |
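
The net effect of combining all three modules can be summarized by comparing the first and last rows of the ablation table above. The sketch below does that arithmetic; the dictionary keys are illustrative names, and the values are copied from those two rows.

```python
# First (baseline) and last (full model) rows of the ablation table.
baseline = {"mAP50": 72.8, "precision": 66.3, "f1": 68.6, "flops_g": 8.2, "mAP50_95": 67.0}
improved = {"mAP50": 76.0, "precision": 73.7, "f1": 71.8, "flops_g": 6.0, "mAP50_95": 69.2}

# Absolute gains in percentage points for the accuracy metrics.
point_gain = {
    key: round(improved[key] - baseline[key], 1)
    for key in ("mAP50", "precision", "f1", "mAP50_95")
}

# Relative reduction in computation.
flops_reduction_pct = 100 * (baseline["flops_g"] - improved["flops_g"]) / baseline["flops_g"]

print(point_gain)
print(f"FLOPs reduction: {flops_reduction_pct:.1f}%")
```

On these figures the full model gains 3.2 points of mAP@0.5 and 7.4 points of precision over the baseline while using about 27% fewer FLOPs.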

| Model | mAP@0.5/% | Precision/% | F1-Score/% | FLOPs/G | mAP@0.5–0.95/% |
|---|---|---|---|---|---|
| YOLOv8n | 72.8 | 66.3 | 68.6 | 8.2 | 67.0 |
| YOLOv3-tiny | 68.8 | 66.8 | 67.2 | 12.9 | 57.5 |
| YOLOv5n | 71.3 | 73.0 | 70.4 | 4.1 | 65.1 |
| YOLOv7-tiny | 73.0 | 61.2 | 68.2 | 13.1 | 67.0 |
| YOLOv10n | 72.1 | 70.8 | 69.8 | 8.2 | 66.6 |
| OGS-YOLOv8 | 76.0 | 73.7 | 71.8 | 6.0 | 69.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, N.; Wen, Y. OGS-YOLOv8: Coffee Bean Maturity Detection Algorithm Based on Improved YOLOv8. Appl. Sci. 2025, 15, 11632. https://doi.org/10.3390/app152111632
