Enhancing Binary Security Analysis Through Pre-Trained Semantic and Structural Feature Matching

Abstract

1. Introduction

- We propose a novel approach that combines deep semantic features with cross-graph structural features. To our knowledge, we are the first to integrate a pre-trained language model with a Cross-Graph Neural Network (CGNN) for binary security analysis.

- We introduce a new pre-training task designed specifically for assembly language, which enables the model to learn the sequential dependencies between instructions that are critical for understanding low-level code logic.

- We implement and validate a prototype system, Breg, and introduce four datasets for training and evaluation. These include a real-world vulnerability dataset built from CVE functions, on which Breg demonstrates superior performance in vulnerability hunting.

- Our experiments provide insights into the security relevance of different feature types by quantifying the respective impacts of semantic features, single-graph structural features, and cross-graph structural features on binary code similarity detection.
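The cross-graph structural features named in the first contribution can be illustrated with a minimal sketch of the cross-graph attention used by Graph Matching Networks (Li et al., 2019). We assume this formulation only as a stand-in for Breg's CGNN; the embeddings and the helper names `cross_graph_messages` and `mismatch_score` are hypothetical. Each basic-block embedding of one function attends over all blocks of the other function, and the resulting message is small when the block has a close counterpart there.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_graph_messages(h1, h2):
    """For each node i of graph 1: mu_i = sum_j a_ij * (h1[i] - h2[j]),
    with a_ij a softmax over dot-product similarities to the nodes of graph 2."""
    messages = []
    for hi in h1:
        weights = softmax([sum(a * b for a, b in zip(hi, hj)) for hj in h2])
        mu = [0.0] * len(hi)
        for w, hj in zip(weights, h2):
            for k in range(len(hi)):
                mu[k] += w * (hi[k] - hj[k])
        messages.append(mu)
    return messages

def mismatch_score(h1, h2):
    """Sum of message norms: near zero when every node attends sharply to a near-identical counterpart."""
    return sum(math.sqrt(sum(x * x for x in mu)) for mu in cross_graph_messages(h1, h2))

# Hypothetical 2-D basic-block embeddings of three small functions.
f_a = [[5.0, 0.0], [0.0, 5.0]]
f_b = [[5.0, 0.0], [0.0, 5.0]]   # same structure as f_a
f_c = [[5.0, 0.0], [5.0, 0.0]]   # f_a's second block has no counterpart here
print(round(mismatch_score(f_a, f_b), 4))  # 0.0
print(round(mismatch_score(f_a, f_c), 4))  # 7.0711
```

In a full GMN these messages are combined with ordinary within-graph message passing over several layers; the sketch isolates only the cross-graph term that distinguishes it from single-graph models such as Gemini.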

2. Background

Listing 1. A fragment of binary code from the x64 platform.

    test   r15, r15                                 # AND r15 with itself; sets ZF if r15 is zero
    jz     loc_BEFCC                                # Jump to loc_BEFCC if ZF is set
    cmp    rdx, 4                                   # Compare the index in rdx with 4
    ja     def_BEF22                                # Jump to the default case def_BEF22 if rdx > 4 (unsigned)
    lea    rax, jpt_BEF22                           # Load the address of jump table jpt_BEF22 into rax
    movsxd rcx, ds:(jpt_BEF22 - 25684Ch)[rax+rdx*4] # Load table entry rdx, sign-extended to 64 bits, into rcx
    add    rcx, rax                                 # Add the table base to the entry to form the target address
    jmp    rcx                                      # Indirect jump to the address stored in rcx
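To make the control flow of Listing 1 concrete, the following sketch emulates only the jump-table dispatch (from `cmp rdx, 4` to `jmp rcx`). It assumes IDA-style naming, where `jpt_BEF22` is a table of signed 32-bit offsets relative to the table base and `def_BEF22` is the default case; the table contents, base address, and default address are hypothetical, and the displacement `(jpt_BEF22 - 25684Ch)` is abstracted away by indexing the table directly.

```python
import struct

MASK64 = (1 << 64) - 1

def dispatch(rdx, table_base, table_bytes, default_target):
    """Emulate: cmp rdx, 4 / ja default / movsxd rcx, [table + rdx*4] / add rcx, rax / jmp rcx."""
    # 'ja' compares unsigned, so a negative index becomes huge and takes the default branch.
    if (rdx & MASK64) > 4:
        return default_target
    # movsxd: read a signed 32-bit little-endian entry (Python ints are already sign-extended).
    (offset,) = struct.unpack_from("<i", table_bytes, rdx * 4)
    # add rcx, rax: the entry is an offset relative to the table base held in rax.
    return (table_base + offset) & MASK64

# Hypothetical five-case table (cases 0..4) at a hypothetical base address.
TABLE_BASE = 0x256850
DEFAULT = 0x2568A0
table = struct.pack("<5i", -0x100, -0xC0, -0x80, -0x40, 0x20)
for case in (-1, 0, 3, 4, 5):
    print(case, hex(dispatch(case, TABLE_BASE, table, DEFAULT)))
```

Storing relative offsets rather than absolute addresses keeps the table position-independent, which is why the listing needs the extra `add rcx, rax` before the indirect jump.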

3. Design of Breg

3.1. Overview

3.2. Semantic Feature Extraction

3.2.1. Pre-Training Data Generation

3.2.2. Pre-Training Task

Listing 2. A code snippet with strong sequential correlation, used for the pre-training task ISP. Instructions I1, I2, and I3 form a strongly ordered sequence because of shared register dependencies (rax, rcx).

    lea    rax, jpt_BEF22                           # I1: Load the jump-table base address into rax
    movsxd rcx, ds:(jpt_BEF22 - 25684Ch)[rax+rdx*4] # I2: Load a table entry addressed via rax into rcx
    add    rcx, rax                                 # I3: Use both rax and rcx to compute the target address
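The register dependencies that make I1, I2, and I3 strongly ordered can be extracted mechanically. The sketch below hand-annotates each instruction of Listing 2 with the registers it defines and uses (a simplification; a real pipeline would derive these sets from a disassembler) and derives def-use edges. Turning dependent pairs into labeled examples (original order labeled 1, swapped order labeled 0) is our hypothetical illustration of an ordering objective, not necessarily how Breg's ISP task is defined; `def_use_edges` and `ordering_examples` are hypothetical names.

```python
from itertools import combinations

# (instruction, registers defined, registers used), hand-annotated from Listing 2.
LISTING_2 = [
    ("I1: lea rax, jpt_BEF22", {"rax"}, set()),
    ("I2: movsxd rcx, ds:(jpt_BEF22 - 25684Ch)[rax+rdx*4]", {"rcx"}, {"rax", "rdx"}),
    ("I3: add rcx, rax", {"rcx"}, {"rcx", "rax"}),
]

def def_use_edges(instrs):
    """Return (i, j, regs) for i < j where instruction j reads a register that i writes.
    Intermediate redefinitions are ignored, which is harmless for this short snippet."""
    edges = []
    for i, j in combinations(range(len(instrs)), 2):
        shared = instrs[i][1] & instrs[j][2]
        if shared:
            edges.append((i, j, sorted(shared)))
    return edges

def ordering_examples(instrs, edges):
    """Hypothetical ordering examples: dependent pairs in original order -> 1, swapped -> 0."""
    examples = []
    for i, j, _ in edges:
        examples.append(((instrs[i][0], instrs[j][0]), 1))
        examples.append(((instrs[j][0], instrs[i][0]), 0))
    return examples

edges = def_use_edges(LISTING_2)
for i, j, regs in edges:
    print(f"I{i + 1} -> I{j + 1} via {regs}")
print(len(ordering_examples(LISTING_2, edges)), "labeled pairs")
```

All three pairs are linked (I1 to I2 and I1 to I3 through rax, I2 to I3 through rcx), so any reordering breaks the data flow, which is what makes the snippet a useful training signal for order-sensitive pre-training.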

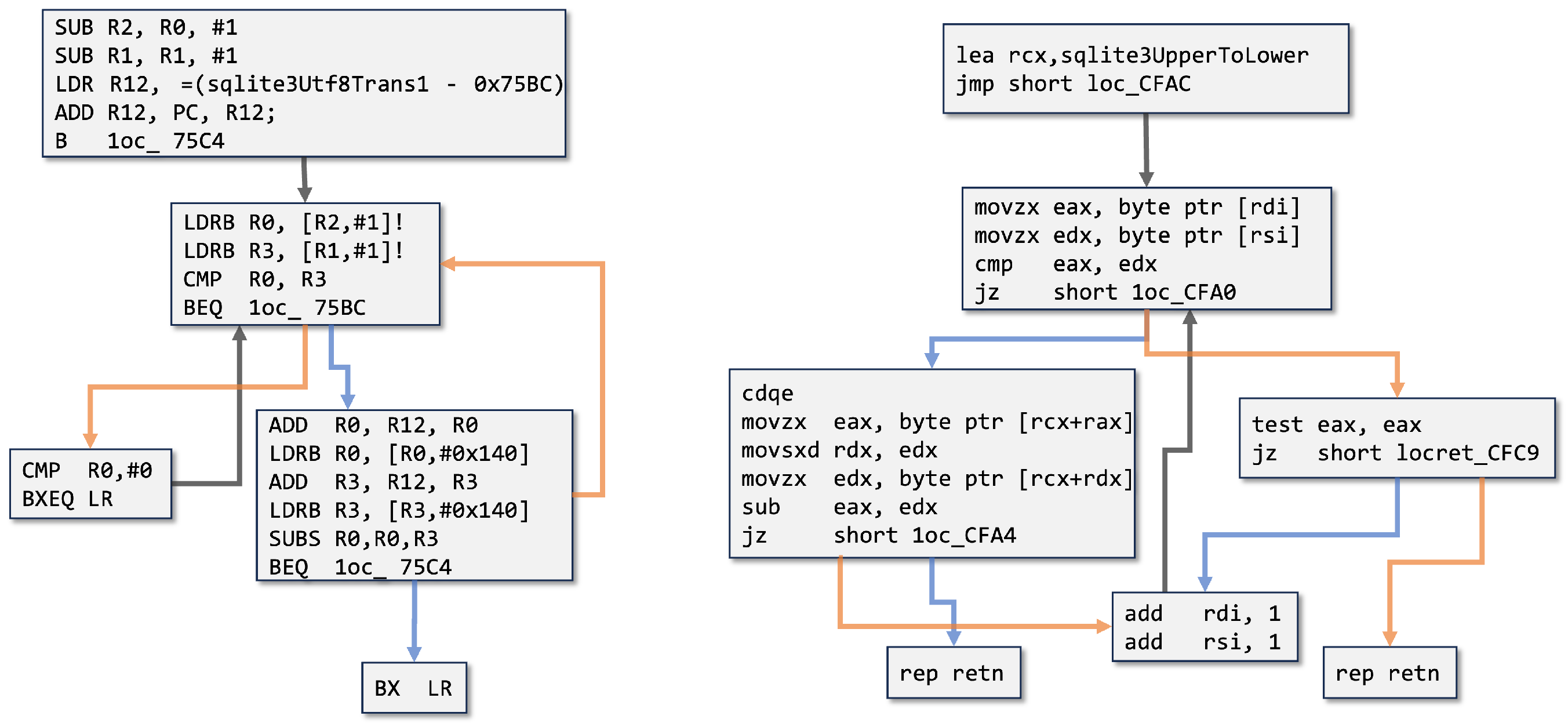

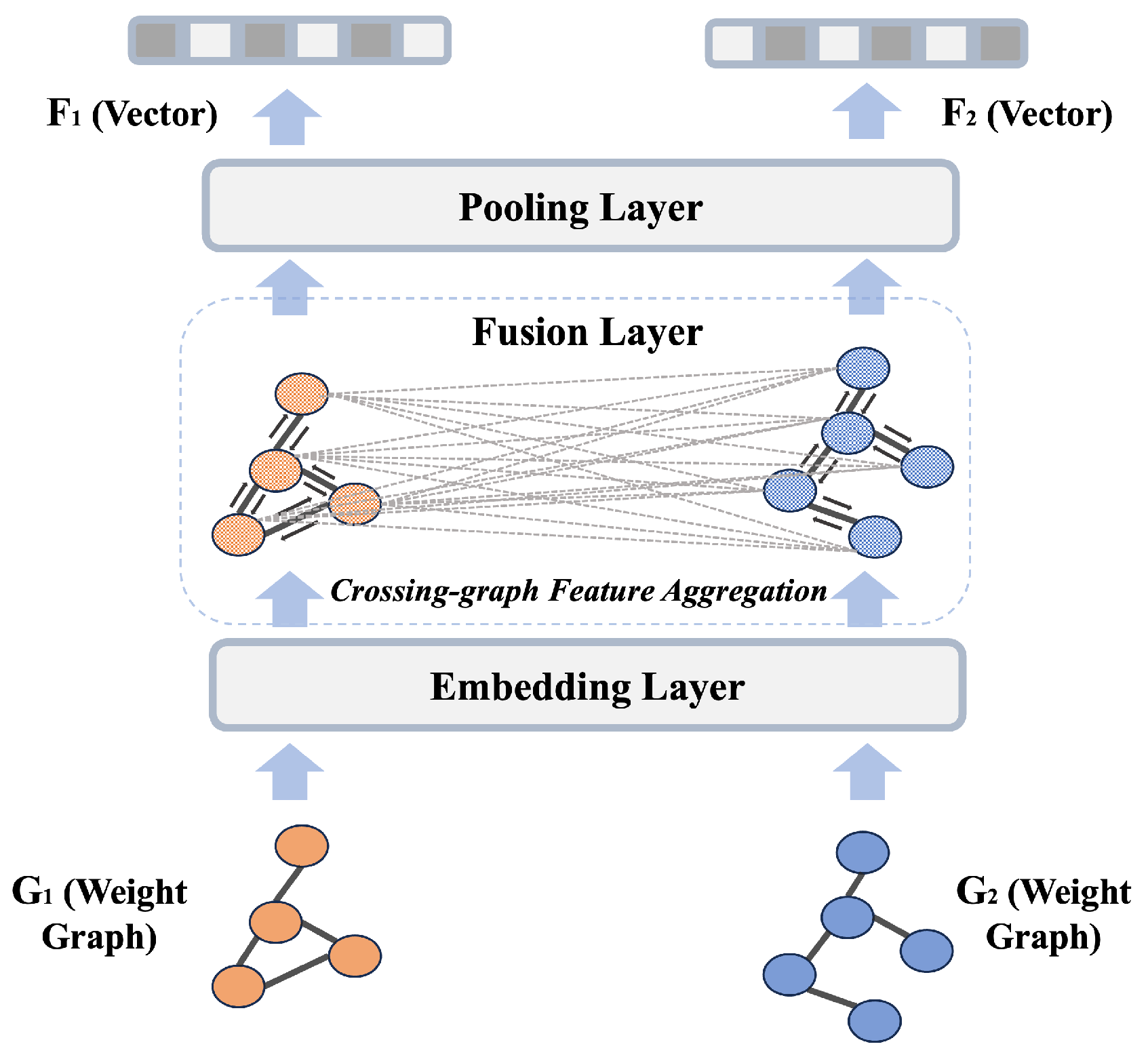

3.3. Cross-Graph Structural Feature Extraction

4. Evaluation

4.1. Datasets

- Dataset1: Dataset1 is built from three open-source projects (clamav, curl, and nmap), each compiled for six platforms: arm32, arm64, mips32, mips64, x86, and x64. Compilation uses eight compilers, including different versions of gcc and clang, and five optimization levels (O0, O1, O2, O3, and Os). In total, Dataset1 contains approximately 153.2 million assembly instructions from 3,531,451 functions and serves as Breg’s pre-training dataset.

- Dataset2: Dataset2 is compiled from three open-source projects: openssl, unrar, and zlib, following the same compilation rules as Dataset1. It comprises a total of 1,148,156 functions. Dataset2 is used to assess the effectiveness of Breg.

- Dataset3: Dataset3 is built from a set of lightweight but diverse open-source projects, including binutils, coreutils, gmp, sqlite, and others, and is smaller in scale than Dataset2. It is compiled for six platforms using gcc 7.0 with optimization levels O0, O1, O2, and O3. Dataset3 includes a total of 373,993 functions and is used to test the flexibility and generality of Breg.

- Dataset4: Dataset4 consists of real CVE vulnerability functions extracted from openssl. First, the vulnerable functions of multiple CVEs in openssl 1.0.2d are compiled for four platforms to form a vulnerability library. Then, openssl 1.0.2h is compiled for two platforms as the target for vulnerability detection. Dataset4 is used to evaluate Breg’s practicality for binary vulnerability detection.
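The scale of these datasets follows from simple arithmetic on the build settings above. The sketch assumes that every combination of platform, compiler, and optimization level was actually built, which the descriptions imply but do not state explicitly; `builds_per_project` is a hypothetical helper, and a "build variant" may still yield several binaries per project.

```python
def builds_per_project(platforms, compilers, opt_levels):
    """Number of build variants if every combination is compiled (an assumption)."""
    return platforms * compilers * opt_levels

d1 = builds_per_project(platforms=6, compilers=8, opt_levels=5)  # Dataset1 and Dataset2
d3 = builds_per_project(platforms=6, compilers=1, opt_levels=4)  # Dataset3: gcc 7.0, O0-O3
print(d1, 3 * d1)  # 240 variants per project, 720 across the three projects
print(d3)          # 24 variants per project

# Average function length in the pre-training corpus (Dataset1).
avg_len = 153.2e6 / 3_531_451
print(round(avg_len, 1))  # 43.4 instructions per function, on average

# Dataset4: 4 library platforms x 2 target platforms.
print(4 * 2)  # 8 (library, target) platform pairs
```

The eight Dataset4 platform pairs correspond to the eight platform columns of the cross-platform vulnerability detection table (two target platforms, four library platforms each).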

4.2. Experimental Setup

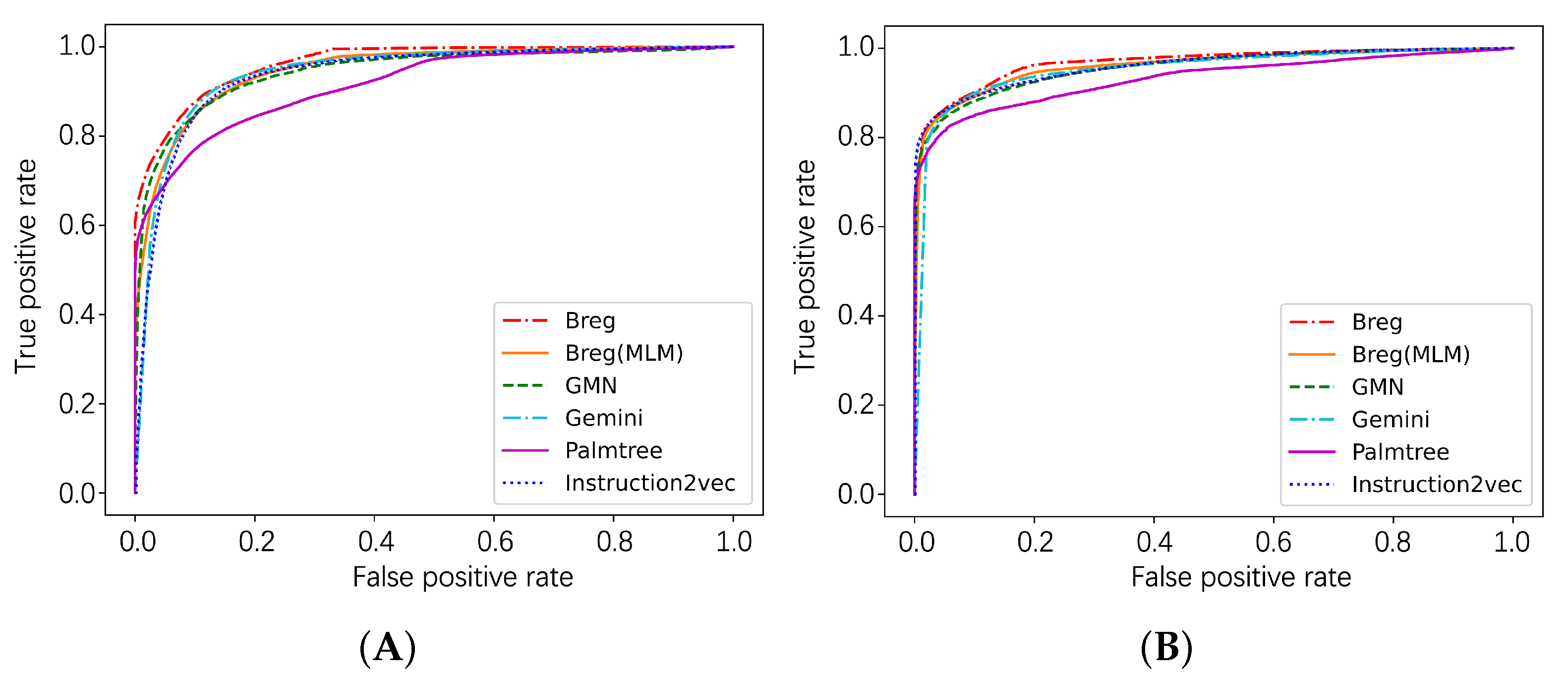

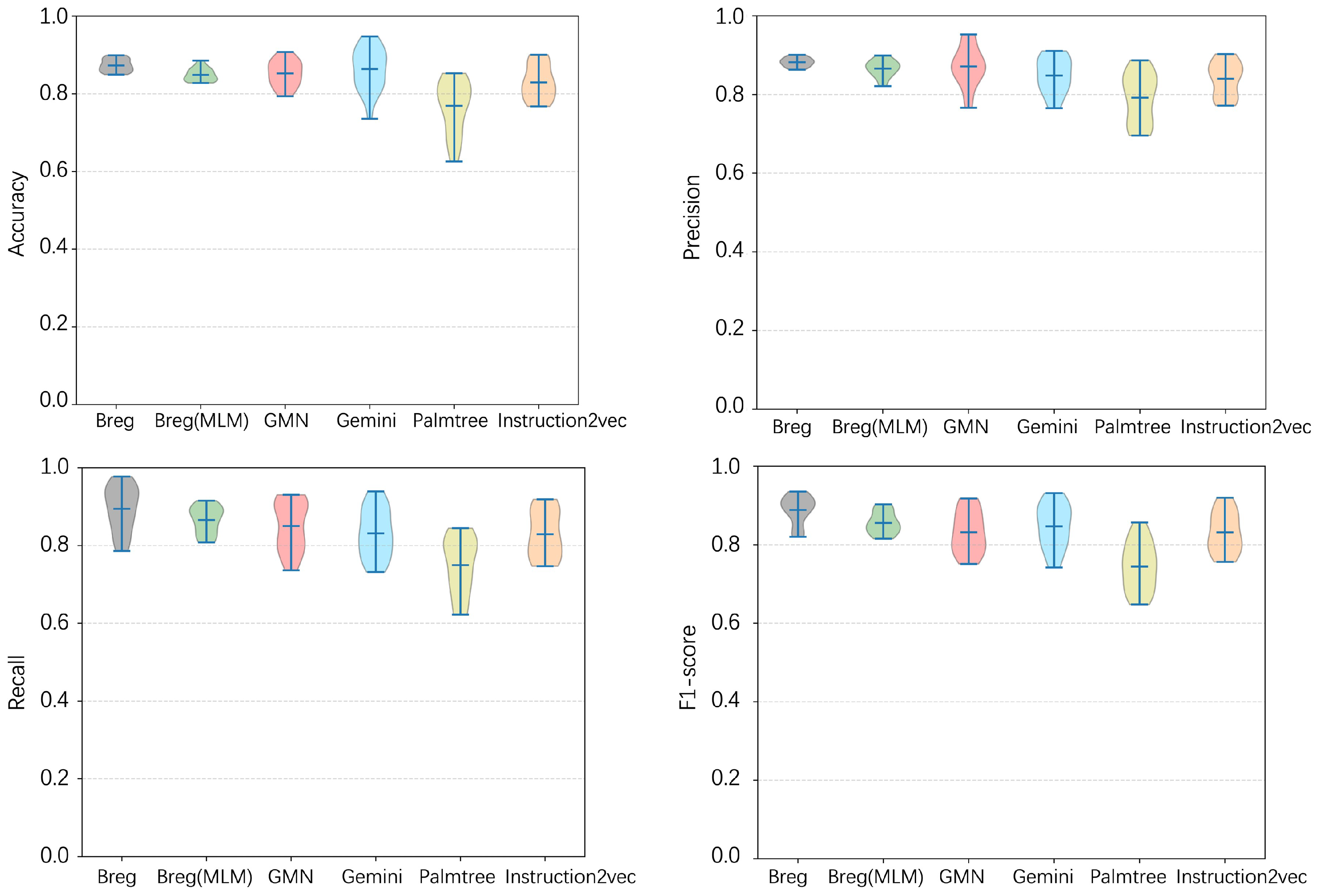

4.3. Effectiveness

4.4. Generality

4.5. Practicality

4.6. Visual Representation

4.7. Model Complexity Analysis

5. Limitation

6. Related Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | Accuracy | Precision | Recall | F1-Score | AUC |
|---|---|---|---|---|---|
| Breg | 0.8747 | 0.8624 | 0.8741 | 0.8682 | 0.9652 |
| Breg(MLM) | 0.8713 | 0.8624 | 0.8711 | 0.8667 | 0.9487 |
| GMN | 0.8653 | 0.8702 | 0.8653 | 0.8677 | 0.9441 |
| Gemini | 0.8547 | 0.8683 | 0.8357 | 0.8572 | 0.9387 |
| Palmtree | 0.7425 | 0.8028 | 0.7431 | 0.7718 | 0.9162 |
| Instruction2vec | 0.8245 | 0.8545 | 0.8250 | 0.8395 | 0.9379 |
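The columns of these results tables follow standard definitions. The sketch below recomputes them from confusion counts and similarity scores; the counts and scores in the example are hypothetical, and the decision threshold used to turn similarity scores into labels is not stated here. F1 is the harmonic mean of precision and recall, so Breg's precision of 0.8624 and recall of 0.8741 give F1 ≈ 0.8682, matching its row. AUC is computed with the rank (Mann-Whitney) formulation: the probability that a randomly chosen similar pair scores higher than a randomly chosen dissimilar one.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

def metrics_from_counts(tp, fp, tn, fn):
    """Accuracy, precision, recall, and F1 from a binary confusion matrix."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return accuracy, precision, recall, f1_score(precision, recall)

def auc(pos_scores, neg_scores):
    """Rank-based AUC: fraction of (positive, negative) pairs ordered correctly; ties count 1/2."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos_scores) * len(neg_scores))

print(round(f1_score(0.8624, 0.8741), 4))           # 0.8682 (Breg row)
print(metrics_from_counts(tp=8, fp=2, tn=7, fn=3))  # hypothetical counts
print(auc([0.9, 0.8, 0.4], [0.7, 0.3]))             # 5 of 6 pairs ordered correctly
```

Because AUC is threshold-free while accuracy, precision, recall, and F1 depend on a chosen cut-off, a model can rank pairs well (high AUC) yet score lower on the thresholded metrics, as Palmtree's rows illustrate.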

| Model | Accuracy | Precision | Recall | F1-Score | AUC |
|---|---|---|---|---|---|
| Breg | 0.8940 | 0.8985 | 0.8955 | 0.8970 | 0.9681 |
| Breg(MLM) | 0.8813 | 0.8924 | 0.8911 | 0.8917 | 0.9599 |
| GMN | 0.8840 | 0.8973 | 0.8931 | 0.8952 | 0.9555 |
| Gemini | 0.8822 | 0.8857 | 0.8840 | 0.8848 | 0.9532 |
| Palmtree | 0.7578 | 0.7997 | 0.7537 | 0.7861 | 0.9316 |
| Instruction2vec | 0.8822 | 0.8840 | 0.8822 | 0.8821 | 0.9561 |

| Model | Accuracy | Precision | Recall | F1-Score | AUC |
|---|---|---|---|---|---|
| Breg | 0.8778 | 0.8839 | 0.8778 | 0.8808 | 0.9495 |
| Breg(MLM) | 0.8637 | 0.8731 | 0.8772 | 0.8752 | 0.9518 |
| GMN | 0.8427 | 0.8581 | 0.8427 | 0.8503 | 0.9300 |
| Gemini | 0.8649 | 0.8639 | 0.8441 | 0.8539 | 0.9561 |
| Palmtree | 0.7578 | 0.7997 | 0.7578 | 0.7782 | 0.9316 |
| Instruction2vec | 0.8859 | 0.8791 | 0.8759 | 0.8775 | 0.9586 |

| CVE Number | Vulnerability Function |
|---|---|
| CVE-2014-3508 | OBJ_obj2txt() |
| CVE-2022-0778 | BN_mod_sqrt() |
| CVE-2023-0215 | BIO_new_NDEF() |
| CVE-2019-1563 | CMS_decrypt_set1_pkey(), PKCS7_dataDecode() |
| CVE-2023-0466 | X509_VERIFY_PARAM_add0_policy() |
| CVE-2021-3712 | ASN1_STRING_set() |
| CVE-2016-2176 | X509_NAME_oneline() |
| CVE-2016-2182 | BN_bn2dec() |
| CVE-2021-23841 | X509_issuer_and_serial_hash() |
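Searching for the CVE functions listed above amounts to ranking every function of the target binary by its similarity to each vulnerable function in the library. The following minimal sketch assumes that function embeddings have already been produced by some model and uses cosine similarity with a top-k cut-off; the embedding values, the function `unrelated_fn`, the helper names, and the choice of cosine similarity and hit rate are illustrative assumptions, not details taken from Breg or from the metric reported in the cross-platform table.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)

def top_k(query, candidates, k):
    """Return the k candidate names most similar to the query embedding."""
    ranked = sorted(candidates, key=lambda name: cosine(query, candidates[name]), reverse=True)
    return ranked[:k]

def hit_rate_at_k(library, target, ground_truth, k):
    """Fraction of vulnerable functions whose true counterpart appears in the top-k results."""
    hits = sum(ground_truth[cve] in top_k(vec, target, k) for cve, vec in library.items())
    return hits / len(library)

# Hypothetical embeddings: library built for one platform, target built for another.
library = {"CVE-2016-2182": [0.9, 0.1, 0.0], "CVE-2014-3508": [0.1, 0.9, 0.2]}
target = {"BN_bn2dec": [0.8, 0.2, 0.1], "OBJ_obj2txt": [0.2, 0.8, 0.3], "unrelated_fn": [0.5, 0.5, 0.5]}
truth = {"CVE-2016-2182": "BN_bn2dec", "CVE-2014-3508": "OBJ_obj2txt"}
print(top_k(library["CVE-2016-2182"], target, 2))
print(hit_rate_at_k(library, target, truth, k=1))
```

In practice the target side holds thousands of functions, so embeddings are usually computed once and compared in bulk; the pairwise loop here only shows the ranking logic.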

| Model | ARM32 / arm32 | ARM32 / mips32 | ARM32 / x64 | ARM32 / x86 | MIPS32 / arm32 | MIPS32 / mips32 | MIPS32 / x64 | MIPS32 / x86 |
|---|---|---|---|---|---|---|---|---|
| Breg | 1.000 | 0.8333 | 1.000 | 0.8500 | 0.8500 | 0.8833 | 0.9333 | 0.8583 |
| Breg(MLM) | 0.9333 | 0.8583 | 1.000 | 0.8200 | 0.8500 | 0.8583 | 0.8533 | 0.7833 |
| GMN | 0.7667 | 0.6450 | 0.6700 | 0.7833 | 0.5921 | 0.7333 | 0.4308 | 0.3839 |
| Gemini | 0.9000 | 0.7283 | 0.7417 | 0.6867 | 0.6417 | 0.7950 | 0.5733 | 0.5683 |
| Palmtree | 0.8750 | 0.3790 | 0.2990 | 0.2929 | 0.2962 | 0.7850 | 0.2762 | 0.2929 |
| Instruction2vec | 1.000 | 0.8200 | 0.8833 | 0.7950 | 0.7367 | 0.8833 | 0.8533 | 0.7950 |

| Model | Parameters (M) | Training Time (Hours/Epoch) | GPU Memory (GB) |
|---|---|---|---|
| Breg | 15.2 | 3.5 | 8.2 |
| Breg(MLM) | 15.2 | 3.2 | 8.2 |
| GMN | 8.7 | 2.1 | 6.5 |
| Gemini | 6.3 | 1.8 | 5.2 |
| Palmtree | 12.5 | 2.8 | 7.3 |
| Instruction2vec | 4.2 | 1.2 | 4.1 |
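A little arithmetic on the complexity table helps put the numbers in proportion. Assuming 32-bit floating-point weights (4 bytes per parameter, an assumption the table does not state), Breg's 15.2 M parameters occupy only about 0.06 GB, a small fraction of the 8.2 GB reported. This suggests, though the table does not break it down, that most GPU memory goes to activations, gradients, optimizer state, and batch size rather than the weights themselves. The per-epoch gap between Breg and Breg(MLM) is also shown; `weight_memory_gb` and `relative_overhead` are hypothetical helpers.

```python
def weight_memory_gb(params_millions, bytes_per_param=4):
    """Memory for the weights alone, assuming fp32 (4 bytes per parameter) by default."""
    return params_millions * 1e6 * bytes_per_param / 1e9

def relative_overhead(t_new, t_base):
    """Relative increase of t_new over t_base."""
    return (t_new - t_base) / t_base

breg_weights = weight_memory_gb(15.2)
print(f"Breg weights: {breg_weights:.4f} GB of 8.2 GB reported")
print(f"Breg vs Breg(MLM): {relative_overhead(3.5, 3.2):.1%} more time per epoch")
```

Since both variants have the same parameter count and memory footprint, the roughly 9.4% longer epoch for Breg is presumably attributable to its additional pre-training objective, if Breg(MLM) is, as its name suggests, the MLM-only variant.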

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yi, C.; Dai, W.; Deng, Y.; Bao, L.; Xu, G. Enhancing Binary Security Analysis Through Pre-Trained Semantic and Structural Feature Matching. Appl. Sci. 2025, 15, 11610. https://doi.org/10.3390/app152111610
