1. Introduction

The field of neuroengineering is growing significantly, and its activities involve several challenging tasks. One important area of focus within this field is the creation of neuromorphic hardware systems. These systems are composed of electronic circuits specifically designed to directly implement artificial neural networks (ANNs). The primary aim of developing such systems is to facilitate the application of neural computing models across a broad range of scenarios, each with its own set of requirements. One critical need addressed by neuromorphic hardware is faster processing in ANNs. For instance, many applications, such as video processing [1], require high data throughput to support fast training and/or recall cycles. Furthermore, some applications require real-time responses from ANNs, such as cryptography/encryption processing [2] and Internet of Things (IoT) environments, where data from sensor networks must be handled instantly [3]. In addition to processing speed, neuromorphic systems also meet the requirements of mobile applications. For example, robotic platforms can benefit greatly from the reduced physical size and energy consumption of these systems [4]. Telecommunications is another area where minimizing both hardware size and power requirements is important [5]. In this context, neuromorphic systems generally make it feasible to deploy neural network capabilities in compact and energy-efficient devices.

ASIC (Application-Specific Integrated Circuit) and FPGA (Field-Programmable Gate Array) technologies allow the creation of highly parallel computing architectures when implementing ANNs, which can achieve greater processing speeds than traditional Central Processing Units (CPUs) [3]. In addition, the low energy consumption of ASIC and FPGA circuits makes them well suited for developing embedded neuromorphic systems, offering an advantage over Graphics Processing Units (GPUs) and CPUs in this regard [6]. While ASIC chips are ideal for ultra-low-power applications like brain–computer interfaces, FPGA technology is beneficial due to its shorter development cycles and lower costs for small- and medium-scale production [7]. FPGAs can thus achieve high data throughput while minimizing space and energy use, making them a suitable choice for the types of ANN applications described above.

However, implementing ANNs directly on hardware, particularly on FPGAs, also presents several challenges. These difficulties arise from the inherent complexity of neural computations and the constraints of hardware resources. Two of them are the limited capacity of the chips and the numerical precision of calculations. The first challenge stems from FPGAs having a finite number of logic blocks (commonly implemented as Look-Up Tables (LUTs)), memory, and Digital Signal Processing units (DSPs), which can restrict the size and complexity of the networks that can be implemented [8]. The second challenge involves numerical precision, as implementing ANNs on hardware often uses fixed-point arithmetic instead of floating-point [9]. While this strategy saves resources, it can lead to precision loss. Therefore, numerical precision should be monitored in ANN hardware implementations to ensure it does not affect model accuracy. One specific computation in which both chip resource and precision issues can arise is the implementation of neural activation functions like the sigmoid function [10]. The sigmoid function, $f(x)$, is defined as follows:

$$f(x) = \frac{1}{1 + e^{-a(x - b)}}$$

where $a$ represents the slope of the curve and $b$ represents the horizontal translation that adjusts the location of the curve’s inflection point. As a neural activation function, the sigmoid function is often used with $a = 1$ and $b = 0$ [11].
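As a point of reference for the definition above, the following minimal Python sketch evaluates the sigmoid in floating point. The parameter names `a` (slope) and `b` (horizontal translation) are chosen here for illustration, and the defaults correspond to the usual activation-function setting with unit slope and no translation.

```python
import math


def sigmoid(x: float, a: float = 1.0, b: float = 0.0) -> float:
    """Sigmoid with slope a and horizontal translation b (names assumed here)."""
    return 1.0 / (1.0 + math.exp(-a * (x - b)))


# The inflection point sits at x = b, where the function equals 0.5.
print(sigmoid(0.0))          # default setting: a = 1, b = 0
print(sigmoid(2.0, b=2.0))   # curve shifted so that the inflection is at x = 2
```

The exponentiation and the division in this expression are the operations that make a direct hardware implementation costly.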

Directly implementing the sigmoid function in hardware is impractical because of the costly operations involved, such as exponentiation and division. Therefore, the present work focuses on developing a hardware-based approximation of the sigmoid function using a combination of first- and second-degree polynomial functions, depending on the region of the function domain. In line with the motivation outlined above, the proposal is intended to be resource-efficient, to conserve chip area, while maintaining numerical precision comparable to that of previous publications.

The function approximation by low-degree polynomials, in this work, will be performed at the software level, using a hardware description language. Then, it will be up to the FPGA vendor’s hardware synthesis software, based on that description, to find the corresponding hardware implementation compatible with the characteristics of each specific chip model.

Some related works [12,13,14,15,16] restricted the values of operands or of the boundaries between approximation regions to be powers of two, hoping this would save hardware resources. However, this restriction may result in a loss of accuracy. Previous works, such as [16,17], have also adopted the strategy of approximating regions of the function domain using low-degree polynomials. However, they adopted arbitrary values for the boundaries between those regions. In the present work, a systematic search for the best values of those boundaries was carried out instead, with the aim of better identifying which regions of the function are most suitable for approximation by polynomials of each degree.

A methodological challenge encountered in works related to FPGA algorithm implementations is the wide variety of devices, with different types of internal hardware modules available, produced by different companies, each with its own synthesis software. For instance, experimental results in [17] were obtained for the Xilinx FPGA XC7V2000 with the Vivado design suite, while in [14] the authors used Quartus II for the FPGA device EP3C16F484C6 from the Cyclone III family. This often makes direct comparisons between results published in different studies impractical. The representation of operands and coefficients used in calculations also varies from study to study, in terms of the total number of bits, the number of bits in the integer part, and the number of bits in the fractional part (when using fixed-point representation), potentially rendering comparisons meaningless.

The main contributions of this work are as follows:

In the sigmoid approximation, it systematically searches for the best boundaries between approximation regions in its domain, rather than establishing them arbitrarily. This strategy provided a low mean absolute approximation error when compared to related works.

It relaxes the common restriction on using operands that are powers of two, allowing them to assume values freely. Experimental results showed that this had no systematic negative impact on hardware resource usage and probably contributed to the quality of the results.

As a methodological contribution, the results are validated for multiple chip models, from two large companies, making the comparisons with other works fair and more general. Furthermore, all related works considered here were implemented in VHDL language according to the details provided in the original articles and their codes were made publicly available for reproduction by the scientific community and for the continuation of this research.

Also seeking fairness and a methodologically correct procedure, we advocate that the comparison with the results of related works be made by standardizing the number of bits of the operands, the FPGA synthesis programs, and the chip models for implementation.

The remainder of the paper is organized as follows: The next section presents the justification for this study and a review of the literature. Section 3 details the proposed method, and the final two sections present the results, discussion, comparisons to published works, and conclusions.

2. Justification and Related Works

In the field of machine learning, the sigmoid function is a widely used nonlinear component, commonly serving as a neural activation function. It is frequently employed in shallow networks, such as Multilayer Perceptrons (MLPs), both during the operation phase (inference) and during the training phase, where its derivative is used for error backpropagation and weight adjustment [11]. However, the sigmoid function is also essential in various other neural computing models beyond MLPs. For example, it regulates the retention, forgetting, and passing of information in recurrent neural networks, acting as the activation function for different gates in Long Short-Term Memory (LSTM) networks [18] and in Gated Recurrent Units (GRUs) [19,20]. While the Rectified Linear Unit (ReLU) and its variants, collectively known as the ReLU family, have gained popularity as activation functions in deep learning networks [21], the sigmoid function remains in use within deep models as well. For example, it modulates the flow of information in Structured Attention Networks (SANs), which incorporate structured attention mechanisms to focus on specific parts of the input data [22]. The sigmoid function is also employed to map internal values to a range between 0 and 1 in deep models, particularly for binary decisions or probabilistic outputs, as seen in convolutional neural networks, like LeNet [23], and in autoencoders [24].

The continued interest in researching the sigmoid function is also reflected in the numerous studies published on its hardware implementation. Common approaches to implementing the sigmoid function include piecewise approximation with linear or nonlinear segments, LUTs for direct storage of output values, Taylor series expansion, and Coordinate Rotation Digital Computer (CORDIC) methods. The work in [25] aims to achieve a low maximum approximation error using CORDIC-based techniques to iteratively calculate trigonometric and hyperbolic functions. However, this approach demands significant chip resources. Similarly, the authors in [26] employed Taylor’s theorem and the Lagrange form for approximation. That work also proposes reusing neuron circuitry in the approximation calculation to enhance chip area efficiency.

For piecewise approximation of the sigmoid curve, the authors in [27] utilized exponential function approximation, which involves complex division operations. A number of studies propose multiple approximation schemes. For instance, the authors in [13] introduced an approach using three independent modules (Piecewise Linear Function (PWL), Taylor series, and the Newton–Raphson method) that can be combined to achieve the desired balance between accuracy and resource efficiency. The study in [28] presents two methods for approximation: one centralized and the other distributed, using reconfigurable computing environments to optimize implementation.

Meanwhile, the authors in [16] proposed different schemes for sigmoid approximation, utilizing first- or second-order polynomial functions depending on the required accuracy and resource usage. Nevertheless, most works focus on different PWL approximation strategies. Reference [17] uses curvature analysis to segment the sigmoid function for PWL approximation, adjusting each segment based on maximum and average error metrics to balance precision and resource consumption. In another approach, the authors in [29] employed a first-order Taylor series expansion to define the intersection points of the PWL segments, while [30] leveraged a statistical probability distribution to determine the number of segments, dividing the function into unequal intervals to minimize the approximation error. Other approaches aim to reduce computational complexity. For example, the authors in [31] explored a technique that combines base-2 logarithmic functions with bit-shift operations for approximation, resulting in reduced chip area.

The use of LUTs for direct storage of sigmoid output values is explored in [32], providing a straightforward method for FPGA implementation. On the other hand, the authors in [33] achieved high precision by using floating-point arithmetic, directly computing the sigmoid function with exponential function interpolation via the Maclaurin series and Padé polynomials. However, this implementation requires a large chip area.

A restriction adopted in several related works [12,13,14,15,16] was to use only multiplications by powers of 2 or operations with base-2 logarithms, instead of coefficients with generic values, so that shifts could replace ordinary multiplications and save hardware resources. However, this risks sacrificing accuracy.
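The trade-off behind this restriction can be illustrated with a short Python sketch. It assumes a fixed-point format with 12 fractional bits, and the function names are hypothetical: a coefficient that is a power of two needs only a shift, whereas a generic coefficient needs a full multiplication followed by rescaling.

```python
FRAC_BITS = 12  # assumed number of fractional bits in the fixed-point format


def mul_by_pow2_shift(x_fixed: int, k: int) -> int:
    """Multiply a fixed-point integer by 2**-k with an arithmetic right shift."""
    return x_fixed >> k


def mul_generic(x_fixed: int, coeff_fixed: int) -> int:
    """Multiply by an arbitrary fixed-point coefficient, then rescale."""
    return (x_fixed * coeff_fixed) >> FRAC_BITS


x = int(1.5 * 2**FRAC_BITS)         # 1.5 in fixed point
quarter = int(0.25 * 2**FRAC_BITS)  # 0.25 in fixed point

# Both paths give the same result when the coefficient is a power of two,
# but only the generic path can also represent a coefficient such as 0.23.
print(mul_by_pow2_shift(x, 2), mul_generic(x, quarter))
```

A generic coefficient can follow the target curve more closely, which is the accuracy side of the trade-off described above.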

3. Materials and Methods

As in some of the related works, the scheme proposed here consists of splitting the sigmoid function domain into a small number of subdomains (Figure 1) and approximating the function in each subdomain by a low-degree polynomial, with the expectation of low hardware resource usage. The polynomial degrees are chosen based on the shape of the function graph in each subdomain. For x in the function domain, if $x < \mathit{xinf}$, $f(x)$ will be approximated as a constant equal to zero. For $x > \mathit{xsup}$, $f(x)$ will be approximated as the constant one. For $\mathit{xinf} \le x < \mathit{xmin}$ and for $\mathit{xmax} \le x \le \mathit{xsup}$, $f(x)$ will be approximated by first-degree polynomials. For $\mathit{xmin} \le x < \mathit{xmed}$ and for $\mathit{xmed} \le x < \mathit{xmax}$, $f(x)$ will be approximated by second-degree polynomials. However, unlike in those works, the boundaries between the approximation regions will not be defined arbitrarily, but will be systematically sought, in an approximately continuous way, in order to minimize the average absolute error in the range $[-8, 8]$. This range was chosen here, as in [17], because outside it, $f(x)$ is approximately constant, 0 or 1. As an alternative, the same algorithm structure will search for the boundaries that minimize the maximum (the peak) of the absolute error in the same domain.
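The region selection described in this paragraph can be sketched in Python as follows. The boundary values used in the example calls are hypothetical placeholders (the actual ones come from the search), and the choice of which side of each boundary is inclusive is an assumption made here.

```python
def region(x: float, xinf: float, xmin: float) -> str:
    """Return the kind of approximation used for x.

    xmed is fixed at 0, and xmax and xsup mirror xmin and xinf about the
    origin. Boundary inclusiveness is an assumption of this sketch.
    """
    xmed, xmax, xsup = 0.0, -xmin, -xinf
    if x < xinf:
        return "constant 0"
    if x < xmin:
        return "first degree (left)"
    if x < xmed:
        return "second degree (left)"
    if x < xmax:
        return "second degree (right)"
    if x <= xsup:
        return "first degree (right)"
    return "constant 1"


# Hypothetical boundaries, for illustration only.
for x in (-9.0, -3.0, -1.0, 1.0, 3.0, 6.0):
    print(x, region(x, xinf=-5.0, xmin=-2.0))
```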

Algorithm 1 was used to search for the best approximation boundaries. It was implemented in the GNU Octave software [34]. Initially, the search step dx is set to 0.005. Due to the symmetry of the sigmoid function about the point $(0, 0.5)$, xmed is set to 0. The variables minAvgAbsError and minMaxAbsError register the smallest average absolute error and the smallest absolute error peak, respectively, found up to each iteration over the whole domain. Then, two nested for loops generate many combinations of values for xinf and xmin. The particular ranges used in those for loops in Algorithm 1 were chosen after experimentation with wider ranges and larger steps in a preliminary coarse experiment; the ranges shown are those used in the final refinement. In lines 7 and 8, xmax and xsup are set to values symmetric about the origin in relation to xmin and xinf, respectively. Lines 9 to 14 build sequences of values for x in each of the approximation regions. Lines 15 to 20 build sequences containing the standard values of the sigmoid in each region. In lines 21 to 24, the Octave function polyfit is applied to the sequences belonging to each of the regions where polynomial approximations are adopted, using the least-squares error criterion. The third polyfit argument is the degree of the desired polynomial. The variables p2, p3, p4, and p5 receive the polynomial coefficients of the corresponding regions. Lines 25 to 31 calculate and concatenate the errors for each domain value. Line 32 calculates the average absolute error (avgAbsError) and line 33 calculates the maximum absolute error (maxAbsError) for the current (xinf, xmin) combination. Lines 34 to 42 check whether the newly calculated avgAbsError is smaller than the smallest average absolute error known so far, in which case the corresponding optimal conditions are registered. The same check is performed for maxAbsError in lines 43 to 51. Thus, at the end of the execution, the best boundaries and the corresponding polynomial coefficients are known for both criteria. Execution time was not a concern when writing this algorithm, because it only needs to be executed once. We do not adopt here the restriction to, or preference for, operations involving powers of 2, which leaves the polynomial coefficients and the boundaries free to assume any values.
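The search described above can be sketched in Python using only the standard library. This is not the authors' Octave code: the helper names, the small least-squares routine standing in for Octave's polyfit, and in particular the coarse search grids and step used in the example call are assumptions made here for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def polyfit(xs, ys, deg):
    """Least-squares fit via normal equations; coefficients highest power first."""
    n = deg + 1
    A = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    for col in range(n):  # Gaussian elimination with partial pivoting
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    coef = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(A[i][j] * coef[j] for j in range(i + 1, n))
        coef[i] = (b[i] - s) / A[i][i]
    return coef[::-1]


def polyval(p, x):
    acc = 0.0
    for c in p:  # Horner's rule
        acc = acc * x + c
    return acc


def seq(lo, hi, dx):
    n = int(round((hi - lo) / dx))
    return [lo + k * dx for k in range(n + 1)]


def errors(xinf, xmin, dx):
    """Average and maximum absolute error for one (xinf, xmin) combination."""
    xmed, xmax, xsup = 0.0, -xmin, -xinf
    errs = [0.0 - sigmoid(x) for x in seq(-8.0, xinf, dx)]
    for lo, hi, deg in ((xinf, xmin, 1), (xmin, xmed, 2),
                        (xmed, xmax, 2), (xmax, xsup, 1)):
        xs = seq(lo, hi, dx)
        p = polyfit(xs, [sigmoid(x) for x in xs], deg)
        errs += [polyval(p, x) - sigmoid(x) for x in xs]
    errs += [1.0 - sigmoid(x) for x in seq(xsup, 8.0, dx)]
    abs_errs = [abs(e) for e in errs]
    return sum(abs_errs) / len(abs_errs), max(abs_errs)


def search(xinf_values, xmin_values, dx):
    best_avg, best_max = (math.inf, None), (math.inf, None)
    for xinf in xinf_values:
        for xmin in xmin_values:
            avg, peak = errors(xinf, xmin, dx)
            if avg < best_avg[0]:
                best_avg = (avg, (xinf, xmin))
            if peak < best_max[0]:
                best_max = (peak, (xinf, xmin))
    return best_avg, best_max


# Hypothetical coarse grids and step, chosen only to keep the example fast.
best_avg, best_max = search([-6.0, -5.0, -4.0], [-3.0, -2.0, -1.0], dx=0.05)
print(best_avg, best_max)
```

A finer grid and a smaller step, as in the refinement stage described above, only change the arguments of the final call.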

The application of Algorithm 1 to any other function (for example, the hyperbolic tangent, also a common neural activation function [11]) is straightforward. It suffices to replace the sigmoid calculation in lines 15 to 20 by that function. Depending on that function’s characteristics, one may change the number of regions in the domain and the polynomial degrees, but the strategy remains the same.

The results obtained by executing Algorithm 1 are shown in Table 1. Table 1 shows that, as expected, the average absolute error was slightly lower in the first implementation (which minimizes the average error) than in the second (which minimizes the error peak), whereas the maximum absolute error was slightly lower in the second implementation than in the first.

Algorithm 1 Search for the best boundaries between approximation regions

- 1: dx = 0.005
- 2: xmed = 0
- 3: minAvgAbsError = ∞
- 4: minMaxAbsError = ∞
- 5: for xinf from … to … in 0.01 steps do
- 6: for xmin from … to … in 0.01 steps do
- 7: xmax = -xmin ▹ xmax and xmin symmetrical about the origin
- 8: xsup = -xinf ▹ xsup and xinf symmetrical about the origin
- Build the ranges of x (sigmoid domain):
- 9: x1 = sequence from -8 to xinf, with increments of dx
- 10: x2 = sequence from xinf to xmin, with increments of dx
- 11: x3 = sequence from xmin to xmed, with increments of dx
- 12: x4 = sequence from xmed to xmax, with increments of dx
- 13: x5 = sequence from xmax to xsup, with increments of dx
- 14: x6 = sequence from xsup to 8, with increments of dx
- Build y standard per range:
- 15: y1 = sequence built from 1 / ( 1 + exp( -x1 ) )
- 16: y2 = sequence built from 1 / ( 1 + exp( -x2 ) )
- 17: y3 = sequence built from 1 / ( 1 + exp( -x3 ) )
- 18: y4 = sequence built from 1 / ( 1 + exp( -x4 ) )
- 19: y5 = sequence built from 1 / ( 1 + exp( -x5 ) )
- 20: y6 = sequence built from 1 / ( 1 + exp( -x6 ) )
- Polynomial coefficients calculation per region:
- 21: p2 = polyfit( x2, y2, 1 ) ▹ first degree
- 22: p3 = polyfit( x3, y3, 2 ) ▹ second degree
- 23: p4 = polyfit( x4, y4, 2 ) ▹ second degree
- 24: p5 = polyfit( x5, y5, 1 ) ▹ first degree
- Error calculation:
- 25: e1 = zeros( 1, size( x1, 2 ) ) − y1
- 26: e2 = polyval( p2, x2 ) − y2
- 27: e3 = polyval( p3, x3 ) − y3
- 28: e4 = polyval( p4, x4 ) − y4
- 29: e5 = polyval( p5, x5 ) − y5
- 30: e6 = ones( 1, size( x6, 2 ) ) − y6
- 31: e = concatenation( e1, e2, e3, e4, e5, e6 )
- 32: avgAbsError = mean( abs( e ) )
- 33: maxAbsError = max( abs( e ) )
- 34: if avgAbsError < minAvgAbsError then
- 35–41: register avgAbsError as minAvgAbsError, together with the current xinf, xmin, p2, p3, p4, and p5
- 42: end if
- 43: if maxAbsError < minMaxAbsError then
- 44–50: register maxAbsError as minMaxAbsError, together with the current xinf, xmin, p2, p3, p4, and p5
- 51: end if
- 52: end for
- 53: end for

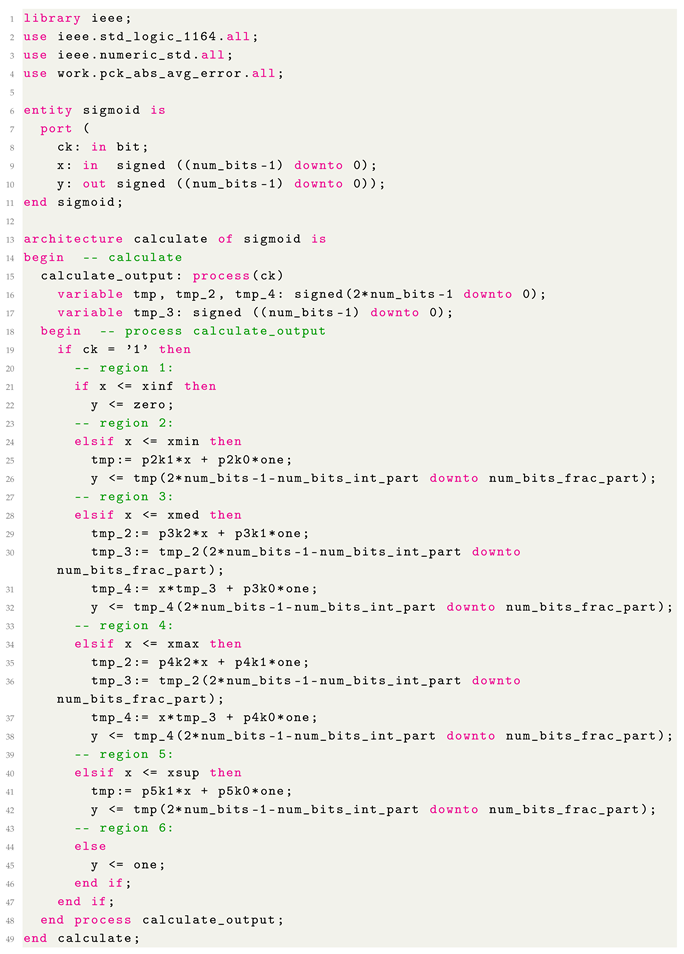

The two sigmoid modules corresponding to Table 1 were implemented in Very High Speed Integrated Circuits Hardware Description Language (VHDL) [35], in a behavioral way. Each implementation consists of two VHDL files: a package file containing the constant definitions (called pck_abs_avg_error.vhdl for the first module, in Appendix A.1) and a sigmoid.vhdl file (in Appendix A.2) containing the approximation algorithm. Those constants are the polynomial coefficients and the boundary values. The package file for the second module is omitted here because only the constant values change between the two implementations. The sigmoid.vhdl file is the same for both.

The input x, the output $f(x)$, the boundary values, and the polynomial coefficients are all represented as 16-bit fixed-point numbers, with 4 bits for the integer part and 12 bits for the fractional part, as in [17]. So, the real number 1 is represented as the binary number 0001000000000000, which corresponds to the decimal number 4096 (without considering the position of the binary point). Thus, each constant in Table 1 appears multiplied by 4096 in the VHDL file pck_abs_avg_error.vhdl. Two’s complement representation was used to allow calculations with negative numbers, by using the type signed from the ieee.numeric_std VHDL package.
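The representation can be checked with a short Python sketch. The function names are hypothetical, and rounding to the nearest representable value is an assumption made here (the VHDL constants may have been obtained by a different rounding rule).

```python
FRAC_BITS = 12           # fractional bits
WIDTH = 16               # total bits, so 4 bits remain for the integer part
SCALE = 1 << FRAC_BITS   # 4096


def to_fixed(value: float) -> int:
    """Convert a real number to a signed fixed-point integer (assumed rounding)."""
    raw = round(value * SCALE)
    if not -(1 << (WIDTH - 1)) <= raw < (1 << (WIDTH - 1)):
        raise ValueError("value outside the representable range [-8, 8)")
    return raw


def to_bits(raw: int) -> str:
    """16-bit two's complement bit pattern of a fixed-point integer."""
    return format(raw & ((1 << WIDTH) - 1), "016b")


def to_real(raw: int) -> float:
    return raw / SCALE


print(to_fixed(1.0), to_bits(to_fixed(1.0)))    # the real number 1
print(to_fixed(-1.0), to_bits(to_fixed(-1.0)))  # a negative value in two's complement
```

With 4 integer bits in two's complement, the representable inputs span from -8 up to, but not including, 8, in steps of 1/4096.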

The sigmoid implementation in the file sigmoid.vhdl follows directly from Table 1 and from Figure 1. An x value is read at the rising edge of the clock (ck) and the output y is then calculated according to the approximation region of x. After each multiplication, the result has a number of bits equal to the sum of the numbers of bits of its operands, that is, 32 bits: 8 bits for the integer part and 24 bits for the fractional part. So, following this operation, the result is truncated by discarding the 4 leftmost and the 12 rightmost bits, producing a number that again respects the 16-bit convention. Due to the particular values of the multiplication operands used here (Table 1), the 4 leftmost bits of the result are always zeros for positive results and ones for negative results (in two’s complement), allowing them to be discarded. Discarding the 12 least significant bits affects the results very little, because they have very small weights.
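The truncation step can be sketched in Python as follows. The function names are hypothetical, and the sketch models only the bit selection described above, not the VHDL code itself.

```python
def to_signed(value: int, bits: int) -> int:
    """Interpret the low `bits` bits of value as a two's complement number."""
    value &= (1 << bits) - 1
    return value - (1 << bits) if value & (1 << (bits - 1)) else value


def mul_fixed(a: int, b: int) -> int:
    """Multiply two 16-bit fixed-point values (12 fractional bits each).

    The full product has 8 integer and 24 fractional bits. Dropping the 12
    rightmost bits and keeping the next 16 also drops the 4 leftmost bits.
    """
    product = a * b
    kept = (product >> 12) & 0xFFFF
    return to_signed(kept, 16)


one_and_half = 6144   # 1.5 * 4096
minus_half = -2048    # -0.5 * 4096
print(mul_fixed(one_and_half, one_and_half) / 4096)  # 1.5 * 1.5
print(mul_fixed(minus_half, 2048) / 4096)            # -0.5 * 0.5
```

Discarding the low bits by selection rounds toward negative infinity, and the result is only valid when the true product fits in the 4 integer bits, which is the condition the text states for the operand values used.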

The proposed sigmoid calculation is not iterative, but rather a single-pass one. The input argument x is presented, the approximation region is identified, the polynomial corresponding to that region is calculated, and the result is output in a single pass (a single clock cycle), without further iterations.

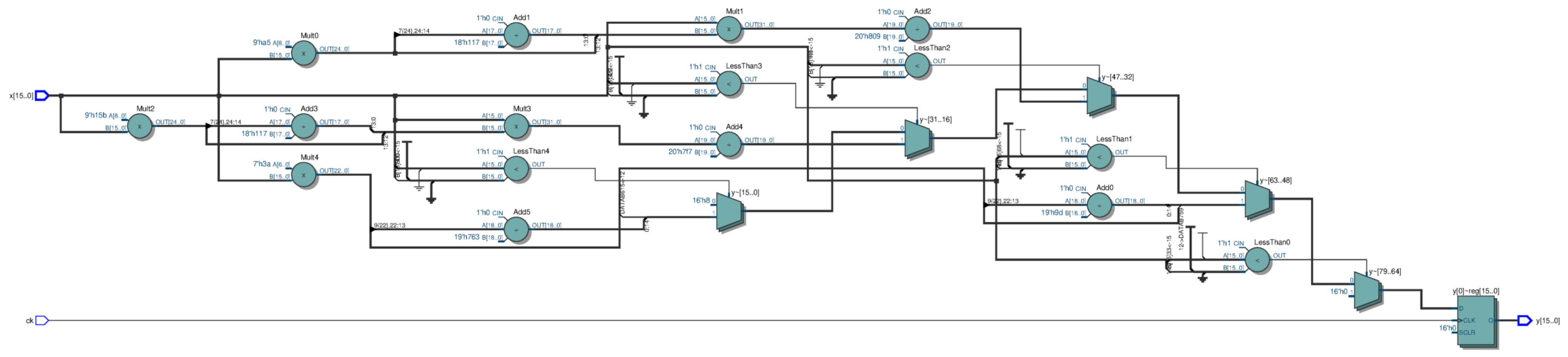

To compare the hardware resource usage and accuracy of our implementations with those of the related works cited in Section 2, we attempted to reimplement them all in a uniform way, not necessarily identical to theirs, but seeking to reproduce their algorithms and their sigmoid approximations as faithfully as possible, using a uniform fixed-point representation with the same number of bits (16 bits, with 4 bits for the integer part and 12 bits for the fractional part), on uniform chip models, and using uniform synthesis programs. This was possible only for some of the related works [12,13,14,15,16,17], because their implementations were described in sufficient detail. Each proposed sigmoid approximation was described in VHDL. The synthesis programs were Quartus Prime Version 24.1 std, Build 1077 SC Lite Edition, for the FPGA chips Cyclone V (device 5CGXFC7C7F23C8) and MAX 10 (device 10M02DCU324A6G), and Vivado v2025.1, for the FPGA chips Virtex-7 (device xc7v585tffg1157-3), Spartan-7 (device xc7s75fgga484-1), and Zynq-7000 (device xc7z045iffg900-2L). Thus, the programs come from companies that are among the leaders in the FPGA field and, for each company, chips of varying levels of complexity were chosen. The block diagram obtained by Quartus is shown in Figure 2.

Regarding accuracy, the criterion most used in the cited works was the average absolute error of the function over the domain considered, which justifies its adoption here.

Each VHDL description used in the comparisons was simulated using the GHDL program [36], because it is fast to execute and is capable of recording results in text files for later analysis. Each simulation run consisted of calculating the standard value of the sigmoid function, $f(x)$ (obtained with the GNU Octave program [34]), and comparing it with the value generated by the system described in VHDL, for each of the $2^{16}$ = 65,536 possible values in the x domain, and obtaining, at the end, the average of the absolute error. In other words, the tests were exhaustive, performed for all input values representable with 16 bits.
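The exhaustive evaluation can be sketched in Python as follows. The function `quantized_sigmoid` is a hypothetical stand-in for the output of a simulated VHDL module (here, simply the exact sigmoid rounded to 12 fractional bits), and the 4096 scale factor assumes the 16-bit format with 12 fractional bits.

```python
import math

SCALE = 4096.0  # 12 fractional bits (assumed format)


def exhaustive_mean_abs_error(approx_fixed):
    """Average absolute error over every 16-bit two's complement input."""
    total = 0.0
    count = 0
    for raw in range(-32768, 32768):
        x = raw / SCALE
        reference = 1.0 / (1.0 + math.exp(-x))
        total += abs(approx_fixed(raw) / SCALE - reference)
        count += 1
    return total / count, count


def quantized_sigmoid(raw: int) -> int:
    """Hypothetical module under test: exact sigmoid rounded to the output grid."""
    return round(SCALE / (1.0 + math.exp(-raw / SCALE)))


mae, n = exhaustive_mean_abs_error(quantized_sigmoid)
print(n, mae)
```

Replacing `quantized_sigmoid` with the values recorded from a simulation gives the corresponding average absolute error for that implementation.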

4. Results

Table 2 shows the synthesis results regarding the use of hardware resources in the FPGA, for each sigmoid approximation. There are six approximations from related works and our two approximations: one to minimize the average error (“ours avg.”) and one to minimize the error peak (“ours max.”). The table has five parts, one for each software–device combination. It shows the FPGA resources used by each implementation as reported by the corresponding synthesis software. The kinds of resources vary depending on the device type. For instance, only some devices have DSP blocks. The last column shows the total available number of each resource type in each FPGA device. The percentage of usage of each resource type relative to availability is also indicated. Reports from all implementations indicated that 16 registers (due to 16-bit operands) and 33 pins (16 input bits, 16 output bits, and a clock pin) were used. Thus, these results were omitted from Table 2, which keeps only those relevant for comparison purposes.

Comparing resource usage between implementations is not straightforward because the resources are of different types and available in different quantities. However, at first glance, one can see in Table 2 that our implementations are neither systematically better nor systematically worse, in general, than the others in terms of resource usage, even though this criterion did not guide the creation of our implementations. Specifically, only the implementation in [12] always matches ours or is advantageous.

Table 3 shows the average absolute error for all sigmoid implementations, obtained for 65,536 equally spaced values in the domain. It should be noted that, unlike those in Table 2, these results do not depend on the chip model on which the implementation was made, but only on the VHDL descriptions.

In Table 3, one can see that only the implementation from [13] managed to achieve a lower error than ours. However, Table 2 shows that it used more resources of most types compared to our implementations. Another interesting result was that our second implementation, which aimed only to obtain the minimum error peak, surpassed almost all others in the average absolute error criterion. The implementation in [12], which is advantageous compared to ours concerning resource usage, has a much larger average absolute error.

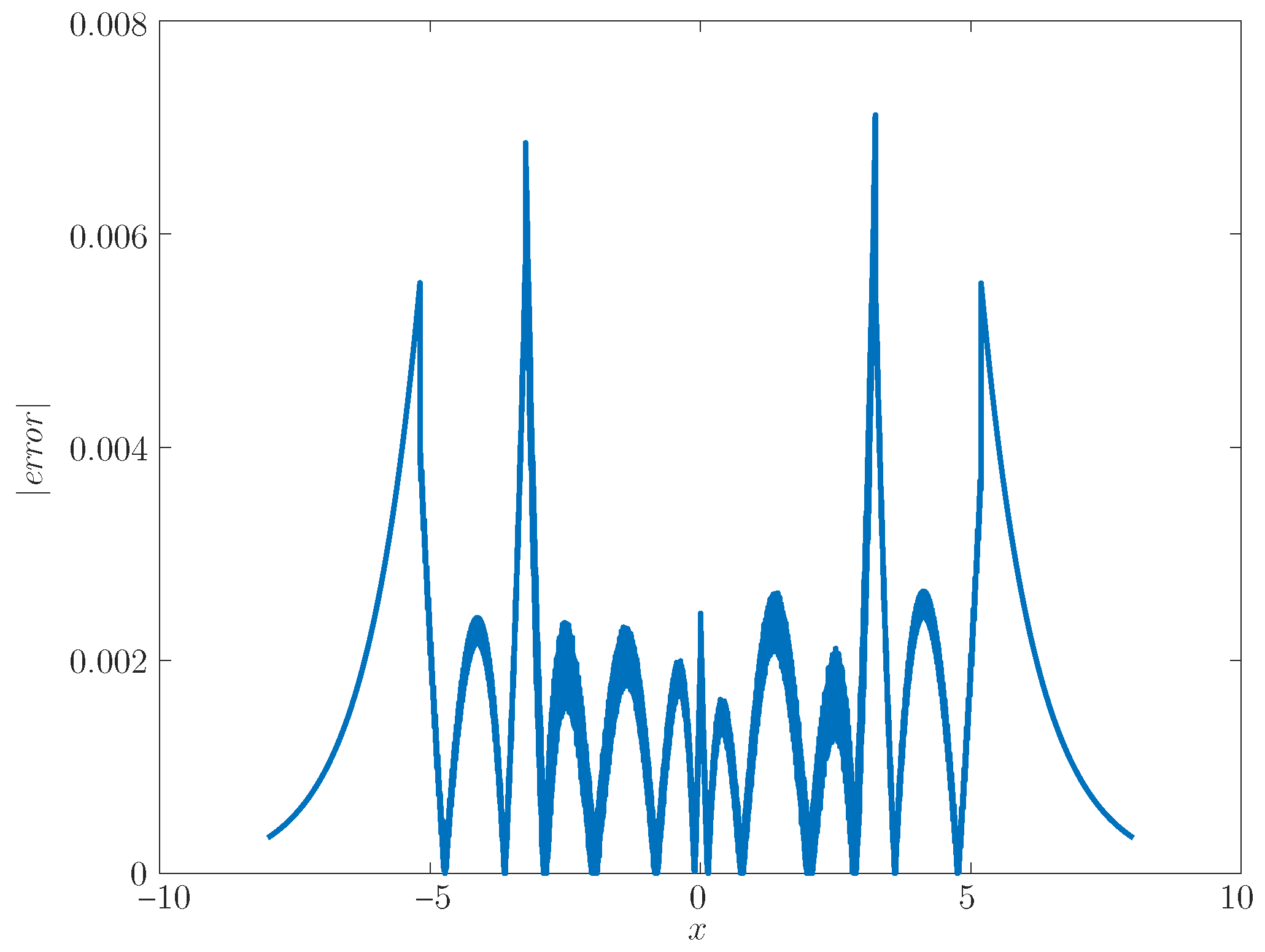

The absolute error over the function domain is shown in Figure 3 for our first implementation and in Figure 4 for the second.

The maximum absolute errors of our implementations were 0.0068181 for the first and 0.0071184 for the second. They are larger than those obtained before the VHDL implementation (Table 1), most likely due to the use of the 16-bit fixed-point representation. Another effect was that the maximum error of the first implementation is now lower than that of the second implementation. However, in some cases, the second implementation is advantageous in relation to the first in resource utilization (Table 2).

After obtaining these results, new experiments were carried out to observe the effect of the number of bits in the representation of the operands on the average absolute error and on the use of hardware resources.

We then also implemented our approximation using 8-bit and 32-bit fixed-point representations, always keeping 4 bits for the integer part and the rest for the fractional part. We kept 4 bits in the integer part because they were enough to cover the range of values from $-8$ to $8$ used in the experiments. The average absolute error values for these cases and for the previous case are in Table 4.

It can be seen that the mean absolute error for both the 16-bit and 32-bit fixed-point implementations was equal to that obtained using the low-degree polynomial approximation to the sigmoid on a general-purpose 64-bit floating-point computer, prior to the VHDL description (Table 3). Therefore, for both 16 and 32 bits, the mean error value is likely due solely to having approximated the sigmoid by low-degree polynomials, not to the use of those bit widths or to the fixed-point implementation.
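To give a sense of scale for these word lengths, the following Python sketch computes the weight of the least significant bit when 4 bits are kept for the integer part. The function name is hypothetical, and the sketch only illustrates the resolution of each format, not the measured errors.

```python
INT_BITS = 4  # integer bits kept in every format


def lsb_weight(total_bits: int) -> float:
    """Weight of the least significant bit for a given total word length."""
    return 2.0 ** -(total_bits - INT_BITS)


for bits in (8, 16, 32):
    print(bits, lsb_weight(bits))
```

With 8 bits, the step between representable values is 1/16, whereas with 16 and 32 bits it is 1/4096 or smaller.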

The results of hardware resource usage as a function of the number of bits in the operands, for two chip models, are given in Table 5. It can be seen that hardware resource usage grew much more than linearly with the number of bits in the operands. This must be taken into account in a design, especially in a case like this, where the error value saturates beyond a certain number of bits.

Table 6 presents the data path delay results for some chip models, for the related works and for our implementation that aimed to minimize the mean absolute error. It shows that the delay of our implementation does not differ much from that of most others, but is much lower than that of the proposal in [13], which presented the lowest average absolute error.

5. Discussion and Conclusions

Approximating the sigmoid for implementation in FPGA by dividing its domain into a few subdomains, using a low-degree polynomial in each subdomain, and systematically searching for the best boundaries between the subdomains allowed us to obtain results with a good compromise between the use of hardware resources and the average absolute approximation error (over the interval from $-8$ to $8$ in the function domain). It thus becomes a good alternative to preexisting solutions.

The fact that we did not restrict operands or boundaries between approximation regions to be powers of two did not prevent relatively good results from being obtained, not only in error value but also in resource usage, when compared to related works. Therefore, the need for such restrictions should be reconsidered.

The method proposed here aimed to obtain a low value for the average absolute error. In this respect, the work in [13] obtained better results than ours (Table 3), using a more elaborate method that includes Newton–Raphson approximation, but requiring greater use of hardware resources in most cases (for instance, in Logic Utilization, 91 versus 25 for the Cyclone V device 5CGXFC7C7F23C8). Therefore, it may be preferred when the approximation error matters much more than resource usage.

A limitation of this work was that it attempted to reproduce the implementations described in other studies, with the possibility that this reproduction might have had differences from the originals that could have influenced the results. Furthermore, some of those proposals allowed for parameter adjustments, whereas in this reproduction, to minimize the length of the work, we sought to use only a typical version of what was described in each article.

As future work, a complete neural network can be implemented in FPGA using, in each neuron, a sigmoid implementation, as proposed here, comparing its performance with a version implemented in a general-purpose computer.

One can also investigate the implementation of other activation functions, such as the hyperbolic tangent, using the principles used here for the sigmoid.