Visual Measurement of Grinding Surface Roughness Based on GE-MobileNet

Abstract

1. Introduction

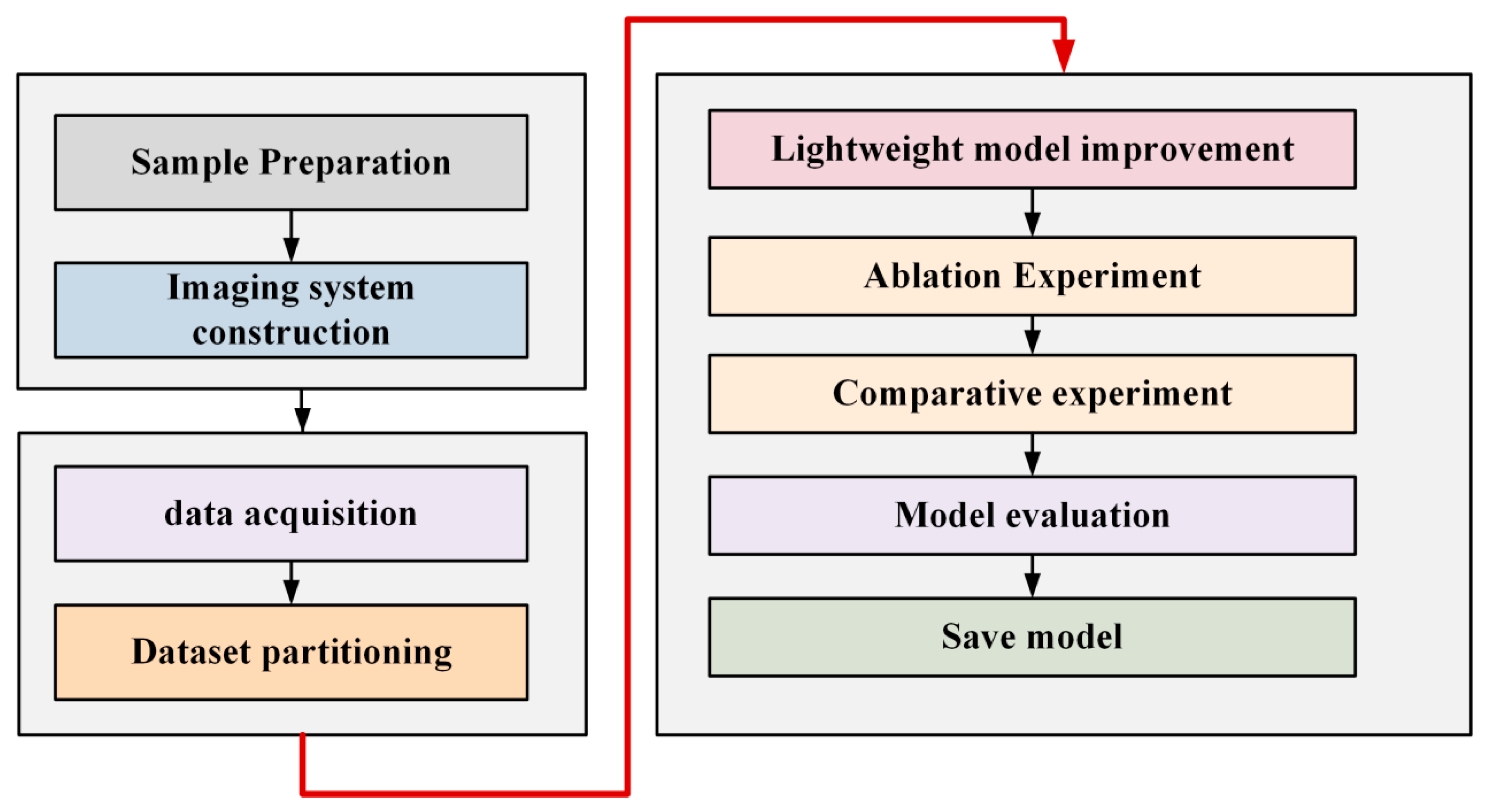

2. Methods

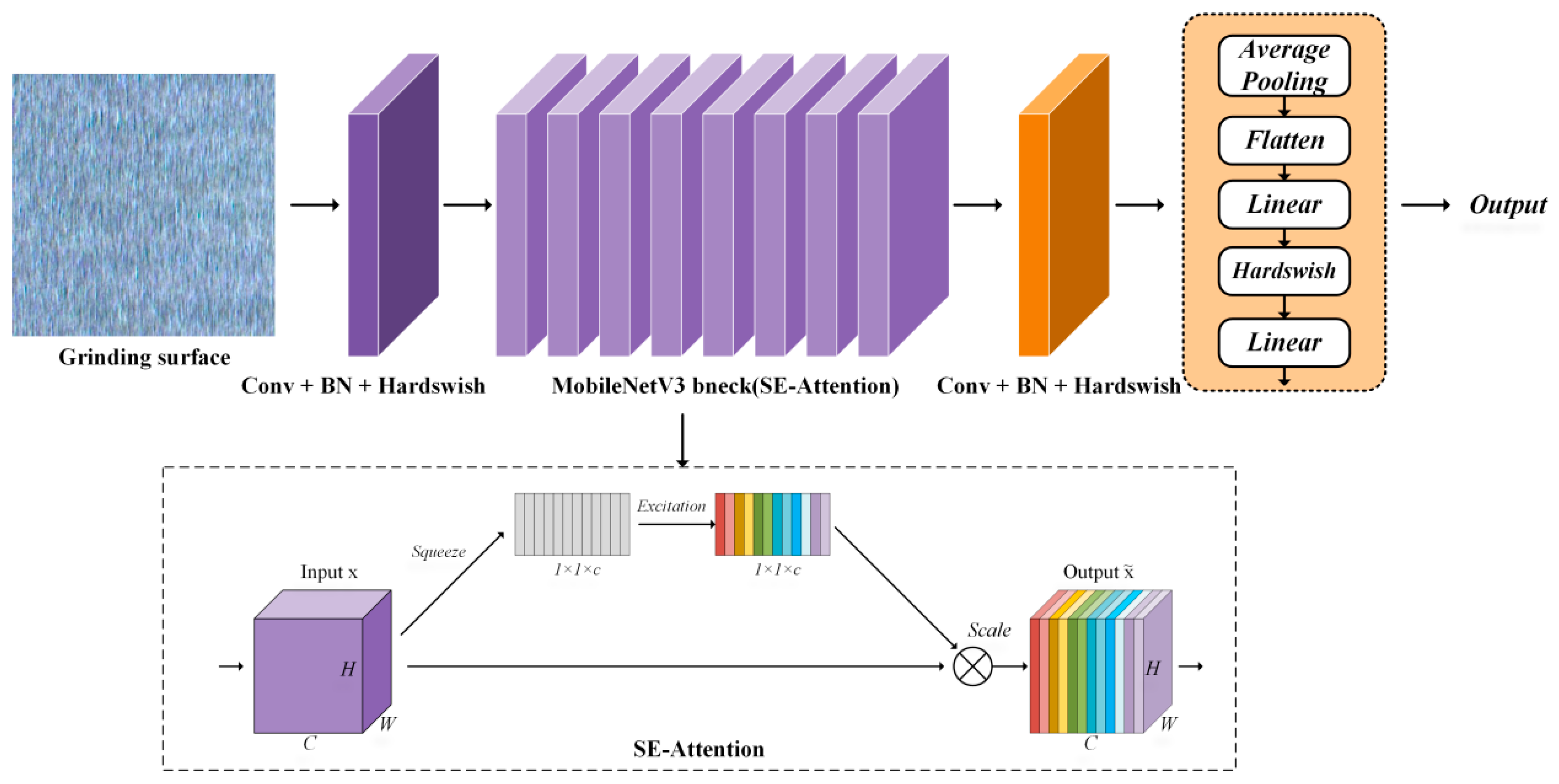

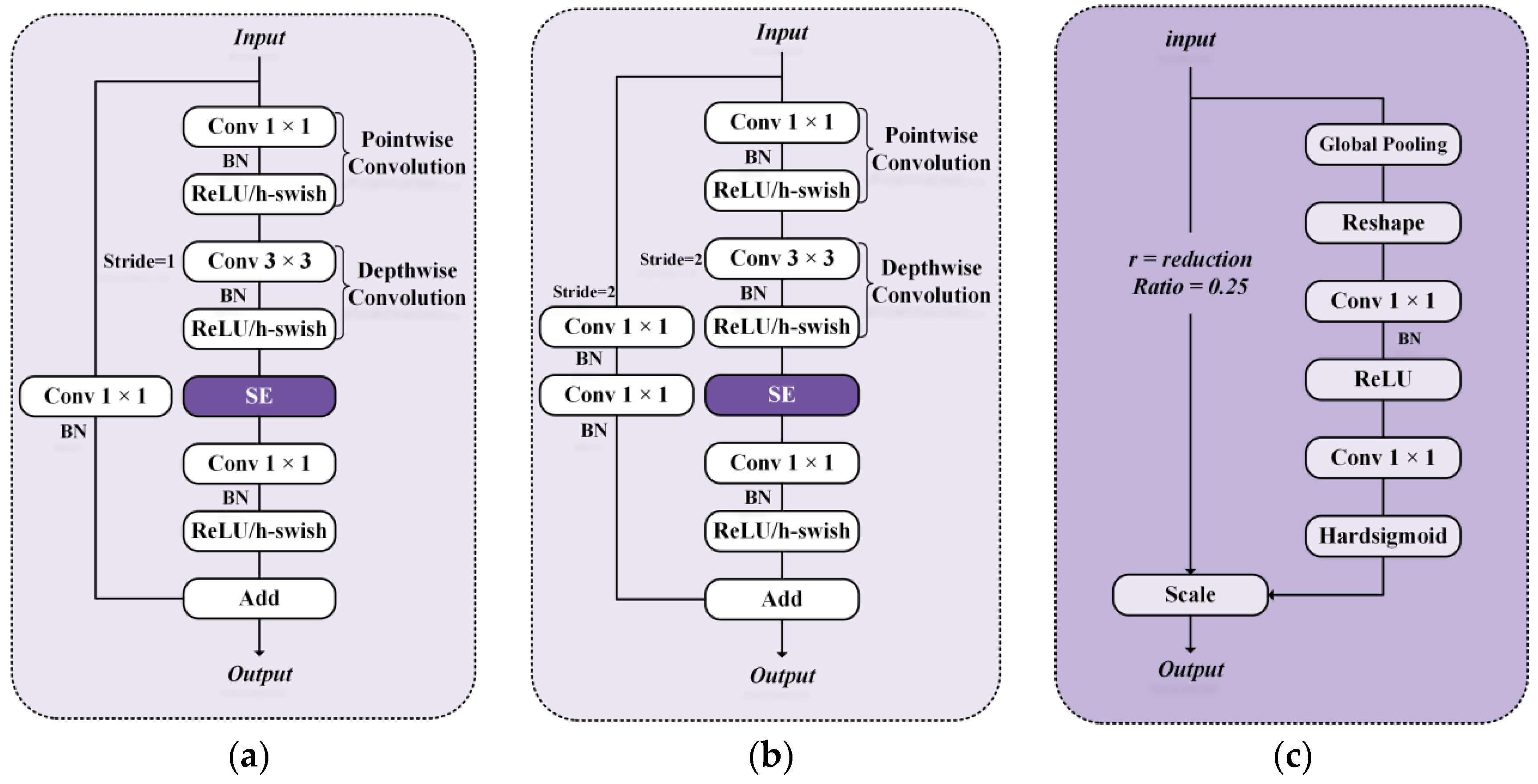

2.1. Basic MobileNetV3

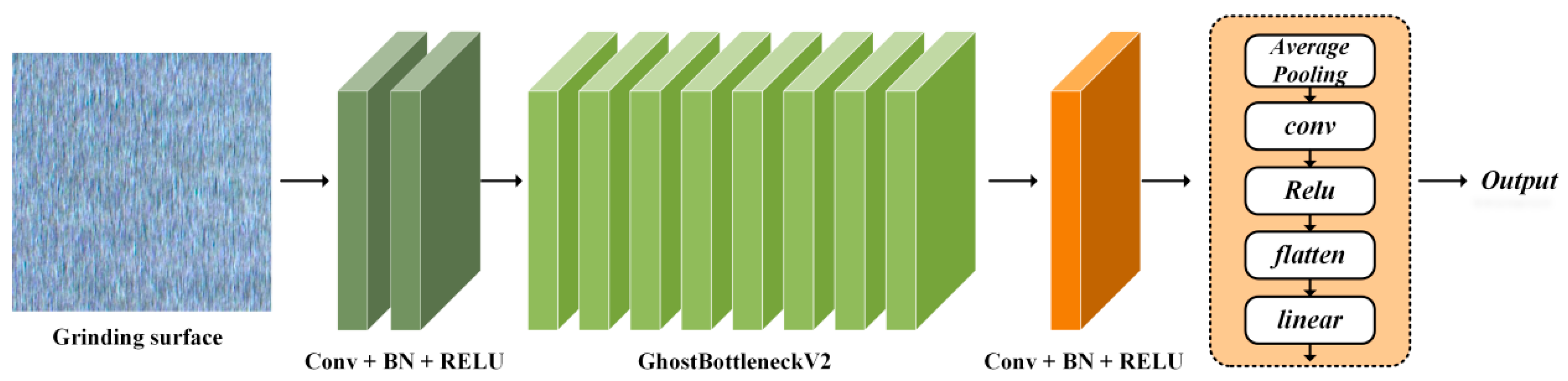

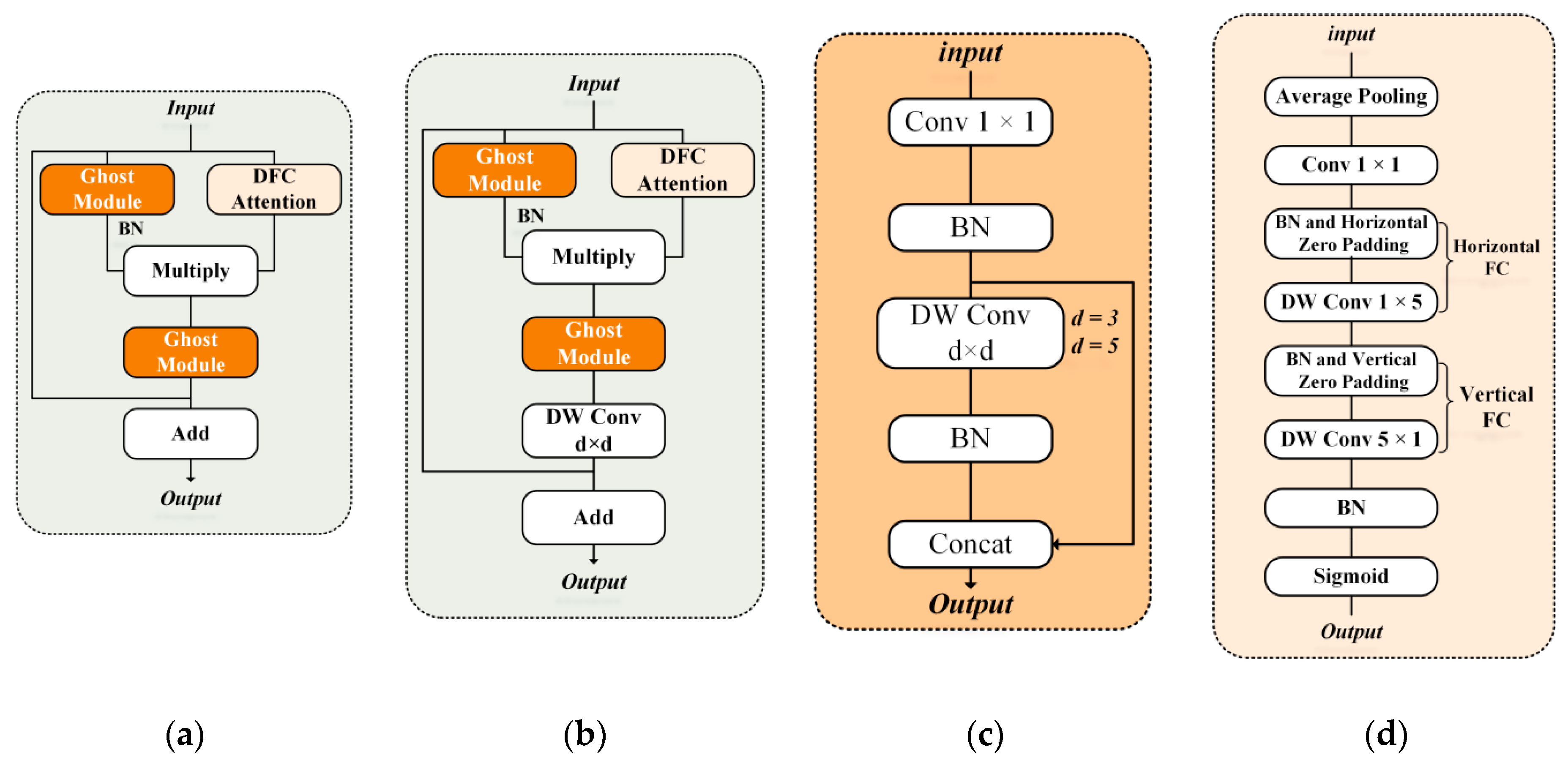

2.2. Feature Extractor GhostNetV2

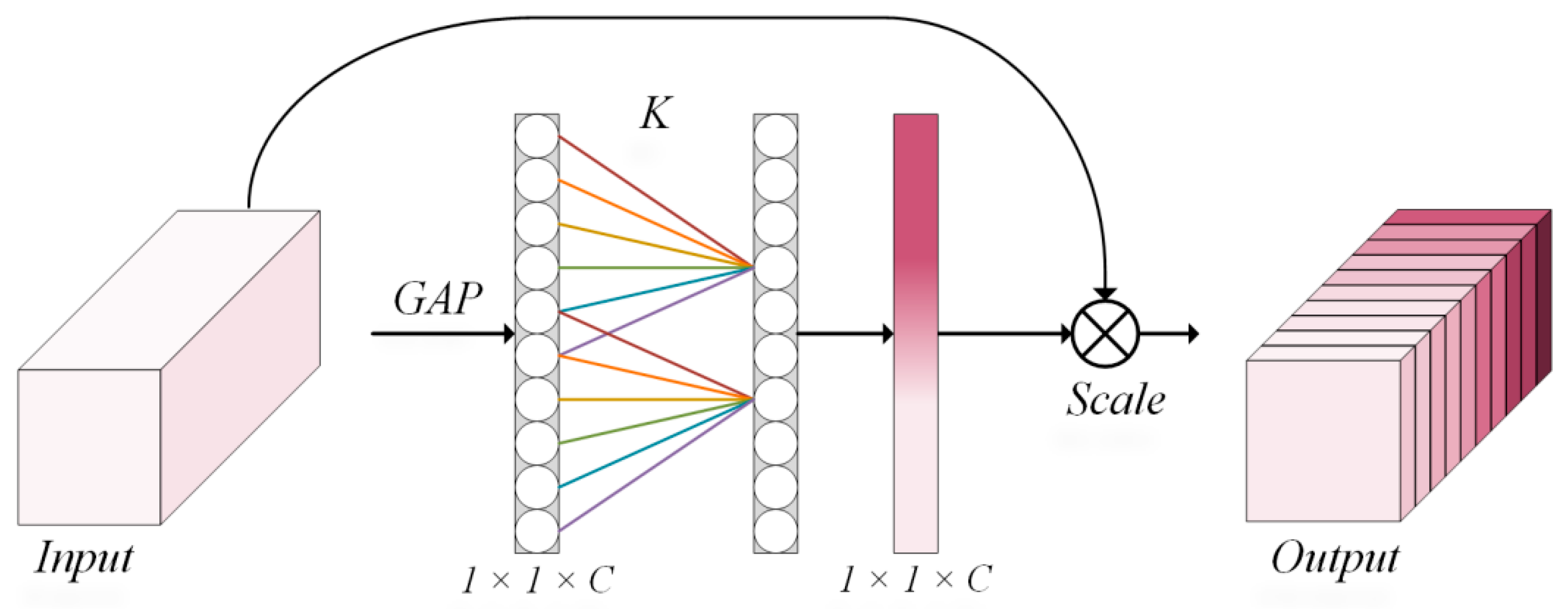

2.3. ECA Attention Mechanism

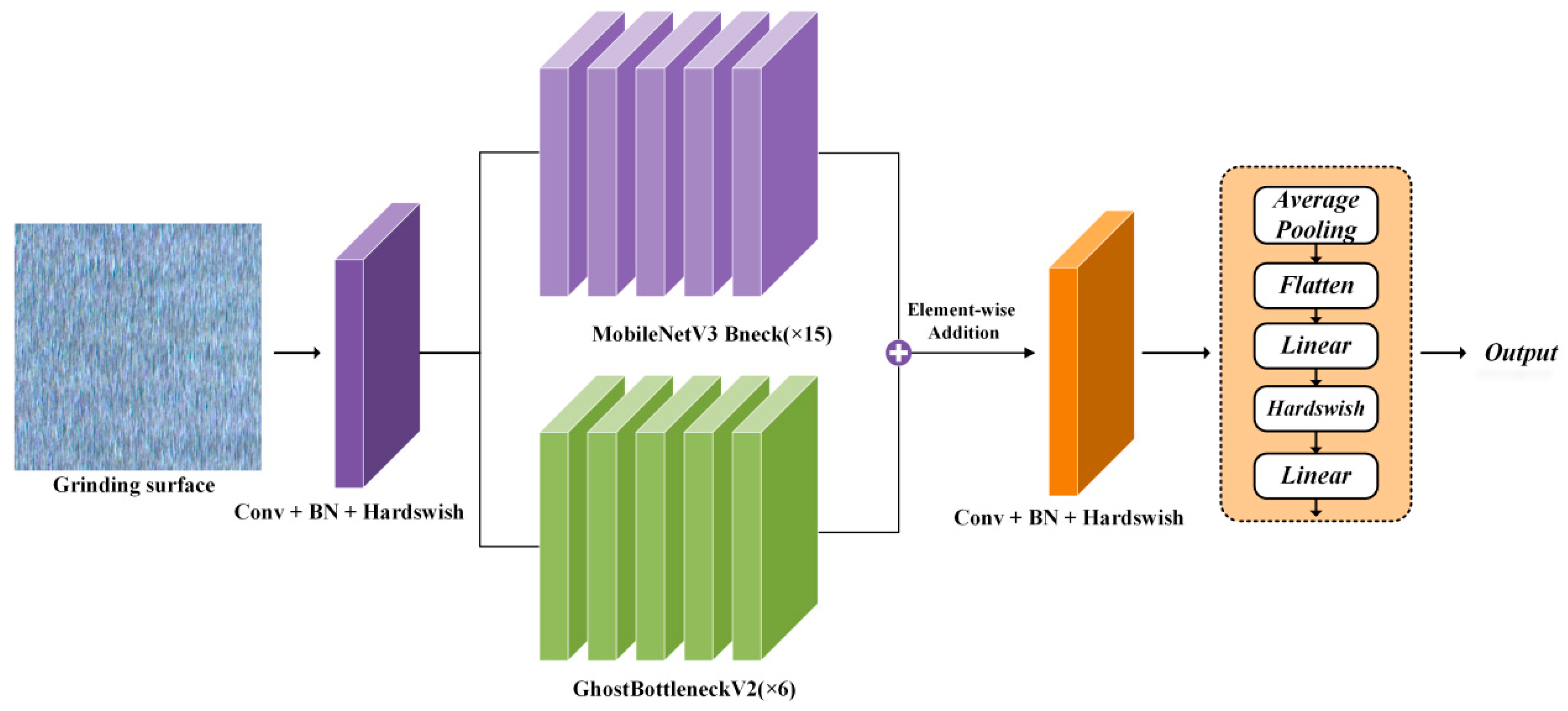

2.4. GE-MobileNet

3. Experiment

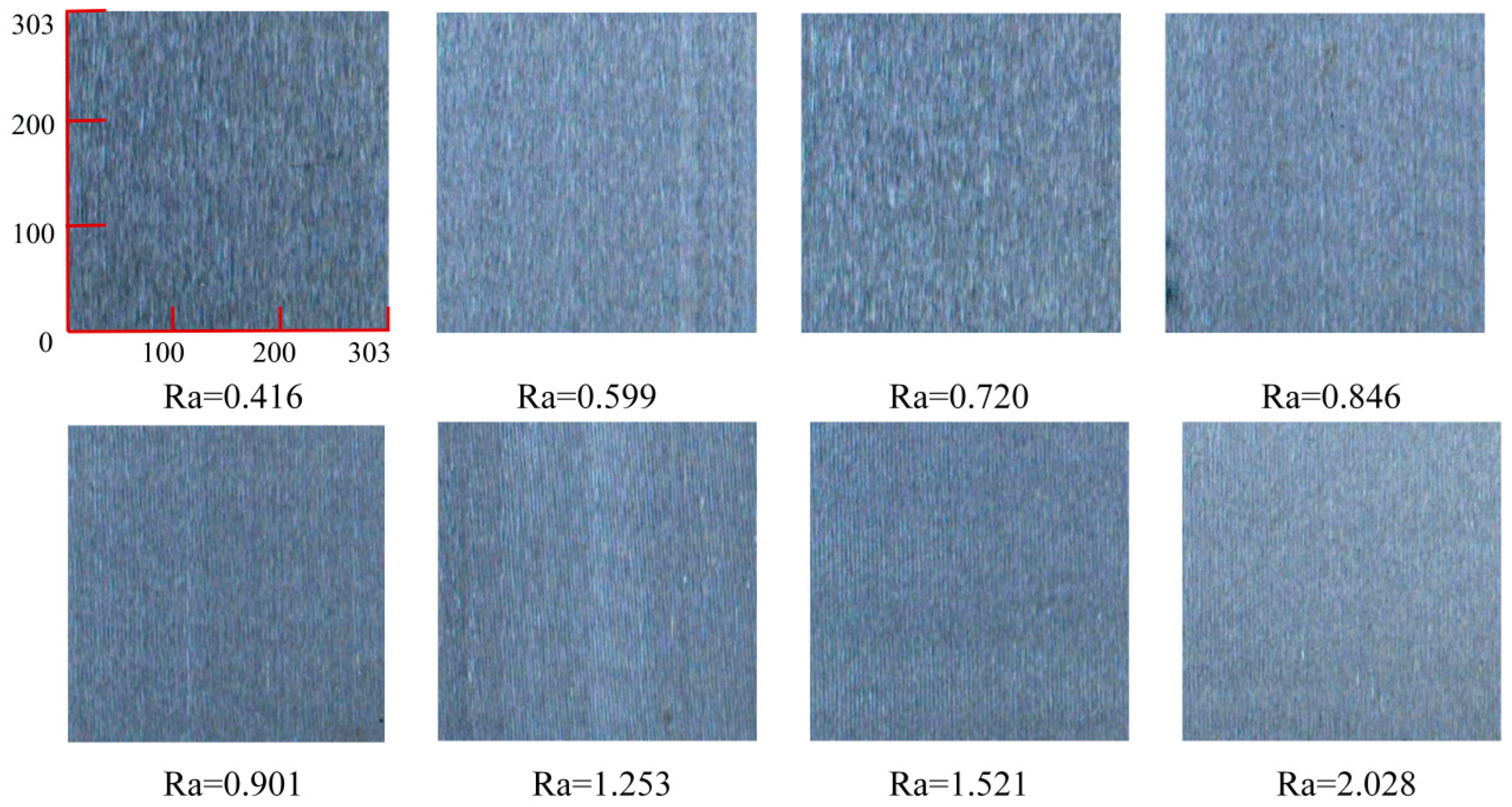

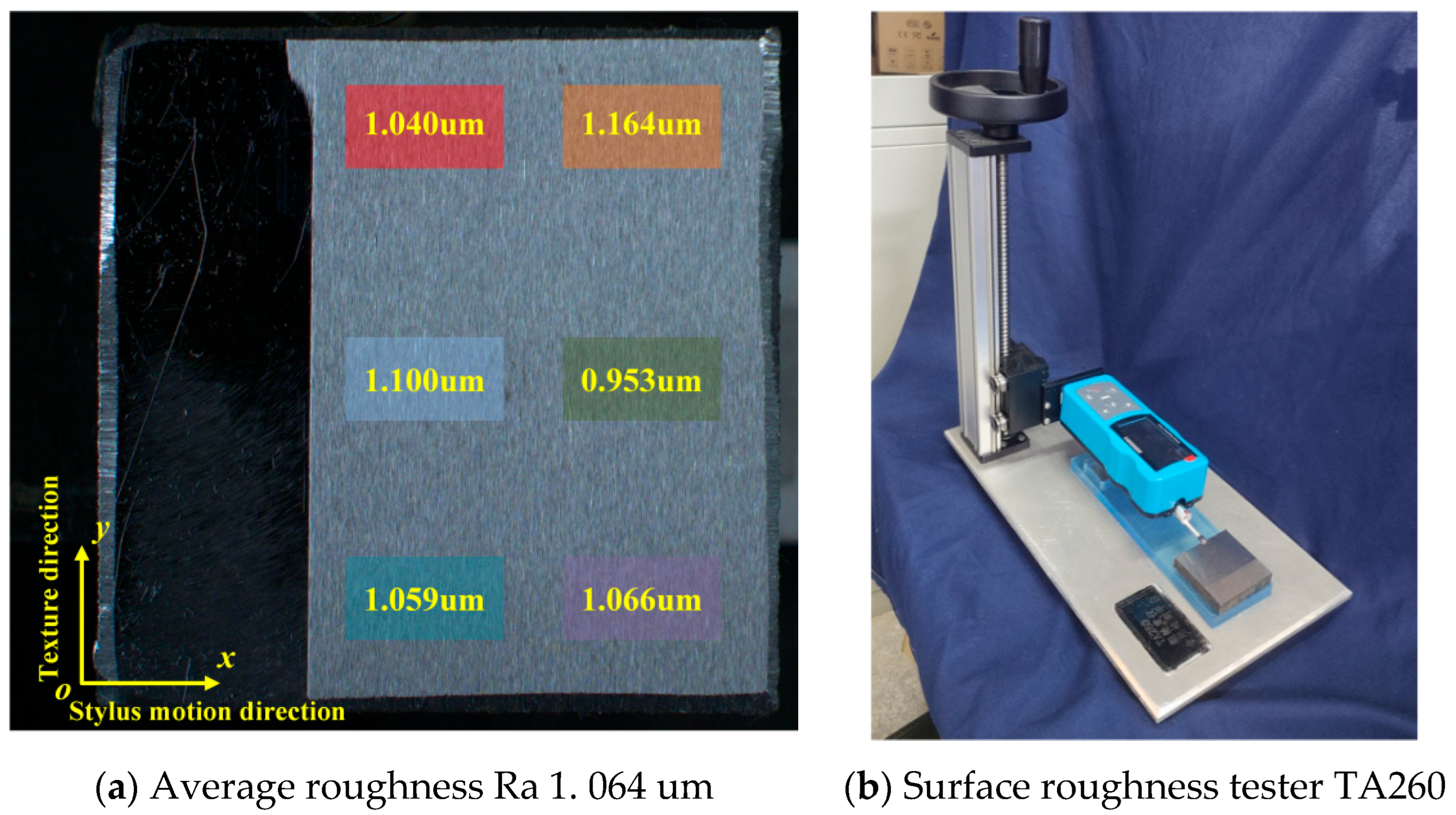

3.1. Sample Preparation and Workpiece Roughness

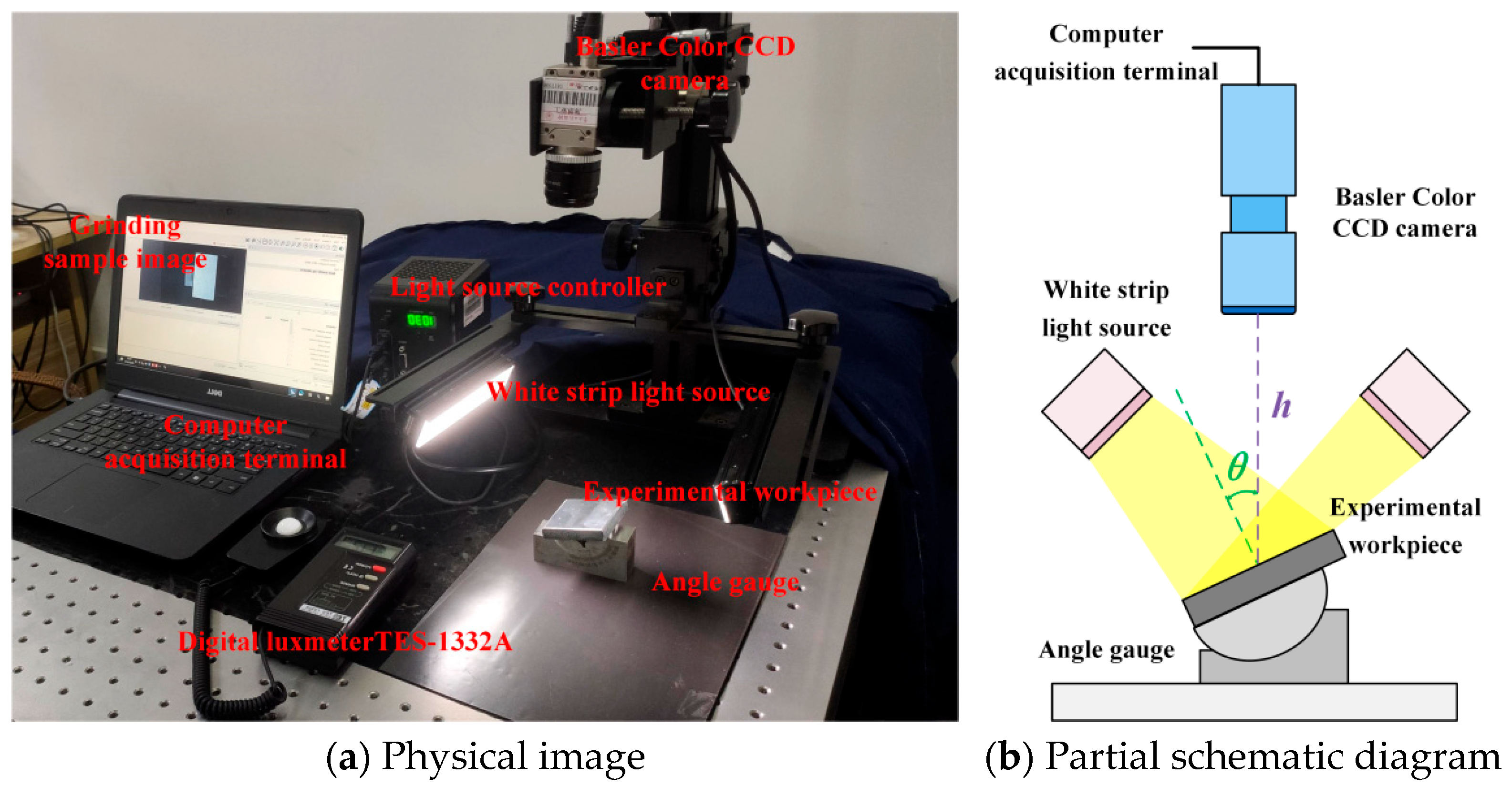

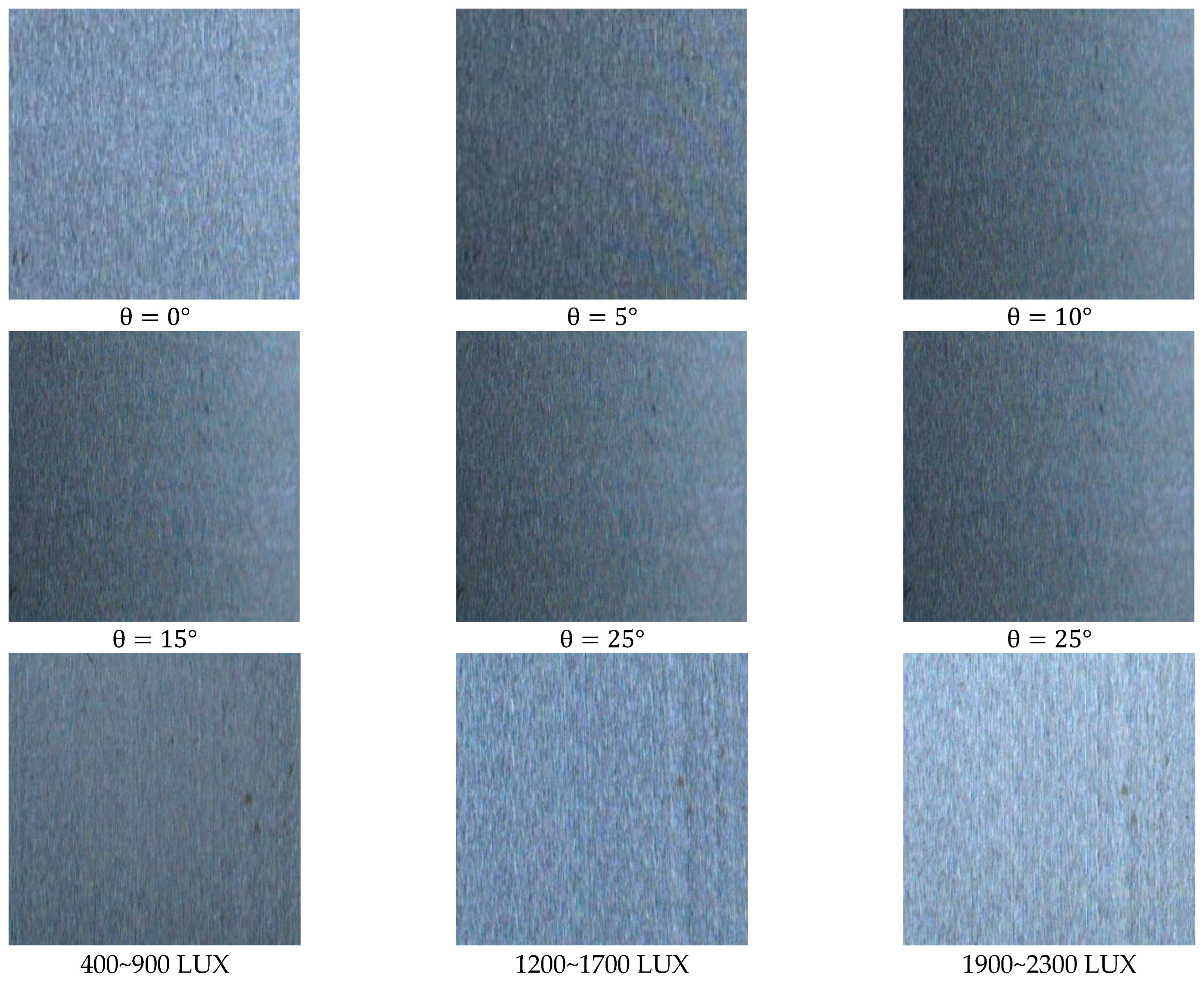

3.2. Image Acquisition System

3.3. Data Partitioning

4. Analysis and Discussion of Experimental Results

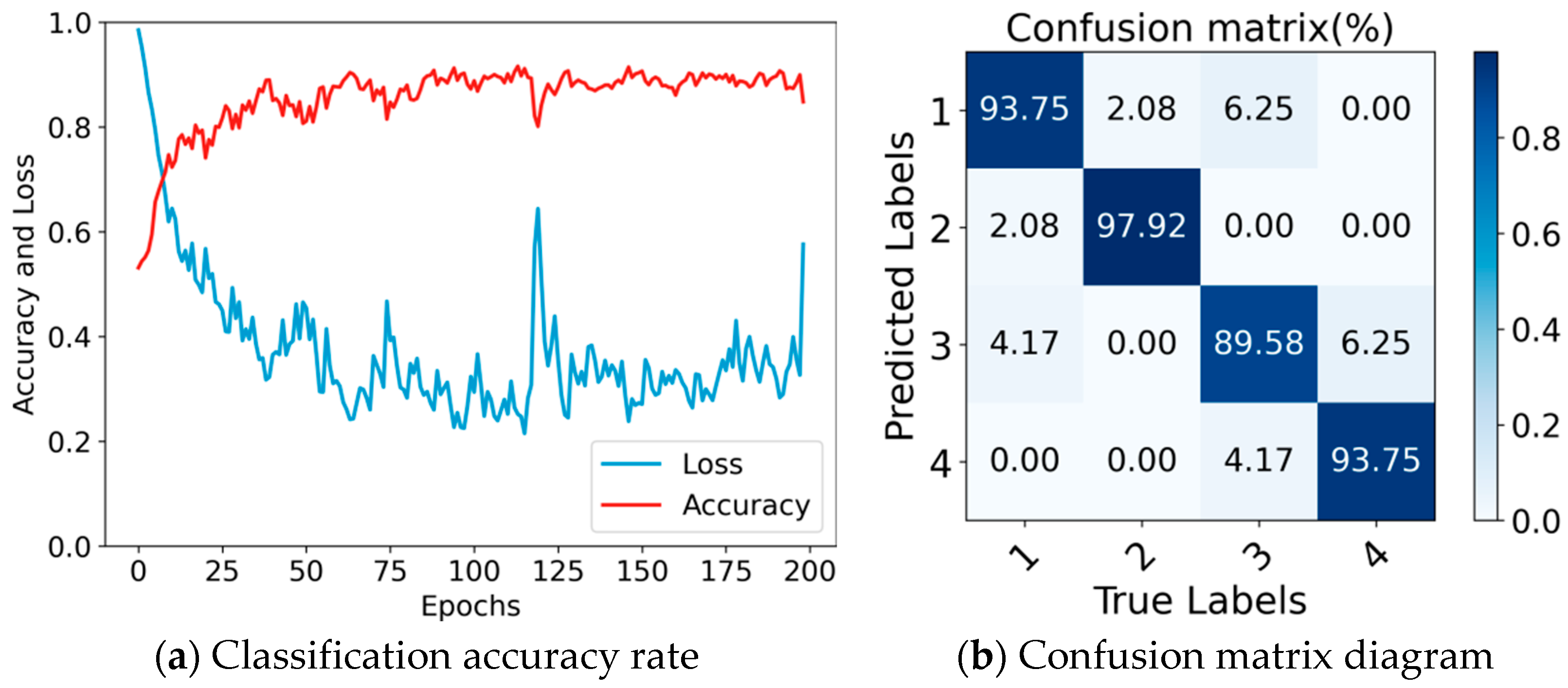

4.1. Ablation Experiment

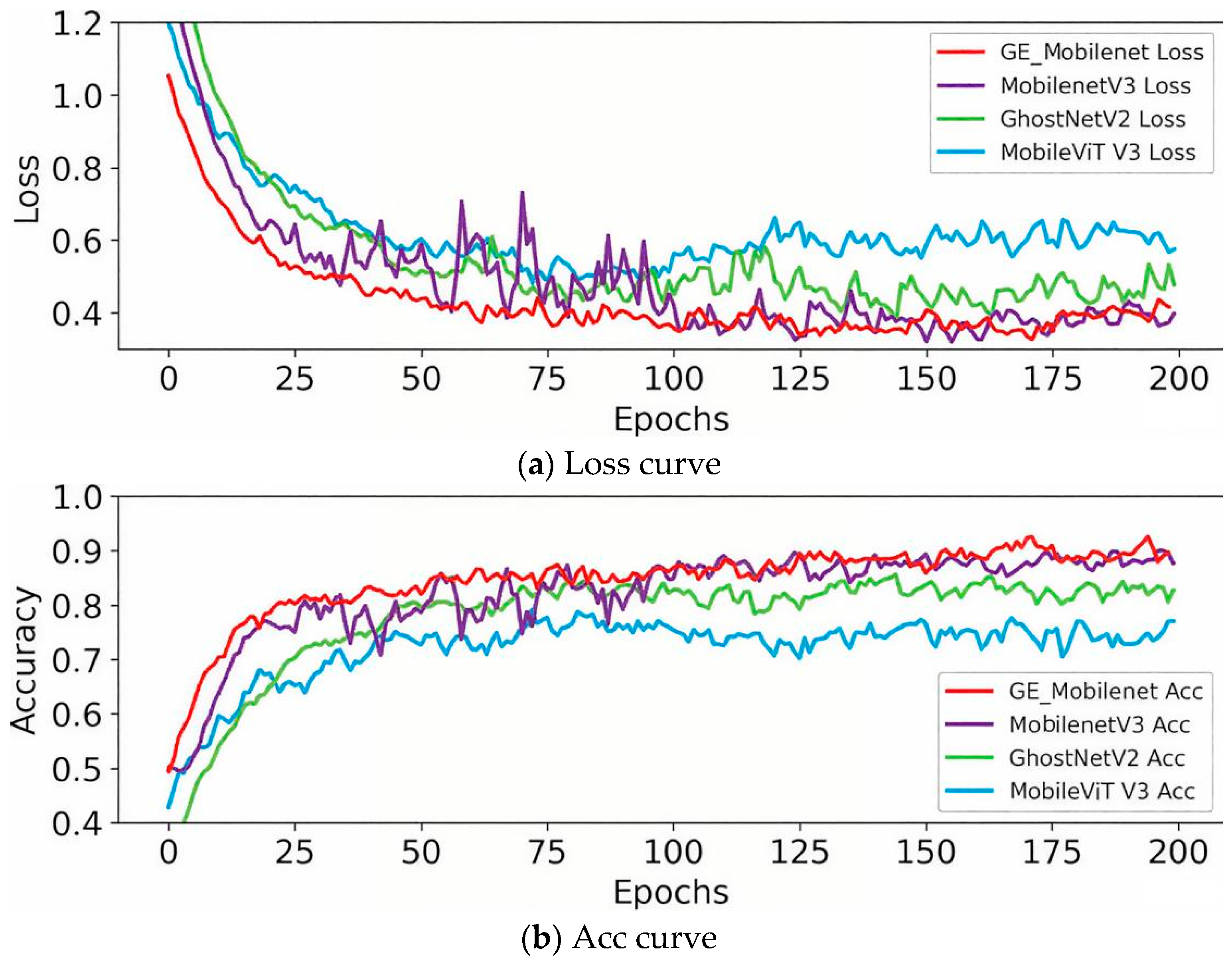

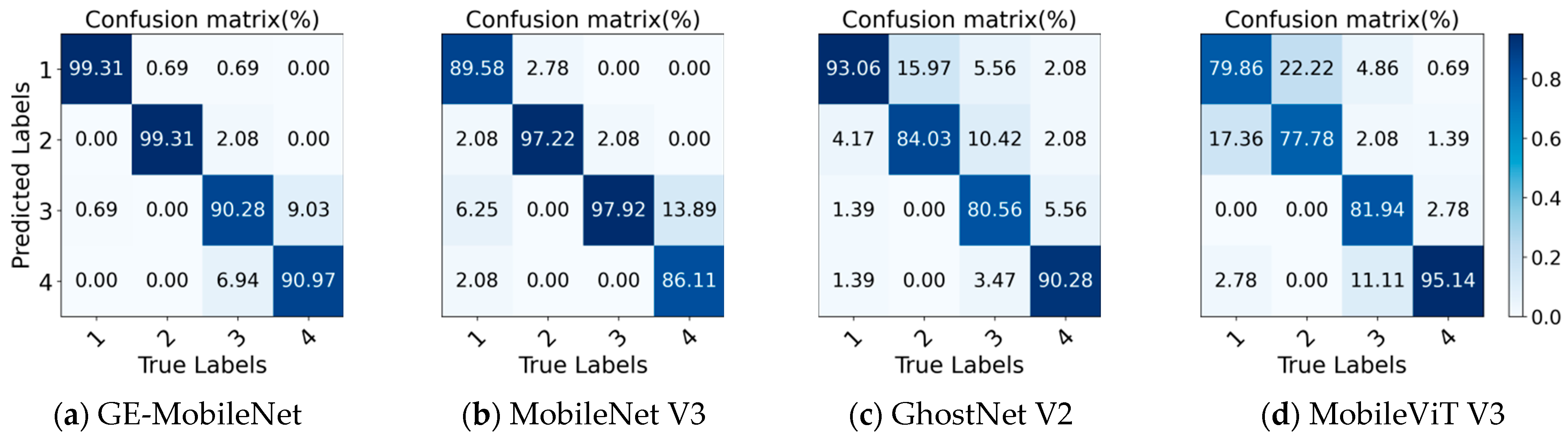

4.2. Model Comparison Analysis

4.3. Discussion

5. Conclusions

5.1. Disclosures

5.2. Materials Availability

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Input1 | Operator1 | Input2 | Operator2 | Expsize | #out | ECA | NL | s |
|---|---|---|---|---|---|---|---|---|
| 224² × 3 | Conv2d, 3 × 3 | - | - | - | 16 | - | HS | 2 |
| 112² × 16 | Bneck, 3 × 3 | 112² × 16 | Bneck2, 3 × 3 | 16 | 16 | - | RE | 1 |
| 112² × 16 | Bneck, 3 × 3 | 112² × 16 | Bneck2, 3 × 3 | 64 | 24 | - | RE | 2 |
| 56² × 24 | Bneck, 3 × 3 | 56² × 24 | Bneck2, 3 × 3 | 72 | 24 | - | RE | 1 |
| 28² × 24 | Bneck, 5 × 5 | 56² × 24 | Bneck2, 5 × 5 | 72 | 40 | √ | RE | 2 |
| 28² × 40 | Bneck, 5 × 5 | 28² × 40 | Bneck2, 5 × 5 | 120 | 40 | √ | RE | 1 |
| 28² × 40 | Bneck, 5 × 5 | 28² × 40 | Bneck2, 5 × 5 | 120 | 40 | √ | RE | 1 |
| 14² × 40 | Bneck, 3 × 3 | 14² × 80 | Bneck2, 3 × 3 | 240 | 80 | - | HS | 2 |
| 14² × 80 | Bneck, 3 × 3 | - | - | 200 | 80 | - | HS | 1 |
| 14² × 80 | Bneck, 3 × 3 | - | - | 184 | 80 | - | HS | 1 |
| 14² × 80 | Bneck, 3 × 3 | - | - | 184 | 80 | - | HS | 1 |

| Input | Operator | Expsize | #out | ECA | NL | s |
|---|---|---|---|---|---|---|
| 14² × 80 | Conv2d, 1 × 1 | - | 480 | - | HS | 2 |
| 7² × 80 | Pool, 7 × 7 | - | - | - | - | 1 |
| 1² × 480 | Linear | - | 1280 | - | HS | 1 |
| 1² × 1280 | Linear | - | 4 | - | - | 1 |
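The ECA column in the architecture table marks the bottleneck blocks that use efficient channel attention. As a rough illustration of what such a block computes, the pure-Python sketch below pools each channel to a single value, slides one shared 1D kernel across neighbouring channels, and turns the result into per-channel weights with a sigmoid. It assumes the adaptive kernel-size rule from the ECA-Net paper (γ = 2, b = 1); whether GE-MobileNet uses that rule or a fixed kernel size is not stated here. All function names and the kernel values are hypothetical (in a trained network the kernel is learned).

```python
import math

def eca_kernel_size(channels, gamma=2, b=1):
    """Kernel size rule from ECA-Net: truncate (log2(C) + b) / gamma, then force it odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1

def global_average_pool(feature_map):
    """Reduce a C x H x W nested list to one mean value per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]

def eca_weights(pooled, kernel):
    """Apply one shared 1D kernel along the channel axis (zero padded), then a sigmoid."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(pooled) + [0.0] * pad
    weights = []
    for i in range(len(pooled)):
        s = sum(w * padded[i + j] for j, w in enumerate(kernel))
        weights.append(1.0 / (1.0 + math.exp(-s)))
    return weights

def apply_eca(feature_map, kernel):
    """Rescale every channel of the feature map by its attention weight."""
    weights = eca_weights(global_average_pool(feature_map), kernel)
    return [[[v * w for v in row] for row in ch] for ch, w in zip(feature_map, weights)]

# Toy input: 4 channels of size 2 x 2 (values are illustrative only).
toy_map = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.5, 0.5], [0.5, 0.5]],
    [[2.0, 0.0], [0.0, 2.0]],
    [[4.0, 4.0], [4.0, 4.0]],
]
toy_kernel = [0.2, 0.5, 0.2]  # hypothetical weights, not taken from the paper

for c in (24, 40, 80):  # channel widths that appear in the #out column
    print(c, eca_kernel_size(c))
print(eca_weights(global_average_pool(toy_map), toy_kernel))
```

Unlike squeeze-and-excitation, this uses no fully connected layers: the only parameters are the few kernel weights, which is consistent with the lower parameter counts of the ECA variants in the ablation table.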

| Grinding Processing Parameter | Value |
|---|---|
| Infeed traverse speed (m/min) | 8 |
| Rotating speed of grinding wheel (r/min) | 1500 |
| Grinding wheel grain size (mesh number) | {120, 100, 80, 60, 46} |
| Grinding depth (mm) | {0.005, 0.010, 0.015, 0.020} |
| Grinding wheel material, hardness, binder type | White corundum, L, ceramic binder V |

| No. | First | Second | Third | Fourth | Fifth | Sixth | Average |
|---|---|---|---|---|---|---|---|
| 1 | 0.390 | 0.439 | 0.414 | 0.361 | 0.391 | 0.502 | 0.416 |
| 2 | 0.392 | 0.439 | 0.438 | 0.448 | 0.464 | 0.409 | 0.432 |
| 3 | 0.562 | 0.502 | 0.578 | 0.562 | 0.537 | 0.525 | 0.544 |
| 4 | 0.589 | 0.565 | 0.588 | 0.684 | 0.616 | 0.552 | 0.599 |
| 5 | 0.637 | 0.653 | 0.579 | 0.603 | 0.580 | 0.558 | 0.602 |
| 6 | 0.712 | 0.657 | 0.522 | 0.560 | 0.697 | 0.544 | 0.615 |
| 7 | 0.672 | 0.705 | 0.715 | 0.656 | 0.702 | 0.701 | 0.692 |
| 8 | 0.663 | 0.662 | 0.654 | 0.676 | 0.689 | 0.822 | 0.694 |
| 9 | 0.703 | 0.727 | 0.683 | 0.708 | 0.739 | 0.686 | 0.708 |
| 10 | 0.609 | 0.684 | 0.751 | 0.788 | 0.663 | 0.822 | 0.720 |
| 11 | 0.632 | 0.903 | 0.679 | 0.545 | 0.822 | 0.764 | 0.724 |
| 12 | 0.695 | 0.778 | 0.768 | 0.809 | 0.783 | 0.743 | 0.763 |
| 13 | 0.755 | 0.848 | 0.818 | 0.795 | 0.792 | 0.824 | 0.805 |
| 14 | 0.704 | 0.764 | 0.760 | 0.745 | 1.027 | 0.999 | 0.833 |
| 15 | 0.884 | 0.834 | 0.836 | 0.806 | 0.849 | 0.838 | 0.841 |
| 16 | 0.715 | 0.995 | 0.820 | 0.612 | 0.954 | 0.980 | 0.846 |
| 17 | 0.846 | 0.821 | 0.825 | 0.960 | 0.884 | 0.809 | 0.858 |
| 18 | 0.783 | 0.776 | 0.964 | 0.710 | 0.836 | 1.189 | 0.876 |
| 19 | 0.867 | 0.891 | 0.820 | 0.954 | 0.917 | 0.907 | 0.893 |
| 20 | 1.252 | 0.846 | 0.979 | 0.676 | 0.797 | 0.810 | 0.893 |
| 21 | 0.882 | 0.868 | 1.075 | 0.708 | 0.944 | 0.931 | 0.901 |
| 22 | 0.867 | 0.887 | 0.970 | 0.844 | 0.889 | 0.988 | 0.908 |
| 23 | 0.824 | 1.039 | 0.937 | 0.786 | 0.926 | 1.059 | 0.929 |
| 24 | 1.040 | 1.164 | 1.100 | 0.953 | 1.059 | 1.066 | 1.064 |
| 25 | 1.172 | 1.324 | 1.290 | 1.101 | 1.367 | 1.262 | 1.253 |
| 26 | 1.132 | 1.456 | 1.275 | 1.084 | 1.302 | 1.366 | 1.269 |
| 27 | 1.004 | 1.249 | 1.140 | 1.356 | 1.473 | 1.676 | 1.316 |
| 28 | 1.369 | 1.339 | 1.893 | 1.674 | 1.070 | 1.397 | 1.457 |
| 29 | 1.598 | 1.659 | 1.655 | 1.388 | 1.461 | 1.362 | 1.521 |
| 30 | 1.038 | 2.048 | 1.633 | 0.895 | 1.746 | 1.808 | 1.528 |
| 31 | 1.958 | 1.386 | 1.473 | 1.463 | 1.362 | 1.799 | 1.574 |
| 32 | 1.764 | 1.670 | 1.694 | 1.642 | 1.621 | 1.515 | 1.651 |
| 33 | 2.259 | 1.497 | 2.193 | 2.321 | 2.179 | 1.582 | 2.005 |
| 34 | 1.778 | 2.142 | 1.964 | 2.087 | 2.120 | 2.077 | 2.028 |
| 35 | 1.986 | 2.065 | 2.072 | 2.040 | 2.051 | 2.111 | 2.054 |
| 36 | 2.103 | 1.932 | 2.068 | 2.167 | 2.106 | 2.118 | 2.082 |
| 37 | 1.850 | 2.173 | 2.318 | 2.022 | 2.000 | 2.366 | 2.122 |
| 38 | 2.205 | 2.012 | 2.231 | 2.059 | 2.200 | 2.108 | 2.136 |
| 39 | 2.101 | 2.158 | 2.140 | 2.186 | 2.167 | 2.212 | 2.161 |
| 40 | 2.059 | 2.197 | 2.233 | 2.259 | 2.187 | 2.192 | 2.188 |
| 41 | 2.877 | 1.257 | 2.674 | 1.217 | 3.130 | 2.099 | 2.209 |
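The Average column is the arithmetic mean of the six readings in each row. The sketch below recomputes it for three rows copied from the table and also reports the range (maximum minus minimum), which is added here only to show how much the individual readings can scatter around the mean. The helper name `summarize` is hypothetical.

```python
from statistics import mean

# Six readings per workpiece, copied from rows 1, 39 and 41 of the table.
readings = {
    1: [0.390, 0.439, 0.414, 0.361, 0.391, 0.502],
    39: [2.101, 2.158, 2.140, 2.186, 2.167, 2.212],
    41: [2.877, 1.257, 2.674, 1.217, 3.130, 2.099],
}
reported_average = {1: 0.416, 39: 2.161, 41: 2.209}

def summarize(values):
    """Return (mean, range) of one row of readings."""
    return mean(values), max(values) - min(values)

for sample, values in readings.items():
    avg, spread = summarize(values)
    # The recomputed mean should agree with the table to within rounding.
    agrees = abs(avg - reported_average[sample]) < 0.001
    print(f"sample {sample}: mean={avg:.3f} range={spread:.3f} matches_table={agrees}")
```

Rows 39 and 41 have similar averages but very different ranges, so the average alone does not describe how uniform a workpiece surface is.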

| System Parameter | Value | Number of Conditions |
|---|---|---|
| Angle (unit: °) | 0, 5, 10, 15, 20, 25 | 6 |
| Light intensity (unit: lux) | 400–900, 1200–1700, 1900–2300 | 3 |
| Working distance (unit: cm) | 25, 35 | 2 |

| Roughness (μm) | G1 (0.4–0.8] | G2 (0.8–1.2] | G3 [1.2–1.6) | G4 [1.6–2.3) | All |
|---|---|---|---|---|---|
| Number of training samples | 10 | 10 | 5 | 8 | 33 |
| Training set | 720 | 720 | 360 | 576 | 2376 |
| Number of test samples | 2 | 2 | 2 | 2 | 8 |
| Test set | 144 | 144 | 144 | 144 | 576 |
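The partition can be cross-checked against the roughness measurements. The sketch below assigns the 41 sample averages from the roughness table to grades G1 to G4 and confirms that each grade holds exactly the training plus test samples listed above. It then reproduces the image counts, assuming 72 images per sample (720 / 10 in the table). That is twice the 6 × 3 × 2 = 36 angle, light and distance combinations of the acquisition system; where the factor of two comes from is not stated here, so it is treated as a given constant. All names in the code are hypothetical.

```python
from bisect import bisect_left
from collections import Counter

# Average roughness (μm) of samples 1..41, copied from the roughness table.
averages = [
    0.416, 0.432, 0.544, 0.599, 0.602, 0.615, 0.692, 0.694, 0.708, 0.720,
    0.724, 0.763, 0.805, 0.833, 0.841, 0.846, 0.858, 0.876, 0.893, 0.893,
    0.901, 0.908, 0.929, 1.064, 1.253, 1.269, 1.316, 1.457, 1.521, 1.528,
    1.574, 1.651, 2.005, 2.028, 2.054, 2.082, 2.122, 2.136, 2.161, 2.188,
    2.209,
]

GRADES = ["G1", "G2", "G3", "G4"]
UPPER_EDGES = [0.8, 1.2, 1.6, 2.3]  # upper bound of each grade in μm

def grade_of(value):
    """Map an average roughness to its grade.

    No sample lies exactly on a boundary, so the open/closed convention
    of the intervals does not affect the result for this data set.
    Values above 2.3 μm are outside the table and would raise IndexError.
    """
    return GRADES[bisect_left(UPPER_EDGES, value)]

train_samples = {"G1": 10, "G2": 10, "G3": 5, "G4": 8}
test_samples = {g: 2 for g in GRADES}
IMAGES_PER_SAMPLE = 72  # assumption taken from 720 images / 10 samples

per_grade = Counter(grade_of(v) for v in averages)
train_images = {g: n * IMAGES_PER_SAMPLE for g, n in train_samples.items()}
test_images = {g: n * IMAGES_PER_SAMPLE for g, n in test_samples.items()}

for g in GRADES:
    print(g, per_grade[g], train_samples[g] + test_samples[g],
          train_images[g], test_images[g])
print(sum(train_images.values()), sum(test_images.values()))
```

Each grade contributes the same number of test samples, so the test set is balanced across grades even though the training set is not.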

| Model Name | Feature Extractor | Remove the Deep Network Structure | Attention Mechanism | Accuracy (%) | Params |
|---|---|---|---|---|---|
| MobileNetV3 | - | - | SE | 92.71 | 3.90 M |
| Model_a | √ | - | SE | 93.58 | 8.78 M |
| Model_b | √ | - | ECA | 94.62 | 7.51 M |
| Model_c | √ | √ | SE | 92.71 | 5.74 M |
| GE-MobileNet | √ | √ | ECA | 94.97 | 5.72 M |

| Metric | GE-MobileNet (Ours) | MobileNet V3 | GhostNet V2 | MobileViT V3 |
|---|---|---|---|---|
| Accuracy (Mean/%) | 94.97 | 92.71 | 86.98 | 83.68 |
| Accuracy (Variance) | 0.032 | 0.062 | 0.116 | 0.152 |
| Precision (Mean/%) | 94.94 | 93.37 | 87.54 | 84.26 |
| Precision (Variance) | 0.026 | 0.048 | 0.084 | 0.130 |
| F1 (Mean/%) | 94.95 | 93.04 | 87.26 | 83.97 |
| F1 (Variance) | 0.029 | 0.044 | 0.096 | 0.122 |
| Params | 5.72 M | 3.90 M | 4.88 M | 7.21 M |
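To read the comparison as a trade-off, the sketch below computes how many accuracy points GE-MobileNet gains over each baseline and how many parameters (in millions) that gain costs. The numbers are copied from the table; the dictionary layout and the function name `gain_over` are hypothetical.

```python
# Mean accuracy (%) and parameter count (millions), copied from the table.
results = {
    "GE-MobileNet": {"accuracy": 94.97, "params_m": 5.72},
    "MobileNet V3": {"accuracy": 92.71, "params_m": 3.90},
    "GhostNet V2": {"accuracy": 86.98, "params_m": 4.88},
    "MobileViT V3": {"accuracy": 83.68, "params_m": 7.21},
}

def gain_over(baseline, ours="GE-MobileNet"):
    """Return (accuracy gain in points, extra parameters in millions) of ours vs. baseline."""
    acc_gain = results[ours]["accuracy"] - results[baseline]["accuracy"]
    extra_params = results[ours]["params_m"] - results[baseline]["params_m"]
    return round(acc_gain, 2), round(extra_params, 2)

for name in results:
    if name != "GE-MobileNet":
        acc_gain, extra_params = gain_over(name)
        print(f"vs {name}: {acc_gain:+.2f} points, {extra_params:+.2f} M params")
```

A negative parameter difference means GE-MobileNet is the smaller model, which is the case only against MobileViT V3.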

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, F.; Yi, H.; Wang, H. Visual Measurement of Grinding Surface Roughness Based on GE-MobileNet. Appl. Sci. 2025, 15, 11489. https://doi.org/10.3390/app152111489
