1. Introduction

The study of cardiovascular diseases is crucial, as they are among the leading causes of mortality worldwide. Classifying heart diseases through the analysis of short time series, such as heart rate variability (HRV) recordings, allows changes in cardiac activity to be detected over short time intervals, facilitating early diagnosis. Combining this approach with artificial intelligence tools improves both the accuracy and the speed of identifying anomalous patterns, which can transform the monitoring and early detection of heart diseases, optimize treatment, and save lives.

HRV analysis based on the intervals between successive heartbeats (known as RR intervals) is a non-invasive technique that has proven useful for both the diagnosis and prognosis of heart diseases and neuropathies. Historically, statistical analysis of the RR signal was one of the first methods applied, and it remains highly effective. Spectral analysis of the RR sequence is another commonly used technique [1]. Both methods have attracted considerable interest over the past 25 years because of their non-invasive nature. More recently, advances in machine learning have generated great interest in their application to the detection and classification of cardiovascular diseases [2,3,4]. These non-invasive methods provide a safer, simpler, and more cost-effective alternative to more complex invasive techniques. Their main advantages include accessibility, safety, and the possibility of continuous monitoring, which allows detailed tracking of changes in HRV and, consequently, of the patient’s health status.

Machine learning techniques, such as deep learning, support vector machines (SVMs), random forests, and gradient boosting methods, have been successfully applied to classify patients with various heart conditions into categories such as healthy, sick, and different subtypes of heart disease [5,6]. These approaches have proven highly effective in differentiating healthy individuals from those with heart pathologies [7,8,9]. The incorporation of machine learning and deep learning algorithms offers great potential for improving early detection, risk assessment, and treatment planning [10,11,12]. Models such as convolutional neural networks (CNNs) [13] and multilayer perceptrons (MLPs) [14] have shown promising results in diagnosing cardiovascular diseases from clinical data [15,16].

HRV is a key indicator of cardiovascular health, as it reflects fluctuations in the time intervals between successive heartbeats. Analyzing HRV through short time series allows subtle changes in cardiac activity to be detected rapidly, which is crucial for identifying irregularities that may be early signs of heart disease. Time series are essential for studying how data vary over time, enabling the identification of patterns and trends. While traditional time-series analysis focuses on long periods [17,18], in certain contexts, such as when data are limited or events occur over short intervals, it is necessary to work with short time series [19,20,21]. In cardiovascular series, short-term variations occurring within seconds or minutes are often related to specific events such as arrhythmias, whereas long-term variations may be linked to gradual changes in cardiovascular health [22,23].

Various artificial intelligence (AI) methods have proven effective in time-series classification. Deep learning has been widely used for prediction and anomaly detection [24,25,26,27]. Recurrent neural networks (RNNs), long short-term memory (LSTM) networks [28], and CNNs [29] are particularly effective for time-series analysis, as they can capture complex patterns and handle sequences of varying lengths [30,31,32,33]. In a similar context, a CNN combined with a non-iterative extreme learning machine (ELM) has been used to analyze the severity of heart disease [34]. The ELM classifier is valued mainly for its fast training and for a generalization ability comparable to that of conventional approaches [35,36,37].

In recent years, hybrid approaches combining convolutional layers with transformer mechanisms have emerged for the analysis of short physiological signals. For example, Hassanuzzaman et al. [38] proposed a residual 1D-CNN with an attention transformer to classify short 5-second cardiac sound segments, achieving high accuracy even with brief recordings. Such architectures combine the local feature extraction of convolutional layers with attention mechanisms that capture global dependencies across the signal. In the ECG domain, convolution-transformer models have been used for end-to-end stress detection, avoiding handcrafted feature engineering [39]. More recently, PhysioWave, a multiscale wavelet-transformer framework, has shown promising performance in representing non-stationary physiological signals across modalities [40].

Short monofractal time series analysis has revealed characteristics that are not evident in longer series [41,42]. Detrended fluctuation analysis (DFA) has been effective in studying short monofractal series [43], and its generalization to multifractal analysis has found applications in numerous fields [44]. This methodology has been successfully applied, for example, to the study of currency exchange rates [45,46].

As mentioned earlier, various AI-based methods can address the problem of classification using short time series. Furthermore, AI-based methods have been employed to classify short and very short monofractal series, achieving much better performance than traditional methods for monofractal and multifractal analysis [33]. In particular, the authors of [33] used a CNN-SVM approach and compared its performance with that of DFA in classifying short monofractal time series, showing that CNN-SVM outperforms DFA for monofractal series of length

.

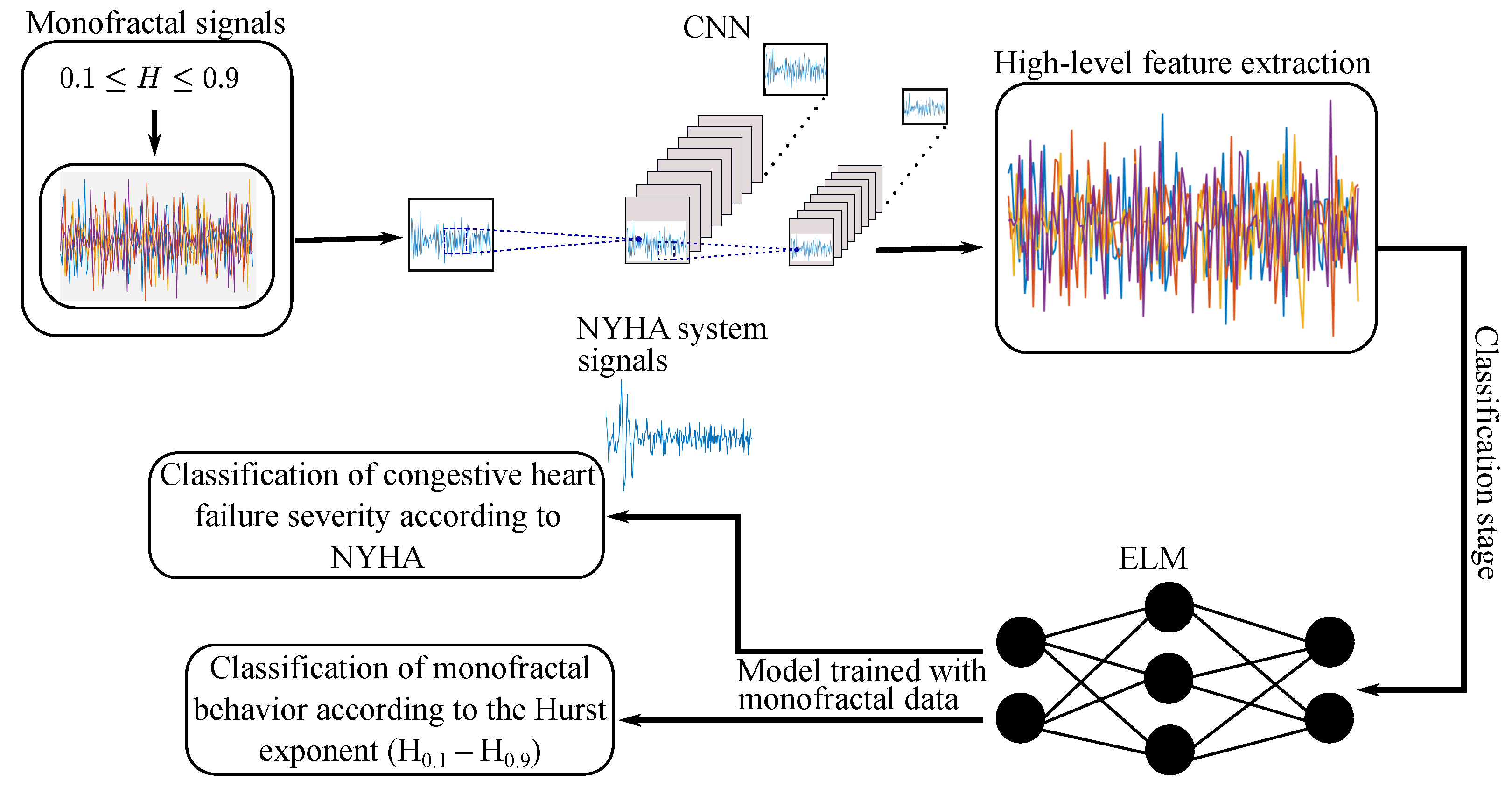

This work proposes the use of neural network models to establish a correspondence between degrees of heart disease (according to the NYHA classification) and monofractal models derived from short HRV (RR interval) time series. Specifically, a hybrid CNN-ELM approach is proposed, in which a CNN extracts monofractal features and an ELM classifier distinguishes disease severity. The rationale for this scheme is that CNNs can capture hierarchical and multiscale patterns in time series, whereas the ELM trains quickly and, because its output weights are computed analytically rather than by iterative optimization, is less prone to local minima. Furthermore, the performance of these models was evaluated as a function of the length of the RR interval series, highlighting, as an additional advantage, training times shorter than those of previous methods.

4. Results

In this section, we describe the configuration and performance of the CNN-ELM approach trained with short synthetic monofractal signals. First, the model classified the nine categories of the H-index, defined by the Hurst exponent. Then, the robustness of the CNN-ELM model trained with synthetic data was evaluated in the diagnosis of cardiovascular diseases. Specifically, the selected models grouped the NYHA-labeled signals according to the degree of heart disease.
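The synthetic monofractal signals can be illustrated with fractional Gaussian noise (fGn), the signal type referred to later in this section. The sketch below is a hypothetical generator, not necessarily the procedure used to build the training set: it assumes that the nine H-index categories are H = 0.1, 0.2, ..., 0.9 and draws each signal exactly through a Cholesky factorization of the fGn covariance matrix. The names `fgn_autocovariance`, `synthetic_fgn`, and `make_dataset` are illustrative.

```python
import numpy as np

# Assumed H categories: 0.1, 0.2, ..., 0.9 (nine classes)
HURST_VALUES = np.arange(1, 10) / 10.0


def fgn_autocovariance(n: int, hurst: float) -> np.ndarray:
    """Autocovariance gamma(k), k = 0..n-1, of unit-variance fractional Gaussian noise."""
    k = np.arange(n, dtype=float)
    return 0.5 * (np.abs(k + 1) ** (2 * hurst)
                  - 2 * np.abs(k) ** (2 * hurst)
                  + np.abs(k - 1) ** (2 * hurst))


def synthetic_fgn(n: int, hurst: float, rng: np.random.Generator) -> np.ndarray:
    """Draw one exact fGn sample path of length n via Cholesky factorization."""
    gamma = fgn_autocovariance(n, hurst)
    # Toeplitz covariance matrix: cov[i, j] = gamma(|i - j|)
    lags = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
    chol = np.linalg.cholesky(gamma[lags])
    return chol @ rng.standard_normal(n)


def make_dataset(n: int, per_class: int, seed: int = 0):
    """Hypothetical labeled set: per_class signals of length n for each H category."""
    rng = np.random.default_rng(seed)
    X = np.stack([synthetic_fgn(n, h, rng) for h in HURST_VALUES for _ in range(per_class)])
    y = np.repeat(np.arange(len(HURST_VALUES)), per_class)
    return X, y
```

The Cholesky approach costs O(n^3) per signal, which is acceptable for lengths of 128 to 512; for longer series, circulant-embedding methods such as Davies–Harte are the usual faster alternative.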

4.1. Experimental Setup and Hyperparameters

To evaluate the performance of the CNN-ELM model on synthetic and real data, processed and organized by length, we employed the classical fivefold cross-validation scheme. This scheme partitioned the monofractal samples into five subsets of uniform size. In each iteration, the CNN-ELM was trained on four of these folds, and the remaining fold was reserved to assess its generalization ability. Accordingly, each metric reported in this paper is the average over the five runs. This training and validation strategy, commonly adopted with machine learning models, provides more stable performance estimates and reduces the risk of biased metrics.
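A minimal sketch of the fivefold scheme is shown below. It assumes plain shuffled folds (stratification by H class is not stated in the text) and uses a hypothetical `fit_predict` callback as a stand-in for CNN-ELM training and prediction.

```python
import numpy as np


def kfold_indices(n_samples: int, n_folds: int = 5, seed: int = 0):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), n_folds)  # near-uniform fold sizes
    for i in range(n_folds):
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != i])
        yield train_idx, folds[i]


def cross_validate(fit_predict, X, y, n_folds: int = 5, seed: int = 0):
    """Train on k-1 folds, test on the held-out fold, and average accuracy over folds."""
    scores = []
    for train_idx, test_idx in kfold_indices(len(y), n_folds, seed):
        y_pred = fit_predict(X[train_idx], y[train_idx], X[test_idx])
        scores.append(float(np.mean(y_pred == y[test_idx])))
    return float(np.mean(scores)), scores
```

Every sample is used for testing exactly once, which is what makes the averaged metric less sensitive to a single lucky or unlucky split.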

4.1.1. CNN for Feature Extraction

Given the stable behavior of the deep learning system discussed in [34], our study adopted the same methodology for feature extraction from the synthetic data. The input was processed by six sequentially connected learning blocks. Following common practice for this type of architecture, each block included a convolutional layer and a pooling layer. The output of each convolutional layer was normalized and passed through a ReLU activation function. The sliding-window lengths and convolutional kernel sizes in each block were set to the values reported in [34]. For instance, in learning block six, the convolutional filter size was

with a stride of 1, while for the max pooling layer, the filter size was

with a stride of 2. The Adam algorithm was adopted as the optimizer, configured with a learning rate of 0.001. In addition, an

regularization rate of 0.001, a batch size of 64, and four training epochs were used.
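The forward pass through the stacked learning blocks can be sketched in NumPy as follows. This is an illustration of the data flow only, under several assumptions not taken from the text: "same" zero padding, kernel size 3 in every block, arbitrary channel widths, max pooling of size 2 with stride 2, and per-signal standardization standing in for the unspecified normalization. The kernels are random rather than trained with Adam, so the output is not a meaningful feature map, only one with the right shape.

```python
import numpy as np


def conv1d_same(x: np.ndarray, kernels: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Stride-1 1D convolution with zero 'same' padding.
    x: (length, in_ch); kernels: (k, in_ch, out_ch) with odd k; returns (length, out_ch)."""
    k = kernels.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))
    out = np.empty((x.shape[0], kernels.shape[2]))
    for t in range(x.shape[0]):
        # Contract the (k, in_ch) window against the whole kernel bank
        out[t] = np.tensordot(xp[t:t + k], kernels, axes=([0, 1], [0, 1])) + bias
    return out


def normalize(z: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Per-channel standardization (stand-in for the unspecified normalization)."""
    return (z - z.mean(axis=0)) / np.sqrt(z.var(axis=0) + eps)


def max_pool1d(z: np.ndarray, size: int = 2, stride: int = 2) -> np.ndarray:
    out_len = (z.shape[0] - size) // stride + 1
    return np.stack([z[t * stride:t * stride + size].max(axis=0) for t in range(out_len)])


def conv_block(x, kernels, bias):
    """Convolution (stride 1) -> normalization -> ReLU -> max pooling (stride 2)."""
    z = conv1d_same(x, kernels, bias)
    z = np.maximum(normalize(z), 0.0)
    return max_pool1d(z, size=2, stride=2)


def extract_features(signal: np.ndarray, params) -> np.ndarray:
    """Pass one 1D signal through the stacked blocks and flatten the last feature map."""
    x = signal[:, None]  # (length, 1 channel)
    for kernels, bias in params:
        x = conv_block(x, kernels, bias)
    return x.ravel()


# Six blocks with illustrative channel widths and random (untrained) size-3 kernels
rng = np.random.default_rng(0)
channels = [1, 8, 8, 16, 16, 32, 32]
params = [(0.3 * rng.standard_normal((3, c_in, c_out)), np.zeros(c_out))
          for c_in, c_out in zip(channels[:-1], channels[1:])]
features = extract_features(rng.standard_normal(128), params)  # 2 positions x 32 channels
```

Each block halves the temporal length, so a length-128 input leaves 2 positions after six blocks, while a length-512 input leaves 8; deeper blocks therefore summarize progressively longer stretches of the signal.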

4.1.2. ELM for Classification

For the H-index classification task, we employed an ELM model. This network was trained and validated using features extracted from convolutional layers 5 (Conv 5) and 6 (Conv 6), following the cross-validation scheme outlined above. ELM training first assigns random weights and biases to the hidden layer, whose outputs are computed with a sigmoid activation function. The output weights are then determined analytically through the Moore–Penrose generalized inverse, which avoids iterative optimization and makes training fast.
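In the standard ELM formulation, which this summary assumes, X is the N × d matrix of CNN features, W (d × L) and b (L × 1) are the random hidden-layer weights and biases, T is the N × m one-hot target matrix, and H^† is the Moore–Penrose inverse of the hidden-layer output matrix H. The regularized variant, with C as the regularization parameter, is the form commonly used when C is tuned as a hyperparameter:

```latex
\begin{aligned}
\mathbf{H} &= g\!\left(\mathbf{X}\mathbf{W} + \mathbf{1}\mathbf{b}^{\top}\right),
  \qquad g(z) = \frac{1}{1 + e^{-z}},\\
\boldsymbol{\beta} &= \mathbf{H}^{\dagger}\mathbf{T}
  \quad\text{or, regularized,}\quad
  \boldsymbol{\beta} = \left(\frac{\mathbf{I}}{C} + \mathbf{H}^{\top}\mathbf{H}\right)^{-1}\mathbf{H}^{\top}\mathbf{T},\\
\hat{y}(\mathbf{x}) &= \arg\max_{j}\left[g\!\left(\mathbf{x}^{\top}\mathbf{W} + \mathbf{b}^{\top}\right)\boldsymbol{\beta}\right]_{j}.
\end{aligned}
```

Only β is learned, and it is obtained in a single linear-algebra step, which is the source of the ELM's short training time.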

Optimization of the ELM’s performance involved a sensitivity analysis of its hyperparameters: the regularization parameter C and the number of hidden neurons L. This analysis was performed with the feature map extracted from convolutional layer 6 fixed, for monofractal signals of length 128. We conducted a grid search for C from

(where

k ranged from

to 10), and for

L from 100 to 500 in increments of 100. As shown in Figure 2, the model performed best for C values ranging from

to

and

L values from 500 to 2000 neurons. To ensure generalization, the optimal values of

and

were chosen and applied to both monofractal and cardiovascular signals.
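The regularized ELM and the grid search over C and L can be sketched as below. The class `ELM` and the function `grid_search` are hypothetical; the grid C = 2^k with k from -10 to 10 is an assumption, since the base and lower bound of the grid are not recoverable from the text, and drawing hidden weights uniformly from [-1, 1] is a common but arbitrary choice.

```python
import numpy as np


class ELM:
    """Minimal regularized extreme learning machine (hypothetical sketch)."""

    def __init__(self, n_hidden: int, C: float, seed: int = 0):
        self.n_hidden = n_hidden  # L: number of hidden neurons
        self.C = C                # regularization parameter
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X: np.ndarray) -> np.ndarray:
        # Sigmoid hidden-layer output; clipping avoids overflow in exp
        z = np.clip(X @ self.W + self.b, -500.0, 500.0)
        return 1.0 / (1.0 + np.exp(-z))

    def fit(self, X: np.ndarray, y: np.ndarray) -> "ELM":
        self.classes_ = np.unique(y)
        T = (y[:, None] == self.classes_[None, :]).astype(float)  # one-hot targets
        # Random input weights and biases: assigned once, never trained
        self.W = self.rng.uniform(-1.0, 1.0, size=(X.shape[1], self.n_hidden))
        self.b = self.rng.uniform(-1.0, 1.0, size=self.n_hidden)
        H = self._hidden(X)
        # Output weights beta = (I/C + H^T H)^{-1} H^T T, computed with a linear solve
        A = np.eye(self.n_hidden) / self.C + H.T @ H
        self.beta = np.linalg.solve(A, H.T @ T)
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        return self.classes_[np.argmax(self._hidden(X) @ self.beta, axis=1)]


def grid_search(X_tr, y_tr, X_val, y_val,
                ks=range(-10, 11), hidden_sizes=range(100, 501, 100)):
    """Return (best accuracy, best C, best L) over C = 2**k and the given L values."""
    best = (-1.0, None, None)
    for k in ks:
        C = 2.0 ** k
        for L in hidden_sizes:
            y_hat = ELM(L, C).fit(X_tr, y_tr).predict(X_val)
            acc = float(np.mean(y_hat == y_val))
            if acc > best[0]:
                best = (acc, C, L)
    return best
```

Because each (C, L) pair costs only one L × L linear solve, even the full grid is cheap, which is the practical advantage the text attributes to the ELM.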

4.2. Classification with Monofractal Signals

This section focuses on the performance of the CNN-ELM model in classifying synthetic monofractal signals with the configuration established above. The central purpose was to evaluate its accuracy on synthetic signals of very short duration and, based on these results, to select the most suitable trained model. This stage was essential for supporting the applicability of the proposed system to real cardiovascular signals and its capacity to generalize to them.

The overall classification results of the CNN-ELM model are summarized in Table 3 according to signal length and convolutional architecture depth. As expected, the accuracy of the model improved progressively as both the signal length and the depth of the CNN architecture increased. The feature map of the sixth convolutional layer (Conv 6) yielded the best accuracy rates: 92.48% for the longest signals and 68.95% for the shortest. Overall, CNN-ELM demonstrated better generalization capability for data of length 512.

In addition to overall accuracy, four classical machine learning metrics were employed to assess model performance in each H-category: accuracy (Acc), sensitivity (Sen), specificity (Spe), and positive predictive value (PPV), as described in [34]. These complementary metrics provide more specific indicators of the classification system’s behavior. Table 4 summarizes the classification indices obtained by CNN-ELM for Conv 6. In all three scenarios, sensitivity was consistently lower than specificity, and both values increased with the length of the input monofractal data. In addition, for lengths 128, 256, and 512, the best-classified classes were

,

,

, and

.
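The per-class metrics can be computed from a confusion matrix by treating each H category as the positive class and all others as negative (one-vs-rest). This is an assumption about how the per-class values are derived, and the function name is illustrative. Undefined ratios are returned as NaN, corresponding to cases where a metric cannot be calculated because a class has no true or predicted members.

```python
import numpy as np


def one_vs_rest_metrics(cm) -> dict:
    """Per-class Acc, Sen, Spe, and PPV from a confusion matrix
    (rows = true class, columns = predicted class). 0/0 ratios become NaN."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp  # true class i, predicted as another class
    fp = cm.sum(axis=0) - tp  # predicted as class i, true class differs
    tn = total - tp - fn - fp

    def ratio(num, den):
        with np.errstate(divide="ignore", invalid="ignore"):
            return np.where(den > 0, num / den, np.nan)

    return {
        "Acc": (tp + tn) / total,
        "Sen": ratio(tp, tp + fn),
        "Spe": ratio(tn, tn + fp),
        "PPV": ratio(tp, tp + fp),
    }
```

With many classes, TN dominates each one-vs-rest count, which is one reason per-class specificity tends to stay high even when sensitivity is modest.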

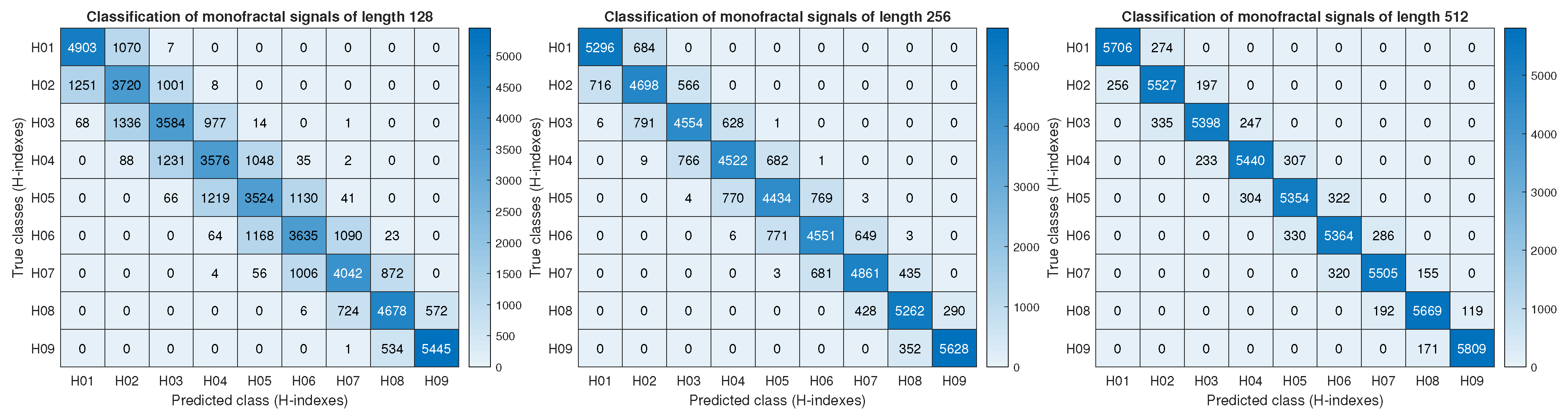

To reinforce the previous analysis, the correct and incorrect predictions of the Hurst index were summarized in confusion matrices. Figure 3 illustrates the performance of the CNN-ELM model, restricted to the Conv 6 layer, using data of lengths 128, 256, and 512. All matrices were generated by summing the results obtained over the five cross-validation folds. For instance, in the

category, an average of 55 signals were misclassified as

, while 1141 signals were correctly classified. The remaining entries of the matrix can be read in the same way, allowing a clear distinction between true positives, false positives, true negatives, and false negatives. Overall, the model struggles more with signals of length 128 than with those of length 512, as reflected in the greater dispersion of errors around the main diagonal.

4.3. Classification of the Degree of Heart Disease According to NYHA

Monofractal research in the health domain is an emerging area with potential applications in the diagnosis of heart disease. These approaches characterize heart rate variability using synthetic signals, offering a valuable tool for early detection. Numerous methods have been developed to detect cardiac anomalies, but they typically focus on long-duration time series: most studies analyze signals lasting between 6 and 24 h, with 2500 samples as the minimum length considered. This preference largely stems from the limitations of conventional models in accurately classifying short-duration cardiac anomalies. Moreover, to the best of our knowledge, no previous study has combined monofractal synthetic time series with an evaluation of the severity of heart disease.

Given the limited number of studies in this field, our research explored the relationship between short-duration monofractal signals and cardiovascular rhythm using machine learning strategies. Specifically, the CNN-ELM model, trained on short synthetic signals, was used to map Hurst index values to classes representing healthy individuals and to categories defined by the NYHA functional classification system. For the analysis, two scenarios were considered: the first differentiates between healthy and diseased subjects in general, while the second distinguishes between healthy subjects and different degrees of cardiovascular severity according to the criteria established by the NYHA. In both cases, the cumulative confusion matrix, along with the classic metrics mentioned above, was adopted as an evaluation tool to quantify the performance of the CNN-ELM model.
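The mapping from real RR segments to H categories can be summarized as a contingency table of clinical group versus predicted H category. The sketch assumes that the trained CNN-ELM returns one H-category index per segment and that the categories are H = 0.1, ..., 0.9; the group labels and helper names are illustrative. For the first scenario, the four NYHA rows can simply be summed into a single "sick" row.

```python
import numpy as np

HURST_VALUES = np.arange(1, 10) / 10.0  # assumed H categories 0.1, ..., 0.9
GROUPS = ["Healthy", "NYHA I", "NYHA II", "NYHA III", "NYHA IV"]


def group_by_hurst(groups, predicted_h_class) -> np.ndarray:
    """Count the segments of each clinical group assigned to each H category."""
    table = np.zeros((len(GROUPS), len(HURST_VALUES)), dtype=int)
    for g, k in zip(groups, predicted_h_class):
        table[GROUPS.index(g), k] += 1
    return table


def fraction_antipersistent(table: np.ndarray) -> np.ndarray:
    """Share of each group's segments assigned to H < 0.5 (NaN for empty groups)."""
    anti = HURST_VALUES < 0.5
    totals = table.sum(axis=1)
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(totals > 0, table[:, anti].sum(axis=1) / totals, np.nan)
```

A shift of this fraction toward 1 with increasing NYHA class would correspond to the anti-persistent behavior associated with more severe disease.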

Table 5 shows the performance of the CNN-ELM model in distinguishing healthy from sick individuals according to the Hurst index for signals of lengths 128, 256, and 512. The results reveal a clear difference between healthy and sick subjects. For low

H values (

and

), Acc ranged between 50% and 54%, with very low Sen and high Spe, indicating that the model correctly identifies healthy individuals but fails to detect sick individuals. In the intermediate categories (

–

), Acc reached values of 68% to 91%, while Sen and PPV were zero due to the absence of sick subjects; Spe remained high, reflecting the ability of CNN-ELM to recognize the cases present. In the upper classes (

), the pattern repeated, confirming that healthy subjects predominate in these categories. These results are consistent with monofractal theory, given that sick subjects tend to concentrate at H < 0.5, while healthy subjects have higher H values.

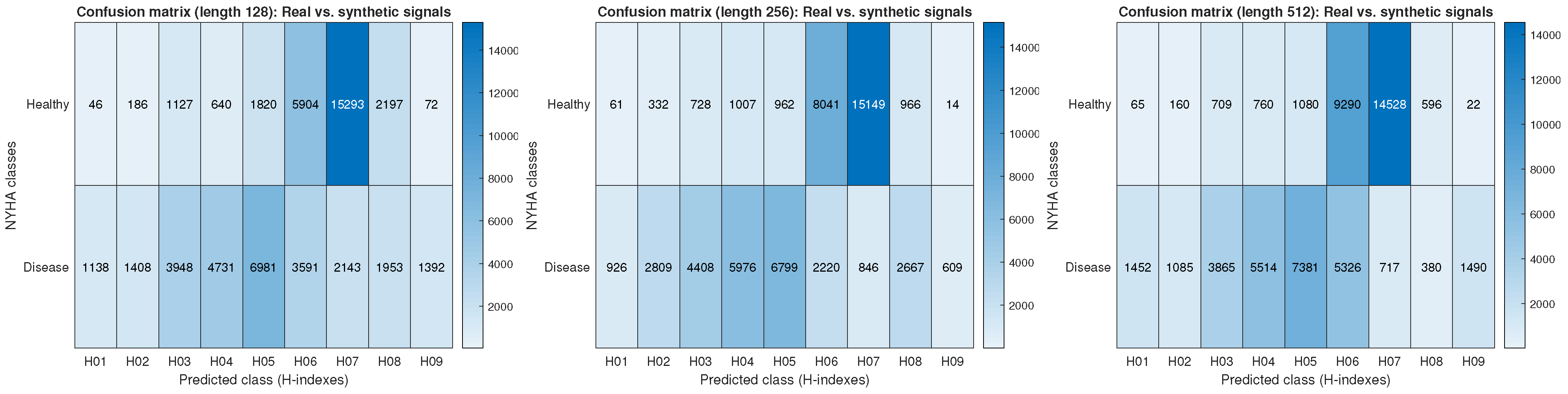

Figure 4 shows the cumulative confusion matrices for signals of lengths 128, 256, and 512, where the model distinguishes characteristic monofractal patterns within each group. Specifically, healthy subjects tended to concentrate in the

values, while sick subjects presented a higher concentration in the

range. This behavior can be explained within the theoretical framework of monofractal processes. For fractional Gaussian noise (fGn) signals, a value of

indicates random behavior, characteristic of a healthy heart; on the other hand, values of

reflect anti-persistence, typical of pathological states. Finally, values of

represent a balanced or slightly persistent pattern associated with healthy complexity, reflecting adaptive physiological variability. In this context, the proposed model demonstrated its ability to differentiate between normal and abnormal heart rhythms in short-term scenarios, revealing a loss of complexity and dynamic stability in the signals of patients with cardiovascular disease.
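The persistent and anti-persistent readings of H for fGn follow directly from its autocovariance. For unit-variance fGn, the lag-1 autocorrelation is negative when H < 0.5, zero at H = 0.5, and positive when H > 0.5:

```latex
\begin{aligned}
\gamma_H(k) &= \tfrac{1}{2}\left(|k+1|^{2H} - 2|k|^{2H} + |k-1|^{2H}\right),
  \qquad \gamma_H(0) = 1,\\
\rho_H(1) &= \gamma_H(1) = 2^{2H-1} - 1,\\
\rho_{0.3}(1) &\approx -0.24, \qquad \rho_{0.5}(1) = 0, \qquad \rho_{0.7}(1) \approx 0.32.
\end{aligned}
```

A negative lag-1 correlation means that a long RR interval tends to be followed by a short one, which is what anti-persistence denotes here.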

In the second scenario, the analysis was extended to a more specific classification that included both healthy individuals and the different grades of heart disease defined by the NYHA classification system (NYHA I, NYHA II, NYHA III, and NYHA IV). Using the model previously trained on synthetic signals to identify monofractal variations, cumulative confusion matrices (see Figure 5) were generated for signals of lengths 128, 256, and 512. Healthy subjects exhibited a more concentrated distribution at values of

, whereas

H values tended to fall below

as heart failure severity increased, suggesting greater irregularity in heart rate variability. This transition points to a possible relationship between the degree of heart failure and anti-persistence in the time series, consistent with pathophysiological studies linking autonomic nervous system dysfunction with heart rate variability.

Table 6 reflects this behavior, showing that for H values from

to

, the model achieved moderate Acc, with very low Sen and PPV, while Spe remained high. This indicates that healthy subjects are correctly identified with a low error rate, whereas patients with advanced heart failure, corresponding to NYHA III–IV, are more difficult to classify. In the range from

to

, Sen and PPV increased, indicating improved discrimination of patients with mild-to-moderate heart failure, corresponding to NYHA I–III. For H values from

to

, Acc and Spe reached high levels, while Sen and PPV were not calculated due to the absence of patients in these ranges, which included mostly healthy subjects. Overall, these quantitative results support the trend observed above, consistent with the theory of monofractal processes and findings on heart rate variability.

In contrast to studies based on integrated signals, in which patients usually present H values close to 1 (very smooth cardiac signals), this study revealed more irregular and less predictable dynamics in patients with a higher degree of heart disease. Consequently, the CNN-ELM model not only distinguishes between healthy and diseased subjects but also recognizes the degree of clinical severity through the distribution of Hurst indices.

5. Discussion

The results demonstrate that the proposed CNN-ELM model, trained with short monofractal signals, can characterize healthy subjects and distinguish between different degrees of heart failure according to the NYHA classification system. These findings support the initial hypothesis that monofractal signals, defined by Hurst exponents, can capture complex patterns associated with disease severity. In contrast to [33], which classifies only H-indices extracted from synthetic signals using a support vector machine, this study incorporated the ELM classifier. Although the classification results were comparable, the ELM network substantially reduced training time and showed a more stable generalization ability on real data.

The confusion matrices for the three window lengths show a pattern consistent with the clinical evidence. In particular, the healthy and NYHA I categories tended to concentrate in the range to , which is indicative of more regular and adaptive physiological signals. In contrast, NYHA classes II, III, and IV displayed greater dispersion toward lower H values, reflecting less regularity and higher variability in the signals, which is characteristic of more advanced cardiac conditions. Specifically, the link between H values < 0.5 and pathological states supports the early characterization of autonomic dysfunction and loss of complexity in cardiac variability. This suggests that fractal-based indices may serve as non-invasive biomarkers to enhance risk stratification and patient monitoring in heart failure, complementing conventional clinical assessments such as the NYHA classification. Additionally, longer temporal windows are more effective in capturing the fractal properties associated with the severity of heart failure. Nevertheless, the model’s behavior was maintained even with short signals (length 128), which supports the proposed objective of achieving effective classification with short-duration data.

From a clinical perspective, the model’s performance profile, characterized by consistently high specificity (>95% in most scenarios) but variable and generally low sensitivity (0.1–25.6%), indicates that it operates most effectively as a rule-in diagnostic tool. A positive classification output therefore substantially increases confidence in the presence of heart failure and supports further confirmatory testing through established diagnostic procedures such as echocardiography or BNP measurement. However, the relatively low sensitivity calls for cautious interpretation of negative results, particularly in high-risk or early-stage patients. Consequently, the proposed system is envisioned as a complementary decision-support tool that could serve as an initial filtering mechanism within clinical workflows, aiding in the early identification of patients who may benefit from more comprehensive diagnostic evaluation. Future developments will focus on enhancing sensitivity while preserving the model’s high specificity to strengthen its applicability in both screening and diagnostic contexts.
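The rule-in reading can be made concrete with likelihood ratios. As an illustration only, pairing the upper end of the reported sensitivity range (25.6%) with a specificity of 95% (this pairing is an assumption, not a reported result) gives:

```latex
\begin{aligned}
\mathrm{LR}^{+} &= \frac{\mathrm{Sen}}{1 - \mathrm{Spe}} = \frac{0.256}{1 - 0.95} = 5.12,\\
\mathrm{LR}^{-} &= \frac{1 - \mathrm{Sen}}{\mathrm{Spe}} = \frac{0.744}{0.95} \approx 0.78.
\end{aligned}
```

In this case a positive output multiplies the pretest odds of heart failure by about five, while a negative output lowers them only slightly. Note that LR+ exceeds 1 only when Sen > 1 − Spe, so with Spe = 95% a positive result is informative only if sensitivity is above 5%.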

This study differs from previous approaches by training the model only with monofractal features and demonstrating its ability to generalize to real data without relying on large volumes of clinical data. However, real signals may include multifractal or nonstationary components that are not fully represented in the synthetic ensemble. Although the model showed good classification results, its direct clinical application may require further validation in larger and more diverse cohorts. As future work, we propose extending this approach to multifractal signals, comparing the CNN-ELM with more recent architectures, such as transformers for biomedical signals, and exploring its integration into clinical workflows.

6. Conclusions

This study provides novel evidence supporting the feasibility of using short-duration synthetic signals to characterize cardiac dynamics and assess the severity of heart failure. The proposed CNN-ELM model successfully distinguished between healthy individuals and patients across the NYHA classification spectrum, even with time series as short as 128 samples. These findings reinforce the clinical relevance of the Hurst exponent as a biomarker of autonomic and cardiovascular function, particularly when associated with persistent or anti-persistent behavior in heart rate variability.

By focusing exclusively on monofractal features and avoiding the use of long or integrated real signals, the model demonstrated a strong capacity for generalization while reducing computational cost and training time. Notably, the CNN-ELM architecture maintained a consistent classification pattern across different window lengths, in line with known physiological patterns and clinical expectations.

This work stands apart from previous studies by integrating fractal theory with modern machine learning tools and by mapping the progression of heart failure through statistically meaningful H-index distributions. Nevertheless, further investigation is required to validate these results using real, multifractal, and potentially nonstationary data. Future studies should also explore alternative architectures, such as transformers, and assess the model’s integration into clinical decision-making processes.