3.1. Back Analysis Model Based on Excavation-Induced Tunnel Deformations

Deep learning is a subset of machine learning, and deep neural networks (DNNs) are a specific type of deep learning model [35]. A DNN consists of multiple hidden layers, where each layer performs a nonlinear transformation of data through neurons and weights. The workflow involves several key steps, including data input, forward propagation, error computation, backpropagation, and parameter updates [43].

In the forward propagation phase, each neuron receives input from the previous layer and performs a weighted summation using weights and biases. This process is mathematically expressed as follows:

$$y = f\left(\sum_{i} w_i x_i + b\right)$$

where $x_i$ represents the input features, $w_i$ is the weight, $b$ is the bias term, and $f$ is the nonlinear activation function. The weights $w_i$ and bias $b$ in the equation above are not derived from a direct calculation but are learned iteratively during the training process. This process begins with the random initialization of all parameters. Subsequently, the model undergoes forward propagation to make a prediction, and the error between the prediction and the true value is quantified by a loss function $L$. The core of the learning lies in backpropagation, where the gradient of the loss function with respect to each weight, denoted as $\partial L / \partial w_i$, is calculated. These gradients are then used to update the weights via a gradient descent optimization algorithm, following the update rule $w_i \leftarrow w_i - \eta \, \partial L / \partial w_i$, where $\eta$ is the learning rate. This cycle repeats until the model’s performance converges. The activation function introduces nonlinearity to the network, enabling it to learn complex data patterns. ReLU is commonly used as the activation function, and is defined as follows:

$$\mathrm{ReLU}(z) = \max(0, z)$$
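The training cycle described above (forward propagation, loss computation, backpropagation, and a gradient descent update) can be sketched for a single ReLU neuron in plain Python. This is a minimal illustration, not the networks used in this study: the squared-error loss, the toy sample, the initial parameters, and the learning rate of 0.05 are all assumptions chosen only to make the example deterministic.

```python
def relu(z):
    """ReLU activation: max(0, z)."""
    return max(0.0, z)


def relu_grad(z):
    """Derivative of ReLU with respect to its input."""
    return 1.0 if z > 0 else 0.0


def forward(x, w, b):
    """Weighted sum of the inputs plus bias, followed by the activation."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return z, relu(z)


def gradient_descent_step(x, y_true, w, b, lr):
    """One forward/backward pass with a squared-error loss (assumed here)."""
    z, y_pred = forward(x, w, b)
    loss = 0.5 * (y_pred - y_true) ** 2
    dloss_dz = (y_pred - y_true) * relu_grad(z)  # chain rule through ReLU
    new_w = [wi - lr * dloss_dz * xi for wi, xi in zip(w, x)]
    new_b = b - lr * dloss_dz
    return new_w, new_b, loss


# Hypothetical toy sample and starting parameters.
x, y_true = [1.0, 2.0], 3.0
w, b = [0.5, 0.5], 0.0
losses = []
for _ in range(50):
    w, b, loss = gradient_descent_step(x, y_true, w, b, lr=0.05)
    losses.append(loss)
```

The list `losses` records the loss before each update, so its first entry corresponds to the initial parameters and later entries show the loss shrinking as the cycle repeats.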

The three deep neural network architectures used in this study differ in structure [44].

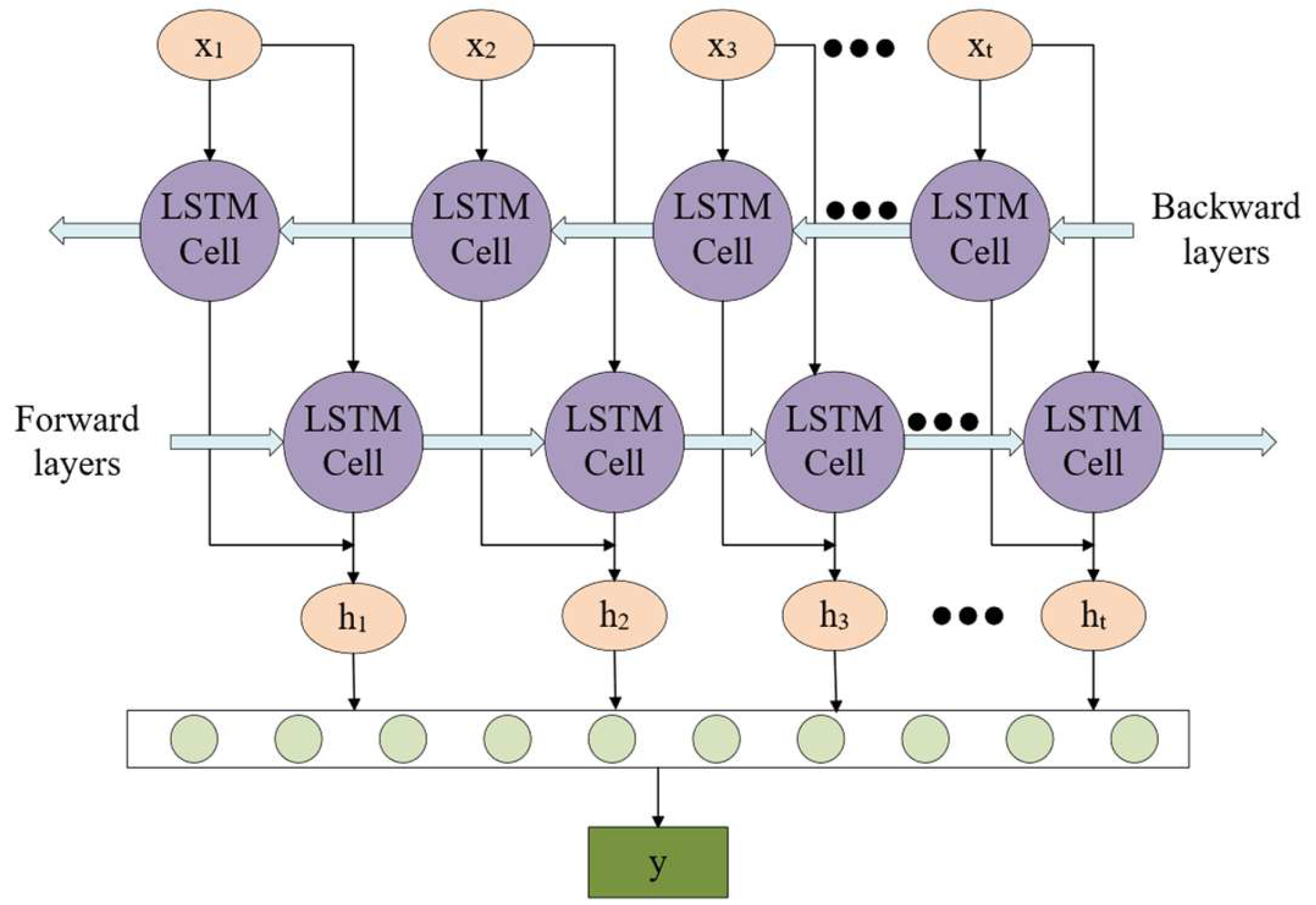

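1. Bidirectional Long Short-Term Memory (BiLSTM): A BiLSTM consists of two independent LSTM units: one processes the sequence in a left-to-right direction, and the other processes the sequence in a right-to-left direction. An LSTM unit is composed of an input gate, forget gate, output gate, and cell state. The key equations for LSTM are as follows:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right)$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

$$h_t = o_t \odot \tanh(C_t)$$

In the equations, $f_t$, $i_t$, $o_t$, and $h_t$ represent the forget gate, input gate, output gate, and hidden state, respectively. $C_{t-1}$ and $C_t$ represent the cell states before and after the update, respectively, and $\tilde{C}_t$ is the candidate cell state. $W$ and $b$ are the weight matrices and bias terms, respectively. $h_{t-1}$ is the hidden state from the previous time step, and $x_t$ is the input at time step $t$. $\sigma$ is the sigmoid function, and $\tanh$ is the hyperbolic tangent function.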

The input sequence is a one-dimensional time series $x = (x_1, \dots, x_T)$. After processing by two stacked BiLSTM layers, the hidden states $h_1, \dots, h_T$ are obtained, where the concatenated bidirectional output dimension is $2d$ for a per-direction hidden size $d$. The hidden state at the final time step, $h_T$, is selected as the sequence representation and passed to a fully connected regression layer for prediction. The complete architecture of the network is shown in Figure 6.
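To make the gate computations and the bidirectional concatenation concrete, the following plain-Python sketch runs one LSTM over a sequence in each direction and joins the two hidden states at every time step. It is illustrative only: the helper `make_params`, the constant weights, the hidden size of 2, and the toy sequence are assumptions, and the sketch uses a single BiLSTM layer rather than the two stacked layers of the actual model.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, v, b):
    """Matrix-vector product plus bias, with W given as a list of rows."""
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step: forget, input, and output gates plus cell update."""
    v = h_prev + x_t  # list concatenation, i.e. [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(p["W_f"], v, p["b_f"])]
    i = [sigmoid(z) for z in affine(p["W_i"], v, p["b_i"])]
    o = [sigmoid(z) for z in affine(p["W_o"], v, p["b_o"])]
    c_tilde = [math.tanh(z) for z in affine(p["W_c"], v, p["b_c"])]
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def run_lstm(seq, p, hidden):
    """Scan a sequence and return the hidden state at every time step."""
    h, c = [0.0] * hidden, [0.0] * hidden
    outputs = []
    for x_t in seq:
        h, c = lstm_step(x_t, h, c, p)
        outputs.append(h)
    return outputs


def bilstm(seq, p_fwd, p_bwd, hidden):
    """Concatenate forward and backward hidden states at each time step."""
    fwd = run_lstm(seq, p_fwd, hidden)
    bwd = run_lstm(seq[::-1], p_bwd, hidden)[::-1]
    return [hf + hb for hf, hb in zip(fwd, bwd)]


def make_params(hidden, n_in, value):
    """Hypothetical constant initialization, used only for determinism."""
    p = {}
    for gate in "fioc":
        p["W_" + gate] = [[value] * (hidden + n_in) for _ in range(hidden)]
        p["b_" + gate] = [0.0] * hidden
    return p


hidden = 2
seq = [[0.1], [0.2], [0.3]]  # toy one-dimensional time series
outputs = bilstm(seq, make_params(hidden, 1, 0.1), make_params(hidden, 1, -0.1), hidden)
sequence_repr = outputs[-1]  # final time step, of length 2 * hidden
```

In the full model, a vector such as `sequence_repr` would be passed to the fully connected output layer.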

2. Convolutional Neural Networks (CNNs) are a powerful class of deep learning models and the dominant architecture in computer vision, where they are widely used for tasks such as image recognition, object detection, and image generation. CNNs slide convolutional kernels over the input data and compute the weighted sum of each local region to generate feature maps, thereby performing local feature extraction. The mathematical expression for the convolution operation is as follows:

$$y_{i,j} = \sum_{m=1}^{k}\sum_{n=1}^{k} w_{m,n}\, x_{i+m-1,\,j+n-1} + b$$

In the convolution operation, $x_{i+m-1,\,j+n-1}$ represents a local region of the input data, $w_{m,n}$ denotes the weight of the convolution kernel, $b$ is the bias term, and $k$ refers to the size of the convolution kernel. The configuration of the convolution kernel includes parameters such as kernel size, stride, padding method, and the number of kernels. The number of kernels determines the number of output feature map channels. By using local connections and weight sharing, a CNN efficiently extracts features from the input data, providing rich feature information for subsequent layers of the network.
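The sliding-window weighted sum can be illustrated with a short plain-Python sketch of a single-channel convolution without padding. The 3 × 3 input, the 2 × 2 kernel, and the bias value below are hypothetical and chosen only so that the result is easy to check by hand.

```python
def conv2d(x, w, b=0.0, stride=1):
    """Single-channel 2D convolution (no padding) with a square k x k kernel."""
    k = len(w)
    out_h = (len(x) - k) // stride + 1
    out_w = (len(x[0]) - k) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = b
            # Weighted sum over the local k x k region anchored at (i, j).
            for m in range(k):
                for n in range(k):
                    total += w[m][n] * x[i * stride + m][j * stride + n]
            row.append(total)
        feature_map.append(row)
    return feature_map


x = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
w = [[1, 0],
     [0, 1]]
feature_map = conv2d(x, w, b=0.5)
# feature_map == [[6.5, 8.5], [12.5, 14.5]]
```

The same kernel weights are reused at every position, which is the weight sharing mentioned above; using several kernels would produce one output channel per kernel.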

ResNet [45], a representative architecture of Convolutional Neural Networks (CNNs), addresses the gradient vanishing and information degradation issues in deep networks by introducing residual connections. The objective of the network learning is to predict the residual, $F(x) = y - x$, where $y$ is the output and $x$ is the input. Each Residual Block within the network is defined by the following basic computation:

$$y = F(x, \{W_i\}) + x$$

where $x$ is the input (which can be the output from the previous layer), $F(x, \{W_i\})$ is the nonlinear transformation within the residual block, and $\{W_i\}$ represents the weights of the convolutional layers.
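The effect of the skip connection can be seen in a small plain-Python sketch. For brevity, the residual branch here is two fully connected layers with a ReLU in between rather than the convolutional bottleneck layers of ResNet50, and the activation that normally follows the addition is omitted; these simplifications and the example weights are assumptions for illustration.

```python
def linear(W, v):
    """Matrix-vector product with W given as a list of rows."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in W]


def relu_vec(v):
    return [max(0.0, vi) for vi in v]


def residual_block(x, W1, W2):
    """y = F(x, {W1, W2}) + x, with F(x) = W2 * relu(W1 * x)."""
    f = linear(W2, relu_vec(linear(W1, x)))
    return [fi + xi for fi, xi in zip(f, x)]


x = [1.0, -2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]

# With all-zero weights the residual branch contributes nothing,
# so the block reduces to the identity mapping.
y_identity = residual_block(x, zeros, zeros)

# With identity weights, F(x) = relu(x) = [1.0, 0.0], so y = [2.0, -2.0].
y_shifted = residual_block(x, identity, identity)
```

Because the block only has to learn the difference between output and input, a layer that should leave its input unchanged needs only to drive its weights toward zero.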

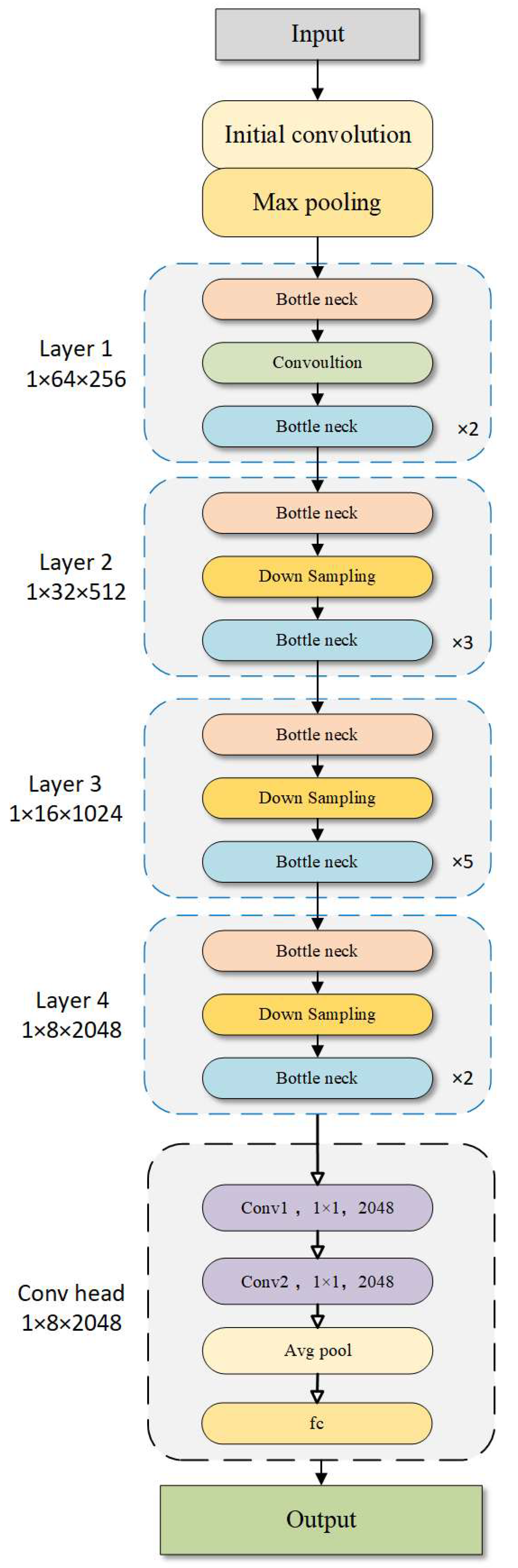

In this study, a deep neural network based on the ResNet50 architecture was primarily used to construct the rock mass evaluation model. The network is composed of an initial convolution and pooling layer, followed by a four-stage backbone, an average pooling layer, and a fully connected output layer. Each stage of the backbone includes multiple Residual Bottleneck Blocks along with a downsampling operation. The complete architecture of the network is shown in Figure 7.

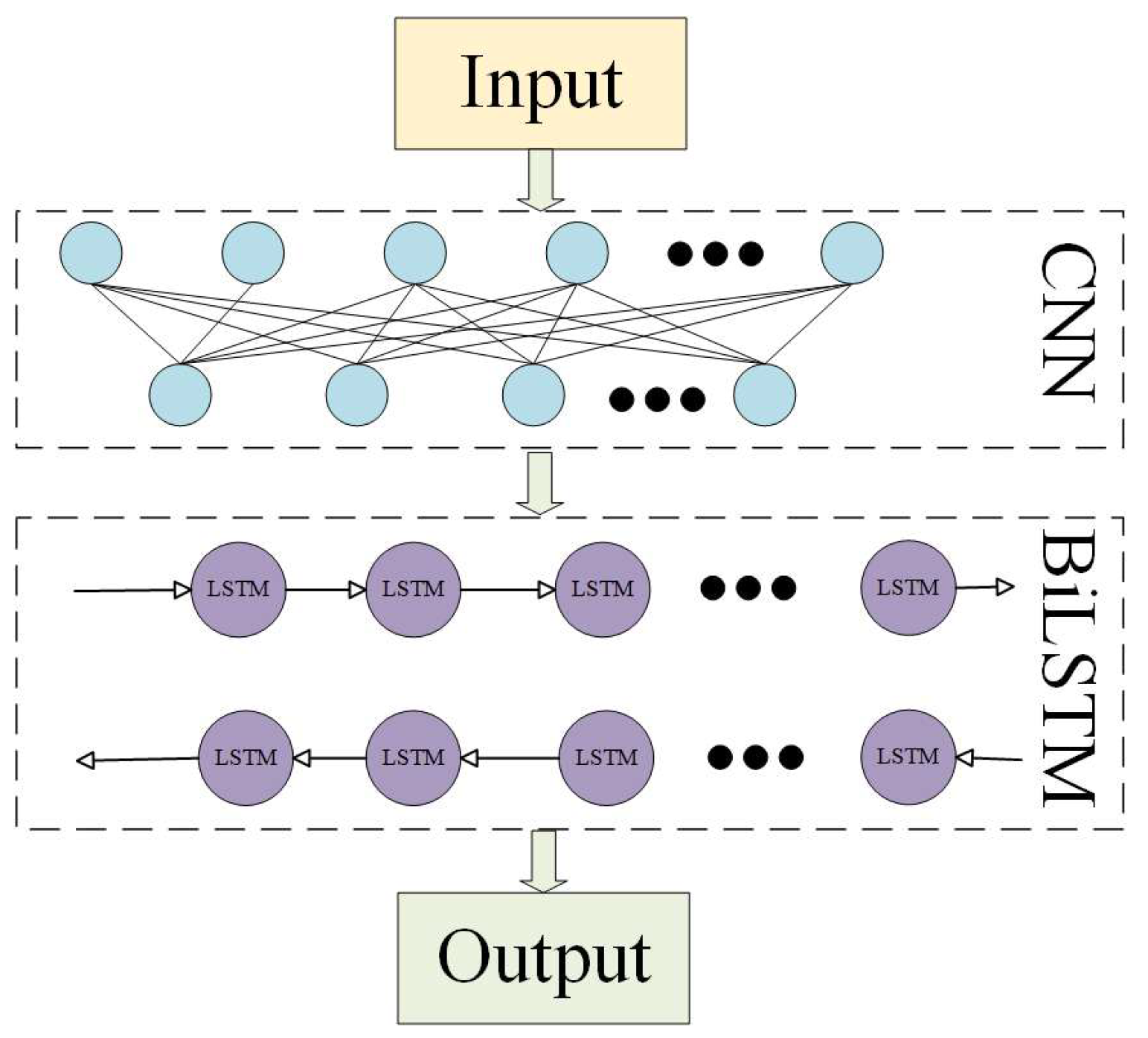

3. The CNN-BiLSTM model has been widely employed in image recognition and time-series analysis. It integrates Convolutional Neural Networks (CNNs) for spatial feature extraction and Bidirectional Long Short-Term Memory (BiLSTM) networks for temporal sequence modeling. Local spatial features are extracted by the CNN, and temporal dependencies in both forward and backward directions are captured by the BiLSTM. This combination enables the model to effectively represent spatial displacements and dynamic temporal variations. As a result, the architecture demonstrates reliable performance in complex sequence prediction tasks. The framework of CNN-BiLSTM is shown in Figure 8.

For all three models, Cross Entropy Loss is used as the loss function for classification tasks. The output logits are compared with the target labels to compute the loss [46].
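For a single sample, the cross-entropy between the output logits and an integer target label equals the log-sum-exp of the logits minus the logit of the target class. The sketch below computes it in plain Python in a numerically stable way; the example logits are hypothetical.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of one sample from raw logits and an integer class label."""
    m = max(logits)  # subtract the maximum so exp() cannot overflow
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mean_cross_entropy(batch_logits, targets):
    """Average loss over a batch of samples."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


uniform_loss = cross_entropy([0.0, 0.0], 0)       # equals log(2)
confident_loss = cross_entropy([1000.0, 0.0], 0)  # effectively zero
batch_loss = mean_cross_entropy([[0.0, 0.0], [1000.0, 0.0]], [0, 0])
```

A prediction with equal logits gives a loss of log(2) for two classes, while a confident correct prediction gives a loss close to zero.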

The hyperparameters for the deep neural network models were selected by grid search [47]. Specifically, hyperparameters were initialized with default values and then adjusted through experimentation [48]. The choice of hyperparameters has a significant impact on the model’s final performance. Models were trained with various hyperparameter settings, and the optimal hyperparameters for each model were selected based on the best performance on the test set. Detailed information about the hyperparameters used in this study is provided in Table 4.
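A grid search of this kind can be sketched as an exhaustive loop over every combination of candidate values. In the sketch below, the grid values and the scoring function `toy_score` are hypothetical stand-ins: they are not the settings listed in Table 4, and in practice `evaluate` would train a model with the given configuration and return its score on held-out data.

```python
import math
from itertools import product


def grid_search(grid, evaluate):
    """Evaluate every combination in the grid and keep the best-scoring one."""
    names = list(grid)
    best_cfg, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        cfg = dict(zip(names, values))
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


def toy_score(cfg):
    """Hypothetical stand-in for 'train the model and return its score'."""
    lr_penalty = abs(math.log10(cfg["lr"]) + 3)            # best near lr = 1e-3
    batch_penalty = abs(cfg["batch_size"] - 32) / 32       # best at batch size 32
    return -(lr_penalty + batch_penalty)


# Hypothetical candidate values, for illustration only.
grid = {"lr": [1e-2, 1e-3, 1e-4], "batch_size": [16, 32, 64]}
best_cfg, best_score = grid_search(grid, toy_score)
```

The cost grows with the product of the list lengths, so each hyperparameter is usually given only a few candidate values.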

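In the regression task, model performance is evaluated using three metrics: the coefficient of determination ($R^2$), root mean squared error (RMSE), and the error rate within 5% [49]. The equations for evaluation are as follows:

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$P_{5\%} = \frac{1}{n}\sum_{i=1}^{n} \mathbb{1}\left(\frac{\left|\hat{y}_i - y_i\right|}{\left|y_i\right|} \le 5\%\right) \times 100\%$$

where $y_i$ is the true value, $\hat{y}_i$ is the predicted value, $\bar{y}$ is the mean of the true values, $n$ is the number of samples, and $\mathbb{1}(\cdot)$ equals 1 when the condition holds and 0 otherwise.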
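The three metrics can be computed directly from paired true and predicted values, as in the plain-Python sketch below. The "within 5%" metric is interpreted here as the fraction of samples whose relative error does not exceed 5%, which is an assumption about the intended definition, and the sample values are hypothetical.

```python
import math


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root mean squared error, in the same units as the target."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


def fraction_within(y_true, y_pred, tol=0.05):
    """Fraction of samples whose relative error is at most `tol`."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if abs(p - t) <= tol * abs(t))
    return hits / len(y_true)


# Hypothetical values; relative errors are 2%, 2.5%, 10%, and 0%.
y_true = [10.0, 20.0, 30.0, 40.0]
y_pred = [10.2, 19.5, 33.0, 40.0]

r2 = r2_score(y_true, y_pred)
error = rmse(y_true, y_pred)
within_5pct = fraction_within(y_true, y_pred)
```

In this toy case three of the four predictions fall inside the 5% band, so `within_5pct` is 0.75 even though $R^2$ is above 0.98, which shows why the two metrics are reported side by side.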

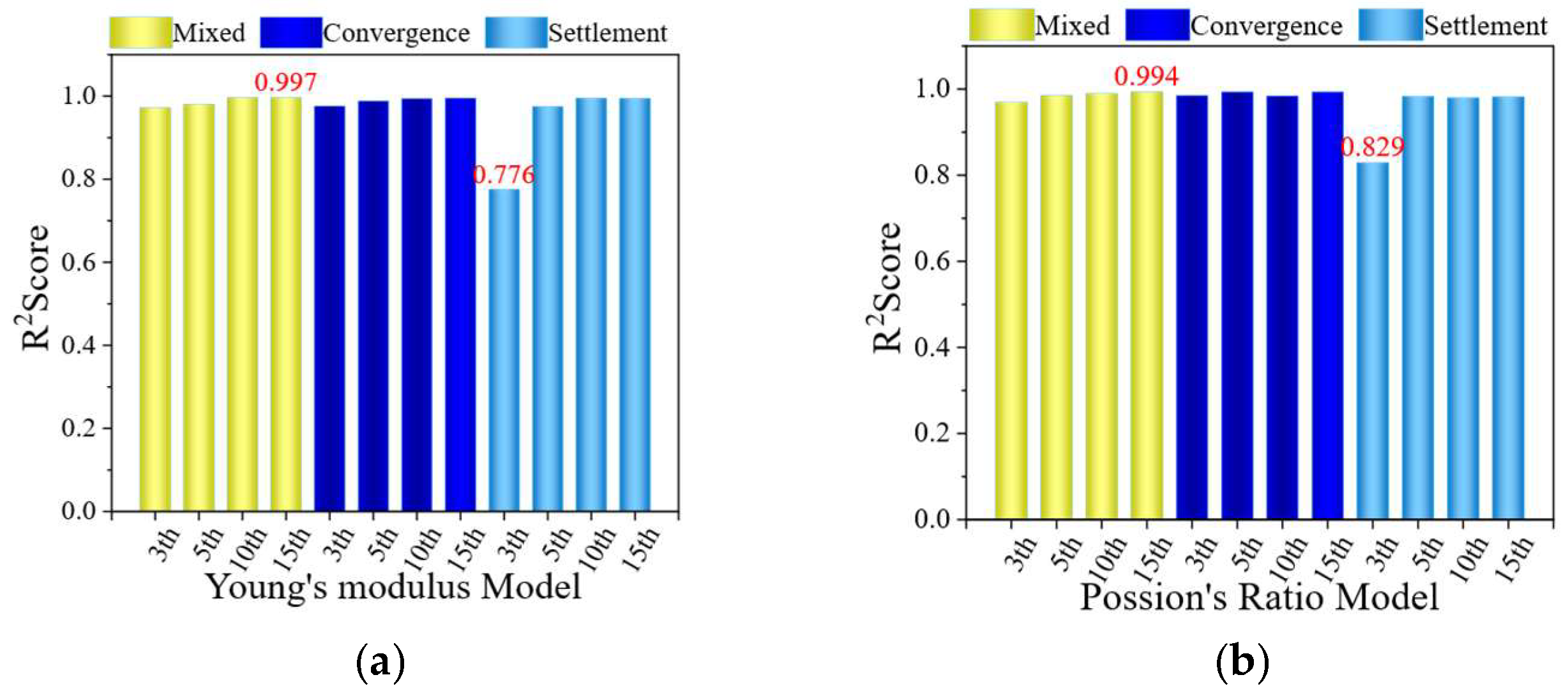

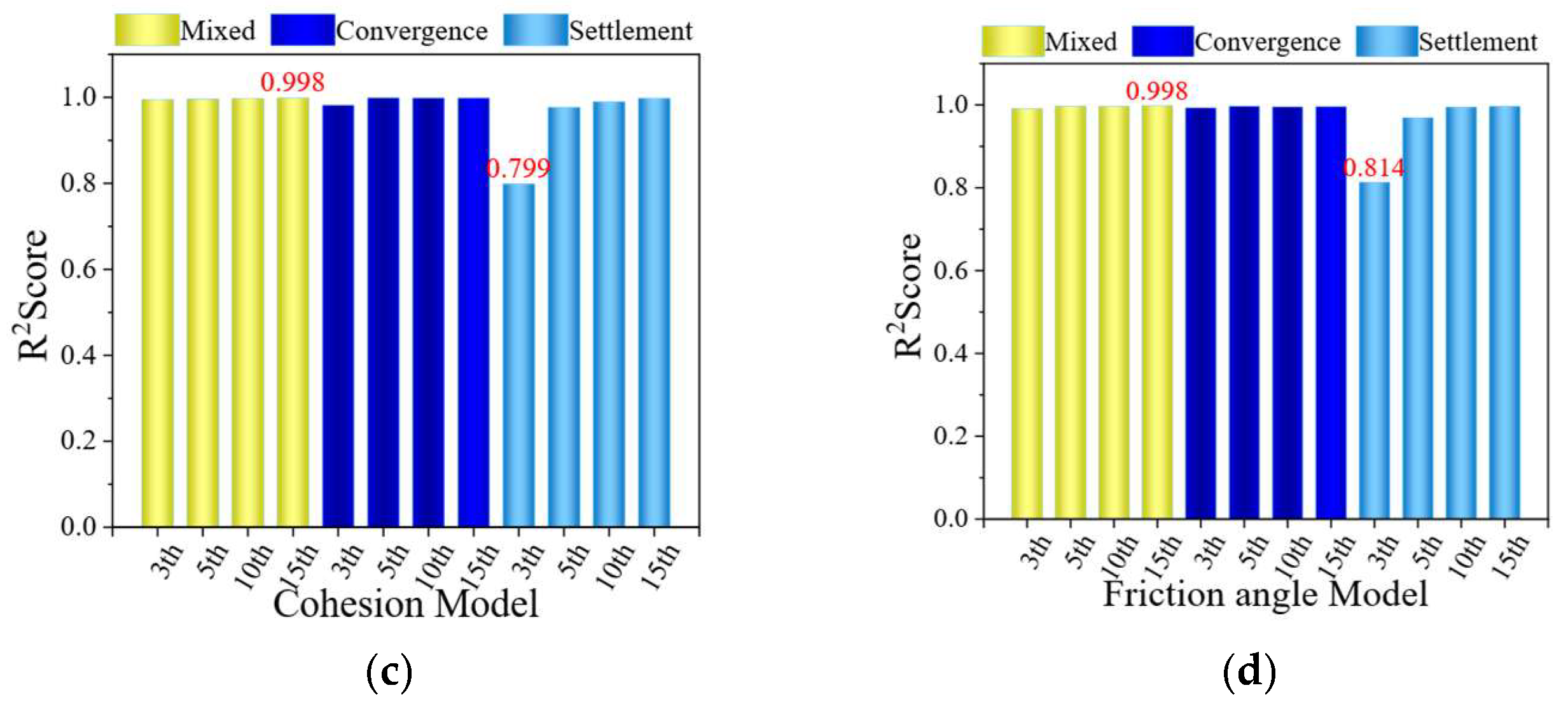

The training performance metrics of the three deep learning models are presented in Table 5, while the proportion of test samples with prediction errors within 5% is shown in Table 6. The results indicate that the CNN model achieved the best performance during training, with all $R^2$ values exceeding 0.99 and the lowest RMSE reaching 0.033 GPa. It significantly outperformed both the CNN-BiLSTM and BiLSTM models. Across different input data types, the CNN-BiLSTM model consistently yielded lower RMSE values than the BiLSTM model. This demonstrates that the convolutional layers effectively enhance the model’s feature-extraction capability. In contrast, the standalone BiLSTM model showed relatively poor performance in this study, likely due to its reliance on strong temporal continuity in the input data. Furthermore, a comparison of training results using different input data types for the same model shows that mixed input data outperformed the cases where only peripheral convergence or vault settlement was used individually. Combined with the test set performance, these results indicate that using mixed data inputs provides the most significant improvement in model accuracy and generalization.

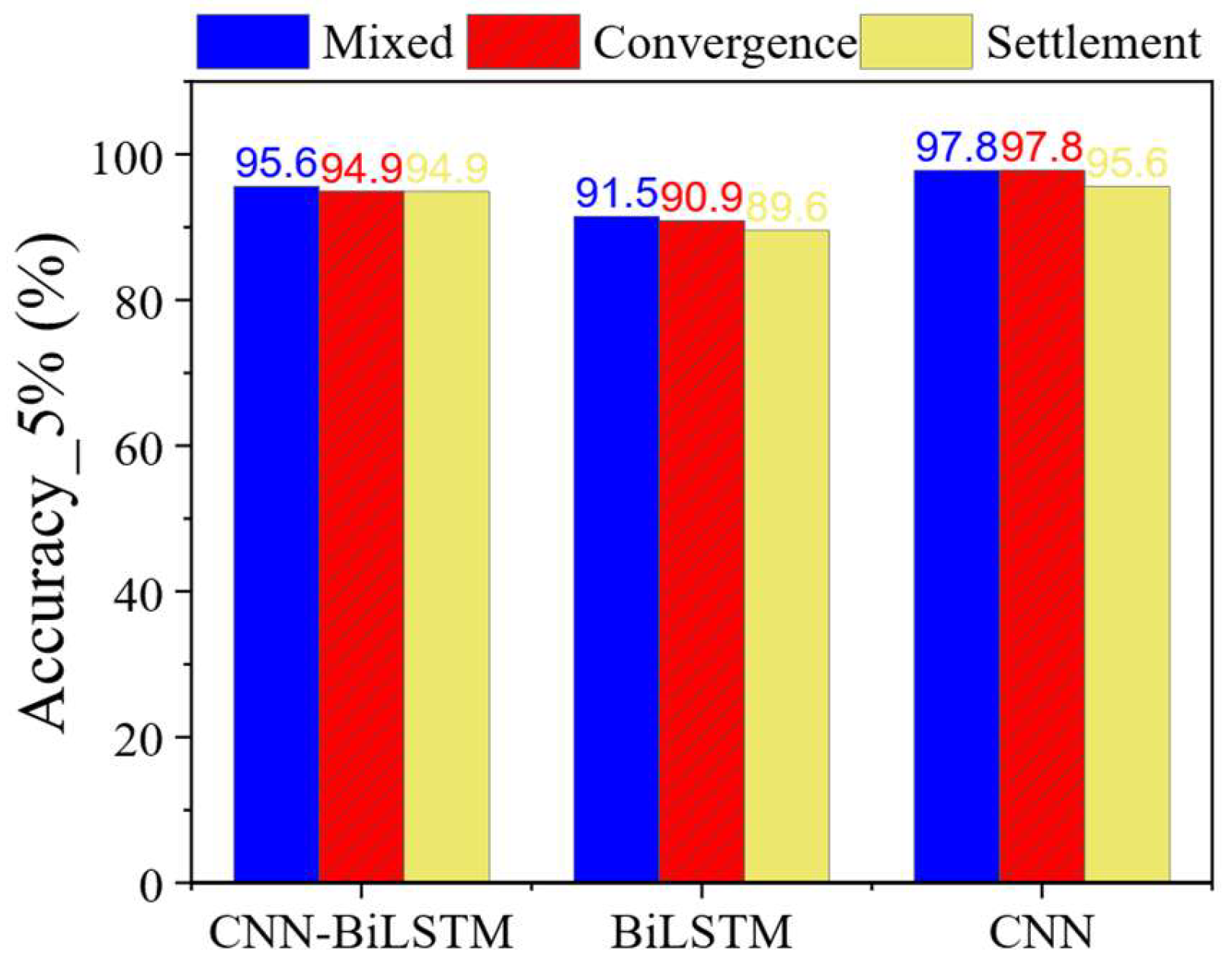

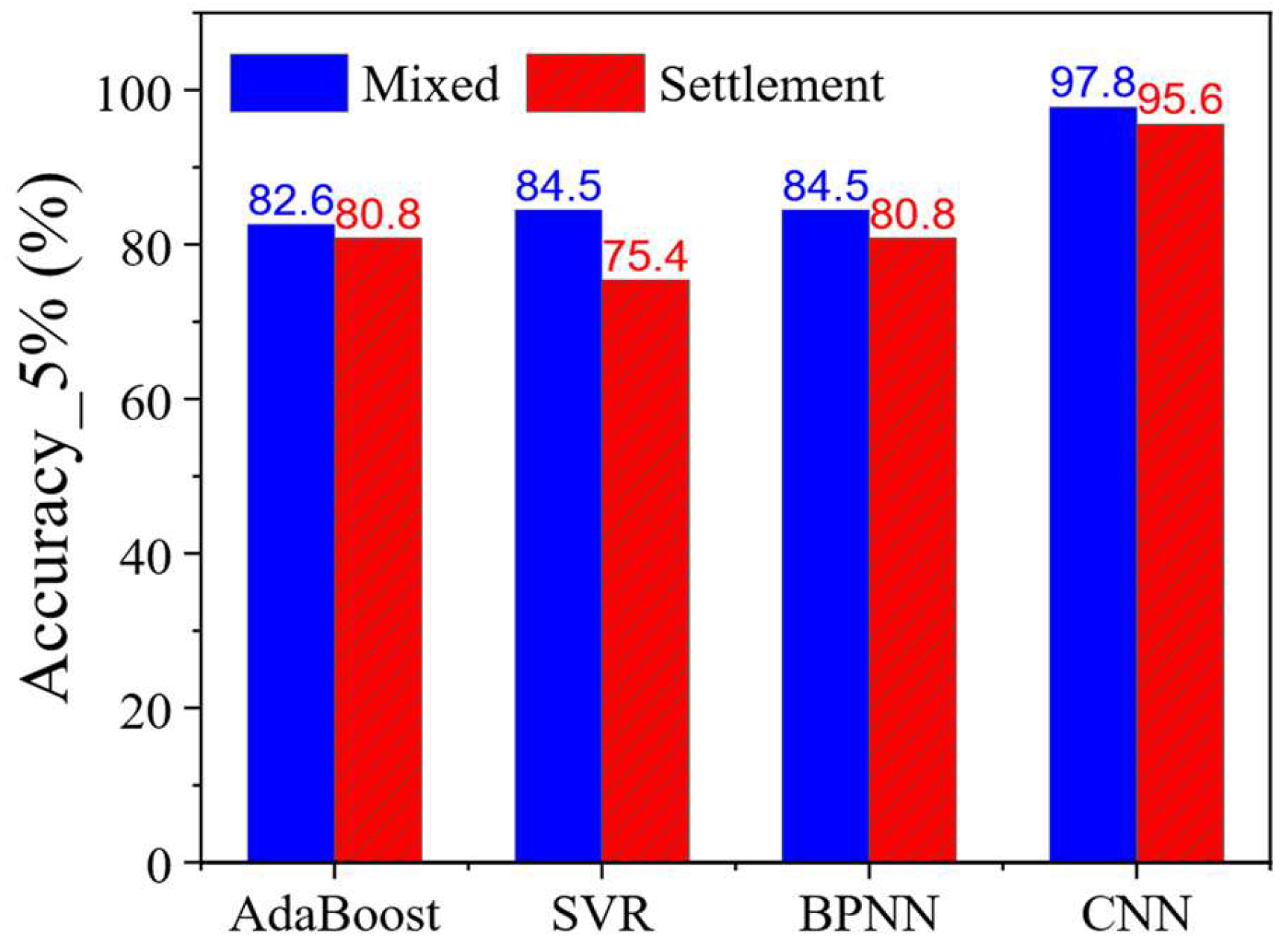

The CNN model also exhibited excellent performance on the test set. Across different input data types, the proportion of test samples with prediction errors within 5% reached up to 97.8%. Even when using only vault settlement as input, the proportion remained as high as 95.6%. In comparison, the BiLSTM model achieved a maximum accuracy of 91.5%. The CNN-BiLSTM model, which incorporates CNN-based feature extraction, achieved up to 95.6% under optimal conditions, corresponding to an average increase of 4.3 percentage points over the BiLSTM model. These results indicate that the convolutional layers can effectively enhance BiLSTM’s learning capability by improving its ability to extract key features.

As shown in Table 5, the CNN achieves $R^2$ = 0.996, 0.994, and 0.994. Such high values may signal a risk of overfitting in deep learning. We mitigated this by modifying the CNN training. We used AdamW with decoupled weight decay and applied an L2 penalty to convolutional and linear layers (weight decay = $1 \times 10^{-5}$). Biases and normalization parameters were not regularized. We also used 10-fold cross-validation (k = 10) for train/validation splits.
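Decoupled weight decay means that the decay term shrinks a parameter directly instead of being added to its gradient. The plain-Python sketch below applies one AdamW update to a single scalar parameter and uses a flag to skip the decay, as would be done for biases and normalization parameters. The weight decay of 1e-5 follows the text; the learning rate, the beta values, and epsilon are common defaults assumed here, not values reported in this study.

```python
import math


def adamw_step(theta, grad, state, lr=1e-3, betas=(0.9, 0.999),
               eps=1e-8, weight_decay=1e-5, decay=True):
    """One AdamW update for a scalar parameter with decoupled weight decay."""
    beta1, beta2 = betas
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    m_hat = state["m"] / (1 - beta1 ** state["t"])  # bias-corrected moments
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    if decay:
        # Decoupled decay: applied to the parameter, not mixed into the gradient.
        theta -= lr * weight_decay * theta
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)


def new_state():
    return {"t": 0, "m": 0.0, "v": 0.0}


# A convolutional or linear weight is decayed even when its gradient is zero.
weight_after = adamw_step(1.0, 0.0, new_state(), decay=True)

# A bias or normalization parameter is left untouched in the same situation.
bias_after = adamw_step(1.0, 0.0, new_state(), decay=False)
```

In a deep learning framework the same split is usually expressed by placing the two kinds of parameters in separate optimizer groups with different weight decay values.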

Table 7 shows the CNN results with Mixed and Convergence inputs. R

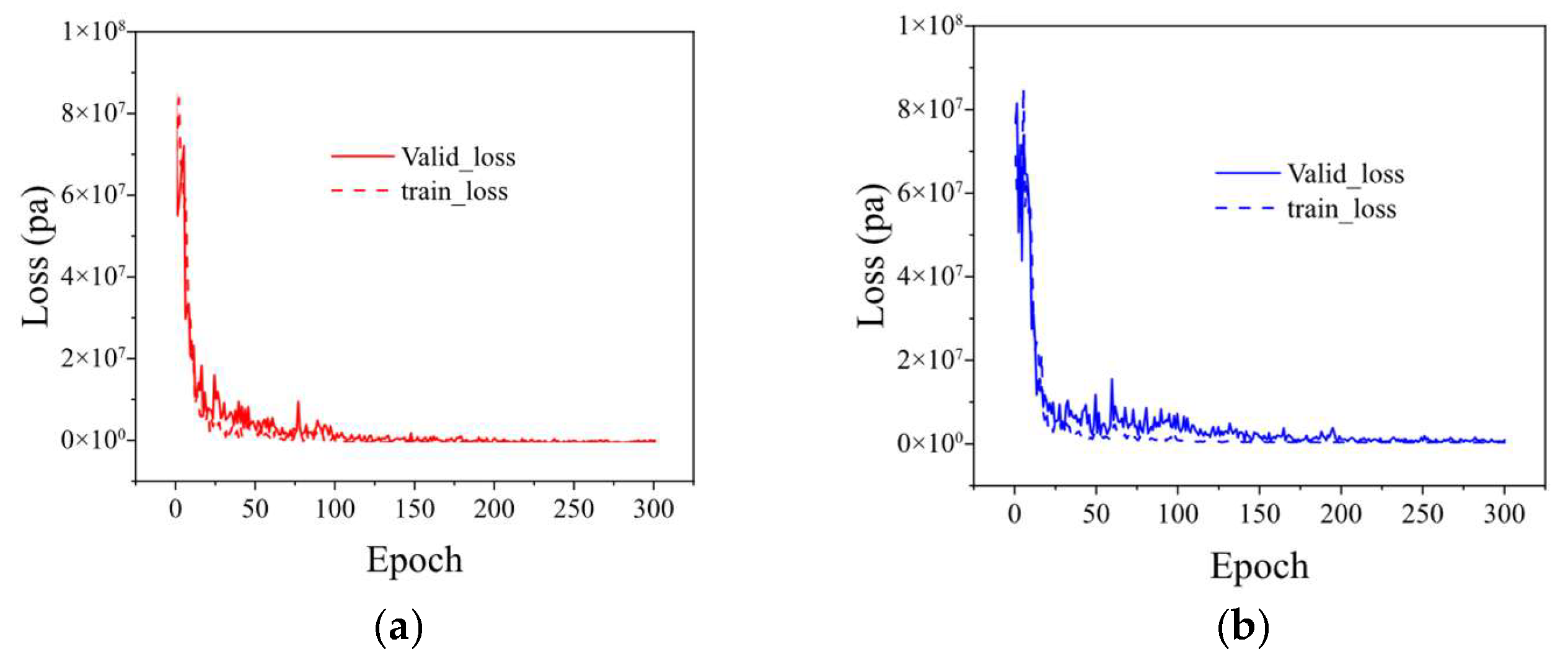

2 = 0.994724 ± 0.002577 and 0.984466 ± 0.010768, respectively. The corresponding RMSE values are 0.032247 ± 0.000387 GPa and 0.033217 ± 0.000334 GPa. This paper also report 95% confidence intervals, computed with the t distribution (df = 9, t = 2.262). Compared with a single split, the 10-fold estimates provide a more reliable measure of generalization performance. The trends remain consistent with earlier findings: the CNN outperforms CNN-BiLSTM and BiLSTM. And mixed inputs yield additional gains. The learning curve (

Figure 9) shows that the oscillation of the training loss becomes longer after the regularization is added, but from the final effect, the model performs well without the regularization technique and after the addition.
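The cross-validation procedure and the t-based confidence interval can be sketched in plain Python as follows. The critical value t = 2.262 for df = 9 is taken from the text; the interleaved fold assignment without shuffling and the example fold scores are assumptions made for illustration.

```python
import math
from statistics import mean, stdev


def kfold_indices(n_samples, k=10):
    """Yield (train, validation) index lists for k interleaved folds."""
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]


def t_confidence_interval(scores, t_crit=2.262):
    """Mean +/- t * s / sqrt(n), using the sample standard deviation s."""
    half_width = t_crit * stdev(scores) / math.sqrt(len(scores))
    centre = mean(scores)
    return centre - half_width, centre + half_width


splits = list(kfold_indices(25, k=10))

# Hypothetical per-fold scores, used only to exercise the formula.
fold_scores = [1.0] * 5 + [3.0] * 5
ci_low, ci_high = t_confidence_interval(fold_scores)
```

Each sample appears in exactly one validation fold, and the interval half-width shrinks as the fold scores agree more closely with one another.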

This paper attributes the superior performance of the ResNet50-based CNN to the nature of the input data, which consists of spatially distributed discrete measurement points with weak temporal correlations. Such data characteristics are better suited for CNNs, which excel at capturing local features rather than modeling temporal dependencies. Therefore, under this type of input, CNNs and their variants are able to achieve better predictive performance.

3.3. Comparison of Different Parameter Back Analysis Methods

When trained with mixed input data incorporating multiple excavation-related feature points, the ResNet50-based model achieved an RMSE of 0.033 GPa. Compared with machine learning models using single-point inputs, the RMSE was significantly reduced, demonstrating the superior performance of the proposed approach. Furthermore, among all models utilizing multi-point inputs, the ResNet50-based CNN also yielded the lowest RMSE.

Figure 10 and Figure 11 illustrate the prediction accuracy (error within 5%) of the various models. It is evident that deep learning models incorporating multiple excavation feature points outperform others, with the ResNet50-based CNN slightly surpassing the other two deep learning models. In contrast, decision tree (DT) and random forest (RF) models showed poorer accuracy, likely due to the limited size of the training dataset or the shallow architecture, which constrains their ability to capture complex feature representations. Therefore, the DT and RF models are not included in the comparison in Figure 11.

As shown in Figure 11, the models utilizing multi-point excavation features significantly outperform those using single-point inputs in terms of prediction accuracy. Compared with well-performing machine learning models such as BPNN and AdaBoost, the ResNet50-based CNN achieved an average improvement of 13.8% in accuracy.

In summary, the ResNet50-based CNN model demonstrates strong predictive capabilities and holds considerable potential for practical application in the back-analysis of rock mass mechanical parameters. The limitations of conventional machine learning models are mainly due to their shallow architectures, which cannot effectively capture the complex temporal and nonlinear patterns in deformation data. In contrast, DNNs offer a more robust solution [50]. DNNs can learn intricate, nonlinear relationships between excavation-induced deformation and rock mass quality, making them well-suited for back analysis tasks. Among the deep learning models, the CNN demonstrated the best performance when trained on complete deformation characteristic curves. Therefore, in Section 4, a modified ResNet50-based CNN was employed to analyze and discuss incomplete deformation curves, aiming to investigate the relationship between the CNN’s back-analysis accuracy and excavation progress under different input data types.