1. Introduction

In emergency situations such as building fires, the quick and safe evacuation of visually impaired persons poses a significant challenge. Fire remains one of the most frequent and most disruptive hazards in the built environment. 2023 statistics from the European Fire Safety Alliance indicate that over 5000 fire-related fatalities and more than 50,000 injuries occurred across EU member states alone [1]. Although the majority of victims are adults without visual impairments, a high fatality rate has been reported among visually impaired people. Simulations conducted by the National Institute of Standards and Technology show that a visually impaired person trapped on a smoke-filled floor is more likely to fall victim to toxic gases than a sighted peer who can interpret exit signage in the first 90 s of an alarm [2].

Considering that the global population of people with vision impairments is estimated at 40 million (WHO) [3] and, with the accelerated ageing of Western societies, could reach 60 million by 2035, many studies have been conducted to improve their mobility and autonomy during emergency evacuation.

Existing evacuation strategies for people with visual impairments are split into two categories:

- Those based on passive accommodation, without requiring interaction or feedback, and using enlarged pictograms, Braille prints, or tactile maps;
- Those based on social accommodation, or “buddy” systems, in which another person escorts the individuals with vision loss [4].

Both categories entail challenges: tactile signage is useful only if the person is already familiar with the environment, while the buddy approach fails when guides are absent, panicked, or physically unable to assist people with visual disabilities [5,6,7,8].

Assistive and emergency-response technologies have opened new possibilities for inclusive evacuation systems [9,10,11,12]. Miniaturized inertial sensors, Bluetooth Low Energy (BLE) beacons [13,14], ultra-wideband (UWB) localization [15], and low-latency AI models can now be embedded directly in smartphones or wearable devices, enabling real-time situational awareness without dependence on large infrastructure. This convergence of sensing, computation, and inclusive interface design creates a new generation of evacuation assistants capable of delivering precise, context-aware instructions in complex evacuation scenes.

In parallel, edge technology has matured to the point where modern smartphones or small SoCs (integrated in new systems) carry chips capable of trillions of operations per second while maintaining sub-watt power modes [16,17,18]. These chips can also run AI computations, which favors the development of complex evacuation strategies.

These technological advancements open the possibility to transform the visually impaired user’s own phone into an intelligent evacuation assistant. This includes:

Collecting live alarm signals directly from fire detectors without relying on Wi-Fi.

Computing the safest path through a dynamic, hazard-aware cost graph in less than 200 ms.

Guiding the user via a one-ear headset, leaving the other ear canal free for ambient sounds.

Adapting instantly if the fire spreads or the user deviates from the path.
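As an illustration of what a hazard-aware cost graph could look like, the following minimal Python sketch runs Dijkstra's algorithm over a graph whose edge costs grow with the hazard level reported for the destination node. The node names, the `hazard_weight` penalty, the `blocked_at` threshold, and the cost formula are assumptions made for this example and do not describe the routing engine of the proposed system.

```python
import heapq

def safest_path(graph, hazard, start, exits, hazard_weight=10.0, blocked_at=1.0):
    """Dijkstra search where each edge costs length * (1 + hazard_weight * hazard).

    graph:  {node: {neighbour: length_in_metres}}
    hazard: {node: level in [0, 1]} taken from the latest sensor readings
    Returns (total_cost, path); (inf, []) when no exit is reachable.
    """
    dist = {start: 0.0}
    prev = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if cost > dist.get(node, float("inf")):
            continue  # stale queue entry
        if node in exits:
            path = [node]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return cost, path[::-1]
        for nxt, length in graph.get(node, {}).items():
            level = hazard.get(nxt, 0.0)
            if level >= blocked_at:
                continue  # assumption: a fully hazardous node is impassable
            new_cost = cost + length * (1.0 + hazard_weight * level)
            if new_cost < dist.get(nxt, float("inf")):
                dist[nxt] = new_cost
                prev[nxt] = node
                heapq.heappush(queue, (new_cost, nxt))
    return float("inf"), []

# Hypothetical floor fragment: two corridors lead to two exits.
graph = {
    "room": {"corridor_a": 5, "corridor_b": 8},
    "corridor_a": {"room": 5, "exit_1": 10},
    "corridor_b": {"room": 8, "exit_2": 12},
    "exit_1": {},
    "exit_2": {},
}
EXITS = {"exit_1", "exit_2"}

clear_cost, clear_path = safest_path(graph, {}, "room", EXITS)
smoky_cost, smoky_path = safest_path(graph, {"corridor_a": 0.5}, "room", EXITS)
```

With no hazard the shorter route through `corridor_a` is selected; once smoke is reported there, the penalty makes the longer route through `corridor_b` cheaper. Re-running the search whenever a sensor reading or the user's position changes is one simple way to obtain the adaptive behavior listed above.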

Existing commercial products (e.g., Honeywell ExitPoint, GN ReSound Assist) either broadcast global auditory beacons or perform cloud-based path-finding that collapses when networks fail. Academic prototypes partially solve specific problems of evacuation systems, such as indoor positioning or smoke-aware routing, but rarely address end-to-end solutions for the evacuation of visually impaired people.

Gaps and limitations frequently reported in research studies include the need for models capable of describing complex dynamic behaviors in the presence of uncertainties, and the lack of implemented and experimentally tested real-time evacuation systems that offer accessible, personalized wayfinding.

Based on the considerations mentioned above, this paper proposes a system that allows a more comprehensive approach to the safe evacuation of people with vision impairments, which integrates innovative aspects related to the architecture of the application, experimental realization, modeling, simulation, and verification. Due to its decentralization, the system has the capability to rapidly re-evaluate the best options for a safe evacuation in each case. Moreover, the distributed structure leads to increased reliability and flexibility, reduces latency, and overall offers increased accessibility and autonomy for users.

The designed structure of the sensors and devices belonging to the installed fire alarm systems is not only cost-effective but also aligned with current practices, regulations, and technology.

As a critical aspect, the possible spread of fire onto the initially indicated route is estimated in advance, based on the continuous monitoring of the exit ways and using specific methods.

In accordance with recent research directions, for analysis and validation purposes, two new models were developed, and simulation was used.

A Delay Time Petri Nets-based model was developed to describe the updating mechanism of routes, considering the information received from the monitoring system and including situations in which the exit path must be modified due to unexpected extensions of fire. The model is scalable and useful to determine the maximum and minimum exit times in different scenarios.

For testing and verification purposes, a second model was developed using Stateflow to describe the dynamic behavior of the whole system, with the simulations performed in MATLAB/Simulink (MathWorks, R2023b).

Some potential contributions of this study include:

The development of scalable models to describe the behavior of emergency evacuation systems;

The determination of personalized and safe route planning;

The expansion of data collection, useful to increase the evacuation speed in buildings on fire.

2. State of the Art

One research area gaining increasing attention is the development of smart buildings designed to facilitate quick and efficient evacuation, tailored to the specific circumstances at hand. The core concept behind this approach involves integrating Internet of Things (IoT) sensor networks into the building’s infrastructure. These sensors, which can include smoke, temperature, and gas detectors, as well as motion sensors, video cameras, or radio beacons, are connected to a centralized emergency management system. The purpose of such a network is to quickly detect hazards and relay crucial information both to emergency response teams and the systems guiding occupants within the building.

Researchers are also focused on how efficiently a system can detect fires for rapid and early warnings [19]. Additionally, sensors have been improved for this type of early fire detection. For example, a capacitive particle-analyzing smoke detector, which is highly efficient in detecting early fires, is described in [20,21,22,23]. Multi-sensor photoelectric fire alarm devices are implemented in the same direction to detect fires early, thereby gaining more time for evacuation phases [24,25,26]. The study [27] introduces a fire source localization technique using distributed temperature sensing with dual-line optical fibers installed near the ceiling. By measuring the temperature of hot-air plumes, it converts the 3D localization problem into a 2D one, providing a robust and reliable method for detecting fire sources.

A relevant example of this type of system is the one proposed in [28], which introduced an IoT framework for intelligent evacuation. In practice, sensors connected via an ad hoc network detect critical events (such as a fire outbreak or smoke presence) and transmit the data to a central server. This server then computes optimal evacuation routes, tailored to the building’s context, and communicates them to users through an intuitive interface. Essentially, the IoT network functions as the building’s central system, collecting data and providing a decision-making vector that guides people toward safe paths, avoiding fire hotspots, smoke areas, or overcrowded routes.

Moreover, such systems often utilize intelligent routing algorithms; for instance, in the mentioned research, Q-learning is employed to determine optimal evacuation routes, considering both the structural layout of the building and emergency conditions.
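To make the idea of Q-learning-based route selection more concrete, the sketch below trains a tabular Q-function on a tiny hypothetical floor graph. The layout, the reward values (+10 for reaching the exit, -10 for entering a hazardous node, -1 per step), and the learning parameters are assumptions chosen for illustration; they are not taken from the cited work.

```python
import random

def train_q_table(layout, exits, hazards, episodes=2000, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning: a state is a node, an action is the neighbour to move to.

    Assumes every non-exit node has at least one neighbour.
    """
    rng = random.Random(seed)
    q = {node: {nxt: 0.0 for nxt in nbrs} for node, nbrs in layout.items()}
    starts = [node for node in layout if node not in exits]
    for _ in range(episodes):
        state = rng.choice(starts)
        for _ in range(50):  # cap the episode length
            # Random exploration is sufficient because Q-learning is off-policy.
            action = rng.choice(list(q[state]))
            if action in exits:
                reward, future = 10.0, 0.0
            else:
                reward = -10.0 if action in hazards else -1.0
                future = max(q[action].values(), default=0.0)
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            if action in exits:
                break
            state = action
    return q

def greedy_route(q, start, exits, max_steps=20):
    """Follow the highest-valued action from each node until an exit is reached."""
    route = [start]
    while route[-1] not in exits and len(route) <= max_steps:
        state = route[-1]
        route.append(max(q[state], key=q[state].get))
    return route

# Hypothetical layout: stairs_a is the short way out but is reported as hazardous.
layout = {
    "office": ["hall", "stairs_a"],
    "hall": ["office", "stairs_b"],
    "stairs_a": ["office", "exit"],
    "stairs_b": ["hall", "exit"],
    "exit": [],
}
q_table = train_q_table(layout, exits={"exit"}, hazards={"stairs_a"})
route = greedy_route(q_table, "office", exits={"exit"})
```

After training, the greedy policy avoids the hazardous staircase even though it is the shorter way out, because the penalty for entering it outweighs the extra step through the hall.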

In buildings equipped with sensors and actuators, adaptive real-time guidance can respond swiftly to changing evacuation conditions. For example, ref. [29] describes an intelligent system that enables exit signs to dynamically alter their directions based on real-time data regarding fire locations and route conditions. In practice, these adaptive “Exit” signs can guide occupants toward the nearest safe exit. If a corridor becomes unsafe due to smoke or crowding, the system can redirect illuminated arrows toward alternative routes that have been assessed as safer.

Smoke and temperature sensors provide data used to evaluate route safety, while motion sensors or video cameras can estimate congestion along specific evacuation pathways. This system is particularly useful for the general public; however, additional communication channels must be incorporated to accommodate visually impaired individuals. For instance, in addition to visual directional signs, the system would need to issue auditory signals or voice messages to guide blind occupants.

Commercial solutions offering such auditory exits already exist; one notable example is the Notifier ONYX ExitPoint system developed by Honeywell [30]. It uses specialized speakers positioned near exits or along evacuation paths, which emit distinct, intermittent audio signals in the event of an alarm. This auditory signal guides occupants, including those with visual impairments, toward exits. Studies have demonstrated that these auditory indicators significantly reduce evacuation time and confusion, effectively compensating for the lack of visual signage for individuals who are visually impaired.

2.1. Tracking and Localization

Another role of IoT networks in evacuation scenarios involves tracking and locating individuals. Proximity sensors, RFID tags, Bluetooth, or Ultra-Wideband (UWB) systems can be strategically installed, allowing real-time identification of each person’s location. A relevant example is provided by the patent [31], describing an “evacuation navigation device” featuring strategically placed “position monitors” within a building. These monitors can detect the location of user-held devices such as phones, wristbands, or other wearable gadgets, and relay this information to a central system. Consequently, the system can send precise instructions tailored to each individual. Additionally, it can track who has safely reached the designated outdoor assembly point. Once an occupant reaches this external location, exit sensors mark them as evacuated, aiding emergency teams in identifying who remains inside. Such evacuation tracking is particularly beneficial for individuals with disabilities, who may become trapped; their exact location can be quickly identified, enabling rapid rescue operations.

Furthermore, IoT sensors in buildings also play a crucial role in the early alerting of individuals who are visually impaired. Prototypes have been developed to enhance standard alarm systems. One notable example is the First Response Interactive Emergency System (FRIES), proposed by Elmannai [32]. FRIES integrates smoke, gas, and temperature sensors connected to a central microcontroller, which, upon detecting fire or gas leaks, alerts visually impaired individuals through multiple mechanisms: flashing LEDs, speakers emitting voice instructions, and vibration motors installed in beds and sofas, intended to awaken sleeping individuals. Additionally, the system automatically notifies emergency services and personal care contacts. FRIES is designed to be affordable, accessible, and easily installable, addressing a genuine issue: statistics indicate that 90% of people with disabilities reside in developing countries, have low incomes, and cannot afford expensive solutions. While FRIES and similar systems focus primarily on immediate local protection and alerting, they represent a significant advancement, ensuring that visually impaired individuals swiftly become aware of the danger and receive clear messages. This initiates the evacuation process, which is subsequently supported by other guiding technologies.

2.2. Combined Systems

Another relevant example of integrating IoT technologies for fire detection tailored to visually impaired users is the solution proposed in [33]. The authors developed an innovative system that combines a camera with artificial intelligence, integrated into a pair of smart glasses. The camera mounted on these glasses functions as a portable IoT sensor capable of capturing environmental data. The detection algorithm employed, YOLOv4, processes captured images to identify flames or smoke. Upon detecting a fire, the system immediately transmits audio notifications to the user and can also alert emergency response teams. This system effectively transforms the visually impaired user into an active participant capable of recognizing hazards and initiating the evacuation process, not only in fire emergencies but also during floods or other disasters. This approach highlights the crucial role of assistive technologies in enhancing autonomy among visually impaired individuals by enabling them to evacuate independently with minimal assistance.

IoT sensor networks installed within buildings play a fundamental role in modern evacuation solutions due to their capability to gather critical environmental and positional information about occupants. These systems can disseminate instructions through various actuators, including sound alarms, visual signals, or mobile notifications. Among the advantages of these networks are comprehensive building coverage and the possibility of automating evacuations through a centralized system that monitors real-time events and makes optimal decisions accordingly. However, implementing such systems requires significant investment and a robust communication infrastructure capable of enduring emergency conditions. Furthermore, installing these networks in older buildings presents technical challenges. Additionally, while these systems are highly beneficial for coordinating rescue teams, they are not inherently accessible to visually impaired individuals without the use of appropriate auditory interfaces.

Therefore, IoT solutions should be complemented by personal devices carried by users, capable of receiving infrastructure signals and translating them into clear and direct personalized guidance adapted to individual needs.

The paper [34] proposes an autonomous smart cane designed specifically for indoor navigation, integrating ultrasonic sensors and a compact Pixy2 camera for recognizing objects, colors, and visual signs within the environment. Ultrasonic sensors enable real-time obstacle detection, while user guidance is achieved through a cloud-based navigation algorithm, which calculates optimal paths using Dijkstra’s algorithm and delivers clear auditory instructions. The device incorporates an ESP32 microcontroller with IoT connectivity, facilitating continuous data exchange between the cane and a cloud-based system that stores spatial information and dynamically updates obstacle data. Audio feedback is provided via an integrated text-to-speech module. Additionally, the system can operate in multiple modes (fully connected, eco, and offline), enhancing its autonomy and flexibility. Indoor localization is improved through fixed IoT beacons, achieving spatial positioning accuracy within approximately 0.5–1 m. The authors validated their system in a realistic office environment, setting obstacle-defined routes, with a sighted user (simulating a visually impaired individual) successfully navigating predefined paths, thereby demonstrating the feasibility and effectiveness of the proposed solution.

Another example of smart cane solutions for facilitating navigation and mobility can be seen in [35], where the infrastructure consists of color lines that, together with the smart cane, can deliver route guidance. Navigation is an important topic for visually impaired people, particularly when accompanied by a system that feels familiar to them, as described in [36].

Modern research converges on phone-centric, multi-modal aids that run edge AI locally to minimize latency. DeepNAVI, for example, executes a lightweight CNN entirely on an Android phone, converting the camera into a real-time obstacle detector; blind participants reported 25% faster room-to-room navigation than with a cane alone [37]. In [34], the authors built a vision-based fire-alert assistant that recognizes flame/smoke patterns with 99% accuracy and speaks alerts to blind users without cloud dependence. Wearable efforts, such as Kumar’s voice-guided smart cane (utilizing ultrasonic, IR, and flame sensors), deliver tactile and spoken warnings while staying under a USD 50 bill of materials [38]. At the smart-city scale, ref. [39] proposes an AI-assisted IoT framework that fuses building alarms, BLE beacons, and cameras to guide visually impaired pedestrians, emphasizing on-device inference to survive connectivity loss.

Most works focus on perception (obstacle or fire detection), but not on dynamic routing; they assume fixed paths or rely on remote clouds.

Edge-centric designs dominate the recent IEEE literature because they ensure service continuity when the Internet fails. The authors of [40] prototype SB112, an edge gateway that fuses multi-sensor streams and auto-initiates NG112 calls in 32 ms. The authors of [41] propose a Resilient-Edge IoT mesh with multi-radio failover; simulations maintain 96% packet delivery under partial infrastructure collapse. In paper [42], the authors present Aegis, a cloud–edge cooperative reinforcement learning stack for multi-hazard, multi-building scenarios, where inference runs on edge nodes in under 20 ms, while the cloud handles heavy retraining.

The paper [43] presents an intelligent, wearable guidance system that integrates multiple sensing and communication modules into a single standalone platform that does not require a smartphone. IBGS (Intelligent Blind Guidance System) adopts a modular architecture centered around a high-performance GD32 microcontroller, which is powerful enough for multiple tasks. It incorporates a video camera for visual perception tasks such as obstacle identification and traffic light recognition, a neural network-based voice recognition module with a ConvTransformer-T architecture for handling voice commands, distance measurement modules using ultrasonic sensors for obstacle detection, a GPS receiver for localization, and an NFC chip facilitating electronic payments or object identification via tags. Additionally, the device features Wi-Fi connectivity, allowing access to cloud-based databases. The system provides real-time audio feedback to users, delivering navigation instructions and alerts, and can process voice commands, enabling hands-free interaction. Practical tests conducted in urban settings demonstrated that IBGS addresses everyday navigation challenges, including obstacle avoidance and traffic light identification, significantly enhancing independent mobility for visually impaired individuals. However, practical limitations exist, such as dependency on data connectivity for cloud access and hardware complexity. Nevertheless, the device remains relatively cost-effective, with the authors highlighting their goal of widespread affordability and accessibility.

Indoor navigation, particularly within buildings or spaces that lack GPS signals, remains a significant challenge for individuals who are visually impaired. An innovative approach presented in 2022 introduces a navigation system based on Radio Frequency Identification (RFID) technology to provide effective guidance in these environments [44]. The proposed solution comprises a smart glove and a smart shoe, both equipped with RFID readers, which communicate with passive RFID tags strategically placed on the floors or attached to relevant objects within the user’s environment. Practically, indoor pathways such as corridors or intersections can be affordably marked using passive RFID tags. As users move through the building, the RFID reader embedded in the shoe scans floor tags, enabling the system to accurately determine their current position and travel direction. Simultaneously, when users move the gloved hand toward labelled objects, such as doors, the glove-mounted RFID reader identifies these items and provides relevant contextual information. All navigation-related data, including RFID data and associated messages, are managed by a Raspberry Pi device, which also runs an audio interface that delivers voice instructions to users through headphones. Experimental evaluations conducted in a test environment demonstrated that the platform is reliable, energy-efficient, and sufficiently accurate for indoor navigation tasks, providing correct directional guidance. A key advantage of this approach is its relatively low cost and simple infrastructure, as passive RFID tags are inexpensive and require no power source. However, the main limitation is the necessity of preparing the environment with RFID tags and integrating their positions and meanings into a central database. In conclusion, the system is particularly suited to well-defined spaces or institutions capable of installing such markers, including schools for the visually impaired, office buildings, hospitals, and train stations, substantially improving independent navigation compared to relying solely on memorization.
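The way successive floor-tag reads can yield both position and travel direction can be sketched in a few lines of Python. The tag identifiers, their coordinates, and the compass convention (0° along the +y axis, 90° along the +x axis) are hypothetical and only illustrate the principle; they are not taken from the cited system.

```python
import math

# Hypothetical tag database: tag ID -> (x, y) position in metres on the floor plan.
TAG_POSITIONS = {
    "tag_A": (0.0, 0.0),
    "tag_B": (0.0, 2.0),
    "tag_C": (2.0, 2.0),
}

def locate(tag_id, tag_positions=TAG_POSITIONS):
    """Current position is simply the stored position of the last tag read."""
    return tag_positions[tag_id]

def heading_deg(prev_tag, curr_tag, tag_positions=TAG_POSITIONS):
    """Travel direction derived from two consecutive tag reads, in degrees."""
    x0, y0 = tag_positions[prev_tag]
    x1, y1 = tag_positions[curr_tag]
    # atan2(dx, dy) measures the angle clockwise from the +y axis.
    return math.degrees(math.atan2(x1 - x0, y1 - y0)) % 360.0

position = locate("tag_C")
heading = heading_deg("tag_B", "tag_C")
```

A single read gives only a position; at least two consecutive reads are needed before a direction can be announced to the user.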

Another research direction leverages modern communication infrastructures, such as 5G networks and edge computing, to enhance the performance of assistive devices. A representative example is the VIS4ION (Vision for Vision) project presented in 2023, which investigates a processing solution for smart glasses designed to assist visually impaired individuals [45]. The fundamental concept involves equipping glasses with high-resolution cameras capable of capturing detailed environmental information. Instead of processing this substantial volume of data on the device, which would necessitate powerful and heavy hardware mounted directly on the wearable, the system transmits captured images through a 5G network to a nearby edge computing server. There, advanced artificial intelligence algorithms, too computationally intensive for mobile devices, perform extensive processing tasks, including complex object detection, identification, and even semantic scene descriptions. The processed results are quickly relayed back to the user. Consequently, users can benefit from sophisticated AI capabilities, such as recognizing distant objects, detecting fine details, or generating comprehensive scene descriptions, using just a pair of smart glasses connected to advanced systems via a smartphone and a 5G network. Initial experiments involving simulated urban navigation scenarios have demonstrated system feasibility, confirming that 5G network latency is sufficiently low for continuous, updated instructions, while object-recognition accuracy significantly improves due to enhanced computing resources. The advantages of this architecture include device miniaturization, since processing is transferred to dedicated infrastructure, and the possibility of delivering AI services more advanced than what would be feasible locally. Nevertheless, limitations clearly arise from reliance on stable and extensive 5G coverage; without a robust connection, communication is disrupted, and device functionality is lost. Additionally, privacy concerns arise from the external transmission and processing of captured images, alongside security issues, both of which must be adequately addressed before widespread adoption. Despite these challenges, initiatives like VIS4ION demonstrate the potential to integrate cutting-edge communication technologies into assistive solutions, supporting the development of smart cities that can deliver real-time, contextual support to individuals with disabilities.

Several papers suggest that evacuation time can be improved by utilizing enhanced evacuation systems that are also inclusive [46,47].

2.3. Fire Detection and Safety Technologies

A fire detection and alarm system is a critical component of building safety, designed to ensure the early detection of fire and the timely alerting of occupants and emergency responders, thereby minimizing both casualties and property loss. In accordance with international standards (EN 54 series in Europe and NFPA 72 in the United States) [48,49], such systems consist of a network of interconnected devices and subsystems that provide detection, notification, control, and communication functions. At a high level, the system architecture includes a fire alarm control panel, various types of automatic fire detectors, audible and visual notification appliances (sirens, strobes), manual call points (panic buttons), and interfaces for communication with auxiliary building systems (e.g., public address for evacuation, automatic door release). In addition, the system must be capable of sending remote alerts, including alarms and fault conditions, to fire departments or central monitoring stations. The fire alarm control panel is the main component of the system, continuously supervising all initiating devices (detectors, manual call points) and controlling all notification appliances (sirens, strobes, relays). Under normal conditions, the panel indicates a normal state. However, upon fire detection or manual activation, it switches to an alarm state and initiates evacuation notifications. Modern panels also monitor and report issues such as open circuits and low battery levels [50]. The power architecture is dual: a primary supply from the mains and a secondary supply provided by backup batteries. According to NFPA and EN requirements, the batteries must support at least 24 h in standby mode, followed by 5–15 min in full alarm, depending on the presence of voice evacuation systems. Large facilities may also include automatic emergency generators, but battery backup remains mandatory as a last-resort power source.
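The standby-plus-alarm requirement translates into a simple capacity estimate: the standby current multiplied by the standby duration, plus the alarm current multiplied by the alarm duration. The Python sketch below performs this arithmetic; the current draws and the 25% safety margin are hypothetical values chosen for the example, and an actual design must follow the calculation method prescribed by the applicable standard and the panel manufacturer.

```python
def required_battery_ah(standby_current_a, alarm_current_a,
                        standby_hours=24.0, alarm_minutes=15.0,
                        safety_margin=1.25):
    """Estimate the backup battery capacity in ampere-hours.

    safety_margin is an assumed derating factor, not a value from the source.
    """
    standby_ah = standby_current_a * standby_hours
    alarm_ah = alarm_current_a * alarm_minutes / 60.0
    return (standby_ah + alarm_ah) * safety_margin

# Hypothetical panel: 0.25 A in standby, 2.0 A in full alarm.
with_voice_evac = required_battery_ah(0.25, 2.0, alarm_minutes=15.0)
without_voice_evac = required_battery_ah(0.25, 2.0, alarm_minutes=5.0)
```

For these example values the standby period dominates: it contributes 6.0 Ah, while 15 min of alarm adds only 0.5 Ah, giving about 8.1 Ah after the margin is applied.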

Automatic fire detectors sense the presence of fire (smoke, heat, flame, or gas) and transmit alarm signals to the control panel. Common categories include:

Heat detectors, which may be fixed-temperature or rate-of-rise, trigger alarms when critical temperature thresholds or rapid increases are detected.

Smoke detectors (photoelectric, ionization, beam-type), which detect airborne particles. Photoelectric devices rely on light scattering, while ionization types measure ionic current disruption by smoke particles. Beam detectors are deployed in large open spaces.

Flame detectors (UV, IR, or combined UV/IR), which sense the radiation emitted by flames and are widely used in industrial applications.

Gas and CO detectors, which identify hazardous gases or combustion by-products.

Linear heat detection cables, which provide continuous temperature monitoring across long tunnels or cable trays.

Modern systems increasingly deploy multi-sensor detectors (for example: smoke + heat) to improve accuracy and reduce false alarms. Furthermore, addressable technologies allow each detector to have a unique digital address, providing exact fire location data to the panel. By contrast, conventional detectors identify only the alarm zone. Current trends strongly indicate the use of addressable systems, especially in medium- to large-sized buildings.

Notification appliances ensure occupants are alerted promptly:

Audible alarms (sirens) provide temporal-3 fire signals (three short pulses + pause), meeting NFPA 72 sound level requirements of at least 15 dB above ambient noise.

Visual alarms (strobes) are critical for inclusive evacuation, particularly for the hearing-impaired. EN 54-23 and ADA regulations specify coverage and intensity. Many appliances integrate a siren + strobe into a single unit.

Voice evacuation systems (EVAC) use loudspeakers to broadcast prerecorded or live evacuation instructions. These are especially effective in high-occupancy or high-rise buildings. Standards require voice intelligibility (EN 54-16, EN 54-24) [11,49].

Modern fire detection and alarm systems constitute an integrated safety ecosystem, where detection, notification, system control, communication, and evacuation aids function in a coordinated manner. By aligning system design with international standards such as NFPA 72, EN 54, ISO 7010, and NFPA 101, these technologies ensure reliability, accessibility, and regulatory compliance [9,10,11,48,49]. Current research trends emphasize IoT integration, multi-sensor fusion, and enhanced interoperability, aiming to further improve the robustness and adaptability of such systems in the context of smart buildings.

Based on the above analyses, a decentralized evacuation support system for visually impaired people is proposed. It combines several important advantages: it is designed to track the current position of each user, make personalized and quickly adjustable decisions, and indicate the safest exit alternatives. For this purpose, the risk degree associated with the current exit route can be updated using mathematical relations that weight the direct influence of specific factors on the fire evolution, including the increase in smoke density, the gradual increase of the environmental temperature, and the sudden occurrence of open flames. These design characteristics have the potential to increase safety and reduce total evacuation time. Another important advantage is the potential use of information provided by sensors belonging to the standard fire alarm system, which contributes to a decrease in the overall infrastructure cost.
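One possible form of such a weighted risk relation is sketched below in Python. The weights, the normalization of the temperature term between an assumed ambient and an assumed critical value, and the choice of taking the worst segment as the route risk are all illustrative assumptions; the text does not specify the actual relations at this point.

```python
from dataclasses import dataclass

@dataclass
class SegmentReading:
    smoke_density: float    # normalised to [0, 1]
    temperature_c: float    # degrees Celsius
    flame_detected: bool

def _clamp(value):
    return min(max(value, 0.0), 1.0)

def risk_degree(reading, w_smoke=0.5, w_temp=0.3, w_flame=0.2,
                ambient_c=20.0, critical_c=60.0):
    """Weighted sum of smoke, temperature, and flame terms, in [0, 1].

    All weights and temperature limits are hypothetical example values.
    """
    smoke_term = _clamp(reading.smoke_density)
    temp_term = _clamp((reading.temperature_c - ambient_c) / (critical_c - ambient_c))
    flame_term = 1.0 if reading.flame_detected else 0.0
    return w_smoke * smoke_term + w_temp * temp_term + w_flame * flame_term

def route_risk(readings):
    """Assumption: a route is as risky as its most dangerous segment."""
    return max(risk_degree(r) for r in readings)

route = [SegmentReading(0.0, 20.0, False), SegmentReading(0.4, 40.0, False)]
current_risk = route_risk(route)
```

Recomputing `route_risk` each time a new sensor reading arrives gives a value that can be compared against a threshold to decide whether the user should be rerouted.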

3. The Guided Emergency Evacuation System

The design of a guided emergency evacuation system must bring together two critical requirements: life-safety reliability and inclusive accessibility. In a fire scenario, especially one involving smoke-filled environments, traditional visual cues such as exit signs and printed maps are rendered less effective for visually impaired individuals [51,52]. A digital assistant capable of delivering real-time, non-visual guidance directly to users can bridge this gap if it operates with low latency, high localization accuracy, and autonomy from external network infrastructure. By coupling the building’s existing fire-detection loop to a smartphone routing engine and by adding tactile and auditory guidance channels, the system can ensure that every occupant receives instructions in time to reach safety.

This section outlines the architectural framework and functional requirements for such a system, ensuring that design decisions are informed by both regulatory compliance and performance criteria.

The main requirements that formed the basis for the design of the proposed system were:

To provide and analyze the real-time information transmitted by the alarm system (sensors).

To compute the fastest path for evacuation, considering the context of the moment.

To obtain an evacuation route in the form of successive indications easily interpreted by the visually impaired persons.

3.1. The Main Components of the Guided Evacuation System

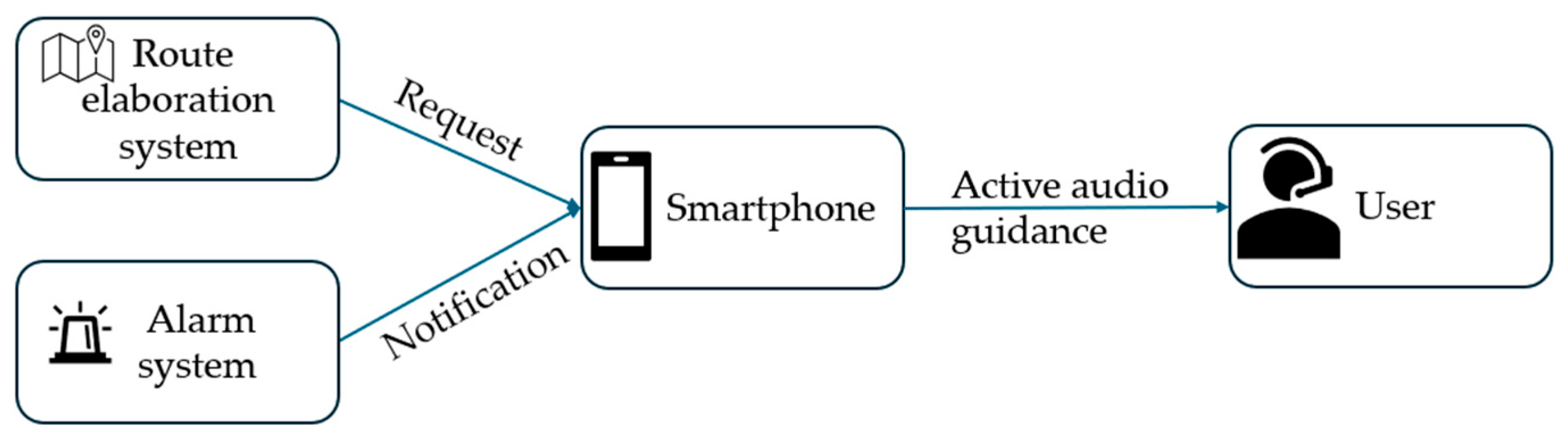

The block diagram of the proposed system is shown in Figure 1. In emergency situations, the alarm system sends a notification to the smartphone. Based on the user’s location, the Route Elaboration System responds to the specific alarm request with the most efficient evacuation route, considering the evacuation map and the location of the sensors that were the source of the alarm.

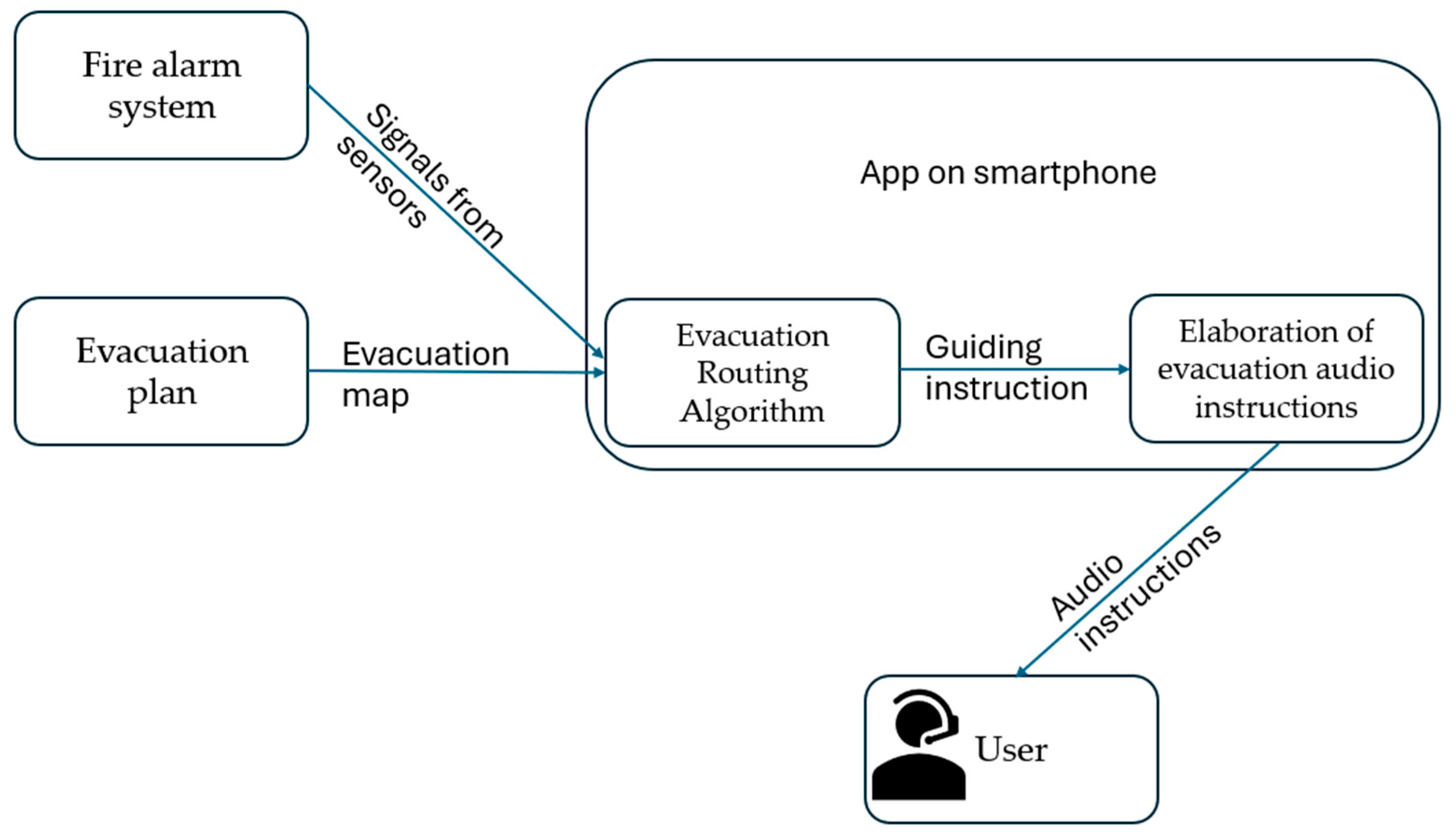

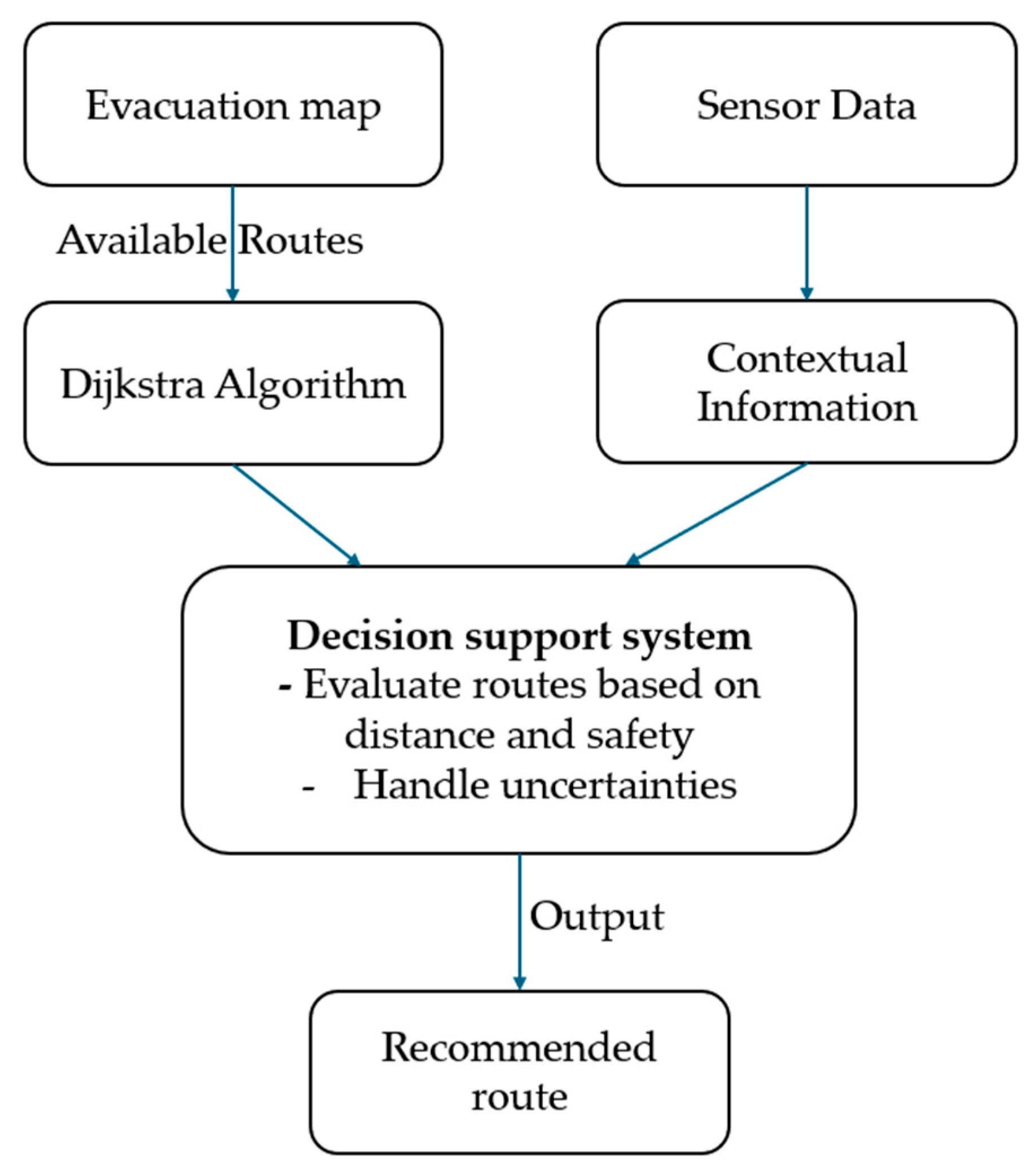

Figure 2 presents the interconnections among the main elements of the route delivery system, including: an evacuation map, a map of different relative localizations, a decision support system, real-time data collected from the alarm system’s sensors, and an output route determination.

For practical reasons, two design and implementation options were considered:

An approach that relies strictly on components already available in conventional fire alarm systems, ensuring full compatibility with existing infrastructures and compliance with regulatory standards;

Another approach that incorporates low-cost components that can be strategically installed to enhance both detection accuracy and overall evacuation safety, while maintaining cost-efficiency.

The architecture is intentionally decentralized; every life-critical computation takes place on the user’s handset so that the system remains functional even if some components of the communication infrastructure collapse. The smartphone app receives the general evacuation map of the building upon the user’s arrival.

To avoid the overhead associated with dynamic routing protocols, reduce latency, and improve overall performance, a two-step routing protocol was developed. First, a static algorithm calculates all possible routes from multiple points of interest to the exits. Then, based on information received from the sensors, the decision-making system selects the shortest and safest path for evacuation. The information flow diagram is shown in Figure 3.
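The two-step scheme can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the route table, the node names (`R508`, `S1` to `S4`, `Exit1`, `Exit2`), and the numeric costs are hypothetical placeholders, not the data used in the prototype.

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str]
Route = List[str]

# Step 1 (offline): candidate routes per point of interest, computed once.
# Node names and routes are hypothetical placeholders.
STATIC_ROUTES: Dict[str, List[Route]] = {
    "R508": [
        ["R508", "S3", "S4", "Exit2"],
        ["R508", "S3", "S2", "S1", "Exit1"],
    ],
}


def route_cost(route: Route, current_cost: Dict[Edge, float]) -> float:
    """Sum the current (sensor-adjusted) cost of every edge on a route."""
    return sum(current_cost[(a, b)] for a, b in zip(route, route[1:]))


def select_route(start: str, current_cost: Dict[Edge, float]) -> Route:
    """Step 2 (online): pick the cheapest precomputed route under current costs."""
    return min(STATIC_ROUTES[start], key=lambda r: route_cost(r, current_cost))


# Nominal edge costs in metres (hypothetical): the short route to Exit2 wins.
costs: Dict[Edge, float] = {
    ("R508", "S3"): 4.0, ("S3", "S4"): 10.0, ("S4", "Exit2"): 3.0,
    ("S3", "S2"): 10.0, ("S2", "S1"): 10.0, ("S1", "Exit1"): 3.0,
}
nominal = select_route("R508", costs)

# A hazard reported on S3 -> S4 inflates that edge, so the longer route is chosen.
costs[("S3", "S4")] = 40.0
rerouted = select_route("R508", costs)
```

In this sketch the online step only sums edge costs over a handful of candidate routes, which is the kind of workload reduction the two-step protocol aims for.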

3.2. The Decision Support System

The decision support system:

Improves the evacuation efficiency by finding reliable routes, ensuring coverage of all exits and paths.

Evaluates and selects the best route by considering distance, time, and safety factors.

Incorporates reasoning techniques to handle uncertainty (risk assessment).

This comprehensive approach ensures that at any given moment, the user is guided towards an exit path that is not only the shortest in distance, but also the safest given the circumstances, utilizing all available information and computational intelligence to make critical decisions in real-time.

The building already hosts a conventional EN 54-5 [49] fire-detection loop (smoke and heat sensors).

For implementation purposes, we can retrofit BLE repeaters (utilizing a low-cost coin-cell CR2477 battery with 4-year autonomy) that sniff Zigbee packets, encapsulate them in BLE Advertising PDUs, and flood the corridor every 200 ms.

Detector → phone latency budget: Zigbee hop (25 ms worst case) + BLE broadcast (45 ms average scanning latency) ≤ 70 ms. This leaves about 130 ms for on-device pathfinding within the 200 ms total design target.

Indoor positioning fuses three sources:

Step-counter + gyroscope for dead reckoning;

BLE RSSI trilateration from wall-mounted beacons (same hardware as repeaters);

Optional UWB anchors where infrastructure exists.

3.3. Redundant Physical Cues

To comply with ISO 21542 §6.4 [9], 30 mm PVC tactile strips (ribbed profile, R9 anti-slip) are glued 250 mm off the wall along the computed routes. The smartphone instructs users to “follow the tactile strip” whenever their lateral deviation exceeds 0.6 m, as detected via an accelerometer side-tilt pattern.

Fire stairs have gooseneck handrail extensions to guide cane contact. At horizontal landings, Braille plaques display floor numbers (Table 1).

3.4. Considerations Regarding Cyber-Security and Privacy

Users are required to create a local account within the application; credentials are not provided for management over the Internet, on a server, or in the cloud. To upload the building plan, users receive a file from the building administrators, which is then imported into the application. For this upload process, personalized credentials are also provided. Upon entering the building, the user launches the application that starts to operate in the background until it is manually deactivated.

BLE frames are protected by a 16-bit CRC and authenticated with a 128-bit CBC-MAC; keys rotate every 30 days. The phone never transmits user location; it only receives broadcast alarms, so GDPR risk is minimal.

The system is privacy-preserving by design. Key aspects include:

- No continuous tracking: no GPS or network-based positioning is used.

- No transmission of personal data: the app listens to broadcast hazard alarms but never transmits the user’s position or movement data to the cloud or other users.

- GDPR-compliant architecture: the system does not store or process identifiable user data externally. All computations and logs remain on the user’s phone unless explicitly exported by the user.

3.5. The Best Route Determination

The evacuation problem is formalized as a dynamic, weighted graph search. We represent the building floor plan as a directed graph G = (V, E), where each node v ∈ V is a decision point (e.g., a door, stair landing, or corridor intersection), and each edge e ∈ E is a traversable segment (e.g., a corridor or doorway). Four numerical attributes are attached to every edge and updated in real time (Table 2).

Depending on the fire evolution (information received from sensors), the cost C(t) of an edge with geometric length L is computed as:

$$C(t) = L \left(1 + \alpha\, S(t) + \beta\, D(t) + \gamma\, F(t)\right)$$

where α is the smoke sensitivity coefficient. This parameter weights the influence of smoke density (S) along the edge. A higher α means the route delivery system avoids smoky areas more strongly, increasing the edge cost significantly when smoke is detected. Typical value in our model: α = 0.8. In simulation and testing, S is obtained from the smoke sensors deployed in the building.

β is the crowd density coefficient. This parameter reflects the penalty associated with pedestrian congestion (D). Higher β discourages routing through crowded areas, aiming to avoid bottlenecks. Value set in the example: β = 0.5.

γ is the flame proximity penalty, applied when an edge is adjacent to an active flame sensor (F = 1). γ has the highest weight, making such paths prohibitively costly to ensure user safety. We also use a clearance surface in our model, within which the penalty remains high. Typical value: γ = 3.0, which is enough to multiply the edge cost by 4 when flames are detected nearby.

For example, with the experimentally set coefficients α = 0.8, β = 0.5, and γ = 3.0, a 10 m corridor with dense smoke (S = 1) and moderate crowding (D = 0.4), but no active flame (F = 0), has an effective length of 10 × (1 + 0.8 × 1 + 0.5 × 0.4 + 3.0 × 0) = 10 × 2.0 = 20 m.
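The worked example can be reproduced with a short Python sketch. It assumes the multiplicative form implied by the example, length × (1 + αS + βD + γF); the function name `edge_cost` and its argument names are illustrative choices, not identifiers from the prototype.

```python
# Coefficient values quoted in the text.
ALPHA, BETA, GAMMA = 0.8, 0.5, 3.0


def edge_cost(length_m: float, smoke: float, crowd: float, flame: int,
              alpha: float = ALPHA, beta: float = BETA,
              gamma: float = GAMMA) -> float:
    """Effective edge length in metres.

    Assumed form: length * (1 + alpha*S + beta*D + gamma*F), where S and D
    are normalised to [0, 1] and F is 1 next to an active flame sensor.
    """
    return length_m * (1.0 + alpha * smoke + beta * crowd + gamma * flame)


# 10 m corridor, dense smoke, moderate crowd, no flame (example from the text).
smoky_corridor = edge_cost(10.0, smoke=1.0, crowd=0.4, flame=0)

# Same corridor with only a flame alarm: the cost is multiplied by 1 + gamma = 4.
flame_corridor = edge_cost(10.0, smoke=0.0, crowd=0.0, flame=1)
```

With the quoted coefficients, the first call evaluates to about 20 m and the second to 40 m, matching the factor of 4 mentioned for γ = 3.0.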

3.6. Route Robustness

A path that becomes invalid due to the failure of a single edge (corridor, door, or stairwell) and has no efficient detour is fragile for real-time guidance. To address this, we introduce a robustness factor V(Π) for each computed path Π. This metric evaluates how much worse the route becomes if any one of its edges is blocked. We determined the robustness using the relation:

$$V(\Pi) = \max_{e \in \Pi} \frac{J_{\mathrm{detour}}(\Pi, e)}{J(\Pi)}$$

where J(Π) is the cumulative cost of the path and J_detour is the cost of the best alternate path from source to destination when edge e of Π is blocked. If V(Π) < 1.3, any single edge failure leads to at most a 30% cost increase, which we consider acceptable for safe evacuation. This constraint is used to maintain navigational reliability, particularly in real-world fire scenarios where the exact evolution of hazards is unpredictable.

To find the best route, the following objective was used:

$$\Pi^{*} = \arg\min_{\Pi} \left[ J(\Pi) + \lambda\, V(\Pi) \right]$$

where J(Π) is the cumulative cost of the path Π, including geometric length, smoke levels, crowd density, and flame proximity; V(Π) is the robustness factor, which quantifies how vulnerable the path is to failure, specifically, how costly the worst-case detour becomes if any edge is blocked; λ is a parameter that controls how much priority is given to robustness over nominal efficiency. This type of cost-plus-robustness formulation is used in evacuation planning and robotics [53].

The path with the minimum cost at time t may include a single point of failure, such as a door that could become blocked and make the route unusable. By adding λV, we bias the planner toward routes that remain feasible even if conditions deteriorate a few seconds later, which can be critical during a spreading fire.
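A minimal Python sketch of the robustness evaluation is given here, assuming V(Π) is the worst-case single-edge detour cost divided by J(Π), as described in the text. The graph, node names, and edge costs are hypothetical, and for brevity the sketch scores only the nominal cheapest path rather than minimizing the objective over all candidate paths.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbour: edge cost}
INF = float("inf")


def dijkstra(graph: Graph, src: str, dst: str,
             blocked: Optional[Tuple[str, str]] = None) -> Tuple[float, List[str]]:
    """Cheapest path src -> dst, optionally ignoring one directed edge."""
    dist = {src: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            path = [dst]
            while path[-1] != src:
                path.append(prev[path[-1]])
            return d, path[::-1]
        if d > dist.get(u, INF):
            continue  # stale queue entry
        for v, w in graph.get(u, {}).items():
            if blocked == (u, v):
                continue
            nd = d + w
            if nd < dist.get(v, INF):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    return INF, []


def robustness(graph: Graph, path: List[str], cost: float) -> float:
    """V(path): worst detour cost over single-edge failures, divided by J(path)."""
    worst = max(dijkstra(graph, path[0], path[-1], blocked=(a, b))[0]
                for a, b in zip(path, path[1:]))
    return worst / cost


def score(graph: Graph, src: str, dst: str, lam: float = 0.2):
    """Return (J + lam * V, V, path) for the nominal cheapest path."""
    cost, path = dijkstra(graph, src, dst)
    v = robustness(graph, path, cost)
    return cost + lam * v, v, path


# Hypothetical four-node floor: two parallel corridors from A to D.
floor: Graph = {"A": {"B": 5.0, "C": 6.0}, "B": {"D": 5.0},
                "C": {"D": 6.0}, "D": {}}
objective, v, path = score(floor, "A", "D")
```

Here the nominal path A → B → D costs 10 and the detour through C costs 12, so V = 1.2, which is below the 1.3 threshold.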

The value of λ is calibrated in Monte Carlo fire simulations [54,55] by selecting the value that minimizes the time-to-exit plus the number of emergency re-routes, thereby balancing speed against robustness. Monte Carlo simulation was chosen because real fires vary widely and no single worst-case scenario covers all hazards; it yields distributions (median, 99th percentile) and failure probabilities, which are crucial for safety certification.

The prototype value is λ = 0.2.

The evacuation route can be modified depending on the evolution of the fire as detected by the sensors.

Table 3 summarizes the impact of low and high coefficient values.

Figure 5 provides an example of an evacuation plan, with the main path indicated by green arrows. If the sensors on the main stairs indicate a fire, another option is to cross that sector of the building to reach the stairs of another sector.

4. Experiments and Results

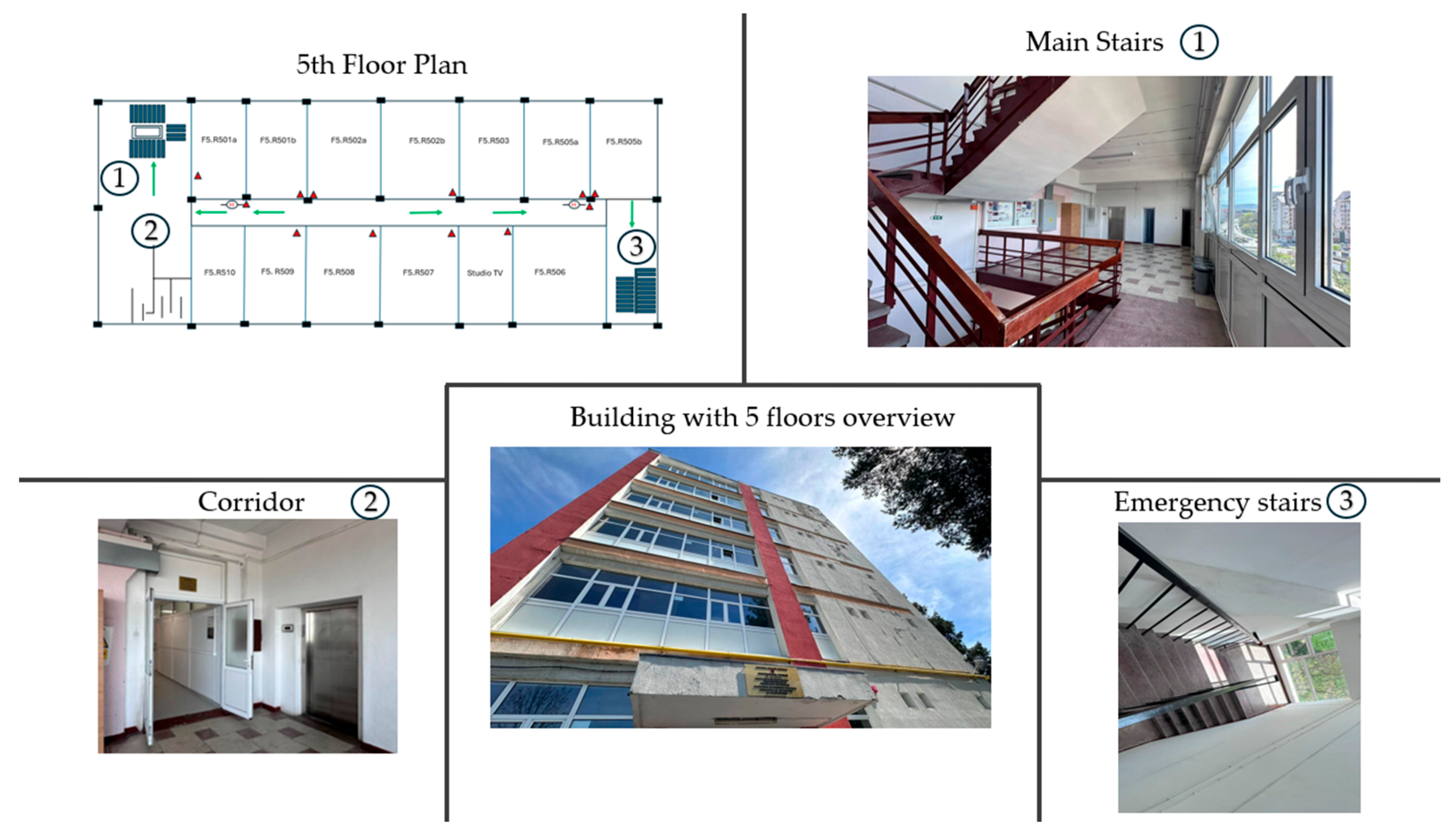

The proposed evacuation strategy was tested in scenarios where the support system was used for guidance purposes in real fire emergency cases. Different fire localizations were simulated using signals transmitted by sensors of an alarm system installed in an existing building with standard evacuation infrastructure. An experimental environment, including a building plan, is presented in

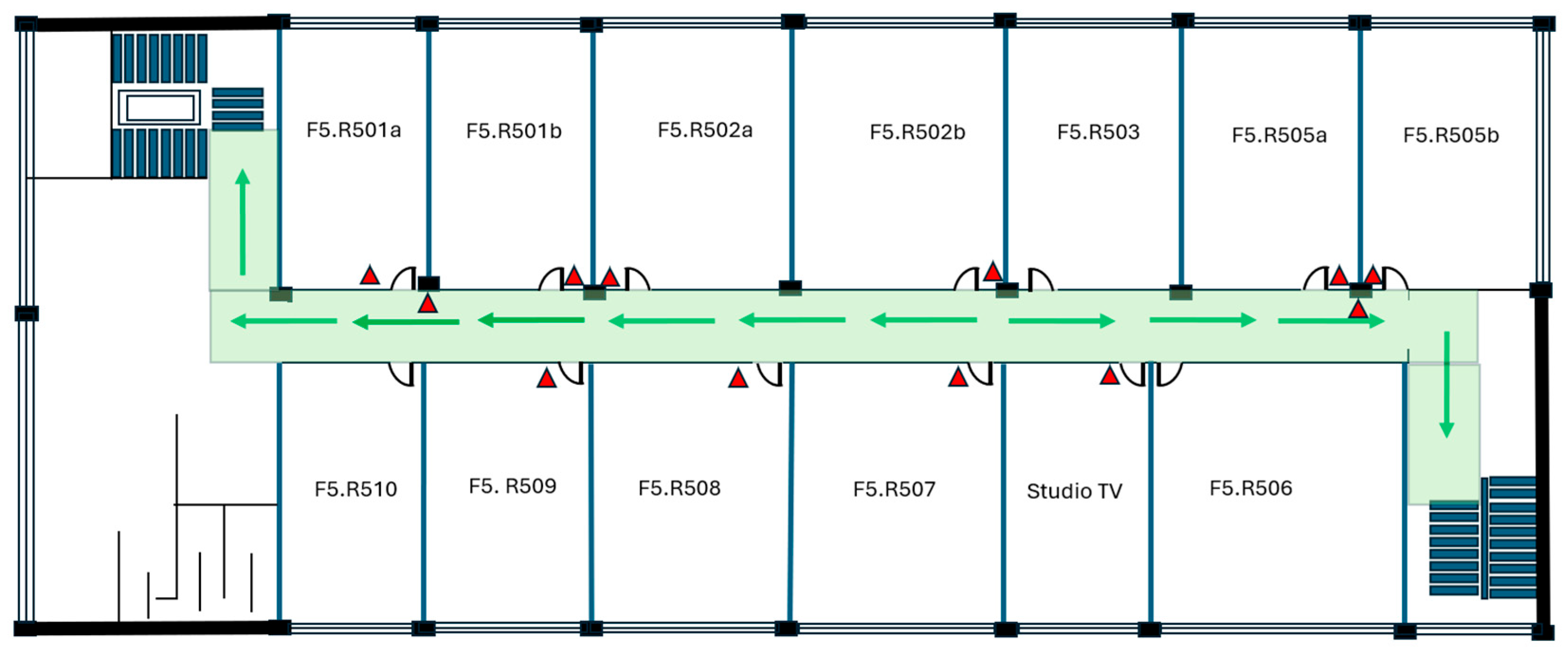

Figure 6, where images represent the main stairs (1), corridor (2), emergency stairs (3), and the building with five floors.

Figure 7 shows the key elements: a simplified evacuation plan including all rooms, an example room located on the 5th floor of the building (1), the corridor (2), the emergency stairs (3), the main entrance to the 5th-floor corridor (4), and the main stairs (5). Each part of the building is shown with its corresponding photo.

Because the support system addresses people with vision impairments, tactile guidance signs were 3D printed for use as a redundant mode of guidance. People with impaired vision feel much more comfortable having an additional means of orientation, such as tactile guidance signs. These signs can serve as an alternative in the event of power loss or an IoT network failure. The printed models included both visual cues (arrows, exit indicators, stairs, and floor numbers) and tactile surface patterns, enabling accessibility for visually impaired users (see Figure 8). By combining dynamic IoT-based guidance with static, highlighted physical tactile signs, the system increases robustness and inclusivity, ensuring that occupants receive clear evacuation instructions.

Figure 9 shows a photo of the actual evacuation plan for the 5th floor, which is displayed in the building for use in an emergency.

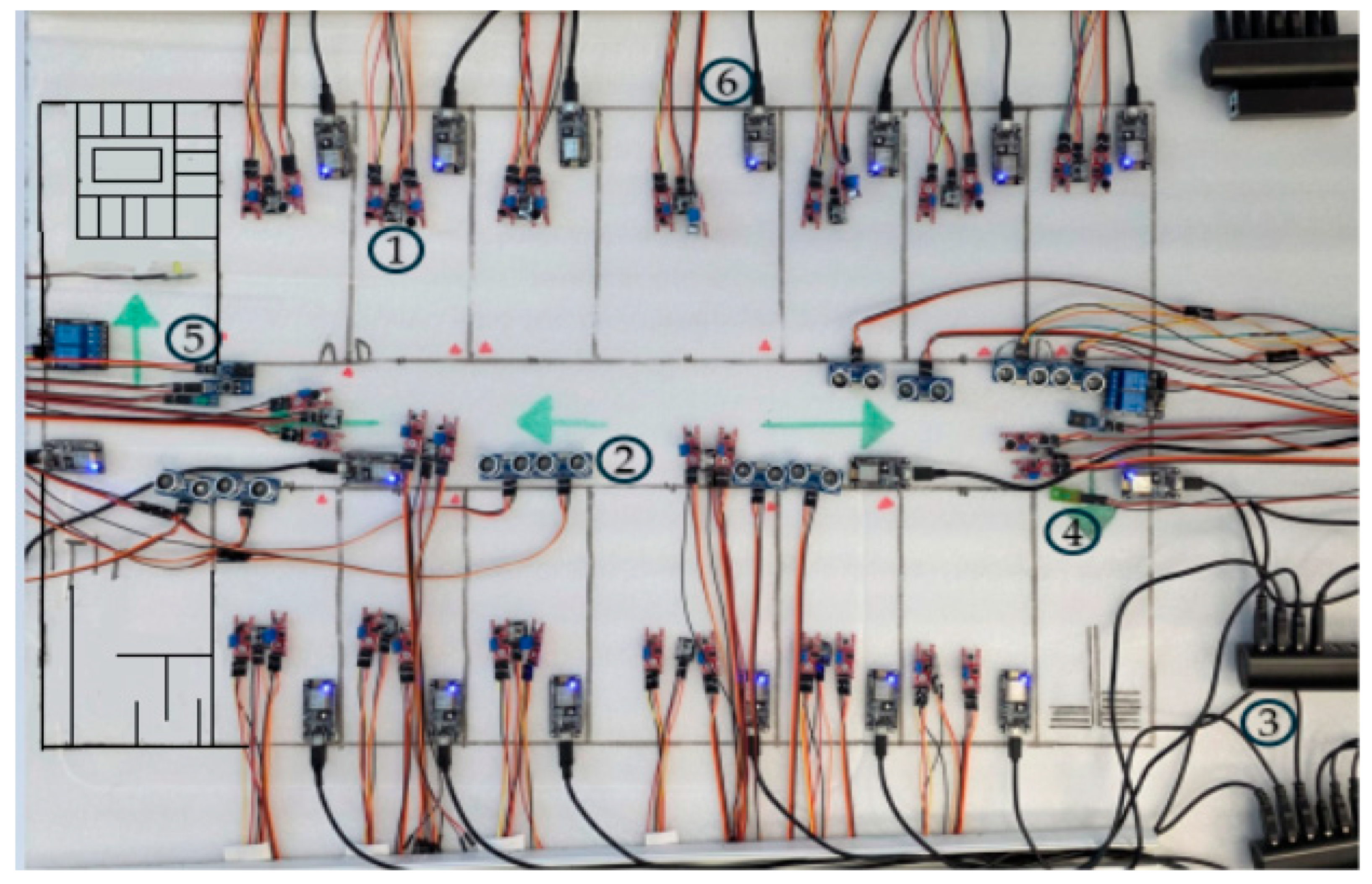

In the testing scenarios, alarm sensors were installed at the corresponding locations of the evacuation plan shown above. For experimental purposes, we recreated this floor on a small scale and equipped it with a complete fire emergency system in accordance with international standards. The corresponding digital plan is shown in Figure 10.

The floor is divided into four zones and, depending on the location of the fire, occupants are directed to a specific exit. This floor has two exits. One is the main exit, with the stairs and the elevators (the latter are automatically blocked in a fire emergency). The other, annotated as Exit2, is the emergency exit.

Each room, zone, and evacuation stairwell is equipped with a detector system consisting of three sensors:

Smoke detector sensor;

Temperature sensor;

Flame detector sensor.

We implemented a test bench for one floor with all sensors in order to develop and test the application and to evaluate fire detection and evacuation timings. We chose an MQ2 as the smoke detector sensor, a DS18B20 as the temperature sensor, and an IR flame detector sensor. All sensors are connected to a NodeMCU Amica, which acts as the alarm zone processing unit and is USB-powered.

We have also installed a horn with a strobe at each level, connected to a NodeMCU Amica through a 2-relay module. Additionally, we have placed two evacuation lights at each end of the floor, powered by a relay from a USB battery power pack connected to one of the USB hubs.

Figure 11 presents the reduced-scale model of the entire building floor, which was created to assess a complex fire system: 1 marks the detector systems (each with three sensors: flame detector, temperature, and smoke detector), 2 the ultrasonic sensors, 3 the LogiLink USB extenders, 4 the EXIT LEDs, 5 the alarms (and corresponding relays), and 6 the computing units (NodeMCUs). Reduced-scale fire modeling was chosen as a recognized technique for evaluating the performance of detection and suppression systems when full-scale experiments are too costly or difficult to control.

All NodeMCUs from all alarm zones are connected through a LogiLink USB extender, which permits the extension of communication over UTP CAT5e cables due to the long distances and centralized/powered operation through USB HUBs (three USB HUBs with eight inputs for each floor). This setup enables the centralization of all information in MathWorks MATLAB on a single computer. Each room is considered a zone, and the corridor is segmented into four zones due to the coverage area of the detectors.

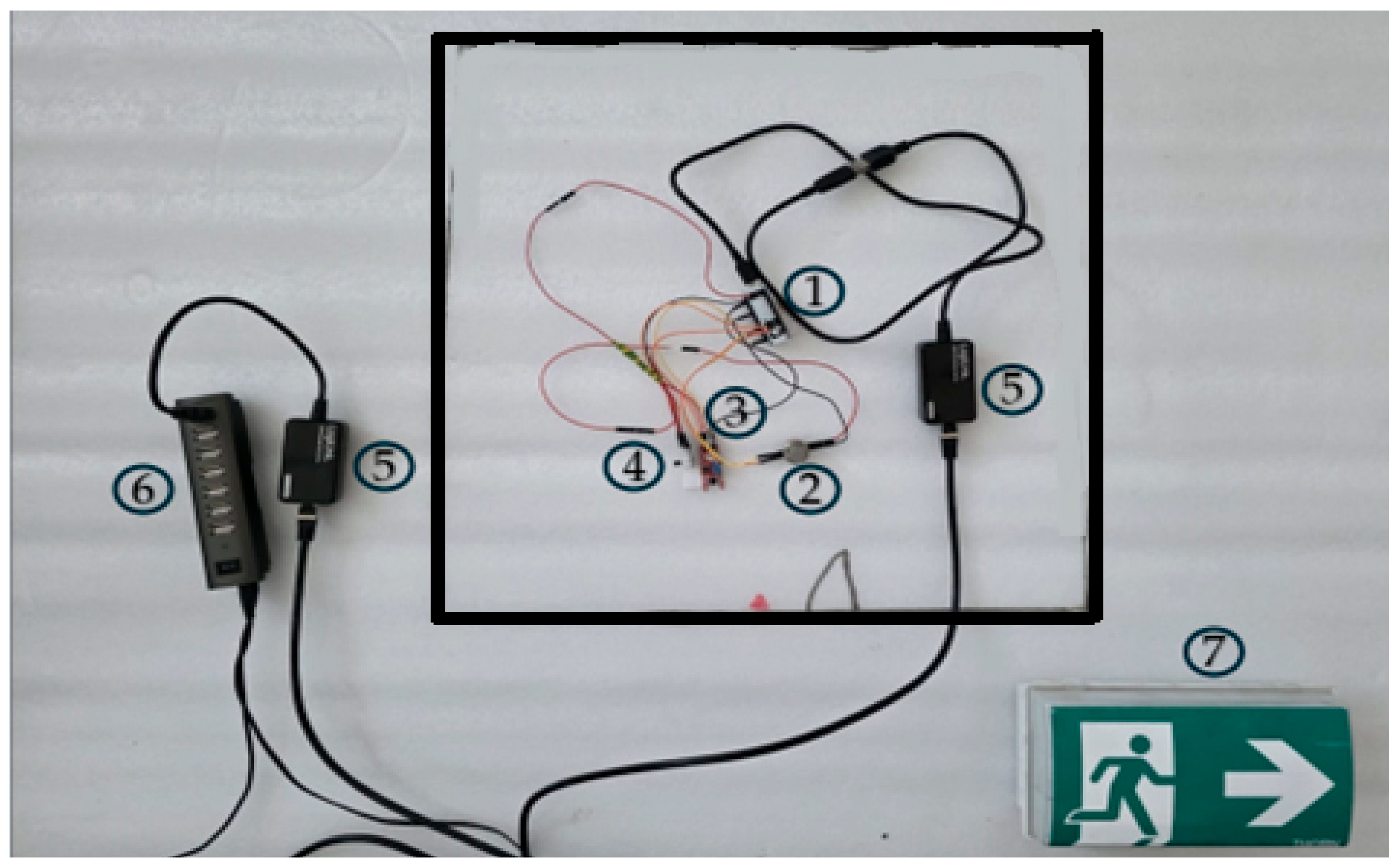

For a clearer view, we prepared a single-room view that includes all the elements and shows how the power supply chain was built using LogiLink USB extenders (Figure 12), where 1 represents the NodeMCU, 2 the smoke detector sensor, 3 the flame detector sensor, 4 the temperature sensor, 5 the LogiLink extender, 6 the USB hub, and 7 the EXIT sign.

In our experimental setup, ultrasonic sensors were employed to measure evacuation times from individual rooms, as well as along corridors and staircases. By continuously monitoring the distance and movement of occupants, these sensors provided accurate estimates of the time required to clear specific areas of the building. In addition to timing, the ultrasonic readings enabled the determination of evacuation direction, allowing us to assess whether occupants followed the intended routes or deviated due to obstacles or congestion. This information is particularly valuable for modeling purposes and for validating the effectiveness of the evacuation guidance system, as it links the temporal dynamics of evacuation with the spatial patterns of movement within the simulated and real test environments. The measured evacuation times were used both in the DTPN-based model and in the MATLAB simulations presented in the next section of the paper.

Figure 13 represents: 1—an ultrasonic sensor, 2—a secondary ultrasonic sensor, 3—the NodeMCU, and 4—the LogiLink extender.
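One way a pair of ultrasonic sensors could yield both direction and speed is sketched below in Python. This is an assumption for illustration, not the measurement procedure used in the experiments: the function name `passage`, the idea that the two sensors are mounted a known distance apart along the corridor, and the example timestamps are all hypothetical.

```python
from typing import Optional, Tuple


def passage(t_primary: float, t_secondary: float,
            spacing_m: float) -> Tuple[Optional[str], Optional[float]]:
    """Infer walking direction and speed from two detection timestamps.

    t_primary / t_secondary: time (s) at which each sensor first detected
    the person. "forward" means the primary sensor was passed first.
    """
    dt = t_secondary - t_primary
    if dt == 0:
        return None, None  # simultaneous detection: direction undetermined
    direction = "forward" if dt > 0 else "backward"
    return direction, spacing_m / abs(dt)


# Hypothetical readings: sensors 2 m apart, triggered 2 s apart.
towards_exit = passage(10.0, 12.0, spacing_m=2.0)
away_from_exit = passage(12.0, 10.0, spacing_m=2.0)
```

A "backward" result in such a scheme would flag an occupant moving against the intended route, which is the kind of deviation the ultrasonic readings were used to detect.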

5. Modeling and Simulation of the Evacuation System

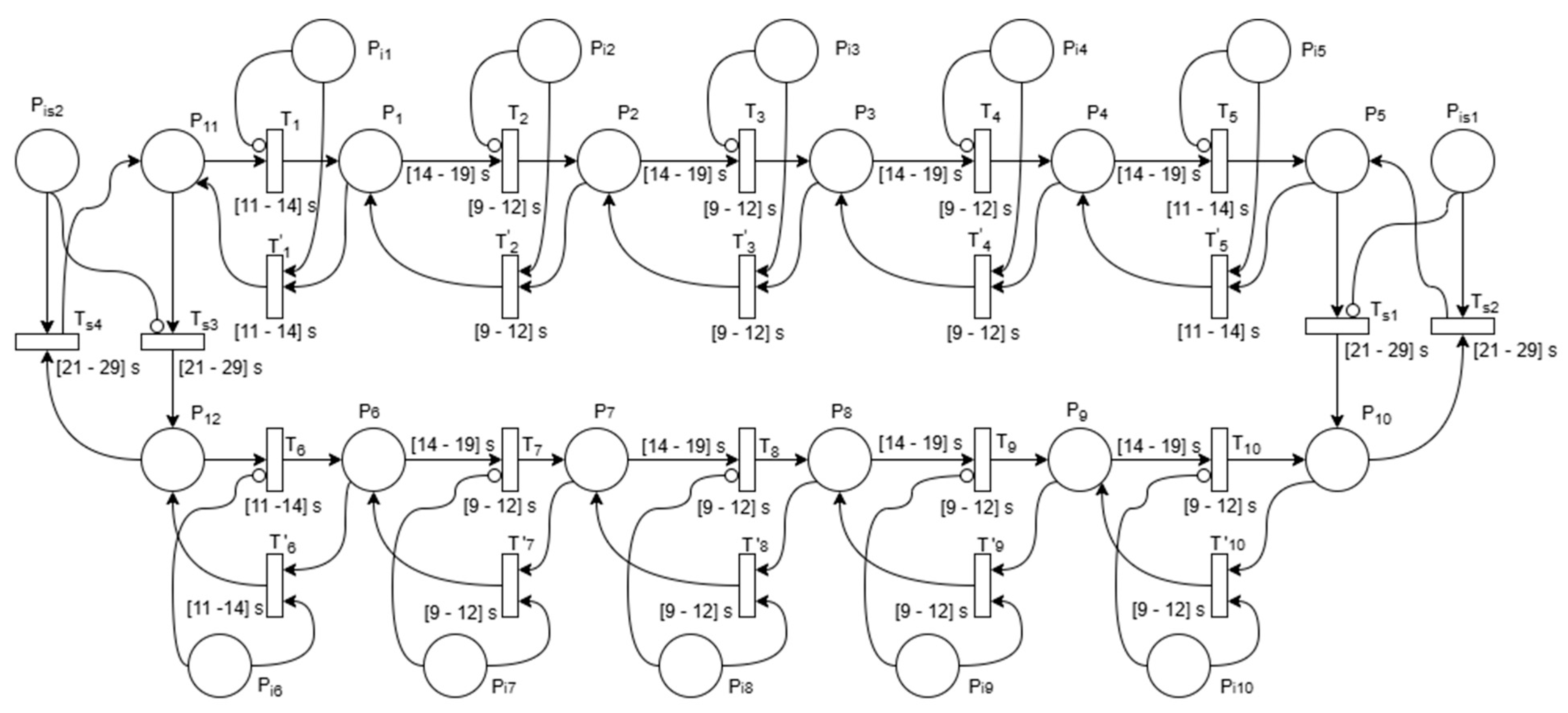

For modeling purposes, a Delay Time Petri Net (DTPN) [56,57], as shown in Figure 14, was used in different evacuation scenarios. The variable durations of activities are represented by the time intervals associated with transitions. Different variable delays correspond to time intervals on arcs, oriented from places to transitions.

The model was constructed following the previously described evacuation plan. On each floor, the room doors open onto one of the four virtual zones into which the corridor is divided, and there are two exit paths. The oriented arcs of the net represent segments of the determined routes connecting these reference points. The dynamic evolution of the system and, implicitly, the updated route transmitted to the user change in accordance with events signaled by the sensors, such as the spread of fire or smoke.

In the DTPN illustrated in Figure 15, two connected floors of the building are represented. The model can be extended by simply adding similar structures. The places P1, P2, P3, P4 and P6, P7, P8, P9 represent the presence of blind people in the four corridor zones of the two floors. The time intervals associated with the output arcs of these places are static delays caused by different reaction times after the alarm is set off. P11, P12, P5, and P10 correspond, respectively, to the start and end points of the corridors. The markings of the places Pij, where j = 1…10, represent logical conditions that depend on the presence or absence of signals transmitted by the sensors that detect the spread of fire or smoke in the sectors belonging to a previously indicated route. The Pij places are connected by inhibitor arcs to the transitions that represent walking between different corridor zones. Consequently, the presence of a token in one of these places disables the firing of the corresponding transition, while its absence enables it. T1…T10 and T1′…T10′ represent the movement of blind people between the four neighboring zones and between the corridors and the fire stairs. Each transition has an associated time interval whose two values correspond to the minimum and maximum walking times that blind people need to pass from one point to another. Transitions Ts1, Ts2, Ts3, and Ts4 have associated time intervals that represent the minimum and maximum times needed to move from one floor to another.

The values of time intervals associated with the transitions and arcs of the Petri Net were chosen considering data collected during the experimental studies.
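The role of the inhibitor arcs and time intervals can be illustrated with a minimal Python sketch of a single walking transition. It is a simplification under stated assumptions: the place names `P1`, `P2`, `Pi1`, the 6 to 11 s interval, and the uniform sampling of the firing delay are hypothetical, and the delays attached to arcs are omitted.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Transition:
    name: str
    inputs: List[str]              # places that must hold a token
    outputs: List[str]             # places that receive a token on firing
    interval: Tuple[float, float]  # (min, max) walking time in seconds
    inhibitors: List[str] = field(default_factory=list)  # hazard places

    def enabled(self, marking: Dict[str, int]) -> bool:
        """Enabled if every input holds a token and no inhibitor place does."""
        has_tokens = all(marking.get(p, 0) > 0 for p in self.inputs)
        inhibited = any(marking.get(p, 0) > 0 for p in self.inhibitors)
        return has_tokens and not inhibited

    def fire(self, marking: Dict[str, int], rng: random.Random) -> float:
        """Move tokens and return a duration sampled from the interval."""
        if not self.enabled(marking):
            raise ValueError(f"{self.name} is not enabled")
        for p in self.inputs:
            marking[p] -= 1
        for p in self.outputs:
            marking[p] = marking.get(p, 0) + 1
        return rng.uniform(*self.interval)


# Walking from zone P1 to zone P2; Pi1 holds a token when a hazard is signalled.
t1 = Transition("T1", inputs=["P1"], outputs=["P2"],
                interval=(6.0, 11.0), inhibitors=["Pi1"])

clear = {"P1": 1, "P2": 0, "Pi1": 0}
hazard = {"P1": 1, "P2": 0, "Pi1": 1}
can_walk = t1.enabled(clear)           # no hazard token: walking is allowed
can_walk_hazard = t1.enabled(hazard)   # hazard token inhibits the transition
duration = t1.fire(clear, random.Random(0))
```

Repeating the sampling over many runs would give a distribution of walking times for the segment, which is how interval-valued transitions contribute to the total evacuation time in this simplified view.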

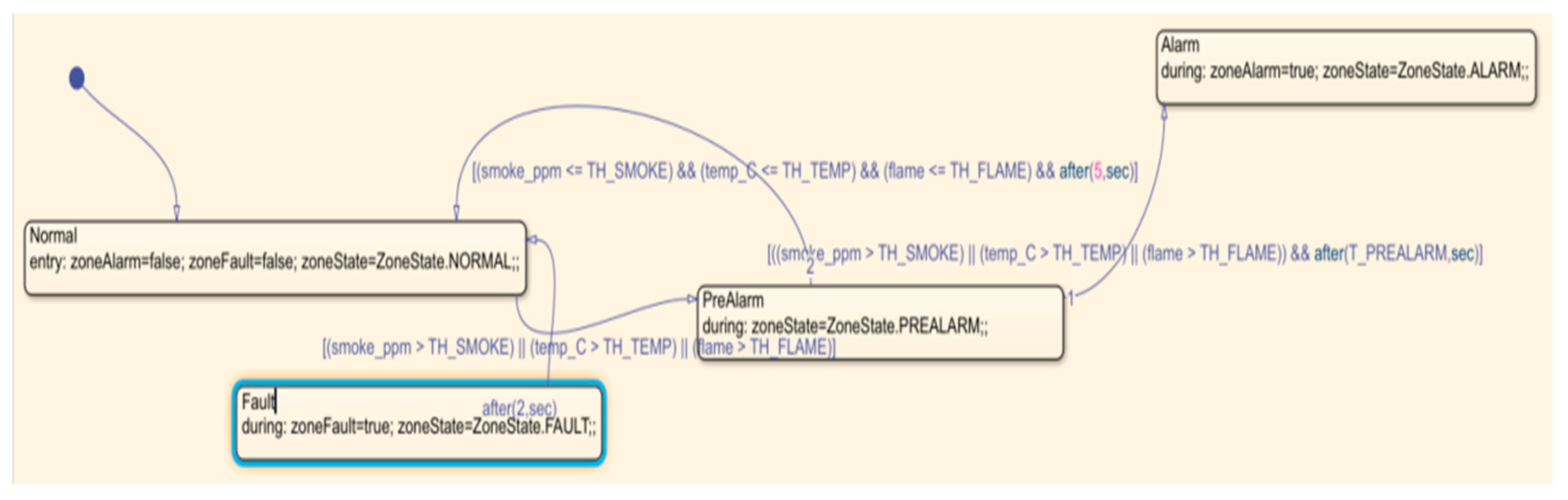

Different scenarios were simulated in MATLAB Simulink using Stateflow [58] to represent logical decisions and system evolution.

The simulation process is illustrated in Figure 15, where states, inputs, outputs, transitions, and associated actions were defined to describe the behavior of the dynamic system. Stateflow enables the modeling and simulation of discrete-event systems and is particularly suited to the design of complex logic and control architectures, offering a graphical interface for the creation of finite state machines and flowcharts. Its integration with Simulink allows for seamless simulation, testing, and verification within the same environment.

In this case, the simulated environment represents a single building floor, designed to test a fire detection and evacuation guidance system. The floor plan is modeled with multiple rooms—labelled Room501a to Room505b on the right side, Room506 to Room510 on the left side, and a central corridor—all interconnected through a data bus system for exchanging sensor readings and control commands. A control panel module at the bottom center manages system coordination and is linked to sirens, which provide evacuation alerts when hazardous conditions are detected.

The control logic is organized into three primary operational states:

Monitoring (Waiting)—In this idle state, all rooms and the corridor continuously transmit sensor data (e.g., temperature, smoke levels, occupancy) to the control panel. The system prioritizes low-power operation, minimizing unnecessary actuator usage until an event is detected.

Detection (Identifying)—Upon receiving abnormal readings from one or more rooms (e.g., elevated temperature or smoke presence), the system transitions to an investigative state. Here, sensor fusion logic evaluates data from multiple rooms to confirm the presence and location of the fire.

Evacuation (Activated)—Once a fire is confirmed, the system activates alarms in affected areas, triggers sirens, and can issue evacuation instructions. The control panel routes this information dynamically to guide occupants toward the safest exits, potentially using visual signals, audio cues, or mobile notifications.

Transitions between these states are governed by a combination of logical conditions, such as threshold exceedance in smoke/temperature sensors or the detection of blocked evacuation routes from corridor sensors.
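The three-state logic can be summarized with a small Python state machine. This is a sketch under stated assumptions rather than the Stateflow chart itself: the threshold values, the reading format, and the confirmation rule (abnormal readings persisting on the next sample) are hypothetical.

```python
from enum import Enum
from typing import Dict


class State(Enum):
    MONITORING = "Monitoring"
    DETECTION = "Detection"
    EVACUATION = "Evacuation"


# Hypothetical thresholds; real values depend on the sensors and standards used.
SMOKE_THRESHOLD = 0.3     # normalised smoke level
TEMP_THRESHOLD_C = 57.0   # degrees Celsius

Reading = Dict[str, float]


def abnormal(reading: Reading) -> bool:
    """True if a room reports smoke or temperature above its threshold."""
    return (reading["smoke"] > SMOKE_THRESHOLD
            or reading["temp_c"] > TEMP_THRESHOLD_C)


def step(state: State, readings: Dict[str, Reading]) -> State:
    """Advance the controller by one sample of per-room readings."""
    flagged = [room for room, r in readings.items() if abnormal(r)]
    if state is State.MONITORING:
        return State.DETECTION if flagged else State.MONITORING
    if state is State.DETECTION:
        # Assumed confirmation rule: the abnormal reading must persist.
        return State.EVACUATION if flagged else State.MONITORING
    return State.EVACUATION  # remains active until reset outside this sketch


normal = {"R501a": {"smoke": 0.0, "temp_c": 22.0}}
smoky = {"R501a": {"smoke": 0.7, "temp_c": 24.0}}

s0 = State.MONITORING
s1 = step(s0, smoky)   # abnormal reading: start investigating
s2 = step(s1, smoky)   # reading persists: fire confirmed
```

A false alarm in this sketch (an abnormal sample followed by a normal one) returns the controller to Monitoring without triggering the sirens.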

The model not only validates fire detection algorithms but also tests distributed decision-making in a multi-room scenario, ensuring that the evacuation plan adapts to changing conditions, such as blocked corridors or multiple hazard sources. This simulation framework provides a scalable basis for testing IoT-integrated building evacuation systems before real-world deployment.

Based on the Simulink model, we simulated various scenarios with different sensor activations in different locations within the building. One example is shown below, where the cause that triggers the alarm is also mentioned, along with the time it was activated during the alarm (

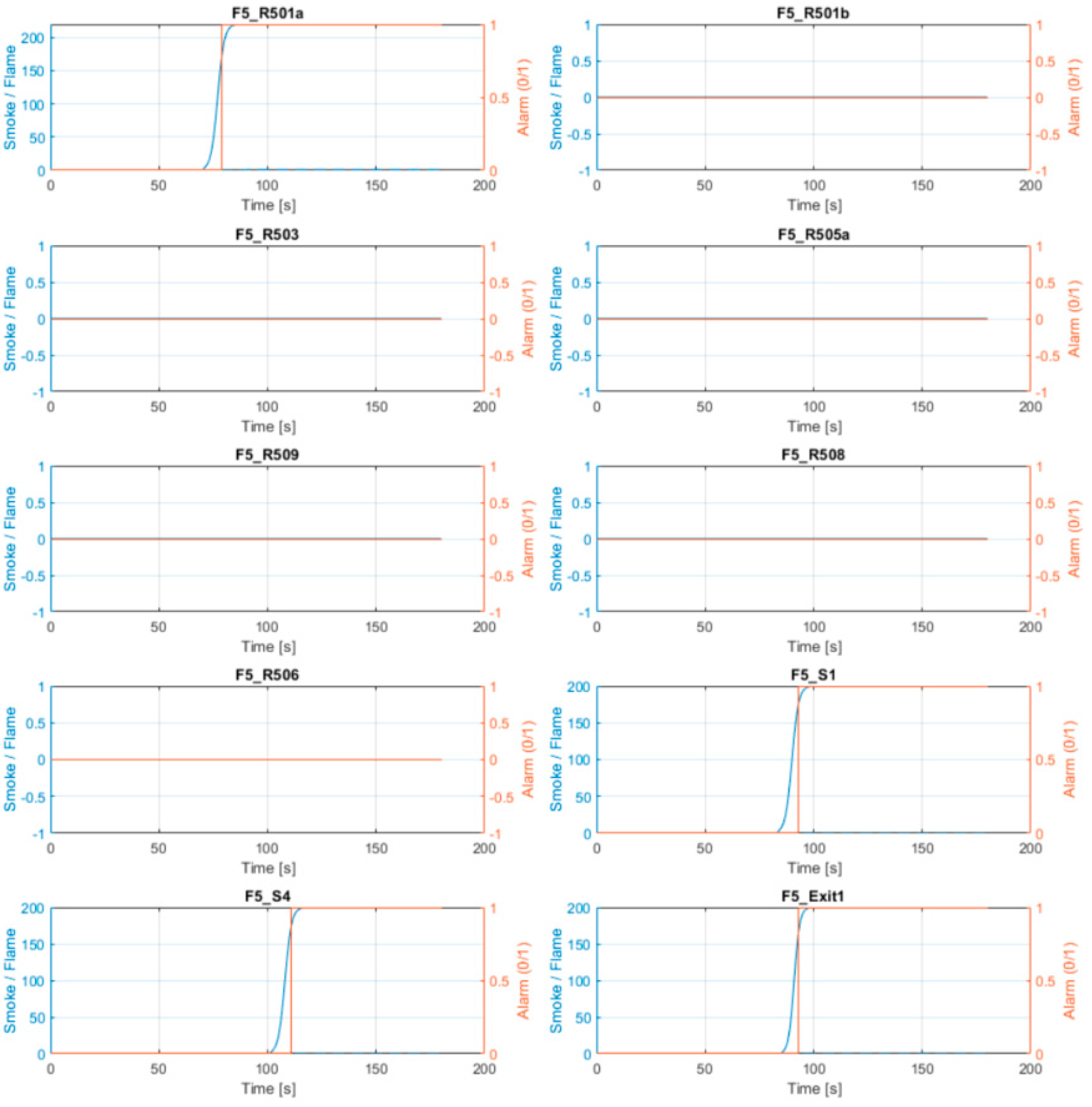

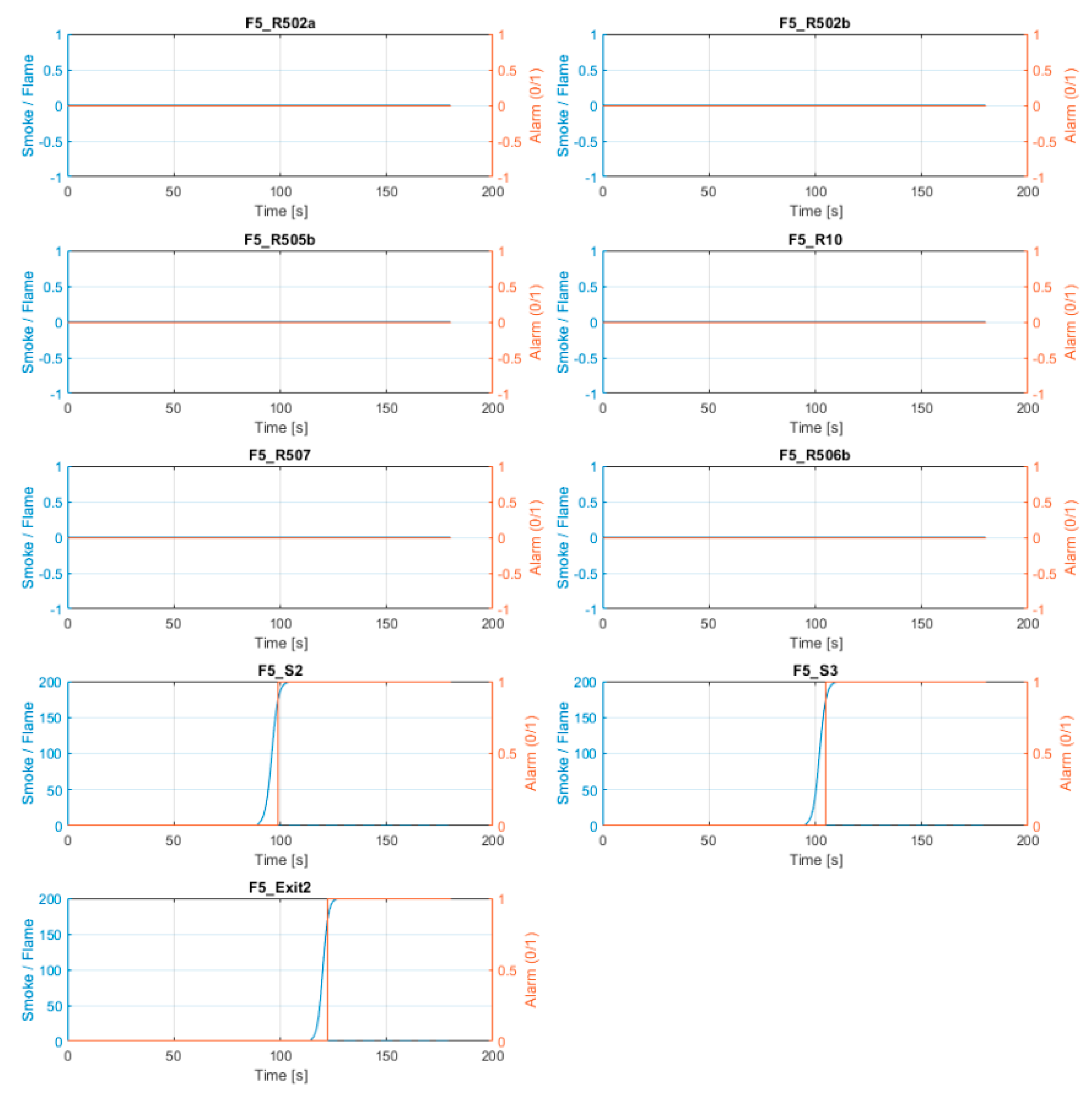

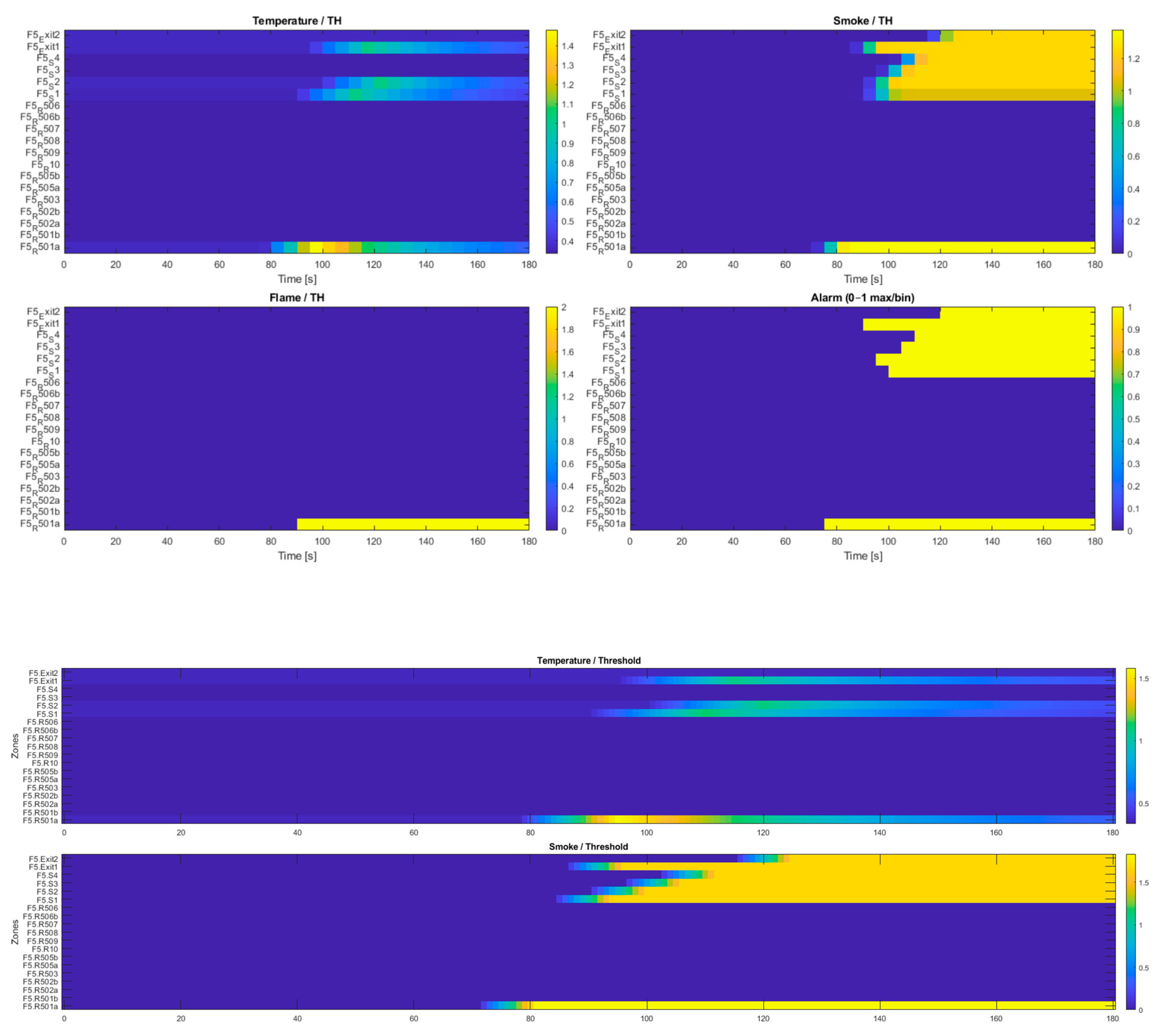

Figure 16).

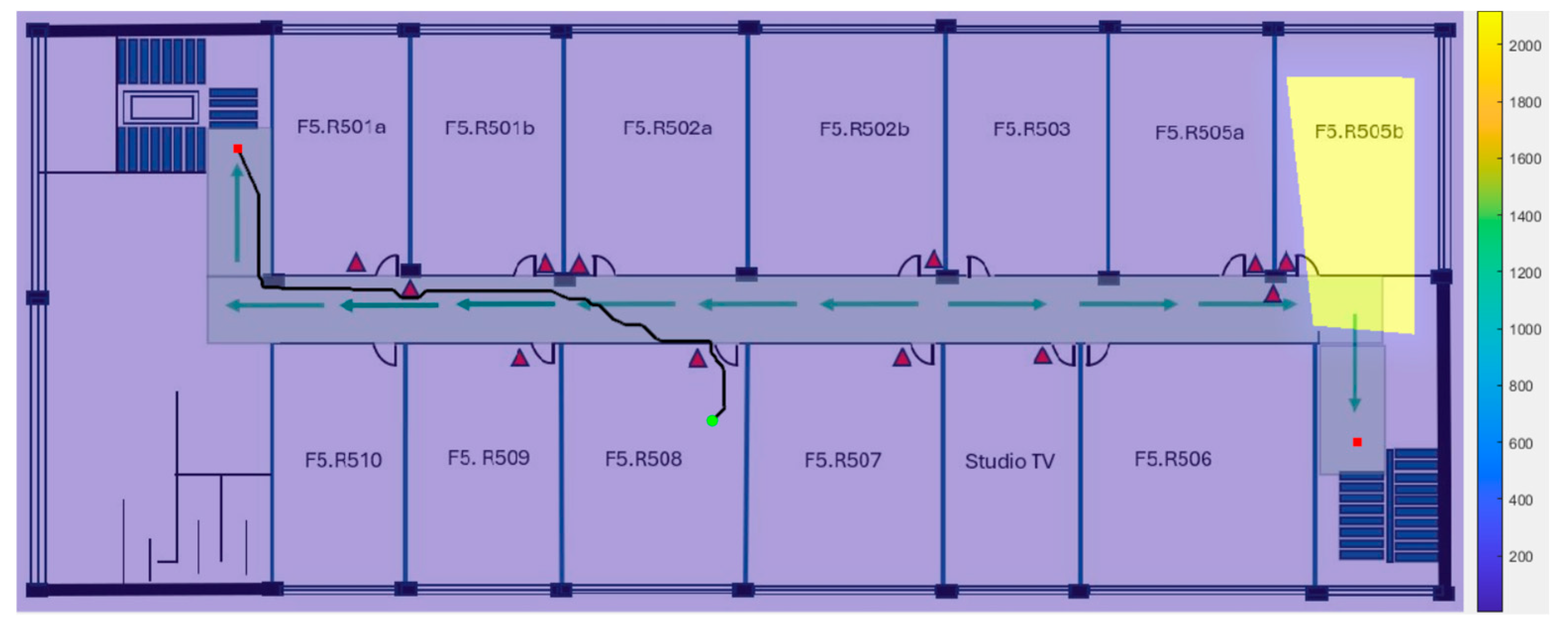

In this scenario, we simulate a fire starting in Room 501a, first detected by the smoke sensor. Smoke then propagates to Sector 1 and Exit 1, which are closer, and afterwards spreads to the other sectors of the corridor, eventually also triggering the detector at Exit 2. This scenario is illustrated in Figure 17 together with the heatmap.

F5.R501a: first cause = smoke at t = 77.0 s

F5.R509: first cause = not_triggered at t = NaN s

F5.S1: first cause = smoke at t = 91.0 s

F5.S2: first cause = smoke at t = 97.0 s

F5.S3: first cause = smoke at t = 103.0 s

F5.S4: first cause = smoke at t = 109.0 s

F5.Exit1: first cause = smoke at t = 93.0 s

F5.Exit2: first cause = smoke at t = 122.0 s

The first cause of each alarm is consistent with the heatmap plotted in Figure 17.

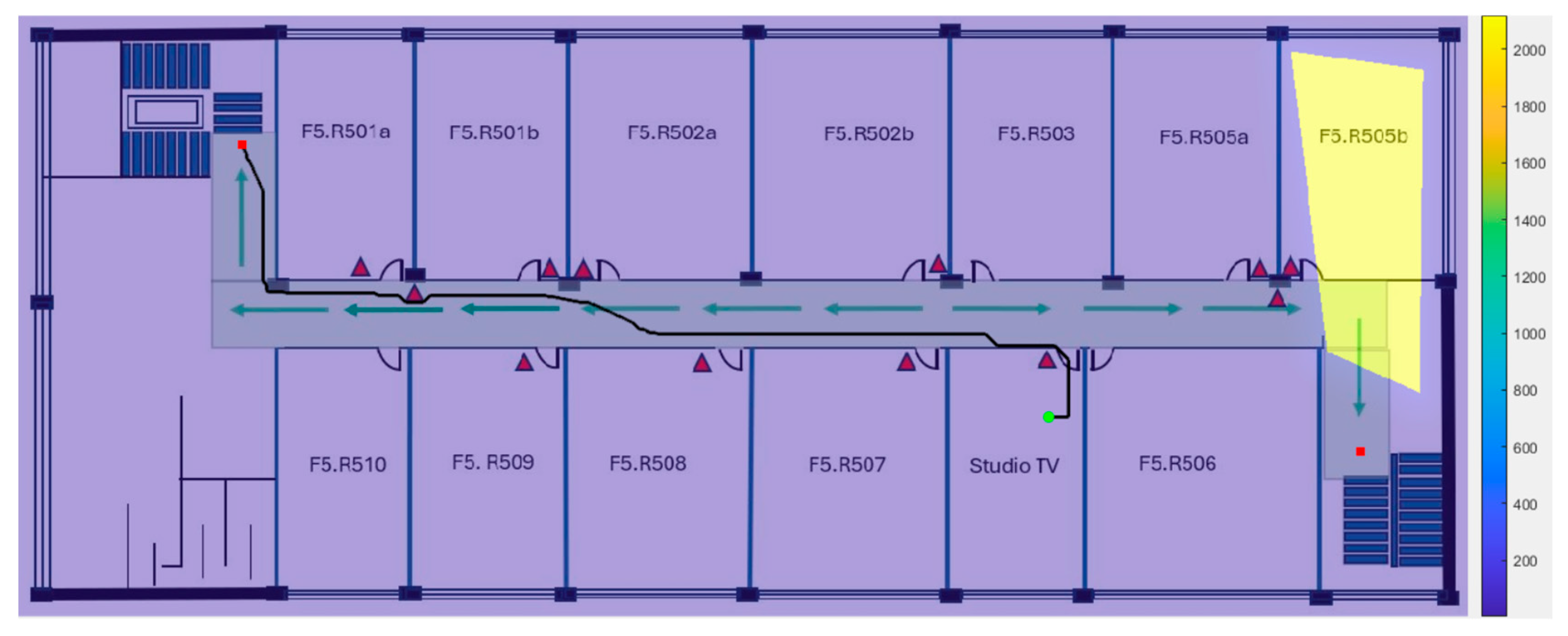

This test was repeated for many cases. One of them is shown in Figure 18, where the start point is in Room 508 (green dot) and the fire alarm is triggered by sensors in Room 506b; the algorithm directs the route to the main exit (red dot), in accordance with the modified route shown.

For safety reasons, the routing mechanism assigns the highest penalty to segments where fire is present, thereby avoiding paths where the fire is active. A representative scenario is depicted in Figure 19, where the start location is near the emergency exit (green dot) and the fire starts in the room adjacent to that exit, spreading to Sector 4 through the corridor (red dot and the highlighted Sector 4). The algorithm therefore selects the longer evacuation path as the safest option.

5.1. The Route Delivery System

To improve computational efficiency, we developed a strategy that combines static and dynamic routing. Dijkstra’s algorithm and the A* algorithm, with a high penalty applied where fire is located, were used to calculate all possible routes from multiple points of interest to the exits.

Both algorithms share the same routing method described above (Section 3.5) but differ in how they compute the next move.

Classical graph search remains attractive because it is transparent, provably optimal (within the cost function), and easy to audit for safety certification.

5.2. Graph Pruning

The full building graph can contain many edges, but only ≈15% of them change cost during a 0.5 s update window. We therefore reduce the computational load by pruning: a depth-first traversal collects the set of edges within a Manhattan radius of 15 m of any recently triggered detector. In practice, the working graph never exceeds 60 edges, which is a small graph to search [59].
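The pruning step might look like the following Python sketch. It rests on several assumptions that the text does not specify: detectors are treated as graph nodes, node coordinates are given in metres, and an edge is kept when the traversal reaches it without leaving the Manhattan radius. All names and coordinates are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

Graph = Dict[str, Dict[str, float]]   # node -> {neighbour: edge cost}
Coords = Dict[str, Tuple[float, float]]


def prune(graph: Graph, coords: Coords, detectors: Iterable[str],
          radius_m: float = 15.0) -> Set[Tuple[str, str]]:
    """Depth-first walk from each triggered detector node, collecting the
    edges whose endpoints stay within the Manhattan radius of that detector."""

    def near(node: str, origin: str) -> bool:
        (x1, y1), (x2, y2) = coords[node], coords[origin]
        return abs(x1 - x2) + abs(y1 - y2) <= radius_m

    kept: Set[Tuple[str, str]] = set()
    for det in detectors:
        stack, seen = [det], {det}
        while stack:
            u = stack.pop()
            for v in graph.get(u, {}):
                if near(v, det):
                    kept.add((u, v))
                    if v not in seen:
                        seen.add(v)
                        stack.append(v)
    return kept


# Hypothetical corridor: four zone nodes spaced 10 m apart, walkable both ways.
coords: Coords = {"S1": (0, 0), "S2": (10, 0), "S3": (20, 0), "S4": (30, 0)}
corridor: Graph = {
    "S1": {"S2": 10.0},
    "S2": {"S1": 10.0, "S3": 10.0},
    "S3": {"S2": 10.0, "S4": 10.0},
    "S4": {"S3": 10.0},
}
working_edges = prune(corridor, coords, detectors=["S1"])
```

Only the edges returned by `prune` would need their costs refreshed during the update window; the rest of the graph keeps its previous costs.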

We also considered bidirectional search as an optimization. To accelerate convergence, we run bidirectional Dijkstra: one search tree expands forward from the user node, while another expands backward from the chosen exit. The trees meet after exploring ≈N^{1/2} edges, which usually lowers the computational cost.

We also tested heuristic A*, in which an admissible Euclidean-distance term h(n, exit) is added to the priority-queue key. This reduces the number of node expansions at the cost of losing strict optimality under a dynamic cost map, and can outperform the classical Dijkstra algorithm.
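A compact Python sketch of A* with a Euclidean heuristic follows. It assumes that each edge cost is at least the straight-line distance between its endpoints (which holds when cost is length scaled by a factor of at least 1), so that the heuristic never overestimates. The node names, coordinates, and costs are hypothetical.

```python
import heapq
import math
from typing import Dict, List, Tuple

Graph = Dict[str, Dict[str, float]]   # node -> {neighbour: edge cost}
Coords = Dict[str, Tuple[float, float]]
INF = float("inf")


def a_star(graph: Graph, coords: Coords, src: str,
           dst: str) -> Tuple[float, List[str]]:
    """A* search using straight-line distance to the exit as heuristic."""

    def h(n: str) -> float:
        (x1, y1), (x2, y2) = coords[n], coords[dst]
        return math.hypot(x1 - x2, y1 - y2)

    g = {src: 0.0}                 # best known cost from src
    prev: Dict[str, str] = {}
    heap = [(h(src), src)]         # priority key: g + h
    while heap:
        f, u = heapq.heappop(heap)
        if u == dst:
            path = [dst]
            while path[-1] != src:
                path.append(prev[path[-1]])
            return g[dst], path[::-1]
        if f > g[u] + h(u):
            continue               # stale entry: a cheaper route to u was found
        for v, w in graph.get(u, {}).items():
            ng = g[u] + w
            if ng < g.get(v, INF):
                g[v] = ng
                prev[v] = u
                heapq.heappush(heap, (ng + h(v), v))
    return INF, []


# Hypothetical square floor: the edge C -> D is inflated 4x by a flame alarm.
coords: Coords = {"A": (0, 0), "B": (10, 0), "C": (0, 10), "D": (10, 10)}
floor: Graph = {"A": {"B": 10.0, "C": 10.0}, "B": {"D": 10.0},
                "C": {"D": 40.0}, "D": {}}
cost, path = a_star(floor, coords, "A", "D")
```

In this example the search reaches the exit through B at a cost of 20 and never needs to relax the penalized edge into a better route.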

5.3. Tests Performed

Several empirical and modeling studies highlight that even second-scale delays can affect safety in fire emergencies. For instance, a study by Zhang observes that in multi-building fire drills, “delays in response time can largely obstruct evacuation safety”, implying that each second is precious in the early minutes of escape [4]. Deng et al. report that their evacuation time prediction model has an average error of 3.63 s, which is small but considered practically meaningful given the life-critical nature of evacuation [60]. Real-world case studies also confirm that every second matters in evacuation contexts. Data from Swedish fire services indicate a nonlinear fatality risk curve with respect to response time: even small reductions in response time can result in measurable decreases in fatalities [61].

We conducted several tests with visually impaired individuals. These tests quantified the reduction in evacuation time obtained when guidance is offered, together with path planning that avoids dangerous places, compared with evacuation without guidance.

An example from our tests is described below:

Start from F5.R505B, sitting on a chair → find the way out and walk through the corridor → in front of F5.R507: 39.99 s → in front of F5.R509: 11 s (50.99 s cumulative) → in front of F5.R510: 6.57 s (57.56 s cumulative) → main stairs: 7.08 s → total: 64.64 s (1 min 4.64 s), with guidance.

Start from F5.R505B, sitting on a chair → find the way out and walk through the corridor → in front of F5.R507: 59.32 s → in front of F5.R509: 16.42 s → in front of F5.R510: 9.58 s → main stairs: 14.8 s → total: 100.12 s (1 min 40.12 s), without guidance.

The relevant dimensions are as follows:

The corridor is 38.84 m long and 2.08 m wide. Room F5.R505b measures 8.79 m × 5.78 m.

In this scenario, the total evacuation time from inside a room to the main evacuation stairs is reduced by 35.48 s (from 100.12 s to 64.64 s).
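The totals and the 35.48 s saving follow directly from the segment times listed above; the short Python check below reproduces the arithmetic. The variable and function names are illustrative only.

```python
# Segment times in seconds, copied from the two test runs described above:
# room to R507, R507 to R509, R509 to R510, R510 to main stairs.
with_guidance = [39.99, 11.00, 6.57, 7.08]
without_guidance = [59.32, 16.42, 9.58, 14.80]

total_with = round(sum(with_guidance), 2)
total_without = round(sum(without_guidance), 2)
saving = round(total_without - total_with, 2)


def as_min_sec(seconds: float) -> str:
    """Format a duration in seconds as minutes and seconds."""
    minutes, rest = divmod(seconds, 60)
    return f"{int(minutes)} min {rest:.2f} s"


summary = (as_min_sec(total_with), as_min_sec(total_without), saving)
```

The rounding to two decimals only removes floating-point noise; the inputs themselves are given to two decimals in the test log.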

In the event of fire progression near the evacuation path, our system offers significant improvement, as a new path is recalculated quickly and guides vision-impaired individuals to a safe area.

Moreover, the time reduction must be interpreted in conjunction with other improvements: the system also exhibited lower route deviation, higher decision robustness, and reduced mental workload. Together, these indicators suggest that the system’s benefits are not just quantitative but also qualitatively meaningful, increasing user confidence and autonomy during evacuation.

To evaluate the effectiveness of the proposed system, we conducted several experimental measurements. During these, a participant with complete visual impairment covered the route in 43.63 s with guidance, corresponding to an average walking speed of approximately 0.88 m/s. For comparison, the literature typically reports unaided walking speeds for blind individuals of 0.3 to 0.75 m/s in unfamiliar indoor environments without active guidance systems. The results indicate a notable improvement in navigation efficiency, with less hesitation and more confident, continuous movement along the route.

A comparison of median walking speeds, in m/s, is given in Table 4. The values in the last two rows come from our experiments, and the first two rows are published comparative data [62].

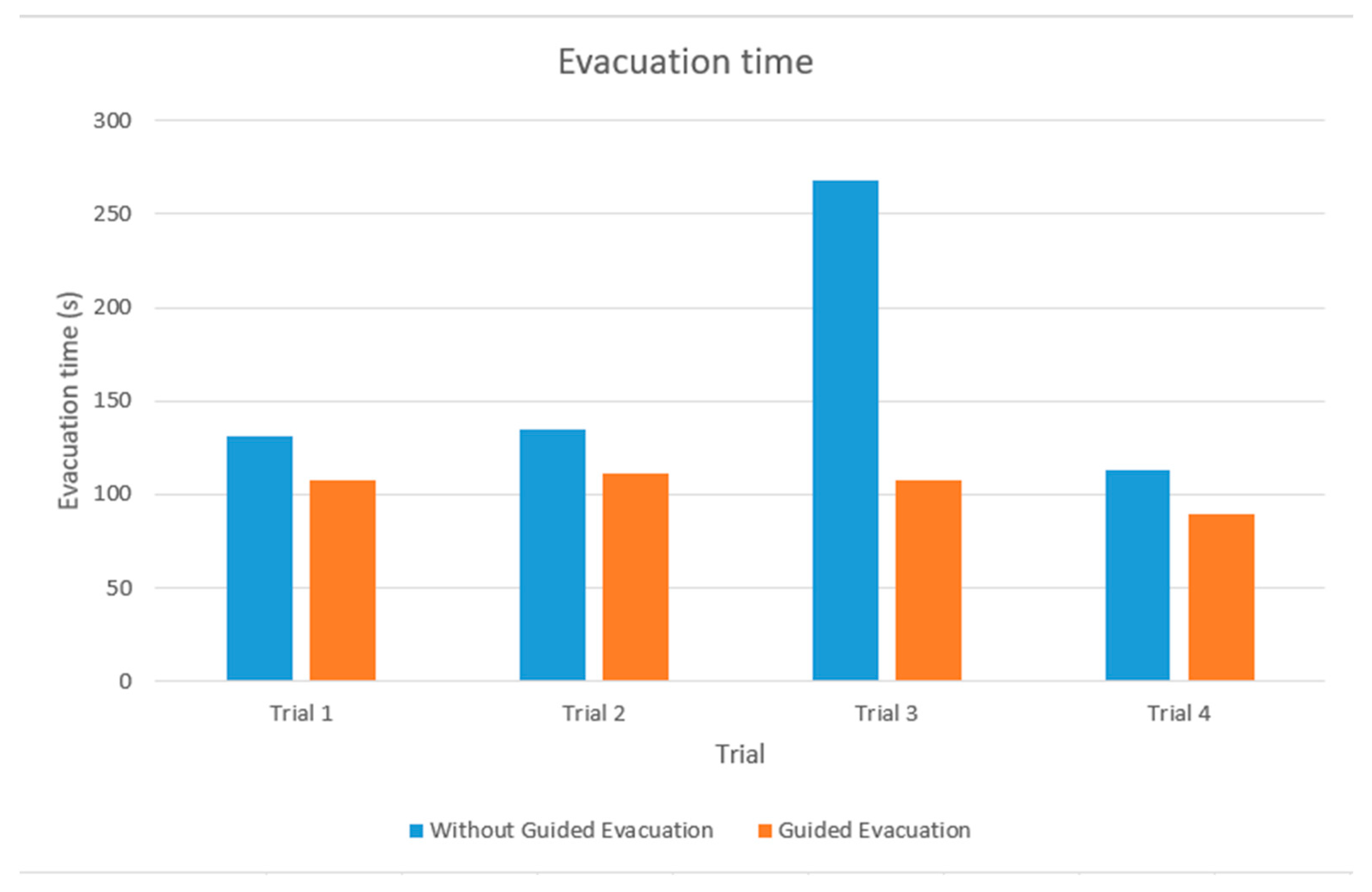

To illustrate the advantages of the proposed system, Figure 20 presents a comparative analysis, based on our live drill and simulation data within a multi-level building, of evacuation with and without guidance. Trial 3 represents a situation where the first chosen path was no longer safe because of potential fire spread.

Key Statistics:

Mean (without guidance): 152.1 s;

Mean (with guidance): 103.83 s;

Standard Deviation (without guidance): 61.87 s;

Standard Deviation (with guidance): 8.61 s;

Mean Time Improvement: 48.27 s;

Relative Improvement: 31.74%.

6. Future Developments

To implement the guidance system, an Android application was created. We focused on integration with the system and ease of usability, considering the special communication requirements of visually impaired individuals.

The development and integration of the communication subsystem within the guided system’s overall structure will be a key objective for future work.

While the current simulation demonstrates the feasibility of IoT-based fire detection and evacuation, several limitations remain:

Communication reliability: The simulation assumes perfect communication via InBus/OutBus. In real emergencies, IoT networks may suffer from latency, interference, or partial failures, which could compromise timely evacuation.

Human behavior modeling: The simulation does not currently account for human behavioral factors such as panic, hesitation, or crowd dynamics, which can heavily influence evacuation efficiency.

Future work will focus on extending the simulation to multiple environments and incorporating stochastic models of human behavior to improve realism. Additionally, enhancing network resilience through fault-tolerant IoT communication protocols will be critical for ensuring reliability in real-world deployments.

As a further development, we are assessing the feasibility of introducing a reinforcement learning algorithm. Although Dijkstra guarantees optimality at each update, it recomputes the solution from scratch whenever the costs change. A reinforcement learning agent can instead map states directly to actions and react in constant time. The initial architecture of the reinforcement learning network comprises:

Input Layer—14-unit vector.

Hidden-1—fully-connected, 128 units, ReLU.

Hidden-2—fully-connected, 128 units, ReLU.

Actor Head—soft-max over 4 actions {Forward, Left, Right, Back}.

Critic Head—scalar value V(s).

As for the reward function, we propose the following strategy:

+10 at exit.

−0.05 per 0.5 s step (to favour speed).

−2 if smoke_index > 0.6.

−5 if crowd_density > 0.8.

−10 for entering an edge at risk of flame (F = 1).
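The proposed reward terms can be combined as in the following Python sketch. It assumes the terms are simply added at every 0.5 s step, which the list above does not state explicitly; the function and argument names are hypothetical.

```python
def reward(at_exit: bool, smoke_index: float, crowd_density: float,
           flame_risk: bool) -> float:
    """Per-step reward, assuming the listed terms are additive."""
    r = -0.05                  # time penalty per 0.5 s step (favours speed)
    if at_exit:
        r += 10.0              # reaching an exit
    if smoke_index > 0.6:
        r -= 2.0               # dense smoke
    if crowd_density > 0.8:
        r -= 5.0               # heavy congestion
    if flame_risk:
        r -= 10.0              # entering an edge at risk of flame (F = 1)
    return r


plain_step = reward(False, smoke_index=0.1, crowd_density=0.2, flame_risk=False)
exit_step = reward(True, smoke_index=0.1, crowd_density=0.2, flame_risk=False)
worst_step = reward(False, smoke_index=0.7, crowd_density=0.9, flame_risk=True)
```

Under this additive assumption, a step through a smoky, crowded, flame-adjacent edge scores about −17.05, so a single such step outweighs the exit bonus.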

Accessibility for Other Disabilities

While the system is optimized for visually impaired users, it also incorporates, or can be extended with, support for other disability profiles:

Hearing impairments:

- Visual fallback via high-contrast map and flashing guidance arrows.

- Planned vibro-tactile belt interface for deaf–blind users, converting directional prompts into vibration patterns.

Mobility impairments:

- The routing engine can be extended to avoid staircases or select routes suitable for wheelchair users (e.g., minimum width, no steps).

7. Conclusions

With the safe and quick evacuation of visually impaired people during fire events as its main objective, a support system integrating assistive technology was designed. The physical infrastructure needed for tests and experiments was set up in a real environment.

Up-to-date information about fire evolution was used to modify the route dynamically and avoid potentially dangerous areas. Modeling was performed using Delay Time Petri Nets, and simulation using MATLAB Simulink with Stateflow.

The DTPN-based model allows a more comprehensive analysis of the temporal aspects that directly influence the total evacuation time. The simulations demonstrated the reaction capacity of the system and validated the proposed evacuation strategy in situations generated by critical events. The time durations used in the model were set based on experimentally obtained data. The tests involved normally sighted participants as well as participants with mild visual impairment (near-normal vision) or total blindness. Special attention was given to the impact of the designed system on the pre-evacuation and wayfinding phases of the evacuation; the improvement was most pronounced in the wayfinding phase, owing to the orientation and guidance functions of the system.

The simulations demonstrated that the proposed evacuation system can significantly improve evacuation performance compared to conventional approaches. By integrating IoT-based sensing, adaptive guidance, and decision-making algorithms, the system was able to optimize evacuation routes in real time. The experimental evaluation of the proof-of-concept prototype indicated an average reduction in evacuation time of approximately 4–5 s across test scenarios. Although this improvement may appear modest at first glance, in real emergency conditions such time savings can represent a critical advantage in ensuring occupant safety. These results confirm both the feasibility and the potential impact of our approach, while also highlighting the need for further development and large-scale validation in complex building environments.

The convergence of low-cost sensors, smartphone edge AI, and evolving accessibility regulations is reshaping what “inclusive fire safety” can mean in practice.

The proposed decentralized evacuation support system for visually impaired individuals offers several key advantages, confirmed through our analysis. Because it receives fire-evolution information from the fire alarm system, it can dynamically guide individuals toward the safest available exits. The underlying mathematical model allows real-time updates to route risk by incorporating fire-evolution indicators such as rising smoke density, ambient temperature increases, and the emergence of open flames. These capabilities directly enhance both safety and evacuation efficiency. Additionally, by leveraging sensor data from the existing fire alarm infrastructure, the system reduces the need for costly new hardware, supporting affordable and scalable deployment.

A test bench covering one floor, equipped with all sensors, was implemented to develop and test the fire detection application and to evaluate evacuation times.

While further validation on larger and more diverse building typologies is required, the prototype offers a concrete, standards-compliant template that facility managers can replicate today. This work can catalyze a shift in building codes: from accommodating visually impaired persons to empowering them with autonomous, intelligent tools.