Efficient Reliability Block Diagram Evaluation Through Improved Algorithms and Parallel Computing

Abstract

1. Introduction

- RQ1: How can the system’s reliability be efficiently analyzed in the presence of components with arbitrary, non-parametric (i.e., numerical) failure distributions?

- RQ2: How can the computational complexity of RBD evaluation be reduced while preserving numerical accuracy?

- RQ3: Can modern multicore architectures, from embedded processors to high-performance systems, be effectively leveraged to achieve platform-agnostic performance improvements?

2. Reliability Evaluation Methodologies

- Combinatorial models exploit the assumption of statistically independent components, i.e., a failure of one component does not impact the failure rate of other components, to efficiently evaluate the reliability [18,19]. These models include Reliability Block Diagrams (RBDs) [20,21], Fault Trees (FTs) [22,23], Reliability Graphs (RGs) [24,25], and Fault Trees with Repeated Events (FTREs) [23,26].

- State-space-based models leverage Markov Processes that allow them to model statistical, temporal, and spatial dependencies among failures at the cost of state-space explosion [18,19]. These include Continuous Time Markov Chains (CTMCs) [27,28], Stochastic Petri Nets (SPNs), and extensions such as Generalized Stochastic Petri Nets (GSPNs) and Stochastic Timed Petri Nets (STPNs) [29,30,31,32], Stochastic Reward Nets (SRNs) [33,34], and Stochastic Activity Networks (SANs) [35,36].

3. Reliability Block Diagrams

3.1. RBD Definitions

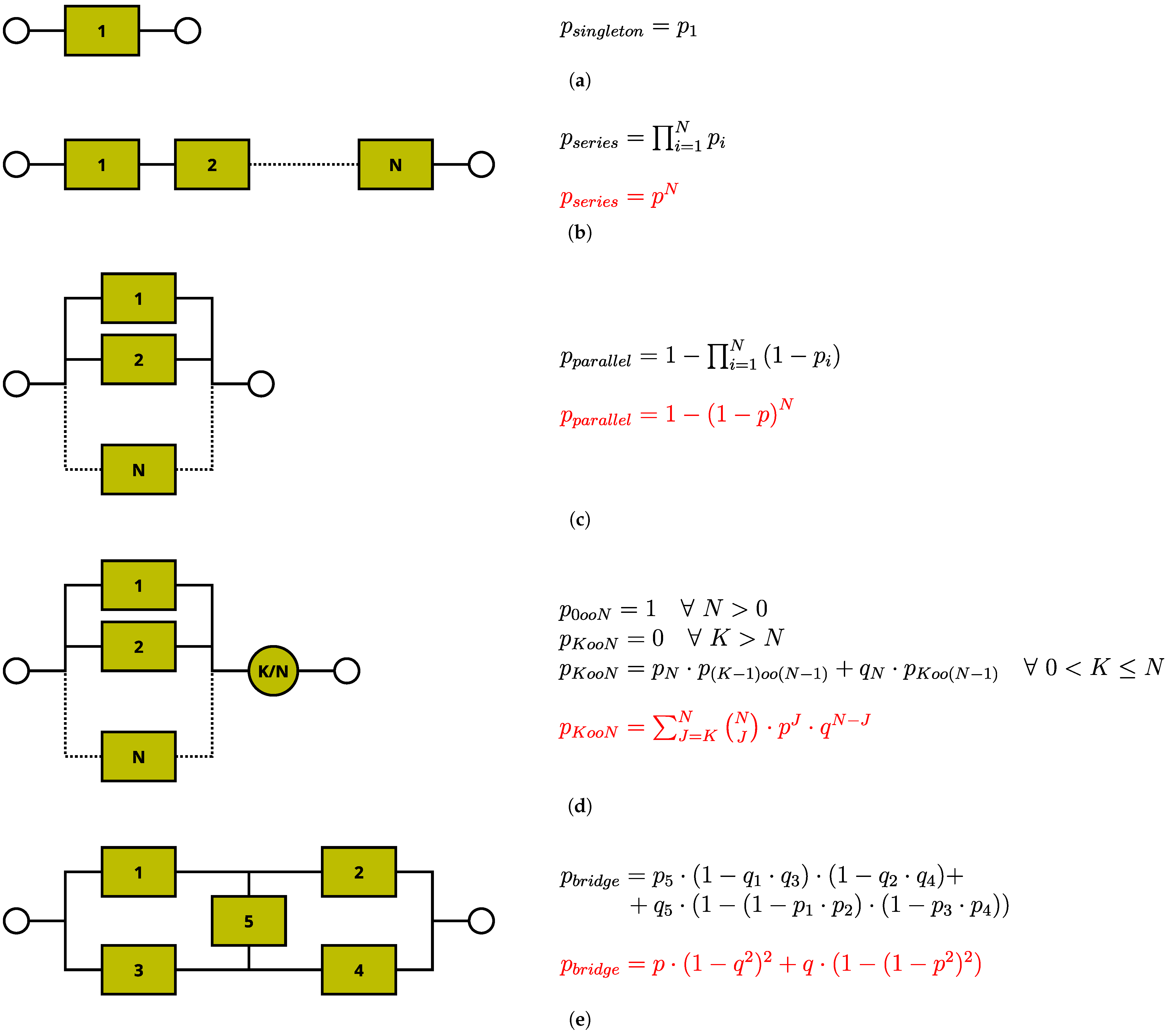

- Singleton. A component is a part of the physical system that is considered atomic w.r.t. failures. A physical component is represented in the RBD model by a Singleton block. Singletons can be considered the elementary bricks of the recursive structure of an RBD model. The state of a Singleton block is success if and only if the associated component is working correctly. An example is a stand-alone Power Supply.

- Series. This block is composed of N sub-blocks, and its state is success if and only if all its sub-blocks are in the success state. Examples are systems in which a single failure of any component causes the failure of the system.

- Parallel. This block is composed of N sub-blocks, and its state is success if and only if at least one of its sub-blocks is in the success state or, dually, a Parallel block fails if and only if all its sub-blocks are in the failure state. An example is a redundant Power Supply system with current sharing.

- K-out-of-N (KooN). This block is composed of N sub-blocks, and its state is success if and only if at least K of the N sub-blocks are in the success state. An example is a Triple Modular Redundancy (TMR) computing system.

- Bridge. This block is introduced in order to model the possibility of an alternative success path in a parallel/series structure. As shown in Figure 1e, the block in the middle is used as a bridge between two parallel success paths, rerouting success to the other path in case of failure of one block. Typical examples can be found in network infrastructures.
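Under the independence assumption of combinatorial models, the success probability of each basic block at a given time instant follows directly from the definitions above. The C sketch below evaluates Series, Parallel, and KooN blocks at a single time instant. The function names are hypothetical and are not librbd's API. The KooN version assumes identical components, so the binomial formula applies.

```c
#include <assert.h>
#include <stddef.h>

/* Series: success iff all n components succeed, i.e., product of reliabilities. */
static double series_block(const double *r, size_t n)
{
    double acc = 1.0;
    for (size_t i = 0; i < n; ++i)
        acc *= r[i];
    return acc;
}

/* Parallel: failure iff all n components fail, i.e., 1 - product of unreliabilities. */
static double parallel_block(const double *r, size_t n)
{
    double fail = 1.0;
    for (size_t i = 0; i < n; ++i)
        fail *= 1.0 - r[i];
    return 1.0 - fail;
}

/* KooN with identical components of reliability r:
   sum over i = k..n of C(n, i) * r^i * (1 - r)^(n - i). */
static double koon_identical(int k, int n, double r)
{
    assert(n >= 0);
    if (k <= 0) return 1.0;   /* no component needs to work */
    if (k > n) return 0.0;    /* more successes required than components */
    double sum = 0.0;
    for (int i = k; i <= n; ++i) {
        /* C(n, i) built incrementally: every intermediate value is an integer,
           so it is exact while it fits in a double's 53-bit mantissa. */
        double term = 1.0;
        for (int j = 1; j <= i; ++j)
            term = term * (double)(n - i + j) / (double)j;
        for (int j = 0; j < i; ++j)
            term *= r;
        for (int j = 0; j < n - i; ++j)
            term *= 1.0 - r;
        sum += term;
    }
    return sum;
}
```

Nested RBDs are handled by plain composition: a block's output becomes an input of its parent. For example, a Series of (C1 in Parallel with C2) and C3 is `series_block` applied to `{parallel_block(r, 2), r[2]}`.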

3.2. Existing RBD Modeling and Evaluation Tools

- Isograph Reliability Workbench: this commercial tool supports the hierarchical definition and analysis of scalable RBD models by means of submodels. It also supports the minimal cut-set analysis of the RBD model [46]. With respect to RQ1, this tool does not support the computation of the reliability curve.

- Relyence RBD: this commercial tool allows the definition and evaluation of series, parallel, and standby configurations, providing the reliability curve using analytical formulas or through Monte Carlo simulation [47]. With respect to RQ1, this tool only supports a set of parametric distributions for its input blocks.

- ALD RAM Commander—RBD Module: this commercial tool allows the definition and evaluation of series, parallel, and KooN configurations, providing the reliability curve using analytical formulas or through Monte Carlo simulation [48]. With respect to RQ1, this tool only supports a set of parametric distributions for its input blocks.

- SHARPE: the Symbolic Hierarchical Automated Reliability and Performance Evaluator (SHARPE) tool supports the definition of hierarchical stochastic models of dependability attributes, including reliability, availability, performance and performability, and the analysis of such models [16,17]. This tool supports several formalisms to define reliability models, including RBDs, and it supports time-dependent reliability analysis. With respect to RQ1, this tool only supports a set of parametric distributions for its input blocks.

- PyRBD: this open-source tool is particularly effective for modeling and evaluating communication networks. It generates RBDs from network topologies and decomposes the diagrams for faster processing, relying on minimal cut-sets and Boolean algebra to compute the steady-state availability [49,50]. With respect to RQ1, this tool does not support the computation of the reliability curve, and it only supports a limited set of parametric distributions for its input blocks.

- PyRBD++: this tool is the optimized evolution of PyRBD. While maintaining the characteristics of its predecessor, it introduces a novel iterative conditional decomposition method to improve scalability [51,52]. Like its predecessor, this tool does not support the computation of the reliability curve, and it only supports a limited set of parametric distributions for its input blocks.

3.3. Quantitative Evaluation of KooN Blocks—Traditional Approach

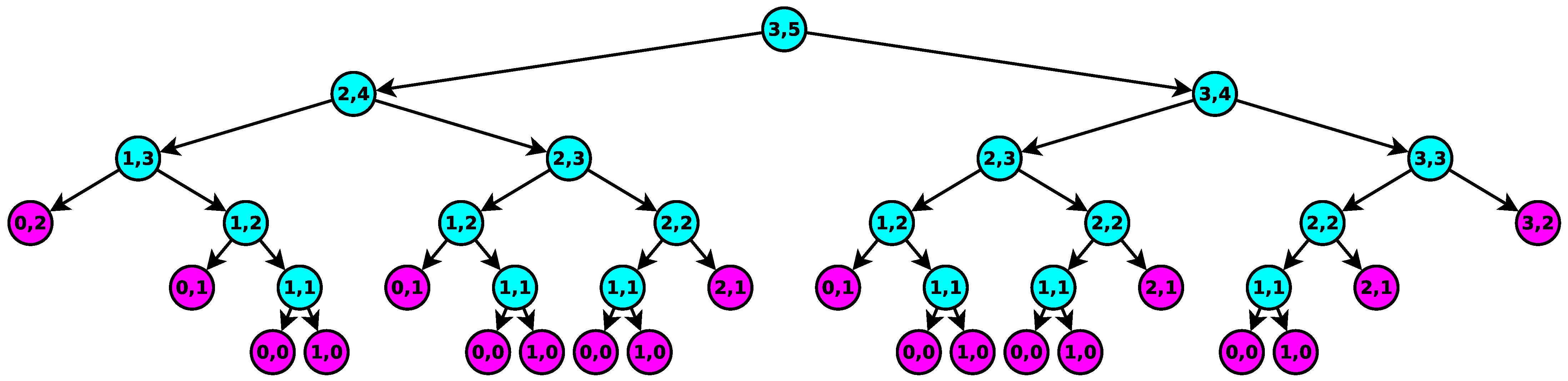

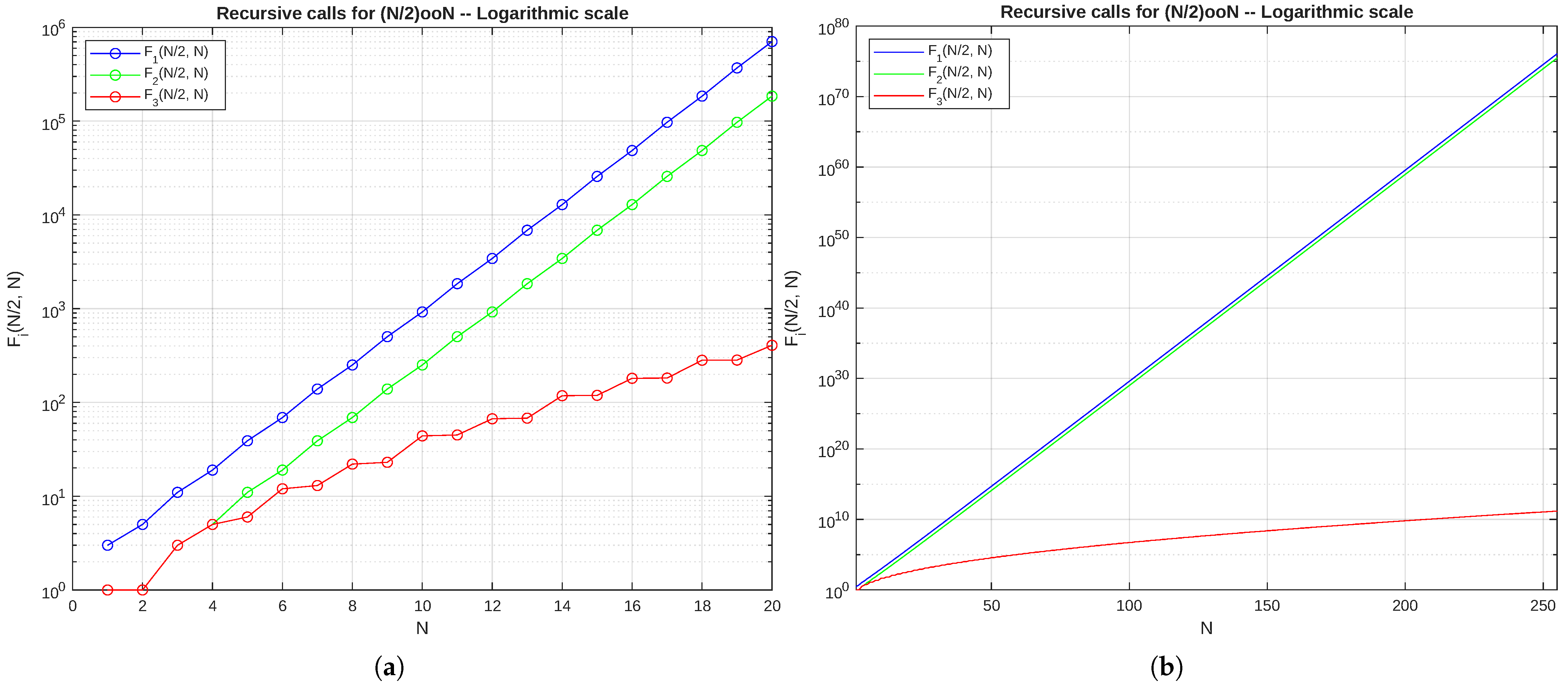

- The presented approach only has trivial stop conditions, so the number of leaf nodes grows exponentially, which increases the computation time. More specifically, it does not detect trivial KooN configurations such as Series (NooN) and Parallel (1ooN);

- Several distinct internal nodes with the same input parameters K and N are visited in the recursion tree. More specifically, in the example shown in Figure 2, two different nodes with the same values of K and N are evaluated.
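The two drawbacks can be reproduced with a small C sketch of the traditional recursion. It conditions on the last component: R(k, n) = R_n · R(k − 1, n − 1) + (1 − R_n) · R(k, n − 1). We assume this is the form of Equation (1). A call counter records how many recursion-tree nodes are visited. The second variant adds the NooN (Series) and 1ooN (Parallel) stop conditions discussed above. Both function names are hypothetical, and neither variant is the novel algorithm of Section 3.4.

```c
#include <assert.h>
#include <stddef.h>

/* Traditional recursion: condition on component n (index n - 1 in r). */
static double koon_plain(int k, int n, const double *r, unsigned long *calls)
{
    assert(r != NULL && calls != NULL);
    ++*calls;
    if (k <= 0) return 1.0;   /* requirement already met */
    if (k > n) return 0.0;    /* requirement can no longer be met */
    return r[n - 1] * koon_plain(k - 1, n - 1, r, calls)
         + (1.0 - r[n - 1]) * koon_plain(k, n - 1, r, calls);
}

/* Same recursion, but NooN and 1ooN sub-problems are solved in closed form. */
static double koon_with_shortcuts(int k, int n, const double *r, unsigned long *calls)
{
    assert(r != NULL && calls != NULL);
    ++*calls;
    if (k <= 0) return 1.0;
    if (k > n) return 0.0;
    if (k == n) {                      /* NooN is a Series block */
        double acc = 1.0;
        for (int i = 0; i < n; ++i) acc *= r[i];
        return acc;
    }
    if (k == 1) {                      /* 1ooN is a Parallel block */
        double fail = 1.0;
        for (int i = 0; i < n; ++i) fail *= 1.0 - r[i];
        return 1.0 - fail;
    }
    return r[n - 1] * koon_with_shortcuts(k - 1, n - 1, r, calls)
         + (1.0 - r[n - 1]) * koon_with_shortcuts(k, n - 1, r, calls);
}
```

For a 2oo3 block, the plain recursion visits 11 nodes, and the (k, n) = (1, 1) node is evaluated twice, which is the duplicated-node issue. With the shortcuts, the same block needs only 3 nodes.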

3.4. Quantitative Evaluation of KooN Blocks—Novel Approach

3.5. Quantitative Evaluation of Bridge Blocks—Novel Approach

4. RBD Computation Library—librbd 2.0

- to support the most common OSes, that is, Windows, MacOS and Linux;

- to support the numerical computation of the reliability curve for all RBD basic blocks; please note that this requirement is necessary to satisfy RQ1;

- to be available as a free software.

4.1. Design Choices Already Present in librbd

- The implementation of the resolution formulas for series, parallel, KooN, and bridge RBD blocks over time, up to 255 components per block.

- The availability of librbd on the majority of currently available OSes, i.e., Microsoft Windows, Linux, and MacOS.

- The implementation of librbd in the C language for higher performance, using conditional compilation sparingly, only where interaction with the OS is deemed necessary [54].

- The availability of librbd as both a dynamic and static library.

- To minimize numerical error, all computations use the double-precision floating-point type (double), compliant with the IEEE 754 binary64 format [55].

- The implementation of formulas for RBD blocks both with generic components and with identical components.

- The implementation of several optimizations for the KooN RBD block computation, e.g., the minimization of computation steps for blocks with identical components.

- The adoption of Symmetric Multi-Processing (SMP), which can be enabled or disabled at compile time. When SMP is disabled, librbd is built as a Single-Threaded (ST) library.

4.2. New Design Choices Introduced in librbd 2.0

- Algorithmic Complexity Reduction: To address RQ2, we implemented novel algorithms aimed at substantially reducing the computational complexity of KooN and Bridge blocks (detailed in Section 4.2.1).

- Cross-Platform Parallel Computation Techniques: To address RQ3, i.e., to ensure optimal performance across diverse hardware architectures and OSes, several key developments were necessary:

  - Vectorization (SIMD): the addition of native support for the Single Instruction, Multiple Data (SIMD) paradigm for computation acceleration (Section 4.2.2).

  - Multi-Core Parallelism (SMP): the optimization of cache utilization within the SMP paradigm (Section 4.2.3), together with the addition of native SMP support for the Windows OS (Section 4.2.4).

4.2.1. Optimization of Algorithms for KooN and Bridge Blocks

Algorithm 1: Computation of KooN block with generic components.

Input: Minimum number of working components k
Input: Total number of components n
Input: Array R of reliabilities, where R_i is the reliability of the i-th component
Function R_KooN_Recursive(k, n, R)
  if k = n then # Evaluate NooN block, i.e., Series block
    R_S ← 1;
    for i ← 1 to n do R_S ← R_S · R_i;
    return R_S;
  if k = 1 then # Evaluate 1ooN block, i.e., Parallel block
    U_P ← 1;
    for i ← 1 to n do U_P ← U_P · (1 − R_i);
    return 1 − U_P;
  # Compute the best value h (see Equation (5))
  h ← …;
  if … then
    …;
    for … do # Compute … (see Equation (4)) and store the result in variable …
    for … do # Compute … (see Equation (4)) and store the result in variable …
    for … do
      if … then …; else …;
      …;
      …;
    return …;
  # The value h is equal to 1: use the traditional recursive formula (see Equation (1))
  R_a ← R_KooN_Recursive(k − 1, n − 1, R);
  R_b ← R_KooN_Recursive(k, n − 1, R);
  return R_n · R_a + (1 − R_n) · R_b;

- they slightly reduce the complexity, hence they decrease the computation time;

- they can be easily implemented with the SIMD paradigm;

- they require fewer temporary variables/vectors, which further decreases the computation time.
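For comparison with Algorithm 1, the sketch below uses a different, well-known technique: a dynamic program over the number of working components. It evaluates a KooN block with generic components in O(n·k) operations per time instant and never revisits a sub-problem. It is not the h-based decomposition of Equations (4) and (5). The function name is hypothetical, and the 255-component cap only mirrors librbd's per-block limit.

```c
#include <assert.h>

#define KOON_MAX_COMPONENTS 255  /* mirrors librbd's per-block limit */

/* P(at least k of n independent components work), with generic reliabilities r[0..n-1].
   dp[j] for j < k: P(exactly j of the components processed so far work);
   dp[k]:           P(at least k of them work), an absorbing state. */
static double koon_generic_dp(int k, int n, const double *r)
{
    assert(n >= 0 && n <= KOON_MAX_COMPONENTS);
    if (k <= 0) return 1.0;
    if (k > n) return 0.0;

    double dp[KOON_MAX_COMPONENTS + 1] = {0.0};
    dp[0] = 1.0;
    for (int i = 0; i < n; ++i) {
        const double ri = r[i];
        const double ui = 1.0 - r[i];
        /* Update from high j to low j so dp[j - 1] still holds the previous value. */
        dp[k] += dp[k - 1] * ri;
        for (int j = k - 1; j >= 1; --j)
            dp[j] = dp[j] * ui + dp[j - 1] * ri;
        dp[0] *= ui;
    }
    return dp[k];
}
```

For a full reliability curve, the same routine is applied at every time instant, with `r` holding the component reliabilities at that instant.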

Algorithm 2: Computation of Bridge block with generic components.

Input: Array R of reliabilities, where R_i is the reliability of the i-th component
Function R_Bridge_Generic(R)
  # Compute … and … variables (see Equation (6))
  …;
  …;
  # Compute reliability of Bridge block (see Equation (6))
  return …;

Algorithm 3: Computation of Bridge block with identical components.

Input: Reliability R of each component
Function R_Bridge_Identical(R)
  # Compute unreliability U of each component
  U ← 1 − R;
  # Compute reliability of Bridge block (see Equation (7))
  return …;
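Algorithms 2 and 3 can be cross-checked against the textbook pivotal decomposition of the bridge on its middle component. The C sketch below uses that standard formula, which may not be the exact form of Equations (6) and (7). The function names are hypothetical. The component indexing is also our assumption and may not match Figure 1e: r[0] and r[1] lie on the upper path, r[2] and r[3] on the lower path, and r[4] is the bridge.

```c
#include <assert.h>

/* Bridge with generic components, conditioning on the bridge component r[4]:
   - bridge works:  (r0 parallel r2) in series with (r1 parallel r3)
   - bridge failed: (r0 series r1) in parallel with (r2 series r3)        */
static double bridge_generic(const double r[5])
{
    const double works  = (1.0 - (1.0 - r[0]) * (1.0 - r[2]))
                        * (1.0 - (1.0 - r[1]) * (1.0 - r[3]));
    const double failed = 1.0 - (1.0 - r[0] * r[1]) * (1.0 - r[2] * r[3]);
    return r[4] * works + (1.0 - r[4]) * failed;
}

/* Identical components: the expression above expands to
   2R^2 + 2R^3 - 5R^4 + 2R^5, evaluated here in Horner form. */
static double bridge_identical(double R)
{
    assert(R >= 0.0 && R <= 1.0);
    return R * R * (2.0 + R * (2.0 + R * (-5.0 + 2.0 * R)));
}
```

These two functions give a quick consistency check between the generic and identical-component paths. At R = 0.5 both return 0.5, and at R = 0.9 both evaluate to 0.97848 up to rounding.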

4.2.2. Single Instruction, Multiple Data (SIMD)

- The use of vectors requires large register files, which increase chip area and power consumption. The higher power consumption raises CPU temperatures, so Dynamic Frequency Scaling techniques may automatically lower the CPU frequency.

- Implementing an algorithm with SIMD instructions requires manual effort, since compilers often fail to vectorize non-trivial code automatically, and explicit intrinsics are then needed.

- Several SIMD extensions have restrictions on data alignment, thus increasing the complexity of the program. Even worse, different data alignment constraints may apply to different SIMD revisions or different processors of the same manufacturer.

- Due to the increased parallelism, more stress is put on the memory bus, since a larger data flow is processed. This stress is further increased in multi-threaded applications, since different threads perform different but concurrent memory accesses.

- Specific instructions, like Fused Multiply-Add (FMA), are not available in some SIMD instruction sets.

- SIMD instruction sets are architecture-specific and some architectures lack SIMD instructions entirely, so programmers must provide a generic non-vectorized implementation and a different vectorized implementation for each covered architecture.
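The sketch below makes the portability and alignment concerns above concrete. It vectorizes a Series block across time instants with x86 SSE2 intrinsics, processing two double values per instruction. A scalar loop serves both as the remainder handler and as the fallback for targets without SSE2. Two things are hypothetical, not librbd's: the component-major layout (r[i·nTimes + t]) and the function name. Unaligned loads (`_mm_loadu_pd`) sidestep the data alignment restrictions.

```c
#include <assert.h>
#include <stddef.h>
#if defined(__SSE2__)          /* set by GCC/Clang; MSVC would need e.g. _M_X64 */
#include <emmintrin.h>         /* SSE2 intrinsics: __m128d holds two doubles */
#endif

/* out[t] = product over components i of r[i * nTimes + t] (Series block curve). */
static void series_curve(const double *r, size_t nComp, size_t nTimes, double *out)
{
    assert(r != NULL && out != NULL);
    size_t t = 0;
#if defined(__SSE2__)
    /* Vector path: two consecutive time instants per iteration. */
    for (; t + 2 <= nTimes; t += 2) {
        __m128d acc = _mm_set1_pd(1.0);
        for (size_t i = 0; i < nComp; ++i)
            acc = _mm_mul_pd(acc, _mm_loadu_pd(&r[i * nTimes + t]));
        _mm_storeu_pd(&out[t], acc);
    }
#endif
    /* Scalar path: leftover instants, or the whole curve without SSE2. */
    for (; t < nTimes; ++t) {
        double acc = 1.0;
        for (size_t i = 0; i < nComp; ++i)
            acc *= r[i * nTimes + t];
        out[t] = acc;
    }
}
```

Vectorizing across time instants, rather than across components, keeps each lane's multiplication order identical to the scalar loop. On x86-64 this should make both paths produce the same bit pattern, which simplifies cross-configuration validation.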

4.2.3. Optimization of Cache Usage with SMP

4.2.4. Native Support to SMP on Windows

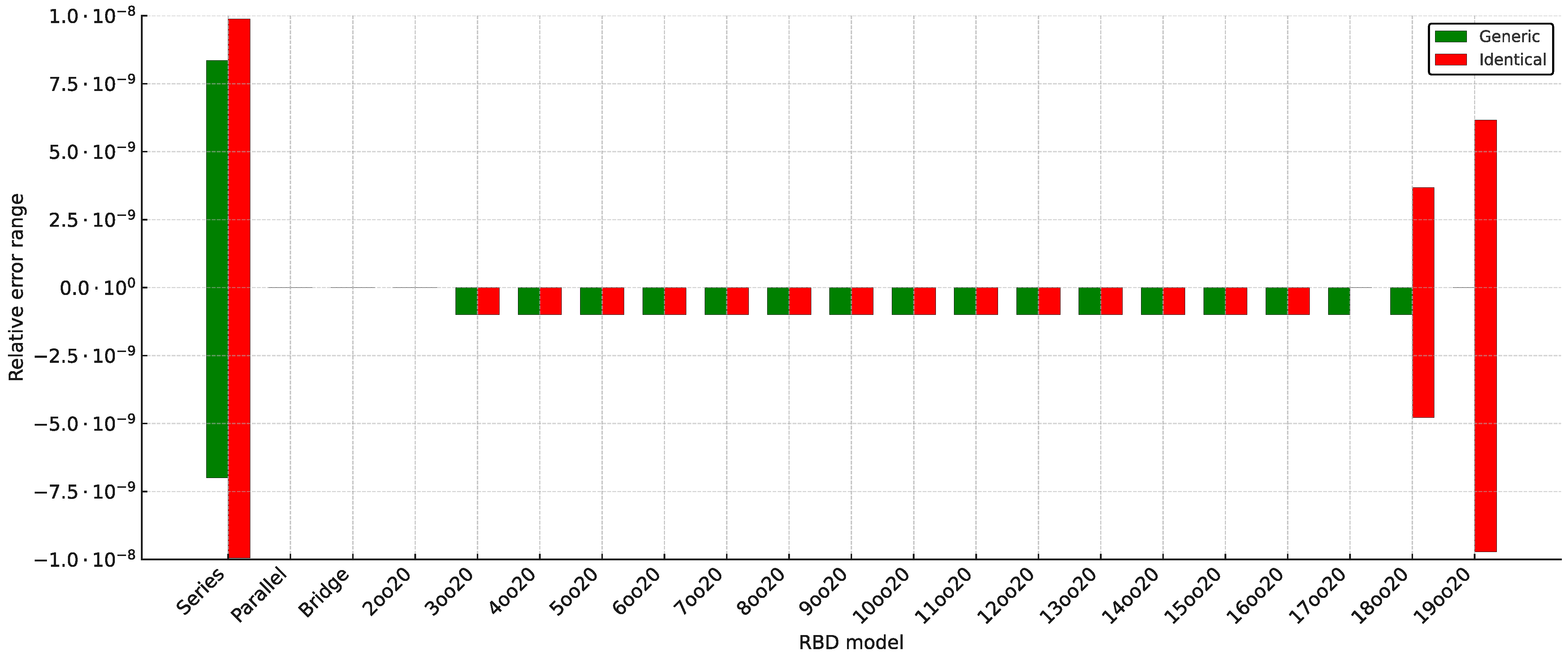

4.3. Validation

- We evaluated the RBD models over 100,000 h. With a sampling period of 1 h, the reliability curve was computed at 100,000 time instants.

| RBD Block | Topology | Components |
|---|---|---|
| Series identical | 20 components | C1 |
| Series generic | 20 components | All |
| Parallel identical | 20 components | C1 |
| Parallel generic | 20 components | All |
| KooN identical | Koo20, | C1 |
| KooN generic | Koo20, | All |
| Bridge identical | 5 components | C1 |
| Bridge generic | 5 components | From C1 to C5 |

| Component | Failure rate λ (1/h) | Component | Failure rate λ (1/h) |
|---|---|---|---|
| C1 | 0.0000084019 | C11 | 0.0000047740 |
| C2 | 0.0000039438 | C12 | 0.0000062887 |
| C3 | 0.0000078310 | C13 | 0.0000036478 |
| C4 | 0.0000079844 | C14 | 0.0000051340 |
| C5 | 0.0000091165 | C15 | 0.0000095223 |
| C6 | 0.0000019755 | C16 | 0.0000091620 |
| C7 | 0.0000033522 | C17 | 0.0000063571 |
| C8 | 0.0000076823 | C18 | 0.0000071730 |
| C9 | 0.0000027777 | C19 | 0.0000014160 |
| C10 | 0.0000055397 | C20 | 0.0000060697 |

5. Performance Evaluation Workbench

5.1. Materials

5.2. Methods

6. Results

6.1. librbd 2.0 Performance Analysis

- For each combination of RBD model, number of time instants, PC, and enabled optimizations, the reliability curve produced by the RBD computation has been stored in a file. This allows us to quickly compare the reliability curves both across architectures and across sets of enabled optimizations. If, for each modeled RBD, the reliability curves are identical across all PCs and all sets of enabled optimizations, librbd 2.0 is considered fully validated.

- For each combination of modeled RBD, number of time instants, PC, and enabled optimizations, the minimum, maximum, and median execution times of librbd 2.0 observed over 15 executions have been stored in a file. This has allowed us to quickly evaluate the performance both across architectures and across sets of enabled optimizations.
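The stored curves can be compared using a maximum relative error metric, the quantity the conclusions report for the comparison with SHARPE. The helper below is a hypothetical sketch. Where the reference sample is zero, it falls back to the absolute error. It also propagates NaN, so a corrupted curve is never reported as matching.

```c
#include <assert.h>
#include <stddef.h>

/* Maximum relative error of `cand` w.r.t. `ref` over n samples.
   Uses the absolute error where ref[t] == 0; returns NaN if any sample is NaN. */
static double max_relative_error(const double *ref, const double *cand, size_t n)
{
    assert(n == 0 || (ref != NULL && cand != NULL));
    double worst = 0.0;
    for (size_t t = 0; t < n; ++t) {
        double diff = cand[t] - ref[t];
        double mag = ref[t];
        if (diff < 0.0) diff = -diff;
        if (mag < 0.0) mag = -mag;
        double err = (mag > 0.0) ? diff / mag : diff;
        if (err != err)          /* NaN: propagate instead of silently skipping */
            return err;
        if (err > worst)
            worst = err;
    }
    return worst;
}
```

Validation then reduces to computing this value for each stored curve against a chosen reference and checking it against a threshold. The reference could be, for instance, the curve from one PC and optimization set.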

6.1.1. Validation with Different Architectures and Enabled Optimizations

6.1.2. Performance Analysis with Different Architectures and Enabled Optimizations

6.2. Considerations on librbd 2.0 Performance

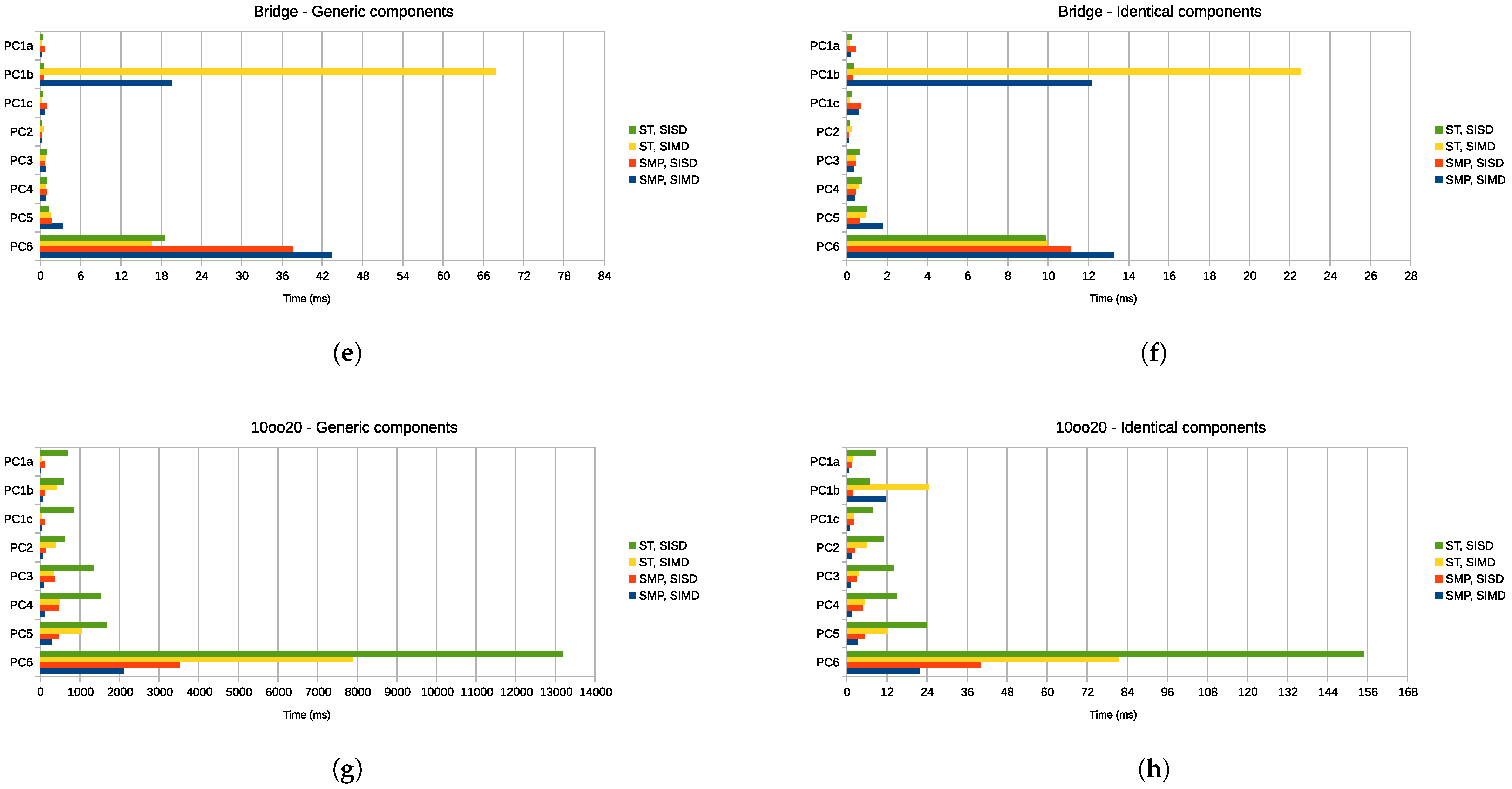

- Considering PC1b, the adoption of SIMD when compiling with Visual Studio 2022 causes a significant loss of performance. This loss of performance may be due to an incorrect setting of file-specific optimization flags or due to compiler issues that limit its capability to effectively optimize source code exploiting SIMD intrinsics.

- Considering PC1 in its three configurations (i.e., combinations of OS and compiler), we observe that, except for those involving SIMD with Visual Studio, the execution time varies little across configurations. This shows that librbd 2.0 can effectively be considered a multi-platform library.

- Considering PC6, i.e., the PC with the lowest computational power, we observe that, on several occasions, e.g., series, parallel, and bridge blocks with generic components, the introduction of SMP slightly degrades the performance. We hypothesize that this phenomenon may be caused by memory latency, since this PC has very limited cache memory and low-bandwidth RAM. Although PC6 is a low-power and dated embedded platform, its performance is nonetheless acceptable. Furthermore, its successor, i.e., PC5, achieves performance comparable to the other tested PCs.

- Considering the results obtained on all PCs, excluding those for 10oo20 blocks, we observe that, on several occasions, the adoption of SMP slightly degrades the performance. We hypothesize that this behavior may be caused by a batch size, i.e., the number of time instants concurrently processed by each thread, that is not well tuned for such simple models. As already discussed in Section 4.2.3, the batch size should be properly tuned. If it is too large, fewer threads are instantiated, which limits the advantages of SMP. If it is too small, each thread executes for a negligible amount of time, and the overhead introduced by SMP negates its advantages. This overhead includes thread creation and termination, context switches, and contention on memory accesses.

- With the exception of PC1b, the use of SIMD extensions with the ST library decreases the execution time. The actual decrease varies with the complexity of the analyzed block: more complex blocks, with a higher number of computation steps per time instant, benefit the most.

- With respect to librbd [15], comparing the results obtained with librbd and librbd 2.0, we observe that for bridge, parallel, and series blocks, librbd 2.0 generally achieves performance comparable to librbd. This may be because, for these trivial blocks, the benefits of SIMD are roughly offset by the overhead of the more complex source code required to implement the new features. For KooN blocks, i.e., blocks characterized by high complexity and a high number of computations per time instant, we observe huge benefits for blocks with both generic and identical components. For example, consider PC3: using librbd, an 8oo15 block with generic components on 200,000 time instants was analyzed in ms, while librbd 2.0 analyzed the same block in just ms.

- Regarding RBDs with complex nestings of basic blocks, librbd 2.0 can be used by applying standard functional composition. If a basic block $B$ is composed of the blocks $B_1, \dots, B_m$, and $f_B$ is the function computing its success probability from those of its sub-blocks, the overall success probability is $R_B(t) = f_B\big(R_{B_1}(t), \dots, R_{B_m}(t)\big)$. Thus, the execution times of the individual basic blocks add up linearly.
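The batch-size trade-off discussed above can be illustrated with a POSIX threads sketch. It splits a Series curve into contiguous batches of time instants and assigns them round-robin to at most `maxThreads` workers. Contiguous batches keep each thread's accesses within neighboring cache lines, while the batch size controls how many threads are actually started. The structure and function names are hypothetical; this is not librbd 2.0's scheduler.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    const double *r;       /* component-major curves: r[i * nTimes + t] */
    size_t nComp, nTimes;
    size_t batch;          /* time instants per batch */
    size_t first, stride;  /* this worker handles batches first, first + stride, ... */
    double *out;
} SeriesJob;

static void *series_worker(void *arg)
{
    const SeriesJob *job = arg;
    for (size_t b = job->first; b * job->batch < job->nTimes; b += job->stride) {
        size_t begin = b * job->batch;
        size_t end = begin + job->batch < job->nTimes ? begin + job->batch : job->nTimes;
        for (size_t t = begin; t < end; ++t) {
            double acc = 1.0;
            for (size_t i = 0; i < job->nComp; ++i)
                acc *= job->r[i * job->nTimes + t];
            job->out[t] = acc;   /* each t is written by exactly one thread */
        }
    }
    return NULL;
}

/* Returns 0 on success, -1 on invalid arguments or thread/memory failure. */
static int series_curve_smp(const double *r, size_t nComp, size_t nTimes,
                            size_t batch, size_t maxThreads, double *out)
{
    assert(r != NULL && out != NULL);
    if (batch == 0 || maxThreads == 0) return -1;
    size_t nBatches = (nTimes + batch - 1) / batch;
    size_t nThreads = nBatches < maxThreads ? nBatches : maxThreads;
    if (nThreads == 0) return 0;                 /* empty curve */

    pthread_t *tid = malloc(nThreads * sizeof *tid);
    SeriesJob *jobs = malloc(nThreads * sizeof *jobs);
    if (tid == NULL || jobs == NULL) { free(tid); free(jobs); return -1; }

    size_t started = 0;
    int rc = 0;
    for (size_t k = 0; k < nThreads; ++k) {
        jobs[k] = (SeriesJob){ r, nComp, nTimes, batch, k, nThreads, out };
        if (pthread_create(&tid[k], NULL, series_worker, &jobs[k]) != 0) { rc = -1; break; }
        ++started;
    }
    for (size_t k = 0; k < started; ++k)
        pthread_join(tid[k], NULL);
    free(tid);
    free(jobs);
    return rc;
}
```

If `batch` equals the number of time instants, only one thread starts and SMP brings no benefit. If `batch` is 1, neighboring instants go to different threads, so workers write into the same cache lines (false sharing). This mirrors the tuning problem described above.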

6.3. Performance Comparison w.r.t. SHARPE

6.4. Current Limitations

- Limited compiler support: since librbd 2.0 exploits compiler-dependent features, it requires one of GCC, Clang, or MSVC. We consider this limitation minor, since MSVC is the primary compiler for Microsoft Windows OSes, while GCC and Clang are open-source compilers widely available across different OSes and architectures.

- Limited OS support: librbd 2.0 exploits both OS-dependent and compiler-dependent features to implement the SMP paradigm. As a result, when SMP is used, only Microsoft Windows, Linux, and MacOS are supported. We consider this limitation negligible, since these three OSes cover the majority of OSes in use today. Furthermore, this limitation applies only to the SMP version: during compilation, librbd 2.0 automatically detects the target OS and, if it is not supported, disables SMP.

- Limited SIMD support: librbd 2.0 supports SIMD extensions only for the x86, amd64, and AArch64 architectures. We believe that the supported SIMD extensions cover the majority of commercially available ones. Nonetheless, support for additional SIMD extensions, e.g., PowerPC Vector Scalar Extension (VSX), could be introduced in future developments.

- Untested amd64 AVX512F support: support for the AVX512F SIMD extension, introduced in librbd 2.0, is still untested, since none of the CPUs used in the performance analysis supports this ISA. We consider this limitation not relevant for two reasons: (i) this ISA is mainly supported by newer server CPUs; (ii) the AVX512F algorithms were developed by porting the FMA-based implementation to AVX512F-specific intrinsics.

- Scalability limitations of the KooN algorithm: the new algorithm for computing KooN blocks with generic components is a huge improvement over librbd; nonetheless, its computational complexity is still an issue for large values of N. We consider this limitation negligible for two reasons. First, librbd 2.0 has shown that generic 10oo20 blocks can be computed in reasonable time even on low-performance PCs. Second, this block can reasonably be considered a worst-case scenario in practical RBD usage.
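The compile-time OS detection described above, together with SIMD selection, can be sketched with predefined compiler macros. `RBD_SMP_ENABLED`, `rbd_cpu_count`, and `rbd_simd_level` are hypothetical names. The OS and ISA macros tested (`_WIN32`, `__linux__`, `__APPLE__`, `__AVX512F__`, `__AVX2__`, `__FMA__`, `__SSE2__`, `__aarch64__`, `_M_X64`, `_M_ARM64`) are predefined by GCC, Clang, or MSVC. Which of them a given build sees depends on the compiler and on target flags.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical switch: SMP only on OSes with a known threading backend. */
#if defined(_WIN32)
#  include <windows.h>
#  define RBD_SMP_ENABLED 1
#elif defined(__linux__) || defined(__APPLE__)
#  include <unistd.h>
#  define RBD_SMP_ENABLED 1
#else
#  define RBD_SMP_ENABLED 0   /* unknown OS: build single-threaded (ST) */
#endif

/* Number of worker threads to use; always 1 when SMP is disabled. */
static unsigned rbd_cpu_count(void)
{
#if RBD_SMP_ENABLED && defined(_WIN32)
    SYSTEM_INFO info;
    GetSystemInfo(&info);
    return info.dwNumberOfProcessors > 0 ? (unsigned)info.dwNumberOfProcessors : 1u;
#elif RBD_SMP_ENABLED
    long n = sysconf(_SC_NPROCESSORS_ONLN);   /* online CPUs on Linux and macOS */
    return n > 0 ? (unsigned)n : 1u;
#else
    return 1u;
#endif
}

/* Widest SIMD level the compiler is allowed to use for this build.
   MSVC does not define __FMA__, so an MSVC AVX2 build reports SSE2 here. */
static const char *rbd_simd_level(void)
{
#if defined(__AVX512F__)
    return "AVX512F";
#elif defined(__AVX2__) && defined(__FMA__)
    return "AVX2+FMA";
#elif defined(__SSE2__) || defined(_M_X64) || (defined(_M_IX86_FP) && _M_IX86_FP >= 2)
    return "SSE2";
#elif defined(__aarch64__) || defined(_M_ARM64)
    return "AArch64 NEON";
#else
    return "scalar";
#endif
}
```

When none of the OS branches matches, the sketch falls back to a single worker. This corresponds to the automatic SMP disabling described above.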

7. Conclusions and Future Developments

- RQ1: Non-parametric reliability analysis. librbd was designed to compute the reliability of systems whose components have arbitrary, non-parametric failure distributions. Our validation against the established SHARPE tool confirms that librbd 2.0 maintains this crucial capability, with the maximum absolute relative error between the computed reliability curves remaining consistently below 1.0 across all models analyzed. This result ensures that the high numerical accuracy required by RQ1 is fully preserved.

- RQ2: Reducing computational complexity through novel algorithms. Complexity was reduced primarily by implementing novel mathematical formulas. Specifically, new algorithms were introduced to efficiently compute the reliability of the KooN block with generic components and of the Bridge block with both generic and identical components. Performance analysis across various hardware platforms demonstrated the efficacy of these methods, showing a substantial reduction in computation time. Crucially, these new algorithms enabled the analysis of complex RBD models, such as large KooN configurations, that were previously unfeasible using librbd.

- RQ3: Leveraging multicore architectures for platform-agnostic performance. To effectively utilize modern multicore architectures, as stipulated by RQ3, we implemented two key improvements: an optimized usage of cache memory within the SMP paradigm, and native support for the SIMD programming paradigm. These techniques increased computational throughput and improved overall performance. Performance tests conducted on diverse PCs, CPUs, and OSes confirmed that these features contribute to the platform-agnostic performance improvements necessary for deployment on a wide range of embedded and high-performance systems.

- To promote broader adoption and ease of use, we are currently working on the definition of an RBD Description Language (RDL) based on the XML format. This RDL will be leveraged, alongside librbd 2.0, to develop an application for generic system-level reliability analysis.

- To address the identified limitations concerning SIMD extensions (limited support and untested AVX512F-specific code), we plan to perform a dedicated test session using server CPUs and to add support for additional SIMD extensions, such as PowerPC VSX.

- To resolve the observed performance discrepancy when using SIMD with the MSVC compiler, we plan to investigate the differences between the assembly code generated by GCC and MSVC and to tune the MSVC-specific compiler options accordingly.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- ISO/IEC/IEEE 24765:2010(E); International Standard-Systems and Software Engineering—Vocabulary. IEEE: New York, NY, USA, 2010; pp. 1–418. [CrossRef]

- EN 50126-1; Railway Applications—The Specification and Demonstration of Reliability, Availability, Maintainability and Safety (RAMS)-Part 1: Generic RAMS Process. Technical Report; CENELEC: Brussels, Belgium, 2017.

- Hollander, M.; Peña, E.A. Nonparametric Methods in Reliability. Stat. Sci. 2004, 19, 644. [Google Scholar] [CrossRef] [PubMed]

- Xing, L. An Efficient Binary-Decision-Diagram-Based Approach for Network Reliability and Sensitivity Analysis. IEEE Trans. Syst. Man, Cybern.-Part A Syst. Humans 2008, 38, 105–115. [Google Scholar] [CrossRef]

- Green, R.C.; Agrawal, V. A case study in multi-core parallelism for the reliability evaluation of composite power systems. J. Supercomput. 2017, 73, 5125–5149. [Google Scholar] [CrossRef]

- Nelissen, G.; Pereira, D.; Pinho, L.M. A Novel Run-Time Monitoring Architecture for Safe and Efficient Inline Monitoring. In Proceedings of the 2015 International Conference on Reliable Software Technologies (Ada-Europe 2015), Madrid, Spain, 22–26 June 2015; pp. 66–82. [Google Scholar] [CrossRef]

- van der Sande, R.; Shekhar, A.; Bauer, P. Reliable DC Shipboard Power Systems—Design, Assessment, and Improvement. IEEE Open J. Ind. Electron. Soc. 2025, 6, 235–264. [Google Scholar] [CrossRef]

- Pan, X.; Chen, H.; Shen, A.; Zhao, D.; Su, X. Reliability Assessment Method for Complex Systems Based on Non-Homogeneous Markov Processes. Sensors 2024, 24, 3446. [Google Scholar] [CrossRef] [PubMed]

- Song, Y.; Wang, X. Reliability Analysis of the Multi-State k-out-of-n: F Systems with Multiple Operation Mechanisms. Mathematics 2022, 10, 4615. [Google Scholar] [CrossRef]

- Carberry, J.R.; Rahme, J.; Xu, H. Real-Time rejuvenation scheduling for cloud systems with virtualized software spares. J. Syst. Softw. 2024, 217, 112168. [Google Scholar] [CrossRef]

- Nguyen, T.A.; Min, D.; Choi, E.; Tran, T.D. Reliability and Availability Evaluation for Cloud Data Center Networks Using Hierarchical Models. IEEE Access 2019, 7, 9273–9313. [Google Scholar] [CrossRef]

- Dohi, T.; Zheng, J.; Okamura, H.; Trivedi, K.S. Optimal periodic software rejuvenation policies based on interval reliability criteria. Reliab. Eng. Syst. Saf. 2018, 180, 463–475. [Google Scholar] [CrossRef]

- Fantechi, A.; Gori, G.; Papini, M. Software rejuvenation and runtime reliability monitoring. In Proceedings of the 2022 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW), Charlotte, NC, USA, 31 October–3 November 2022; pp. 162–169. [Google Scholar] [CrossRef]

- Carnevali, L.; Fantechi, A.; Gori, G.; Vreshtazi, D.; Borselli, A.; Cefaloni, M.R.; Rota, L. Data-Driven Synthesis of Stochastic Fault Trees for Proactive Maintenance of Railway Vehicles. In Proceedings of the 2025 30th International Conference on Formal Methods for Industrial Critical Systems (FMICS), Aarhus, Denmark, 25–30 August 2025; pp. 162–181. [Google Scholar] [CrossRef]

- Carnevali, L.; Ciani, L.; Fantechi, A.; Gori, G.; Papini, M. An Efficient Library for Reliability Block Diagram Evaluation. Appl. Sci. 2021, 11, 4026. [Google Scholar] [CrossRef]

- Sahner, R.A.; Trivedi, K.S.; Puliafito, A. Performance and Reliability Analysis of Computer Systems: An Example-Based Approach Using the SHARPE Software Package; Kluwer Academic Publishers: Alphen aan den Rijn, The Netherlands, 1996. [Google Scholar] [CrossRef]

- SHARPE Portal. Duke University Pratt School of Engineering. Web Page. Available online: https://sharpe.pratt.duke.edu/ (accessed on 7 October 2025).

- Trivedi, K.S.; Bobbio, A. Reliability and Availability Engineering; Cambridge University Press: Cambridge, UK, 2017. [Google Scholar] [CrossRef]

- Mahboob, Q.; Zio, E. Handbook of RAMS in Railway Systems: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2018. [Google Scholar] [CrossRef]

- Moskowitz, F. The analysis of redundancy networks. Trans. Am. Inst. Electr. Eng. Part I Commun. Electron. 1958, 77, 627–632. [Google Scholar] [CrossRef]

- IEC 61078; Reliability Block Diagrams. Technical Report; IEC: Geneva, Switzerland, 2016.

- Hixenbaugh, A.F. Fault Tree for Safety; Technical Report; Boeing Aerospace Company: Seattle, WA, USA, 1968. [Google Scholar]

- IEC 61025; Fault Tree Analysis (FTA). Technical Report; IEC: Geneva, Switzerland, 2006.

- Rubino, G. Network reliability evaluation. In State-of-the-Art in Performance Modeling and Simulation; Bagchi, K., Walrand, J., Eds.; Gordon & Breach Books: London, UK, 1998; pp. 275–301. [Google Scholar]

- Bryant, R.E. Graph-Based Algorithms for Boolean Function Manipulation. IEEE Trans. Comput. 1986, C-35, 677–691. [Google Scholar] [CrossRef]

- Ericson, C.A. Fault Tree Analysis—A History. In Proceedings of the 17th International System Safety Conference, Orlando, FL, USA, 16–21 August 1999; pp. 1–9. [Google Scholar]

- Stewart, W. Introduction to the Numerical Solution of Markov Chains; Princeton University Press: Princeton, NJ, USA, 1994. [Google Scholar]

- IEC 61165; Application of Markov Techniques. Technical Report; IEC: Geneva, Switzerland, 2006.

- Molloy, M. Performance Analysis Using Stochastic Petri Nets. IEEE Trans. Comput. 1982, 31, 913–917. [Google Scholar] [CrossRef]

- Marsan, M.A.; Conte, G.; Balbo, G. A class of generalized stochastic petri nets for the performance evaluation of multiprocessor systems. ACM Trans. Comput. Syst. 1983, 2, 93–122. [Google Scholar] [CrossRef]

- Vicario, E.; Sassoli, L.; Carnevali, L. Using stochastic state classes in quantitative evaluation of dense-time reactive systems. IEEE Trans. Softw. Eng. 2009, 35, 703–719. [Google Scholar] [CrossRef]

- IEC 62551; Analysis Techniques for Dependability—Petri Net Techniques. Technical Report; IEC: Geneva, Switzerland, 2012.

- Ciardo, G.; Blakemore, A.; Chimento, P.F.; Muppala, J.K.; Trivedi, K.S. Automated Generation and Analysis of Markov Reward Models Using Stochastic Reward Nets. In Linear Algebra, Markov Chains, and Queueing Models; Meyer, C.D., Plemmons, R.J., Eds.; Springer: New York, NY, USA, 1993; pp. 145–191. [Google Scholar]

- Ciardo, G.; Trivedi, K.S. A decomposition approach for stochastic reward net models. Perform. Eval. 1993, 18, 37–59. [Google Scholar] [CrossRef]

- Meyer, J.; Movaghar, A.; Sanders, W. Stochastic Activity Networks: Structure, Behavior, and Application. In Proceedings of the International Workshop on Timed Petri Nets, Torino, Italy, 1–3 July 1985; pp. 106–115. [Google Scholar]

- Sanders, W.H.; Meyer, J.F. Stochastic Activity Networks: Formal Definitions and Concepts. In Lectures on Formal Methods and Performance Analysis: First EEF/Euro Summer School on Trends in Computer Science Bergen Dal, The Netherlands, 3–7 July 2000; Hermanns, H., Katoen, J.-P., Eds.; Springer: Berlin/Heidelberg, Germany, 2000; pp. 315–343. [Google Scholar] [CrossRef]

- Distefano, S.; Puliafito, A. Dynamic reliability block diagrams: Overview of a methodology. In Proceedings of the European Safety and Reliability Conference 2007, ESREL 2007-Risk, Reliability and Societal Safety, Stavanger, Norway, 25–27 June 2007; Volume 2. [Google Scholar]

- Distefano, S.; Puliafito, A. Dependability Evaluation with Dynamic Reliability Block Diagrams and Dynamic Fault Trees. IEEE Trans. Dependable Secur. Comput. 2009, 6, 4–17. [Google Scholar] [CrossRef]

- Dugan, J.B.; Bavuso, S.J.; Boyd, M.A. Dynamic fault-tree models for fault-tolerant computer systems. IEEE Trans. Reliab. 1992, 41, 363–377. [Google Scholar] [CrossRef]

- Codetta-Raiteri, D. The Conversion of Dynamic Fault Trees to Stochastic Petri Nets, as a case of Graph Transformation. Electron. Notes Theor. Comput. Sci. 2005, 127, 45–60. [Google Scholar] [CrossRef]

- Volk, M.; Weik, N.; Katoen, J.P.; Nießen, N. A DFT Modeling Approach for Infrastructure Reliability Analysis of Railway Station Areas. In Formal Methods for Industrial Critical Systems; Larsen, K.G., Willemse, T., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 40–58. [Google Scholar] [CrossRef]

- Dai, Y.-S.; Pan, Y.; Zou, X. A Hierarchical Modeling and Analysis for Grid Service Reliability. IEEE Trans. Comput. 2007, 56, 681–691. [Google Scholar] [CrossRef]

- Kim, D.S.; Ghosh, R.; Trivedi, K.S. A Hierarchical Model for Reliability Analysis of Sensor Networks. In Proceedings of the 2010 IEEE 16th Pacific Rim International Symposium on Dependable Computing (PRDC), Tokyo, Japan, 13–15 December 2010; pp. 247–248. [Google Scholar] [CrossRef]

- Fantechi, A.; Gori, G.; Papini, M. Runtime Reliability Monitoring for Complex Fault-Tolerance Policies. In Proceedings of the 2022 IEEE 6th International Conference on System Reliability and Safety (ICSRS), Venice, Italy, 23–25 November 2022; pp. 110–119. [Google Scholar] [CrossRef]

- Siewiorek, D.P.; Swarz, R.S. Reliable Computer Systems: Design and Evaluation, 3rd ed.; A. K. Peters, Ltd.: Natick, MA, USA, 1998. [Google Scholar]

- Isograph Reliability Workbench. Web Page. Available online: https://www.isograph.com/software/reliability-workbench/rbd-analysis/ (accessed on 7 October 2025).

- Relyence RBD. Web Page. Available online: https://www.relyence.com/products/rbd/ (accessed on 7 October 2025).

- ALD RAM Commander—RBD Module. Web Page. Available online: https://aldservice.com/Reliability-Products/reliability-block-diagram-rbd.html (accessed on 7 October 2025).

- Janardhanan, S.; Badnava, S.; Agarwal, R.; Mas-Machuca, C. PyRBD: An Open-Source Reliability Block Diagram Evaluation Tool. In Proceedings of the 2024 IEEE 38th International Workshop on Communications Quality and Reliability (CQR), Seattle, WA, USA, 9–12 September 2024; pp. 19–24.

- PyRBD GitHub Repository. Web Page. Available online: https://github.com/shakthij98/PyRBD (accessed on 7 October 2025).

- Janardhanan, S.; Chen, Y.; Mas-Machuca, C. PyRBD++: An Open-Source Fast Reliability Block Diagram Evaluation Tool. In Proceedings of the 2025 IEEE 15th International Workshop on Resilient Networks Design and Modeling (RNDM), Trondheim, Norway, 9–11 June 2025; pp. 1–7.

- PyRBD++ GitHub Repository. Web Page. Available online: https://github.com/shakthij98/PyRBD_plusplus (accessed on 7 October 2025).

- librbd GitHub Repository. Web Page. Available online: https://github.com/marcopapini/librbd (accessed on 7 October 2025).

- Fourment, M.; Gillings, M. A comparison of common programming languages used in bioinformatics. BMC Bioinform. 2008, 9, 82.

- IEEE Std 754-2019 (Revision of IEEE Std 754-2008); IEEE Standard for Floating-Point Arithmetic. IEEE: New York, NY, USA, 2019; pp. 1–84.

- IEEE Std 1003.1-2017 (Revision of IEEE Std 1003.1-2008); IEEE Standard for Information Technology–Portable Operating System Interface (POSIX™) Base Specifications, Issue 7. IEEE: New York, NY, USA, 2018; pp. 1–3951.

- pthreads-win32—Open Source POSIX Threads for Win32. Web Page. Available online: http://sourceware.org/pthreads-win32/ (accessed on 7 October 2025).

- Cygwin. Web Page. Available online: https://www.cygwin.com/ (accessed on 7 October 2025).

- Telcordia SR-332 Reliability Prediction Procedure for Electronic Equipment; Technical Report Issue 4; Telcordia Network Infrastructure Solutions (NIS): Bridgewater, NJ, USA, 2016.

- Hodson, T.O. Root-mean-square error (RMSE) or mean absolute error (MAE): When to use them or not. Geosci. Model Dev. 2022, 15, 14.

| Architecture | SIMD | Vector Size (Bits) | FMA Support |
|---|---|---|---|
| x86 | SSE2 | 128 | no |
| | AVX | 256 | no |
| amd64 | FMA | 256 | yes |
| | AVX512F | 512 | yes |
| AArch64 | NEON | 128 | yes |

| RBD Model | Generic RMSE | Generic MAE | Identical RMSE | Identical MAE |
|---|---|---|---|---|
| Series | | | | |
| Parallel | | | | |
| Bridge | | | | |
| 2oo20 | | | | |
| 3oo20 | | | | |
| 4oo20 | | | | |
| 5oo20 | | | | |
| 6oo20 | | | | |
| 7oo20 | | | | |
| 8oo20 | | | | |
| 9oo20 | | | | |
| 10oo20 | | | | |
| 11oo20 | | | | |
| 12oo20 | | | | |
| 13oo20 | | | | |
| 14oo20 | | | | |
| 15oo20 | | | | |
| 16oo20 | | | | |
| 17oo20 | | | | |
| 18oo20 | | | | |
| 19oo20 | | | | |

| Name | CPU | Cores/Threads | RAM | OS & Compiler |
|---|---|---|---|---|
| PC1a | Intel i7-13700K | 8/16 @ 5.4GHz + 8/8 @ 4.2GHz | 64GB-DDR5 @ 5600MHz | Ubuntu 22.04 GCC 11.4.0 |
| PC1b | Intel i7-13700K | 8/16 @ 5.4GHz + 8/8 @ 4.2GHz | 64GB-DDR5 @ 5600MHz | Windows 11 Visual Studio 2022 |
| PC1c | Intel i7-13700K | 8/16 @ 5.4GHz + 8/8 @ 4.2GHz | 64GB-DDR5 @ 5600MHz | Windows 11 GCC 12.4.0 |
| PC2 | Apple M3 | 4/4 @ 4.06GHz + 4/4 @ 2.57GHz | 16GB-LPDDR5 @ 3200MHz | Mac OS 14.5 clang 15.0.0 |
| PC3 | Intel i7-7700HQ | 4/8 @ 3.8GHz | 32GB-DDR4 @ 2400MHz | Ubuntu 22.04 GCC 11.4.0 |
| PC4 | Intel i7-6700HQ | 4/8 @ 3.5GHz | 16GB-LPDDR3 @ 2133MHz | Mac OS 10.13.6 clang 10.0.0 |
| PC5 | Broadcom BCM2712 | 4/4 @ 2.4GHz | 8GB-LPDDR4X @ 2133MHz | Raspberry Pi OS 12 GCC 12.2.0 |
| PC6 | Broadcom BCM2837 | 4/4 @ 1.2GHz | 1GB-LPDDR2 @ 900MHz | Raspberry Pi OS 11 GCC 10.2.1 |

| RBD Block | N |
|---|---|
| Series Generic | |
| Series Identical | |
| Parallel Generic | |
| Parallel Identical | |
| ooN Generic | |
| ooN Identical | |
| Bridge Generic | |
| Bridge Identical | |
| KooN Generic, | |
| KooN Identical, | |

| RBD Model | Generic: SHARPE | Generic: librbd 2.0 | Identical: SHARPE | Identical: librbd 2.0 |
|---|---|---|---|---|
| Series | 2140.0 | 0.1 | 1150.0 | 0.1 |
| Parallel | 2320.0 | 0.1 | 1350.0 | 0.1 |
| Bridge | 990.0 | 0.7 | 1010.0 | 0.6 |
| 2oo20 | 2480.0 | 2.5 | 1510.0 | 0.5 |
| 3oo20 | 2590.0 | 8.1 | 1640.0 | 0.7 |
| 4oo20 | 2690.0 | 16.7 | 1730.0 | 0.8 |
| 5oo20 | 2780.0 | 26.8 | 1820.0 | 1.0 |
| 6oo20 | 2910.0 | 32.6 | 1900.0 | 0.9 |
| 7oo20 | 3020.0 | 35.0 | 2000.0 | 1.0 |
| 8oo20 | 3010.0 | 33.5 | 2020.0 | 1.2 |
| 9oo20 | 3110.0 | 35.8 | 2070.0 | 0.9 |
| 10oo20 | 3050.0 | 33.2 | 2080.0 | 1.1 |
| 11oo20 | 3020.0 | 35.1 | 2090.0 | 1.1 |
| 12oo20 | 2970.0 | 35.0 | 2030.0 | 1.0 |
| 13oo20 | 2970.0 | 32.8 | 2010.0 | 1.0 |
| 14oo20 | 2840.0 | 31.6 | 1930.0 | 1.1 |
| 15oo20 | 2810.0 | 29.9 | 1900.0 | 1.0 |
| 16oo20 | 2730.0 | 25.8 | 1750.0 | 0.9 |
| 17oo20 | 2630.0 | 15.2 | 1650.0 | 0.7 |
| 18oo20 | 2410.0 | 7.3 | 1490.0 | 0.6 |
| 19oo20 | 2270.0 | 2.4 | 1340.0 | 0.6 |
| 15oo30 | 4870.0 | 2024.0 | 3510.0 | 1.2 |
| 20oo40 | 7300.0 | 53,901.3 | 5450.0 | 2.1 |
| 25oo50 | 10,150.0 | 2,277,788.3 | 7810.0 | 3.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gori, G.; Papini, M.; Fantechi, A. Efficient Reliability Block Diagram Evaluation Through Improved Algorithms and Parallel Computing. Appl. Sci. 2025, 15, 11397. https://doi.org/10.3390/app152111397
