Abstract

As the complexity of Very-Large-Scale Integration (VLSI) circuits escalates, Design-for-Test (DFT) faces significant challenges. Traditional script-based automation flows are increasingly complex and present a high technical barrier for non-specialists. To address this issue, this paper introduces DFTAgent, a novel framework that leverages Large Language Models (LLMs) to intelligently orchestrate a DFT toolchain. DFTAgent is evaluated on the ISCAS’85, ISCAS’89, and ITC’99 benchmarks. The results demonstrate that DFTAgent completes the full automatic test pattern generation (ATPG) task cycle, achieving fault coverage comparable to a manually scripted baseline while exhibiting significant advantages in flexibility and error handling. By abstracting complex DFT tools behind a natural language interface and a visual workflow, this approach promises to democratize access to advanced VLSI testing methodologies and accelerate design cycles.

1. Introduction

The modern semiconductor landscape is undergoing an unprecedented transformation, with shrinking process nodes and rising transistor counts driving an exponential increase in integrated circuit (IC) design complexity. In this context, Design-for-Test (DFT) has emerged as a crucial discipline [1,2]. DFT encompasses a set of design techniques that integrate testability features into an IC at the design stage, with the core objective of simplifying and automating the manufacturing test process. By implementing DFT, design teams aim to achieve high fault coverage, reduce test time, lower test costs, and ultimately improve chip yield and reliability.

Current DFT flows rely heavily on automation, typically orchestrated by scripts written in languages such as Tcl or Perl to control complex Electronic Design Automation (EDA) tools. However, the reliance on this script-based automation has created a significant bottleneck. Industry analyses suggest that DFT engineers can spend a substantial portion of their time debugging and maintaining these brittle scripts, particularly when EDA tool versions are updated or design files present minor, unexpected variations [3]. This maintenance overhead not only inflates project costs but also lengthens the critical time-to-market. While scripts are effective for repetitive tasks, their inherent limitations become increasingly apparent when facing complex designs [4]: (1) Rigidity and Brittleness: Scripts follow a fixed, procedural logic. They lack adaptability and robustness, and often fail or produce erroneous results when encountering minor variations in tool outputs, log file format updates, or environmental configuration issues. (2) High Maintenance Overhead: These scripts are tightly coupled to specific EDA tool versions and design methodologies. Any update to either can require significant time from domain experts for script modification, debugging, and re-validation, leading to high maintenance costs. (3) Steep Learning Curve: Mastering the complex command sets and scripting languages of various EDA tools from major vendors like Synopsys, Cadence, and Siemens EDA poses a significant challenge for non-DFT engineers. For instance, a typical DFT constraint script is replete with highly specialized, tool-specific commands such as set_pin_constant and set_ignore_output, which raises the barrier for cross-disciplinary collaboration.

Autonomous agents driven by Large Language Models (LLMs) are emerging as a transformative technology for automating complex and multi-step digital tasks. Unlike traditional scripts, these agents can comprehend high-level goals, decompose them into executable steps, interact with external tools, and learn from feedback to adapt their strategy. Recent research has demonstrated the immense potential of LLM agents in specialized domains. In the EDA domain, the application of LLMs has become a significant and burgeoning field of research, as highlighted by recent surveys [5]. LLMs are increasingly being investigated for automating complex tasks; for example, the JARVIS framework [6] aims to generate high-quality EDA scripts. These advances indicate that agent capabilities extend beyond general tasks to handle highly specialized problems, presenting a clear opportunity to reimagine automation in fields like DFT.

In the EDA field, a traditional Tcl script is imperative (“do A, then do B”). If a step fails or yields an unexpected result, the entire flow often halts. In contrast, a tool-calling LLM agent is declarative (“achieve result C”). The agent operates in a continuous loop: it thinks about the goal, acts by invoking a tool, observes the outcome, and thinks again to plan its next move. This dynamic process means the agent is not merely executing a pre-set program but is actively solving a problem. For example, if an automatic test pattern generation (ATPG) run achieves only 95% fault coverage against a 99% target, the agent can observe this gap, reason about possible next steps (e.g., “try a different ATPG algorithm” or “increase pattern count”), and autonomously take new actions. This marks a fundamental transition in the EDA field from a static, procedural execution model to a dynamic and intelligent automation model.

Therefore, this paper introduces DFTAgent, a framework that overcomes the limitations of traditional scripting by providing an intelligent, adaptive, and accessible control layer for existing DFT tools. We specifically make the following contributions:

- We propose and implement DFTAgent, an innovative framework integrating an LLM agent with DFT tools. This includes a methodology for encapsulating legacy EDA tools into a structured toolset for reliable agent interaction.

- We provide a comprehensive empirical evaluation of the framework’s performance on industry-standard benchmarks, demonstrating its advantages in robustness and ease of use over traditional script-based approaches.

- We release the complete implementation of the DFTAgent framework as an open-source project to foster reproducibility and provide a practical baseline for future research in EDA automation, available at: https://github.com/ame-shiro/DFTAgent (accessed on 23 October 2025).

2. Background and Related Work

2.1. DFT Workflows

Core concepts in DFT revolve around controllability and observability, which serve as its cornerstones. Controllability refers to the ability to set an internal circuit node to a specific logic value, either 0 or 1, while observability involves the capability to observe the logic value of an internal node. DFT techniques fundamentally aim to enhance the controllability and observability of these internal nodes by incorporating extra design logic.

Key technologies in DFT include scan design, which is the most prevalent technique. It connects all or a subset of the flip-flops in a design into one or more shift register chains, known as scan chains, providing serial access to the circuit’s internal state. In test mode, test patterns are serially shifted into the scan chains to set the internal state; following a capture cycle, the circuit’s response is captured and shifted out for comparison against expected values. This method elegantly transforms the complex problem of sequential circuit testing into a more manageable combinational one.
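The shift-in, capture, and shift-out sequence described above can be illustrated with a toy model. The following Python sketch is only an illustration under stated assumptions: the three-flip-flop chain, the `toy_logic` next-state function, and the helper name `scan_test` are hypothetical and are not part of DFTAgent or of any EDA tool.

```python
from typing import Callable, List

Bits = List[int]


def scan_test(pattern: Bits, next_state: Callable[[Bits], Bits]) -> Bits:
    """Apply one scan test: shift the pattern in, capture once, shift the response out."""
    chain = [0] * len(pattern)

    # Shift-in: one bit enters at the head of the chain per clock cycle.
    # Feeding the pattern in reverse leaves pattern[i] in flip-flop i.
    for bit in reversed(pattern):
        chain = [bit] + chain[:-1]

    # Capture: a single functional clock loads the combinational response.
    chain = next_state(chain)

    # Shift-out: the tail flip-flop is observed on every cycle.
    observed = []
    for _ in range(len(chain)):
        observed.append(chain[-1])
        chain = [0] + chain[:-1]

    # Reverse so that observed[i] corresponds to flip-flop i.
    return observed[::-1]


def toy_logic(state: Bits) -> Bits:
    """Hypothetical combinational logic between the flip-flops."""
    return [state[0] ^ state[1], state[1] & state[2], 1 - state[2]]


response = scan_test([1, 1, 0], toy_logic)
```

Because every flip-flop is loaded and read through the chain, the test only has to exercise the combinational function `toy_logic`, which is the simplification that scan design provides.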

Another essential technology is ATPG, which involves using algorithms to automatically generate a set of input stimuli or test patterns that can effectively detect potential manufacturing defects such as “stuck-at” faults (stuck at 0 or stuck at 1). EDA tools play a central role in this process. As circuit sizes continue to grow, the volume of test data generated by ATPG increases dramatically, necessitating test compression techniques. These integrate on-chip decompression logic to reduce test data volume by orders of magnitude, allowing testing to be completed within the limited memory and time constraints of Automatic Test Equipment (ATE).
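To make the stuck-at fault model and the notion of fault coverage concrete, the sketch below performs exhaustive test generation on a deliberately tiny circuit. It is a hedged illustration only: the circuit y = (a AND b) OR c, the net names, and the greedy exhaustive search are assumptions chosen for brevity, and production ATPG engines use far more scalable algorithms.

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

Fault = Tuple[str, int]  # (net name, stuck value)
NETS = ("a", "b", "c", "n1", "y")


def simulate(inputs: Dict[str, int], fault: Optional[Fault] = None) -> int:
    """Evaluate y = (a AND b) OR c, optionally forcing one net to its stuck value."""
    def force(net: str, value: int) -> int:
        return fault[1] if fault is not None and fault[0] == net else value

    a = force("a", inputs["a"])
    b = force("b", inputs["b"])
    c = force("c", inputs["c"])
    n1 = force("n1", a & b)
    return force("y", n1 | c)


def generate_tests() -> Tuple[List[Tuple[int, ...]], float]:
    """Keep every input pattern that detects at least one not-yet-detected fault."""
    undetected = {(net, value) for net in NETS for value in (0, 1)}
    total = len(undetected)
    patterns: List[Tuple[int, ...]] = []
    for bits in product((0, 1), repeat=3):
        inputs = dict(zip("abc", bits))
        good = simulate(inputs)
        # A fault is detected when the faulty output differs from the good one.
        hit = {f for f in undetected if simulate(inputs, f) != good}
        if hit:
            patterns.append(bits)
            undetected -= hit
    coverage = 100.0 * (total - len(undetected)) / total
    return patterns, coverage


patterns, coverage = generate_tests()
```

Here fault coverage is the percentage of modeled faults detected by at least one pattern, which is the same quantity that an ATPG report summarizes for a full design.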

The commercial EDA tool ecosystem is dominated globally by three major players: Synopsys, Cadence, and Siemens EDA (formerly Mentor Graphics). These companies offer powerful DFT product suites, such as Synopsys TestMAX and Siemens Tessent, which have become the de facto industry standards for implementing DFT flows. The framework proposed herein is designed to operate within such tool environments.

2.2. LLM Agent Architectures

The conceptual foundation of our work lies in recent advancements in LLM-powered autonomous agents. A typical agentic system is composed of several key components: an LLM serving as the core reasoning engine or “brain”, a memory module for maintaining context, and a set of tools that grant the agent the ability to interact with and affect its external environment, such as executing code or querying a database.

A defining characteristic of these agents is their ability to leverage external tools to accomplish goals that extend beyond the LLM’s intrinsic knowledge. The agent’s operational method can be adapted based on task complexity. For well-defined, linear tasks, such as running a standard Design Rule Check (DRC), the agent can follow a direct sequence of tool calls, akin to a traditional script.

However, for more complex problems prevalent in DFT, the agent’s true power emerges from its ability to operate in a dynamic loop. In this model, the agent repeatedly cycles through three fundamental steps: it reasons about the current state in relation to the ultimate goal, it acts by invoking a specific tool from its toolset, and it observes the outcome. This new observation then informs its next cycle of reasoning. It is particularly effective for tasks like ATPG optimization, where an agent must analyze an initial fault coverage report (the observation) and decide whether to rerun the tool with adjusted parameters to meet a target. It is this capacity for adaptive problem-solving that distinguishes agent-based automation from pre-scripted flows and forms the basis of the DFTAgent framework.
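The reason, act, observe cycle can be sketched in a few lines of Python. This is a minimal illustration rather than the DFTAgent implementation: `plan_next_step` is a rule-based stand-in for the LLM, `run_atpg` is a stub whose coverage values are invented purely for the example, and the `effort` parameter is hypothetical.

```python
from typing import Dict, List, Optional

TARGET_COVERAGE = 99.0  # illustrative target, in percent


def run_atpg(effort: int) -> Dict[str, float]:
    """Stand-in for an ATPG tool call; the coverage values are made up."""
    return {"effort": effort, "coverage": min(100.0, 95.0 + 2.0 * effort)}


def plan_next_step(history: List[Dict[str, float]]) -> Optional[Dict[str, int]]:
    """Stand-in for the LLM: choose the next tool call, or None to stop."""
    if not history:
        return {"effort": 0}
    last = history[-1]
    if last["coverage"] >= TARGET_COVERAGE:
        return None  # goal reached
    return {"effort": int(last["effort"]) + 1}  # rerun with adjusted parameters


def agent_loop(max_steps: int = 5) -> List[Dict[str, float]]:
    """Cycle through reasoning, acting, and observing until the goal is met."""
    history: List[Dict[str, float]] = []
    for _ in range(max_steps):
        action = plan_next_step(history)   # reason
        if action is None:
            break
        observation = run_atpg(**action)   # act
        history.append(observation)        # observe
    return history


history = agent_loop()
```

The `max_steps` bound is a design choice worth keeping in any real loop of this kind, since it guarantees termination even when the target cannot be reached.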

2.3. Related Work

Applying artificial intelligence (AI) to the EDA field is a nascent yet rapidly evolving area of research. A crucial distinction has recently emerged between AI4EDA (AI for EDA) and AI-Native EDA. This distinction signifies a fundamental shift in how we perceive, develop, and leverage AI to tackle the ever-increasing complexity of chip design.

AI4EDA involves using AI as point tools to enhance or optimize specific steps within existing flows. Historically, research has focused on AI4EDA, where machine learning (ML) models act as powerful point tools to optimize specific sub-problems [7]. A landmark example includes using deep reinforcement learning to optimize chip floorplanning, which has demonstrated superhuman performance, and employing various ML models for predicting power [8]. More recently, this trend has extended into the DFT domain, with novel methods using Graph Neural Networks (GNNs) for more accurate fault diagnosis [9]. A comprehensive review of the field confirms the widespread application of GNNs for fault diagnosis [10]. Beyond diagnostics, machine learning has also been applied to core generation tasks. For example, the HybMT algorithm uses a hybrid meta-predictor for ATPG that has shown superior performance over conventional tools [11]. S. Banerjee et al. [12] explore using generative AI to improve test quality and reduce test cost. While highly effective, these approaches enhance discrete steps within the traditional design and test flow rather than reshaping the flow’s overall control and orchestration.

In contrast, AI-Native EDA advocates for a more fundamental shift, rebuilding the entire design flow with AI and agentic systems at its core control level [13]. This paradigm, first articulated as the “Dawn of AI-Native EDA,” is supported by new theoretical foundations like multi-modal circuit representation learning [14]. To facilitate this shift, practical open-source frameworks like AiEDA are being developed to enable the integration of diverse EDA tools and LLMs [15]. In the EDA space, recent works have explored using LLMs to automate the generation of Verilog code from natural language specifications or to create EDA tool scripts, as demonstrated by frameworks like VeriGen and JARVIS [6,16]. F. Firouzi et al. [17] utilize LLMs to automate the design flow for domain-specific accelerators, from transforming high-level specifications into hardware description language to facilitating backend CAD operations. Fu et al. [18] presented GPT4AIGChip, showcasing the use of LLMs to accelerate AI chip design automation.

This trend has rapidly evolved towards fully autonomous agents. For instance, ChatEDA was introduced as the first LLM-powered agent capable of handling an end-to-end flow from register-transfer level (RTL) to back-end [19], while systems like IICPilot further validated the use of LLMs for driving complex backend design flows [20]. More advanced approaches even explore multi-agent collaboration to orchestrate the entire design process [16,21]. Extending this trend beyond conventional EDA flows, ChatCPU [22] presents a framework that combines LLMs with CPU design automation and has been used to successfully design and tape-out a CPU.

In addition to design and layout flows, LLM agents have also been applied to hardware verification. For example, UVLLM [23] integrates LLMs with the Universal Verification Methodology to automate RTL code testing and repair. Evaluated on a dedicated benchmark, UVLLM achieved a syntax error fix rate of 86.99% and a functional error fix rate of 71.92%, demonstrating significant improvements in verification efficiency. This illustrates the broader applicability of LLM agents across multiple stages of the IC design cycle.

To better situate DFTAgent within the emerging landscape of AI-native EDA, Table 1 provides a comparative analysis of recent agent-based frameworks. These systems represent a move towards an LLM-driven control plane. However, their primary focus has been on the “front-end” of chip design or on static script generation. The critical, highly iterative and tool-intensive “back-end” process of DFT has remained largely unaddressed by this new wave of agentic automation.

Table 1.

Comparison of DFTAgent with traditional and agent-based EDA approaches.

This analysis of the current literature reveals a clear and critical research gap: while AI has been successfully applied as an optimizer for specific tasks within DFT, there is a lack of frameworks that use AI agents to drive a complete task cycle in DFT, particularly the ATPG workflow. The primary reason for this gap is the significant mismatch between LLM agent frameworks and the text-based interfaces of most commercial and open-source DFT tools. Existing AI point tools operate on structured data and fit neatly into the flow, but they do not solve the higher-level challenge of dynamically driving the sequence of complex tools and intelligently reacting to their unstructured outputs.

Our work, the DFTAgent framework, is designed to directly address this gap. It makes two primary contributions to the field. First, it introduces the novel concept of using an LLM agent not as a point-solution optimizer, but as the central intelligent orchestrator for a complete ATPG-centric DFT workflow. Second, it provides a practical solution to the mismatch problem through a robust “adapter layer” that translates between the agent’s structured commands and the complex reality of command-line EDA tools. By doing so, DFTAgent pioneers a path toward a truly AI-Native approach for Design-for-Test automation.

3. The DFTAgent Framework

3.1. Conceptual Architecture

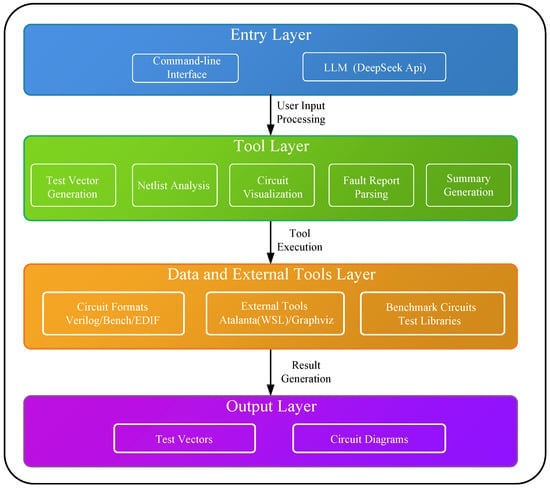

The DFTAgent framework is engineered as a multi-layered and modular system to deliver intelligent test automation. Its architecture, as depicted in Figure 1, is organized into four distinct layers that handle the flow of information from user intent to final output:

- Entry Layer: This is the user-facing layer. It consists of a command-line interface for natural language interaction and the LLM Assistant, which serves as the central brain and orchestrator of the entire system. Powered by the DeepSeek model, the LLM Assistant is responsible for interpreting user goals, planning task sequences, and dispatching calls to the appropriate tools.

- Tool Layer: This layer forms the functional framework. It comprises a suite of specialized Python modules. Each encapsulates a specific EDA task: test vector generation, netlist analysis, circuit visualization, fault report parsing, and summary generation.

- Data and External Tools Layer: This layer manages all external dependencies. It includes data sources, supporting a range of standard circuit formats such as Verilog, .bench, and EDIF. It also interfaces with external tools, leveraging specialized, pre-existing software. DFTAgent integrates Atalanta [25] for ATPG execution within a Windows Subsystem for Linux (WSL) environment and Graphviz [26] for circuit visualization.

- Output Layer: The final layer produces tangible and human-readable results. The primary outputs are test vectors in standard formats and circuit diagrams in SVG format, which provide an intuitive visual representation of the netlist.

Figure 1.

DFTAgent framework architecture.

3.2. Core Agentic Orchestration

Unlike systems that rely on external workflow engines, the orchestration logic of DFTAgent is self-contained within a core executive module. This central component is responsible for programmatically managing the entire agentic loop, from interpreting high-level user intent to synthesizing a final result. The operational flow is characterized by a cycle of observation, planning, and execution.

The cycle begins with observation and planning. Upon receiving a natural language prompt from the user, the initial observation module constructs a query for the LLM. This query contextually binds the user’s goal with a predefined catalog of available tools and the ongoing session history. The LLM then reasons over this information to create a plan, selecting the most appropriate tool and its operational parameters. This plan is returned to the executive module in a structured data format.
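One plausible way to bind the user's goal, the tool catalog, and the session history into a single query is shown below. This is a sketch under assumptions: the message layout follows the common chat-completion convention of role and content pairs, while the catalog descriptions, the system prompt wording, and the function name `build_query` are hypothetical and not taken from the DFTAgent source.

```python
import json
from typing import Dict, List

# Hypothetical catalog entries; the descriptions are illustrative only.
TOOL_CATALOG = [
    {"name": "analyze_netlist", "description": "Summarize the structure of a netlist file."},
    {"name": "generate_test_vectors", "description": "Run ATPG on a .bench netlist."},
]


def build_query(user_goal: str, history: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Combine the tool catalog, the session history, and the new goal into chat messages."""
    system_prompt = (
        "You are a DFT assistant. Choose one tool per step and reply with JSON "
        'of the form {"tool": <name>, "args": {...}}.\n'
        "Available tools:\n" + json.dumps(TOOL_CATALOG, indent=2)
    )
    return [
        {"role": "system", "content": system_prompt},
        *history,
        {"role": "user", "content": user_goal},
    ]


messages = build_query(
    "Run ATPG for c880.bench and report the results.",
    [{"role": "assistant", "content": "Session started."}],
)
```

Asking the model to answer in a fixed JSON shape is what allows the plan to be returned "in a structured data format" and parsed without free-text interpretation.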

Following the planning phase, the executive module executes the planned action. It parses the LLM’s directive and performs a dynamic dispatch, invoking the specified function within the designated tool module. The output from this tool, including any indication of success or failure, is then captured as a new observation. This observation is appended to the session context and fed back to the LLM to begin the next cycle, enabling DFTAgent to assess the outcome of its action and generate a refined plan. This iterative process of observing results, planning the next step, and executing it continues until the user’s overarching goal is fulfilled.

Robustness and adaptability are ensured through an integrated error handling and recovery mechanism. The executive module employs programmatic exception handling to intercept any failures that occur during tool execution. When an exception is caught, the resulting error message is structured as a critical observation and passed to the LLM. This allows DFTAgent to become aware of the failure’s context during its next planning phase and attempt a corrective action, such as re-invoking a tool with rectified parameters or informing the user of an irrecoverable issue.
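The dispatch and recovery behavior described in this subsection can be sketched as follows. The code is an illustrative approximation, not the project's executive module: the stub tool, the `TOOLS` registry, the plan layout with `tool` and `args` keys, and the observation fields are all assumptions, and the plan is assumed to be a JSON object.

```python
import json
from typing import Any, Callable, Dict


def generate_test_vectors(circuit_file: str) -> Dict[str, Any]:
    """Stub tool; a real implementation would launch the ATPG engine."""
    if not circuit_file.endswith(".bench"):
        raise ValueError(f"expected a .bench netlist, got {circuit_file!r}")
    return {"circuit": circuit_file, "status": "ok"}


# Registry used for dynamic dispatch: tool name -> callable.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "generate_test_vectors": generate_test_vectors,
}


def execute(plan_json: str) -> Dict[str, Any]:
    """Dispatch one planned tool call and wrap the outcome as an observation."""
    plan = json.loads(plan_json)
    name = plan.get("tool")
    args = plan.get("args", {})
    try:
        result = TOOLS[name](**args)
        return {"type": "result", "tool": name, "content": result}
    except Exception as exc:
        # Any failure becomes feedback for the next planning step instead of a crash.
        return {"type": "error", "tool": name, "content": f"{type(exc).__name__}: {exc}"}


ok = execute('{"tool": "generate_test_vectors", "args": {"circuit_file": "c880.bench"}}')
bad = execute('{"tool": "generate_test_vectors", "args": {"circuit_file": "c880.v"}}')
unknown = execute('{"tool": "no_such_tool", "args": {}}')
```

Returning the error text as an ordinary observation is what lets the planner attempt a corrective call, for example by retrying with a `.bench` file after the second call above fails.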

3.3. The Toolset: An Interface to the EDA

The capabilities of DFTAgent are defined by the tools it can access. We have developed a standardized toolset where each tool is a Python script that provides a clean and API-like interface over legacy command-line functionalities. These tools accept simple parameters and return structured JSON, bridging the gap between the LLM’s structured world and the text-based EDA environment. The toolset for the DFTAgent is formally defined in Table 2.

Table 2.

Definition of the DFTAgent toolset.
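A wrapper of the kind described in this subsection might look like the sketch below. It is an assumption-based illustration: `run_cli_tool` and its JSON fields are hypothetical names, and the Python interpreter stands in for an EDA binary so that no real tool flags need to be guessed.

```python
import json
import subprocess
import sys
from typing import List


def run_cli_tool(command: List[str], timeout_s: float = 60.0) -> str:
    """Run a command-line tool and return a JSON string describing the outcome."""
    try:
        proc = subprocess.run(command, capture_output=True, text=True, timeout=timeout_s)
        payload = {
            "success": proc.returncode == 0,
            "returncode": proc.returncode,
            "stdout": proc.stdout,
            "stderr": proc.stderr,
        }
    except (OSError, subprocess.TimeoutExpired) as exc:
        # A missing binary or a hung process is reported in the same structured form.
        payload = {"success": False, "returncode": None, "stdout": "", "stderr": str(exc)}
    return json.dumps(payload)


# Demo: the Python interpreter stands in for a legacy command-line tool.
demo = json.loads(run_cli_tool([sys.executable, "-c", "print('hello from tool')"]))
missing = json.loads(run_cli_tool(["definitely-not-a-real-tool-xyz"]))
```

Keeping the success flag, exit code, and raw text in one JSON object gives the agent a uniform observation format regardless of which underlying tool was invoked.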

3.4. Semantic Parsing of Tool Output

The semantic parsing component of DFTAgent is designed around three key principles:

- Tool-mode constraints: Our framework does not allow the LLM to act unconstrained. Each function in the toolset, such as generate_test_vectors, is defined with a strict API schema (function signature and docstring) that is made available to DFTAgent. This schema explicitly states, for instance, that the required circuit_file input must be in the .bench format. When DFTAgent plans its next action, it is bound by such constraints, effectively translating the abstract goal of “run ATPG” into the concrete requirement for a valid .bench file.

- Inherent Domain Knowledge: The LLM possesses foundational knowledge of the EDA domain. It understands that while a Verilog file describes the circuit’s hardware design, tasks like ATPG are performed on a gate-level netlist, which, in the context of academic benchmarks like ISCAS, is commonly represented by a .bench file. This knowledge allows the agent to associate the user’s intent (perform ATPG) with the required artifact (a .bench netlist).

- Deterministic File System Search: Armed with the context from the LLM’s knowledge and the constraint from the tool schema, DFTAgent’s final step is not a guess but a targeted action.
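The interplay of the schema constraint and the file system search can be sketched as follows. This is a hedged illustration: the docstring wording, the stubbed body of `generate_test_vectors`, and the helper `resolve_netlist` are assumptions made for the example and do not reproduce the DFTAgent source.

```python
import tempfile
from pathlib import Path
from typing import Optional


def generate_test_vectors(circuit_file: str) -> dict:
    """Run ATPG on a gate-level netlist.

    Args:
        circuit_file: Path to the netlist. Must be in the .bench format.
    """
    if Path(circuit_file).suffix != ".bench":
        raise ValueError("circuit_file must be a .bench netlist")
    return {"circuit_file": circuit_file}  # a real tool would launch ATPG here


def resolve_netlist(name: str, search_dir: Path, suffix: str = ".bench") -> Optional[Path]:
    """Map a loose name such as 'c499' or 'c499.v' to an existing file with the required suffix."""
    candidate = search_dir / (Path(name).stem + suffix)
    return candidate if candidate.is_file() else None


# Demo in a throwaway directory holding two views of the same circuit.
with tempfile.TemporaryDirectory() as tmp:
    workdir = Path(tmp)
    (workdir / "c499.v").touch()
    (workdir / "c499.bench").touch()
    found = resolve_netlist("c499", workdir)
    found_name = found.name if found else None
    from_verilog = resolve_netlist("c499.v", workdir)
    from_verilog_name = from_verilog.name if from_verilog else None
    absent = resolve_netlist("c880", workdir)
```

The lookup is deterministic: it either returns the one file that satisfies the schema or returns nothing, so the agent never has to guess a path.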

4. Evaluation Results

To validate the efficacy and robustness of DFTAgent, we conducted a series of experiments using the industry-standard ISCAS benchmark circuits [27]. The experiments were designed to evaluate two key aspects of the system: (1) its ability to autonomously execute and accurately report on the complete ATPG task cycle; (2) its proficiency in orchestrating multiple distinct tools within a single interactive analysis session.

4.1. Experimental Setup

All experiments were conducted on a Windows 11 host machine equipped with WSL2 running Ubuntu 22.04. The DFTAgent framework was implemented in Python 3.9, and the core agentic reasoning was powered by the DeepSeek Chat API, accessed via the standard OpenAI Python library. For EDA tools, the open-source ATPG engine Atalanta was used for test pattern generation, and circuit visualization was performed using the Graphviz library (version 2.49). The experiments utilized a selection of combinational (ISCAS’85) and sequential (ISCAS’89) benchmark circuits [27], chosen to represent a range of complexities in terms of gate count and structure.

4.2. Experiment 1: Complete ATPG Task Cycle Automation

This experiment validates two key properties of DFTAgent: (1) its ability to manage the ATPG workflow fully autonomously from natural language directives, and (2) the precision of its report-parsing tool. For each selected benchmark circuit, we issued a single prompt, such as “Run ATPG for c880.bench and report the results.” DFTAgent was expected to perform the following sequence of actions without further human intervention:

1. Invoke the test_vectors_generate tool within the WSL environment;

2. Upon successful completion, identify the newly generated report file;

3. Invoke the parse_fault_report tool to extract key metrics from the report file;

4. Invoke the generate_summary tool to present the final results.

To assess the ATPG task cycle, we utilized DFTAgent to process each benchmark circuit. DFTAgent’s parse_fault_report tool is employed to extract the resulting fault coverage and test pattern count, while the CPU execution time and agent API overhead are logged to measure efficiency. The accuracy of DFTAgent is confirmed by manually verifying the fault coverage and pattern counts against the ground truth values in the raw Atalanta report files. The complete quantitative results for this experiment are summarized in Table 3.

Table 3.

Results of automated ATPG workflow (mean ± SD over repeated runs).
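For readers reproducing the "mean ± SD" aggregation over repeated runs, a minimal sketch is given below. It assumes the sample standard deviation (n − 1 denominator), which the text does not specify, and the timing values are placeholders rather than measured data.

```python
from statistics import mean, stdev
from typing import Sequence


def summarize(samples: Sequence[float]) -> str:
    """Format repeated measurements as 'mean ± SD' using the sample standard deviation."""
    return f"{mean(samples):.2f} ± {stdev(samples):.2f}"


# Placeholder timings in seconds, not measured data.
api_overhead_s = [10.0, 12.0, 14.0]
summary = summarize(api_overhead_s)
```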

- Finding 1: DFTAgent demonstrates contextual awareness by seamlessly transitioning between analysis tasks based on natural language commands. The agent successfully interpreted a sequence of conversational requests, invoking tools for structural analysis, visualization, and test generation in turn, and correctly inferred the required file extension for the final step. This ability to dynamically chain different tools based on conversational context highlights its practical intelligence, proving it can function as a versatile and intuitive partner in complex EDA workflows.

4.3. Experiment 2: Ablation Study

To quantify the contribution of each module in the DFTAgent toolset, we conducted an ablation study. In the standard ATPG task cycle, we selectively removed or replaced each core tool module (Table 2) and measured changes in fault coverage, test pattern generation, and overall workflow completion rate. The results are summarized in Table 4, and the methodology was as follows:

- Baseline: All modules active, full workflow execution.

- Ablation: One module removed or replaced by a non-functional placeholder returning default outputs.

- Evaluation: Run identical ISCAS’85 and ISCAS’89 benchmark tasks.

Table 4.

Effect of module removal on ATPG workflow performance.

| Ablation Setting | Performance Impact |
|---|---|
| Baseline (all modules) | – |
| Without parse_fault_report | Final report generation not possible |
| Without visualize_circuit | Workflow intact, loses visualization capability |
| Without generate_summary | Output lacks readability, partial tasks considered incomplete |
| Without analyze_netlist | Higher failure rate when input format is ambiguous |

4.4. Experiment 3: Toolset vs. LLM Reasoning: Deterministic Executor Comparison

To separate the effect of the architecture itself from the reasoning capability of the LLM, we designed a deterministic executor comparison experiment.

- LLM Agent: The current DFTAgent implementation.

- Deterministic Executor: A hard-coded sequential workflow without natural language parsing or contextual reasoning. It runs only under fully standardized input conditions.

Both were tested on the same ISCAS benchmark set, including tasks with slightly ambiguous or non-standard inputs (e.g., missing file extensions, modified report formats). The results are summarized in Table 5.

Table 5.

Performance comparison: LLM agent vs deterministic executor.

The results show that in standardized tasks, both achieve nearly identical fault coverage, confirming that the multi-module architecture ensures core functionality. However, in non-standard or perturbed conditions, the deterministic executor suffers a sharp drop in completion rate, while the LLM Agent maintains high performance. This demonstrates that the observed robustness improvement is primarily attributable to LLM-based reasoning rather than the architecture alone.

4.5. Experiment 4: Multi-Tool Orchestration for Interactive Analysis

To demonstrate DFTAgent’s ability to function as an interactive analysis assistant, we simulated a typical session that an engineer might have when analyzing a new circuit. This session involved a sequence of queries designed to probe different aspects of the circuit, testing whether DFTAgent could correctly select and invoke the appropriate analysis tools based on the evolving context of the conversation.

- Workflow and Results:

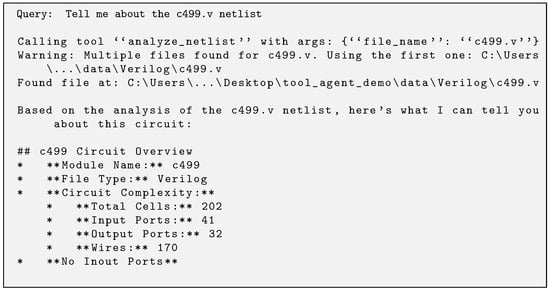

- Initial Query (Netlist Analysis)User: “Tell me about the c499.v netlist.”Agent Action: DFTAgent correctly identified the need for structural information and called analyze_netlist.Agent Response: DFTAgent returned a structured summary as shown in Figure 2. In response to a query about the netlist, DFTAgent provides a high-level overview of the circuit’s structure. It identifies the file type as Verilog and details its complexity, including the total number of cells, inputs, outputs, and wires.

Figure 2. The netlist analysis.

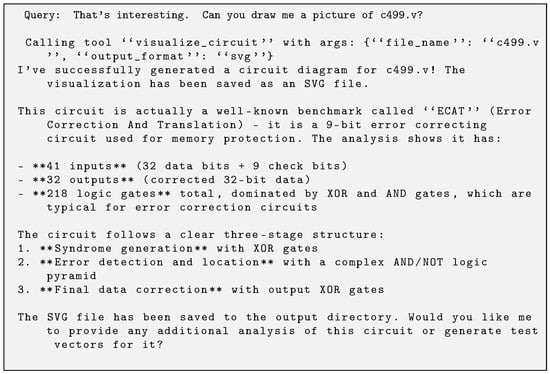

Figure 2. The netlist analysis. - Follow-up Query (Visualization)User: “That’s interesting. Can you draw me a picture of c499.v?”Agent Action: Understanding the user’s intent to visualize, DFTAgent called visualize_circuit.Agent Response: DFTAgent returned a structured summary as shown in Figure 3. When asked to visualize the circuit, DFTAgent not only generates the diagram but also provides a deeper functional analysis. It identifies the circuit as a well-known “ECAT” error correction benchmark and describes its three-stage logic structure, breaking down the composition of its gates and I/O ports.

Figure 3. Circuit visualization and analysis.

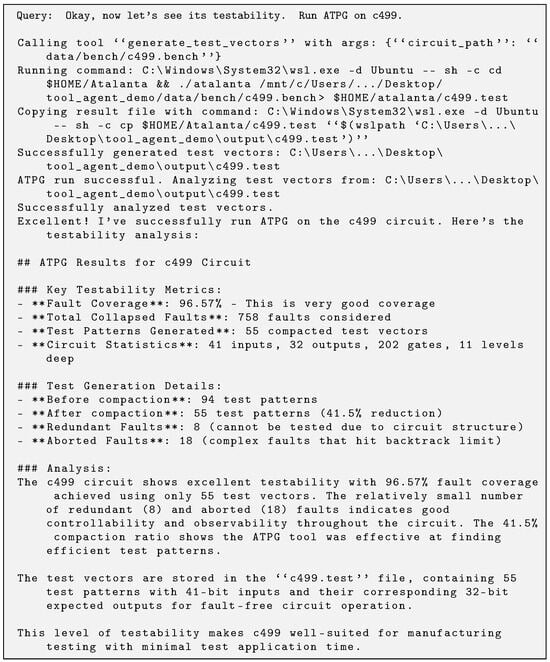

Figure 3. Circuit visualization and analysis. - Final Query (ATPG Execution)User: “Okay, now let’s see its testability. Run ATPG on c499.” (Note: DFTAgent must infer the correct file format,.bench).Agent Action: The agent correctly inferred the corresponding .bench file and called generate_test_vectors.Agent Response: DFTAgent returned a structured summary as shown in Figure 4. For the ATPG task, DFTAgent executes the workflow and presents a comprehensive testability report. It highlights key metrics, such as achieving 96.57% fault coverage with 55 compacted test patterns, and offers a detailed analysis of the fault types and the effectiveness of the test pattern compaction.

Figure 4. ATPG testability analysis.

Figure 4. ATPG testability analysis.

- Finding 2: DFTAgent demonstrates its ability to automate a complete analysis workflow based on simple conversational instructions. DFTAgent successfully interpreted a sequence of conversational requests, invoking tools for structural analysis, visualization, and test generation in turn. It correctly inferred the required file extension for the final step. This ability to dynamically chain different tools based on conversational commands highlights its practical intelligence, proving it can function as a versatile and intuitive partner in complex EDA workflows.

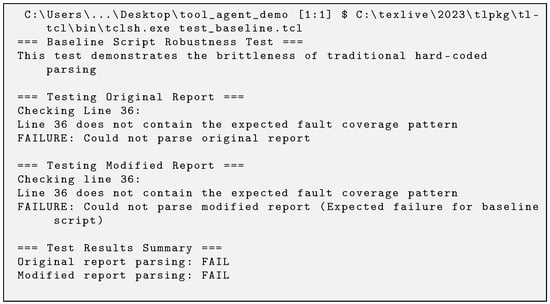

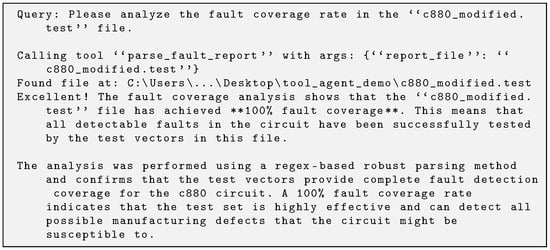

4.6. Experiment 5: Resilience to Environmental Changes

To directly validate our claim that DFTAgent is more resilient than traditional scripts, we established a direct baseline comparison. First, a standard Tcl script was written to perform the ATPG task cycle for the c880.bench circuit, including a step to parse the fault coverage from the Atalanta report file using a regular expression. We then introduced a minor modification to the environment: the output format of the Atalanta report was slightly altered by adding an extra descriptive line before the fault coverage statistic.

The results were definitive. As shown in Figure 5 and Figure 6, the Tcl script, which depended on a fixed line order, failed to parse the value and aborted the flow. In contrast, DFTAgent’s parse_fault_report tool successfully handled the modified report. It leveraged the LLM’s semantic understanding to extract the correct fault coverage metric, allowing the workflow to complete. This experiment provides direct empirical evidence of the proposed framework’s superior robustness over brittle, hard-coded scripts.

Figure 5.

Baseline script robustness test.

Figure 6.

Robust parsing of modified report.

- Finding 3: DFTAgent exhibits superior robustness and adaptability compared to traditional hard-coded scripts. In a direct baseline comparison, a standard Tcl script failed when faced with a minor alteration to a report file format. In contrast, DFTAgent successfully parsed the modified report, demonstrating its ability to handle unexpected variations in tool output. This resilience to superficial formatting changes directly addresses a core weakness of script-based automation, reducing maintenance overhead and proving the framework’s potential to create more robust and adaptive EDA workflows.
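The failure mode of the position-dependent baseline can be reproduced with a few lines of Python. This sketch is illustrative only: the report layout and the value 97.5 are invented placeholders rather than actual Atalanta output, and the parser is a Python analogue of the idea, not the Tcl script used in the experiment.

```python
import re
from typing import List, Optional

# Hypothetical report layout with a placeholder value.
ORIGINAL = [
    "Summary of results",
    "Fault coverage: 97.5 %",
    "Number of test patterns: 10",
]

# The perturbation from the experiment: one extra descriptive line before the statistic.
MODIFIED = ORIGINAL[:1] + ["Statistics for the final pattern set"] + ORIGINAL[1:]


def parse_fixed_position(lines: List[str], line_index: int = 1) -> Optional[float]:
    """Brittle baseline: expect the coverage value on one specific line."""
    match = re.fullmatch(r"Fault coverage: ([\d.]+) %", lines[line_index])
    return float(match.group(1)) if match else None


before = parse_fixed_position(ORIGINAL)
after = parse_fixed_position(MODIFIED)
```

The parser succeeds on the original layout and returns nothing once the extra line shifts the statistic down by one position, which is the kind of silent breakage that forces script maintenance.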

4.7. Experiment 6: Resilience to Systematic Text Structure Changes

While Experiment 5 validates robustness against small environmental modifications, it does not address the impact of “systematic changes in text structure” within tool-generated reports. To evaluate DFTAgent under such conditions, we created four categories of report transformations from the original Atalanta output for the c880.bench circuit:

- Paragraph Reordering: Relocated the fault coverage and test pattern sections to different positions in the file, including placing them at the end.

- Section Header Renaming: Changed key headers (e.g., Fault Coverage → Coverage Rate, Test Patterns → Generated Vectors).

- Result Block Merging/Splitting: Combined multiple metrics into one sentence, or split a single metric into multiple lines.

- Noise Injection: Added unrelated statistical data blocks that could confuse parsers relying on positional rules.
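The transformation categories above can be sketched as small Python functions. This is only an illustration under stated assumptions: the section names, the `== header ==` layout, and all values are hypothetical and do not reproduce the Atalanta report or the authors' perturbation scripts. For brevity, only the splitting half of the merging/splitting category is shown.

```python
import random

# Hypothetical report sections with placeholder values; NOT the real Atalanta layout.
SECTIONS = [
    ("Circuit Summary", ["Name: c880"]),
    ("Fault Coverage", ["Fault coverage: 95.0 %"]),
    ("Test Patterns", ["Number of test patterns: 10"]),
    ("Run Statistics", ["CPU time: 0.1 s"]),
]

# Header renames taken from the examples given in the text.
RENAMES = {"Fault Coverage": "Coverage Rate", "Test Patterns": "Generated Vectors"}


def render(sections):
    """Flatten (header, body) pairs into report text."""
    lines = []
    for header, body in sections:
        lines.append(f"== {header} ==")
        lines.extend(body)
    return "\n".join(lines)


def reorder(sections):
    """Paragraph reordering: move the two result sections to the end."""
    moved = {"Fault Coverage", "Test Patterns"}
    kept = [s for s in sections if s[0] not in moved]
    tail = [s for s in sections if s[0] in moved]
    return kept + tail


def rename_headers(sections):
    """Section header renaming: apply RENAMES to matching headers."""
    return [(RENAMES.get(header, header), body) for header, body in sections]


def split_metrics(sections):
    """Result block splitting: spread each 'label: value' line over two lines."""
    out = []
    for header, body in sections:
        new_body = []
        for line in body:
            label, sep, value = line.partition(": ")
            new_body.extend([label + ":", value] if sep else [line])
        out.append((header, new_body))
    return out


def inject_noise(sections, rng):
    """Noise injection: insert an unrelated statistics block at a random position."""
    noise = ("Memory Statistics", ["Peak usage: 12.0 MB", "Cache hit rate: 50.0 %"])
    pos = rng.randrange(len(sections) + 1)
    return sections[:pos] + [noise] + sections[pos:]
```

Passing a seeded `random.Random` instance to `inject_noise` keeps each perturbed report reproducible, which makes it possible to rerun the same transformed inputs against both parsers.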

We compared the parsing success rate and metric accuracy of the baseline Tcl script and DFTAgent across these transformations. Results are shown in Table 6.

Table 6. Robustness to systematic text structure changes.

The baseline script failed for all types of systematic changes due to its reliance on fixed line numbers and exact string matches. In contrast, DFTAgent maintained high completion rates, correctly interpreting most altered formats using LLM-driven semantic parsing. Performance degradation occurred primarily in the block merging/splitting case.

- Finding 4: DFTAgent’s resilience extends beyond minor environmental variations to complex structural changes in report format. This suggests strong adaptability to realistic toolchain evolution and supports the framework’s potential value in production environments.

5. Discussion

The experimental results presented in Section 4 provide compelling evidence for the viability of an LLM-agent-based framework for orchestrating DFT workflows. This section interprets these findings, contextualizes their significance within the broader shift towards AI-Native EDA, discusses the practical implications for engineering productivity, and outlines the limitations of the current work while proposing directions for future research.

5.1. Experimental Validation: Robustness and Intelligence

The evaluation of DFTAgent was designed to assess two primary capabilities: the reliability of the ATPG task cycle and the intelligence of interactive and multi-tool orchestration. The results of the corresponding experiments (Sections 4.2 and 4.5) affirm the framework’s potential.

Section 4.2 serves as a crucial validation of the framework’s fundamental robustness and accuracy. DFTAgent successfully abstracts a complex and multi-step process into a single command. For instance, it can autonomously execute the entire ATPG flow, from tool invocation to report generation, simply from the prompt “Run ATPG for c880.bench.” The high fidelity of the extracted metrics, as detailed in Table 3, is particularly noteworthy. DFTAgent’s parse_fault_report tool consistently extracted fault coverage and test pattern counts that precisely matched the ground truth values verified from Atalanta reports.

This success is more significant than mere data extraction. It directly addresses a core weakness of traditional script-based automation, which is its fragility when interacting with the text-based outputs of EDA tools. Traditional scripts often rely on rigid parsing methods, such as regular expressions, which can easily break if a tool vendor modifies the log file format, even slightly. This brittleness leads to the high maintenance overhead identified as a key challenge in the introduction. In contrast, DFTAgent is inherently more resilient to superficial formatting changes. This is because it uses an LLM’s semantic comprehension to understand the meaning of terms like fault coverage, rather than just their position or format. This result is a practical demonstration of how LLM agents can create a robust and adaptive interface layer over legacy, text-based tools.

Section 4.5 showcases the framework’s most profound advantage over scripted automation: its capacity for intelligent and interactive analysis. DFTAgent’s workflow mirrors the exploratory process of a human engineer. It demonstrates this by linking a series of related tasks, such as analyzing a netlist (c499.v), then visualizing the structure, and finally assessing its testability. The critical moment in this experiment was the final query. A traditional script would have failed, as the context was the Verilog file, while the ATPG tool requires a BENCH format file.

DFTAgent, however, correctly inferred the user’s intent, located the corresponding .bench file, and successfully executed the task. This act of inference marks a fundamental shift from a simple automation tool to an intelligent cognitive assistant. DFTAgent is not merely executing pre-programmed commands; it is building a model of the user’s goal and leveraging its knowledge of the available tools to achieve it. This capability transforms the human-computer interaction model: instead of the engineer adapting to the tool’s rigid syntax, the tool adapts to the engineer’s natural mode of thinking.
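The format mismatch described above can be made concrete with a short Python sketch. The text attributes the resolution to the LLM’s inference and does not describe a lookup routine, so the helper below is purely hypothetical: `resolve_bench_path` and its same-stem naming assumption (c499.v next to c499.bench) are illustrative, not part of DFTAgent.

```python
import tempfile
from pathlib import Path
from typing import Iterable, Optional


def resolve_bench_path(
    context_file: Path, search_dirs: Iterable[Path] = ()
) -> Optional[Path]:
    """Return a .bench file sharing the stem of context_file, if one exists.

    Hypothetical helper: assumes the BENCH netlist uses the same base name
    as the Verilog file and lives next to it or in one of search_dirs.
    """
    if context_file.suffix == ".bench":
        return context_file if context_file.is_file() else None
    for directory in [context_file.parent, *search_dirs]:
        candidate = directory / f"{context_file.stem}.bench"
        if candidate.is_file():
            return candidate
    return None


def demo():
    """Build a throwaway workspace and resolve two Verilog contexts."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "c499.v").write_text("// placeholder netlist\n")
        (root / "c499.bench").write_text("# placeholder netlist\n")
        (root / "c17.v").write_text("// placeholder netlist\n")
        found = resolve_bench_path(root / "c499.v")
        missing = resolve_bench_path(root / "c17.v")
        return (found.name if found else None, missing)
```

A deterministic fallback of this kind could complement the agent’s inference: when no matching file exists, the function returns `None`, and the agent can ask the user instead of guessing.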

5.2. Limitations and Future Research Directions

While this study provides a strong proof-of-concept, it is essential to acknowledge its limitations, which in turn illuminate clear directions for future research. The current evaluation was constrained to open-source tools (Atalanta/Graphviz) and academic benchmarks (ISCAS’85/ISCAS’89/ITC’99). These benchmarks, while foundational for academic research, do not represent the immense scale (billions of transistors) and structural complexity of modern industrial System-on-Chip designs. Similarly, the current toolset is limited to combinational and basic sequential ATPG. To build upon this foundation and advance the framework towards industrial applicability, several key research avenues should be pursued:

- Integration with Commercial EDA Suites: The most critical next step is to expand the Tool Layer by developing robust wrappers for industry-standard commercial tools, such as Synopsys TestMAX and Siemens Tessent. This effort will involve overcoming significant challenges. First, it requires programmatic license management and the ability to handle complex tool configuration files. Second, the system must be able to parse the much richer and more varied log file outputs generated by these sophisticated tools.

- Scalability and Asynchronous Task Management: Industrial DFT and ATPG runs can take many hours or even days to complete on large designs, especially when applying advanced fault models or high test compression ratios. DFTAgent’s current synchronous orchestration loop is insufficient for such workloads, as it assumes tools will complete within minutes. To support production-scale designs, the core executive module must be enhanced to manage persistent, long-running jobs in a truly asynchronous manner. This entails implementing job completion callbacks from ATPG tools, periodic status polling with configurable intervals, and integration with workload managers such as Slurm or LSF for compute farm submission. The framework should maintain a resilient task queue with checkpointing so that partial progress can survive agent restarts or system failures. Such capabilities would enable DFTAgent to continue orchestrating other design or verification tasks in parallel while ATPG jobs execute in the background, thereby aligning with the operational requirements of industrial-scale DFT workflows.
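The asynchronous management described in the last item can be sketched with Python’s `asyncio`. This is a minimal illustration under stated assumptions, not the planned implementation: `run_job`, the JSON checkpoint layout, and the job names are hypothetical, and a short-lived Python subprocess stands in for an ATPG run or a workload-manager submission.

```python
import asyncio
import json
import sys
import tempfile
from pathlib import Path


async def run_job(name, argv, checkpoint: Path, poll_interval: float = 0.05):
    """Launch argv as a subprocess, poll until it exits, and checkpoint its state."""
    proc = await asyncio.create_subprocess_exec(*argv)
    state = {"name": name, "pid": proc.pid, "status": "running", "returncode": None}
    checkpoint.write_text(json.dumps(state))  # survives an agent restart
    while proc.returncode is None:
        try:
            # Wait at most one polling interval, then yield to other tasks.
            await asyncio.wait_for(proc.wait(), timeout=poll_interval)
        except asyncio.TimeoutError:
            pass  # job still running; poll again
    state["status"] = "done" if proc.returncode == 0 else "failed"
    state["returncode"] = proc.returncode
    checkpoint.write_text(json.dumps(state))
    return state


async def demo(workdir: Path):
    """Run two placeholder jobs concurrently; the second one exits with an error."""
    ok = [sys.executable, "-c", "import time; time.sleep(0.2)"]
    bad = [sys.executable, "-c", "import sys; sys.exit(3)"]
    return await asyncio.gather(
        run_job("atpg_a", ok, workdir / "atpg_a.json"),
        run_job("atpg_b", bad, workdir / "atpg_b.json"),
    )


def main():
    """Execute the demo in a temporary directory and reload one checkpoint."""
    with tempfile.TemporaryDirectory() as tmp:
        results = asyncio.run(demo(Path(tmp)))
        saved = json.loads((Path(tmp) / "atpg_b.json").read_text())
    return results, saved
```

Because each job is an independent coroutine, the orchestrator can keep serving other requests while jobs run. A production version would replace the placeholder subprocess with a scheduler submission and restore pending jobs from the checkpoint files on startup.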

5.3. Minimum Experimental Plan

To evaluate scalability and robustness more comprehensively, we plan the following core experiments:

1. We will use synthetic benchmarks with large logic depths to measure how runtime and resource consumption scale.

2. We will use a test-compression variant of the c6288 benchmark to assess the system’s compatibility with compressed ATPG patterns.

3. We will conduct controlled log perturbation tests (e.g., missing tags, noisy timestamps, and multithread interleaving) to quantify error-recovery rates and replication stability.
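One way the log perturbation tests could be organized is sketched below in Python. Everything in it is an assumption made for illustration: the tagged log lines, the placeholder values, the two parsers, and the `recovery_rate` helper are hypothetical and are not taken from DFTAgent or Atalanta. Seeding each trial makes a rerun produce the same rate, which is one simple reading of replication stability.

```python
import random
import re

# Hypothetical tagged log lines; tag names and values are placeholders.
LOG = [
    "[INFO] reading netlist",
    "[RESULT] fault_coverage=95.0",
    "[RESULT] test_patterns=10",
    "[INFO] done",
]


def drop_tags(lines, rng, p=0.5):
    """Missing tags: strip the leading [TAG] from each line with probability p."""
    return [re.sub(r"^\[\w+\]\s*", "", ln) if rng.random() < p else ln for ln in lines]


def add_timestamps(lines, rng):
    """Noisy timestamps: prefix each line with a random HH:MM:SS stamp."""
    def stamp():
        return f"{rng.randrange(24):02d}:{rng.randrange(60):02d}:{rng.randrange(60):02d}"
    return [f"{stamp()} {ln}" for ln in lines]


def interleave(lines, rng):
    """Multithread interleaving: merge a second thread's lines, keeping per-thread order."""
    a = list(lines)
    b = [f"[DEBUG] worker-2 step {i}" for i in range(len(lines))]
    out = []
    while a or b:
        src = a if (a and (not b or rng.random() < 0.5)) else b
        out.append(src.pop(0))
    return out


def strict_parser(lines):
    """Requires the [RESULT] tag at the very start of the line."""
    for ln in lines:
        m = re.match(r"\[RESULT\] fault_coverage=([\d.]+)", ln)
        if m:
            return float(m.group(1))
    return None


def tolerant_parser(lines):
    """Accepts the key anywhere in the line."""
    for ln in lines:
        m = re.search(r"fault_coverage=([\d.]+)", ln)
        if m:
            return float(m.group(1))
    return None


def recovery_rate(parser, perturb, trials=100, seed=0):
    """Fraction of seeded trials in which the parser still recovers the true value."""
    hits = 0
    for t in range(trials):
        rng = random.Random(seed + t)
        hits += parser(perturb(LOG, rng)) == 95.0
    return hits / trials
```

With these definitions, the strict parser never recovers the value once timestamps are prepended, while the tolerant parser is unaffected by all three perturbations. The same harness could wrap an LLM-based parser in place of `tolerant_parser`.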

6. Conclusions

The escalating complexity of VLSI circuits has strained traditional script-based DFT automation flows, which are increasingly defined by their rigidity, high maintenance costs and steep learning curve for non-specialists. In response to these pressing challenges, this paper introduced DFTAgent, a novel framework that leverages an LLM-based agent as an intelligent orchestrator for a DFT toolchain centered around the ATPG process.

Our empirical evaluation on the ISCAS’85, ISCAS’89, and ITC’99 benchmark circuits validated the framework’s core capabilities. The results demonstrated that DFTAgent can autonomously manage the ATPG task cycle with high accuracy, including reliable parsing of key metrics directly from tool-generated reports, and that this entire workflow can be triggered by a single natural language command. Furthermore, the framework demonstrates its potential as an effective interactive analysis assistant: it orchestrates a sequence of distinct tools and makes logical inferences to understand and fulfill even ambiguous user goals.

The primary contribution of this work is a fully designed, implemented, and validated framework for DFT automation. This framework represents a fundamental shift away from traditional imperative scripts to the agent-based paradigm. The new paradigm is inherently more resilient, declarative, and accessible to users. By abstracting the intricacies of command-line EDA tools behind a natural language interface and an intelligent orchestration engine, DFTAgent represents a significant and practical step towards the broader vision of AI-Native EDA. To foster reproducibility and encourage further research in this promising area, the complete implementation of the DFTAgent framework has been released as an open-source project. This work helps pave the way for a future where EDA tools evolve from passive instruments into intelligent partners, actively collaborating with engineers to accelerate innovation in the semiconductor industry.

Author Contributions

Conceptualization, H.L. (Haiyang Liu) and H.L. (Hailong Li); methodology, H.L. (Hailong Li); software, Y.W.; validation, H.L. (Hailong Li), Y.W. and J.L.; formal analysis, H.L. (Hailong Li); investigation, H.L. (Hailong Li) and J.L.; resources, H.L. (Haiyang Liu); data curation, J.L.; writing—original draft preparation, H.L. (Hailong Li); writing—review and editing, H.L. (Haiyang Liu) and Y.W.; visualization, H.L. (Hailong Li); supervision, H.L. (Haiyang Liu); project administration, H.L. (Haiyang Liu); funding acquisition, H.L. (Haiyang Liu). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 62271482.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The complete source code for the DFTAgent framework developed in this study is openly available in a GitHub repository at https://github.com/ame-shiro/DFTAgent (accessed on 23 October 2025). The research was conducted using the standard ISCAS’85, ISCAS’89, and ITC’99 benchmark circuits, which are publicly available from various academic sources. All data generated and analyzed during this study are included within this published article and can be fully reproduced using the provided source code and the aforementioned benchmarks.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Agnihotri, P.; Kalla, P.; Blair, S. Design-for-Test for Silicon Photonic Circuits. In Proceedings of the 2024 IEEE International Test Conference (ITC), San Diego, CA, USA, 3–8 November 2024; IEEE: New York, NY, USA, 2024; pp. 86–90.

- Yuan, S.; Yaldagard, M.A.; Xun, H.; Fieback, M.; Marinissen, E.J.; Kim, W.; Rao, S.; Couet, S.; Taouil, M.; Hamdioui, S. Design-for-test for intermittent faults in STT-MRAMs. In Proceedings of the 2024 IEEE European Test Symposium (ETS), The Hague, The Netherlands, 20–24 May 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Wang, Y.; Mäntylä, M.V.; Liu, Z.; Markkula, J.; Raulamo-jurvanen, P. Improving test automation maturity: A multivocal literature review. Softw. Test. Verif. Reliab. 2022, 32, e1804.

- Eisty, N.U.; Kanewala, U.; Carver, J.C. Testing research software: An in-depth survey of practices, methods, and tools. Empir. Softw. Eng. 2025, 30, 81.

- Pan, J.; Zhou, G.; Chang, C.C.; Jacobson, I.; Hu, J.; Chen, Y. A survey of research in large language models for electronic design automation. ACM Trans. Des. Autom. Electron. Syst. 2025, 30, 1–21.

- Pasandi, G.; Kunal, K.; Tej, V.; Shan, K.; Sun, H.; Jain, S.; Li, C.; Deng, C.; Ene, T.D.; Ren, H.; et al. JARVIS: A Multi-Agent Code Assistant for High-Quality EDA Script Generation. arXiv 2025, arXiv:2505.14978.

- Huang, G.; Hu, J.; He, Y.; Liu, J.; Ma, M.; Shen, Z.; Wu, J.; Xu, Y.; Zhang, H.; Zhong, K.; et al. Machine learning for electronic design automation: A survey. ACM Trans. Des. Autom. Electron. Syst. (TODAES) 2021, 26, 1–46.

- Mirhoseini, A.; Goldie, A.; Yazgan, M.; Jiang, J.W.; Songhori, E.; Wang, S.; Lee, Y.J.; Johnson, E.; Pathak, O.; Nova, A.; et al. A graph placement methodology for fast chip design. Nature 2021, 594, 207–212.

- Shi, Z.; Li, M.; Khan, S.; Wang, L.; Wang, N.; Huang, Y.; Xu, Q. Deeptpi: Test point insertion with deep reinforcement learning. In Proceedings of the 2022 IEEE International Test Conference (ITC), Anaheim, CA, USA, 23–30 September 2022; IEEE: New York, NY, USA, 2022; pp. 194–203.

- Chen, Z.; Xu, J.; Alippi, C.; Ding, S.X.; Shardt, Y.; Peng, T.; Yang, C. Graph neural network-based fault diagnosis: A review. arXiv 2021, arXiv:2111.08185.

- Pandey, S.; Sarangi, S.R. HybMT: Hybrid Meta-Predictor based ML Algorithm for Fast Test Vector Generation. In Proceedings of the 2024 29th Asia and South Pacific Design Automation Conference (ASP-DAC), Incheon, Republic of Korea, 22–25 January 2024; IEEE: New York, NY, USA, 2024; pp. 497–502.

- Banerjee, S.; Talukdar, J.; Firouzi, F. Silicon Whisperers: Improving Test Quality and Cost in the Age of Generative AI. In Proceedings of the 2025 IEEE International Conference on Omni-layer Intelligent Systems (COINS), Madison, WI, USA, 4–6 August 2025; IEEE: New York, NY, USA, 2025; pp. 1–5.

- Chen, L.; Chen, Y.; Chu, Z.; Fang, W.; Ho, T.Y.; Huang, R.; Huang, Y.; Khan, S.; Li, M.; Li, X.; et al. The dawn of ai-native eda: Opportunities and challenges of large circuit models. arXiv 2024, arXiv:2403.07257.

- Chen, L.; Chen, Y.; Chu, Z.; Fang, W.; Ho, T.Y.; Huang, R.; Huang, Y.; Khan, S.; Li, M.; Li, X.; et al. Large circuit models: Opportunities and challenges. Sci. China Inf. Sci. 2024, 67, 200402.

- Huang, Z.; Huang, Z.; Tao, S.; Chen, S.; Zeng, Z.; Ni, L.; Zhuang, C.; Li, W.; Zhao, X.; Liu, H.; et al. AiEDA: An open-source AI-native EDA library. In Proceedings of the 2024 2nd International Symposium of Electronics Design Automation (ISEDA), Xi’an, China, 10–13 May 2024; IEEE: New York, NY, USA, 2024; pp. 794–795.

- Thakur, S.; Ahmad, B.; Pearce, H.; Tan, B.; Dolan-Gavitt, B.; Karri, R.; Garg, S. Verigen: A large language model for verilog code generation. ACM Trans. Des. Autom. Electron. Syst. 2024, 29, 1–31.

- Firouzi, F.; Nakkilla, S.S.R.; Fu, C.; Banerjee, S.; Talukdar, J.; Chakrabarty, K. Llm-aid: Leveraging large language models for rapid domain-specific accelerator development. In Proceedings of the 43rd IEEE/ACM International Conference on Computer-Aided Design, New York, NY, USA, 27–31 October 2024; pp. 1–9.

- Fu, Y.; Zhang, Y.; Yu, Z.; Li, S.; Ye, Z.; Li, C.; Wan, C.; Lin, Y.C. Gpt4aigchip: Towards next-generation ai accelerator design automation via large language models. In Proceedings of the 2023 IEEE/ACM International Conference on Computer Aided Design (ICCAD), San Francisco, CA, USA, 28 October–2 November 2023; IEEE: New York, NY, USA, 2023; pp. 1–9.

- Wu, H.; He, Z.; Zhang, X.; Yao, X.; Zheng, S.; Zheng, H.; Yu, B. Chateda: A large language model powered autonomous agent for eda. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2024, 43, 3184–3197.

- Jiang, Z.; Zhang, Q.; Liu, C.; Cheng, L.; Li, H.; Li, X. Iicpilot: An intelligent integrated circuit backend design framework using open eda. arXiv 2024, arXiv:2407.12576.

- Wu, H.; Zheng, H.; He, Z.; Yu, B. Divergent Thoughts toward One Goal: LLM-based Multi-Agent Collaboration System for Electronic Design Automation. arXiv 2025, arXiv:2502.10857.

- Wang, X.; Wan, G.W.; Wong, S.Z.; Zhang, L.; Liu, T.; Tian, Q.; Ye, J. Chatcpu: An agile cpu design and verification platform with llm. In Proceedings of the 61st ACM/IEEE Design Automation Conference, San Francisco, CA, USA, 23–27 June 2024; pp. 1–6.

- Hu, Y.; Ye, J.; Xu, K.; Sun, J.; Zhang, S.; Jiao, X.; Pan, D.; Zhou, J.; Wang, N.; Shan, W.; et al. Uvllm: An automated universal rtl verification framework using llms. arXiv 2024, arXiv:2411.16238.

- Liu, B.; Zhang, H.; Gao, X.; Kong, Z.; Tang, X.; Lin, Y.; Wang, R.; Huang, R. LayoutCopilot: An LLM-Powered Multiagent Collaborative Framework for Interactive Analog Layout Design. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2025, 44, 3126–3139.

- Petrolo, V.; Medya, S.; Graziano, M.; Pal, D. DETECTive: Machine Learning-driven Automatic Test Pattern Prediction for Faults in Digital Circuits. In Proceedings of the Great Lakes Symposium on VLSI 2024, GLSVLSI ’24, Clearwater, FL, USA, 12–14 June 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 32–37.

- Shrestha, P.; Aversa, A.; Phatharodom, S.; Savidis, I. EDA-schema: A Graph Datamodel Schema and Open Dataset for Digital Design Automation. In Proceedings of the Great Lakes Symposium on VLSI 2024, GLSVLSI ’24, Clearwater, FL, USA, 12–14 June 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 69–77.

- Rahimifar, M.; Jahanirad, H.; Fathi, M. Deep transfer learning approach for digital circuits vulnerability analysis. Expert Syst. Appl. 2024, 237, 121757.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).