“I’m a Fish!”: Exploring Children’s Engagement with Human–Data Interactions in Museums

Abstract

1. Introduction

2. Background

2.1. Embodied Interaction

2.2. Human–Data Interaction (HDI)

2.3. Public Interactive Displays

2.4. Social Learning

2.5. Children’s Experience in Museums

3. Materials and Methods

3.1. Our HDI Prototype

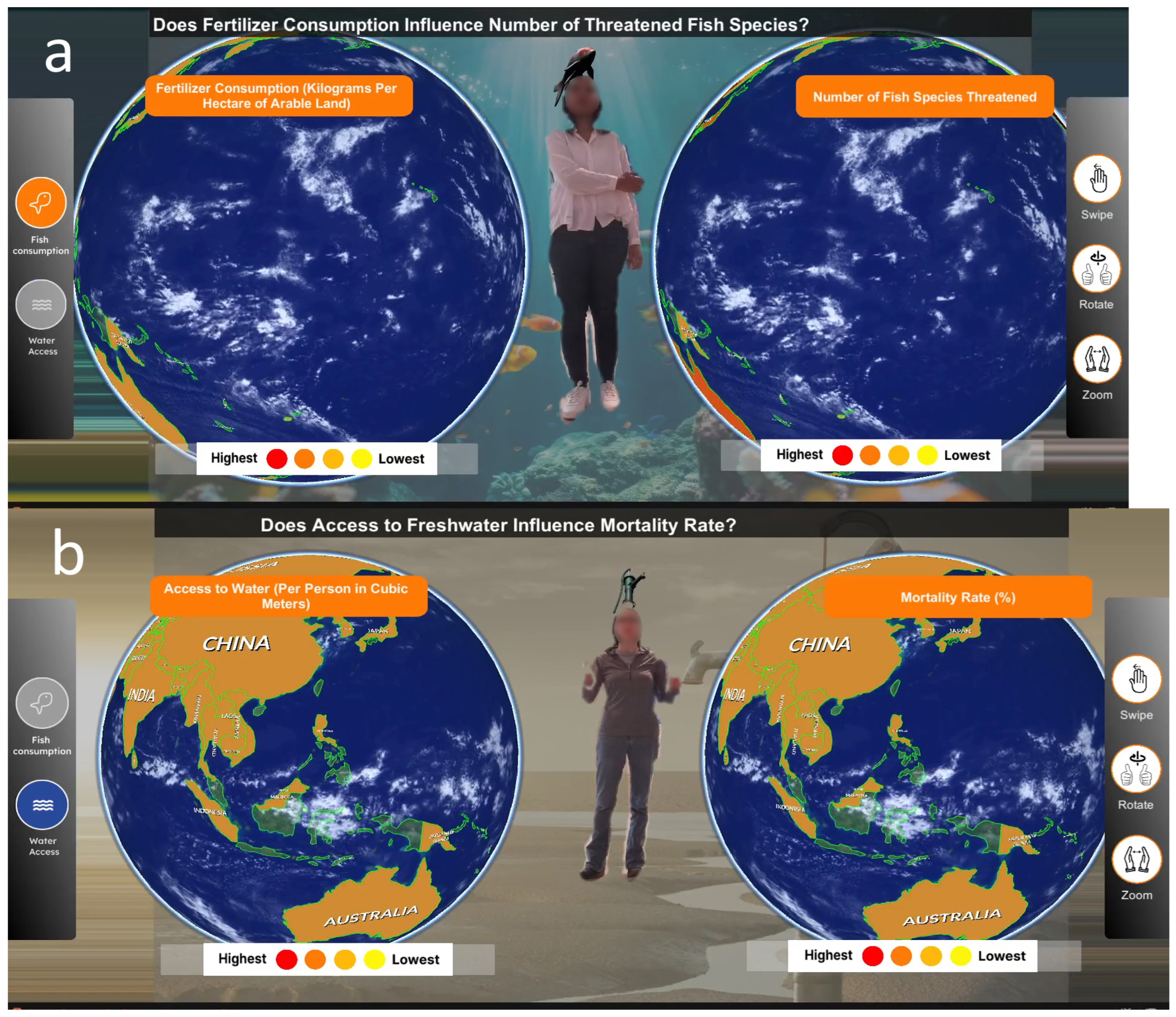

3.1.1. Data Visualization Scenarios

1. Fish Endangerment Scenario

2. Water Access Scenario

3.1.2. Software Description and Content

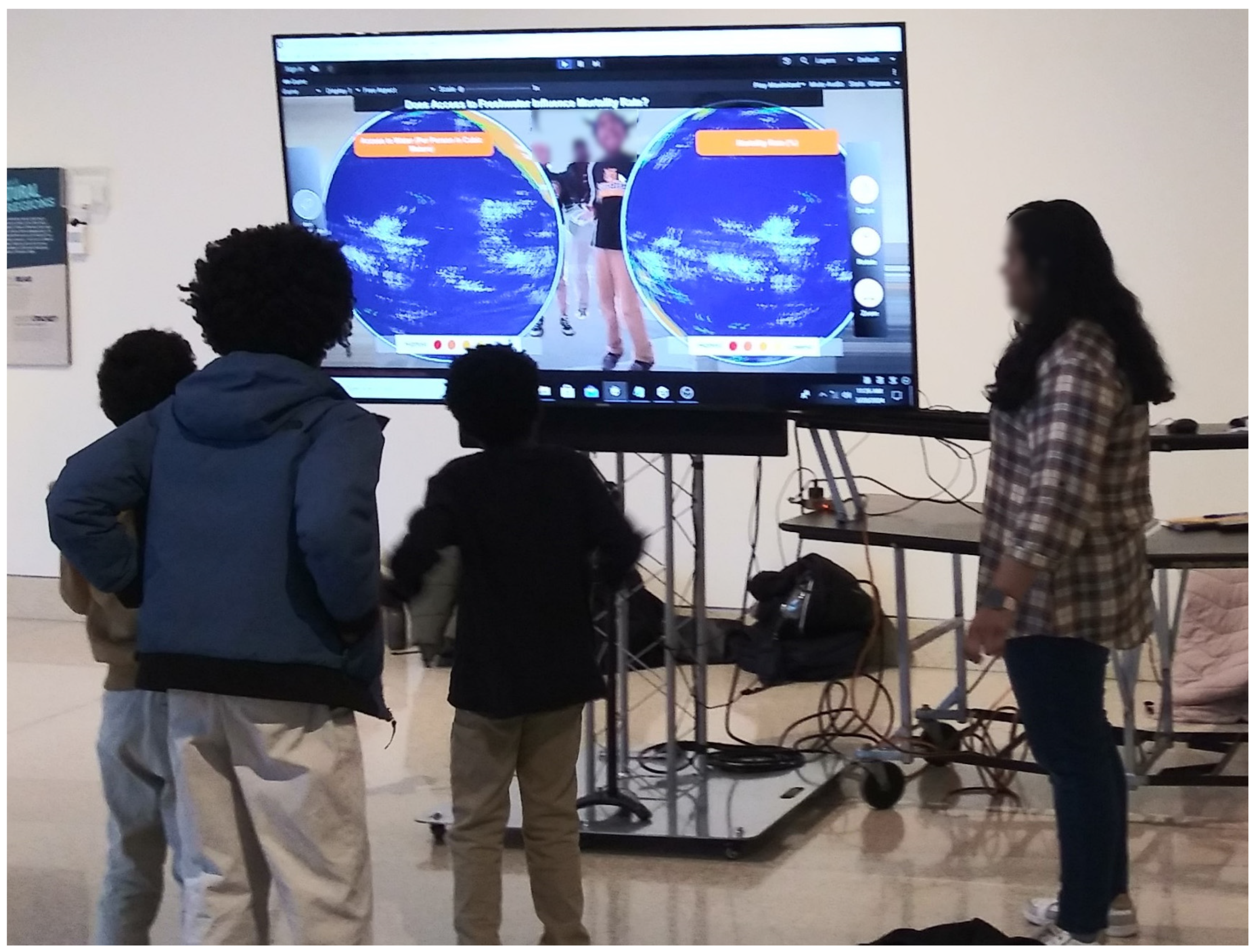

3.1.3. Hardware Description and Setup

3.2. Participants

3.3. Observations and Analysis Methods

3.3.1. Screen Captures

3.3.2. Camera Footage Recordings

3.4. Research Questions

- RQ1. How do children interact socially and physically with data-centric interactive displays in a museum setting?

- RQ2. What hindered children’s engagement with the data on display?

4. Results

4.1. RQ1. How Do Children Interact Socially and Physically with Data-Centric Interactive Displays in a Museum Setting?

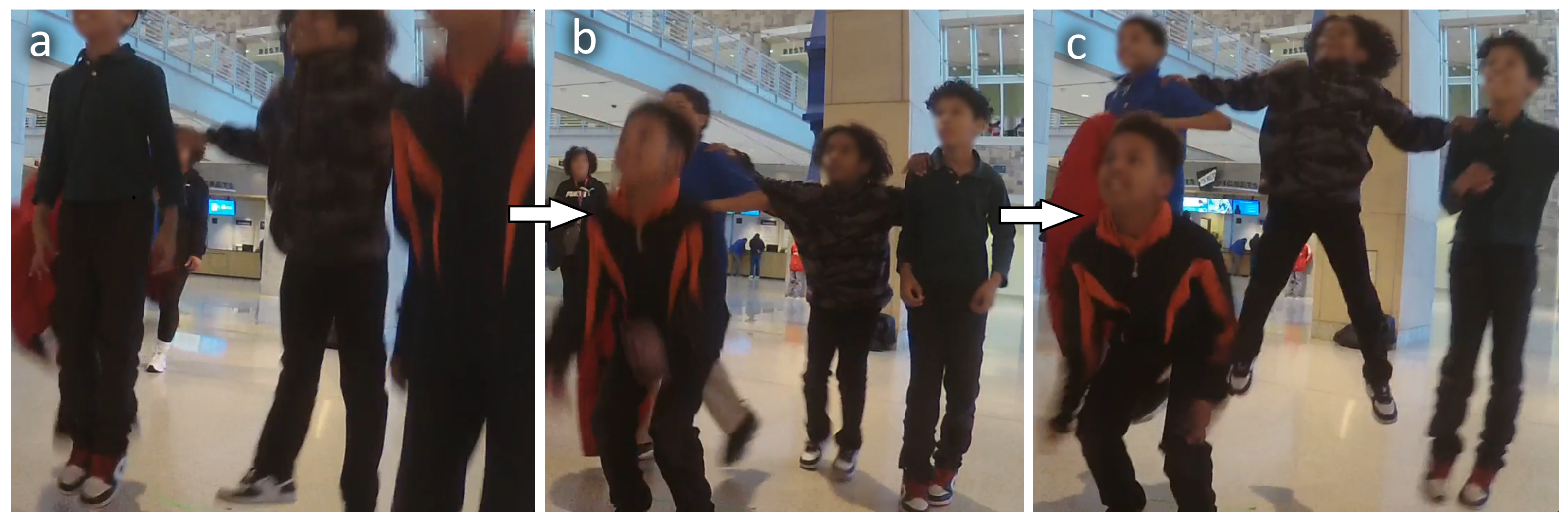

- Peer Instruction

25:30: A moderator asks the group, “Who has the hat?”, instructing the teens on how to interact with the display. Initially confused, the group does not follow the gesture instructions.

One girl then takes the initiative, creating her own gesture by opening her arms and leading the others.

They immediately follow her cues, waving and jumping in front of the screen, experimenting with their positioning.

28:45: Another child, acting as the leader, calls to the others, “Guys, watch this!”, as he performs a swiping gesture to interact with the display.

The others mimic his actions, trying to swipe in unison. When a moderator explains that the child with the “fish on their head” is the controller, the other children actively support their peer, insisting, “Yes, you do, just swipe,” and demonstrating the gesture when their friend is unsure.

30:54: When another child receives control of the display, the group encourages him to “come closer” and “try swiping.”

- Moderators’ and Parents’ Guidance

5:44: Several kids tried to take control of the display, causing confusion.

Moderator: “The one with the fish on their head can swipe.”

Kid: “Where is the fish?”

Moderator: “It switched. You’ll gesture… now it’s the fish.”

Later, as more kids approached, the moderator stepped in again to clarify:

Moderator: “Whoever has the fish is in control.”

1:04–6:25: A mother with two young children—Hannah, around 3–4 years old, and Iris, likely 2 years old—arrived at the display. The mother, immediately fascinated by the large screen, called out to her children, “Oh, Hannah, come here, look!” She waved at the screen and said excitedly, “Ohh, there you are. Heyyy,” as Hannah stepped in front of the screen and mimicked her gestures. The mother then asked, “What are you supposed to do? Do I spin?”

As the mother tried to figure out the controls, she started swinging her finger, mimicking a spinning action. The moderator intervened, explaining, “You can swipe.” The mother responded with, “Oh, swipe innnn,” demonstrating the action to Hannah. The moderator added, “You can also zoom in,” prompting the mother to say, “Oh, left, right. Oh, zoom in and out.” She continued to model the gestures for Hannah, who was now actively engaged with the display.

When a third child arrived and inquired, “Hey, what’s that?” while pointing at the screen, the moderator replied, “Hat.” The mother joined in, encouraging the child, “Do you want to swipe it?” As the child attempted to swipe but struggled, the moderator demonstrated, “Like that. To the right.” The mother reinforced this by saying, “There you go. See how it changed.”

Later, when Iris got too close to the camera, the mother directed her, “No touch, thank you,” and repositioned her children, saying, “Let Iris have one turn up front,” ensuring that each child had a fair chance to interact with the display. As the older sister returned to the screen, the mother reiterated, “Let’s go,” signaling the end of their session.

- Taking Turns

- Solo Interaction with the Display: One Actor and Multiple Spectators

- Coordinated Team Efforts

- Role-Playing and Imagination

8:33: A group of older kids walks by the screen with their chaperone. One of the girls notices the display but doesn’t engage.

Another girl steps up and, seeing herself on the screen, starts walking as if on a runway.

A second girl joins her, role-playing, “Okay, guys, the forecast for today is... umm, cloudy, wet, well, not wet but... hmmm.”

They both look at the screen as she continues, “It’s cold and chilly, so kids may not be able to go outside. They might get cold and sick.”

Their chaperone calls, “Alright, girls, let’s go,” and they say goodbye, with one girl blowing kisses at the screen.

9:39: The girl turns back and blows another kiss to the camera before leaving.

4.2. RQ2. What Hindered Children’s Engagement with the Data on Display?

4.2.1. Barriers to Engaging with the Data

4.2.2. Technology Does Not Respond as Expected to Gestures and Body Movements

Ava steps up to the display and begins using gestures, but quickly encounters difficulties. She asks, “How do you rotate the steering wheel, like this or...?”

Moderator: “Yeah, yeah, small steering wheel-like movements.”

Ava tries again but seems confused. Moderator: “You can zoom in, zoom out.”

Ava continues to struggle, and after a few failed attempts, says to the display, “What’s wrong? Stop!” She laughs, adding, “I like you right there.”

Finally, after repeated attempts, she says, “Yes, I got it. Okay,” but her frustration is evident.

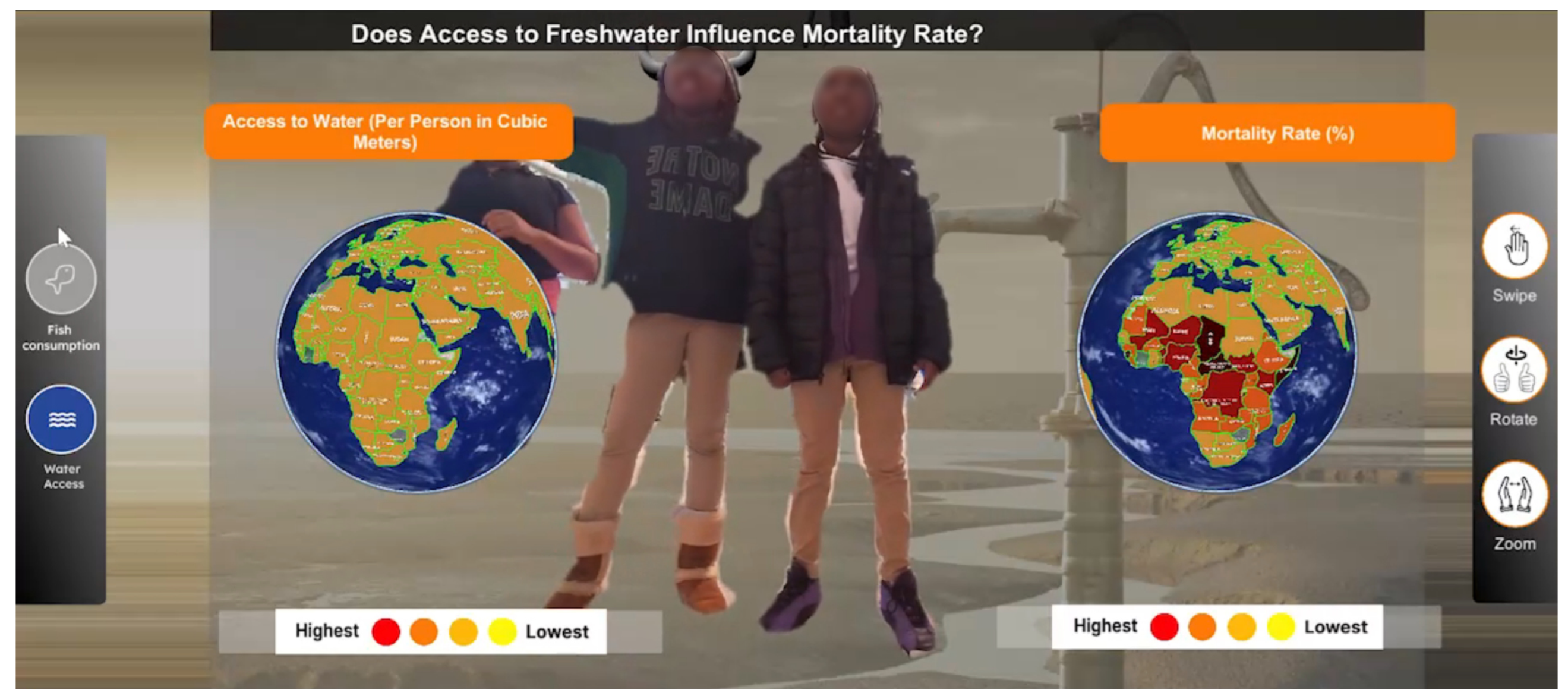

4.3. An Example of Children’s Interaction with the Display

An older child (7–8 years old) approaches the screen, sees her reflection, and exclaims, “Whaaaat?” She moves slowly side to side, testing the screen’s tracking.

Two women (likely moms) move closer. The child says, “Mom, I want to see what this is.” She gets closer, saying, “What’s this?” Another child (her friend) appears behind her and pushes her aside, saying, “I want to see.”

The moms tell the kids to back up.

The first child, who now has the hat with horns on the sides, says, “I’m a bull,” and they all laugh. Both kids stand in front of the screen, eyes fixed on it.

The second child says, “Why do I have the fire hydrant?” He pushes the first child again. The first child responds, “I’m the fire hydrant.”

They start jostling to get the hat. The first child says, “Switch already. How can we switch?” The second child replies, “It’s not a fire hydrant. It’s a water spell.” He points at the screen, saying, “You see that thing right here?”

The moms step in, “Don’t touch the screen.”

The second child repeats, “It’s a water spell.”

The facilitators arrive. “One at a time,” they instruct. “If you want to move the screen, you can swipe.”

The moms tell the kids, “One of you come here. One of you stay, okay? Try swiping.”

The facilitators demonstrate how to swipe.

“Jump once,” one facilitator instructs.

The first child jumps. The second child joins in, and they both start jumping. The first child counts, “1, 2, 3, and jump!” They laugh.

The moms remind them, “One at a time.”

The second child tells the first, “You get out of the way.”

The first child responds, “No, I’m a fish.”

The second child protests, “I want to be a fish. You go, go.”

The moms and facilitators repeat, “One at a time.”

The first child moves away while the second child continues. The moms show him how to use his hands to zoom in and out. “Now do this with your hands,” they say.

The second child ignores the instruction and jumps again.

The moms redirect, “Pay attention. Look, this way.”

The second child follows the hand motions, zooming in and out.

“There you go,” the moms say.

The facilitators show him how to turn the wheel, “Like driving a car.”

Everyone says, “Wow,” as they see the changes on the screen.

The moms express their delight, “Look at that.”

The child continues excitedly jumping. He jumps about 20 times.

The first child returns to the front and mimics the zooming and swiping gestures.

The moms conclude, “That’s cool.”

The group leaves.

5. Discussion

5.1. Implications for the Design of Human–Data Interaction (HDI) Installations “with” Children

5.2. Designing for Different Age Groups

5.3. Supporting Peer Collaboration

5.4. Facilitating Control and Taking Turns

5.5. Preventing Overcrowding

5.6. Supporting Play and Imagination with Data

6. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HDI | Human–Data Interaction |
| PIDs | Public Interactive Displays |
| SDK | Software Development Kit |
| IRB | Institutional Review Board |


| System Function | Gesture |
|---|---|
| Switch Dataset | Swipe or Jump |
| Rotate Globes | Using a steering wheel gesture |
| Zoom In or Out | Moving hands closer together or farther apart |
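The gesture table above, together with the moderators' rule that only the visitor wearing the on-screen hat can control the display, can be illustrated with a minimal Python sketch. This is not the prototype's actual software: the names `Gesture`, `Action`, `GestureEvent`, and `resolve_action` are hypothetical, gesture recognition itself is assumed to happen elsewhere, and the assignment of "hands apart" to zooming in (rather than out) is an assumption, since the table does not specify the direction.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Gesture(Enum):
    """Gestures listed in the table (names are illustrative)."""
    SWIPE = auto()
    JUMP = auto()
    STEERING_WHEEL = auto()
    HANDS_APART = auto()
    HANDS_TOGETHER = auto()


class Action(Enum):
    """System functions listed in the table."""
    SWITCH_DATASET = auto()
    ROTATE_GLOBES = auto()
    ZOOM_IN = auto()
    ZOOM_OUT = auto()


# Mapping taken from the table. Which hand movement zooms in and which
# zooms out is an assumption; the table only says "closer together or
# farther apart".
GESTURE_TO_ACTION = {
    Gesture.SWIPE: Action.SWITCH_DATASET,
    Gesture.JUMP: Action.SWITCH_DATASET,
    Gesture.STEERING_WHEEL: Action.ROTATE_GLOBES,
    Gesture.HANDS_APART: Action.ZOOM_IN,
    Gesture.HANDS_TOGETHER: Action.ZOOM_OUT,
}


@dataclass(frozen=True)
class GestureEvent:
    """A recognized gesture attributed to one tracked visitor."""
    visitor_id: int
    gesture: Gesture


def resolve_action(event: GestureEvent,
                   controller_id: Optional[int]) -> Optional[Action]:
    """Return the system function for a gesture, or None when the
    visitor is not the current controller (the one "with the hat")."""
    if controller_id is None or event.visitor_id != controller_id:
        return None
    return GESTURE_TO_ACTION.get(event.gesture)
```

Under these assumptions, `resolve_action(GestureEvent(1, Gesture.JUMP), controller_id=1)` yields `Action.SWITCH_DATASET`, while the same gesture from any other visitor is ignored. That mirrors the situation in the transcripts where several children swipe at once but only the one with the hat changes the display.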

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Friedman, A.; Tazike, M.; Gokpinar Shelton, E.; Patel, N.; Alhakamy, A.; Cafaro, F. “I’m a Fish!”: Exploring Children’s Engagement with Human–Data Interactions in Museums. Appl. Sci. 2025, 15, 11304. https://doi.org/10.3390/app152111304
