MambaUSR: Mamba and Frequency Interaction Network for Underwater Image Super-Resolution

Abstract

1. Introduction

- We present the first application of state-space models (SSMs) to underwater image super-resolution (SR), showcasing the potential of Mamba for efficient and effective global modeling in underwater image processing.

- We propose a frequency state-space module (FSSM) that captures long-range dependencies to counter the detail blurring and low contrast of underwater images.

- We devise a frequency-assisted enhancement module (FAEM) that integrates the fast Fourier transform (FFT) with channel attention (CA) to efficiently extract high-frequency features, yielding a more natural reconstructed image.
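To make the "long-range dependency" claim concrete, the sketch below runs a plain discrete linear state-space recurrence, h_t = a * h_(t-1) + b * x_t and y_t = c . h_t, over a 1D sequence. It is an illustration only and not the selective scan used by Mamba or by the FSSM: the diagonal parameters `a`, `b`, `c` are fixed, hypothetical values, whereas Mamba makes them input-dependent. The cost is linear in the sequence length, and the impulse response shows how an input at step 0 keeps influencing later outputs.

```python
import numpy as np

def ssm_scan(x, a, b, c):
    """Run a diagonal linear SSM over a 1D sequence.

    x: (L,) input sequence; a, b, c: (N,) per-state parameters (assumed fixed).
    Returns y: (L,) output sequence.
    """
    state = np.zeros_like(a, dtype=float)
    y = np.empty(len(x))
    for t, x_t in enumerate(x):
        state = a * state + b * x_t   # state update: h_t = a * h_(t-1) + b * x_t
        y[t] = c @ state              # readout: y_t = c . h_t
    return y

# A unit impulse at t = 0 and two hypothetical state decay rates.
impulse = np.zeros(6)
impulse[0] = 1.0
a = np.array([0.9, 0.5])
b = np.array([1.0, 1.0])
c = np.array([1.0, 1.0])

y = ssm_scan(impulse, a, b, c)
print(y)  # each entry equals 0.9**t + 0.5**t
```

The slowly decaying state (0.9) is what carries information across many steps; a decay rate closer to 1 gives a longer effective memory.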

2. Related Work

2.1. Underwater Image SR

2.2. Fourier Transform

3. Proposed Method

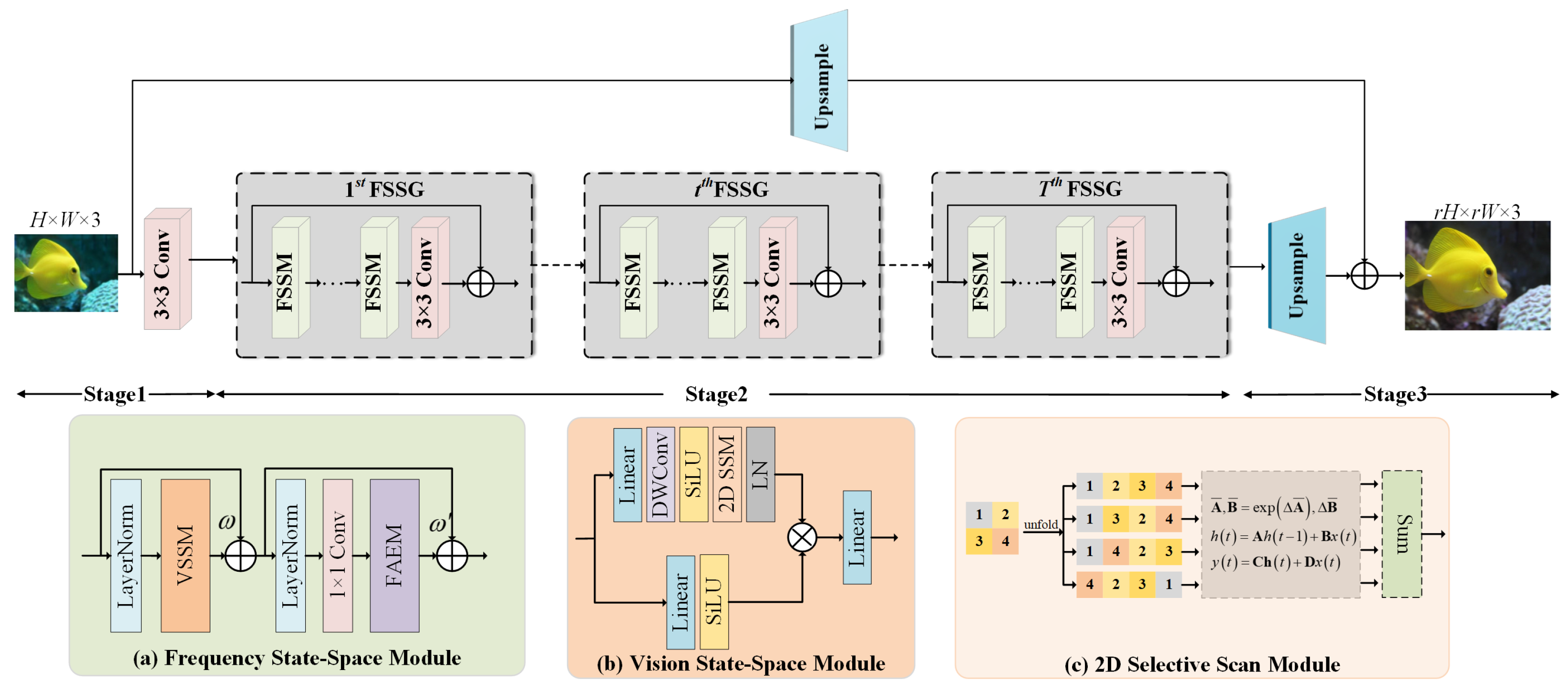

3.1. Overall Network Architecture

3.2. Frequency State-Space Modules

3.3. Vision State-Space Module

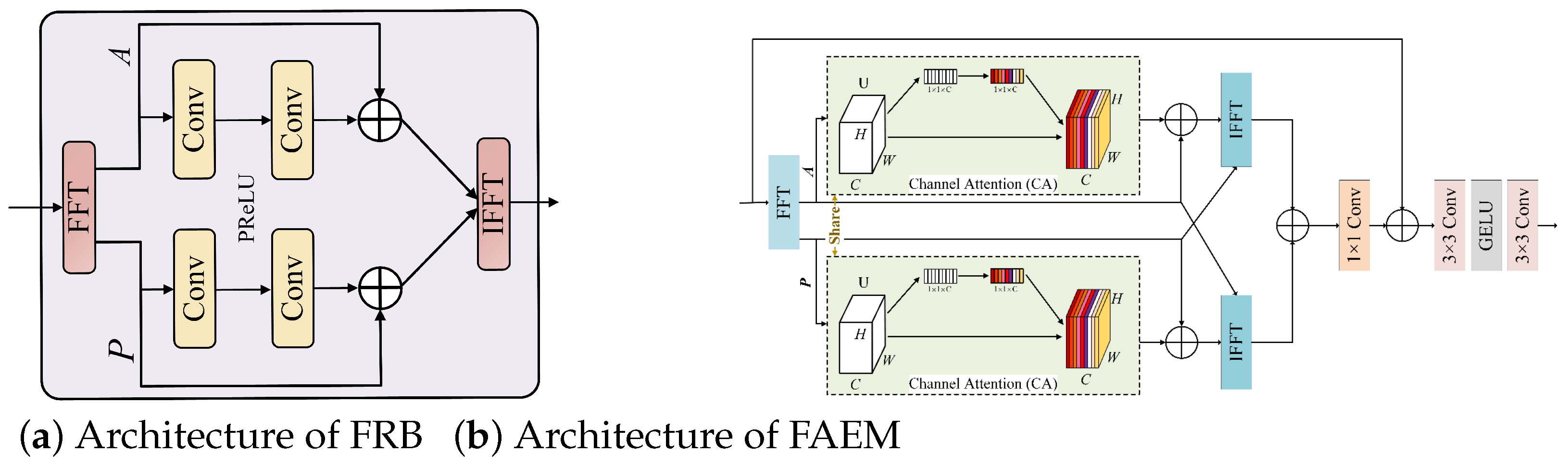

3.4. Frequency-Assisted Enhancement Module

Algorithm 1: The implementation of the frequency-assisted enhancement module (FAEM)

Input: Input feature matrix

Output: Resultant matrix
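Algorithm 1 gives only the input and output of the FAEM, while the contribution list describes the module as combining the FFT with channel attention. The NumPy sketch below shows one way such a combination could be wired and should not be read as the authors' algorithm. The function names, the weights `w_down` and `w_up`, the choice to gate the amplitude spectrum, and the residual connection are all assumptions made for illustration.

```python
import numpy as np

def channel_attention_gate(feat, w_down, w_up):
    """Squeeze-and-excitation style gate (assumed form of CA).

    feat: (C, H, W) non-negative features; returns (C,) weights in (0, 1).
    """
    squeezed = feat.mean(axis=(1, 2))               # global average pool per channel
    hidden = np.maximum(w_down @ squeezed, 0.0)     # bottleneck + ReLU
    return 1.0 / (1.0 + np.exp(-(w_up @ hidden)))   # sigmoid gate

def fft_channel_attention(x, w_down, w_up):
    """Hypothetical FFT + CA block on a (C, H, W) feature map."""
    h, w = x.shape[-2:]
    spec = np.fft.rfft2(x, axes=(-2, -1))           # per-channel 2D FFT
    amplitude, phase = np.abs(spec), np.angle(spec)
    gate = channel_attention_gate(amplitude, w_down, w_up)
    amplitude = amplitude * gate[:, None, None]     # reweight channels in the frequency domain
    spec = amplitude * np.exp(1j * phase)           # recombine amplitude and phase
    freq_branch = np.fft.irfft2(spec, s=(h, w), axes=(-2, -1))
    return x + freq_branch                          # residual connection (assumption)

rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4                           # r: bottleneck reduction ratio (assumed)
x = rng.standard_normal((C, H, W))
w_down = rng.standard_normal((C // r, C)) * 0.1
w_up = rng.standard_normal((C, C // r)) * 0.1

y = fft_channel_attention(x, w_down, w_up)
print(y.shape)
```

Because the gate scales each channel's whole spectrum, the block stays linear in `x` once the gate is fixed; a trained module would typically add learned convolutions on the amplitude or phase before the inverse transform.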

4. Experiments

4.1. Dataset and Experimental Setup

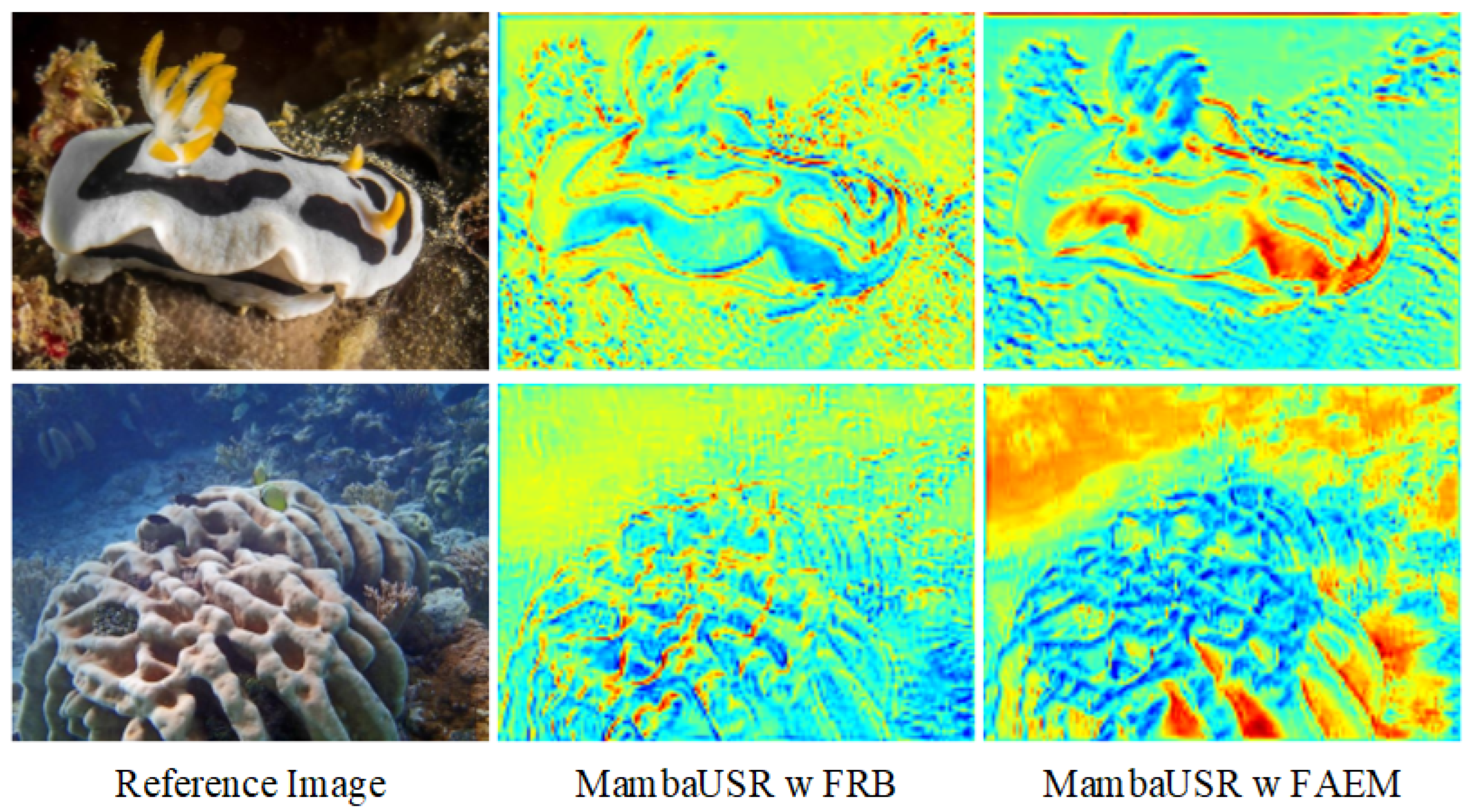

4.2. Ablation Study

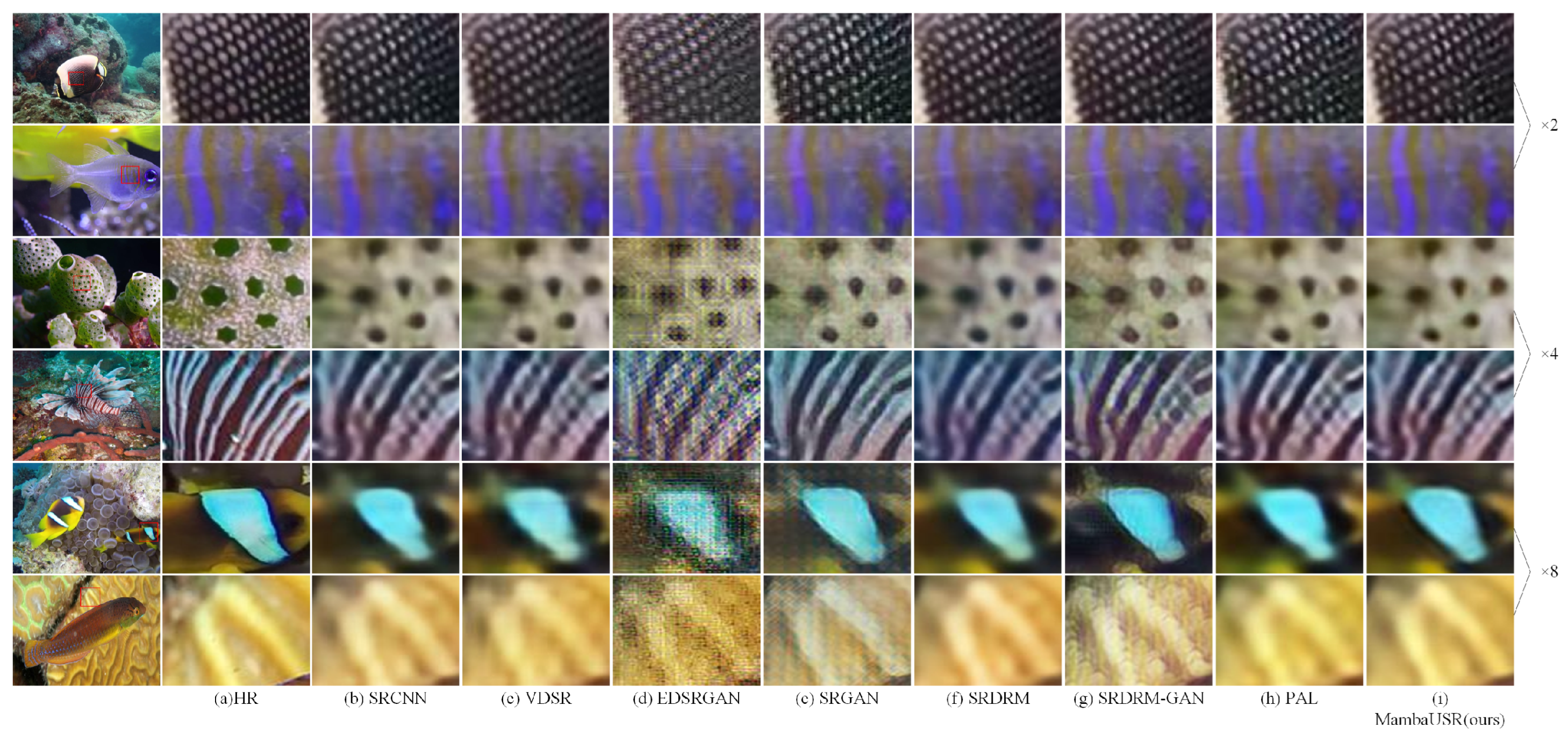

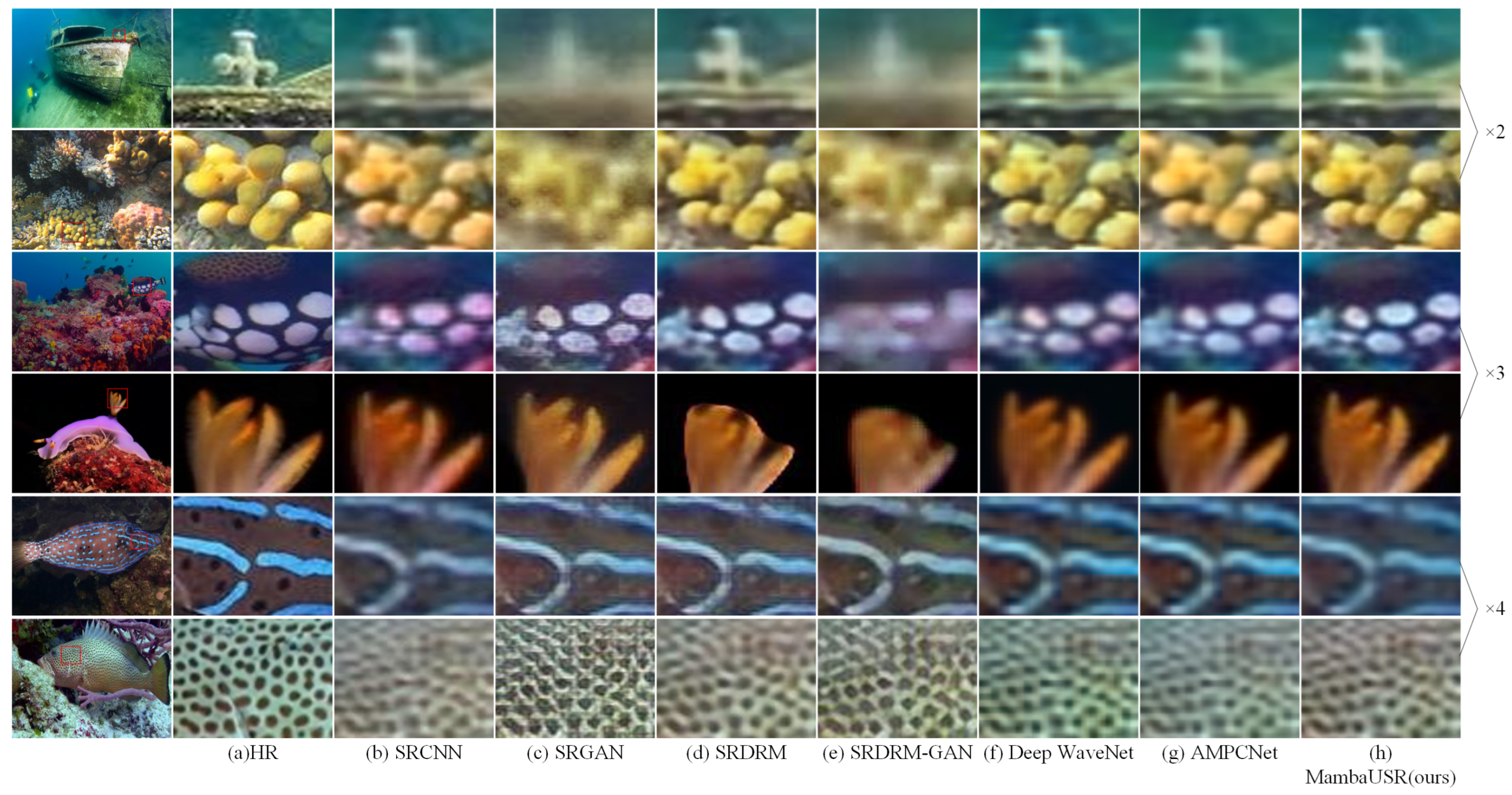

4.3. Comparison with Underwater SR

4.3.1. Comparison on USR-248 Dataset

4.3.2. Comparison on the UFO-120 Dataset

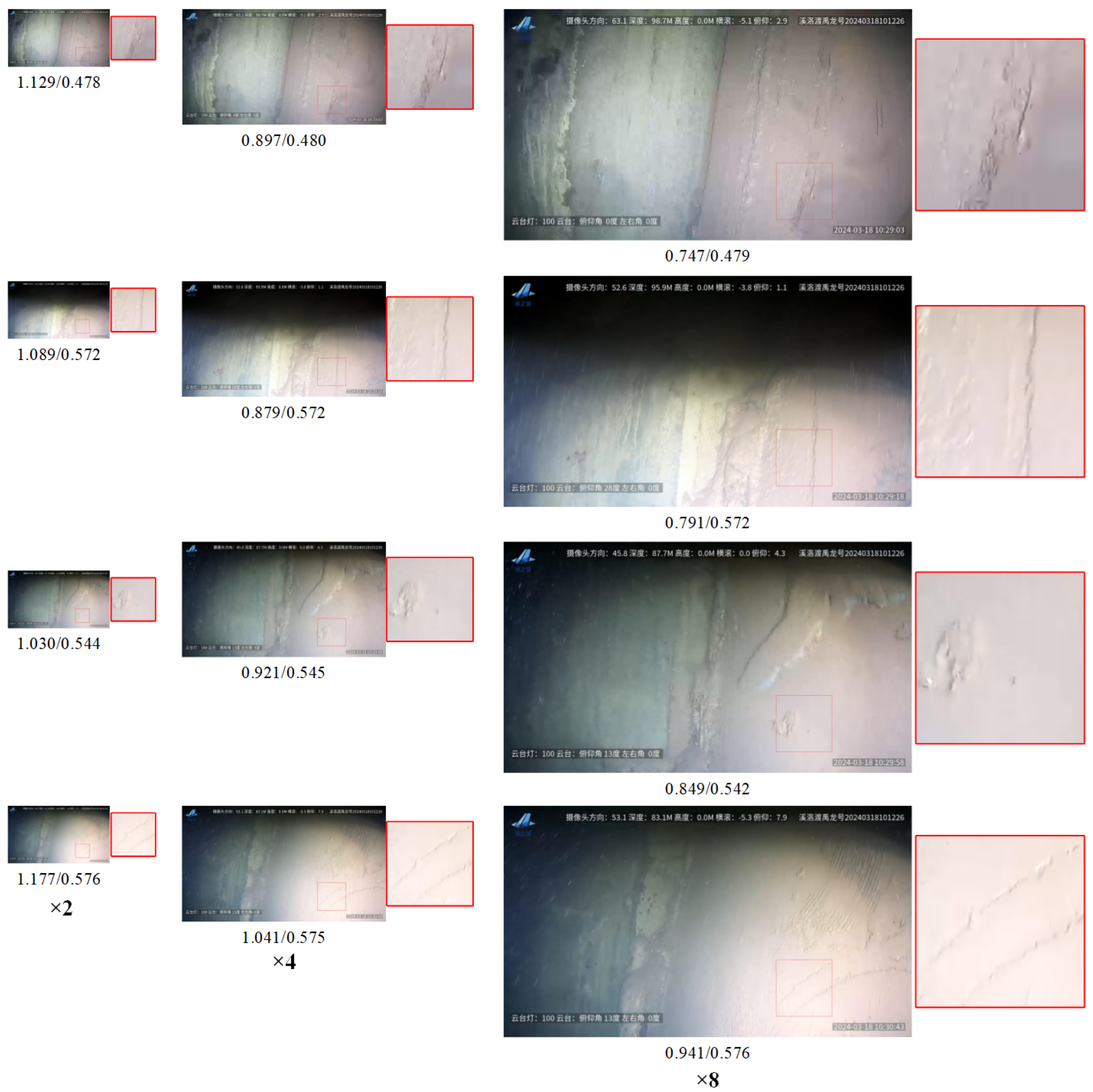

4.3.3. Application in XLD Hydraulic Project

4.4. Model Efficiency

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Liu, J.; Chen, C.; Tang, J.; Wu, G.S. From Coarse to Fine: Hierarchical Pixel Integration for Lightweight Image Super-Resolution. Proc. AAAI Conf. Artif. Intell. 2023, 37, 1666–1674.

- Zhou, Z.T.; Li, G.P.; Wang, G.Z. A Hybrid of Transformer and CNN for Efficient Single Image Super-Resolution via Multi-Level Distillation. Displays 2023, 76, 102352.

- Liu, Z.; Lin, Y.T.; Cao, Y.; Hu, H.; Wei, Y.X.; Zhang, Z.; Lin, S.; Guo, B.N. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Lu, Z.S.; Liu, H.; Li, J.C.; Zhang, L.L. Efficient Transformer for Single Image Super-Resolution. arXiv 2021, arXiv:2108.11084.

- Yang, C.H.; Chen, Z.H.; Espinosa, M.; Ericsson, L.; Wang, Z.Y.; Liu, J.M.; Crowley, E.J. PlainMamba: Improving Non-Hierarchical Mamba in Visual Recognition. arXiv 2024, arXiv:2403.17695.

- Zhu, L.H.; Liao, B.C.; Zhang, Q.; Wang, X.L.; Liu, W.Y.; Wang, X.G. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. arXiv 2024, arXiv:2401.09417.

- Cheng, D.; Kou, K.I. FFT Multichannel Interpolation and Application to Image Super-Resolution. Signal Process. 2019, 162, 21–34.

- Kong, L.S.; Dong, J.X.; Ge, J.J.; Li, M.Q.; Pan, J.S. Efficient Frequency Domain-Based Transformers for High-Quality Image Deblurring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5886–5895.

- Islam, M.J.; Enan, S.S.; Luo, P.G.; Sattar, J. Underwater Image Super-Resolution Using Deep Residual Multipliers. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 900–906.

- Islam, M.J.; Luo, P.G.; Sattar, J. Simultaneous Enhancement and Super-Resolution of Underwater Imagery for Improved Visual Perception. arXiv 2020, arXiv:2002.01155.

- Zhang, Y.; Yang, S.X.; Sun, Y.M.; Liu, S.D.; Li, X.G. Attention-Guided Multi-Path Cross-CNN for Underwater Image Super-Resolution. Signal Image Video Process. 2022, 16, 155–163.

- Wang, H.; Wu, H.; Hu, Q.; Chi, J.N.; Yu, X.S.; Wu, C.D. Underwater Image Super-Resolution Using Multi-Stage Information Distillation Networks. J. Vis. Commun. Image Represent. 2021, 77, 103136.

- Yang, H.H.; Huang, K.C.; Chen, W.T. LAFFNet: A Lightweight Adaptive Feature Fusion Network for Underwater Image Enhancement. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 685–692.

- Sharma, P.; Bisht, I.; Sur, A. Wavelength-Based Attributed Deep Neural Network for Underwater Image Restoration. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–23.

- Peng, L.T.; Zhu, C.L.; Bian, L.H. U-Shape Transformer for Underwater Image Enhancement. IEEE Trans. Image Process. 2023, 32, 3066–3079.

- Mei, X.K.; Ye, X.F.; Zhang, X.F.; Liu, Y.S.; Wang, J.T.; Hou, J.; Wang, X.L. UIR-Net: A Simple and Effective Baseline for Underwater Image Restoration and Enhancement. Remote Sens. 2022, 15, 39.

- Ren, T.D.; Xu, H.Y.; Jiang, G.Y.; Yu, M.; Zhang, X.; Wang, B.; Luo, T. Reinforced Swin-ConvS Transformer for Simultaneous Underwater Sensing Scene Image Enhancement and Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4209616.

- Gu, J.; Dong, C. Interpreting Super-Resolution Networks with Local Attribution Maps. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9199–9208.

- Gu, A.; Johnson, I.; Timalsina, A.; Rudra, A.; Ré, C. How to Train Your HiPPO: State Space Models with Generalized Orthogonal Basis Projections. arXiv 2022, arXiv:2206.12037.

- Gu, A.; Dao, T. Mamba: Linear-Time Sequence Modeling with Selective State Spaces. arXiv 2023, arXiv:2312.00752.

- Guan, M.S.; Xu, H.Y.; Jiang, G.Y.; Yu, M.; Chen, Y.Y.; Luo, T.; Song, Y. WaterMamba: Visual State Space Model for Underwater Image Enhancement. arXiv 2024, arXiv:2405.08419.

- Liu, Y.; Tian, Y.J.; Zhao, Y.Z.; Yu, H.T.; Xie, L.X.; Wang, Y.W.; Ye, Q.X.; Jiao, J.B.; Liu, Y.F. VMamba: Visual State Space Model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

- Dong, C.Y.; Zhao, C.; Cai, W.L.; Yang, B. O-Mamba: O-Shape State-Space Model for Underwater Image Enhancement. arXiv 2024, arXiv:2408.12816.

- Yao, Z.S.; Fan, G.D.; Fan, J.F.; Gan, M.; Chen, C.L.P. Spatial-Frequency Dual-Domain Feature Fusion Network for Low-Light Remote Sensing Image Enhancement. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4706516.

- Wang, C.Y.; Jiang, J.J.; Zhong, Z.W.; Liu, X.M. Spatial-Frequency Mutual Learning for Face Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22356–22366.

- Wang, Z.; Zhao, Y.W.; Chen, J.C. Multi-Scale Fast Fourier Transform Based Attention Network for Remote-Sensing Image Super-Resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2728–2740.

- Chen, X.L.; Wei, S.Q.; Yi, C.; Quan, L.W.; Lu, C.Y. Progressive Attentional Learning for Underwater Image Super-Resolution. In International Conference on Intelligent Robotics and Applications; Springer: Cham, Switzerland, 2020; pp. 233–243.

- Wang, L.; Li, X.; Li, K.; Mu, Y.; Zhang, M.; Yue, Z.X. Underwater Image Restoration Based on Dual Information Modulation Network. Sci. Rep. 2024, 14, 5416.

- Pramanick, A.; Sur, A.; Saradhi, V.V. Harnessing Multi-Resolution and Multi-Scale Attention for Underwater Image Restoration. Vis. Comput. 2025, 41, 8235–8254.

- Dharejo, F.A.; Ganapathi, I.I.; Zawish, M.; Alawode, B.; Alathbah, M.; Werghi, N.; Javed, S. SwinWave-SR: Multi-Scale Lightweight Underwater Image Super-Resolution. Inf. Fusion 2024, 103, 102127.

- Chang, B.C.; Yuan, G.J.; Li, J.J. Mamba-Enhanced Spectral-Attentive Wavelet Network for Underwater Image Restoration. Eng. Appl. Artif. Intell. 2025, 143, 109999.

- Xiao, Y.; Yuan, Q.Q.; Jiang, K.; Chen, Y.Z.; Zhang, Q.; Lin, C.W. Frequency-Assisted Mamba for Remote Sensing Image Super-Resolution. IEEE Trans. Multimed. 2025, 27, 1783–1796.

- Shao, M.W.; Qiao, Y.J.; Meng, D.Y.; Zuo, W.M. Uncertainty-Guided Hierarchical Frequency Domain Transformer for Image Restoration. Knowl.-Based Syst. 2023, 263, 110306.

- Huang, J.; Liu, Y.J.; Zhao, F.; Yan, K.Y.; Zhang, J.H.; Huang, Y.K.; Zhou, M.; Xiong, Z.W. Deep Fourier-Based Exposure Correction Network with Spatial-Frequency Interaction. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2022; pp. 163–180.

- Zhang, H.P.; Xu, H.L.; Yu, X.S.; Zhang, X.Y.; Wu, C.D. Leveraging Frequency and Spatial Domain Information for Underwater Image Restoration. J. Phys. Conf. Ser. 2024, 2832, 012001.

- Guo, H.; Li, J.M.; Dai, T.; Ouyang, Z.H.; Ren, X.D.; Xia, S.T. MambaIR: A Simple Baseline for Image Restoration with State-Space Model. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2024; pp. 222–241.

- Chen, Z.; Zhang, Y.L.; Gu, J.J.; Kong, L.H.; Yang, X.K.; Yu, F. Dual Aggregation Transformer for Image Super-Resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 12312–12321.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Dong, C.; Loy, C.C.; He, K.M.; Tang, X.O. Learning a Deep Convolutional Network for Image Super-Resolution. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 184–199.

- Kim, J.W.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Lim, B.; Son, S.H.; Kim, H.W.; Nah, S.J.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Wang, X.T.; Yu, K.; Wu, S.X.; Gu, J.J.; Liu, Y.H.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

| Model | PSNR (dB) | SSIM | UIQM |
|---|---|---|---|
| MambaUSR w/ FRB | 24.93 | 0.6709 | 2.8179 |
| MambaUSR w/o FAEM | 25.02 | 0.6758 | 2.8208 |
| MambaUSR w/o VSSM | 24.91 | 0.6579 | 2.8107 |
| MambaUSR | 25.07 | 0.6853 | 2.8297 |

| Scale | Method | FLOPs (G) | Params (M) | PSNR (dB) | SSIM | UIQM |
|---|---|---|---|---|---|---|
| ×2 | SRCNN [39] | 21.3 | 0.06 | 26.81 | 0.76 | 2.74 |
| ×2 | VDSR [40] | 205.28 | 0.67 | 28.98 | 0.79 | 2.57 |
| ×2 | EDSRGAN [41] | 273.34 | 1.38 | 27.12 | 0.77 | 2.67 |
| ×2 | SRGAN [42] | 377.76 | 5.95 | 28.05 | 0.78 | 2.74 |
| ×2 | SRResNet [42] | 222.37 | 1.59 | 25.98 | 0.72 | – |
| ×2 | ESRGAN [43] | 4274.68 | 16.7 | 26.66 | 0.75 | 2.7 |
| ×2 | SRDRM [9] | 203.91 | 0.83 | 28.36 | 0.80 | 2.78 |
| ×2 | SRDRM-GAN [9] | 289.38 | 11.31 | 28.55 | 0.81 | 2.77 |
| ×2 | PAL [27] | 203.82 | 0.83 | 28.41 | 0.80 | – |
| ×2 | AMPCNet [11] | – | 1.15 | 29.54 | 0.80 | 2.77 |
| ×2 | Deep WaveNet [14] | 21.47 | 0.28 | 29.09 | 0.80 | 2.73 |
| ×2 | MambaUSR (ours) | 93.8 | 2.73 | 29.75 | 0.52 | 2.75 |
| ×4 | SRCNN [39] | 21.3 | 0.06 | 23.38 | 0.67 | 2.38 |
| ×4 | VDSR [40] | 205.28 | 0.67 | 25.7 | 0.68 | 2.44 |
| ×4 | EDSRGAN [41] | 206.42 | 1.97 | 21.65 | 0.65 | 2.40 |
| ×4 | SRGAN [42] | 529.86 | 5.95 | 24.76 | 0.69 | 2.42 |
| ×4 | SRResNet [42] | 85.49 | 1.59 | 24.15 | 0.66 | – |
| ×4 | ESRGAN [43] | 1504.09 | 16.7 | 23.79 | 0.66 | 2.38 |
| ×4 | SRDRM [9] | 291.73 | 1.9 | 24.64 | 0.68 | 2.46 |
| ×4 | SRDRM-GAN [9] | 377.2 | 12.38 | 24.62 | 0.69 | 2.48 |
| ×4 | PAL [27] | 303.42 | 1.92 | 24.89 | 0.69 | – |
| ×4 | AMPCNet [11] | – | 1.17 | 25.90 | 0.66 | 2.58 |
| ×4 | Deep WaveNet [14] | 5.59 | 0.29 | 25.20 | 0.68 | 2.54 |
| ×4 | MambaUSR (ours) | 23.9 | 2.75 | 26.14 | 0.66 | 2.53 |
| ×8 | SRCNN [39] | 21.3 | 0.06 | 19.97 | 0.57 | 2.01 |
| ×8 | VDSR [40] | 205.28 | 0.67 | 23.58 | 0.63 | 2.17 |
| ×8 | EDSRGAN [41] | 189.69 | 2.56 | 19.87 | 0.58 | 2.12 |
| ×8 | SRGAN [42] | 567.88 | 5.95 | 20.14 | 0.60 | 2.10 |
| ×8 | SRResNet [42] | 51.28 | 1.59 | 19.26 | 0.55 | – |
| ×8 | ESRGAN [43] | 811.44 | 16.7 | 19.75 | 0.58 | 2.05 |
| ×8 | SRDRM [9] | 313.68 | 2.97 | 21.20 | 0.60 | 2.18 |
| ×8 | SRDRM-GAN [9] | 399.15 | 13.45 | 20.25 | 0.61 | 2.17 |
| ×8 | PAL [27] | 325.51 | 2.99 | 22.51 | 0.63 | – |
| ×8 | AMPCNet [11] | – | 1.25 | 23.83 | 0.62 | 2.25 |
| ×8 | Deep WaveNet [14] | 1.62 | 0.34 | 23.25 | 0.62 | 2.21 |
| ×8 | MambaUSR (ours) | 6.43 | 2.84 | 23.97 | 0.55 | 2.20 |

| Method | PSNR (dB) ×2 | PSNR (dB) ×3 | PSNR (dB) ×4 | SSIM ×2 | SSIM ×3 | SSIM ×4 | UIQM ×2 | UIQM ×3 | UIQM ×4 |
|---|---|---|---|---|---|---|---|---|---|
| SRCNN [39] | 24.75 | 22.22 | 19.05 | 0.72 | 0.65 | 0.56 | 2.39 | 2.24 | 2.02 |
| SRGAN [42] | 26.11 | 23.87 | 21.08 | 0.75 | 0.70 | 0.58 | 2.44 | 2.39 | 2.56 |
| SRDRM [9] | 24.62 | – | 23.15 | 0.72 | – | 0.67 | 2.59 | – | 2.57 |
| SRDRM-GAN [9] | 24.61 | – | 23.26 | 0.72 | – | 0.67 | 2.59 | – | 2.55 |
| Deep WaveNet [14] | 25.71 | 25.23 | 25.08 | 0.77 | 0.76 | 0.74 | 2.99 | 2.96 | 2.97 |
| AMPCNet [11] | 25.24 | 25.73 | 24.70 | 0.71 | 0.70 | 0.70 | 2.93 | 2.85 | 2.88 |
| URSCT [17] | 25.96 | – | 23.59 | 0.80 | – | 0.66 | – | – | – |
| MambaUSR (ours) | 25.76 | 26.15 | 25.11 | 0.74 | 0.74 | 0.69 | 2.93 | 2.97 | 2.84 |

| Method | FLOPs (G) | Params (M) | PSNR (dB) | SSIM | Average time (s) | Efficiency (fps) |
|---|---|---|---|---|---|---|
| SRCNN | 21.30 | 0.06 | 19.97 | 0.57 | 0.08496 | 11.77 |
| VDSR | 205.28 | 0.67 | 23.58 | 0.63 | 0.08852 | 11.30 |
| EDSRGAN | 189.69 | 2.56 | 19.87 | 0.58 | 0.04632 | 21.59 |
| SRGAN | 567.88 | 5.95 | 20.14 | 0.6 | 0.09454 | 10.58 |
| SRResNet | 51.28 | 1.59 | 19.26 | 0.55 | 0.03418 | 29.26 |
| ESRGAN | 811.44 | 16.7 | 19.75 | 0.58 | 0.09310 | 10.74 |
| SRDRM | 313.68 | 2.97 | 21.2 | 0.6 | 0.08940 | 11.19 |
| SRDRM-GAN | 399.15 | 13.45 | 20.25 | 0.61 | 0.09063 | 11.03 |
| PAL | 325.51 | 2.99 | 22.51 | 0.63 | 0.08068 | 12.39 |
| AMPCNet | – | 1.25 | 23.83 | 0.62 | 0.03815 | 26.21 |
| Deep WaveNet | 1.62 | 0.34 | 23.25 | 0.62 | 0.03514 | 28.46 |
| MambaUSR (ours) | 6.43 | 2.84 | 23.97 | 0.55 | 0.03657 | 27.34 |
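The two timing columns of the efficiency table are related by a simple reciprocal: the frame rate is one over the average time per image. The short check below recomputes the fps column from the reported average times. The dictionary `avg_time_s` and the helper `fps_from_latency` are illustrative names, and the values are copied from the table.

```python
def fps_from_latency(seconds_per_image):
    """Frames per second implied by an average per-image time in seconds."""
    return 1.0 / seconds_per_image

# (average time in s, reported fps) copied from the efficiency table.
avg_time_s = {
    "SRCNN": (0.08496, 11.77),
    "VDSR": (0.08852, 11.30),
    "EDSRGAN": (0.04632, 21.59),
    "SRGAN": (0.09454, 10.58),
    "SRResNet": (0.03418, 29.26),
    "ESRGAN": (0.09310, 10.74),
    "SRDRM": (0.08940, 11.19),
    "SRDRM-GAN": (0.09063, 11.03),
    "PAL": (0.08068, 12.39),
    "AMPCNet": (0.03815, 26.21),
    "Deep WaveNet": (0.03514, 28.46),
    "MambaUSR (ours)": (0.03657, 27.34),
}

for method, (latency, reported_fps) in avg_time_s.items():
    computed = fps_from_latency(latency)
    # Every reported value matches 1 / latency to two decimal places.
    print(f"{method:16s} computed {computed:6.2f} fps, reported {reported_fps:6.2f} fps")
```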

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shen, G.; Zhang, J.; Chen, Z. MambaUSR: Mamba and Frequency Interaction Network for Underwater Image Super-Resolution. Appl. Sci. 2025, 15, 11263. https://doi.org/10.3390/app152011263
